Abstract

Building energy optimization (BEO) is a promising technique to achieve energy efficient designs. The efficacy of optimization algorithms is imperative for the BEO technique and is significantly dependent on the algorithm hyperparameters. Currently, studies focusing on algorithm hyperparameters are scarce, and common agreement on how to set their values, especially for BEO problems, is still lacking. This study proposes a metamodel-based methodology for hyperparameter optimization of optimization algorithms applied in BEO. The aim is to maximize the algorithmic efficacy and avoid the failure of the BEO technique because of improper algorithm hyperparameter settings. The method consists of three consecutive steps: constructing the specific BEO problem, developing an ANN-trained metamodel of the problem, and optimizing algorithm hyperparameters with nondominated sorting genetic algorithm II (NSGA-II). To verify the validity, 15 benchmark BEO problems with different properties, i.e., five building models and three design variable categories, were constructed for numerical experiments. For each problem, the hyperparameters of four commonly used algorithms, i.e., the genetic algorithm (GA), the particle swarm optimization (PSO) algorithm, simulated annealing (SA), and the multi-objective genetic algorithm (MOGA), were optimized. Results demonstrated that the MOGA benefited the most from hyperparameter optimization in terms of the quality of the obtained optimum, while PSO benefited the most in terms of the computing time.

1. Introduction

1.1. Background

The growth in global energy consumption has raised concerns about primary energy shortages and serious environmental impacts, such as global warming, ozone layer depletion, and climate change. According to the International Energy Agency, buildings consume nearly 36% of the world’s energy and are responsible for 39% of global greenhouse gas emissions [1]. Thus, building designs that emphasize energy efficiency are crucial to reducing carbon emissions, achieving energy savings, and adapting to climate change.

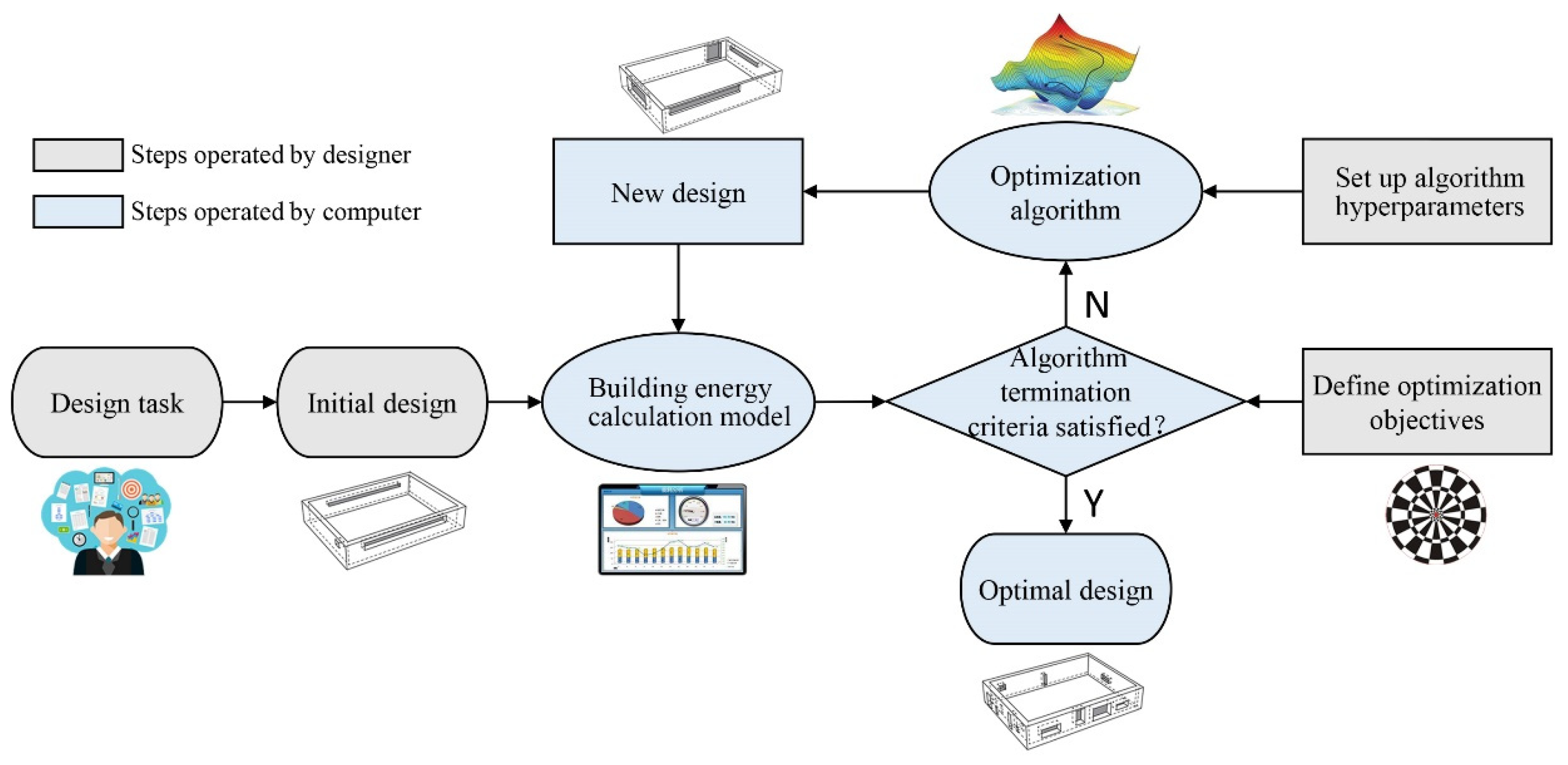

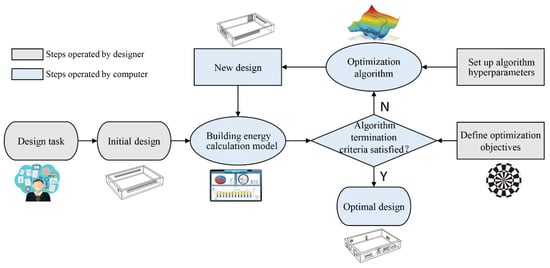

Several energy-efficient building technologies have been proposed to improve building energy efficiency, among which the building energy optimization (BEO) technique has emerged as a promising method to achieve high-performance design, with particular emphasis on building energy. It has been revealed that it can reduce building energy use by up to 30 percent compared to a benchmark design [2]. The general procedure of the BEO technique is illustrated in Figure 1. As shown, it relies on optimization algorithms to create new designs according to preset design objectives and simulation results. This technique has been widely applied in optimizing building systems (such as lighting and HVAC), building envelopes (such as form, construction, and double-skin facades) and renewable energy generation (such as solar and ground energy) [3].

Figure 1.

General workflow of the BEO technique.

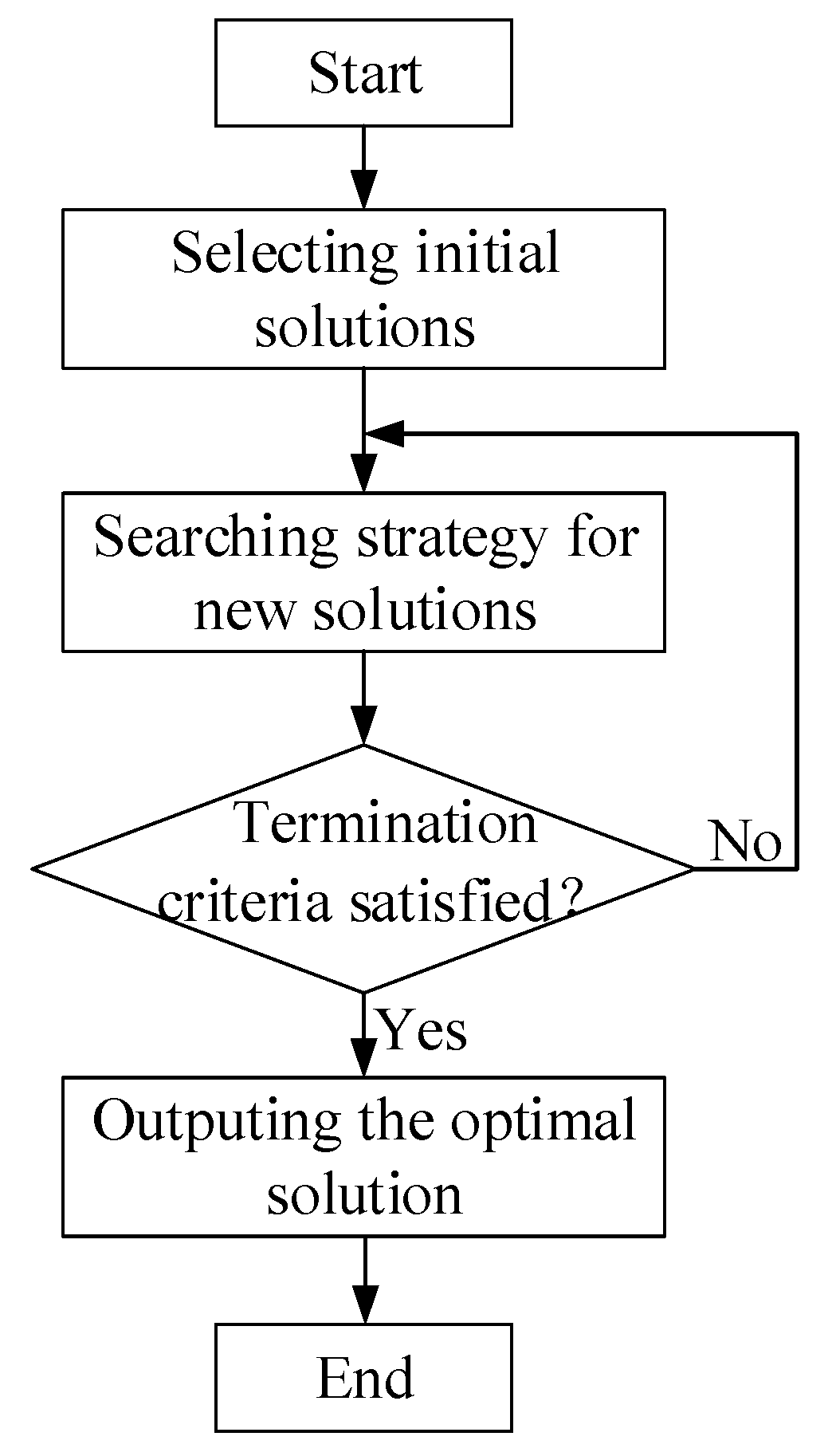

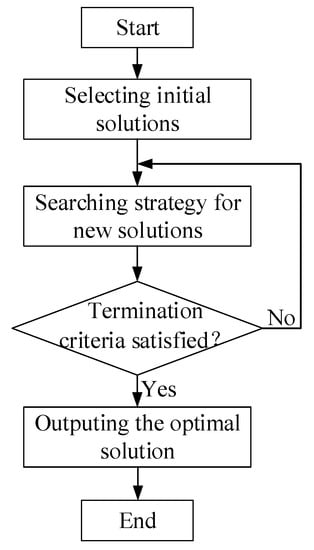

As illustrated in Figure 1, the optimization algorithm plays a vital role in the BEO workflow. The algorithm drives the whole technical process, automatically adjusts the design variables to generate new designs, and ultimately finds an optimal design that meets the predefined objective. Therefore, the efficacy of an algorithm significantly affects the efficiency of the BEO technique. Currently, a variety of optimization tools can be integrated into the BEO technique [4], including GenOpt, modeFRONTIER, ModelCenter, MATLAB, jEPlus+EA, MOBO, ENEROPT, GENE_ARCH, and Grasshopper plugins such as Galapagos, Goat, Silvereye, Opossum, Optimus, RBFMopt, and Dodo. Among them, MATLAB is one of the most often used [5] and was adopted in this study. These optimization tools are often embedded with various optimization algorithms, which generally fall into three categories: direct search, intelligent optimization, and hybrid. Our previous review [5] found that intelligent algorithms are the most popular in BEO, followed by direct search algorithms. Among intelligent algorithms, the genetic algorithm (GA) and its modified versions are dominant, followed by the particle swarm optimization (PSO) algorithm. Although many algorithms are currently used, not every algorithm works well for every BEO problem. Figure 2 illustrates the general working mechanism of optimization algorithms. Starting from one or several initial solutions, a certain strategy is applied to guide the search for new solutions; the search stops when the termination conditions are met, and the best solution examined in the run is output. Therefore, the selection of the initial solutions, the strategy of searching for new solutions, and the hyperparameters of the algorithm that implement the searching strategy determine the search path, and thus determine the algorithm’s global search ability and the overall running time.

Figure 2.

General working mechanism of optimization algorithms.
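The mechanism in Figure 2 can be sketched in a few lines of Python. This is a minimal illustration only and is not one of the algorithms studied in this paper: the function name `generic_search`, the Gaussian perturbation rule, the toy objective `sphere`, and the hyperparameters `step_size` and `max_evals` are all assumptions introduced for the example.

```python
import random


def generic_search(objective, initial, step_size=0.5, max_evals=200, seed=0):
    """Illustrative loop: initial solution -> new candidates -> termination -> best found."""
    rng = random.Random(seed)
    best_x = list(initial)
    best_f = objective(best_x)
    evals = 1
    while evals < max_evals:  # termination condition: evaluation budget exhausted
        # Search strategy (assumed): perturb the incumbent with Gaussian noise.
        candidate = [xi + rng.gauss(0.0, step_size) for xi in best_x]
        f = objective(candidate)
        evals += 1
        if f < best_f:  # keep the candidate only if it improves the objective
            best_x, best_f = candidate, f
    return best_x, best_f, evals


def sphere(x):
    """Toy objective standing in for a building energy evaluation."""
    return sum(xi * xi for xi in x)
```

Even in this toy loop, the initial solution, the search rule, and the two hyperparameters jointly fix the search path and the number of evaluations, which is the dependency described above.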

Considering the crucial role of hyperparameters in algorithm efficacy and BEO efficiency, they should be accurately set to maximize the optimization ability of the algorithm so as to quickly and exactly find the global optimum. However, existing works targeting algorithm hyperparameters in BEO are rare. Effective methods for setting algorithm hyperparameters are still lacking. Due to barriers related to expertise, the current BEO research simply uses the common general hyperparameter settings (HPSs) indiscriminately without considering their validity and reliability. However, obtaining a general-purpose, universal HPS is impossible. The algorithm hyperparameters should be accurately tuned based on the specific BEO problem to be solved.

A challenge in tuning the HPS of an algorithm applied in BEO is the computational expense of building energy evaluation. Generally, three types of models can be used to perform building energy evaluation: simplified analytical models, detailed simulation models, and metamodels (or surrogate models). Simplified analytical models aim to establish mathematical functions between design variables and building energy by describing physical mechanisms. This kind of work can be found in [6]. Although mathematical formulas can be quickly calculated, describing a BEO problem mathematically is not easy. In most cases, the BEO problem is too complicated to allow its physical mechanism to be captured, especially with high-dimensional design variables or complex building models. Therefore, simplified analytical models are only applicable to simple problems. Detailed simulation models are most frequently used in BEO. Much related work can be found in [7,8,9,10], where various programs were used, including EnergyPlus, TRNSYS, IDA-ICE, DOE2, etc. A major challenge faced in detailed models is the high computational cost, where one simulation can take minutes, hours, or even days. Consequently, design optimization, sensitivity analysis, and design space exploration become impracticable, as they often require hundreds or thousands of simulation evaluations. One method of alleviating this burden is to develop metamodels that mimic the behavior of the detailed simulation models using a data-driven, bottom-up approach [11]. A metamodel, also known as a black-box, behavioral, or surrogate model, is trained on the simulator’s response to a limited number of samples. Metamodeling has been receiving increasing attention in a wide range of applications in building design; for example, sensitivity analysis [12], uncertainty analysis [13], and optimization [14].
Several methods have been successfully used to train metamodels in BEO, such as ANNs, Bayesian networks, support vector machines (SVMs), etc. Among them, ANNs have been proven to have high accuracy for learning, strong capacity for nonlinear mapping, and good robustness, and have been actively used in building-related studies; for example, building energy [15,16], thermal load [17], and daylighting prediction [18]. To fit an ANN model into different problems, its hyperparameters also must be tuned and optimized. It is crucial to select an appropriate technique to detect optimal hyperparameters of ANNs. The commonly used techniques include grid search, random search, Bayesian optimization, gradient-based optimization, and metaheuristic algorithms, such as GA and PSO.

1.2. Literature Review

1.2.1. The Efficacy of Optimization Algorithms in BEO

In view of the crucial role of algorithms in the BEO technique, their efficacy has attracted the attention of building energy related research. Related works include Prada et al. [19], which compared the efficacy of three algorithms used for evaluating the optimal refurbishment of three reference buildings. The authors focused on two efficacy criteria of the algorithms, namely, the percentage of actual Pareto solutions and the number of cost function assessments, where the actual Pareto front was determined through a brute-force approach. Waibel et al. [20] investigated the performance behavior of a wide selection of single-objective black-box optimization algorithms, including deterministic, randomized, and model-based algorithms. They also studied the effect of hyperparameters on the efficacy of the algorithms. The efficacy criteria of interest for these algorithms included convergence times, stability, and robustness. Chegari et al. [21] applied the BEO technique with integrated multilayer feedforward neural networks (MFNNs) to optimize the indoor thermal comfort and energy consumption of residential buildings. They compared the computation time required by the three most commonly used metaheuristic algorithms, i.e., the multi-objective genetic algorithm (MOGA), the nondominated sorting genetic algorithm II (NSGA-II), and the multi-objective particle swarm optimization (MOPSO). The results indicated that MOPSO performed the best. Si et al. developed sets of efficacy criteria for single-objective and multi-objective algorithms [22,23]. They also investigated the ineffectiveness of algorithms and the causal factors that may lead to ineffectiveness, including the selection of the initial designs and HPSs [24].

1.2.2. Methods of Setting Algorithm Hyperparameters in BEO

At present, four methods of setting algorithm hyperparameters can be found in BEO-related works. The first is to simply use the default HPSs of the algorithms embedded in the mature optimization tools described in Section 1.1. Related studies include that of Yu et al. [7], which applied NSGA-II to optimize thermal comfort and energy efficiency in a building design. NSGA-II was realized in MATLAB and run with the default HPS in the tool. Hamdy et al. [25] compared seven commonly used multi-objective evolutionary algorithms in solving a nearly zero-energy building design problem. Owing to the lack of scientific information on the algorithm hyperparameters for building energy problems, they employed the default HPSs in MATLAB. In these studies, the algorithms’ default HPSs in the optimization tools were assumed to be generally applicable for most problems. However, this assumption is questionable. In fact, most optimization tools (e.g., modeFRONTIER and MATLAB) were originally developed to solve problems encountered in non-architectural fields, such as mathematics and computer science. Whether the default HPSs for non-architectural problems apply to BEO problems is still an open question. The requirements for the optimization capabilities of algorithms differ with the problems faced by different research fields. For example, the objective calculations of mathematics problems are generally fast when using mathematical software such as MATLAB, usually taking less than one second. Thus, compared with the computation cost, the quality of the final optimum obtained by algorithms is more significant in mathematics. However, the objective calculations of building models are usually time-consuming, ranging from minutes to hours when using detailed building simulation models. Considering the limited computing time, the available number of objective calculations for BEO problems is usually 100–1000 times smaller than that for mathematics problems.
Thus, both the computation time and the quality of the final optimum are essential for BEO technique. In this case, the default HPSs that are valid for a mathematics problem may not have the same effect for a BEO problem.

The second common method is to manually evaluate algorithms’ efficacy with a limited number of HPSs and then choose the one that makes the algorithm perform best. Related studies include that of Wright and Alajmi [8], who investigated the robustness of a GA in finding solutions to an unconstrained building optimization problem. Experiments were performed for twelve different HPSs of GA, with nine trial optimizations completed for each HPS. Yang et al. [26] performed a sensitivity analysis to investigate the effects of two algorithm hyperparameters, namely, the number of generations and population size, on the optimal trade-off solution and the robustness of NSGA-II. Chen et al. [27] applied NSGA-II to optimize passively designed buildings. Different HPSs of NSGA-II were examined to improve the computational efficiency without jeopardizing the optimization productivity. In this area, due to the limitations of manual tuning and comparison, the number of hyperparameter combinations is usually inadequate, which leaves a large portion of possible combinations uninvestigated. In this case, the result is only the best in the compared group, not the real best in the whole feasible region.

The third widely used method in BEO for setting algorithm hyperparameters is to learn from the HPSs used in other similar studies or from the expertise of researchers. Related studies include that of Futrell et al. [28], which presented a methodology to optimize the daylighting performance of a complex building design using dynamic climate-based lighting simulations. The authors compared four optimization algorithms and used HPSs that had performed well in other similar publications. Delgarm et al. [9] coupled a mono- and multi-objective PSO algorithm with EnergyPlus to search for a set of Pareto solutions to improve building energy performance. The hyperparameters of PSO were set up based on the authors’ expertise. Ascione et al. [29] proposed a new methodology for the evaluation of cost-optimality through multi-objective optimization. The HPS of the GA was determined based on the expertise of the authors and some previous studies. These methods still have limitations. On the one hand, most HPSs used in the referenced studies were initially identified through manual comparison studies or by using the default HPSs in optimization tools. Their reliability still needs to be further verified; blindly learning from other works may lead to unreliable optimization results. On the other hand, due to the diversity of building models, building design variables, and optimization objectives, the BEO problems to be solved are diverse. It is quite difficult for designers to find sufficiently similar research from which to directly borrow HPSs.

The fourth method involves using self-adaptive strategies to generate algorithm hyperparameters, which allows the algorithm itself to be adapted to the characteristics of the problem. Related works include that of Cubukcuoglu et al. [30], which proposed a new optimization tool named Optimus for Grasshopper algorithmic modeling in Rhinoceros CAD software. It implemented a self-adaptive differential evolution algorithm with an ensemble of mutation strategies (jEDE), which performed better than most other optimization tools in the tests of standard and CEC 2005 benchmark problems. Ramallo-González and Coley [31] used a self-adaptive differential evolution algorithm (jDE) to cope with changes in the objective landscape for low-energy building design optimization. Ekici et al. [32] adopted a multi-objective jDE in the conceptual phase to solve a form-finding problem in high-rise building design. Results indicated that jDE outperformed NSGA-II with a much more desirable Pareto front. Although self-adaptive strategies have shown their strengths, in most cases they focus on only a few algorithm hyperparameters (for example, the mutation rate of the differential evolution algorithm) without simultaneously considering the other hyperparameters and the interactions between them.

1.2.3. Summary of the Literature Review

The efficacy of optimization algorithms has raised concerns in the BEO research field. Although algorithm hyperparameters have a significant impact on algorithm efficacy, studies focusing on this topic are insufficient, and some problems remain unsolved: (1) the four common methods for setting algorithm hyperparameters fail to fully evaluate the possible combinations and interactions; (2) due to the variety of BEO problems, algorithm hyperparameters should be set based on the properties of the problem, such as multidimensionality, building types, optimization objectives, etc.; however, studies focusing on problem properties are scarce.

1.3. Research Outline

This paper aims to fill these knowledge gaps and address the abovementioned issues by intelligently optimizing the HPSs of algorithms applied in BEO. The outline of this paper is as follows:

- A metamodel-based methodology for hyperparameter optimization of algorithms applied in BEO is introduced;

- A total of 15 benchmark BEO problems with different properties are constructed for numerical experiments;

- The HPSs of four commonly used algorithms are optimized.

The research presented in this paper is timely and valuable for a number of reasons. On the theoretical side, the proposed method can deepen our understanding of algorithm hyperparameters, the efficacy of algorithms and the properties of BEO problems in general. On the application side, the results obtained from this study can help engineers, architects, and consultants properly set algorithm hyperparameters so as to effectively apply the BEO technique and use various energy-saving measures.

The rest of this paper is organized as follows: Section 2 illustrates the methodology; Section 3 presents a step-by-step detailed numerical experiment; Section 4 offers the results and discussion; Section 5 summarizes the paper and discusses the challenges of this study and the prospects for future research.

2. Methodology

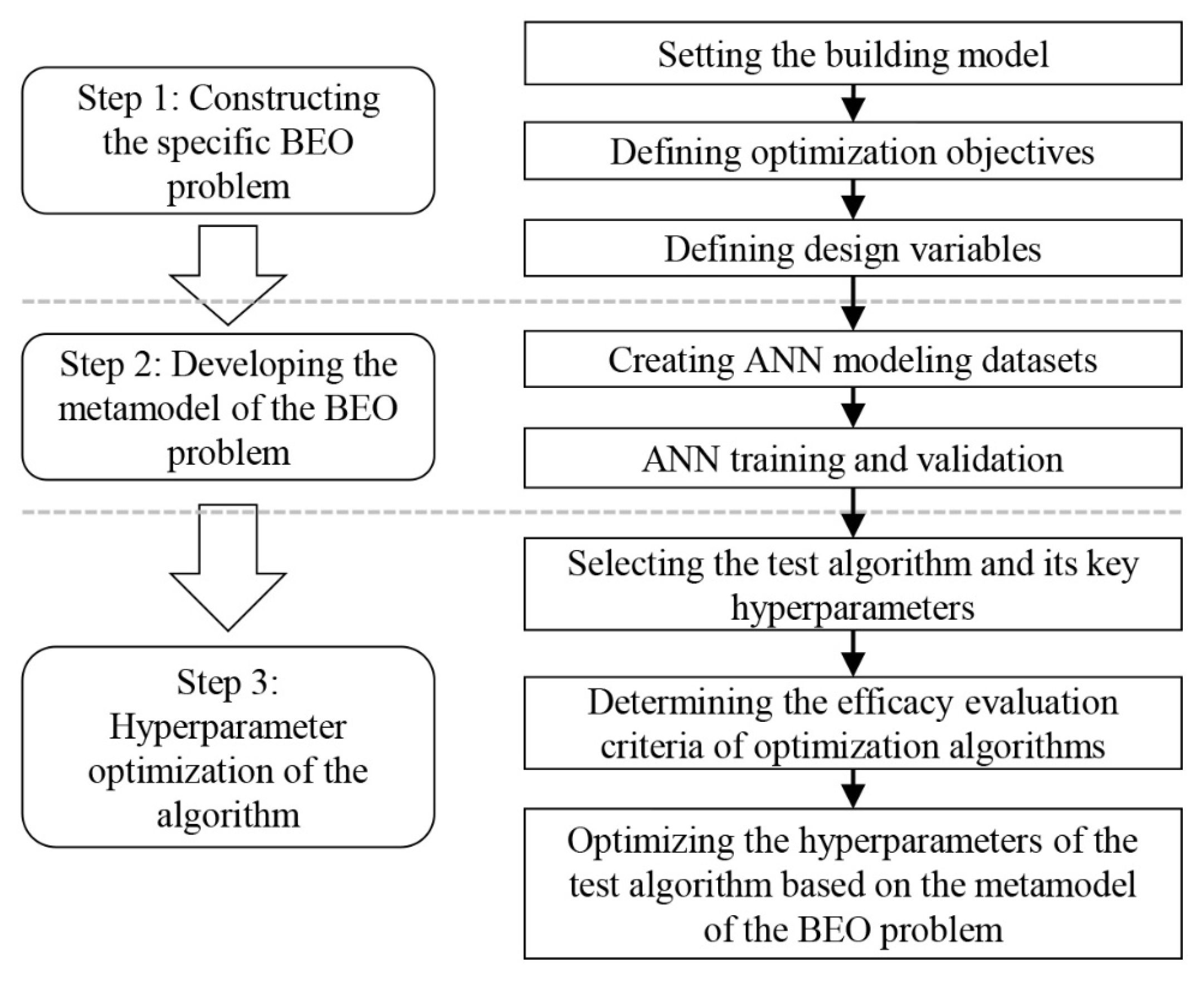

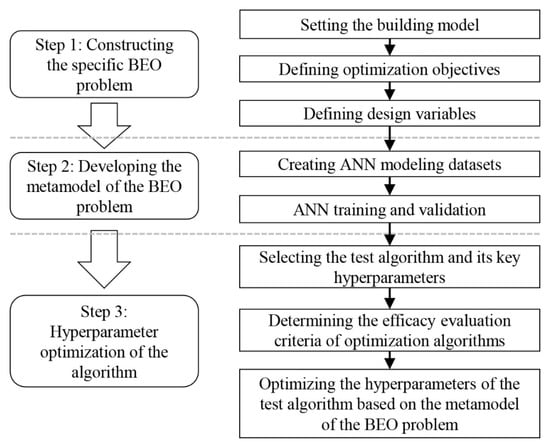

The workflow of the proposed methodology is summarized in Figure 3. As shown, it mainly includes three steps: constructing the specific BEO problem, developing the metamodel of the problem, and hyperparameter optimization of the algorithm applied in BEO. Detailed explanations of each step are given below.

Figure 3.

Flowchart of the methodology.

2.1. Step 1: Constructing the Specific BEO Problem

Generally, the mathematical form of a BEO problem can be as follows:

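$$
\begin{aligned}
\min_{X} \quad & F(X) = [f_1(X), f_2(X), \ldots, f_k(X)]^{T} \\
\text{s.t.} \quad & g_i(X) = 0, \quad i = 1, 2, \ldots, p \\
& h_j(X) \le 0, \quad j = 1, 2, \ldots, q \\
& X = [x_1, x_2, \ldots, x_n]^{T}
\end{aligned}
\tag{1}
$$

where $X$ is a vector of $n$ design variables, $k \ge 1$ is the number of optimization objectives, $F(X) \in \mathbb{R}^k$ is the vector of objective functions in which $f_i(X)$ is the computation function of the $i$th objective, and $g_i(X)$ and $h_j(X)$ are equality and inequality constraints, respectively. If $p$ and $q$ equal 0, this is an unconstrained optimization problem. If $k$ equals 1, the problem is a single-objective optimization problem. By convention, Equation (1) defines a minimization problem. A maximization problem can be solved by converting it into a minimization problem, for example by negating the objective function.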

According to Equation (1), design variables, objective functions, and constraints are the key elements of a BEO problem. BEO problems are often computationally expensive to simulate, multimodal, non-smooth, non-differentiable, etc. [33]. These properties contribute to the complexity of BEO problems and may seriously exacerbate the difficulty of algorithm optimization. For example, high-dimensional input and output variables make problem modeling and optimization exponentially more difficult. As the number of design variables increases, the search space expands exponentially, which dramatically increases the difficulty for algorithms in searching the entire design space. In these cases, it is all the more important to accurately set the algorithm hyperparameters to help the algorithm overcome the obstacles posed by specific problem properties and achieve efficient optimization. Hence, the first step of the developed method is to clearly construct the BEO problem to be solved, including the building model, design variables, constraints, and optimization objectives; the HPSs of the algorithm are then optimized to overcome the property barriers involved in the specific problem.

2.2. Step 2: Developing the Metamodel of the BEO Problem

The hyperparameter optimization of an algorithm needs to investigate the efficacy of the algorithm when adopting different HPSs. Each adjustment of the HPS requires the BEO workflow illustrated in Figure 1 to run once. In this case, the total computing time T can be roughly calculated by Equation (2):

$$
T = \sum_{k=1}^{m} n_k \, \Delta t \tag{2}
$$

where $m$ is the total number of HPS adjustments, $n_k$ is the number of building designs generated by the BEO process with the $k$th HPS tuning, and $\Delta t$ is the computing time consumed in evaluating the energy objective of a design.
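To give a feel for the magnitudes involved, the following sketch evaluates the total computing time, read here as the sum of n_k·Δt over the m HPS adjustments (an interpretation of the definitions above). The budget of 100 HPS adjustments with 1000 designs each, and the per-evaluation times of one minute and one millisecond, are hypothetical figures chosen for illustration; they are not values reported in this study.

```python
def total_computing_time(designs_per_run, dt_seconds):
    """T = sum over HPS adjustments k of n_k * dt, in seconds."""
    return sum(n_k * dt_seconds for n_k in designs_per_run)


# Hypothetical budget: m = 100 HPS adjustments, each generating n_k = 1000 designs.
runs = [1000] * 100

# Assumed evaluation times: 60 s per detailed simulation, 1 ms per metamodel prediction.
t_simulation = total_computing_time(runs, dt_seconds=60.0)
t_metamodel = total_computing_time(runs, dt_seconds=0.001)

print(f"detailed simulation: {t_simulation / 86400:.1f} days")
print(f"metamodel:           {t_metamodel:.0f} s")
```

Under these assumed figures, the detailed-simulation route needs roughly 69 days of evaluation time, while the metamodel route needs on the order of minutes.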

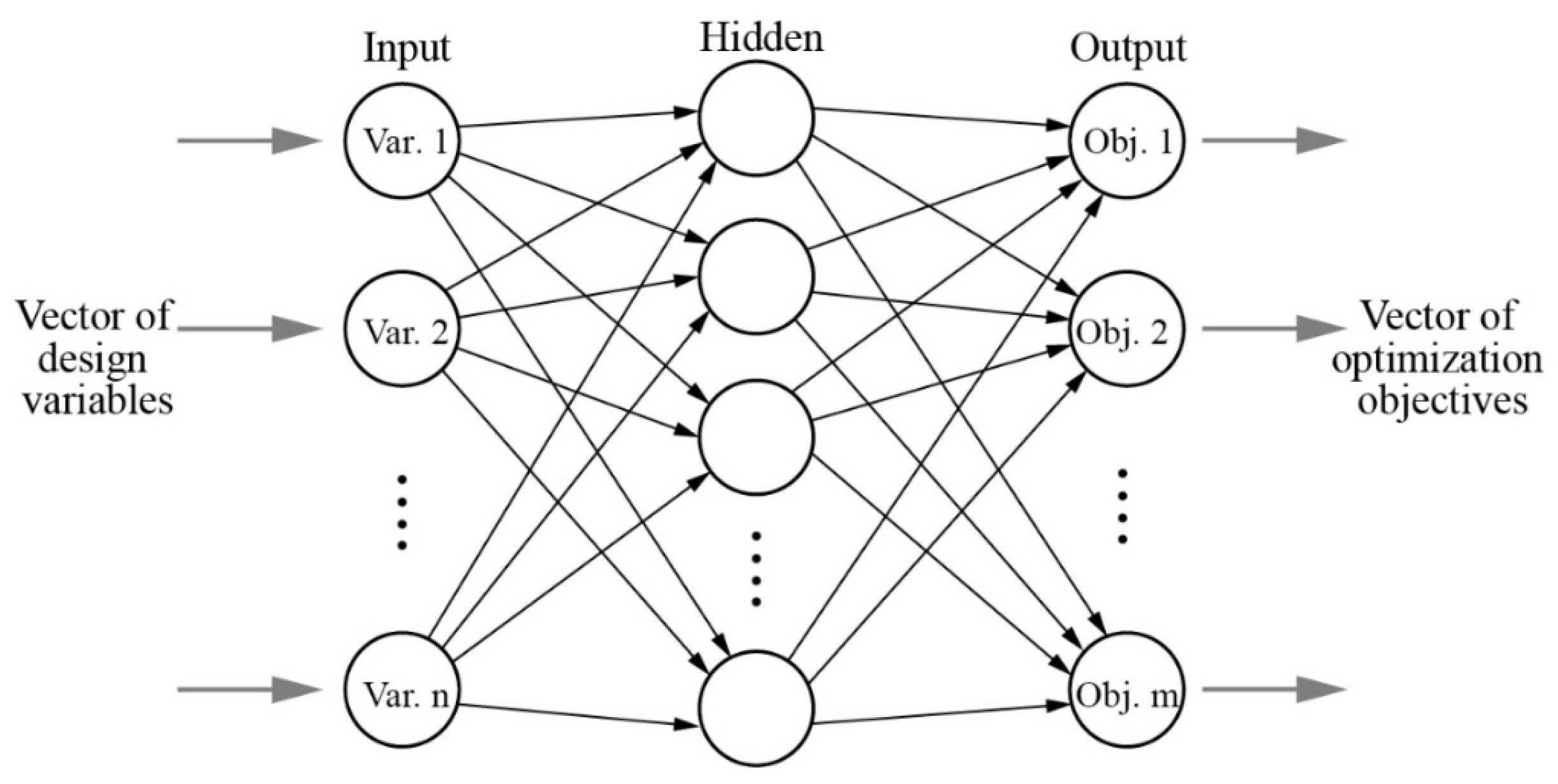

It is clear that Δt is crucial to the total computing time T. As introduced in Section 1.1, of the three methods for evaluating building energy (simplified analytical models, detailed simulation models, and metamodels), metamodels offer the best balance between accuracy and time cost. In this study, an ANN is chosen as the metamodeling technique. The general procedure for ANN-trained metamodeling is as follows:

- Sampling input variables and using detailed building simulation tools to calculate the corresponding energy consumption of each sample to form a database for metamodel training;

- Based on the sample database, training the metamodel by ANN;

- Hyperparameter optimization of ANN and verifying the accuracy of the metamodel.

2.3. Step 3: Hyperparameter Optimization of Algorithms

Hyperparameter optimization of an algorithm requires repeatedly tuning the HPS to determine the one that makes the algorithm perform best. If it relies on manual tuning, the process is time consuming and may be unable to fully evaluate the feasible domain of the hyperparameters. In addition, when the feasible domain of the hyperparameters is continuous, there are theoretically infinitely many possible values, making it impossible to manually traverse all the possibilities. Therefore, to overcome the trial-and-error limitations of manual tuning, this study employs NSGA-II to automatically tune the HPSs and finally output the optimum.

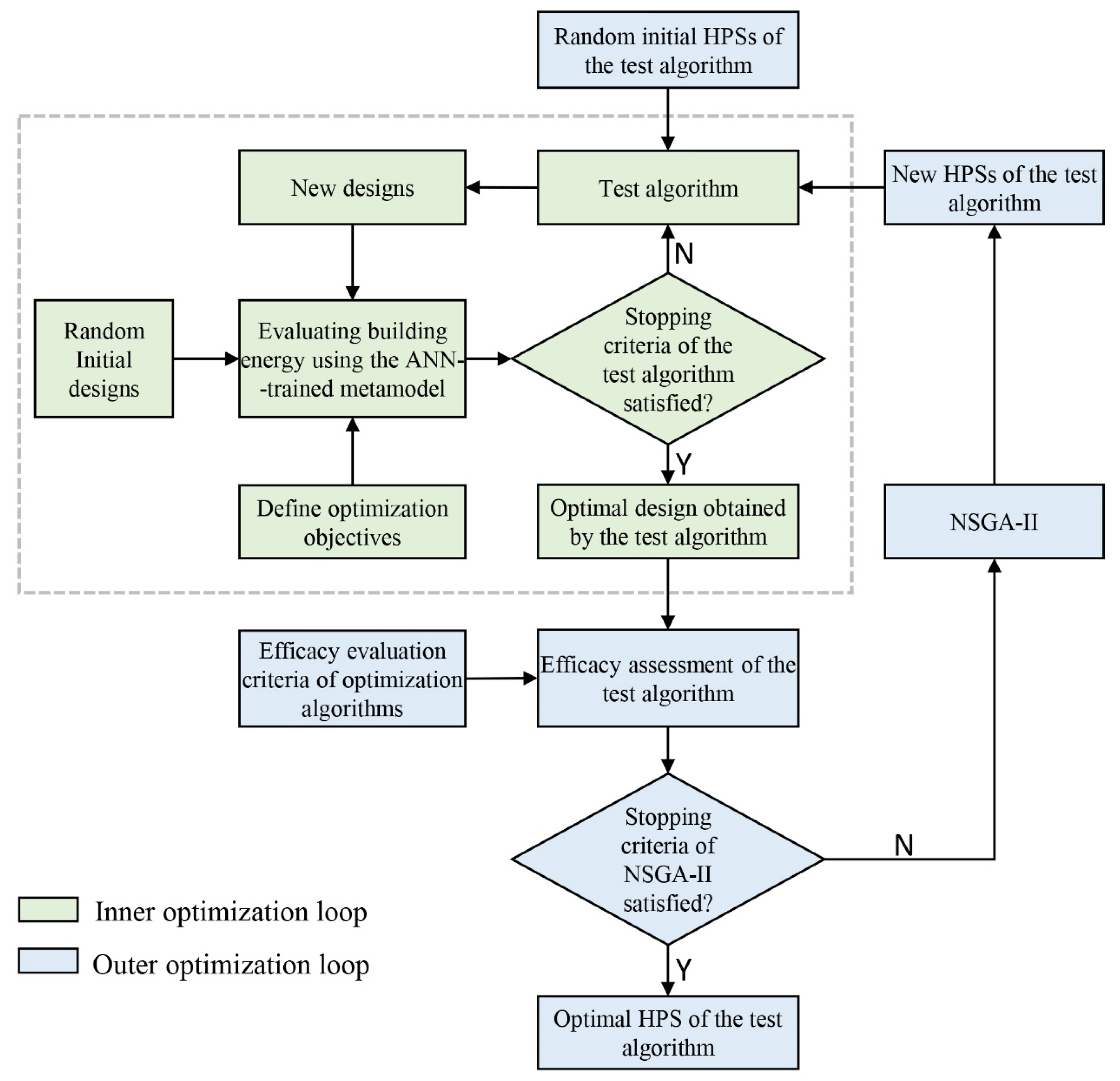

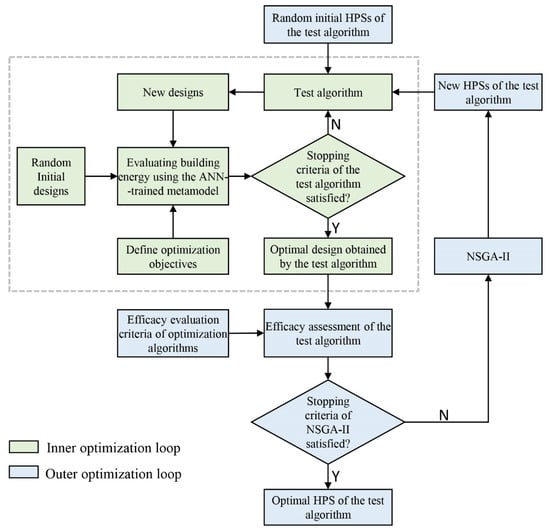

Figure 4 illustrates the flowchart of Step 3, which essentially involves two optimization loops. The inner loop is driven by a test algorithm that requires hyperparameter optimization. It solves the specific BEO problem modeled by the ANN. The outer optimization loop is driven by NSGA-II, which automatically tunes the HPSs of the test algorithm. For each adjustment, the inner loop is run accordingly, and the efficacy of the test algorithm is assessed. The outer loop ends when reaching the termination condition of NSGA-II, and the optimal HPS of the test algorithm over the whole computing run is output. The optimization objectives of the outer loop are the efficacy criteria of optimization algorithms proposed in our previous works [22,23], which aim to quantitatively describe the different performance aspects of algorithms applied in BEO. Specifically, the performance criteria for single-objective test algorithms include (1) the quality of the optimal design obtained, (2) the computing time, and (3) the diversity of all designs searched in an optimization run. The performance criteria for multi-objective test algorithms include (1) the number of Pareto solutions, (2) the quality of the Pareto solutions, (3) the diversity of the Pareto solutions, and (4) the computing time. In practice, users are recommended to select the criteria they care most about. Furthermore, the reasons for choosing NSGA-II as the optimizer of the outer loop are manifold. NSGA-II is one of the most commonly used algorithms in engineering optimization. Its robustness to HPSs has been verified as good in some previous works [34,35]. Moreover, appropriate HPSs for NSGA-II have been suggested in some previous works and have been successfully applied in some implementations. Andersson et al. [36] suggested the HPS for NSGA-II as a crossover rate of 0.9, a mutation rate of 0.008, and a population size of 100.

Figure 4.

Flowchart of hyperparameter optimization of the test algorithm applied in BEO.
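The two nested loops can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this study: the outer loop uses plain random search as a stand-in for NSGA-II, the inner test algorithm is a toy Gaussian-perturbation search rather than GA, PSO, SA, or MOGA, and `metamodel` is a hypothetical quadratic function standing in for the trained ANN. All function and hyperparameter names (`step_size`, `max_iters`) are illustrative.

```python
import random


def metamodel(x):
    """Hypothetical cheap surrogate of the energy objective (stand-in for the ANN)."""
    return sum((xi - 0.3) ** 2 for xi in x)


def inner_loop(hps, n_vars=5, seed=0):
    """Run the toy test algorithm with one HPS; return (best objective, evaluations used)."""
    rng = random.Random(seed)
    x = [rng.random() for _ in range(n_vars)]
    best = metamodel(x)
    evals = 1
    for _ in range(hps["max_iters"]):
        # Perturb the incumbent and clip each variable to the normalized range [0, 1].
        cand = [min(1.0, max(0.0, xi + rng.gauss(0.0, hps["step_size"]))) for xi in x]
        f = metamodel(cand)
        evals += 1
        if f < best:
            x, best = cand, f
    return best, evals


def outer_loop(n_trials=30, seed=1):
    """Random-search stand-in for NSGA-II: propose HPSs and record both efficacy criteria."""
    rng = random.Random(seed)
    records = []
    for _ in range(n_trials):
        hps = {"step_size": rng.uniform(0.01, 0.5), "max_iters": rng.randint(10, 200)}
        quality, cost = inner_loop(hps)  # the inner loop runs once per HPS adjustment
        records.append((hps, quality, cost))
    return records
```

Each record pairs one HPS with the quality of the optimum it reached and the number of evaluations it consumed, which are the quantities the outer optimizer trades off.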

3. Numerical Experiments

3.1. Step 1: Constructing 15 Benchmark BEO Problems with Different Properties

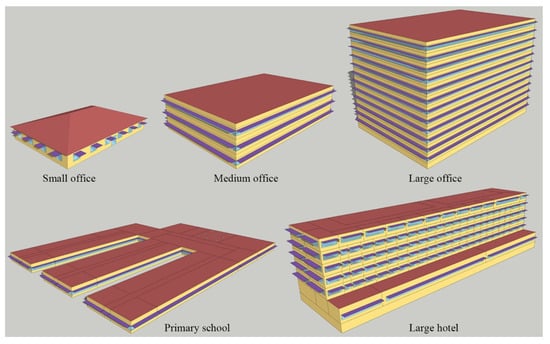

3.1.1. Benchmark Buildings

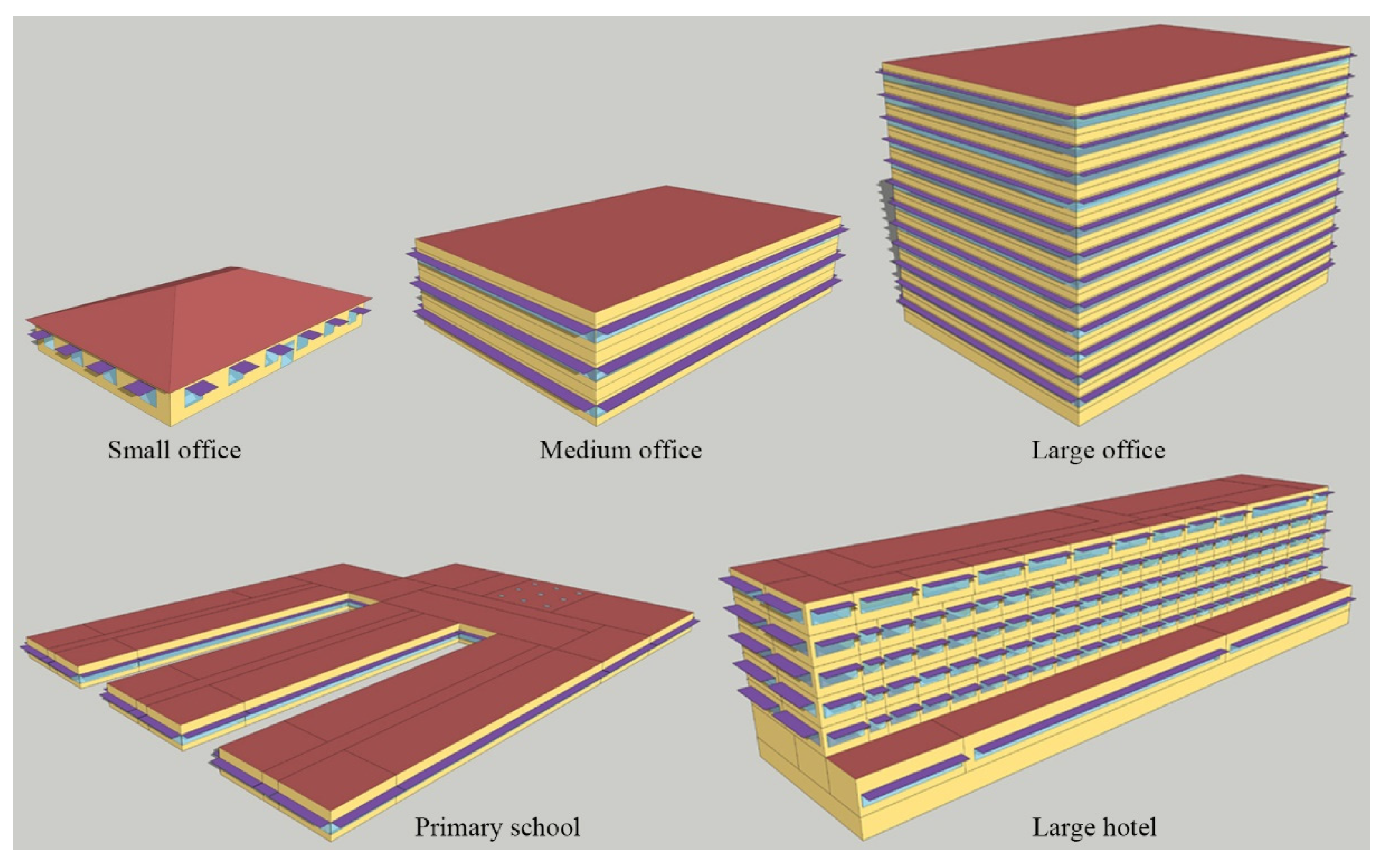

To investigate the applicability and reliability of the proposed methodology, a set of BEO problems with different properties was constructed based on the DOE benchmark commercial buildings [37]. Five new-construction building types, namely, small office, medium office, large office, primary school, and large hotel, were modified. Specifically, overhangs were added to the original DOE models to investigate their impact on building energy and thermal comfort. All these buildings were located in Baltimore, Maryland, in the 4A climate zone according to the US standard. Figure 5 shows the architectural schematic views of the five building models and Table 1 summarizes their characteristics.

Figure 5.

Architectural schematic views of the benchmark models.

Table 1.

Summary of the characteristics of the benchmark models.

3.1.2. Design Variables

In the case studies, a maximum of 30 design variables related to building energy consumption and thermal comfort were selected. Table 2 lists their symbols, units, and ranges of variation, as well as step sizes for discrete variables. As shown, one variable describes building orientation, which is continuous and has a great impact on the solar heat gain through the facade. Two variables are related to the cooling and heating set-point temperatures, which significantly impact the energy consumed by the mechanical system and the indoor thermal comfort. Optimizing the heat transfer through opaque walls and roofs is of great significance in designing high performance buildings. In this study, the conductivity and thickness of the insulation layers in the roofs and opaque walls were described and optimized by ten continuous design variables. Windows are usually the least insulated part of the envelope and determine the amount of indoor daylight and the view of the outside. Therefore, in the present case studies, the window sizes and the thermal properties of the windows (e.g., the solar heat gain coefficient (SHGC) and U-Factor), which have a critical impact on the indoor thermal comfort and energy, were considered. To easily define the size of a window, its lower position was fixed at 0.9 m, and only its upper position was changed. In addition, the windows on the same facade have equal areas. As shown in Figure 5, overhangs exist along the upper sides of the windows. The depths of the overhangs and their transmittances were optimized; these control the light and heat that enter the building through the windows. Moreover, the depths of the overhangs in the same façade were kept the same.

Table 2.

Specifications of the optimization variables.

As introduced in Section 2.1, the multidimensional property of a BEO problem determines how difficult it is for optimization algorithms to search the entire design space. In this study, three types of optimization problems with different dimensions, i.e., 10, 20, and 30 design variables, were constructed for each building model shown in Figure 5. Specifically, for problems with n design variables, the first n design variables listed in Table 2 were selected. In summary, a total of 15 BEO problems (five building models × three dimensions) were constructed in this study.
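The construction of the 15 problems amounts to a Cartesian product of building models and problem dimensions. The sketch below illustrates this bookkeeping; the names `BUILDINGS`, `DIMENSIONS`, `select_variables`, and `build_problems` are introduced here for illustration, and the placeholder variable names `x1` to `x30` stand in for the symbols of Table 2.

```python
from itertools import product

BUILDINGS = ["small office", "medium office", "large office", "primary school", "large hotel"]
DIMENSIONS = [10, 20, 30]


def select_variables(all_variables, n):
    """Keep the first n design variables, in the order in which they are listed."""
    return all_variables[:n]


def build_problems(all_variables):
    """One problem per (building model, dimension) pair."""
    return [
        {"building": building, "variables": select_variables(all_variables, n)}
        for building, n in product(BUILDINGS, DIMENSIONS)
    ]


# Placeholder names standing in for the 30 design variables of Table 2.
variables = [f"x{i}" for i in range(1, 31)]
problems = build_problems(variables)
```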

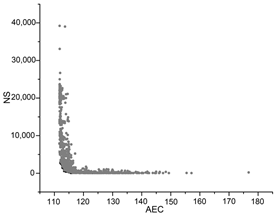

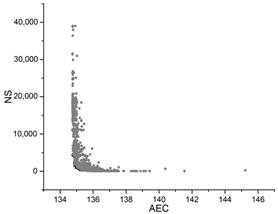

3.1.3. Optimization Objectives

The optimization objectives in BEO research include energy, cost, comfort, etc., among which energy is considered most often. In the numerical experiments, we constructed two types of problems, i.e., single-objective and multi-objective problems. In particular, minimizing the annual energy consumption (AEC) was defined for single-objective optimizations, while two conflicting objectives, minimizing the AEC vs. maximizing the indoor thermal comfort (ITC), were considered simultaneously in multi-objective optimizations. The AEC is calculated as the total amount of energy consumed throughout the year. The common methods of evaluating indoor thermal comfort include the predicted percentage of dissatisfied (PPD) (0–100%) and the predicted mean vote (PMV). They are assessed based on the Fanger thermal comfort model [38], which is widely used in air-conditioned buildings [11]. In this study, the annual averaged PPD (AAPPD) over all zones during occupied times for one year was used and is calculated as follows:

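$$
\mathrm{AAPPD} = \frac{1}{12\,n} \sum_{i=1}^{n} \sum_{t=1}^{12} \mathrm{PPD}_{i,t} \tag{3}
$$

where $n$ is the total number of conditioned zones and $\mathrm{PPD}_{i,t}$ is the average PPD in zone $i$ in the $t$th month of a year. In this case, maximizing the thermal comfort is converted into minimizing the AAPPD.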
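As a small worked example, the function below computes the AAPPD under the assumption that it is the arithmetic mean of the monthly zone values over all conditioned zones and all 12 months (an interpretation of the definitions above). The function name and the two-zone input are hypothetical.

```python
def annual_averaged_ppd(ppd):
    """ppd[i][t] is the average PPD (%) of zone i in month t; return the mean over both."""
    n_zones = len(ppd)
    n_months = len(ppd[0])
    total = sum(sum(zone) for zone in ppd)
    return total / (n_zones * n_months)


# Hypothetical building with two conditioned zones and constant monthly PPD values.
example = [[10.0] * 12, [20.0] * 12]
aappd = annual_averaged_ppd(example)
```

For this input the result is 15%, the mean of the two zone averages.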

3.2. Step 2: Developing Metamodels of the Benchmark BEO Problems

3.2.1. Creating ANN Modeling Datasets

An important preliminary step in ANN modeling is to create training data sets. In this study, the Latin hypercube sampling (LHS) method embedded in the modeFRONTIER software was applied to create the design samples for each benchmark problem.

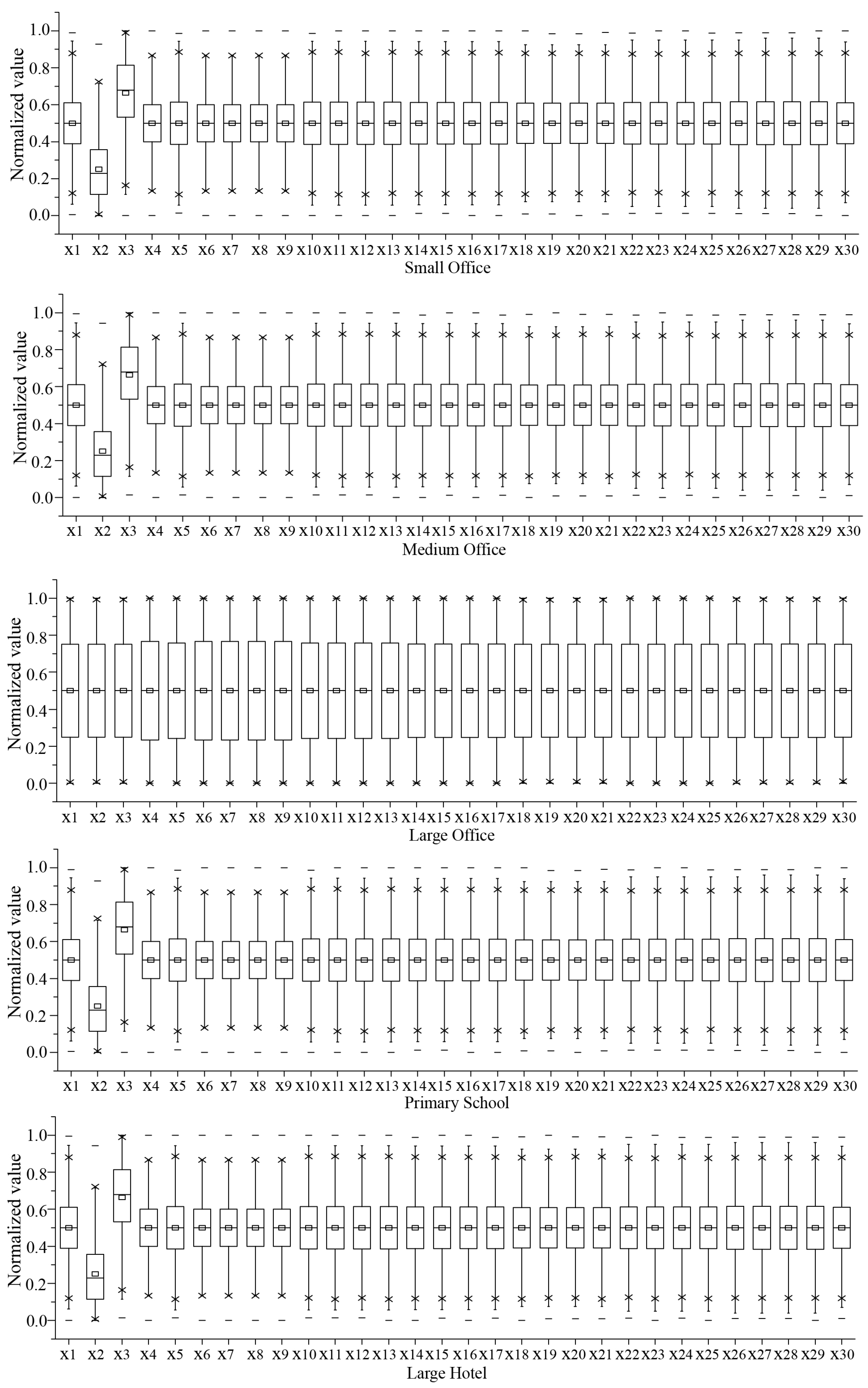

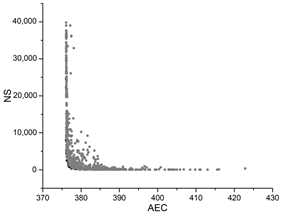

EnergyPlus was integrated with modeFRONTIER to automatically simulate the AEC and AAPPD of the samples. McKay [39] and Asadi et al. [40] found that the search space is adequately characterized when the number of cases sampled using LHS exceeds twice the number of design parameters. To exceed this threshold by a wide margin, the number of samples gathered for each BEO problem was set to 50 times the number of inputs times the number of outputs. For example, for a bi-objective optimization problem with 30 design variables, 3000 design samples were generated (50 × 30 × 2). The time consumed for objective simulation of the design samples is summarized in Table 3. Finally, the input (sample designs) and output (corresponding AEC and AAPPD) data were imported into the neural network toolbox of MATLAB to train and validate the ANN models. The normalized sample distribution of the benchmark problems with 30 design variables is illustrated in Figure 6. As shown, the value range of each variable is uniformly covered.
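The sample-size rule and the stratification idea behind LHS can be illustrated with a short standard-library sketch. This is not the modeFRONTIER implementation used in the study; `n_samples` and `latin_hypercube` are illustrative names, and the bounds in the example are hypothetical.

```python
import random


def n_samples(n_inputs, n_outputs, factor=50):
    """Sample size rule from the text: factor x number of inputs x number of outputs."""
    return factor * n_inputs * n_outputs


def latin_hypercube(n, bounds, seed=0):
    """Return n points; each variable's range is cut into n equal strata, each sampled once."""
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # Draw one random point inside each of the n strata, then shuffle the strata order
        # so that strata are paired randomly across variables.
        strata = [(k + rng.random()) / n for k in range(n)]
        rng.shuffle(strata)
        columns.append([low + u * (high - low) for u in strata])
    # Transpose the per-variable columns into a list of sample points.
    return [list(point) for point in zip(*columns)]


# Hypothetical example: 10 samples of two variables, one normalized and one in degrees.
points = latin_hypercube(10, [(0.0, 1.0), (0.0, 360.0)], seed=1)
```

Because every stratum of every variable is sampled exactly once, each variable's range is covered evenly regardless of the number of dimensions, which matches the uniform coverage described above.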

Table 3.

ANN training and validation results.

Figure 6.

Sample distribution of benchmark problems with 30 design variables.

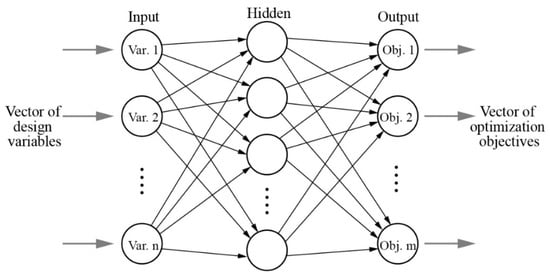

3.2.2. ANN Training and Validation

Before ANN modeling for a benchmark problem, as suggested by Shahin et al. [41], the sampling dataset was randomly divided into a training set, a validation set, and a test set in the ratio of 70%, 15%, and 15%, respectively. As shown in Figure 7, the ANN employed in this study is a three-layer feedforward network containing one hidden layer. The neurons in the input layer receive data from the design variables, while the neurons in the output layer provide the predictions of the design objectives. The number of neurons in the hidden layer was determined by trial and error according to the average relative error of the ANN predictions (Table 3). The Levenberg–Marquardt backpropagation algorithm [42,43] was applied to train the network. The transfer functions included a sigmoidal function for the hidden layer and a linear function for the output layer. Similar network configurations have been employed in previous notable studies concerning BEO with very sound results [44,45]. The training process stopped automatically once the mean square error (MSE) of the validation samples stabilized. Specifically, the MSE is the average squared difference between the original EnergyPlus simulations and the ANN predictions. Lower MSE values are better, and zero means there is no error. Furthermore, the coefficient of correlation (R) between the predictions and the original simulations was calculated to further assess the ANN model. Since the test set did not participate in training, it was employed to independently assess the network performance during and after training by considering the distribution of the MSE and R. Table 3 reports the ANN testing results for each metamodel. As shown in Table 3, the R values were all very close to 1 for each benchmark BEO problem, and the MSEs were quite small, demonstrating close agreement between the simulations and the predictions.

Figure 7.

Structure of a three-layer feedforward neural network.

3.3. Step 3: Hyperparameter Optimization of the Test Algorithms

3.3.1. Test Algorithms and Hyperparameters

This study investigates three commonly used single-objective optimization algorithms, i.e., the GA, PSO, and the simulated annealing (SA) algorithm, and one multi-objective optimization algorithm, namely, the MOGA. All algorithms were used as implemented in the Global Optimization Toolbox of MATLAB. Detailed descriptions of each algorithm and their working strategies are provided in Ref. [46]. Table 4 lists the hyperparameters of the algorithms with their descriptions, ranges, and default values in MATLAB, which serve as the baseline for comparison.

Table 4.

Hyperparameters of the test optimization algorithms, their ranges, and default values in MATLAB.

3.3.2. Performance Evaluation Criteria of the Test Algorithms

In our previous works, a set of criteria was proposed to evaluate the overall performance of an optimization algorithm [22,23]. As each criterion reflects a different performance aspect of an algorithm, there is no common agreement that one criterion is more important than the others. As suggested in Ref. [23], the importance of each criterion depends mainly on the specific requirements of the users. In engineering practice, the most common demand on optimization algorithms is to find a desired design within a restricted runtime. Therefore, this study mainly focuses on two conflicting efficacy aspects, i.e., the quality of the optimal design obtained by the algorithm and the computing time. Generally, if an algorithm is pressured to converge quickly to an optimum, it will explore less of the searchable design space and may converge to a mediocre optimum. Conversely, if the algorithm strives to diversify the areas it explores in search of better designs, it may consume more computing time in evaluating the explored designs, especially when using simulation models with a high computational cost. In this sense, an algorithm that converges quickly and locates the desired optimum is preferred.

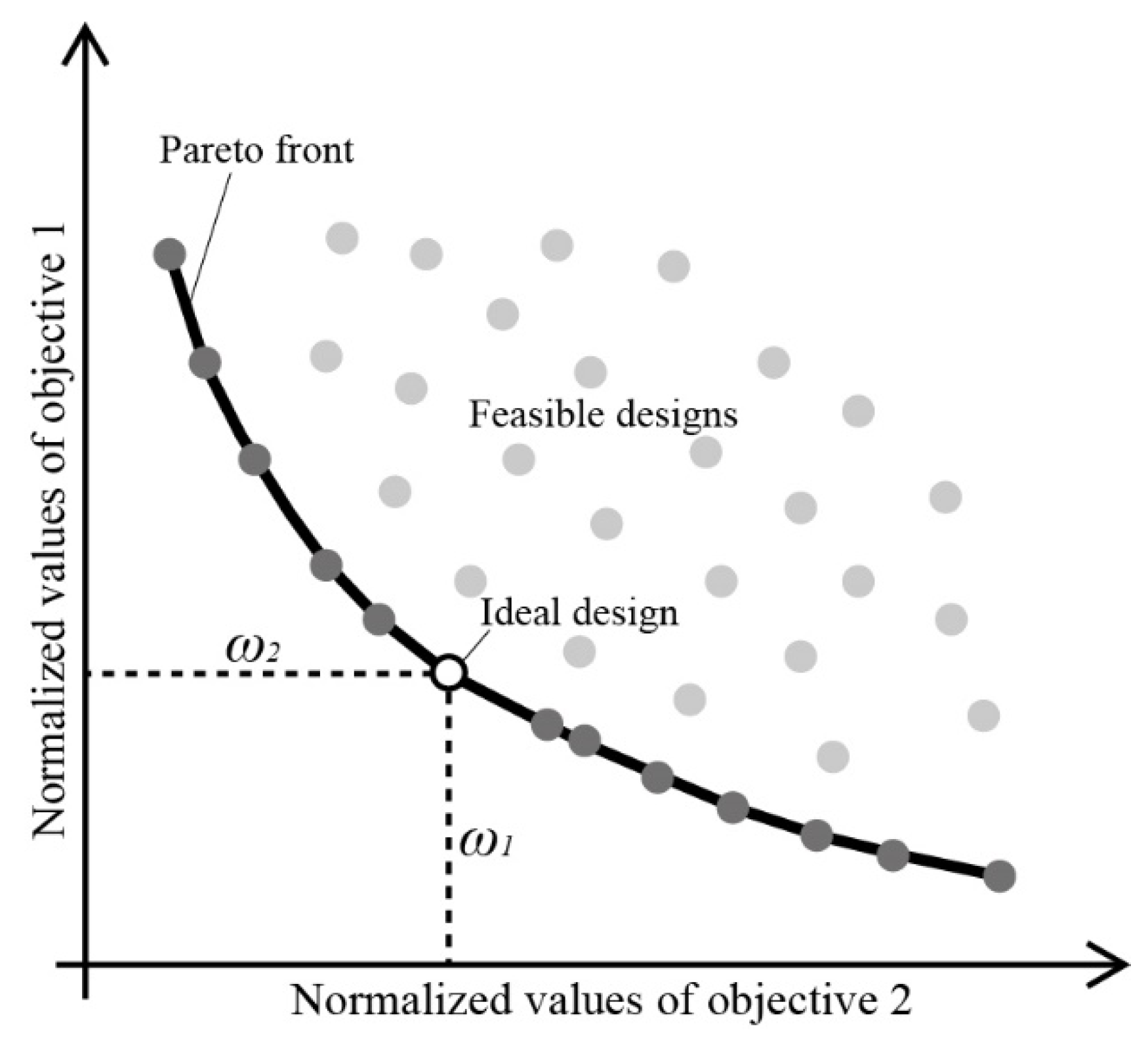

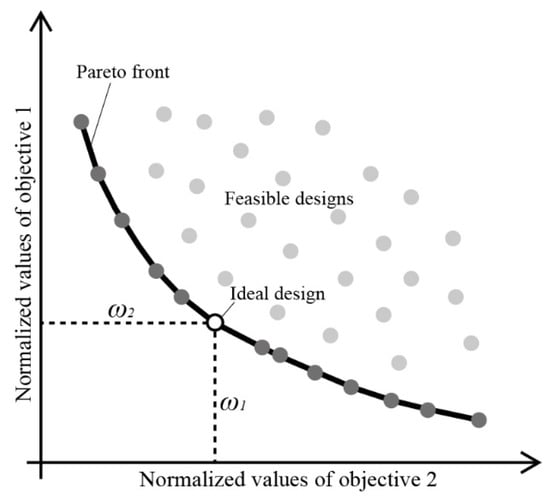

For a single-objective algorithm, which locates a unique optimum in an optimization run, the quality of the located optimal design is evaluated directly by its objective value. A multi-objective algorithm instead locates a set of nondominated solutions in an optimization run. In that case, one possible method is to first identify the ideal Pareto design on the Pareto front by the weighted sum model (WSM) method coupled with min-max normalization (Figure 8), and then evaluate the quality of the selected ideal design by assessing its objective values. As a result, the ideal design satisfies (in the minimization case) Equation (4):

where $R^{*}_{\text{WSM-score}}$ is the WSM score of the ideal design, $f_{ij}$ is the objective value of the $i$th Pareto-optimal design in the $j$th optimization objective, $f_j^{\min}$ and $f_j^{\max}$ are, respectively, the minimum and maximum values of the $j$th optimization objective that the optimization run reaches, $\omega_j \ge 0$ is the importance of the $j$th objective, $n$ is the total number of Pareto-optimal designs, and $m$ is the number of optimization objectives. The computing time of an optimization run is evaluated by the total number of building simulations performed when it terminates, which reflects the number of designs evaluated by the algorithm during the run. All the test algorithms terminated when either of the following two conditions was met. The first is that, over MaxStallGenerations generations or MaxStallIterations iterations, the average relative change of the best objective value falls to FunctionTolerance or below, which was set to $10^{-3}$. The second is that the total number of objective function evaluations reaches 3000, which corresponds to the maximum number of samples generated to build metamodels in Section 3.2.1.
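Read from the symbol definitions above, the WSM score of the $i$th Pareto-optimal design is the weighted sum over $j$ of $\omega_j (f_{ij} - f_j^{\min}) / (f_j^{\max} - f_j^{\min})$, and the ideal design is the one with the smallest score. The Python sketch below illustrates this reading under stated assumptions: the function names and the four-point Pareto front are hypothetical, and the minimum and maximum of each objective are taken over the designs passed in rather than over the whole optimization run.

```python
import numpy as np

def wsm_scores(objectives, weights):
    """WSM score of each design; rows are designs, columns are minimized objectives."""
    f = np.asarray(objectives, dtype=float)
    w = np.asarray(weights, dtype=float)
    f_min = f.min(axis=0)
    f_max = f.max(axis=0)
    # Guard against a zero range when all designs share the same objective value.
    span = np.where(f_max > f_min, f_max - f_min, 1.0)
    normalized = (f - f_min) / span  # min-max normalization to [0, 1]
    return normalized @ w

def ideal_design_index(objectives, weights):
    """Index of the design with the smallest WSM score."""
    return int(np.argmin(wsm_scores(objectives, weights)))

# Hypothetical Pareto front with two objectives to minimize.
front = np.array([
    [100.0, 30.0],
    [110.0, 20.0],
    [130.0, 12.0],
    [160.0, 10.0],
])
scores = wsm_scores(front, weights=[1.0, 1.0])
best = ideal_design_index(front, weights=[1.0, 1.0])
print(scores, best)
```

For this made-up front, the third design has the smallest score (0.5 + 0.1 = 0.6) and is therefore selected. Changing the weights shifts the selection toward whichever objective is given more importance.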

Figure 8.

Identifying the ideal design from the Pareto front based on the WSM.

3.3.3. Method for Identifying the Specific Optimal HPS from the Pareto Front of NSGA-II

Since the outer loop shown in Figure 4 is a multi-objective optimization run driven by NSGA-II, it yields multiple nondominated solutions on the Pareto front when NSGA-II terminates, and each solution corresponds to an HPS of the test algorithm. In theory, every HPS on the Pareto front is desirable, but one ideal HPS must still be selected for application and evaluation. Thus, the WSM method is used to make a multi-objective decision among these nondominated solutions. To eliminate the unit differences between the two efficacy evaluation criteria of the test algorithms, i.e., the quality of the obtained optimum and the computing time, the value of each criterion is first normalized using the min-max normalization method as in Equation (5). Then, a weight is assigned to each criterion based on its importance. Finally, the sum of the products of the normalized value of each evaluation criterion and its weight is calculated as in Equation (6). The ideal HPS identified from the Pareto front of NSGA-II is the one with the smallest R value.

where $n$ is the total number of Pareto-optimal solutions found by NSGA-II, $m$ is the number of algorithm efficacy evaluation criteria, which is 2 in this study, and $w_j$ is the weight of the $j$th evaluation criterion. This study assumes that the quality of the obtained optimum and the computing time are equally important for the test algorithms, so their weights are both set to 1.
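The selection step can be sketched in Python as follows. This is an illustrative sketch, not the NSGA-II implementation used in the study: the helper `nondominated_mask` and the five candidate (optimum objective value, number of simulations) pairs are hypothetical, and both criteria are assumed to be minimized.

```python
import numpy as np

def nondominated_mask(points):
    """Boolean mask of rows not dominated by any other row (all criteria minimized)."""
    p = np.asarray(points, dtype=float)
    mask = np.ones(len(p), dtype=bool)
    for i in range(len(p)):
        # A row dominates row i if it is no worse in every criterion
        # and strictly better in at least one.
        no_worse = np.all(p <= p[i], axis=1)
        better = np.any(p < p[i], axis=1)
        mask[i] = not np.any(no_worse & better)
    return mask

# Hypothetical results, one row per candidate HPS:
# (objective value of the obtained optimum, number of simulations).
candidates = np.array([
    [95.0, 2400.0],
    [96.0, 1500.0],
    [97.0, 1800.0],  # dominated by the second row
    [99.0, 600.0],
    [99.5, 900.0],   # dominated by the fourth row
])
mask = nondominated_mask(candidates)
front = candidates[mask]

# Min-max normalize each criterion over the front, then sum with weights of 1.
normalized = (front - front.min(axis=0)) / (front.max(axis=0) - front.min(axis=0))
r_values = normalized.sum(axis=1)
ideal_hps_result = front[np.argmin(r_values)]
print(mask, r_values, ideal_hps_result)
```

In this made-up case, three candidates are nondominated, and the one with results (96.0, 1500.0) has the smallest R value (0.25 + 0.5 = 0.75), so its HPS would be selected. With equal weights, the choice favors balanced trade-offs over either extreme of the front.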

4. Results and Discussion

4.1. Numerical Experiment Results

For the different benchmark problems, the hyperparameter optimization convergence graphs of the four test algorithms are illustrated in Table A1 of Appendix A. The ideal HPSs finally identified for the test algorithms are listed in Table 5, Table 6, Table 7 and Table 8. For BEO problems with complexities similar to those of the 15 benchmark problems constructed in this study, Table 5, Table 6, Table 7 and Table 8 offer guidance for users in setting the hyperparameters of the four test algorithms. In this case, users can use detailed simulation models to calculate building performance without developing metamodels, as the listed HPSs already represent trade-offs between the quality of the obtained optimum and the computing time.

Table 5.

Optimal HPSs for the GA.

Table 6.

Optimal HPSs for the PSO.

Table 7.

Optimal HPSs for the SA.

Table 8.

Optimal HPSs for the MOGA.

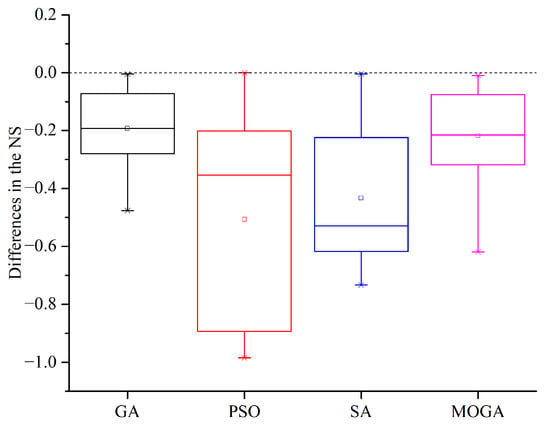

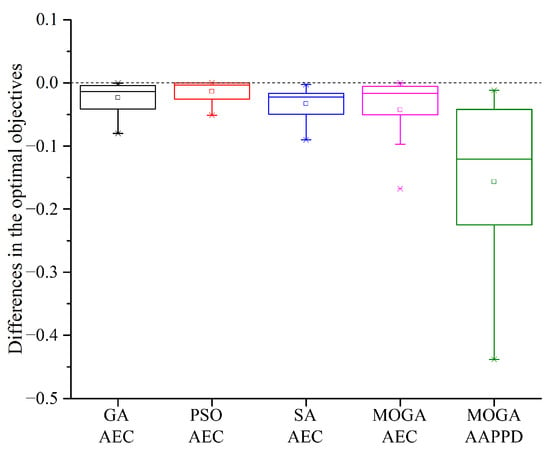

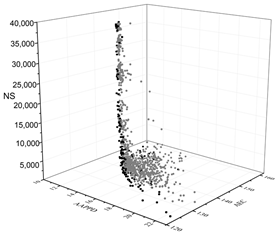

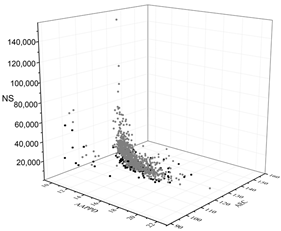

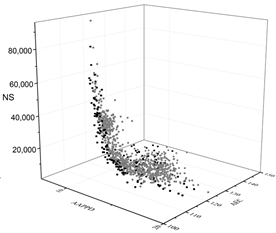

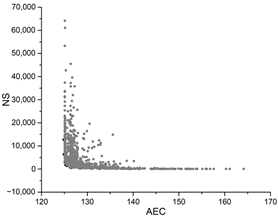

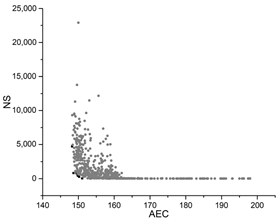

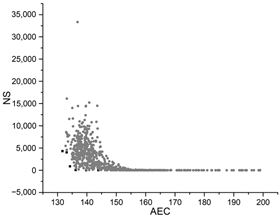

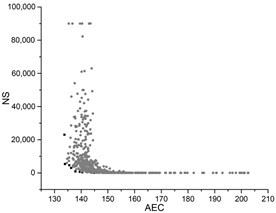

4.2. Comparison with the Default HPSs in MATLAB

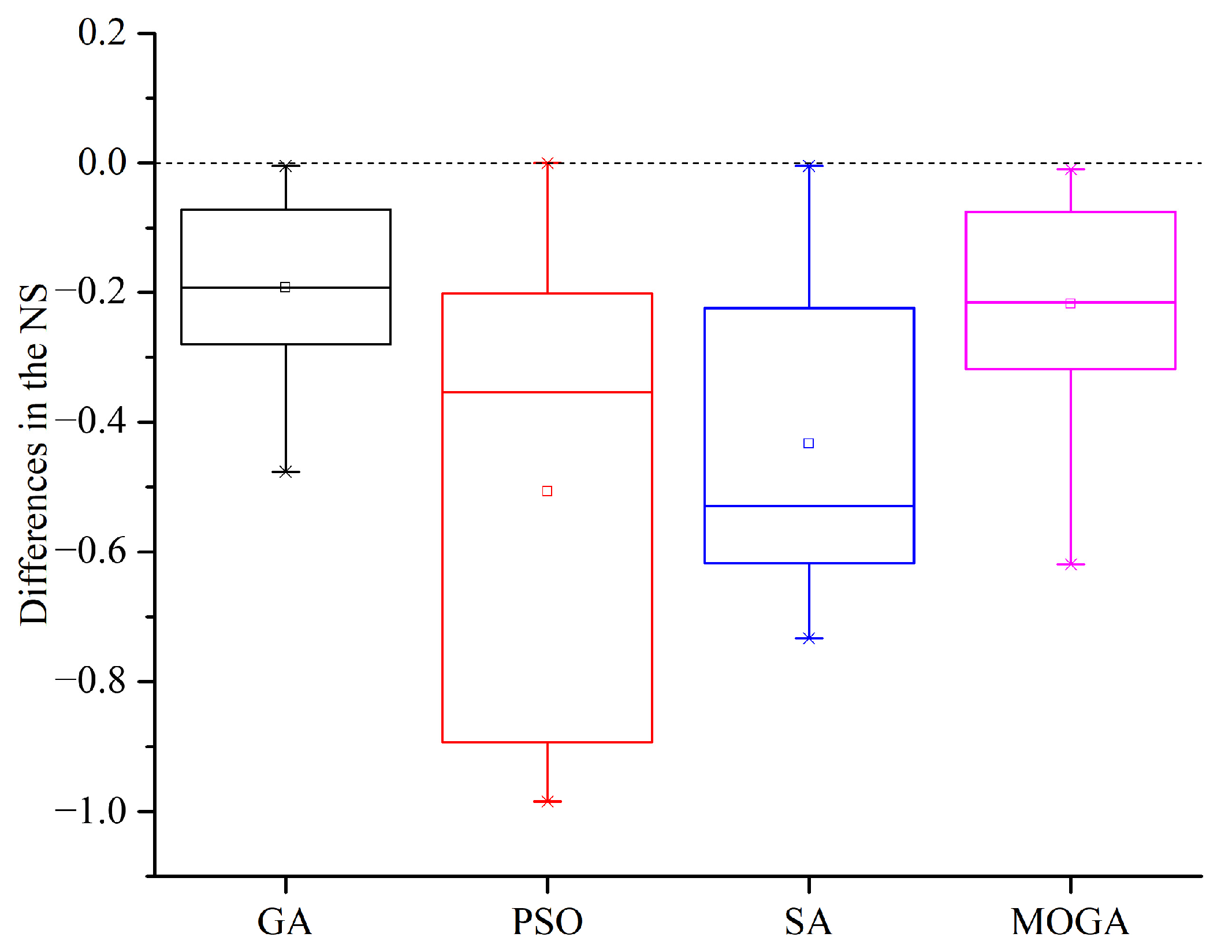

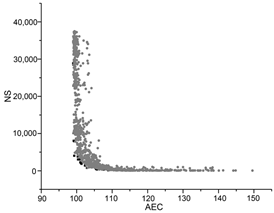

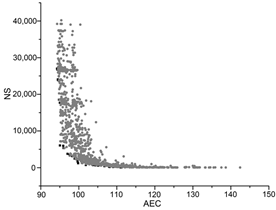

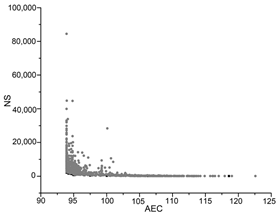

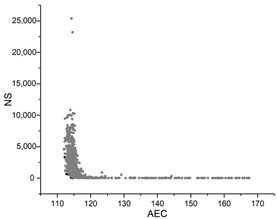

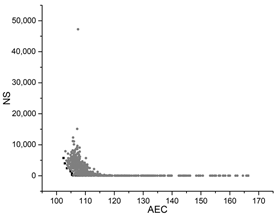

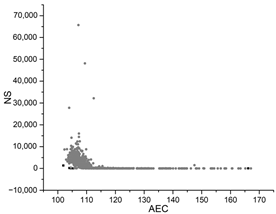

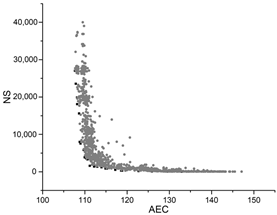

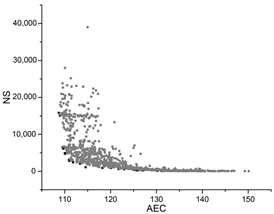

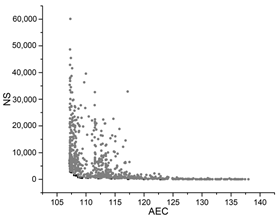

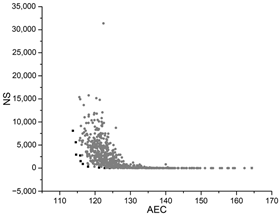

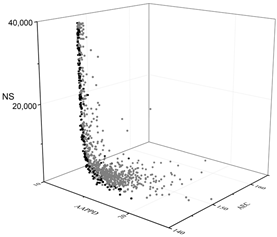

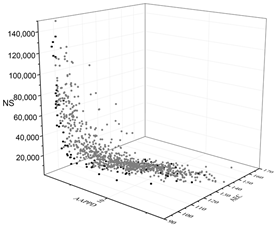

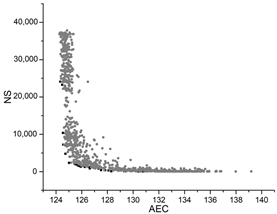

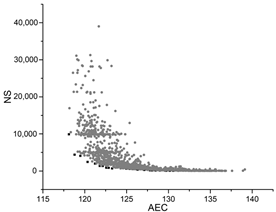

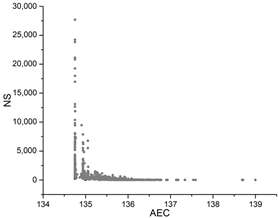

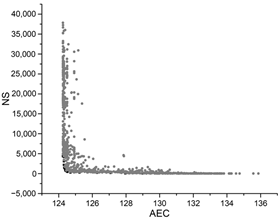

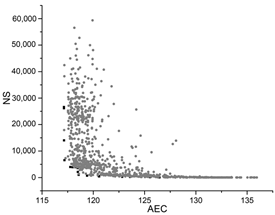

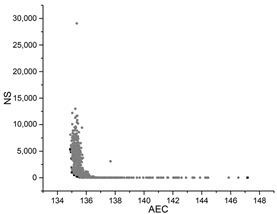

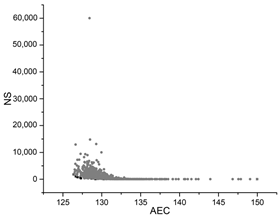

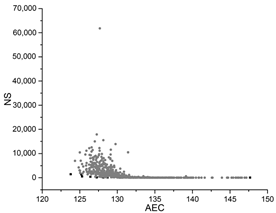

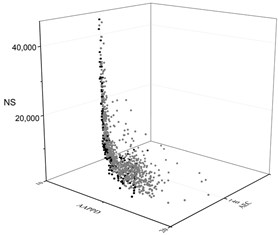

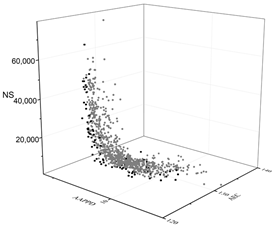

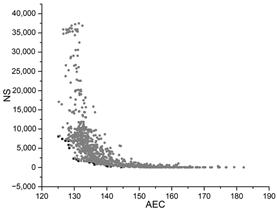

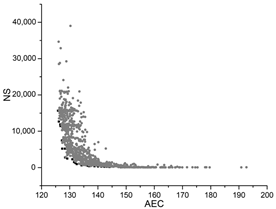

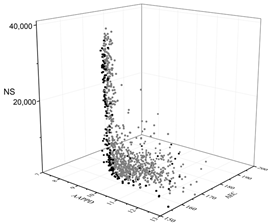

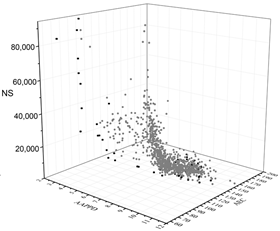

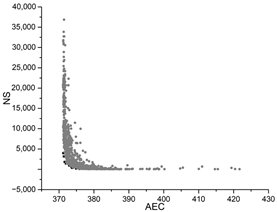

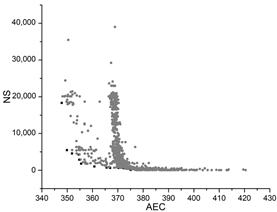

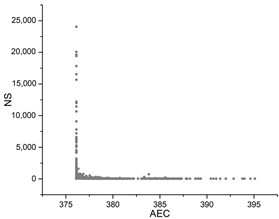

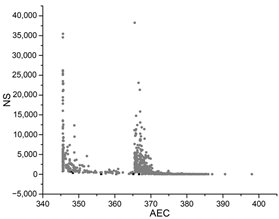

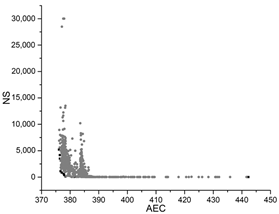

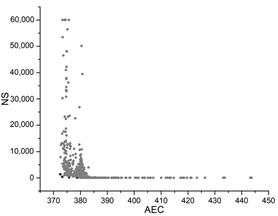

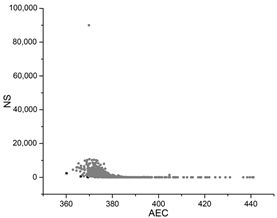

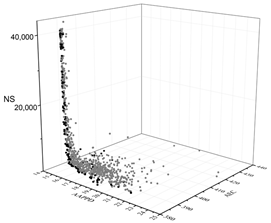

To validate the proposed methodology, this section compares the efficacy of the test algorithms on each evaluation criterion when they use the identified ideal HPSs and the default HPSs in MATLAB. As shown in Table 9, the number of simulations (NS) was reduced, often substantially, with reduction rates ranging from 0.5% to 47.6% for the GA, 0% to 98.48% for PSO, 0.47% to 73.27% for SA, and 0.97% to 61.87% for the MOGA. A reduction in the number of simulations is particularly valuable for BEO problems that rely on time-consuming detailed simulation models. As shown in Figure 9, across the 15 benchmark problems, the NS reduction of PSO is generally large, indicating that the running time of PSO depends more strongly on its hyperparameters and that hyperparameter optimization lets PSO find the final optimum faster. Moreover, among the other three algorithms, SA benefited the most from hyperparameter optimization in terms of NS, with generally the largest NS reduction.
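The reduction rates quoted above are presumably relative to the default HPS. A minimal Python sketch of that arithmetic follows; the formula is an assumption about how the rates in Table 9 were computed, and the function name and simulation counts are hypothetical.

```python
def reduction_rate(default_value, tuned_value):
    """Percentage reduction of tuned_value relative to default_value."""
    if default_value <= 0:
        raise ValueError("default_value must be positive")
    return 100.0 * (default_value - tuned_value) / default_value

# Hypothetical simulation counts for one algorithm on one benchmark problem.
ns_default = 3000  # run with the default HPS
ns_tuned = 1200    # run with the optimized HPS
rate = reduction_rate(ns_default, ns_tuned)
print(f"NS reduction: {rate:.2f}%")
```

A rate of 0% means the optimized HPS needed as many simulations as the default one, which is the lower bound reported for PSO.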

Table 9.

Differences in the quality of the optimum and the number of simulations when the test algorithms use the optimal HPSs and the default ones.

Figure 9.

Illustration of the differences in the NS when the test algorithms use the optimal HPSs and the default ones.

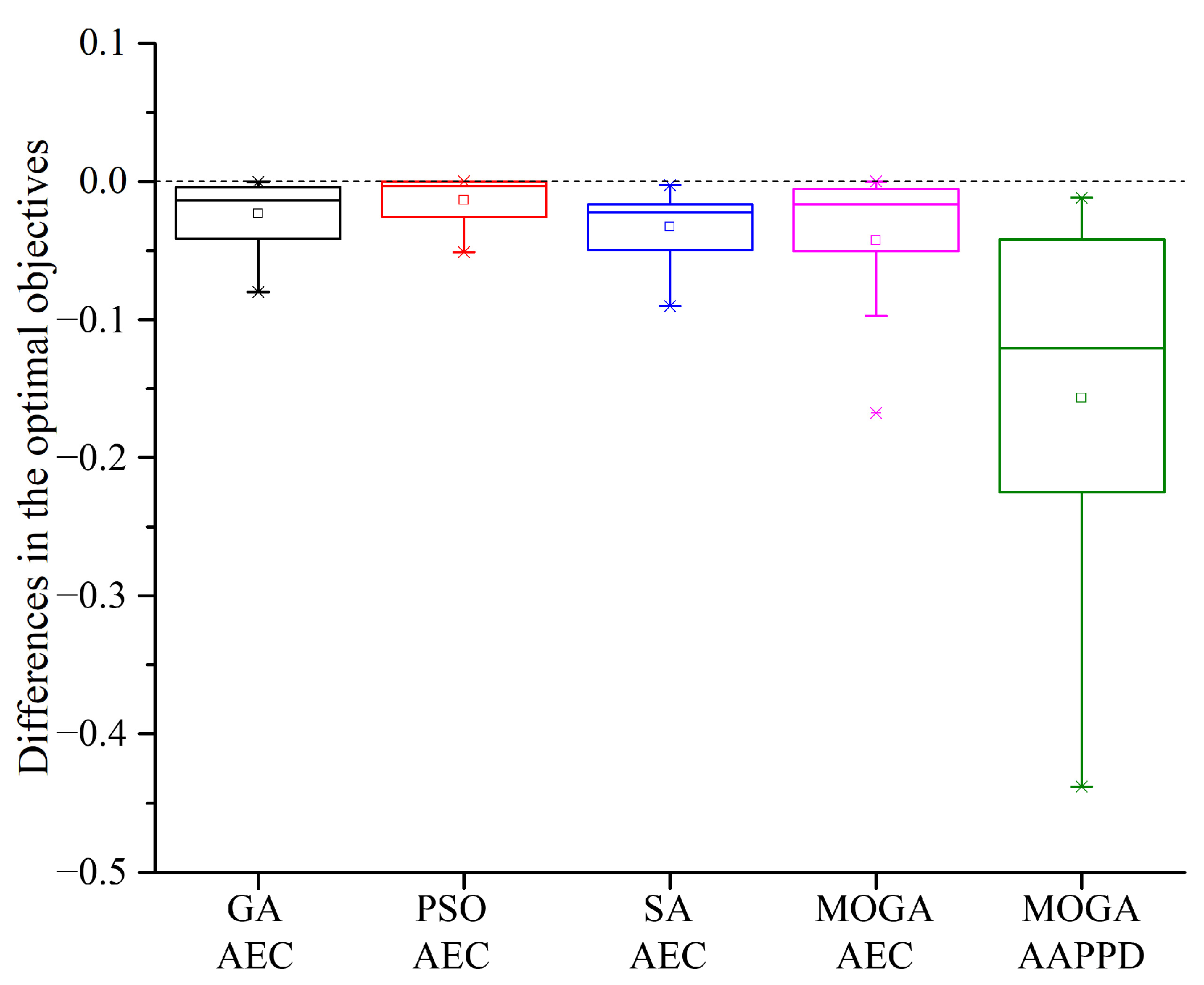

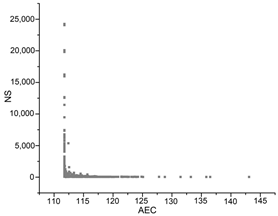

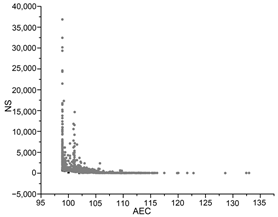

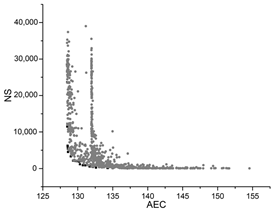

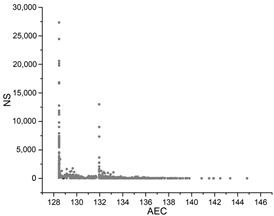

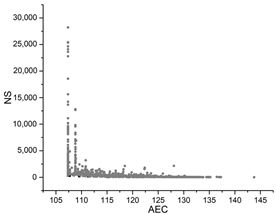

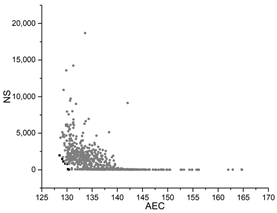

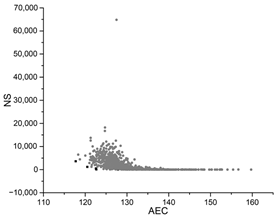

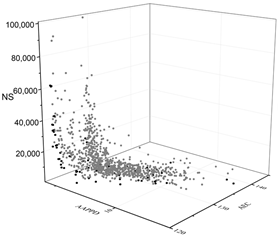

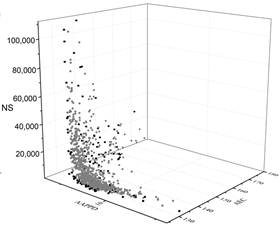

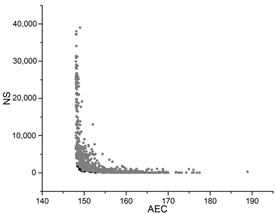

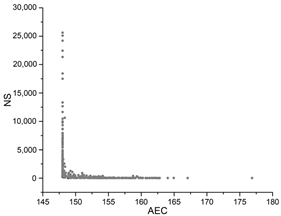

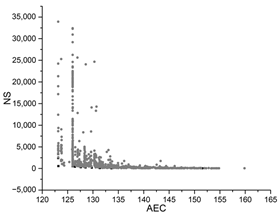

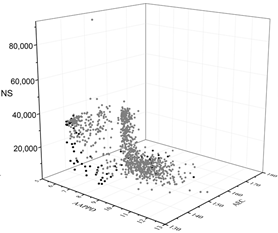

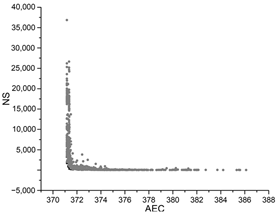

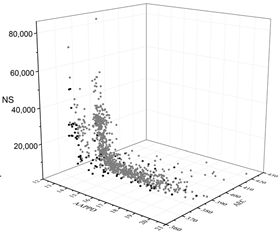

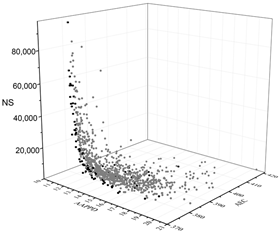

Regarding the quality of the optimums obtained by the test algorithms when solving the 15 benchmark problems, compared with the default HPSs, the AEC variation when using the optimal HPSs ranges from −7.99% to −0.04% for the GA, from −5.13% to 0% for PSO, from −9.04% to −0.28% for SA, and from −16.76% to 0% for the MOGA. The AAPPD variation of the MOGA ranges from −43.84% to −0.02%. As illustrated in Figure 10, the MOGA generally benefited the most from hyperparameter optimization, with the highest reductions of AEC and AAPPD. Among the three single-objective algorithms, hyperparameter optimization was most favorable to SA, followed by the GA. By contrast, PSO showed the smallest improvement in the quality of the optimum, indicating that its solution quality is relatively insensitive to its hyperparameters; therefore, hyperparameter optimization mainly benefits the running time of PSO.

Figure 10.

Illustration of the differences in the optimal objectives when the test algorithms use the optimal HPSs and the default ones.

4.3. Efficacy Comparison between the Test Algorithms

To compare the efficacy of the three single-objective test algorithms when they use the identified optimal HPSs, we first ranked them on the two performance assessment criteria; the ranking results are given in Table 10 and Table 11. As shown in Table 10, for the same benchmark problem, PSO generally found a better design than the other two algorithms and always ranked first, while SA exhibited the worst performance, always ranking third. In addition, according to Table 11, the GA performed the worst in terms of the number of simulations, generally requiring the largest number of simulations to find the optimum. In summary, considering the two conflicting efficacy criteria of optimal design quality and computing time, PSO performed the best in general and is preferentially recommended for solving BEO problems. Furthermore, according to Table 6, there is a clear positive correlation between the NS required by PSO and the dimension of the BEO problem, indicating that PSO needs more time to locate the optimum as the number of design variables increases. In contrast, the NS required by the GA and SA does not increase significantly as the problem dimension increases, which indicates that, when the search space is expanded, these two algorithms can still find the optimum without extending the running time if their hyperparameters are tuned precisely.
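The per-problem ranking behind Table 10 and Table 11 can be sketched in Python as below. The values are hypothetical and do not come from the paper, and the tie rule (tied algorithms share the better rank) is an assumption, since the text does not state how ties were handled.

```python
def rank_algorithms(values):
    """Map each algorithm to its rank; rank 1 is the smallest (best) value."""
    ordered = sorted(values.items(), key=lambda item: item[1])
    ranks = {}
    for position, (name, value) in enumerate(ordered, start=1):
        previous = ordered[position - 2] if position > 1 else None
        if previous is not None and value == previous[1]:
            ranks[name] = ranks[previous[0]]  # tie: share the better rank
        else:
            ranks[name] = position
    return ranks

# Hypothetical results for one benchmark problem (both criteria are minimized).
optimum_quality = {"GA": 101.2, "PSO": 100.4, "SA": 103.9}
simulations = {"GA": 2100, "PSO": 900, "SA": 1500}

print(rank_algorithms(optimum_quality))
print(rank_algorithms(simulations))
```

Repeating this for every benchmark problem and both criteria gives one rank per algorithm, problem, and criterion, which is the form in which the two ranking tables are described.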

Table 10.

Ranking results of the optimal design quality obtained by the three single-objective test algorithms when using the optimal HPSs.

Table 11.

Ranking results of the number of simulations required by the three single-objective test algorithms when using the optimal HPSs.

5. Conclusions

5.1. Achievements

Optimization algorithms are essential to the effectiveness and efficiency of the BEO technique. Furthermore, the hyperparameters of algorithms are significant to their efficacy and should be set carefully based on the specific BEO problem. Aiming to avoid the failure of the BEO technique because of improper HPSs, this study proposes a metamodel-based methodology to optimize the HPSs of algorithms. It consists of three consecutive steps, i.e., constructing the BEO problem to be solved, developing the metamodel of the problem, and optimizing the algorithm hyperparameter values with NSGA-II. The method can automatically tune the HPSs of the algorithm without manual adjustment, drive the metamodel-based BEO workflow, and eventually output the ideal HPS that makes the algorithm most efficient. To validate the developed method, 15 benchmark BEO problems with five building models and three categories of design variables were constructed to perform numerical experiments. For each problem, the HPSs of four commonly used algorithms, i.e., the GA, PSO, SA, and the MOGA, were optimized. The results demonstrate the following findings concerning the algorithm hyperparameters:

- In terms of the two conflicting criteria, namely, the optimal design quality and the computing time, the efficiency of the four test algorithms was greatly improved when using the optimal HPSs compared with the default ones in MATLAB. For the BEO problems with similar complexity to the 15 benchmark problems, Table 5, Table 6, Table 7 and Table 8 are recommended for setting the hyperparameters of the GA, PSO, SA, and the MOGA, respectively;

- Among the four test algorithms, the MOGA benefited the most from hyperparameter optimization in terms of the quality of the obtained optimum, while PSO benefited the least. In terms of the computing time, PSO benefited the most, especially for the optimization problems containing 10 design variables;

- Among the three single-objective optimization algorithms, i.e., the GA, PSO, and SA, PSO performed the best in general on the benchmark problems, finding a better design with less computing time, so it is preferentially recommended for solving BEO problems;

- The proposed metamodel-based methodology for hyperparameter optimization of algorithms in BEO is universal. When a BEO problem arises in which the optimization algorithms applied and the efficacy evaluation criteria of the algorithms concerned differ from those used in this paper, the method illustrated in Figure 3 is also applicable to maximize the efficacy of the algorithm on specific evaluation criteria by optimizing the HPSs of the algorithm.

5.2. Challenges and Future Works

The main challenge of the developed method is creating ANN-trained metamodels of BEO problems, which has a high technical threshold for designers. Future work aims to establish an integrated platform based on the GenOpt software that automatically connects three technical modules in series: metamodeling, algorithm hyperparameter optimization, and the BEO technique. In addition, to further explore how the optimal HPSs of algorithms are distributed across different BEO problems, more benchmark BEO problems with different properties will be constructed, the hyperparameters of more novel algorithms will be optimized, and the characteristics of the optimal HPSs will be analyzed using data mining techniques.

Author Contributions

Conceptualization, B.S. and F.L.; methodology, B.S. and F.L.; validation, B.S.; data curation, B.S. and Y.L.; writing—original draft preparation, B.S.; writing—review and editing, B.S., Y.L. and F.L.; funding acquisition, B.S. and F.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Jiangsu Province (grant number BK20220358), the Humanities and Social Sciences General Research Program of the Ministry of Education (grant number 22YJCZH149), the Science and Technology Project of Jiangsu Department Housing and Urban-Rural Development China (grant number 2019ZD001185), and the Natural Science Research of Jiangsu Higher Education Institutions of China (grant number 20KJB560027).

Data Availability Statement

Data is available at https://github.com/sbhnju/Metamodel-based-hyperparameter-optimization-of-optimization-algorithms-in-BEO (accessed on 6 January 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

The hyperparameter optimization convergence graphs of the four test algorithms.

| | Small office_10 variables | Small office_20 variables | Small office_30 variables |
|---|---|---|---|
| GA |  |  |  |
| PSO |  |  |  |
| SA |  |  |  |
| MOGA |  |  |  |
| | Medium office_10 variables | Medium office_20 variables | Medium office_30 variables |
| GA |  |  |  |
| PSO |  |  |  |
| SA |  |  |  |
| MOGA |  |  |  |
| | Large office_10 variables | Large office_20 variables | Large office_30 variables |
| GA |  |  |  |
| PSO |  |  |  |
| SA |  |  |  |
| MOGA |  |  |  |
| | Primary school_10 variables | Primary school_20 variables | Primary school_30 variables |
| GA |  |  |  |
| PSO |  |  |  |
| SA |  |  |  |
| MOGA |  |  |  |
| | Large hotel_10 variables | Large hotel_20 variables | Large hotel_30 variables |
| GA |  |  |  |
| PSO |  |  |  |
| SA |  |  |  |
| MOGA |  |  |  |

The black dots are nondominated solutions, and the gray dots are dominated solutions.

References

1. IEA. World Energy Statistics and Balances 2018; IEA: Paris, France, 2018.

2. Wetter, M.; Wright, J. Comparison of a generalized pattern search and a genetic algorithm optimization method. In Proceedings of the 8th International Building Performance Simulation Association Conference, Eindhoven, The Netherlands, 11–14 August 2003; pp. 1401–1408.

3. Barber, K.A.; Krarti, M. A review of optimization based tools for design and control of building energy systems. Renew. Sustain. Energy Rev. 2022, 160, 112359.

4. Tian, Z.; Zhang, X.; Jin, X.; Zhou, X.; Si, B.; Shi, X. Towards adoption of building energy simulation and optimization for passive building design: A survey and a review. Energy Build. 2018, 158, 1306–1316.

5. Shi, X.; Tian, Z.; Chen, W.; Si, B.; Jin, X. A review on building energy efficient design optimization from the perspective of architects. Renew. Sustain. Energy Rev. 2016, 65, 872–884.

6. Chen, X.; Yang, H.; Sun, K. Developing a meta-model for sensitivity analyses and prediction of building performance for passively designed high-rise residential buildings. Appl. Energy 2017, 194, 422–439.

7. Yu, W.; Li, B.; Jia, H.; Zhang, M.; Wang, D. Application of multi-objective genetic algorithm to optimize energy efficiency and thermal comfort in building design. Energy Build. 2015, 88, 135–143.

8. Wright, J.; Alajmi, A. The robustness of genetic algorithms in solving unconstrained building optimization problems. In Proceedings of the 9th IBPSA Conference: Building Simulation, Montréal, QC, Canada, 15–18 September 2005; pp. 1361–1368.

9. Delgarm, N.; Sajadi, B.; Kowsary, F.; Delgarm, S. Multi-objective optimization of the building energy performance: A simulation-based approach by means of particle swarm optimization (PSO). Appl. Energy 2016, 170, 293–303.

10. Kim, J.; Hong, T.; Jeong, J.; Koo, C.; Jeong, K. An optimization model for selecting the optimal green systems by considering the thermal comfort and energy consumption. Appl. Energy 2016, 169, 682–695.

11. Eisenhower, B.; O’Neill, Z.; Narayanan, S.; Fonoberov, V.A.; Mezić, I. A methodology for meta-model based optimization in building energy models. Energy Build. 2012, 47, 292–301.

12. Pang, Z.; O’Neill, Z.; Li, Y.; Niu, F. The role of sensitivity analysis in the building performance analysis: A critical review. Energy Build. 2020, 209, 109659.

13. Westermann, P.; Evins, R. Surrogate modelling for sustainable building design—A review. Energy Build. 2019, 198, 170–186.

14. Thrampoulidis, E.; Mavromatidis, G.; Lucchi, A.; Orehounig, K. A machine learning-based surrogate model to approximate optimal building retrofit solutions. Appl. Energy 2021, 281, 116024.

15. Elbeltagi, E.; Wefki, H. Predicting energy consumption for residential buildings using ANN through parametric modeling. Energy Rep. 2021, 7, 2534–2545.

16. Dagdougui, H.; Bagheri, F.; Le, H.; Dessaint, L. Neural network model for short-term and very-short-term load forecasting in district buildings. Energy Build. 2019, 203, 109408.

17. D’Amico, A.; Ciulla, G. An intelligent way to predict the building thermal needs: ANNs and optimization. Expert Syst. Appl. 2022, 191, 116293.

18. Razmi, A.; Rahbar, M.; Bemanian, M. PCA-ANN integrated NSGA-III framework for dormitory building design optimization: Energy efficiency, daylight, and thermal comfort. Appl. Energy 2022, 305, 117828.

19. Prada, A.; Gasparella, A.; Baggio, P. A comparison of three evolutionary algorithms for the optimization of building design. Appl. Mech. Mater. 2019, 887, 140–147.

20. Waibel, C.; Wortmann, T.; Evins, R.; Carmeliet, J. Building energy optimization: An extensive benchmark of global search algorithms. Energy Build. 2019, 187, 218–240.

21. Chegari, B.; Tabaa, M.; Simeu, E.; Moutaouakkil, F.; Medromi, H. Multi-objective optimization of building energy performance and indoor thermal comfort by combining artificial neural networks and metaheuristic algorithms. Energy Build. 2021, 239, 110839.

22. Si, B.; Tian, Z.; Jin, X.; Zhou, X.; Tang, P.; Shi, X. Performance indices and evaluation of algorithms in building energy efficient design optimization. Energy 2016, 114, 100–112.

23. Si, B.; Wang, J.; Yao, X.; Shi, X.; Jin, X.; Zhou, X. Multi-objective optimization design of a complex building based on an artificial neural network and performance evaluation of algorithms. Adv. Eng. Inform. 2019, 40, 93–109.

24. Si, B.; Tian, Z.; Jin, X.; Zhou, X.; Shi, X. Ineffectiveness of optimization algorithms in building energy optimization and possible causes. Renew. Energy 2019, 134, 1295–1306.

25. Hamdy, M.; Nguyen, A.T.; Hensen, J.L. A performance comparison of multi-objective optimization algorithms for solving nearly-zero-energy-building design problems. Energy Build. 2016, 121, 57–71.

26. Yang, M.-D.; Lin, M.-D.; Lin, Y.-H.; Tsai, K.-T. Multiobjective optimization design of green building envelope material using a non-dominated sorting genetic algorithm. Appl. Therm. Eng. 2017, 111, 1255–1264.

27. Chen, X.; Yang, H.; Zhang, W. Simulation-based approach to optimize passively designed buildings: A case study on a typical architectural form in hot and humid climates. Renew. Sustain. Energy Rev. 2018, 82, 1712–1725.

28. Futrell, B.J.; Ozelkan, E.C.; Brentrup, D. Optimizing complex building design for annual daylighting performance and evaluation of optimization algorithms. Energy Build. 2015, 92, 234–245.

29. Ascione, F.; Bianco, N.; De Stasio, C.; Mauro, G.M.; Vanoli, G.P. A new methodology for cost-optimal analysis by means of the multi-objective optimization of building energy performance. Energy Build. 2015, 88, 78–90.

30. Cubukcuoglu, C.; Ekici, B.; Tasgetiren, M.F.; Sariyildiz, S. OPTIMUS: Self-Adaptive Differential Evolution with Ensemble of Mutation Strategies for Grasshopper Algorithmic Modeling. Algorithms 2019, 12, 141.

31. Ramallo-González, A.P.; Coley, D.A. Using self-adaptive optimisation methods to perform sequential optimisation for low-energy building design. Energy Build. 2014, 81, 18–29.

32. Ekici, B.; Chatzikonstantinou, I.; Sariyildiz, S.; Tasgetiren, M.F.; Pan, Q.-K. A multi-objective self-adaptive differential evolution algorithm for conceptual high-rise building design. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 2272–2279.

33. Si, B.; Tian, Z.; Chen, W.; Jin, X.; Zhou, X.; Shi, X. Performance assessment of algorithms for building energy optimization problems with different properties. Sustainability 2018, 11, 18.

34. Vukadinović, A.; Radosavljević, J.; Đorđević, A.; Protić, M.; Petrović, N. Multi-objective optimization of energy performance for a detached residential building with a sunspace using the NSGA-II genetic algorithm. Sol. Energy 2021, 224, 1426–1444.

35. Ghaderian, M.; Veysi, F. Multi-objective optimization of energy efficiency and thermal comfort in an existing office building using NSGA-II with fitness approximation: A case study. J. Build. Eng. 2021, 41, 102440.

36. Andersson, M.; Bandaru, S.; Ng, A.H.C. Towards optimal algorithmic parameters for simulation-based multi-objective optimization. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 5162–5169.

37. Torcellini, P.; Deru, M.; Griffith, M.; Benne, K. DOE Commercial Building Benchmark Models. National Renewable Energy Laboratory. 2008. Available online: http://www.nrel.gov/docs/fy08osti/43291.pdf (accessed on 6 January 2023).

38. Fanger, P.O. Thermal Comfort: Analysis and Applications in Environmental Engineering; McGraw-Hill Book Company: New York, NY, USA, 1972; pp. 1–244.

39. McKay, M.D. Sensitivity and uncertainty analysis using a statistical sample of input values. In Uncertainty Analysis; Ronen, Y., Ed.; CRC Press: Boca Raton, FL, USA, 1988; pp. 145–186.

40. Asadi, E.; Silva, M.G.D.; Antunes, C.H.; Dias, L.; Glicksman, L. Multi-objective optimization for building retrofit: A model using genetic algorithm and artificial neural network and an application. Energy Build. 2014, 81, 444–456.

41. Shahin, M.A.; Maier, H.R.; Jaksa, M.B. Data division for developing neural networks applied to geotechnical engineering. J. Comput. Civ. Eng. 2004, 18, 105–114.

42. Li, L.; Fu, Y.; Fung, J.C.H.; Qu, H.; Lau, A.K. Development of a back-propagation neural network and adaptive grey wolf optimizer algorithm for thermal comfort and energy consumption prediction and optimization. Energy Build. 2021, 253, 111439.

43. Ye, Z.; Kim, M.K. Predicting electricity consumption in a building using an optimized back-propagation and Levenberg–Marquardt back-propagation neural network: Case study of a shopping mall in China. Sustain. Cities Soc. 2018, 42, 176–183.

44. Khayatian, F.; Sarto, L. Application of neural networks for evaluating energy performance certificates of residential buildings. Energy Build. 2016, 125, 45–54.

45. Ascione, F.; Bianco, N.; De Stasio, C.; Mauro, G.M.; Vanoli, G.P. Artificial neural networks to predict energy performance and retrofit scenarios for any member of a building category: A novel approach. Energy 2017, 118, 999–1017.

46. Kheiri, F. A review on optimization methods applied in energy-efficient building geometry and envelope design. Renew. Sustain. Energy Rev. 2018, 92, 897–920.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).