2.1. Review

DCASE (Detection and Classification of Acoustic Scenes and Events) is the most prestigious workshop in the machine audition research field, and the DCASE 2022 challenge includes tasks on acoustic scene classification, anomalous sound detection, sound event detection and localization, and automated audio captioning [27]. However, the publicly available DCASE audio collections cover only domestic and street scenes; construction scenes are not included. Researchers in the construction industry therefore seek to extend the sound event detection task to the recognition of on-site construction activities. Research for this purpose can be divided into traditional machine learning approaches and deep learning approaches, the primary distinction being whether or not the models use deep neural networks. Traditional machine learning approaches consist of two basic components: an acoustic feature extraction method and a statistical learning-based model [28]. Deep learning algorithms, on the other hand, take spectrogram or filterbank outputs as input features and can thus yield end-to-end models [29].
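To make the distinction between the two kinds of input concrete, the sketch below computes both from a raw waveform with NumPy: a log-mel filterbank output of the type typically fed to deep networks, and MFCCs, a classic hand-crafted feature for traditional classifiers. It is a minimal sketch, not the pipeline of any cited study; the frame length, hop size, number of mel bands, the common 2595·log10(1 + f/700) mel formula, and the unnormalized DCT-II are illustrative assumptions, and a synthetic 1 kHz tone stands in for a construction site recording.

```python
import numpy as np

def stft_magnitude(signal, n_fft=512, hop=256):
    """Magnitude STFT with a Hann window; returns (n_frames, n_fft // 2 + 1)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters evenly spaced on the mel scale; returns (n_mels, n_fft // 2 + 1)."""
    n_bins = n_fft // 2 + 1
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_mels, n_bins))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):      # rising edge of the triangle
            fb[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):     # falling edge of the triangle
            fb[m - 1, k] = (right - k) / max(right - center, 1)
    return fb

def log_mel(signal, sr, n_fft=512, hop=256, n_mels=40):
    """Filterbank output of the kind used as deep-learning input: (n_frames, n_mels)."""
    power = stft_magnitude(signal, n_fft, hop) ** 2
    return np.log(power @ mel_filterbank(sr, n_fft, n_mels).T + 1e-10)

def mfcc(log_mel_spec, n_coeffs=13):
    """Hand-crafted MFCCs: unnormalized DCT-II of the log-mel spectrum along the mel axis."""
    n_mels = log_mel_spec.shape[1]
    n = np.arange(n_mels)
    basis = np.cos(np.pi / n_mels * (n[None, :] + 0.5) * np.arange(n_coeffs)[:, None])
    return log_mel_spec @ basis.T

sr = 16000
t = np.arange(sr) / sr
x = np.sin(2 * np.pi * 1000 * t)   # synthetic 1 s, 1 kHz tone
features = log_mel(x, sr)
print(features.shape, mfcc(features).shape)  # (61, 40) (61, 13)
```

The deep learning route stops at `log_mel` and lets the network learn from it, whereas the traditional route adds further hand-designed transforms such as `mfcc` before a statistical classifier.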

In the early stages of construction sound recognition and monitoring, machine learning methods such as SVM (Support Vector Machine), HMM (Hidden Markov Model), and ELM (Extreme Learning Machine) were intensively investigated. These techniques typically depend on manually selected sound features, which are usually derived directly from 2D spectrograms, MFCCs, or Mel-spectrograms. Thomas and Li reported the identification of construction equipment using acoustic data, which is the earliest known work in this field [30]. Cho et al. proposed an HMM capable of classifying acoustic features obtained from spectrograms to identify three kinds of construction activities, and they also provided a method for displaying the identification results in BIM [31]. Five feature types were extracted from the audio using the Fourier transform, and a probabilistic model was built to determine the scraper loader's movement state. Cheng et al. employed SVM to determine whether four different types of construction machines fell into the major or minor activity categories [32]. In their work, STFT (Short-Time Fourier Transform) was used to transform audio signals into a time-frequency representation, which served as the input. They later extended the scope of this investigation to 11 types of construction machinery [12]. Cao et al. extracted spectral dynamic characteristics and classified excavator operations using an ELM [33]. Moreover, they designed a cascade classifier that could handle a wide range of sound features, including short frame energy ratio, concentration of spectrum amplitude ratio, truncated energy range, and pulse interval [34]. Zhang et al. employed a three-state HMM to classify six different kinds of concreting work [16]. In this research, MFCCs (Mel-Frequency Cepstral Coefficients) were used as the acoustic features, and a maximum classification accuracy of 94.3% was obtained. Rashid and Louis demonstrated that an SVM classifying construction activity sounds associated with modular construction could achieve an F1-score of 97% [13]. Akbal and Tuncer reported an SVM-based technique that achieved up to 99.45% accuracy using 256 extracted acoustic features [21]. Sabillon et al. developed a Bayesian model for estimating the productivity of cyclic construction activities, such as excavation, on construction sites [15]. The model's input consists of two components: an SVM audio feature classifier that identifies the current activity state, and a Markov chain that predicts the output based on temporal analysis [35]. The Bayesian model then assesses the current state of construction based on the outcomes of both inputs.
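Combining a per-frame activity classifier with a Markov chain can be illustrated with standard recursive Bayesian (forward) filtering. The sketch below is a generic formulation, not Sabillon et al.'s exact model: it assumes the classifier's per-frame scores can be used directly as emission likelihoods, and the two states, the transition matrix, and the score sequence are hypothetical values chosen for illustration.

```python
import numpy as np

def forward_filter(clf_scores, transition, initial):
    """Recursive Bayesian estimate of the activity state at each frame.

    clf_scores: (T, S) per-frame classifier scores, used here as emission likelihoods
    transition: (S, S) Markov matrix, transition[i, j] = P(state_t = j | state_{t-1} = i)
    initial:    (S,) prior over states before the first frame
    """
    beliefs = np.zeros(clf_scores.shape)
    prior = np.asarray(initial, dtype=float)
    for t, scores in enumerate(clf_scores):
        posterior = prior * scores          # update: combine prediction with classifier evidence
        posterior /= posterior.sum()        # normalize to a probability distribution
        beliefs[t] = posterior
        prior = posterior @ transition      # predict: propagate through the Markov chain
    return beliefs

STATES = ["idle", "working"]                # hypothetical activity states
transition = np.array([[0.9, 0.1],
                       [0.1, 0.9]])         # hypothetical "sticky" transitions
scores = np.array([[0.8, 0.2], [0.8, 0.2], [0.4, 0.6],
                   [0.8, 0.2], [0.2, 0.8], [0.2, 0.8]])
beliefs = forward_filter(scores, transition, initial=[0.5, 0.5])
print([STATES[i] for i in scores.argmax(axis=1)])
# ['idle', 'idle', 'working', 'idle', 'working', 'working']
print([STATES[i] for i in beliefs.argmax(axis=1)])
# ['idle', 'idle', 'idle', 'idle', 'idle', 'working']
```

The raw classifier decision flips to "working" on the isolated third frame, whereas the transition prior keeps the filtered estimate at "idle" until consistent evidence accumulates.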

Traditional machine learning techniques entail first gathering annotated training sets of sounds and then training models using supervised learning [36]. The primary benefit of these methods is that the model can be trained to an acceptable level with limited data samples. However, their accuracy depends on the quality of the manually selected features, which makes it difficult to exclude the effect of human design errors on model performance [37]. As Rashid and Louis' study indicated, classification performance varied substantially across the different sound features associated with construction activities [13]. Moreover, although several researchers have tried to apply this family of approaches to polyphonic detection, doing so requires sophisticated model structures or additional training procedures [38]. Therefore, traditional machine learning techniques are insufficient for complex acoustic scenes or when high identification accuracy is required.

With the development of deep learning technologies, researchers have recently turned their attention to DBN (Deep Belief Network), CNN (Convolutional Neural Network), and RNN (Recurrent Neural Network). Research in the field of machine audition has revealed the particular benefits of deep learning techniques. Maccagno et al. used a CNN to classify environmental sounds on construction sites [39]. In their study, different types and brands of construction vehicles and devices could be recognized, and the CNN outperformed traditional classifiers such as SVM, KNN (K-Nearest Neighbor), and RF (Random Forest) in classifying construction activities. Lee et al. compared the classification accuracy of 17 classical classification techniques and three deep learning methods, namely CNN, RNN, and DBN, on construction activity sounds [20]; the best approach achieved a classification accuracy of 93.16%. Scarpiniti et al. achieved up to 98% accuracy in classifying more than 10 different types of construction equipment and devices using a DBN [22]. Sherafat et al. developed CNN models for recognizing the activities of multiple pieces of equipment [23,40]. They used a two-level multi-label sound classification scheme that enables concurrent detection of the equipment type and its associated activities.

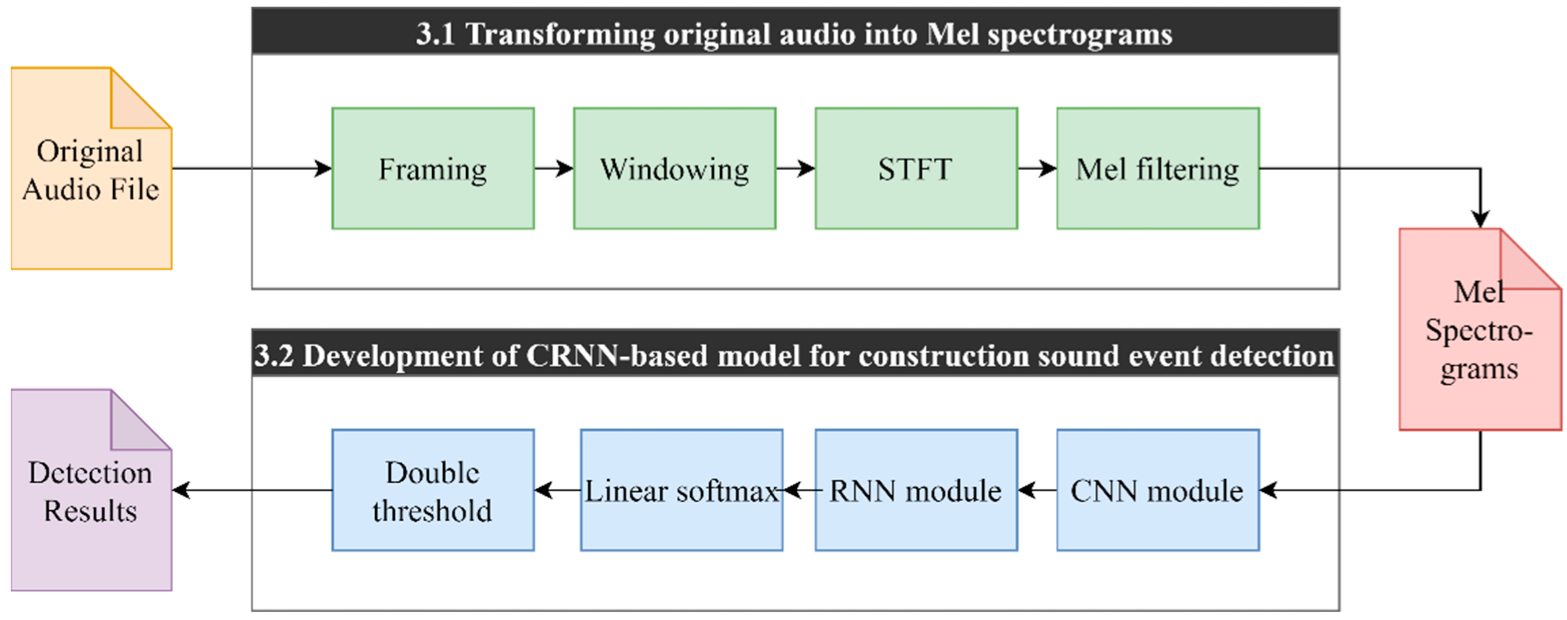

The emergence of deep learning techniques in the area is attributable to the enhanced performance of deep neural networks and the declining price of computer resources. Despite the advantages claimed in the area of computer audition for integrating CNN and RNN, construction-related sound event detection has not yet been researched. The deep neural network is capable of learning multi-layer representation and abstraction of data [

41,

42], and DAFs (Deep Audio Features) generated using CNNs outperform conventionally extracted characteristics in terms of accuracy and robustness [

43,

44,

45]. Although individual sound events often show interdependency, the context information amongst them can be used as a valid aid for identification [

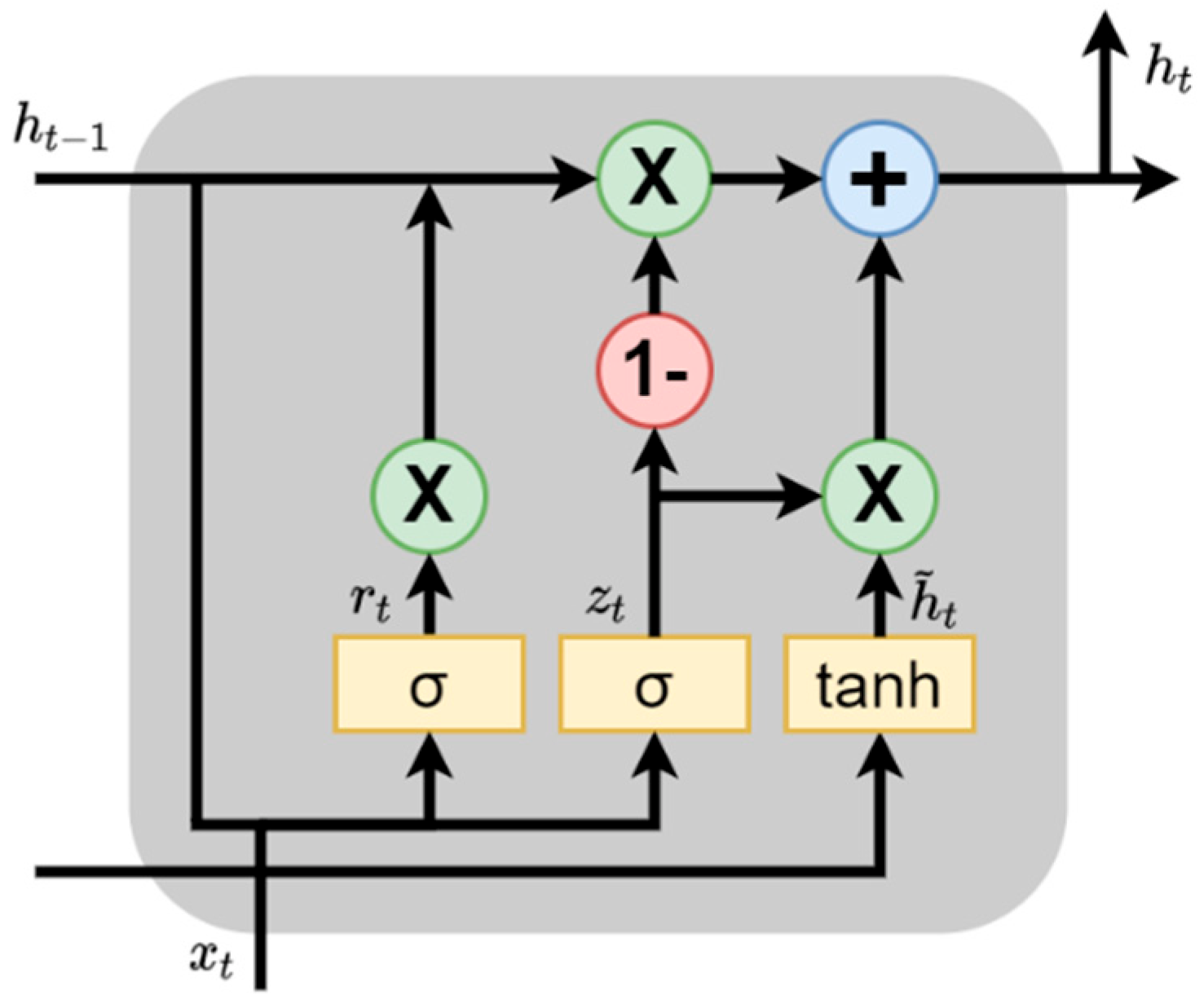

46]. RNNs excel at utilizing contextual information, and the recurrent mechanism in the hidden layers eliminates the need for smoothing frame-wise decisions [

29]. Therefore, SOTA deep network structures, such as CRNN, are required for study in this field.
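To illustrate how a CRNN combines the two ideas, the following NumPy sketch runs a single forward pass: a convolution over the (time, frequency) input extracts local spectro-temporal patterns, max-pooling over frequency yields one feature vector per frame, a simple recurrent (Elman) layer carries context across frames, and independent sigmoid outputs give per-frame class probabilities. The weights are random and untrained, and the layer sizes, the single convolutional layer, and the unidirectional Elman RNN are assumptions chosen for brevity; practical CRNNs stack several convolutional blocks and often use GRU or LSTM layers.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv2d_relu(x, kernels):
    """Cross-correlation, 'same' in time and 'valid' in frequency, followed by ReLU.
    x: (T, F) log-mel input; kernels: (K, kt, kf) with odd kt. Returns (K, T, F - kf + 1)."""
    K, kt, kf = kernels.shape
    xp = np.pad(x, ((kt // 2, kt // 2), (0, 0)))   # zero-pad time so every frame keeps an output
    T, F = x.shape
    out = np.zeros((K, T, F - kf + 1))
    for t in range(T):
        for f in range(F - kf + 1):
            patch = xp[t:t + kt, f:f + kf]
            out[:, t, f] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1]))
    return np.maximum(out, 0.0)

def crnn_forward(x, params):
    """Frame-wise multi-label probabilities (T, n_classes) from a (T, F) input."""
    kernels, W_x, W_h, b_h, W_o, b_o = params
    z = conv2d_relu(x, kernels).max(axis=2).T       # max-pool over frequency -> (T, K)
    h = np.zeros(W_h.shape[0])
    probs = []
    for z_t in z:                                   # Elman recurrence carries temporal context
        h = np.tanh(W_x @ z_t + W_h @ h + b_h)
        probs.append(1.0 / (1.0 + np.exp(-(W_o @ h + b_o))))  # independent sigmoid per class
    return np.array(probs)

K, hidden, n_classes = 8, 16, 4                     # hypothetical layer sizes
params = (rng.normal(0, 0.1, (K, 3, 3)),
          rng.normal(0, 0.1, (hidden, K)),
          rng.normal(0, 0.1, (hidden, hidden)),
          np.zeros(hidden),
          rng.normal(0, 0.1, (n_classes, hidden)),
          np.zeros(n_classes))
x = rng.normal(size=(50, 40))                       # stand-in for a 50-frame, 40-band log-mel input
probs = crnn_forward(x, params)
print(probs.shape)  # (50, 4)
```

Because the output keeps one row per input frame, the same structure supports both multi-label recognition and temporal localization once the weights are trained.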

2.2. Knowledge Gap

While existing studies have made considerable progress in recognizing construction activities, several limitations still restrict their real-world applicability. Challenges arise from three primary aspects: (1) complex overlapping sound sources and heavy ambient noise on construction sites; (2) the failure of existing methods to localize the start and end times of sound events; and (3) the limited versatility and adaptability of existing methods.

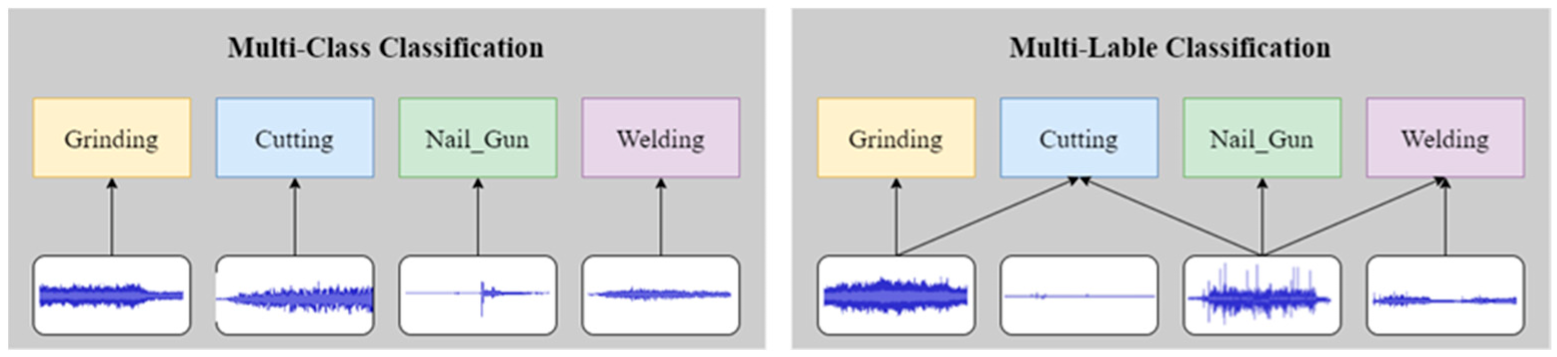

Prior studies of sound classifiers have mainly addressed issues associated with multi-class, namely, only one output class for each input [

26]. These classifiers were used to identify different construction activities with the single-construction-sound input. Other applications include determining the type of the monitored equipment or determining whether the monitored target is in operation or idle. It indicates that the existing methods trained on data with only one activity sound at a time cannot be employed in a polyphonic construction environment [

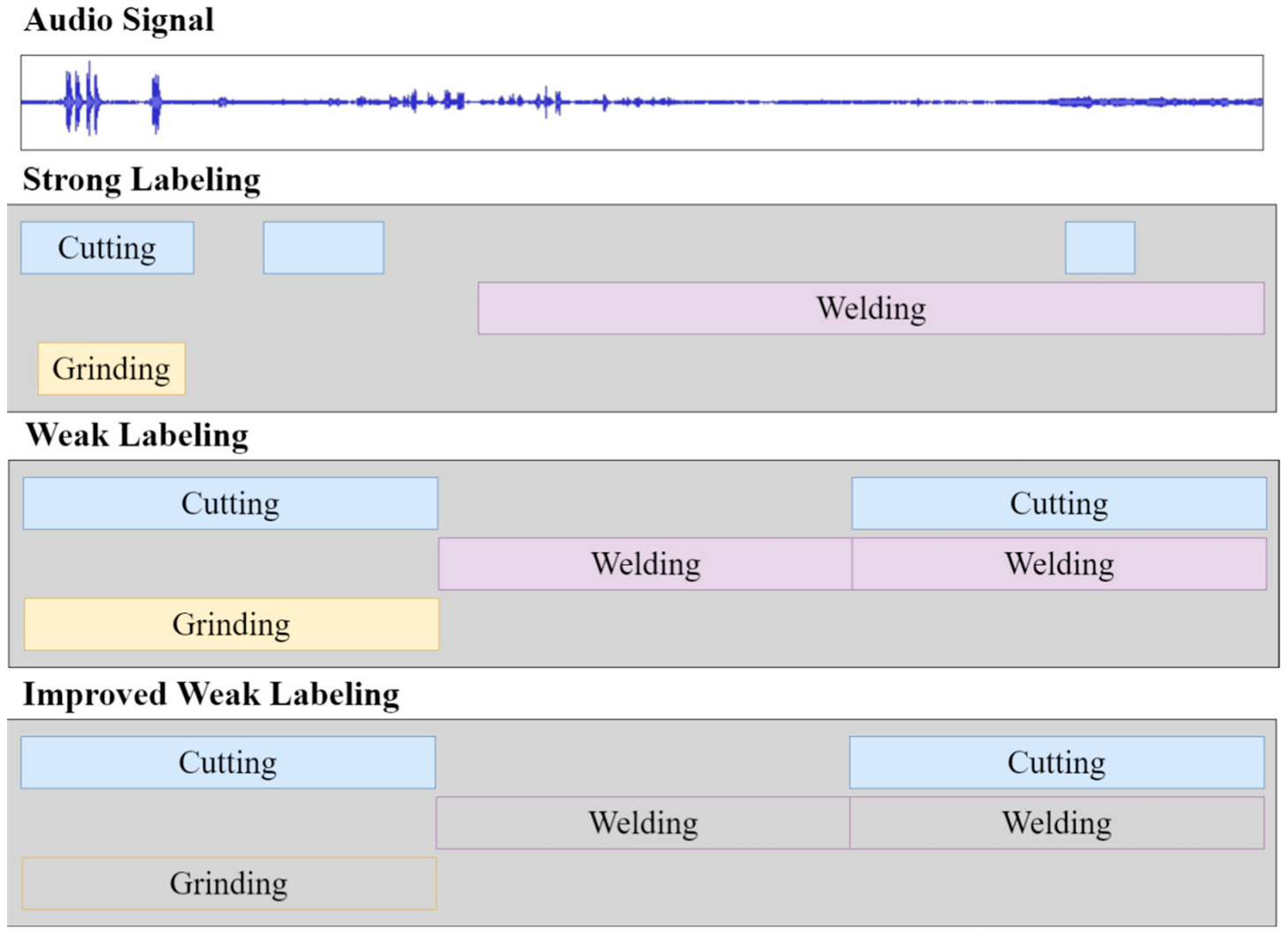

47]. Multi-label sound classifiers concurrently output multiple alternative categories for each input. The difference between multi-class and multi-label classification is shown in

Figure 1. Although the multi-class classification model can only detect the most prominent sound occurrence within a monophonic sound sample, the proposed model aims to solve the multi-label classification issue for polyphonic overlapping sound samples.
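In practice, the difference between the two settings comes down to the output layer. In the sketch below, a softmax followed by argmax returns exactly one label, while independent sigmoids with a threshold can return several labels for the same clip. The class names, logits, and the 0.5 threshold are hypothetical.

```python
import numpy as np

CLASSES = ["excavator", "jackhammer", "welding", "truck"]  # hypothetical label set

def multi_class(logits):
    """Softmax + argmax: exactly one label per input."""
    p = np.exp(logits - logits.max())
    p /= p.sum()
    return [CLASSES[int(np.argmax(p))]]

def multi_label(logits, threshold=0.5):
    """Independent sigmoids: every class above the threshold is reported."""
    p = 1.0 / (1.0 + np.exp(-logits))
    return [c for c, p_c in zip(CLASSES, p) if p_c > threshold]

logits = np.array([2.0, 1.5, -3.0, -1.0])  # e.g. excavator and jackhammer sounding together
print(multi_class(logits))  # ['excavator']
print(multi_label(logits))  # ['excavator', 'jackhammer']
```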

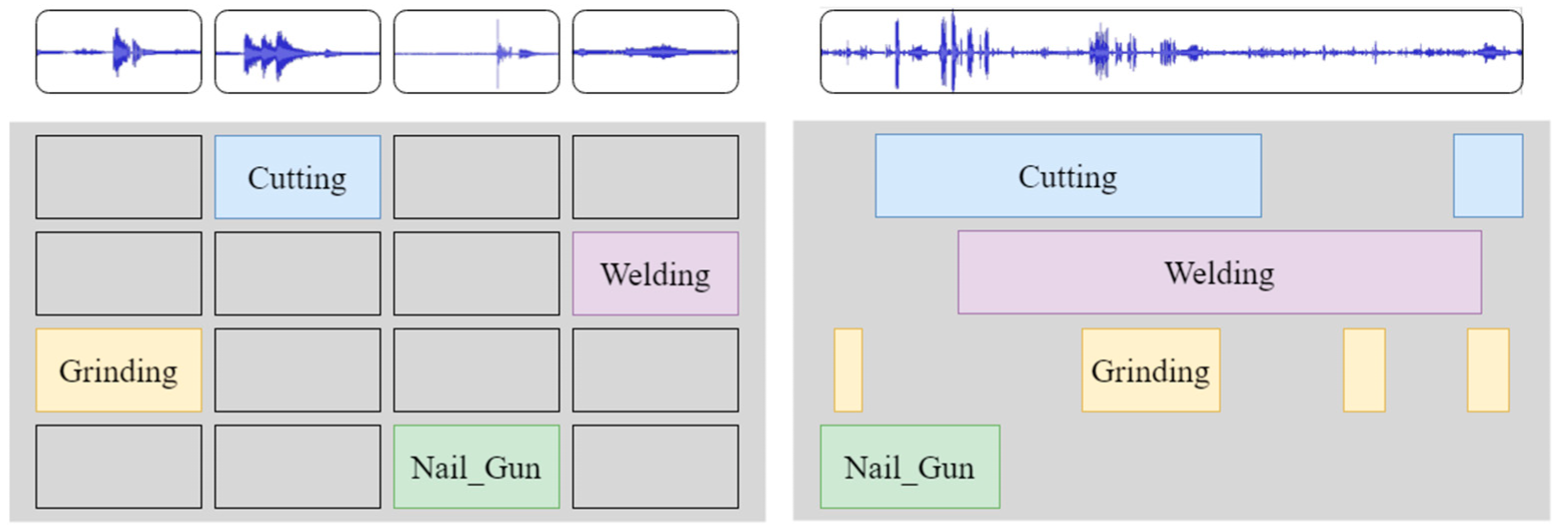

The distribution of sound waves in the time domain, regarded as the temporal pattern, is governed by the individual sound source or the construction activity producing the sound. For instance, the waveforms of spot welding and continuous welding are identical, but the former is produced discretely, while the latter occurs continuously. Moreover, construction activities are strongly time-dependent, so extracting temporal contextual information is crucial for understanding construction scenarios. This suggests that identifying a specific construction activity requires recognizing not only the category of the construction sound but also its temporal pattern. The start and end times of such activities are also needed for scheduling and other project management tasks. To this end, the proposed method focuses on locating the start and end times of individual activities, as shown in Figure 2, which compares our method with existing research.
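Localizing activities in time amounts to converting frame-wise class probabilities into segments with onset and offset times. The sketch below assumes a fixed hop (in seconds) between frames, a 0.5 decision threshold, and a 3-frame median filter, a common post-processing step for frame-wise outputs; all values are illustrative and are not taken from the proposed method.

```python
import numpy as np

def median_smooth(x, k=3):
    """Median filter of odd width k with edge padding."""
    pad = k // 2
    xp = np.pad(x, pad, mode="edge")
    return np.array([np.median(xp[i:i + k]) for i in range(len(x))])

def to_events(probs, hop_s, threshold=0.5, k=3):
    """Convert per-frame probabilities of one class into (onset_s, offset_s) segments."""
    active = median_smooth(probs, k) > threshold
    events, start = [], None
    for i, a in enumerate(active):
        if a and start is None:                 # rising edge: event begins
            start = i
        elif not a and start is not None:       # falling edge: event ends
            events.append((start * hop_s, i * hop_s))
            start = None
    if start is not None:                       # event still active at the end of the clip
        events.append((start * hop_s, len(active) * hop_s))
    return events

probs = np.array([0.1, 0.2, 0.9, 0.8, 0.3, 0.9, 0.9, 0.1, 0.1, 0.7, 0.1, 0.1])
print(to_events(probs, hop_s=0.5))  # [(1.0, 3.5)]
```

The short dip at frame 4 is bridged and the isolated spike at frame 9 is discarded, leaving one activity from 1.0 s to 3.5 s.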

Existing studies develop and train sound event detection models for specific types of construction activities, without addressing the application of these models to other construction activities. For traditional machine learning methods based on statistical learning, adding new classes is costly because it often requires retraining the whole model. Given the variety of construction sites, the model's ability to generalize is essential for construction management: different brands and models of construction equipment have different acoustic characteristics, and different construction sites often use different construction processes, which calls for adaptable sound-based methods. In addition, transfer learning, widely acknowledged in deep learning as an effective way to improve a model's adaptability to new tasks, has not yet been studied in this area. To close this gap, the proposed model integrates CNN and RNN into an end-to-end model that replaces redundant, step-by-step pipelines. The model is pre-trained on publicly accessible audio datasets, and its adaptability to additional tasks is evaluated. The proposed deep learning model is highly adaptable for use in additional construction applications.
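The transfer-learning idea can be sketched as reusing a frozen pre-trained encoder and training only a new output head for the target classes. In the minimal NumPy sketch below, a fixed random projection (`W_enc`, hypothetical) stands in for an encoder pre-trained on public audio data, and a multi-label sigmoid head is fitted by gradient descent on synthetic data. In practice one would load real pre-trained weights and may also fine-tune the upper layers.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained encoder: a fixed projection from a
# 40-band log-mel frame to a 16-dimensional embedding. It is never updated.
W_enc = rng.normal(0, 0.3, size=(16, 40))

def encode(x):
    return np.tanh(x @ W_enc.T)

def train_head(emb, y, lr=0.5, epochs=300):
    """Fit only a new multi-label (sigmoid) head on frozen embeddings."""
    W = np.zeros((y.shape[1], emb.shape[1]))
    b = np.zeros(y.shape[1])
    losses = []
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(emb @ W.T + b)))
        losses.append(-np.mean(y * np.log(p + 1e-9) + (1 - y) * np.log(1 - p + 1e-9)))
        grad = (p - y) / y.size          # gradient of the mean binary cross-entropy w.r.t. logits
        W -= lr * grad.T @ emb
        b -= lr * grad.sum(axis=0)
    return W, b, losses

# Synthetic target task: 3 hypothetical new activity classes on 200 frames.
x_new = rng.normal(size=(200, 40))
emb = encode(x_new)
y_new = (emb @ rng.normal(size=(16, 3)) > 0).astype(float)
W_enc_before = W_enc.copy()
W, b, losses = train_head(emb, y_new)
print(f"{losses[0]:.3f}", losses[-1] < losses[0])  # 0.693 True
```

Only the small head (`W`, `b`) is learned for the new task, which is why extending a pre-trained model to new construction classes can be far cheaper than retraining it from scratch.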