1. Introduction

Medium-thick plates (4.5~25 mm) are mainly used in mechanical manufacturing, container manufacturing, communications, the transportation industry, and other fields, including storage and transportation tanks in the energy and chemical industry, etc. [

1]. Cold metal transfer (CMT) welding is widely used due to its advantages of not producing slag splashing and low heat input, alternating cold and hot cycles, almost zero current during droplet transition, and faster welding speed [

2]. However, pure CMT welding cannot achieve the welding of medium-thick plates, so the cold metal transfer plus pulse (CMT+P) welding process was proposed [

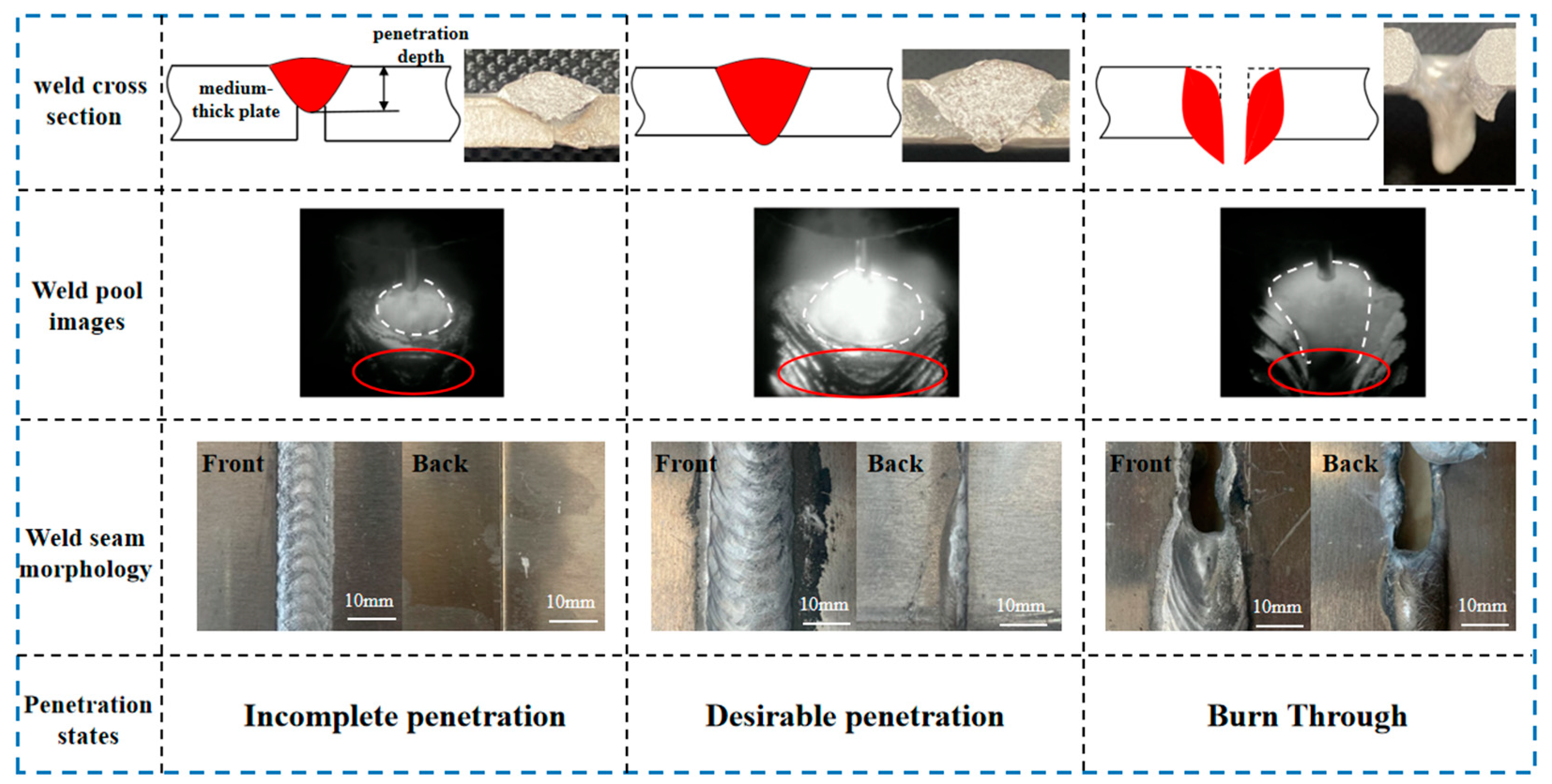

3]. Within this mode, the short-circuit transition from CMT mode and single droplet transmission from pulse mode are combined. The deposition rate is increased during operation by alternating between the pulse mode and the CMT mode, adding a droplet in each cycle. Medium-thick plate welding is sensitive to process variables, and there is a limited range of acceptable settings. The welding quality of medium-thick plates is impacted by welding flaws, including incomplete penetration (IP) and burn-through (BT), which are particularly common in lengthy welding seam welding operations. Traditional testing methods like appearance inspection, X-ray non-destructive testing, ultrasonic testing, and others are unable to detect welding defects like IP and BT in real time while welding is taking place. Instead, they can only do so after welding is finished, which surely results in material waste [

4]. The quality of the weld formation may be reflected by some information about the welding process, including light and sound signals, variations in welding voltage and current, and the morphology and temperature of the weld pool throughout the welding process. Therefore, how to realize high-quality monitoring-related information on the welding process and accurately predict the weld penetration states based on key information acquired during the welding process, such as welding sound, weld pool images, etc., has gradually become a research hotspot [

5,

6].

Machine vision is gradually gaining popularity in welding manufacturing because it is safe, reliable, low-cost, and capable of real-time operation [7]. Real-time welding process monitoring is achieved by selecting industrial cameras suited to the working conditions to observe the weld pool throughout the welding process [8]. Machine vision methods fall into two categories: active vision and passive vision. Active vision relies on external light sources [9]. Gao et al. [10] designed an active vision system for laser welding that exploits the characteristics of the weld pool contour: the weld pool is illuminated with a laser to generate a shadow of its contour, the camera receives the laser radiation, and the relationship between weld pool morphology and welding process stability is described quantitatively by analyzing the weld pool contour parameters. Cheng et al. [11] used a camera to capture images of a single-stripe laser pattern reflected from the weld pool and investigated the key differences between images of partially and fully penetrated welds. Passive vision requires no additional light source; the welding arc or natural light serves as the background illumination when industrial cameras capture images, so the equipment cost is lower than for active vision. Chen et al. [12] designed a passive vision image acquisition system by placing a near-infrared bandpass filter in front of the camera lens to suppress part of the arc light, from which information on the weld pool width, tail length, and surface height could be obtained. Liang et al. [13] designed a biprism stereo vision passive system to sense the weld pool surface under different penetration states during pulsed gas metal arc welding (GMAW-P) with a V-groove joint.

Weld penetration states are crucial to the quality of weld formation, and weld pool morphology is an important indicator of the penetration state [14,15,16,17,18]. Monitoring weld pool morphology with machine vision during welding, accurately predicting weld penetration states, and adaptively adjusting welding parameters are key technologies for intelligent welding [19,20]. Deep learning (DL) and image processing technology (IPT) have considerably advanced the recognition of penetration states from weld pool morphology in intelligent welding [21,22]. Owing to their outstanding performance in image recognition and prediction in recent years, convolutional neural networks (CNNs) have become an important component of deep learning and are increasingly used in welding process monitoring [23]. Currently, machine vision gathers information representing penetration states in two primary forms: dynamic continuous weld pool image sequences and single weld pool images. For dynamic continuous weld pool images, researchers commonly combine CNN models with long short-term memory (LSTM) networks to learn the time-series dependencies that represent penetration states. Yu et al. [24] developed a CNN-LSTM model that extracts features from time-series weld pool images to accurately predict the weld back width. Zhou et al. [25] proposed a novel CNN-LSTM-based model that extracts both spatial and temporal features of weld pool images; in key welding scenarios, its predictions were close to the actual penetration status, and it achieved more than 80% accuracy in predicting keyhole behavior over the next 2 s. However, because CNN-LSTM models have a very large number of parameters, a large amount of training data is required. When weld pool datasets are limited, CNN-LSTM models characterize weld pool features poorly, which reduces prediction accuracy.
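The parameter argument can be made concrete with the standard LSTM gate equations: each of the four gates (input, forget, cell candidate, output) has an input weight matrix, a recurrent weight matrix, and a bias. The sketch below counts these weights for a single layer. The 2048-dimensional per-frame CNN feature and the 512 hidden units are hypothetical sizes chosen for illustration, not values from the cited CNN-LSTM studies.

```python
def lstm_param_count(input_size: int, hidden_size: int, num_biases: int = 1) -> int:
    """Trainable parameters of one LSTM layer.

    Each of the 4 gates has an input weight matrix (hidden_size x input_size),
    a recurrent weight matrix (hidden_size x hidden_size), and num_biases bias
    vectors of length hidden_size. The textbook form uses one bias per gate;
    PyTorch's nn.LSTM keeps two (b_ih and b_hh).
    """
    per_gate = (hidden_size * input_size
                + hidden_size * hidden_size
                + num_biases * hidden_size)
    return 4 * per_gate


# Hypothetical sizes: 2048-d CNN features per frame fed to a 512-unit LSTM.
print(lstm_param_count(2048, 512))                # 5244928
print(lstm_param_count(2048, 512, num_biases=2))  # 5246976 (PyTorch convention)
```

Even this single recurrent layer adds more than five million weights on top of the CNN backbone, which illustrates why such models tend to need large training sets.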

Under different penetration states, the situation of the weld pool and its surroundings will vary greatly. A single weld pool image has sufficient information to reflect the welding quality, including the size of the weld pool contour, the shape of the weld seam, etc. In recent years, with the rapid development of CNN, their accuracy and speed in image recognition and prediction have been greatly improved. The current mainstream CNN models include LeNet [

26], AlexNet [

27], VGGNet [

28], GoogLeNet (Inception) [

29], and ResNet [

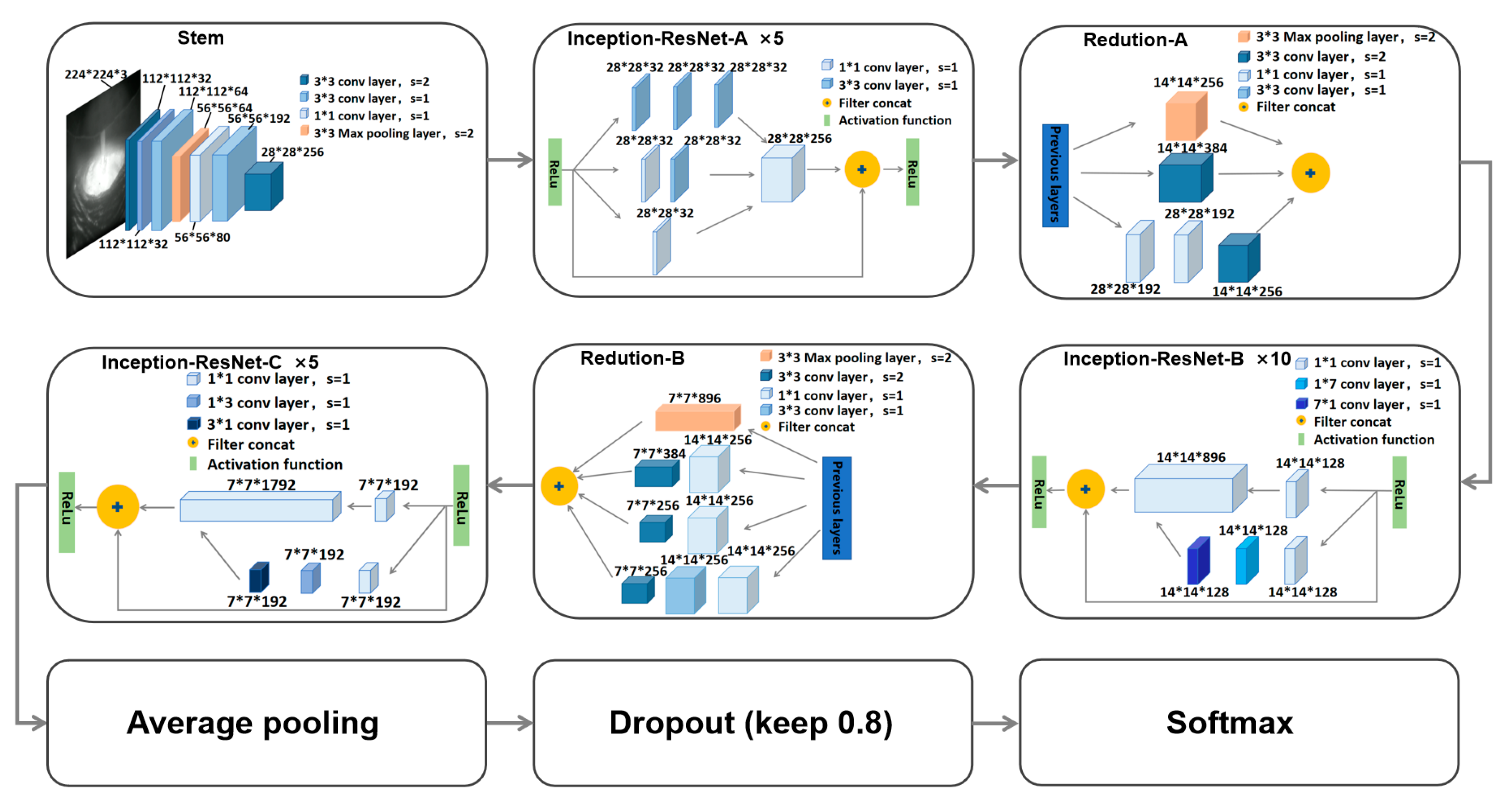

30], etc. Inception and ResNet are two typical network structures of CNNs, which are the most representative in the development process. The method to improve the performance of neural networks is generally to increase the depth and width of the network, where depth refers to the number of network layers and width refers to the number of neurons. The inception network is different from previous networks in that it no longer aims to increase the number of vertical network layers to improve model performance. Instead, it designs a sparse network structure that can generate dense data, not only increasing the width of the network and improving neural network performance but also increasing the adaptability of the network to scale, ensuring the efficiency of computing resource utilization. ResNet has made significant innovations in network structure rather than simply stacking layers. By using residual connections, the original features can be preserved, making the learning of the network smoother and more stable, further improving the accuracy and generalization ability of the model, and solving problems such as vanishing, exploding, and degenerating gradients caused by excessive network layers. A lot of welding monitoring research is based on these two networks. Li et al. [

31] proposed a Residual-Group convolution model (Res-GCM) based on the ResNet block to predict the penetration states by feature extraction and analysis of welding images in non-penetrating laser welding, which achieved an accuracy of 90.53%. Feng et al. [

32] designed a DeepWelding framework, which integrates image preprocessing, image selection, and weld penetration classification. Among them, based on five classic convolutional neural network weld penetration modules, ResNet achieved the highest accuracy of 78.9%, 99.5%, and 88.1% in the three datasets, respectively. Wang et al. [

33] proposed a semantic segmentation network, Res-Seg, based on the ResNet-50 network to extract the contour of the molten pool in TIG stainless steel welding, which achieved an accuracy of 92.14%. Sekhar et al. [

34] studied the classification of tungsten inert gas (TIG) welding defects using eight pre-trained typical convolutional neural networks combined with four optimizers. The accuracy rates of Inception were 92.15%, 85.83%, 91.68%, and 86.81%, respectively. Oh et al. [

35] proposed a Faster R-CNN for automatically detecting welding defects, comparing two internal feature extractors (ResNet and Inception-ResNet) of Faster R-CNN and evaluating their performance.
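The two ideas discussed above, Inception-style width (parallel branches with different kernel sizes) and ResNet-style residual connections, meet in an Inception-ResNet block. The NumPy sketch below is a simplified, hypothetical version: a 1×1 branch and a 3×3 branch are concatenated, projected back to the input channel count with a 1×1 convolution, scaled, and added to the untouched input. Batch normalization and the 1×1 reduction layers of real Inception-ResNet blocks are omitted, and the branch layout, channel counts, and residual scale of 0.1 are assumptions for illustration only.

```python
import numpy as np


def conv2d_same(x, w):
    """Naive stride-1 convolution (cross-correlation) with zero 'same' padding.

    x: (C_in, H, W) feature map; w: (C_out, C_in, k, k) kernel, k odd.
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, h, wd = x.shape
    out = np.zeros((c_out, h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k) window
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out


def relu(x):
    return np.maximum(x, 0.0)


def inception_resnet_block(x, branch_weights, proj_weight, scale=0.1):
    """Simplified Inception-ResNet block (hypothetical layout).

    Width: parallel branches with different kernel sizes, concatenated on channels.
    Residual: a 1x1 projection restores the input channel count, and the scaled
    result is added to the original input x before the final ReLU.
    """
    branches = [relu(conv2d_same(x, w)) for w in branch_weights]
    mixed = np.concatenate(branches, axis=0)
    residual = conv2d_same(mixed, proj_weight)
    return relu(x + scale * residual)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))              # 4-channel 8x8 feature map
w1 = 0.1 * rng.standard_normal((2, 4, 1, 1))    # 1x1 branch -> 2 channels
w3 = 0.1 * rng.standard_normal((2, 4, 3, 3))    # 3x3 branch -> 2 channels
proj = 0.1 * rng.standard_normal((4, 4, 1, 1))  # 1x1 projection: 4 -> 4 channels
y = inception_resnet_block(x, [w1, w3], proj)
print(y.shape)  # (4, 8, 8)
```

Setting the projection weights to zero leaves only the identity path, so the block returns ReLU(x): the residual connection carries the original features through unchanged apart from the final activation, which is the feature-preserving property attributed to ResNet above.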

As described above, Inception and ResNet networks are widely used to classify welding defects and predict weld penetration states, but few studies consider combining the advantages of the two. A DL model must predict penetration states accurately; only then can it serve as a reliable basis for adjusting welding process parameters during welding. When monitoring the penetration states of medium-thick plate welding, the differences in weld pool morphology among IP, desirable penetration (DP), and BT are difficult to distinguish, especially in complex and harsh welding environments. Therefore, accurately recognizing and predicting the penetration states of medium-thick plate welding is challenging.
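Framed as a classification task, the model must assign each weld pool image to one of three states: IP, DP, or BT. Assuming a standard softmax classification head (the source does not specify the output layer), the final step can be sketched as below; the logit values are hypothetical network outputs, not results from this work.

```python
import math

# Class order is an assumption: incomplete penetration, desirable penetration, burn-through.
STATES = ("IP", "DP", "BT")


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_state(logits):
    """Return the most probable penetration state and the full distribution."""
    probs = softmax(logits)
    best = max(range(len(STATES)), key=lambda i: probs[i])
    return STATES[best], probs


state, probs = predict_state([0.2, 2.1, 0.4])  # hypothetical logits
print(state)  # DP
```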

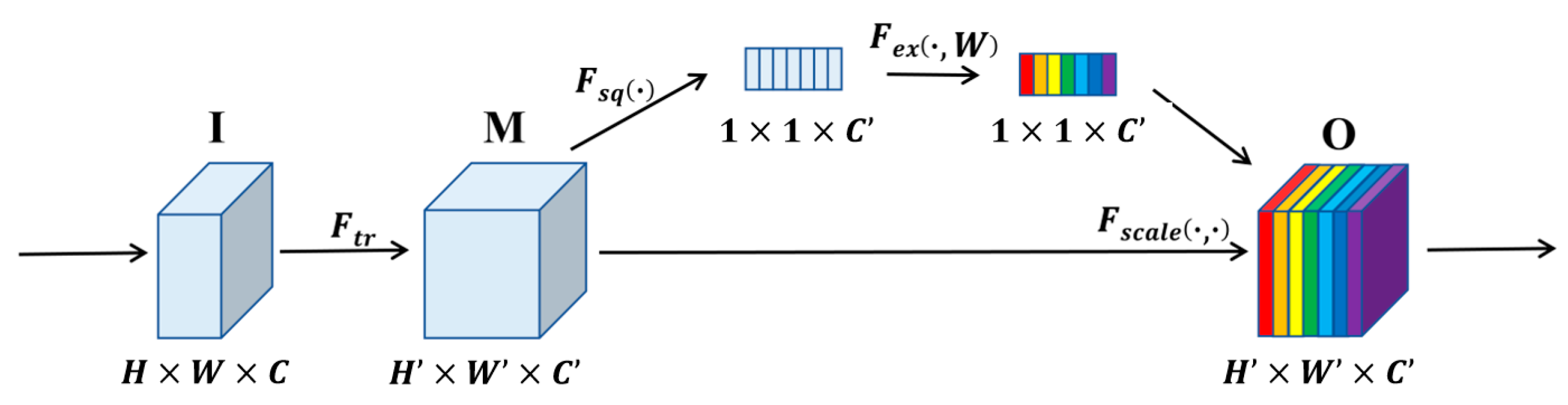

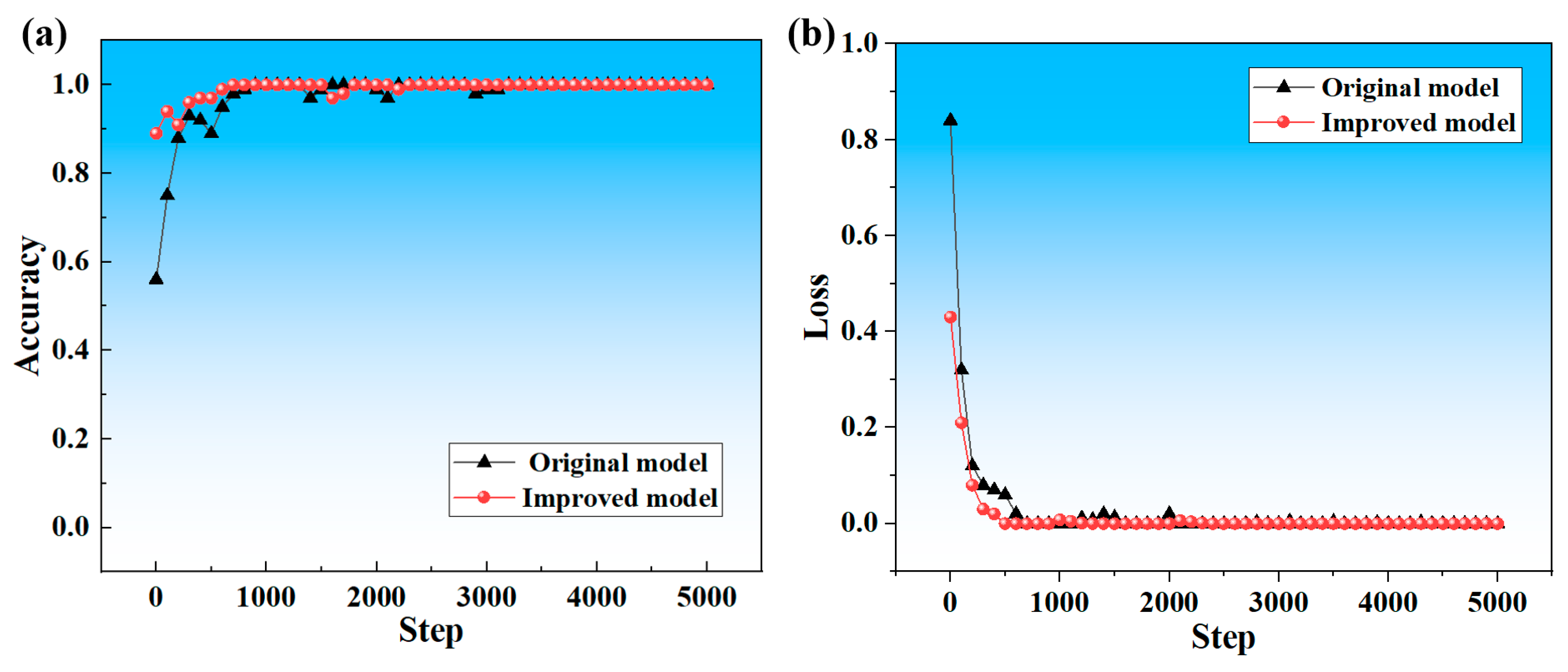

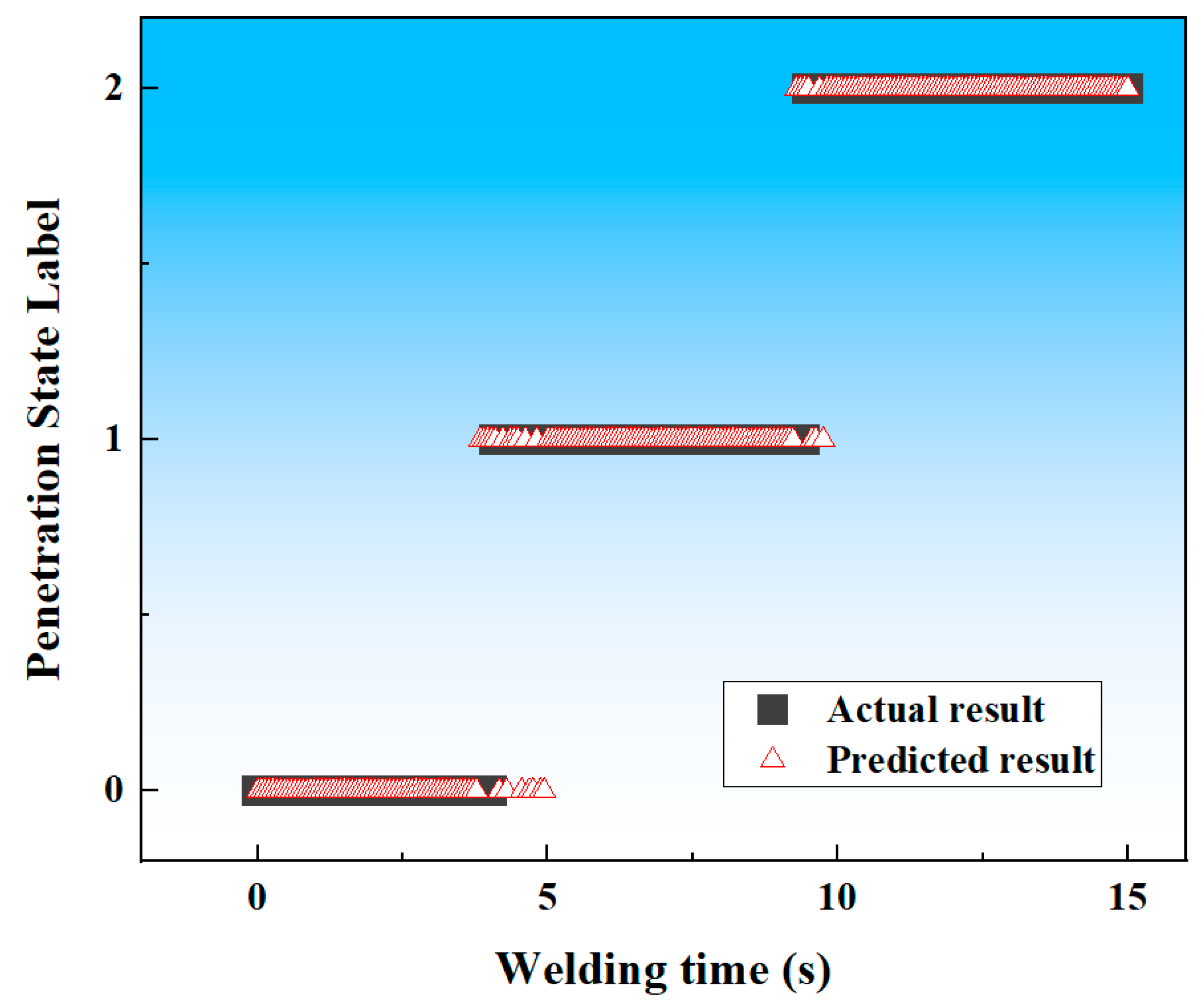

To address the difficulty of distinguishing weld pool morphology during medium-thick plate welding and of accurately predicting penetration states, a DL-based method was proposed for predicting the weld penetration states of medium-thick plate welding under complex interference sources. To monitor weld pool morphology clearly in complex welding environments with smoke and strong arc light, a welding monitoring system was designed. Using this system, welding experiments on medium-thick aluminum alloy plates were carried out under different process parameters, and high-quality weld pool image datasets were obtained. A weld penetration state prediction model based on an improved Inception-ResNet was established: an SE block is added after each Inception-ResNet block to recalibrate the weights of key weld pool features, enhancing the model's ability to extract key feature information and significantly improving its performance in predicting penetration states. The model was trained, validated, and tested, and finally embedded into the weld pool monitoring platform and applied in actual welding experiments.
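The SE block mentioned above can be sketched with the standard squeeze-and-excitation design: global average pooling (squeeze), a two-layer bottleneck with ReLU and sigmoid (excitation), and channel-wise rescaling. This is a minimal NumPy illustration, not the authors' implementation; the channel count, reduction ratio, and random weights are hypothetical.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation recalibration of a (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r) for a reduction ratio r.
    Returns the rescaled feature map and the per-channel weights.
    """
    z = x.mean(axis=(1, 2))                   # squeeze: global average pool -> (C,)
    h = np.maximum(w1 @ z + b1, 0.0)          # excitation FC 1 + ReLU -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))  # excitation FC 2 + sigmoid -> (C,)
    return x * s[:, None, None], s            # scale: reweight each channel


rng = np.random.default_rng(0)
C, r = 8, 4                                   # hypothetical channels and reduction ratio
x = rng.standard_normal((C, 6, 6))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, s = se_block(x, w1, b1, w2, b2)
print(y.shape, s.round(3))
```

Because each channel is multiplied by a weight between 0 and 1 computed from the whole feature map, channels that respond to informative weld pool features can be emphasized relative to the rest; this is the feature recalibration the text refers to.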