Correlational and Configurational Perspectives on the Determinants of Generative AI Adoption Among Spanish Zoomers and Millennials

Abstract

1. Introduction

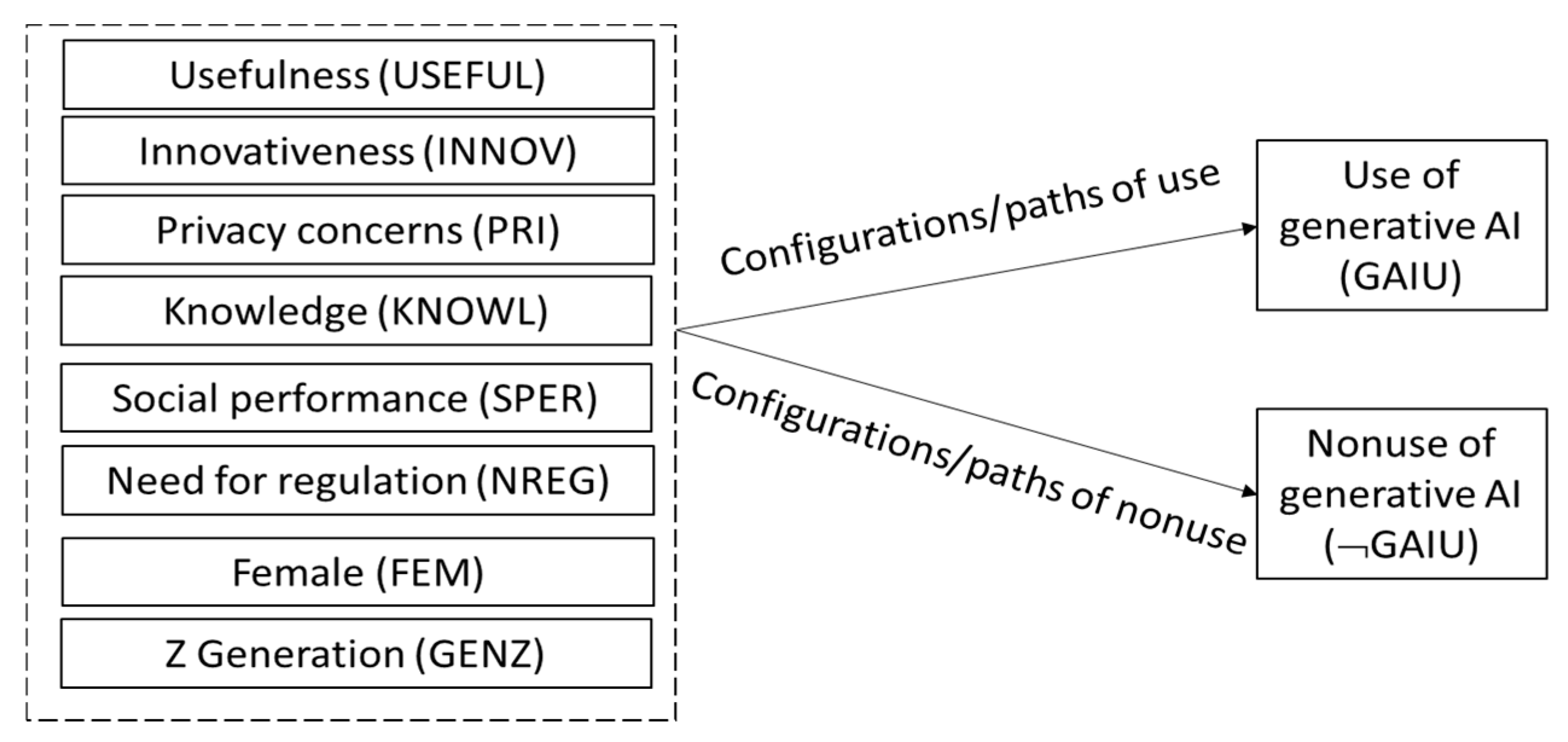

- The first (RQ1) aims to measure the average effect of the explanatory variables on willingness to use GAI, addressed using a variable-oriented approach through ordinal logistic regression.

- The second (RQ2) explores how the different factors in the conceptual model form causal combinations (paths) that affect both the adoption of and the reluctance toward generative AI, analyzed through the configurational approach of fsQCA.

2. Conceptual Ground

2.1. Development of the Correlational Hypotheses of the Model

2.1.1. Hypotheses About Attitudinal Variables

2.1.2. Hypothesis About the Variable Related to Behavioral Control

2.1.3. Hypotheses About Subjective Norm Variables

2.1.4. Influence of Sociodemographic Variables

2.2. Development of Configurational Laws on the Use of Generative AI (GAIU)

3. Material and Data Analysis

3.1. Sampling

3.2. Sociodemographic Profile

3.3. Measurement of Variables

3.4. Data Analysis

3.4.1. Analysis of Research Question 1

3.4.2. Analysis of Research Question 2

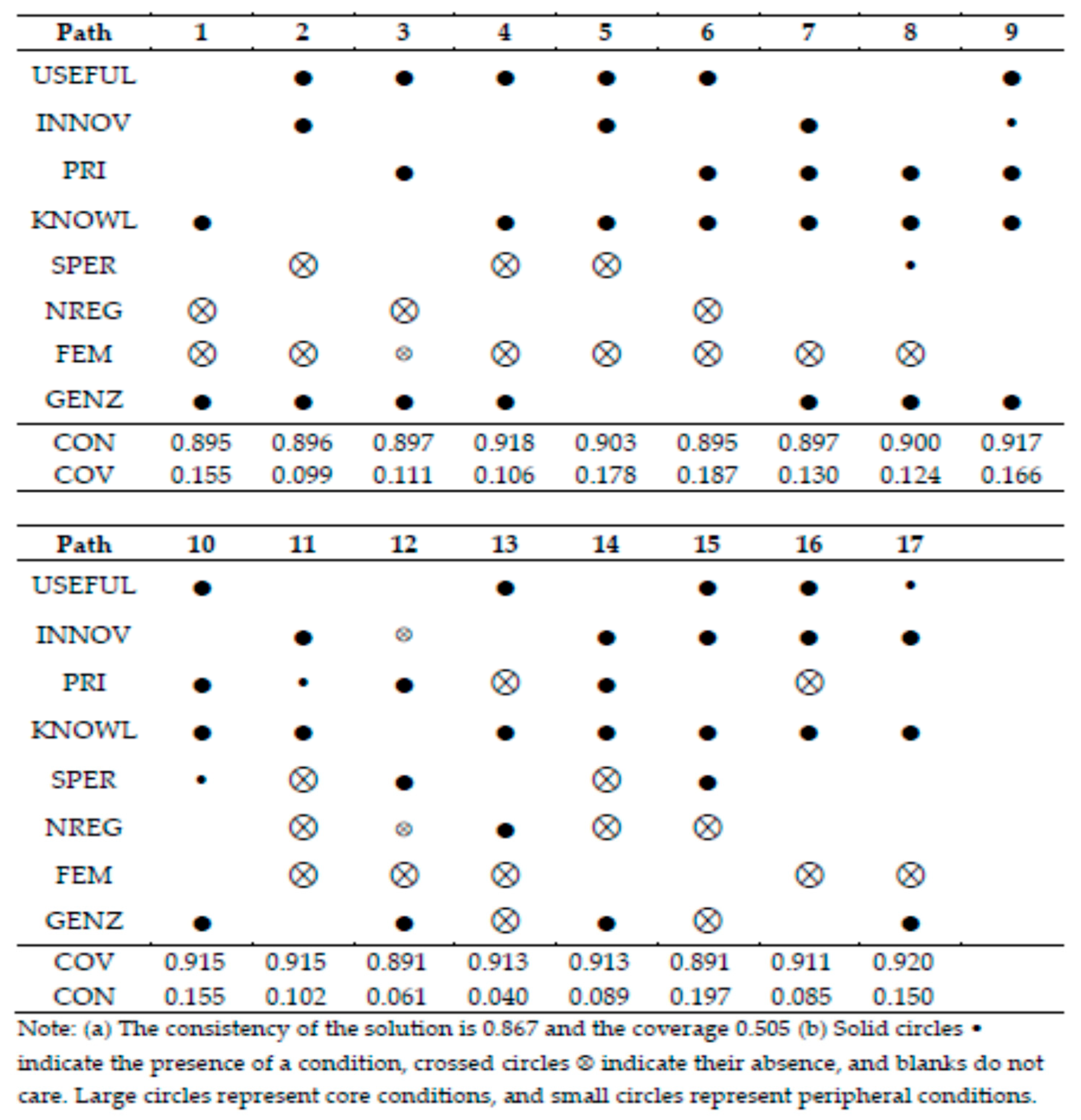

1. We analyzed the necessary condition status of the presence and absence of each explanatory factor for both the use and the non-use of GAI. The presence of a variable $X$ is measured by its membership function $m_X$; its absence, denoted ¬X, is measured by $1 - m_X$. Thus, while the membership function of GAIU is denoted $m_{GAIU}$, non-use (¬GAIU) has a membership degree of $1 - m_{GAIU}$.

2. We performed an analysis of sufficient conditions. This assessment requires constructing the recipes (also referred to in the literature as prime implicates or configurations) that make up the intermediate solution (IS) and the parsimonious solution (PS) for both GAIU and ¬GAIU. These recipes are interpreted as antecedents, pathways, or profiles linked to adherence to and reluctance toward GAI. The prime implicates of the IS are obtained using assumptions about the presence or absence of the exogenous variables in GAIU and ¬GAIU, based on the hypotheses developed in Section 2.1.

3. We present the prime implicates of GAIU and ¬GAIU (i.e., their intermediate solutions) and interpret them. We distinguish between core conditions, which appear in both the IS and the PS, and peripheral conditions, which appear only in the IS recipes. The former act as strong causes and the latter as weaker causes [25].

4. The measures of consistency (CON) and coverage (COV) allow us to assess the explanatory power of the IS and of each individual prime implicate. Consistency quantifies the significance of a prime implicate or of the overall solution, with values above 0.8 considered desirable. Coverage indicates empirical relevance and can be interpreted as a measure of effect size [26].
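The quantities used in these steps can be illustrated with a minimal Python sketch. It assumes the commonly used fuzzy-set formulas (overlap measured as the sum of case-wise minima); the text does not state which exact formulas the software applied, and the function names and toy membership scores below are hypothetical.

```python
from typing import Sequence


def negate(membership: Sequence[float]) -> list[float]:
    """Membership in the absence of a condition: 1 minus membership in its presence."""
    return [1.0 - m for m in membership]


def _overlap(x: Sequence[float], y: Sequence[float]) -> float:
    """Fuzzy intersection of two sets, summed over cases (assumed min operator)."""
    return sum(min(a, b) for a, b in zip(x, y))


def necessity_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """Degree to which outcome y is a subset of condition x."""
    return _overlap(x, y) / sum(y)


def necessity_coverage(x: Sequence[float], y: Sequence[float]) -> float:
    """Empirical relevance of x as a necessary condition for y."""
    return _overlap(x, y) / sum(x)


def sufficiency_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """Degree to which recipe x is a subset of outcome y."""
    return _overlap(x, y) / sum(x)


def sufficiency_coverage(x: Sequence[float], y: Sequence[float]) -> float:
    """Share of outcome y accounted for by recipe x."""
    return _overlap(x, y) / sum(y)


if __name__ == "__main__":
    # Toy membership scores for four hypothetical respondents (not study data).
    knowl = [0.9, 0.6, 0.2, 0.8]
    gaiu = [0.8, 0.7, 0.1, 0.4]
    print("CON (necessity):", necessity_consistency(knowl, gaiu))
    print("COV (necessity):", necessity_coverage(knowl, gaiu))
    # The same condition tested against non-use of GAI.
    print("CON (necessity, non-use):", necessity_consistency(knowl, negate(gaiu)))
```

Note that the necessity and sufficiency measures are mirror images: the consistency of one analysis uses the same ratio as the coverage of the other.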

4. Results

4.1. Descriptive Statistics and Response of Research Objective 1

4.2. Analytical Outcomes of Research Objective 2

5. Discussion

5.1. General Considerations

5.2. Implications for Theory and Practice

6. Conclusions

6.1. Main Findings

6.2. Study Limitations

6.3. Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial intelligence |
| AVE | Average variance extracted |
| FEM | Being female |
| fsQCA | Fuzzy set Qualitative Comparative Analysis |
| GAI | Generative artificial intelligence |
| GAIU | Use of Generative artificial intelligence |
| GENZ | Belonging to Generation Z |
| INNOV | Innovativeness |
| KNOWL | Knowledge |
| NREG | Need for regulation |
| PRI | Privacy concerns |
| SPER | Social performance |
| TPB | Theory of planned behaviour |
| USEFUL | Usefulness |

References

1. Polak, P.; Anshari, M. Exploring the multifaceted impacts of artificial intelligence on public organizations, business, and society. Humanit. Soc. Sci. Commun. 2024, 11, 1–3.
2. Feuerriegel, S.; Hartmann, J.; Janiesch, C.; Zschech, P. Generative AI. Bus. Inf. Syst. Eng. 2024, 66, 111–126.
3. Lo, C.K. What is the impact of ChatGPT on education? A rapid review of the literature. Educ. Sci. 2023, 13, 410.
4. Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.
5. Mahmoud, A.B.; Kumar, V.; Spyropoulou, S. Identifying the Public’s Beliefs about Generative Artificial Intelligence: A Big Data Approach. IEEE Trans. Eng. Manag. 2025, 77, 827–841.
6. Runcan, R.; Hațegan, V.; Toderici, O.; Croitoru, G.; Gavrila-Ardelean, M.; Cuc, L.D.; Rad, D.; Costin, A.; Dughi, T. Ethical AI in Social Sciences Research: Are We Gatekeepers or Revolutionaries? Societies 2025, 15, 62.
7. Doshi, A.R.; Hauser, O.P. Generative AI enhances individual creativity but reduces the collective diversity of novel content. Sci. Adv. 2024, 10, eadn5290.
8. Sebastian, G. Privacy and Data Protection in ChatGPT and Other AI Chatbots: Strategies for Securing User Information. Int. J. Secur. Priv. Pervasive Comput. 2024, 15, 14.
9. Shahriar, S.; Allana, S.; Fard, M.H.; Dara, R. A Survey of Privacy Risks and Mitigation Strategies in the Artificial Intelligence Life Cycle. IEEE Access 2023, 11, 61829–61854.
10. Centro de Investigaciones Sociológicas. Inteligencia Artificial. Estudio 3495 2025. Available online: https://www.cis.es/documents/d/cis/es3495mar-pdf (accessed on 3 June 2025).
11. Wang, H.Y.; Sigerson, L.; Cheng, C. Digital nativity and information technology addiction: Age cohort versus individual difference approaches. Comput. Hum. Behav. 2019, 90, 1–9.
12. Kutlák, J. Individualism and self-reliance of Generations Y and Z and their impact on working environment: An empirical study across 5 European countries. Probl. Perspect. Manag. 2021, 19, 39–52.
13. Akçayır, M.; Dündar, H.; Akçayır, G. What makes you a digital native? Is it enough to be born after 1980? Comput. Hum. Behav. 2016, 60, 435–440.
14. Chan, C.K.Y.; Lee, K.K.W. The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and millennial generation teachers? Smart Learn. Environ. 2023, 10, 60.
15. Ajzen, I. The theory of planned behavior. Organ. Behav. Hum. Decis. Process 1991, 50, 179–211.
16. Al-Emran, M.; Abu-Hijleh, B.; Alsewari, A.A. Exploring the Effect of Generative AI on Social Sustainability Through Integrating AI Attributes, TPB, and T-EESST: A Deep Learning-Based Hybrid SEM-ANN Approach. IEEE Trans. Eng. Manag. 2024, 71, 14512–14524.
17. Gado, S.; Kempen, R.; Lingelbach, K.; Bipp, T. Artificial intelligence in psychology: How can we enable psychology students to accept and use artificial intelligence? Psychol. Learn. Teach. 2021, 21, 37–56.
18. Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.
19. Khizar, H.M.U.; Ashraf, A.; Yuan, J.; Al-Waqfi, M. Insights into ChatGPT adoption (or resistance) in research practices: The behavioral reasoning perspective. Technol. Forecast. Soc. Change 2025, 215, 124047.
20. Gansser, O.A.; Reich, C.S. A new acceptance model for artificial intelligence with extensions to UTAUT2: An empirical study in three segments of application. Technol. Soc. 2021, 65, 101535.
21. Ajzen, I. Perceived Behavioral Control, Self-Efficacy, Locus of Control, and the Theory of Planned Behavior. J. Appl. Soc. Psychol. 2002, 32, 665–683.
22. Sparks, P.; Guthrie, C.A.; Shepherd, R. The Dimensional Structure of the Perceived Behavioral Control Construct. J. Appl. Soc. Psychol. 1997, 27, 418–438.
23. Al Darayseh, A. Acceptance of artificial intelligence in teaching science: Science teachers’ perspective. Comput. Educ. Artif. Intell. 2023, 4, 100132.
24. Sobieraj, S.; Krämer, N.C. Similarities and differences between genders in the usage of computer with different levels of technological complexity. Comput. Hum. Behav. 2020, 104, 106145.
25. Fiss, P.C. Building better causal theories: A fuzzy set approach to typologies in organization research. Acad. Manag. J. 2011, 54, 393–420.
26. Woodside, A.G. Embrace perform model: Complexity theory, contrarian case analysis, and multiple realities. J. Bus. Res. 2014, 67, 2495–2503.
27. de Andrés-Sánchez, J.; Arias-Oliva, M.; Souto-Romero, M. Antecedents of the Intention to Use Implantable Technologies for Nonmedical Purposes: A Mixed-Method Evaluation. Hum. Behav. Emerg. Technol. 2024, 2024, 1064335.
28. Gadekallu, T.R.; Yenduri, G.; Kaluri, R.; Rajput, D.S.; Lakshmanna, K.; Fang, K.; Chen, J.; Wang, W. The role of GPT in promoting inclusive higher education for people with various learning disabilities: A review. PeerJ Comput. Sci. 2025, 11, e2400.
29. Singh, A.; Patel, N.P.; Ehtesham, A.; Kumar, S.; Khoei, T.T. A Survey of Sustainability in Large Language Models: Applications, Economics, and Challenges. In Proceedings of the 2025 IEEE 15th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2025; pp. 8–14.
30. Wang, S.; Zhang, H. Green entrepreneurship success in the age of generative artificial intelligence: The interplay of technology adoption, knowledge management, and government support. Technol. Soc. 2024, 79, 102744.
31. Bossert, L.N.; Loh, W. Why the carbon footprint of generative large language models alone will not help us assess their sustainability. Nat. Mach. Intell. 2025, 7, 164–165.
32. Alier, M.; García-Peñalvo, F.; Camba, J.D. Generative artificial intelligence in education: From deceptive to disruptive. Int. J. Interact. Multimed. Artif. Intell. 2024, 8, 5–14.
33. Yudhistyra, W.I.; Srinuan, C. Exploring the Acceptance of Technological Innovation Among Employees in the Mining Industry: A Study on Generative Artificial Intelligence. IEEE Access 2024, 12, 165797–165809.
34. Hsu, W.-L.; Silalahi, A.D.K.; Tedjakusuma, A.P.; Riantama, D. How Do ChatGPT’s Benefit–Risk Paradoxes Impact Higher Education in Taiwan and Indonesia? An Integrative Framework of UTAUT and PMT with SEM & fsQCA. Comput. Educ. Artif. Intell. 2025, 8, 100412.
35. Sobaih, A.E.E.; Elshaer, I.A.; Hasanein, A.M. Examining students’ acceptance and use of ChatGPT in Saudi Arabian higher education. Eur. J. Investig. Health Psychol. Educ. 2024, 14, 709–721.
36. Strzelecki, A. To use or not to use ChatGPT in higher education? A study of students’ acceptance and use of technology. Interact. Learn. Environ. 2024, 32, 5142–5155.
37. Agarwal, R.; Prasad, J. A conceptual and operational definition of personal innovativeness in the domain of information technology. Inf. Syst. Res. 1998, 9, 204–215.
38. Li, F.; Wei, X.; Wang, C.; Zhang, C.; Yu, G.; Yang, Y.; Liu, Y. How do users perceive AI? A dual-process perspective on enhancement and replacement. Telemat. Inform. Rep. 2025, 19, 100227.
39. Montag, C.; Rahman, S.; Thrul, J.; AlBeyahi, F.; Ali, R. Bringing computer and social/psychological/medical sciences together: The case of digital well-being and AI well-being. Telemat. Inform. Rep. 2025, 19, 100225.
40. Lu, J.; Yao, J.E.; Yu, C.-S. Personal innovativeness, social influences and adoption of wireless Internet services via mobile technology. J. Strateg. Inf. Syst. 2005, 14, 245–268.
41. Chalutz Ben-Gal, H. Artificial intelligence (AI) acceptance in primary care during the coronavirus pandemic: What is the role of patients’ gender, age and health awareness? A two-phase pilot study. Front. Public Health 2023, 10, 931225.
42. Biloš, A.; Budimir, B. Understanding the Adoption Dynamics of ChatGPT among Generation Z: Insights from a Modified UTAUT2 Model. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 863–879.
43. Wirtz, J.; Lwin, M.O.; Williams, J.D. Causes and consequences of consumer online privacy concern. Int. J. Serv. Ind. Manag. 2007, 18, 326–348.
44. KPMG International. Privacy in the New World of AI: How to Build Trust in AI through Privacy 2023. Available online: https://kpmg.com/xx/en/our-insights/ai-and-technology/privacy-in-the-new-world-of-ai.html (accessed on 3 June 2025).
45. Martin, K.D.; Zimmermann, J. Artificial intelligence and its implications for data privacy. Curr. Opin. Psychol. 2024, 58, 101829.
46. Moon, W.-K.; Xiaofan, W.; Holly, O.; Kim, J.K. Between Innovation and Caution: How Consumers’ Risk Perception Shapes AI Product Decisions. J. Curr. Issues Res. Advert. 2025, 1–23.
47. Chung, J.; Kwon, H. Privacy fatigue and its effects on ChatGPT acceptance among undergraduate students: Is privacy dead? Educ. Inf. Technol. 2025, 30, 12321–12343.
48. Compeau, D.R.; Higgins, C.A. Computer self-efficacy: Development of a measure and initial test. MIS Q. 1995, 19, 189–210.
49. Venkatesh, V.; Bala, H. Technology Acceptance Model 3 and a Research Agenda on Interventions. Decis. Sci. 2008, 39, 273–315.
50. Araujo, T.; Helberger, N.; Kruikemeier, S.; de Vreese, C.H. In AI We Trust? Perceptions About Automated Decision-Making by Artificial Intelligence. AI Soc. 2020, 35, 611–623.
51. Alshutayli, A.A.; Asiri, F.M.; Arshi Abutaleb, Y.B.; Alomair, B.A.; Almasaud, A.K.; Almaqhawi, A. Assessing Public Knowledge and Acceptance of Using Artificial Intelligence Doctors as a Partial Alternative to Human Doctors in Saudi Arabia: A Cross-Sectional Study. Cureus 2024, 16, e64461.
52. Lokaj, B.; Pugliese, M.; Kinkel, K.; Lovis, C.; Schmid, J. Barriers and Facilitators of Artificial Intelligence Conception and Implementation for Breast Imaging Diagnosis in Clinical Practice: A Scoping Review. Eur. Radiol. 2023, 34, 2096–2109.
53. Kelly, S.; Kaye, S.-A.; Oviedo-Trespalacios, O. What factors contribute to the acceptance of artificial intelligence? A systematic review. Telemat. Inform. 2023, 77, 101925.
54. Camilleri, M.A. Factors affecting performance expectancy and intentions to use ChatGPT: Using SmartPLS to advance an information technology acceptance framework. Technol. Forecast. Soc. Change 2024, 201, 123247.
55. Elshaer, I.A.; Hasanein, A.M.; Sobaih, A.E.E. The Moderating Effects of Gender and Study Discipline in the Relationship between University Students’ Acceptance and Use of ChatGPT. Eur. J. Investig. Health Psychol. Educ. 2024, 14, 1981–1995.
56. Strzelecki, A.; ElArabawy, S. Investigation of the moderation effect of gender and study level on the acceptance and use of generative AI by higher education students: Comparative evidence from Poland and Egypt. Br. J. Educ. Technol. 2024, 55, 1209–1230.
57. Sætra, H.S. Generative AI: Here to stay, but for good? Technol. Soc. 2023, 75, 102372.
58. Liu, F.; Liang, C. Analyzing wealth distribution effects of artificial intelligence: A dynamic stochastic general equilibrium approach. Heliyon 2025, 11, e41943.
59. Wang, S.; Wang, F.; Zhu, Z.; Wang, J.; Tran, T.; Du, Z. Artificial intelligence in education: A systematic literature review. Expert. Syst. Appl. 2024, 252, 124167.
60. Alkamli, S.; Alabduljabbar, R. Understanding privacy concerns in ChatGPT: A data-driven approach with LDA topic modeling. Heliyon 2024, 10, e39087.
61. Haidemariam, T.; Gran, A.-B. On the problems of training generative AI: Towards a hybrid approach combining technical and non-technical alignment strategies. AI Soc. 2025.
62. Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.
63. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.
64. Rahman, M.M.; Babiker, A.; Ali, R. Motivation, Concerns, and Attitudes Towards AI: Differences by Gender, Age, and Culture. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 29 June–4 July 2024.
65. Armutat, S.; Wattenberg, M.; Mauritz, N. Artificial Intelligence—Gender-Specific Differences in Perception, Understanding, and Training Interest. Int. Conf. Gend. Res. 2024, 7, 36–43.
66. Moravec, V.; Hynek, N.; Skare, M.; Gavurova, B.; Kubak, M. Human or machine? The perception of artificial intelligence in journalism, its socio-economic conditions, and technological developments toward the digital future. Technol. Forecast. Soc. Change 2024, 200, 123162.
67. Bartneck, C.; Yogeeswaran, K.; Sibley, C.G. Personality and demographic correlates of support for regulating artificial intelligence. AI Ethics 2024, 4, 419–426.
68. Rogers, E.M. Diffusion of Innovations, 5th ed.; Free Press: New York, NY, USA, 2003.
69. Birkland, J.L.H. Understanding the ICT User Typology and the User Types. In Gerontechnology; Emerald Publishing Limited: Leeds, UK, 2019; pp. 95–106.
70. Tanković, A.; Prodan, M.; Benazić, D. Consumer segments in blockchain technology adoption. South. East. Eur. J. Econ. Bus. 2023, 18, 162–172.
71. Augustin, L.; Pfrang, S.; Wolffram, A.; Beyer, C. The value of the non-user: Developing (non-)user profiles for the development of a robot vacuum with the use of the (non-)persona. Proc. Des. Soc. 2021, 1, 3131–3140.
72. Gauttier, S. ‘I’ve got you under my skin’–The role of ethical consideration in the (non-) acceptance of insideables in the workplace. Technol. Soc. 2019, 56, 93–108.
73. Pappas, I.O.; Woodside, A.G. Fuzzy-set Qualitative Comparative Analysis (fsQCA): Guidelines for research practice in Information Systems and marketing. Int. J. Inf. Manag. 2021, 58, 102310.
74. Rutten, R.; Rubinson, C. A Vocabulary for QCA 2022. Available online: https://compasss.org/wp-content/uploads/2023/02/vocabulary.pdf (accessed on 3 June 2025).
75. Faul, F.; Erdfelder, E.; Buchner, A.; Lang, A.-G. Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behav. Res. Methods 2009, 41, 1149–1160.
76. Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.
77. Taber, K.S. The use of Cronbach’s alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 2018, 48, 1273–1296.
78. Ragin, C.C. User’s Guide to Fuzzy-Set/Qualitative Comparative Analysis 3.0 2018. Available online: https://sites.socsci.uci.edu/~cragin/fsQCA/download/fsQCAManual.pdf (accessed on 1 January 2020).
79. McFadden, D. Conditional Logit Analysis of Qualitative Choice Behavior. In Frontiers in Econometrics; Zarembka, P., Ed.; Academic Press: New York, NY, USA, 1974; pp. 105–142.

| Factor | Number | Percentage |
|---|---|---|
| Sex | | |
| Men | 653 | 42.60% |
| Women | 880 | 57.40% |
| Age | | |
| Up to 25 years | 294 | 19.18% |
| From 26 to 35 years | 562 | 36.66% |
| From 36 to 45 years | 677 | 44.16% |
| Nationality | | |
| Spanish | 1363 | 88.91% |
| Spanish and others | 81 | 5.28% |
| Other | 89 | 5.81% |
| Academic degree | | |
| Primary or less | 98 | 6.39% |
| Secondary | 551 | 35.94% |
| University | 884 | 57.66% |
| Monthly household income | | |
| Less than €900 | 76 | 4.96% |
| From €900 to €1800 | 321 | 20.94% |
| From €1801 to €3000 | 493 | 32.16% |
| From €3001 to €6000 | 504 | 32.88% |
| From €6001 | 89 | 5.81% |
| No answer | 50 | 3.26% |

| Variables | Responses |
|---|---|
| Output variable: frequency of using GAI (GAIU) | |
| GAIU1 = ChatGPT; GAIU2 = Gemini; GAIU3 = Microsoft Copilot; GAIU4 = Perplexity; GAIU5 = Other | Never = 0; Once = 1; Multiple times a year = 2; Multiple times a month = 3; Multiple times a week = 4; Daily = 5 |
| Input variables | |
| Usefulness (USEFUL): GAI is useful for USEFUL1 = Labour market; USEFUL2 = Environment; USEFUL3 = Healthcare; USEFUL4 = Economy | From disagreement = 1 to agreement = 3 |
| Innovativeness (INNOV): What is your degree of comfort in the following circumstances: INNOV1 = Undergoing surgery performed by a robot; INNOV2 = Traveling in an autonomous automobile; INNOV3 = Using a chatbot for customer service | From complete disagreement = 1 to complete agreement = 10 |
| Privacy risk (PRI): Is privacy on the internet important to you? | From nothing = 1 to a lot = 4 |
| Knowledge (KNOWL): Assess your knowledge of and familiarity with artificial intelligence. | From complete unawareness = 1 to complete awareness = 10 |
| Social performance (SPER): AI may promote SPER1 = Human analytical and reflective capacity; SPER2 = The protection of people’s rights; SPER3 = Culture, values, and ways of life; SPER4 = Humanity as a whole | Harmful = 1; Neutral = 2; Beneficial = 3 |
| Need for regulation (NREG): I believe that NREG1 = The design, programming, and training of artificial intelligence systems should be subject to regulatory oversight; NREG2 = Companies and organizations must be required to disclose whenever artificial intelligence is used in place of human involvement; NREG3 = The application and deployment of artificial intelligence ought to be regulated; NREG4 = Artificial intelligence poses risks to the protection of intellectual property rights; NREG5 = The establishment of stronger ethical guidelines and legal safeguards for artificial intelligence is among the most critical challenges currently confronting humanity | From full disagreement = 1 to full agreement = 5; neutral value = 3 |
| Sex (FEM) | Male = 0 and Female = 1 |
| Generation Z (GENZ) | Determined based on age |

| Variables | Ordinal Logit Regression (RO1) | fsQCA (RO2) |
|---|---|---|
| Output variable: Frequency of using GAI (GAIU) | The standardized value of GAIU = Max{GAIU1, GAIU2, …, GAIU5}. The categories are GAIU ∈ {0, 1, 2, 3, 4, 5} | |
| Input variables | | |
| Usefulness (USEFUL) | The standardized first principal component of its items | $m_{USEFUL}$ is equal to 1 for values of USEFUL at or above the 90th percentile, and 0 for values at or below the 10th percentile. Between the 10th and 90th percentiles, the degree of membership is linearly graded. |
| Innovativeness (INNOV) | The standardized first principal component of its items | $m_{INNOV}$ is equal to 1 for values of INNOV at or above the 90th percentile, and 0 for values at or below the 10th percentile. Between the 10th and 90th percentiles, the degree of membership is linearly graded. |
| Privacy risk (PRI) | The standardized value of the item | $m_{PRI}$ is equal to 1 for values of PRI at or above the 90th percentile, and 0 for values at or below the 10th percentile. Between the 10th and 90th percentiles, the degree of membership is linearly graded. |
| Knowledge (KNOWL) | The standardized value of the item | $m_{KNOWL}$ is equal to 1 for values of KNOWL at or above the 90th percentile, and 0 for values at or below the 10th percentile. Between the 10th and 90th percentiles, the degree of membership is linearly graded. |
| Social performance (SPER) | The standardized first principal component of its items | $m_{SPER}$ is equal to 1 for values of SPER at or above the 90th percentile, and 0 for values at or below the 10th percentile. Between the 10th and 90th percentiles, the degree of membership is linearly graded. |
| Need for regulation (NREG) | The standardized first principal component of its items | $m_{NREG}$ is equal to 1 for values of NREG at or above the 90th percentile, and 0 for values at or below the 10th percentile. Between the 10th and 90th percentiles, the degree of membership is linearly graded. |
| Sex (FEM) | Male = 0 and Female = 1 | |
| Generation Z (GENZ) | Continuous variable in the [0, 1] range. Being 25 years old or younger indicates full membership in Generation Z (value = 1), while being older than 35 indicates full membership in Generation Y (value = 0). For individuals between 25 and 35 years old, membership is linearly graded. | |
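The calibration rules in the table above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function names are hypothetical, the percentile interpolation method (`method="inclusive"` in the standard library) is an assumption because the table does not specify one, and the GENZ boundaries at ages 25 and 35 are read directly from the last row.

```python
import statistics
from typing import Sequence


def linear_membership(value: float, lower: float, upper: float) -> float:
    """0 at or below `lower`, 1 at or above `upper`, linearly graded in between."""
    if upper <= lower:
        # Happens when the 10th and 90th percentiles coincide (e.g. a very
        # skewed item); the table does not say how such cases were handled.
        raise ValueError("upper threshold must exceed lower threshold")
    if value <= lower:
        return 0.0
    if value >= upper:
        return 1.0
    return (value - lower) / (upper - lower)


def calibrate(scores: Sequence[float]) -> list[float]:
    """Fuzzy membership of each score using the 10th/90th percentile anchors."""
    # Assumption: inclusive percentile interpolation; other conventions shift
    # the anchors slightly.
    deciles = statistics.quantiles(scores, n=10, method="inclusive")
    p10, p90 = deciles[0], deciles[-1]
    return [linear_membership(s, p10, p90) for s in scores]


def genz_membership(age: float) -> float:
    """1 at age 25 or younger, 0 at age 35 or older, linear in between."""
    return 1.0 - linear_membership(age, 25.0, 35.0)


if __name__ == "__main__":
    toy_scores = list(range(11))  # hypothetical factor scores, not study data
    print(calibrate(toy_scores))
    print([genz_membership(a) for a in (22, 25, 30, 35, 41)])
```

A linear grading is the simplest direct calibration; it keeps the ordering of respondents and only compresses the two tails into full membership and full non-membership.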

| Variables | Mean | SD | FL | CA | CR | AVE |
|---|---|---|---|---|---|---|
| Output variable (GAIU) | | | | | | |
| GAIU1 = ChatGPT | 2.40 | 1.85 | | | | |
| GAIU2 = Gemini | 0.57 | 1.23 | | | | |
| GAIU3 = Microsoft Copilot | 0.73 | 1.44 | | | | |
| GAIU4 = Perplexity | 0.16 | 0.662 | | | | |
| GAIU5 = Other | 0.93 | 1.55 | | | | |
| GAIU | 2.66 | 1.82 | | | | |
| Input variables | | | | | | |
| Usefulness (USEFUL) | | | | 0.631 | 0.781 | 47.70% |
| USEFUL1 | 1.76 | 0.832 | 0.713 | | | |
| USEFUL2 | 2.19 | 0.857 | 0.631 | | | |
| USEFUL3 | 2.59 | 0.703 | 0.655 | | | |
| USEFUL4 | 2.11 | 0.838 | 0.757 | | | |
| Innovativeness (INNOV) | | | | 0.612 | 0.791 | 56.90% |
| INNOV1 | 4.17 | 2.96 | 0.767 | | | |
| INNOV2 | 4.45 | 2.79 | 0.844 | | | |
| INNOV3 | 4.98 | 2.95 | 0.639 | | | |
| Privacy risk (PRI) | 3.75 | 0.511 | 1 | 1 | 1 | 100% |
| Knowledge (KNOWL) | 5.18 | 2.12 | 1 | 1 | 1 | 100% |
| Social performance (SPER) | | | | 0.668 | 0.803 | 51.40% |
| SPER1 | 1.84 | 0.889 | 0.614 | | | |
| SPER2 | 1.67 | 0.756 | 0.733 | | | |
| SPER3 | 1.62 | 0.754 | 0.741 | | | |
| SPER4 | 1.83 | 0.837 | 0.771 | | | |
| Need for regulation (NREG) | | | | 0.805 | 0.869 | 57.50% |
| NREG1 | 4.3 | 1.02 | 0.835 | | | |
| NREG2 | 4.46 | 0.902 | 0.681 | | | |
| NREG3 | 4.42 | 0.963 | 0.851 | | | |
| NREG4 | 3.9 | 1.19 | 0.642 | | | |
| NREG5 | 4.19 | 1.06 | 0.76 | | | |
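As a plausibility check on the table above, AVE and CR can be recomputed from the reported factor loadings (FL). The sketch below assumes the usual formulas for standardized loadings (AVE as the mean squared loading; CR as the squared sum of loadings over that quantity plus the summed error variances); the table does not state which formulas were used, so small differences from the reported figures are expected. Function names are hypothetical.

```python
from typing import Sequence


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """Mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1.0 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_variance)


# Factor loadings copied from the FL column of the table.
LOADINGS = {
    "USEFUL": [0.713, 0.631, 0.655, 0.757],
    "INNOV": [0.767, 0.844, 0.639],
    "SPER": [0.614, 0.733, 0.741, 0.771],
    "NREG": [0.835, 0.681, 0.851, 0.642, 0.76],
}

if __name__ == "__main__":
    for name, fl in LOADINGS.items():
        ave = average_variance_extracted(fl)
        cr = composite_reliability(fl)
        print(f"{name}: AVE = {ave:.3f}, CR = {cr:.3f}")
```

With these formulas the recomputed AVE values agree with the reported ones to within rounding, which also confirms that the three figures on each construct row belong in the CA, CR, and AVE columns.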

| Factors | Coefficient | SE | OR | z-Statistic | p-Value | Acceptance |
|---|---|---|---|---|---|---|
| USEFUL | 0.379 | 0.060 | 1.461 | 6.286 | <0.0001 | H1 = Accepted |
| INNOV | 0.311 | 0.058 | 1.364 | 5.400 | <0.0001 | H2 = Accepted |
| PRI | −0.019 | 0.048 | 0.981 | −0.390 | 0.6965 | H3 = Rejected |
| KNOWL | 0.935 | 0.056 | 2.547 | 16.560 | <0.0001 | H4 = Accepted |
| SPER | 0.090 | 0.058 | 1.094 | 1.546 | 0.1221 | H5 = Rejected |
| NREG | −0.175 | 0.054 | 0.840 | −3.230 | 0.0012 | H6 = Accepted |
| FEM | −0.009 | 0.100 | 0.991 | −0.092 | 0.9265 | H7 = Rejected |
| GENZ | 0.553 | 0.117 | 1.739 | 4.714 | <0.0001 | H8 = Accepted |
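The columns of the regression table are linked by simple identities: the odds ratio is the exponential of the coefficient, and the p-value is the two-sided normal tail probability of the z-statistic. The following sketch recomputes both from the reported figures as a reading aid. The helper names are hypothetical, and the normal approximation for the p-value is an assumption about how the software derived it.

```python
import math

# (coefficient, reported z-statistic) copied from the table.
ROWS = {
    "USEFUL": (0.379, 6.286),
    "INNOV": (0.311, 5.400),
    "PRI": (-0.019, -0.390),
    "KNOWL": (0.935, 16.560),
    "SPER": (0.090, 1.546),
    "NREG": (-0.175, -3.230),
    "FEM": (-0.009, -0.092),
    "GENZ": (0.553, 4.714),
}


def odds_ratio(coefficient: float) -> float:
    """Multiplicative change in the odds of a higher usage category per unit of x."""
    return math.exp(coefficient)


def two_sided_p(z: float) -> float:
    """Two-sided p-value under a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2.0))


if __name__ == "__main__":
    for name, (coef, z) in ROWS.items():
        print(f"{name}: OR = {odds_ratio(coef):.3f}, p = {two_sided_p(z):.4f}")
```

Read this way, a one-standard-deviation increase in KNOWL multiplies the odds of being in a higher usage category by roughly 2.5, while the same increase in NREG multiplies them by roughly 0.84.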

| Condition | Use of GAI: CONS | Use of GAI: COV | Non-use of GAI: CONS | Non-use of GAI: COV |
|---|---|---|---|---|
| USEFUL | 0.73 | 0.67 | 0.49 | 0.51 |
| INNOV | 0.74 | 0.68 | 0.48 | 0.50 |
| PRI | 0.52 | 0.76 | 0.48 | 0.81 |
| KNOWL | 0.76 | 0.77 | 0.46 | 0.53 |
| SPER | 0.72 | 0.62 | 0.49 | 0.48 |
| NREG | 0.55 | 0.66 | 0.59 | 0.79 |
| FEM | 0.46 | 0.37 | 0.54 | 0.50 |
| GENZ | 0.68 | 0.44 | 0.44 | 0.32 |
| ¬USEFUL | 0.56 | 0.54 | 0.66 | 0.72 |
| ¬INNOV | 0.54 | 0.52 | 0.66 | 0.72 |
| ¬PRI | 0.58 | 0.24 | 0.42 | 0.19 |
| ¬KNOWL | 0.52 | 0.46 | 0.73 | 0.73 |
| ¬SPER | 0.55 | 0.56 | 0.63 | 0.72 |
| ¬NREG | 0.73 | 0.51 | 0.51 | 0.40 |
| ¬FEM | 0.59 | 0.63 | 0.41 | 0.50 |
| ¬GENZ | 0.52 | 0.64 | 0.54 | 0.77 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pérez-Portabella, A.; Arias-Oliva, M.; Padilla-Castillo, G.; Andrés-Sánchez, J.d. Correlational and Configurational Perspectives on the Determinants of Generative AI Adoption Among Spanish Zoomers and Millennials. Societies 2025, 15, 285. https://doi.org/10.3390/soc15100285