Modelling Training Adaptation in Swimming Using Artificial Neural Network Geometric Optimisation

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Approach

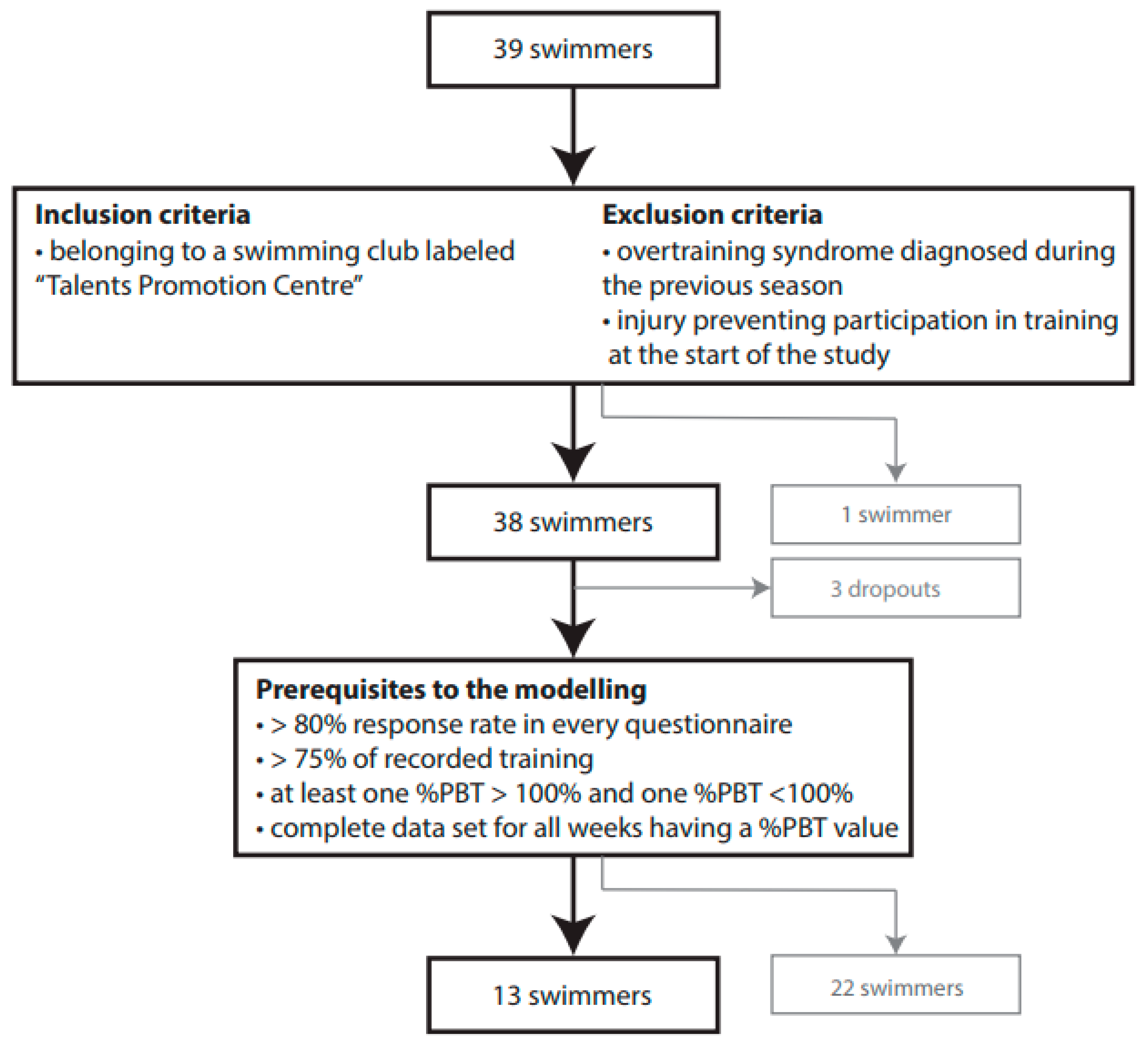

2.2. Recruitment

2.3. Exclusion and Inclusion Criteria

2.4. Data Collection

2.4.1. “Well-Being Questionnaire”

2.4.2. “Profile of Mood State—Adolescents (POMS-A)”

2.4.3. Training Log

2.4.4. Performance Outcome

2.5. Modelling Training Adaptation Using ANN Geometric Optimisation

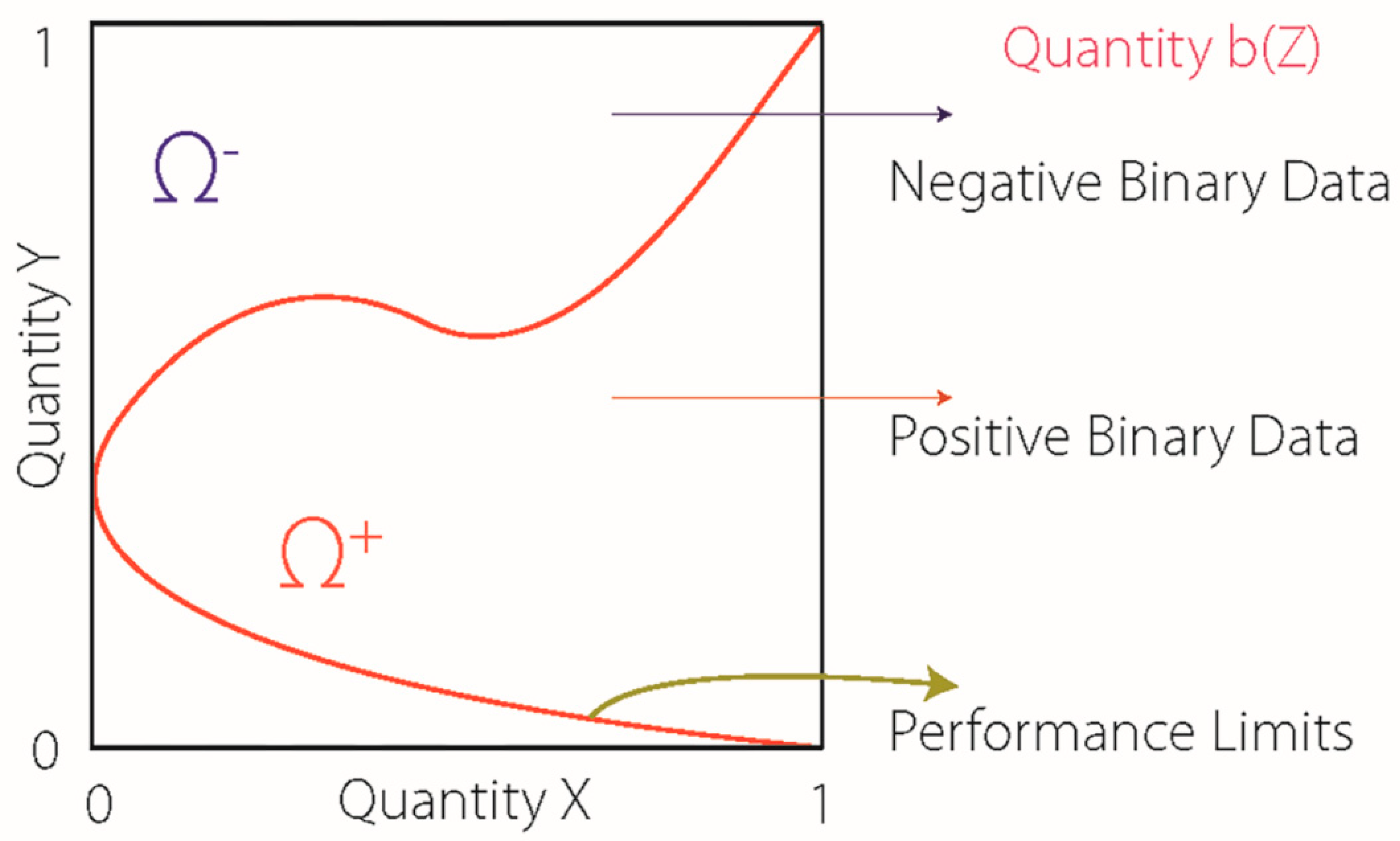

2.5.1. Concept

2.5.2. Mathematical Considerations Regarding the Development of the Model

2.5.3. Inputs to the Model

2.5.4. Overfitting

2.5.5. Goodness of Fit of the Model

2.5.6. Geometric Activity Performance Index

2.5.7. Prerequisites for the Modelling

3. Results

3.1. Swimmers’ Characteristics

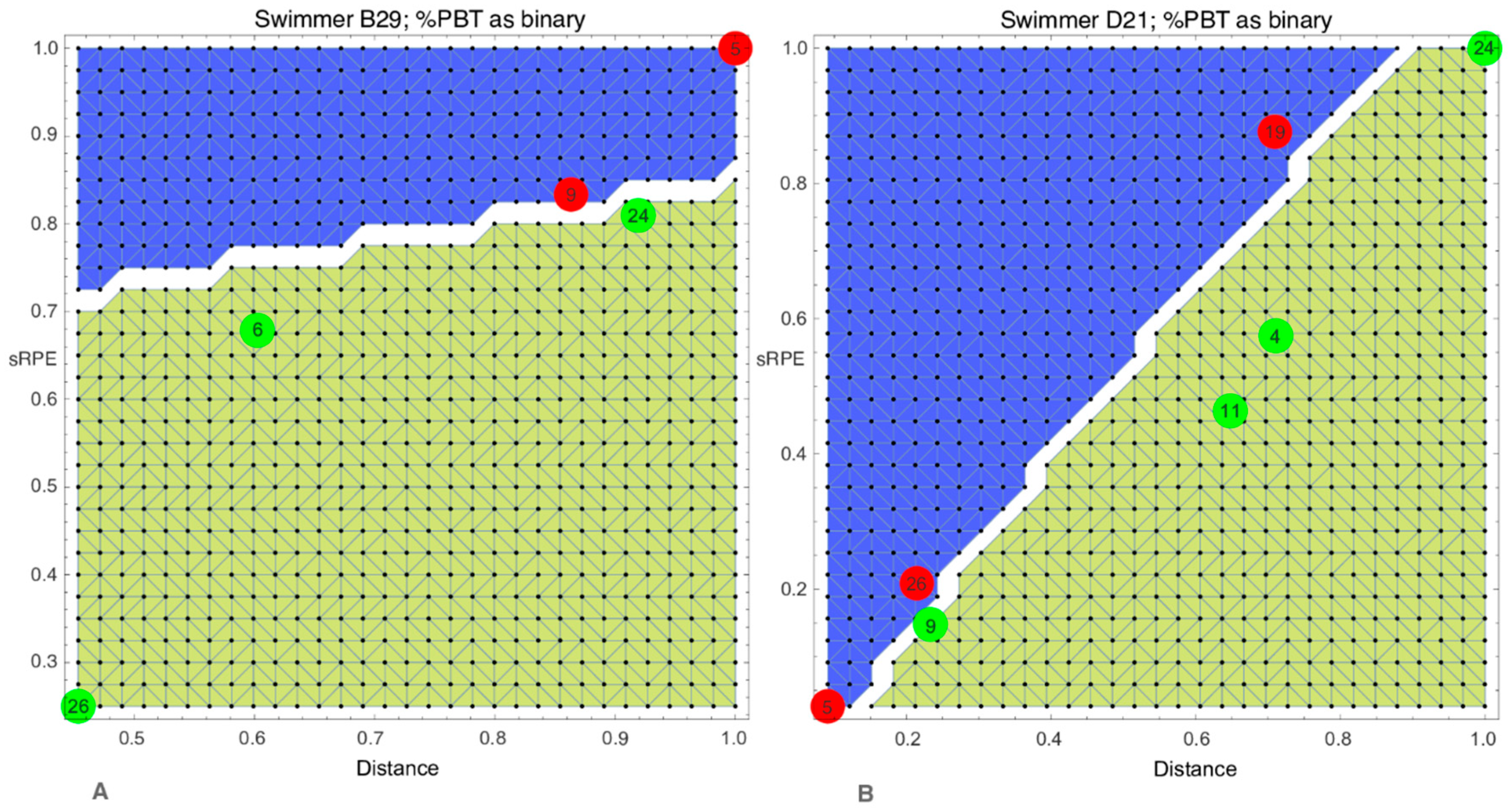

3.2. Modelling Training Adaptation using ANN Geometric Optimisation

3.2.1. Goodness of Fit of the Model

3.2.2. Geometric Activity Performance Index

4. Discussion

4.1. Strengths and Limitations

4.2. Future Studies

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Data Sharing Statement

| Frequency | Data Type | Reminders |
|---|---|---|
| Daily (after every training session) | Training log: rating of perceived exertion (RPE) using the modified Borg CR-10 RPE scale; sport type; distance (meters, if swimming); duration (minutes) | If no training was entered on the previous day, the web platform automatically sent a reminder email on the following day. |
| Twice a week (every Tuesday and Friday) | The Well-being questionnaire | An email was sent on the day of completion and, if needed, up to two additional reminders were sent (on the day after and on the day after next). |
| Fortnightly (every second Sunday) | The POMS-A | An email was sent on the day of completion and, if needed, up to two additional reminders were sent (on the day after and on the day after next). |

| Combination | Time series x | Time series y | Time series z |
|---|---|---|---|
| 1 | Distance | Session-RPE | %PBT (binary) |
| 2 | Session-RPE | Recovery | %PBT (binary) |
| 3 | Training strain | Recovery | %PBT (binary) |
| 4 | Training monotony | Recovery | %PBT (binary) |
| 5 | Distance | Acute:Chronic Workload Ratio | %PBT (binary) |

| Load Parameters: External Load | Load Parameters: Internal Load | Coping Parameters |
|---|---|---|
| Distance | Session-RPE; training strain; training monotony; Acute:Chronic Workload Ratio | Recovery; sleep quality; sleep quantity; soreness; pleasure; stress; Total Mood Disturbance |
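The internal load parameters listed above are derived quantities. The following is a minimal sketch of how they are commonly computed in the training-load literature, not necessarily the exact procedure used in this study. It assumes that session-RPE is RPE multiplied by session duration in minutes, that monotony is the weekly mean of daily load divided by its sample standard deviation, that strain is weekly load multiplied by monotony, and that the Acute:Chronic Workload Ratio compares the latest week with a rolling four-week mean. All function names and the example values are hypothetical.

```python
import math
from statistics import mean, stdev


def session_rpe(rpe: float, duration_min: float) -> float:
    """Session load in arbitrary units (AU): RPE (CR-10) x duration (minutes)."""
    return rpe * duration_min


def weekly_load_metrics(daily_loads: list[float]) -> dict[str, float]:
    """Weekly load, monotony and strain from seven daily session-RPE totals.

    Assumption: monotony uses the sample standard deviation; a week with
    identical daily loads has zero spread, so monotony is reported as infinity.
    """
    weekly_load = sum(daily_loads)
    spread = stdev(daily_loads)
    monotony = mean(daily_loads) / spread if spread > 0 else math.inf
    strain = weekly_load * monotony
    return {"weekly_load": weekly_load, "monotony": monotony, "strain": strain}


def acute_chronic_ratio(weekly_loads: list[float], chronic_weeks: int = 4) -> float:
    """Latest weekly load divided by the mean of the last `chronic_weeks` weeks.

    Assumption: the chronic window includes the acute week (coupled ratio).
    """
    if len(weekly_loads) < chronic_weeks:
        raise ValueError("not enough weeks to compute the chronic load")
    acute = weekly_loads[-1]
    chronic = mean(weekly_loads[-chronic_weeks:])
    return acute / chronic


if __name__ == "__main__":
    # Hypothetical week: two rest days and five sessions of varying load.
    week = [300, 400, 0, 500, 300, 600, 0]
    print(weekly_load_metrics(week))
    print(acute_chronic_ratio([2000, 2000, 2000, 3000]))
```

A coupled four-week window is only one convention; uncoupled or exponentially weighted variants would change the ratio, so the window definition should be checked against the study's methods before reuse.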

| Swimmer | Sex | Age (years) | Age Quartile | Best Discipline (meters) | FINA Points 2013 | FINA Points Quartile | Best %PBT | Best %PBT Quartile | Weekly Mean Internal Training Load (AU) | Internal Load Quartile | Weekly Mean Distance (meters) | Distance Quartile |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A2 | ♂ | 18 | 3 | 400 freestyle ld | 765 | 4 | 100.1 | 1 | 4258.46 | 4 | 30,100 | 4 |
| B5 | ♀ | 14 | 1 | 200 breaststroke ld | 633 | 3 | 110 | 4 | 3144.23 | 3 | 18,826.92 | 2 |
| B6 | ♀ | 15 | 1 | 100 freestyle sd | 459 | 1 | 100.7 | 1 | 2775 | 2 | 17,148 | 2 |
| B29 | ♀ | 15 | 1 | 50 breaststroke ld | 504 | 1 | 105.9 | 3 | 2377.31 | 1 | 15,426.92 | 1 |
| C10 | ♂ | 19 | 4 | 100 medley sd | 582 | 2 | 103.1 | 2 | 2504.81 | 1 | 13,905.77 | 1 |
| C13 | ♂ | 16 | 2 | 100 freestyle ld | 471 | 1 | 103.7 | 3 | 3353.08 | 3 | 23,386.54 | 3 |
| C14 | ♂ | 16 | 2 | 400 medley ld | 445 | 1 | 109.2 | 4 | 2365.38 | 1 | 16,350 | 1 |
| D21 | ♀ | 15 | 1 | 400 freestyle ld | 640 | 3 | 106.1 | 4 | 4417.71 | 4 | 27,253.33 | 4 |
| D22 | ♀ | 15 | 1 | 200 breaststroke ld | 617 | 2 | 102.4 | 1 | 2946.4 | 2 | 32,212.8 | 4 |
| D35 | ♀ | 18 | 3 | 100 freestyle ld | 631 | 3 | 100.7 | 1 | 2850.38 | 2 | 25,732.69 | 3 |
| E24 | ♂ | 19 | 4 | 100 freestyle ld | 646 | 4 | 104.9 | 3 | 2112.5 | 1 | 15,411.46 | 1 |
| E27 | ♀ | 18 | 3 | 100 backstroke ld | 619 | 2 | 102.7 | 2 | 4703.27 | 4 | 23,744.23 | 3 |
| E28 | ♂ | 20 | 4 | 50 butterfly ld | 673 | 4 | 103.0 | 2 | 3128.46 | 3 | 22,900 | 2 |

| Swimmer | Combination 1 | Combination 2 | Combination 3 | Combination 4 | Combination 5 |
|---|---|---|---|---|---|
| A2 | 75 | 100 | 100 | 100 | 100 |
| B5 | 88 | 75 | 88 | 88 | 88 |
| B6 | 100 | 100 | 83 | 100 | 100 |
| B29 | 100 | 80 | 80 | 100 | 100 |
| C10 | 100 | 100 | 100 | 100 | 100 |
| C13 | 100 | 100 | 100 | 100 | 100 |
| C14 | 100 | 100 | 100 | 100 | 75 |
| D21 | 100 | 100 | 100 | 100 | 86 |
| D22 | 100 | 100 | 100 | 86 | 100 |
| D35 | 100 | 100 | 100 | 100 | 100 |
| E24 | 100 | 100 | 100 | 100 | 100 |
| E27 | 100 | 100 | 100 | 100 | 100 |
| E28 | 75 | 63 | 88 | 88 | 75 |
| Average | 95 | 94 | 95 | 97 | 94 |
| Global average | 95 | | | | |
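The Average and Global average rows of the table above can be re-derived from the per-swimmer values. The short check below is a sketch for readers who want to reproduce that arithmetic. The dictionary is transcribed from the table, and the variable names are hypothetical. It assumes the averages are unweighted means rounded to the nearest integer.

```python
from statistics import mean

# Per-swimmer values for input combinations 1 to 5, transcribed from the table.
fit_by_swimmer = {
    "A2": [75, 100, 100, 100, 100],
    "B5": [88, 75, 88, 88, 88],
    "B6": [100, 100, 83, 100, 100],
    "B29": [100, 80, 80, 100, 100],
    "C10": [100, 100, 100, 100, 100],
    "C13": [100, 100, 100, 100, 100],
    "C14": [100, 100, 100, 100, 75],
    "D21": [100, 100, 100, 100, 86],
    "D22": [100, 100, 100, 86, 100],
    "D35": [100, 100, 100, 100, 100],
    "E24": [100, 100, 100, 100, 100],
    "E27": [100, 100, 100, 100, 100],
    "E28": [75, 63, 88, 88, 75],
}

# Transpose rows into columns, then average each combination across swimmers.
column_means = [round(mean(col)) for col in zip(*fit_by_swimmer.values())]

# Unweighted mean over all 65 swimmer-by-combination cells.
global_mean = round(mean(v for row in fit_by_swimmer.values() for v in row))

print(column_means)  # [95, 94, 95, 97, 94]
print(global_mean)   # 95
```

The recomputed values match the Average and Global average rows as reported.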

| GAPI | Correlation Test | Quartile: Statistic | Quartile: Original p-Value | Quartile: Corrected p-Value | Best %PBT: Statistic | Best %PBT: Original p-Value | Best %PBT: Corrected p-Value |
|---|---|---|---|---|---|---|---|
| GAPI_1 | Spearman rank | 0.85 | <0.01 | <0.01 | 0.85 | <0.01 | <0.01 |
| GAPI_1 | Blomqvist β | 0.93 | <0.01 | <0.01 | 0.92 | <0.01 | <0.01 |
| GAPI_2 | Spearman rank | 0.35 | 0.25 | 0.51 | 0.33 | 0.28 | 0.28 |
| GAPI_2 | Blomqvist β | 0.46 | 0.25 | 0.75 | 0.42 | 0.08 | 0.32 |
| GAPI_3 | Spearman rank | 0.56 | 0.05 | 0.13 | 0.59 | 0.03 | 0.13 |
| GAPI_3 | Blomqvist β | 0.46 | 0.25 | 0.25 | 0.42 | 0.08 | 0.16 |
| GAPI_4 | Spearman rank | 0.62 | 0.02 | 0.02 | 0.65 | 0.01 | 0.04 |
| GAPI_4 | Blomqvist β | 0.74 | 0.02 | 0.03 | 0.67 | <0.01 | <0.01 |
| GAPI_5 | Spearman rank | 0.39 | 0.20 | 0.40 | 0.47 | 0.10 | 0.31 |
| GAPI_5 | Blomqvist β | 0.46 | 0.25 | 0.25 | 0.42 | 0.08 | 0.32 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Carrard, J.; Kloucek, P.; Gojanovic, B. Modelling Training Adaptation in Swimming Using Artificial Neural Network Geometric Optimisation. Sports 2020, 8, 8. https://doi.org/10.3390/sports8010008
