High-Precision Pest Management Based on Multimodal Fusion and Attention-Guided Lightweight Networks

Simple Summary

Abstract

1. Introduction

- A multi-branch feature fusion network with cross-modal alignment is developed, in which RGB and infrared (IR) image features are extracted by dedicated visual encoders. A cross-modal attention mechanism aligns semantically corresponding regions across modalities, improving fusion quality and reducing semantic inconsistency.

- An environment-guided modality selection and reweighting mechanism is constructed using real-time temperature, humidity, and illumination data. A modality scheduling sub-network dynamically adjusts the fusion weights of RGB and IR channels based on environmental conditions, improving model robustness and adaptability in extreme weather or complex lighting scenarios.

- A decoupled recognition head is introduced for joint detection of pest and predator targets. By using separate detection and classification branches, it mitigates category imbalance and semantic confusion, reducing cross-category misclassification and improving overall joint recognition accuracy.

- The code and datasets supporting this study are publicly available on GitHub to facilitate reproducibility and collaboration in the field (access date: 15 August 2025): https://github.com/Aurelius-04/pestdetection.

2. Related Work

2.1. Pest Target Detection Methods

2.2. Applications of Multimodal Sensor Fusion in Agricultural Contexts

2.3. Advances in Deep Fusion and Attention Mechanisms for Multimodal Learning

3. Materials and Methods

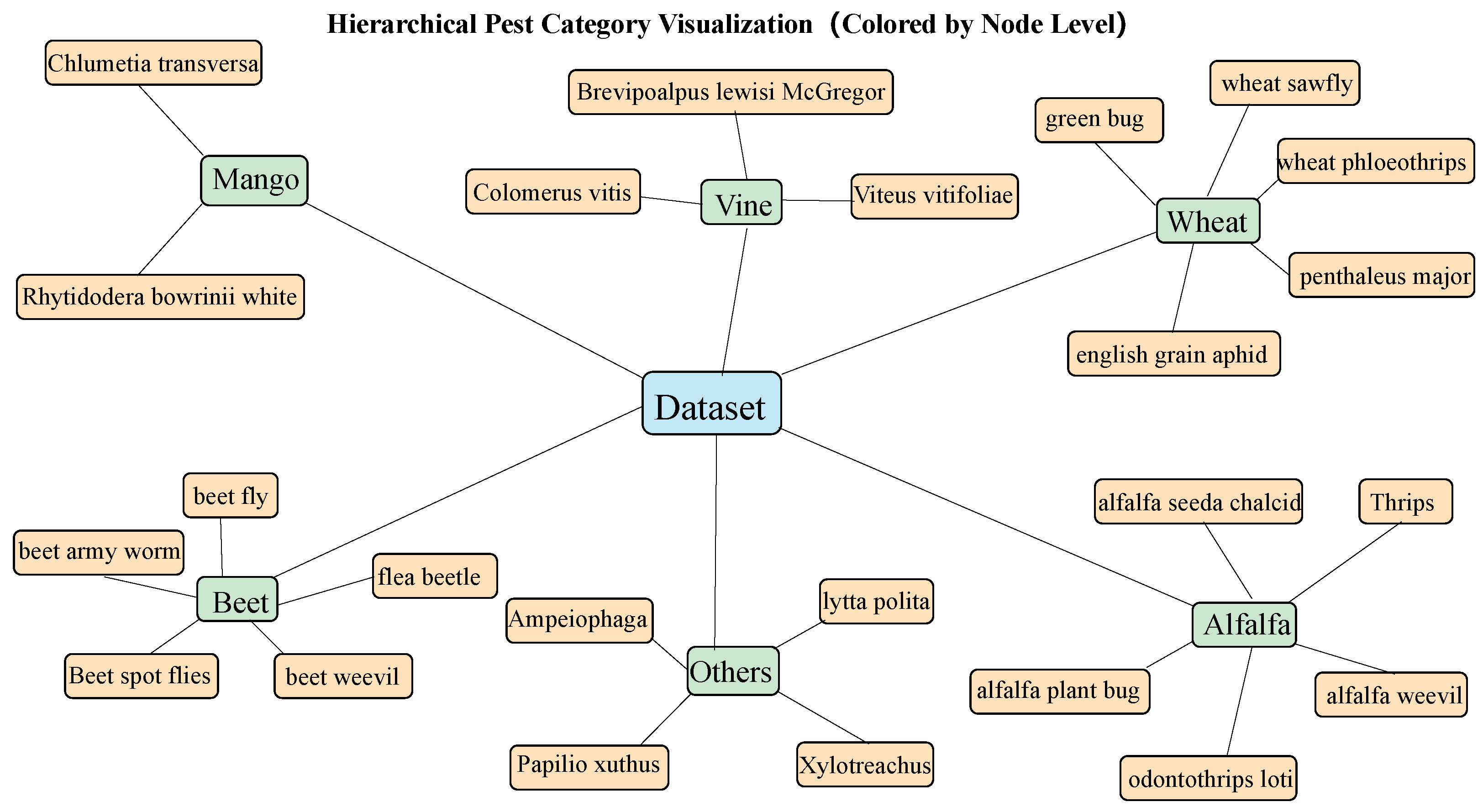

3.1. Data Collection

3.2. Data Preprocessing and Augmentation

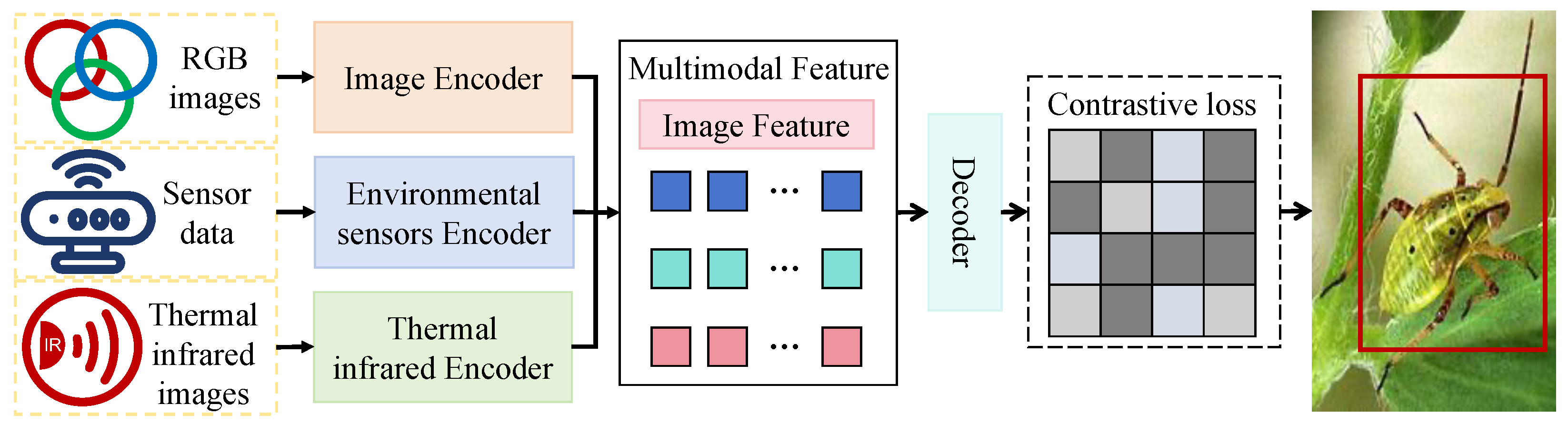

3.3. Proposed Method

3.3.1. Overall

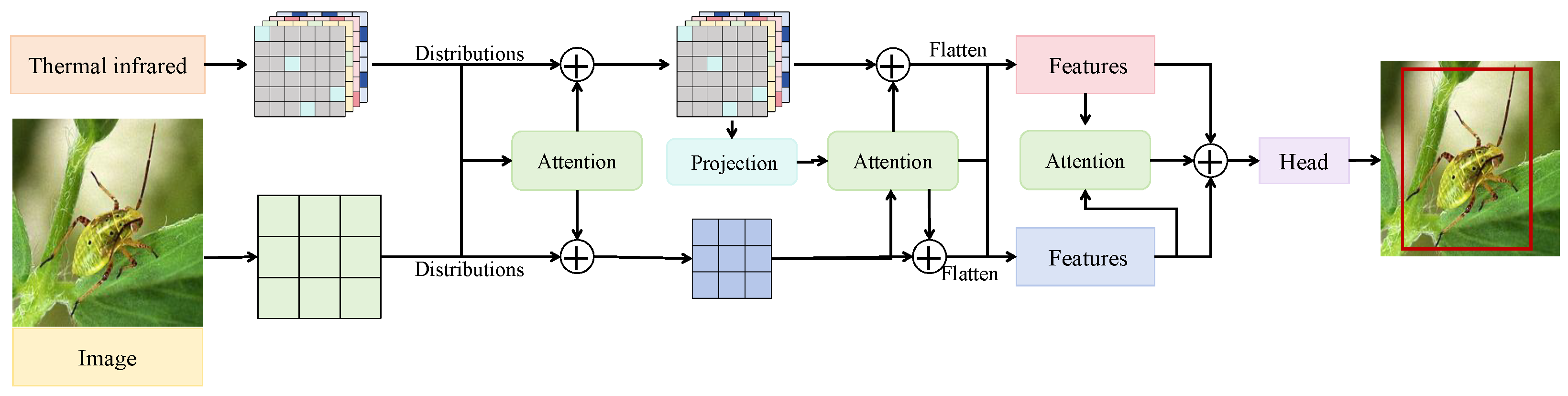

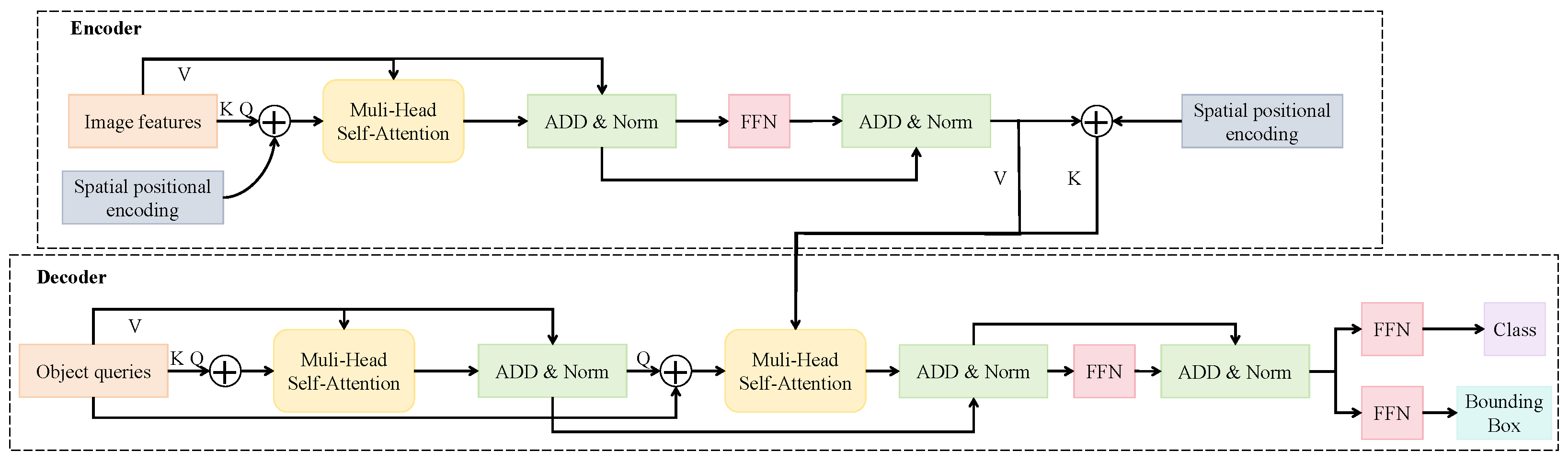

3.3.2. Cross-Modal Attention-Guided Feature Fusion Module

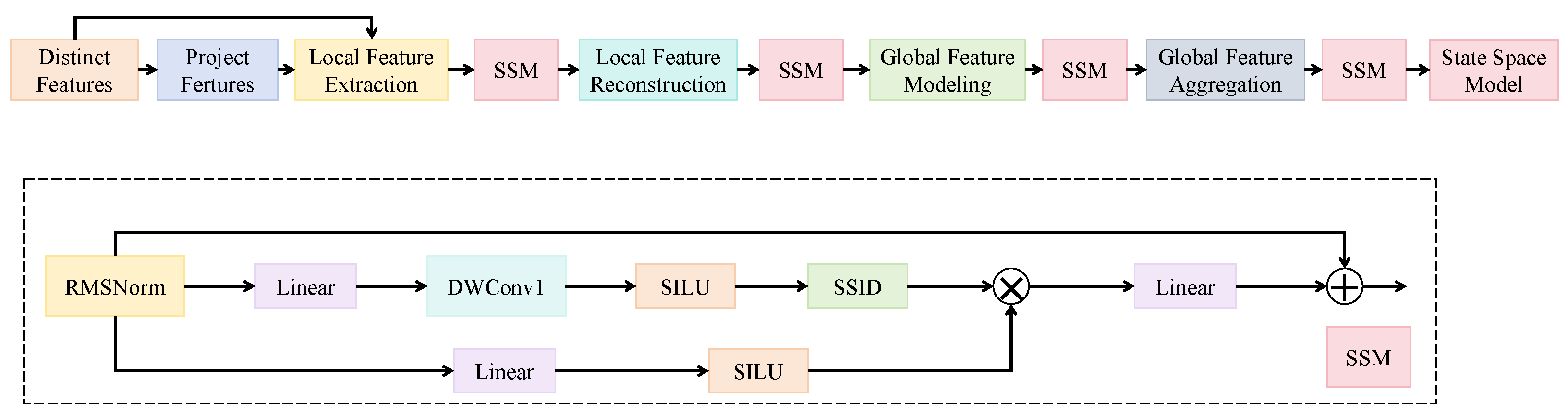
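
The cross-modal attention step, in which features from the RGB encoder attend to features from the IR encoder so that semantically corresponding regions are aligned before fusion, can be illustrated with a minimal NumPy sketch. It assumes both encoders output feature maps flattened to token sequences with the same channel width `d`, uses single-head scaled dot-product attention with random (untrained) projection matrices, and fuses by adding the attended IR context back to the RGB tokens as a residual. The function name `cross_modal_attention`, the projection shapes and the residual fusion are illustrative assumptions, not the authors' module.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_attention(rgb_tokens, ir_tokens, w_q, w_k, w_v):
    """Hypothetical single-head cross-attention: RGB tokens query IR tokens.

    rgb_tokens: (N_rgb, d) flattened RGB feature map
    ir_tokens:  (N_ir, d)  flattened IR feature map
    w_q, w_k, w_v: (d, d) projection matrices (assumed to be learned elsewhere)
    Returns the fused RGB tokens (N_rgb, d) and the attention map (N_rgb, N_ir).
    """
    q = rgb_tokens @ w_q                       # queries come from the RGB branch
    k = ir_tokens @ w_k                        # keys come from the IR branch
    v = ir_tokens @ w_v                        # values come from the IR branch
    scores = q @ k.T / np.sqrt(q.shape[-1])    # scaled dot-product similarity
    attn = softmax(scores, axis=-1)            # each RGB token spreads weight over IR tokens
    ir_context = attn @ v                      # IR evidence aligned to each RGB location
    fused = rgb_tokens + ir_context            # residual fusion (an assumption)
    return fused, attn

rng = np.random.default_rng(0)
d = 16
rgb = rng.standard_normal((8 * 8, d))   # e.g. an 8x8 RGB feature map
ir = rng.standard_normal((4 * 4, d))    # coarser IR map (640x480 vs 1920x1080 input)
w_q, w_k, w_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
fused, attn = cross_modal_attention(rgb, ir, w_q, w_k, w_v)
print(fused.shape, attn.shape)  # (64, 16) (64, 16)
```

Because every row of the attention map sums to 1, each RGB location receives a convex combination of IR features, so the two token grids do not need to share a resolution for the alignment to be computed.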

3.3.3. Environment-Guided Modality Attention Mechanism
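
The environment-guided reweighting, in which real-time temperature, humidity and illumination readings drive the fusion weights of the RGB and IR branches, can be sketched as a small gating network. In this sketch the normalization ranges, the two-layer MLP, its random weights, and the names `modality_weights` and `fuse` are all hypothetical; the only property taken from the text is that environmental readings are mapped to per-modality weights that rescale the branch features before fusion.

```python
import numpy as np

# Hypothetical sensor ranges used to min-max normalize raw readings (assumption).
ENV_MIN = np.array([-10.0, 0.0, 0.0])        # temperature (deg C), RH (%), light (LDR units)
ENV_MAX = np.array([45.0, 100.0, 1023.0])

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def modality_weights(env, w1, b1, w2, b2):
    """Map one reading [temp, RH, light] to fusion weights [w_rgb, w_ir] (hypothetical gate)."""
    z = (np.asarray(env, dtype=float) - ENV_MIN) / (ENV_MAX - ENV_MIN)  # scale to [0, 1]
    h = np.tanh(z @ w1 + b1)          # small hidden layer
    return softmax(h @ w2 + b2)       # two positive weights that sum to 1

def fuse(rgb_feat, ir_feat, weights):
    # Rescale each modality's feature map, then sum (one simple fusion choice).
    return weights[0] * rgb_feat + weights[1] * ir_feat

rng = np.random.default_rng(1)
w1, b1 = rng.standard_normal((3, 8)), np.zeros(8)
w2, b2 = rng.standard_normal((8, 2)), np.zeros(2)

foggy_dusk = [12.0, 98.0, 40.0]   # illustrative: cool, saturated humidity, low light
sunny_noon = [31.0, 45.0, 950.0]  # illustrative: warm, dry, bright
for name, env in [("foggy_dusk", foggy_dusk), ("sunny_noon", sunny_noon)]:
    w = modality_weights(env, w1, b1, w2, b2)
    print(name, w.round(3))
```

With untrained random weights the printed values carry no meaning; in a trained model the gate would be optimized jointly with the detector, so that, for example, low-light readings could shift weight toward the IR branch.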

3.3.4. Decoupled Dual-Target Detection Head
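
A decoupled head separates localization from classification so that the two tasks do not share their final layers. The following is a hypothetical NumPy illustration, assuming a shared per-location feature vector from the fused network, one regression branch (four box offsets plus objectness) and one classification branch whose 8 outputs correspond to the 6 pest and 2 predator species listed in the dataset table. Branch depths, widths, and the names `DecoupledHead` and `split_groups` are assumptions.

```python
import numpy as np

NUM_PEST, NUM_PREDATOR = 6, 2            # species counts from the dataset table
NUM_CLASSES = NUM_PEST + NUM_PREDATOR

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class DecoupledHead:
    """Hypothetical decoupled head: separate box and class branches on shared features."""

    def __init__(self, d, hidden, rng):
        # Independent weights per branch: no layer is shared between the two tasks.
        self.reg_w1 = rng.standard_normal((d, hidden)) * 0.1
        self.reg_w2 = rng.standard_normal((hidden, 5)) * 0.1   # 4 box offsets + objectness
        self.cls_w1 = rng.standard_normal((d, hidden)) * 0.1
        self.cls_w2 = rng.standard_normal((hidden, NUM_CLASSES)) * 0.1

    def __call__(self, feats):
        # feats: (num_locations, d) fused features, one row per candidate location
        reg = relu(feats @ self.reg_w1) @ self.reg_w2
        cls = relu(feats @ self.cls_w1) @ self.cls_w2
        boxes, objectness = reg[:, :4], sigmoid(reg[:, 4])
        class_probs = sigmoid(cls)          # independent per-class scores
        return boxes, objectness, class_probs

def split_groups(class_probs):
    # Pest and predator scores can be thresholded or reweighted separately downstream.
    return class_probs[:, :NUM_PEST], class_probs[:, NUM_PEST:]

rng = np.random.default_rng(2)
head = DecoupledHead(d=32, hidden=64, rng=rng)
boxes, obj, probs = head(rng.standard_normal((100, 32)))
pest, predator = split_groups(probs)
print(boxes.shape, obj.shape, pest.shape, predator.shape)  # (100, 4) (100,) (100, 6) (100, 2)
```

Keeping the class branch separate means that measures against category imbalance, such as per-group thresholds or class-weighted losses, can be applied to classification without altering box regression; which such measures the authors use is not specified here.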

4. Results and Discussion

4.1. Experimental Setup

4.1.1. Evaluation Metrics
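
The metrics reported in the result tables (precision, recall, F1-score and mAP@50) rest on matching predicted boxes to ground-truth boxes at an intersection-over-union (IoU) threshold of 0.5. A minimal sketch of that matching for one image and one class is given below, assuming `(x1, y1, x2, y2)` boxes and greedy matching in descending confidence order; this is the common convention, but the authors' exact protocol is not given here. mAP@50 additionally averages precision over the recall curve and over classes, which the sketch does not cover.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def match_at_iou(preds, gts, thr=0.5):
    """Greedy matching (assumed convention); preds are (score, box). Returns (tp, fp, fn)."""
    matched = set()
    tp = fp = 0
    for score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue                      # each ground truth can be matched once
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1
    return tp, fp, len(gts) - len(matched)

def prf1(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Toy example: two ground-truth boxes, three predictions (one spurious).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (1, 1, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
tp, fp, fn = match_at_iou(preds, gts, thr=0.5)
print(tp, fp, fn, prf1(tp, fp, fn))

# F1 recomputed from the "Ours" row of the comparison table (P = 91.5, R = 89.2).
print(round(2 * 91.5 * 89.2 / (91.5 + 89.2), 1))  # 90.3
```

The same harmonic-mean formula reproduces the tabulated F1-scores to within 0.1 points, the residual difference being consistent with F1 having been computed from unrounded precision and recall.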

4.1.2. Platform Configuration

4.1.3. Baseline Models

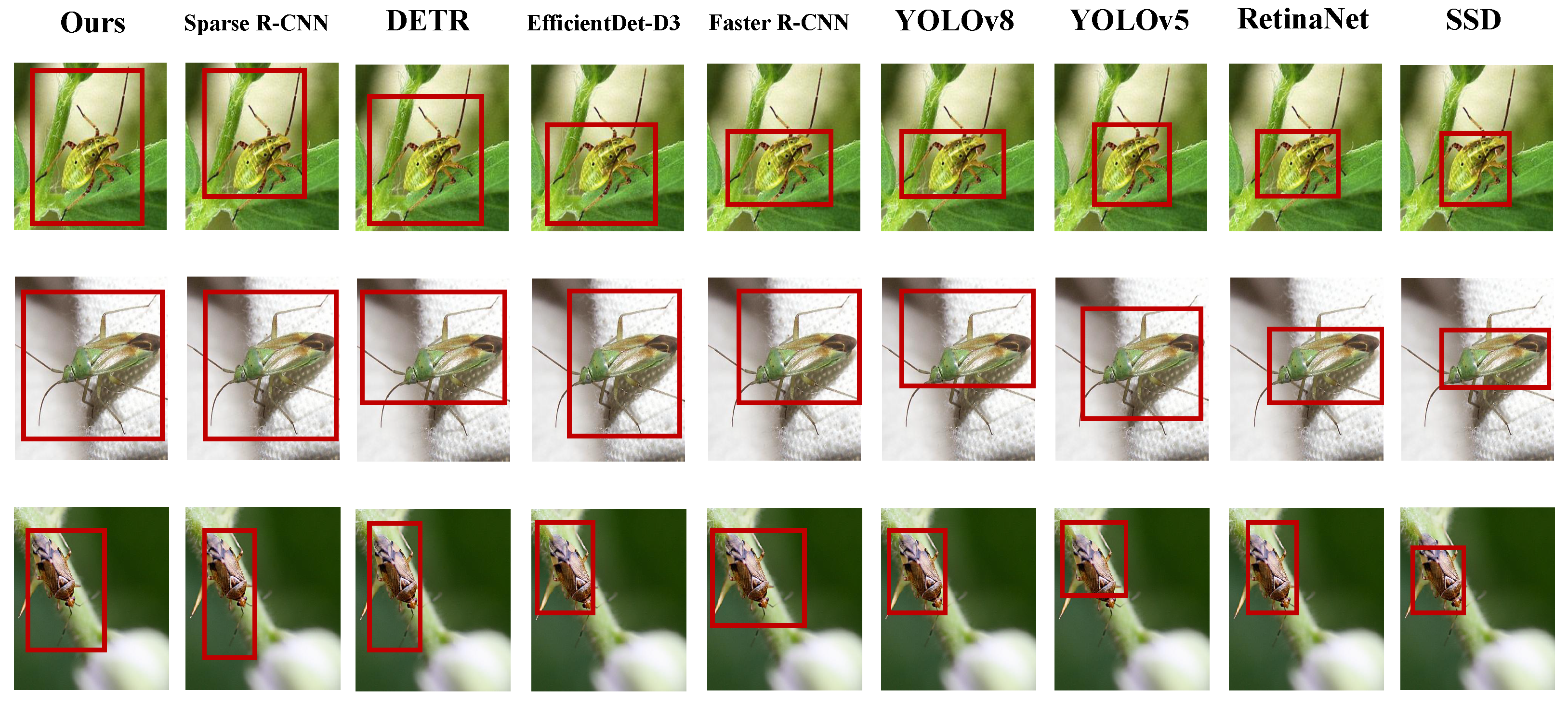

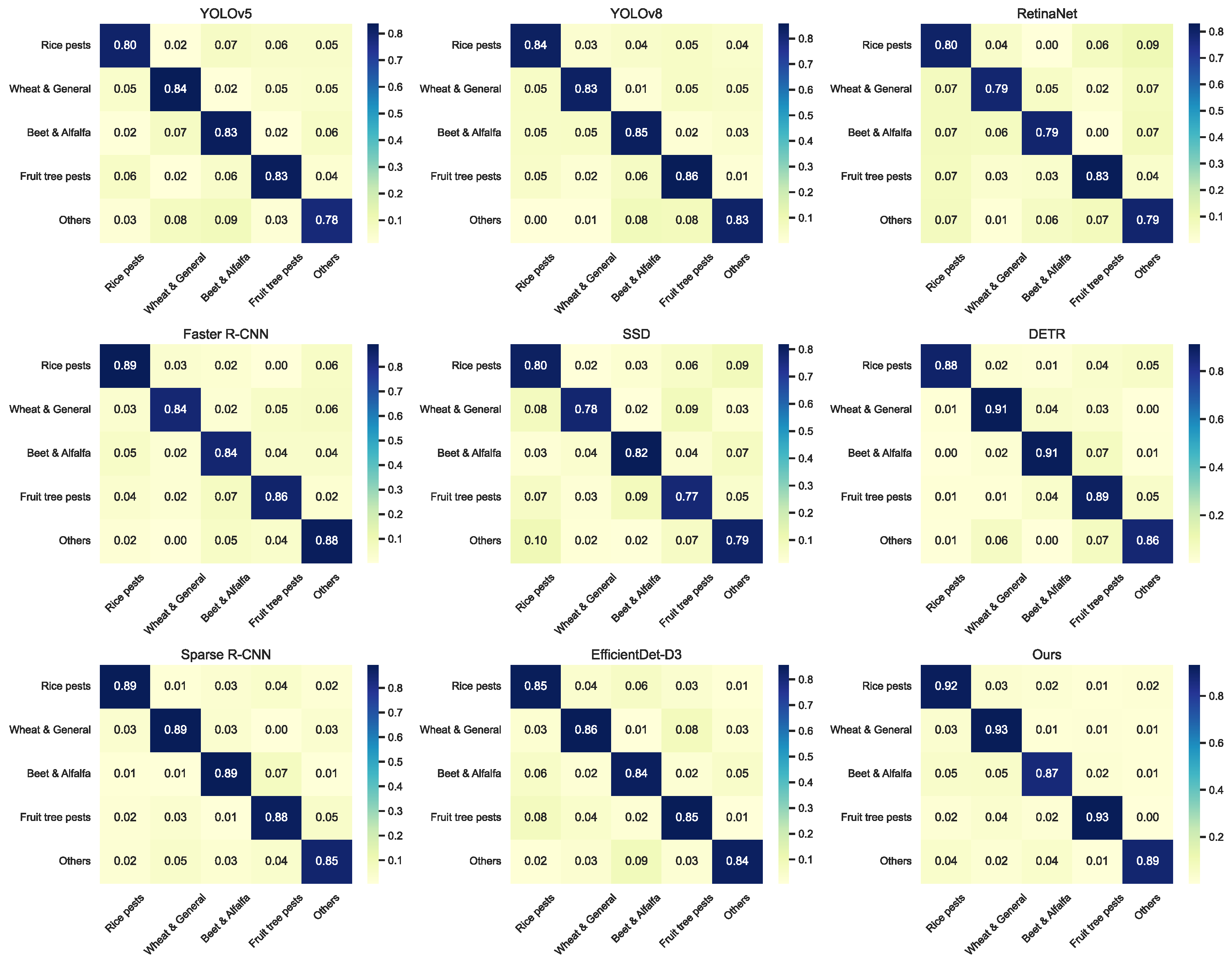

4.2. Overall Performance Comparison of Detection Models on Multimodal Pest and Predator Recognition Tasks

4.3. Ablation Study on Module-Level Contributions in the Multimodal Recognition Framework

4.4. Impact of Modality Ablation on Recognition Performance

4.5. Inference Efficiency and Model Size Comparison on Edge Platforms

4.6. Performance Evaluation Under Different Time-of-Day and Weather Conditions

4.7. Discussion

4.7.1. Environmental Robustness and Deployability

4.7.2. Practical Deployment and Application Considerations

4.8. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Modality | Quantity | Resolution/Format | Description |
|---|---|---|---|
| RGB images | 3249 | 1920 × 1080 | Captured under varying lighting conditions |
| Infrared images | 1210 | 640 × 480 | Synchronized with RGB frames |
| Temperature and humidity | 720,000 | Temp + RH | 1 Hz, spanning two sites over five months |
| Light intensity | 720,000 | Single-channel LDR | 1 Hz, aligned with image timestamps |
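
The environmental streams are sampled at 1 Hz while images carry their own capture timestamps, so each image needs a matching environment vector. One simple way to obtain it, assumed here rather than taken from the paper, is linear interpolation of each sensor channel at the image timestamp with `numpy.interp`. Timestamps are seconds since an arbitrary epoch, the function name `align_env_to_images` is hypothetical, and the readings are made-up illustrative values.

```python
import numpy as np

def align_env_to_images(sensor_t, sensor_vals, image_t):
    """Interpolate 1 Hz sensor channels at image timestamps (hypothetical helper).

    sensor_t:    (T,) increasing sensor timestamps in seconds
    sensor_vals: (T, C) readings, e.g. C = 3 for temperature, RH, light
    image_t:     (N,) image capture timestamps in seconds
    Returns (N, C); times outside the sensor range are clamped to the edge values.
    """
    sensor_vals = np.asarray(sensor_vals, dtype=float)
    return np.stack(
        [np.interp(image_t, sensor_t, sensor_vals[:, c]) for c in range(sensor_vals.shape[1])],
        axis=1,
    )

sensor_t = np.arange(0.0, 5.0)                    # five 1 Hz samples: t = 0..4 s
sensor_vals = np.column_stack([
    np.linspace(20.0, 22.0, 5),                   # temperature (deg C), illustrative
    np.linspace(60.0, 64.0, 5),                   # relative humidity (%), illustrative
    np.array([500, 510, 520, 530, 540], float),   # light (LDR reading), illustrative
])
image_t = np.array([1.5, 3.25])                   # two image timestamps between samples
print(align_env_to_images(sensor_t, sensor_vals, image_t))
```

Nearest-neighbour lookup would be an equally simple alternative; at 1 Hz the two differ by at most half a second of drift, which is small for slowly varying temperature and humidity.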

| Facet | Category | Count |
|---|---|---|
| Species classification | Pest species | 6 species |
| | Predator species | 2 species |
| Host crops | Rice | 1200 images |
| | Wheat | 1050 images |
| | Citrus | 999 images |
| Time of day | Morning | 1050 images |
| | Noon | 1099 images |
| | Evening | 1100 images |
| Weather | Sunny | 1300 images |
| | Cloudy | 1049 images |
| | Foggy | 900 images |
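
Each image-level facet in the composition table partitions the same pool of RGB images: rice, wheat and citrus (1200 + 1050 + 999), morning, noon and evening (1050 + 1099 + 1100), and sunny, cloudy and foggy (1300 + 1049 + 900) each sum to the 3249 RGB images listed in the modality table. A short check of that bookkeeping, with counts transcribed from the tables and helper names chosen for illustration, could look like this:

```python
# Image counts transcribed from the dataset composition table.
FACETS = {
    "host_crop": {"rice": 1200, "wheat": 1050, "citrus": 999},
    "time_of_day": {"morning": 1050, "noon": 1099, "evening": 1100},
    "weather": {"sunny": 1300, "cloudy": 1049, "foggy": 900},
}
TOTAL_RGB_IMAGES = 3249  # from the data modality table

def facet_totals(facets):
    # Sum the image counts within each facet.
    return {name: sum(counts.values()) for name, counts in facets.items()}

def facet_shares(facets):
    # Fraction of images per category within each facet, rounded to 3 places.
    return {
        name: {k: round(v / sum(counts.values()), 3) for k, v in counts.items()}
        for name, counts in facets.items()
    }

totals = facet_totals(FACETS)
assert all(t == TOTAL_RGB_IMAGES for t in totals.values()), totals
print(totals)
print(facet_shares(FACETS)["weather"])
```

Foggy scenes account for about 27.7% of the images, the smallest of the three weather categories.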

| Model | Precision (%) | Recall (%) | F1-Score (%) | mAP@50 (%) |
|---|---|---|---|---|
| YOLOv5 | 82.4 | 78.6 | 80.4 | 76.9 |
| YOLOv8 | 84.2 | 81.0 | 82.6 | 79.5 |
| YOLO-YSTs | 86.5 | 83.4 | 84.9 | 81.8 |
| RetinaNet | 80.1 | 76.7 | 78.3 | 74.8 |
| Faster R-CNN | 85.7 | 82.3 | 84.0 | 81.2 |
| SSD | 78.3 | 74.5 | 76.3 | 71.6 |
| DETR | 87.1 | 84.2 | 85.6 | 82.9 |
| Sparse R-CNN | 88.4 | 85.1 | 86.7 | 84.1 |
| EfficientDet-D3 | 86.0 | 82.8 | 84.3 | 81.7 |
| Early Fusion | 88.7 | 86.0 | 87.3 | 84.8 |
| Late Fusion | 89.3 | 86.5 | 87.9 | 85.5 |
| Cross-Modal Attention | 90.2 | 87.5 | 88.8 | 86.7 |
| Ours | 91.5 | 89.2 | 90.3 | 88.0 |

| Setting | Precision (%) | Recall (%) | F1-Score (%) | mAP@50 (%) |
|---|---|---|---|---|
| A. Full Model (All Modules) | 91.5 | 89.2 | 90.3 | 88.0 |
| B. w/o Cross-Modal Attention | 78.4 | 74.6 | 76.4 | 72.1 |
| C. w/o Environment-Guided Attention | 79.2 | 75.3 | 77.2 | 73.5 |
| D. w/o Modality Reweighting | 77.8 | 73.1 | 75.3 | 71.8 |
| E. w/o Decoupled Detection Head | 78.6 | 74.2 | 76.3 | 72.7 |

| Modality Configuration | Precision (%) | Recall (%) | F1-Score (%) | mAP@50 (%) |
|---|---|---|---|---|
| RGB + Thermal + Sensor (Full) | 91.5 | 89.2 | 90.3 | 88.0 |
| RGB + Thermal only | 84.7 | 81.5 | 83.1 | 80.2 |
| RGB + Sensor only | 82.9 | 78.6 | 80.7 | 78.1 |
| RGB only | 80.3 | 75.8 | 77.9 | 75.4 |

| Model | FPS | Model Size (MB) | mAP@50 (%) |
|---|---|---|---|
| YOLOv5 | 34.6 | 22.4 | 76.9 |
| YOLOv8 | 36.8 | 25.7 | 79.5 |
| YOLO-YSTs | 33.2 | 28.4 | 81.8 |
| RetinaNet | 27.5 | 145.2 | 74.8 |
| Faster R-CNN | 12.1 | 174.6 | 81.2 |
| SSD | 29.7 | 98.4 | 71.6 |
| DETR | 10.4 | 165.9 | 82.9 |
| Sparse R-CNN | 11.6 | 172.3 | 84.1 |
| EfficientDet-D3 | 28.3 | 52.1 | 81.7 |
| Early Fusion | 27.8 | 50.2 | 84.8 |
| Late Fusion | 26.9 | 49.8 | 85.5 |
| Cross-Modal Attention | 26.2 | 48.9 | 86.7 |
| Ours | 25.7 | 48.3 | 88.0 |

| Model | FPS | Model Size (MB) | mAP@50 (%) |
|---|---|---|---|
| YOLOv8 | 13.8 | 25.7 | 79.5 |
| YOLOv5 | 12.9 | 22.4 | 76.9 |
| YOLO-YSTs | 12.1 | 28.4 | 81.8 |
| RetinaNet | 9.6 | 145.2 | 74.8 |
| Faster R-CNN | 4.2 | 174.6 | 81.2 |
| SSD | 10.8 | 98.4 | 71.6 |
| DETR | 3.9 | 165.9 | 82.9 |
| Sparse R-CNN | 4.1 | 172.3 | 84.1 |
| EfficientDet-D3 | 10.4 | 52.1 | 81.7 |
| Early Fusion | 10.1 | 50.2 | 84.8 |
| Late Fusion | 9.8 | 49.8 | 85.5 |
| Cross-Modal Attention | 9.6 | 48.9 | 86.7 |
| Ours | 9.4 | 48.3 | 88.0 |
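
Frame rate and per-frame latency are reciprocal (latency in ms = 1000 / FPS), which makes the two efficiency tables easier to compare against a camera's frame interval. The snippet converts a few rows from both tables; the selection of rows and the name `latency_ms` are illustrative choices.

```python
# FPS values transcribed from the two efficiency tables: (first table, second table).
FPS = {
    "YOLOv8": (36.8, 13.8),
    "Faster R-CNN": (12.1, 4.2),
    "DETR": (10.4, 3.9),
    "Ours": (25.7, 9.4),
}

def latency_ms(fps):
    """Average time per frame in milliseconds."""
    return 1000.0 / fps

for model, (fps_a, fps_b) in FPS.items():
    slowdown = fps_a / fps_b  # how many times slower the second setting is
    print(f"{model:13s} {latency_ms(fps_a):6.1f} ms -> {latency_ms(fps_b):6.1f} ms (x{slowdown:.2f})")
```

For the proposed model this corresponds to roughly 39 ms per frame in the first table and about 106 ms in the second, a slowdown of about 2.7 times, in line with the 2.7 to 2.9 ratios of the other rows shown.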

| Condition | Method | mAP@50 (%) | F1-Score (%) |
|---|---|---|---|
| Morning | w/o EGA | 86.2 | 84.7 |
| | Proposed (EGA) | 88.5 | 86.4 |
| Noon | w/o EGA | 87.5 | 85.9 |
| | Proposed (EGA) | 89.1 | 87.0 |
| Evening | w/o EGA | 84.3 | 82.5 |
| | Proposed (EGA) | 87.4 | 85.2 |
| Sunny | w/o EGA | 88.0 | 86.2 |
| | Proposed (EGA) | 90.1 | 87.8 |
| Cloudy | w/o EGA | 85.7 | 83.9 |
| | Proposed (EGA) | 88.0 | 85.6 |
| Foggy | w/o EGA | 82.1 | 80.0 |
| | Proposed (EGA) | 86.6 | 83.4 |
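
Reading the time-of-day and weather table as paired rows, the contribution of environment-guided attention (EGA) under each condition is the difference between the "Proposed (EGA)" and "w/o EGA" rows. The snippet below, with mAP@50 values transcribed from the table, computes those differences:

```python
# (w/o EGA, with EGA) mAP@50 values transcribed from the condition table.
MAP50 = {
    "Morning": (86.2, 88.5),
    "Noon": (87.5, 89.1),
    "Evening": (84.3, 87.4),
    "Sunny": (88.0, 90.1),
    "Cloudy": (85.7, 88.0),
    "Foggy": (82.1, 86.6),
}

# Rounding to one decimal removes floating-point noise from the subtraction.
gains = {cond: round(with_ega - without, 1) for cond, (without, with_ega) in MAP50.items()}
largest = max(gains, key=gains.get)
print(gains)
print("largest gain:", largest, gains[largest])
```

The gain is largest under fog (4.5 points) and in the evening (3.1 points) and smallest at noon (1.6 points), consistent with environment-guided reweighting mattering most when visible-light imaging is degraded.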

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Z.; Li, S.; Yang, Y.; Jiang, X.; Wang, M.; Chen, D.; Jiang, T.; Dong, M. High-Precision Pest Management Based on Multimodal Fusion and Attention-Guided Lightweight Networks. Insects 2025, 16, 850. https://doi.org/10.3390/insects16080850

Chicago/Turabian Style: Liu, Ziye, Siqi Li, Yingqiu Yang, Xinlu Jiang, Mingtian Wang, Dongjiao Chen, Tianming Jiang, and Min Dong. 2025. "High-Precision Pest Management Based on Multimodal Fusion and Attention-Guided Lightweight Networks" Insects 16, no. 8: 850. https://doi.org/10.3390/insects16080850
