Feature Selection Framework for Improved UAV-Based Detection of Solenopsis invicta Mounds in Agricultural Landscapes

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

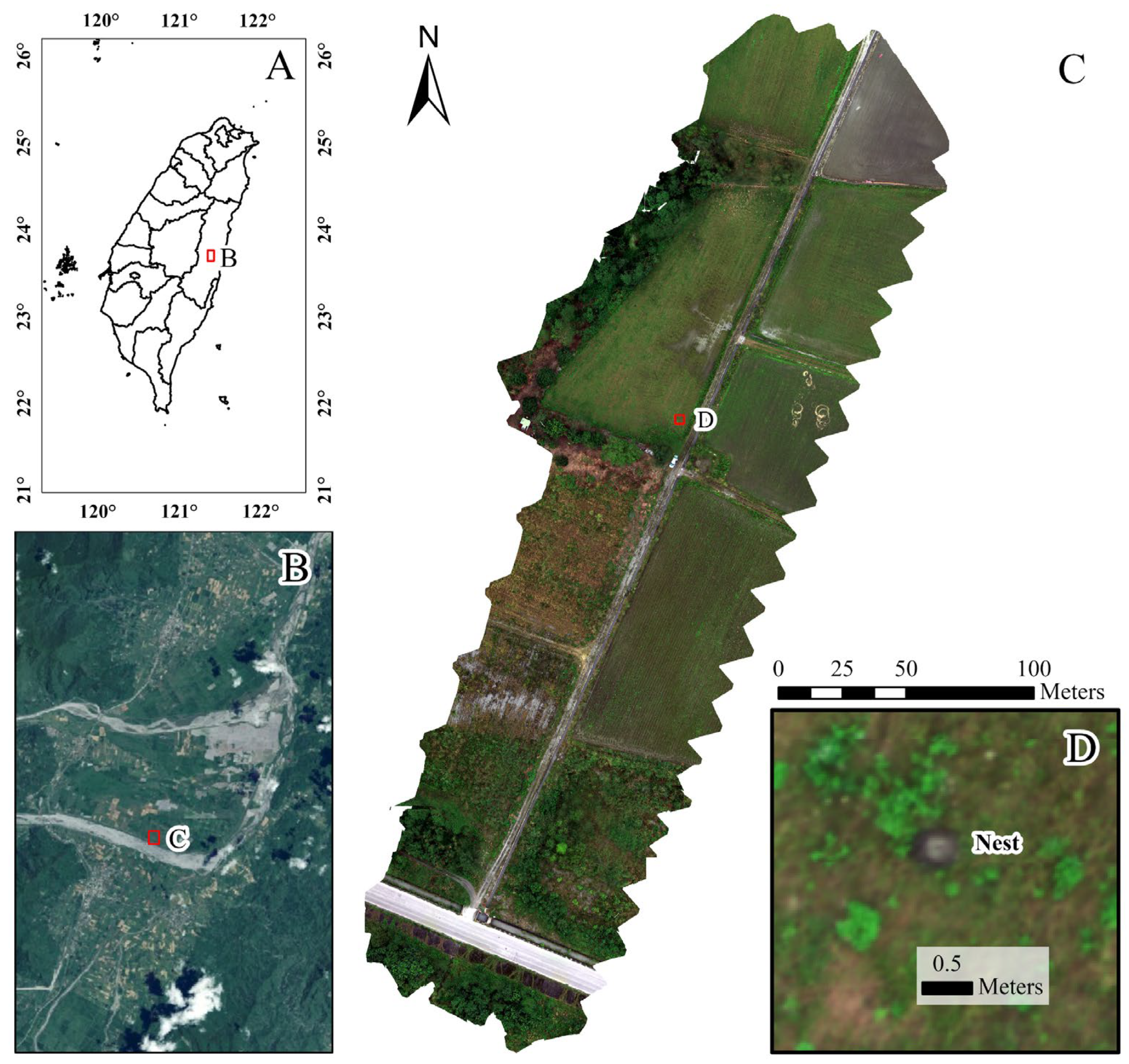

2.1. Study Area

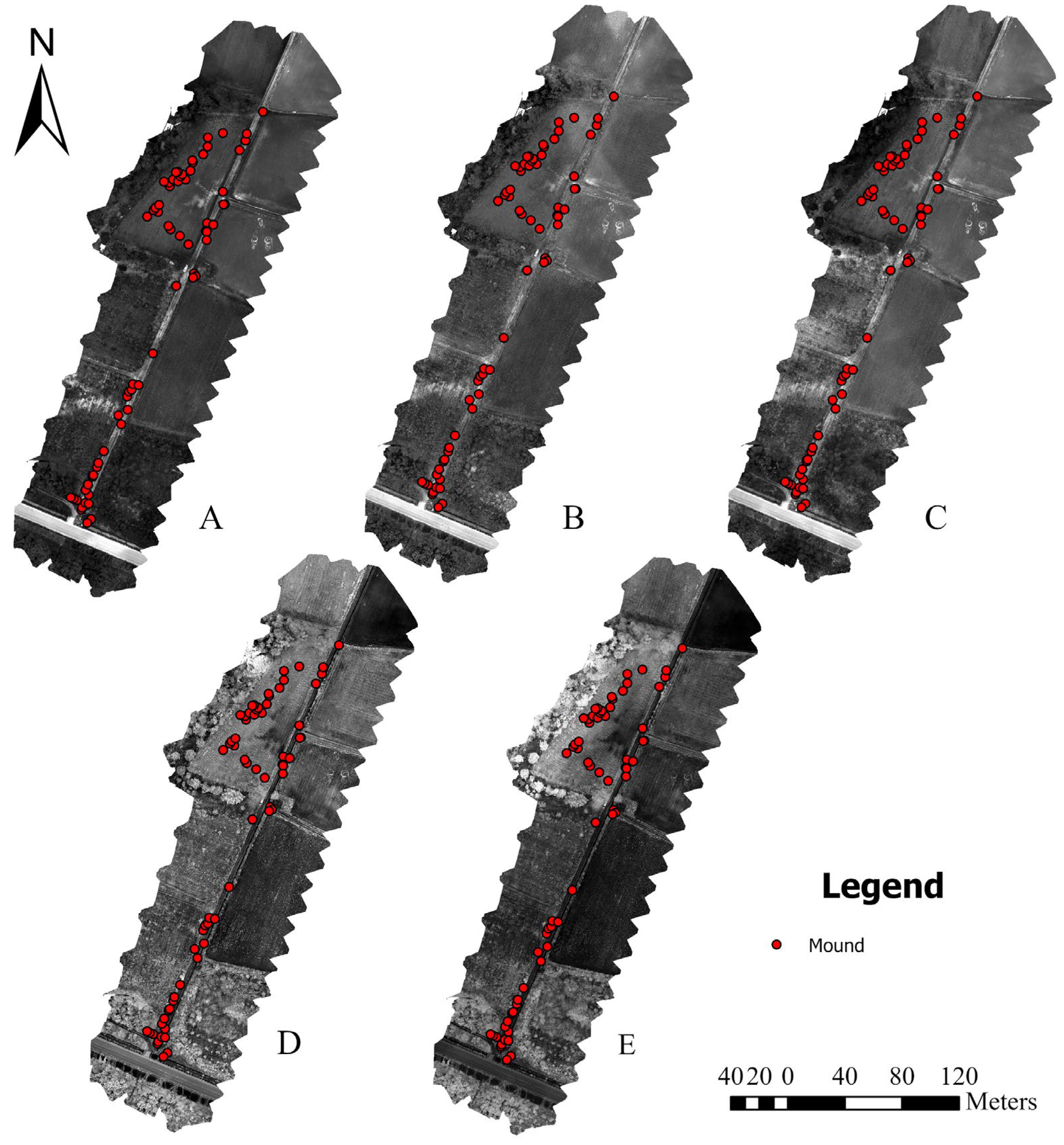

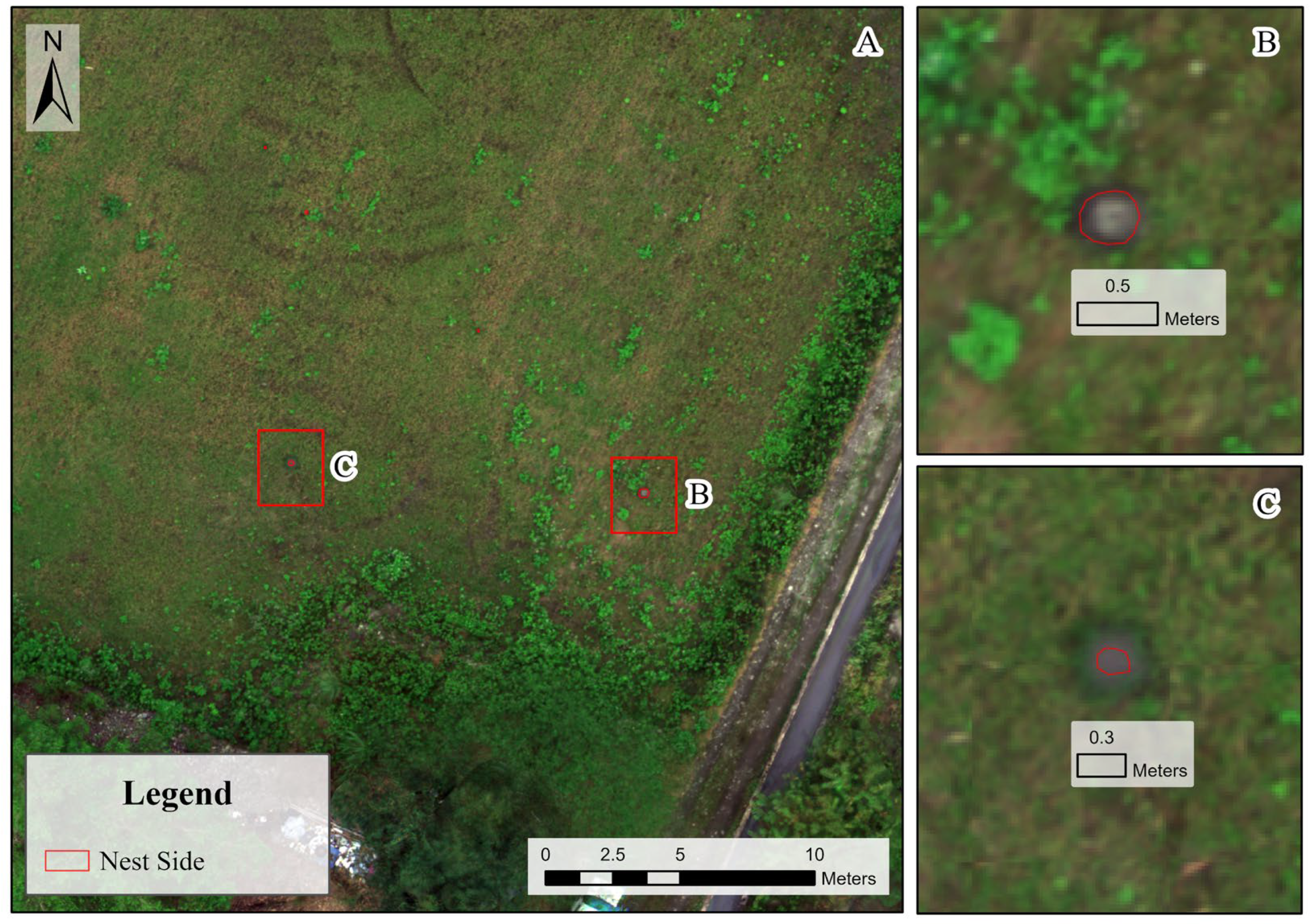

2.2. Data Collection Methods

2.2.1. UAV Specification

2.2.2. Flight Parameters

2.2.3. Ground Control Points

2.2.4. Sampling

2.3. Image Processing and Analysis

2.3.1. Reflectance Conversion

2.3.2. Orthomosaic Generation and Layer Stacking

2.3.3. Spatial Scale of Analysis: Pixel-Level Versus Object Representation

2.4. Feature Selection and Redundancy Removal Strategy

3. Results

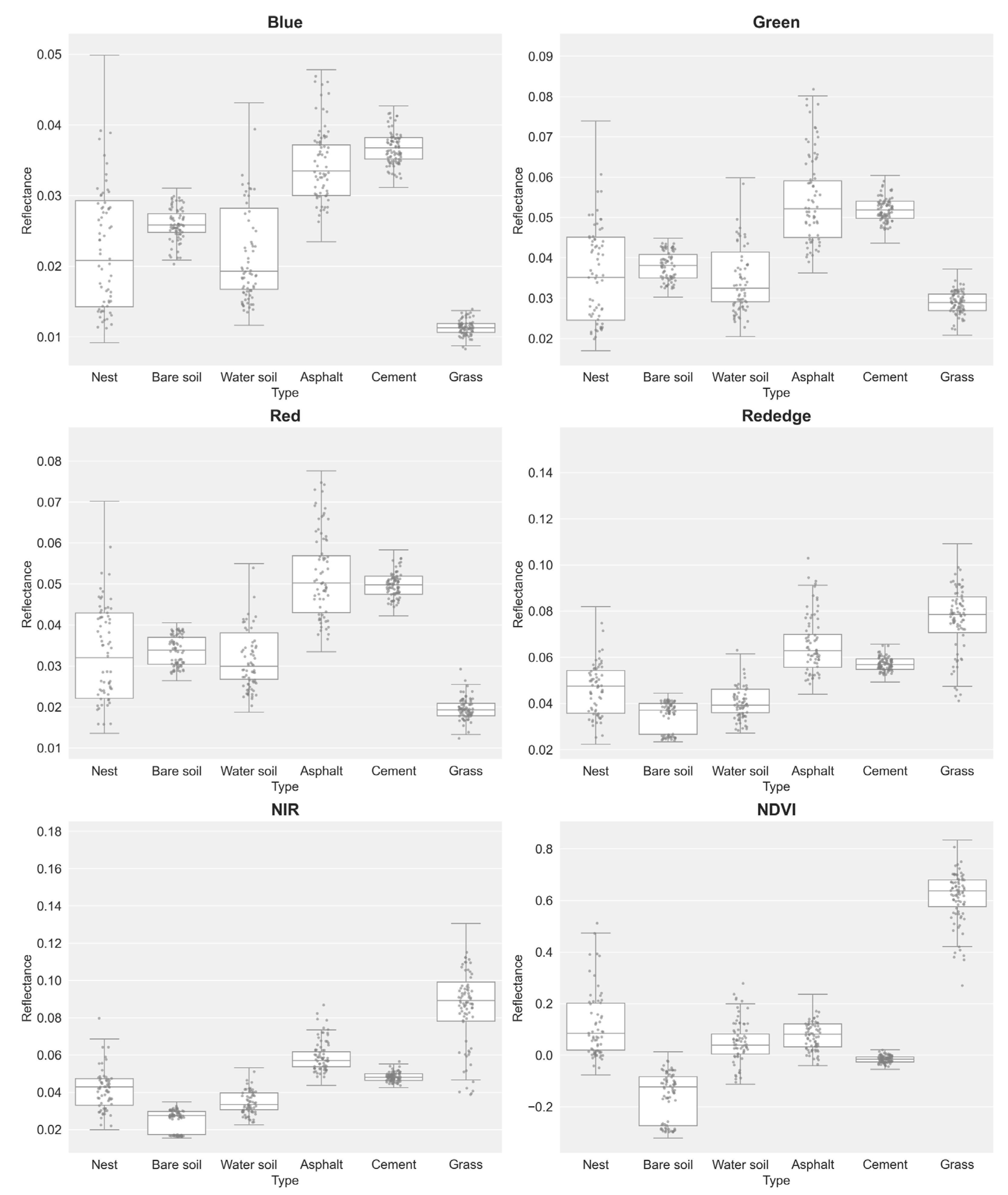

3.1. Analysis of Multispectral Characteristics

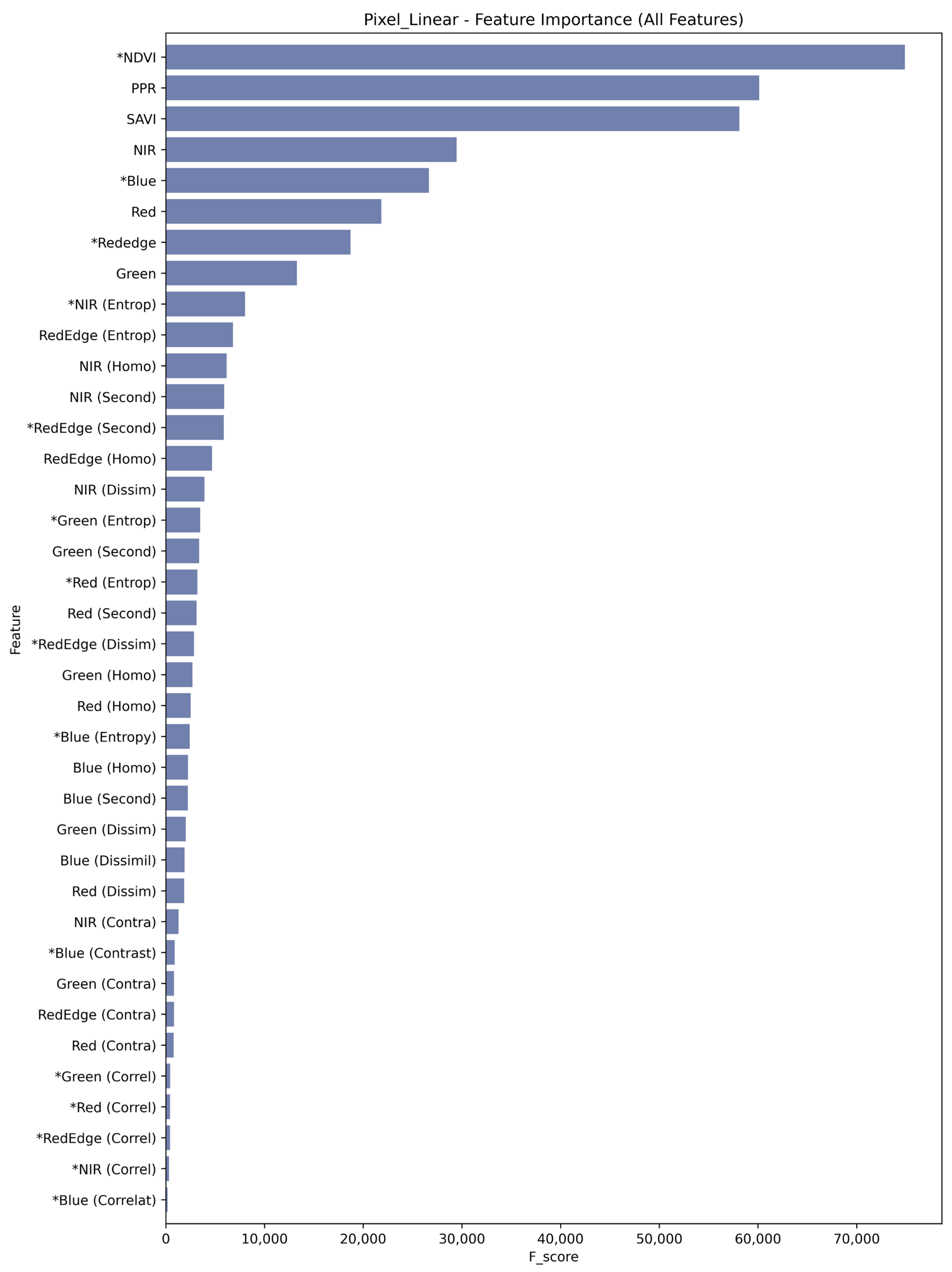

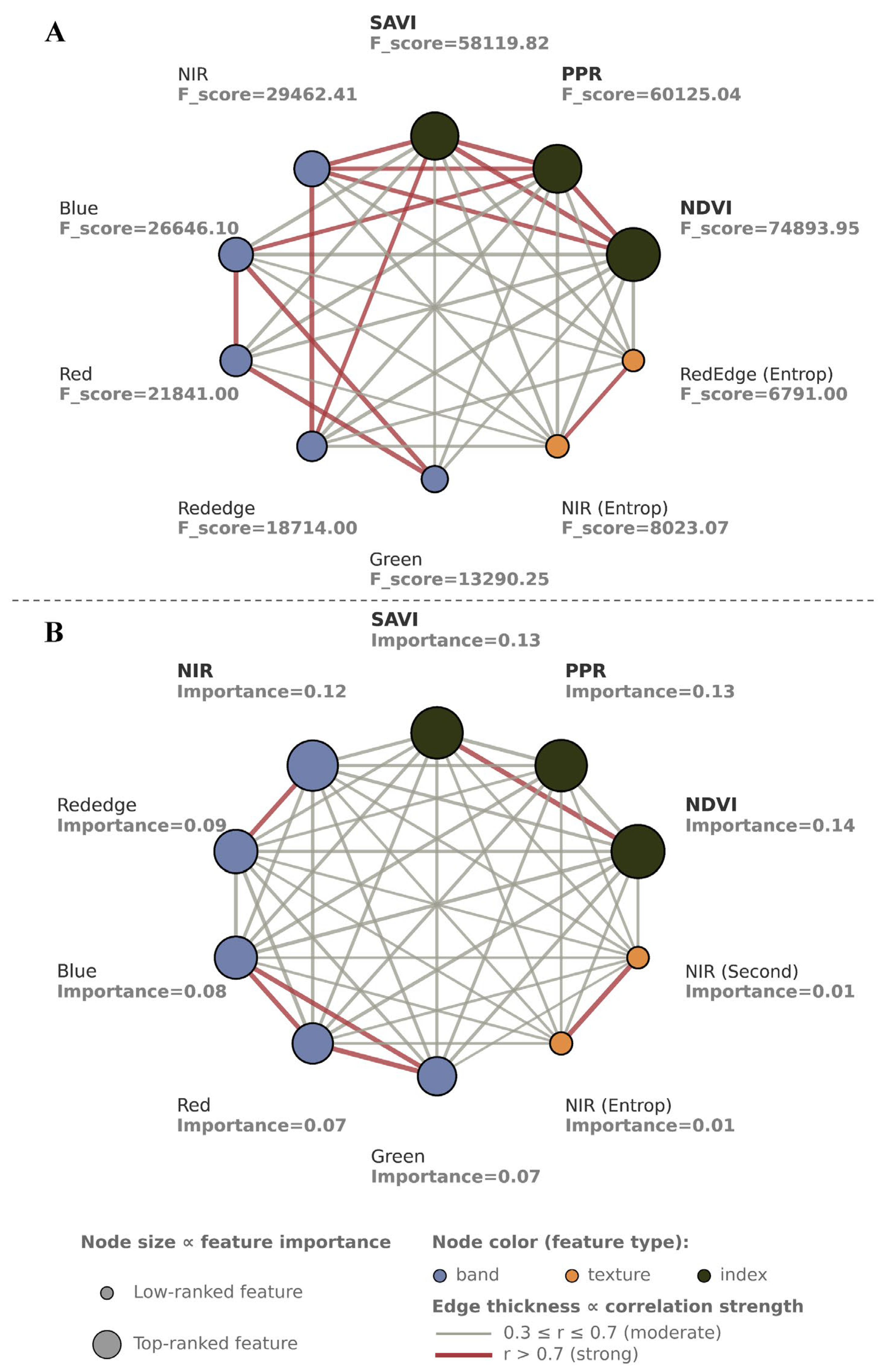

3.2. Feature Selection for Pixel-Level Data

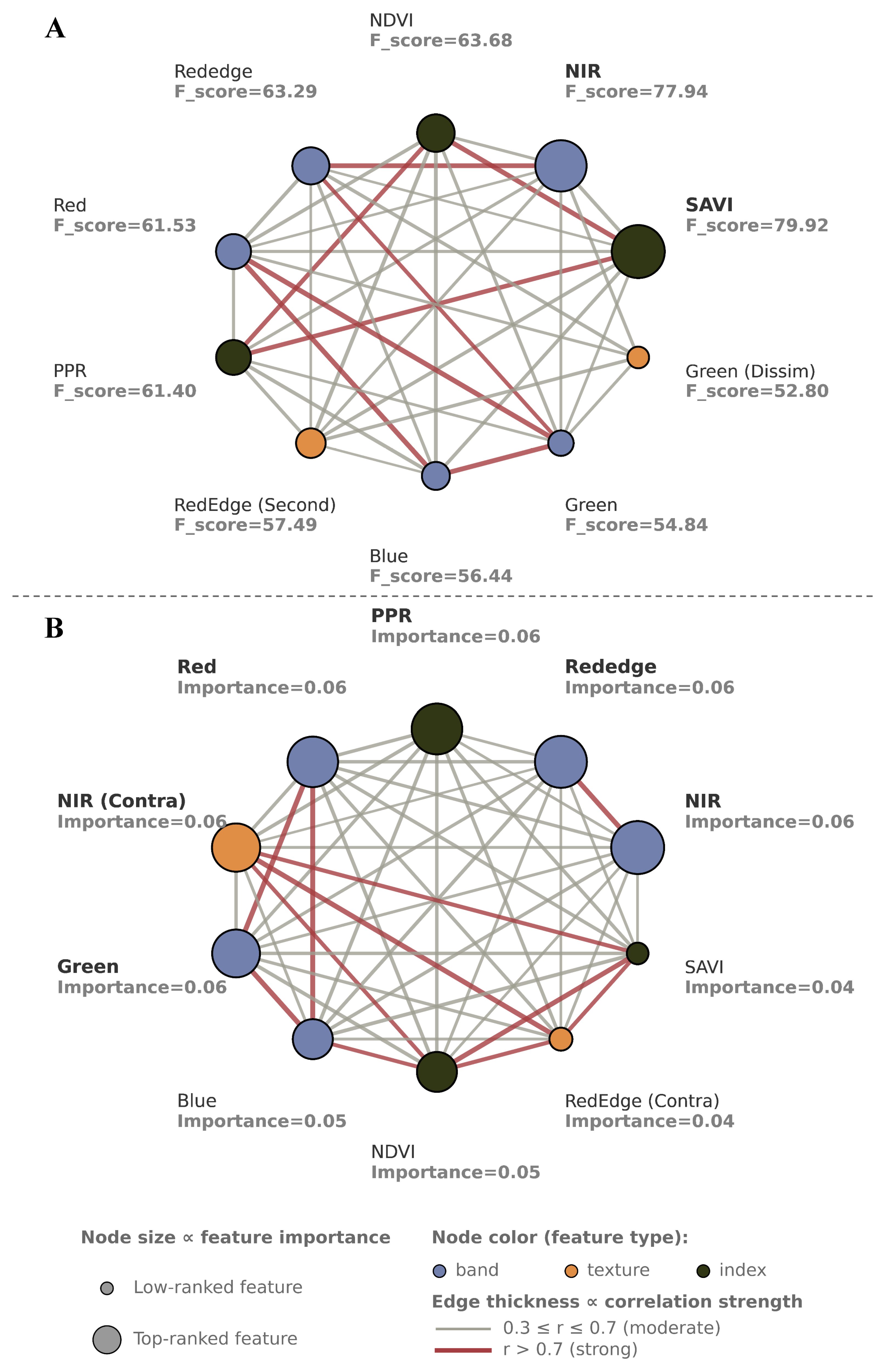

3.3. Feature Selection for Object-Level Data

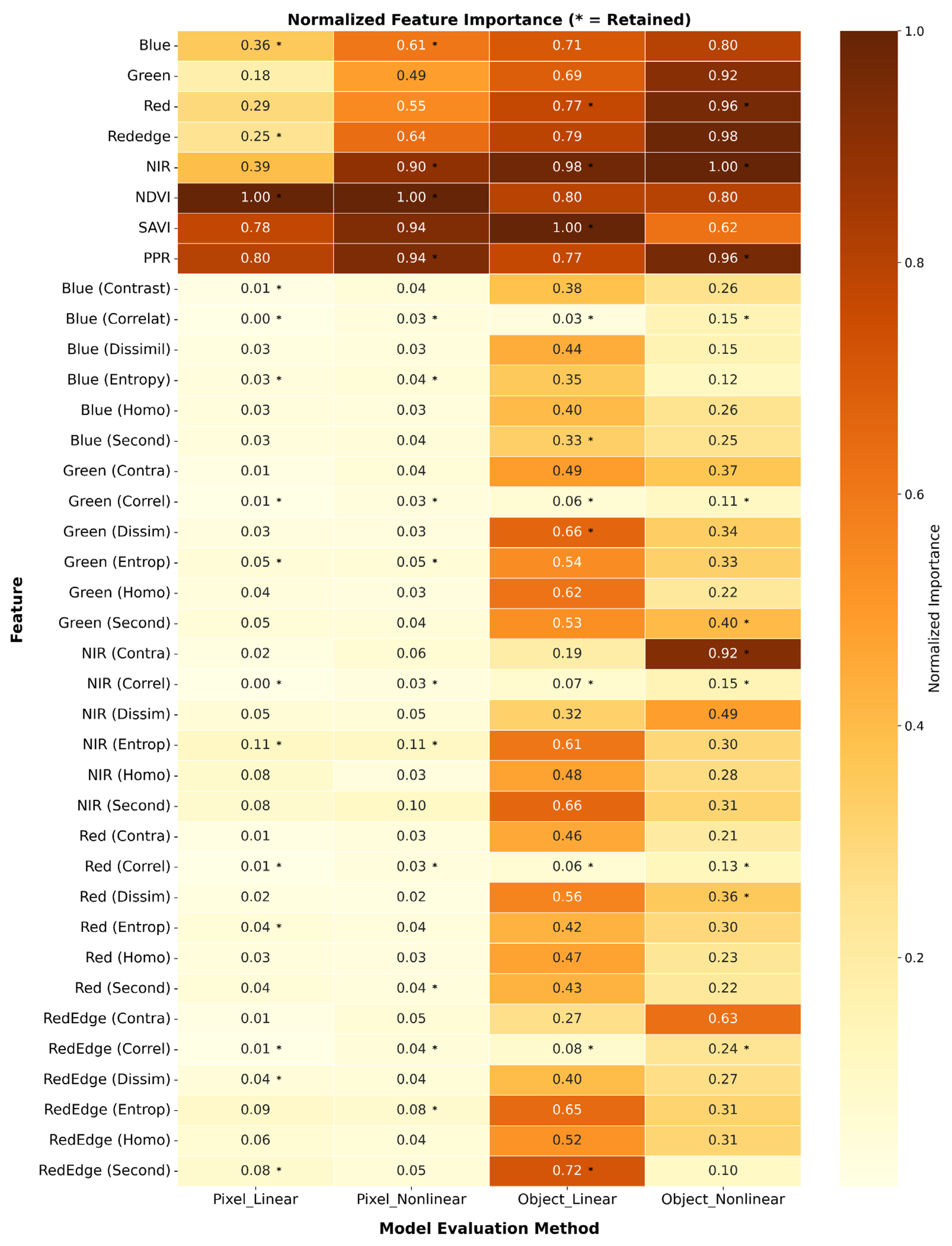

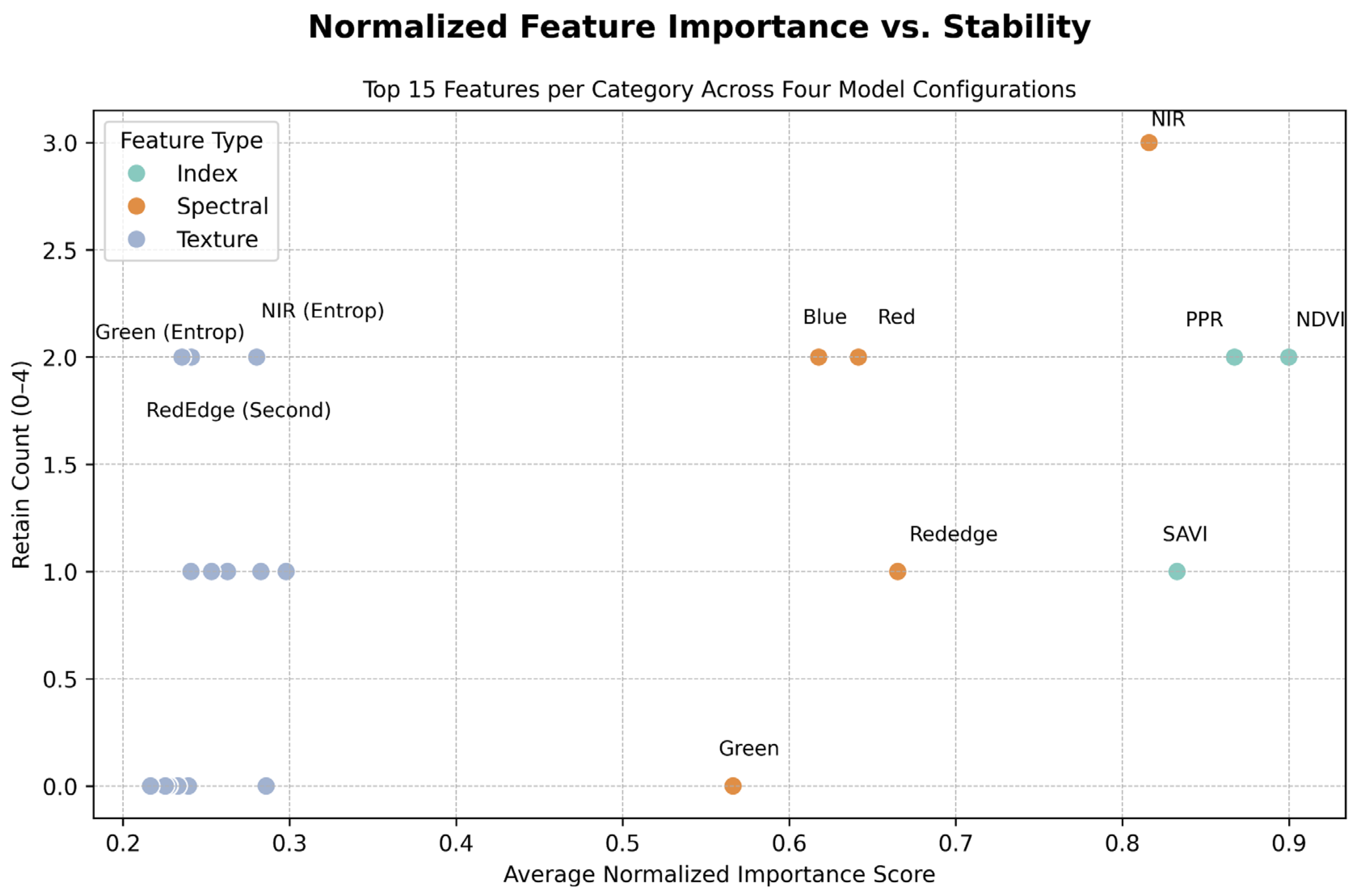

3.4. Comparison of Feature Selection Scales and Methods

4. Discussion

4.1. Discriminative Strength Across Feature Types

4.2. Feature Stability and Importance Across Methods

4.3. Research Limitations

4.4. Comparison of Detection Approaches: Deep Learning and Feature Selection Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANOVA | Analysis of variance |
| CNN | Convolutional neural network |
| GLCM | Gray-level co-occurrence matrix |
| GNSS | Global navigation satellite system |
| MIC | Maximal information coefficient |
| NDVI | Normalized difference vegetation index |
| NIR | Near-infrared |
| P4M | Phantom 4 Multispectral Tool |
| PPR | Photochemical pigment reflectance index |
| RFE | Recursive feature elimination |
| RIFA | Red imported fire ant |
| RGB | Red–green–blue |
| ROI | Region of interest |
| RTK | Real-time kinematic |
| SAVI | Soil-adjusted vegetation index |
| UAV | Unmanned aerial vehicle |
| VRS-RTK | Virtual reference station real-time kinematic |
| YOLO | You only look once |

Appendix A. Supplementary Figures for Feature Selection

Appendix A.1. Pixel-Level Linear Model—ANOVA F-Score Ranking

Appendix A.2. Pixel-Level Nonlinear Model—Full Feature Importance

Appendix A.3. Object-Level Linear Model—ANOVA F-Score Ranking

Appendix A.4. Object-Level Nonlinear Model—Full Feature Importance


| Feature Name | Feature Type | Definition | Calculation |
|---|---|---|---|
| NDVI | Vegetation Index | Measures vegetation vigor from the normalized difference between NIR and red reflectance | |
| SAVI | Vegetation Index | Adjusts NDVI for soil background effects using a soil brightness correction factor | |
| PPR | Vegetation Index | Measures the level of chlorophyll relative to other plant pigments, which indicates vegetation health, vigor, and potential issues such as weed infestation or nutrient stress | |
| Homogeneity | Texture Index (GLCM) | Measures local uniformity | |
| Contrast | Texture Index (GLCM) | Measures local variation | |
| Dissimilarity | Texture Index (GLCM) | Measures gray-level differences between pixel pairs | |
| Entropy | Texture Index (GLCM) | Measures randomness in image texture | |
| Second Moment | Texture Index (GLCM) | Measures textural smoothness | |
| Correlation | Texture Index (GLCM) | Measures the linear dependency of pixel pairs | |
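
The definitions in the table above can be illustrated with a minimal Python sketch. It uses the commonly published forms of NDVI, SAVI, and the GLCM texture measures; these are assumptions and may differ from the calculations the authors applied. The soil factor `L = 0.5`, the single horizontal pixel offset, the gray-level count, and all function names (`ndvi`, `savi`, `glcm`, `glcm_features`) are hypothetical choices made for illustration. PPR is left out because its exact formulation cannot be confirmed from the text.

```python
import numpy as np


def ndvi(nir, red, eps=1e-12):
    """Normalized difference between NIR and red reflectance."""
    nir, red = np.asarray(nir, float), np.asarray(red, float)
    return (nir - red) / (nir + red + eps)  # eps guards against 0/0


def savi(nir, red, L=0.5):
    """NDVI with a soil brightness correction factor L (0.5 is assumed)."""
    nir, red = np.asarray(nir, float), np.asarray(red, float)
    return (1.0 + L) * (nir - red) / (nir + red + L)


def glcm(img, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one non-negative offset."""
    img = np.asarray(img, dtype=int)  # values must lie in [0, levels)
    h, w = img.shape
    ref = img[: h - dy, : w - dx]     # reference pixels
    nbr = img[dy:, dx:]               # neighbors at offset (dy, dx)
    counts = np.zeros((levels, levels))
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    counts = counts + counts.T        # count each pair in both directions
    return counts / counts.sum()


def glcm_features(P):
    """Six texture measures from a normalized GLCM P."""
    i, j = np.indices(P.shape)
    mu_i, mu_j = (i * P).sum(), (j * P).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * P).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * P).sum())
    cov = ((i - mu_i) * (j - mu_j) * P).sum()
    nz = P[P > 0]                     # skip empty cells so log() is defined
    denom = sd_i * sd_j
    return {
        "homogeneity": (P / (1.0 + (i - j) ** 2)).sum(),
        "contrast": (P * (i - j) ** 2).sum(),
        "dissimilarity": (P * np.abs(i - j)).sum(),
        "entropy": -(nz * np.log(nz)).sum(),
        "second_moment": (P ** 2).sum(),
        # correlation is undefined for a perfectly uniform patch
        "correlation": float("nan") if denom == 0 else cov / denom,
    }


if __name__ == "__main__":
    # Made-up reflectance values and a made-up 4-level patch, for illustration only.
    print(ndvi(0.45, 0.08), savi(0.45, 0.08))
    patch = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
    print(glcm_features(glcm(patch, levels=4)))
```

In practice the band values would first be quantized to a small number of gray levels, and the texture measures would be computed in a moving window (pixel level) or per segment (object level); the window size and quantization are not specified here and would need to be taken from the methods.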

| Pixel_Linear | Pixel_NonLinear | Object_Linear | Object_NonLinear |
|---|---|---|---|
| NDVI | NDVI | SAVI | NIR |
| Blue | PPR | NIR | PPR |
| RedEdge | NIR | Red | Red |
| NIR (Entropy) | Blue | RedEdge (Second Moment) | NIR (Contrast) |
| RedEdge (Second Moment) | NIR (Entropy) | Green (Dissimilarity) | Green (Second Moment) |
| Green (Entropy) | RedEdge (Entropy) | Blue (Second Moment) | Red (Dissimilarity) |
| Red (Entropy) | Green (Entropy) | RedEdge (Correlation) | RedEdge (Correlation) |
| RedEdge (Dissimilarity) | Blue (Entropy) | NIR (Correlation) | NIR (Correlation) |
| Blue (Entropy) | RedEdge (Correlation) | Green (Correlation) | Blue (Correlation) |
| Blue (Contrast) | Red (Second Moment) | Red (Correlation) | Red (Correlation) |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shih, C.-H.; Song, C.-E.; Wang, S.-F.; Lin, C.-C. Feature Selection Framework for Improved UAV-Based Detection of Solenopsis invicta Mounds in Agricultural Landscapes. Insects 2025, 16, 793. https://doi.org/10.3390/insects16080793
