High-Precision Stored-Grain Insect Pest Detection Method Based on PDA-YOLO

Simple Summary

Abstract

1. Introduction

- We propose PDA-YOLO, an improved algorithm based on YOLO11n and optimized for the small targets, complex backgrounds, and real-time requirements of stored-grain insect pest detection and classification. It balances detection accuracy, computational efficiency, and inference speed.

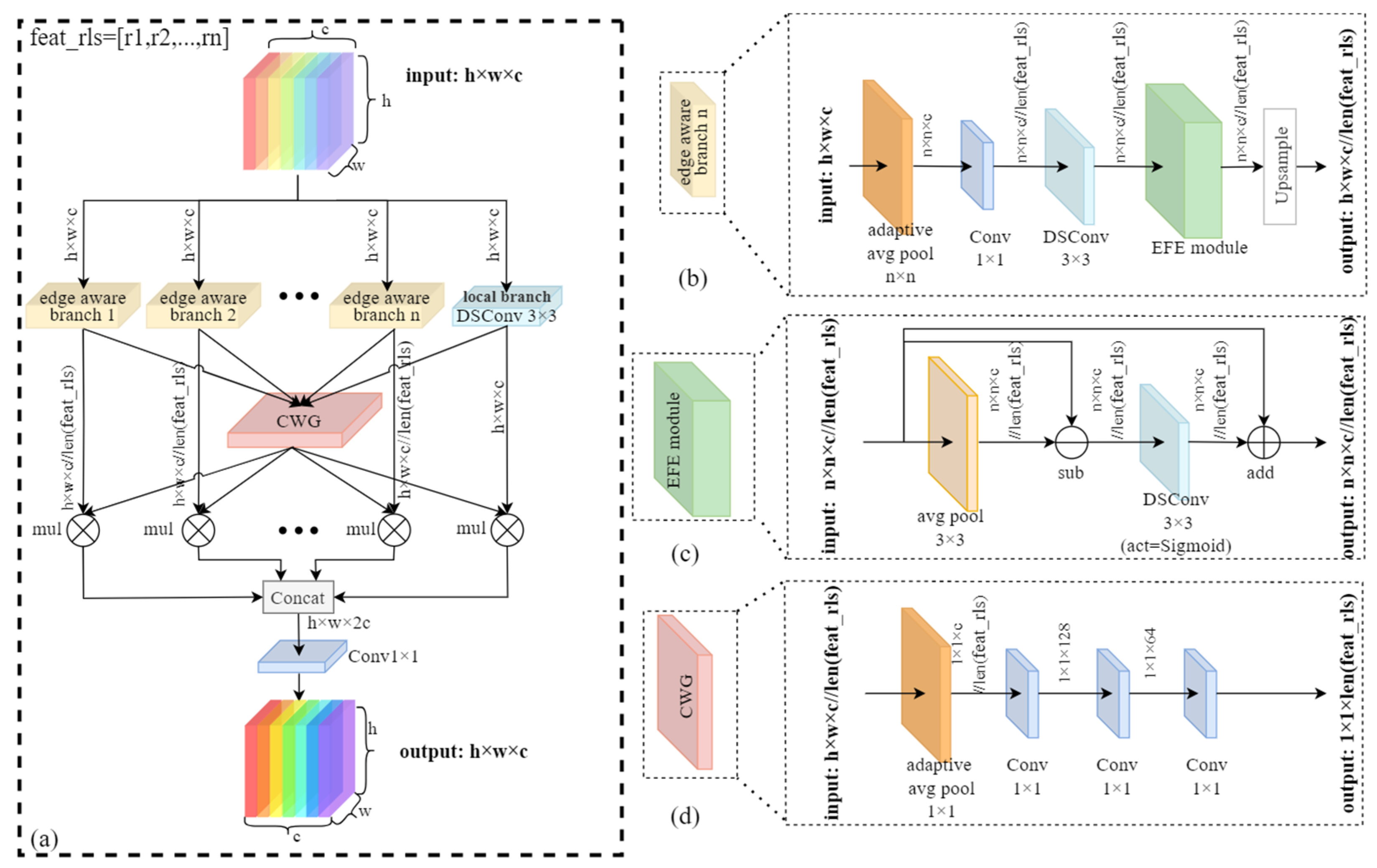

- We introduce the Dynamic Multi-scale Aware Edge (DMAE) module, which adaptively enhances edge features across multiple scales while dynamically adjusting branch weights based on input complexity, enabling the precise delineation of small insect pest boundaries and further improving detection accuracy.

2. Materials and Methods

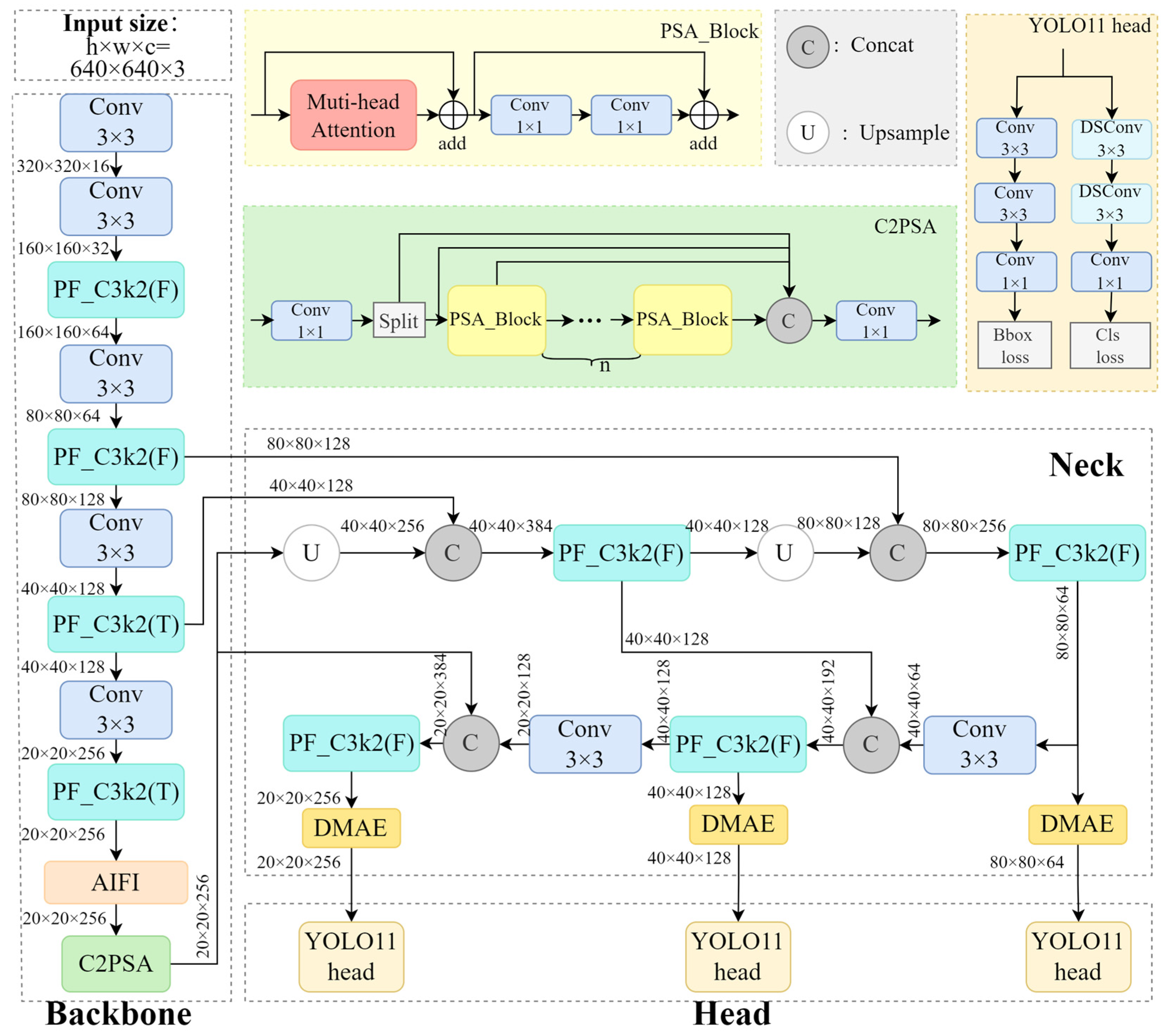

2.1. YOLO11

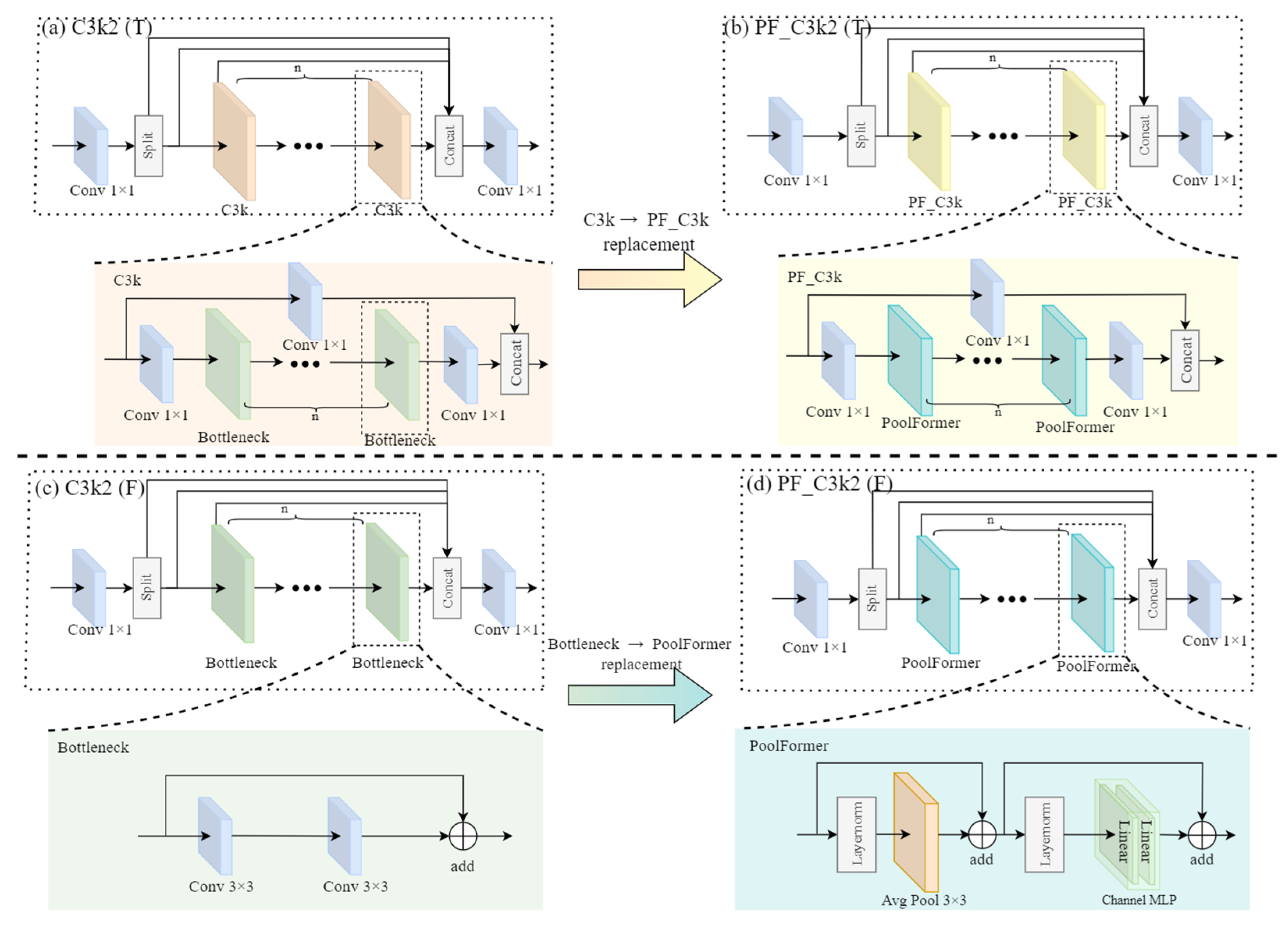

2.2. Improved C3k2 Module

2.3. The Proposed DMAE Module

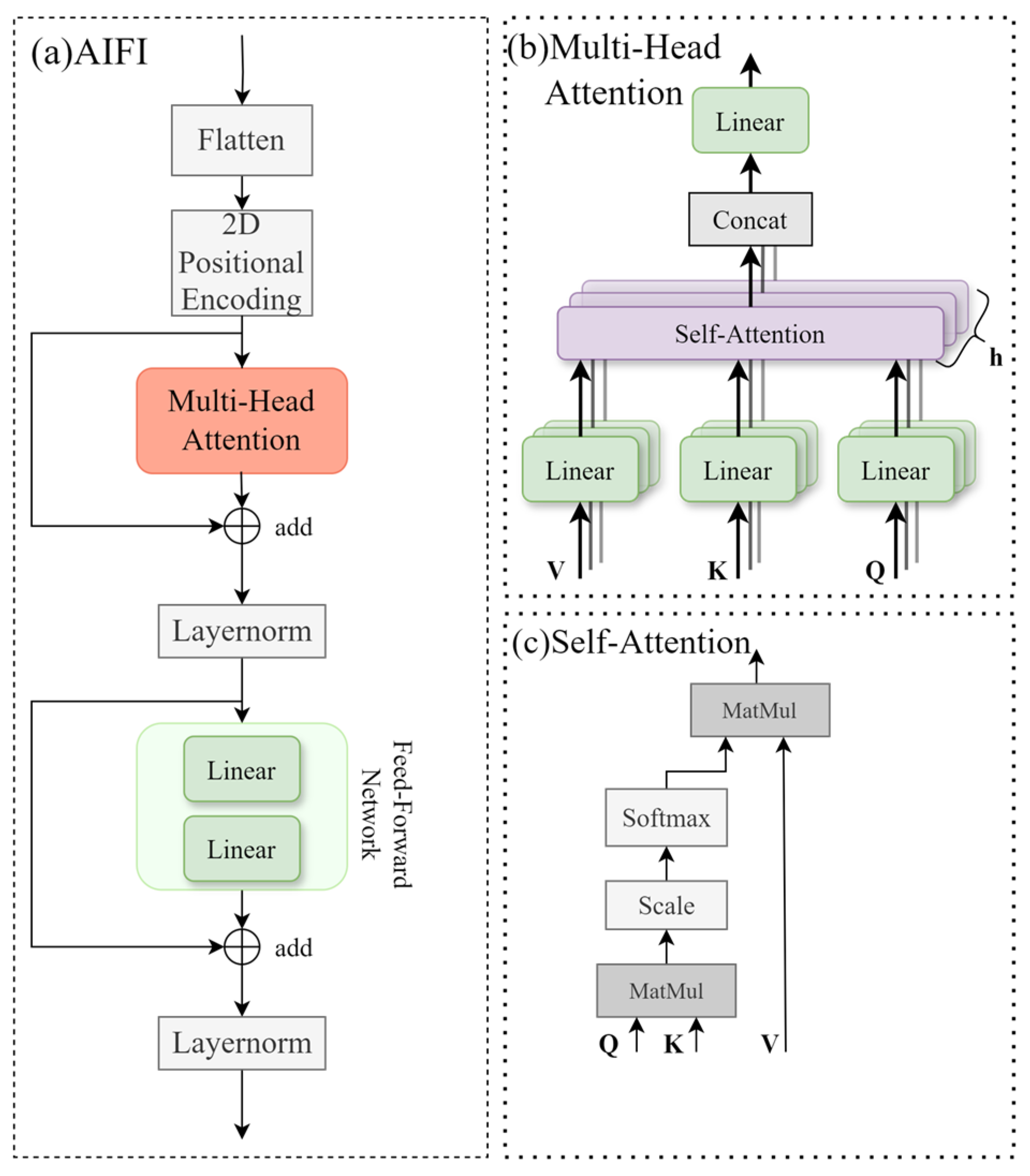

2.4. AIFI Module

3. Materials and Implementation Details

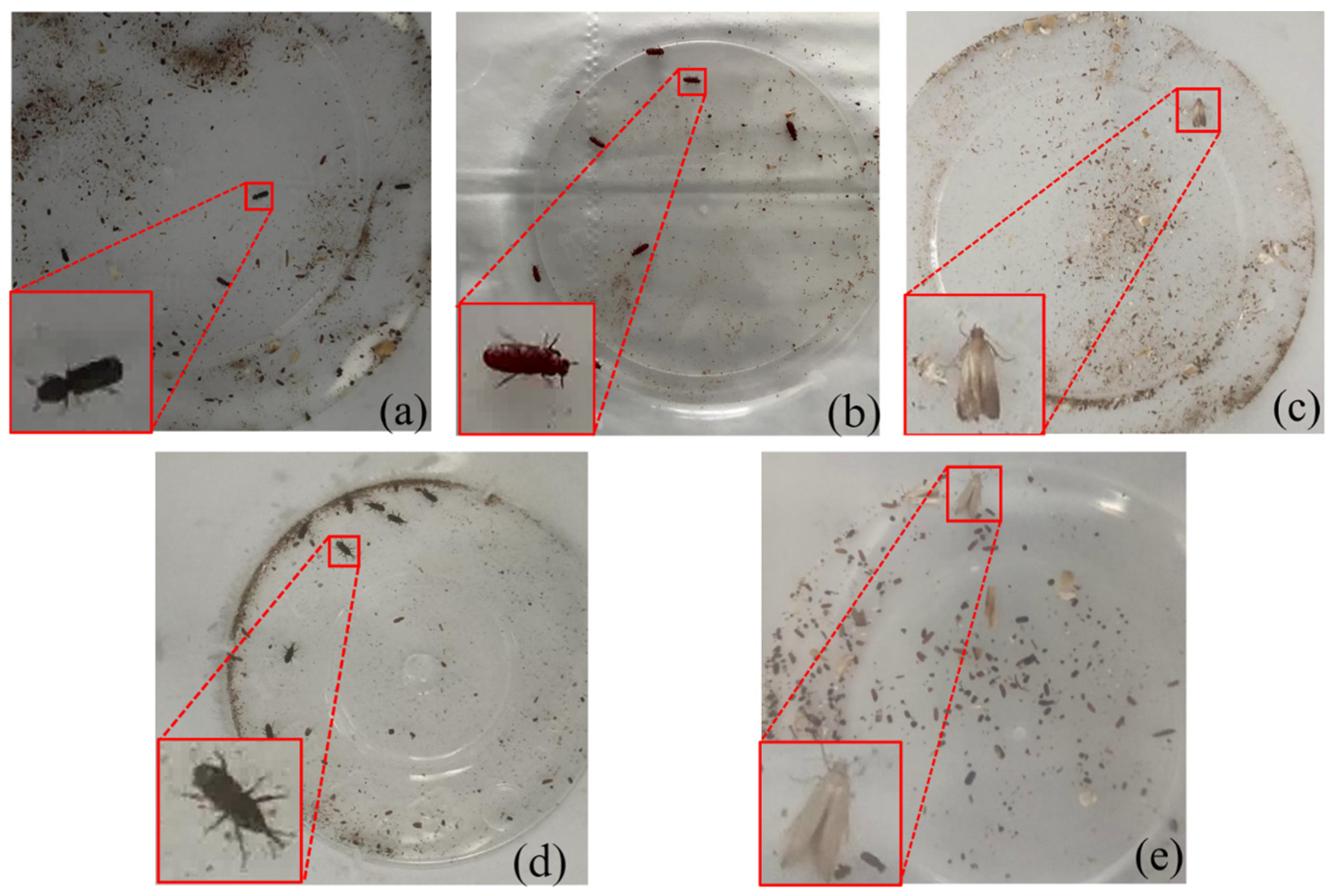

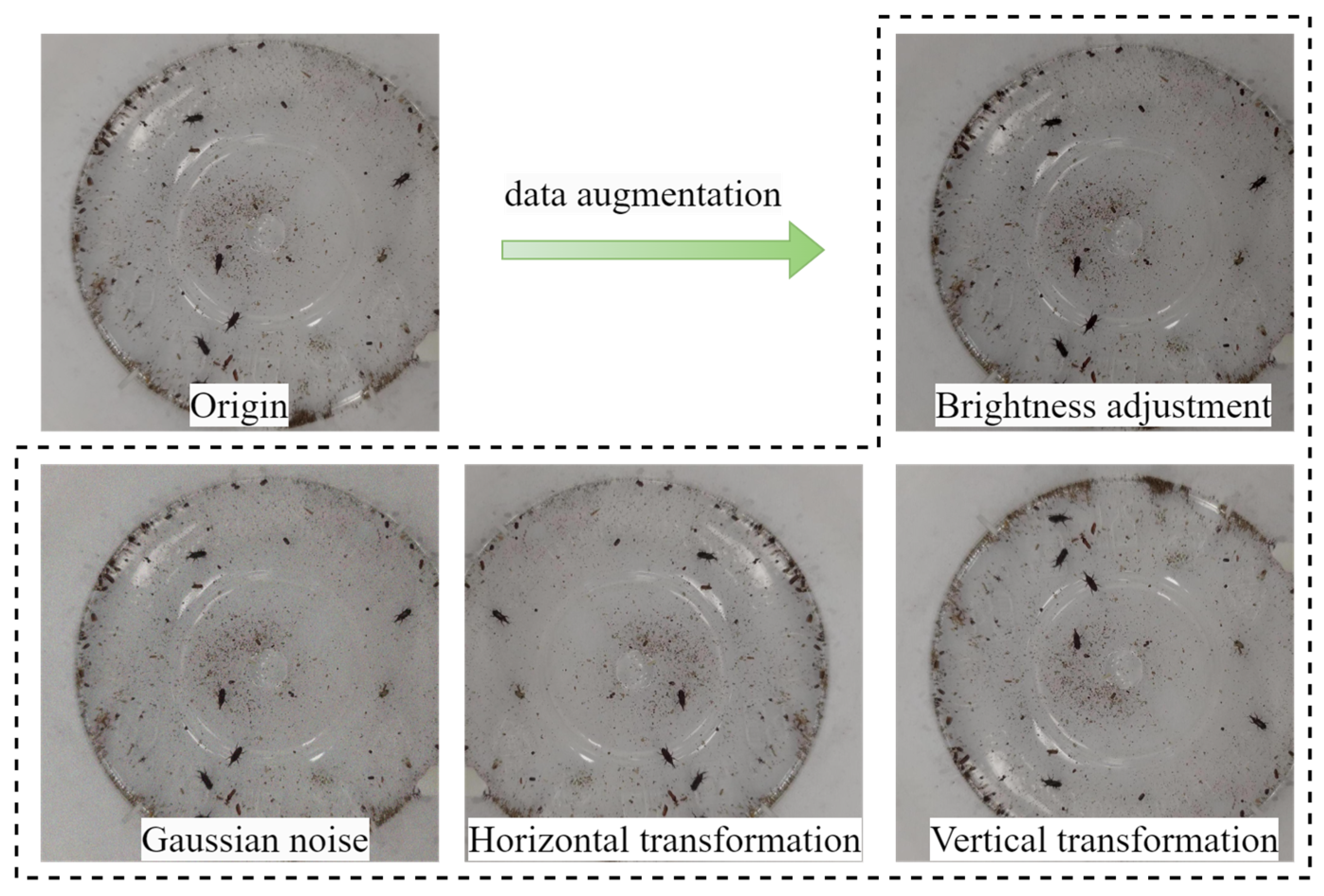

3.1. Stored-Grain Insect Pest Dataset

| Index | Species (Body Length) | Images | Training Instances | Validation Instances | Test Instances | Total Instances |
|---|---|---|---|---|---|---|
| 1 | LGB (2.3–3.0 mm) | 1240 | 4920 | 161 | 314 | 5395 |
| 2 | RFB (2.3–4.4 mm) | 1240 | 6944 | 234 | 470 | 7648 |
| 3 | IMM (8.0–10.0 mm) | 1240 | 3832 | 160 | 367 | 4359 |
| 4 | MW (2.5–4.5 mm) | 1240 | 7144 | 136 | 442 | 7722 |
| 5 | AGM (4.0–6.0 mm) | 1240 | 5580 | 201 | 447 | 6228 |
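As a quick consistency check on the dataset table, the following sketch recomputes each species' total from its training, validation, and test instance counts and reports the share of each split. The counts are copied from the table; the function names are illustrative and not part of the original work.

```python
# Instance counts copied from the dataset table: (train, val, test, listed total).
INSTANCES = {
    "LGB": (4920, 161, 314, 5395),
    "RFB": (6944, 234, 470, 7648),
    "IMM": (3832, 160, 367, 4359),
    "MW": (7144, 136, 442, 7722),
    "AGM": (5580, 201, 447, 6228),
}


def mismatched_totals(table):
    """Return the species whose train + val + test differs from the listed total."""
    return [
        name
        for name, (train, val, test, total) in table.items()
        if train + val + test != total
    ]


def split_shares(table):
    """Return the percentage of all instances that fall in each split."""
    train = sum(row[0] for row in table.values())
    val = sum(row[1] for row in table.values())
    test = sum(row[2] for row in table.values())
    overall = train + val + test
    return {
        "train": 100 * train / overall,
        "val": 100 * val / overall,
        "test": 100 * test / overall,
    }


if __name__ == "__main__":
    print("Mismatched rows:", mismatched_totals(INSTANCES))
    for split, share in split_shares(INSTANCES).items():
        print(f"{split}: {share:.1f}% of instances")
```

All five rows sum to their listed totals, so the table is internally consistent.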

3.2. Experimental Setup

| Parameters | Values |
|---|---|
| Epochs | 150 |
| Batch size | 16 |
| Learning rate | 0.01 |
| Optimizer | SGD |
| Image size | 640 × 640 |
| Momentum | 0.937 |
| Weight decay | 0.0005 |
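The training settings above can be captured as a small configuration sketch. The values are copied from the table. The mapping to Ultralytics-style keyword names (`batch`, `lr0`, `imgsz`) is an assumption about the training framework, as is reading "Learning rate" as the initial learning rate; the helper name is illustrative.

```python
# Values copied from the experimental-setup table.
TRAIN_SETUP = {
    "epochs": 150,
    "batch_size": 16,
    "learning_rate": 0.01,
    "optimizer": "SGD",
    "image_size": 640,  # square 640 x 640 input
    "momentum": 0.937,
    "weight_decay": 0.0005,
}

# Assumed mapping from the table's parameter names to Ultralytics-style
# training keyword arguments. The source table does not name the framework
# arguments, so this mapping is an illustration only.
ULTRALYTICS_NAMES = {
    "epochs": "epochs",
    "batch_size": "batch",
    "learning_rate": "lr0",
    "optimizer": "optimizer",
    "image_size": "imgsz",
    "momentum": "momentum",
    "weight_decay": "weight_decay",
}


def to_train_kwargs(setup):
    """Rename the table's parameters to the assumed keyword-argument names."""
    return {ULTRALYTICS_NAMES[name]: value for name, value in setup.items()}


if __name__ == "__main__":
    for key, value in to_train_kwargs(TRAIN_SETUP).items():
        print(f"{key} = {value}")
```

With the `ultralytics` package installed, the resulting dictionary could be passed as `YOLO("yolo11n.yaml").train(data="pests.yaml", **to_train_kwargs(TRAIN_SETUP))`, where `pests.yaml` is a hypothetical dataset description file.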

3.3. Evaluation Metrics
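The result tables report the F1 score alongside mAP. As a reminder of how F1 relates to detection counts, the sketch below uses the standard definitions of precision, recall, and their harmonic mean. It is a generic illustration, not the authors' evaluation code, and the example counts are made up.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from detection counts.

    tp: true positives, fp: false positives, fn: false negatives.
    Returns 0.0 for any quantity whose denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts, chosen only to illustrate the calculation.
    p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=10)
    print(f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```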

4. Results

4.1. Ablation Study

| Index | PF_C3k2 | DMAE | AIFI | F1 Score (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | FLOPs (G) | mDt (ms) |
|---|---|---|---|---|---|---|---|---|
| 1 | | | | 90.1 | 93.3 | 58.9 | 6.3 | 5.8 |
| 2 | √ | | | 90.6 | 95.0 | 59.4 | 6.0 | 6.2 |
| 3 | | √ | | 91.3 | 94.3 | 58.8 | 6.6 | 8.0 |
| 4 | | | √ | 91.5 | 94.7 | 59.5 | 6.9 | 6.4 |
| 5 | √ | √ | | 92.1 | 95.6 | 59.9 | 6.3 | 8.3 |
| 6 | | √ | √ | 91.7 | 93.8 | 59.3 | 7.2 | 8.7 |
| 7 | √ | | √ | 92.9 | 96.0 | 60.0 | 6.6 | 7.1 |
| 8 | √ | √ | √ | 93.5 | 96.6 | 60.4 | 6.9 | 9.9 |
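To make the overall effect of the three modules easier to read, the following sketch computes the change from the baseline (row 1, no modules) to the full model (row 8, all three modules). The values are copied from those two rows; the names are illustrative.

```python
METRICS = ("F1 (%)", "mAP@0.5 (%)", "mAP@0.5:0.95 (%)", "FLOPs (G)", "mDt (ms)")

# Row 1 (baseline, no added modules) and row 8 (all three modules enabled).
BASELINE = (90.1, 93.3, 58.9, 6.3, 5.8)
FULL_MODEL = (93.5, 96.6, 60.4, 6.9, 9.9)


def deltas(base, full):
    """Return full - base for every metric, rounded to one decimal place."""
    return {
        metric: round(f - b, 1)
        for metric, b, f in zip(METRICS, base, full)
    }


if __name__ == "__main__":
    for metric, change in deltas(BASELINE, FULL_MODEL).items():
        print(f"{metric}: {change:+.1f}")
```

The accuracy metrics all rise, at the cost of 0.6 G additional FLOPs and 4.1 ms additional mDt per the table's own figures.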

4.2. Comparison with Mainstream Algorithms

4.2.1. Comparative Analysis of Overall Performance Metrics

| Algorithms | F1 Score (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | FLOPs (G) | mDt (ms) |
|---|---|---|---|---|---|
| Faster R-CNN_r50 | 90.3 | 94.2 | 56.2 | 90.9 | 27.2 |
| SSD300_vgg16 | 88.8 | 92.1 | 52.5 | 138.0 | 22.8 |
| CenterNet_r18 | 79.2 | 83.3 | 44.7 | 10.2 | 37.0 |
| RT-DETR_r18 | 93.0 | 93.6 | 57.0 | 57.0 | 46.9 |
| YOLOv8n | 89.6 | 91.8 | 57.0 | 8.1 | 5.0 |
| YOLOv9t | 92.0 | 93.5 | 59.4 | 11.7 | 15.8 |
| YOLOv10n | 90.8 | 93.6 | 56.9 | 8.3 | 6.7 |
| YOLO11n | 90.1 | 93.3 | 58.9 | 6.3 | 5.8 |
| Mamba-YOLO | 87.5 | 93.9 | 58.9 | 12.3 | 23.2 |
| PDA-YOLO (Ours) | 93.5 | 96.6 | 60.4 | 6.9 | 9.9 |
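One way to read the accuracy versus speed trade-off in this comparison is to find the models that no other model beats on both mAP@0.5 and mDt at once (a Pareto front). The sketch below does this with values copied from the table. It assumes mDt is a per-image detection time in milliseconds, where lower is better; the function name is illustrative.

```python
# (mAP@0.5 in %, mDt in ms) copied from the comparison table.
RESULTS = {
    "Faster R-CNN_r50": (94.2, 27.2),
    "SSD300_vgg16": (92.1, 22.8),
    "CenterNet_r18": (83.3, 37.0),
    "RT-DETR_r18": (93.6, 46.9),
    "YOLOv8n": (91.8, 5.0),
    "YOLOv9t": (93.5, 15.8),
    "YOLOv10n": (93.6, 6.7),
    "YOLO11n": (93.3, 5.8),
    "Mamba-YOLO": (93.9, 23.2),
    "PDA-YOLO": (96.6, 9.9),
}


def pareto_front(results):
    """Return models not dominated on (higher mAP@0.5, lower mDt).

    A model is dominated if another model is at least as good on both
    metrics and strictly better on at least one.
    """
    front = []
    for name, (acc, ms) in results.items():
        dominated = any(
            o_acc >= acc and o_ms <= ms and (o_acc > acc or o_ms < ms)
            for other, (o_acc, o_ms) in results.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


if __name__ == "__main__":
    for name in pareto_front(RESULTS):
        acc, ms = RESULTS[name]
        print(f"{name}: mAP@0.5={acc}%, mDt={ms} ms")
```

On these two metrics alone, the non-dominated models are YOLOv8n, YOLOv10n, YOLO11n, and PDA-YOLO: the three baselines are faster, while PDA-YOLO has the highest mAP@0.5.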

4.2.2. Category-Wise Detection Performance Analysis

| Algorithms | LGB (I) | LGB (II) | RFB (I) | RFB (II) | IMM (I) | IMM (II) | MW (I) | MW (II) | AGM (I) | AGM (II) |
|---|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN_r50 | 88.4 | 49.5 | 93.8 | 52.9 | 96.9 | 57.6 | 95.3 | 60.9 | 96.8 | 60.1 |
| SSD300_vgg16 | 85.4 | 44.3 | 87.9 | 45.3 | 95.5 | 54.8 | 93.6 | 58.1 | 98.0 | 60.1 |
| CenterNet_r18 | 55.2 | 28.0 | 87.7 | 42.4 | 92.2 | 51.8 | 91.4 | 56.5 | 89.9 | 44.8 |
| RT-DETR_r18 | 98.2 | 56.7 | 92.3 | 55.3 | 96.8 | 57.7 | 84.3 | 52.5 | 96.6 | 62.8 |
| YOLOv8n | 93.9 | 54.1 | 80.6 | 45.3 | 98.0 | 62.2 | 87.8 | 57.7 | 98.6 | 65.6 |
| YOLOv9t | 98.1 | 59.7 | 77.8 | 46.6 | 98.3 | 62.1 | 93.8 | 63.0 | 99.3 | 65.8 |
| YOLOv10n | 92.5 | 54.2 | 92.0 | 52.4 | 96.9 | 59.3 | 93.9 | 62.0 | 97.6 | 62.2 |
| YOLO11n | 81.0 | 48.9 | 94.2 | 54.0 | 98.1 | 62.5 | 94.6 | 64.4 | 98.7 | 64.9 |
| Mamba-YOLO | 89.1 | 53.2 | 93.0 | 54.0 | 97.4 | 60.7 | 91.3 | 61.5 | 98.6 | 65.2 |
| PDA-YOLO (Ours) | 94.7 | 55.5 | 94.5 | 54.0 | 98.1 | 60.1 | 97.2 | 67.3 | 98.6 | 65.0 |
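The column labels I and II are not defined in this excerpt. The sketch below averages the per-species values for YOLO11n and PDA-YOLO and compares them with the overall figures from the comparison table in Section 4.2.1. The averages match if I is read as mAP@0.5 (%) and II as mAP@0.5:0.95 (%), which is an inference from the numbers rather than a statement from the source.

```python
# Per-species values copied from the category-wise table, in the order
# LGB, RFB, IMM, MW, AGM. "I" and "II" are the table's own column labels.
PER_SPECIES = {
    "YOLO11n": {
        "I": (81.0, 94.2, 98.1, 94.6, 98.7),
        "II": (48.9, 54.0, 62.5, 64.4, 64.9),
    },
    "PDA-YOLO": {
        "I": (94.7, 94.5, 98.1, 97.2, 98.6),
        "II": (55.5, 54.0, 60.1, 67.3, 65.0),
    },
}

# Overall values copied from the comparison table in Section 4.2.1:
# (mAP@0.5 %, mAP@0.5:0.95 %).
OVERALL = {
    "YOLO11n": (93.3, 58.9),
    "PDA-YOLO": (96.6, 60.4),
}


def species_mean(values):
    """Unweighted mean over the five species, rounded to one decimal place."""
    return round(sum(values) / len(values), 1)


if __name__ == "__main__":
    for model, columns in PER_SPECIES.items():
        mean_i = species_mean(columns["I"])
        mean_ii = species_mean(columns["II"])
        print(f"{model}: mean I = {mean_i}, mean II = {mean_ii}, "
              f"overall = {OVERALL[model]}")
```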

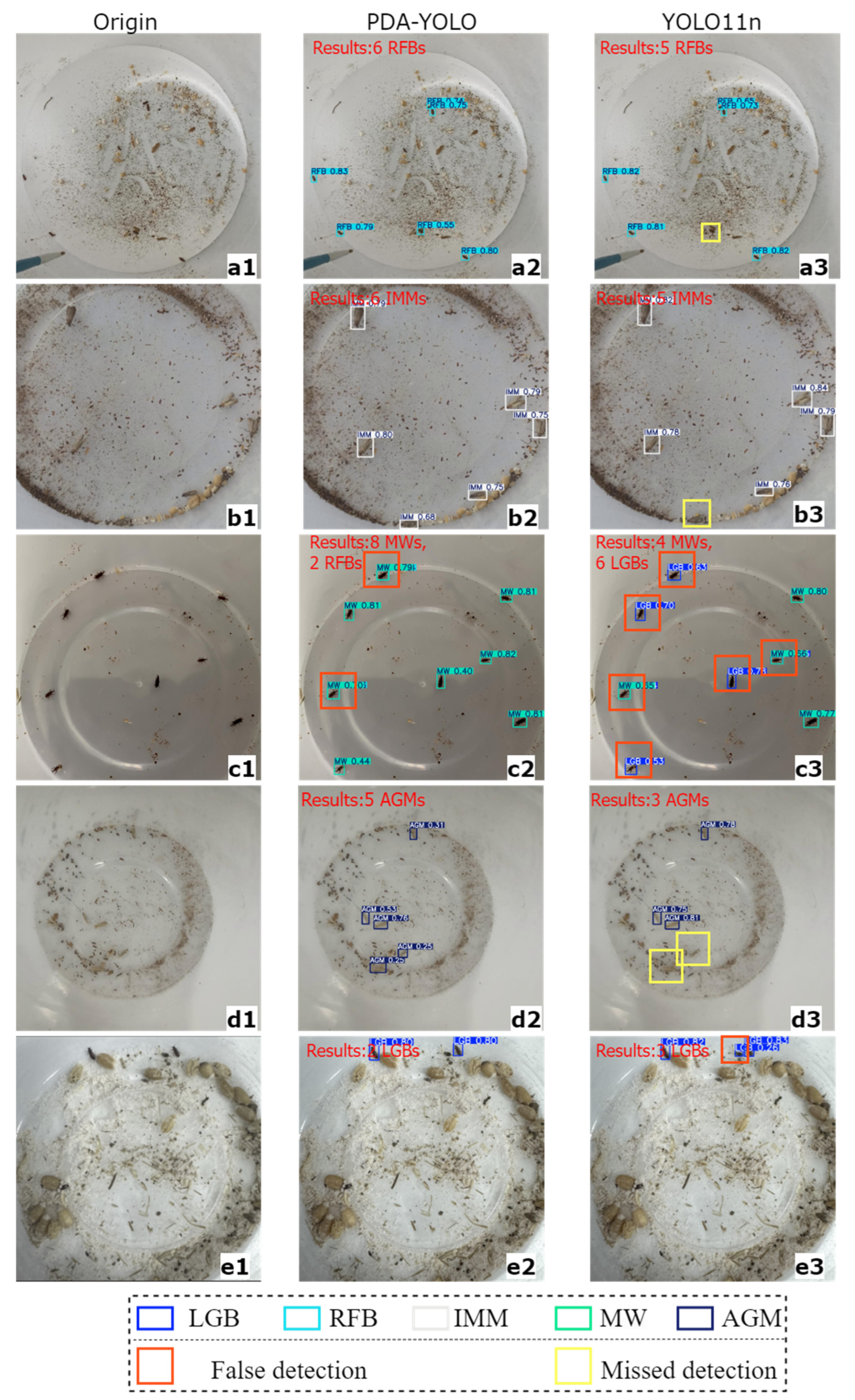

4.3. Analysis of Stored-Grain Insect Pest Detection Results

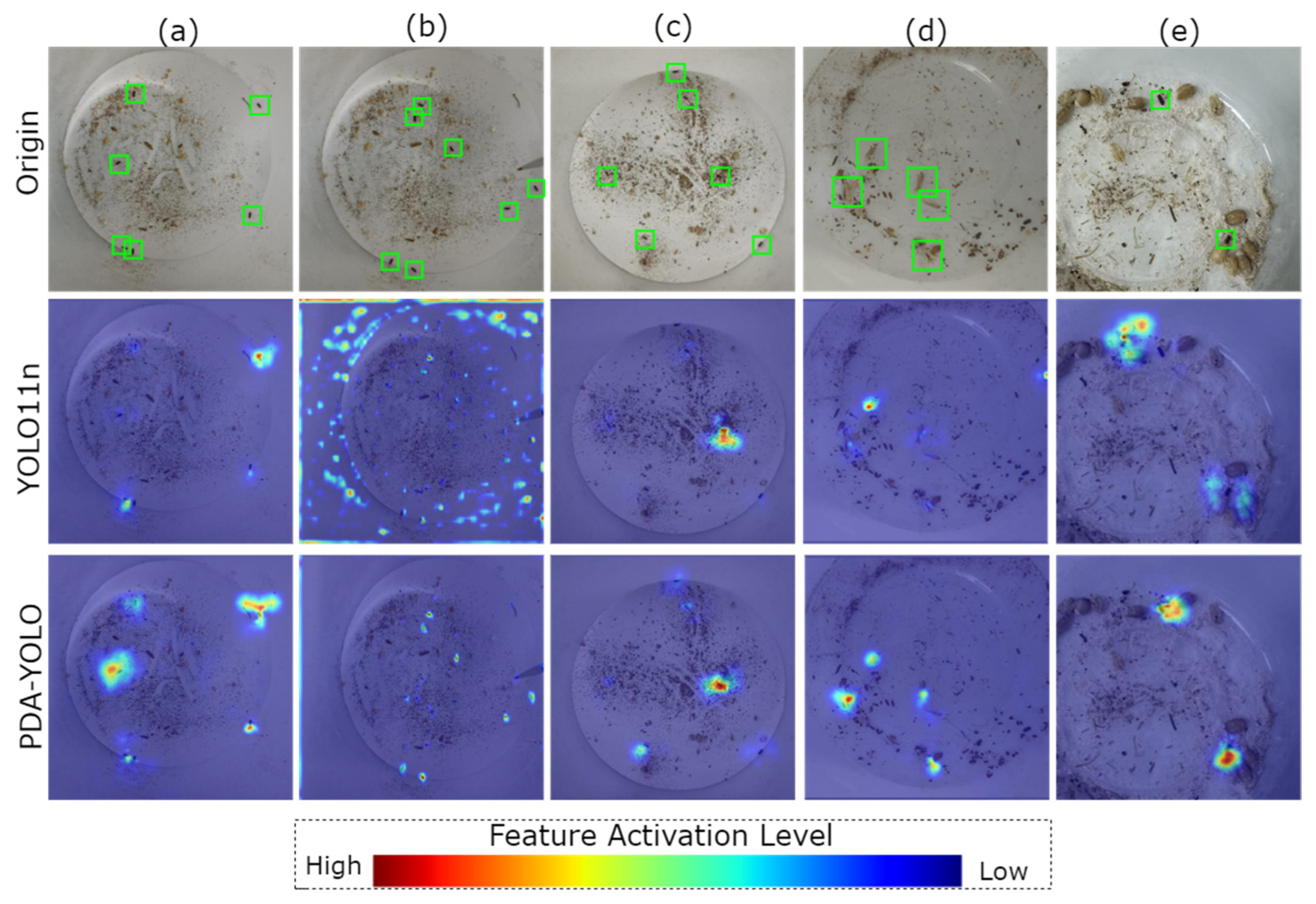

4.4. Grad-CAM

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1

Appendix A.2



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, F.; Guan, Z.; Lyu, Z.; Liu, S. High-Precision Stored-Grain Insect Pest Detection Method Based on PDA-YOLO. Insects 2025, 16, 610. https://doi.org/10.3390/insects16060610