Ecological Entomology: How Is Gibson’s Framework Useful?

Abstract

Simple Summary

1. Perceptual Information Used in Flight Control in Insects: A Brief Review

- Terrain-following task: The ventral part of the optic flow is used to follow the ground [3,10,11,12,13,14,15,16,17]. Srinivasan et al. [11] proposed that “the image velocity of the ground is held approximately constant” to achieve terrain-following behavior. Portelli et al. [13] reported that “(1) honeybees reacted to a ventral optic flow perturbation by gradually restoring their ventral optic flow to the value they had previously perceived, (2) honeybees restored their ventral optic flow mainly by adjusting their flight height while keeping their airspeed relatively constant”. However, Straw et al. [18] “found that flies do not regulate altitude by maintaining a fixed value of optic flow beneath them”, but that “Drosophila flies establish an altitude set point on the basis of nearby horizontal edges and tend to fly at the same height as such features”. David [10] drew a similar conclusion about altitude regulation: “flies did not adjust the angular velocity of image movement that they held constant” for speed control purposes.

- Centering and wall-following tasks: The lateral/frontal parts of the optic flow are used to center in a narrow corridor by balancing the lateral parts of the optic flow (in honeybees [3,19,20], in bumblebees [15,21], in flies [10,22], in hawkmoths [23]), or to follow a wall along a wide corridor by restoring the optic flow pattern from one side (in honeybees [19,20,24], in bumblebees [25]). Kirchner and Srinivasan [24] suggested that “bees maintained equidistance by balancing the apparent angular velocities of the two walls, or, equivalently, the velocities of the retinal images in the two eyes”. Dyhr et al. [25] reached a similar conclusion in bumblebees: “the centering response relies on a direct comparison of the optic flow from each eye providing a more accurate measure of the perceived differences”. Lecoeur et al. [15] found that “lateral position is controlled by balancing the maximum optic flow in the frontal visual field”. Stöckl et al. [23] found that “hawkmoths use a similar strategy for lateral position control to bees and flies in balancing the magnitude of translational optic flow perceived in both eyes”. Serres et al. [20] found that “bee follows the right or left wall by regulating whichever lateral optic flow (right or left) is greater”.

- Speed adjustment task: The bilateral (or bi-vertical) part of the optic flow is useful to adjust flight speed [3,21,26,27] (or [28]). Srinivasan et al. [26] showed that honeybees decrease their flight speed in a narrowing tunnel, and increase it as the tunnel widens. The authors of this study suggested that the visuomotor strategy consists of “holding constant the average image velocity as seen by the two eyes” without specifying any part of the visual field. A similar conclusion was drawn in flies [10,29]. Baird et al. [27] confirmed these results by manipulating the bilateral part of the optic flow and concluded that “honeybees regulate their flight speed by keeping the velocity of the image of the environment in their eye constant” by taking into account both the lateral and the ventral part of the optic flow. Portelli et al. [28] found in honeybees “that the ground speed decreased so as to maintain the larger of the two optic flow sums (“left plus right” optic flows or “ventral plus dorsal” optic flows) constant according to whether the minimum cross-section was in the horizontal or vertical plane”.

- Landing task: The ventral part of the optic flow can also be regulated by honeybees to land [11,26]. Srinivasan et al. [11] concluded that honeybees “tend to hold the angular velocity of the image of the surface constant as they approach it” in order to adjust their height above a flat surface. Srinivasan et al. [11] also concluded that “the bee decelerates continuously and in such a way as to keep the projected time to touchdown constant as the surface is approached”. Strictly speaking, this “projected time” is a time-to-contact (TTC). This procedure ensures that the agent’s speed decreases in proportion to the distance to the ground, reaching a value near zero at touchdown [3,5,9].
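The constant time-to-contact strategy described for landing can be illustrated with a minimal numerical sketch. It relies on assumptions that go beyond the cited studies: the approach is reduced to one dimension (distance `h` to the surface and approach speed `v`), the time-to-contact is taken as `h / v`, and the function name, the parameter values and the Euler integration scheme are all hypothetical choices made for illustration. Under these assumptions, holding `h / v` equal to a constant `tau` gives `dh/dt = -h / tau`, so both distance and speed decay together toward zero.

```python
import math


def simulate_constant_ttc_landing(h0=1.0, tau=0.5, dt=0.001, t_end=3.0):
    """Euler integration of dh/dt = -h / tau (illustrative 1-D model).

    h0    : initial distance to the surface (m), assumed value
    tau   : time-to-contact held constant (s), assumed value
    dt    : integration step (s)
    t_end : simulated duration (s)
    Returns a list of (time, distance, approach_speed) tuples.
    """
    h = h0
    trajectory = []
    steps = int(round(t_end / dt))
    for i in range(steps + 1):
        v = h / tau  # approach speed that keeps h / v equal to tau
        trajectory.append((i * dt, h, v))
        h -= v * dt  # move toward the surface during one time step
    return trajectory


trajectory = simulate_constant_ttc_landing()
t_final, h_final, v_final = trajectory[-1]
# Closed-form solution of the same model, for comparison.
h_expected = 1.0 * math.exp(-t_final / 0.5)
```

In this sketch the approach speed is proportional to the remaining distance at every step, which is the property stated in the text; the model says nothing about how an insect would actually measure or regulate the variable.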

2. Ecological Perception of the Visual World: Reminders of Gibson’s Conceptual Framework

2.1. A Conceptual Framework Anchored on a Double Postulate

2.2. A Necessity: Redefining the Nature of (Perceptual) Stimulation

2.3. Where Is the Useful Information for Controlling the Action?

2.4. The Need for Precise Terminology

2.5. Identifying the Appropriate Experimental Agenda?

3. How the Ecological Approach Allows a Better Understanding of the Processes Underlying Trajectory Control in Insects

3.1. The Ecological Framework as a Transversal Framework

3.2. New Reading of the Data from the Literature

- Terrain-following tasks: The studies mentioned [3,10,11,12,13,14,15,16,17] indicate that honeybees rely on the value of optic flow velocity (sometimes called global optic flow rate), a low order variable, to maintain their height. The key point in this particular task is that the high order variable is the OVRC, and not the value of the OV per se. Indeed, because OV is the ratio of speed to distance from the ground, many combinations of height and speed yield the same OV value. When the forward displacement speed is constant, any departure of the OVRC from zero “tells” the insect that its height is changing and that a change in altitude is required. A close coupling between a high order variable and an action parameter allows the terrain-following task to be performed.

- Centering task: The centering behavior observed in many insect species [3,10,15,19,20,21,22,26] arises, in all probability, from the detection of a high order variable: motion parallax. Motion parallax corresponds to the OV gradient following a displacement of the agent in the environment [60]. This gradient makes it possible to locate the objects of the environment in relation to each other. When the two side walls have the same OV, they are equidistant from the observation point, i.e., the agent is moving along the center of the corridor. Equalizing the OV of the two walls guarantees the production of a centered displacement.

- Speed adjustment task: The studies reviewed in Section 1 seem to indicate that OVRC could be used as part of a safety principle. When a flight tunnel narrows or widens for a given displacement of the agent, the OV increases or decreases, respectively. Cancelling any change in OV despite changes in tunnel section gives rise to a safe behavior, i.e., a decrease in forward displacement speed when the tunnel narrows, and an increase in displacement speed when the tunnel widens.

- Landing task: Although several high order variables can be used to control landing tasks, the study by Srinivasan et al. [11] is interesting because it allows us to distinguish, as part of the control of a landing task, between a high order variable (tau: τ), the PAES it specifies (first order TTC) and how the high order variable can be used as part of a control strategy. Within the framework of the strategy described, maintaining the τ-value constant is a sufficient condition for zeroing the displacement velocity as the surface is approached.
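The relations invoked in these readings can be made concrete with a short sketch. It assumes, for illustration only, that OV is computed as speed divided by distance for a surface viewed at 90° to the direction of travel, and that the corridor walls are parallel; the function names and numerical values are hypothetical and are not taken from the cited studies. The sketch shows that different speed and height pairs give the same OV, that the two lateral OVs cancel only at the center of the corridor, and that holding OV constant in a narrower tunnel implies a lower forward speed.

```python
def optical_velocity(speed, distance):
    """OV (rad/s) of a surface seen at 90 deg to the direction of travel."""
    return speed / distance


def lateral_imbalance(speed, y_left, corridor_width):
    """Right-minus-left OV for an agent at distance y_left from the left wall."""
    ov_left = optical_velocity(speed, y_left)
    ov_right = optical_velocity(speed, corridor_width - y_left)
    return ov_right - ov_left


def speed_holding_ov(ov_setpoint, wall_distance):
    """Forward speed that keeps OV at ov_setpoint for a given wall distance."""
    return ov_setpoint * wall_distance


# Two different (speed, height) pairs produce the same ventral OV.
ov_low_slow = optical_velocity(1.0, 0.5)
ov_high_fast = optical_velocity(2.0, 1.0)

# Centered in a 1 m wide corridor: the two lateral OVs cancel out.
imbalance_centered = lateral_imbalance(1.0, 0.5, 1.0)
# Closer to the left wall: the left OV dominates (negative imbalance).
imbalance_left = lateral_imbalance(1.0, 0.25, 1.0)

# Holding OV constant while the tunnel narrows lowers the forward speed.
speed_wide = speed_holding_ov(2.0, 0.5)
speed_narrow = speed_holding_ov(2.0, 0.2)
```

The first comparison is the reason why the OV value alone cannot specify height, which is the point made above about the OVRC being the informative variable when speed is constant.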

3.3. The Challenge of Determining Which Variable Is Used and How It Is Used

3.4. The Challenge of Understanding the Whole Informational Landscape

4. Opening Up New Avenues for the Community of Entomologists

4.1. How This Informational Abundance Can Be Used

4.2. Adaptation and Learning: Two Processes Serving Behavioral Flexibility

5. Conclusions and Perspectives

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AES | Agent-Environment System |
| FOE | Focus of Optical Expansion |
| OV | Optical Velocity |
| OVRC | Optical Velocity Rate of Change |
| PAES | Property of the Agent-Environment System |
| SARC | Splay Angle Rate of Change |
| TTC | Time-To-Contact |

References

- Kennedy, J.S. The visual responses of flying mosquitoes. Proc. Zool. Soc. Lond. 1940, 109, 221–242.

- Kennedy, J.S. The migration of the desert locust (Schistocerca gregaria Forsk.). I. The behaviour of swarms. II. A theory of long-range migrations. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 1951, 235, 163–290.

- Srinivasan, M.V. Honeybees as a model for the study of visually guided flight, navigation, and biologically inspired robotics. Physiol. Rev. 2011, 91, 413–460.

- Egelhaaf, M.; Boeddeker, N.; Kern, R.; Kurtz, R.; Lindemann, J.P. Spatial vision in insects is facilitated by shaping the dynamics of visual input through behavioral action. Front. Neural Circuits 2012, 6, 108.

- Serres, J.R.; Ruffier, F. Optic flow-based collision-free strategies: From insects to robots. Arthropod Struct. Dev. 2017, 46, 703–717.

- Gibson, J.J. The Perception of the Visual World; Houghton, Mifflin and Company: Boston, MA, USA, 1950.

- Nakayama, K.; Loomis, J.M. Optical velocity patterns, velocity-sensitive neurons, and space perception: A hypothesis. Perception 1974, 3, 63–80.

- Koenderink, J.J.; van Doorn, A.J. Facts on optic flow. Biol. Cybern. 1987, 56, 247–254.

- Srinivasan, M.V. Vision, perception, navigation and ‘cognition’ in honeybees and applications to aerial robotics. Biochem. Biophys. Res. Commun. 2021, 564, 4–17.

- David, C.T. Compensation for height in the control of groundspeed by Drosophila in a new ‘Barber’s Pole’ wind tunnel. J. Comp. Physiol. A 1982, 147, 485–493.

- Srinivasan, M.V.; Zhang, S.; Chahl, J.S.; Barth, E.; Venkatesh, S. How honeybees make grazing landings on flat surfaces. Biol. Cybern. 2000, 83, 171–183.

- Franceschini, N.; Ruffier, F.; Serres, J. A bio-inspired flying robot sheds light on insect piloting abilities. Curr. Biol. 2007, 17, 329–335.

- Portelli, G.; Ruffier, F.; Franceschini, N. Honeybees change their height to restore their optic flow. J. Comp. Physiol. A 2010, 196, 307–313.

- Portelli, G.; Serres, J.R.; Ruffier, F. Altitude control in honeybees: Joint vision-based learning and guidance. Sci. Rep. 2017, 7, 1–10.

- Lecoeur, J.; Dacke, M.; Floreano, D.; Baird, E. The role of optic flow pooling in insect flight control in cluttered environments. Sci. Rep. 2019, 9, 1–13.

- Serres, J.R.; Morice, A.H.P.; Blary, C.; Montagne, G.; Ruffier, F. Honeybees flying over a mirror crash irremediably. In Proceedings of the 4th International Conference on Invertebrate Vision (ICIV), Bäckaskog, Sweden, 5–12 August 2019; p. 260. Available online: https://www.youtube.com/watch?v=KH9z8eqOBbU (accessed on 26 November 2021).

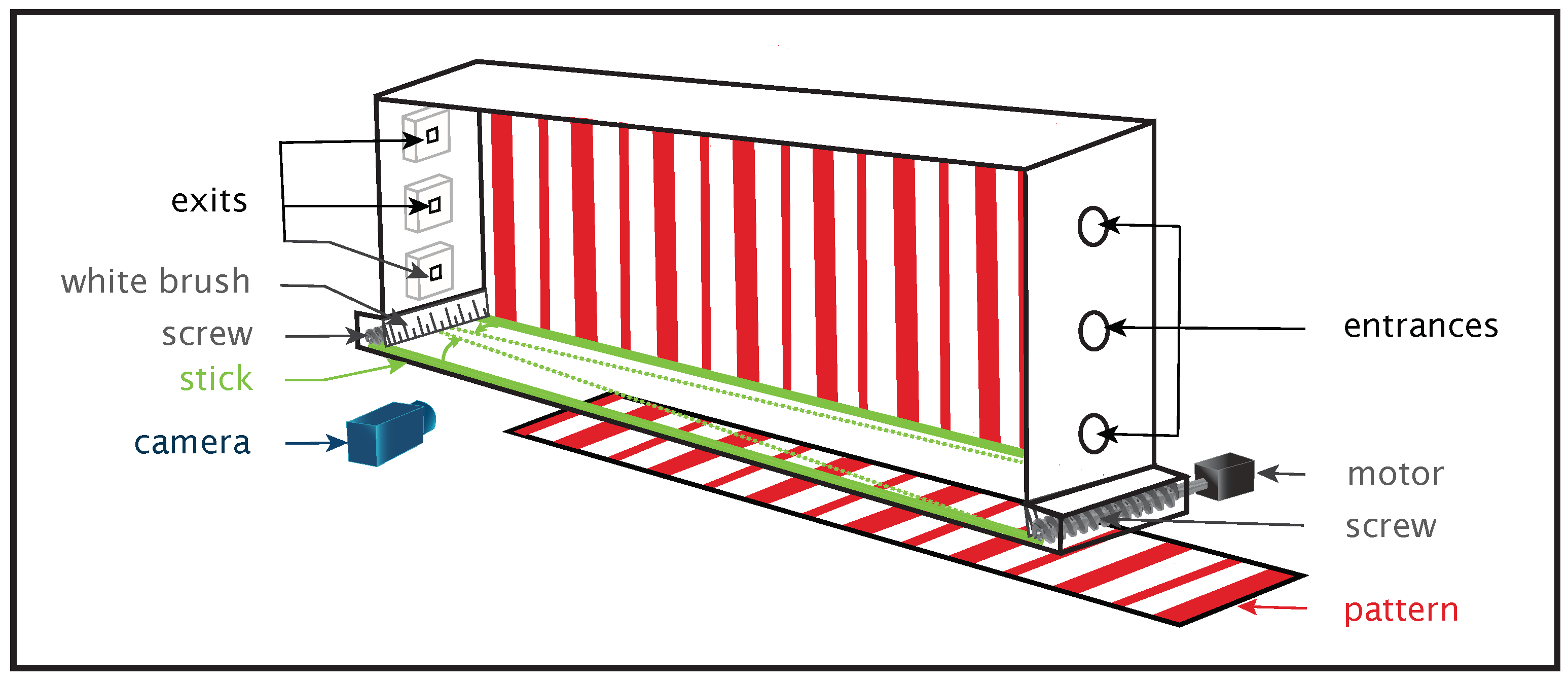

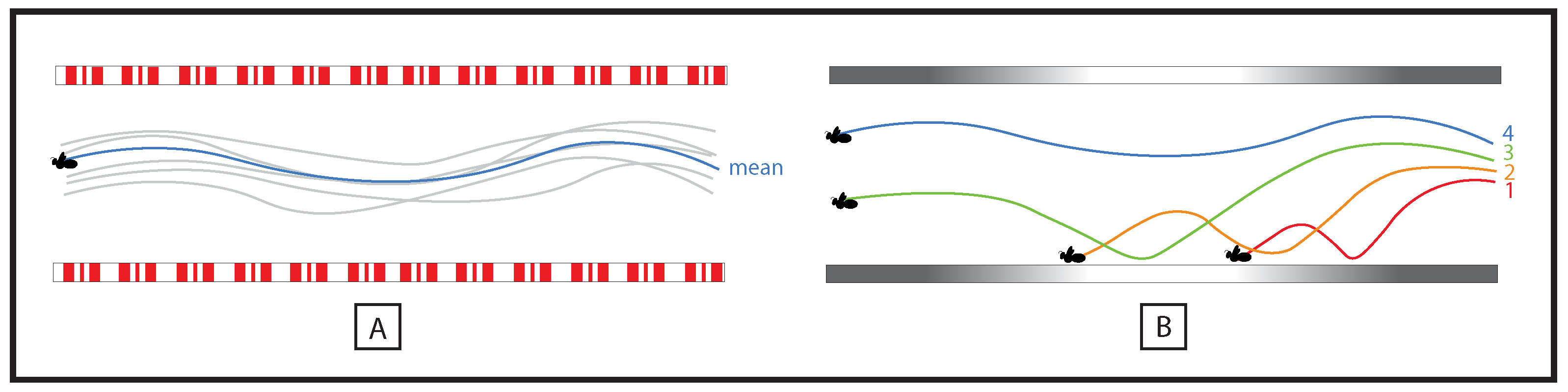

- Serres, J.R.; Morice, A.H.; Blary, C.; Miot, R.; Montagne, G.; Ruffier, F. An innovative optical context to make honeybees crash repeatedly. bioRxiv 2021.

- Straw, A.D.; Lee, S.; Dickinson, M.H. Visual control of altitude in flying Drosophila. Curr. Biol. 2010, 20, 1550–1556.

- Srinivasan, M.; Lehrer, M.; Kirchner, W.; Zhang, S. Range perception through apparent image speed in freely flying honeybees. Vis. Neurosci. 1991, 6, 519–535.

- Serres, J.R.; Masson, G.P.; Ruffier, F.; Franceschini, N. A bee in the corridor: Centering and wall-following. Naturwissenschaften 2008, 95, 1181.

- Baird, E.; Kornfeldt, T.; Dacke, M. Minimum viewing angle for visually guided ground speed control in bumblebees. J. Exp. Biol. 2010, 213, 1625–1632.

- Kern, R.; Boeddeker, N.; Dittmar, L.; Egelhaaf, M. Blowfly flight characteristics are shaped by environmental features and controlled by optic flow information. J. Exp. Biol. 2012, 215, 2501–2514.

- Stöckl, A.; Grittner, R.; Pfeiffer, K. The role of lateral optic flow cues in hawkmoth flight control. J. Exp. Biol. 2019, 222, jeb199406.

- Kirchner, W.; Srinivasan, M. Freely flying honeybees use image motion to estimate object distance. Naturwissenschaften 1989, 76, 281–282.

- Dyhr, J.P.; Higgins, C.M. The spatial frequency tuning of optic-flow-dependent behaviors in the bumblebee Bombus impatiens. J. Exp. Biol. 2010, 213, 1643–1650.

- Srinivasan, M.; Zhang, S.; Lehrer, M.; Collett, T. Honeybee navigation en route to the goal: Visual flight control and odometry. J. Exp. Biol. 1996, 199, 237–244.

- Baird, E.; Srinivasan, M.V.; Zhang, S.; Lamont, R.; Cowling, A. Visual control of flight speed and height in the honeybee. From Animals to Animats 9. In Proceedings of the 9th International Conference on Simulation of Adaptive Behavior, Rome, Italy, 25–29 September 2006; pp. 40–51.

- Portelli, G.; Ruffier, F.; Roubieu, F.L.; Franceschini, N. Honeybees’ speed depends on dorsal as well as lateral, ventral and frontal optic flows. PLoS ONE 2011, 6, e19486.

- Fry, S.N.; Rohrseitz, N.; Straw, A.D.; Dickinson, M.H. Visual control of flight speed in Drosophila melanogaster. J. Exp. Biol. 2009, 212, 1120–1130.

- Warren, W.H.; Kay, B.A.; Zosh, W.D.; Duchon, A.P.; Sahuc, S. Optic flow is used to control human walking. Nat. Neurosci. 2001, 4, 213–216.

- Chardenon, A.; Montagne, G.; Laurent, M.; Bootsma, R. A robust solution for dealing with environmental changes in intercepting moving balls. J. Mot. Behav. 2005, 37, 52–62.

- Duchon, A.P.; Kaelbling, L.P.; Warren, W.H. Ecological robotics. Adapt. Behav. 1998, 6, 473–507.

- Ibáñez-Gijón, J.; Díaz, A.; Lobo, L.; Jacobs, D.M. On the ecological approach to information and control for roboticists. Int. J. Adv. Robot. Syst. 2013, 10, 265.

- Baird, E.; Kreiss, E.; Wcislo, W.; Warrant, E.; Dacke, M. Nocturnal insects use optic flow for flight control. Biol. Lett. 2011, 7, 499–501.

- Bigge, R.; Pfefferle, M.; Pfeiffer, K.; Stöckl, A. Natural image statistics in the dorsal and ventral visual field match a switch in flight behaviour of a hawkmoth. Curr. Biol. 2021, 31, R280–R281.

- Baird, E.; Boeddeker, N.; Ibbotson, M.; Srinivasan, M. A universal strategy for visually guided landing. Proc. Natl. Acad. Sci. USA 2013, 110, 18686–18691.

- Gibson, J. The Senses Considered as Perceptual Systems; Houghton Mifflin: Boston, MA, USA, 1966.

- Gibson, J.J. The Ecological Approach to Visual Perception; Houghton, Mifflin and Company: Boston, MA, USA, 1979.

- Michaels, C.F.; Carello, C. Direct Perception; Prentice-Hall: Englewood Cliffs, NJ, USA, 1981.

- Gibson, J.J. Visually controlled locomotion and visual orientation in animals. Br. J. Psychol. 1958, 49, 182–194.

- Gibson, J.J. Ecological optics. Vis. Res. 1961, 1, 253–262.

- Stoffregen, T.A.; Bardy, B.G. On specification and the senses. Behav. Brain Sci. 2001, 24, 195–213.

- Warren, W.H. Information is where you find it: Perception as an ecologically well-posed problem. i-Perception 2021, 12, 20416695211000366.

- Warren, W.H.; Hannon, D.J. Direction of self-motion is perceived from optical flow. Nature 1988, 336, 162–163.

- McLeod, P.; Dienes, Z. Running to catch the ball. Nature 1993, 362, 23.

- Michaels, C.F.; Oudejans, R.R. The optics and actions of catching fly balls: Zeroing out optical acceleration. Ecol. Psychol. 1992, 4, 199–222.

- Gibson, J.J. The theory of affordances. Hilldale 1977, 1, 67–82.

- Ravi, S.; Bertrand, O.; Siesenop, T.; Manz, L.S.; Doussot, C.; Fisher, A.; Egelhaaf, M. Gap perception in bumblebees. J. Exp. Biol. 2019, 222, jeb184135.

- Ravi, S.; Siesenop, T.; Bertrand, O.; Li, L.; Doussot, C.; Warren, W.H.; Combes, S.A.; Egelhaaf, M. Bumblebees perceive the spatial layout of their environment in relation to their body size and form to minimize inflight collisions. Proc. Natl. Acad. Sci. USA 2020, 117, 31494–31499.

- Crall, J.D.; Ravi, S.; Mountcastle, A.M.; Combes, S.A. Bumblebee flight performance in cluttered environments: Effects of obstacle orientation, body size and acceleration. J. Exp. Biol. 2015, 218, 2728–2737.

- Duchon, A.P.; Warren, W.H., Jr. A visual equalization strategy for locomotor control: Of honeybees, robots, and humans. Psychol. Sci. 2002, 13, 272–278.

- Flach, J.M.; Warren, R.; Garness, S.A.; Kelly, L.; Stanard, T. Perception and control of altitude: Splay and depression angles. J. Exp. Psychol. Hum. Percept. Perform. 1997, 23, 1764.

- Michaels, C.F.; de Vries, M.M. Higher order and lower order variables in the visual perception of relative pulling force. J. Exp. Psychol. Hum. Percept. Perform. 1998, 24, 526.

- Cutting, J.E. Perception with an Eye for Motion; MIT Press: Cambridge, MA, USA, 1986; Volume 1.

- Smith, M.R.; Flach, J.M.; Dittman, S.M.; Stanard, T. Monocular optical constraints on collision control. J. Exp. Psychol. Hum. Percept. Perform. 2001, 27, 395.

- Huet, M.; Camachon, C.; Fernandez, L.; Jacobs, D.M.; Montagne, G. Self-controlled concurrent feedback and the education of attention towards perceptual invariants. Hum. Mov. Sci. 2009, 28, 450–467.

- Jacobs, D.M.; Michaels, C.F. Direct learning. Ecol. Psychol. 2007, 19, 321–349.

- Lee, D.N. A theory of visual control of braking based on information about time-to-collision. Perception 1976, 5, 437–459.

- François, M.; Morice, A.; Bootsma, R.; Montagne, G. Visual control of walking velocity. Neurosci. Res. 2011, 70, 214–219.

- Koenderink, J.; van Doorn, A. Local structure of movement parallax of the plane. J. Opt. Soc. Am. 1976, 66, 717–723.

- Baird, E.; Dacke, M. Visual flight control in naturalistic and artificial environments. J. Comp. Physiol. A 2012, 198, 869–876.

- Burnett, N.P.; Badger, M.A.; Combes, S.A. Wind and route choice affect performance of bees flying above versus within a cluttered obstacle field. bioRxiv 2021.

- Evangelista, C.; Kraft, P.; Dacke, M.; Reinhard, J.; Srinivasan, M.V. The moment before touchdown: Landing manoeuvres of the honeybee Apis mellifera. J. Exp. Biol. 2010, 213, 262–270.

- Wagner, H. Flow-field variables trigger landing in flies. Nature 1982, 297, 147–148.

- Van Breugel, F.; Dickinson, M. The visual control of landing and obstacle avoidance in the fruit fly Drosophila melanogaster. J. Exp. Biol. 2012, 215, 1783–1798.

- Balebail, S.; Raja, S.K.; Sane, S.P. Landing maneuvers of houseflies on vertical and inverted surfaces. PLoS ONE 2019, 14, e0219861.

- Reber, T.; Baird, E.; Dacke, M. The final moments of landing in bumblebees, Bombus terrestris. J. Comp. Physiol. A 2016, 202, 277–285.

- Baird, E.; Fernandez, D.C.; Wcislo, W.T.; Warrant, E.J. Flight control and landing precision in the nocturnal bee Megalopta is robust to large changes in light intensity. Front. Physiol. 2015, 6, 305.

- Bruno, N.; Cutting, J.E. Minimodularity and the perception of layout. J. Exp. Psychol. Gen. 1988, 117, 161.

- Rushton, S.K.; Wann, J.P. Weighted combination of size and disparity: A computational model for timing a ball catch. Nat. Neurosci. 1999, 2, 186–190.

- François, M. Les Limites D’Application d’un Principe de Contrôle Perceptivo-Moteur. Ph.D. Thesis, Université Aix-Marseille II, Marseille, France, 2010.

- Jacobs, D.M.; Michaels, C.F. Lateral interception I: Operative optical variables, attunement, and calibration. J. Exp. Psychol. Hum. Percept. Perform. 2006, 32, 443.

| Task | Denomination of the Perceptual Information in Entomology | Species |
|---|---|---|
| Centering and wall following | (lateral) “image angular velocity” in [34]; “horizontal optic flow cues” in [34]; “speed of retinal image motion” in [26]; “apparent angular speed” in [26]; “lateral optic flow” in [20]; “the magnitude of translational optic flow perceived in both eyes” in [23]; “translational optic flow cues” in [35] | Apis mellifera, Bombus terrestris, Megalopta genalis, Macroglossum stellatarum |
| Speed adjustment | (lateral) “image angular velocity” in [11,27]; “optic flow cues in the lateral visual field” in [27]; “velocity of the perceived image motion” in [27]; “rate of optic flow” in [21,27]; “image motion signal” in [27]; “optic flow cues” in [27]; “apparent velocity of the surrounding environment” in [26]; “apparent movement of the surrounding patterns relative to themselves” in [10]; “retinal slip speed” in [29] | Apis mellifera, Bombus terrestris, Drosophila hydei, Drosophila melanogaster |
| Terrain following | “ventral optic flow” in [13]; “apparent (ventral) speed of image” in [26]; “image angular velocity” in [27]; “optic flow cues in the ventral region of the visual speed” in [27]; “rate of optic flow” in [27]; “perceived image velocity of motion of the image” in [27] | Apis mellifera |
| Landing on vertical surface | “tau: apparent rate of expansion of the image” in [11]; “magnitude of optic flow” in [36]; “speed of image motion on the retina” in [36] | Apis mellifera |
| Landing on horizontal surface | “angular velocity of the image” in [11,26] | Apis mellifera |
| Heading | “apparent movement” in [1]; “retinal image displacement” in [1] | Aëdes aegypti |

| Task | High Order Variable | Relevant PAES |
|---|---|---|
| Centering in a narrow corridor | motion parallax [51] | distance to center of corridor |
| Maintaining speed | OVRC [27,28] | speed maintenance |
| Maintaining altitude | splay angle rate of change (SARC) [52], OVRC [13,27] | altitude maintenance |
| Landing on a vertical surface | [36] | Time-to-Contact (TTC), relevance of current deceleration |
| Landing on a horizontal surface | OVRC [11] | altitude change |
| Heading | Focus Of Expansion (FOE) [30] | direction of agent’s displacement in relation to environment |
| Object interception | bearing angle rate of change [31], optical velocity rate of change [46] | adequacy of the current velocity in relation to the object’s trajectory |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Berger Dauxère, A.; Serres, J.R.; Montagne, G. Ecological Entomology: How Is Gibson’s Framework Useful? Insects 2021, 12, 1075. https://doi.org/10.3390/insects12121075