Abstract

In recent years, an increasing number of machine learning applications in tribology and coating design have been reported. Motivated by this, this contribution highlights the use of Gaussian processes for predicting the resulting coating characteristics in order to enhance the design of amorphous carbon coatings. To this end, Gaussian process regression (GPR) models are used to create a visualization of the process map of the available coating design. The GPR models are trained on the experimental results of a centrally composed full factorial 2³ experimental design for the deposition of a-C:H coatings on medical UHMWPE. In addition, other supervised machine learning (ML) models, namely Polynomial Regression (PR), Support Vector Machines (SVM) and Neural Networks (NN), are trained. All models are then used to predict the resulting indentation hardness for a complete statistical experimental design based on the Box–Behnken design. Finally, the results are compared, with GPR showing superior performance. The performance of the overall approach, in terms of the quality and quantity of predictions as well as its usefulness for visualization, is demonstrated using an initial dataset of 10 characterized amorphous carbon coatings on UHMWPE.

1. Introduction

Machine learning (ML), a subfield of artificial intelligence (AI), has become an integral part of many areas of public life and research in recent years. ML is used to create learning systems that can be considerably more powerful than rule-based algorithms, which makes them well suited to problems with unclear solution strategies and a large number of variants. ML algorithms are used in areas ranging from product development and production [1] to patient diagnosis and therapy [2]. They are also playing an increasingly important role in medical technology, for example, in coatings for joint replacements.

Particularly in coating technology and design, ML algorithms make it possible to identify complex relationships between several deposition process parameters and both the deposition process itself and the properties of the resulting coatings [3,4]. Building on these relationships, coating designers can plan their experiments and gain valuable insights into their coating designs and the parameter settings required for coating deposition.

This contribution examines the application of ML algorithms to the design of amorphous carbon coatings. It first provides an overview of the experimental setup for data generation and of the concept of machine learning and its algorithms. Likewise, the deposition of amorphous carbon coatings and their properties are presented. Subsequently, the capabilities of the selected supervised ML algorithms, namely Polynomial Regression (PR), Support Vector Machines (SVM), Neural Networks (NN) and Gaussian Process Regression (GPR), are explained and the resulting data visualization is shown. Afterwards, the results are discussed, with GPR proving to be the superior prediction model. Finally, the main findings are summarized, an outlook is given, and further potentials and applications are identified.

2. Related Work and Main Research Questions

2.1. Amorphous Carbon Coating Design

Coating metal and plastic parts, such as those used for joint replacements, with amorphous carbon coatings is an example of a complex process [5]. Amorphous carbon coatings are commonly used for machine elements [6,7], engine components [8,9] and tools [10,11]. In contrast, they are rarely used for load-bearing, tribologically stressed implants [12,13]. Engine and machine elements have so far been coated primarily to reduce friction, whereas forming tools have been coated to adjust friction while increasing tool service life. Transferring such tribologically effective coating systems to articulating implant surfaces is therefore a promising approach to reduce wear and friction [14,15,16].

The coating process depends on many different coating process parameters, such as the bias voltage [17], the target power [18], the gas flow [19] and the temperature, which influence the chemical and mechanical properties as well as the tribological behavior of the resulting coatings [20]. It is therefore vital to ensure both the required coating properties and a robust, reproducible coating process to meet the high requirements for medical devices. Compared with experience-based parameter settings, which often rely on trial and error, ML algorithms can reveal the underlying correlations more clearly and systematically.

While several experimental investigations focus on improving the tribological effectiveness of joint replacements [21,22,23] and the lubrication conditions in prostheses [24,25,26], some are complemented by computer-aided or computational methods to improve predictions and findings [27,28,29]. Nevertheless, the known interactions between coating process parameters and resulting properties are mostly qualitative and valid only for specific coating plants and parameter ranges.

2.2. Coating Process and Design Parameters

The use of ML algorithms is a promising approach [30] not only to describe such interactions qualitatively, which otherwise have to be determined in elaborate experiments, but also to quantify them [21]. The aim of using ML is to achieve reproducible, robust coating processes that yield the required coating properties. For this purpose, the main coating properties of the coating parameter variations, such as coating thickness, roughness, adhesion, hardness and indentation modulus, have to be analyzed and used to train suitable ML algorithms [31].

Within this contribution, the indentation modulus and the coating hardness are examined in more detail, since these parameters can be determined and reproduced with high accuracy and have a relatively high predictive value for the subsequent tribological behavior, such as the resistance to abrasive wear [32,33].

2.3. Research Questions

From the above considerations, it was found that existing solutions are based solely on a trial-and-error approach, and ML has not yet been considered for coating design in joint replacements. In brief, this contribution aims to answer the following central questions. First, can ML algorithms predict the resulting properties of amorphous carbon coatings? Second, how good is the prediction of these properties in terms of quality and quantity? Third, can ML help visualize the coating properties and the coating deposition parameters leading to them? If ML can be used in these cases, the main advantages would be a more efficient approach to coating design with few or no trial-and-error steps and the co-design of coatings by coating experts and ML. The following sections present the materials and methods used to answer these research questions and provide an outlook on what could be achieved with ML.

3. Materials and Methods

First, the studied materials and methods are described briefly. This includes the deposition of the amorphous carbon coating on the substrate material (UHMWPE) as well as the setup and procedure of the experimental tests used to determine the mechanical properties (hardness and elasticity). Second, the ML pipeline and the methods used are explained. Finally, the programming language Python and the toolkits deployed are described.

3.1. Experimental Setup

3.1.1. Materials

The investigated substrate was medical UHMWPE [34] (Chirulen® GUR 1020, Mitsubishi Chemical Advanced Materials, Vreden, Germany). The specimens to be coated were flat disks, which have been used for mechanical characterization (see [35]). The UHMWPE disks had a diameter of 45 mm and a height of 8 mm. Before coating, the specimens were mirror-polished in a multistage polishing process (Saphir 550-Rubin 520, ATM Qness, Mammelzen, Germany) and cleaned with ultrasound (Sonorex Super RK 255 H 160 W 35 Hz, Bandelin electronic, Berlin, Germany) in isopropyl alcohol.

3.1.2. Coating Deposition

Monolayer a-C:H coatings were deposited on UHMWPE under two-fold rotation using industrial-scale coating equipment (TT 300 K4, H-O-T Härte- und Oberflächentechnik, Nuremberg, Germany) for physical vapor deposition and plasma-enhanced chemical vapor deposition (PVD/PECVD). The recipient was evacuated to a base pressure of at least 5.0 × 10⁻⁴ Pa before deposition. It was not preheated, to avoid deposition-related heat flux into the UHMWPE. The specimens were then cleaned and activated for 2 min in an argon (Ar, purity 99.999%) ion (Ar⁺) plasma with a bipolar pulsed bias of −350 V and an Ar flow of 450 sccm. The deposition time of 290 min was set to achieve an a-C:H coating thickness of approximately 1.5 to 2.0 μm. Using reactive PVD, the a-C:H coating was deposited by medium-frequency (MF) unbalanced magnetron (UBM) sputtering of a graphite (C, purity 99.998%) target in an Ar–ethyne (C2H2, purity 99.5%) atmosphere. During this process, the cathode (dimensions 170 × 267.5 mm) was operated with bipolar pulsed voltages. The negative pulse amplitudes corresponded to the voltage setpoints, whereas the positive pulses amounted to 15% of the voltage setpoints. A pulse frequency f of 75 kHz was set with a reverse recovery time (RRT) of 3 μs. A negative direct current (DC) bias voltage was used for all deposition processes. The process temperature was kept below 65 °C during the deposition of the a-C:H functional coatings on UHMWPE. The main deposition process parameters that were varied are summarized in Table 1. Besides the reference coating (Ref), the different coating variations (C1 to C9) of a centrally composed full factorial 2³ experimental design are presented in randomized run order. The deposition process parameters used to generate these coatings form the basis for the machine learning process.

Table 1.

Summary of the main deposition process parameters for a-C:H on UHMWPE.

3.1.3. Mechanical Characterization

According to [36,37], the indentation modulus EIT and the indentation hardness HIT were determined by nanoindentation with Vickers tips (Picodentor HM500 and WinHCU, Helmut Fischer, Sindelfingen, Germany). To minimize substrate influence, care was taken that the maximum indentation depth remained considerably less than 10% of the coating thickness [38,39]. Considering the surface roughness, lower forces also proved suitable for obtaining reproducible results. Distances of more than 40 µm were maintained between individual indentations. For statistical reasons, 10 indentations per specimen were performed and evaluated. A Poisson's ratio typical for amorphous carbon coatings was assumed to determine the elastic–plastic parameters [40,41]. The corresponding settings and parameters are shown in Table 2. The results of nanoindentation are presented and discussed in Section 4.1.

Table 2.

Settings for determining the indentation modulus EIT and the indentation hardness HIT.

3.2. Machine Learning and Used Models

3.2.1. Supervised Learning

The goal of machine learning is to derive relationships, patterns and regularities from datasets [42]. These relationships can then be applied to new, unknown data and problems to make predictions. ML algorithms can be divided into three subclasses: supervised, unsupervised and reinforcement learning. In the following, only supervised learning is discussed in more detail, since all algorithms used in this paper, in particular Gaussian process regression (GPR), belong to this subclass. Supervised ML was chosen because labeled data were available.

In supervised learning, the system is fed labeled training examples, in which the input values are already associated with known output values. Such data can come, for example, from a series of measurements already performed with certain input parameters (input) and the respective measured values (output). The goal of supervised learning is to train the model using the known data in such a way that statements and predictions can also be made about unknown test data [42]. Because the data are already labeled, supervised learning is considered the most reliable form of machine learning and is therefore well suited for optimization tasks [42].

In supervised learning, two problem types can be distinguished: classification and regression. In a classification problem, the algorithm must assign the data to discrete classes or categories. In a regression problem, in contrast, the model estimates continuous output values, for example, by fitting the parameters of a predefined functional relationship between the features and the target [42,43].

A fundamental danger with supervised learning methods is that the model learns the training data by rote, memorizing the individual data points rather than the correlations in the data. As a result, the model no longer responds adequately to new, unknown data. This phenomenon is called overfitting and must be avoided by choosing appropriate training parameters [31]. In the following, basic supervised learning algorithms are presented, ranging from PR and SVM to NN and GPR.

3.2.2. Polynomial Regression

First, polynomial regression (PR) is introduced for supervised learning. PR is a special case of linear regression that fits a polynomial regression curve to the data. The model parameters are often fitted using a least squares estimator, and the approach is applied to various problems, especially in engineering. For a single feature, a basic PR model of degree m can be written as follows [44]:

$$y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + \dots + \beta_m x_i^m + \varepsilon_i, \quad i = 1, \dots, n,$$

with $\beta_0, \dots, \beta_m$ being the regression parameters and $\varepsilon_i$ the error values. The prediction targets are denoted by $y_i$ and the features used for prediction by $x_i$. Support vector machines, described in the next section, are a more sophisticated regression technique.
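The least squares estimate of $\beta_0, \dots, \beta_m$ can be sketched in a few lines of NumPy for a single feature. This is a minimal illustration on synthetic, noise-free data; the helper names `fit_polynomial` and `predict_polynomial` are hypothetical and not part of the described pipeline.

```python
import numpy as np


def fit_polynomial(x, y, degree):
    """Least squares estimate of beta_0..beta_m in y = sum_j beta_j * x**j + eps."""
    # Design matrix with columns x**0, x**1, ..., x**degree
    X = np.vander(x, N=degree + 1, increasing=True)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def predict_polynomial(beta, x):
    """Evaluate the fitted polynomial at new feature values x."""
    return np.vander(x, N=len(beta), increasing=True) @ beta


# Synthetic, noise-free quadratic (illustrative values only)
x = np.linspace(-1.0, 1.0, 20)
y = 1.0 + 2.0 * x - 0.5 * x**2
beta = fit_polynomial(x, y, degree=2)
y_new = predict_polynomial(beta, np.array([0.0, 0.5]))
```

With several features, as in the coating data, scikit-learn's `PolynomialFeatures` additionally generates interaction terms such as $x_1 x_2$ before the linear least squares fit.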

3.2.3. Support Vector Machines

Support vector machines (SVM) were originally developed for classification tasks, but their ideas can be extended to regression as well. SVM seek hyperplanes in a (possibly higher-dimensional) feature space that describe the underlying data [45]. SVM are very effective in high-dimensional spaces and use kernel functions for prediction, which also allows them to be applied to nonlinear problems. SVM are widely used for a variety of problems. For more detailed theoretical insight, we refer to [45].
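To illustrate how a kernel lets SVM regression handle a nonlinear problem, the following sketch fits scikit-learn's `SVR` with an RBF kernel to a synthetic noisy sine curve. The data and the values of `C` and `epsilon` are illustrative assumptions, not settings from this study.

```python
import numpy as np
from sklearn.svm import SVR

# Synthetic nonlinear data (illustrative only): a noisy sine curve
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 5.0, 60)).reshape(-1, 1)
y = np.sin(x).ravel() + 0.05 * rng.standard_normal(60)

# The RBF kernel implicitly maps x into a higher-dimensional space in which a
# linear function is fitted; epsilon is the width of the tube inside which
# deviations are not penalized, and C weights deviations outside that tube.
svr = SVR(kernel="rbf", C=10.0, epsilon=0.05)
svr.fit(x, y)

y_hat = svr.predict(x)
mae_vs_true = np.mean(np.abs(y_hat - np.sin(x).ravel()))
# Only points on or outside the epsilon tube become support vectors
n_support = len(svr.support_)
```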

3.2.4. Neural Networks

Another supervised ML technique is neural networks (NN), which are inspired by the human brain and consist of interconnected layers of neurons, such as multilayer perceptrons (MLP), capable of approximating arbitrary feature–target correlations. The basic building blocks of an MLP are neurons with activation functions, which allow a neuron to fire when certain threshold values are reached [46]. When training an NN, the connection weights and biases are optimized to minimize the training error; the required gradients are computed layer by layer from the output back to the input, a process called backpropagation [31].
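Backpropagation can be sketched with NumPy for a network with one hidden layer, trained by plain gradient descent on synthetic data. This is a minimal illustration under assumed settings (tanh activation, 16 hidden neurons, learning rate 0.1); scikit-learn's `MLPRegressor` uses the same principle but defaults to ReLU activation, 100 hidden neurons and the Adam optimizer.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (illustrative only): three features, one nonlinear target
X = rng.uniform(-1.0, 1.0, size=(200, 3))
y = X[:, [0]] ** 2 + 0.5 * X[:, [1]] - X[:, [2]]

# One hidden layer with tanh activation and a linear output neuron
n_hidden, learning_rate = 16, 0.1
W1 = rng.normal(0.0, 0.5, size=(3, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
b2 = np.zeros(1)

losses = []
for epoch in range(2000):
    # Forward pass
    h = np.tanh(X @ W1 + b1)
    out = h @ W2 + b2
    err = out - y
    losses.append(float(np.mean(err**2)))

    # Backpropagation: apply the chain rule from the output layer backwards
    g_out = 2.0 * err / len(X)            # d(loss)/d(out) for the mean squared error
    g_W2 = h.T @ g_out
    g_b2 = g_out.sum(axis=0)
    g_h = (g_out @ W2.T) * (1.0 - h**2)   # tanh'(z) = 1 - tanh(z)**2
    g_W1 = X.T @ g_h
    g_b1 = g_h.sum(axis=0)

    # Gradient descent step on weights and biases
    W2 -= learning_rate * g_W2
    b2 -= learning_rate * g_b2
    W1 -= learning_rate * g_W1
    b1 -= learning_rate * g_b1
```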

3.2.5. Gaussian Process Regression

Gaussian processes are generic supervised learning methods developed to solve regression and classification problems [43]. While classical regression algorithms fit a polynomial of a given degree or a specific model such as those mentioned above, GPR uses the input data more flexibly [47]. A Gaussian process defines a probability distribution over a theoretically infinite number of candidate functions (approximation curves) that could explain the training data points. Conditioning this distribution on the training data yields a posterior distribution in which functions that fit the data well receive high probability; the posterior mean serves as the prediction, and the posterior variance quantifies its uncertainty. In this way, the input data have significantly more influence on the model, since GPR fixes fewer parameters in advance than classical regression algorithms [47]. The behavior of a GPR model is defined via its kernel. This can be used, for example, to influence how the model handles outliers and how closely the data are approximated.
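The posterior mean and variance of a Gaussian process can be computed directly with NumPy using the standard Cholesky-based formulation. This sketch assumes a zero prior mean, one-dimensional inputs and a fixed RBF kernel (no hyperparameter optimization); the function names are hypothetical.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential (RBF) covariance between 1D input arrays a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def gp_posterior(x_train, y_train, x_test, noise=1e-6, **kernel_args):
    """Posterior mean and variance of a zero-mean GP at the points x_test."""
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, **kernel_args)
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)

    L = np.linalg.cholesky(K)                                  # K = L @ L.T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))  # K^-1 @ y_train
    mean = K_s.T @ alpha                                       # prediction
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)                 # uncertainty
    return mean, var


x_train = np.linspace(0.0, 5.0, 8)
y_train = np.sin(x_train)
# A training point, a point between training points, and a point far outside
x_test = np.array([x_train[3], 2.2, 20.0])
mean, var = gp_posterior(x_train, y_train, x_test)
```

Near the training data the variance is small; far away from it, the mean falls back to the prior mean (zero) and the variance to the prior variance, which is how GPR signals that it is extrapolating.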

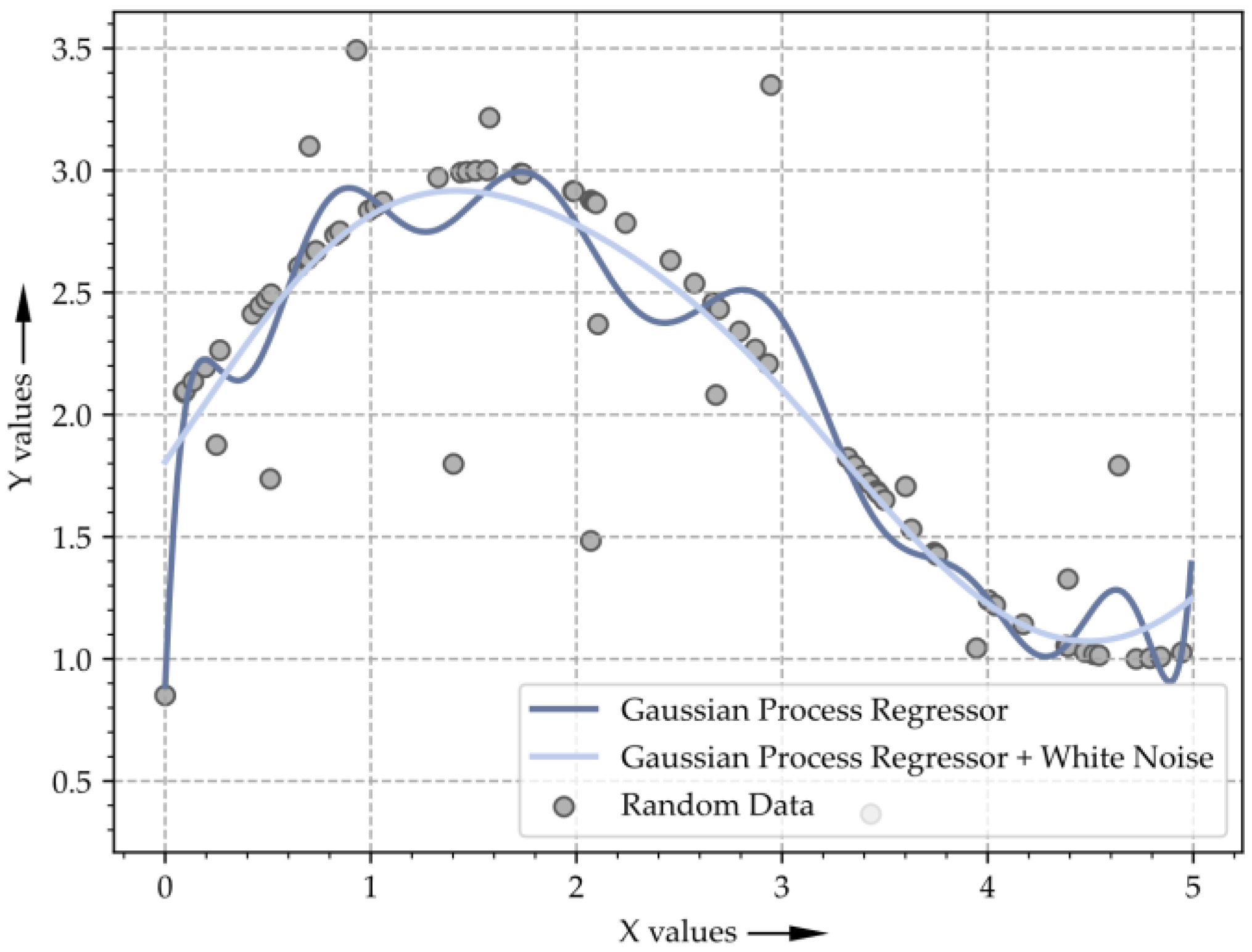

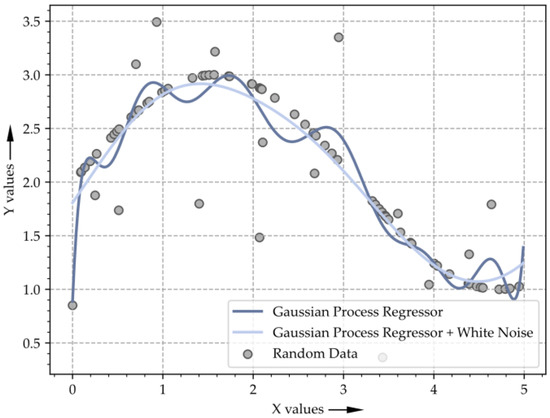

In Figure 1, two different GPR models were used to approximate a sinusoid. The input data points follow a sinusoid but contain some outliers. Compared with the dark blue model, the light blue model has an additional kernel term for noise suppression. Therefore, the light blue model is less sensitive to outliers and yields a smoother approximation curve. This is also the main advantage of GPR compared with other regression models such as linear or polynomial regression: GPR models are more robust to outliers or noisy data and are also relatively stable on small datasets [47], such as the one used in this contribution. This is the main reason they were selected for the use case described later.
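A comparison similar in spirit to Figure 1 can be sketched with scikit-learn: one GPR with a constant times RBF kernel and one with an added `WhiteKernel`. The synthetic data, the outlier positions and the initial kernel parameters are assumptions for illustration; the figure's actual settings are not given in the text.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel, WhiteKernel

# Synthetic sinusoid with a few artificial outliers (illustrative only)
rng = np.random.default_rng(1)
X = np.linspace(0.0, 10.0, 20).reshape(-1, 1)
y = np.sin(X).ravel() + 0.1 * rng.standard_normal(20)
y[[4, 11, 16]] += 1.5

base_kernel = ConstantKernel(1.0) * RBF(length_scale=1.0)

# Without a noise term the GP (almost) interpolates every point, outliers included
gpr_plain = GaussianProcessRegressor(kernel=base_kernel, random_state=0).fit(X, y)

# WhiteKernel adds a learnable noise level to the diagonal of the covariance
gpr_noise = GaussianProcessRegressor(
    kernel=base_kernel + WhiteKernel(noise_level=0.1), random_state=0
).fit(X, y)

X_plot = np.linspace(0.0, 10.0, 200).reshape(-1, 1)
curve_plain = gpr_plain.predict(X_plot)
curve_noise, std_noise = gpr_noise.predict(X_plot, return_std=True)

# Mean deviation from the training targets: the noise model does not chase outliers
residual_plain = np.mean(np.abs(gpr_plain.predict(X) - y))
residual_noise = np.mean(np.abs(gpr_noise.predict(X) - y))
```

The optimized noise level can be inspected via `gpr_noise.kernel_`.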

Figure 1.

Gaussian process regression for the regression of a sinusoid.

3.2.6. Python

The Python programming language was chosen for the present work, as it is the de facto standard language for ML and data science. It is particularly suitable for machine learning because external libraries can be integrated easily, and many libraries and environments for applying machine learning algorithms in practice have been developed. One of them is the open-source Python library scikit-learn [48]. For the methods described above, the following scikit-learn classes were used: PolynomialFeatures, combined with LinearRegression, for the PR models; SVR (support vector regressor) for the SVM models; MLPRegressor for the NN models; and GaussianProcessRegressor for the GPR models. All models were trained with default parameters; only for the GPR model was the kernel function smoothed by adding white noise. This was necessary because scikit-learn's GaussianProcessRegressor has no meaningful default parameters for this task.
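The model setup described above might look as follows in scikit-learn. This is a sketch under assumptions: the PR degree of 2 and the dot product plus white noise kernel are taken from the results section, all other settings are library defaults, and the demo data are placeholders rather than measurements.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import DotProduct, WhiteKernel
from sklearn.linear_model import LinearRegression
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures
from sklearn.svm import SVR

models = {
    # PolynomialFeatures expands the features, LinearRegression fits the coefficients
    "PR": make_pipeline(PolynomialFeatures(degree=2), LinearRegression()),
    "SVM": SVR(),            # default: RBF kernel
    "NN": MLPRegressor(),    # default: one hidden layer with 100 neurons
    # GPR with a white-noise term added to the kernel
    "GPR": GaussianProcessRegressor(kernel=DotProduct() + WhiteKernel()),
}

# Placeholder data only (not measurements): 10 samples, 3 features
rng = np.random.default_rng(0)
X_demo = rng.uniform(0.0, 1.0, size=(10, 3))
y_demo = X_demo @ np.array([3.0, -1.0, 0.5]) + 1.0

predictions = {name: model.fit(X_demo, y_demo).predict(X_demo) for name, model in models.items()}
```

On such a small dataset, `MLPRegressor` will typically emit a convergence warning with its default iteration limit.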

4. Use Case with Practical Example in a-C:H Coating Design

4.1. Data Generation

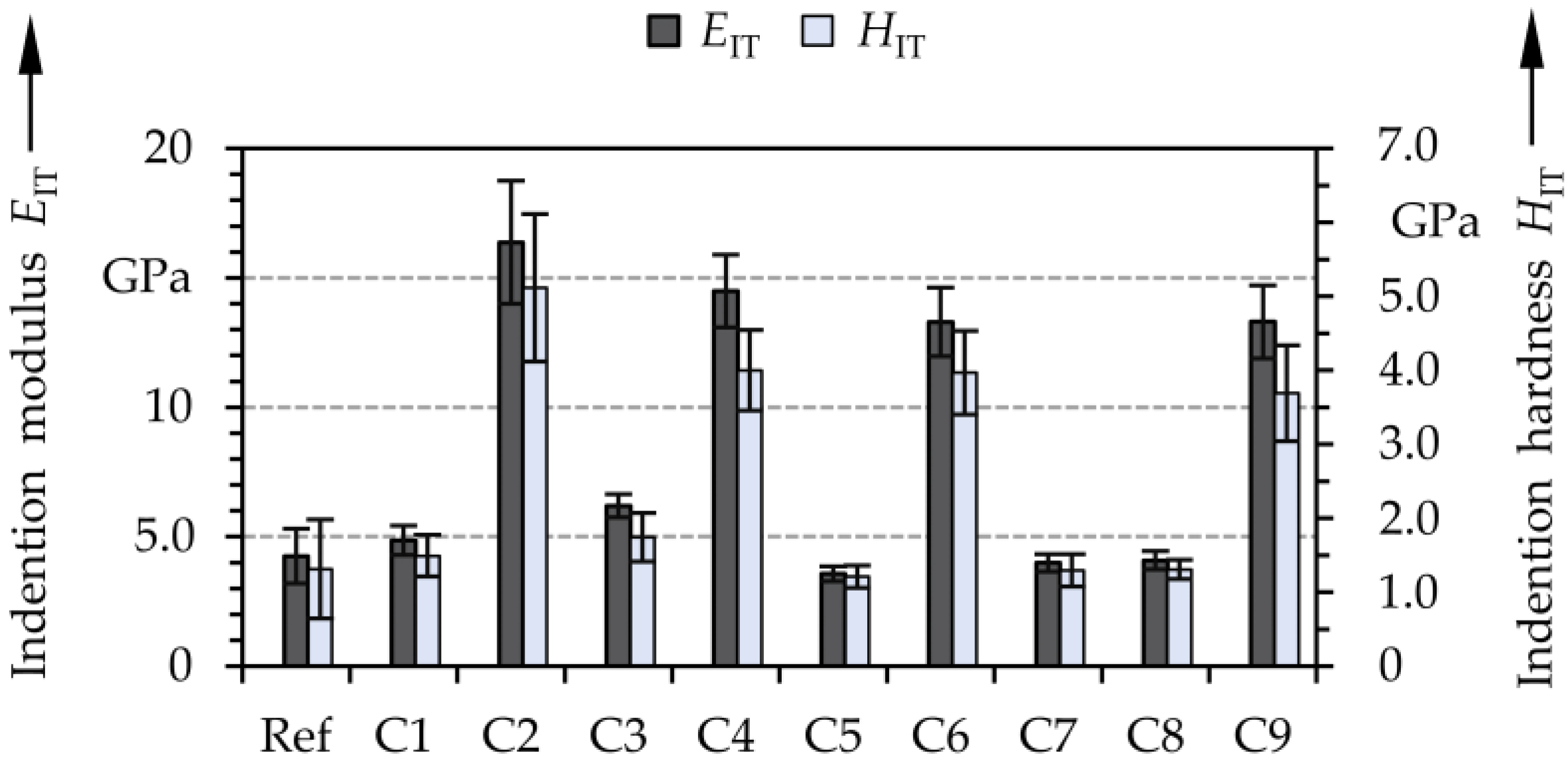

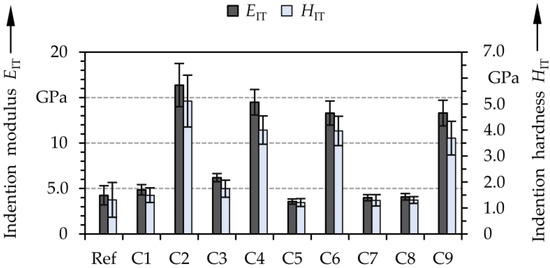

The average indentation modulus and indentation hardness values are presented in Figure 2. Elasticity and hardness differed significantly between the coated groups, and the sputtering power had a considerable influence on the achieved EIT and HIT values. For example, C2, C4, C6 and C9, which were produced with a sputtering power of 2.0 kW, had indentation moduli between 13.3 and 16.4 GPa and indentation hardness values between 3.7 and 5.1 GPa. In contrast, specimens Ref, C1, C5, C7 and C8 exhibited significantly lower EIT and HIT values, ranging from 3.6 to 4.9 GPa and from 1.2 to 1.5 GPa, respectively. Compared with the latter, the central point represented by C3 did not show significantly higher elastic–plastic values. Varying the bias voltage or the combined gas flow did not reveal a distinct trend, especially considering the standard deviations. In general, increasing the sputtering power increased EIT and HIT by more than a factor of three. Accordingly, the higher coating hardness is expected to shield the substrates from adhesive and abrasive wear and to shift cracking towards higher stresses [28,35]. At the same time, the relatively low indentation modulus increases the ability of the coatings to sag without flowing [33]. As a result, the pressures induced by tribological loading may be reduced by increasing the contact dimensions [49]. Thus, the developed a-C:H coatings can be expected to exhibit very advantageous wear behavior [28,50].

Figure 2.

Averaged values of indentation modulus EIT and indentation hardness HIT and standard deviation of the different a-C:H coatings (n = 10).

4.2. Data Processing

4.2.1. Reading in and Preparing Data

After coating characterization, the measured values were available in a standardized Excel dataset containing the plant parameters and the resulting coating characteristics for each sample. The relevant measurements could also be available in a machine-readable format, for example, based on the tribAIn ontology [51], but in this case the data were handled via Excel and Python. To facilitate import into Python, the dataset was restructured so that the data could be imported column by column. The dataset was then imported into the Python program using the pandas library [52]. To facilitate further processing, the plant parameters sputtering power, bias voltage and combined Ar and C2H2 gas flow were combined into a feature array, and a coating characteristic, such as the indentation hardness, was selected as the prediction target.
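A minimal sketch of this import step with pandas is shown below. The column names are hypothetical, since the layout of the actual Excel sheet is not given, and the demo DataFrame contains placeholder numbers, not measurements.

```python
import pandas as pd

# Hypothetical column names; the layout of the actual Excel sheet is not given
FEATURES = ["P_sputter_kW", "U_bias_V", "gas_flow_sccm"]
TARGET = "H_IT_GPa"


def split_features_target(df, features=FEATURES, target=TARGET):
    """Return the feature matrix X and target vector y, skipping incomplete rows."""
    df = df.dropna(subset=list(features) + [target])
    X = df[list(features)].to_numpy(dtype=float)
    y = df[target].to_numpy(dtype=float)
    return X, y


def load_dataset(path, sheet_name=0):
    """Read the column-wise Excel sheet (reading .xlsx files requires openpyxl)."""
    df = pd.read_excel(path, sheet_name=sheet_name)
    return split_features_target(df)


# Placeholder values only (not measurements); the last row is incomplete
demo = pd.DataFrame({
    "P_sputter_kW": [1.0, 2.0, None],
    "U_bias_V": [3.0, 4.0, 5.0],
    "gas_flow_sccm": [6.0, 7.0, 8.0],
    "H_IT_GPa": [0.5, 0.6, 0.7],
})
X_demo, y_demo = split_features_target(demo)
```

For a real file, `X, y = load_dataset("coatings.xlsx")` would be used, where the file name is hypothetical.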

4.2.2. Model Instantiation

The GaussianProcessRegressor class of the scikit-learn package implements Gaussian process models. Instantiating it requires the definition of a kernel. In the context of Gaussian processes, the kernel is also called the covariance function and decisively influences the probability distributions of the Gaussian process; its main task is to calculate the covariance between the individual data points. Two GPR objects were instantiated with different kernels: the first with a standard kernel and the second additionally combined with a white noise kernel. The kernel hyperparameters are optimized during model training. Because this optimization may end in a local maximum, the parameter n_restarts_optimizer can be used to specify how often the optimization is restarted. For GPR, the data were standardized by scaling them to a mean of 0 and a standard deviation of 1.
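The two GPR objects and the standardization could be set up as sketched below. The text does not name the "standard kernel", so an RBF kernel is assumed here; the number of restarts, the initial noise level and the demo data are likewise illustrative assumptions.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, WhiteKernel
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def make_gpr(with_white_noise, n_restarts=5, seed=0):
    # Assumption: RBF stands in for the "standard kernel" mentioned in the text
    kernel = RBF(length_scale=1.0)
    if with_white_noise:
        kernel = kernel + WhiteKernel(noise_level=0.1)
    return make_pipeline(
        StandardScaler(),  # features: mean 0, standard deviation 1
        GaussianProcessRegressor(
            kernel=kernel,
            normalize_y=True,                 # target: mean 0, standard deviation 1
            n_restarts_optimizer=n_restarts,  # extra optimizer runs from random starting points
            random_state=seed,
        ),
    )


gpr_standard = make_gpr(with_white_noise=False)
gpr_white = make_gpr(with_white_noise=True)

# Placeholder data only (not measurements): 12 samples, 3 features
rng = np.random.default_rng(0)
X_demo = rng.uniform(size=(12, 3))
y_demo = X_demo.sum(axis=1) + 0.01 * rng.standard_normal(12)
gpr_white.fit(X_demo, y_demo)
```

After fitting, `gpr_white[-1].kernel_` holds the optimized kernel hyperparameters.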

4.2.3. Training the Model

As described above, training the model is one of the main tasks in machine learning. The scikit-learn environment offers the method fit(X, y) for this purpose. Here, X is the feature matrix containing the feature data of the training dataset (the control variables of the coating plant), and y is the target vector containing the corresponding target data (the characteristic values from coating characterization). Calling reg_model.fit(X, y), where reg_model denotes the selected regression model (here, GPR), trains the model on the available data.

Particularly with small datasets, splitting the data into training and test sets shrinks the data available for training even further. For this reason, k-fold cross-validation can be used [31]. Here, the dataset is split into k smaller subsets, one of which is held out for testing in each training run while the remaining k − 1 subsets are used for training. In each run, a different subset is held out, so that every sample is used for testing exactly once. This approach makes better use of small datasets and yields a more reliable estimate of the model's prediction performance.
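k-fold cross-validation is available in scikit-learn through `KFold` and `cross_val_score`. The sketch below returns one mean absolute error per held-out fold; the choice of k = 5, the GPR kernel and the placeholder data are assumptions for illustration.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import DotProduct, WhiteKernel
from sklearn.model_selection import KFold, cross_val_score


def cv_mae(model, X, y, k=5, seed=0):
    """Mean absolute error on each of the k held-out folds."""
    cv = KFold(n_splits=k, shuffle=True, random_state=seed)
    scores = cross_val_score(model, X, y, cv=cv, scoring="neg_mean_absolute_error")
    return -scores  # scikit-learn maximizes scores, so errors are returned negated


# Placeholder data only (not measurements): 10 samples, 3 features
rng = np.random.default_rng(0)
X_demo = rng.uniform(0.0, 1.0, size=(10, 3))
y_demo = X_demo @ np.array([2.0, -1.0, 0.5]) + 0.05 * rng.standard_normal(10)

fold_errors = cv_mae(GaussianProcessRegressor(kernel=DotProduct() + WhiteKernel()), X_demo, y_demo)
```

Setting k equal to the number of samples gives leave-one-out cross-validation (`LeaveOneOut` in scikit-learn), which uses the most training data per run.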

4.2.4. Model Predictions

After training, the models can predict target values for feature combinations that were previously unknown to them. These unknown feature values were either evenly spaced points from a specified interval or the features of a test dataset. For the former, the minimum and maximum of each feature in the training dataset were extracted, and evenly spaced points were then generated for each feature within this min–max interval.
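Generating evenly spaced points within the min–max interval of each feature can be sketched with NumPy as follows. The grid resolution of 20 points per feature and the placeholder training data are assumptions; the helper name `feature_grid` is hypothetical.

```python
import numpy as np


def feature_grid(X_train, n_points=20):
    """Evenly spaced points between the per-feature min and max of the training data."""
    lows, highs = X_train.min(axis=0), X_train.max(axis=0)
    axes = [np.linspace(lo, hi, n_points) for lo, hi in zip(lows, highs)]
    mesh = np.meshgrid(*axes, indexing="ij")          # full factorial grid
    grid = np.column_stack([m.ravel() for m in mesh])  # shape (n_points**d, d)
    return axes, grid


# Placeholder training features only (not measurements): 10 samples, 3 features
rng = np.random.default_rng(0)
X_train = rng.uniform(size=(10, 3))
axes, grid = feature_grid(X_train, n_points=20)
```

Because of `indexing="ij"`, `reg_model.predict(grid).reshape(20, 20, 20)` yields an array whose entry `[i, j, k]` belongs to `axes[0][i]`, `axes[1][j]` and `axes[2][k]`, which is convenient for the slice-based visualization.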

For predicting targets, the scikit-learn library provides the method predict(X), to which the feature values are passed. Calling reg_model.predict(X) returns the corresponding predicted target values. The predictions for the test data were further evaluated in terms of the root mean squared error (RMSE), the mean absolute error (MAE) and the coefficient of prognosis (CoP) [53] and showed good quality, especially for the GPR model (see Table 3).
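The three quality measures can be computed as sketched below. RMSE and MAE use scikit-learn's metric functions; for the CoP, this sketch assumes the form 1 − SS_E/SS_T evaluated on predictions for data not used in training, which may differ in detail from the procedure in [53].

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error


def prediction_quality(y_true, y_pred):
    """RMSE, MAE and an (assumed) coefficient of prognosis for unseen data."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(mean_squared_error(y_true, y_pred))
    mae = mean_absolute_error(y_true, y_pred)
    # Assumption: CoP = 1 - SS_E / SS_T on predictions for data not used in training
    ss_e = np.sum((y_true - y_pred) ** 2)
    ss_t = np.sum((y_true - y_true.mean()) ** 2)
    cop = 1.0 - ss_e / ss_t
    return {"RMSE": rmse, "MAE": mae, "CoP": cop}


# Toy numbers only: one of four predictions is off by 1
quality = prediction_quality([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0])
```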

Table 3.

Prediction quality of the models based on the initial dataset.

From Table 3, it follows that the GPR model is the most suitable model for further evaluation in our test case since it shows the highest coefficient of prognosis. Therefore, we selected the GPR model for the demonstration and visualization of our use case.

4.2.5. Visualization

The Python library matplotlib was used for visualization, as it allows numerical data to be presented easily in 2D or 3D. Since the feature vector contains three variables (sputtering power P_sputter, gas flow φ and bias voltage U_bias), a three-dimensional representation of the feature space is particularly suitable. The three variables were plotted on the x-, y- and z-axes, and the measurement points were placed in this coordinate system. The corresponding target value was represented by color coding as a fourth dimension, so that the target value of each measuring point can be read from a color bar.
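Such a 3D scatter plot with a color bar might be produced with matplotlib as sketched below. The axis labels follow the symbols in the text, while the function name and the placeholder data are assumptions.

```python
import matplotlib.pyplot as plt
import numpy as np


def plot_feature_space(X, y, labels=("P_sputter", "φ", "U_bias"), target_label="H_IT"):
    """3D scatter of the three features with the target value shown as color."""
    fig = plt.figure()
    ax = fig.add_subplot(projection="3d")
    points = ax.scatter(X[:, 0], X[:, 1], X[:, 2], c=y, cmap="viridis")
    ax.set_xlabel(labels[0])
    ax.set_ylabel(labels[1])
    ax.set_zlabel(labels[2])
    fig.colorbar(points, ax=ax, label=target_label)  # color bar = fourth dimension
    return fig, ax


# Placeholder data only (not measurements)
rng = np.random.default_rng(0)
fig, ax = plot_feature_space(rng.uniform(size=(10, 3)), rng.uniform(size=10))
```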

This representation is especially suitable for small datasets, e.g., to get an overview of the positions of the training data points. For large datasets with several thousand data points, a pure 3D visualization becomes too cluttered, since points inside the feature space are no longer visible. For this reason, a different visualization method was used to display the ML predictions for evenly distributed data points.

This visualization method is based on the slice-based view commonly used for computed tomography (CT) datasets, in which the 3D image of the body is browsed layer by layer to gain insight into its interior. Following the same principle, the feature space was traversed layer by layer.

Two feature variables span a 2D coordinate system in which the values are color-coded and displayed in the x–y plane, analogous to the 3D display.

The third feature serves as the running variable along the z-axis, i.e., into the plane. Using a slider, the z-axis can be traversed, so that the feature space is viewed layer by layer.
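The slice-based view can be sketched with matplotlib's `Slider` widget. The function below assumes a 3D array of predictions on an evenly spaced grid; it uses a discrete 20-color colormap, which produces striped bands like those discussed in the results. The function name, labels and the placeholder prediction cube are assumptions for illustration.

```python
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.widgets import Slider


def slice_viewer(axes, values, labels=("P_sputter", "U_bias", "φ"), n_colors=20):
    """Browse a 3D prediction array layer by layer along its third axis.

    values[i, j, k] is the prediction at (axes[0][i], axes[1][j], axes[2][k]).
    """
    x, y, z = axes
    cmap = plt.get_cmap("viridis", n_colors)  # discrete colormap -> striped bands
    fig, ax = plt.subplots()
    fig.subplots_adjust(bottom=0.25)          # leave room for the slider
    image = ax.imshow(
        values[:, :, 0].T, origin="lower", aspect="auto", cmap=cmap,
        extent=(x[0], x[-1], y[0], y[-1]), vmin=values.min(), vmax=values.max(),
    )
    ax.set_xlabel(labels[0])
    ax.set_ylabel(labels[1])
    fig.colorbar(image, ax=ax, label="predicted H_IT")

    slider_ax = fig.add_axes([0.15, 0.05, 0.6, 0.04])
    slider = Slider(slider_ax, labels[2], 0, len(z) - 1, valinit=0, valstep=1)

    def show_layer(_):
        k = int(slider.val)
        image.set_data(values[:, :, k].T)     # transpose: image rows correspond to y
        ax.set_title(f"{labels[2]} = {z[k]:.3g}")
        fig.canvas.draw_idle()

    slider.on_changed(show_layer)
    show_layer(None)
    return fig, slider  # keep a reference to the slider, or it stops responding


# Placeholder prediction cube (not real predictions)
axes = [np.linspace(0.0, 1.0, 20) for _ in range(3)]
values = np.add.outer(np.add.outer(axes[0], axes[1]), -axes[2])
fig, slider = slice_viewer(axes, values)
```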

5. Results and Discussion

5.1. Gaussian Process Regression and Visualization

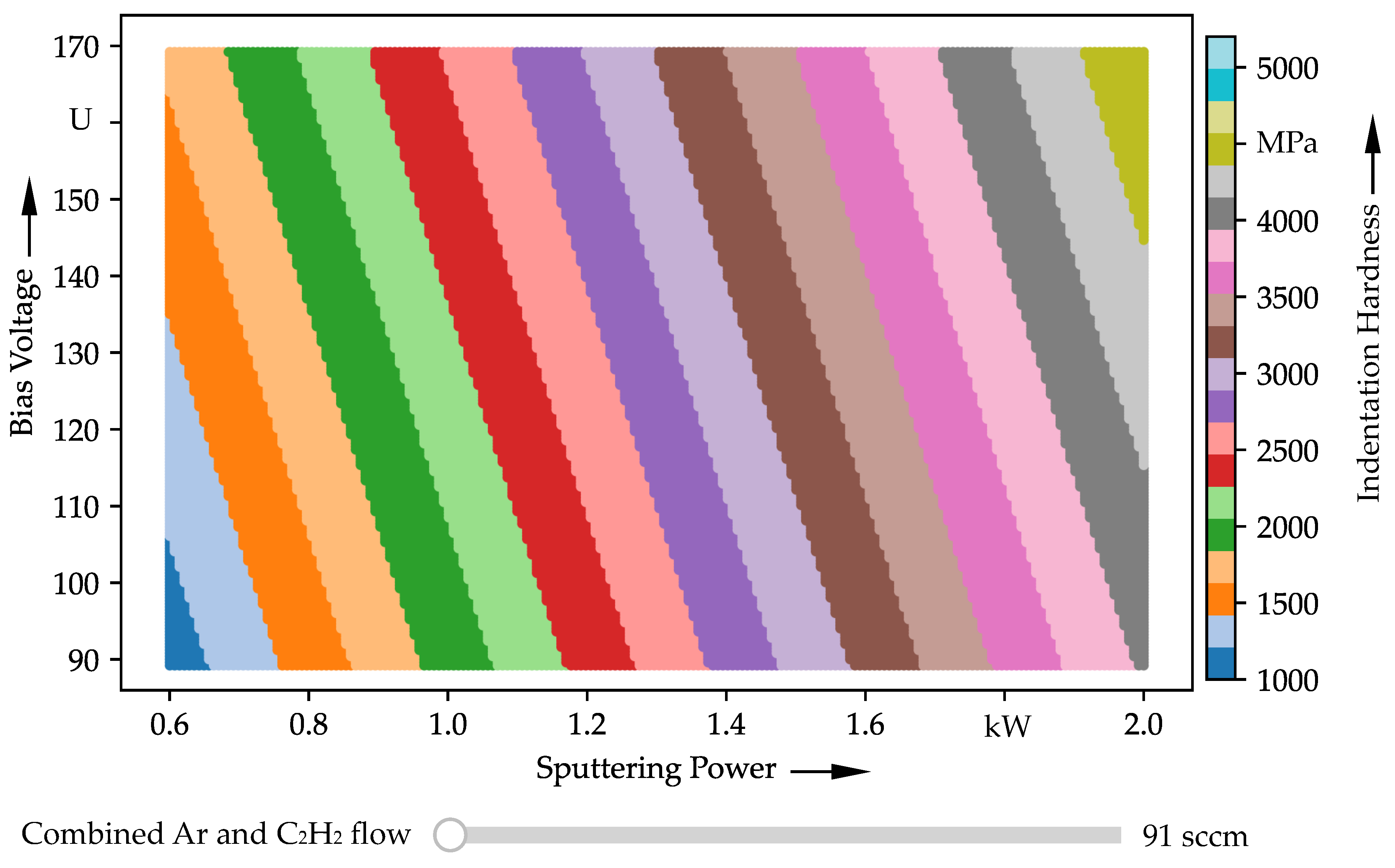

Different GPR models were trained on the initial dataset described above, which was created using a design of experiments approach. Before training, the dataset was scaled to values between 0 and 1. This is especially useful for GPR, as it reduces the training effort and stabilizes the optimization of the model parameters. The GPR models differed mainly in the kernel function used for the Gaussian processes; the scikit-learn implementation supports a variety of kernel functions whose hyperparameters are optimized during training. A dot product kernel with additional white noise was found to enhance the prediction capabilities of the model, reaching a mean absolute error of around 440 MPa and a root mean squared error of around 387 MPa. This results in a CoP of around 90%, which means that the prediction quality and quantity are acceptable for using this model as a prediction model. For model training, an 80/20 train–test split was used, and the training data were shuffled before training. The overall prediction quality is notable, since the dataset used for training is relatively small. Here, GPR with a small white-noise term shows its strength on sparse datasets. Nevertheless, model performance could benefit from more data. The prediction model can also be used to visualize the prediction space (see Figure 3).
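The training setup described in this paragraph can be sketched as a scikit-learn pipeline: a shuffled 80/20 split, min–max scaling to [0, 1] and a dot product kernel plus white noise. This sketch assumes that only the features are min–max scaled; the initial noise level, the number of restarts and the placeholder data are also assumptions.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import DotProduct, WhiteKernel
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler


def train_gpr(X, y, seed=0):
    """Shuffled 80/20 split, min-max scaling, dot product + white noise kernel."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, shuffle=True, random_state=seed
    )
    model = make_pipeline(
        MinMaxScaler(),  # scales each feature to the range [0, 1]
        GaussianProcessRegressor(
            kernel=DotProduct() + WhiteKernel(noise_level=1e-2),
            n_restarts_optimizer=5,
            random_state=seed,
        ),
    )
    model.fit(X_train, y_train)
    return model, X_test, y_test


# Placeholder data only (not measurements): 20 samples, 3 features
rng = np.random.default_rng(0)
X_demo = rng.uniform(size=(20, 3))
y_demo = X_demo @ np.array([2.0, -1.0, 0.5]) + 1.0 + 0.05 * rng.standard_normal(20)

model, X_test, y_test = train_gpr(X_demo, y_demo)
# Pipeline.predict forwards return_std to the GPR: predicted mean and standard deviation
y_pred, y_std = model.predict(X_test, return_std=True)
```

The returned standard deviation is a useful by-product of GPR: it indicates how far a prediction can be trusted, for example when the prediction space extends beyond the training data.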

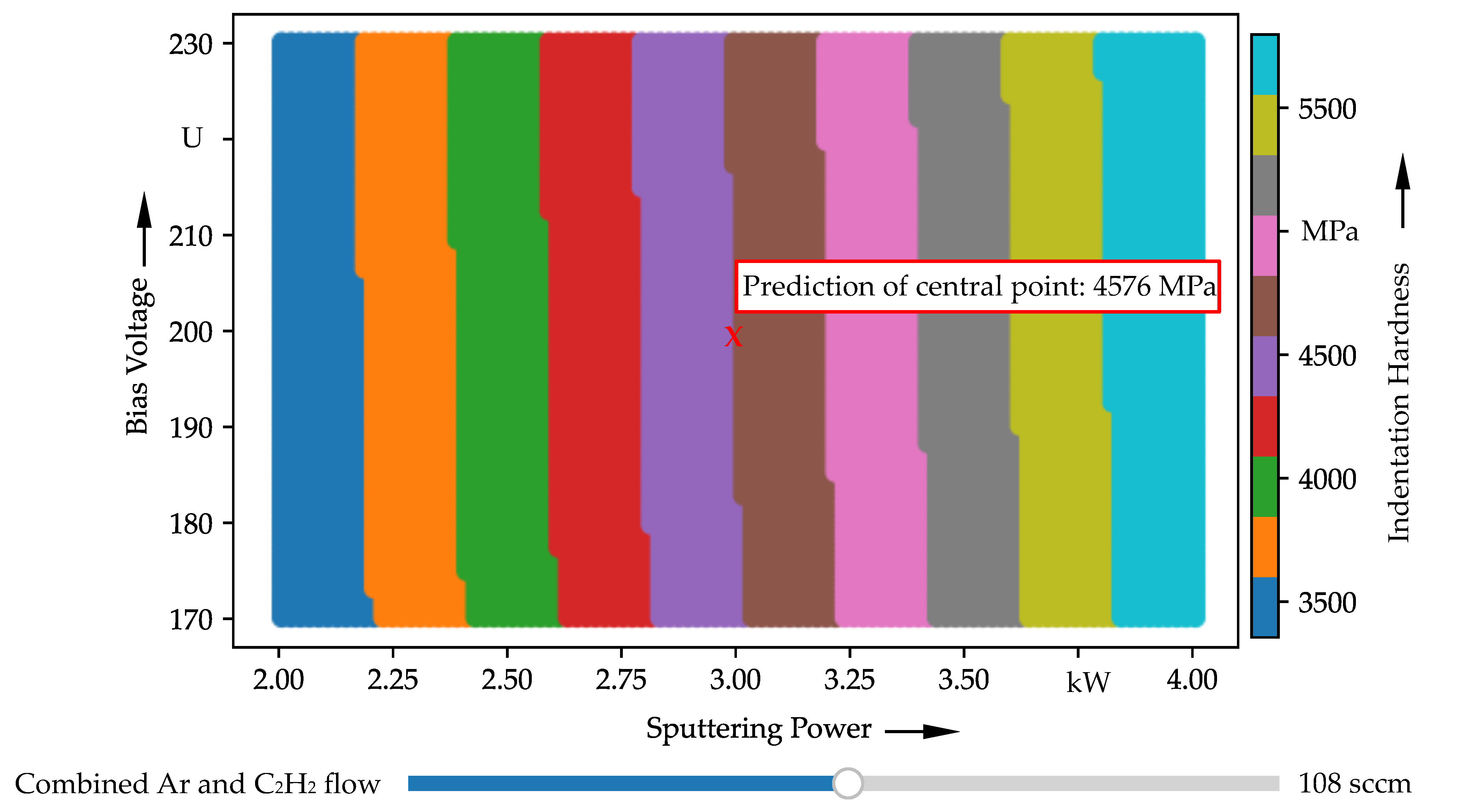

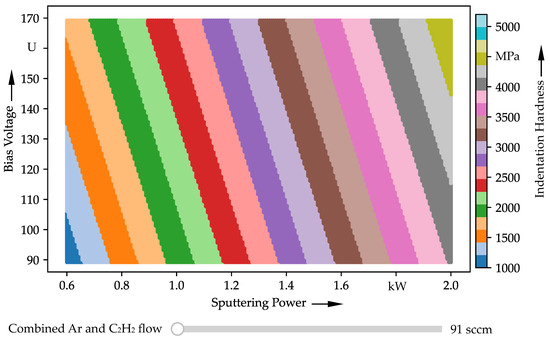

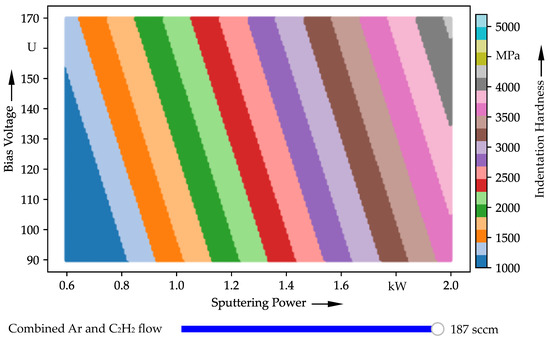

Figure 3.

Predicted space in a 20-color colormap for better differentiation between the different areas of resulting hardness for minimum combined gas flow.

The striped pattern results from using a 20-color colormap, which further distinguishes the different sections of the predicted data. The whole plot can be regarded as a process map: to find the parameters for a desired coating property, tribology experts look up the color corresponding to the desired indentation hardness and can directly read off the required bias voltage and sputtering power. For fine-tuning, the gas flow can be changed via the slider at the bottom. The plot for the maximum combined gas flow is shown in Figure 4.

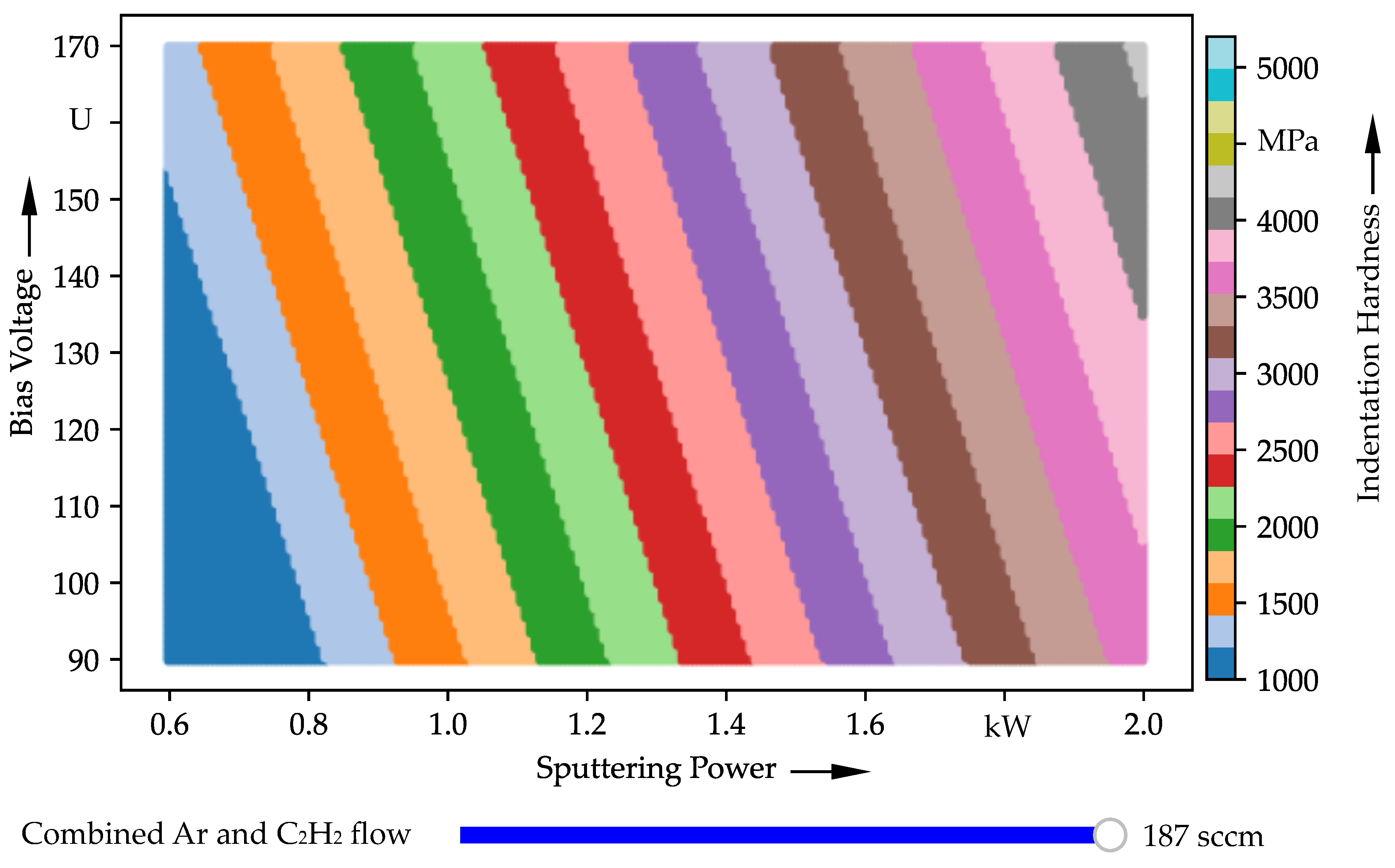

Figure 4.

Predicted space for maximum combined gas flow.

The region of lower indentation hardness grows, and the highest indentation hardness of around 4.2 GPa vanishes. This agrees with experience from initial experimental studies. It was expected that the gas flow, especially the C2H2 gas flow [15], influences the hydrogen content and thus the mechanical properties, and further affects the tribological behavior. These visualizations make it easy to see which parameters lead to the desired indentation hardness and thus help identify where to look for promising parameter sets.

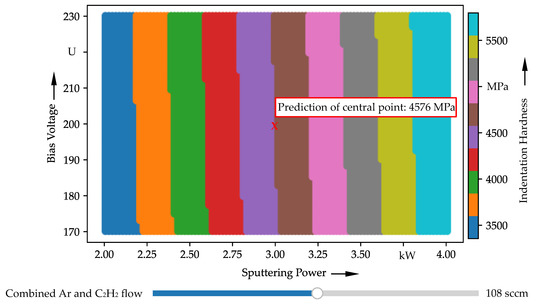

To validate the model, a further experimental design study based on a Box–Behnken design with 3 factors and two stages was performed (see Table 4). First, the indentation hardness was predicted using the GPR model. Then, after the specimens had been coated, the GPR model was evaluated by determining the indentation hardness experimentally. For illustration, the prediction for the central point, which was deposited at a sputtering power of 3 kW, a bias voltage of 200 V and a combined gas flow of 108 sccm, is shown in Figure 5. Note that the prediction space extended considerably beyond the training space, so the predictions could be influenced by many factors.
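For reference, the standard Box–Behnken layout for three factors places each pair of factors at all combinations of their low and high levels while the third factor stays at its center level, giving 12 runs, plus one or more center points. The sketch below builds this design in coded units (−1, 0, +1); the physical factor bounds of the validation study are not given in the text, so `decode` takes them as hypothetical inputs.

```python
import itertools

import numpy as np


def box_behnken_3(n_center=1):
    """Coded Box–Behnken design for three factors at the levels -1, 0, +1."""
    runs = []
    # Each pair of factors takes all (+/-1, +/-1) combinations; the third stays at 0
    for i, j in itertools.combinations(range(3), 2):
        for a, b in itertools.product((-1.0, 1.0), repeat=2):
            row = [0.0, 0.0, 0.0]
            row[i], row[j] = a, b
            runs.append(row)
    runs.extend([[0.0, 0.0, 0.0]] * n_center)  # center point(s)
    return np.array(runs)


def decode(coded, low, high):
    """Map coded levels -1/0/+1 to physical units between low and high."""
    low, high = np.asarray(low, dtype=float), np.asarray(high, dtype=float)
    return (low + high) / 2.0 + coded * (high - low) / 2.0


design = box_behnken_3()
```

With a trained model, `model.predict(decode(design, low, high), return_std=True)` would give a predicted hardness and its uncertainty for every planned run before any specimen is coated.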

Table 4.

Summary of main deposition process parameters and predictions for a-C:H on UHMWPE, prediction of HIT by the GPR model as well as experimental determination of HIT based on the average values and standard deviations of the different a-C:H coatings (n = 10).

Figure 5.

Predicted extended space for probe points.

As shown in Figure 5 and Table 4, the HIT values predicted by the GPR model largely agreed with the experimentally determined HIT values. Especially considering the standard deviations of the experimentally determined HIT values, all predictions were in a range well suited for further use and processing of the data. Although the coating variations P1–P4 lay in a parameter range similar to the training space, their predictions were slightly less accurate than those for the coating variations beyond the training space; this could be attributed to the difficulty of determining the substrate-corrected coating hardness. During the indentation tests, the influence of the softer UHMWPE substrate [54,55] was more pronounced for the softer coatings (P1–P4), which were deposited with lower target power, than for the harder coatings (P5–P13). However, the standard deviation of the hardness values increased with hardness, which could be attributed to an increasing number of coating defects and increasing roughness. In brief, the predictions match the implicit knowledge of the coating experts. This is the only physically conceivable conceptual model that can be considered when looking at the results presented, as coating deposition is a complex, multi-scale process.

Although the visualization of the prediction space in Figure 5 differed slightly from those in Figures 3 and 4 owing to steeper dividing lines, it spanned a larger range of coating process parameters.
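
A GPR model returns a predictive standard deviation alongside its mean. This offers one way to judge how far predictions over an extended parameter space, such as the one in Figure 5, can be trusted. The following sketch fits scikit-learn's `GaussianProcessRegressor` to hypothetical data on two coded factors within [-1, 1], then evaluates it on a grid that reaches out to [-2, 2]. The kernel, the data and the grid limits are assumptions and do not reflect the configuration used for Figure 5.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel, WhiteKernel

rng = np.random.default_rng(0)

# Hypothetical training data inside the coded training space [-1, 1]^2.
X_train = rng.uniform(-1.0, 1.0, size=(10, 2))
y_train = np.sin(X_train[:, 0]) + 0.5 * X_train[:, 1]

# Anisotropic RBF kernel plus a white-noise term (an assumption, not the authors' kernel).
kernel = ConstantKernel(1.0) * RBF(length_scale=[1.0, 1.0]) + WhiteKernel(noise_level=1e-3)
gpr = GaussianProcessRegressor(kernel=kernel, normalize_y=True, random_state=0)
gpr.fit(X_train, y_train)

# Prediction grid extending beyond the training space to [-2, 2]^2.
axis = np.linspace(-2.0, 2.0, 41)
G1, G2 = np.meshgrid(axis, axis)
grid = np.column_stack([G1.ravel(), G2.ravel()])
mean, std = gpr.predict(grid, return_std=True)
mean_map, std_map = mean.reshape(G1.shape), std.reshape(G1.shape)

# Compare the uncertainty at the training points with the largest value on the grid.
_, std_train = gpr.predict(X_train, return_std=True)
print(f"max std at training points: {std_train.max():.3f}")
print(f"max std on extended grid:   {std_map.max():.3f}")
```

Plotting `std_map` next to `mean_map` would show where the extended process map is backed by data and where it is an extrapolation.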

Overall, both the quality and, in particular, the quantity of the model's predictions were very good, so the model can be used for further coating development and for adjusting the corresponding coating process parameters. Extending the GPR model to other coating types, such as ceramic coatings (e.g., CrN) or solid lubricants (e.g., MoS2), or to different coating systems on various substrates is conceivable.

5.2. Comparison to Polynomial Regression, Support Vector Machines and Neural Network Models

To compare our results and trained models with the other models described previously, Table 5 lists the predictions generated by each model for the previously unseen dataset of our test study.
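
The following sketch shows one way to set up the four model types with scikit-learn [48] and query them at previously unseen parameter combinations. Several inputs are assumptions for illustration and do not reproduce Table 5:
- the coded training design (a 2^3 factorial plus two center runs, i.e., 10 points);
- the synthetic target and the query points;
- all hyperparameters except the polynomial degree of 2 stated for PR in this section.

```python
import itertools

import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel, WhiteKernel
from sklearn.linear_model import LinearRegression
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler
from sklearn.svm import SVR


def build_models(seed: int = 0) -> dict:
    """PR, SVM, NN and GPR regressors; hyperparameters are illustrative assumptions."""
    gpr_kernel = ConstantKernel() * RBF(length_scale=np.ones(3)) + WhiteKernel(1e-2)
    return {
        "PR": make_pipeline(StandardScaler(), PolynomialFeatures(degree=2), LinearRegression()),
        "SVM": make_pipeline(StandardScaler(), SVR(kernel="rbf", C=10.0)),
        "NN": make_pipeline(
            StandardScaler(),
            MLPRegressor(hidden_layer_sizes=(8,), solver="lbfgs", max_iter=5000, random_state=seed),
        ),
        "GPR": make_pipeline(
            StandardScaler(),
            GaussianProcessRegressor(kernel=gpr_kernel, normalize_y=True, random_state=seed),
        ),
    }


def predict_all(X_train, y_train, X_new, seed: int = 0) -> dict:
    """Fit every model on the training data and predict the unseen points."""
    predictions = {}
    for name, model in build_models(seed).items():
        model.fit(X_train, y_train)
        predictions[name] = model.predict(X_new)
    return predictions


# Hypothetical coded training design: 2^3 corners plus two center runs (10 points).
X_train = np.array(list(itertools.product((-1.0, 1.0), repeat=3)) + [(0.0, 0.0, 0.0)] * 2)
# Hypothetical target standing in for HIT (arbitrary units).
y_train = 1.0 + 0.8 * X_train[:, 0] - 0.3 * X_train[:, 1] + 0.2 * X_train[:, 0] * X_train[:, 2]
# Hypothetical unseen points, partly outside the coded training cube.
X_new = np.array([[0.0, 1.5, 0.0], [1.5, 0.0, 0.0], [0.0, 0.0, -1.5]])

for name, y_hat in predict_all(X_train, y_train, X_new).items():
    print(name, np.round(y_hat, 3))
```

With only ten training points, the SVM and NN results in particular are sensitive to these hyperparameter choices.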

Table 5.

Comparison of the predictions of the different models used in this contribution.

Table 5 shows that only the GPR model is capable of producing meaningful outputs; none of the other models achieves a prediction quality close to that of the GPR model. Comparing the models in terms of root mean squared error (RMSE), mean absolute error (MAE) and coefficient of prognosis (CoP) makes this even clearer (see Table 6).
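
The three quality measures can be written compactly. The sketch below computes RMSE and MAE in the usual way. It computes the CoP as one minus the ratio of the sum of squared prediction errors to the total sum of squares, following our reading of Most and Will [53]. The CoP differs from the ordinary coefficient of determination mainly in that it is evaluated on predictions for data not used in fitting, as in Table 6. The example values are hypothetical.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def cop(y_true, y_pred):
    """Coefficient of prognosis: 1 - SS_E(prediction) / SS_T, on data not used for fitting."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_e = np.sum((y_true - y_pred) ** 2)          # squared prediction errors
    ss_t = np.sum((y_true - y_true.mean()) ** 2)   # total variation of the measurements
    return float(1.0 - ss_e / ss_t)


# Hypothetical measured and predicted values (arbitrary units).
y_meas = [1.0, 2.0, 3.0, 4.0]
y_hat = [1.1, 1.9, 3.2, 3.8]
print(f"RMSE = {rmse(y_meas, y_hat):.4f}, MAE = {mae(y_meas, y_hat):.4f}, CoP = {cop(y_meas, y_hat):.4f}")
# RMSE = 0.1581, MAE = 0.1500, CoP = 0.9800
```

Under this definition, a CoP of 70% means that the sum of squared prediction errors equals 30% of the total sum of squares of the measured values.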

Table 6.

Comparison of the prediction quality of the models on the unseen dataset.

The results show that the GPR model performed best compared with PR, SVM and NN. It is worth noting that we used a polynomial degree of 2 for the PR models, as this produced the best prediction results; higher polynomial degrees of 3 to 5 led to worse RMSE, MAE and CoP values. The results also show that the SVM and NN in particular are not capable of producing meaningful predictions. Overall, the PR model shows an acceptable prediction quality of around 70%; however, the GPR model has better RMSE and MAE values and would therefore be selected for further consideration. Furthermore, the GPR model also provided better results on the training dataset. It is important to always evaluate RMSE, MAE and CoP together, as together the three values allow a thorough evaluation of a prediction model: RMSE and MAE characterize the spread of the prediction errors better than the CoP, whereas the CoP provides an overall performance score of the model. The weak performance of the SVM may be explained by the small training dataset. The SVM would likely need considerably more training data, as it scored only around 30% CoP even on the training dataset, and the trained SVM model was not feasible for extrapolation on the test dataset. The same may apply to the NN, since neural networks rely on large training datasets and tend to have weaker extrapolation capabilities.
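
The text reports that degree 2 gave the best PR results and that degrees 3 to 5 degraded all three measures. The sketch below shows one way such a degree sweep could be run on a small dataset. It uses leave-one-out cross-validation, so every error is a prediction for a point the model did not see. The following are assumptions, not the authors' procedure:
- the cross-validation scheme;
- the synthetic data (20 random points in three coded factors with a quadratic ground truth);
- the degree range of 1 to 5.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import LeaveOneOut, cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures


def degree_sweep(X, y, degrees=(1, 2, 3, 4, 5)):
    """Leave-one-out RMSE, MAE and CoP of polynomial regression for each degree."""
    y = np.asarray(y, dtype=float)
    scores = {}
    for degree in degrees:
        model = make_pipeline(PolynomialFeatures(degree=degree, include_bias=False), LinearRegression())
        # Each entry of y_cv is predicted by a model fitted without that point.
        y_cv = cross_val_predict(model, X, y, cv=LeaveOneOut())
        rmse = float(np.sqrt(mean_squared_error(y, y_cv)))
        mae = float(mean_absolute_error(y, y_cv))
        cop = float(1.0 - np.sum((y - y_cv) ** 2) / np.sum((y - y.mean()) ** 2))
        scores[degree] = (rmse, mae, cop)
    return scores


# Hypothetical data: 20 random coded points with a quadratic ground truth plus noise.
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(20, 3))
y = 1.0 + 0.8 * X[:, 0] - 0.5 * X[:, 1] ** 2 + 0.3 * X[:, 0] * X[:, 2] + rng.normal(0.0, 0.02, 20)

for degree, (rmse, mae, cop) in degree_sweep(X, y).items():
    print(f"degree {degree}: RMSE={rmse:.3f}  MAE={mae:.3f}  CoP={cop:.3f}")
```

With three factors, a full quadratic model already has 10 coefficients and a full cubic model 20. With few training points, higher degrees therefore quickly have more coefficients than observations, which is one plausible reason why degrees above 2 perform worse.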

6. Conclusions

This contribution evaluated the use of Gaussian processes and advanced data visualization in the design of amorphous carbon coatings on UHMWPE. The study first gave an overview of the experimental setup required for data generation and of the relevant ML concepts and algorithms, and then presented the deposition and characterization of the amorphous carbon coatings.

The use of ML in coating technology and tribology is a very promising approach for the targeted optimization of coating process parameters and coating properties. This was demonstrated in particular by the GPR models, which were used to optimize the mechanical properties of the coatings, namely to increase the hardness and thus the abrasive wear resistance, and consequently the tribological behavior. However, further experimental studies and parameter tuning are needed to obtain better predictive models and process maps. The initial results of these visualizations and GPR models provide a good basis for further studies. For our approach, the following conclusions can be drawn:

- The GPR models and the materials used demonstrated the potential of the selected ML algorithms, and a data visualization method based on GPR was detailed;

- The use of ML proved very promising in this case and can benefit the application of ML in coating technology and tribology. The hardness values predicted with our approach showed high agreement with the experimentally determined hardness values.

For our use case, we implemented a four-step process. First, an initial dataset was generated via design of experiments. This dataset was then analyzed with Python-based scripting tools to create meaningful prediction models via GPR. These GPR models were subsequently used for the presented visualization approach. Finally, a single Python script was created to guide the user through the entire process. The script can be configured to consider other target values, although we focused on indentation hardness.
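
The four-step process could be organized along the following lines. This is a minimal sketch, not the authors' script. The following are all assumptions:
- the coded 2^3 design with one center run;
- the target names `HIT` and `EIT`, the latter a hypothetical second property;
- their synthetic values;
- the kernel and the grid resolution.

```python
import itertools

import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel, WhiteKernel


def make_design() -> np.ndarray:
    """Step 1: coded 2^3 full factorial design plus one center run (9 runs)."""
    corners = list(itertools.product((-1.0, 1.0), repeat=3))
    return np.array(corners + [(0.0, 0.0, 0.0)])


def fit_gpr(X: np.ndarray, y: np.ndarray) -> GaussianProcessRegressor:
    """Step 2: fit a GPR model for one target property."""
    kernel = ConstantKernel() * RBF(length_scale=np.ones(X.shape[1])) + WhiteKernel(1e-2)
    return GaussianProcessRegressor(kernel=kernel, normalize_y=True, random_state=0).fit(X, y)


def process_map(model, fixed: float = 0.0, n: int = 25, lim: float = 1.0):
    """Step 3: predict mean and std on a 2D slice (factors 1 and 2), factor 3 held fixed."""
    axis = np.linspace(-lim, lim, n)
    A, B = np.meshgrid(axis, axis)
    grid = np.column_stack([A.ravel(), B.ravel(), np.full(A.size, fixed)])
    mean, std = model.predict(grid, return_std=True)
    return A, B, mean.reshape(A.shape), std.reshape(A.shape)


def run(targets: dict, target: str = "HIT", fixed: float = 0.0):
    """Step 4: single entry point; the target property is selectable by name."""
    X = make_design()
    y = np.asarray(targets[target], dtype=float)
    return process_map(fit_gpr(X, y), fixed=fixed)


# Hypothetical measurements (arbitrary units), one value per design run.
X_demo = make_design()
targets = {
    "HIT": 1.0 + 0.5 * X_demo[:, 0] + 0.2 * X_demo[:, 1] - 0.1 * X_demo[:, 2],
    "EIT": 10.0 + 3.0 * X_demo[:, 0] - 1.0 * X_demo[:, 1],
}
A, B, hit_mean, hit_std = run(targets, target="HIT")
print(hit_mean.shape, float(hit_mean.min()), float(hit_mean.max()))
```

The returned grids can be passed to, for example, matplotlib's `contourf(A, B, hit_mean)` to draw a process map such as those in Figures 3 to 5.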

Based on this work, further experimental studies will be conducted, and the proposed models will then be retrained using the newly available data. The dataset generated for this article is regarded as a starting point for the ML algorithms used and will grow as it is supplemented with future experimental data. Once more data are available, other ML models, such as neural networks, may become viable.

Author Contributions

Conceptualization, C.S. and B.R.; methodology, C.S. and B.R.; specimen preparation B.R.; coating deposition, B.R.; mechanical experiments, B.R.; data analysis, C.S., B.R. and N.P.; writing—original draft preparation, C.S. and B.R.; writing—review and editing, N.P., M.B., B.S. and S.W.; visualization, C.S. and B.R.; supervision, M.B., B.S. and S.W. All authors have read and agreed to the published version of the manuscript.

Funding

We acknowledge financial support by Deutsche Forschungsgemeinschaft and Friedrich-Alexander-Universität Erlangen-Nürnberg within the funding programme “Open Access Publication Funding”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

A similar model for interactive exploration of the visualization is available at http://csmfk.pythonanywhere.com/ (accessed on 1 February 2022). The data generated and analyzed for this contribution are available from the corresponding author on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bertolini, M.; Mezzogori, D.; Neroni, M.; Zammori, F. Machine Learning for industrial applications: A comprehensive literature review. Expert Syst. Appl. 2021, 175, 114820.

- Lynch, C.J.; Liston, C. New machine-learning technologies for computer-aided diagnosis. Nat. Med. 2018, 24, 1304–1305.

- Marian, M.; Tremmel, S. Current Trends and Applications of Machine Learning in Tribology—A Review. Lubricants 2021, 9, 86.

- Caro, M.A.; Csányi, G.; Laurila, T.; Deringer, V.L. Machine learning driven simulated deposition of carbon films: From low-density to diamondlike amorphous carbon. Phys. Rev. B 2020, 102, 174201.

- Shah, R.; Gashi, B.; Hoque, S.; Marian, M.; Rosenkranz, A. Enhancing mechanical and biomedical properties of protheses—Surface and material design. Surf. Interfaces 2021, 27, 101498.

- Kröner, J.; Kursawe, S.; Musayev, Y.; Tremmel, S. Analysing the Tribological Behaviour of DLC-Coated Dry-Running Deep Groove Ball Bearings with Regard to the Ball Material. Appl. Mech. Mater. 2016, 856, 143–150.

- Khadem, M.; Penkov, O.V.; Yang, H.-K.; Kim, D.-E. Tribology of multilayer coatings for wear reduction: A review. Friction 2017, 5, 248–262.

- Marian, M.; Weikert, T.; Tremmel, S. On Friction Reduction by Surface Modifications in the TEHL Cam/Tappet-Contact-Experimental and Numerical Studies. Coatings 2019, 9, 843.

- Liu, K.; Kang, J.; Zhang, G.; Lu, Z.; Yue, W. Effect of temperature and mating pair on tribological properties of DLC and GLC coatings under high pressure lubricated by MoDTC and ZDDP. Friction 2020, 9, 1390–1405.

- Häfner, T.; Rothammer, B.; Tenner, J.; Krachenfels, K.; Merklein, M.; Tremmel, S.; Schmidt, M. Adaption of tribological behavior of a-C:H coatings for application in dry deep drawing. MATEC Web Conf. 2018, 190, 14002.

- Krachenfels, K.; Rothammer, B.; Zhao, R.; Tremmel, S.; Merklein, M. Influence of varying sheet material properties on dry deep drawing process. IOP Conf. Ser. Mater. Sci. Eng. 2019, 651, 012012.

- Hauert, R.; Thorwarth, K.; Thorwarth, G. An overview on diamond-like carbon coatings in medical applications. Surf. Coat. Technol. 2013, 233, 119–130.

- Hauert, R. A review of modified DLC coatings for biological applications. Diam. Relat. Mater. 2003, 12, 583–589.

- McGeough, J.A. The Engineering of Human Joint Replacements; John Wiley & Sons Ltd.: Chichester, UK, 2013; ISBN 978-1-118-53684-1.

- Döring, J.; Crackau, M.; Nestler, C.; Welzel, F.; Bertrand, J.; Lohmann, C.H. Characteristics of different cathodic arc deposition coatings on CoCrMo for biomedical applications. J. Mech. Behav. Biomed. Mater. 2019, 97, 212–221.

- Dorner-Reisel, A.; Gärtner, G.; Reisel, G.; Irmer, G. Diamond-like carbon films for polyethylene femoral parts: Raman and FT-IR spectroscopy before and after incubation in simulated body liquid. Anal. Bioanal. Chem. 2017, 390, 1487–1493.

- Wang, L.; Li, L.; Kuang, X. Effect of substrate bias on microstructure and mechanical properties of WC-DLC coatings deposited by HiPIMS. Surf. Coat. Technol. 2018, 352, 33–41.

- Bociąga, D.; Sobczyk-Guzenda, A.; Szymanski, W.; Jedrzejczak, A.; Jastrzebska, A.; Olejnik, A.; Jastrzębski, K. Mechanical properties, chemical analysis and evaluation of antimicrobial response of Si-DLC coatings fabricated on AISI 316 LVM substrate by a multi-target DC-RF magnetron sputtering method for potential biomedical applications. Appl. Surf. Sci. 2017, 417, 23–33.

- Bobzin, K.; Bagcivan, N.; Theiß, S.; Weiß, R.; Depner, U.; Troßmann, T.; Ellermeier, J.; Oechsner, M. Behavior of DLC coated low-alloy steel under tribological and corrosive load: Effect of top layer and interlayer variation. Surf. Coat. Technol. 2013, 215, 110–118.

- Hetzner, H.; Schmid, C.; Tremmel, S.; Durst, K.; Wartzack, S. Empirical-Statistical Study on the Relationship between Deposition Parameters, Process Variables, Deposition Rate and Mechanical Properties of a-C:H:W Coatings. Coatings 2014, 4, 772–795.

- Kretzer, J.P.; Jakubowitz, E.; Reinders, J.; Lietz, E.; Moradi, B.; Hofmann, K.; Sonntag, R. Wear analysis of unicondylar mobile bearing and fixed bearing knee systems: A knee simulator study. Acta Biomater. 2011, 7, 710–715.

- Polster, V.; Fischer, S.; Steffens, J.; Morlock, M.M.; Kaddick, C. Experimental validation of the abrasive wear stage of the gross taper failure mechanism in total hip arthroplasty. Med. Eng. Phys. 2021, 95, 25–29.

- Ruggiero, A.; Zhang, H. Editorial: Biotribology and Biotribocorrosion Properties of Implantable Biomaterials. Front. Mech. Eng. 2020, 6, 17.

- Rufaqua, R.; Vrbka, M.; Choudhury, D.; Hemzal, D.; Křupka, I.; Hartl, M. A systematic review on correlation between biochemical and mechanical processes of lubricant film formation in joint replacement of the last 10 years. Lubr. Sci. 2019, 31, 85–101.

- Rothammer, B.; Marian, M.; Rummel, F.; Schroeder, S.; Uhler, M.; Kretzer, J.P.; Tremmel, S.; Wartzack, S. Rheological behavior of an artificial synovial fluid—Influence of temperature, shear rate and pressure. J. Mech. Behav. Biomed. Mater. 2021, 115, 104278.

- Nečas, D.; Vrbka, M.; Marian, M.; Rothammer, B.; Tremmel, S.; Wartzack, S.; Galandáková, A.; Gallo, J.; Wimmer, M.A.; Křupka, I.; et al. Towards the understanding of lubrication mechanisms in total knee replacements—Part I: Experimental investigations. Tribol. Int. 2021, 156, 106874.

- Gao, L.; Hua, Z.; Hewson, R.; Andersen, M.S.; Jin, Z. Elastohydrodynamic lubrication and wear modelling of the knee joint replacements with surface topography. Biosurface Biotribol. 2018, 4, 18–23.

- Rothammer, B.; Marian, M.; Neusser, K.; Bartz, M.; Böhm, T.; Krauß, S.; Schroeder, S.; Uhler, M.; Thiele, S.; Merle, B.; et al. Amorphous Carbon Coatings for Total Knee Replacements—Part II: Tribological Behavior. Polymers 2021, 13, 1880.

- Ruggiero, A.; Sicilia, A. A Mixed Elasto-Hydrodynamic Lubrication Model for Wear Calculation in Artificial Hip Joints. Lubricants 2020, 8, 72.

- Rosenkranz, A.; Marian, M.; Profito, F.J.; Aragon, N.; Shah, R. The Use of Artificial Intelligence in Tribology—A Perspective. Lubricants 2020, 9, 2.

- Witten, I.H.; Frank, E.; Hall, M.; Pal, C. Data Mining: Practical Machine Learning Tools and Techniques, 4th ed.; Morgan Kaufmann: Burlington, MA, USA, 2017.

- Fontaine, J.; Donnet, C.; Erdemir, A. Fundamentals of the Tribology of DLC Coatings. In Tribology of Diamond-Like Carbon Films; Springer: Boston, MA, USA, 2020; pp. 139–154.

- Leyland, A.; Matthews, A. On the significance of the H/E ratio in wear control: A nanocomposite coating approach to optimised tribological behaviour. Wear 2000, 246, 1–11.

- ISO 5834-2:2019; Implants for Surgery—Ultra-High-Molecular-Weight Polyethylene—Part 2: Moulded Forms. ISO: Geneva, Switzerland, 2019.

- Rothammer, B.; Neusser, K.; Marian, M.; Bartz, M.; Krauß, S.; Böhm, T.; Thiele, S.; Merle, B.; Detsch, R.; Wartzack, S. Amorphous Carbon Coatings for Total Knee Replacements—Part I: Deposition, Cytocompatibility, Chemical and Mechanical Properties. Polymers 2021, 13, 1952.

- Oliver, W.C.; Pharr, G.M. An improved technique for determining hardness and elastic modulus using load and displacement sensing indentation experiments. J. Mater. Res. 1992, 7, 1564–1583.

- Oliver, W.C.; Pharr, G.M. Measurement of hardness and elastic modulus by instrumented indentation: Advances in understanding and refinements to methodology. J. Mater. Res. 2004, 19, 3–20.

- DIN EN ISO 14577-1:2015-11; Metallic Materials—Instrumented Indentation Test for Hardness and Materials Parameters—Part 1: Test Method. DIN: Berlin, Germany, 2015.

- DIN EN ISO 14577-4:2017-04; Metallic Materials—Instrumented Indentation Test for Hardness and Materials Parameters—Part 4: Test Method for Metallic and Non-Metallic Coatings. DIN: Berlin, Germany, 2017.

- Jiang, X.; Reichelt, K.; Stritzker, B. The hardness and Young’s modulus of amorphous hydrogenated carbon and silicon films measured with an ultralow load indenter. J. Appl. Phys. 1989, 66, 5805–5808.

- Cho, S.-J.; Lee, K.-R.; Eun, K.Y.; Hahn, J.H.; Ko, D.-H. Determination of elastic modulus and Poisson’s ratio of diamond-like carbon films. Thin Solid Films 1999, 341, 207–210.

- Müller, A.C.; Guido, S. Introduction to Machine Learning with Python: A Guide for Data Scientists; O’Reilly: Beijing, China, 2017; ISBN 9781449369880.

- Williams, C.K.; Rasmussen, C.E. Gaussian Processes for Machine Learning; Adaptive Computation and Machine Learning; MIT Press: Cambridge, MA, USA, 2003; Volume 2, p. 4. ISBN 026218253X.

- Ostertagová, E. Modelling using Polynomial Regression. Procedia Eng. 2012, 48, 500–506.

- Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Stat. Comput. 2004, 14, 199–222.

- Hinton, G.E. 20—Connectionist Learning Procedures. In Machine Learning: An Artificial Intelligence Approach; Elsevier: Amsterdam, The Netherlands, 1990; Volume III.

- Ebden, M. Gaussian Processes: A Quick Introduction. arXiv 2015, arXiv:1505.02965. Available online: https://arxiv.org/abs/1505.02965 (accessed on 1 February 2022).

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. JMLR 2011, 12, 2825–2830.

- Weikert, T.; Wartzack, S.; Baloglu, M.V.; Willner, K.; Gabel, S.; Merle, B.; Pineda, F.; Walczak, M.; Marian, M.; Rosenkranz, A.; et al. Evaluation of the surface fatigue behavior of amorphous carbon coatings through cyclic nanoindentation. Surf. Coat. Technol. 2021, 407, 126769.

- Rothammer, B.; Weikert, T.; Tremmel, S.; Wartzack, S. Tribologisches Verhalten amorpher Kohlenstoffschichten auf Metallen für die Knie-Totalendoprothetik. Tribol. Schmierungstech. 2019, 66, 15–24.

- Kügler, P.; Marian, M.; Schleich, B.; Tremmel, S.; Wartzack, S. tribAIn—Towards an Explicit Specification of Shared Tribological Understanding. Appl. Sci. 2020, 10, 4421.

- McKinney, W. Data structures for statistical computing in Python. In Proceedings of the 9th Python in Science Conference (SCIPY 2010), Austin, TX, USA, 28 June–3 July 2010; pp. 51–56.

- Most, T.; Will, J. Metamodel of Optimal Prognosis—An automatic approach for variable reduction and optimal metamodel selection. Proc. Weimar. Optim. Stochastiktage 2008, 5, 20–21.

- Poliakov, V.P.; Siqueira, C.J.d.M.; Veiga, W.; Hümmelgen, I.A.; Lepienski, C.M.; Kirpilenko, G.G.; Dechandt, S.T. Physical and tribological properties of hard amorphous DLC films deposited on different substrates. Diam. Relat. Mater. 2004, 13, 1511–1515.

- Martínez-Nogués, V.; Medel, F.J.; Mariscal, M.D.; Endrino, J.L.; Krzanowski, J.; Yubero, F.; Puértolas, J.A. Tribological performance of DLC coatings on UHMWPE. J. Phys. Conf. Ser. 2010, 252, 012006.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).