Abstract

Background: Wrong blood in tube (WBIT) is a critical pre-analytical error in laboratory medicine in which a blood sample is mislabeled with the wrong patient identity. These errors are often undetected due to the limitations of current detection strategies (e.g., delta checks). Methods: We evaluated Random Forest models for WBIT detection and conducted a detailed analyte importance analysis. In total, 799,721 samples from a German tertiary care center were analyzed and filtered for applicability. Model input features were derived by pairing consecutive same-patient samples for non-WBIT cases, simulating WBIT by pairing samples from different patients, and computing per-analyte first-order differences for each pair. We exhaustively searched all subsets of nine CBC analytes and evaluated models using F1 score, AUC, sensitivity, and PPV. Analyte importance was assessed via SHAP, permutation, and impurity decrease. Results: Models using as few as three analytes (MCV, RDW, MCH) reached F1 scores above 90%, with performance plateauing beyond six analytes. MCV and RDW were consistently top-ranked. Two-dimensional and three-dimensional visualizations revealed interpretable decision boundaries. Conclusions: Findings demonstrate that robust WBIT detection is achievable using a minimal subset of CBC analytes, offering a practical, interpretable, and broadly generalizable ML-based solution suitable for diverse clinical environments.

1. Introduction

Laboratory diagnostics plays a pivotal role in nearly all medical treatments, providing critical data that informs clinical decisions and patient management [1]. However, the reliability of these diagnostics can be compromised by pre-analytical errors—among the most serious of which is the wrong blood in tube (WBIT) error, where the blood in a sample tube does not originate from the patient whose name is printed on the label. This type of error can have serious clinical consequences, including misdiagnosis and inappropriate treatment. WBIT errors are estimated to occur in approximately 1 out of every 1000 samples, although this figure is likely an underestimation due to substantial under-reporting [2,3,4].

Several strategies have been implemented in clinical practice to address the issue of WBIT errors. Pre-analytical approaches, such as staff training and manual cross-checks, are designed to prevent such errors from occurring. In contrast, post-analytical measures, including automated delta checks and both technical and medical validation, aim to detect WBIT cases before they result in clinical harm.

However, the effectiveness of these methods in reducing or detecting WBIT errors has been shown to be limited [2]. The identification is challenging because of the high number of samples to be validated by laboratory personnel. Therefore, such errors often remain undetected or are identified only after a delay—potentially leading to serious patient harm [5].

Contemporary electronic solutions for the prevention and detection of WBIT, such as the implementation of two-dimensional barcode identification systems [6] and RFID scanning technologies [7], have proven effective. However, their widespread adoption is limited by the substantial costs and time requirements associated with implementation and ongoing maintenance [2].

Recently, data-driven artificial intelligence (AI) approaches, particularly machine learning (ML) models, have been increasingly applied across various medical domains [8,9,10]. In laboratory medicine, where large volumes of data are generated and managed through Laboratory Information Systems (LISs), ML models offer the potential to identify patterns in patients’ blood test results [11]. These patterns can help alert medical professionals when new results deviate significantly from what would be expected, as shown in studies such as [12,13]. For example, when two samples from the same patient show unusually large or inconsistent differences, it may indicate a pre-analytical error such as a WBIT event.

ML-based WBIT detection systems have been developed in several recent studies and have demonstrated superior performance compared to single-analyte delta checks [14] and manual result review [15,16]. Although ML-based techniques developed to date have achieved impressive performance in detecting WBIT errors, a comprehensive feature analysis that investigates the impact of individual analytes on model performance has not yet been conducted. Such analysis is crucial because the availability of measured parameters in patient samples can vary significantly both within and across clinical institutions. Without this level of insight, the interpretability of the trained models remains limited, and the mechanisms enabling their accurate predictions are not well understood. This lack of transparency reflects a broader challenge associated with applying ML methods in medical contexts, where explainability is often as important as accuracy.

In this study, a comprehensive feature analysis was conducted to evaluate ML-based WBIT detection using classifier models trained on a dataset obtained from a German tertiary care center. The models were trained exclusively on complete blood count (CBC) data, as these are widely used in clinical practice. Exhaustive search was performed to identify the most effective analyte combinations for different subgroup sizes. In this approach, analyte combinations were systematically ranked based on the classification performance of the models trained with them.

2. Materials and Methods

2.1. Python and Libraries

All scripts were implemented with Python 3.9. The library scikit-learn 1.2 [17] was used for the training and evaluation of the machine learning models, matplotlib 3.6.3 for the visualization, pandas 1.5.2 for the data manipulation, and mlxtend 0.23.1 for the exhaustive feature selection.

2.2. Dataset

The clinical dataset used in this study was collected from a German tertiary care center, Klinikum Lippe Detmold (KLD), and includes 799,721 anonymized blood samples from 154,998 patients over a period of 1585 days. This study was approved by the Ethics Commission of the University of Münster under the reference number 2024-064-f-S. Different combinations of analytes were determined in the laboratory for the samples, according to the parameters that were ordered in the clinic. For this study, the analysis was limited to the analytes included in the complete blood count (CBC) panel (without differential blood count), as this is the most common panel in clinical practice.

The CBC was performed using a flow cytometry-based hematology analyzer (Advia 2120i, Siemens Healthineers, Erlangen, Germany), which reported the following nine analytes: red blood cells (ERY), hemoglobin (HB), hematocrit (HK), mean corpuscular hemoglobin (MCH), mean corpuscular volume (MCV), mean corpuscular hemoglobin concentration (MCHC), red blood cell distribution width (RDW), platelets (PLT), and white blood cells (LEUKO). Of these, ERY, LEUKO, PLT, MCV, RDW, and HB were measured directly. HK, MCH, and MCHC were calculated by the analyzer based on the following formulas (up to unit conversion factors): $\mathrm{HK} = \mathrm{ERY} \times \mathrm{MCV}$, $\mathrm{MCH} = \mathrm{HB} / \mathrm{ERY}$, $\mathrm{MCHC} = \mathrm{HB} / \mathrm{HK}$.

The baseline characteristics of the dataset after filtering are summarized in Table 1, including means, standard deviations, and value ranges for all analytes. An overview of sample distribution by patient sex, sampling frequency, and inter-sample intervals is provided in Table 2.

Table 1.

Baseline characteristics of the filtered dataset.

Table 2.

Baseline counts of the filtered dataset.

2.3. Filtering

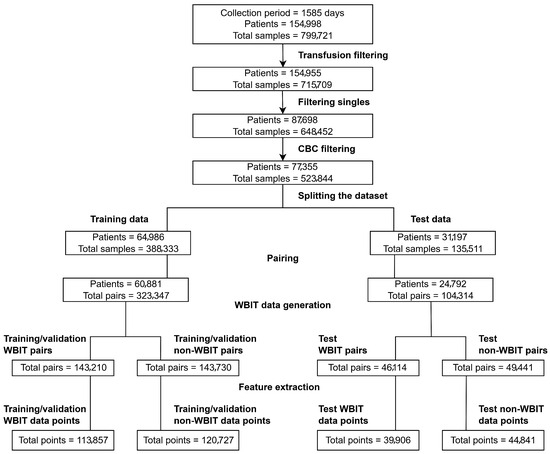

The dataset was filtered in the following steps:

- Transfusion filtering: Samples from patients who had received a blood transfusion were excluded if they were collected within 90 days following the transfusion.

- Filtering singles: Patients with only one sample in the dataset were removed.

- Complete blood count (CBC) filtering: Samples that did not contain all nine CBC analytes measured by the laboratory at KLD were excluded.

These steps, along with the corresponding sample and patient counts after each filtering stage, are visualized in Figure 1.
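The three filtering steps can be sketched in plain Python as follows. This is a minimal illustration, not the study's actual pipeline: the record layout (`patient`, `time`, `values`), the `transfusions` lookup, and the function name `filter_samples` are assumptions made for the example.

```python
from collections import Counter
from datetime import datetime, timedelta

CBC_ANALYTES = ["ERY", "HB", "HK", "MCH", "MCV", "MCHC", "RDW", "PLT", "LEUKO"]

def filter_samples(samples, transfusions, window_days=90):
    """Apply the three filters in the order given in the text.

    samples: list of dicts with keys 'patient', 'time', 'values' (hypothetical layout).
    transfusions: dict mapping patient id -> list of transfusion datetimes (hypothetical).
    """
    window = timedelta(days=window_days)

    def after_transfusion(sample):
        times = transfusions.get(sample["patient"], [])
        return any(t <= sample["time"] <= t + window for t in times)

    # Step 1: drop samples collected within 90 days after a transfusion.
    kept = [s for s in samples if not after_transfusion(s)]
    # Step 2: drop patients that have only one sample left.
    counts = Counter(s["patient"] for s in kept)
    kept = [s for s in kept if counts[s["patient"]] > 1]
    # Step 3: drop samples that lack any of the nine CBC analytes.
    return [s for s in kept if all(a in s["values"] for a in CBC_ANALYTES)]

full = {a: 1.0 for a in CBC_ANALYTES}
t0 = datetime(2020, 1, 1)  # hypothetical date
samples = [
    {"patient": "A", "time": t0, "values": full},
    {"patient": "A", "time": t0 + timedelta(days=2), "values": full},
    {"patient": "B", "time": t0, "values": full},                        # single sample
    {"patient": "C", "time": t0 + timedelta(days=10), "values": full},   # post-transfusion
    {"patient": "C", "time": t0 + timedelta(days=200), "values": full},
    {"patient": "C", "time": t0 + timedelta(days=201), "values": {"HB": 1.0}},  # incomplete
]
filtered = filter_samples(samples, transfusions={"C": [t0]})
print([s["patient"] for s in filtered])
```

Note that a patient can be left with a single sample after the last step (patient C here); such samples simply form no pair later on.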

Figure 1.

Overview of the data preprocessing workflow.

2.4. Splitting the Dataset

The dataset was split such that samples from the first 1269 days were assigned to the training/validation set, and the remaining 316 days (approximately 20% of the total duration) were assigned to the test set. While the train–test split respected temporal order to prevent information leakage, patient-level separation was not enforced, and some patients may appear in both sets. Although this could introduce a mild overestimation of performance, it also reflects a realistic deployment setting where models are periodically retrained and encounter both new and previously seen patients. Future work may additionally assess performance under patient-disjoint conditions to quantify this effect more precisely. All preprocessing steps following the split were performed independently for the training/validation and test sets.
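A minimal sketch of such a temporal split is shown below. The record layout and the function name `temporal_split` are assumptions for illustration. The example also shows how the set of patients occurring on both sides of the cutoff could be inspected.

```python
from datetime import datetime, timedelta

def temporal_split(samples, train_days=1269):
    """Assign samples from the first `train_days` days to training/validation,
    and all later samples to the test set."""
    start = min(s["time"] for s in samples)
    cutoff = start + timedelta(days=train_days)
    train = [s for s in samples if s["time"] < cutoff]
    test = [s for s in samples if s["time"] >= cutoff]
    return train, test

t0 = datetime(2019, 1, 1)  # hypothetical start of the collection period
samples = [
    {"patient": "A", "time": t0},
    {"patient": "A", "time": t0 + timedelta(days=1268)},
    {"patient": "A", "time": t0 + timedelta(days=1269)},
    {"patient": "B", "time": t0 + timedelta(days=1584)},
]
train, test = temporal_split(samples)

# Patients present on both sides of the cutoff (no patient-level separation).
shared = {s["patient"] for s in train} & {s["patient"] for s in test}
print(len(train), len(test), shared)
```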

2.5. Pairing

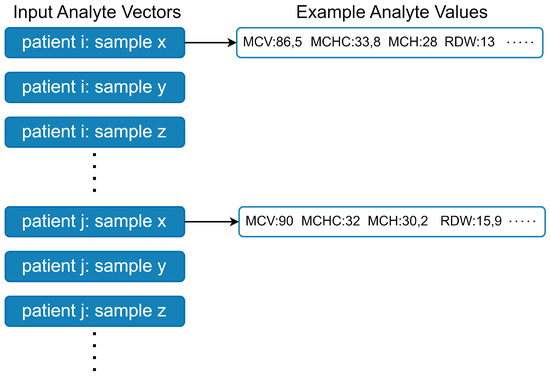

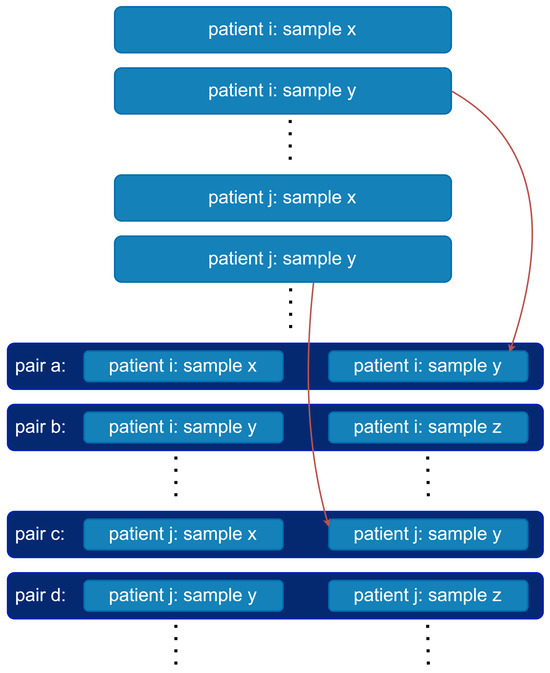

The dataset contains multiple analytes measured for each blood sample, which together form analyte vectors (see Figure A1). A common approach in ML-based WBIT detection is to train binary classifiers, where a given analyte vector is classified into one of two categories: WBIT or non-WBIT. While class labels for training could theoretically be generated by swapping portions of analyte vectors from different patients and labeling them as WBIT, such synthetic labels do not reflect meaningful patterns that the model can learn, as there is no inherent relationship between the features and the assigned classes. To address this, it is standard practice in the literature to use analyte vector pairs (see Figure A2) as input. A pair is labeled as WBIT if it consists of vectors from samples belonging to two different patients, and as non-WBIT if both samples originate from the same patient. This setup enables the model to learn distinguishing patterns between pairs that represent the same individual and those that do not.

2.6. Data Quality

It is likely that there are some naturally occurring WBIT errors in our dataset that were not recognized in the clinical setting. A future study is planned to address the identification and removal of such cases using outlier elimination techniques, such as those described in [18]. We also intend to evaluate an iterative approach in which a trained model is used to filter out potential WBIT cases, followed by retraining the model on the refined dataset. For the purposes of this study, we assumed that the training data did not contain any undetected WBIT errors.

2.7. Non-WBIT Pair Generation

Non-WBIT pairs were not formed at random. Instead, we paired consecutively drawn samples from each patient (see Figure A2). This approach offers two key advantages: (1) it minimizes variation within non-WBIT pairs by reducing time-dependent biological drift between samples; and (2) it supports practical deployment, as it enables straightforward construction of prediction inputs by pairing each new sample with the most recent previous sample from the same patient. Consecutive samples separated by more than 30 days were excluded from pairing.
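The pairing rule can be illustrated with a short sketch. The record layout and the helper name `consecutive_pairs` are hypothetical. Only the rule itself (consecutive samples of the same patient, at most 30 days apart) is taken from the text.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def consecutive_pairs(samples, max_gap_days=30):
    """Pair each sample with the previous sample of the same patient,
    skipping pairs whose samples are more than `max_gap_days` apart."""
    by_patient = defaultdict(list)
    for s in samples:
        by_patient[s["patient"]].append(s)

    pairs = []
    for patient_samples in by_patient.values():
        patient_samples.sort(key=lambda s: s["time"])
        for prev, curr in zip(patient_samples, patient_samples[1:]):
            if curr["time"] - prev["time"] <= timedelta(days=max_gap_days):
                pairs.append((prev, curr))
    return pairs

t0 = datetime(2020, 1, 1)  # hypothetical date
samples = [
    {"patient": "A", "time": t0},
    {"patient": "A", "time": t0 + timedelta(days=5)},
    {"patient": "A", "time": t0 + timedelta(days=50)},  # 45 days after the previous sample
    {"patient": "B", "time": t0},
    {"patient": "B", "time": t0 + timedelta(days=1)},
]
pairs = consecutive_pairs(samples)
print([(p["patient"], (c["time"] - p["time"]).days) for p, c in pairs])
```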

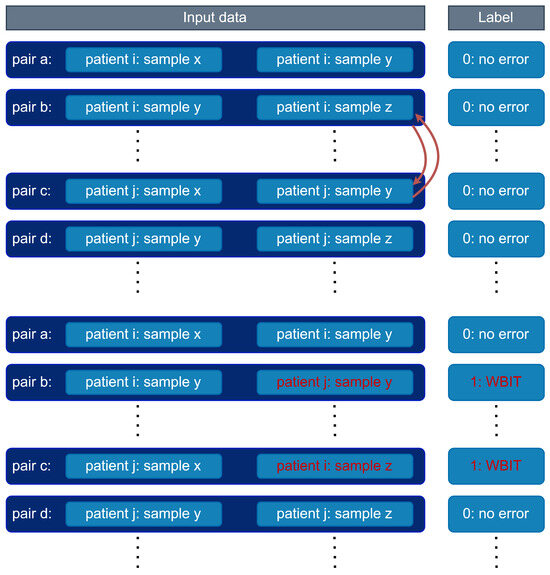

2.8. WBIT Pair Generation

We simulated WBIT data using the following steps:

- Temporal segmentation: The dataset was divided into 24-h intervals based on sample collection timestamps.

- Ward-based grouping: Within each 24-h interval, analyte vector pairs were grouped based on the ward associated with the second vector (i.e., the more recent sample in the pair).

- Pair swapping to simulate WBIT: For each (day × ward) group, we randomly selected pairs of analyte vector pairs, each from different patients. In each selected pair, the second analyte vectors were swapped to simulate a WBIT error (see Figure A3).

- This process was repeated until approximately 50% of the pairs in each (day × ward) group were converted to WBIT-labeled cases.

- Groups with fewer than two distinct patients were excluded from the simulation process. Additionally, slight class imbalance may occur as (day × ward) groups with an odd number of eligible pairs retain one unmatched non-WBIT pair, which is not discarded.

The data preparation steps including preprocessing and WBIT generation are visualized in Figure 1.
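The swapping procedure can be sketched as follows. This is an illustrative reading of the steps above, not the original implementation: the pair layout, the function name `simulate_wbit`, and in particular the stopping rule (swap until at least half of the pairs in a group, rounded down, are converted) are assumptions.

```python
import random
from collections import defaultdict

def simulate_wbit(pairs, seed=0):
    """pairs: dicts with 'patient', 'day', 'ward', 'first', 'second' (hypothetical layout).
    Returns (first, second, label) tuples, where label 1 marks a simulated WBIT."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for p in pairs:
        groups[(p["day"], p["ward"])].append(p)  # day and ward of the second sample

    out = []
    for group in groups.values():
        if len({p["patient"] for p in group}) < 2:
            continue  # groups with fewer than two distinct patients are excluded
        pool = list(group)
        rng.shuffle(pool)
        target = len(group) // 2  # assumed stopping rule: about half of the pairs
        converted, unmatched = 0, []
        while converted < target and len(pool) >= 2:
            a = pool.pop()
            j = next((i for i, b in enumerate(pool) if b["patient"] != a["patient"]), None)
            if j is None:
                unmatched.append(a)  # no partner from a different patient is left
                continue
            b = pool.pop(j)
            # Swap the second (more recent) vectors of the two pairs.
            out.append((a["first"], b["second"], 1))
            out.append((b["first"], a["second"], 1))
            converted += 2
        out.extend((p["first"], p["second"], 0) for p in pool + unmatched)
    return out

# Toy vectors are (patient, value) tuples so that swaps are easy to verify.
pairs = [
    {"patient": pid, "day": 1, "ward": "W1", "first": (pid, 1.0), "second": (pid, 2.0)}
    for pid in ["A", "B", "C", "D"]
]
pairs.append({"patient": "E", "day": 1, "ward": "W2", "first": ("E", 1.0), "second": ("E", 2.0)})
labeled = simulate_wbit(pairs)
print(sum(label for _, _, label in labeled), "WBIT pairs out of", len(labeled))
```

In this toy example the single-patient group on ward W2 is dropped, and two of the four pairs on ward W1 are converted to WBIT.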

2.9. Feature Generation

In addition to the analyte vector pairs described in Section 2.5, input data points are often further processed or extended with additional features in the literature. Several approaches have been proposed: ref. [19] used consecutive analyte vectors formatted not as pairs but as quadruples, as illustrated in Figure A2; ref. [15] incorporated patient metadata such as age and sex; ref. [14] included absolute changes and velocity; ref. [20] combined absolute and relative changes; and ref. [21] further integrated the time difference between the timestamps of the two samples in each pair.

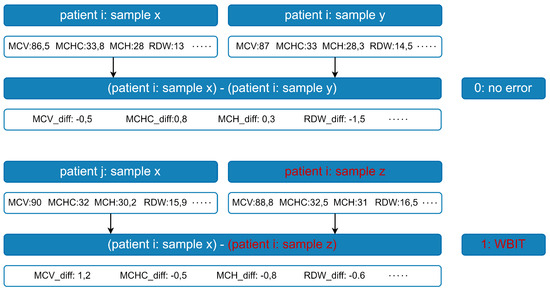

Since the focus of this study was exclusively on analyte values rather than extensive feature engineering, a simple and robust method was preferred. Specifically, first-order differences were computed between the values of the two analyte vectors in each pair: the values of the first vector were subtracted element-wise from those of the second to obtain the feature set (see Figure A4). This transformation was applied to all analyte vector pairs for each patient, resulting in 9-dimensional feature vectors per pair.
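As a small illustration of this transformation, the sketch below computes the nine first-order differences for one pair. The dictionary layout and the function name `difference_features` are assumptions, and the analyte values are toy numbers rather than real measurements.

```python
CBC_ANALYTES = ["ERY", "HB", "HK", "MCH", "MCV", "MCHC", "RDW", "PLT", "LEUKO"]

def difference_features(first, second, analytes=CBC_ANALYTES):
    """Element-wise difference: second (more recent) vector minus first vector."""
    return [second[a] - first[a] for a in analytes]

# Toy values only; chosen so that every difference is exactly 0.5.
first = {a: float(i) for i, a in enumerate(CBC_ANALYTES)}
second = {a: float(i) + 0.5 for i, a in enumerate(CBC_ANALYTES)}

features = difference_features(first, second)
print(len(features), features)
```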

2.10. Model Training

Random Forest classifiers (RFCs), introduced in [22], are widely used across diverse domains and have shown strong performance in WBIT detection tasks [15,16]. In preliminary experiments, we also evaluated logistic regression, support vector machines (SVMs), and dense feedforward neural networks. These models were more sensitive to hyperparameter settings and, despite tuning efforts, yielded inferior performance and/or lower robustness compared to RFCs. Given their efficient training, relatively low memory usage, minimal hyperparameter complexity, and high interpretability, Random Forests were selected for this study. Throughout the remainder of this paper, the term model refers specifically to Random Forest classifiers.

All models were implemented using the scikit-learn library. Each Random Forest classifier was trained with class-balanced weights to account for label imbalance. All other hyperparameters were left at their default values.
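A minimal sketch of this configuration in scikit-learn is given below. The synthetic data (tightly clustered non-WBIT differences, more dispersed WBIT differences) and the fixed `random_state` are assumptions added for a reproducible illustration. Only `class_weight="balanced"` reflects the setup described above.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Synthetic stand-in for 9-dimensional difference features (not study data).
X_neg = rng.normal(0.0, 1.0, size=(500, 9))  # label 0: non-WBIT
X_pos = rng.normal(0.0, 5.0, size=(500, 9))  # label 1: WBIT
X = np.vstack([X_neg, X_pos])
y = np.array([0] * 500 + [1] * 500)

# Class-balanced weights; all other hyperparameters stay at their defaults.
model = RandomForestClassifier(class_weight="balanced", random_state=0)
model.fit(X, y)

# Probability of the WBIT class for each pair.
wbit_probability = model.predict_proba(X)[:, 1]
print(wbit_probability[:3], wbit_probability[-3:])
```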

3. Results

3.1. Analyte Importance Analysis

In order to explore the full range of predictive performance, an exhaustive feature search was conducted. For each analyte set size n, all possible combinations of n analytes were used to train models, and their performance was compared.

For each evaluated feature combination, 5-fold cross-validation was performed using the training/validation set (see Figure 1), and average scores were computed across the folds. Model performance was assessed and ranked using the F1 score, a widely adopted metric in binary classification tasks that represents the harmonic mean of positive predictive value (PPV) and sensitivity.
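The search can be sketched with `itertools.combinations` and scikit-learn's `cross_val_score`. This is an illustrative stand-in, not the mlxtend-based implementation used in the study. The synthetic data (in which only the feature labeled MCV is informative), the helper name `exhaustive_search`, and the reduced `n_estimators=20` (chosen to keep the example fast) are assumptions.

```python
from itertools import combinations

import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

def exhaustive_search(X, y, names, n, cv=5):
    """Rank all feature subsets of size n by mean cross-validated F1 score."""
    results = []
    for idx in combinations(range(len(names)), n):
        model = RandomForestClassifier(
            n_estimators=20, class_weight="balanced", random_state=0
        )
        scores = cross_val_score(model, X[:, list(idx)], y, cv=cv, scoring="f1")
        results.append((scores.mean(), tuple(names[i] for i in idx)))
    return sorted(results, reverse=True)

# Synthetic data: four features, of which only the first carries class information.
rng = np.random.default_rng(0)
names = ["MCV", "RDW", "PLT", "LEUKO"]
y = np.repeat([0, 1], 150)
X = rng.normal(size=(300, 4))
X[:, 0] += 3.0 * y

ranking = exhaustive_search(X, y, names, n=2)
for score, combo in ranking:
    print(f"{combo}: mean F1 = {score:.3f}")
```

With nine analytes, covering every subset size requires evaluating 2^9 − 1 = 511 combinations, each with five cross-validation fits.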

While exhaustive search is effective for identifying high-performing analyte combinations, its application becomes impractical for larger analyte sets. In such cases, a more scalable approach is to perform an analyte importance analysis using dedicated metrics. These metrics offer complementary insights into the role of each analyte, including its relative impact on model performance, the magnitude of that impact, and the conditions under which the analyte proves most beneficial.

Accordingly, we applied feature importance analysis to the best-performing models identified through exhaustive search for each n. Analytes were ranked based on their contribution to the classification decisions across all samples. Three different importance metrics were used:

SHapley Additive exPlanations (SHAP) [23] is a method based on game theory that assigns each “player” (analyte) a fair “payout” proportional to its contribution to the final prediction. In the context of tree-based models such as RFCs, the Tree SHAP algorithm [24] is used to efficiently compute SHAP values by accumulating the marginal contribution of each feature along the path from the root to the leaf during classification.

Permutation Importance (PI) [25] estimates the influence of each feature by measuring the decrease in model performance when the values of that feature are randomly shuffled while keeping all others fixed. A greater drop in performance indicates higher importance of the shuffled feature.

Mean Decrease in Impurity (MDI) [22] is a tree-specific metric that quantifies feature importance based on how much a given feature contributes to reducing impurity in the decision tree. Impurity reflects how mixed the class labels are within a node; features that lead to greater impurity reduction during splits are considered more important. MDI is computed by summing the impurity reductions across all splits involving the feature and averaging the result.

Some analytes perform well individually, while others exhibit synergistic effects when used in combination. MDI and SHAP primarily capture the individual importance of features, whereas PI can better reflect the utility of features in interaction with others. For this reason, all three metrics were used to obtain a more comprehensive and reliable assessment of analyte importance.
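Two of the three metrics are available directly in scikit-learn and can be sketched as below. SHAP values are omitted because they require a separate library. The synthetic data, the feature labels, and the train/validation split are assumptions for illustration only.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Synthetic data: only the first feature (labeled MCV here) is informative.
rng = np.random.default_rng(0)
names = ["MCV", "RDW", "PLT"]
y = np.repeat([0, 1], 200)
X = rng.normal(size=(400, 3))
X[:, 0] += 3.0 * y

X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)
model = RandomForestClassifier(class_weight="balanced", random_state=0)
model.fit(X_train, y_train)

# MDI: impurity-based importances accumulated during training (normalized to sum to 1).
mdi = model.feature_importances_

# PI: drop in F1 on held-out data when one feature column is shuffled.
pi = permutation_importance(
    model, X_val, y_val, scoring="f1", n_repeats=10, random_state=0
)

for name, m, p in zip(names, mdi, pi.importances_mean):
    print(f"{name}: MDI = {m:.3f}, PI = {p:.3f}")
```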

Accuracy, positive predictive value (PPV), sensitivity, area under the receiver operating characteristic curve (ROC AUC), and F1 scores of the top five performing models for each analyte set size (n) are reported in Table 3.

Table 3.

Exhaustive feature selection. For each analyte set size n, models are trained and evaluated using every possible combination of analytes. Scores for the top 5 combinations for each n, sorted with respect to accuracy, are shown.

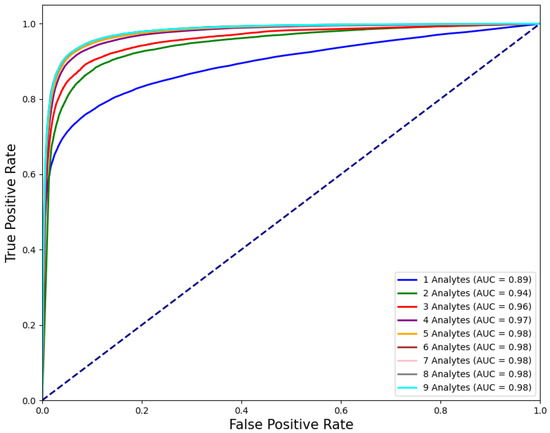

The best performing models for each n were further evaluated on the test set (see Figure 1) as follows. Receiver operating characteristic (ROC) curves are shown in Figure 2.

Figure 2.

ROC curves of the models that are trained on analyte combinations that yield the best performance for each n.

F1 scores, ROC AUC values, and their bootstrap confidence intervals (bsCIs), along with PPV at sensitivities of 0.8 and 0.5 under an assumed WBIT error rate of 0.005 (reflecting a realistic WBIT rate in the test set), are summarized in Table 4. The corresponding metrics are reported using the abbreviations PPV@S0.8E0.005 and PPV@S0.5E0.005, respectively. Feature importance metrics are presented in Table 5. For the best models trained using two and three analytes, the distribution of the data points and the associated class predictions are visualized in Figure 3 and Figure 4, respectively.
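One common way to obtain a PPV for an assumed error rate is to rescale the confusion-matrix rates by the prevalence, i.e., PPV = Se·p / (Se·p + (1 − Sp)·(1 − p)). The sketch below assumes this formulation. Whether the study computed PPV@S0.8E0.005 in exactly this way is not stated, and the specificity value used in the example is hypothetical.

```python
def ppv_at(sensitivity, specificity, prevalence):
    """PPV for a given operating point and an assumed WBIT error rate (prevalence)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)

# Operating point at sensitivity 0.8; the specificity of 0.999 is a hypothetical
# value that would be read off the ROC curve at that sensitivity.
ppv = ppv_at(sensitivity=0.8, specificity=0.999, prevalence=0.005)
print(f"PPV@S0.8E0.005 = {ppv:.3f}")
```

Because the assumed prevalence is small, even a false positive rate of 0.1% has a visible effect on PPV, which is why this metric is reported separately from the scores obtained on the class-balanced data.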

Table 4.

Evaluation of the models trained on the analyte combinations that yield the best performance for each n. Positive predictive value (PPV) scores are abbreviated such that PPV@S0.8E0.005 corresponds to PPV at a sensitivity of 0.8 and an assumed WBIT error rate of 0.005. ROC AUC and F1 are given with 95% bootstrap confidence intervals over 1000 iterations.

Table 5.

Analyte importance analysis. For each analyte set size n, the model trained on the analyte combination that yields the best performance according to exhaustive search (see Table 3) is analyzed. Three feature importance metrics are reported (see Section 3.1), with analytes sorted by MDI. Ranges: MDI, SHAP: ≥0; PI: 0–1. Higher values indicate greater importance.

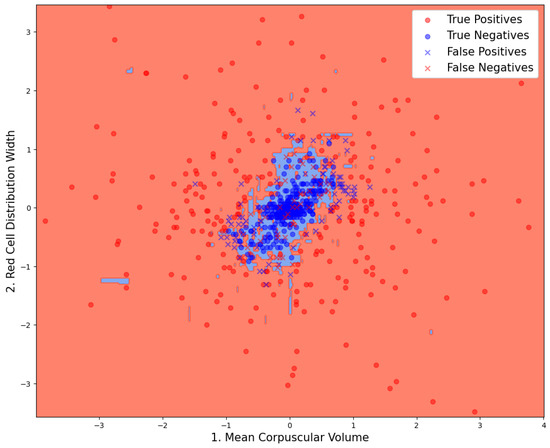

Figure 3.

Data point distribution for the model trained on the combination of analytes that yields the best performance for n = 2. Classification results and decision boundaries of the model are shown.

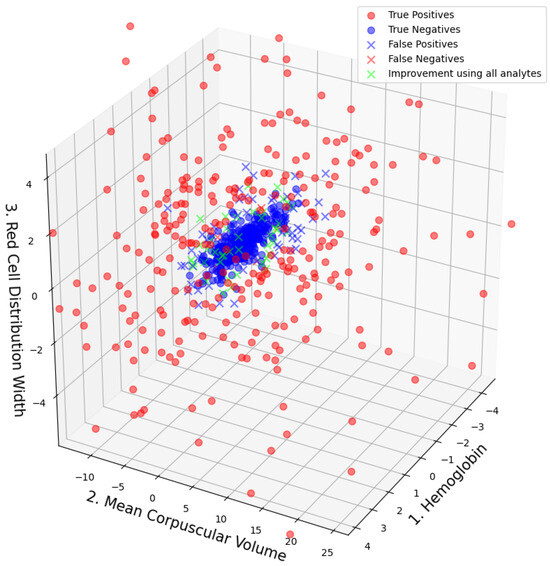

Figure 4.

Data point distribution for the model trained on the combination of analytes that yields the best performance for n = 3. Classification results are shown; misclassified data points that would have been correctly classified if the model had been trained on all available analytes are plotted in green.

3.2. Analyte Importance Rankings

In Table 3, the analytes MCV and RDW consistently appear among the highest ranking combinations across different values of n. MCH is among the top-performing combinations only for , where it typically ranks second or third. Notably, MCV and RDW are the only analytes that appear in the top three across all importance metrics and remain part of the best-performing combinations for every value of n. In Table 5, which is sorted by MDI, a clear drop in both MDI and SHAP values is observed for analytes ranked below MCV, RDW, and MCH for . When sorting by PI, similar drops occur for analytes ranking below MCV and RDW.

3.3. Model Performance Using Smaller Analyte Sets

As shown in Table 3, model performance improves with the number of analytes used, as expected. However, this improvement plateaus beyond (; pairwise statistical comparisons between the top-performing combinations of consecutive analyte set sizes were conducted using a paired t-test on the cross-validation fold scores; accuracy, F1, ROC AUC, PPV and sensitivity) for all evaluated metrics except sensitivity, which continues to show significant gains up to , after which no substantial improvements are observed. Even with only three analytes, the F1 score exceeds 90%, highlighting that strong classification accuracy can be achieved with a minimal set of features. This trend is consistent with the PI values reported in Table 5, where analytes ranked below the top five for have zero importance. This suggests that these lower-ranking analytes contribute to performance only in combination with more influential features.

Performance variation between top-performing analyte combinations (see Table 3) within each fixed set size was statistically significant (; pairwise comparisons between consecutive top-five combinations within each set size were conducted using a paired t-test on cross-validation F1 scores) for smaller sets (e.g., and ), while such differences diminished for . This suggests that performance is more sensitive to analyte selection when fewer features are used, whereas models with larger analyte sets tend to converge to similar performance levels across combinations.
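A paired t-test on per-fold scores can be run with `scipy.stats.ttest_rel`, as in the sketch below. The fold scores are hypothetical numbers chosen for illustration, not results from this study.

```python
from scipy.stats import ttest_rel

# Hypothetical F1 scores of two analyte combinations on the same five CV folds.
f1_combo_a = [0.90, 0.91, 0.89, 0.92, 0.90]
f1_combo_b = [0.93, 0.94, 0.93, 0.95, 0.94]

# The test is paired because both models are scored on identical folds.
result = ttest_rel(f1_combo_a, f1_combo_b)
print(f"t = {result.statistic:.2f}, p = {result.pvalue:.5f}")
```

Pairing by fold removes fold-to-fold variation from the comparison, so small but consistent differences between combinations can still reach significance with only five folds.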

In certain cases, models trained on a relatively smaller number of features can outperform those trained on the full feature set, as they may generalize better and are less prone to overfitting. The results in Table 4 reflect this phenomenon: PPV values peak at , while ROC AUC and F1 scores reach their highest values at n = 7, with a slight decline observed for larger values of n. Additionally, sensitivity is consistently lower than PPV, particularly for , indicating an imbalance in the error rates that favors precision over recall in models with fewer analytes.

3.4. Explanations by Visualizations

In Figure 3 and Figure 4, the true class labels of the data points are distinguished by color: samples belonging to the WBIT class are shown in red, while those from the non-WBIT class are shown in blue. In Figure 3, the decision regions learned by the random forest model for the two classes are visualized as light red and light blue areas. A new data point falling within the blue region would be classified as non-WBIT, and one in the red region as WBIT. Correctly classified data points are marked with circles (“o”), while misclassified points are indicated with crosses (“x”). In Figure 4, green crosses denote misclassified points that would have been correctly classified if the model had been trained on all available analytes (n = 9).

4. Discussion

4.1. Analyte Importance

Recent reports highlight substantial variation in the composition of CBC reports across clinical sites, with the number and types of analytes differing significantly between institutions [26]. In this context, the ability to achieve strong model performance using small, well-chosen subsets of analytes—as demonstrated in Section 3.3—is particularly relevant. This feature reduction enhances generalizability across heterogeneous datasets and enables operational flexibility for deployment in constrained or embedded environments. Moreover, the resulting low-dimensional models improve interpretability, allowing for direct visualization of decision boundaries and model behavior. Finally, identifying compact, high-performing subsets offers scientific insights into which analytes contribute most strongly to WBIT detection—potentially informing future diagnostic heuristics.

The high predictive power of MCV, RDW, and MCH is consistent with findings from previous studies [27] and can be considered biologically plausible. The models rely on differences between consecutive samples, and these analytes are known to have low within-individual biological variation, both relative to the within-individual variation of other analytes and relative to their own between-individual variation [28]. These analytes also stand out with substantially higher feature importance scores compared to others, suggesting that they account for the majority of the predictive information.

As discussed in Section 2.2, HK, MCH, and MCHC are derived analytes and do not offer additional independent information. In theory, models trained exclusively on directly measured analytes should be sufficient for effective prediction. Nevertheless, including derived features can still be beneficial in practice—for instance, by simplifying model learning or acting as proxies for latent patterns that may not be directly captured by the original variables. This appears particularly relevant for MCH, which is calculated from HB and ERY.

It is important to note that the analyte rankings based on importance metrics do not always align with the combinations that yield the best predictive performance. For example, while MCH ranks among the most important features for , it does not appear in the most predictive combinations for (see Table 3). This discrepancy illustrates the limitation of relying solely on feature importance metrics when selecting subsets for model training.

Although exhaustive search is a powerful tool for identifying high-performing analyte combinations, it becomes computationally infeasible for larger values of n due to the combinatorial explosion. In order to maximize predictive performance under resource constraints, a more scalable strategy may be to first use importance metrics or feature selection algorithms like Boruta [29] on a model trained using all analytes to narrow the analyte pool to a smaller set with consistently high scores. Subsequently, exhaustive search can be applied within this reduced set to determine the optimal feature combination for a given n.

4.2. Model Behavior

Inspection of the misclassified data points in Figure 3 and Figure 4 reveals that most false positives are located near the cluster of true negatives. This is expected, as data points from both classes overlap in these regions, increasing the likelihood of classification errors. Although a direct visualization is not possible for n = 9, it can be inferred from Figure 4 that the green data points, which represent samples misclassified by the three-analyte model but correctly classified by the nine-analyte model, are likely located farther from the cluster center in the higher-dimensional space. This spatial separation may allow the model trained on all nine analytes to distinguish such points more effectively. The boundaries between the blue and red decision areas in Figure 3 can be interpreted as complex, multi-analyte delta check rules. Each straight boundary segment corresponds to a single rule associated with the analyte to which it is perpendicular.

The models in this study produce class probabilities for each data point, representing the likelihood of the sample belonging to the WBIT class. A probability threshold (set to 0.5 throughout this study) is then used to assign class labels. This threshold directly influences the size of the decision regions: increasing the threshold reduces the size of the WBIT (red) area, resulting in fewer false positives but more false negatives. The appropriate threshold should be chosen according to the laboratory’s tolerance for these trade-offs. False negatives, on the other hand, are primarily located within the central region of the true negative cluster in Figure 3. These errors appear more difficult to eliminate using only two analytes. It is plausible that, in these WBIT cases, the mismatched patients happened to have very similar blood profiles, making the error more difficult to detect. The consistently lower sensitivity compared to other scores observed in Table 3 can be explained by the underlying data distribution (Figure 3 and Figure 4): the non-WBIT (negative) class forms a tightly clustered group, while the WBIT (positive) class is more dispersed. This asymmetry in spatial density increases the model’s tendency to misclassify WBIT data points, thereby lowering sensitivity.
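The effect of the probability threshold on the two error types can be shown with a small sketch. The probabilities and labels are toy values, and the convention that a probability at or above the threshold is labeled WBIT is an assumption of this example.

```python
def errors_at(probabilities, labels, threshold=0.5):
    """Return (false positives, false negatives) for a given WBIT probability threshold."""
    predictions = [1 if p >= threshold else 0 for p in probabilities]
    false_pos = sum(1 for pred, y in zip(predictions, labels) if pred == 1 and y == 0)
    false_neg = sum(1 for pred, y in zip(predictions, labels) if pred == 0 and y == 1)
    return false_pos, false_neg

# Toy WBIT probabilities with true labels (1 = WBIT, 0 = non-WBIT).
probabilities = [0.10, 0.40, 0.60, 0.70, 0.55, 0.90]
labels = [0, 0, 0, 1, 1, 1]

default_errors = errors_at(probabilities, labels, threshold=0.5)
strict_errors = errors_at(probabilities, labels, threshold=0.65)
print("threshold 0.50:", default_errors)
print("threshold 0.65:", strict_errors)
```

Raising the threshold from 0.5 to 0.65 removes the false positive in this toy example but turns one true WBIT case into a false negative.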

4.3. Limitations and Outlook

4.3.1. Applicability

In clinical practice, the set of analytes measured in each sample varies considerably depending on the laboratory tests ordered. While this study focused exclusively on complete blood count (CBC) data due to its clinical ubiquity and interpretability, prior work has employed a broader range of analyte sets. For example, ref. [19] incorporated both hematology and biochemistry markers, including liver enzymes and electrolytes; ref. [14] used metabolic parameters such as glucose, BUN, and creatinine; ref. [20] worked with standard panels like the basic and comprehensive metabolic panels; and ref. [16] relied on electrolyte–urea–creatinine (EUC) results. These studies demonstrate the feasibility of using diverse analyte sets for WBIT detection.

Our original dataset contained many samples in which only a subset of analytes was measured, reflecting the variability of test orders in routine clinical practice. Restricting the dataset to samples with complete CBC panels substantially reduces the overall dataset size, potentially discarding valuable information. ROC curves in Figure 2, and the ROC AUC and F1 scores in Table 4, show substantial performance gains up to (; pairwise statistical significance between models of adjacent analyte set sizes was assessed using a paired t-test on per-iteration bootstrap estimates of F1 and ROC AUC scores). For larger n values, performance improvements become negligible or inconsistent across metrics, with no further statistically significant gains. This suggests that analytes that do not contribute to performance improvement could be excluded before filtering, thereby increasing the number of usable samples. Conversely, for analytes that do improve performance but are less frequently measured, imputation techniques such as those used in [30] could be employed to reconstruct missing values. This would enable the use of a dataset in the analyte vector format shown in Figure A1 before model training. Alternatively, models that can handle varying input dimensions could be used. For instance, the widely applied, tree-based XGBoost algorithm can adapt well via its sparsity aware splitting mechanism (see [31] Section 3.4). Another candidate is Graph Neural Networks [32], which can naturally model variable-sized and irregularly structured data by representing analytes and their relationships as nodes and edges in a graph. Future research should explore such adaptive modeling approaches to support WBIT detection across a broader range of clinical scenarios, where analyte availability may differ between samples.

The analyte importance analysis framework presented in this paper is not limited to CBC data. These techniques are also promising for identifying informative features in other laboratory panels and should be systematically evaluated in future work to assess their applicability beyond CBC analytes.

4.3.2. Undetected WBIT in Training Data

This study assumed that the training data did not contain undetected WBIT errors. In reality, many WBIT cases may go unnoticed in clinical workflows. Ref. [3] estimated the rate of undetected WBIT errors at 3.17 per 1000 samples, compared to a detected error rate of 1.15 per 1000 samples, resulting in an estimated total error rate of 4.32 per 1000 samples. This poses a challenge for both training and evaluation, as undetected cases are incorrectly treated as normal, potentially distorting the model’s learning. Unsupervised outlier detection models such as Local Outlier Factor (LOF) [18] aim to identify anomalies in unlabeled data. Such models could be used as a preprocessing step to remove potentially mislabeled samples, i.e., undetected WBIT cases, from the dataset, enabling the training of more robust ML models.
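As a rough illustration of this preprocessing idea, the following sketch implements a simplified Local Outlier Factor from scratch and applies it to a handful of two-dimensional difference vectors. It is not part of this study's pipeline. The data points, the choice of k = 3, and the cut-off of 2.0 are assumptions made for the example, and ties in neighbor distances are broken by index rather than handled as in the original LOF definition.

```python
import math


def local_outlier_factors(points, k):
    """Simplified LOF: scores near 1 are inliers, larger scores are outliers."""
    n = len(points)
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < number of points")

    neighbors = []   # per point: its k nearest (distance, index) tuples
    k_distance = []  # per point: distance to its k-th nearest neighbor
    for i, p in enumerate(points):
        ranked = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )
        neighbors.append(ranked[:k])
        k_distance.append(ranked[k - 1][0])

    # Local reachability density: inverse of the mean reachability distance.
    lrd = []
    for i in range(n):
        reach = [max(k_distance[j], d) for d, j in neighbors[i]]
        lrd.append(1.0 / (sum(reach) / k + 1e-10))  # epsilon avoids 1/0

    # LOF: mean density of the neighbors relative to the point's own density.
    return [
        sum(lrd[j] for _, j in neighbors[i]) / (k * lrd[i]) for i in range(n)
    ]


# Made-up (delta MCV, delta RDW) vectors; the last one is far from the rest.
deltas = [(0.1, 0.0), (-0.2, 0.1), (0.0, -0.1), (0.2, 0.2), (-0.1, -0.2), (8.0, 3.0)]
scores = local_outlier_factors(deltas, k=3)

THRESHOLD = 2.0  # hypothetical cut-off, would need tuning on real data
kept = [d for d, s in zip(deltas, scores) if s <= THRESHOLD]
print(f"removed {len(deltas) - len(kept)} suspected outlier(s)")
```

In practice, a library implementation such as scikit-learn's `LocalOutlierFactor` would be preferable to this hand-written version, and the cut-off would have to be chosen with care so that genuine but unusual clinical courses are not discarded together with mislabeled samples.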

4.3.3. Time Series

Similar to previous studies [14,15,16,20,21,30], only the most recent prior sample was used in this study to determine whether a current sample is a WBIT case. In contrast, ref. [19] employed analyte vector quadruples rather than pairs. Theoretically, all previously collected samples for a patient could be leveraged to extract richer temporal patterns in analyte value changes. However, due to computational limitations, this would require either machine learning algorithms capable of handling variable-length input—such as Long Short-Term Memory (LSTM) networks [33]—or the use of workaround strategies such as averaging all previous samples or combining separate models trained on inputs of different lengths.

The time threshold between consecutive samples used to form analyte vector pairs (see Section 2.5) varies across studies. For instance, ref. [30] used 6 days, ref. [20] used 10 days, and ref. [14] used 30 days, as adopted in this study, while ref. [19] did not apply a time threshold. To the best of our knowledge, only ref. [30] explicitly accounted for transfusion history by separately handling the data of patients who received blood transfusions within the past three months. In our study, such samples were removed entirely from the dataset (see Section 2.3). These parameters—time thresholds and transfusion exclusion criteria—are typically determined based on laboratory constraints or arbitrarily set. Future work should focus on systematically optimizing these parameters to maximize model performance while maintaining operational feasibility for clinical deployment.

4.3.4. Higher Order Classification-Algorithmic Extensions

To date, most studies have framed WBIT detection as a binary classification problem. An idealized alternative would be a model capable of learning patient-specific analyte profiles and classifying samples directly as belonging to individual patients, rather than determining whether a sample is WBIT or not. While conceptually promising, this approach is impractical in real-world settings, as most patients in clinical databases have only one or two samples, which is insufficient for modeling individual longitudinal patterns. Nevertheless, certain long-term patients contribute a significantly higher number of samples (see Table 2). Future research could explore hybrid approaches that combine binary classification with patient-specific modeling, provided that enough patient-specific data are available.

As explained in Section 2.10, Random Forest classifiers were the only models trained in this study. Tree-based models such as Random Forests have been shown to perform particularly well on tabular datasets [34], such as the one used in this study (see Figure A1). More advanced tree-based algorithms like XGBoost have demonstrated outstanding performance in WBIT detection in prior studies [19,30]. Other widely used machine learning algorithms, including Neural Networks and Support Vector Machines (SVMs), have also been successfully applied in related work [14,15,20].

In addition to these traditional models, recent deep learning architectures specifically designed for tabular data—such as the FT-Transformer [35] and SAINT [36]—may offer further potential for WBIT detection due to their capacity to model complex interactions among features. However, the application of such deep learning models is computationally demanding. Their practical implementation in clinical settings depends heavily on the availability of sufficient computational resources within the laboratory environment.

4.3.5. Clinical Considerations

The positive predictive value (PPV) scores achieved in this study (see Table 4) may not yet be sufficient for deployment in clinical laboratory settings. This is particularly important because a high number of false positives could undermine trust in the system, leading clinical staff to disregard valid alerts. Furthermore, ROC AUC values exceeding 99.99% have been reported in the literature [30], which is substantially higher than the maximum of 97.93% achieved in this study. Combining the analyte impact analysis presented here with state-of-the-art machine learning techniques could yield models with improved accuracy, reduced false alarm rates, and greater robustness across varying clinical environments.

Furthermore, all WBIT cases in this study were simulated, and therefore, PPV estimates are conditional on the assumed prevalence. We used a fixed prevalence of 0.5%, based on the estimate of 0.432% reported in [3] (see Section 4.3.2). Notably, ref. [19] also adopted a 0.5% assumed prevalence when estimating PPV. While this does not reflect confirmed WBIT events in our dataset, it provides a reasonable reference point for threshold calibration during deployment.
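The dependence of PPV on the assumed prevalence follows directly from Bayes' theorem. The sketch below shows the calculation; the sensitivity and specificity values are hypothetical and are not taken from Table 4, while the 0.5% prevalence matches the assumption stated above.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """PPV = TP / (TP + FP), expressed with rates instead of counts."""
    for name, value in (
        ("sensitivity", sensitivity),
        ("specificity", specificity),
        ("prevalence", prevalence),
    ):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1]")
    true_positive_rate = sensitivity * prevalence
    false_positive_rate = (1.0 - specificity) * (1.0 - prevalence)
    if true_positive_rate + false_positive_rate == 0.0:
        raise ValueError("PPV is undefined when no sample is flagged")
    return true_positive_rate / (true_positive_rate + false_positive_rate)


# Hypothetical operating point (not a result of this study).
SENSITIVITY = 0.90
SPECIFICITY = 0.99

ppv_balanced = positive_predictive_value(SENSITIVITY, SPECIFICITY, 0.5)
ppv_realistic = positive_predictive_value(SENSITIVITY, SPECIFICITY, 0.005)
print(f"PPV at 50% prevalence:  {ppv_balanced:.3f}")
print(f"PPV at 0.5% prevalence: {ppv_realistic:.3f}")
```

With these illustrative inputs, the same classifier that looks highly precise on a balanced test set yields a PPV of roughly one in three at 0.5% prevalence, which is why the assumed prevalence should always be reported alongside PPV.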

WBIT generation and simulation strategies also differ across studies. While many employ simple random swapping, more realistic methods—such as restricting swaps to samples collected from the same ward [19,21] or, as in this study, from the same ward and within a 24-h period—have been proposed to better reflect clinical conditions. Although realistic simulation is desirable, future research should investigate how different WBIT generation strategies affect classification performance.

An additional limitation is the exclusion of post-transfusion samples. While this step helped isolate analyte-driven variation and aligns with standard preprocessing in prior studies, it may limit generalizability in real-world settings where transfusions are frequent. Future work could explore whether including transfusion history—including timing and type—allows models to retain robustness in such contexts.

It is important to emphasize that, unlike in retrospective studies based on simulated WBIT errors, definitive confirmation of a WBIT event is not feasible in routine clinical practice. This limitation presents two major challenges: (1) accurately estimating the true incidence of WBIT errors, and (2) prospectively evaluating the performance of detection models. To address the latter, several strategies may be considered: (1) contacting the treating physician to assess whether drastic changes in analyte values between samples can be clinically explained, although this can be particularly difficult when the suspected WBIT case falls within or near the dense cluster of non-WBIT data points (see Figure 3 and Figure 4); (2) examining additional analytes not used in model training, which may be inconclusive if the WBIT affects only specific sample tubes (e.g., an error in the EDTA tube used for CBC might not manifest in other tubes); (3) performing supplementary tests such as HbA1c, erythrocyte sedimentation rate (BSG), or blood group typing on the original sample, though this becomes impractical when the model is trained on analyte vector pairs (see Section 2.5), as the first sample is often no longer available; and (4) obtaining a follow-up sample to assess whether the model flags another WBIT alert, a strategy ultimately constrained by the model’s inherent limitations. Given these challenges, the uncertainty in identifying true WBIT cases must be acknowledged, and both WBIT incidence rates and model performance metrics should ideally be reported with appropriate upper and lower bounds to reflect this ambiguity.

A common strategy for estimating undetected WBIT rates involves focusing on cases flagged as suspicious and performing additional blood group testing. For example, in [3], WBIT rates were estimated based on the probability of two patients having the same ABO/Rh blood group, using blood group frequency data. In a prospective evaluation, ref. [30] selected the five samples with the highest predicted WBIT probability each week for follow-up blood group testing. Although this strategy is promising for both clinical integration and prospective assessment, further research is needed to reduce false alarm rates while also addressing the risk that valid alerts may be incorrectly dismissed due to the difficulty of confirming WBIT errors. Further details on possible methodologies for integrating ML-based WBIT detection into routine laboratory workflows can be found in [30]. Future work should aim to develop implementation frameworks that account for economic, organizational, and infrastructural constraints in diverse clinical environments.
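The role of blood group frequencies in such estimates can be illustrated with a short calculation. If two patients are drawn at random, the probability that they share an ABO/Rh group is the sum of the squared group frequencies, so blood group testing can only expose the remaining fraction of swaps. The sketch below shows one simple way such a correction could be computed; it is not necessarily the procedure used in [3], and the frequencies are placeholder values rather than population data.

```python
def same_group_probability(frequencies):
    """Probability that two independently drawn patients share a blood group."""
    total = sum(frequencies.values())
    if abs(total - 1.0) > 1e-6:
        raise ValueError("blood group frequencies must sum to 1")
    return sum(p * p for p in frequencies.values())


def corrected_wbit_count(observed_mismatches, frequencies):
    """Scale observed blood group mismatches up by the detectable fraction."""
    detectable = 1.0 - same_group_probability(frequencies)
    return observed_mismatches / detectable


# Placeholder ABO/Rh frequencies for illustration only (not real survey data).
example_frequencies = {
    "O+": 0.35, "A+": 0.37, "B+": 0.09, "AB+": 0.04,
    "O-": 0.06, "A-": 0.06, "B-": 0.02, "AB-": 0.01,
}

p_same = same_group_probability(example_frequencies)
estimated_total = corrected_wbit_count(10, example_frequencies)
print(f"P(same group) = {p_same:.4f}")
print(f"10 observed mismatches suggest about {estimated_total:.1f} swaps")
```

The calculation assumes that swapped patients are independent draws from the same population, which may not hold for swaps within a single ward or between relatives.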

4.3.6. Explainability

While our analysis centers on global feature importance for model interpretability and analyte selection, future integration of local explanation techniques—such as per-sample SHAP value analysis—could further enhance clinical transparency by highlighting the specific analyte shifts driving individual predictions [37]. Such methods may assist laboratory staff in verifying WBIT alarms and support model refinement by providing insight into misclassifications.

As the field moves toward clinical deployment of interpretable ML systems, it is important to acknowledge that explainable AI still lacks standardized methodologies and consensus on how to assess the real-world utility of these tools—representing both a limitation of this work and a broader challenge for the community.

5. Conclusions

This study presents a comprehensive analyte importance analysis for machine learning-based detection of wrong blood in tube (WBIT) errors using complete blood count (CBC) data. By performing exhaustive feature selection for all analyte combinations and evaluating multiple importance metrics—SHAP, permutation importance, and mean decrease in impurity—we were able to identify the analytes with the highest predictive utility. MCV and RDW consistently ranked as the most impactful features, providing robust classification performance even when used in smaller subsets.

Our approach extends previous studies by incorporating model interpretability at both global and local levels. We increased transparency through visual explanations in two and three dimensions, illustrating how the models distinguish WBIT from non-WBIT cases based on feature space distribution. These visualization techniques not only demystify the decision boundaries learned by the classifiers, but also provide insight into misclassifications and the trade-off between precision and recall.

In this way, we provide a methodological framework for feature selection in ML-based WBIT detection systems, enabling better transferability of the model to other laboratories with different test profiles. The results show that high accuracy can be achieved with a minimal subset of routinely available analytes, making the method potentially suitable for integration into clinical practice.

Furthermore, this work provides a structured overview of the current state of research in ML-based WBIT detection, identifying gaps in simulation methods, algorithm selection, and confirmation strategies for use in practice. Future work should focus on utilizing advanced deep learning architectures for tabular data, overcoming challenges in clinical verification, and integrating adaptive techniques for missing data and patient-specific time series patterns. Ultimately, improving WBIT detection can increase patient safety by reducing pre-analytical errors in laboratory diagnostics.

Author Contributions

Conceptualization, R.S., H.D., and T.K.; methodology, B.G.S., R.S., C.R., and T.K.; software, B.G.S., R.S., and S.A.-D.; validation, B.G.S., R.S., C.R., and T.K.; formal analysis, B.G.S.; investigation, B.G.S., R.S., and T.K.; resources, S.A.-D.; data curation, B.G.S. and R.S.; writing—original draft preparation, B.G.S.; writing—review and editing, B.G.S., R.S., C.R., S.A.-D., H.D., and T.K.; visualization, B.G.S.; supervision, R.S., H.D., and T.K.; project administration, R.S., H.D., and T.K.; funding acquisition, R.S., H.D., and T.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Culture and Science of the State of North Rhine-Westphalia under the grant No. NW21-059C.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Ethik-Kommission Westfalen-Lippe (protocol codes 2023-567-f-S, 2024-064-f-S and date of approval 12 May 2025).

Informed Consent Statement

The approved ethics application for this study (see above) explained that obtaining individual consent was neither feasible nor reasonable due to strict pseudonymization and the retrospective nature of data processing.

Data Availability Statement

The original data presented in the study are openly available in Zenodo at https://doi.org/10.5281/zenodo.15674541 (accessed on 25 June 2025). To mitigate re-identification risks associated with rare analyte patterns, the published dataset includes only samples retained after the filtering steps described in Section 2.3.

Acknowledgments

ChatGPT 4o and DeepL were used for the final linguistic fine-tuning. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AUC | Area Under the Receiver Operating Characteristic Curve |
| CBC | Complete Blood Count |
| CI | Confidence Interval |
| EDTA | Ethylenediaminetetraacetic Acid |
| ERY | Erythrocytes (Red Blood Cells) |
| HB | Hemoglobin |
| HK | Hematocrit |
| IQR | Interquartile Range |
| LEUKO | Leukocytes (White Blood Cells) |
| LIS | Laboratory Information System |
| LOF | Local Outlier Factor |
| LSTM | Long Short-Term Memory |
| MCH | Mean Corpuscular Hemoglobin |
| MCHC | Mean Corpuscular Hemoglobin Concentration |
| MCV | Mean Corpuscular Volume |
| MDI | Mean Decrease in Impurity |
| ML | Machine Learning |
| PI | Permutation Importance |
| PLT | Platelets |
| PPV | Positive Predictive Value |
| RFC | Random Forest Classifier |
| ROC | Receiver Operating Characteristic |
| SHAP | SHapley Additive exPlanations |
| SVM | Support Vector Machine |
| WBIT | Wrong Blood in Tube |

Appendix A

Figure A1. Data are input to the model as analyte vectors. Each analyte vector corresponds to one sample, and the dataset contains multiple samples from multiple patients. The example values are randomly generated for visualization purposes and do not belong to patients.

Figure A2. Pairing of analyte vectors. For each patient, consecutive analyte vectors are concatenated to form pairs.

Figure A3. WBIT generation. The second analyte vectors of pairs from different patients are swapped. Pairs are selected at random from those whose second samples were drawn on the same day and in the same ward.

Figure A4. Feature generation. First-order differences are calculated for each analyte vector pair: the first vector in each pair is subtracted element-wise from the second.
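The steps illustrated in Figures A2 to A4 can be summarized in a short sketch. This is a simplified illustration under stated assumptions, not the code used in this study: the `Sample` class, the function names, and all analyte values are hypothetical, "same day" is approximated by the calendar date, and only a single swap is drawn. The 30-day limit follows the time threshold adopted in this study.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta
from itertools import groupby


@dataclass(frozen=True)
class Sample:
    patient: str
    ward: str
    drawn: datetime
    values: tuple  # analyte values in a fixed order, e.g. (MCV, RDW, MCH)


def consecutive_pairs(samples, max_gap=timedelta(days=30)):
    """Figure A2: pair each sample with the same patient's previous sample."""
    ordered = sorted(samples, key=lambda s: (s.patient, s.drawn))
    pairs = []
    for _, group in groupby(ordered, key=lambda s: s.patient):
        history = list(group)
        for first, second in zip(history, history[1:]):
            if second.drawn - first.drawn <= max_gap:
                pairs.append((first, second))
    return pairs


def swap_one_pair(pairs, rng):
    """Figure A3: swap second samples of two patients (same ward and date)."""
    candidates = [
        (i, j)
        for i in range(len(pairs))
        for j in range(i + 1, len(pairs))
        if pairs[i][1].patient != pairs[j][1].patient
        and pairs[i][1].ward == pairs[j][1].ward
        and pairs[i][1].drawn.date() == pairs[j][1].drawn.date()
    ]
    if not candidates:
        return None  # no eligible swap partners in this dataset
    i, j = rng.choice(candidates)
    return (pairs[i][0], pairs[j][1]), (pairs[j][0], pairs[i][1])


def first_order_difference(pair):
    """Figure A4: subtract the first vector element-wise from the second."""
    first, second = pair
    return tuple(b - a for a, b in zip(first.values, second.values))


# Made-up samples: two patients, second draws on the same day in ward W1.
samples = [
    Sample("P1", "W1", datetime(2024, 1, 1, 9), (88.0, 13.1, 29.5)),
    Sample("P1", "W1", datetime(2024, 1, 5, 8), (88.4, 13.0, 29.6)),
    Sample("P2", "W1", datetime(2024, 1, 2, 9), (95.0, 15.2, 31.0)),
    Sample("P2", "W1", datetime(2024, 1, 5, 10), (94.5, 15.4, 31.2)),
]
pairs = consecutive_pairs(samples)
wbit_pairs = swap_one_pair(pairs, random.Random(0))

print("non-WBIT features:", first_order_difference(pairs[0]))
print("simulated WBIT features:", first_order_difference(wbit_pairs[0]))
```

In this toy example, the differences for the genuine pair stay close to zero, whereas the swapped pair shows large shifts in all three analytes, which is the signal the classifier learns to separate.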

References

- Olver, P.; Bohn, M.; Adeli, K. Central role of laboratory medicine in public health and patient care. Clin. Chem. Lab. Med. 2023, 61, 666–673.

- Bolton-Maggs, P.H.; Wood, E.M.; Wiersum-Osselton, J.C. Wrong blood in tube–potential for serious outcomes: Can it be prevented? Br. J. Haematol. 2015, 168, 3–13.

- Raymond, C.; Dell’Osso, L.; Guerra, D.; Hernandez, J.; Rendon, L.; Fuller, D.; Villasante-Tezanos, A.; Garcia, J.; McCaffrey, P.; Zahner, C. How many mislabelled samples go unidentified? Results of a pilot study to determine the occult mislabelled sample rate. J. Clin. Pathol. 2024, 77, 647–650.

- Lippi, G.; Blanckaert, N.; Bonini, P.; Green, S.; Kitchen, S.; Palicka, V.; Vassault, A.J.; Mattiuzzi, C.; Plebani, M. Causes, consequences, detection, and prevention of identification errors in laboratory diagnostics. Clin. Chem. Lab. Med. 2009, 47, 143–153.

- Dunn, E.J.; Moga, P.J. Patient misidentification in laboratory medicine: A qualitative analysis of 227 root cause analysis reports in the Veterans Health Administration. Arch. Pathol. Lab. Med. 2010, 134, 244–255.

- Turner, C.L.; Casbard, A.C.; Murphy, M.F. Barcode technology: Its role in increasing the safety of blood transfusion. Transfusion 2003, 43, 1200–1209.

- Knels, R.; Ashford, P.; Bidet, F.; Böcker, W.; Briggs, L.; Bruce, P.; Csöre, M.; Distler, P.; Gutierrez, A.; Henderson, I.; et al. Guidelines for the use of RFID technology in transfusion medicine. Vox Sang. 2010, 98, 1–24.

- Seok, H.S.; Choi, Y.; Yu, S.; Shin, K.H.; Kim, S.; Shin, H. Machine learning-based delta check method for detecting misidentification errors in tumor marker tests. Clin. Chem. Lab. Med. 2024, 62, 1421–1432.

- Lahdenoja, O.; Hurnanen, T.; Iftikhar, Z.; Nieminen, S.; Knuutila, T.; Saraste, A.; Koivisto, T. Atrial fibrillation detection via accelerometer and gyroscope of a smartphone. IEEE J. Biomed. Health Inform. 2017, 22, 108–118.

- Quinodoz, M.; Royer-Bertrand, B.; Cisarova, K.; Di Gioia, S.A.; Superti-Furga, A.; Rivolta, C. DOMINO: Using machine learning to predict genes associated with dominant disorders. Am. J. Hum. Genet. 2017, 101, 623–629.

- Çubukçu, H.C.; Topcu, D.I.; Yenice, S. Machine learning-based clinical decision support using laboratory data. Clin. Chem. Lab. Med. 2024, 62, 793–823.

- Steinbach, D.; Ahrens, P.C.; Schmidt, M.; Federbusch, M.; Heuft, L.; Lübbert, C.; Nauck, M.; Grundling, M.; Isermann, B.; Gibb, S.; et al. Applying machine learning to blood count data predicts sepsis with ICU admission. Clin. Chem. 2024, 70, 506–515.

- Karaglani, M.; Gourlia, K.; Tsamardinos, I.; Chatzaki, E. Accurate blood-based diagnostic biosignatures for Alzheimer’s disease via automated machine learning. J. Clin. Med. 2020, 9, 3016.

- Rosenbaum, M.W.; Baron, J.M. Using machine learning-based multianalyte delta checks to detect wrong blood in tube errors. Am. J. Clin. Pathol. 2018, 150, 555–566.

- Farrell, C.J. Identifying mislabelled samples: Machine learning models exceed human performance. Ann. Clin. Biochem. 2021, 58, 650–652.

- Farrell, C.J. Decision support or autonomous artificial intelligence? The case of wrong blood in tube errors. Clin. Chem. Lab. Med. 2022, 60, 1993–1997.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 15–18 May 2000; pp. 93–104.

- Mitani, T.; Doi, S.; Yokota, S.; Imai, T.; Ohe, K. Highly accurate and explainable detection of specimen mix-up using a machine learning model. Clin. Chem. Lab. Med. 2020, 58, 375–383.

- Jackson, C.R.; Cervinski, M.A. Development and characterization of neural network-based multianalyte delta checks. J. Lab. Precis. Med. 2020, 5, 10.

- Farrell, C.J.; Giannoutsos, J. Machine learning models outperform manual result review for the identification of wrong blood in tube errors in complete blood count results. Int. J. Lab. Hematol. 2022, 44, 497–503.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Shapley, L.S. A value for n-person games. Contrib. Theory Games 1953, 2, 307–317.

- Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30, pp. 4765–4774.

- Altmann, A.; Toloşi, L.; Sander, O.; Lengauer, T. Permutation importance: A corrected feature importance measure. Bioinformatics 2010, 26, 1340–1347.

- Go, L.T.; Go, L.T.; Gunaratne, M.D.; Abeykoon, J.P. Variation in Complete Blood Count Reports Across US Hospitals. JAMA Netw. Open 2025, 8, e2514050.

- Balamurugan, S.; Rohith, V. Receiver Operator Characteristics (ROC) Analyses of Complete Blood Count (CBC) Delta. J. Clin. Diagn. Res. 2019, 13, EC09–EC11.

- Aarsand, A.K.; Fernandez-Calle, P.; Webster, C.; Coskun, A.; Gonzales-Lao, E.; Diaz-Garzon, J.; Sufrate, B.; Parillo, I.M.; Galior, K.; Topcu, D.; et al. The EFLM Biological Variation Database. 2022. Available online: https://biologicalvariation.eu/ (accessed on 15 October 2024).

- Kursa, M.B.; Rudnicki, W.R. Feature selection with the Boruta package. J. Stat. Softw. 2010, 36, 1–13.

- Farrell, C.J.; Makuni, C.; Keenan, A.; Maeder, E.; Davies, G.; Giannoutsos, J. A Machine Learning Model for the Routine Detection of “Wrong Blood in Complete Blood Count Tube” Errors. Clin. Chem. 2023, 69, 1031–1037.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81.

- Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Grinsztajn, L.; Oyallon, E.; Varoquaux, G. Why do tree-based models still outperform deep learning on typical tabular data? In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 507–520.

- Gorishniy, Y.; Rubachev, I.; Khrulkov, V.; Babenko, A. Revisiting deep learning models for tabular data. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–14 December 2021; Volume 34, pp. 18932–18943.

- Somepalli, G.; Goldblum, M.; Schwarzschild, A.; Bruss, C.B.; Goldstein, T. SAINT: Improved neural networks for tabular data via row attention and contrastive pre-training. arXiv 2021, arXiv:2106.01342.

- Topcu, D. How to explain a machine learning model: HbA1c classification example. J. Med. Palliat. Care 2023, 4, 117–125.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).