The Integration of 3D Virtual Reality and 3D Printing Technology as Innovative Approaches to Preoperative Planning in Neuro-Oncology

Abstract

1. Introduction

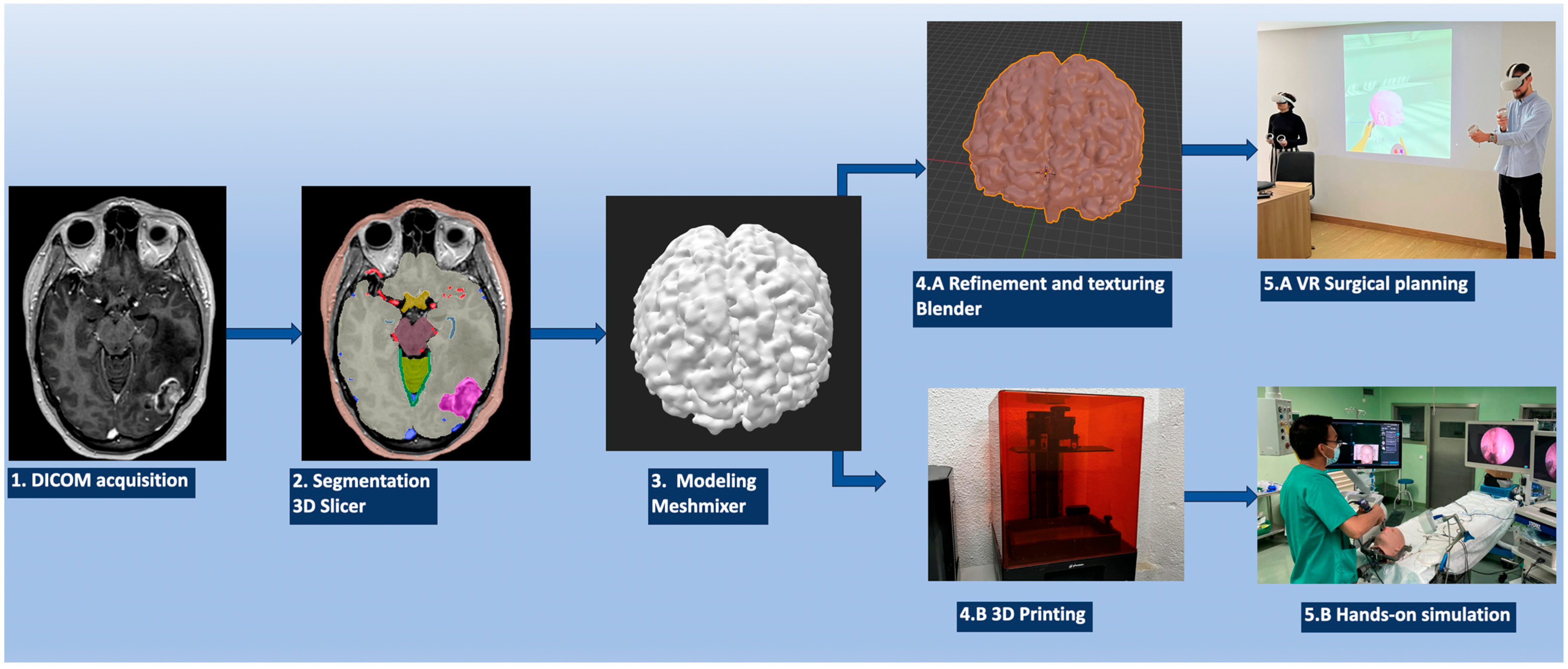

2. Materials and Methods

2.1. Image Data Acquisition

2.2. Object Segmentation

2.3. Sculpting the 3D Objects

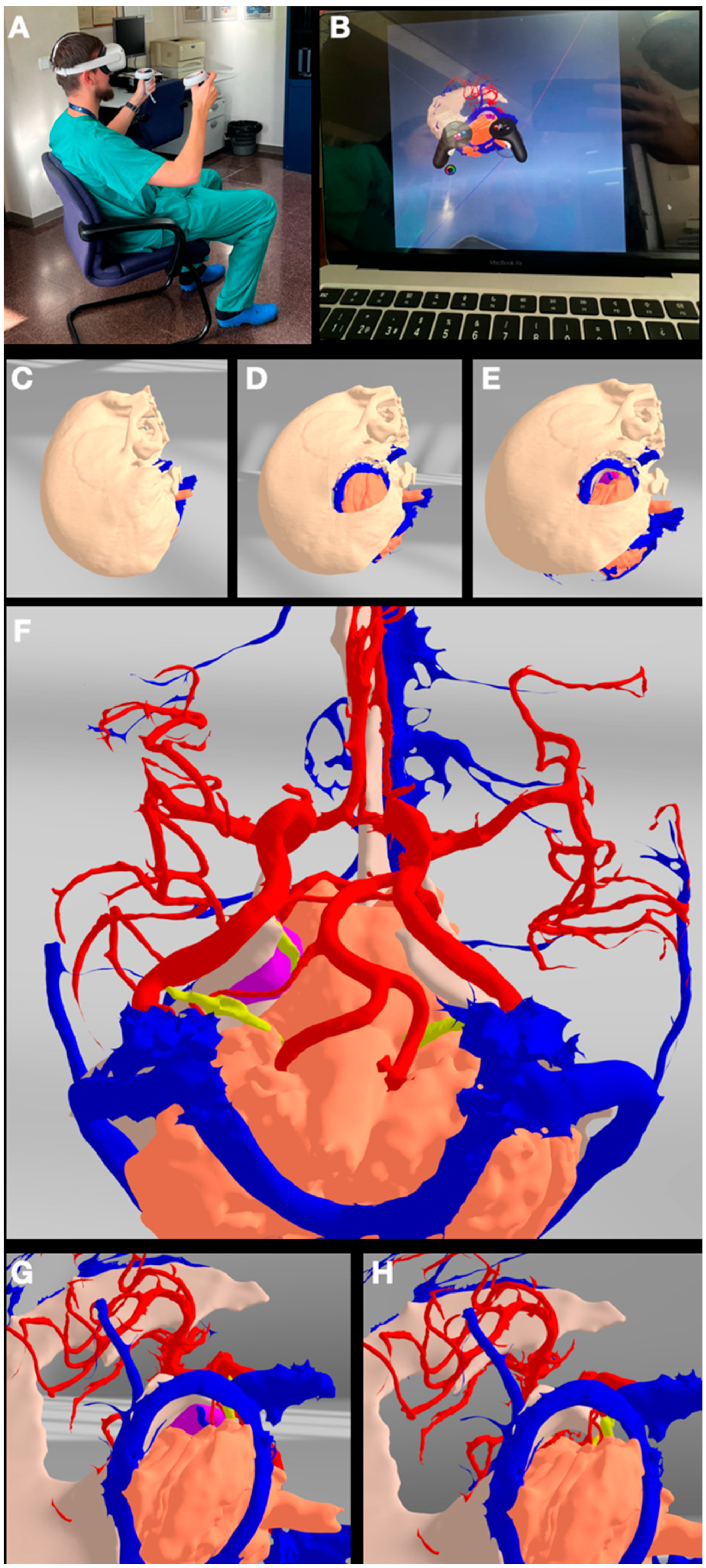

2.4. VR/AR Implementation

2.5. 3D Printing

3. Results

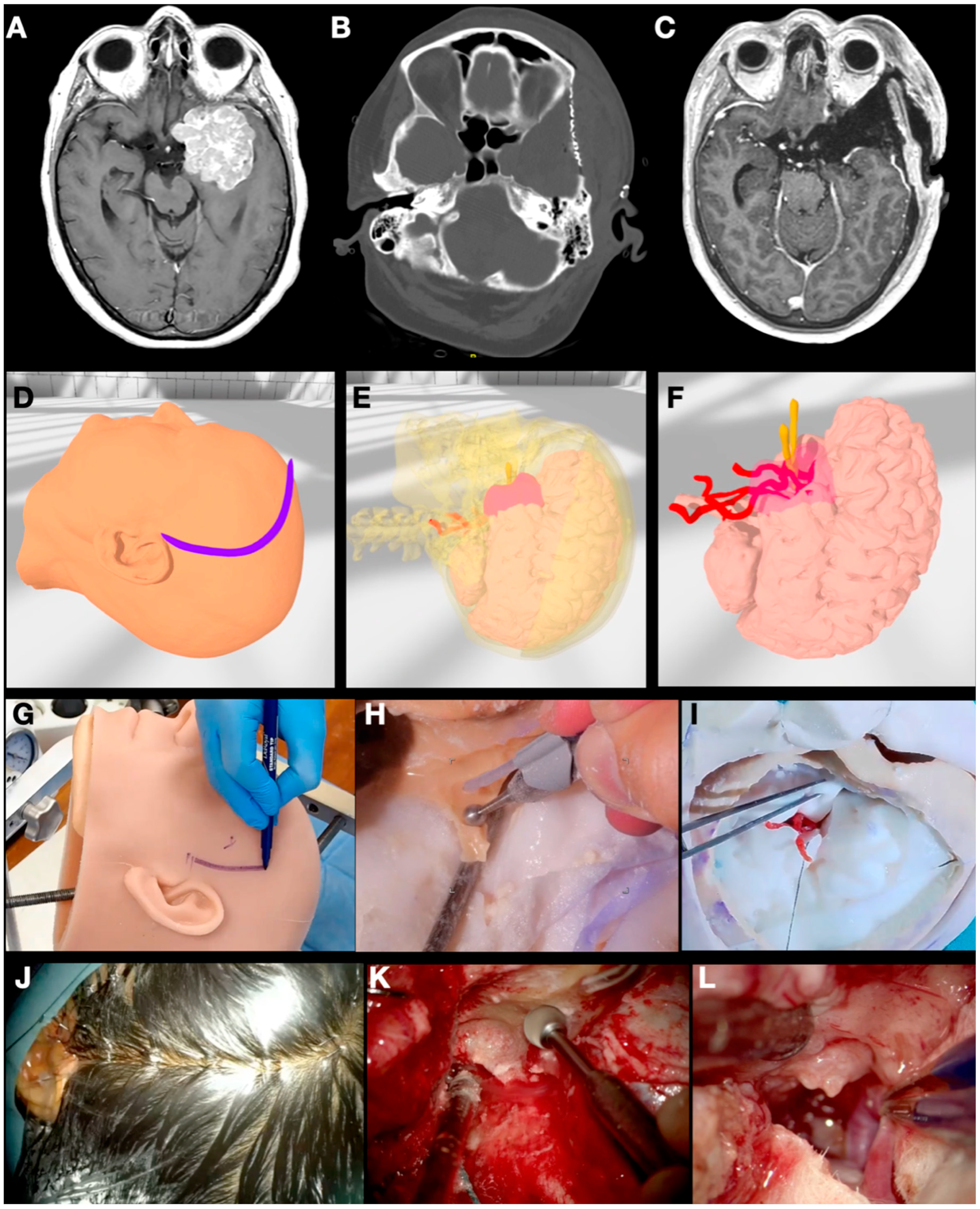

3.1. Case 1: Sphenoid Wing Meningioma

3.2. Case 2: Pituitary Adenoma

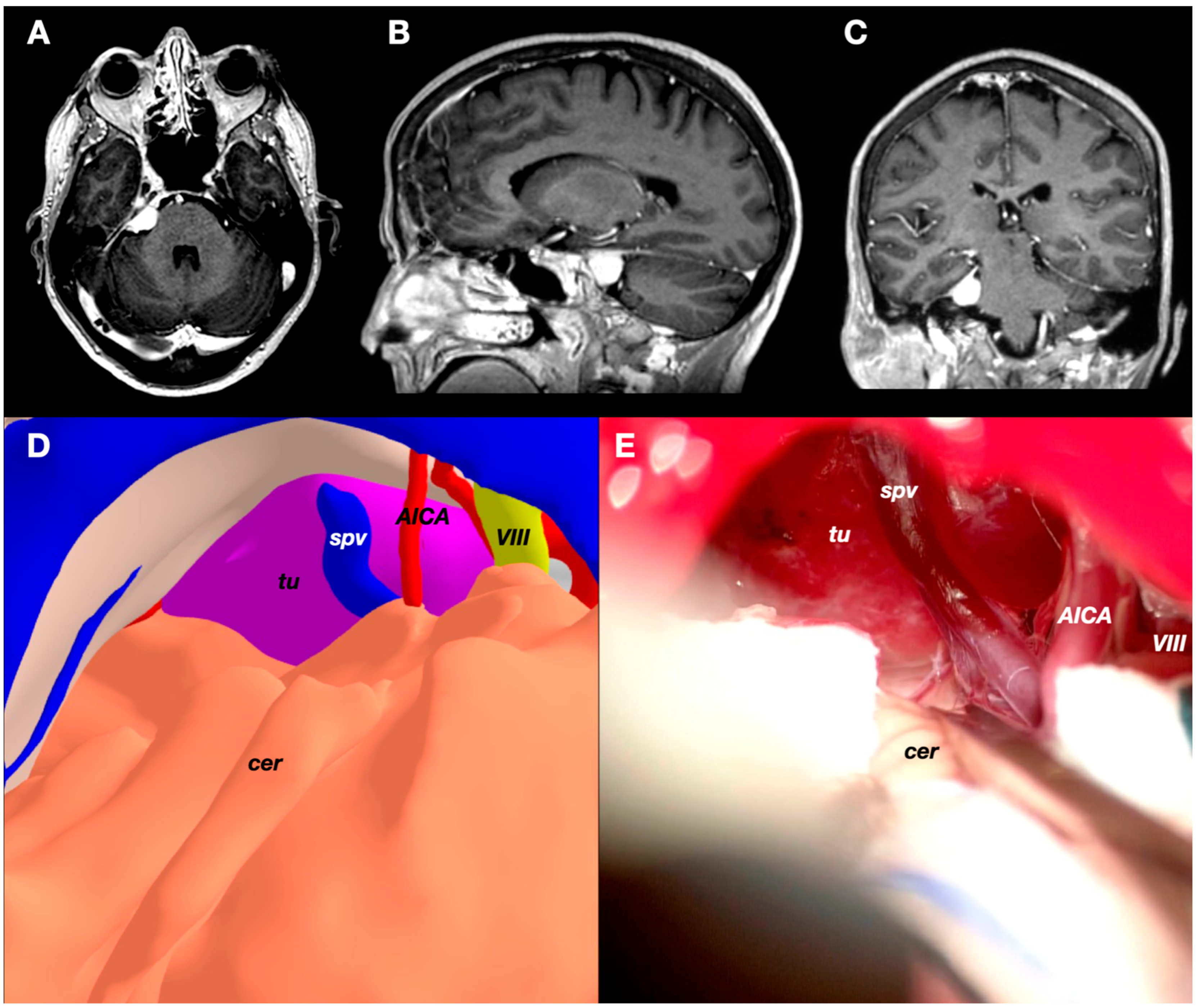

3.3. Case 3: Petrous Apex Meningioma

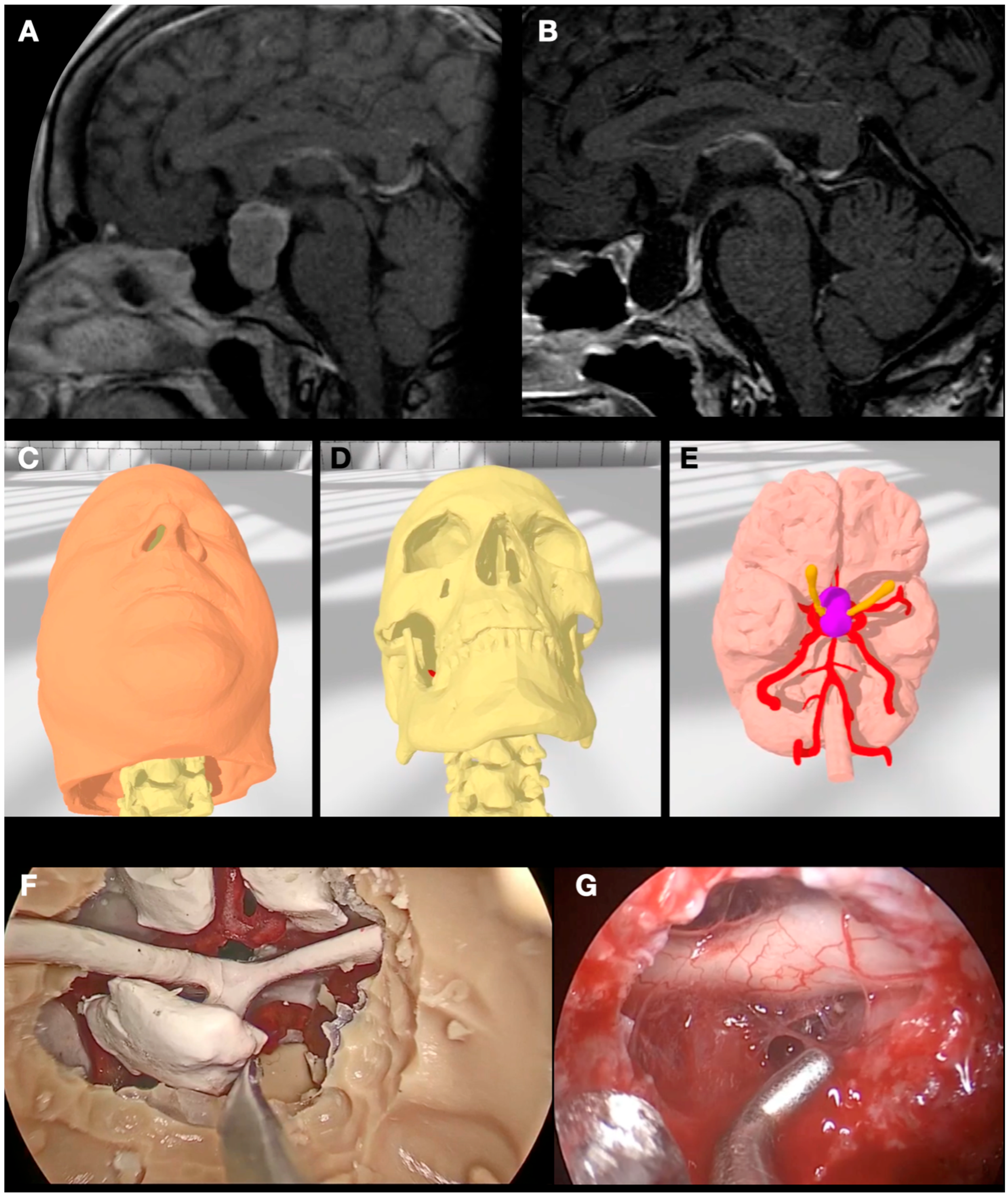

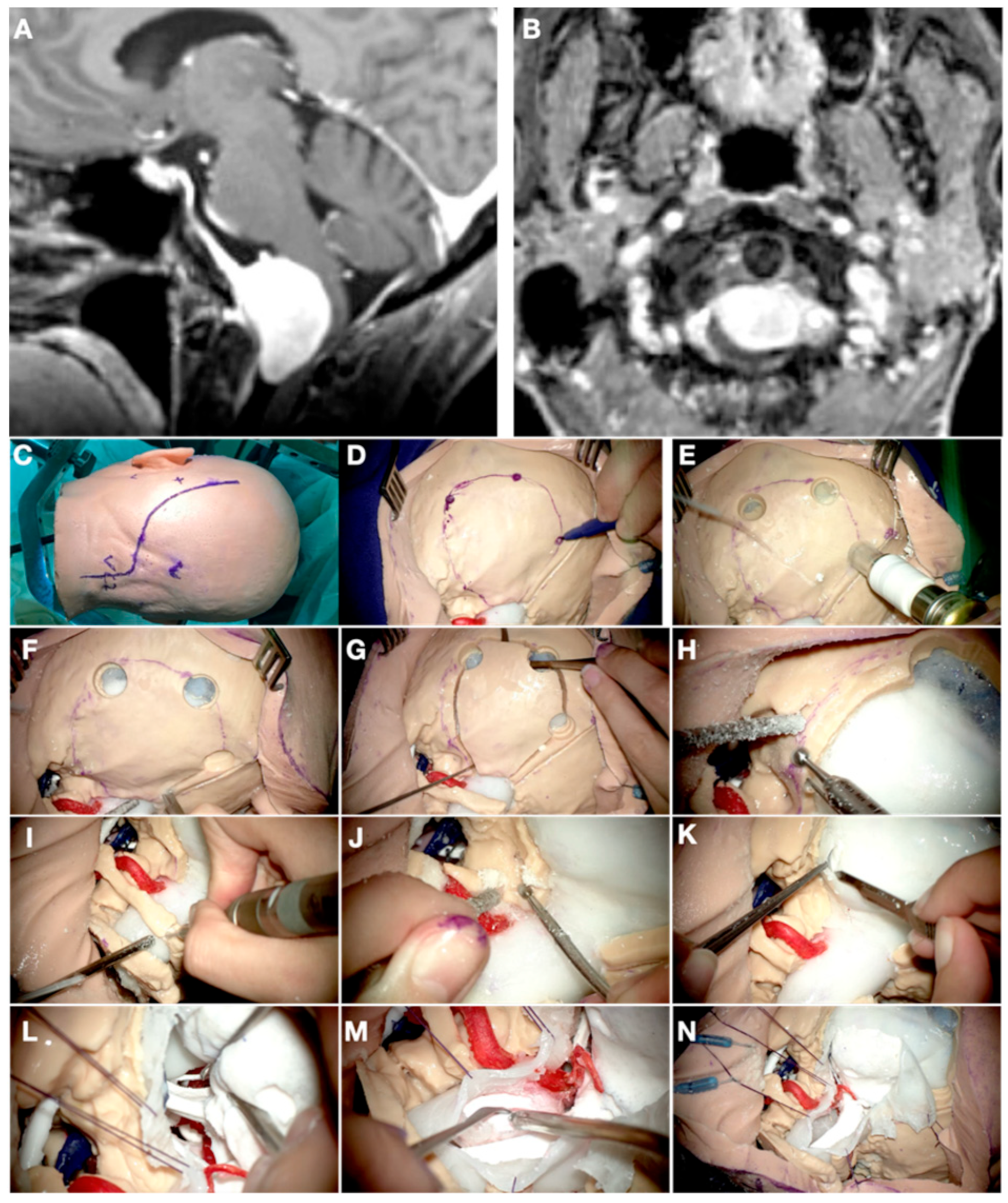

3.4. Case 4: Foramen Magnum Meningioma

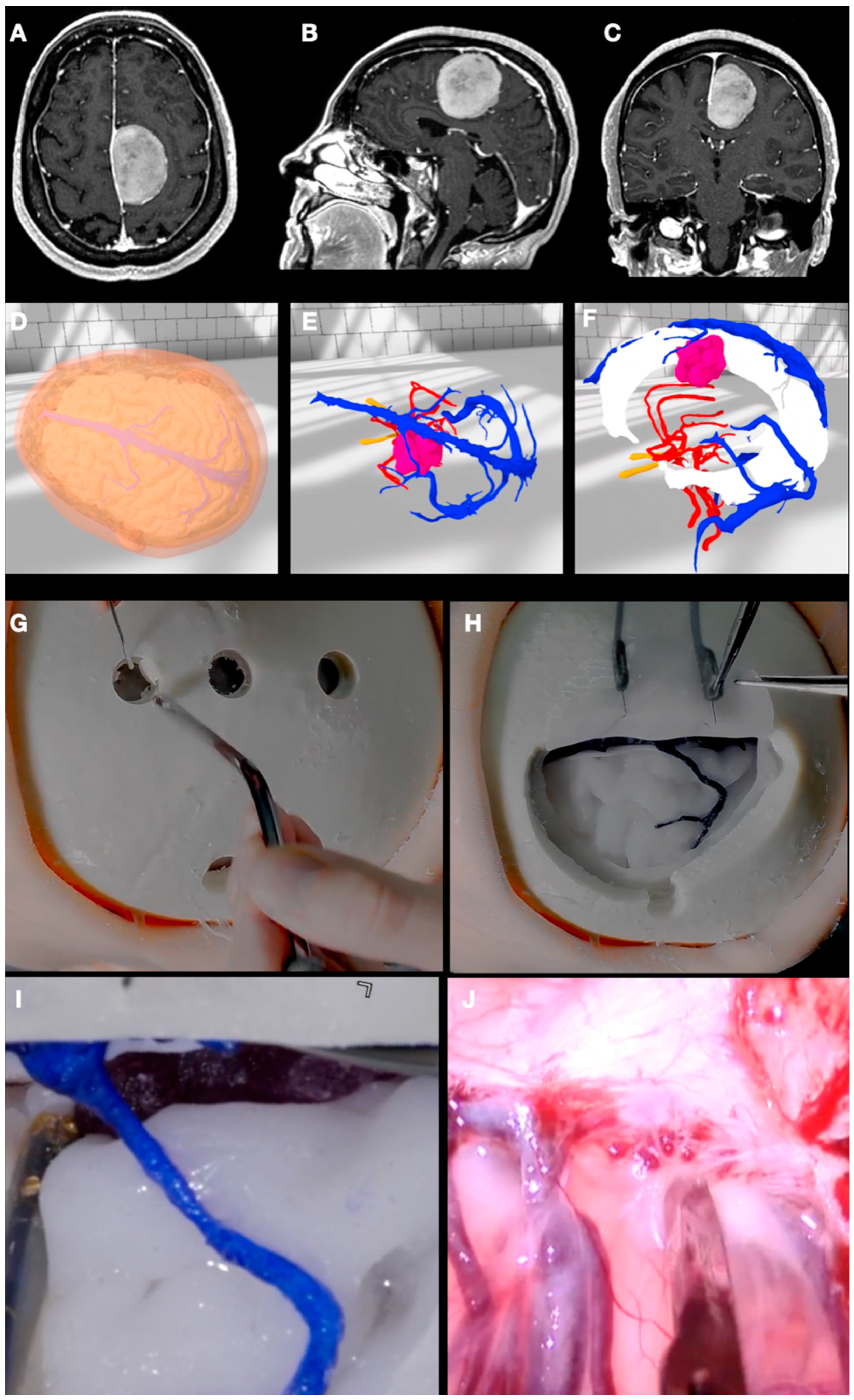

3.5. Case 5: Falcine Meningioma

4. Discussion

4.1. Surgical Planning

4.2. Virtual Reality

4.3. 3D Printing

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Step | Action (common to VR and 3D printing) |
| --- | --- |
| Step 1 | Patient image acquisition (CT, CTA, MRI, etc.) |
| Step 2 | Download the DICOM images |
| Step 3 | Load the DICOM images into 3D Slicer |
| | Open the “Segment Editor” module and segment the neuroanatomical structures with the “Threshold”, “Draw”, and “Grow from seeds” tools, among others |
| | Export the files in .STL format |
| Step 4 (*) | Load the .STL file into MeshMixer |
| | Use the “Inspector” and “Sculpt” tools, among others, to refine the reconstructed radio-anatomical structure |
| | Export the refined object as an .OBJ file |

| Step | VR | 3D printing |
| --- | --- | --- |
| Step 5 (*) | Open the .OBJ file with Blender | Select the desired material: polylactic acid, acrylonitrile butadiene styrene, or polyethylene terephthalate glycol; resins for stereolithography or digital light processing; and ballistic gels, among others |
| | In the “Material” menu, select the desired color and texture | |
| | Export the file in .FBX format | |
| Step 6 | Import the reconstructed structures to Gravity Sketch on a Meta Oculus Quest 2 VR headset | Print the reconstructed structures |
| | Assemble all the desired structures into the VR model | Assemble all the printed structures into the model |
| Step 7 | The surgical case can be studied, analyzed, and detailed in VR | The surgical case can be performed, trained, and studied hands-on on the printed model |
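The workflow above moves each segmented structure through several mesh formats (.STL, then .OBJ, then .FBX). In the article these conversions are done through the export dialogs of 3D Slicer, MeshMixer, and Blender. Purely to illustrate what the .STL to .OBJ hand-off involves, the following is a minimal sketch in plain Python, not the authors' method. It assumes an ASCII STL file (STL files are often binary, which this sketch does not handle), and the function names `parse_ascii_stl` and `triangles_to_obj` as well as the two-triangle sample mesh are hypothetical.

```python
"""Illustrative sketch only: convert an ASCII STL mesh to Wavefront OBJ text."""
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[Vertex, Vertex, Vertex]


def parse_ascii_stl(text: str) -> List[Triangle]:
    """Collect the triangles listed in an ASCII STL string (hypothetical helper)."""
    corners: List[Vertex] = []
    for line in text.splitlines():
        parts = line.split()
        # Each triangle corner appears as: "vertex x y z"
        if len(parts) == 4 and parts[0] == "vertex":
            corners.append((float(parts[1]), float(parts[2]), float(parts[3])))
    if len(corners) % 3 != 0:
        raise ValueError("STL vertex count is not a multiple of three")
    return [
        (corners[i], corners[i + 1], corners[i + 2])
        for i in range(0, len(corners), 3)
    ]


def triangles_to_obj(triangles: List[Triangle], name: str = "segment") -> str:
    """Serialize triangles as OBJ text, merging vertices shared between faces."""
    index_of: Dict[Vertex, int] = {}
    vertices: List[Vertex] = []
    faces: List[Tuple[int, ...]] = []
    for tri in triangles:
        face = []
        for v in tri:
            if v not in index_of:
                vertices.append(v)
                index_of[v] = len(vertices)  # OBJ vertex indices start at 1
            face.append(index_of[v])
        faces.append(tuple(face))
    lines = [f"o {name}"]
    lines += [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {a} {b} {c}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


# Hypothetical sample: a unit square made of two triangles.
SAMPLE_STL = """solid demo
facet normal 0 0 1
 outer loop
  vertex 0 0 0
  vertex 1 0 0
  vertex 0 1 0
 endloop
endfacet
facet normal 0 0 1
 outer loop
  vertex 1 0 0
  vertex 1 1 0
  vertex 0 1 0
 endloop
endfacet
endsolid demo
"""

if __name__ == "__main__":
    print(triangles_to_obj(parse_ascii_stl(SAMPLE_STL)))
```

STL stores every triangle with its own three corner coordinates, whereas OBJ lists vertices once and lets faces refer to them by index. The sketch therefore merges repeated corners, which is one reason the same structure can be lighter to handle after conversion. It merges only exactly identical coordinates and performs none of the repair or smoothing that the “Inspector” and “Sculpt” tools provide.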

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

González-López, P.; Kuptsov, A.; Gómez-Revuelta, C.; Fernández-Villa, J.; Abarca-Olivas, J.; Daniel, R.T.; Meling, T.R.; Nieto-Navarro, J. The Integration of 3D Virtual Reality and 3D Printing Technology as Innovative Approaches to Preoperative Planning in Neuro-Oncology. J. Pers. Med. 2024, 14, 187. https://doi.org/10.3390/jpm14020187
