Gastrointestinal Tract Polyp Anomaly Segmentation on Colonoscopy Images Using Graft-U-Net

Abstract

1. Introduction

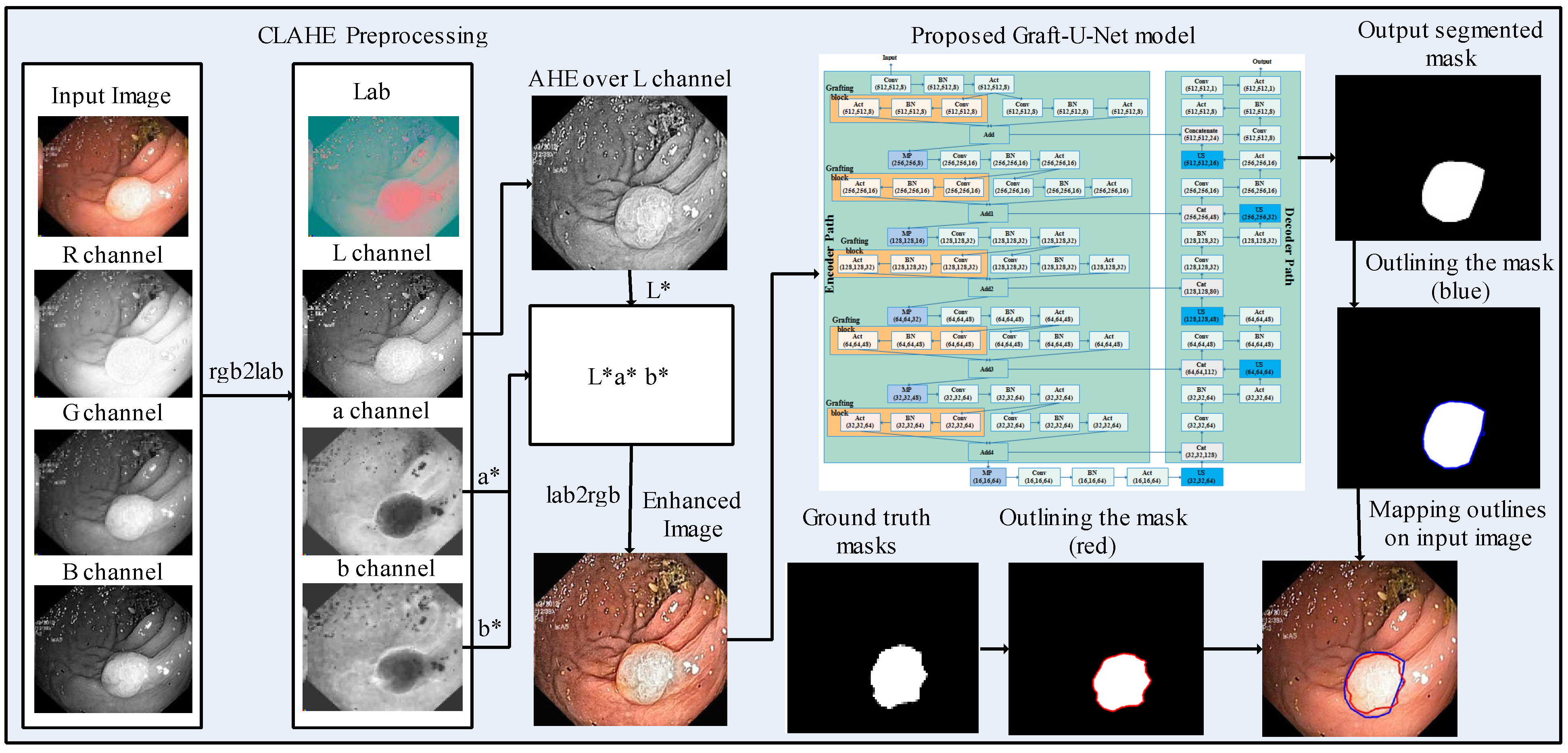

- The contrast-limited adaptive histogram equalization (CLAHE) technique is applied at the preprocessing stage to the Kvasir-SEG frames to improve their contrast, which in turn affects the overall performance of the deep learning model.

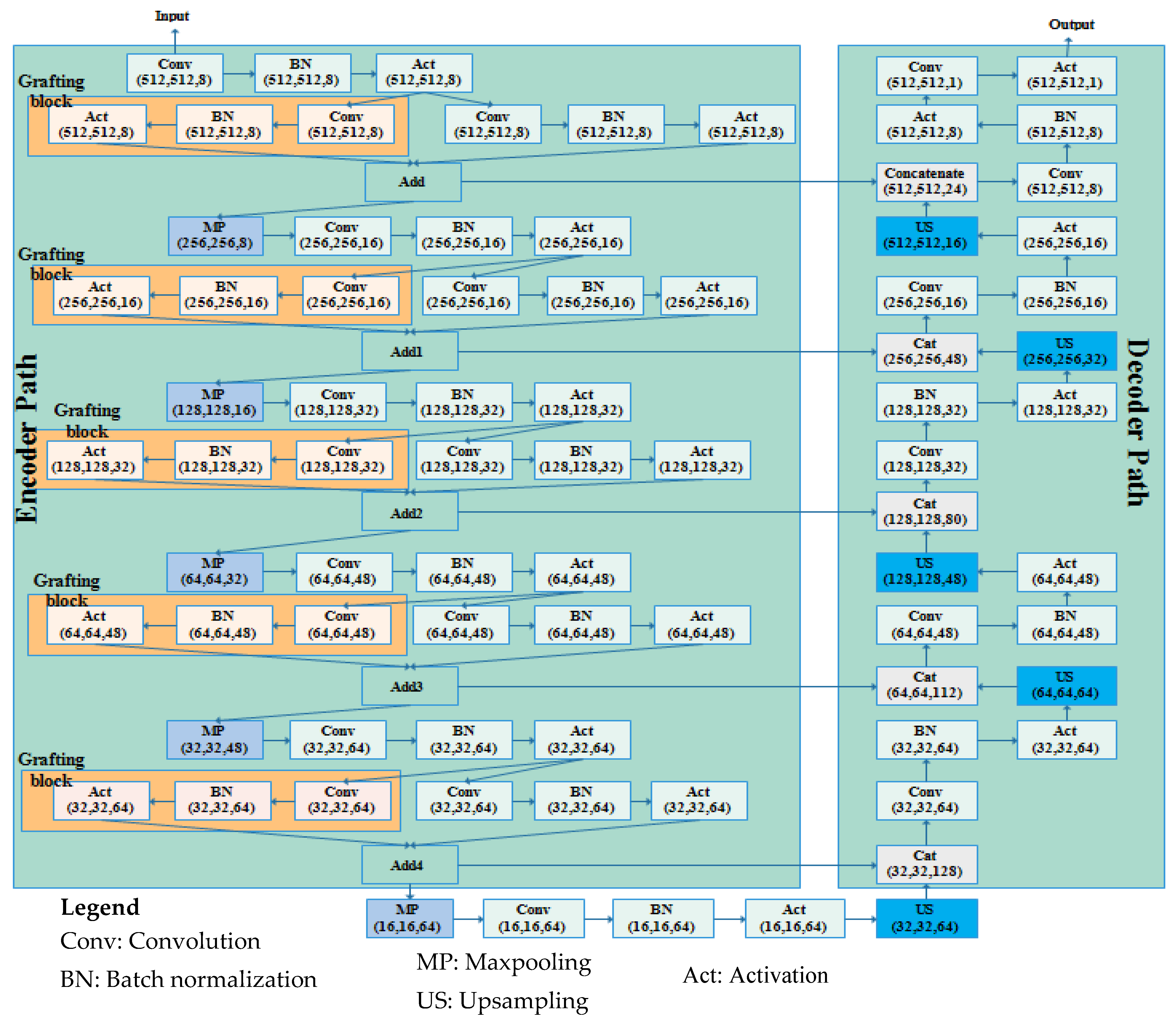

- A CNN-based 74-layer Graft-U-Net architecture is proposed, composed of an encoder (analysis) block and a decoder (synthesis) block. The encoder and decoder blocks use convolutional filters of different depths: 8, 16, 32, 48, and 64. The encoder is modified by adding grafting layers in parallel to the conventional U-Net layers; the feature maps derived by the parallel branches are added together and forwarded to the next layers. Including this graft network in the encoder block improves the results of the model.
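The grafting idea in the second contribution can be illustrated with a minimal, framework-free sketch in which two parallel branches process the same input and their outputs are summed element-wise. It assumes single-channel feature maps stored as nested lists, stride-1 convolutions with odd-sized kernels and zero "same" padding, and ReLU activations applied before the sum; batch normalization is omitted. The function names and kernels are hypothetical and do not reproduce the exact Graft-U-Net layer configuration.

```python
from typing import List

Matrix = List[List[float]]  # one single-channel feature map


def conv2d_same(image: Matrix, kernel: Matrix) -> Matrix:
    """Stride-1 2D convolution (cross-correlation, as in CNNs) with zero 'same' padding.
    Assumes an odd-sized kernel so the output keeps the input size."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    r, c = i + u - ph, j + v - pw
                    if 0 <= r < h and 0 <= c < w:  # positions outside the map count as zero
                        acc += image[r][c] * kernel[u][v]
            out[i][j] = acc
    return out


def relu(m: Matrix) -> Matrix:
    return [[max(0.0, value) for value in row] for row in m]


def add_maps(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum used to merge the two parallel branches."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def grafted_encoder_step(x: Matrix, unet_kernel: Matrix, graft_kernel: Matrix) -> Matrix:
    """Hypothetical grafted block: the conventional U-Net path and a parallel
    graft path see the same input, and their activations are added."""
    unet_branch = relu(conv2d_same(x, unet_kernel))
    graft_branch = relu(conv2d_same(x, graft_kernel))
    return add_maps(unet_branch, graft_branch)


identity_3x3 = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
print(grafted_encoder_step([[1.0, 2.0], [3.0, 4.0]], identity_3x3, [[2.0]]))
# prints [[3.0, 6.0], [9.0, 12.0]]
```

Summing rather than concatenating the branches keeps the channel depth unchanged, so the grafted output can feed the next U-Net layer without any extra reshaping.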

2. Related Works

3. Materials and Methods

3.1. Preprocessing
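CLAHE equalizes each image tile separately but first caps every histogram bin at a clip limit, so that nearly uniform regions are not over-amplified. The plain-Python sketch below implements only that per-tile clip, redistribute, and equalize step for integer grayscale values; the function name, the raw-count clip limit, and the evenly spaced redistribution of leftover counts are illustrative assumptions, not the settings used in this work. Libraries such as OpenCV provide a complete implementation through `cv2.createCLAHE(clipLimit, tileGridSize)`.

```python
from typing import List


def clip_limited_equalize(tile: List[int], clip_limit: int, levels: int = 256) -> List[int]:
    """Contrast-limited histogram equalization of one tile of integer pixel values.

    This is only the per-tile step of CLAHE: a full implementation also divides
    the frame into a grid of tiles and bilinearly interpolates between the
    mappings of neighbouring tiles to avoid block artifacts.
    """
    hist = [0] * levels
    for p in tile:
        hist[p] += 1

    # 1. Clip every bin at clip_limit and collect the excess counts.
    excess = 0
    for i, count in enumerate(hist):
        if count > clip_limit:
            excess += count - clip_limit
            hist[i] = clip_limit

    # 2. Redistribute the excess: an equal share to every bin, then the
    #    remainder one count at a time at evenly spaced bins.
    share, remainder = divmod(excess, levels)
    hist = [count + share for count in hist]
    if remainder:
        step = max(levels // remainder, 1)
        for i in range(0, levels, step):
            if remainder == 0:
                break
            hist[i] += 1
            remainder -= 1

    # 3. Map intensities through the cumulative histogram (standard equalization).
    mapping, running = [], 0
    for count in hist:
        running += count
        mapping.append(round((levels - 1) * running / len(tile)))
    return [mapping[p] for p in tile]


tile = [100] * 12 + [200] * 4
print(clip_limited_equalize(tile, clip_limit=16)[0])  # 191: no bin exceeds the limit
print(clip_limited_equalize(tile, clip_limit=6)[0])   # 143: clipping tempers the stretch
```

Lowering the clip limit keeps the dominant intensity closer to its original value (143 instead of 191 here), which is the trade-off CLAHE exposes between contrast gain and noise amplification.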

3.2. Proposed Graft-U-Net Model

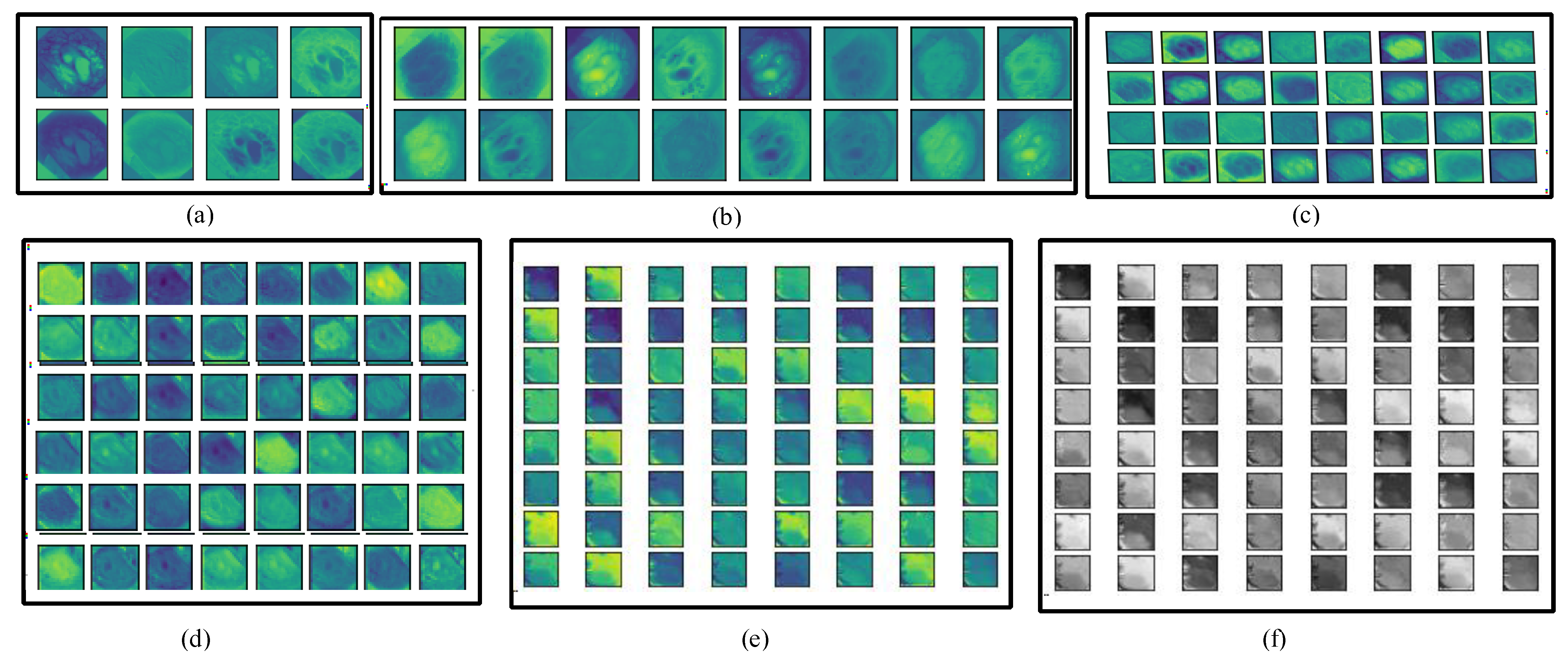

3.2.1. Encoder DSB Blocks (Analysis Blocks)

3.2.2. Decoder USB Blocks (Synthesis Blocks)

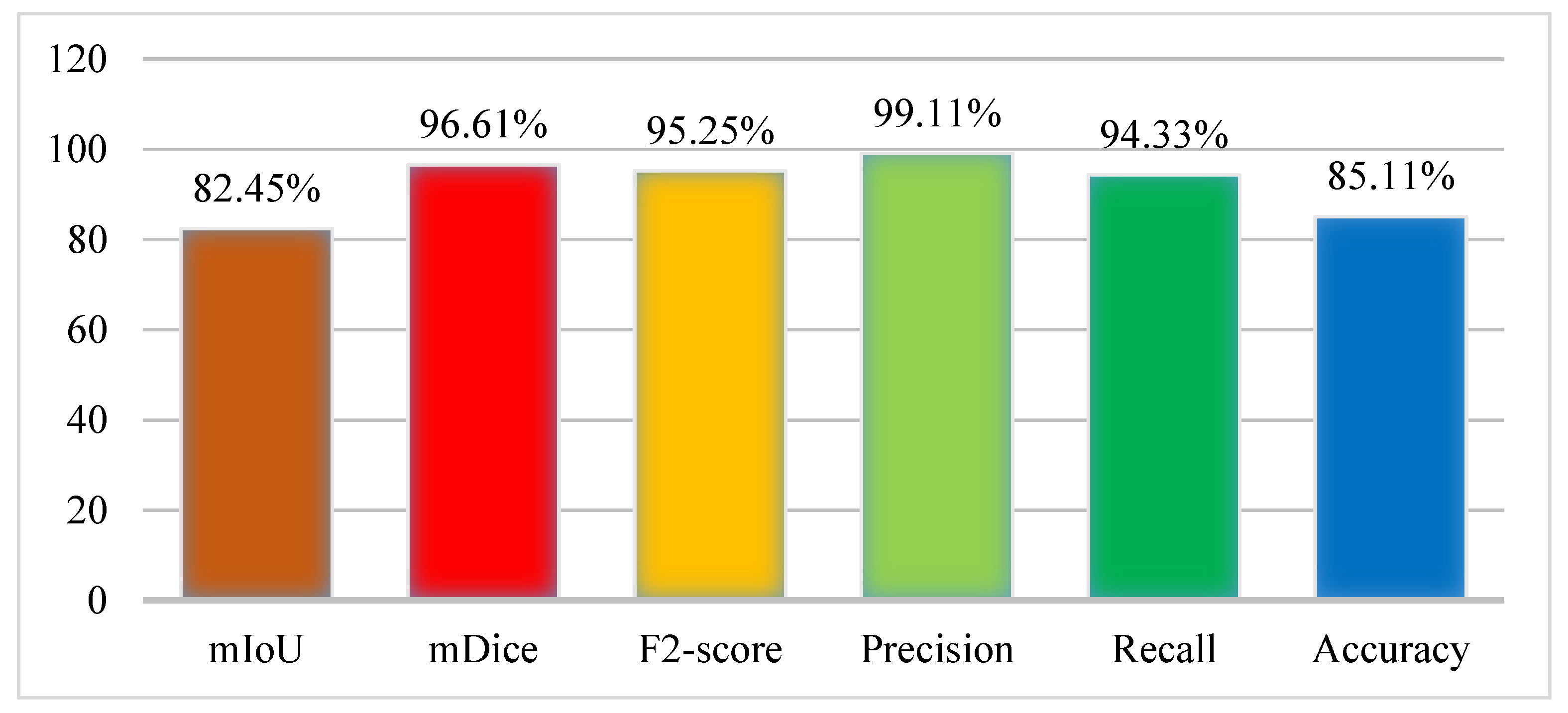

4. Results and Discussion

4.1. Datasets

4.2. Performance Evaluation Measures

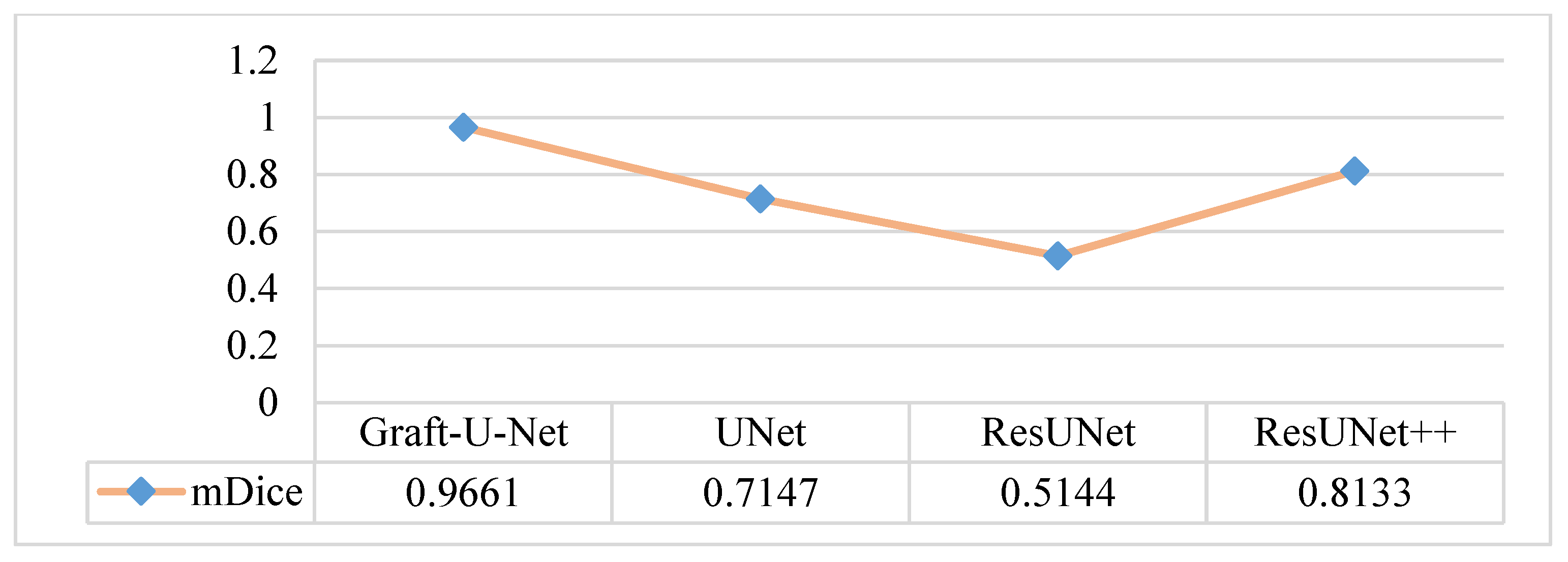

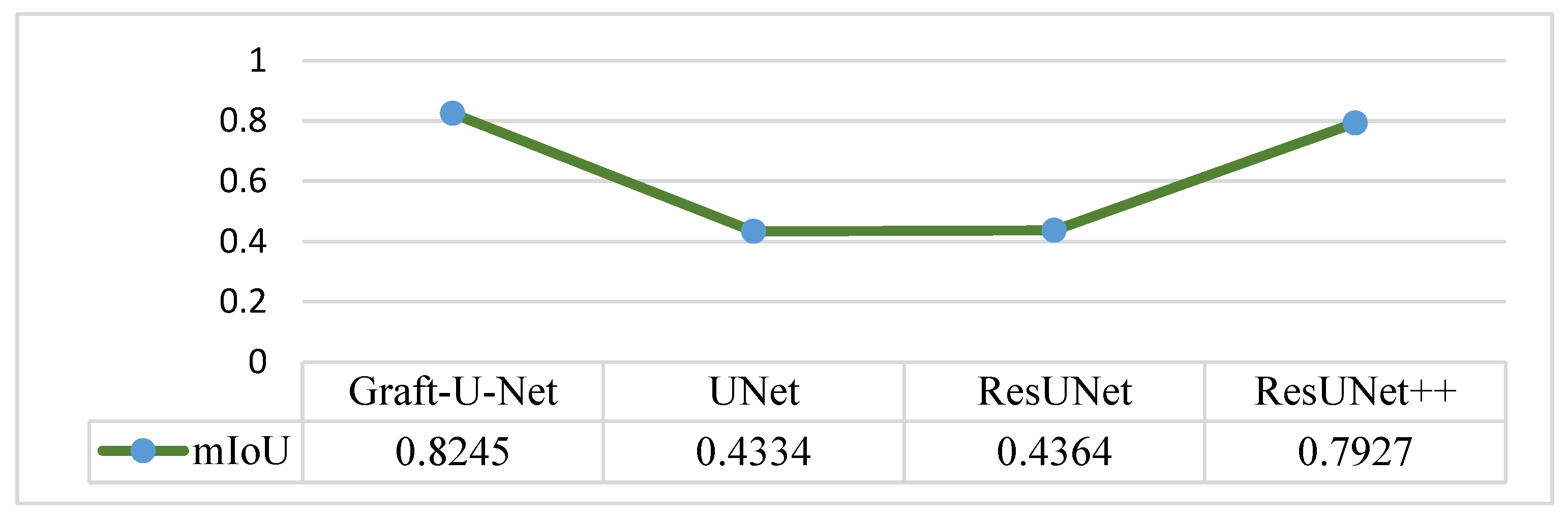

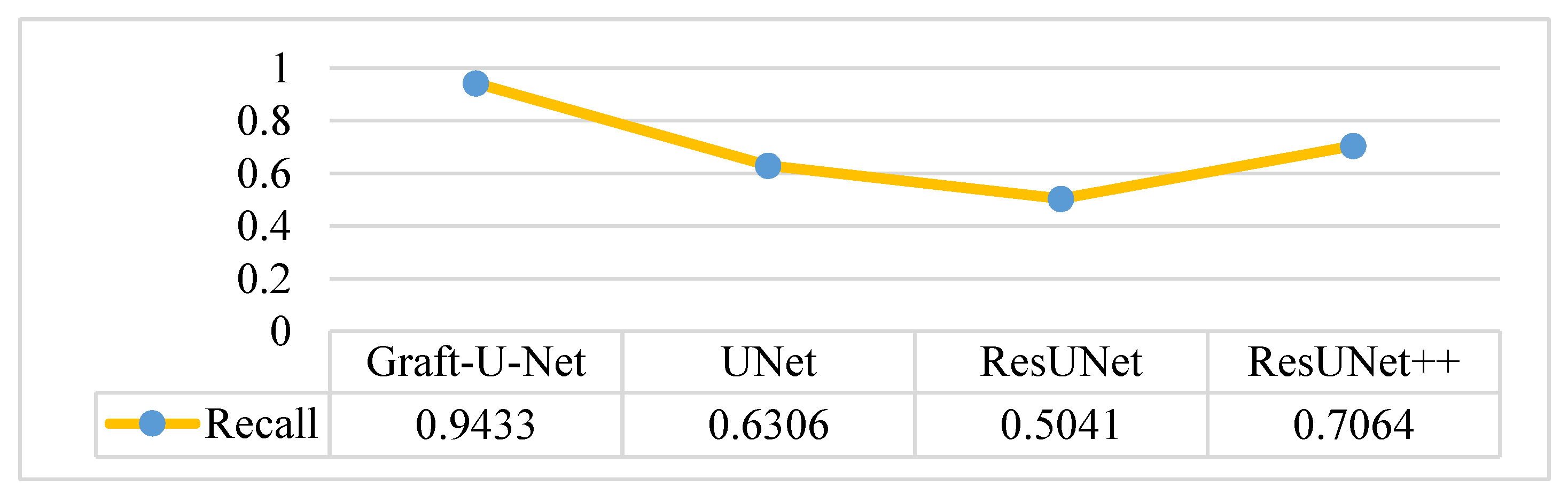

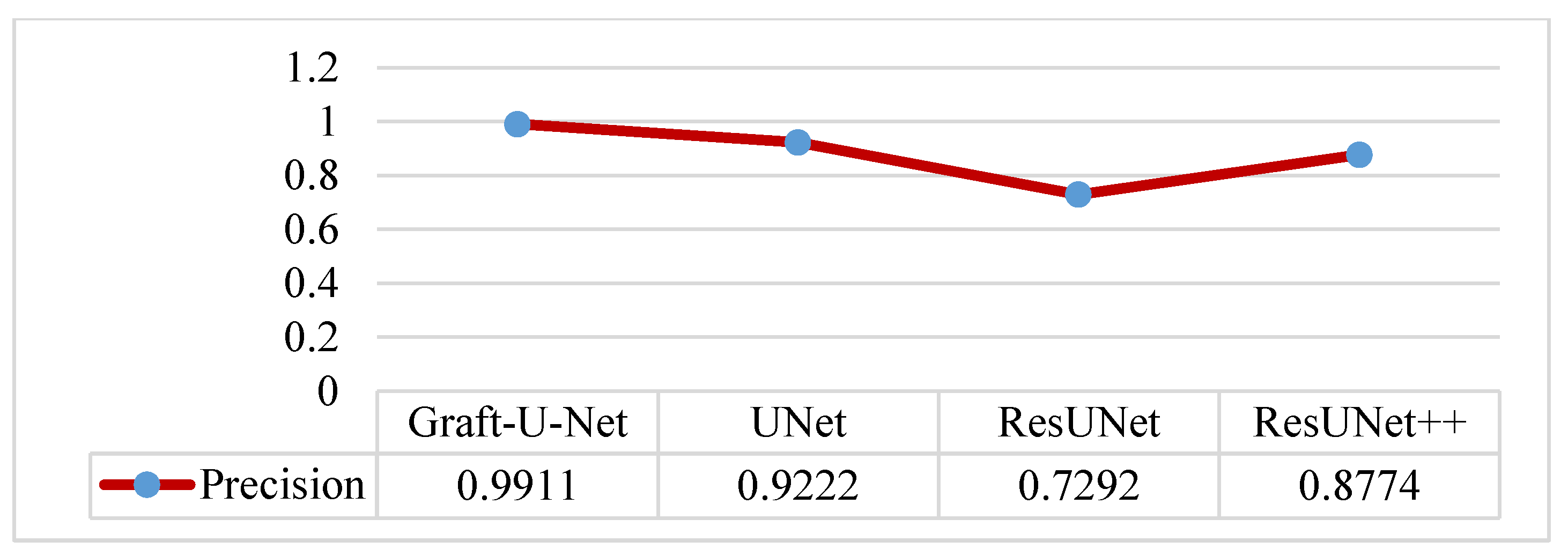

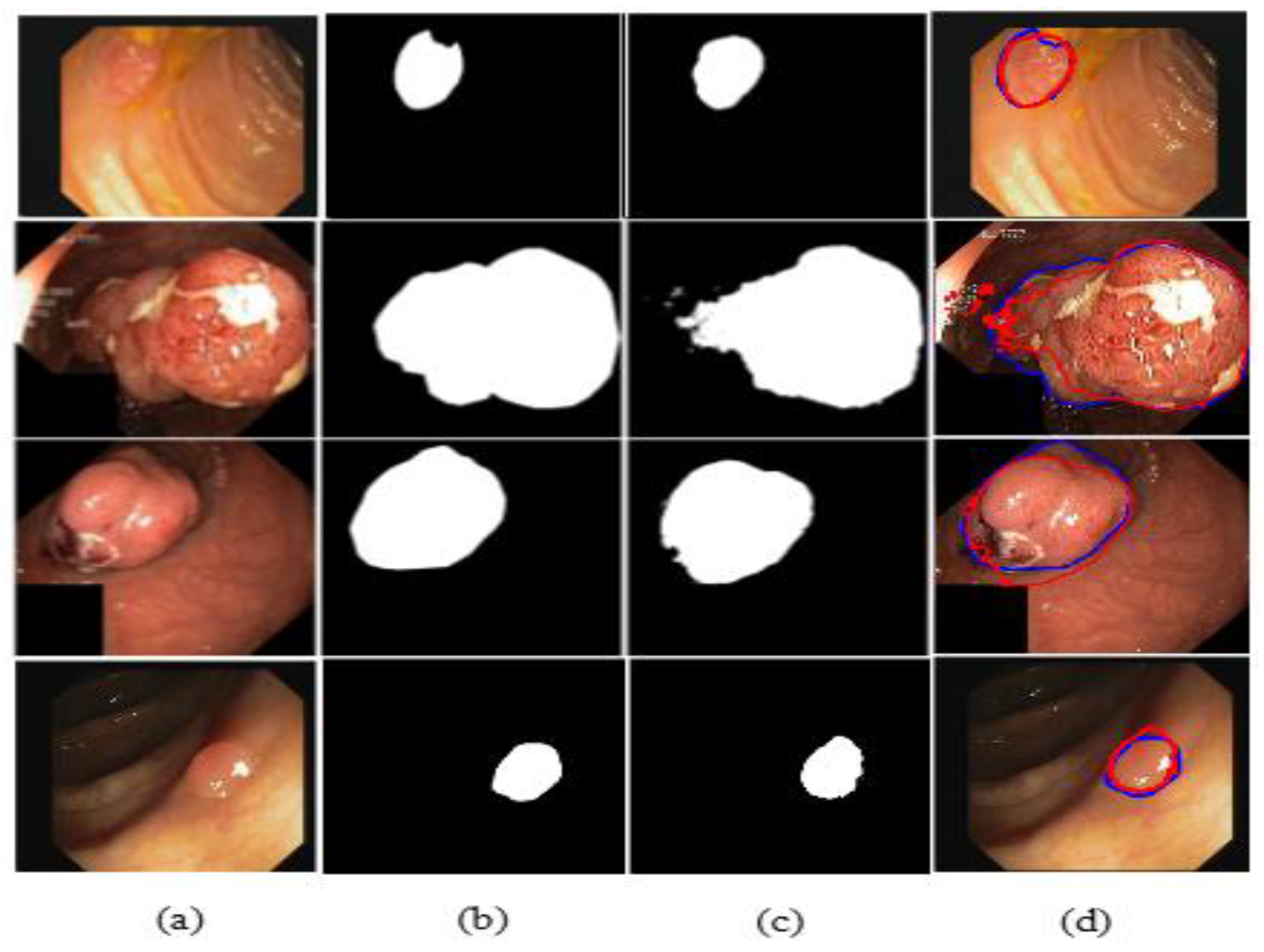

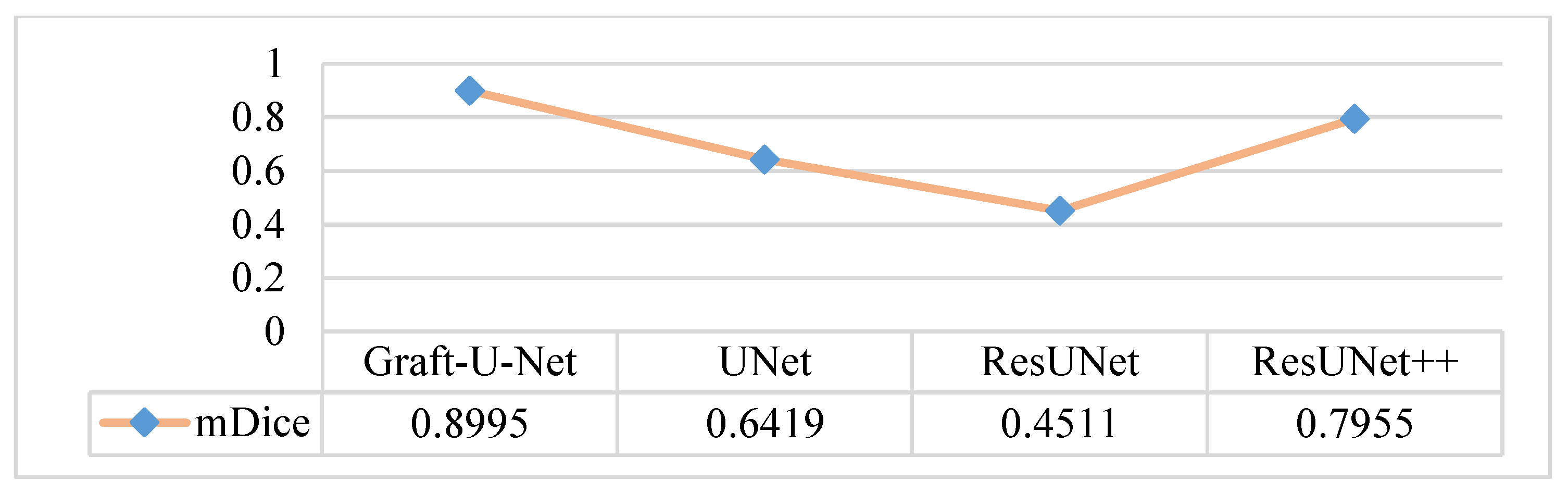

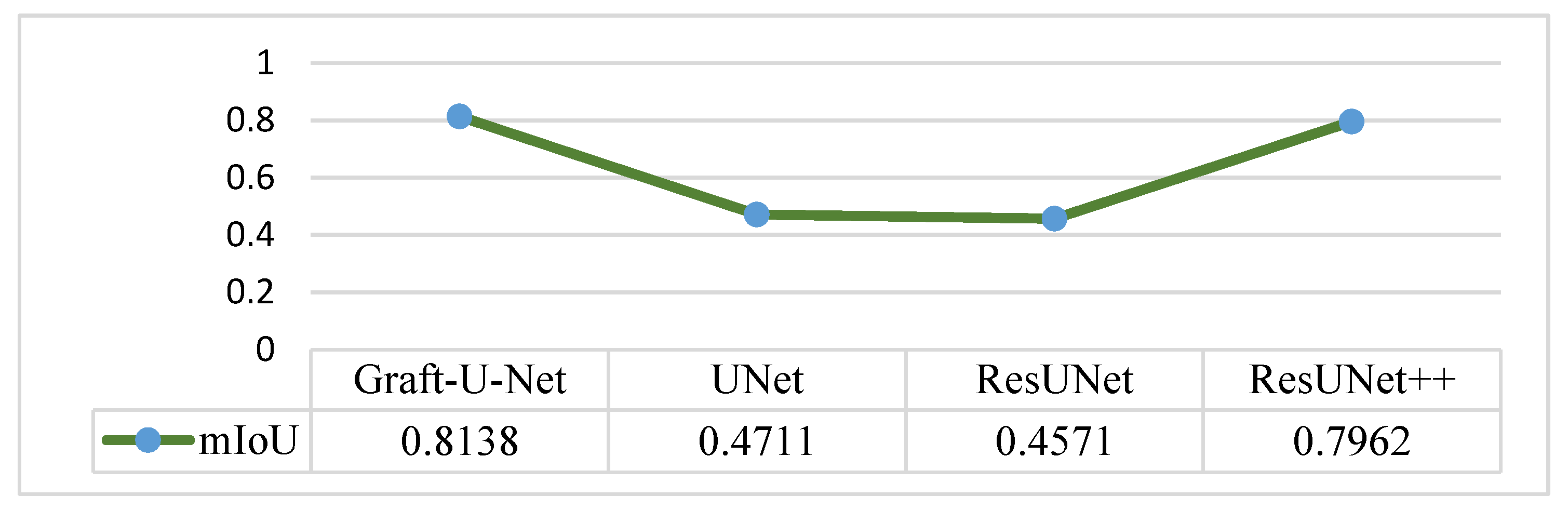

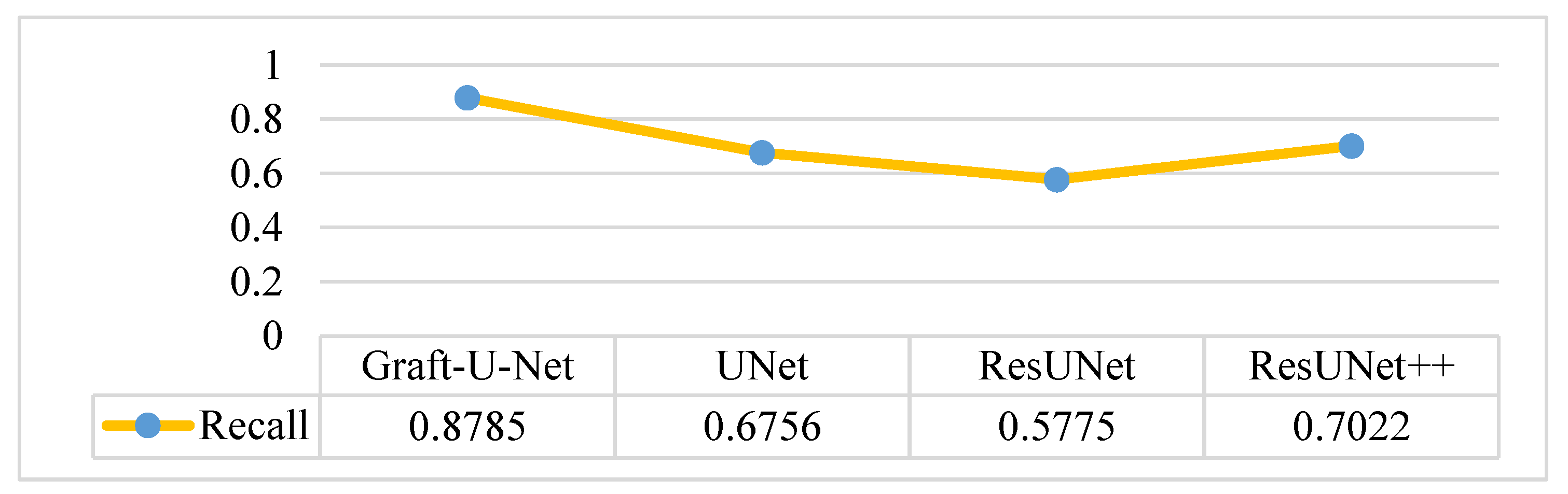

4.3. Experiment 1: Results of Kvasir-SEG Dataset Using Graft-U-Net

4.4. Experiment 2: Results of the CVC-ClinicDB Dataset Using Graft-U-Net

4.5. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Grady, W.M. Epigenetic Alterations in the Gastrointestinal Tract: Current and Emerging Use for Biomarkers of Cancer. Adv. Cancer Res. 2021, 151, 425–468.

2. Naz, J.; Sharif, M.; Raza, M.; Shah, J.H.; Yasmin, M.; Kadry, S.; Vimal, S. Recognizing Gastrointestinal Malignancies on WCE and CCE Images by an Ensemble of Deep and Handcrafted Features with Entropy and PCA Based Features Optimization. Neural Process. Lett. 2021, 1–26.

3. Nagahara, A.; Shiotani, A.; Iijima, K.; Kamada, T.; Fujiwara, Y.; Kasugai, K.; Kato, M.; Higuchi, K. The Role of Advanced Endoscopy in the Management of Inflammatory Digestive Diseases (Upper Gastrointestinal Tract). Dig. Endosc. 2021, 34, 63–72.

4. Naz, J.; Sharif, M.; Yasmin, M.; Raza, M.; Khan, M.A. Detection and Classification of Gastrointestinal Diseases using Machine Learning. Curr. Med. Imaging Former. Curr. Med. Imaging Rev. 2021, 17, 479–490.

5. Chen, P.-J.; Lin, M.-C.; Lai, M.-J.; Lin, J.-C.; Lu, H.H.-S.; Tseng, V.S. Accurate Classification of Diminutive Colorectal Polyps Using Computer-Aided Analysis. Gastroenterology 2018, 154, 568–575.

6. Banik, D.; Roy, K.; Bhattacharjee, D.; Nasipuri, M.; Krejcar, O. Polyp-Net: A Multimodel Fusion Network for Polyp Segmentation. IEEE Trans. Instrum. Meas. 2020, 70, 1–12.

7. Sun, S.; Guo, J.; Bhutani, M.S.; Giovannini, M.; Li, Z.; Jin, Z.; Yang, A.; Xu, G.; Wang, G. Can Endoscopic Ultrasound-Guided Needle-Based Confocal Laser Endomicroscopy Replace Fine-Needle Aspiration for Pancreatic and Mediastinal Diseases? Endosc. Ultrasound 2017, 6, 376–381.

8. Yang, Y.J.; Cho, B.-J.; Lee, M.-J.; Kim, J.H.; Lim, H.; Bang, C.S.; Jeong, H.M.; Hong, J.T.; Baik, G.H. Automated Classification of Colorectal Neoplasms in White-Light Colonoscopy Images via Deep Learning. J. Clin. Med. 2020, 9, 1593.

9. Mori, Y.; Kudo, S.; Ikehara, N.; Wakamura, K.; Wada, Y.; Kutsukawa, M.; Misawa, M.; Kudo, T.; Kobayashi, Y.; Miyachi, H.; et al. Comprehensive Diagnostic Ability of Endocytoscopy Compared with Biopsy for Colorectal Neoplasms: A Prospective Randomized Noninferiority Trial. Endoscopy 2013, 45, 98–105.

10. Tomar, N.K. Automatic Polyp Segmentation using Fully Convolutional Neural Network. arXiv 2021, arXiv:2101.04001.

11. Kronborg, O.; Regula, J. Population Screening for Colorectal Cancer: Advantages and Drawbacks. Dig. Dis. 2007, 25, 270–273.

12. Riegler, M. EIR-A Medical Multimedia System for Efficient Computer Aided Diagnosis. Ph.D. Thesis, University of Oslo, Oslo, Norway, 2017.

13. Ramzan, M.; Raza, M.; Sharif, M.; Khan, M.A.; Nam, Y. Gastrointestinal Tract Infections Classification Using Deep Learning. Comput. Mater. Contin. 2021, 69, 3239–3257.

14. Rasheed, S.; Raza, M.; Sharif, M.; Kadry, S.; Alharbi, A. Single Channel Image Enhancement (SCIE) of White Blood Cells Based on Virtual Hexagonal Filter (VHF) Designed over Square Trellis. J. Pers. Med. 2022, 12, 1232.

15. Amin, J.; Anjum, M.A.; Sharif, A.; Raza, M.; Kadry, S.; Nam, Y. Malaria Parasite Detection Using a Quantum-Convolutional Network. Comput. Mater. Contin. 2022, 70, 6023–6039.

16. Shahzad, A.; Raza, M.; Shah, J.H.; Sharif, M.; Nayak, R.S. Categorizing White Blood Cells by Utilizing Deep Features of Proposed 4B-Additionnet-Based CNN Network with Ant Colony Optimization. Complex Intell. Syst. 2022, 8, 3143–3159.

17. Naz, M.; Shah, J.H.; Khan, M.A.; Sharif, M.; Raza, M.; Damaševičius, R. From ECG Signals to Images: A Transformation Based Approach for Deep Learning. PeerJ Comput. Sci. 2021, 7, e386.

18. Amin, J.; Sharif, M.; Anjum, M.A.; Siddiqa, A.; Kadry, S.; Nam, Y.; Raza, M. 3D Semantic Deep Learning Networks for Leukemia Detection. Comput. Mater. Contin. 2021, 69, 785–799.

19. Sharif, M.I.; Khan, M.A.; Alhussein, M.; Aurangzeb, K.; Raza, M. A Decision Support System for Multimodal Brain Tumor Classification Using Deep Learning. Complex Intell. Syst. 2022, 8, 3007–3020.

20. Shin, Y.; Qadir, H.A.; Balasingham, I. Abnormal Colon Polyp Image Synthesis Using Conditional Adversarial Networks for Improved Detection Performance. IEEE Access 2018, 6, 56007–56017.

21. Huang, C.-H.; Wu, H.-Y.; Lin, Y.-L. HarDNet-MSEG: A Simple Encoder-Decoder Polyp Segmentation Neural Network that Achieves over 0.9 Mean Dice and 86 FPS. arXiv 2021, arXiv:2101.07172.

22. Nogueira-Rodríguez, A.; Domínguez-Carbajales, R.; López-Fernández, H.; Iglesias, J.; Cubiella, J.; Fdez-Riverola, F.; Reboiro-Jato, M.; Glez-Peña, D. Deep Neural Networks Approaches for Detecting and Classifying Colorectal Polyps. Neurocomputing 2020, 423, 721–734.

23. Liu, W.N.; Zhang, Y.Y.; Bian, X.Q.; Wang, L.J.; Yang, Q.; Zhang, X.D.; Huang, J. Study on Detection Rate of Polyps and Adenomas in Artificial-Intelligence-Aided Colonoscopy. Saudi J. Gastroenterol. Off. J. Saudi Gastroenterol. Assoc. 2020, 26, 13.

24. Lee, J.Y.; Jeong, J.; Song, E.M.; Ha, C.; Lee, H.J.; Koo, J.E.; Yang, D.-H.; Kim, N.; Byeon, J.-S. Real-Time Detection of Colon Polyps During Colonoscopy Using Deep Learning: Systematic Validation with Four Independent Datasets. Sci. Rep. 2020, 10, 8379.

25. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241.

26. Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

27. Jha, D.; Smedsrud, P.H.; Riegler, M.A.; Johansen, D.; De Lange, T.; Halvorsen, P.; Johansen, H.D. ResUNet++: An Advanced Architecture for Medical Image Segmentation. In Proceedings of the 2019 IEEE International Symposium on Multimedia (ISM), San Diego, CA, USA, 9–11 December 2019; pp. 225–2255.

28. Sharif, M.; Amin, J.; Raza, M.; Anjum, M.A.; Afzal, H.; Shad, S.A. Brain Tumor Detection Based on Extreme Learning. Neural Comput. Appl. 2020, 32, 15975–15987.

29. Amin, J.; Sharif, M.; Raza, M.; Saba, T.; Sial, R.; Shad, S.A. Brain Tumor Detection: A Long Short-Term Memory (LSTM)-Based Learning Model. Neural Comput. Appl. 2019, 32, 15965–15973.

30. Amin, J.; Sharif, M.; Gul, N.; Raza, M.; Anjum, M.A.; Nisar, M.W.; Bukhari, S.A.C. Brain Tumor Detection by Using Stacked Autoencoders in Deep Learning. J. Med. Syst. 2020, 44, 1–12.

31. Amin, J.; Sharif, M.; Raza, M.; Saba, T.; Anjum, M.A. Brain Tumor Detection Using Statistical and Machine Learning Method. Comput. Methods Programs Biomed. 2019, 177, 69–79.

32. Amin, J.; Sharif, M.; Raza, M.; Saba, T.; Rehman, A. Brain Tumor Classification: Feature Fusion. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi Arabia, 3–4 April 2019; pp. 1–6.

33. Khan, M.A.; Sharif, M.I.; Raza, M.; Anjum, A.; Saba, T.; Shad, S.A. Skin Lesion Segmentation and Classification: A Unified Framework of Deep Neural Network Features Fusion and Selection. Expert Syst. 2019, 39, e12497.

34. Saba, T.; Bokhari, S.T.F.; Sharif, M.; Yasmin, M.; Raza, M. Fundus Image Classification Methods for the Detection of Glaucoma: A Review. Microsc. Res. Technol. 2018, 81, 1105–1121.

35. Amin, J.; Sharif, M.; Rehman, A.; Raza, M.; Mufti, M.R. Diabetic Retinopathy Detection and Classification Using Hybrid Feature Set. Microsc. Res. Technol. 2018, 81, 990–996.

36. Ameling, S.; Wirth, S.; Paulus, D.; Lacey, G.; Vilarino, F. Texture-Based Polyp Detection in Colonoscopy. In Bildverarbeitung für die Medizin 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 346–350.

37. Bernal, J.; Tajbakhsh, N.; Sanchez, F.J.; Matuszewski, B.J.; Chen, H.; Yu, L.; Angermann, Q.; Romain, O.; Rustad, B.; Balasingham, I.; et al. Comparative Validation of Polyp Detection Methods in Video Colonoscopy: Results from the MICCAI 2015 Endoscopic Vision Challenge. IEEE Trans. Med. Imaging 2017, 36, 1231–1249.

38. Wang, Y.; Tavanapong, W.; Wong, J.; Oh, J.H.; De Groen, P.C. Polyp-Alert: Near Real-Time Feedback During Colonoscopy. Comput. Methods Programs Biomed. 2015, 120, 164–179.

39. Shin, Y.; Qadir, H.A.; Aabakken, L.; Bergsland, J.; Balasingham, I. Automatic Colon Polyp Detection Using Region Based Deep CNN and Post Learning Approaches. IEEE Access 2018, 6, 40950–40962.

40. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661.

41. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

42. Yamada, M.; Saito, Y.; Imaoka, H.; Saiko, M.; Yamada, S.; Kondo, H.; Takamaru, H.; Sakamoto, T.; Sese, J.; Kuchiba, A.; et al. Development of a Real-Time Endoscopic Image Diagnosis Support System Using Deep Learning Technology in Colonoscopy. Sci. Rep. 2019, 9, 1–9.

43. Esteva, A.; Chou, K.; Yeung, S.; Naik, N.; Madani, A.; Mottaghi, A.; Liu, Y.; Topol, E.; Dean, J.; Socher, R. Deep Learning-Enabled Medical Computer Vision. NPJ Digit. Med. 2021, 4, 1–9.

44. Yeung, M.; Sala, E.; Schönlieb, C.-B.; Rundo, L. Unified Focal Loss: Generalising Dice and Cross Entropy-Based Losses to Handle Class Imbalanced Medical Image Segmentation. Comput. Med. Imaging Graph. 2021, 95, 102026.

45. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

46. Amin, J.; Sharif, M.; Anjum, M.A.; Raza, M.; Bukhari, S.A.C. Convolutional Neural Network with Batch Normalization for Glioma and Stroke Lesion Detection Using MRI. Cogn. Syst. Res. 2020, 59, 304–311.

47. Song, P.; Li, J.; Fan, H. Attention Based Multi-Scale Parallel Network for Polyp Segmentation. Comput. Biol. Med. 2022, 146, 105476.

48. Lin, Y.; Wu, J.; Xiao, G.; Guo, J.; Chen, G.; Ma, J. BSCA-Net: Bit Slicing Context Attention Network for Polyp Segmentation. Pattern Recognit. 2022, 132, 108917.

49. Park, K.B.; Lee, J.Y. SwinE-Net: Hybrid Deep Learning Approach to Novel Polyp Segmentation Using Convolutional Neural Network and Swin Transformer. J. Comput. Des. Eng. 2022, 9, 616–632.

50. Zhao, X.; Zhang, L.; Lu, H. Automatic Polyp Segmentation Via Multi-Scale Subtraction Network. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 7 September 2021; pp. 120–130.

51. Wei, J.; Hu, Y.; Zhang, R.; Li, Z.; Zhou, S.K.; Cui, S. Shallow Attention Network for Polyp Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 7 September 2021; pp. 699–708.

52. Kim, T.; Lee, H.; Kim, D. UACANet: Uncertainty Augmented Context Attention for Polyp Segmentation. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, China, 17 October 2021; pp. 2167–2175.

53. Hasan, M.; Islam, N.; Rahman, M.M. Gastrointestinal Polyp Detection Through a Fusion of Contourlet Transform and Neural Features. J. King Saud Univ.-Comput. Inf. Sci. 2020, 34, 526–533.

54. Kang, J.; Gwak, J. Ensemble of Instance Segmentation Models for Polyp Segmentation in Colonoscopy Images. IEEE Access 2019, 7, 26440–26447.

55. Kassani, S.H.; Kassani, P.H.; Wesolowski, M.J.; Schneider, K.A.; Deters, R. Deep Transfer Learning Based Model for Colorectal Cancer Histopathology Segmentation: A Comparative Study of Deep Pre-Trained Models. Int. J. Med. Inform. 2021, 159, 104669.

56. Ribeiro, J.; Nóbrega, S.; Cunha, A. Polyps Detection in Colonoscopies. Procedia Comput. Sci. 2022, 196, 477–484.

57. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

58. Jha, D.; Smedsrud, P.H.; Riegler, M.A.; Halvorsen, P.; De Lange, T.; Johansen, D.; Johansen, H.D. Kvasir-SEG: A Segmented Polyp Dataset. In Proceedings of the International Conference on Multimedia Modeling, Daejeon, South Korea, 5–8 January 2020; pp. 451–462.

59. Bernal, J.; Sánchez, F.J.; Fernández-Esparrach, G.; Gil, D.; Rodríguez, C.; Vilariño, F. WM-DOVA Maps for Accurate Polyp Highlighting in Colonoscopy: Validation Vs. Saliency Maps from Physicians. Comput. Med. Imaging Graph. 2015, 43, 99–111.

60. Jha, D.; Riegler, M.A.; Johansen, D.; Halvorsen, P.; Johansen, H.D. DoubleU-Net: A Deep Convolutional Neural Network for Medical Image Segmentation. In Proceedings of the 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), Rochester, MN, USA, 28–30 July 2020; pp. 558–564.

| Ref. | Year | Model | Dataset | mDice |
|---|---|---|---|---|
| [47] | 2022 | AMNet | Kvasir-SEG | 91.20% |
| [48] | 2022 | BSCA-Net | Kvasir-SEG | 91.00% |
| [49] | 2022 | SwinE-Net | Kvasir-SEG | 93.80% |
| [50] | 2021 | MSNet | Kvasir-SEG | 90.70% |
| [51] | 2021 | SANet | Kvasir-SEG | 90.40% |
| [52] | 2021 | UACANet | Kvasir-SEG | 90.50% |
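The comparison above ranks methods by mean Dice (mDice). For a predicted mask P and a ground-truth mask G, Dice = 2|P ∩ G| / (|P| + |G|). A minimal sketch follows, assuming binary masks given as nested lists and mDice taken as the average of per-image scores; the small `eps` smoothing term is an assumption, and the cited works may differ in such details (for example, dataset-level rather than per-image averaging).

```python
from typing import List, Sequence

Mask = Sequence[Sequence[int]]  # binary mask: 1 = polyp pixel, 0 = background


def dice(pred: Mask, truth: Mask, eps: float = 1e-7) -> float:
    """Dice = 2|P ∩ G| / (|P| + |G|); eps keeps two empty masks from dividing by zero."""
    intersection = 0
    total = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, g in zip(pred_row, truth_row):
            intersection += p * g
            total += p + g
    return (2 * intersection + eps) / (total + eps)


def mean_dice(preds: List[Mask], truths: List[Mask]) -> float:
    """mDice as the average of per-image Dice scores."""
    scores = [dice(p, g) for p, g in zip(preds, truths)]
    return sum(scores) / len(scores)


pred_a, truth_a = [[1, 1], [0, 0]], [[1, 0], [0, 0]]  # Dice = 2*1 / (2 + 1) = 2/3
pred_b, truth_b = [[0, 1], [1, 0]], [[0, 1], [1, 0]]  # perfect overlap, Dice = 1
print(round(mean_dice([pred_a, pred_b], [truth_a, truth_b]), 4))  # 0.8333
```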

| Layer No. | Network Layers | Feature Map Dimension | Sliding Window Size | Stride | Padding | Pooling Window |
|---|---|---|---|---|---|---|
| 1 | Input | 512 × 512 × 3 | 3 × 3 × 3 × 8 | [1 1] | [0 0 0 0] | - |
| 2, 3, 4 | C1, BN1, A1 | 512 × 512 × 8 | 3 × 3 × 3 × 8 | [1 1] | Same | - |
| 5, 6, 7 | C2, BN2, A2 | 512 × 512 × 8 | 3 × 3 × 3 × 8 | [1 1] | Same | - |
| 8, 9, 10 | C3, BN3, A3 | 512 × 512 × 8 | 3 × 3 × 3 × 8 | [1 1] | Same | - |
| 11 | MP | 256 × 256 × 8 | 3 × 3 × 3 × 8 | [1 1] | Same | Max pooling 3 × 3 |
| 12, 13, 14 | C4, BN4, A4 | 256 × 256 × 16 | 3 × 3 × 3 × 16 | [1 1] | Same | - |
| 15, 16, 17 | C5, BN5, A5 | 256 × 256 × 16 | 3 × 3 × 3 × 16 | [1 1] | Same | - |
| 18, 19, 20 | C6, BN6, A6 | 256 × 256 × 16 | 3 × 3 × 3 × 16 | [1 1] | Same | - |
| 21 | MP | 128 × 128 × 16 | 3 × 3 × 3 × 16 | [1 1] | Same | Max pooling 3 × 3 |
| 22, 23, 24 | C7, BN7, A7 | 128 × 128 × 32 | 3 × 3 × 3 × 32 | [1 1] | Same | - |
| 25, 26, 27 | C8, BN8, A8 | 128 × 128 × 32 | 3 × 3 × 3 × 32 | [1 1] | Same | - |
| 28, 29, 30 | C9, BN9, A9 | 128 × 128 × 32 | 3 × 3 × 3 × 32 | [1 1] | Same | - |
| 31 | MP | 64 × 64 × 32 | 3 × 3 × 3 × 32 | [1 1] | Same | Max pooling 3 × 3 |
| 32, 33, 34 | C10, BN10, A10 | 64 × 64 × 48 | 3 × 3 × 3 × 48 | [1 1] | Same | - |
| 35, 36, 37 | C11, BN11, A11 | 64 × 64 × 48 | 3 × 3 × 3 × 48 | [1 1] | Same | - |
| 38, 39, 40 | C12, BN12, A12 | 64 × 64 × 48 | 3 × 3 × 3 × 48 | [1 1] | Same | - |
| 41 | MP | 32 × 32 × 48 | 3 × 3 × 3 × 48 | [1 1] | Same | Max pooling 3 × 3 |
| 42, 43, 44 | C13, BN13, A13 | 32 × 32 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | - |
| 45, 46, 47 | C14, BN14, A14 | 32 × 32 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | - |
| 48, 49, 50 | C15, BN15, A15 | 32 × 32 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | - |
| 51 | MP | 16 × 16 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | Max pooling 3 × 3 |
| 52, 53, 54 | C16, BN16, A16 | 16 × 16 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | - |
| 55 | UPS1 | 32 × 32 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | - |
| 56 | CNC1 | 32 × 32 × 128 | - | - | - | - |
| 57, 58, 59 | C17, BN17, A17 | 32 × 32 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | - |
| 60 | UPS2 | 64 × 64 × 64 | 3 × 3 × 3 × 64 | [1 1] | Same | - |
| 61 | CNC2 | 64 × 64 × 112 | - | - | - | - |
| 62, 63, 64 | C18, BN18, A18 | 64 × 64 × 48 | 3 × 3 × 3 × 48 | [1 1] | Same | - |
| 65 | UPS3 | 128 × 128 × 48 | 3 × 3 × 3 × 48 | [1 1] | Same | - |
| 66 | CNC3 | 128 × 128 × 80 | - | - | - | - |
| 67, 68, 69 | C19, BN19, A19 | 128 × 128 × 32 | 3 × 3 × 3 × 32 | [1 1] | Same | - |
| 70 | UPS4 | 256 × 256 × 32 | 3 × 3 × 3 × 32 | [1 1] | Same | - |
| 71 | CNC4 | 256 × 256 × 48 | - | - | - | - |
| 72, 73, 74 | C20, BN20, A20 | 256 × 256 × 16 | 3 × 3 × 3 × 16 | [1 1] | Same | - |
| 75 | UPS5 | 512 × 512 × 16 | 3 × 3 × 3 × 16 | [1 1] | Same | - |
| 76 | CNC5 | 512 × 512 × 24 | - | - | - | - |
| 77, 78, 79 | C21, BN21, A21 | 512 × 512 × 8 | 3 × 3 × 3 × 8 | [1 1] | Same | - |
| 80, 81 | C22, A22 | 512 × 512 × 1 | 3 × 3 × 3 × 1 | [1 1] | Same | - |
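The channel counts of the concatenation rows (CNC1 to CNC5) follow from adding the depth of the upsampled map to the depth of the encoder skip connection at the same resolution. The short script below recomputes those sizes from the layer table. It assumes that each max pooling halves the spatial size (a stride of 2, inferred from the 512 to 16 progression of feature-map sizes, since the stride column lists [1 1] throughout), that each upsampling doubles the size without changing depth, and that each skip comes from the last convolution of the matching encoder stage. The function names are hypothetical, and the parallel graft branches are not modeled.

```python
import math
from typing import List, Tuple

ENCODER_FILTERS = [8, 16, 32, 48, 64]  # filter depths of the five encoder stages
INPUT_SIDE = 512                        # 512 x 512 input frames


def same_pool_side(side: int, stride: int = 2) -> int:
    """Side length after 'same'-padded pooling: ceil(side / stride)."""
    return math.ceil(side / stride)


def encoder_stages(side: int = INPUT_SIDE) -> Tuple[List[Tuple[int, int]], int]:
    """Return (side, channels) of each stage's last conv (the skip sources)
    and the side length left after the final max pooling."""
    skips = []
    for filters in ENCODER_FILTERS:
        skips.append((side, filters))
        side = same_pool_side(side)
    return skips, side


def decoder_stages(
    bottleneck: Tuple[int, int], skips: List[Tuple[int, int]]
) -> List[Tuple[int, int, int]]:
    """Return (side, concatenated channels, conv output channels) per decoder stage."""
    side, channels = bottleneck
    rows = []
    for skip_side, skip_channels in reversed(skips):
        side *= 2                          # UPS: 2x upsampling, depth unchanged
        if side != skip_side:
            raise ValueError("upsampled map and skip connection are misaligned")
        concat = channels + skip_channels  # CNC: channel-wise concatenation
        channels = skip_channels           # conv block reduces depth to the skip's depth
        rows.append((side, concat, channels))
    return rows


skips, bottom_side = encoder_stages()
for row in decoder_stages((bottom_side, ENCODER_FILTERS[-1]), skips):
    print(row)
# (32, 128, 64), (64, 112, 48), (128, 80, 32), (256, 48, 16), (512, 24, 8),
# matching CNC1 to CNC5 and the depths of C17 to C21
```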

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ramzan, M.; Raza, M.; Sharif, M.I.; Kadry, S. Gastrointestinal Tract Polyp Anomaly Segmentation on Colonoscopy Images Using Graft-U-Net. J. Pers. Med. 2022, 12, 1459. https://doi.org/10.3390/jpm12091459
