Deep Learning to Measure the Intensity of Indocyanine Green in Endometriosis Surgeries with Intestinal Resection

Abstract

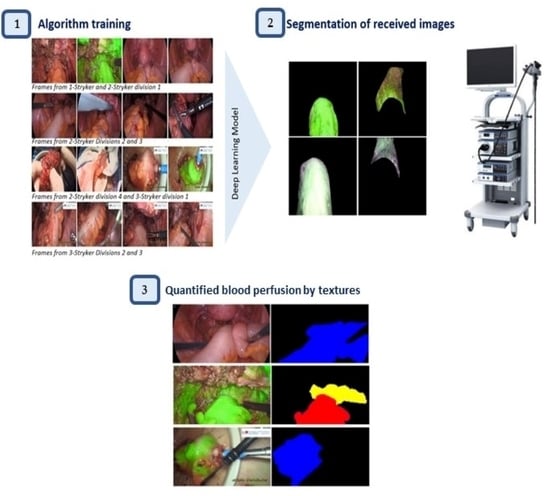

1. Introduction

2. Materials and Methods

2.1. Pilot Protocol for Video Recording

- Optics placement: The camera must be optimally positioned after the resection is completed and before the ICG is injected. The lens is directed at the anastomosis area and held at 90° to the anatomical plane (the intestinal serosa), framed so that 2/3 of the proximal part and 1/3 of the distal part are shown. The optic should be placed approximately 8 cm from the tissue, a distance roughly equal to the span of two open fenestrated grasping forceps.

- Video recording: Once the optics are correctly positioned, 5 mL of ICG (2.5 mg/mL) is injected intravenously. The surgeon should record the same shot for at least 140 s to ensure that the ICG has reached the bowel and the fluorescence signal has stabilized [17].

- Completion: Once both areas of the bowel have been successfully recorded, the surgery can resume.
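The dose and timing in the protocol reduce to simple arithmetic: 5 mL at 2.5 mg/mL delivers 5 × 2.5 = 12.5 mg of ICG, and a fixed shot of at least 140 s at a frame rate of f frames per second spans at least 140·f frames. The Python sketch below encodes these numbers. The frame rate is an assumption (the protocol does not state one), and the function names are hypothetical helpers, not part of the study.

```python
import math

# Values taken from the recording protocol.
ICG_VOLUME_ML = 5.0            # injected volume (mL)
ICG_CONCENTRATION_MG_ML = 2.5  # ICG concentration (mg/mL)
MIN_RECORDING_S = 140.0        # minimum duration of the fixed shot (s)


def icg_dose_mg(volume_ml=ICG_VOLUME_ML, concentration_mg_ml=ICG_CONCENTRATION_MG_ML):
    """Total ICG mass delivered by the bolus: volume x concentration."""
    return volume_ml * concentration_mg_ml


def min_frames(fps, duration_s=MIN_RECORDING_S):
    """Frames needed to cover the minimum recording window at a given frame rate."""
    return math.ceil(fps * duration_s)


def recording_is_long_enough(n_frames, fps):
    """True if a clip of n_frames at fps lasts at least MIN_RECORDING_S seconds."""
    return n_frames / fps >= MIN_RECORDING_S


if __name__ == "__main__":
    print(icg_dose_mg())                           # 12.5 (mg)
    print(min_frames(fps=25))                      # 3500 frames at an assumed 25 fps
    print(recording_is_long_enough(3000, fps=25))  # False: 3000 / 25 = 120 s < 140 s
```

A check like `recording_is_long_enough` could be run on each clip before analysis to discard recordings that stop before the signal has had time to stabilize.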

2.2. Data Collection and Processing

- Type I (Figure 1A): the intestinal tract, primarily the colon, either healthy or affected by deep infiltrating nodules.

- Type II (Figure 1B): the bowel after segmental resection or discoid excision. These scenes were captured with or without ICG; those recorded with ICG were used to analyze blood perfusion.

- Type III (Figure 1C): the proximal part of the bowel outside the peritoneal cavity after a segmental resection. These scenes were less frequent than Types I and II.
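The three scene categories can be represented as a small labelled data structure. The Python sketch below is a hypothetical illustration: the names `SceneType`, `Scene`, `perfusion_scenes`, and `count_by_type` are not from the study. Following the Type II description, it treats only Type II scenes recorded with ICG as perfusion material, which is an assumption about how that subset was selected.

```python
from dataclasses import dataclass
from enum import Enum


class SceneType(Enum):
    """Scene categories from Section 2.2 (descriptions paraphrased)."""
    TYPE_I = "intestinal tract, mainly colon, healthy or with deep infiltrating nodules"
    TYPE_II = "bowel after segmental resection or discoid excision"
    TYPE_III = "proximal bowel outside the peritoneal cavity after segmental resection"


@dataclass(frozen=True)
class Scene:
    """Hypothetical record for one labelled video scene."""
    video_id: str
    scene_type: SceneType
    has_icg: bool


def perfusion_scenes(scenes):
    """Select Type II scenes recorded with ICG (assumed perfusion subset)."""
    return [s for s in scenes if s.scene_type is SceneType.TYPE_II and s.has_icg]


def count_by_type(scenes):
    """Number of scenes per category, e.g. to check class balance before training."""
    counts = {t: 0 for t in SceneType}
    for s in scenes:
        counts[s.scene_type] += 1
    return counts
```

Counting scenes per category makes the imbalance noted for Type III explicit, which is relevant when choosing data augmentation or a loss function robust to unbalanced classes.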

2.3. Automatic Segmentation

2.4. Feature Representation through Textures of Image

3. Results

3.1. Automatic Segmentation and Performance Evaluation

3.2. Analysis of the Blood Perfusion Assessment through the Textures

4. Discussion

Strengths, Limitations and Future Perspectives

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Mehedintu, C.; Plotogea, M.N.; Ionescu, S.; Antonovici, M. Endometriosis Still a Challenge. J. Med. Life 2014, 7, 349–357.
2. Burney, R.O.; Giudice, L.C. Pathogenesis and Pathophysiology of Endometriosis. Fertil. Steril. 2012, 98, 511–519.
3. Koninckx, P.R.; Ussia, A.; Adamyan, L.; Wattiez, A.; Donnez, J. Deep Endometriosis: Definition, Diagnosis, and Treatment. Fertil. Steril. 2012, 98, 564–571.
4. Rousset, P.; Peyron, N.; Charlot, M.; Chateau, F.; Golfier, F.; Raudrant, D.; Cotte, E.; Isaac, S.; Réty, F.; Valette, P. Bowel Endometriosis: Preoperative Diagnostic Accuracy of 3.0-T MR Enterography—Initial Results. Radiology 2014, 273, 117–124.
5. Bianchi, A.; Pulido, L.; Espín, F.; Antonio Hidalgo, L.; Heredia, A.; José Fantova, M. Endometriosis Intestinal. Estado Actual. Cir. Española 2007, 81, 170–176.
6. Hernández Gutiérrez, A.; Spagnolo, E.; Zapardiel, I.; Garcia-Abadillo Seivane, R.; López Carrasco, A.; Salas Bolívar, P.; Pascual Miguelañez, I. Post-Operative Complications and Recurrence Rate After Treatment of Bowel Endometriosis: Comparison of Three Techniques. Eur. J. Obstet. Gynecol. Reprod. Biol. X 2019, 4, 100083.
7. Gobierno de España. Guía De Atención a Las Mujeres Con Endometriosis En El Sistema Nacional De Salud. 2013. Available online: https://www.sanidad.gob.es/organizacion/sns/planCalidadSNS/pdf/equidad/ENDOMETRIOSIS.pdf (accessed on 30 March 2022).
8. Dunselman, G.A.; Vermeulen, N.; Becker, C.; Calhaz-Jorge, C.; D’Hooghe, T.; Bie, B.D.; Heikinheimo, O.; Horne, A.W.; Kiesel, L.; Nap, A.; et al. ESHRE Guideline: Management of Women with Endometriosis. Hum. Reprod. 2014, 29, 400–412.
9. Ferrero, S.; Stabilini, C.; Barra, F.; Clarizia, R.; Roviglione, G.; Ceccaroni, M. Bowel Resection for Intestinal Endometriosis. Best Pract. Res. Clin. Obstet. Gynaecol. 2021, 71, 114–128.
10. Donnez, O.; Roman, H. Choosing the Right Surgical Technique for Deep Endometriosis: Shaving, Disc Excision, Or Bowel Resection? Fertil. Steril. 2017, 108, 931–942.
11. Narvaez-Sanchez, R.; Chuaire, L.; Sanchez, M.C.; Bonilla, J. Circulacion Intestinal: Su Organizacion, Control Y Papel En El Paciente Critico. Colomb. Med. 2004, 35, 231.
12. Karliczek, A.; Benaron, D.A.; Baas, P.C.; Zeebregts, C.J.; Wiggers, T.; Van Dam, G.M. Intraoperative Assessment of Microperfusion with Visible Light Spectroscopy for Prediction of Anastomotic Leakage in Colorectal Anastomoses. Colorectal Dis. 2010, 12, 1018–1025.
13. Chang, Y.K.; Foo, C.C.; Yip, J.; Wei, R.; Ng, K.K.; Lo, O.; Choi, H.K.; Law, W.L. The Impact of Indocyanine-Green Fluorescence Angiogram on Colorectal Resection. Surgeon 2018, 17, 270–276.
14. Greene, A.D.; Lang, S.A.; Kendziorski, J.A.; Sroga-Rios, J.M.; Herzog, T.J.; Burns, K.A. Endometriosis: Where are we and Where are we Going? Reproduction 2016, 152, R63–R78.
15. Jafari, M.D.; Wexner, S.D.; Martz, J.E.; McLemore, E.C.; Margolin, D.A.; Sherwinter, D.A.; Lee, S.W.; Senagore, A.J.; Phelan, M.J.; Stamos, M.J. Perfusion Assessment in Laparoscopic Left-Sided/Anterior Resection (PILLAR II): A Multi-Institutional Study. J. Am. Coll. Surg. 2015, 220, 82–92.
16. Nagaya, T.; Nakamura, Y.A.; Choyke, P.L.; Kobayashi, H. Fluorescence-Guided Surgery. Front. Oncol. 2017, 7, 314.
17. Son, G.M.; Kwon, M.S.; Kim, Y.; Kim, J.; Kim, S.H.; Lee, J.W. Quantitative Analysis of Colon Perfusion Pattern using Indocyanine Green (ICG) Angiography in Laparoscopic Colorectal Surgery. Surg. Endosc. 2018, 33, 1640–1649.
18. Gosvig, K.; Jensen, S.S.; Qvist, N.; Nerup, N.; Agnus, V.; Diana, M.; Ellebæk, M.B. Quantification of ICG Fluorescence for the Evaluation of Intestinal Perfusion: Comparison between Two Software-Based Algorithms for Quantification. Surg. Endosc. 2020, 35, 5043–5050.
19. Gosvig, K.; Jensen, S.S.; Qvist, N.; Agnus, V.; Jensen, T.S.; Lindner, V.; Marescaux, J.; Diana, M.; Ellebæk, M.B. Remote Computer-Assisted Analysis of ICG Fluorescence Signal for Evaluation of Small Intestinal Anastomotic Perfusion: A Blinded, Randomized, Experimental Trial. Surg. Endosc. 2019, 34, 2095–2102.
20. Lütken, C.D.; Achiam, M.P.; Osterkamp, J.; Svendsen, M.B.; Nerup, N. Quantification of Fluorescence Angiography: Toward a Reliable Intraoperative Assessment of Tissue Perfusion—A Narrative Review. Langenbecks Arch. Surg. 2020, 406, 251–259.
21. Boni, L.; David, G.; Dionigi, G.; Rausei, S.; Cassinotti, E.; Fingerhut, A. Indocyanine Green-Enhanced Fluorescence to Assess Bowel Perfusion during Laparoscopic Colorectal Resection. Surg. Endosc. 2015, 30, 2736–2742.
22. Oliveira, M.A.P.; Pereira, T.R.D.; Gilbert, A.; Tulandi, T.; de Oliveira, H.C.; De Wilde, R.L. Bowel Complications in Endometriosis Surgery. Best Pract. Res. Clin. Obstet. Gynaecol. 2016, 35, 51–62.
23. Joshi, S.; Lo Menzo, E.; Dip, F.; Szomstein, S.; Rosenthal, R.J. Vascular Perfusion in Small Bowel Anastomosis. In Video Atlas of Intraoperative Applications of Near Infrared Fluorescence Imaging; Aleassa, E., El-Hayek, K., Eds.; Springer: Cham, Switzerland, 2020; pp. 95–101.
24. Goutsias, J.; Heijmans, H.J.A.M.; Sivakumar, K. Morphological Operators for Image Sequences. Comput. Vis. Image Underst. 1995, 62, 326–346.
25. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 4th ed.; Pearson: London, UK, 2018.
26. Siddique, N.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V. U-Net and its Variants for Medical Image Segmentation: A Review of Theory and Applications. IEEE Access 2021, 9, 82031–82057.
27. Qadri, S.F.; Shen, L.; Ahmad, M.; Qadri, S.; Zareen, S.S.; Akbar, M.A. SVseg: Stacked Sparse Autoencoder-Based Patch Classification Modeling for Vertebrae Segmentation. Mathematics 2022, 10, 796.
28. Ahmad, M.; Qadri, S.F.; Qadri, S.; Saeed, I.A.; Zareen, S.S.; Iqbal, Z.; Alabrah, A.; Alaghbari, H.M.; Mizanur Rahman, S. A Lightweight Convolutional Neural Network Model for Liver Segmentation in Medical Diagnosis. Comput. Intell. Neurosci. 2022, 2022, 7954333.
29. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Lecture Notes in Computer Science; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Springer: Cham, Switzerland, 2015; pp. 234–241.
30. Montserrat, D.M.; Lin, Q.; Allebach, J.; Delp, E. Training Object Detection and Recognition CNN Models using Data Augmentation. Electron. Imaging 2017, 2017, 27–36.
31. Shorten, C.; Khoshgoftaar, T.M. A Survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 1–48.
32. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.
33. Aggarwal, P. Data Augmentation in Dermatology Image Recognition using Machine Learning. Skin Res. Technol. 2019, 25, 815–820.
34. Wong, S.C.; Gatt, A.; Stamatescu, V.; McDonnell, M.D. Understanding Data Augmentation for Classification: When to Warp? In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 30 November–2 December 2016; pp. 1–6.
35. Scherer, D.; Müller, A.; Behnke, S. Evaluation of Pooling Operations in Convolutional Architectures for Object Recognition. In Artificial Neural Networks–ICANN 2010; Lecture Notes in Computer Science; Diamantaras, K., Duch, W., Iliadis, L.S., Eds.; Springer: Cham, Switzerland; Berlin/Heidelberg, Germany, 2010.
36. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.
37. Zhang, Z.; Sabuncu, M.R. Generalized Cross Entropy Loss for Training Deep Neural Networks with Noisy Labels. arXiv 2018, arXiv:1805.07836.
38. Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Cardoso, M.J. Generalised Dice Overlap as a Deep Learning Loss Function for Highly Unbalanced Segmentations. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Cham, Switzerland, 2017.
39. Davies, E.R. Computer Vision: Principles, Algorithms, Applications, Learning, 5th ed.; Academic Press Elsevier: London, UK, 2017.
40. Hung, C.; Song, E.; Lan, Y. Foundation of Deep Machine Learning in Neural Networks. In Image Texture Analysis; Springer International Publishing: Cham, Switzerland, 2019; pp. 201–232.
41. Kobayashi, T.; Sundaram, D.; Nakata, K.; Tsurui, H. Gray-Level Co-Occurrence Matrix Analysis of several Cell Types in Mouse Brain using Resolution-Enhanced Photothermal Microscopy. J. Biomed. Opt. 2017, 22, 036011.
42. Safavian, S.R.; Landgrebe, D. A Survey of Decision Tree Classifier Methodology. IEEE Trans. Syst. Man Cybern. 1991, 21, 660–674.
43. Komen, N.; Slieker, J.; Kort, P.; Wilt, J.; Harst, E.; Coene, P.P.; Gosselink, M.P.; Tetteroo, G.; Graaf, E.; Beek, T.; et al. High Tie Versus Low Tie in Rectal Surgery: Comparison of Anastomotic Perfusion. Int. J. Colorectal Dis. 2011, 26, 1075–1078.
44. McCool, M.; Robison, A.D.; Reinders, J. (Eds.) K-Means Clustering. In Structured Parallel Programming; Elsevier: Waltham, MA, USA, 2012; pp. 279–289.
45. Cunningham, J.P.; Ghahramani, Z. Linear Dimensionality Reduction: Survey, Insights, and Generalizations. J. Mach. Learn. Res. 2015, 16, 2859–2900.
46. Van der Maaten, L.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.
47. Tiu, E. Metrics to Evaluate Your Semantic Segmentation Model. Available online: https://towardsdatascience.com/metrics-to-evaluate-your-semantic-segmentation-model-6bcb99639aa2 (accessed on 8 June 2022).

| Model | Data Augmentation | Number of Filters | Loss Function |
|---|---|---|---|
| A | No | 16 | LCCE |
| B | Yes | 16 | LCCE |
| C | Yes | 16 | LCCE + LDLS |
| D | Yes | 24 | LCCE |
| E | Yes | 24 | LCCE + LDLS |
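Models C and E combine a categorical cross-entropy term (LCCE) with a Dice-based term (LDLS). A minimal pure-Python sketch of such a combined loss follows. It assumes per-pixel one-hot labels and softmax probabilities, equal weighting of the two terms, and the generalised Dice formulation of Sudre et al. (listed in the references, with class weights equal to the inverse squared class volume) for LDLS; whether the authors used exactly this variant is an assumption, and all function names are hypothetical.

```python
import math

EPS = 1e-7  # numerical guard against log(0) and division by zero


def categorical_cross_entropy(y_true, y_pred):
    """Mean over pixels of -sum_c y_true[c] * log(y_pred[c]).

    y_true: list of one-hot vectors, one per pixel
    y_pred: list of probability vectors, one per pixel (e.g. softmax output)
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        total -= sum(tc * math.log(max(pc, EPS)) for tc, pc in zip(t, p))
    return total / len(y_true)


def generalised_dice_loss(y_true, y_pred):
    """Generalised Dice loss: 1 - 2 * sum_c w_c |R_c . P_c| / sum_c w_c (|R_c| + |P_c|).

    Each class weight w_c = 1 / (class volume)^2, so small classes count as much as large ones.
    """
    n_classes = len(y_true[0])
    numerator = 0.0
    denominator = 0.0
    for c in range(n_classes):
        r = [t[c] for t in y_true]
        p = [q[c] for q in y_pred]
        w = 1.0 / (sum(r) ** 2 + EPS)
        numerator += w * sum(ri * pi for ri, pi in zip(r, p))
        denominator += w * sum(ri + pi for ri, pi in zip(r, p))
    return 1.0 - 2.0 * numerator / (denominator + EPS)


def combined_loss(y_true, y_pred):
    """LCCE + LDLS with equal weights (the weighting is an assumption)."""
    return categorical_cross_entropy(y_true, y_pred) + generalised_dice_loss(y_true, y_pred)


if __name__ == "__main__":
    labels = [[1, 0], [0, 1], [1, 0], [0, 1]]  # one-hot: 4 pixels, 2 classes
    uncertain = [[0.5, 0.5]] * 4               # maximally uncertain prediction
    print(combined_loss(labels, labels))       # close to 0 for a perfect prediction
    print(combined_loss(labels, uncertain))    # about log(2) + 0.5
```

The cross-entropy term gives smooth per-pixel gradients, while the Dice term directly rewards overlap and is less dominated by the background class; combining them is a common choice when the target region occupies a small part of the frame.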

| Model | Accuracy | MCC | DC | JI |
|---|---|---|---|---|
| A | 0.6715 | – | – | – |
| B | 0.7800 | 0.6877 | 0.7055 | 0.6875 |
| C | 0.8752 | 0.8183 | 0.8345 | 0.8052 |
| D | 0.8259 | 0.7127 | 0.7251 | 0.6903 |
| E | 0.9245 | 0.9478 | 0.9568 | 0.9316 |
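The columns of the results table, read here as accuracy, the Matthews correlation coefficient (MCC), the Dice coefficient (DC), and the Jaccard index (JI), can all be computed from the pixel-level confusion counts (TP, TN, FP, FN) between a predicted mask and its ground truth: Accuracy = (TP + TN)/(TP + TN + FP + FN), DC = 2TP/(2TP + FP + FN), and JI = TP/(TP + FP + FN). The Python sketch below assumes binary masks (for example bowel versus background) flattened to lists of 0/1 values; the function names are hypothetical, and how the metrics were aggregated across images is not specified here.

```python
import math


def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for two flattened binary masks with values 0/1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def segmentation_metrics(y_true, y_pred):
    """Accuracy, MCC, Dice and Jaccard for one binary mask pair."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0  # 0 when undefined
    overlap_den = tp + fp + fn
    # If both masks are empty there is nothing to overlap; score it as perfect.
    dice = 2 * tp / (2 * tp + fp + fn) if overlap_den else 1.0
    jaccard = tp / overlap_den if overlap_den else 1.0
    return {"accuracy": accuracy, "mcc": mcc, "dice": dice, "jaccard": jaccard}


if __name__ == "__main__":
    truth = [1, 1, 1, 0, 0, 0, 0, 0]
    pred = [1, 1, 0, 1, 0, 0, 0, 0]  # tp=2, tn=4, fp=1, fn=1
    # accuracy 0.75, Dice 2/3, Jaccard 0.5, MCC 7/15
    print(segmentation_metrics(truth, pred))
```

For a single mask pair, DC and JI are linked by JI = DC/(2 − DC), so they always rank two predictions of the same mask in the same order; MCC additionally accounts for true negatives, which makes it informative when the background dominates the frame.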

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hernández, A.; de Zulueta, P.R.; Spagnolo, E.; Soguero, C.; Cristobal, I.; Pascual, I.; López, A.; Ramiro-Cortijo, D. Deep Learning to Measure the Intensity of Indocyanine Green in Endometriosis Surgeries with Intestinal Resection. J. Pers. Med. 2022, 12, 982. https://doi.org/10.3390/jpm12060982

Hernández A, de Zulueta PR, Spagnolo E, Soguero C, Cristobal I, Pascual I, López A, Ramiro-Cortijo D. Deep Learning to Measure the Intensity of Indocyanine Green in Endometriosis Surgeries with Intestinal Resection. Journal of Personalized Medicine. 2022; 12(6):982. https://doi.org/10.3390/jpm12060982

Chicago/Turabian Style: Hernández, Alicia, Pablo Robles de Zulueta, Emanuela Spagnolo, Cristina Soguero, Ignacio Cristobal, Isabel Pascual, Ana López, and David Ramiro-Cortijo. 2022. "Deep Learning to Measure the Intensity of Indocyanine Green in Endometriosis Surgeries with Intestinal Resection" Journal of Personalized Medicine 12, no. 6: 982. https://doi.org/10.3390/jpm12060982

APA Style: Hernández, A., de Zulueta, P. R., Spagnolo, E., Soguero, C., Cristobal, I., Pascual, I., López, A., & Ramiro-Cortijo, D. (2022). Deep Learning to Measure the Intensity of Indocyanine Green in Endometriosis Surgeries with Intestinal Resection. Journal of Personalized Medicine, 12(6), 982. https://doi.org/10.3390/jpm12060982