Breast Ultrasound Image Synthesis using Deep Convolutional Generative Adversarial Networks

Abstract

1. Introduction

2. Materials and Methods

2.1. Patients

2.2. Data Set

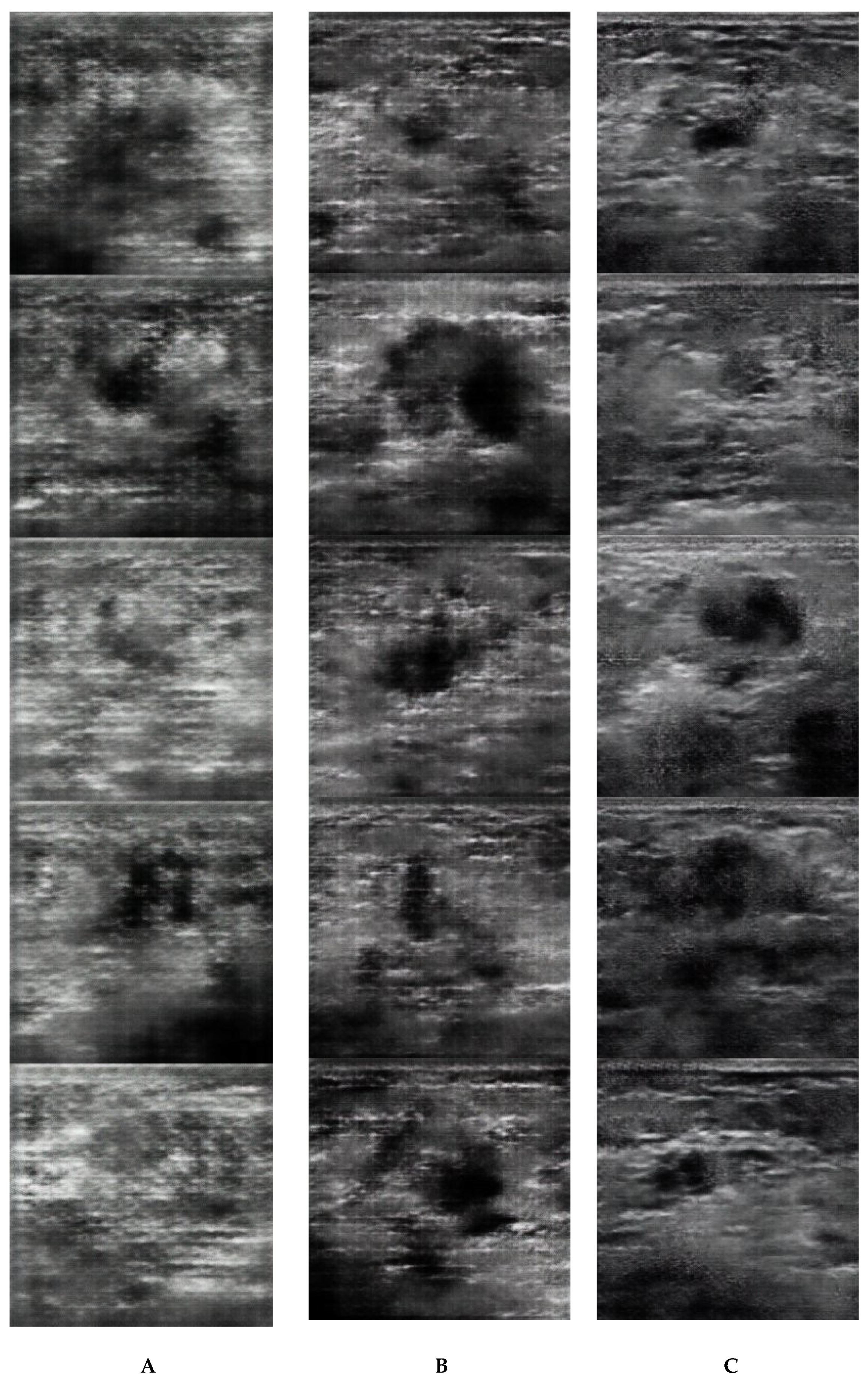

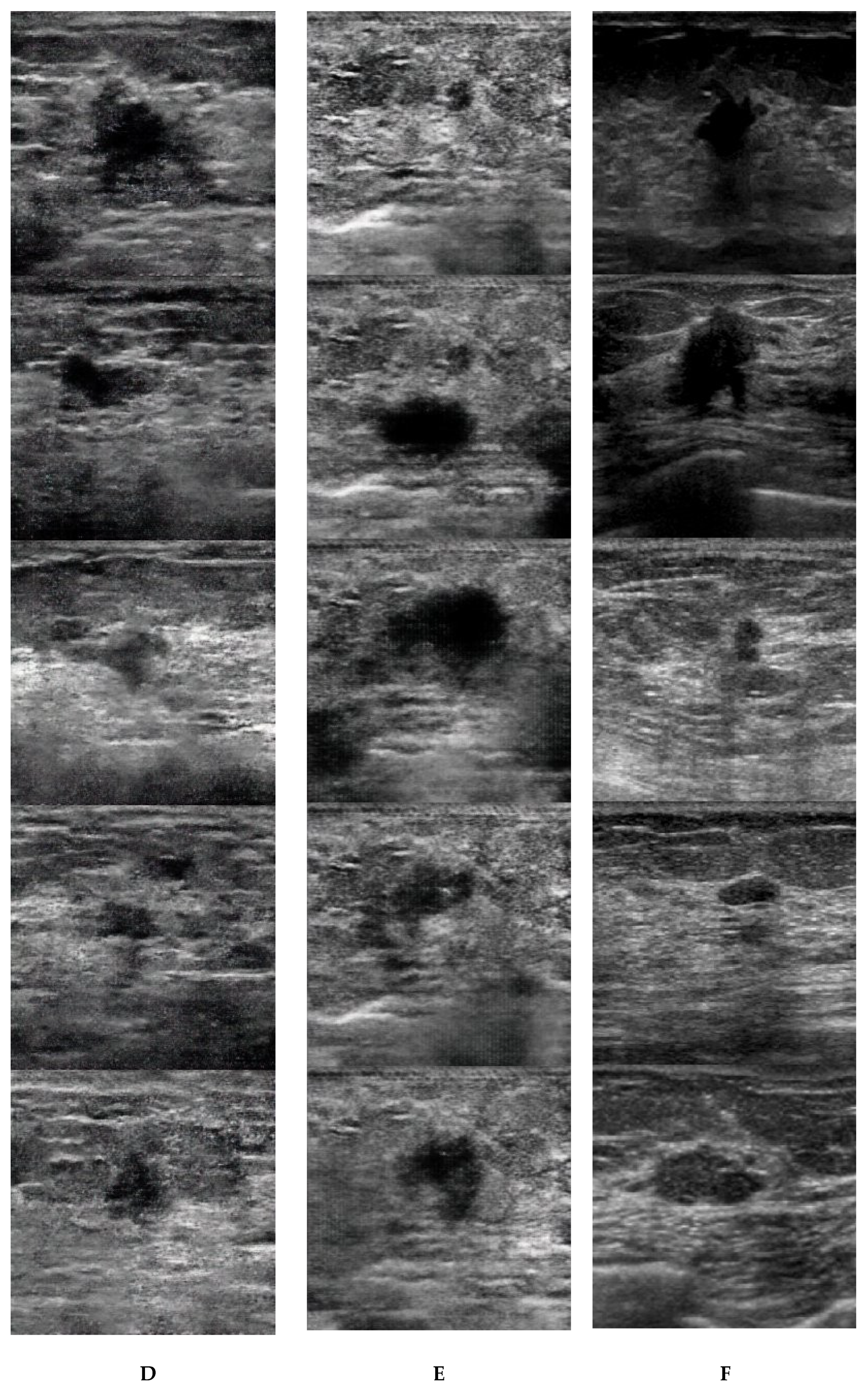

2.3. Image Synthesis

2.4. Radiologist Readout

2.5. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | | Benign | Malignant | p |
|---|---|---|---|---|
| Patients (n) | | 141 | 214 | |
| Masses (n) | | 144 | 216 | |
| Images (n) | | 528 | 529 | |
| Age | Mean (y) | 48.8 ± 12.1 | 60.3 ± 12.6 | <0.001 |
| | Range (y) | 21–84 | 27–84 | |
| Maximum Diameter | Mean (mm) | 13.5 ± 8.1 | 17.1 ± 7.9 | <0.001 |
| | Range (mm) | 4–50 | 5–41 | |

| Benign (n = 144) | n | Malignant (n = 216) | n |
|---|---|---|---|
| Fibroadenoma | 47 | Ductal Carcinoma in Situ | 17 |
| Mastopathy | 19 | Invasive Ductal Cancer | 168 |
| Intraductal Papilloma | 17 | Invasive Lobular Carcinoma | 9 |
| Phyllodes Tumor (Benign) | 2 | Mucinous Carcinoma | 8 |
| Fibrous Disease | 1 | Apocrine Carcinoma | 7 |
| Lactating Adenoma | 1 | Invasive Micropapillary Carcinoma | 2 |
| Abscess | 1 | Malignant Lymphoma | 1 |
| Adenosis | 1 | Medullary Carcinoma | 1 |
| Pseudoangiomatous Stromal Hyperplasia | 1 | Adenoid Cystic Carcinoma | 1 |
| Radial Scar/Complex Sclerosing Lesion | 1 | Phyllodes Tumor (Malignant) | 1 |
| No Malignancy | 5 | Adenomyoepithelioma With Carcinoma | 1 |
| Not Known (Diagnosed by Follow-up) | 48 | | |

| | Overall Quality of Images | | | Definition of Anatomic Structures | | | Visualization of the Masses | | |
|---|---|---|---|---|---|---|---|---|---|
| Reader | R1 | R2 | R1&2 | R1 | R2 | R1&2 | R1 | R2 | R1&2 |
| 50 epochs | 4.85 ± 0.37 | 4.25 ± 0.72 | 4.55 ± 0.48 | 4.85 ± 0.37 | 4.20 ± 0.89 | 4.53 ± 0.53 | 4.30 ± 0.57 | 4.05 ± 0.69 | 4.18 ± 0.54 |
| 100 epochs | 4.55 ± 0.51 | 4.05 ± 0.51 | 4.30 ± 0.41 | 4.80 ± 0.41 | 4.00 ± 0.65 | 4.40 ± 0.38 | 4.15 ± 0.49 | 3.50 ± 0.69 | 3.83 ± 0.49 |
| 200 epochs | 4.10 ± 0.64 | 3.00 ± 0.92 | 3.55 ± 0.63 | 4.05 ± 0.60 | 2.95 ± 0.83 | 3.50 ± 0.54 | 4.25 ± 0.85 | 2.85 ± 0.88 | 3.55 ± 0.71 |
| 500 epochs | 3.45 ± 0.83 | 2.35 ± 0.59 | 2.90 ± 0.55 | 3.50 ± 0.83 | 2.55 ± 0.60 | 3.03 ± 0.60 | 3.45 ± 0.83 | 2.10 ± 0.45 | 2.78 ± 0.41 |
| 1000 epochs | 3.70 ± 0.92 | 2.65 ± 0.93 | 3.18 ± 0.73 | 4.00 ± 0.86 | 2.80 ± 0.70 | 3.40 ± 0.64 | 3.40 ± 0.94 | 2.15 ± 0.99 | 2.78 ± 0.85 |
| Real | 1.48 ± 0.60 | 1.20 ± 0.41 | 1.34 ± 0.40 | 1.45 ± 0.64 | 1.38 ± 0.59 | 1.41 ± 0.44 | 1.55 ± 0.75 | 1.35 ± 0.62 | 1.45 ± 0.53 |

| Comparison | Overall Quality of Images (p) | Definition of Anatomic Structures (p) | Visualization of the Masses (p) |
|---|---|---|---|
| 50 vs. 100 | 0.470 | 1.000 | 0.426 |
| 50 vs. 200 | <0.001 | <0.001 | 0.100 |
| 50 vs. 500 | <0.001 | <0.001 | <0.001 |
| 50 vs. 1000 | <0.001 | <0.001 | <0.001 |
| 50 vs. Real | <0.001 | <0.001 | <0.001 |
| 100 vs. 200 | <0.001 | <0.001 | 1.000 |
| 100 vs. 500 | <0.001 | <0.001 | <0.001 |
| 100 vs. 1000 | <0.001 | <0.001 | <0.001 |
| 100 vs. Real | <0.001 | <0.001 | <0.001 |
| 200 vs. 500 | 0.016 | 0.095 | 0.016 |
| 200 vs. 1000 | 0.897 | 1.000 | 0.071 |
| 200 vs. Real | <0.001 | <0.001 | <0.001 |
| 500 vs. 1000 | 1.000 | 0.725 | 1.000 |
| 500 vs. Real | <0.001 | <0.001 | <0.001 |
| 1000 vs. Real | <0.001 | <0.001 | <0.001 |

| Possibility of Original Images | 50 epochs | | 100 epochs | | 200 epochs | | 500 epochs | | 1000 epochs | | Real | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Score | R1 | R2 | R1 | R2 | R1 | R2 | R1 | R2 | R1 | R2 | R1 | R2 |
| 1 (100%) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 18 | 25 |
| 2 (75%) | 0 | 0 | 0 | 0 | 0 | 2 | 1 | 2 | 1 | 1 | 13 | 13 |
| 3 (50%) | 0 | 1 | 0 | 0 | 1 | 2 | 2 | 10 | 2 | 5 | 9 | 2 |
| 4 (25%) | 0 | 4 | 0 | 8 | 3 | 15 | 10 | 8 | 5 | 11 | 0 | 0 |
| 5 (0%) | 20 | 15 | 20 | 12 | 16 | 1 | 7 | 0 | 12 | 3 | 0 | 0 |
| Indistinguishable Images (%) | 0% | 5% | 0% | 0% | 5% | 20% | 15% | 60% | 15% | 30% | 23% | 5% |
| (Average of R1&R2) | (2.5%) | | (0%) | | (12.5%) | | (37.5%) | | (22.5%) | | (14%) | |
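
The "Indistinguishable Images (%)" rows can be reproduced from the score counts in the table above. The following is a minimal sketch, not the authors' analysis code. It assumes a counting rule inferred from the reported numbers rather than stated in the text here: a synthetic image counts as indistinguishable when a reader scores it 1 to 3 (at least a 50% possibility of being original), and a real image counts as indistinguishable when a reader scores it 3 to 5 (at most a 50% possibility). The names `COUNTS`, `indistinguishable_pct`, and `summarize` are hypothetical.

```python
# Score counts transcribed from the table above.
# List index 0..4 corresponds to scores 1..5.
COUNTS = {
    "50 epochs":   {"R1": [0, 0, 0, 0, 20],  "R2": [0, 0, 1, 4, 15]},
    "100 epochs":  {"R1": [0, 0, 0, 0, 20],  "R2": [0, 0, 0, 8, 12]},
    "200 epochs":  {"R1": [0, 0, 1, 3, 16],  "R2": [0, 2, 2, 15, 1]},
    "500 epochs":  {"R1": [0, 1, 2, 10, 7],  "R2": [0, 2, 10, 8, 0]},
    "1000 epochs": {"R1": [0, 1, 2, 5, 12],  "R2": [0, 1, 5, 11, 3]},
    "Real":        {"R1": [18, 13, 9, 0, 0], "R2": [25, 13, 2, 0, 0]},
}


def indistinguishable_pct(score_counts, is_real):
    """Percentage of images a reader could not clearly classify.

    Assumed rule (inferred from the table, not stated by the authors):
    synthetic images with scores 1-3, real images with scores 3-5.
    """
    total = sum(score_counts)
    if is_real:
        hits = sum(score_counts[2:])   # scores 3, 4, 5
    else:
        hits = sum(score_counts[:3])   # scores 1, 2, 3
    return 100.0 * hits / total


def summarize(counts):
    """Return {group: (R1 %, R2 %, mean of R1 and R2 %)}."""
    summary = {}
    for group, readers in counts.items():
        is_real = group == "Real"
        r1 = indistinguishable_pct(readers["R1"], is_real)
        r2 = indistinguishable_pct(readers["R2"], is_real)
        summary[group] = (r1, r2, (r1 + r2) / 2)
    return summary


if __name__ == "__main__":
    for group, (r1, r2, avg) in summarize(COUNTS).items():
        print(f"{group:>11}: R1 {r1:.1f}%  R2 {r2:.1f}%  average {avg:.2f}%")
```

Under this assumed rule the sketch matches every synthetic column exactly. For the real images it gives 22.5% and 5.0% with an average of 13.75%, which the table reports rounded as 23%, 5%, and 14%.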

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fujioka, T.; Mori, M.; Kubota, K.; Kikuchi, Y.; Katsuta, L.; Adachi, M.; Oda, G.; Nakagawa, T.; Kitazume, Y.; Tateishi, U. Breast Ultrasound Image Synthesis using Deep Convolutional Generative Adversarial Networks. Diagnostics 2019, 9, 176. https://doi.org/10.3390/diagnostics9040176
