Abstract

Background: Artificial intelligence (AI) developed for skin cancer recognition has been shown to have comparable or superior performance to dermatologists. However, it is uncertain whether current AI models, trained predominantly on images of lighter Fitzpatrick skin types, can be effectively adapted for Asian populations. Objectives: A systematic review was performed to summarize the existing use of AI for skin cancer detection in Asian populations. Methods: A systematic search was conducted on PubMed and EMBASE for articles on the use of AI for skin cancer detection amongst Asian populations. Information regarding study characteristics, AI model characteristics, and outcomes was collected. Conclusions: Current studies show optimistic results for AI-based skin cancer detection in Asia. However, comparisons of image recognition ability might not truly represent the diagnostic abilities of AI versus dermatologists in real-world settings. To ensure appropriate implementation, maximize the potential of AI, and improve the transferability of AI models across various Asian genotypes and skin cancers, it is crucial to focus on prospective, real-world studies, as well as on the expansion and diversification of the Asian databases used for training and validation.

1. Introduction

Skin cancer incidence and clinical presentations differ between Asian and Caucasian populations due to variations in Fitzpatrick skin phototype [1], lifestyle, and genetic background [2]. Non-melanoma skin cancers (NMSC), such as basal cell carcinoma (BCC) and squamous cell carcinoma (SCC), have historically been less prevalent amongst Asian populations, as darker skin types are associated with increased epidermal melanin and melanocyte activity, which confers greater protection against ultraviolet B (UVB) radiation [3]. Compared with Caucasian patients, Asian patients with BCC tend to be older, and their lesions may arise at less typical sites such as the trunk or present as more pigmented lesions [3]. Likewise, the incidence of cutaneous melanoma in Asia is significantly lower than in Caucasian populations, and acral lentiginous melanoma (ALM), the most common histological subtype in Asians and roughly 50% of all melanoma cases there, constitutes only about 2–3% of cases in Caucasian populations [4]. Despite the lower incidence of melanoma, mortality is often higher in Asian populations, as patients are typically diagnosed at a later stage of disease [5,6].

Even within Asia, or within a multi-ethnic country such as Singapore [1,4], skin cancer prevalence can differ significantly due to heterogeneity in intrinsic skin phototype, genetics, lifestyle, environmental factors, and disease awareness [7]. For instance, basal cell carcinoma is the most prevalent among Chinese and Japanese individuals, while squamous cell carcinoma is more common among people of Indian descent [3]. The rates of skin cancers have also been found to differ amongst ethnic Chinese, Indians, and Malays in Singapore [1]. Socio-economic background may also contribute to differences in reported incidence, as skin cancer detection rates might be higher in urban or resource-rich countries with greater access to healthcare [6].

The global rise in skin cancer incidence [8] and increasing recognition of disparity in healthcare access [9,10] have led to a search for solutions to aid in earlier skin cancer detection and improvement of healthcare equity.

Artificial intelligence (AI) refers to the development of intelligent computer systems that can simulate human behavior and cognition to perform tasks independently [11]. In recent years, the advent of large-scale datasets and computational power has propelled the transition of AI from conventional rule-based systems to sophisticated machine learning [12], which includes deep learning models such as artificial neural networks (ANNs) and convolutional neural networks (CNNs) [13,14]. ANNs are non-linear statistical prediction models that pass data through multiple layers of interconnected units to learn complex associations within the data, while CNNs are a subtype of ANN that specializes in image recognition and classification [15]. In the last two years, there has also been an increasing trend to adopt transformers and large language models (LLMs), especially multi-modal LLMs. These are composed of multiple transformer layers, which allow the algorithms to selectively focus on different parts of the input data to analyze and interpret both visual and textual information [16].
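To make the description of ANNs concrete, the following is a minimal sketch of a forward pass through a small fully connected network: each layer computes a weighted sum of its inputs plus a bias and applies a non-linear activation, and a final sigmoid turns the score into a probability. The network shape, weights, and two-value input are arbitrary illustrative assumptions, not parameters from any model in the reviewed studies.

```python
import math

def relu(x: float) -> float:
    """Rectified linear unit, a common non-linearity for hidden layers."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Squash a score into (0, 1) so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weighted sum + bias) for each unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(features, layers):
    """Pass an input vector through a stack of (weights, biases, activation) layers."""
    h = features
    for weights, biases, activation in layers:
        h = dense(h, weights, biases, activation)
    return h

# Hypothetical network: 2 inputs -> 3 hidden ReLU units -> 1 sigmoid output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu),
    ([[1.0, -1.0, 0.5]], [0.0], sigmoid),
]
prob = forward([1.0, 2.0], layers)[0]
print(f"predicted probability: {prob:.3f}")
```

Training such a network means adjusting the weights so that predicted probabilities match known labels; deep models simply stack many more of these layers.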

Compared with non-invasive imaging devices such as confocal and photo microscopy, automated visual recognition AI models [17,18] are especially suited to visual-based specialties such as dermatology. They can help even inexperienced practitioners overcome the limitations of real-life consultations and healthcare inequality [19,20] and provide more accurate diagnoses, which not only benefits patients but also relieves pressure on healthcare systems.

While previous systematic reviews [21,22] have shown promising results with the use of AI for skin cancer detection, the current literature consists mainly of research and databases derived from Caucasian populations. This limits understanding of regional variations and trends in AI development for skin cancer detection, and raises concerns as to whether the AI models implemented successfully thus far can be adapted to Asian contexts, given innate differences between Caucasian and Asian populations.

Therefore, our systematic review focuses only on studies utilizing Asian population databases for the development or validation of AI models, in order to (1) summarize the current use of AI for skin cancer detection in various Asian countries, (2) identify trends and challenges, and (3) propose areas for improvement.

2. Materials and Methods

The PubMed and EMBASE databases were searched for relevant articles, without restrictions, up to 27 August 2024, using the following keywords: (“skin neoplasm” OR “skin carcinoma” OR “skin cancer” OR “basal cell carcinoma” OR “squamous cell carcinoma” OR “melanoma” OR “keratinocyte carcinoma”) AND (“artificial intelligence” OR “machine learning” OR “neural networks” OR “computer aided” OR “deep learning”).
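The Boolean structure of this search (synonyms joined with OR within each concept, and the two concepts joined with AND) can be generated programmatically, which helps keep the query identical across databases. The sketch below is illustrative only: the helper names are hypothetical, and database-specific field tags were not reported and are therefore omitted.

```python
CANCER_TERMS = [
    "skin neoplasm", "skin carcinoma", "skin cancer", "basal cell carcinoma",
    "squamous cell carcinoma", "melanoma", "keratinocyte carcinoma",
]
AI_TERMS = [
    "artificial intelligence", "machine learning", "neural networks",
    "computer aided", "deep learning",
]

def or_block(terms):
    """Quote each phrase and join the synonyms of one concept with OR."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"

def build_query(*concepts):
    """Join the OR-blocks of all concepts with AND."""
    return " AND ".join(or_block(c) for c in concepts)

query = build_query(CANCER_TERMS, AI_TERMS)
print(query)
```

Adding a third concept (for example, a block of population terms) would only require passing another list to `build_query`.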

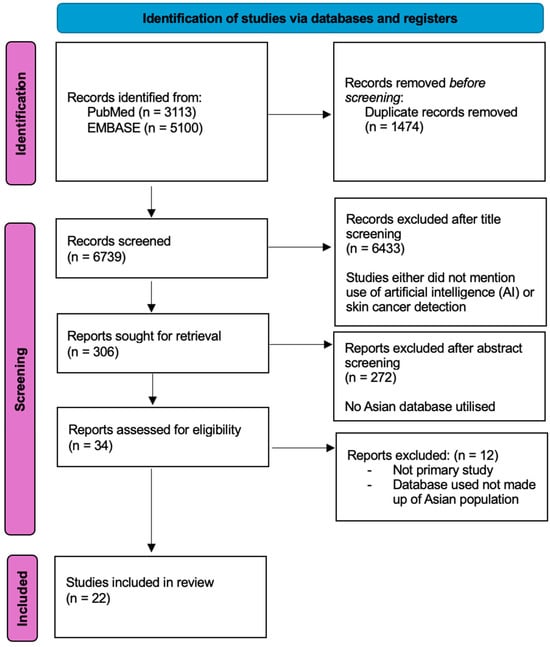

The review protocol was not registered with PROSPERO, but the review was performed in accordance with the PRISMA guidelines. Only primary studies that evaluated the use of AI for skin cancer detection were included, as shown in Figure 1 below. After duplicates were removed, the authors screened titles and abstracts to identify studies that met the inclusion criteria. Studies that did not specify skin cancer, the use of AI, or the use of Asian population databases for training or validation of AI models were excluded, to ensure that our review focuses on outcomes achieved with Asian databases. Where studies did not clearly specify details of the database used, we sought information from the database website wherever possible. Conference papers, letters, and reviews were excluded. After title and abstract screening, 34 studies were retained for full-text screening. Any disagreements were discussed by the authors until consensus was reached. Eventually, 22 studies were included in the final review.

Figure 1.

Summary of the systematic review performed according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.

Data extraction was undertaken independently by the reviewers into a predesigned data extraction spreadsheet on Microsoft Excel. The following data were extracted from the included studies: (a) characteristics of studies—country of origin of study, source of database, publication year, types of skin cancers included in dataset, modality of assessment (dermoscopic versus clinical images), sample size of each study, gold standard diagnosis; (b) AI model—type of AI model utilized, details of AI model, data input and output processing methods; and (c) outcomes—classification accuracy, sensitivity, and specificity of the AI model, comparison across models, comparison across modalities (clinical versus dermoscopic images), or performance of AI against physicians.

The two reviewers independently assessed the quality of the included studies and their risk of bias using QUADAS-2 [23]. Based on the signalling questions, we classified each QUADAS-2 domain as low (0), high (1), or unknown (2) risk of bias.
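A minimal sketch of how this per-domain coding could be tallied across studies, assuming the four standard QUADAS-2 risk-of-bias domains (patient selection, index test, reference standard, flow and timing) and the 0 (low), 1 (high), 2 (unknown or unclear) coding. The study ratings below are made-up placeholders, not results from this review.

```python
from collections import Counter

DOMAINS = ("patient selection", "index test", "reference standard", "flow and timing")
CODES = {0: "low", 1: "high", 2: "unclear"}

def tally(ratings):
    """Count low/high/unclear ratings per domain.

    `ratings` maps a study ID to a tuple of four codes, one per QUADAS-2 domain.
    """
    summary = {d: Counter() for d in DOMAINS}
    for codes in ratings.values():
        if len(codes) != len(DOMAINS):
            raise ValueError("expected one code per QUADAS-2 domain")
        for domain, code in zip(DOMAINS, codes):
            summary[domain][CODES[code]] += 1
    return summary

# Hypothetical ratings for three studies (placeholders, not review data).
example = {"study A": (0, 0, 1, 0), "study B": (1, 0, 2, 0), "study C": (0, 2, 0, 0)}
for domain, counts in tally(example).items():
    print(f"{domain}: {dict(counts)}")
```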

3. Results

The initial database search of PubMed and EMBASE identified 3113 and 5100 records, respectively. A total of 1474 duplicates were removed. After screening by title, abstract, and full text, twenty-two studies [24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45] were included in the final review.
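The record counts in the selection process can be checked with simple arithmetic. The sketch below uses only figures stated in the text (3113 and 5100 records retrieved, 1474 duplicates, 34 full-text articles, 22 included); the numbers screened and excluded at each stage are derived here rather than reported.

```python
def prisma_flow(retrieved, duplicates, full_text, included):
    """Derive the records remaining and excluded at each PRISMA stage."""
    total = sum(retrieved.values())
    screened = total - duplicates  # titles and abstracts screened
    return {
        "identified": total,
        "screened": screened,
        "excluded_at_title_abstract": screened - full_text,
        "full_text_assessed": full_text,
        "excluded_at_full_text": full_text - included,
        "included": included,
    }

flow = prisma_flow({"PubMed": 3113, "EMBASE": 5100}, duplicates=1474, full_text=34, included=22)
for stage, n in flow.items():
    print(f"{stage}: {n}")
```

This gives 8213 records identified, 6739 screened after de-duplication, and 12 articles excluded at full-text review.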

3.1. Characteristics of Studies and Datasets

As summarized in Table 1, the included studies were mostly from East Asia: China (8/22), South Korea (8/22), Japan (4/22), and Taiwan (1/22), with one further study from Iran (1/22). Publication output peaked in 2020. Only institutional or private databases were used in the included studies.

Table 1.

Characteristics of studies (n = 22).

Pre-processing of data was employed in 12/22 studies to improve the accuracy of feature extraction and interpretation by AI. Methods included the removal of low-quality or mislabeled images and artifacts (e.g., clothing, hair, markings, and appearance altered by previous treatment), as well as cropping, resizing, or enhancement of images to ensure that skin lesions were properly captured and fitted to the input requirements of AI algorithms. Some studies used unprocessed images to mimic real-life applications, in which photos might not always be taken by professionals or be of high quality, especially in the community setting.
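As an illustration of the cropping and resizing step, the sketch below centre-crops a grayscale image to a square and then resizes it with nearest-neighbour sampling to a fixed input size. Images are represented as plain lists of pixel rows to keep the example dependency-free; this is a simplified assumption, not the pipeline of any reviewed study.

```python
def center_crop_square(img):
    """Crop the largest centred square from an image given as a list of rows."""
    h, w = len(img), len(img[0])
    side = min(h, w)
    top, left = (h - side) // 2, (w - side) // 2
    return [row[left:left + side] for row in img[top:top + side]]

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize: each output pixel copies the closest input pixel."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]

def preprocess(img, size):
    """Crop to a square, then resize to size x size for the network's input layer."""
    return resize_nearest(center_crop_square(img), size, size)

# Toy 4 x 6 "image" whose pixel values encode their column index.
toy = [[c for c in range(6)] for _ in range(4)]
print(preprocess(toy, 2))  # → [[1, 3], [1, 3]]
```

With a real image library, the target size would usually match the chosen network, for example 224 × 224 pixels for ResNet-50 or 299 × 299 for Xception and Inception ResNetV2.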

Data augmentation was also performed in 4/22 studies by rotating and flipping the original images at varying angles to increase the number of images available for training and validation. This is commonly adopted by studies with small datasets to increase the robustness of the data used to train AI algorithms.
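A minimal sketch of rotation-and-flip augmentation, restricted to 90° rotations and mirror images so that no interpolation is needed. Together these give up to eight distinct variants of each image. Rotation at arbitrary angles, as some studies describe, would also require interpolation and is not shown.

```python
def rotate90(img):
    """Rotate an image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def flip_horizontal(img):
    """Mirror an image left to right."""
    return [row[::-1] for row in img]

def dihedral_variants(img):
    """Return the 4 rotations of the image and of its mirror image (up to 8 variants)."""
    variants = []
    for base in (img, flip_horizontal(img)):
        current = base
        for _ in range(4):
            variants.append(current)
            current = rotate90(current)
    return variants

patch = [[1, 2], [3, 4]]
augmented = dihedral_variants(patch)
unique = {tuple(map(tuple, v)) for v in augmented}
print(len(augmented), len(unique))  # → 8 8
```

For a symmetric lesion image some variants coincide, so the number of genuinely new training examples can be smaller than eight.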

Half of the studies (11/22) used histopathological diagnosis as the ground truth. Six studies (6/22) included skin lesions that were diagnosed clinically, either because the lesion was clinically benign, with no justification for biopsy in a real-life setting, or because the histopathological diagnosis was uncertain and a consensus of several dermatologists was obtained for clinicopathological correlation. Five studies (5/22) did not clearly state the basis for the gold standard diagnosis.

In terms of skin cancers evaluated, malignant melanoma was the most commonly included skin cancer (16/22), with four papers focusing on AI algorithms for the detection of the acral melanoma subtype (4/22). This was followed by basal cell carcinoma (15/22) and squamous cell carcinoma (7/22). Only four studies (4/22) included rarer skin cancers such as intraepithelial carcinoma, Kaposi’s sarcoma, or adenocarcinoma of the lip.

3.2. Types of AI Models Used

As shown in Table 1, the studies were categorized as using either shallow or deep AI techniques, based on the complexity of the model architecture. Shallow techniques have simpler structures with only a few neural network layers and are typically easier to train. In contrast, deep techniques have complex architectures with multiple intervening (hidden) layers, typically at least three, allowing them to better capture the relationships between inputs and outputs [3].

Table 2 summarizes the AI models used and the characteristics of the included datasets. CNN-based models were the most popular, with Inception ResNetV2 being the most frequently used algorithm. Some studies custom-built their own algorithms, while others used transfer learning: they took established AI architectures trained on large Caucasian-derived datasets and either re-trained certain convolutional layers or incorporated other algorithms, to improve adaptability to Asian population datasets. Common tasks performed by AI algorithms include feature extraction, segmentation, and binary or multiclass classification of skin lesions. While deep AI techniques such as CNNs can perform all of these tasks within a single model, some studies that employed shallow AI techniques required a combination of models, each handling a different task, to classify skin lesions well.

Table 2.

Characteristics of AI models trained and datasets included.

In terms of image input, 11/22 studies used clinical images of skin lesions taken with either mobile phones or high-resolution cameras, while 9/22 used dermoscopic photographs instead. Only 2/22 studies used both modalities (clinical and dermoscopic photographs) to assess skin cancer detection ability. Direct comparison of the input modalities revealed no significant difference in the accuracy of the AI model for melanoma [39] or basal cell carcinoma [42] detection. However, when both modalities were used concurrently as input, accuracy decreased to 76.2% [42].

3.3. Study Outcomes

Table 3 summarizes the outcomes of the included studies. Binary or multiclass classification abilities of AI models were commonly assessed, and 10/22 studies reported AI accuracy greater than 90% [25,27,29,30,32,34,37,41,44,45]. Of these ten studies, three used custom-built algorithms, while seven [25,27,29,30,34,37,41] used transfer learning, which adapts knowledge acquired from large datasets in related domains by fine-tuning the model on smaller Asian datasets [46].
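Transfer learning can be sketched in miniature: a feature extractor learned elsewhere is kept frozen, and only a small classification head is re-trained on the smaller target dataset. In this sketch, `pretrained_features` is a hypothetical stand-in for the frozen convolutional layers of a network trained on a large dataset, the head is a logistic-regression layer fitted by gradient descent, and the four labelled examples are toy data. Fine-tuning, as described above, may additionally unfreeze some of the later layers.

```python
import math

def pretrained_features(x):
    """Hypothetical frozen feature extractor; in practice, the convolutional layers
    of a network pre-trained on a large dataset, whose weights are not updated."""
    return [x[0] + x[1], x[0] - x[1]]

def predict(w, b, x):
    """Probability of the positive class from the logistic head."""
    f = pretrained_features(x)
    return 1.0 / (1.0 + math.exp(-(sum(wi * fi for wi, fi in zip(w, f)) + b)))

def train_head(data, lr=0.5, epochs=500):
    """Fit only the final logistic layer (weights w, bias b) on frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = pretrained_features(x)
            grad = predict(w, b, x) - y  # derivative of the log-loss w.r.t. the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b

# Toy target-domain dataset: label 1 ("malignant") when the first value is larger.
target_data = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]
w, b = train_head(target_data)
print([round(predict(w, b, x)) for x, _ in target_data])  # → [1, 1, 0, 0]
```

Because only the head's three parameters are learned, far fewer labelled target images are needed than when training a full network from scratch.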

Table 3.

Study outcomes.

Amongst CNN models, two studies compared performance between different architectures. Abbas et al. found that transfer learning with ResNet-18 attained higher accuracy than AlexNet and the custom-built Deep ConvNet model [25]. Xie et al. showed that Xception outperformed Inception, ResNet50, Inception ResNetV2, and DenseNet121 when applied to Asian populations [30]. Inception performs multi-scale feature extraction with inception modules; ResNet uses residual learning with skip connections, which allow very deep networks to be trained; DenseNet connects each layer to all subsequent layers for efficient feature reuse; and Xception uses depthwise separable convolutions for more efficient computation [47]. In the context of skin cancer detection, depthwise separable convolutions may allow Xception to process complex, high-resolution images with fewer parameters, without overwhelming computational resources or overfitting, which could make it more robust and generalizable.
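The efficiency argument for depthwise separable convolutions can be made concrete by counting weights. For a k × k kernel mapping C_in input channels to C_out output channels, a standard convolution needs k·k·C_in·C_out weights, whereas a depthwise separable convolution (one k × k filter per input channel followed by a 1 × 1 pointwise convolution) needs k·k·C_in + C_in·C_out. The layer size below is an illustrative assumption, and bias terms are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def separable_conv_params(k, c_in, c_out):
    """Weights in a depthwise (k x k per channel) + pointwise (1 x 1) convolution."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

k, c_in, c_out = 3, 128, 256  # illustrative layer size
std = standard_conv_params(k, c_in, c_out)
sep = separable_conv_params(k, c_in, c_out)
print(std, sep, round(std / sep, 1))  # → 294912 33920 8.7
```

The saving approaches a factor of k·k (9 for 3 × 3 kernels) as the number of output channels grows.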

Sixteen studies evaluated the binary classification ability of AI (‘benign’ versus ‘malignant’). Nine studies [24,27,30,31,32,34,35,39,43] showed comparable performance between algorithms and expert dermatologists, two studies [25,36] showed that AI outperformed experts, and four studies [24,27,40,43] showed that AI outperformed trainees or non-experts in differentiating benign from malignant skin lesions. However, Han et al. showed that the accuracy of the first clinical impression generated by AI was inferior to that of physicians [26].
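For the binary benign-versus-malignant task, the outcome measures extracted in this review (accuracy, sensitivity, and specificity) all follow from a 2 × 2 confusion matrix, with ‘malignant’ treated as the positive class. The counts below are invented for illustration.

```python
def binary_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, and specificity from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # malignant lesions correctly flagged
        "specificity": tn / (tn + fp),  # benign lesions correctly cleared
    }

# Hypothetical test set: 50 malignant and 150 benign lesions.
m = binary_metrics(tp=40, fn=10, tn=135, fp=15)
print({k: round(v, 3) for k, v in m.items()})
```

Here an accuracy of 87.5% coexists with a sensitivity of only 80%: because accuracy depends on the proportion of malignant lesions in the test set, sensitivity and specificity are needed to compare studies fairly.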

Twelve studies assessed the multiclass classification ability of AI algorithms, that is, the ability to distinguish lesions by specific disease type [26,28,29,32,33,34,35,37,39,40,42,43]. For instance, rather than only discerning whether a lesion is likely to be benign or malignant, these AI models can identify whether it belongs to the basal cell carcinoma, malignant melanoma, or squamous cell carcinoma group. Three studies showed that the multiclass classification ability of AI algorithms based on a single image was comparable to that of physicians [26,34,42], while two showed that it surpassed the performance of dermatologists [35,39].
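In multiclass classification, a model typically outputs one score per class; a softmax converts these scores into probabilities, and the predicted diagnosis is the most probable class (top-1). Top-k accuracy, which counts a prediction as correct if the true class is among the k most probable, is a common alternative measure. The class list and scores below are hypothetical.

```python
import math

CLASSES = ["basal cell carcinoma", "melanoma", "squamous cell carcinoma", "benign nevus"]

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(probs, k):
    """Indices of the k most probable classes, highest first."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]

def top_k_accuracy(score_rows, true_labels, k):
    """Fraction of cases whose true class is among the k most probable."""
    hits = sum(label in top_k(softmax(s), k) for s, label in zip(score_rows, true_labels))
    return hits / len(true_labels)

# Hypothetical scores for three lesions; true labels are indices into CLASSES.
scores = [[2.0, 0.5, 0.1, -1.0], [0.2, 1.5, 1.7, 0.0], [0.1, 0.3, 0.2, 2.5]]
labels = [0, 1, 3]
print(CLASSES[top_k(softmax(scores[0]), 1)[0]])
print(top_k_accuracy(scores, labels, 1), top_k_accuracy(scores, labels, 2))
```

The second lesion is misclassified at top-1 (squamous cell carcinoma instead of melanoma) but counted as correct at top-2, so top-1 accuracy is 2/3 while top-2 accuracy is 1.0.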

In terms of the efficacy of AI assistance, Han et al. [28] showed that AI improved dermatologists’ ability to predict malignancy, make treatment decisions, and perform multi-disease classification, with significant improvements in their mean sensitivity and specificity. However, another study by Han et al. [40] showed that AI assistance mainly benefited non-dermatology trainees: the top accuracy of the AI-assisted group was significantly higher than that of the unaided group, whereas there was no significant change in performance amongst dermatology residents.

4. Discussion

To our knowledge, this is the first systematic review summarizing the existing image-based AI algorithms for skin cancer detection that were trained or validated with predominantly Asian databases.

4.1. Potentials and Benefits of Using AI for Skin Cancer Detection

Based on current evidence, several studies suggest that AI’s skin cancer detection ability is comparable to that of experts [24,25,27,30,32,34,35,39,43], and one study found that accuracy improved when AI was used to augment the decision-making of non-dermatologists in real-world settings [40]. As such, AI could offer several benefits, not only to patients but also to physicians and the healthcare system.

AI can increase health equity by improving access to skin screening. This is especially useful in rural or resource-scarce areas, where it may take longer for a patient to seek medical attention or obtain a biopsy of a suspected skin lesion [48]. For less experienced primary care physicians, AI models that classify lesions as benign or malignant can help triage skin lesions requiring specialist referral, and multiclass classifiers can help diagnose skin lesions to tailor treatment and improve explainability and trust for clinicians [49]. Beyond primary care, and especially during pandemics when physical consultations are discouraged [50], AI-augmented tele-dermatology can further enhance accessibility by streamlining referrals and reducing waiting times [48]. In tertiary institutions, a good AI model can also offer diagnostic support to physicians encountering an atypical skin lesion or a non-local population [37]; for instance, an AI model developed for skin cancer detection in a non-local population can offer a second opinion to a doctor with little exposure to non-local patients of differing Fitzpatrick skin types.

4.2. Comparison of AI Versus Physicians’ Performance in Skin Cancer Detection

In a pragmatic setting, dermatologists are often equipped with additional information that improves diagnostic accuracy, obtained through history-taking, access to medical records, and examination for associated signs and symptoms. While some studies suggest that AI models perform comparably to or better than dermatologists in skin cancer image recognition, owing to AI’s ability to extract intricate features and discern inconspicuous findings that distinguish similar-looking lesions, it is difficult to say whether this truly reflects AI’s overall diagnostic ability. For instance, the reduction in accuracy when both clinical and dermoscopic images were used as input [42] might suggest that the AI could not integrate findings from the two sources, whereas such additional detail would be expected to improve the accuracy of dermatologists. When skin cancers manifest atypically, a lack of pre-training on similar images might also limit AI’s diagnostic ability.

Physicians encounter a wide variety of skin lesions, not only the few categories on which current AI algorithms are trained. Without adequate external validation, it remains unclear whether AI can function equally well in the dynamic real-world setting, where confounders are present and a larger range of skin conditions is encountered. It is also important to note that the first clinical impression of physicians was superior to that of AI [26], and that the diagnostic accuracy of trainees dropped after consulting the AI model when its diagnosis was incorrect, highlighting a possible pitfall of using current AI models to augment decision-making [40].

4.3. Comparison of AI Models

Unlike traditional machine learning approaches, deep learning (DL) models use more sophisticated feature extraction to identify correlations within data samples, optimize classification accuracy, detection precision, or segmentation performance, and extract higher-order representations that are not easily discernible with traditional methods [51].

In the last decade, the focus of machine learning has shifted to deep learning approaches such as CNNs, transformers, and their variants, which refine traditional ANNs to improve predictive accuracy and reduce the complexity of previous algorithms [18,52]. CNNs are specially engineered for image processing and analysis. They consist of multiple cascading non-linear modeling units called “layers”, which filter out redundant information, decipher correlations, and summarize critical information into a distilled representation called “features”, before mapping the extracted features to target diagnostic labels or outputs [51]. These are reported to require less computational time and power while producing higher predictive accuracy than traditional machine learning approaches [52].
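The layer operations described above can be illustrated with a single convolutional stage: a small filter slides over the image to produce a feature map, a ReLU non-linearity discards negative responses, and max pooling summarizes each neighborhood into a smaller representation. The 3 × 3 vertical-edge filter and the toy image are illustrative choices; in a trained CNN, the filter weights are learned from data.

```python
def conv2d(img, kernel):
    """'Valid' 2D cross-correlation (what CNN layers compute): slide the kernel over the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * img[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu_map(fmap):
    """Zero out negative responses in a feature map."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]

# Toy 6 x 6 image: dark left half (0), bright right half (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(6)]
vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
features = max_pool(relu_map(conv2d(image, vertical_edge)))
print(features)  # → [[27, 27], [27, 27]]
```

The filter responds only where brightness changes from left to right, so the pooled feature map records the presence of the edge while discarding the flat regions.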

More recently, vision transformers (ViTs), which are “attention”-based models capable of selectively focusing on relevant parts of the input data to generate output, have gained popularity for image recognition tasks, as they offer several key advantages over CNNs. ViTs can capture long-range relationships: they divide images into smaller patches and encode them through self-attention mechanisms that capture relationships between the resulting tokens [51,53]. In terms of interpretability, ViTs’ attention maps can provide a clear, localized picture of attention, offering researchers new insight into how the model makes decisions. Traditional CNNs, by contrast, typically rely on explainability methods such as class activation maps (CAM) and Grad-CAM, which have limited receptive fields, produce coarse visualizations, and can potentially reduce the accuracy of algorithms [53,54]. Studies by Matsoukas et al. and Xin et al., which employed ViTs and achieved accuracy rates of >90% for skin image classification, suggest promising performance in skin cancer classification tasks [54,55]. Large language models built on transformer architectures, such as SkinGPT-4, can allow patients to upload photos of their skin lesions and ask direct questions, simulating tele-dermatology services [56].
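The two ViT ingredients mentioned above, splitting an image into patches and relating the resulting tokens through self-attention, can be sketched as follows. For brevity, the patch embeddings are simply the flattened pixel values, and the query, key, and value projections are taken as the identity; a real ViT learns these projections and adds positional embeddings, multiple attention heads, and further layers. The attention weights returned here are the kind of quantity that attention maps visualize.

```python
import math

def to_patches(img, p):
    """Split an image (list of rows; sides assumed divisible by p) into flattened p x p patches."""
    return [
        [img[r + i][c + j] for i in range(p) for j in range(p)]
        for r in range(0, len(img), p)
        for c in range(0, len(img[0]), p)
    ]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections (a simplification).

    Returns (outputs, weights): each output is a weighted mix of all tokens, and
    weights[i][j] is how strongly token i attends to token j.
    """
    d = len(tokens[0])
    weights = [
        softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens])
        for q in tokens
    ]
    outputs = [
        [sum(w * v[dim] for w, v in zip(row, tokens)) for dim in range(d)]
        for row in weights
    ]
    return outputs, weights

# Toy 4 x 4 image -> four 2 x 2 patches (tokens of length 4).
image = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
tokens = to_patches(image, 2)
out, attn = self_attention(tokens)
print(tokens)
print([round(w, 2) for w in attn[0]])
```

In this toy case, the top-left patch attends equally to itself and to the identical bottom-right patch, illustrating how attention can relate regions that are far apart in the image.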

Despite the benefits of transformers, at the time of our review there had not been any published studies of transformer models developed or trained with Asian databases [16,54,55,56]. This could be due to a lack of significant performance improvement over traditional CNN models on the small Asian datasets currently available [54].

Other practical aspects, such as training time, inference speed, and hardware requirements, are crucial when assessing suitability and ease of adaptation for real-world application. Compared with traditional machine learning models such as decision trees or support vector machines (SVMs), deep learning models (such as CNNs and transformers) have slower inference, require longer training times, and need GPUs or TPUs for training and inference [55]. While standardizing input images through data pre-processing and segmentation may reduce computational load and shorten training and inference times [57], these steps can be challenging to implement, especially in resource-poor countries.

4.4. Current Challenges and Development of AI in Asia

Amongst the twenty-two included studies, most were from East Asian countries, with the highest research output from China, South Korea, and Japan. While studies have also been published from other Asian countries [58,59], some were excluded because they did not use local Asian databases, leaving a lack of representation from Asian countries with generally darker skin tones, such as Southeast Asian countries and India.

The disparity in research output and database availability amongst Asian countries may be attributable to income disparity, as developing countries often lack the funding, resources, and talent to explore and utilize AI technology [3,20]. Similarly, patients in these settings may be less motivated to seek medical treatment for skin lesions, especially asymptomatic ones [3], leading to fewer reported cases available for inclusion in local research databases.

4.5. Existing Asian Databases

The Asian databases identified are mainly owned by private institutions. In terms of skin cancer type, there has been a greater focus on malignant melanoma detection, likely due to increased awareness of the high mortality associated with late diagnosis. This is followed by basal cell carcinoma, the most prevalent skin cancer worldwide. Transfer learning with established CNN-based models trained on large-scale Caucasian databases has been shown to achieve higher accuracy than custom-built algorithms trained on small-scale local Asian databases, suggesting the importance of large-scale validated databases for fine-tuning and improving the accuracy of deep learning models. However, the yield of transfer learning might be limited where conventional models developed on Caucasian databases for malignant melanoma (using the ABCD rule or the 7-point checklist) cannot readily be transferred to skin cancers with unique clinical features or those arising at uncommon anatomical sites, such as acral melanoma.

The lack of a large-scale Asian database not only hinders the development of deep learning AI models and limits innovation but also jeopardizes patient safety. Because existing databases are mostly derived from tertiary hospitals, they also risk selection bias towards patients at higher risk of malignancy [9]. Cho et al., who compared results on test sets with those on external validation sets, found that the accuracy of an AI algorithm fell when it was validated against an external dataset [36]. As Asians are not a homogeneous population, this underscores the importance of constructing a publicly available, validated image database that ensures greater representation across Asian skin genotypes and conditions and allows stringent quality control of the AI developed.

Clinical imaging was the most popular input method amongst the included studies. This preference could be due to the relative ease of collecting clinical images and the lack of a publicly available Asian dermoscopic image database, an Asian equivalent of the International Skin Imaging Collaboration (ISIC) database, for training and validating AI models for skin cancer detection [60]. The test performance of deep learning models is known to be influenced by variations in image acquisition and quality. Therefore, while this review found no significant differences in accuracy for skin cancer detection between input modalities, such comparisons may be limited by the lack of data on quality variations in the clinical images used. Existing studies may not accurately reflect real-world outcomes, where clinical images captured by patients or in primary and secondary care settings often vary in quality and clarity, in contrast to high-resolution macroscopic or dermoscopic images taken by skilled dermatologists [61].

Additionally, automated algorithms for sequential digital dermoscopy (SDD) have shown promising results in supporting the early detection of malignant melanoma, as AI can be trained to identify subtle differences between consecutive dermoscopic images that might not be easily detected by the naked eye [57]. Hence, given the variability of macroscopic images in real-life applications, as well as the additional benefits and specificity of dermoscopic images, the creation of an Asian dermoscopic image database would likely provide a better and more consistent training set for AI.

4.6. Future Research Directions

Moving forward, while we aim to maximize the transformative potential of AI, it is prudent to keep in mind the ethical and legal challenges that come with it. Deep learning models require large amounts of data and image annotation for training and validation. This highlights the need for stringent quality control of images and AI models to ensure that patient safety is not undermined.

Accessing medical data raises privacy and legal concerns [62]. Unequal racial and ethnic representation in the databases currently used to train AI models can further exacerbate healthcare inequality. AI decisions should also be assessed for safety and transparency, as incorrect decisions can be fatal [63]. Undue reliance on AI, especially amongst younger and less experienced clinicians, risks eroding clinicians’ expertise in traditional diagnostic and treatment methods [64].

Future collaborations between institutions to construct a publicly available, validated image database comprising diverse Asian populations and skin lesion types will be crucial to mitigate biases, improve the robustness of deep learning algorithms, and allow results to be better translated into real-life practice. Appropriate legal regulations should be implemented to facilitate the safe exchange of patient medical data between clinical centers and other scientific institutions [64]. More effort should also go into prospective clinical trials and into developing AI models capable of multimodal input, so that they can incorporate more information, just as a physician would, to arrive at a more accurate final diagnosis. Human–machine collaboration can be studied to identify weaknesses and provide constructive feedback for training AI algorithms. Researchers and developers should also be clear about the target group their AI model is meant to benefit, whether in patient-facing, primary care, or tertiary care settings, so that the design of AI algorithms can be tailored to the needs of the target audience. For instance, patient-facing AI technologies will need to analyze and classify macroscopic images accurately, with high specificity to avoid unnecessary referrals to specialist clinics.
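The specificity requirement for patient-facing tools can be met by choosing the model's decision threshold on a validation set. The sketch below assumes the model outputs a malignancy probability and picks the lowest threshold whose specificity meets a target; since specificity can only rise as the threshold rises, this keeps as much sensitivity as possible under that constraint. The scores, labels, and 0.9 target are hypothetical.

```python
def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when lesions scoring >= threshold are referred."""
    tp = sum(s >= threshold and y == 1 for s, y in zip(scores, labels))
    fn = sum(s < threshold and y == 1 for s, y in zip(scores, labels))
    tn = sum(s < threshold and y == 0 for s, y in zip(scores, labels))
    fp = sum(s >= threshold and y == 0 for s, y in zip(scores, labels))
    return tp / (tp + fn), tn / (tn + fp)

def threshold_for_specificity(scores, labels, target_spec):
    """Lowest candidate threshold whose specificity reaches the target (None if none does)."""
    for t in sorted(set(scores)):
        sens, spec = sens_spec(scores, labels, t)
        if spec >= target_spec:
            return t, sens, spec
    return None

# Hypothetical validation set: malignancy probabilities and true labels (1 = malignant).
scores = [0.95, 0.80, 0.70, 0.40, 0.65, 0.30, 0.20, 0.15, 0.10, 0.05, 0.60, 0.35]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
print(threshold_for_specificity(scores, labels, target_spec=0.9))  # → (0.7, 0.75, 1.0)
```

Lowering the target to 0.7 moves the threshold to 0.4 and restores full sensitivity at the cost of more unnecessary referrals, which is the trade-off a patient-facing tool must settle.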

4.7. Strengths and Limitations

The main strengths of our study lie in the extensive, systematic search of two databases and the strict inclusion criterion of only studies that used Asian databases for training or validation.

Limitations include the potential for selection bias, as relevant articles indexed only in databases other than PubMed and EMBASE may have been missed. While the stricter selection criteria resulted in fewer Asian studies being included, this approach helps minimize the risk of publication bias and misrepresentation, as studies that rely on widely used public databases such as ISIC and HAM10000, which predominantly feature Caucasian populations with lighter Fitzpatrick skin types, tend to report more statistically significant results. These studies might also risk overlap between the images used for training and testing, introducing further bias. Therefore, our approach enables a more comprehensive understanding of the AI models currently in use, their outcomes, and the limitations associated with existing Asian population databases.

The transferability of the results, particularly for skin cancers that are more prevalent in Asian populations, may also be uncertain, since only half of the studies used histology as the gold standard for diagnosis, and there was a lack of quality control to ensure accurate and consistent image annotation, sufficient database diversity, and external validation.

5. Conclusions

Overall, AI technologies have shown great potential to aid in the early detection and diagnosis of skin cancers in Asian populations. There has been a shift towards the adoption of deep learning models such as CNNs, but research using newer, more refined transformer models remains limited. The current literature lacks representation of Asian populations with darker Fitzpatrick skin types, as well as dedicated databases of dermoscopic images and rare skin cancers.

Appropriate implementation of AI, guided by evidence-based approaches, is needed to maximize its efficacy. Ongoing efforts should focus on diversifying and expanding Asian databases to encompass a wide range of Asian genotypes and skin conditions, while maintaining rigorous quality control standards. Future collaborations should focus on prospective, real-world studies to improve the transferability of current AI technologies developed in Asia and to address potential ethical and legal challenges.

Author Contributions

Conceptualization, C.C.O. and X.L.A.; methodology, C.C.O. and X.L.A.; writing—original draft preparation and editing, X.L.A.; writing—review and editing, C.C.O.; supervision, C.C.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this systematic review were primarily obtained from the publicly accessible PubMed and EMBASE databases. However, some data were obtained by contacting the original study authors. Due to data-sharing restrictions imposed by certain studies, not all analyzed data are readily available. Requests for access to specific datasets should be directed to the relevant study authors where possible.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Oh, C.C.; Jin, A.; Koh, W.-P. Trends of cutaneous basal cell carcinoma, squamous cell carcinoma, and melanoma among the Chinese, Malays, and Indians in Singapore from 1968–2016. JAAD Int. 2021, 4, 39–45.

- Bradford, P.T. Skin cancer in skin of color. Dermatol. Nurs./Dermatol. Nurses’ Assoc. 2009, 21, 170.

- Kim, G.K.; Del Rosso, J.Q.; Bellew, S. Skin cancer in Asians: Part 1: Nonmelanoma skin cancer. J. Clin. Aesthetic Dermatol. 2009, 2, 39–42.

- Lee, H.Y.; Chay, W.Y.; Tang, M.B.; Chio, M.T.; Tan, S.H. Melanoma: Differences between Asian and Caucasian Patients. Ann. Acad. Med. 2012, 41, 17–20.

- Chang, J.W.C.; Guo, J.; Hung, C.Y.; Lu, S.; Shin, S.J.; Quek, R.; Ying, A.; Ho, G.F.; Nguyen, H.S.; Dhabhar, B.; et al. Sunrise in melanoma management: Time to focus on melanoma burden in Asia. Asia-Pac. J. Clin. Oncol. 2017, 13, 423–427.

- Stephens, P.M.; Martin, B.; Ghafari, G.; Luong, J.; Nahar, V.K.; Pham, L.; Luo, J.; Savoy, M.; Sharma, M. Skin cancer knowledge, attitudes, and practices among Chinese population: A narrative review. Dermatol. Res. Pr. 2018, 2018, 1965674.

- Zhou, L.; Zhong, Y.; Han, L.; Xie, Y.; Wan, M. Global, regional, and national trends in the burden of melanoma and non-melanoma skin cancer: Insights from the global burden of disease study 1990–2021. Sci. Rep. 2025, 15, 5996.

- Urban, K.; Mehrmal, S.; Uppal, P.; Giesey, R.L.; Delost, G.R. The global burden of skin cancer: A longitudinal analysis from the Global Burden of Disease Study, 1990–2017. JAAD Int. 2021, 2, 98–108.

- Kinyanjui, N.M.; Odonga, T.; Cintas, C.; Codella, N.C.; Panda, R.; Sattigeri, P.; Varshney, K.R. Fairness of classifiers across skin tones in dermatology. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer International Publishing: Cham, Switzerland, 2020; pp. 320–329.

- Daneshjou, R.; Vodrahalli, K.; Novoa, R.A.; Jenkins, M.; Liang, W.; Rotemberg, V.; Ko, J.; Swetter, S.M.; Bailey, E.E.; Gevaert, O.; et al. Disparities in dermatology AI performance on a diverse, curated clinical image set. Sci. Adv. 2022, 8, eabq6147.

- Abraham, A.; Sobhanakumari, K.; Mohan, A. Artificial intelligence in dermatology. J. Ski. Sex. Transm. Dis. 2021, 3, 99–102.

- Kaul, V.; Enslin, S.; Gross, S.A. History of artificial intelligence in medicine. Gastrointest. Endosc. 2020, 92, 807–812.

- Tran, B.X.; Vu, G.T.; Ha, G.H.; Vuong, Q.-H.; Ho, M.-T.; Vuong, T.-T.; La, V.-P.; Ho, M.-T.; Nghiem, K.-C.P.; Nguyen, H.L.T.; et al. Global evolution of research in artificial intelligence in health and medicine: A bibliometric study. J. Clin. Med. 2019, 8, 360.

- Jones, L.D.; Golan, D.; Hanna, S.A.; Ramachandran, M. Artificial intelligence, machine learning and the evolution of healthcare: A bright future or cause for concern? Bone Jt. Res. 2018, 7, 223–225.

- Stiff, K.M.; Franklin, M.J.; Zhou, Y.; Madabhushi, A.; Knackstedt, T.J. Artificial intelligence and melanoma: A comprehensive review of clinical, dermoscopic, and histologic applications. Pigment Cell Melanoma Res. 2022, 35, 203–211.

- Zarfati, M.; Nadkarni, G.N.; Glicksberg, B.S.; Harats, M.; Greenberger, S.; Klang, E.; Soffer, S. Exploring the Role of Large Language Models in Melanoma: A Systematic Review. J. Clin. Med. 2024, 13, 7480.

- Thomas, S.M.; Lefevre, J.G.; Baxter, G.; Hamilton, N.A. Interpretable deep learning systems for multi-class segmentation and classification of non-melanoma skin cancer. Med. Image Anal. 2021, 68, 101915.

- Patel, S.; Wang, J.V.; Motaparthi, K.; Lee, J.B. Artificial intelligence in dermatology for the clinician. Clin. Dermatol. 2021, 39, 667–672.

- Hartmann, T.; Passauer, J.; Hartmann, J.; Schmidberger, L.; Kneilling, M.; Volc, S. Basic principles of artificial intelligence in dermatology explained using melanoma. JDDG: J. Der Dtsch. Dermatol. Ges. 2024, 22, 339–347.

- Lee, B.; Ibrahim, S.A.; Zhang, T. Mobile apps leveraged in the COVID-19 pandemic in East and South-East Asia: Review and content analysis. JMIR Mhealth Uhealth 2021, 9, e32093.

- Furriel, B.C.; Oliveira, B.D.; Prôa, R.; Paiva, J.Q.; Loureiro, R.M.; Calixto, W.P.; Reis, M.R.C.; Giavina-Bianchi, M. Artificial intelligence for skin cancer detection and classification for clinical environment: A systematic review. Front. Med. 2024, 10, 1305954.

- Salinas, M.P.; Sepúlveda, J.; Hidalgo, L.; Peirano, D.; Morel, M.; Uribe, P.; Rotemberg, V.; Briones, J.; Mery, D.; Navarrete-Dechent, C. A systematic review and meta-analysis of artificial intelligence versus clinicians for skin cancer diagnosis. NPJ Digit. Med. 2024, 7, 125.

- Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

- Yu, C.; Yang, S.; Kim, W.; Jung, J.; Chung, K.-Y.; Lee, S.W.; Oh, B. Acral melanoma detection using a convolutional neural network for dermoscopy images. PLoS ONE 2018, 13, e0193321.

- Abbas, Q.; Ramzan, F.; Ghani, M.U. Acral melanoma detection using dermoscopic images and convolutional neural networks. Vis. Comput. Ind. Biomed. Art 2021, 4, 1–12.

- Han, S.S.; Moon, I.J.; Kim, S.H.; Na, J.-I.; Kim, M.S.; Park, G.H.; Park, I.; Kim, K.; Lim, W.; Lee, J.H.; et al. Assessment of deep neural networks for the diagnosis of benign and malignant skin neoplasms in comparison with dermatologists: A retrospective validation study. PLoS Med. 2020, 17, e1003381.

- Huang, K.; He, X.; Jin, Z.; Wu, L.; Zhao, X.; Wu, Z.; Wu, X.; Xie, Y.; Wan, M.; Li, F.; et al. Assistant diagnosis of basal cell carcinoma and seborrheic keratosis in Chinese population using convolutional neural network. J. Healthc. Eng. 2020, 2020, 1713904.

- Han, S.S.; Park, I.; Chang, S.E.; Lim, W.; Kim, M.S.; Park, G.H.; Chae, J.B.; Huh, C.H.; Na, J.-I. Augmented intelligence dermatology: Deep neural networks empower medical professionals in diagnosing skin cancer and predicting treatment options for 134 skin disorders. J. Investig. Dermatol. 2020, 140, 1753–1761.

- Han, S.S.; Kim, M.S.; Lim, W.; Park, G.H.; Park, I.; Chang, S.E. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. J. Investig. Dermatol. 2018, 138, 1529–1538.

- Xie, B.; He, X.; Huang, W.; Shen, M.; Li, F.; Zhao, S. Clinical image identification of basal cell carcinoma and pigmented nevi based on convolutional neural network. J. Cent. South Univ. Med. Sci. 2019, 44, 1063–1070.

- Barzegari, M.; Ghaninezhad, H.; Mansoori, P.; Taheri, A.; Naraghi, Z.S.; Asgari, M. Computer-aided dermoscopy for diagnosis of melanoma. BMC Dermatol. 2005, 5, 8.

- Chang, W.-Y.; Huang, A.; Yang, C.-Y.; Lee, C.-H.; Chen, Y.-C.; Wu, T.-Y.; Chen, G.-S. Computer-aided diagnosis of skin lesions using conventional digital photography: A reliability and feasibility study. PLoS ONE 2013, 8, e76212.

- Ba, W.; Wu, H.; Chen, W.W.; Wang, S.H.; Zhang, Z.Y.; Wei, X.J.; Wang, W.J.; Yang, L.; Zhou, D.M.; Zhuang, Y.X.; et al. Convolutional neural network assistance significantly improves dermatologists’ diagnosis of cutaneous tumours using clinical images. Eur. J. Cancer 2022, 169, 156–165.

- Wang, S.-Q.; Zhang, X.-Y.; Liu, J.; Tao, C.; Zhu, C.-Y.; Shu, C.; Xu, T.; Jin, H.-Z. Deep learning-based, computer-aided classifier developed with dermoscopic images shows comparable performance to 164 dermatologists in cutaneous disease diagnosis in the Chinese population. Chin. Med. J. 2020, 133, 2027–2036.

- Fujisawa, Y.; Otomo, Y.; Ogata, Y.; Nakamura, Y.; Fujita, R.; Ishitsuka, Y.; Watanabe, R.; Okiyama, N.; Ohara, K.; Fujimoto, M. Deep-learning-based, computer-aided classifier developed with a small dataset of clinical images surpasses board-certified dermatologists in skin tumour diagnosis. Br. J. Dermatol. 2019, 180, 373–381.

- Cho, S.; Sun, S.; Mun, J.; Kim, C.; Kim, S.; Youn, S.; Kim, H.; Chung, J. Dermatologist-level classification of malignant lip diseases using a deep convolutional neural network. Br. J. Dermatol. 2020, 182, 1388–1394.

- Minagawa, A.; Koga, H.; Sano, T.; Matsunaga, K.; Teshima, Y.; Hamada, A.; Houjou, Y.; Okuyama, R. Dermoscopic diagnostic performance of Japanese dermatologists for skin tumors differs by patient origin: A deep learning convolutional neural network closes the gap. J. Dermatol. 2021, 48, 232–236.

- Guan, H.; Yuan, Q.; Lv, K.; Qi, Y.; Jiang, Y.; Zhang, S.; Miao, D.; Wang, Z.; Lin, J. Dermoscopy-based Radiomics Help Distinguish Basal Cell Carcinoma and Actinic Keratosis: A Large-scale Real-world Study Based on a 207-combination Machine Learning Computational Framework. J. Cancer 2024, 15, 3350.

- Li, C.-X.; Fei, W.-M.; Shen, C.-B.; Wang, Z.-Y.; Jing, Y.; Meng, R.-S.; Cui, Y. Diagnostic capacity of skin tumor artificial intelligence-assisted decision-making software in real-world clinical settings. Chin. Med. J. 2020, 133, 2020–2026.

- Han, S.S.; Kim, Y.J.; Moon, I.J.; Jung, J.M.; Lee, M.Y.; Lee, W.J.; Lee, M.W.; Kim, S.H.; Navarrete-Dechent, C.; Chang, S.E. Evaluation of artificial intelligence–assisted diagnosis of skin neoplasms: A single-center, paralleled, unmasked, randomized controlled trial. J. Investig. Dermatol. 2022, 142, 2353–2362.

- Xie, F.; Fan, H.; Li, Y.; Jiang, Z.; Meng, R.; Bovik, A. Melanoma classification on dermoscopy images using a neural network ensemble model. IEEE Trans. Med. Imaging 2016, 36, 849–858.

- Yang, Y.; Xie, F.; Zhang, H.; Wang, J.; Liu, J.; Zhang, Y.; Ding, H. Skin lesion classification based on two-modal images using a multi-scale fully-shared fusion network. Comput. Methods Programs Biomed. 2023, 229, 107315.

- Jinnai, S.; Yamazaki, N.; Hirano, Y.; Sugawara, Y.; Ohe, Y.; Hamamoto, R. The development of a skin cancer classification system for pigmented skin lesions using deep learning. Biomolecules 2020, 10, 1123.

- Yang, S.; Oh, B.; Hahm, S.; Chung, K.-Y.; Lee, B.-U. Ridge and furrow pattern classification for acral lentiginous melanoma using dermoscopic images. Biomed. Signal Process. Control 2017, 32, 90–96.

- Iyatomi, H.; Oka, H.; Celebi, M.E.; Ogawa, K.; Argenziano, G.; Soyer, H.P.; Koga, H.; Saida, T.; Ohara, K.; Tanaka, M. Computer-based classification of dermoscopy images of melanocytic lesions on acral volar skin. J. Investig. Dermatol. 2008, 128, 2049–2054.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D.D. A survey of transfer learning. J. Big Data 2016, 3, 1345–1459.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

- Schwalbe, N.; Wahl, B. Artificial intelligence and the future of global health. Lancet 2020, 395, 1579–1586.

- Jones, O.T.; Matin, R.N.; Walter, F.M. Using artificial intelligence technologies to improve skin cancer detection in primary care. Lancet Digit. Health 2025, 7, e8–e10.

- Ashrafzadeh, S.; Nambudiri, V.E. The COVID-19 crisis: A unique opportunity to expand dermatology to underserved populations. J. Am. Acad. Dermatol. 2020, 83, E83–E84.

- Mendi, B.I.; Kose, K.; Fleshner, L.; Adam, R.; Safai, B.; Farabi, B.; Atak, M.F. Artificial Intelligence in the Non-Invasive Detection of Melanoma. Life 2024, 14, 1602.

- Vawda, M.I.; Lottering, R.; Mutanga, O.; Peerbhay, K.; Sibanda, M. Comparing the utility of Artificial Neural Networks (ANN) and Convolutional Neural Networks (CNN) on Sentinel-2 MSI to estimate dry season aboveground grass biomass. Sustainability 2024, 16, 1051.

- Meedeniya, D.; De Silva, S.; Gamage, L.; Isuranga, U. Skin cancer identification utilizing deep learning: A survey. IET Image Process. 2024, 18, 3731–3749.

- Matsoukas, C.; Haslum, J.F.; Söderberg, M.; Smith, K. Is it time to replace CNNs with transformers for medical images? arXiv 2021, arXiv:2108.09038.

- Xin, C.; Liu, Z.; Zhao, K.; Miao, L.; Ma, Y.; Zhu, X.; Zhou, Q.; Wang, S.; Li, L.; Yang, F.; et al. An improved transformer network for skin cancer classification. Comput. Biol. Med. 2022, 149, 105939.

- Zhou, J.; He, X.; Sun, L.; Xu, J.; Chen, X.; Chu, Y.; Zhou, L.; Liao, X.; Zhang, B.; Afvari, S.; et al. Pre-trained multimodal large language model enhances dermatological diagnosis using SkinGPT-4. Nat. Commun. 2024, 15, 5649.

- Yu, Z.; Nguyen, J.; Nguyen, T.D.; Kelly, J.; Mclean, C.; Bonnington, P.; Zhang, L.; Mar, V.; Ge, Z. Early melanoma diagnosis with sequential dermoscopic images. IEEE Trans. Med. Imaging 2021, 41, 633–646.

- Guo, L.N.; Lee, M.S.; Kassamali, B.; Mita, C.; Nambudiri, V.E. Bias in, bias out: Underreporting and underrepresentation of diverse skin types in machine learning research for skin cancer detection—A scoping review. J. Am. Acad. Dermatol. 2022, 87, 157–159.

- Takiddin, A.; Schneider, J.; Yang, Y.; Abd-Alrazaq, A.; Househ, M. Artificial intelligence for skin cancer detection: Scoping review. J. Med. Internet Res. 2021, 23, e22934.

- ISIC 2018: Skin Lesion Analysis Towards Melanoma Detection. 11 June 2020. Available online: https://challenge2018.isic-archive.com/ (accessed on 21 February 2025).

- Carse, J.; Süveges, T.; Chin, G.; Muthiah, S.; Morton, C.; Proby, C.; Trucco, E.; Fleming, C.; McKenna, S. Classifying real-world macroscopic images in the primary–secondary care interface using transfer learning: Implications for development of artificial intelligence solutions using nondermoscopic images. Clin. Exp. Dermatol. 2024, 49, 699–706.

- Sachdev, S.S.; Tafti, F.; Thorat, R.; Kshirsagar, M.; Pinge, S.; Savant, S. Utility of artificial intelligence in dermatology: Challenges and perspectives. IP Indian J. Clin. Exp. Dermatol. 2025, 11, 1–9.

- Melarkode, N.; Srinivasan, K.; Qaisar, S.M.; Plawiak, P. AI-powered diagnosis of skin cancer: A contemporary review, open challenges and future research directions. Cancers 2023, 15, 1183.

- Strzelecki, M.; Kociołek, M.; Strąkowska, M.; Kozłowski, M.; Grzybowski, A.; Szczypiński, P.M. Artificial intelligence in the detection of skin cancer: State of the art. Clin. Dermatol. 2024, 42, 280–295.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).