The Use of Artificial Intelligence for Skin Cancer Detection in Asia—A Systematic Review

Abstract

1. Introduction

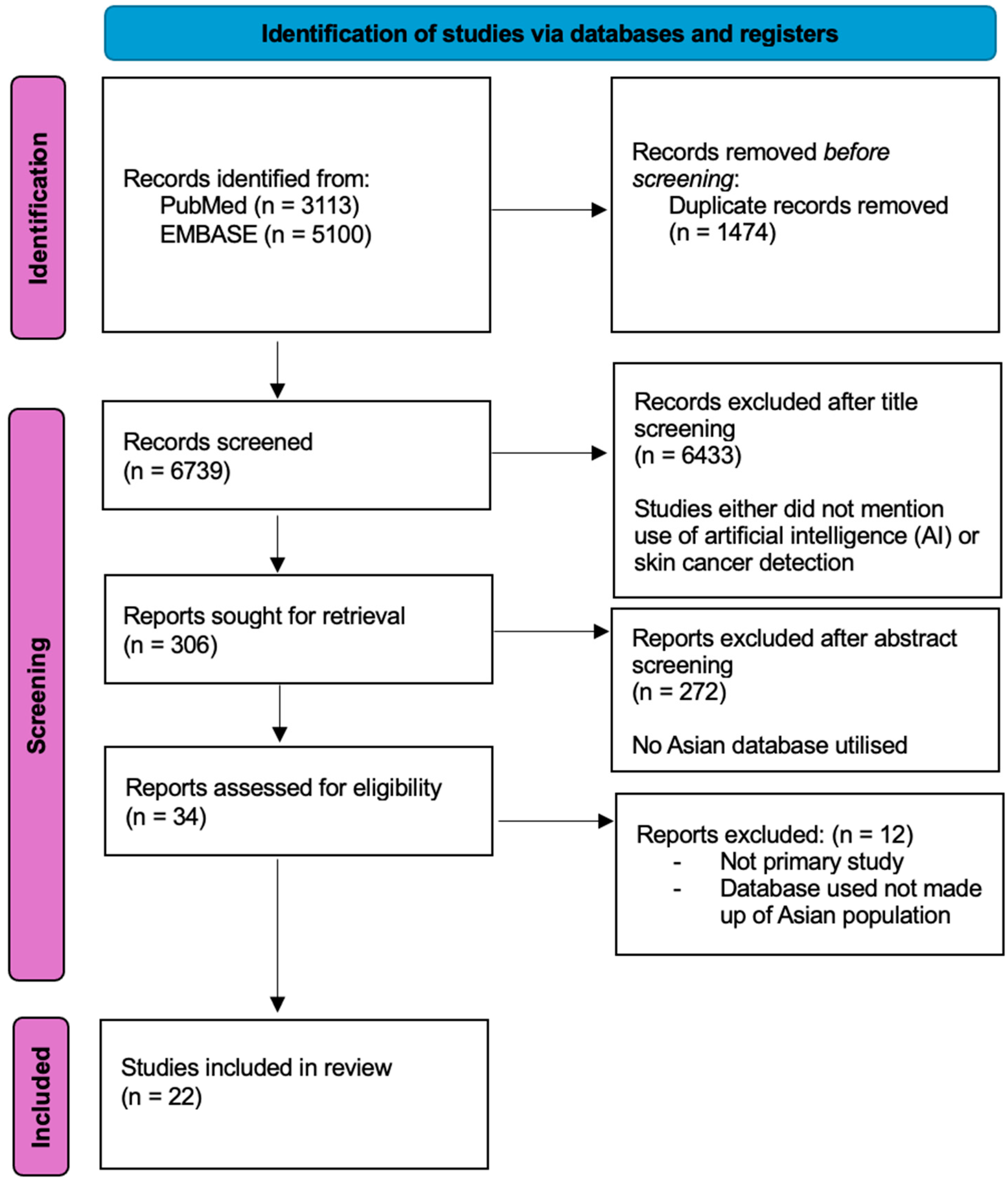

2. Materials and Methods

3. Results

3.1. Characteristics of Studies and Datasets

3.2. Types of AI Models Used

3.3. Study Outcomes

4. Discussion

4.1. Potentials and Benefits of Using AI for Skin Cancer Detection

4.2. Comparison of AI Versus Physicians’ Performance in Skin Cancer Detection

4.3. Comparison of AI Models

4.4. Current Challenges and Development of AI in Asia

4.5. Existing Asian Databases

4.6. Future Research Directions

4.7. Strengths and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Oh, C.C.; Jin, A.; Koh, W.-P. Trends of cutaneous basal cell carcinoma, squamous cell carcinoma, and melanoma among the Chinese, Malays, and Indians in Singapore from 1968–2016. JAAD Int. 2021, 4, 39–45.

- Bradford, P.T. Skin cancer in skin of color. Dermatol. Nurs./Dermatol. Nurses’ Assoc. 2009, 21, 170.

- Kim, G.K.; Del Rosso, J.Q.; Bellew, S. Skin cancer in Asians: Part 1: Nonmelanoma skin cancer. J. Clin. Aesthetic Dermatol. 2009, 2, 39–42.

- Lee, H.Y.; Chay, W.Y.; Tang, M.B.; Chio, M.T.; Tan, S.H. Melanoma: Differences between Asian and Caucasian Patients. Ann. Acad. Med. Singap. 2012, 41, 17–20.

- Chang, J.W.C.; Guo, J.; Hung, C.Y.; Lu, S.; Shin, S.J.; Quek, R.; Ying, A.; Ho, G.F.; Nguyen, H.S.; Dhabhar, B.; et al. Sunrise in melanoma management: Time to focus on melanoma burden in Asia. Asia-Pac. J. Clin. Oncol. 2017, 13, 423–427.

- Stephens, P.M.; Martin, B.; Ghafari, G.; Luong, J.; Nahar, V.K.; Pham, L.; Luo, J.; Savoy, M.; Sharma, M. Skin cancer knowledge, attitudes, and practices among Chinese population: A narrative review. Dermatol. Res. Pr. 2018, 2018, 1965674.

- Zhou, L.; Zhong, Y.; Han, L.; Xie, Y.; Wan, M. Global, regional, and national trends in the burden of melanoma and non-melanoma skin cancer: Insights from the global burden of disease study 1990–2021. Sci. Rep. 2025, 15, 5996.

- Urban, K.; Mehrmal, S.; Uppal, P.; Giesey, R.L.; Delost, G.R. The global burden of skin cancer: A longitudinal analysis from the Global Burden of Disease Study, 1990–2017. JAAD Int. 2021, 2, 98–108.

- Kinyanjui, N.M.; Odonga, T.; Cintas, C.; Codella, N.C.; Panda, R.; Sattigeri, P.; Varshney, K.R. Fairness of classifiers across skin tones in dermatology. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer International Publishing: Cham, Switzerland, 2020; pp. 320–329.

- Daneshjou, R.; Vodrahalli, K.; Novoa, R.A.; Jenkins, M.; Liang, W.; Rotemberg, V.; Ko, J.; Swetter, S.M.; Bailey, E.E.; Gevaert, O.; et al. Disparities in dermatology AI performance on a diverse, curated clinical image set. Sci. Adv. 2022, 8, eabq6147.

- Abraham, A.; Sobhanakumari, K.; Mohan, A. Artificial intelligence in dermatology. J. Ski. Sex. Transm. Dis. 2021, 3, 99–102.

- Kaul, V.; Enslin, S.; Gross, S.A. History of artificial intelligence in medicine. Gastrointest. Endosc. 2020, 92, 807–812.

- Tran, B.X.; Vu, G.T.; Ha, G.H.; Vuong, Q.-H.; Ho, M.-T.; Vuong, T.-T.; La, V.-P.; Ho, M.-T.; Nghiem, K.-C.P.; Nguyen, H.L.T.; et al. Global evolution of research in artificial intelligence in health and medicine: A bibliometric study. J. Clin. Med. 2019, 8, 360.

- Jones, L.D.; Golan, D.; Hanna, S.A.; Ramachandran, M. Artificial intelligence, machine learning and the evolution of healthcare: A bright future or cause for concern? Bone Jt. Res. 2018, 7, 223–225.

- Stiff, K.M.; Franklin, M.J.; Zhou, Y.; Madabhushi, A.; Knackstedt, T.J. Artificial intelligence and melanoma: A comprehensive review of clinical, dermoscopic, and histologic applications. Pigment Cell Melanoma Res. 2022, 35, 203–211.

- Zarfati, M.; Nadkarni, G.N.; Glicksberg, B.S.; Harats, M.; Greenberger, S.; Klang, E.; Soffer, S. Exploring the Role of Large Language Models in Melanoma: A Systematic Review. J. Clin. Med. 2024, 13, 7480.

- Thomas, S.M.; Lefevre, J.G.; Baxter, G.; Hamilton, N.A. Interpretable deep learning systems for multi-class segmentation and classification of non-melanoma skin cancer. Med. Image Anal. 2021, 68, 101915.

- Patel, S.; Wang, J.V.; Motaparthi, K.; Lee, J.B. Artificial intelligence in dermatology for the clinician. Clin. Dermatol. 2021, 39, 667–672.

- Hartmann, T.; Passauer, J.; Hartmann, J.; Schmidberger, L.; Kneilling, M.; Volc, S. Basic principles of artificial intelligence in dermatology explained using melanoma. JDDG: J. Der Dtsch. Dermatol. Ges. 2024, 22, 339–347.

- Lee, B.; Ibrahim, S.A.; Zhang, T. Mobile apps leveraged in the COVID-19 pandemic in East and South-East Asia: Review and content analysis. JMIR Mhealth Uhealth 2021, 9, e32093.

- Furriel, B.C.; Oliveira, B.D.; Prôa, R.; Paiva, J.Q.; Loureiro, R.M.; Calixto, W.P.; Reis, M.R.C.; Giavina-Bianchi, M. Artificial intelligence for skin cancer detection and classification for clinical environment: A systematic review. Front. Med. 2024, 10, 1305954.

- Salinas, M.P.; Sepúlveda, J.; Hidalgo, L.; Peirano, D.; Morel, M.; Uribe, P.; Rotemberg, V.; Briones, J.; Mery, D.; Navarrete-Dechent, C. A systematic review and meta-analysis of artificial intelligence versus clinicians for skin cancer diagnosis. NPJ Digit. Med. 2024, 7, 125.

- Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

- Yu, C.; Yang, S.; Kim, W.; Jung, J.; Chung, K.-Y.; Lee, S.W.; Oh, B. Acral melanoma detection using a convolutional neural network for dermoscopy images. PLoS ONE 2018, 13, e0193321.

- Abbas, Q.; Ramzan, F.; Ghani, M.U. Acral melanoma detection using dermoscopic images and convolutional neural networks. Vis. Comput. Ind. Biomed. Art 2021, 4, 1–12.

- Han, S.S.; Moon, I.J.; Kim, S.H.; Na, J.-I.; Kim, M.S.; Park, G.H.; Park, I.; Kim, K.; Lim, W.; Lee, J.H.; et al. Assessment of deep neural networks for the diagnosis of benign and malignant skin neoplasms in comparison with dermatologists: A retrospective validation study. PLoS Med. 2020, 17, e1003381.

- Huang, K.; He, X.; Jin, Z.; Wu, L.; Zhao, X.; Wu, Z.; Wu, X.; Xie, Y.; Wan, M.; Li, F.; et al. Assistant diagnosis of basal cell carcinoma and seborrheic keratosis in Chinese population using convolutional neural network. J. Healthc. Eng. 2020, 2020, 1713904.

- Han, S.S.; Park, I.; Chang, S.E.; Lim, W.; Kim, M.S.; Park, G.H.; Chae, J.B.; Huh, C.H.; Na, J.-I. Augmented intelligence dermatology: Deep neural networks empower medical professionals in diagnosing skin cancer and predicting treatment options for 134 skin disorders. J. Investig. Dermatol. 2020, 140, 1753–1761.

- Han, S.S.; Kim, M.S.; Lim, W.; Park, G.H.; Park, I.; Chang, S.E. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. J. Investig. Dermatol. 2018, 138, 1529–1538.

- Xie, B.; He, X.; Huang, W.; Shen, M.; Li, F.; Zhao, S. Clinical image identification of basal cell carcinoma and pigmented nevi based on convolutional neural network. J. Cent. South Univ. Med. Sci. 2019, 44, 1063–1070.

- Barzegari, M.; Ghaninezhad, H.; Mansoori, P.; Taheri, A.; Naraghi, Z.S.; Asgari, M. Computer-aided dermoscopy for diagnosis of melanoma. BMC Dermatol. 2005, 5, 8.

- Chang, W.-Y.; Huang, A.; Yang, C.-Y.; Lee, C.-H.; Chen, Y.-C.; Wu, T.-Y.; Chen, G.-S. Computer-aided diagnosis of skin lesions using conventional digital photography: A reliability and feasibility study. PLoS ONE 2013, 8, e76212.

- Ba, W.; Wu, H.; Chen, W.W.; Wang, S.H.; Zhang, Z.Y.; Wei, X.J.; Wang, W.J.; Yang, L.; Zhou, D.M.; Zhuang, Y.X.; et al. Convolutional neural network assistance significantly improves dermatologists’ diagnosis of cutaneous tumours using clinical images. Eur. J. Cancer 2022, 169, 156–165.

- Wang, S.-Q.; Zhang, X.-Y.; Liu, J.; Tao, C.; Zhu, C.-Y.; Shu, C.; Xu, T.; Jin, H.-Z. Deep learning-based, computer-aided classifier developed with dermoscopic images shows comparable performance to 164 dermatologists in cutaneous disease diagnosis in the Chinese population. Chin. Med. J. 2020, 133, 2027–2036.

- Fujisawa, Y.; Otomo, Y.; Ogata, Y.; Nakamura, Y.; Fujita, R.; Ishitsuka, Y.; Watanabe, R.; Okiyama, N.; Ohara, K.; Fujimoto, M. Deep-learning-based, computer-aided classifier developed with a small dataset of clinical images surpasses board-certified dermatologists in skin tumour diagnosis. Br. J. Dermatol. 2019, 180, 373–381.

- Cho, S.; Sun, S.; Mun, J.; Kim, C.; Kim, S.; Youn, S.; Kim, H.; Chung, J. Dermatologist-level classification of malignant lip diseases using a deep convolutional neural network. Br. J. Dermatol. 2020, 182, 1388–1394.

- Minagawa, A.; Koga, H.; Sano, T.; Matsunaga, K.; Teshima, Y.; Hamada, A.; Houjou, Y.; Okuyama, R. Dermoscopic diagnostic performance of Japanese dermatologists for skin tumors differs by patient origin: A deep learning convolutional neural network closes the gap. J. Dermatol. 2021, 48, 232–236.

- Guan, H.; Yuan, Q.; Lv, K.; Qi, Y.; Jiang, Y.; Zhang, S.; Miao, D.; Wang, Z.; Lin, J. Dermoscopy-based Radiomics Help Distinguish Basal Cell Carcinoma and Actinic Keratosis: A Large-scale Real-world Study Based on a 207-combination Machine Learning Computational Framework. J. Cancer 2024, 15, 3350.

- Li, C.-X.; Fei, W.-M.; Shen, C.-B.; Wang, Z.-Y.; Jing, Y.; Meng, R.-S.; Cui, Y. Diagnostic capacity of skin tumor artificial intelligence-assisted decision-making software in real-world clinical settings. Chin. Med. J. 2020, 133, 2020–2026.

- Han, S.S.; Kim, Y.J.; Moon, I.J.; Jung, J.M.; Lee, M.Y.; Lee, W.J.; Lee, M.W.; Kim, S.H.; Navarrete-Dechent, C.; Chang, S.E. Evaluation of artificial intelligence–assisted diagnosis of skin neoplasms: A single-center, paralleled, unmasked, randomized controlled trial. J. Investig. Dermatol. 2022, 142, 2353–2362.

- Xie, F.; Fan, H.; Li, Y.; Jiang, Z.; Meng, R.; Bovik, A. Melanoma classification on dermoscopy images using a neural network ensemble model. IEEE Trans. Med. Imaging 2016, 36, 849–858.

- Yang, Y.; Xie, F.; Zhang, H.; Wang, J.; Liu, J.; Zhang, Y.; Ding, H. Skin lesion classification based on two-modal images using a multi-scale fully-shared fusion network. Comput. Methods Programs Biomed. 2023, 229, 107315.

- Jinnai, S.; Yamazaki, N.; Hirano, Y.; Sugawara, Y.; Ohe, Y.; Hamamoto, R. The development of a skin cancer classification system for pigmented skin lesions using deep learning. Biomolecules 2020, 10, 1123.

- Yang, S.; Oh, B.; Hahm, S.; Chung, K.-Y.; Lee, B.-U. Ridge and furrow pattern classification for acral lentiginous melanoma using dermoscopic images. Biomed. Signal Process. Control 2017, 32, 90–96.

- Iyatomi, H.; Oka, H.; Celebi, M.E.; Ogawa, K.; Argenziano, G.; Soyer, H.P.; Koga, H.; Saida, T.; Ohara, K.; Tanaka, M. Computer-based classification of dermoscopy images of melanocytic lesions on acral volar skin. J. Investig. Dermatol. 2008, 128, 2049–2054.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D.D. A survey of transfer learning. J. Big Data 2016, 3, 1345–1459.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

- Schwalbe, N.; Wahl, B. Artificial intelligence and the future of global health. Lancet 2020, 395, 1579–1586.

- Jones, O.T.; Matin, R.N.; Walter, F.M. Using artificial intelligence technologies to improve skin cancer detection in primary care. Lancet Digit. Health 2025, 7, e8–e10.

- Ashrafzadeh, S.; Nambudiri, V.E. The COVID-19 crisis: A unique opportunity to expand dermatology to underserved populations. J. Am. Acad. Dermatol. 2020, 83, E83–E84.

- Mendi, B.I.; Kose, K.; Fleshner, L.; Adam, R.; Safai, B.; Farabi, B.; Atak, M.F. Artificial Intelligence in the Non-Invasive Detection of Melanoma. Life 2024, 14, 1602.

- Vawda, M.I.; Lottering, R.; Mutanga, O.; Peerbhay, K.; Sibanda, M. Comparing the utility of Artificial Neural Networks (ANN) and Convolutional Neural Networks (CNN) on Sentinel-2 MSI to estimate dry season aboveground grass biomass. Sustainability 2024, 16, 1051.

- Meedeniya, D.; De Silva, S.; Gamage, L.; Isuranga, U. Skin cancer identification utilizing deep learning: A survey. IET Image Process. 2024, 18, 3731–3749.

- Matsoukas, C.; Haslum, J.F.; Söderberg, M.; Smith, K. Is it time to replace CNNs with transformers for medical images? arXiv 2021, arXiv:2108.09038.

- Xin, C.; Liu, Z.; Zhao, K.; Miao, L.; Ma, Y.; Zhu, X.; Zhou, Q.; Wang, S.; Li, L.; Yang, F.; et al. An improved transformer network for skin cancer classification. Comput. Biol. Med. 2022, 149, 105939.

- Zhou, J.; He, X.; Sun, L.; Xu, J.; Chen, X.; Chu, Y.; Zhou, L.; Liao, X.; Zhang, B.; Afvari, S.; et al. Pre-trained multimodal large language model enhances dermatological diagnosis using SkinGPT-4. Nat. Commun. 2024, 15, 5649.

- Yu, Z.; Nguyen, J.; Nguyen, T.D.; Kelly, J.; Mclean, C.; Bonnington, P.; Zhang, L.; Mar, V.; Ge, Z. Early melanoma diagnosis with sequential dermoscopic images. IEEE Trans. Med. Imaging 2021, 41, 633–646.

- Guo, L.N.; Lee, M.S.; Kassamali, B.; Mita, C.; Nambudiri, V.E. Bias in, bias out: Underreporting and underrepresentation of diverse skin types in machine learning research for skin cancer detection—A scoping review. J. Am. Acad. Dermatol. 2022, 87, 157–159.

- Takiddin, A.; Schneider, J.; Yang, Y.; Abd-Alrazaq, A.; Househ, M. Artificial intelligence for skin cancer detection: Scoping review. J. Med. Internet Res. 2021, 23, e22934.

- ISIC 2018: Skin Lesion Analysis Towards Melanoma Detection. 11 June 2020. Available online: https://challenge2018.isic-archive.com/ (accessed on 21 February 2025).

- Carse, J.; Süveges, T.; Chin, G.; Muthiah, S.; Morton, C.; Proby, C.; Trucco, E.; Fleming, C.; McKenna, S. Classifying real-world macroscopic images in the primary–secondary care interface using transfer learning: Implications for development of artificial intelligence solutions using nondermoscopic images. Clin. Exp. Dermatol. 2024, 49, 699–706.

- Sachdev, S.S.; Tafti, F.; Thorat, R.; Kshirsagar, M.; Pinge, S.; Savant, S. Utility of artificial intelligence in dermatology: Challenges and perspectives. IP Indian J. Clin. Exp. Dermatol. 2025, 11, 1–9.

- Melarkode, N.; Srinivasan, K.; Qaisar, S.M.; Plawiak, P. AI-powered diagnosis of skin cancer: A contemporary review, open challenges and future research directions. Cancers 2023, 15, 1183.

- Strzelecki, M.; Kociołek, M.; Strąkowska, M.; Kozłowski, M.; Grzybowski, A.; Szczypiński, P.M. Artificial intelligence in the detection of skin cancer: State of the art. Clin. Dermatol. 2024, 42, 280–295.

| Characteristics | n | Reference |
|---|---|---|
| **Publication year** | | |
| 2005 | 1 | [31] |
| 2008 | 1 | [45] |
| 2013 | 1 | [32] |
| 2017 | 2 | [41,44] |
| 2018 | 2 | [24,29] |
| 2019 | 2 | [30,35] |
| 2020 | 7 | [26,27,28,34,36,39,43] |
| 2021 | 2 | [25,37] |
| 2022 | 2 | [33,40] |
| 2023 | 1 | [42] |
| 2024 | 1 | [38] |
| **Country of publication** | | |
| China | 8 | [27,30,33,34,38,39,41,42] |
| South Korea | 8 | [24,25,26,28,29,36,40,44] |
| Japan | 4 | [35,37,43,45] |
| Taiwan | 1 | [32] |
| Iran | 1 | [31] |
| **AI model employed** | | |
| *Deep learning* | | |
| CNN | 17 | [24,25,26,27,28,29,30,33,34,35,36,37,38,39,40,42,43] |
| ANN | 2 | [31,41] |
| *Shallow learning* | | |
| SVM | 1 | [32] |
| Linear model | 1 | [45] |
| Machine learning (histogram) | 1 | [44] |
| **Classification types** | | |
| Binary (benign vs. malignant) | 16 | [24,25,27,28,30,31,33,35,36,38,39,41,43,44,45] |
| Multiclass | 12 | [26,28,29,32,33,34,35,37,39,40,42,43] |
| **Modality used** | | |
| Dermoscopy | 9 | [24,25,31,34,37,38,41,44,45] |
| Clinical | 11 | [26,27,28,29,30,32,33,35,36,40,43] |
| Clinical + dermoscopy | 2 | [39,42] |
| **Gold-standard diagnosis of skin lesions** | | |
| Histopathological | 11 | [24,25,26,27,31,33,34,35,38,39,44] |
| Mixture of clinical consensus and histopathological confirmation | 6 | [28,36,37,40,43,45] |
| Not specified | 5 | [29,30,32,41,42] |
| **Types of skin cancer evaluated** | | |
| Melanoma (all subtypes) | 16 | |
| Cutaneous melanoma | 12 | [26,29,31,32,33,35,36,37,39,40,41,43] |
| Acral melanoma | 4 | [24,25,44,45] |
| Basal cell carcinoma | 15 | [26,27,29,30,32,33,34,35,36,37,38,39,40,42,43] |
| Squamous cell carcinoma | 7 | [26,29,32,33,35,36,39,40] |
| Intraepithelial carcinoma | 2 | [26,29] |
| Kaposi’s sarcoma | 1 | [32] |
| Adenocarcinoma of the lips | 1 | [36] |
| **Data pre-processing** | | |
| Performed | 12 | [25,27,30,32,33,35,36,37,38,39,41,43] |
| Not performed/not specified | 10 | [24,26,28,29,31,34,40,42,44,45] |
| **Data augmentation** | | |
| Performed | 4 | [24,25,33,35] |
| Not performed/not specified | 18 | [26,27,28,29,30,31,32,34,36,37,38,39,40,41,42,43,44,45] |
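The classification-type rows above are not mutually exclusive: several studies ([26], [35], [39], [43]) report a first-level benign-versus-malignant result alongside a multiclass diagnosis, and the Model Dermatology system in [40] arithmetically averages the outputs of several networks. The Python sketch below illustrates one simple way these pieces can fit together. It assumes that each network emits a probability vector over the same label set, averages those vectors, and sums the probability assigned to malignant classes to obtain a single malignancy score. The class names, the malignant subset, the 0.5 cut-off and the example outputs are hypothetical choices made for illustration. They are not the procedure or data of any included study.

```python
from typing import AbstractSet, List, Sequence

# Hypothetical label set and malignant subset, chosen for illustration only.
CLASSES: List[str] = ["naevus", "seborrheic_keratosis", "bcc", "scc", "melanoma"]
MALIGNANT: AbstractSet[str] = frozenset({"bcc", "scc", "melanoma"})


def average_ensemble(outputs: Sequence[Sequence[float]]) -> List[float]:
    """Arithmetic mean of per-model class-probability vectors (same class order)."""
    if not outputs:
        raise ValueError("need at least one model output")
    n_classes = len(outputs[0])
    if any(len(p) != n_classes for p in outputs):
        raise ValueError("every model must score the same classes")
    return [sum(p[i] for p in outputs) / len(outputs) for i in range(n_classes)]


def top_diagnosis(probs: Sequence[float], classes: Sequence[str] = CLASSES) -> str:
    """Multiclass read-out: the label with the highest averaged probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best]


def malignancy_score(probs: Sequence[float],
                     classes: Sequence[str] = CLASSES,
                     malignant: AbstractSet[str] = MALIGNANT) -> float:
    """Binary read-out: total probability mass assigned to malignant classes."""
    return sum(p for p, c in zip(probs, classes) if c in malignant)


if __name__ == "__main__":
    # Made-up softmax outputs from two hypothetical networks for one lesion.
    model_a = [0.10, 0.05, 0.60, 0.05, 0.20]
    model_b = [0.20, 0.10, 0.40, 0.10, 0.20]
    avg = average_ensemble([model_a, model_b])
    score = malignancy_score(avg)
    # The 0.5 cut-off is an assumption; studies tune their own operating points.
    print(top_diagnosis(avg), round(score, 3), "malignant" if score >= 0.5 else "benign")
```

Averaging probabilities rather than hard labels keeps the ensemble output usable for both the multiclass and the binary read-out. The included studies may weight their models or define malignancy outputs differently.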

| Ref. | AI Type | AI Model | Tasks of AI Model | Dataset | Dataset Sample Size | Skin Cancers Included in Dataset | Classification Types | Dermoscopy/Clinical |
|---|---|---|---|---|---|---|---|---|
| [24] | CNN | VGG-16 | Feature extraction and binary classification | Severance Hospital (Yonsei University Health System) and Dongsan Hospital (Keimyung University Health System) | 350 acral melanomas, 374 benign naevi | AM | Binary (acral melanoma vs. benign naevus) | Dermoscopy |
| [25] | CNN | Deep ConvNet (custom-built), AlexNet, ResNet-18 | Feature extraction and binary classification | Yonsei University Health System, South Korea | 350 acral melanomas, 374 benign naevi | AM | Binary (acral melanoma vs. benign naevus) | Dermoscopy |
| [26] | CNN | Model Dermatology with R-CNN (custom-built): disease classifier (SENet and SE-ResNeXt-50) trained with the help of a region-based CNN (Faster R-CNN) | Cropped-image analysis; blob detector and fine image selector applied to unprocessed images for lesion detection; multiclass classification of diagnosis and calculation of malignancy outputs | Severance Hospital, Korea | 10,426 (Severance dataset A, binary classification); 10,315 (Severance dataset B *, multiclass classification) | MM, BCC, intraepithelial Ca (SCC in situ), SCC | Binary (benign vs. malignant); multiclass (prediction of exact diagnosis) | Clinical |
| [27] | CNN | Inception V3, InceptionResNetV2, DenseNet121, ResNet50 | Binary classification | Xiangya Hospital, Central South University, China | 541 BCC, 684 SK | BCC | Binary (BCC vs. seborrheic keratosis) | Clinical |
| [28] | CNN | Custom CNN | Binary classification (predicting malignancy and suggesting treatment options); multiclass classification of skin disorders | SNU dataset, Korea | 2201 (134 disorders) | NA | Binary; multiclass | Clinical |
| [29] | CNN | Microsoft ResNet-152 (trained with the Asan, MED-NODE, and atlas datasets) | Multiclass classification of cutaneous tumors | Asan Medical Center (>99% Asian patients), test portion of dataset; Hallym, Korea | 1276 (Asan test set); 152 BCC (Hallym test set) | BCC, SCC, intraepithelial Ca, MM | Multiclass | Clinical |
| [30] | CNN | Xception, ResNet50, InceptionV3, InceptionResNetV2, DenseNet121 | Binary classification | Xiangya Skin Disease Dataset, China | 349 BCC, 497 pigmented naevi | BCC | Binary (BCC vs. pigmented naevi) | Clinical |
| [31] | ANN | Visiomed AG (ver. 350), based on an ANN trained using images from a Europe-wide multicenter study (DANAOS) | Binary classification (calculation of the likelihood of malignancy) | Iranian patients; Pakistan, Razi Hospital | 122 pigmented skin lesions | MM | Binary (benign vs. melanoma) | Dermoscopy |
| [32] | SVM | Custom CAD | Feature extraction (shape, color, and texture), ranking of differentiating criteria, feature selection, and multiclass classification | Kaohsiung Medical University, Taiwan | 769 (174 malignant, 595 benign): 110 BCC, 8 MM, 14 Kaposi’s sarcoma, 20 SCC | MM, BCC, Kaposi’s sarcoma, SCC | Multiclass: (a) exact diagnosis; (b) benign vs. malignant vs. indeterminate | Clinical |
| [33] | CNN | EfficientNet-B3 | Binary and multiclass classification | Chinese PLA General Hospital & Medical School | 25,773 for training and validation; 2107 for testing (2178 BCC, 1108 SCC, 1030 MM) | BCC, SCC, MM | Binary; multiclass (disease-specific classification) | Clinical |
| [34] | CNN | GoogLeNet Inception v3 | Multiclass classification | Peking Union Medical College Hospital, China | 378 BCC | BCC | Multiclass | Dermoscopy |
| [35] | CNN | GoogLeNet DCNN | Binary and multiclass classification | Dermatology Division of Tsukuba Hospital, Japan | 6009 (some showing the same lesion from different angles): 4867 for training, 1142 for testing | SCC, BCC, MM | 1st level: binary (benign vs. malignant); 3rd level: multiclass | Clinical |
| [36] | CNN | Inception-ResNet-V2 | Binary classification of lip disorders | Seoul National University Hospital (SNUH), Seoul National University Bundang Hospital (SNUBH), SMG-SNU Boramae Medical Center | 1629 for training (743 malignant, 886 benign); 344 from SNUH for internal validation; 281 from SNUBH and SMG-SNU for external validation | MM, BCC, SCC, adenocarcinoma of the lips | Binary (benign vs. malignant) | Clinical |
| [37] | CNN | Inception-ResNet-V2 | Multiclass (four-disease) classification | Shinshu database | 594 in training set (49 MM, 132 BCC); 50 in test set (12 MM, 12 BCC) | MM, BCC | Multiclass (four-disease classifier) | Dermoscopy |
| [38] | Machine learning | Combination model developed from 207 machine learning models, integrating XGBoost with Lasso regression for data analysis | Binary classification | DAYISET 1 (First Affiliated Hospital of Dalian Medical University), used as the external validation set | 63 (32 BCC, 31 actinic keratosis) | BCC | Binary (BCC vs. AK) | Dermoscopy |
| [39] | CNN | Youzhi AI software (Shanghai Maise Information Technology Co., Ltd., Shanghai, China), based on a GoogLeNet Inception v4 CNN | Binary classification | China Skin Image Database (CSID), China–Japan Friendship Hospital, China | 106 (4 MM, 5 SCC, 24 BCC) | MM, SCC, BCC | 1st level: binary (benign vs. malignant); 2nd level: multiclass (14 types of skin tumors) | Clinical and dermoscopy |
| [40] | CNN | Model Dermatology (custom-built): output values of SENet, SE-ResNeXt-101, SE-ResNeXt-50, ResNeSt-101, and ResNeSt-50 arithmetically averaged to obtain the final model output | Multiclass classification | Asan dataset, South Korea (and web dataset) | 120,780 clinical images from the Asan dataset for training; 17,125-image subset of the Asan dataset for validation | MM, SCC, BCC | Multiclass | Clinical |
| [41] | ANN | Neural network meta-ensemble model: combination of back-propagation (BP) neural networks with fuzzy neural networks to increase individual network diversity | Segmentation, feature extraction, and binary classification | Xanthous set from the General Hospital of the Air Force of the Chinese People’s Liberation Army, China | 240 images (80 malignant, 160 benign) | MM | Binary (benign vs. malignant) | Dermoscopy |
| [42] | CNN | Proposed MFF-Net with an EfficientNet backbone in a single modality (a multi-scale fusion structure that combines deep and shallow features within individual modalities to reduce the loss of spatial information in high-level feature maps) | Feature extraction, multiclass classification | Peking Union Medical College Hospital, China | 3853 image pairs | BCC | Multiclass (BCC vs. naevus, seborrheic keratosis, wart) | Clinical and dermoscopy |
| [43] | CNN | Faster R-CNN with a VGG-16 backbone | Binary and multiclass classification | National Cancer Center Hospital, Tokyo, Japan | 5846 brown-to-black pigmented skin lesions (1611 MM, 401 BCC) | MM, BCC | Binary (benign vs. malignant); multiclass (6-class classification) | Clinical |
| [44] | Machine learning | Custom algorithm (Gaussian derivative filtering plus a histogram of width ratios to classify lesions according to the ridge/furrow width ratio) | Binary classification | Severance Hospital, Yonsei University Health System, Korea | 297 images (184 acral melanomas, 113 benign naevi) | AM | Binary (acral melanoma vs. benign naevus) | Dermoscopy |
| [45] | Machine learning | Linear classifier model (custom-built) using color, symmetry, border, and texture features | Automated tumor area extraction; binary classification (melanoma vs. naevus, plus three pattern detectors) | Japanese hospitals (Keio University Hospital, Toranomon Hospital, Shinshu University Hospital, Inagi Hospital) and two European universities (EDRA CD-ROM database); only the Japanese results are included in this review | 213 images (176 naevi, 37 melanomas) | AM | Binary (melanoma vs. benign naevus) | Dermoscopy |
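The outcomes reported in the included studies rest mainly on three measures: sensitivity (the proportion of malignant lesions called malignant), specificity (the proportion of benign lesions called benign), and the area under the ROC curve (AUC). Study [36] additionally reports specificity at the dermatologists’ mean sensitivity, that is, at a fixed operating point. The Python sketch below, using only the standard library, shows how these quantities can be computed from a model’s malignancy scores. The label convention (1 = malignant, 0 = benign), the toy scores and the threshold-scanning rule used for the fixed-sensitivity operating point are assumptions made for illustration. They are not taken from any included study.

```python
from typing import Optional, Sequence, Tuple

# Convention assumed here: label 1 = malignant (positive), 0 = benign (negative).
# All functions assume at least one malignant and one benign case.


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FN, TN, FP) for binary labels and binary predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp, fn, tn, fp


def sensitivity_specificity(y_true: Sequence[int], scores: Sequence[float],
                            threshold: float) -> Tuple[float, float]:
    """Call a lesion malignant when its score is >= threshold."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp, fn, tn, fp = confusion_counts(y_true, y_pred)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(random malignant score > random benign score); ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def specificity_at_sensitivity(y_true: Sequence[int], scores: Sequence[float],
                               target: float) -> Optional[float]:
    """Best specificity among candidate thresholds whose sensitivity is >= target."""
    best: Optional[float] = None
    for thr in sorted(set(scores)):
        sn, sp = sensitivity_specificity(y_true, scores, thr)
        if sn >= target and (best is None or sp > best):
            best = sp
    return best


if __name__ == "__main__":
    # Toy data: three malignant and four benign lesions.
    y = [1, 1, 1, 0, 0, 0, 0]
    s = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1]
    print(roc_auc(y, s))                           # 11/12, about 0.917
    print(sensitivity_specificity(y, s, 0.5))      # (0.666..., 0.75)
    print(specificity_at_sensitivity(y, s, 1.0))   # 0.75
```

AUC does not depend on a cut-off, whereas sensitivity and specificity change with the chosen threshold. This is one reason why sensitivity and specificity figures from different studies are not directly comparable.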

| Ref. | Evaluation Metrics (for AI Models and Clinicians) | Outcomes | ||

|---|---|---|---|---|

| AUC | Sensitivity | Specificity | ||

| [24] | CNN | CNN | CNN | Accuracy of CNN comparable to experts but surpasses non-experts |

| (Group A) 83.51% | (Group A) 92.57% | (Group A) 75.39% | ||

| (Group B) 80.23% | (Group B) 92.57% | (Group B) 68.16% | ||

| (Average) 81.87% | (Average) 92.57% | (Average) 71.78% | ||

| Experts | Experts | Experts | ||

| (Group A) 81.08% | (Group A) 94.88% | (Group A) 68.72% | ||

| (Group B) 81.64% | (Group B) 98.29% | (Group B) 65.36% | ||

| Non-experts | Non-experts | Non-experts | ||

| (Group A) 67.84% | (Group A) 41.71% | (Group A) 91.28% | ||

| (Group B) 62.71% | (Group B) 48% | (Group B) 77.10% | ||

| [25] | (Proposed ConvNet) 91.0% | NA | NA | CNN sensitivity is higher than human experts and can be used for early diagnosis of AM by non-experts. Transfer learning achieved better results than custom-built AI model. |

| (ResNet-18) 97.5% | ||||

| (AlexNet) 95.9% | ||||

| [26] | (CNN binary) 86.3% | (CNN Binary) 62.7% | (CNN Binary) 90% | Performances of algorithms were comparable to dermatologists in experimental setting but inferior to dermatologists in real-world practice. This could be due to the limited data relevancy and diversity involved in differential diagnoses in practice. |

| (Clinician binary) 70.2% | (Clinician binary) 95.6% | |||

| (CNN multiclass): 66.9% | (CNN multiclass): 87.4% | |||

| (CNN BCC) 66.6% | (CNN BCC) 90% | |||

| (Clinician BCC) 74% | (Clinician BCC) 95.6% | |||

| (CNN SCC) 70.9% | (CNN SCC) 90% | |||

| (Clinician SCC) 65.8% | (Clinician SCC) 95.6% | |||

| (CNN MM) 61.4% | (CNN MM) 90% | |||

| (Clinician MM) 68.7% | (Clinician MM) 95.6% | |||

| [27] | Trained from scratch | Trained from scratch | Trained from scratch | InceptionResNetV2 outperformed average of 13 general dermatologists, comparable to average of 8 expert dermatologists in ability of clinical image classification. Transfer learning achieved better results than custom-built AI model. |

| (Inception V3) 89.4% | (Inception V3) 85.2% | (Inception V3) 84.6% | ||

| (Inception ResNetV2) 89.5% | (Inception ResNetV2) 85.4% | (Inception ResNetV2) 85.9% | ||

| (DenseNet 121) 89% | (DenseNet 121) 84.6% | (DenseNet 121) 84.8% | ||

| (ResNet50) 87.9% | (ResNet50) 78.3% | (ResNet50) 88.3% | ||

| Fine-tuned with ImageNet | Fine-tuned with ImageNet | Fine-tuned with ImageNet | ||

| (Inception V3) 89.6% | (Inception V3) 85.2% | (Inception V3) 83.7% | ||

| (Inception ResNetV2) 91.9% | (Inception ResNetV2) 79.1% | (Inception ResNetV2) 91.5% | ||

| (DenseNet 121) 91.3% | (DenseNet 121) 88.5% | (DenseNet 121) 81.6% | ||

| (ResNet50) 90.5% | (ResNet50) 80.8% | (ResNet50) 89.4% | ||

| [28] | (CNN Binary) 93.70% | NA | NA | CNN showed similar performance as dermatology residents but slightly lower than dermatologists. |

| (CNN multiclass) 97.8% | ||||

| [29] | Asan dataset | Asan dataset | Asan dataset | Varied subtypes in BCC among different ethnic groups can explain the lower AUC obtained by AI model trained with Asian dataset when validated in an Edinburgh test set. |

| (BCC) 96% | (BCC) 88.8% | (BCC) 91.7% | ||

| (SCC) 83% | (SCC) 82% | (SCC) 74.3% | ||

| (MM) 96% | (MM) 91% | (MM) 90.4% | ||

| (Intraepithelial Ca) 82% | (Intraepithelial Ca) 77.7% | (Intraepithelial Ca) 74.9% | ||

| Hallym dataset | AI showed comparable performance to 16 dermatologists in ability to classify 12 skin tumor types and was superior in diagnosis of BCC. | |||

| (BCC) 87.1% | ||||

| [30] | (InceptionV3): | (InceptionV3): | (InceptionV3): | Xception had the best performance out of the five mainstream CNNs. Ability of Xception model to identify clinical images of BCC and nevi was comparable to that of professional dermatologists. |

| 94.4% | 93.4% | 88.1% | ||

| (ResNet50): | (ResNet50): | (ResNet50): | ||

| 91.9% | 86.3% | 89.7% | ||

| (InceptionResNetV2): | (InceptionResNetV2): | (InceptionResNetV2): | ||

| 96.3% | 90% | 93.6% | ||

| (DenseNet121): | (DenseNet121): | (DenseNet121): | ||

| 96.3% | 90.8% | 93.2% | ||

| (Xception): | (Xception): | (Xception): | ||

| 97.4% | 94.8% | 93.0% | ||

| [31] | NA | (Melanoma) 83% | (Melanoma): 96% | Diagnostic accuracy of the computer-aided dermoscopy system was at the level of clinical examination by dermatologists with naked eyes and can help to reduce unnecessary excisions or improve early melanoma detection. It serves to improve diagnostic accuracy of inexperienced clinicians in evaluation of pigmented skin lesions. |

| [32] | (CADx) 90.64% | (CADx) 85.63% (Physician) 83.33% | (CADx) 87.65% (Physician) 85.88% | CADx performed similarly to that of dermatologists in ability to classify both melanocytic and non-melanocytic skin lesions by utilizing conventional digital macrophotographs. |

| BCC: 90% | ||||

| MM: 75% | ||||

| Kaposi’s sarcoma: 71.4% | ||||

| SCC: 80% | ||||

| (Physician) 85.31% | ||||

| BCC: 88.1% | ||||

| MM: 75% | ||||

| Kaposi’s sarcoma: 78.5% | ||||

| SCC: 85% | ||||

| [33] | (CNN) general top diagnosis: 78.45% (BCC: 78.0%; SCC: 91%; MM: 87%); (Dermatologists without CNN assistance) general: 62.78% (BCC: 55%; SCC: 64%; MM: 65%); (Dermatologists with CNN assistance) general: 76.6% (BCC: 71%; SCC: 82%; MM: 82%) | Binary (dermatologists without vs. with CNN assistance): Sn 83.21% vs. 89.56% | Binary (dermatologists without vs. with CNN assistance): Sp 80.92% vs. 87.90% | Dermatologists’ performance improved with CNN assistance, especially for lesions with similar visual appearances. |

| [34] | (CNN) multiclass average: 81.49%; BCC: 97.2% | (CNN) BCC: 80%; (Dermatologists) BCC: 77% | (CNN) BCC: 100%; (Dermatologists) BCC: 96.2% | Based on a single dermoscopic image, the CNN’s performance in classifying skin tumors was comparable to that of 164 board-certified dermatologists. |

| [35] | (CNN) binary/1st-level classification: 93.4%; multiclass/3rd-level classification: general 74.5% (SCC: 82.5%; BCC: 80.3%; MM: 72.6%); (Dermatologists) binary/1st-level classification: BCDs 85.3%, dermatology trainees 74.4%; multiclass/3rd-level classification: BCDs vs. trainees 59.7% vs. 41.7% (BCDs: SCC 59.5%; BCC 64.8%; MM 72.8%) | (CNN) binary/1st-level classification: 96.30% | (CNN) binary/1st-level classification: 89.50% | The trained DCNN classified skin tumors more accurately than board-certified dermatologists (BCDs) on the basis of a single clinical image. |

| [36] | (DCNN, test set): 82.7%; (DCNN, non-SNUH test set): 77.4%; (DCNN, combined): 81.1% | (DCNN, test set): 75.5%; (DCNN, non-SNUH test set): 70.2%; (DCNN, combined): 73.7% | (DCNN, test set): 80.3%; (DCNN, non-SNUH test set): 75.9%; (DCNN, combined): 77.9% | At the dermatologists’ mean sensitivity, the algorithm’s specificity was significantly higher than that of the human readers (p < 0.001). The sensitivity and specificity of dermatology residents, non-specialists, and medical students improved after they referred to the DCNN output, whereas those of board-certified dermatologists did not improve significantly. |

| [37] | (Deep neural network) Shinshu set, MM: 75%; Shinshu set, BCC: 75%; general AUC: 94%; (Dermatologists) Shinshu set, MM: 65%; Shinshu set, BCC: 80% | (Dermatologists, Shinshu set): 85.30% | (Deep neural network, Shinshu set): 96.2%; (Dermatologists, Shinshu set): 92.20% | DNN diagnostic performance improved with training: sensitivity rose from 0.875 to 0.917 and accuracy of malignancy prediction from 0.920 to 0.940, while specificity was unchanged at 0.962. The dermoscopic diagnostic performance of Japanese dermatologists for skin tumors diminished for patients from non-local populations, particularly in relation to the dominant skin type; the DNN may help close this gap in clinical settings. |

| [38] | (DAYISET 1/DATASET 4): 63.4% | (DAYISET BCC): 68.8% | NA | The model demonstrated high accuracy in discrimination and diagnosis of BCC and AK and can assist less experienced dermatologists in distinguishing skin lesions. |

| [39] | Binary classification: AI (dermoscopic + clinical): 85.6%; AI (dermoscopic): 88.7%; AI (clinical): 83.0%; dermatologists (dermoscopic + clinical): 83.3%; dermatologists (dermoscopic): 89.6%; dermatologists (clinical): 79.5%. Multiclass classification: AI (dermoscopic + clinical): 73.1%; AI (dermoscopic): 76.4%; AI (clinical): 68.9%; dermatologists (dermoscopic + clinical): 61.4%; dermatologists (dermoscopic): 63.4%; dermatologists (clinical): 59.4% | Binary: AI (clinical + dermoscopic): 74.8%; AI (clinical): 71.1%; AI (dermoscopic): 78.6% | Binary: AI (clinical + dermoscopic): 93.0%; AI (clinical): 90.6%; AI (dermoscopic): 95.3% | There was no statistically significant difference in diagnostic accuracy between the Youzhi AI software and dermatologists in either of the two modes. The diagnostic accuracy of the Youzhi AI software was lower in practical work, possibly because dermoscopic images collected in clinical work tend to be less typical, which increases the diagnostic difficulty for both dermatologists and the software. |

| [40] | (AI) BCC: 53.3%; MM: 66.7%; SCC: 50%. Top-1 accuracy: AI standalone 50.5%; AI-augmented vs. unaided (overall) 53.9% vs. 43.8%; GPs with vs. without AI 54.7% vs. 29.7%; residents with vs. without AI 53.1% vs. 57.3% | Sensitivity (based on top-3 predictions for malignancy determination): AI-assisted group 84.6%; unaided group 75.6% | NA | The multiclass AI algorithm augmented the diagnostic accuracy of non-expert physicians in dermatology. |

| [41] | 94.17% | 95% | NA | The proposed lesion border features are particularly useful for differentiating malignant from benign skin lesions. |

| [42] | EfficientNet-B4 without multi-scale integration (BCC): dermoscopic 78.4%; clinical 84.5%; dermoscopic + clinical 74%. EfficientNet-B4 with multi-scale integration (BCC): dermoscopic 80.1%; clinical 83%; dermoscopic + clinical 76.2% | NA | NA | The multi-scale fusion structure, which integrates features of various scales within a single modality, demonstrates the importance of intra-modality relations in both clinical and dermoscopic images. |

| [43] | (FRCNN) MM: 80.1%; BCC: 81.8%; binary: 91.5%. (Board-certified dermatologists, BCDs) MM: 83.3%; BCC: 78.8%; binary: 86.6%. (Trainees, TRNs) MM: 80.1%; BCC: 65.9%; binary: 85.3%. Multiclass (six-class) classification, in which FRCNN was more accurate than BCDs and TRNs: (FRCNN) 86.2%; (BCDs) 79.5%; (TRNs) 75.1% | (FRCNN) 83.3%; (BCDs) 86.3%; (TRNs) 85.3% | (FRCNN) 94.5%; (BCDs) 86.6%; (TRNs) 85.9% | For specific diagnoses, FRCNN performed similarly to BCDs and slightly better than TRNs; for differentiating malignant from benign lesions, FRCNN had the highest accuracy. |

| [44] | 99.70% | 100% | 99.1% | The proposed algorithm agreed with dermatologists’ observations and achieved high accuracy. |

| [45] | 93.30%; (MM, images) 80.6% | 93.3% | 91.1% | NA |
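
The studies above report sensitivity, specificity and accuracy, as well as specificity at a matched sensitivity ([36]) and top-1 accuracy ([40]). As a minimal sketch of how these quantities are defined (Python; the function names, the 1 = malignant encoding and the toy data are assumptions for illustration, not code or data from any reviewed study), the functions below compute the first three from a binary confusion matrix, search score thresholds for the best specificity that still reaches a target sensitivity such as the readers' mean sensitivity, and compute top-k accuracy for a multiclass classifier.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Sensitivity, specificity and accuracy for a binary task.

    Assumed (hypothetical) encoding: 1 = malignant, 0 = benign.
    Both classes must be present in y_true, otherwise a ratio divides by zero.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # malignant, called malignant
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # malignant, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # benign, called benign
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign, called malignant
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(pairs),
    }


def specificity_at_sensitivity(
    y_true: Sequence[int], scores: Sequence[float], target_sensitivity: float
) -> float:
    """Highest specificity among score thresholds that reach the target sensitivity.

    A lesion is called malignant when its score is >= the threshold; every
    distinct score is tried as a candidate threshold.
    """
    best = 0.0
    for threshold in sorted(set(scores)):
        y_pred = [1 if s >= threshold else 0 for s in scores]
        m = binary_metrics(y_true, y_pred)
        if m["sensitivity"] >= target_sensitivity:
            best = max(best, m["specificity"])
    return best


def top_k_accuracy(
    y_true: Sequence[int], class_scores: Sequence[Sequence[float]], k: int
) -> float:
    """Fraction of cases whose true class is among the k highest-scoring classes."""
    hits = 0
    for label, scores in zip(y_true, class_scores):
        # Class indices ordered from highest to lowest score.
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(y_true)


if __name__ == "__main__":
    # Toy data invented for illustration; not taken from any reviewed study.
    labels = [1, 1, 1, 0, 0, 0, 0, 0]
    print(binary_metrics(labels, [1, 1, 0, 0, 0, 0, 1, 0]))
    scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.5, 0.1]
    print(specificity_at_sensitivity(labels, scores, 1.0))
    # Hypothetical three-class task (0 = BCC, 1 = SCC, 2 = MM).
    probs = [[0.7, 0.2, 0.1], [0.5, 0.3, 0.2], [0.1, 0.3, 0.6]]
    print(top_k_accuracy([0, 1, 2], probs, 1), top_k_accuracy([0, 1, 2], probs, 2))
```

On the toy data, the first call gives a sensitivity of 2/3, a specificity of 0.8 and an accuracy of 0.75; the threshold search returns a specificity of 0.6 at 100% sensitivity; and top-1 and top-2 accuracy are 2/3 and 1.0. Because accuracy on a single binary task equals prevalence × sensitivity + (1 − prevalence) × specificity (here 3/8 × 2/3 + 5/8 × 0.8 = 0.75), it always lies between the two. Maximizing specificity subject to a sensitivity floor is only one simple convention for choosing the operating point; individual studies may instead interpolate along the ROC curve.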

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ang, X.L.; Oh, C.C. The Use of Artificial Intelligence for Skin Cancer Detection in Asia—A Systematic Review. Diagnostics 2025, 15, 939. https://doi.org/10.3390/diagnostics15070939
