1. Introduction

Dental caries are among the most prevalent dental diseases worldwide. Because caries are progressive, early detection is essential: prompt intervention can greatly reduce tooth damage and the subsequent need for extensive treatment [1]. Timely detection of carious lesions allows clinicians to apply preventive measures, including fluoride treatments and minimally invasive restorative techniques, thereby preserving tooth structure and function [2]. Moreover, managing caries in their early stages not only improves individual oral health outcomes but also reduces the overall burden on healthcare systems by lowering the demand for time-consuming and expensive treatments such as root canal therapy or extraction. This highlights the need for clinical decision-support tools that help professionals evaluate these lesions.

Carious lesions are typically diagnosed through visual inspection and radiographic analysis. Imaging, particularly bitewing radiographs (BRs), is essential for identifying interproximal caries, because the location of these lesions makes them difficult to detect clinically. BRs provide a focused view that supports a more precise evaluation of interproximal decay; they typically cover the area from the distal surface of the canines to the distal surface of the last molars. Carious lesions appear in bitewing films as radiolucent regions, reflecting their reduced X-ray absorption [3]. However, radiographic assessment of lesion depth may have low sensitivity, particularly for early-stage lesions [4]. In addition, the clarity of BRs can be affected by noise and artifacts, overlapping structures, and cervical burnout [5], all of which may obscure the diagnosis. Consequently, supplementary methods based on computational analysis could substantially improve the reliability of these evaluations.

In recent years, artificial intelligence algorithms, particularly deep learning (DL) methods, have gained traction in dental medicine, yielding impressive results [6,7,8,9]. Convolutional neural networks (CNNs) applied to radiographs have proven effective as supplementary diagnostic tools for identifying a variety of conditions, such as periodontal bone loss [10], apical lesions [11], and maxillary sinusitis [12]. CNNs are a class of DL algorithms designed to analyze visual data, especially images, by automatically extracting hierarchical features across successive processing layers. Their convolutional layers learn to identify local patterns and features, which makes them highly successful in computer vision tasks [13].

Caries detection has been approached with diverse strategies, including classification, object detection, and segmentation. Classification assigns a label to an image or tooth, such as the presence or absence of caries; object detection localizes lesions spatially by generating a bounding box (Bbox) around each region of interest; and segmentation delineates carious lesions in detail by highlighting the pixels of the targeted region. Each contributes distinctly to a thorough evaluation of dental caries.

Classification: Mao et al. [14] developed a model to classify restorations and caries. Several image-processing techniques were used to isolate 700 teeth from 278 BRs, comprising 350 targeted and 350 non-targeted specimens. Each tooth was then split in half and used as input to the classification model. Four DL networks were assessed (ResNet50, GoogLeNet, VGG19, and AlexNet), of which AlexNet achieved the best accuracy, 95.56%. Moran et al. [15] also used image-processing methods to prepare the data for classification, extracting teeth from 112 BRs. Each tooth was labeled as one of three classes: normal (305 teeth), incipient caries (113 teeth), or advanced caries (62 teeth). The study evaluated two DL-based classifiers, Inception and ResNet, with Inception achieving the highest accuracy of 73.3%.

Object detection: Object detection models are typically divided into two main categories: one-stage and two-stage models. One-stage models, such as YOLO and SSD, perform detection in a single pass, predicting Bboxes and class probabilities from the entire image simultaneously, which increases processing speed. In contrast, two-stage models, such as R-CNN, Fast R-CNN, and Faster R-CNN, first generate region proposals and then refine them to classify objects and predict their Bboxes, which is typically slower but more accurate. Several studies used different versions of YOLO to detect caries in BRs. Bayraktar and Ayan [16] employed YOLOv3 to detect proximal caries without assessing their severity. A total of 1000 BRs were used to train and evaluate the model, which achieved a precision of 0.866 and a recall of 0.723. YOLOv3 was also used in [17] to detect caries and identify their severity according to ICCMS™. The BRs were annotated by drawing a Bbox around each tooth and assigning it one of the following classes: non-carious, initial1, initial2, initial3, moderate4, extensive5, and extensive6. This annotation method can cause confusion when two carious lesions of different severity are present on the same tooth. The same annotation method was followed in a later study [18] that used a pre-trained YOLOv7; the authors also assessed YOLOv3 and found that YOLOv7 performed better. In [19], a Faster R-CNN was used to detect proximal caries, attaining precision, recall, and F1-score values of 0.86, 0.89, and 0.87, respectively, without considering caries severity. Ayhan et al. [20] introduced a YOLOv7-based model that simultaneously detects proximal caries and numbers the teeth. The F1-score, precision, and recall for matching caries areas with tooth numbers were 0.842, 0.851, and 0.834, respectively. However, the severity of carious lesions was not considered.

Segmentation: Several studies used the U-Net architecture to segment caries by highlighting the targeted objects. U-Net was designed specifically for semantic segmentation, which aims to assign each pixel in an image to a class [21]. Lee et al. [22] designed a U-Net-based model to segment dental structures, including enamel, dentin, pulp, restorations, gutta-percha, and caries. A total of 354 BRs were used, and the model achieved precision, recall, and F1-score values of 0.633, 0.65, and 0.641, respectively. Although various forms of caries were included, their severity was not assessed. Cantu et al. [23] used U-Net to detect caries of different severity, achieving a precision of 0.7 and a recall of 0.75. However, severity was not distinguished in the output: all predicted caries were shown in the same color. In contrast, Ahmed et al. [24] added a step to classify caries severity into five categories, using three different encoders: VGG19, ResNet50, and ResNeXt101. Their best model, with the ResNeXt101 encoder, attained a mean F1-score of 0.612 and a mean intersection over union (IoU) of 0.566.
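The segmentation results above are reported as pixel-level overlap scores. As a minimal sketch, assuming binary masks stored as nested Python lists (a hypothetical representation chosen to keep the example dependency-free), the IoU and F1-score (equivalently, the Dice coefficient) of a predicted mask against a reference mask can be computed from pixel counts. Mean values such as those of Ahmed et al. would average these per-mask scores over images or classes; the exact averaging used in the cited studies is not reproduced here.

```python
from typing import List, Tuple

Mask = List[List[int]]  # hypothetical format: 1 = lesion pixel, 0 = background


def mask_iou_f1(pred: Mask, truth: Mask) -> Tuple[float, float]:
    """Pixel-level IoU and F1-score (Dice) between two binary masks of equal size."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # lesion pixel present in both masks
            elif p:
                fp += 1  # predicted lesion pixel absent from the reference
            elif t:
                fn += 1  # reference lesion pixel missed by the prediction
    if tp + fp + fn == 0:
        return 1.0, 1.0  # both masks empty: treat as perfect agreement
    iou = tp / (tp + fp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    return iou, f1


pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
truth = [[0, 1, 1],
         [0, 1, 0],
         [0, 1, 0]]
print(mask_iou_f1(pred, truth))  # (0.6, 0.75)
```

Because F1 = 2·IoU / (1 + IoU) for a single mask pair, the two scores always rank predictions the same way; they differ only in how harshly partial overlap is penalized.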

YOLO, an acronym for “You Only Look Once”, is one of the best-known fast object detection algorithms. It is designed to detect and localize objects in images or video efficiently by generating bounding boxes (Bboxes) around them [25]. Recent versions of YOLO extend beyond conventional object detection to a wider range of computer vision tasks, including instance segmentation, pose/keypoint estimation, and classification. YOLO-based instance segmentation extends conventional YOLO detection by not only recognizing objects in an image but also outlining their shapes. To do so, the YOLO design was modified to predict both Bboxes and pixel-level masks for each detected object, which required additional output layers dedicated to mask prediction. For each detected object, the model produces a binary mask marking the pixels that belong to that object; this is generally accomplished by a CNN that learns to generate the masks during training. During training, the model learns to optimize both the Bbox coordinates and the associated masks. At inference, the model outputs Bboxes with their confidence scores and masks, and each predicted mask is cropped to its Bbox to yield a segmentation of the corresponding object. Post-processing with non-maximum suppression (NMS) removes redundant, overlapping predictions so that each object is represented once. Through these steps, YOLO performs instance segmentation, providing information on both the presence and the shape of objects in an image.
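The NMS step described above can be sketched as a greedy procedure: keep the highest-scoring box, discard every remaining box that overlaps it beyond an IoU threshold, and repeat. This is a minimal, class-agnostic illustration, not the YOLOv11 implementation; the box format `(x1, y1, x2, y2)` and the default threshold of 0.7 are assumptions made for the example.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float], iou_thr: float = 0.7) -> List[int]:
    """Return indices of the kept boxes, highest score first (class-agnostic)."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)  # highest-scoring remaining box
        keep.append(best)
        # drop every remaining box that overlaps the kept one too strongly
        order = [i for i in order if box_iou(boxes[best], boxes[i]) <= iou_thr]
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82 and is suppressed; the third does not overlap either and survives. Production detectors usually run this per class and vectorized, but the logic is the same.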

To the best of our knowledge, no research exists that identifies caries of varying severity while offering adequate diagnosis, including the number of affected teeth and the impacted surfaces. The previous studies are restricted to detecting caries and determining their severity in a few cases which is insufficient for dentists to complete the dental chart. Therefore, this study aims to develop a novel proximal caries diagnostic system based on deep learning (DL) that provides a comprehensive and accurate diagnosis of proximal caries, including the severity of the caries, the affected tooth, and the involved surface. This study aims to develop a novel proximal caries diagnostic system based on DL that delivers accurate diagnoses of proximal caries, including the severity of caries, the tooth affected, and the surface involved. To achieve this, the proposed system integrates three main tasks: detecting caries, numbering teeth, and identifying affected teeth and surfaces. The purpose of this system is to assist dentists, rather than replace them, in providing accurate and efficient caries diagnosis. The severity of caries follows the International Caries Classification and Management System (ICCMS

TM) [

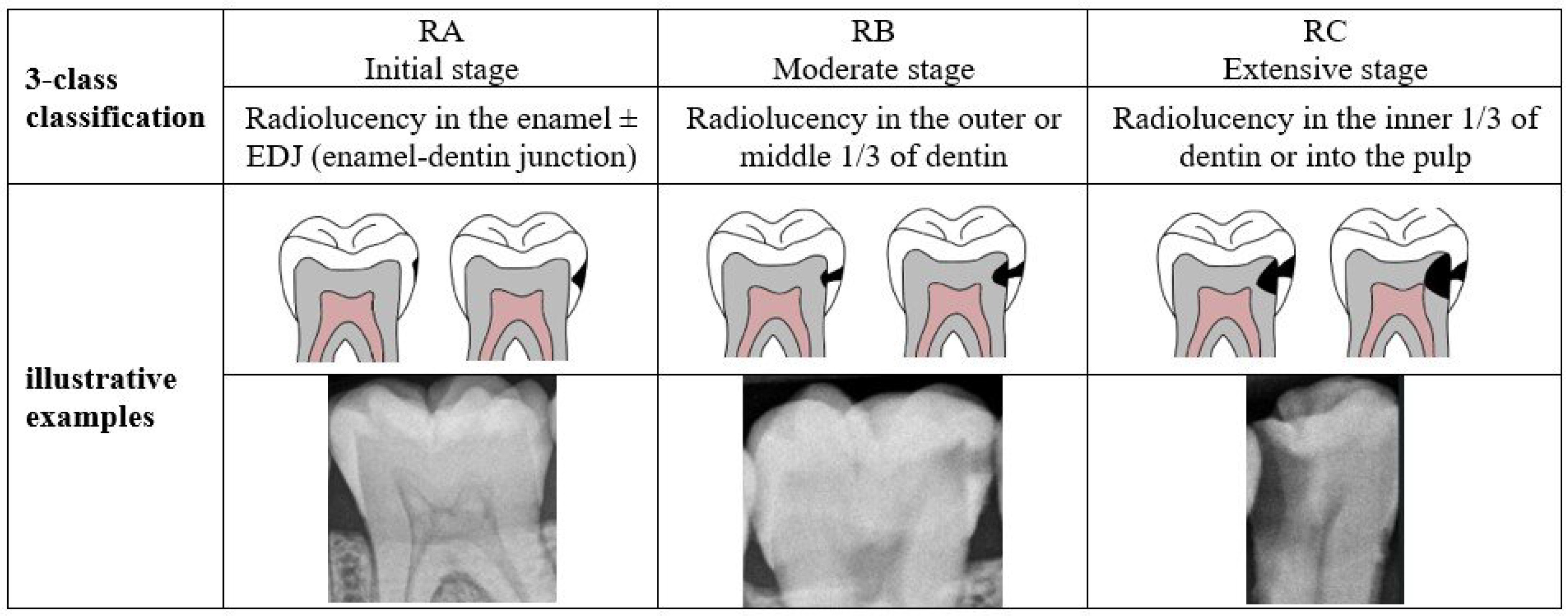

3] which categorizes the stages of carious lesions into three main categories: initial, moderate, and extensive. The details of these stages are presented in

Figure 1. A recent iteration of YOLO-based instance segmentation, in short YOLOv11-seg, is used to identify proximal caries and their severity. The effectiveness of the system is assessed by comparing its performance to that of dentists.
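To make the grouping concrete, the sketch below collapses the fine-grained labels used in the earlier YOLO studies [17,18] (sub-codes RA1 to RA3, RB4, RC5, and RC6, corresponding to initial1 to initial3, moderate4, extensive5, and extensive6) into the three stages used here, labelled RA (initial), RB (moderate), and RC (extensive). The dictionary and function names are hypothetical, the radiographic depth criteria that define each sub-code (Figure 1) are not encoded, and the sound/non-carious class is deliberately left out.

```python
from typing import Dict, List

# Hypothetical lookup: fine-grained ICCMS radiographic sub-codes -> three stages.
ICCMS_STAGE: Dict[str, str] = {
    "RA1": "RA", "RA2": "RA", "RA3": "RA",  # initial
    "RB4": "RB",                            # moderate
    "RC5": "RC", "RC6": "RC",               # extensive
}
STAGE_NAME: Dict[str, str] = {"RA": "initial", "RB": "moderate", "RC": "extensive"}


def collapse_codes(codes: List[str]) -> List[str]:
    """Map sub-codes such as 'RA2' to stage names; unknown codes raise KeyError."""
    return [STAGE_NAME[ICCMS_STAGE[code.upper()]] for code in codes]


print(collapse_codes(["RA1", "rb4", "RC6", "RA3"]))
# ['initial', 'moderate', 'extensive', 'initial']
```

Raising an error for unknown codes, rather than silently dropping them, makes labelling mistakes visible when datasets annotated under different schemes are merged.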

4. Discussion

To the best of our knowledge, this is the first study to use YOLOv11 and to provide a detailed description of each carious lesion, including its severity, the affected tooth, and the involved surface. The proposed system achieved high performance, often equaling or surpassing that of dentists, which makes it a valuable tool for caries diagnosis.

Caries detection faces several challenges that hinder accurate diagnosis and treatment. Caries vary in appearance, manifesting in many shapes, gray levels, and stages, which complicates standardization. The quality and sharpness of radiographs may also vary, affecting the visibility of carious lesions. Overlapping anatomical structures can conceal caries or mimic them, resulting in false negatives or false positives. Noise and artifacts in dental images can further hinder precise detection, and achieving real-time processing in clinical environments poses additional technical challenges. Research in the field has used DL methods to address these problems [28]; nevertheless, there is still room to improve caries detection.

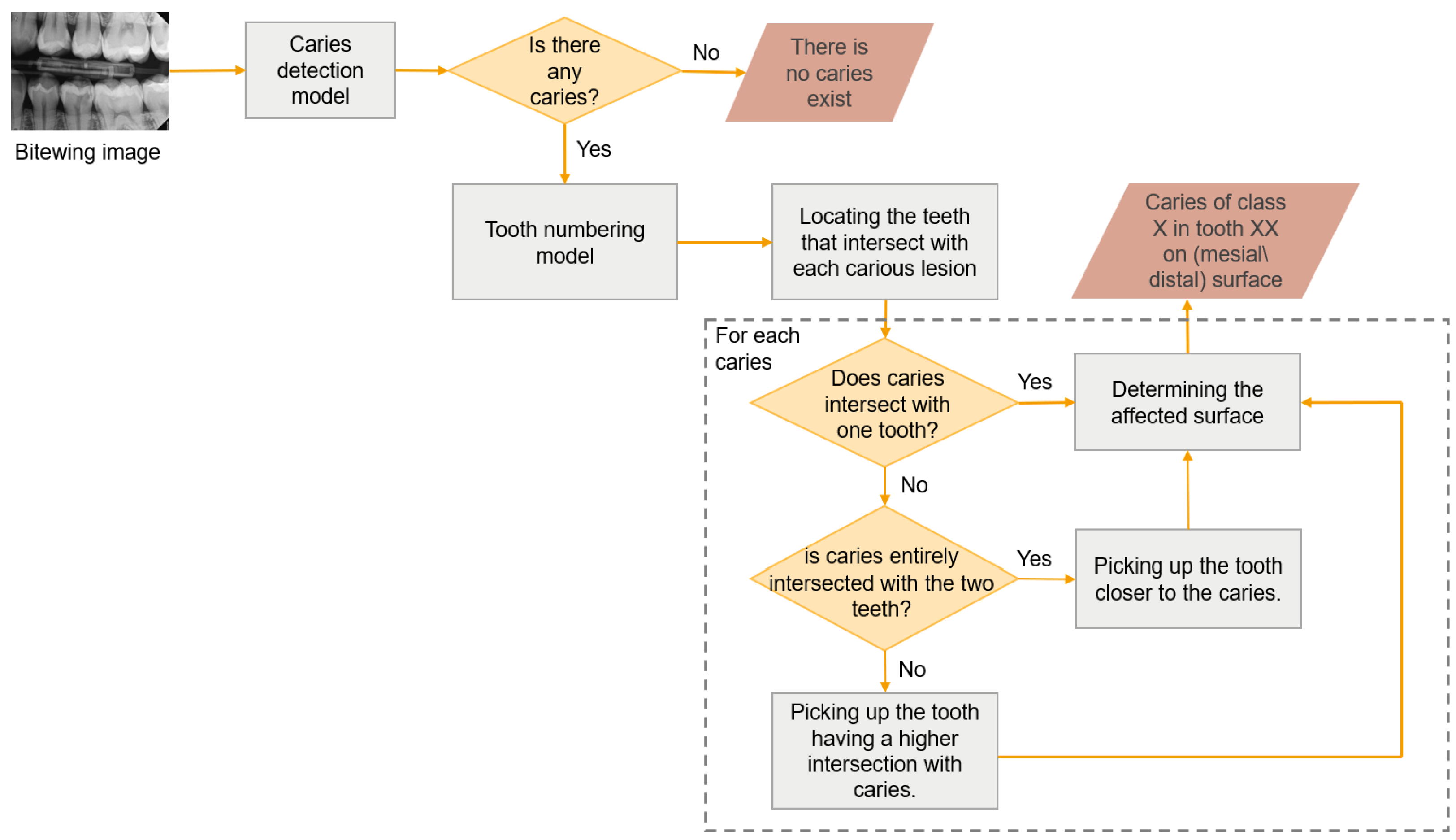

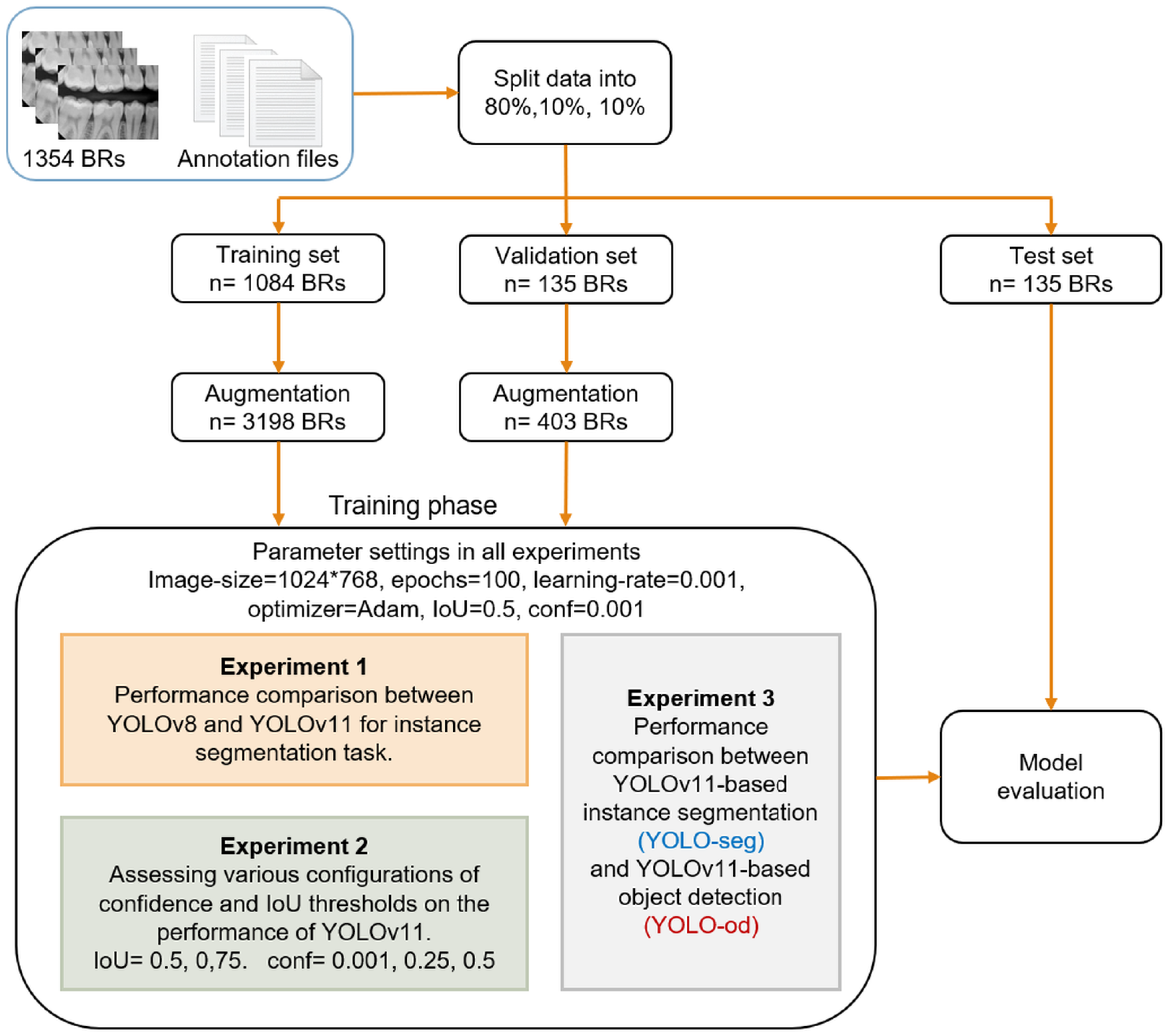

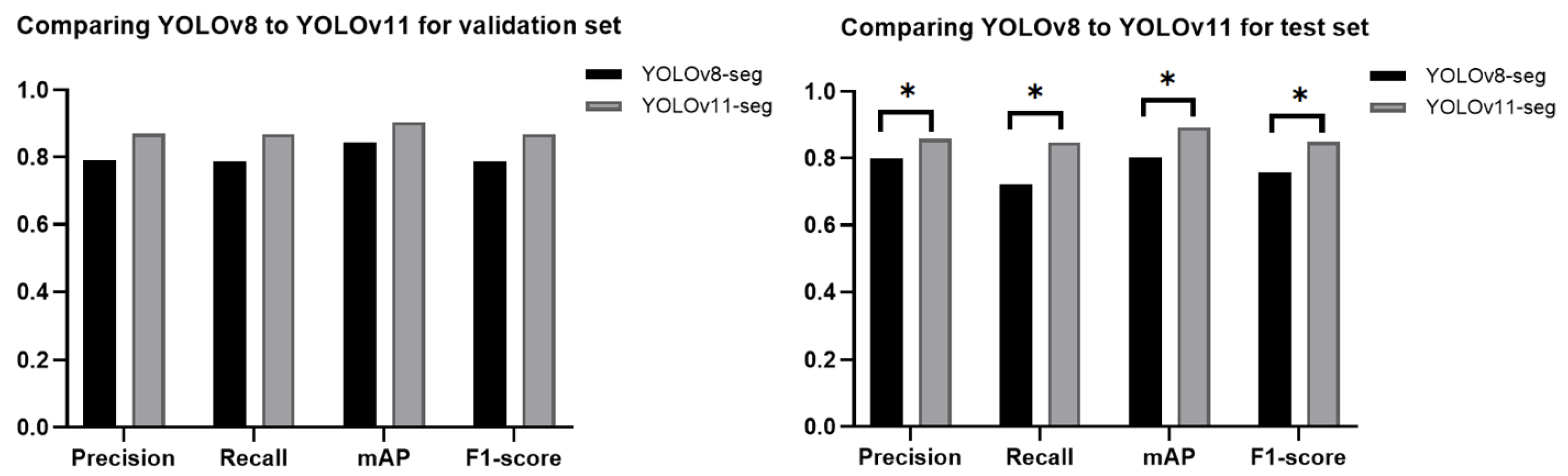

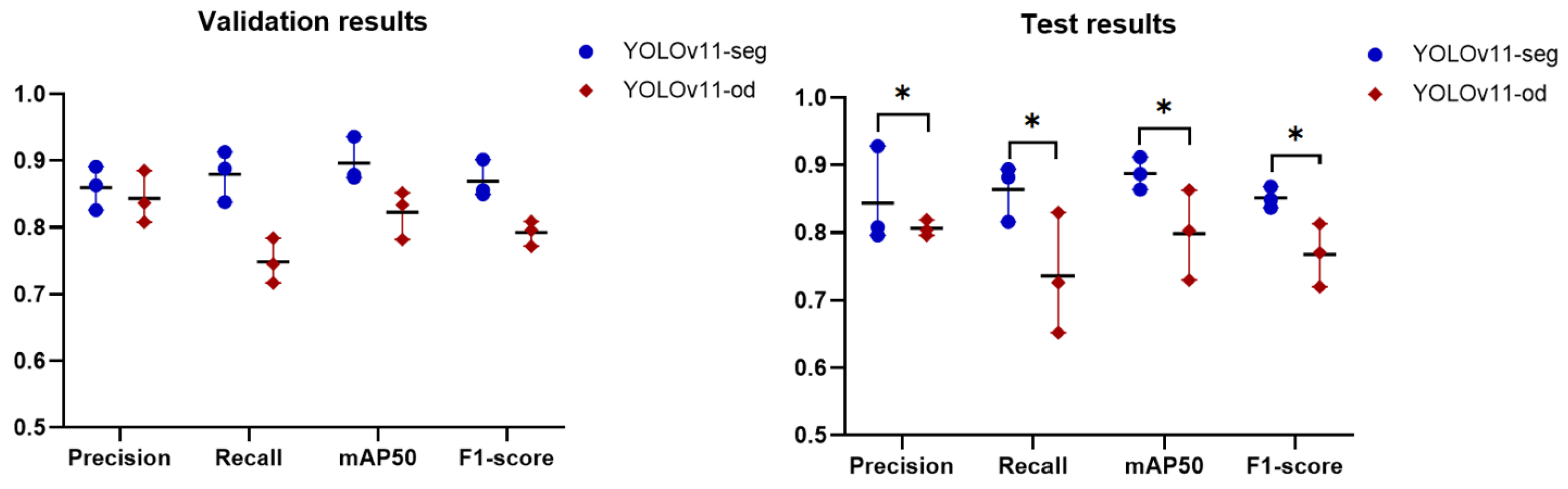

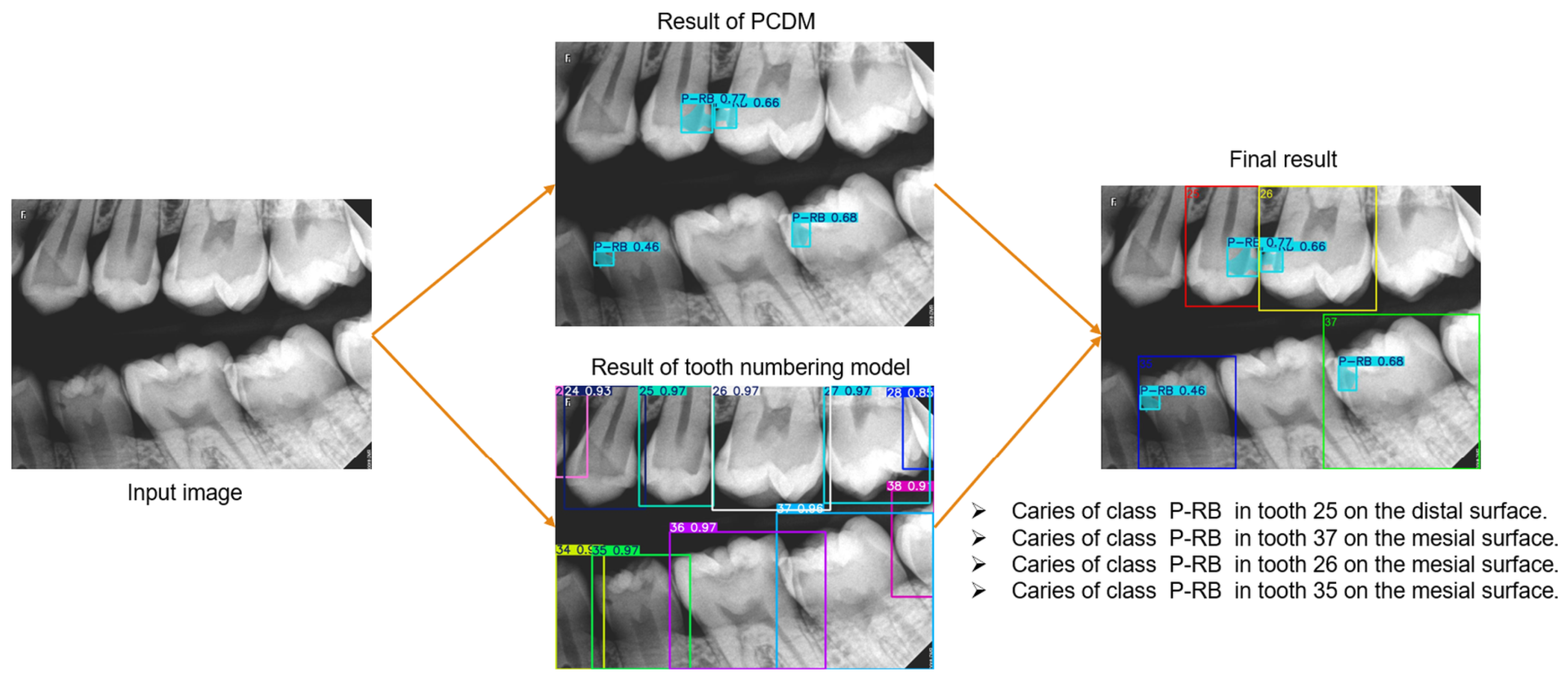

This study aimed to create a novel diagnostic system for proximal caries from BRs. The system performs three critical tasks (Figure 2), whose results are combined to provide the final diagnosis; each task is assigned to a distinct module to facilitate reuse in other applications. In this study, we addressed the tasks of detecting proximal caries of different depths and describing their location. The tooth numbering task was performed in our previous study [26], where the YOLOv8-od model achieved 96.7% precision, 98% recall, and 97.2% mAP. This study presented and assessed several caries detection scenarios to identify the most effective approach. YOLOv11-seg outperformed YOLOv8-seg under the same parameter settings. This may be attributed to the improvements in YOLOv11, including an enhanced capacity to detect small objects, which addresses a common challenge in object detection. In addition, its backbone and neck were updated to improve feature extraction and aggregation, improving detection accuracy and efficiency. The updated loss functions may also have allowed more effective optimization during training, reducing false positives and increasing accuracy.
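The text does not state how the outputs of the caries detection and tooth numbering modules are combined. One plausible rule, sketched here as a hypothetical illustration rather than the authors' method, assigns each carious lesion to the numbered tooth whose Bbox covers the largest share of the lesion's Bbox, and reports no tooth when coverage is too low. Tooth numbers are FDI two-digit codes (as in “tooth 34”); the box format and the 0.5 minimum coverage are assumed parameters.

```python
from typing import Dict, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def covered_fraction(inner: Box, outer: Box) -> float:
    """Fraction of the area of `inner` that lies inside `outer`."""
    w = max(0.0, min(inner[2], outer[2]) - max(inner[0], outer[0]))
    h = max(0.0, min(inner[3], outer[3]) - max(inner[1], outer[1]))
    area = (inner[2] - inner[0]) * (inner[3] - inner[1])
    return (w * h) / area if area > 0 else 0.0


def assign_tooth(lesion: Box, teeth: Dict[int, Box], min_cover: float = 0.5) -> Optional[int]:
    """Return the FDI number of the tooth that best covers the lesion box, or None."""
    best_number: Optional[int] = None
    best_cover = 0.0
    for number, tooth_box in teeth.items():
        cover = covered_fraction(lesion, tooth_box)
        if cover > best_cover:
            best_number, best_cover = number, cover
    return best_number if best_cover >= min_cover else None


teeth = {35: (0, 0, 100, 200), 36: (100, 0, 220, 200)}  # hypothetical numbering output
print(assign_tooth((85, 60, 110, 90), teeth))   # 35 (60% of the lesion box lies in tooth 35)
print(assign_tooth((300, 60, 320, 90), teeth))  # None (no numbered tooth under the lesion)
```

Returning `None` corresponds to the situation in which a lesion is detected but no numbered tooth is available to anchor its location, so the diagnosis cannot be completed for that lesion.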

The comparison between instance segmentation and object detection showed that YOLOv11-seg outperformed YOLOv11-od on all metrics, which can be attributed to how each method works. Instance segmentation not only identifies objects but also defines their exact contours. Its pixel-level output delineates the boundaries of caries more precisely, and this detailed information helps differentiate between classes, particularly when objects are close together or overlap. Object detection locates objects and encloses them in bounding boxes but provides no shape information, which may lead to errors when carious lesions are close or overlapping, resulting in misclassification or missed cases. Therefore, the choice between the two methods should depend on the objectives of the detection task, the available resources, and the required diagnostic precision.

In recent studies that used YOLOv3 [17] and YOLOv7 [18], each carious lesion was annotated with a bounding box around the tooth to which it belonged, and its severity was assigned according to ICCMS™. With an IoU threshold of 0.5, YOLOv3 attained an mAP of 0.58 for four classes (sound, RA, RB, RC), and YOLOv7 obtained 0.564 for seven classes (sound, RA1, RA2, RA3, RB4, RC5, RC6), whereas our model reached 0.888. Because of the imprecise marking of the caries, those models became sensitive to any dark (radiolucent) area, such as the pulp. This underscores the value of segmentation for caries diagnosis.
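The mAP values compared above average, over classes, the area under each class's precision-recall curve. The sketch below computes all-point interpolated average precision (AP) in the style of the Pascal VOC protocol. It assumes that each prediction has already been labelled as a true positive or not at the chosen IoU threshold. Toolkits such as Ultralytics and COCO use their own interpolation schemes, so their values may differ slightly from this sketch.

```python
from typing import List


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each prediction; is_tp: whether it matched a ground-truth
    lesion at the chosen IoU threshold; num_gt: number of ground-truth lesions.
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for i in order:  # walk down the ranked list, one prediction at a time
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # precision envelope: make precision non-increasing as recall grows
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # area under the envelope, summed over the recall steps
    return sum((mrec[k] - mrec[k - 1]) * mpre[k] for k in range(1, len(mrec)))


def mean_ap(per_class_ap: List[float]) -> float:
    """mAP: unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```

Because AP is computed per class and then averaged, mAP values obtained with different numbers of classes (four versus seven above) are only loosely comparable.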

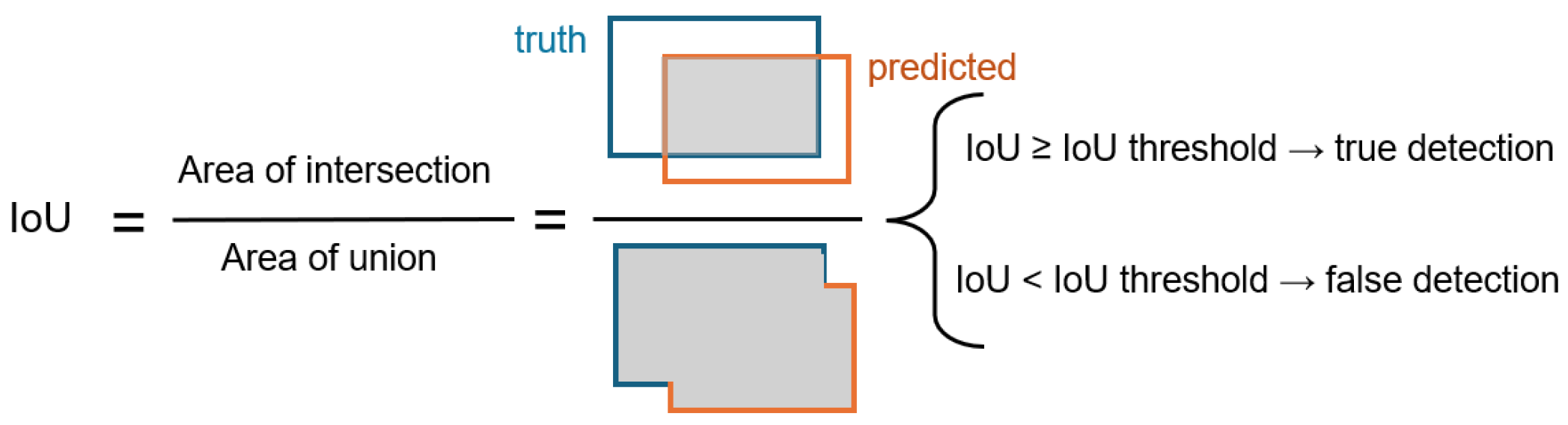

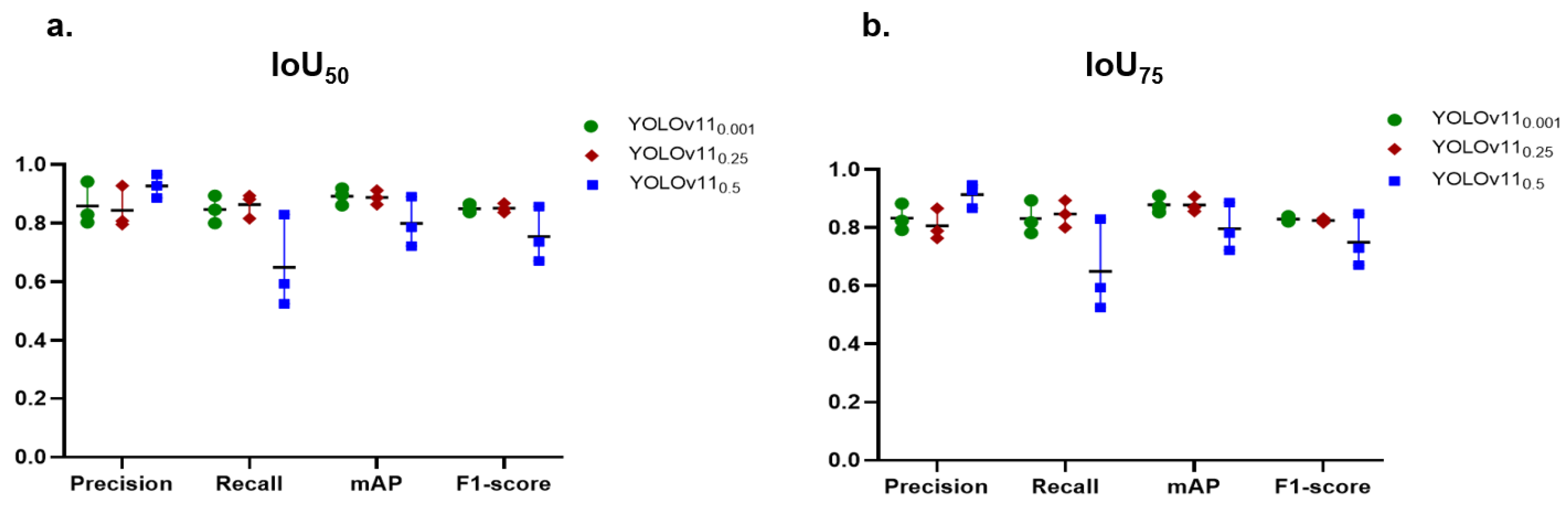

This study examined the influence of the IoU and confidence thresholds on caries detection. These thresholds play a central role in evaluating YOLO networks: they determine which predictions are retained and which count as correct detections, thereby regulating sensitivity and the trustworthiness of the model's predictions. Two IoU thresholds were studied, IoU50 and IoU75, along with three confidence thresholds: 0.001, 0.25, and 0.5. For example, under IoU50 a predicted box counts as a successful detection only if its IoU with the ground-truth box (the area of their intersection divided by the area of their union) is at least 0.5. The confidence threshold ranges from 0 to 1, and setting it to 0 retains all detected objects. For instance, with a threshold as low as 0.001, the model keeps every object whose confidence exceeds 0.001 (0.1%). Such a low threshold may be useful when detecting as many objects as possible matters more than precision, as in surveillance systems that prioritize flagging every potential event over accuracy.
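The IoU criterion can be made concrete with a worked example. Assume, for illustration only, a ground-truth box $B_g$ and a predicted box $B_p$, each 40 × 40 pixels, with the prediction shifted horizontally by 10 pixels so that the overlap is 30 × 40 pixels:

```latex
\mathrm{IoU}(B_p, B_g) = \frac{|B_p \cap B_g|}{|B_p \cup B_g|}
  = \frac{30 \times 40}{40 \times 40 + 40 \times 40 - 30 \times 40}
  = \frac{1200}{2000} = 0.6
```

Since 0.6 ≥ 0.5, this prediction counts as a true positive under IoU50. Since 0.6 < 0.75, it counts as a false positive under IoU75, and unless another prediction matches the lesion, the lesion is also counted as missed. A 10-pixel boundary disagreement is therefore enough to change the outcome at the stricter threshold.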

The findings highlight the trade-off between precision and recall when adjusting the IoU and confidence thresholds. In general, the lower IoU threshold of 0.5 yielded higher precision and recall than the stricter threshold of 0.75, which in turn raised the mAP and F1-score. This indicates that the stricter threshold made it harder for the model's predictions to qualify as true positives (TPs). In contrast, our previous tooth numbering model achieved excellent performance with IoU75, which emphasizes that the appropriate setting of these parameters depends on the object's context. Caries annotation is subjective, as annotators may have difficulty precisely defining the boundaries of caries, in contrast to the well-defined boundaries of teeth. As a result, the PCDM may correctly detect a carious lesion while the boundaries it identifies do not precisely align with those in the reference dataset. Consequently, IoU50 yielded better results than IoU75.

Varying the confidence threshold showed that lowering it increased the number of predictions, which can improve recall but may lower precision because of more false positives (FPs). For instance, precision values are generally lower at a confidence threshold of 0.001 than at higher thresholds, indicating that many predictions are made but not all of them are correct. Conversely, raising the confidence threshold reduced recall but increased precision: the model becomes more selective about what it reports, at the risk of missing actual positive cases. At a confidence threshold of 0.5, for example, precision usually rises markedly, but at the cost of lower recall. In medical image analysis, high precision is crucial for accurate diagnosis and treatment. An FP in medical imaging can lead to unnecessary interventions, whereas a false negative (FN) may result in missed diagnoses, delayed treatment, or even harm to the patient. Therefore, this work proceeded with IoU50 and a confidence threshold of 0.25 to balance precision and recall, yielding a precision of 84.4% and a recall of 86.4%.
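These trade-offs can be reproduced on toy data. The sketch below is a simplified single-class evaluation under assumed conventions. Predictions whose confidence exceeds the threshold are matched greedily, most confident first, to unmatched ground-truth boxes that clear the IoU threshold. The matches are then counted as TPs, FPs, and FNs to obtain precision and recall. The boxes and scores are invented for illustration and are not the study's data, and evaluation toolkits such as Ultralytics use their own matching logic.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Tuple[Box, float]], gts: List[Box],
                     conf_thr: float, iou_thr: float) -> Tuple[float, float]:
    """Single-class precision and recall after confidence filtering and greedy matching."""
    kept = sorted((p for p in preds if p[1] > conf_thr), key=lambda p: p[1], reverse=True)
    matched = set()
    tp = 0
    for box, _ in kept:  # most confident predictions claim ground truth first
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fp = len(kept) - tp  # predictions that matched no lesion
    fn = len(gts) - tp   # lesions that no prediction matched
    precision = tp / (tp + fp) if kept else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


gts = [(0, 0, 40, 40), (100, 0, 140, 40), (200, 0, 240, 40)]
preds = [((0, 0, 40, 40), 0.9),     # exact match
         ((110, 0, 150, 40), 0.6),  # shifted 10 px: IoU = 0.6
         ((300, 0, 340, 40), 0.2),  # spurious detection
         ((200, 0, 240, 40), 0.1)]  # correct but low-confidence
for conf_thr, iou_thr in [(0.25, 0.5), (0.25, 0.75), (0.001, 0.5)]:
    p, r = precision_recall(preds, gts, conf_thr, iou_thr)
    print(f"conf>{conf_thr}, IoU>={iou_thr}: precision={p:.2f}, recall={r:.2f}")
# conf>0.25, IoU>=0.5: precision=1.00, recall=0.67
# conf>0.25, IoU>=0.75: precision=0.50, recall=0.33
# conf>0.001, IoU>=0.5: precision=0.75, recall=1.00
```

Raising the IoU threshold lowers both metrics, while lowering the confidence threshold raises recall at the expense of precision, mirroring the pattern described above.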

The PCDM demonstrated high recall (0.882) for the initial stage (RA), indicating that it identifies most RA lesions, which is crucial for early intervention. However, its precision of 0.796 indicates a notable number of FPs. The model may have learned the common patterns of initial caries, yet the difficulty of distinguishing these subtle lesions from healthy enamel leads to some misclassifications. In addition, overlapping teeth in the enamel region may produce shadows in the overlap area, which the model classified as RA in some instances. For the moderate stage (RB), the high precision of 0.928 indicates that most RB predictions are correct, with few FPs. This is particularly important because timely detection can prevent further progression of caries. The recall of 0.816 indicates that some RB cases are missed, possibly because class RB is overrepresented in the dataset, which may cause the model to overfit to particular features while disregarding others that are essential for identification. The results for the extensive stage (RC) show balanced performance: a high recall of 0.894 signifies effective detection of class RC, while a precision of 0.808 indicates moderate accuracy, implying that the model identifies advanced caries effectively but may erroneously label some non-carious findings, such as fractured or damaged teeth, as caries. Overall, these findings demonstrate the model's promise as a dental diagnostic tool that can improve the accuracy of proximal caries detection and treatment planning.
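The per-stage precision and recall values can be condensed into an F1-score, the harmonic mean of the two. The short sketch below computes it from the reported values. The RA recall of 0.882 is the value given in the comparison with Panyarak et al. The printed F1 values are simple arithmetic on the reported numbers, not additional results from the study.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Per-stage (precision, recall) pairs as reported in this section.
reported = {"RA": (0.796, 0.882), "RB": (0.928, 0.816), "RC": (0.808, 0.894)}
for stage, (p, r) in reported.items():
    print(f"{stage}: F1 = {f1_score(p, r):.3f}")
# RA: F1 = 0.837
# RB: F1 = 0.868
# RC: F1 = 0.849
```

The harmonic mean is dominated by the smaller of the two values, so the three stages end up close in F1 even though their precision/recall profiles differ.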

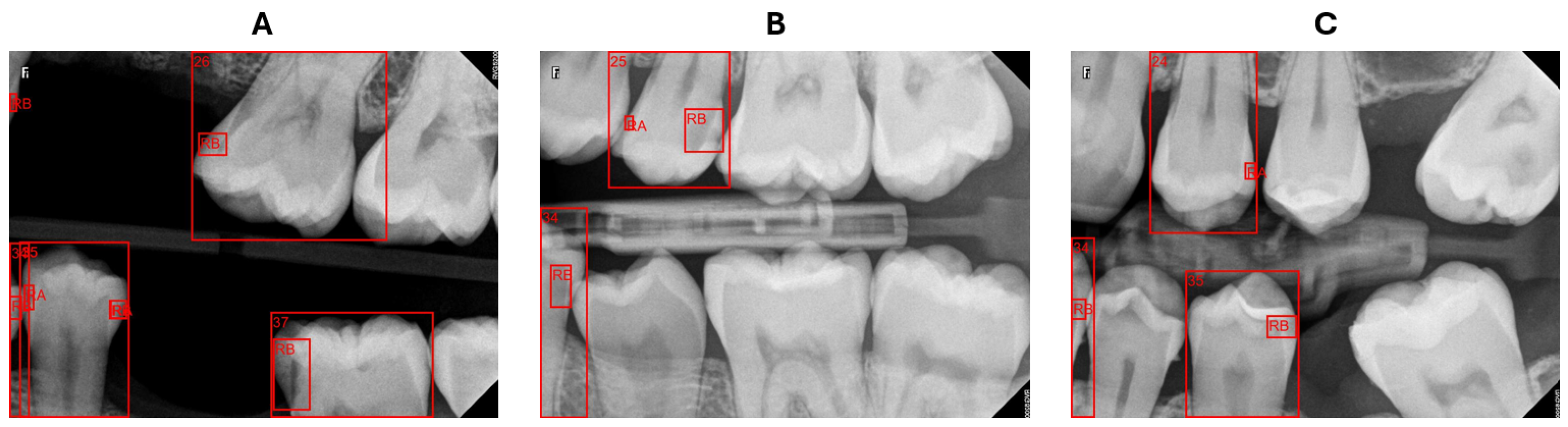

BRs are intended to focus on particular areas of the dental arch; however, they may include teeth that are only partially visible. Our system struggled with such teeth, both in tooth numbering and in determining the affected surface. In some instances, as shown in Figure 10A, the system detected the caries but failed to recognize the corresponding tooth, so it could not describe the lesion's location. When determining the affected surface, the x-midpoint of a partially visible tooth does not reflect the actual midline of the tooth, as illustrated for tooth 34 in Figure 10B,C, which resulted in an incorrect surface assignment. However, teeth partially visible at the edges of radiographs are not a major issue for dentists, who prioritize obtaining complete images of the relevant teeth for precise evaluation and diagnosis.
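The surface assignment discussed above compares a lesion's position with the x-midpoint of its tooth's Bbox. The sketch below illustrates the idea. It assumes FDI numbering and the conventional display orientation, in which the patient's right side (quadrants 1 and 4) appears on the left of the image, so the mesial surfaces of those teeth face the image's right. Both the orientation convention and the function are illustrative assumptions, not the authors' code.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def affected_surface(lesion: Box, tooth: Box, fdi_number: int) -> str:
    """Label a proximal lesion 'mesial' or 'distal' from its side of the tooth's x-midpoint."""
    quadrant = fdi_number // 10  # FDI: 1 upper right, 2 upper left, 3 lower left, 4 lower right
    tooth_mid_x = (tooth[0] + tooth[2]) / 2
    lesion_mid_x = (lesion[0] + lesion[2]) / 2
    lesion_on_right = lesion_mid_x > tooth_mid_x
    # Assumed display convention: the patient's right (quadrants 1 and 4) is on the
    # image's left, so the midline, and hence the mesial side, lies to the image's right.
    mesial_on_right = quadrant in (1, 4)
    return "mesial" if lesion_on_right == mesial_on_right else "distal"


print(affected_surface((300, 80, 320, 110), (300, 0, 380, 200), 34))  # mesial
print(affected_surface((165, 80, 180, 110), (100, 0, 180, 200), 44))  # mesial
print(affected_surface((100, 80, 115, 110), (100, 0, 180, 200), 44))  # distal
```

If a tooth is truncated at the image border, its Bbox covers only the visible part. In that case `tooth_mid_x` no longer marks the tooth's true midline, and a lesion can fall on the wrong side of it. This is the failure mode described for tooth 34.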

Compared with previous studies that applied DL algorithms to caries detection in dental radiographs, our results are competitive. For instance, Ayhan et al. [20] reported a caries detection F1-score of 0.822, with precision and recall values of 0.866 and 0.833, respectively. Although our precision is slightly lower than theirs, our recall is higher, indicating that our model identifies a larger proportion of true carious lesions. This higher recall suggests that our approach may be better at minimizing FNs, which is critical in clinical settings where timely intervention is essential.

Panyarak et al. [17] evaluated the YOLOv3 model for caries detection based on ICCMS™. In the current study, the precision and recall for the RA category are 0.796 and 0.882, respectively, exceeding the values of 0.75 and 0.67 reported by Panyarak et al. For the RB category, the current study's metrics (0.928 precision and 0.816 recall) also exceed those of the YOLOv3 model, which achieved 0.62 precision and 0.53 recall. The RC category likewise showed higher metrics in the current study, with a precision of 0.808 and a recall of 0.894, than in the previous study.

The findings of this study indicate improved efficacy in caries detection across all severity levels relative to the earlier research. Advancements in model architecture and instance segmentation techniques, exemplified by YOLOv11, may significantly enhance diagnostic accuracy in dental radiology.

Although the system achieved high performance, it is limited to diagnosing proximal caries and was developed on BRs from a single source. In future work, we aim to incorporate other caries types, such as occlusal caries, and to gather data from diverse sources and populations to improve the system's generalizability.