Abstract

Background: The implementation of radiological artificial intelligence (AI) solutions remains challenging due to limitations in existing testing methodologies. This study assesses the efficacy of a comprehensive methodology for performance testing and monitoring of commercial-grade mammographic AI models. Methods: We used a combination of retrospective and prospective multicenter approaches to evaluate a neural network based on the Faster R-CNN architecture with a ResNet-50 backbone, trained on a dataset of 3641 mammograms. The methodology encompassed functional and calibration testing, coupled with routine technical and clinical monitoring. Feedback from testers and radiologists was relayed to the developers, who updated the AI model accordingly. The test data were collected from 112 medical organizations, covered equipment from 10 mammography manufacturers, and encompassed 593,365 studies. The evaluation metrics included the area under the curve (AUC), accuracy, sensitivity, specificity, technical defects, and clinical assessment scores. Results: The results demonstrated a substantial enhancement in the AI model’s performance through collaborative efforts among developers, testers, and radiologists. Notable improvements were observed in functionality, diagnostic accuracy, and technical stability. Specifically, the AUC rose by 24.7% (from 0.73 to 0.91), accuracy by 15.6% (from 0.77 to 0.89), sensitivity by 37.1% (from 0.62 to 0.85), and specificity by 10.7% (from 0.84 to 0.93). The average proportion of technical defects declined from 9.0% to 1.0%, while the clinical assessment score improved from 63.4 to 72.0. After 2 years and 9 months of testing, the AI solution was integrated into the compulsory health insurance system. Conclusions: The multi-stage, lifecycle-based testing methodology demonstrated substantial potential for software enhancement and integration into clinical practice.
Key elements of this methodology include robust functional and diagnostic requirements, continuous testing and updates, systematic feedback collection from testers and radiologists, and prospective monitoring.

1. Introduction

The early detection of breast cancer is essential to improving patient outcomes, and mammography stands at the forefront of breast cancer screening and diagnosis. Mammography, as a widely adopted imaging technique, has demonstrated efficacy in identifying breast abnormalities before progression to advanced stages [1,2,3,4]. Studies have shown that early detection significantly increases treatment options and improves survival rates [5,6,7,8]. Thus, mammography plays a critical role in public health efforts aimed at reducing breast cancer morbidity and mortality.

In recent years, the integration of artificial intelligence (AI) and machine learning technologies into healthcare has gained considerable traction, particularly within the field of radiology. These innovations improve diagnostic accuracy and efficiency, transforming the landscape of medical imaging [9,10,11,12]. By leveraging vast datasets and complex algorithms, AI systems support radiologists’ decision-making, enabling more nuanced and precise interpretations of imaging results [13,14]. The incorporation of AI into radiological practice streamlines workflows and allows healthcare professionals to focus on patient care [15,16].

Mammography represents one of the most extensively researched applications of AI. AI solutions demonstrate substantial promise as diagnostic support tools, exhibiting remarkable speed and accuracy in mammogram analysis [17,18,19,20]. AI assists radiologists by improving detection rates, reducing false-positive and false-negative results, and achieving better diagnostic outcomes [21,22]. AI addresses several challenges currently faced by radiologists, including an increasing workload from rising screening volumes, demand for high throughput in clinical environments, and the need for continuous training in an evolving healthcare landscape [23,24,25].

Despite these advantages, the integration of mammographic AI remains a challenge. One major issue is the need for external validation of AI models to confirm their effectiveness across various populations and clinical scenarios [26]. External validation involves testing AI models on data acquired from sources other than those used during development, to ensure that the algorithm’s applicability extends beyond its training data [27]. However, most studies of AI in radiology are limited to a single institution and lack external validation [27,28,29]. Although some researchers are expanding their datasets, such single-center studies fail to reflect real-world environments accurately. Additionally, most studies employ a retrospective design, which limits the assessment of AI generalizability and may undermine the integration of AI into clinical decision-making [30,31,32,33,34].

Furthermore, a multicenter and prospective study design alone may not be sufficient for the successful deployment of AI into clinical practice. First, integration of radiological AI requires radiologist feedback during testing and routine use to refine AI performance and usability. It is essential that AI solutions be designed with the end user in mind [10,35,36]. Second, iterative development and regular updates help AI remain relevant and effective. An update mechanism is necessary to continually refine AI models to keep pace with the needs of clinical practice [14,37,38,39,40]. However, both radiologist feedback and continuous AI updating are still missing in most studies. Scientific and regulatory practice relies on the “build and freeze” framework, which requires developers to validate their model on static datasets, report the results, and then apply for regulatory approval [41].

Routine testing and monitoring of AI models enable the identification of weaknesses and provide developers with insights that support improvement [42,43,44]. Several publications provide methodologies for testing and monitoring radiological AI models [45,46,47,48]. However, these methodologies are generic, applying across AI fields including medicine and radiology, and do not take into account the specifics of individual imaging modalities, such as mammography. The cited papers provide instructions for use but contain no original findings or use-case scenarios. Finally, they lack the concept of continuous learning, which is essential for AI model performance in routine clinical scenarios.

This paper evaluates the effectiveness of a multi-stage testing and monitoring methodology applied to a mammographic AI model for enhancing its performance and reliability. This paper targets AI developers, radiologists, and clinical researchers, offering valuable insights that can contribute to enhancing the practical application of AI in breast cancer screening and diagnosis while ensuring the highest standards of patient care.

2. Materials and Methods

2.1. Study Setting

This study was conducted as part of the Moscow Computer Vision Experiment (hereafter referred to as “the Experiment”). The goal of the Experiment is to investigate the integration of AI technologies into radiological practice. Within the Experiment, which was initiated in 2020 and is ongoing at the time of writing (2025), the Moscow Center for Diagnostics & Telemedicine assesses and compares AI solutions from various vendors to identify and integrate the best-performing models into Moscow’s unified picture archiving and communication system (PACS). Over the course of the Experiment, 52 unique AI models have processed 13,925,132 imaging studies [49].

2.2. AI Solution Overview

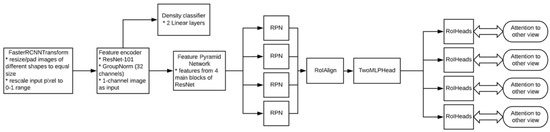

This paper investigates the Celsus Mammography® AI model [50]. The model’s primary task is to detect and classify signs of breast cancer on mammograms. For each breast, the model generates a prediction score ranging from 0 to 1, with 1 indicating the highest probability of breast cancer. The neural network is based on the Faster R-CNN architecture [51] with a ResNet-50 backbone module [52] (Figure 1). In 2021, the AI solution received a market authorization certificate from Russia’s National Regulatory Authority, Roszdravnadzor [53], and a Conformité Européenne (CE) mark [54]. These certifications confirm the device’s compliance with safety standards for medical use. To enhance this study’s reliability, we collaborated with the developer to obtain detailed insights into the AI architecture and the datasets employed, as outlined in Supplementary Data S1.

Figure 1.

Model architecture. * Module components or actions performed.

2.3. Study Design

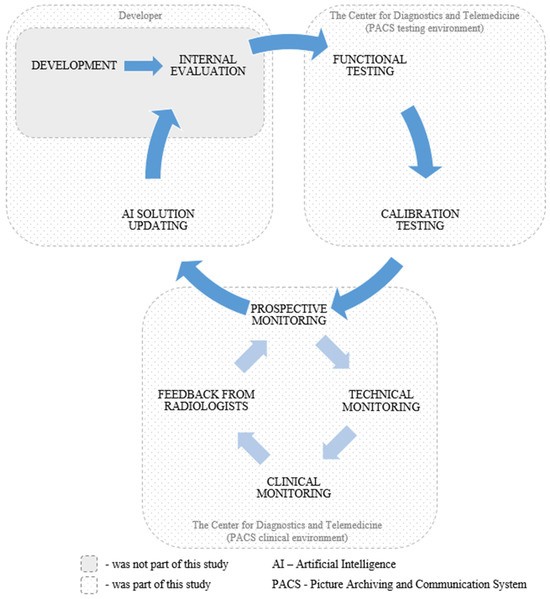

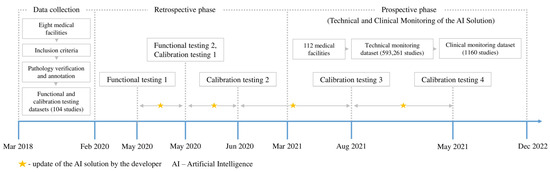

This study employed a retrospective and prospective multicenter cohort design. The external evaluation of the AI solution employed our previously published methodology [55]. This study encompassed functional testing, calibration testing, technical monitoring, clinical monitoring, radiologist feedback collection, and AI model updates by the developer. An overview of each testing stage is presented in Table 1. Visual representations of the study design and timeline are presented in Figure 2 and Figure 3, respectively. This study adhered to the guidelines outlined in the Checklist for Artificial Intelligence in Medical Imaging (CLAIM) to ensure transparency and reproducibility [56]. The datasets used for external evaluation and the testing and monitoring methodology are described in detail below.

Table 1.

Objectives and criteria for passing the testing and monitoring stages.

Figure 2.

Overview of the study design.

Figure 3.

Overview of the study timeline.

2.4. External Data

This study utilized four datasets: two retrospective (functional and calibration testing datasets) and two prospective (technical and clinical monitoring datasets). Detailed information about the datasets is provided below.

2.4.1. Data Sources and Collection Period

Retrospective data for functional and calibration testing datasets were aggregated from eight medical facilities in Moscow (City clinic №8, City clinic №22, City clinic №36, Diagnostic center №3, Clinical diagnostic center №1, Clinical diagnostic center №4, Clinical diagnostic center №121, M.P. Konchalovsky City clinical hospital №3) between 6 March 2018 and 3 February 2020. Prospective data for technical and clinical monitoring datasets were collected from 112 medical facilities in Moscow between 5 March 2021 and 31 December 2022. The extensive data collection was made possible by the Moscow Radiology Reference Center integrated with the Moscow PACS.

2.4.2. Eligibility Criteria

Inclusion criteria for all datasets were (1) female sex; (2) age ≥ 18 years; and (3) mammograms available in craniocaudal (CC) and mediolateral oblique (MLO) views. Studies with insufficient image quality were excluded using the PGMI (Perfect, Good, Moderate, Inadequate) scale. Studies classified as inadequate included cases of partial or incomplete mammography, incorrect exposure, blur, and tissue overlap.
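The inclusion and exclusion rules above can be expressed as a single filter. The sketch below is illustrative only: the `Study` record and its field names are hypothetical, views are checked at the study level for simplicity, and the PGMI grade is assumed to be available as one letter per study.

```python
from dataclasses import dataclass, field

@dataclass
class Study:
    """Hypothetical study record; field names are illustrative only."""
    sex: str                                  # "F" or "M"
    age: int                                  # age in years at examination
    pgmi: str                                 # PGMI grade: "P", "G", "M", or "I"
    views: set = field(default_factory=set)   # acquired views, e.g. {"CC", "MLO"}

REQUIRED_VIEWS = {"CC", "MLO"}

def is_eligible(study: Study) -> bool:
    """Apply the inclusion criteria, then the PGMI-based quality exclusion."""
    included = (
        study.sex == "F"
        and study.age >= 18
        and REQUIRED_VIEWS <= study.views     # both CC and MLO are present
    )
    # Studies graded "Inadequate" are excluded for insufficient image quality.
    return included and study.pgmi != "I"
```

For example, `is_eligible(Study("F", 61, "G", {"CC", "MLO"}))` is true, whereas the same study graded `"I"` is rejected.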

2.4.3. Anonymization Methods and Data Preprocessing

To comply with the applicable regulations [57], all datasets were anonymized according to the Confidentiality Profile Attributes [58]. Four anonymization methods (deletion, cleaning, replacement, and identifier generation) were applied to 433 Digital Imaging and Communications in Medicine (DICOM) tags within the datasets. The anonymization method was recorded in the De-identification Method tag (0x0012, 0x0063). In addition, studies were pseudonymized by replacing the original study identifier with a randomly generated one. Anonymization was performed using a dataset preparation platform [59]. No other data preprocessing methods were used.
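A minimal sketch of how the four methods might be combined, assuming a DICOM header represented as a plain dictionary keyed by (group, element) tuples rather than a real DICOM toolkit object. The three-tag policy, the dummy values, and the function name are hypothetical; the actual Confidentiality Profile assigns a method to each of the 433 tags. The "2.25." prefix follows the DICOM convention for UIDs derived from a UUID.

```python
import uuid

# Hypothetical excerpt of a tag policy; the profile used in the study covers
# 433 tags. Keys are DICOM (group, element) pairs.
TAG_POLICY = {
    (0x0010, 0x0010): "replace",  # Patient's Name -> dummy value
    (0x0010, 0x0030): "delete",   # Patient's Birth Date -> removed
    (0x0008, 0x1030): "clean",    # Study Description -> identifying text stripped
}
STUDY_UID_TAG = (0x0020, 0x000D)    # Study Instance UID
DEID_METHOD_TAG = (0x0012, 0x0063)  # De-identification Method

def anonymize(header: dict, uid_map: dict) -> dict:
    """Return an anonymized copy of a header stored as {tag: value}."""
    out = dict(header)  # never modify the original header
    for tag, method in TAG_POLICY.items():
        if tag not in out:
            continue
        if method == "delete":
            del out[tag]
        elif method == "replace":
            out[tag] = "ANONYMIZED"
        elif method == "clean":
            out[tag] = ""  # simplification: real cleaning keeps non-identifying content
    # Identifier generation (pseudonymization): map each original UID to a
    # random UUID-derived UID, reusing the mapping so one study stays linked.
    original = out.get(STUDY_UID_TAG)
    if original is not None:
        uid_map.setdefault(original, "2.25." + str(uuid.uuid4().int))
        out[STUDY_UID_TAG] = uid_map[original]
    out[DEID_METHOD_TAG] = "deletion, cleaning, replacement, identifier generation"
    return out
```

Keeping `uid_map` outside the function lets all series and images of one study receive the same pseudonymous identifier, while the mapping itself can be stored separately from the released data.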

2.4.4. Equipment Manufacturers and Image Acquisition Protocol

The functional and calibration datasets included mammography data acquired from General Electric, TrioDM, and Fujifilm units. The technical and clinical monitoring datasets included equipment from ten manufacturers, including General Electric (n = 64), Medical Technologies LTD (n = 27), and Fujifilm (n = 13). Automatic adjustment of imaging parameters, including exposure time, tube voltage, and tube current, was available on all mammography units. No views other than CC and MLO were used.

2.4.5. Data Subsets and Sample Size Justification

The functional testing dataset contained four studies, a size derived from the preliminary testing design. The purpose of functional testing was to evaluate the main functions of the AI model without quantitative metrics; the small dataset size also expedited feedback to the developer. The calibration dataset encompassed 100 studies; the sampling rationale is given in previous publications [60,61]. The calibration dataset is publicly available [62]. During technical monitoring, 601,609 studies were sent to the AI model for processing (178,402 in 2021 and 423,207 in 2022). For various reasons, the AI model did not process 8348 studies, so the final technical monitoring dataset comprised 593,261 studies. The clinical monitoring dataset contained 1160 studies sampled monthly from the technical monitoring dataset. During the first ten months of clinical monitoring (March to December 2021), 20 studies were sampled each month. In the following year (January to December 2022), the monthly sample size increased to 80 studies as more resources became available to the testing organization. All samples were drawn at random. The sampling rationale may be found in our previous work [63].
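The monthly sampling scheme and the reported counts can be cross-checked with a short script. This is a sketch, not the procedure actually used: simple random sampling without replacement is assumed, and the input structure `studies_by_month` and the function names are hypothetical.

```python
import random

# Monitoring months: March-December 2021, then January-December 2022.
MONTHS = [(2021, m) for m in range(3, 13)] + [(2022, m) for m in range(1, 13)]

def monthly_sample_size(year: int) -> int:
    """20 studies per month in 2021 and 80 per month in 2022, per the text."""
    return 20 if year == 2021 else 80

def draw_clinical_sample(studies_by_month: dict, seed: int = 0) -> dict:
    """Draw a simple random sample without replacement for each month.

    `studies_by_month` maps (year, month) to the IDs of studies processed
    by the AI model in that month (hypothetical input structure).
    """
    rng = random.Random(seed)  # seeded for reproducibility
    return {ym: rng.sample(studies_by_month[ym], monthly_sample_size(ym[0]))
            for ym in MONTHS}

# Consistency checks on the counts reported in the text.
assert sum(monthly_sample_size(y) for y, _ in MONTHS) == 1160
assert 178_402 + 423_207 == 601_609
assert 601_609 - 8_348 == 593_261
assert 593_261 + 4 + 100 == 593_365  # monitoring + functional + calibration
```

The last check shows that the 593,365 studies reported in the Abstract equal the technical monitoring dataset plus the four functional and 100 calibration studies.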

2.4.6. Experts, Annotation, and Reference Standard

Two expert radiologists, each with over five years of mammography experience, annotated the studies from the functional and calibration datasets. These radiologists had access to patients’ electronic medical records, including clinical information, prior mammograms, and results of biopsies performed within the 12 months following each mammogram. Inclusion required consensus between both specialists; therefore, inter- and intra-reader variability was not assessed. Studies were annotated into two classes (“with pathology” and “without pathology”) according to the Breast Imaging Reporting and Data System (BI-RADS) guidelines [64]: the “with pathology” class included studies categorized as BI-RADS 3, 4, or 5, while the “without pathology” class consisted of studies interpreted as BI-RADS 1 or 2 [65]. For malignant cases, the ground truth was established by histological verification together with consensus agreement; for benign cases, consensus agreement alone determined the ground truth. Pathology reports were sourced from the Moscow Cancer Registry [66].
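The mapping from consensus BI-RADS category to the binary reference class can be written as a small function. The function name is hypothetical; categories 0 and 6 are not covered by the scheme described above, so the sketch rejects them rather than guessing a class.

```python
def binary_label(birads: int) -> str:
    """Map a consensus BI-RADS category to the binary reference class."""
    if birads in (1, 2):
        return "without pathology"
    if birads in (3, 4, 5):
        return "with pathology"
    # BI-RADS 0 (incomplete) and 6 (known malignancy) are outside the
    # two-class scheme described in the text.
    raise ValueError(f"BI-RADS {birads} has no binary class in this scheme")
```

Raising an error instead of defaulting to a class makes any study that slipped through with an unmapped category visible during dataset assembly.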

During technical monitoring in the clinical setting, the AI model performed the initial reading, followed by a second reading by the radiologist (i.e., the end user). The Experiment was designed to replace a second radiologist with an AI system registered as a medical device in compliance with the applicable regulations.

During the clinical monitoring phase, an expert radiologist reviewed the clinical monitoring dataset. The expert had more than 10 years of mammography experience and was trained in working with AI solutions.

Given their objectives and prospective design, the technical and clinical monitoring phases used the radiologist’s conclusion as the ground truth.

2.4.7. Demographic and Clinical Characteristics of the Dataset

The functional testing dataset included four women with a median age of 61 years (range: 56–68). The calibration dataset included 100 women with a median age of 63 years (range: 48–71). The functional and calibration datasets were built with a 50/50 class balance (“with pathology”/“without pathology”). Data types of the technical and clinical monitoring datasets are presented in Table 2.

Table 2.

Data types in the technical and clinical monitoring datasets.

2.5. Testing and Monitoring Methodology

2.5.1. Technical Integration of the AI Solution into the PACS

The AI model was integrated into the testing environment of the unified Moscow PACS for external validation. Anonymized studies from the static functional and calibration datasets (Section 2.4) were forwarded to the AI model. The AI model processed each study and generated an additional series of images and a DICOM Structured Report (DICOM SR). The model also dispatched a Kafka message in JavaScript Object Notation (JSON) format containing the numerical probability of pathological findings for each study. The Kafka messaging framework enabled communication between the AI model and the PACS for seamless data exchange and integration.
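The message schema is not specified here, so the following is only a sketch of what a per-study JSON payload and a receiving-side check might look like. All field names, the function names, and the use of the maximum per-breast score as the study-level probability are assumptions; publishing the string to a Kafka topic with a client library is omitted.

```python
import json

def build_result_message(study_uid: str, probabilities: dict, version: str) -> str:
    """Serialize one study's result as JSON (hypothetical field names)."""
    payload = {
        "study_instance_uid": study_uid,
        "model_version": version,
        "probabilities": probabilities,  # per-breast scores in [0, 1]
        # Assumption: study-level probability taken as the highest breast score.
        "study_probability": max(probabilities.values()),
    }
    return json.dumps(payload)

def parse_result_message(raw: str) -> dict:
    """Decode a message on the PACS side and validate the probability range."""
    msg = json.loads(raw)
    for side, p in msg["probabilities"].items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range for {side}: {p}")
    return msg
```

A machine-readable probability alongside the DICOM SR lets the receiving system apply thresholds or compute metrics without parsing the report text.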

2.5.2. Functional Testing

An expert radiologist with over five years of experience in mammography and specialization in radiological AI testing conducted the initial evaluation of the AI model. The functional testing protocol comprised a two-step assessment. First, the radiologist examined the AI’s core functionality as per the Baseline Functional Requirements for radiological AI models (publicly available) [67]. These requirements specify essential criteria governing model operations, including specifications for DICOM SR and additional series, as detailed in Table 3.

Table 3.

Baseline Functional Requirements for outputs of radiological AI models.

Afterwards, the radiologist performed a comprehensive assessment of the AI model from a clinical perspective. This included a detailed analysis of the AI’s ability to detect abnormalities such as masses, calcifications, and enlarged lymph nodes. Table 4 shows the outcomes of the evaluation performed in line with the Baseline Diagnostic Requirements for mammographic AI (also in the public domain) [67]. Distinguishing between the two types of requirements for AI deliverables is necessary because the Baseline Functional Requirements apply to all clinical AI models, whereas the Baseline Diagnostic Requirements are task-specific and depend on the model’s intended purpose.

Table 4.

Baseline Diagnostic Requirements for mammographic AI outputs detecting signs of breast cancer.

Once the AI model met the Baseline Functional and Diagnostic Requirements, the functional testing was considered passed. The purpose of this testing stage was to filter out AI models still in an early phase of development, which prevented resources from being wasted on testing unfinished models.

2.5.3. Calibration Testing: Methodology and Statistics

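The calibration testing compared the finding probability scores generated by the AI model against the annotated calibration dataset as the ground truth. This comparison evaluated several diagnostic accuracy metrics, including the area under the curve (AUC), accuracy, sensitivity, specificity, precision, and F1 score [68,69]. These metrics are based on the standard classification of outcomes into true positives (TPs), true negatives (TNs), false positives (FPs), and false negatives (FNs):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$$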
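The standard confusion-matrix definitions of these metrics can be checked with a small function. The counts in the usage line are hypothetical and are not results of this study; the function does not guard against empty denominators, so it assumes both classes and at least one positive prediction are present.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Standard diagnostic accuracy metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # true-positive rate (recall)
    specificity = tn / (tn + fp)   # true-negative rate
    precision = tp / (tp + fp)     # positive predictive value
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "precision": precision, "f1": f1}

# Hypothetical 100-study balanced set: 50 "with pathology", 50 "without".
example = classification_metrics(tp=40, tn=45, fp=5, fn=10)
```

In this hypothetical example, sensitivity is 0.80, specificity 0.90, and accuracy 0.85.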

To assess the diagnostic performance of the AI model, a receiver operating characteristic (ROC) curve was constructed. This curve plots the TP rate (sensitivity) against the FP rate (1 − specificity) across various thresholds. The AUC represents the model’s ability to distinguish between positive and negative cases, with values ranging from 0 to 1. The optimal classification threshold was identified using the Youden index method, which selects the threshold maximizing J = sensitivity + specificity − 1 and thus balances sensitivity against specificity [70]. The AI probability score determined class assignment: “without pathology” for scores below the threshold and “with pathology” for scores equal to or above the threshold. Calibration testing was considered successful when the model met or exceeded both criteria presented in Table 1; otherwise, we recommended that the developer update the AI model and repeat the calibration testing. The AUC threshold of 0.81 was selected on empirical grounds, as it matched the lower limit of the confidence interval of the radiologists’ AUC [55].
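The ROC analysis, the Youden cut-off, and the pass/fail check can be sketched in plain Python. This is a simplified stand-in for a statistics package, not the authors’ code. AUC is computed with the Mann–Whitney pairwise formulation (ties count one half), which equals the trapezoidal area under the empirical ROC curve. The second Table 1 criterion is assumed here to be a maximum 10% relative downward deviation of each metric from the developer’s claimed value; the function names are hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def youden_threshold(labels, scores):
    """Cut-off maximizing J = sensitivity + specificity - 1.

    A study is labeled "with pathology" when its score >= threshold.
    Requires at least one positive and one negative label.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_t, best_j = None, -1.0
    for t in sorted(set(scores)):  # every observed score is a candidate
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < t and y == 0)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:  # keep the lowest threshold among ties
            best_t, best_j = t, j
    return best_t, best_j

def passes_calibration(auc, measured, claimed, auc_min=0.81, max_drop=0.10):
    """AUC >= auc_min and no metric more than max_drop (relative) below its claim."""
    if auc < auc_min:
        return False
    return all(measured[k] >= claimed[k] * (1 - max_drop) for k in claimed)
```

The pairwise AUC is quadratic in the number of studies, which is negligible for a 100-study calibration set; larger datasets would call for a rank-based implementation.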

2.5.4. Technical Monitoring: Methodology and Statistics

During the next phase, the AI model was integrated into the PACS clinical environment, enabling real-time analysis of anonymized studies acquired in the participating medical facilities. The technical monitoring methodology assessed technical defects originating from the AI model. Technical defects were categorized into two groups: group A (no AI output) and group B (incomplete image analysis). Group B included cases where the AI model analyzed only one, two, or three of the four images comprising a standard mammogram (MLO and CC views of the right and left breasts). Technical defects were monitored monthly. The defect level in each group was calculated as the following ratio:

$$\text{Defect level} = \frac{D}{S} \times 100\%$$

Here, D represents the number of processed studies with technical defects, and S represents the total number of studies submitted to the AI model over one month. The average defect level was calculated as the arithmetic mean. To visualize changes over time, a linear regression trend line of the average defect level was constructed:

$$y = a + bx$$

where y represents the average technical defect level, x represents the month of observation, a represents the intercept, and b represents the line’s slope. For each group, the technical defect threshold was set at 10% [55]. If the threshold was exceeded, the developer was advised to investigate possible causes and update the AI model.
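The defect grouping, the monthly ratio, the least-squares trend line, and the threshold check can be sketched as follows. The function names are hypothetical, and a count of zero analyzed images is assumed to correspond to "no AI output" (group A).

```python
def classify_defect(images_analyzed: int, images_expected: int = 4):
    """Group A: no AI output; group B: 1-3 of the 4 standard images analyzed."""
    if images_analyzed == 0:
        return "A"
    if images_analyzed < images_expected:
        return "B"
    return None  # all four images analyzed: no defect

def defect_level(defective: int, submitted: int) -> float:
    """Monthly defect level in percent: D / S * 100."""
    return 100.0 * defective / submitted

def linear_trend(levels):
    """Ordinary least-squares fit y = a + b*x over months x = 1..n; returns (a, b)."""
    n = len(levels)
    xs = range(1, n + 1)
    x_mean = (n + 1) / 2
    y_mean = sum(levels) / n
    b = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, levels))
         / sum((x - x_mean) ** 2 for x in xs))
    return y_mean - b * x_mean, b

THRESHOLD = 10.0  # percent, applied to each defect group separately

def months_over_threshold(levels):
    """1-based months in which the defect level exceeded the threshold."""
    return [m for m, lvl in enumerate(levels, start=1) if lvl > THRESHOLD]
```

A negative slope b indicates a declining defect trend, while `months_over_threshold` flags the individual months that would trigger a request to the developer.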

2.5.5. Clinical Monitoring: Methodology and Statistics

The clinical monitoring methodology involved expert radiologist review. During months 1–10 of monitoring, the expert provided a qualitative assessment of AI outputs with screenshots. In 2022, quantitative assessment was introduced, where the radiologist assigned scores on a scale from 0 to 1 for both graphical mask localization and the DICOM SR interpretation generated by the AI. A score of 0 indicated a false positive, while a score of 1 indicated agreement with the AI results (Table 5).

Table 5.

Clinical evaluation criteria for mammographic AI models.

The mean localization and interpretation scores across all studies in the clinical monitoring sample were calculated using the arithmetic mean. The overall clinical assessment score was derived from these means using the following formula:

where L represents the mean localization score, and I represents the mean interpretation score. Clinical monitoring was conducted by one radiologist at a time. However, due to staff turnover, the radiologist was replaced during the 15th month of monitoring, potentially affecting the results. At the end of each month, both technical and clinical monitoring reports were sent to the developer.

2.5.6. Collecting Feedback from Radiologists and Developers

This study also gathered feedback from the end users (radiologists) of AI in routine clinical practice. Feedback was collected through a dedicated interface in the radiologist workstation, which provided two response options: (1) I agree with the AI outputs; (2) I disagree with the AI outputs. This feedback served as a preliminary assessment of the agreement rate and was forwarded to the developer. The end users participated in the study voluntarily. We also collected written feedback from the developer.
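The agreement rate is the share of "agree" responses among all workstation responses. In the sketch below, the response codes `"agree"` and `"disagree"` are hypothetical stand-ins for the two interface options.

```python
from collections import Counter

def agreement_rate(responses) -> float:
    """Percentage of 'agree' responses among all collected responses."""
    counts = Counter(responses)
    total = counts["agree"] + counts["disagree"]
    return 100.0 * counts["agree"] / total
```

With the totals reported in the Results (6661 agreements and 1745 disagreements), the function gives about 79.2%.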

2.5.7. Updating the AI Model—Developer’s Perspective

The developer updated the AI model in two scenarios: (1) when testing outcomes were unsatisfactory, and (2) during ongoing testing, to enhance performance. The first scenario required re-testing to assess the effectiveness of the update. In the second scenario, testing continued without interruption, allowing real-time monitoring of model performance. In addition, updates were categorized into two types: (1) architectural updates, which changed the model architecture and modified the original functionality, and (2) rectification of coding errors without modifying the architecture or core functionality. Architectural updates were followed by comprehensive calibration testing to evaluate the diagnostic accuracy of the updated model. By contrast, repeating calibration testing after code rectification was considered redundant; the effectiveness of such non-architectural updates was instead assessed by watchful waiting, that is, continued testing and monitoring. For both update types, the developer notified testers about version updates and provided change documentation.
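The update policy above can be encoded as a small decision function. This is an illustrative sketch: the enum, the function name, and the action strings are hypothetical labels for the steps described in the text.

```python
from enum import Enum

class UpdateType(Enum):
    ARCHITECTURAL = "architectural"  # changes architecture or core functionality
    CODE_FIX = "code_fix"            # rectifies coding errors only

def follow_up(update: UpdateType, after_failed_stage: bool) -> list:
    """Follow-up actions for one model update under the described policy."""
    actions = ["notify testers of the version change",
               "provide change documentation"]
    if after_failed_stage:
        # Scenario 1: the update responds to unsatisfactory testing outcomes.
        actions.append("repeat the failed testing stage")
    if update is UpdateType.ARCHITECTURAL:
        actions.append("repeat calibration testing")
    else:
        actions.append("continue routine monitoring (watchful waiting)")
    return actions
```

Separating the two dimensions (why the update was made, and what kind of change it was) mirrors the text, where both determine which tests must be repeated.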

3. Results

3.1. Functional Testing and Initial Update of the AI Model

Initial functional testing of the AI model (version 0.14.0) revealed two inconsistencies with the Baseline Functional Requirements: (1) absence of a “For research purposes only” label in both the DICOM SR and the additional image series, and (2) use of color graphic masks to highlight pathological findings (Figure 4). The color masks were considered non-compliant because they are difficult to distinguish on monochrome mammography monitors. We provided the developer with a functional testing report with recommendations to address these issues. After the requested changes were implemented, re-testing confirmed that both discrepancies had been resolved.

Figure 4.

Additional image series with graphical masks highlighting pathological findings.

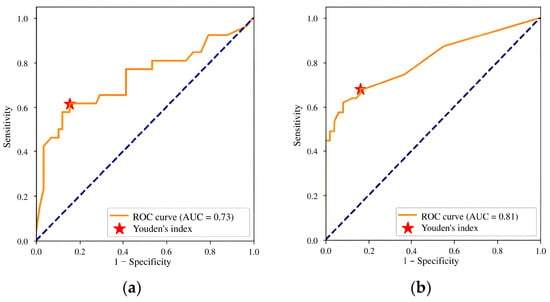

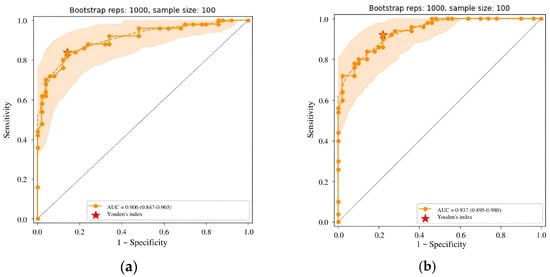

3.2. Calibration Testing and Subsequent Update of the AI Solution

Initial calibration testing of the AI model yielded promising results; however, the AUC fell below the established threshold, and accuracy deviated from the claimed value by more than the permitted margin (Figure 5a, Table 6). After identifying these discrepancies, we provided the developer with a calibration testing report and recommendations for improvement. Over the following month, the developer made several modifications, including expanding the training dataset, enhancing image preprocessing, adding a channel for image windowing and histogram equalization, introducing a head module for assessing input image quality, replacing the backbone module with ResNeSt [52], switching from standard RoIPooling to precise RoIPooling [71], and modifying the activation function.

Figure 5.

ROC curves for (a) version 0.14.0 and (b) version 0.15.0 of the Celsus Mammography AI model: results of the first and second calibration tests.

Table 6.

Diagnostic accuracy metrics of the Celsus Mammography AI model: results of the first and second calibration tests.

The second calibration test, of model version 0.15.0, demonstrated improved diagnostic accuracy. The AUC increased to 0.81, meeting the threshold criterion, and no metric deviated downward by more than 10% from the claimed values (Figure 5b, Table 6). These outcomes allowed the AI model to be integrated into the PACS clinical environment for prospective monitoring.

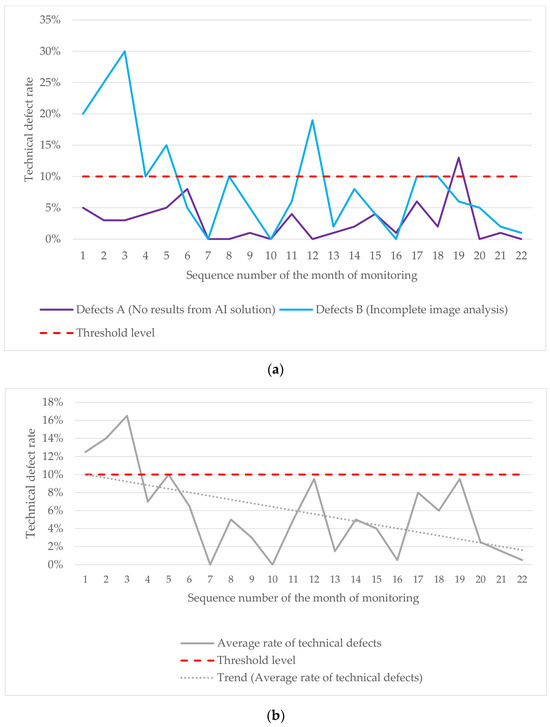

3.3. Technical Monitoring Results

The technical defect monitoring plot highlights several instances where the technical defects exceeded the established threshold (Figure 6a). The average line demonstrates a downward trend in technical defects, indicating improved model stability (Figure 6b). Technical defects peaked during the initial monitoring phase (months 1–5), with additional spikes in months 12 and 19. Most defects fell into group B, corresponding to incomplete image analysis. Key contributing factors included mammography unit configuration problems, incorrect examination parameters set by technicians, PACS technical issues, and AI developer errors.

Figure 6.

(a) Variations in group A and group B defects during technical monitoring; (b) changes in the average rate of technical defects during technical monitoring.

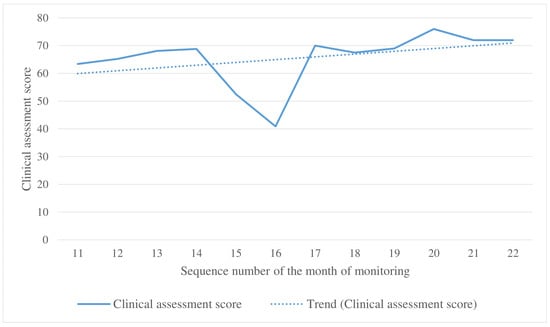

3.4. Clinical Monitoring Results

The clinical assessment score showed a generally upward trend (Figure 7). A decrease in the score during months 15–16 was attributed to the replacement of the evaluating expert radiologist.

Figure 7.

Changes in the clinical assessment score during the clinical monitoring.

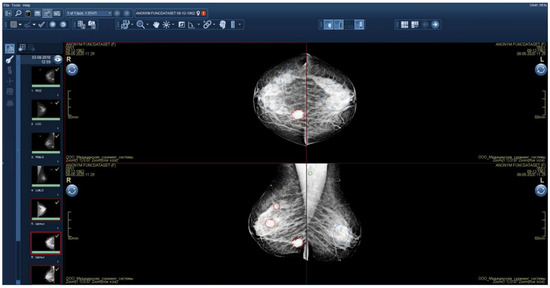

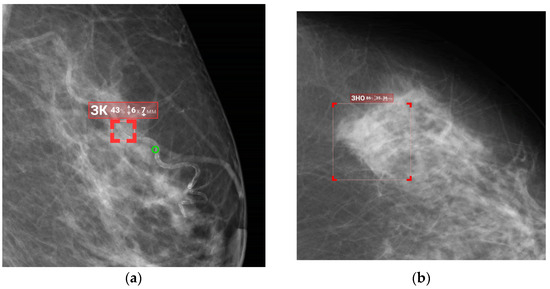

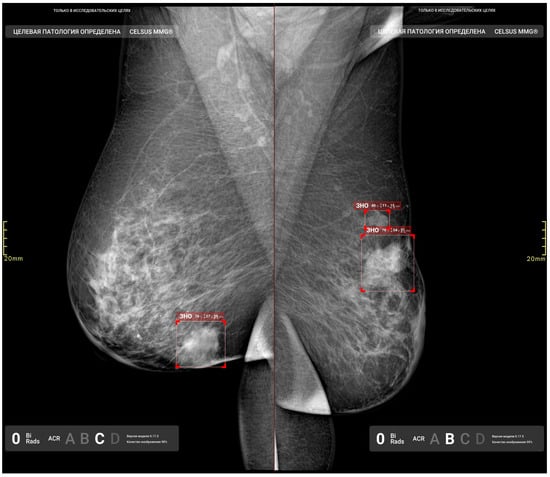

Figure 8 and Figure 9 illustrate false positives and true positives identified by an expert radiologist. The color-coded boxes represent predicted sites of potential abnormalities. Red boxes marking malignancy sites contain text and numbers indicating the class name (e.g., ‘malignant mass’ or ‘malignant calcification’), class probability, and physical dimensions.

Figure 8.

False-positive findings by the AI model: (a) benign vascular calcifications misclassified as malignant calcification, and (b) normal fibroglandular tissue misclassified as a malignant mass.

Figure 9.

True-positive results from the AI model, illustrating the detected irregular masses with indistinct margins in the right and left breasts.

3.5. Updates to the AI Model During Prospective Technical and Clinical Monitoring

During the prospective phase, the developer implemented the third and fourth updates to the AI model. The third update, installed in month 9 of monitoring, introduced a new class of findings (fibrocystic changes) and a classification system based on BI-RADS categories. These changes were driven by radiologist feedback requesting functionality relevant to the clinical setting. Several architectural adjustments were also made, including a revised approach to meta-model training to improve probability estimation and an expanded training dataset. Following these changes, the model version was updated to 0.16.0. A third calibration test demonstrated a slight improvement in diagnostic accuracy (see Table 7 and Figure 10a).

Table 7.

Diagnostic accuracy metrics of the Celsus Mammography AI model: results of the third and fourth calibration tests.

Figure 10.

(a) ROC curve for Celsus Mammography (version 0.16.0) from the third calibration test; (b) ROC curve for Celsus Mammography (version 0.17.0) from the fourth calibration test.

The fourth update took place in month 15 of monitoring. It introduced further enhancements, including new classes of findings (inverted nipple and skin thickening), PGMI-based quality classification, and breast quadrants to improve abnormality localization. The neural network architecture was changed to D2Det [72]. AI training was enhanced with new local augmentations and gradient accumulation, while a new meta-model enabled study categorization. The training dataset was also expanded. Diagnostic accuracy metrics for AI model version 0.17.0 demonstrated further improvement (see Table 7 and Figure 10b).

3.6. Feedback from Radiologists and the Developer

A total of 8406 feedback responses were collected from 33 end users. The radiologists agreed with the model in 6661 cases (79.2%), and disagreed in 1745 cases (20.8%). Feedback from the AI developer on completed tests is available in Supplementary Materials S2.

3.7. Additional Study Results

In January 2023, the Moscow compulsory health insurance system introduced a new reimbursable service, “Description and Interpretation of Mammography Using Artificial Intelligence,” powered by the Celsus AI solution [73]. The integration was preceded by a fifth calibration test, the results of which (Table 8) differ slightly from those of the previous calibration test.

Table 8.

Diagnostic accuracy metrics of Celsus Mammography version 0.18.0: results of the fifth calibration test.

4. Discussion

4.1. Discussion of the Study Results

This study demonstrates the evolution of a mammographic AI model through testing and monitoring following a lifecycle-based methodology [55]. Our findings show that continuous testing and monitoring, combined with collaboration among developers, testers, and radiologists, substantially enhanced the model’s performance. Key improvements include expanded functionality, higher diagnostic accuracy, and improved performance stability.

Initial functional testing identified critical discrepancies that could impede the model’s clinical applicability, specifically non-compliance with the labeling and display requirements for AI outputs. The prompt rectification of these issues, followed by successful re-testing, illustrates the importance of functional validation before deployment in clinical environments. Open-access functional and diagnostic requirements for radiological AI solutions standardize testing and give developers guidance for effective integration [67], a point the developer raised during this study (Supplementary Materials S2). Standardization of radiological AI remains a critical topic. Relevant initiatives include the ACR Data Science Institute (DSI) Use Cases and the ACR Recognized Center for Healthcare-AI (ARCH-AI) [57]. However, most existing standards emphasize ethical and legal aspects rather than technical and clinical requirements.

Subsequent calibration testing revealed inadequate diagnostic accuracy in terms of AUC and insufficient sensitivity. Expanding the training dataset and refining the model architecture led to a substantial performance improvement: the AUC increased to 0.81 in version 0.15.0 and reached 0.94 in version 0.17.0. These results support the applicability of lifecycle-based testing and refinement of AI solutions. A recent systematic review of external validation studies of mammographic AI models reported AUCs ranging from 0.71 to 0.96, so our results are consistent with those of other authors [74]. However, the inherent heterogeneity of AI architectures and datasets complicates precise comparisons of diagnostic accuracy across studies.
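The calibration metrics discussed above (AUC, and sensitivity, specificity, and accuracy at a chosen cut-off) can be computed from per-study AI scores and reference labels. The sketch below is a minimal, dependency-free illustration under stated assumptions: AUC is estimated with the Mann-Whitney rank statistic (ties count as one half), and the operating threshold is chosen by maximizing the Youden index J = sensitivity + specificity - 1, one common choice [70]. This section does not state how the study selected its operating point, so the selection rule, the function names, and the toy scores are all hypothetical.

```python
from typing import List, Tuple

def roc_auc(scores: List[float], labels: List[int]) -> float:
    """AUC as the probability that a randomly chosen positive study scores
    higher than a randomly chosen negative one (Mann-Whitney U divided by
    n_pos * n_neg); tied scores count as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both positive and negative studies")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def metrics_at(threshold: float, scores: List[float], labels: List[int]) -> Tuple[float, float, float]:
    """Sensitivity, specificity and accuracy when score >= threshold is called abnormal."""
    tp = fn = tn = fp = 0
    for s, y in zip(scores, labels):
        predicted = s >= threshold
        if y == 1 and predicted:
            tp += 1
        elif y == 1:
            fn += 1
        elif predicted:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp), (tp + tn) / len(labels)

def youden_threshold(scores: List[float], labels: List[int]) -> float:
    """Observed score that maximizes J = sensitivity + specificity - 1
    (the lowest such score wins ties)."""
    best_t, best_j = min(scores), -1.0
    for t in sorted(set(scores)):
        sens, spec, _ = metrics_at(t, scores, labels)
        if sens + spec - 1.0 > best_j:
            best_t, best_j = t, sens + spec - 1.0
    return best_t

def relative_change(old: float, new: float) -> float:
    """Relative change between two metric values, e.g. AUC 0.73 -> 0.91."""
    return (new - old) / old

if __name__ == "__main__":
    # Hypothetical toy data: 1 = positive by the reference standard, 0 = negative.
    scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1, 0.4, 0.7]
    labels = [1, 1, 1, 1, 0, 0, 0, 0]
    print(roc_auc(scores, labels))  # 13 of 16 pairs ranked correctly: 0.8125
    t = youden_threshold(scores, labels)
    print(t, metrics_at(t, scores, labels))  # 0.35 (1.0, 0.5, 0.75)
    print(f"{relative_change(0.73, 0.91):.1%}")  # 24.7%
```

The `relative_change` helper reproduces the convention used in the abstract (an AUC rise from 0.73 to 0.91 is a 24.7% relative increase); by the same arithmetic, the rise from 0.81 (version 0.15.0) to 0.94 (version 0.17.0) is a relative increase of about 16%.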

Initially, technical monitoring revealed a high defect rate that did not match the claimed stability. Subsequent updates led to a decline in the defect rate, albeit with fluctuations, indicating improved stability. Group B defects (incomplete image analysis) had multiple contributing factors, including human error and technical issues on both the PACS and the AI side. In earlier work, we demonstrated the prevalence of incorrect DICOM tag entries in chest radiography examinations [75], which leads us to suggest that comprehensive technician training could reduce such discrepancies and improve adherence to acquisition protocols. A recent systematic review of AI errors in mammography included only one publication focusing on technical rather than clinical errors [76], which indicates that this topic deserves further attention.
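Screening incoming metadata before analysis is one way to separate acquisition errors from AI failures when classifying defects such as incomplete image analysis. The sketch below is a hypothetical pre-check, not the study’s defect classification: each image is represented as a dict keyed by standard DICOM keywords (`Modality`, `ImageLaterality`, `ViewPosition`), a study is flagged if any image carries an unexpected value or if one of the four standard screening views (left and right CC and MLO) is missing, and the defect rate is the share of flagged studies. Real deployments would also need to handle additional views (for example, ML or XCCL), which this sketch simply flags.

```python
from typing import Dict, List, Optional

# Hypothetical rules for illustration; not the study's defect criteria.
REQUIRED_VIEWS = {("L", "CC"), ("L", "MLO"), ("R", "CC"), ("R", "MLO")}
Meta = Dict[str, Optional[str]]

def image_problems(meta: Meta) -> List[str]:
    """Problems found in one image's metadata (keys are DICOM keywords)."""
    problems = []
    if meta.get("Modality") != "MG":
        problems.append("Modality is not MG")
    if meta.get("ImageLaterality") not in {"L", "R"}:
        problems.append("missing or invalid ImageLaterality")
    if meta.get("ViewPosition") not in {"CC", "MLO"}:
        problems.append("missing or invalid ViewPosition")
    return problems

def study_problems(images: List[Meta]) -> List[str]:
    """Per-image problems plus any standard screening view that is absent."""
    problems = []
    for i, meta in enumerate(images):
        problems += [f"image {i}: {p}" for p in image_problems(meta)]
    present = {(m.get("ImageLaterality"), m.get("ViewPosition")) for m in images}
    missing = REQUIRED_VIEWS - present
    if missing:
        problems.append(f"missing views: {sorted(missing)}")
    return problems

def defect_rate(studies: List[List[Meta]]) -> float:
    """Share of studies with at least one metadata problem."""
    if not studies:
        return 0.0
    return sum(1 for s in studies if study_problems(s)) / len(studies)

if __name__ == "__main__":
    good = [{"Modality": "MG", "ImageLaterality": lat, "ViewPosition": v}
            for lat in ("L", "R") for v in ("CC", "MLO")]
    bad = good[:3] + [{"Modality": "MG", "ImageLaterality": "R"}]  # ViewPosition absent
    print(study_problems(bad))
    print(defect_rate([good, bad]))
```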

Clinical monitoring revealed an overall improvement in assessment scores, despite personnel-related fluctuations. The presence of false-positive and false-negative results necessitates continuous refinement of AI algorithms, alongside validation of AI outputs by experienced radiologists, to improve patient safety and diagnostic accuracy. Although the application of AI in clinical practice is in its early stages, recent years have seen the emergence of prospective studies of mammographic AI [77,78]. Both studies demonstrated increased or stable cancer detection rates compared with the standard approach. However, methodological limitations may be present. For example, both radiologists were aware of the AI results when discussing disputed cases, which may introduce interpretation bias [77]. Since our research did not focus on the nuances of AI performance in routine mammography, the prospective phase was limited to binary feedback from end users (agree/disagree) and expert radiologist reviews. Thus, methodology for prospective studies of radiological AI is itself a valuable contribution to the field, and it is discussed in this paper.

End user feedback revealed high radiologist–AI agreement (79.2%), which is promising for the broader introduction of AI into clinical settings. However, the 20.8% disagreement rate points to areas requiring further model training or refinement. Engaging radiologists in the development cycle fosters collaboration and enriches the clinical context, which enhances the AI’s diagnostic capabilities [35,79]. The developer’s feedback described the testing methodology as a valuable contribution to the development and refinement of the AI model, and most of the developer’s responses indicate that third-party testing motivated further improvement (Supplementary Materials S2).

Our findings also show the successful integration of the AI model into the compulsory health insurance system, a significant milestone in real-world application. Although our previous study suggested no statistically significant differences in performance between AI and radiologists [73], continuous monitoring of AI within clinical workflows remains essential for long-term success.

Despite the limited literature on testing methodologies for radiological AI [80,81,82,83], our study aligns with frameworks such as Cardoso et al.’s Radiology AI Safety, an End-to-End Lifecycle Approach (RAISE), which addresses quality concerns across regulatory, clinical, technical, and ethical domains [46]. While RAISE has a broader scope, our methodology focuses more narrowly on clinical and technical testing.

AI-related publications can be classified into four domains (Plan, Do, Study, Act), with Plan being the most common (rationale for using specific tools, ethical issues, transparency, etc.) [15]. Publications devoted to the Do (appropriate technical expertise, integration into existing workflows) and Act (algorithm improvement for better performance) domains are necessary for deploying AI in clinical practice [84]. We hope this study will contribute to the global adoption of AI in healthcare.

4.2. Study Limitations

Several limitations warrant discussion. First, we did not collect detailed clinical data or record clinical outcomes, such as the cancer detection rate, recall rate, and interval cancers. While this was consistent with our study aims, it may limit the interpretability of the results. Second, despite the large volume of test data, diagnostic accuracy metrics were calculated using only a calibration dataset of 100 studies. Third, the geographical coverage was limited to Moscow, which may limit the generalizability of the results. Finally, we did not address potential biases associated with AI utilization, which could further affect generalizability.
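The size of the calibration dataset matters because the precision of each proportion depends on how many studies contribute to it. As a rough illustration under a hypothetical assumption (the split of the 100 studies into positive and negative cases is not given in this section; an even 50/50 split is assumed), the normal-approximation (Wald) 95% half-width h of a proportion p estimated from n studies can be evaluated for sensitivity and specificity values of the order reported for the final version (0.85 and 0.93):

```latex
\begin{aligned}
h &= z_{0.975}\sqrt{\frac{p(1-p)}{n}}, \qquad z_{0.975} \approx 1.96,\\
h_{\mathrm{sens}} &\approx 1.96\sqrt{\frac{0.85 \times 0.15}{50}} = 1.96\sqrt{0.00255} \approx 0.099,\\
h_{\mathrm{spec}} &\approx 1.96\sqrt{\frac{0.93 \times 0.07}{50}} = 1.96\sqrt{0.001302} \approx 0.071.
\end{aligned}
```

Under this assumption, sensitivity would be known only to within about ±0.10 and specificity to within about ±0.07. The Wald interval is itself approximate for proportions near 0 or 1, where the Wilson score interval behaves better.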

4.3. Future Prospects

Future research directions include expanding the test datasets and investigating clinical outcomes and biases related to the implementation of the AI solution. Further application of the testing and monitoring methodology to other AI solutions, along with exploration of prospective clinical monitoring frameworks, is needed to improve the robustness of AI implementations in healthcare.

5. Conclusions

Our study demonstrates the importance of a structured, lifecycle-based methodology for enhancing mammographic AI performance. Through systematic testing and collaboration among stakeholders, we observed notable improvements in diagnostic accuracy, technical stability, and clinical integration. While our findings offer insights into the effectiveness of mammographic AI, inherent limitations highlight the need for further research, particularly concerning study design and data diversity. The successful integration of AI within clinical workflows represents a promising step towards advanced diagnostic radiology. By fostering an active dialogue between developers, clinicians, and regulatory bodies, we can harness the full potential of AI to improve patient outcomes and redefine diagnostic practices in healthcare.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/diagnostics15070822/s1, Supplementary Materials S1. AI model profile; Supplementary Materials S2. Feedback from the AI solution developer.

Author Contributions

Conceptualization, Y.V.; methodology, K.A.; software, E.N.; investigation, D.R.; writing—original draft preparation, D.R. and E.N.; writing—review and editing, L.P., K.A. and O.O.; supervision, A.V. and K.A.; project administration, O.O. and I.S.; funding acquisition, A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was prepared by a group of authors as a part of the research and development effort titled “Development of a platform for improving the quality of AI services for clinical diagnostics” (USIS No.: 123031400006-0) in accordance with Order No. 1196 dated 21 December 2022 “On approval of state assignments funded by means of allocations from the budget of the city of Moscow to the state budgetary (autonomous) institutions subordinate to the Moscow Health Care Department, for 2023 and the planned period of 2024 and 2025” issued by the Moscow Health Care Department.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki (as revised in 2013) and approved by the Independent Ethics Committee of the Moscow Regional Branch of the Russian Society of Roentgenologists and Radiologists (extract from Protocol No. 2 dated 20 February 2020). The clinical trial number is NCT04489992 (https://ClinicalTrials.gov, accessed on 15 January 2025).

Informed Consent Statement

Informed consent was given by patients during clinical examinations.

Data Availability Statement

The public datasets used in this study can be found at https://mosmed.ai/en/datasets/.

Acknowledgments

We thank Andrey Romanov for translating the publication.

Conflicts of Interest

Evgeniy Nikitin and Artem Kapninskiy are employees of the company Celsus. They had no role in the design of this study or in the collection, analyses, or interpretation of data. The other authors declare no relationships with any companies whose products or services may be related to the subject matter of the article.

References

- Katsika, L.; Boureka, E.; Kalogiannidis, I.; Tsakiridis, I.; Tirodimos, I.; Lallas, K.; Tsimtsiou, Z.; Dagklis, T. Screening for Breast Cancer: A Comparative Review of Guidelines. Life 2024, 14, 777. [Google Scholar] [CrossRef] [PubMed]

- Akwo, J.; Hadadi, I.; Ekpo, E. Diagnostic Efficacy of Five Different Imaging Modalities in the Assessment of Women Recalled at Breast Screening—A Systematic Review and Meta-Analysis. Cancers 2024, 16, 3505. [Google Scholar] [CrossRef]

- Ding, L.; Greuter, M.J.W.; Truyen, I.; Goossens, M.; Van der Vegt, B.; De Schutter, H.; Van Hal, G.; de Bock, G.H. Effectiveness of Organized Mammography Screening for Different Breast Cancer Molecular Subtypes. Cancers 2022, 14, 4831. [Google Scholar] [CrossRef] [PubMed]

- Nicosia, L.; Gnocchi, G.; Gorini, I.; Venturini, M.; Fontana, F.; Pesapane, F.; Abiuso, I.; Bozzini, A.C.; Pizzamiglio, M.; Latronico, A.; et al. History of Mammography: Analysis of Breast Imaging Diagnostic Achievements over the Last Century. Healthcare 2023, 11, 1596. [Google Scholar] [CrossRef] [PubMed]

- Magnus, M.C.; Ping, M.; Shen, M.M.; Bourgeois, J.; Magnus, J.H. Effectiveness of Mammography Screening in Reducing Breast Cancer Mortality in Women Aged 39–49 Years: A Meta-Analysis. J. Women’s Health 2011, 20, 845–852. [Google Scholar] [CrossRef]

- Marmot, M.G.; Altman, D.G.; Cameron, D.A.; Dewar, J.A.; Thompson, S.G.; Wilcox, M. The Benefits and Harms of Breast Cancer Screening: An Independent Review. Br. J. Cancer 2013, 108, 2205–2240. [Google Scholar] [CrossRef]

- Nelson, H.D.; Fu, R.; Cantor, A.; Pappas, M.; Daeges, M.; Humphrey, L. Effectiveness of Breast Cancer Screening: Systematic Review and Meta-Analysis to Update the 2009 U.S. Preventive Services Task Force Recommendation. Ann. Intern. Med. 2016, 164, 244–255. [Google Scholar] [CrossRef]

- Dibden, A.; Offman, J.; Duffy, S.W.; Gabe, R. Worldwide Review and Meta-Analysis of Cohort Studies Measuring the Effect of Mammography Screening Programmes on Incidence-Based Breast Cancer Mortality. Cancers 2020, 12, 976. [Google Scholar] [CrossRef]

- Hirani, R.; Noruzi, K.; Khuram, H.; Hussaini, A.S.; Aifuwa, E.I.; Ely, K.E.; Lewis, J.M.; Gabr, A.E.; Smiley, A.; Tiwari, R.K.; et al. Artificial Intelligence and Healthcare: A Journey through History, Present Innovations, and Future Possibilities. Life 2024, 14, 557. [Google Scholar] [CrossRef]

- Najjar, R. Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging. Diagnostics 2023, 13, 2760. [Google Scholar] [CrossRef]

- Avanzo, M.; Stancanello, J.; Pirrone, G.; Drigo, A.; Retico, A. The Evolution of Artificial Intelligence in Medical Imaging: From Computer Science to Machine and Deep Learning. Cancers 2024, 16, 3702. [Google Scholar] [CrossRef] [PubMed]

- Pinto-Coelho, L. How Artificial Intelligence Is Shaping Medical Imaging Technology: A Survey of Innovations and Applications. Bioengineering 2023, 10, 1435. [Google Scholar] [CrossRef] [PubMed]

- Obuchowicz, R.; Strzelecki, M.; Piórkowski, A. Clinical Applications of Artificial Intelligence in Medical Imaging and Image Processing—A Review. Cancers 2024, 16, 1870. [Google Scholar] [CrossRef] [PubMed]

- Karalis, V.D. The Integration of Artificial Intelligence into Clinical Practice. Appl. Biosci. 2024, 3, 14–44. [Google Scholar] [CrossRef]

- Khan, S.D.; Hoodbhoy, Z.; Raja, M.H.R.; Kim, J.Y.; Hogg, H.D.J.; Manji, A.A.A.; Gulamali, F.; Hasan, A.; Shaikh, A.; Tajuddin, S.; et al. Frameworks for Procurement, Integration, Monitoring, and Evaluation of Artificial Intelligence Tools in Clinical Settings: A Systematic Review. PLoS Digit. Health 2024, 3, e0000514. [Google Scholar] [CrossRef]

- Maleki Varnosfaderani, S.; Forouzanfar, M. The Role of AI in Hospitals and Clinics: Transforming Healthcare in the 21st Century. Bioengineering 2024, 11, 337. [Google Scholar] [CrossRef]

- Al-Karawi, D.; Al-Zaidi, S.; Helael, K.A.; Obeidat, N.; Mouhsen, A.M.; Ajam, T.; Alshalabi, B.A.; Salman, M.; Ahmed, M.H. A Review of Artificial Intelligence in Breast Imaging. Tomography 2024, 10, 705–726. [Google Scholar] [CrossRef]

- Zhu, Z.; Sun, Y.; Honarvar Shakibaei Asli, B. Early Breast Cancer Detection Using Artificial Intelligence Techniques Based on Advanced Image Processing Tools. Electronics 2024, 13, 3575. [Google Scholar] [CrossRef]

- Shamir, S.B.; Sasson, A.L.; Margolies, L.R.; Mendelson, D.S. New Frontiers in Breast Cancer Imaging: The Rise of AI. Bioengineering 2024, 11, 451. [Google Scholar] [CrossRef]

- Khalid, A.; Mehmood, A.; Alabrah, A.; Alkhamees, B.F.; Amin, F.; AlSalman, H.; Choi, G.S. Breast Cancer Detection and Prevention Using Machine Learning. Diagnostics 2023, 13, 3113. [Google Scholar] [CrossRef]

- Carriero, A.; Groenhoff, L.; Vologina, E.; Basile, P.; Albera, M. Deep Learning in Breast Cancer Imaging: State of the Art and Recent Advancements in Early 2024. Diagnostics 2024, 14, 848. [Google Scholar] [CrossRef] [PubMed]

- Adachi, M.; Fujioka, T.; Ishiba, T.; Nara, M.; Maruya, S.; Hayashi, K.; Kumaki, Y.; Yamaga, E.; Katsuta, L.; Hao, D.; et al. AI Use in Mammography for Diagnosing Metachronous Contralateral Breast Cancer. J. Imaging 2024, 10, 211. [Google Scholar] [CrossRef] [PubMed]

- Wing, P.; Langelier, M.H. Workforce Shortages in Breast Imaging: Impact on Mammography Utilization. Am. J. Roentgenol. 2009, 192, 370–378. [Google Scholar] [CrossRef]

- Kalidindi, S.; Gandhi, S. Workforce Crisis in Radiology in the UK and the Strategies to Deal With It: Is Artificial Intelligence the Saviour? Cureus 2023, 15, e43866. [Google Scholar] [CrossRef]

- Kwee, T.C.; Kwee, R.M. Workload of Diagnostic Radiologists in the Foreseeable Future Based on Recent Scientific Advances: Growth Expectations and Role of Artificial Intelligence. Insights Imaging 2021, 12, 88. [Google Scholar] [CrossRef]

- Gastounioti, A.; Eriksson, M.; Cohen, E.A.; Mankowski, W.; Pantalone, L.; Ehsan, S.; McCarthy, A.M.; Kontos, D.; Hall, P.; Conant, E.F. External Validation of a Mammography-Derived AI-Based Risk Model in a U.S. Breast Cancer Screening Cohort of White and Black Women. Cancers 2022, 14, 4803. [Google Scholar] [CrossRef]

- Yu, A.C.; Mohajer, B.; Eng, J. External Validation of Deep Learning Algorithms for Radiologic Diagnosis: A Systematic Review. Radiol. Artif. Intell. 2022, 4, e210064. [Google Scholar] [CrossRef]

- Martin-Noguerol, T.; Luna, A. External Validation of AI Algorithms in Breast Radiology: The Last Healthcare Security Checkpoint? Quant. Imaging Med. Surg. 2021, 11, 2888–2892. [Google Scholar] [CrossRef]

- Vasilev, Y.; Vladzymyrskyy, A.; Omelyanskaya, O.; Blokhin, I.; Kirpichev, Y.; Arzamasov, K. AI-Based CXR First Reading: Current Limitations to Ensure Practical Value. Diagnostics 2023, 13, 1430. [Google Scholar] [CrossRef]

- Kim, D.W.; Jang, H.Y.; Kim, K.W.; Shin, Y.; Park, S.H. Design Characteristics of Studies Reporting the Performance of Artificial Intelligence Algorithms for Diagnostic Analysis of Medical Images: Results from Recently Published Papers. Korean J. Radiol. 2019, 20, 405–410. [Google Scholar] [CrossRef]

- Petersson, L.; Larsson, I.; Nygren, J.M.; Nilsen, P.; Neher, M.; Reed, J.E.; Tyskbo, D.; Svedberg, P. Challenges to Implementing Artificial Intelligence in Healthcare: A Qualitative Interview Study with Healthcare Leaders in Sweden. BMC Health Serv. Res. 2022, 22, 850. [Google Scholar] [CrossRef] [PubMed]

- Ramwala, O.A.; Lowry, K.P.; Cross, N.M.; Hsu, W.; Austin, C.C.; Mooney, S.D.; Lee, C.I. Establishing a Validation Infrastructure for Imaging-Based Artificial Intelligence Algorithms Before Clinical Implementation. J. Am. Coll. Radiol. 2024, 21, 1569–1574. [Google Scholar] [CrossRef] [PubMed]

- Freeman, K.; Geppert, J.; Stinton, C.; Todkill, D.; Johnson, S.; Clarke, A.; Taylor-Phillips, S. Use of Artificial Intelligence for Image Analysis in Breast Cancer Screening Programmes: Systematic Review of Test Accuracy. BMJ 2021, 374, n1872. [Google Scholar] [CrossRef]

- Hickman, S.E.; Baxter, G.C.; Gilbert, F.J. Adoption of Artificial Intelligence in Breast Imaging: Evaluation, Ethical Constraints and Limitations. Br. J. Cancer 2021, 125, 15–22. [Google Scholar] [CrossRef] [PubMed]

- Dikici, E.; Bigelow, M.; Prevedello, L.M.; White, R.D.; Erdal, B.S. Integrating AI into Radiology Workflow: Levels of Research, Production, and Feedback Maturity. J. Med. Imaging 2020, 7, 016502. [Google Scholar] [CrossRef]

- Brady, A.P.; Allen, B.; Chong, J.; Kotter, E.; Kottler, N.; Mongan, J.; Oakden-Rayner, L.; dos Santos, D.P.; Tang, A.; Wald, C.; et al. Developing, Purchasing, Implementing and Monitoring AI Tools in Radiology: Practical Considerations. A Multi-Society Statement from the ACR, CAR, ESR, RANZCR & RSNA. Insights Imaging 2024, 68, 7–26. [Google Scholar] [CrossRef]

- Pianykh, O.S.; Langs, G.; Dewey, M.; Enzmann, D.R.; Herold, C.J.; Schoenberg, S.O.; Brink, J.A. Continuous Learning AI in Radiology: Implementation Principles and Early Applications. Radiology 2020, 297, 6–14. [Google Scholar] [CrossRef]

- Sinha, S.; Lee, Y.M. Challenges with Developing and Deploying AI Models and Applications in Industrial Systems. Discov. Artif. Intell. 2024, 4, 55. [Google Scholar] [CrossRef]

- Smith, A.; Severn, M. An Overview of Continuous Learning Artificial Intelligence-Enabled Medical Devices. Can. J. Health Technol. 2022, 2, 341. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, X.; Su, H.; Zhu, J. A Comprehensive Survey of Continual Learning: Theory, Method and Application. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 14, 5362–5383. [Google Scholar] [CrossRef]

- Harvey, H.; Heindl, A.; Khara, G.; Korkinof, D.; O’Neill, M.; Yearsley, J.; Karpati, E.; Rijken, T.; Kecskemethy, P.; Forrai, G. Deep Learning in Breast Cancer Screening. In Artificial Intelligence in Medical Imaging: Opportunities, Applications and Risks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 187–215. ISBN 9783319948782. [Google Scholar]

- Gichoya, J.W.; Thomas, K.; Celi, L.A.; Safdar, N.; Banerjee, I.; Banja, J.D.; Seyyed-Kalantari, L.; Trivedi, H.; Purkayastha, S. AI Pitfalls and What Not to Do: Mitigating Bias in AI. Br. J. Radiol. 2023, 96, 20230023. [Google Scholar] [CrossRef] [PubMed]

- Tejani, A.S.; Ng, Y.S.; Xi, Y.; Rayan, J.C. Understanding and Mitigating Bias in Imaging Artificial Intelligence. Radiographics 2024, 44, e230067. [Google Scholar] [CrossRef] [PubMed]

- Vrudhula, A.; Kwan, A.C.; Ouyang, D.; Cheng, S. Machine Learning and Bias in Medical Imaging: Opportunities and Challenges. Circ. Cardiovasc. Imaging 2024, 17, e015495. [Google Scholar] [CrossRef]

- Park, S.H.; Han, K.; Jang, H.Y.; Park, J.E.; Lee, J.G.; Kim, D.W.; Choi, J. Methods for Clinical Evaluation of Artificial Intelligence Algorithms for Medical Diagnosis. Radiology 2023, 306, 20–31. [Google Scholar] [CrossRef]

- Cardoso, M.J.; Moosbauer, J.; Cook, T.S.; Erdal, B.S.; Genereaux, B.; Gupta, V.; Landman, B.A.; Lee, T.; Somasundaram, E.; Summers, R.M.; et al. RAISE-Radiology AI Safety, an End-to-End Lifecycle Approach. [CrossRef]

- Ng, M.Y.; Kapur, S.; Blizinsky, K.D.; Hernandez-Boussard, T. The AI Life Cycle: A Holistic Approach to Creating Ethical AI for Health Decisions. Nat. Med. 2022, 28, 2247–2249. [Google Scholar] [CrossRef]

- De Silva, D.; Alahakoon, D. An Artificial Intelligence Life Cycle: From Conception to Production. Patterns 2022, 3, 100489. [Google Scholar] [CrossRef]

- Experiment on the Introduction of Artificial Intelligence Technologies. Available online: https://telemedai.ru/en/proekty/eksperiment-po-vnedreniyu-tehnologij-iskusstvennogo-intellekta (accessed on 15 January 2025).

- Celsus Mammography. Available online: https://celsus.ai/en/products-mammography/ (accessed on 15 January 2025).

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. ResNeSt: Split-Attention Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022. [Google Scholar] [CrossRef]

- CELS® Software. Registration Certificate for a Medical Device 2021/14449. Available online: https://nevacert.ru/reestry/med-reestr/rzn-202114449-46522.html (accessed on 15 January 2025).

- The Celsus Medical Decision Support System Has Received a CE Mark. Available online: https://celsus.ai/en/news/the-celsus-medical-decision-support-system-has-received-a-ce-mark/ (accessed on 15 January 2025).

- Vasilev, Y.A.; Vladzymyrskyy, A.V.; Omelyanskaya, O.V.; Arzamasov, K.M.; Chetverikov, S.F.; Rumyantsev, D.A.; Zelenova, M.A. Methodology for Testing and Monitoring Artificial Intelligence-Based Software for Medical Diagnostics. Digit. Diagn. 2023, 4, 252–267. [Google Scholar] [CrossRef]

- Mongan, J.; Moy, L.; Kahn, C.E. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. Radiol. Artif. Intell. 2020, 2, e200029. [Google Scholar] [CrossRef]

- Avrin, D. HIPAA Privacy and DICOM Anonymization for Research. Acad. Radiol. 2008, 15, 273. [Google Scholar] [CrossRef]

- DICOM Standards Committee. Application Level Confidentiality Profile Attributes. Available online: https://dicom.nema.org/medical/dicom/current/output/html/part15.html#table_E.1-1 (accessed on 15 January 2025).

- Vasiliev, Y.A.; Vladzimirskyy, A.V.; Omelyanskaya, O.V.; Arzamasov, K.M.; Savkina, E.F.; Kasimov, S.D.; Kosov, P.N.; Ponomarenko, A.P.; Medvedev, K.E.; Burtsev, T.A.; et al. Certificate of State Registration of Computer Program No. 2025610804 Russian Federation. Dataset Preparation Platform: No. 2024691653: Declared 20 December 2024: Published 14 January 2025. Available online: https://www.elibrary.ru/item.asp?id=80277623 (accessed on 15 January 2025).

- Bobrovskaya, T.M.; Nikitin, N.Y.; Vladzymyrskyy, A.V.; Omelyanskaya, O.V. Sample Size for Assessing a Diagnostic Accuracy of AI-Based Software in Radiology. Sib. J. Clin. Exp. Med. 2024, 39, 188–198. [Google Scholar] [CrossRef]

- Arzamasov, K.; Vasilev, Y.; Zelenova, M.; Pestrenin, L.; Busygina, Y.; Bobrovskaya, T.; Chetverikov, S.; Shikhmuradov, D.; Pankratov, A.; Kirpichev, Y.; et al. Independent Evaluation of the Accuracy of 5 Artificial Intelligence Software for Detecting Lung Nodules on Chest X-Rays. Quant. Imaging Med. Surg. 2024, 14, 5288–5303. [Google Scholar] [CrossRef] [PubMed]

- MosMedData: MMG with and without Signs of Breast Malignancies, Enriched with Clinical Information. Available online: https://mosmed.ai/en/datasets/mosmeddata-mmg-s-nalichiem-i-otsutstviem-priznakov-zlokachestvennih-novoobrazovanii-molochnoi-zhelezi-obogaschennii-klinicheskoi-informatsiei/ (accessed on 15 January 2024).

- Chetverikov, S.F.; Arzamasov, K.M.; Andreichenko, A.E.; Novik, V.P.; Bobrovskaya, T.M.; Vladzimirskyy, A.V. Approaches to Sampling for Quality Control of Artificial Intelligence in Biomedical Research. Sovrem. Tehnol. Med. 2023, 15, 19–25. [Google Scholar] [CrossRef]

- Spak, D.A.; Plaxco, J.S.; Santiago, L.; Dryden, M.J.; Dogan, B.E. BI-RADS® Fifth Edition: A Summary of Changes. Diagn. Interv. Imaging 2017, 98, 179–190. [Google Scholar]

- Chang Sen, L.Q.; Mayo, R.C.; Lesslie, M.D.; Yang, W.T.; Leung, J.W.T. Impact of Second-Opinion Interpretation of Breast Imaging Studies in Patients Not Currently Diagnosed with Breast Cancer. J. Am. Coll. Radiol. 2018, 15, 980–987. [Google Scholar] [CrossRef]

- Kaprin, A.D.; Chissov, V.I.; Starinsky, V.V.; Gretsova, O.P.; Petrova, G.V.; Prostov, Y.I. The Information Analytical System for Registration of Cancer Patients in the Russian Federation. P.A. Herzen J. Oncol. 2015, 4, 40–43. [Google Scholar] [CrossRef]

- Baseline Functional and Diagnostic Requirements for AI Services. Available online: https://mosmed.ai/en/ai/docs/ (accessed on 15 January 2025).

- Baratloo, A.; Hosseini, M.; Negida, A.; El Ashal, G. Part 1: Simple Definition and Calculation of Accuracy, Sensitivity and Specificity. Emergency 2015, 3, 48–49. [Google Scholar]

- Hicks, S.A.; Strümke, I.; Thambawita, V.; Hammou, M.; Riegler, M.A.; Halvorsen, P.; Parasa, S. On Evaluation Metrics for Medical Applications of Artificial Intelligence. Sci. Rep. 2022, 12, 1–9. [Google Scholar] [CrossRef]

- Ruopp, M.D.; Perkins, N.J.; Whitcomb, B.W.; Schisterman, E.F. Youden Index and Optimal Cut-Point Estimated from Observations Affected by a Lower Limit of Detection. Biom. J. 2008, 50, 419–430. [Google Scholar] [CrossRef]

- Jiang, B.; Luo, R.; Mao, J.; Xiao, T.; Jiang, Y. Acquisition of Localization Confidence for Accurate Object Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 14 September 2018. [Google Scholar] [CrossRef]

- Cao, J.; Cholakkal, H.; Anwer, R.M.; Khan, F.S.; Pang, Y.; Shao, L. D2Det: Towards High Quality Object Detection and Instance Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar] [CrossRef]

- Vasiliev, Y.A.; Vladzimirskyy, A.V.; Arzamasov, K.M.; Shulkin, I.M.; Aksenova, L.E.; Pestrenin, L.D.; Semenov, S.S.; Bondarchuk, D.V.; Smirnov, I.V. The First 10,000 Mammography Exams Performed as Part of the “Description and Interpretation of Mammography Data Using Artificial Intelligence” Service. Manag. Zdr. 2023, 8, 54–67. [Google Scholar] [CrossRef]

- Branco, P.E.S.C.; Franco, A.H.S.; de Oliveira, A.P.; Carneiro, I.M.C.; de Carvalho, L.M.C.; de Souza, J.I.N.; Leandro, D.R.; Cândido, E.B. Artificial Intelligence in Mammography: A Systematic Review of the External Validation. Rev. Bras. Ginecol. Obstet. 2024, 46, 1–7. [Google Scholar] [CrossRef] [PubMed]

- Borisov, A.A.; Arzamasov, K.M.; Semenov, S.S.; Vladzimirsky, A.V.; Vasiliev, Y.A. Investigation of the Capabilities of Algorithms for Automated Quality Assurance of DICOM Metadata of Chest X-ray Examinations. Med. Vis. 2024, 28, 134–144. [Google Scholar] [CrossRef]

- Zeng, A.; Houssami, N.; Noguchi, N.; Nickel, B.; Marinovich, M.L. Frequency and Characteristics of Errors by Artificial Intelligence (AI) in Reading Screening Mammography: A Systematic Review. Breast Cancer Res. Treat. 2024, 207, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Dembrower, K.; Crippa, A.; Colón, E.; Eklund, M.; Strand, F. Artificial Intelligence for Breast Cancer Detection in Screening Mammography in Sweden: A Prospective, Population-Based, Paired-Reader, Non-Inferiority Study. Lancet Digit. Health 2023, 5, e703–e711. [Google Scholar] [CrossRef] [PubMed]

- Lång, K.; Josefsson, V.; Larsson, A.M.; Larsson, S.; Högberg, C.; Sartor, H.; Hofvind, S.; Andersson, I.; Rosso, A. Artificial Intelligence-Supported Screen Reading versus Standard Double Reading in the Mammography Screening with Artificial Intelligence Trial (MASAI): A Clinical Safety Analysis of a Randomised, Controlled, Non-Inferiority, Single-Blinded, Screening Acc. Lancet Oncol. 2023, 24, 936–944. [Google Scholar] [CrossRef]

- Allen, B.; Gish, R.; Dreyer, K. The Role of an Artificial Intelligence Ecosystem in Radiology. In Artificial Intelligence in Medical Imaging: Opportunities, Applications and Risks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 291–327. ISBN 9783319948782. [Google Scholar]

- Waller, J.; O’Connor, A.; Rafaat, E.; Amireh, A.; Dempsey, J.; Martin, C.; Umair, M. Applications and Challenges of Artificial Intelligence in Diagnostic and Interventional Radiology. Polish J. Radiol. 2022, 87, e113–e117. [Google Scholar] [CrossRef]

- Chang, J.Y.; Makary, M.S. Evolving and Novel Applications of Artificial Intelligence in Thoracic Imaging. Diagnostics 2024, 14, 1456. [Google Scholar] [CrossRef]

- Brady, A.P.; Neri, E. Artificial Intelligence in Radiology-Ethical Considerations. Diagnostics 2020, 10, 231. [Google Scholar] [CrossRef]

- Allen, B.; Dreyer, K.; Stibolt, R.; Agarwal, S.; Coombs, L.; Treml, C.; Elkholy, M.; Brink, L.; Wald, C. Evaluation and Real-World Performance Monitoring of Artificial Intelligence Models in Clinical Practice: Try It, Buy It, Check It. J. Am. Coll. Radiol. 2021, 18, 1489–1496. [Google Scholar] [CrossRef]

- Isa, I.G.T.; Ammarullah, M.I.; Efendi, A.; Nugroho, Y.S.; Nasrullah, H.; Sari, M.P. Constructing an Elderly Health Monitoring System Using Fuzzy Rules and Internet of Things. AIP Adv. 2024, 14, 1–15. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).