Abstract

Background/Objectives: Integrating deep learning (DL) into radiomics offers a noninvasive approach to predicting molecular markers in gliomas, a crucial step toward personalized medicine. This study aimed to assess the diagnostic accuracy of DL models in predicting various glioma molecular markers using MRI. Methods: Following the PRISMA guidelines, we systematically searched PubMed, Scopus, Ovid, and Web of Science up to 27 February 2024 for studies employing DL algorithms to predict glioma molecular markers from MRI sequences. Risk of bias, applicability concerns, and study quality were assessed using the QUADAS-2 tool and the radiomics quality score (RQS). A bivariate random-effects model was used to estimate pooled sensitivity and specificity while accounting for inter-study heterogeneity. Results: Of 728 articles, 43 qualified for qualitative analysis, and 30 were included in the meta-analysis. In the validation cohorts, MGMT methylation prediction had a pooled sensitivity of 0.74 (95% CI: 0.66–0.80) and a pooled specificity of 0.75 (95% CI: 0.65–0.82), both with significant heterogeneity (p < 0.01, I² = 80.90–84.50%). For ATRX and TERT mutations, the pooled sensitivities were 0.79 (95% CI: 0.67–0.87) and 0.81 (95% CI: 0.72–0.87), and the pooled specificities were 0.85 (95% CI: 0.78–0.91) and 0.70 (95% CI: 0.61–0.77), respectively. Meta-regression analyses indicated that heterogeneity was associated with data sources, MRI sequences, feature extraction methods, and validation techniques. Conclusions: While DL models show promising accuracy in predicting glioma molecular markers, variability in study settings complicates clinical translation. To bridge this gap, future efforts should focus on harmonizing multi-center MRI datasets, incorporating external validation, and promoting open-source research and data sharing.

1. Introduction

Gliomas are the most common primary brain tumors, characterized by significant histological and molecular variability. The 2021 WHO Classification of Tumors of the Central Nervous System emphasizes the need for accurate identification of molecular markers to ensure precise glioma classification [1]. Currently, genetic profiling of tumor tissue, obtained through a biopsy or surgical resection, is the standard approach. However, these invasive procedures carry risks such as bleeding and infection and may not fully capture the heterogeneity of the tumor [2]. As a result, noninvasive methods for obtaining genetic and histologic information are critically important. Magnetic resonance imaging (MRI) offers a noninvasive alternative and is particularly important due to its widespread clinical use and its ability to capture diverse tumor characteristics across various imaging sequences [3]. Despite its advantages, MRI interpretation remains challenging due to human limitations and the presence of radiological mimics, such as inflammation, stroke, and infections [4].

Radiomics aims to bridge this gap by linking high-throughput quantitative image features with molecular phenotypes [5]. The radiomics pipeline involves the following critical steps: image processing and segmentation, feature extraction and selection, and model classification, each of which can substantially influence the results [6,7]. Concerns over the reliability of manually segmented, handcrafted features have driven the integration of deep learning (DL) into the radiomics framework. DL methods can either automate individual tasks within this framework, reducing human bias and error, or replace the conventional radiomics pipeline entirely by enabling direct classification without predefined features [6]. In this article, we refer to both approaches as DL-based radiomics. Since the integration of DL into radiomics, numerous studies have explored the molecular markers associated with various gliomas [8,9,10,11,12]. Given the extensive research in this field, a systematic review and meta-analysis are needed to critically evaluate and quantify the existing evidence and to help determine whether these advanced approaches can be effectively translated into clinical practice.
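To make the feature extraction step concrete, the following minimal Python sketch computes a few first-order (intensity histogram) features inside a segmentation mask. It is illustrative only: the function name, the 8-bin discretization, and the toy 2D inputs are assumptions, and standardized toolkits that follow the Image Biomarker Standardization Initiative define these features more carefully, including in 3D.

```python
import math
from collections import Counter


def first_order_features(image, mask, n_bins=8):
    """Compute simple first-order radiomic features inside a binary ROI.

    image, mask: equally sized 2D lists; mask entries are 1 (tumor) or 0.
    Hypothetical helper for illustration, not a standardized implementation.
    """
    # Keep only the intensities of pixels inside the segmented ROI
    roi = [v for img_row, mask_row in zip(image, mask)
           for v, m in zip(img_row, mask_row) if m]
    n = len(roi)
    mean = sum(roi) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in roi) / n)
    skewness = (sum((v - mean) ** 3 for v in roi) / n) / std ** 3 if std > 0 else 0.0

    # Discretize intensities into a fixed number of bins, then compute entropy
    lo, hi = min(roi), max(roi)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant ROI
    counts = Counter(min(int((v - lo) / width), n_bins - 1) for v in roi)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return {"mean": mean, "std": std, "skewness": skewness, "entropy": entropy}


# Toy 4 x 4 slice with a 2 x 2 "tumor" in the center
image = [[0, 0, 0, 0], [0, 10, 20, 0], [0, 30, 40, 0], [0, 0, 0, 0]]
mask = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
print(first_order_features(image, mask))
```

In a conventional pipeline, handcrafted features like these would then pass through feature selection before a classifier is trained.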

In our previous systematic review [8], we thoroughly examined two key genetic markers, isocitrate dehydrogenase (IDH) mutations and 1p/19q codeletion, which are fundamental to glioma diagnosis and classification [9]. Building upon this work, the present study shifts focus to other molecular abnormalities that are often overlooked and have been investigated less frequently. Existing systematic reviews predominantly focus on O6-methylguanine-DNA methyltransferase (MGMT) gene promoter methylation [10,11] and report contradictory outcomes [12,13]. Additionally, reviews assessing other genetic and epigenetic abnormalities [14,15,16] are either based on conventional radiomics or require updating. This study addresses these gaps by comprehensively reviewing MRI-based DL models for predicting a broad spectrum of glioma molecular markers. These markers include mutations in phosphatase and tensin homolog (PTEN), alpha-thalassemia/mental retardation syndrome X-linked (ATRX), and telomerase reverse transcriptase (TERT), as well as CDKN2A/B homozygous deletion and epidermal growth factor receptor (EGFR) amplification. We also reviewed high Ki-67 and p53 protein expression, Synaptophysin (SYP) gene expression, combined aneuploidy of chromosomes 7 and 10, and MGMT methylation. More importantly, we performed an extensive meta-regression analysis to account for inter-study heterogeneity and to quantitatively examine the relationship between study settings and diagnostic accuracy.

2. Materials and Methods

This systematic review and meta-analysis followed the PRISMA guidelines [17]; no ethical approval was required. The study is registered on PROSPERO (CRD42024542505).

2.1. Search Strategy and Study Selection

We conducted a comprehensive search of PubMed, Scopus, Ovid, and Web of Science up to 27 February 2024 and reviewed article bibliographies (Supplementary Section S1). Studies were included if they involved any glioma grade, predicted at least one of the aforementioned molecular markers using MRI, and applied DL algorithms within a radiomics framework. For studies assessing multiple biomarkers, each biomarker was analyzed separately. To be included in the meta-analysis, studies needed to report sufficient data to construct a 2 × 2 diagnostic table (true positives, false positives, false negatives, and true negatives). Studies lacking adequate validation metrics were included in the qualitative synthesis only. We excluded non-original research and non-human studies. Two reviewers (S.F. and S.M.) independently screened the abstracts and full texts, resolving disagreements through discussion. Initial duplicate removal and screening were conducted using Zotero (version 6.0.36).
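The 2 × 2 table required for inclusion in the meta-analysis maps directly onto sensitivity and specificity. The short Python sketch below illustrates this mapping; the class name and the counts in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Diagnostic 2 x 2 table for one biomarker in one cohort (hypothetical helper)."""
    tp: int  # marker altered, model predicts altered
    fp: int  # marker intact, model predicts altered
    fn: int  # marker altered, model predicts intact
    tn: int  # marker intact, model predicts intact

    @property
    def sensitivity(self) -> float:
        # Proportion of altered cases that the model detects
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # Proportion of intact cases that the model correctly rules out
        return self.tn / (self.tn + self.fp)


table = TwoByTwo(tp=30, fp=10, fn=10, tn=30)
print(table.sensitivity, table.specificity)  # 0.75 0.75
```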

2.2. Data Extraction

Data on the study design, patient characteristics, datasets, MRI sequences, data augmentation techniques, and computational methodologies were independently collected by two reviewers (S.F. and S.M.) using a standardized form (Supplementary Section S2). Performance metrics, including the diagnostic confusion matrix, were obtained from the training (internal validation) and unseen validation datasets. When data were missing, the corresponding authors were contacted; as none responded, the 2 × 2 counts were computed from other reported metrics and the numbers of patients with altered and intact biomarkers. For studies presenting only receiver operating characteristic (ROC) curves, sensitivity and specificity were read at the operating point closest to the top-left corner of the ROC plot. Derived counts were rounded to the nearest whole number, which may cause slight deviations from the true sensitivity and specificity. When a study evaluated multiple DL models, the best-performing one was selected.
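Two of the derivation steps above can be sketched in Python under stated assumptions: rebuilding approximate 2 × 2 counts from a reported sensitivity, specificity, and the numbers of altered and intact cases, and reading the operating point closest to the top-left corner (false positive rate 0, true positive rate 1) from digitized ROC coordinates. The function names and example values are hypothetical, and Euclidean distance to the corner is assumed as the "closest" criterion.

```python
import math


def counts_from_metrics(sens, spec, n_altered, n_intact):
    """Rebuild an approximate 2 x 2 table from reported metrics and class sizes.

    Counts are rounded to whole patients, so recomputed metrics may differ
    slightly from the published ones. Python's round() sends exact .5 ties
    to the nearest even integer.
    """
    tp = round(sens * n_altered)
    tn = round(spec * n_intact)
    return {"tp": tp, "fn": n_altered - tp, "tn": tn, "fp": n_intact - tn}


def top_left_point(roc_points):
    """Return (sensitivity, specificity) at the ROC point nearest (FPR=0, TPR=1).

    roc_points: iterable of (fpr, tpr) pairs digitized from a published curve.
    """
    fpr, tpr = min(roc_points, key=lambda p: math.hypot(p[0], 1 - p[1]))
    return tpr, 1 - fpr


print(counts_from_metrics(0.74, 0.75, n_altered=50, n_intact=40))
# {'tp': 37, 'fn': 13, 'tn': 30, 'fp': 10}
print(top_left_point([(0.0, 0.0), (0.1, 0.6), (0.2, 0.8), (0.5, 0.9), (1.0, 1.0)]))
```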

2.3. Quality Assessment

The risk of bias and applicability concerns were assessed using a modified QUADAS-2 tool [18], adapted for DL-based radiomics studies by incorporating elements from the Checklist for Artificial Intelligence in Medical Imaging (CLAIM) and the radiomics quality score (RQS) (Supplementary Section S3). QUADAS-2 evaluates four domains: patient selection, index test, reference standard, and flow and timing. Key considerations included standardized imaging protocols, appropriate data selection, handling of missing data, reliable reference standards, and minimization of genotype class imbalance. The index test assessment emphasized segmentation consistency and model robustness. To address applicability concerns related to clinical generalizability, we assessed whether models were validated on unseen datasets. For each article, methodology, quality, translatability, strengths, and limitations were further evaluated using the RQS, a 16-component score ranging from −8 to 36 [19] (Supplementary Section S4). Two reviewers (S.F. and S.M.) independently conducted the QUADAS-2 and RQS assessments, contacting the authors for clarification when data were insufficient. RQS discrepancies of ≤2 points were averaged, while larger discrepancies (>2 points) were resolved through discussion.
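The reconciliation rule for the two reviewers' RQS totals can be written as a small Python function. This is a sketch of the stated rule only; the function name is hypothetical, and returning None simply marks articles whose scores were settled by discussion.

```python
from typing import Optional

RQS_MIN, RQS_MAX = -8, 36  # possible range of the total RQS


def reconcile_rqs(score_a: float, score_b: float) -> Optional[float]:
    """Combine two reviewers' RQS totals for one article.

    Discrepancies of 2 points or less are averaged; larger gaps return None,
    flagging the article for consensus discussion.
    """
    for s in (score_a, score_b):
        if not RQS_MIN <= s <= RQS_MAX:
            raise ValueError(f"RQS total {s} outside [{RQS_MIN}, {RQS_MAX}]")
    if abs(score_a - score_b) <= 2:
        return (score_a + score_b) / 2
    return None


print(reconcile_rqs(15, 17))  # 16.0
print(reconcile_rqs(14, 18))  # None
```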

2.4. Statistical Analysis

The meta-analysis assessed the ability of DL models to detect molecular markers, with statistical significance set at p < 0.05. When at least five studies were available, we calculated pooled sensitivity and specificity and derived the summary receiver operating characteristic (SROC) curve using a bivariate random-effects model. Heterogeneity was assessed using Cochran’s Q test, the I² statistic, prediction intervals, and the Spearman correlation coefficient (SCC) between sensitivity and the false positive rate (FPR), with SCC > 0.6 indicating a threshold effect [20,21]. Subgroup analysis was conducted only for MGMT methylation because too few studies were available for the other biomarkers. When sufficient studies were available, it explored sources of heterogeneity according to glioma grade, data source (single- or multi-center), inclusion of clinical information, data augmentation, use of pretrained models, image segmentation, feature extraction method, level of DL integration in the radiomics pipeline, MRI sequences, and validation method [22].
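The heterogeneity statistics listed above can be illustrated with a simplified, univariate Python sketch: each study's sensitivity is logit-transformed, pooled with the DerSimonian-Laird random-effects estimator, and summarized by Cochran's Q, I², and an approximate 95% prediction interval; a Spearman correlation between sensitivity and FPR is then checked against the 0.6 threshold-effect criterion. This is not the bivariate model used in this review, which pools sensitivity and specificity jointly; the 0.5 continuity correction, the normal (rather than t) quantile in the prediction interval, and all function names are assumptions made for brevity.

```python
import math
from statistics import NormalDist


def logit(p):
    return math.log(p / (1 - p))


def inv_logit(x):
    return 1 / (1 + math.exp(-x))


def pool_logit_dl(events, totals):
    """Univariate DerSimonian-Laird pooling of proportions on the logit scale.

    events/totals: e.g., true positives and marker-altered patients per study.
    A 0.5 continuity correction is applied to every study (an assumption).
    """
    y, v = [], []
    for e, n in zip(events, totals):
        e_c, n_c = e + 0.5, n + 1.0
        y.append(logit(e_c / n_c))
        v.append(1 / e_c + 1 / (n_c - e_c))  # within-study variance of the logit
    k = len(y)
    w = [1 / vi for vi in v]
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    i2 = max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0  # between-study variance
    w_re = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.975)
    half_pi = z * math.sqrt(tau2 + se ** 2)  # approximate 95% prediction interval
    return {"pooled": inv_logit(mu),
            "ci": (inv_logit(mu - z * se), inv_logit(mu + z * se)),
            "pi": (inv_logit(mu - half_pi), inv_logit(mu + half_pi)),
            "Q": q, "I2": 100 * i2, "tau2": tau2}


def average_ranks(xs):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def threshold_effect(sens, fpr, cutoff=0.6):
    """Flag a threshold effect when Spearman(sensitivity, FPR) exceeds the cutoff."""
    return spearman(sens, fpr) > cutoff
```

Working on the logit scale keeps pooled proportions and interval limits inside (0, 1) after back-transformation.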

We conducted a leave-one-out meta-analysis to assess each study’s impact on the overall effect size. Publication bias was evaluated using funnel plots and Egger’s test. If potential bias was detected, the trim-and-fill method of Duval and Tweedie was applied to adjust the pooled sensitivity and specificity estimates. We calculated statistical power across a range of effect sizes to assess the robustness of our findings [23]. Analyses were conducted using the R packages “mada”, “dmetar”, “metameta”, and “metafor” in R Stats v4.4.1, along with the MetaBayesDTA web application (v1.5.2) [24].
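As a rough illustration of the leave-one-out and Egger procedures, the Python sketch below re-pools generic study effects (for example, logit sensitivities with their variances) with one study omitted at a time, and fits Egger's regression of the standardized effect on precision. It is a simplified, univariate sketch with hypothetical function names; it returns the Egger intercept and its t statistic rather than a p-value (which would require a t distribution with k − 2 degrees of freedom), and it does not implement trim-and-fill.

```python
import math


def dl_pool(y, v):
    """DerSimonian-Laird random-effects mean of effects y with variances v."""
    w = [1 / vi for vi in v]
    y_fe = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - y_fe) ** 2 for wi, yi in zip(w, y))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(y) - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (vi + tau2) for vi in v]
    return sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)


def leave_one_out(y, v):
    """Pooled estimate recomputed with each study omitted in turn."""
    return [dl_pool(y[:i] + y[i + 1:], v[:i] + v[i + 1:]) for i in range(len(y))]


def egger_test(y, v):
    """Egger's regression of y/se on 1/se; returns (intercept, t statistic).

    An intercept far from zero suggests funnel-plot asymmetry; the t statistic
    has k - 2 degrees of freedom.
    """
    se = [math.sqrt(vi) for vi in v]
    xs = [1 / s for s in se]                  # precision
    zs = [yi / s for yi, s in zip(y, se)]     # standardized effect
    k = len(xs)
    mx, mz = sum(xs) / k, sum(zs) / k
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (z - mz) for x, z in zip(xs, zs)) / sxx
    intercept = mz - slope * mx
    rss = sum((z - intercept - slope * x) ** 2 for x, z in zip(xs, zs))
    se_int = math.sqrt(rss / (k - 2) * (1 / k + mx ** 2 / sxx))
    return intercept, intercept / se_int
```

A study whose omission shifts the pooled estimate markedly is a candidate influential study, which is the logic behind the sensitivity analysis reported in the Results.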

3. Results

3.1. Study Characteristics

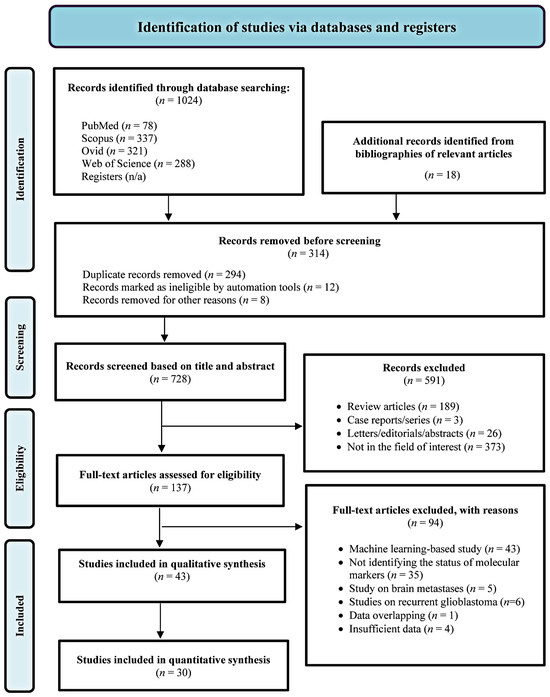

Seven hundred and twenty-two unique articles were initially identified through primary searches and relevant study bibliographies. Following screening and full-text reviews, 43 studies were eligible for qualitative analysis, of which 30 were included in the meta-analysis (Figure 1). One non-English study was included in our review [25].

Figure 1.

Flow diagram of the study selection process.

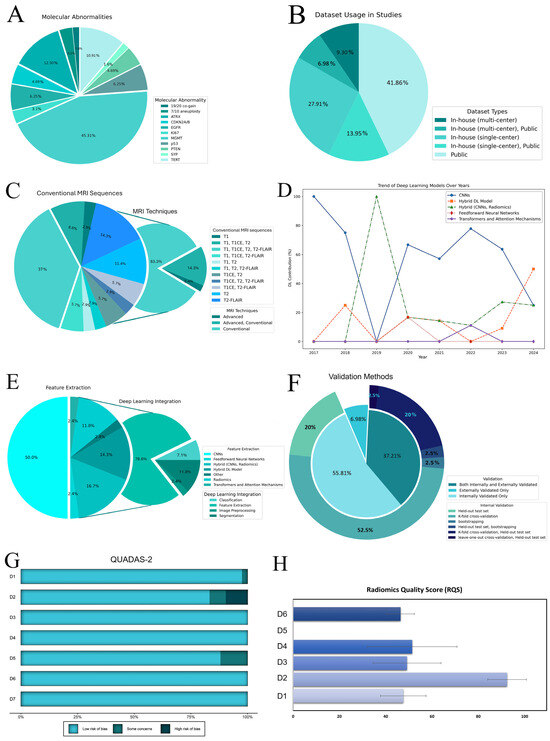

Table 1 summarizes the main study characteristics. China and the USA dominate the field, accounting for about 63% of the published experiments. Sample sizes varied widely, ranging from 42 to 985 patients. Seven studies assessed multiple molecular markers [26,27,28,29,30,31,32]. Across all marker-level analyses, 45.31% targeted MGMT methylation, followed by ATRX (12.50%) and TERT mutations (10.94%); the remaining markers together accounted for about 31% (Figure 2A). Over half of the studies focused on high-grade gliomas (HGG), 80% of which addressed MGMT prediction. A further 9.30% studied low-grade gliomas (LGG) of grades 2 and 3, and approximately one-third covered a broader spectrum including both LGG and HGG, again primarily focusing on MGMT classification.

Table 1.

Characteristics of the included studies. All studies had a retrospective design. Abbreviations: Total no. pts, total number of patients; MGMT, O6-methylguanine-DNA methyltransferase; ATRX, alpha thalassemia/mental retardation syndrome X-linked; TERT, telomerase reverse transcriptase; 19/20 co-gain, co-gain of chromosomes 19 and 20; CDKN2A/B, CDKN2A/B homozygous deletion; SYP, Synaptophysin gene expression; EGFR, epidermal growth factor receptor; PTEN, phosphatase and tensin homolog; DL, deep learning; CNNs, convolutional neural networks; T1, T1-weighted imaging; T2, T2-weighted imaging; T1CE, gadolinium contrast-enhanced T1-weighted imaging; T2-FLAIR, T2-weighted fluid-attenuated inversion recovery imaging; DSC, dynamic susceptibility contrast MR perfusion; SWI, susceptibility-weighted imaging; DWI, diffusion-weighted imaging; ASL, arterial spin labeling; 2D 55-direction HARDI, 2D 55-direction high angular resolution diffusion imaging; RQS, radiomics quality score; AUC, area under the curve.

Figure 2.

(A) Distribution of molecular abnormalities among studies. (B) Dataset usage in studies. (C) MRI sequence usage in studies. (D) Trend of deep learning models over the years. (E) DL integration in the included studies, showing the DL models used for feature extraction, with CNNs being the most common. Integration mainly involves classification and feature extraction, with less focus on image preprocessing and segmentation. (F) Validation methods used in the studies. The main pie chart shows the overall validation approaches, while the outer ring details the specific internal validation techniques. (G) QUADAS-2 results, showing the risk of bias across domains D1–D7: D1–D4 cover risk of bias (D1, patient selection; D2, index test; D3, reference standard; D4, flow and timing), and D5–D7 cover applicability concerns (D5, patient selection; D6, index test; D7, reference standard). (H) Average RQS percentage scores, with standard errors, across six domains for the included articles: D1 (protocol quality), D2 (feature selection and validation), D3 (biologic/clinical validation and utility), D4 (model performance index), D5 (level of evidence), and D6 (open science and data). Abbreviations: DL, deep learning; CNNs, convolutional neural networks; D1–D7, Domain 1 to Domain 7; QUADAS-2, quality assessment of diagnostic accuracy studies-2; RQS, radiomics quality score; MGMT, O6-methylguanine-DNA methyltransferase; ATRX, alpha thalassemia/mental retardation syndrome X-linked; TERT, telomerase reverse transcriptase; 19/20 co-gain, co-gain of chromosomes 19 and 20; CDKN2A/B, CDKN2A/B homozygous deletion; SYP, Synaptophysin gene expression; EGFR, epidermal growth factor receptor; PTEN, phosphatase and tensin homolog.

Private (single- and multi-center) and public datasets, mainly from The Cancer Imaging Archive (TCIA) [67,68], were employed, with over 20% of studies using a hybrid of both (Figure 2B). Conventional data augmentation was applied in 48.84% of the studies to address overfitting and genotype class imbalance. Pretrained models, primarily trained on ImageNet, were used in 53.49% of the studies, and 20 models incorporated non-radiomics data such as age and sex. The widespread use of public datasets influenced the choice of MRI sequences (Figure 2C). Conventional sequences predominated, with only one study relying exclusively on an advanced technique, dynamic susceptibility contrast perfusion imaging [39]. Contrast-enhanced T1-weighted imaging was the most frequently used modality, appearing in 71.43% of the studies alongside other MR sequences (Supplementary Section S11).

Our review highlights a rise in the use of DL for radiomics since 2017 [33]. In the early stages, convolutional neural networks (CNNs) such as AlexNet, DenseNet, and EfficientNet dominated the field—accounting for 100% of the methods in 2017 and 75% in 2018. By 2020, although CNNs remained the most common approach at 66%, hybrid models began to emerge as alternatives [42].

Many studies used CNNs to classify molecular markers directly from MRI [35,36,51,52,58]. These models typically consist of multiple convolutional layers for feature extraction, followed by fully connected layers for prediction. For example, Korfiatis et al. [33] compared three residual network architectures (ResNet50, ResNet34, and ResNet18) for predicting MGMT methylation status without requiring explicit tumor segmentation. In another approach, Han and Kamdar [36] employed a bidirectional convolutional recurrent neural network (CRNN) that first extracts slice-level features through convolutional, pooling, and fully connected layers and then uses a bidirectional gated recurrent unit (GRU) to capture inter-slice spatial and sequential information in 3D MRI scans.
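The convolution, pooling, and fully connected stages described above can be illustrated with a toy, dependency-free Python forward pass for a single 2D slice. This is a sketch only: it uses one untrained, hypothetical 2 × 2 filter and hand-set output weights, whereas the cited architectures (e.g., ResNets) stack many multi-channel layers whose weights are learned by backpropagation in frameworks such as PyTorch or Keras.

```python
import math


def conv2d(img, kernel):
    """Valid 2D cross-correlation (what DL frameworks call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(img[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(len(img[0]) - kw + 1)]
            for i in range(len(img) - kh + 1)]


def relu(fmap):
    return [[max(0.0, x) for x in row] for row in fmap]


def max_pool2x2(fmap):
    """2 x 2 max pooling with stride 2; an odd trailing row/column is dropped."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]


def predict(slice_2d, kernel, weights, bias):
    """Conv -> ReLU -> pool -> flatten -> dense -> sigmoid, giving P(altered)."""
    fmap = max_pool2x2(relu(conv2d(slice_2d, kernel)))
    flat = [x for row in fmap for x in row]
    z = sum(f * w for f, w in zip(flat, weights)) + bias
    return 1 / (1 + math.exp(-z))


# Hypothetical 5 x 5 slice, edge-like filter, and output weights
slice_2d = [[(r * c) % 7 for c in range(5)] for r in range(5)]
kernel = [[1, -1], [1, -1]]
print(predict(slice_2d, kernel, weights=[0.1, -0.2, 0.3, 0.05], bias=0.0))
```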

In parallel, a number of studies integrated DL-based tumor segmentation into their pipelines, either as preprocessing within the conventional radiomics framework or as part of a fully DL-based approach. Segmentation-focused architectures, such as U-Net variants, are commonly used to delineate tumor regions so that subsequent classification can focus on tumor-specific pixels. Some studies extract radiomics features from these segmented subregions [27,29,34,38,45], while others derive deep features directly from the segmented areas [30,38,45]. Several works have explored hybrid models that combine radiomics and deep features [30,38]. For instance, Calabrese et al. [30] used two parallel processing limbs: a CNN limb employing a 3D multiscale convolutional autoencoder with residual bottleneck blocks and max-pooling layers, and a radiomics limb based on a random forest classifier trained on a curated set of handcrafted features. Averaging the outputs of the two limbs improved prediction performance over models relying on either feature type alone.
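Two ideas from this paragraph, restricting downstream processing to the segmented tumor and averaging the outputs of parallel limbs, can be sketched in a few lines of Python. The function names, the zero-fill masking, and the optional weighting parameter are illustrative assumptions; with the default weight of 0.5, the fusion reduces to the plain averaging described for the hybrid model.

```python
def apply_mask(image, mask):
    """Zero out non-tumor pixels so downstream features see only the ROI."""
    return [[v if m else 0 for v, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def fuse_late(p_cnn: float, p_radiomics: float, w_cnn: float = 0.5) -> float:
    """Late fusion of two limbs' predicted probabilities of marker alteration.

    w_cnn = 0.5 gives a plain average; other weights are a hypothetical extension.
    """
    if not 0.0 <= w_cnn <= 1.0:
        raise ValueError("w_cnn must lie in [0, 1]")
    return w_cnn * p_cnn + (1 - w_cnn) * p_radiomics


masked = apply_mask([[5, 7], [9, 3]], [[1, 0], [0, 1]])
print(masked)               # [[5, 0], [0, 3]]
print(fuse_late(0.9, 0.5))  # average of the CNN and radiomics limb outputs
```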

In 2022, transformers and attention mechanisms introduced a new paradigm in the field (Figure 2D). Xu et al. [31] developed a multitask Vision Transformer (ViT) framework to predict multiple molecular markers (IDH, MGMT, Ki-67, and p53) from MR images. Their approach reshapes the input image into a sequence of flattened 2D patches, which are projected into a latent embedding space. Positional and classification embeddings are then added to retain spatial and task-specific information before the sequence is processed by multiple transformer blocks, each composed of multi-head self-attention and multilayer perceptron layers. Finally, task-specific fully connected layers output a prediction for each molecular marker. Unlike CNN-based models, whose convolutions aggregate local neighborhoods, the ViT architecture uses global self-attention to capture long-range dependencies and salient features across the entire image, potentially enhancing classification performance [31,58].
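The patch-embedding and self-attention steps can be illustrated with a small, dependency-free Python sketch. It follows the sequence described above (flattened patches, linear projection, a prepended classification token, added positional embeddings, then scaled dot-product attention), but uses a single attention head, random untrained weights, and no multilayer perceptron or task-specific heads; all names and sizes are hypothetical.

```python
import math
import random


def to_patches(image, p):
    """Split an H x W image into non-overlapping p x p patches, each flattened."""
    return [[image[i + a][j + b] for a in range(p) for b in range(p)]
            for i in range(0, len(image) - p + 1, p)
            for j in range(0, len(image[0]) - p + 1, p)]


def matvec(m, x):
    """Multiply matrix m (list of rows) by vector x."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def softmax(xs):
    top = max(xs)  # subtract the max for numerical stability
    e = [math.exp(x - top) for x in xs]
    total = sum(e)
    return [v / total for v in e]


def embed(image, p, w_proj, cls_token, pos_emb):
    """Project flattened patches, prepend a class token, add positional embeddings."""
    tokens = [cls_token] + [matvec(w_proj, patch) for patch in to_patches(image, p)]
    return [[t + e for t, e in zip(tok, pe)] for tok, pe in zip(tokens, pos_emb)]


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product attention over all tokens (global context)."""
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    d = len(q[0])
    attn = [softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k])
            for qi in q]
    out = [[sum(w * vj[c] for w, vj in zip(row, v)) for c in range(d)] for row in attn]
    return out, attn


rng = random.Random(0)


def rand_matrix(rows, cols):
    """Hypothetical untrained weights drawn from N(0, 0.1^2)."""
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]


image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # toy 4 x 4 slice
d_model, p = 3, 2                                                # four 2 x 2 patches
# Zero class token here; in a real ViT it is a learned parameter
tokens = embed(image, p, rand_matrix(d_model, p * p), [0.0] * d_model,
               rand_matrix(5, d_model))                          # 1 class + 4 patch tokens
out, attn = self_attention(tokens, rand_matrix(d_model, d_model),
                           rand_matrix(d_model, d_model), rand_matrix(d_model, d_model))
```

Each row of the attention matrix spans every token, which is the global receptive field that distinguishes self-attention from a local convolution.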

Overall, most DL methods have been applied primarily for feature extraction in radiomic workflows, with fewer studies focusing on image processing [52], tumor segmentation [27,28,29,34,54], and classification [39,48,49] (Figure 2E). Notably, CNNs have also been the primary method for tumor segmentation, used in 32.56% of the studies, while manual and semi-automatic methods were employed in 27.91% and 13.95% of the studies, respectively. Regarding software, Python was utilized in 41 out of 43 studies, with Matlab used in only 2. Among the DL frameworks, PyTorch was most popular at 30.00%, followed by Keras at 21.00%, and TensorFlow at 12.00%; however, 10 studies did not specify the framework or share code (Table 1).

In model development and evaluation, 46.51% of the studies conducted external validation, while 23 studies did not. Additionally, 54.76% relied solely on internal validation, and 16 studies used both internal and external validation (Figure 2F). Exclusive external validation was rare, observed in only three studies [34,50,53].
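The internal validation schemes counted here differ in how patients are split. The Python sketch below contrasts a stratified k-fold split, in which every patient is tested exactly once while the altered/intact proportions are kept similar across folds, with a single held-out split. Function names, the seed, and the 20% test fraction are assumptions; external validation, by contrast, evaluates a model on a separately acquired cohort and cannot be reproduced by resampling.

```python
import random


def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs that preserve class proportions per fold.

    labels: per-patient 0/1 marker status (e.g., 1 = MGMT methylated).
    """
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for n, i in enumerate(idx):
            folds[n % k].append(i)  # deal each class round-robin across folds
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, test


def holdout_split(n, test_fraction=0.2, seed=0):
    """A single shuffled train/test split; the test set is used only once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = round(n * (1 - test_fraction))
    return sorted(idx[:cut]), sorted(idx[cut:])


labels = [0] * 10 + [1] * 5
for train, test in stratified_kfold(labels, k=5):
    print(len(train), len(test), sum(labels[i] for i in test))  # 12 3 1 each fold
```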

3.2. Quality Assessment

According to QUADAS-2, four studies had a high risk of bias, primarily due to limited segmentation methods [26,38,39] or the absence of resampling techniques [34] (Figure 2G; Supplementary Section S3). The median RQS was 16, ranging from 11 (25.00%) to 22 (50.00%) out of 36. In Domain 1 (average score: 2.4 ± 0.49), studies described imaging protocols, but none included multiple time points or phantom studies; however, 17 studies performed multiple segmentations. Domain 2 achieved the highest score, with 72% of the studies validating findings on unseen datasets. In Domain 3 (average score: 2.93 ± 0.88), approximately 40% of the studies used multivariable analysis with non-radiomics features and 48.84% discussed biological correlates, but only one study used decision curve analysis to assess clinical utility [64]. In Domain 4, around half of the studies conducted cut-off analyses, and five reported calibration statistics [26,32,59,62,64]. All studies were retrospective, lacking prospective validation or cost-effectiveness analysis. Although 65.12% of the experiments used open-source data, only 13 studies made their code available (Figure 2H; Supplementary Section S4).

3.3. Publication Bias and Statistical Power

Funnel plot asymmetry and Egger’s test suggested potential publication bias for the MGMT studies in the validation cohorts (p < 0.01) but not in the training sets or for the ATRX and TERT studies (p > 0.05). However, apart from MGMT in the validation datasets, the number of studies in each group was too small (<10) to test reliably for small-study effects. Given the potential publication bias for MGMT prediction, we applied the trim-and-fill method of Duval and Tweedie to further explore and adjust for bias in the pooled sensitivity and specificity estimates (Supplementary Section S6).

The power analysis showed that the power to detect changes in sensitivity and specificity varied across the included studies, with most studies having high power for clinically meaningful effect sizes (Supplementary Section S9).
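For intuition about per-study power, the following Python sketch uses a normal approximation to compute the power of a two-sided one-sample test for detecting that a study's true sensitivity (or specificity) differs from a reference value. It is a deliberately simple stand-in, not the procedure implemented in the metameta package used in this review; the function name and example values are hypothetical.

```python
from statistics import NormalDist


def power_one_proportion(p0, p1, n, alpha=0.05):
    """Approximate power of a two-sided one-sample z-test for a proportion.

    p0: reference sensitivity (or specificity) under the null hypothesis.
    p1: true value to be detected.
    n: patients contributing to the metric (altered cases for sensitivity,
       intact cases for specificity).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    se0 = (p0 * (1 - p0) / n) ** 0.5  # SE of the estimate under the null
    se1 = (p1 * (1 - p1) / n) ** 0.5  # SE under the alternative
    upper = std_normal.cdf((p1 - p0 - z_crit * se0) / se1)  # reject above p0
    lower = std_normal.cdf((p0 - p1 - z_crit * se0) / se1)  # reject below p0
    return upper + lower


for n in (30, 100):
    print(n, round(power_one_proportion(0.70, 0.85, n), 2))
```

Because the standard error shrinks with the square root of n, studies with small validation cohorts have markedly lower power for the same difference in sensitivity.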

3.4. Sensitivity Analysis

The leave-one-out sensitivity analysis showed that the pooled estimates for MGMT methylation in the validation cohorts were strongly affected by individual studies. After removing five influential studies [27,29,43,48,59], the pooled sensitivity stabilized at 0.71 [95% CI: 0.66–0.76], with a prediction interval of 0.54–0.84 (I² = 54.3%, p < 0.01). After excluding eight outliers [13,27,29,43,48,49,51,59], the pooled specificity was 0.75 [95% CI: 0.68–0.81], with a prediction interval of 0.49–0.90 (I² = 63.7%, p < 0.01). No outliers were detected for the other molecular abnormalities (Supplementary Section S5).

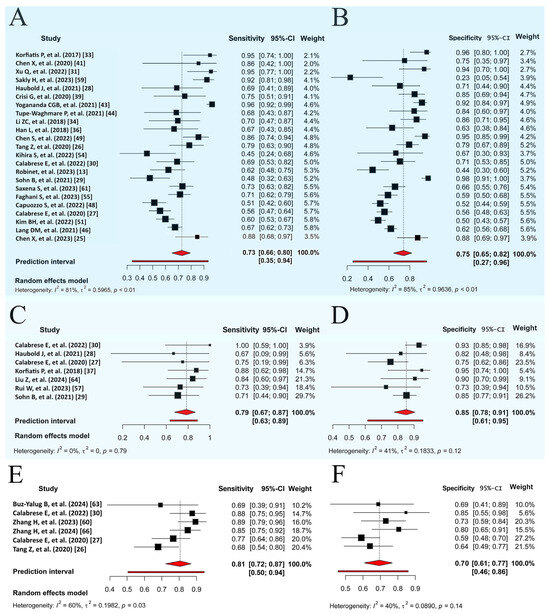

3.5. Prediction of Molecular Marker Status

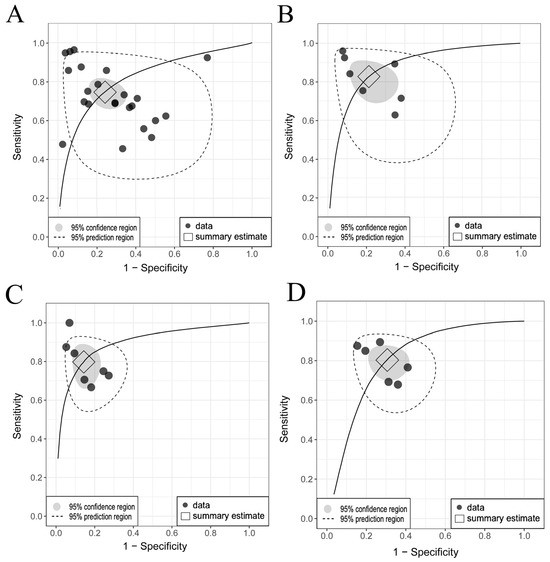

For MGMT methylation prediction, the SCC values between sensitivity and FPR were −0.67 (95% CI: −0.95 to 0.16) in the training cohorts and −0.31 (95% CI: −0.64 to 0.11) in the validation cohorts, below the 0.6 threshold-effect criterion (Figure 3A,B, Figures S7 and S8). The validation cohort showed significant heterogeneity (p < 0.01, I² = 80.90–84.50%), with a pooled sensitivity and specificity of 0.74 and 0.75, respectively. The prediction intervals ranged from 0.35 to 0.94 for sensitivity and from 0.27 to 0.96 for specificity, implying that the true sensitivity and specificity in a comparable new study setting would be expected to fall within these ranges with 95% probability. To address potential publication bias in the MGMT validation cohort, the trim-and-fill method was applied, yielding adjusted pooled sensitivity and specificity of 0.89 (95% CI: 0.78–1.00, I² = 94.3%, p = 0.0001) and 0.91 (95% CI: 0.79–1.00, I² = 95.9%, p = 0.03), respectively, with 11 imputed studies needed to achieve funnel plot symmetry (Supplementary Section S6). Training-cohort performance for MGMT prediction also showed significant heterogeneity in sensitivity and specificity (Table 2), reflected in the SROC curves (Figure 4A,B) by prediction regions considerably wider than the confidence regions.

Figure 3.

Forest plots of the validation cohorts for molecular marker prediction. (A) Sensitivity for MGMT methylation prediction. (B) Specificity for MGMT methylation prediction. (C) Sensitivity for ATRX mutation prediction. (D) Specificity for ATRX mutation prediction. (E) Sensitivity for TERT mutation prediction. (F) Specificity for TERT mutation prediction. Each plot shows per-study sensitivity or specificity with 95% CIs and study weights. Pooled estimates and prediction intervals under a random-effects model are shown at the bottom. Numbers represent estimates with 95% CIs, which are depicted by the horizontal lines. Abbreviations: MGMT, O6-methylguanine-DNA methyltransferase; ATRX, alpha thalassemia/mental retardation syndrome X-linked; TERT, telomerase reverse transcriptase; CI, confidence interval.

Table 2.

Sensitivity and specificity of deep learning models in predicting glioma molecular markers. Each entry gives the number of studies, sensitivity and specificity with 95% CIs, the PI with its 95% CI, and the p-value for both the training (if available) and validation datasets. Abbreviations: No. of Studies, number of studies; MGMT, O6-methylguanine-DNA methyltransferase; ATRX, alpha thalassemia/mental retardation syndrome X-linked; TERT, telomerase reverse transcriptase; CDKN2A/B, CDKN2A/B homozygous deletion; SYP, Synaptophysin gene expression; EGFR, epidermal growth factor receptor; PTEN, phosphatase and tensin homolog; CI, confidence interval; AUC, area under the curve; PI, prediction interval. * Sensitivity and specificity ranges are reported for molecular markers with an inadequate number of studies (<5).

Figure 4.

Comparison of SROC curves for genetic abnormalities in training and validation cohorts. (A) MGMT validation: pooled sensitivity 0.74 [95% CI, 0.66–0.80], specificity 0.75 [95% CI, 0.65–0.82]. (B) MGMT training: pooled sensitivity 0.83 [95% CI, 0.72–0.90], specificity 0.79 [95% CI, 0.68–0.88]. (C) ATRX validation: pooled sensitivity 0.79 [95% CI, 0.67–0.87], specificity 0.85 [95% CI, 0.78–0.91]. (D) TERT validation: pooled sensitivity 0.81 [95% CI, 0.72–0.87], specificity 0.70 [95% CI, 0.61–0.77]. Considerable differences between the 95% confidence and prediction regions, particularly for MGMT methylation, highlight significant between-study heterogeneity. Abbreviations: SROC, summary receiver operating characteristic; MGMT, O6-methylguanine-DNA methyltransferase; ATRX, alpha thalassemia/mental retardation syndrome X-linked; TERT, telomerase reverse transcriptase; CI, confidence interval.

In the validation sets (Figure 3C–F), ATRX and TERT prediction showed no significant threshold effect, with SCCs between sensitivity and FPR of −0.78 (95% CI: −0.96 to −0.06) and −0.70 (95% CI: −0.96 to 0.25), respectively. The pooled sensitivities were 0.79 and 0.81, and the pooled specificities were 0.85 and 0.70 for ATRX and TERT prediction, respectively. Significant between-study heterogeneity was observed only for sensitivity across the TERT studies (PI = 0.51–0.94, I² = 60.20%, p = 0.03), as shown in the SROC curves (Figure 4C,D) and detailed in Table 2.

Owing to the limited number of included studies, a meta-analysis was not feasible for the other molecular markers; their sensitivity and specificity ranges are reported in Table 2. EGFR mutation and p53 protein expression were each assessed in three studies, and PTEN loss, CDKN2A/B deletion, and chromosome 7/10 aneuploidy in two studies each, while Ki-67 and SYP expression were each assessed in only one study. Across these markers, sensitivity ranged from 0.57 to 0.98 and specificity from 0.59 to 0.95.

3.6. Meta-Regression and Subgroup Analysis

Given the heterogeneity of the MGMT studies and the sufficient validation cohort data, we investigated the impact of various covariates (Table 3). Studies that included both HGG and LGG showed higher sensitivity than those focusing solely on HGG, but the difference was not statistically significant. Models based on single-center datasets had higher sensitivity and specificity than those combining public datasets. CNN-derived features yielded higher sensitivity than conventional radiomics features, whereas no significant difference was found between DL-based and manual segmentation. Using DL for feature extraction achieved significantly higher sensitivity than using DL solely for segmentation. Pretrained DL models were associated with lower specificity without a change in sensitivity, whereas incorporating non-radiomics data and applying data augmentation had no significant effect. Furthermore, models evaluated with k-fold cross-validation had higher sensitivity than those evaluated on a held-out test set (Supplementary Section S10).
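These subgroup comparisons rest on meta-regression with study-level covariates. As a simplified illustration, the Python sketch below fits a univariate random-effects meta-regression of logit sensitivity on a single binary covariate (for example, 1 = CNN-derived features, 0 = conventional radiomics) by weighted least squares, taking the residual between-study variance tau² as already estimated. The bivariate meta-regression used in this review models sensitivity and specificity jointly; the function name and inputs here are hypothetical.

```python
from statistics import NormalDist


def meta_regression_binary(y, v, x, tau2):
    """Fit y_i = b0 + b1 * x_i by weighted least squares with weights 1/(v_i + tau2).

    y: per-study logit sensitivities; v: within-study variances;
    x: 0/1 covariate; tau2: residual between-study variance (given).
    Returns b1 (difference in logit sensitivity), its SE, and a z-test p-value.
    """
    w = [1 / (vi + tau2) for vi in v]
    sw = sum(w)
    swx = sum(wi * xi for wi, xi in zip(w, x))
    swxx = sum(wi * xi * xi for wi, xi in zip(w, x))
    swy = sum(wi * yi for wi, yi in zip(w, y))
    swxy = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
    det = sw * swxx - swx ** 2  # determinant of X'WX
    if det == 0:
        raise ValueError("covariate must take both values 0 and 1")
    b1 = (sw * swxy - swx * swy) / det
    se_b1 = (sw / det) ** 0.5
    p = 2 * (1 - NormalDist().cdf(abs(b1 / se_b1)))
    return b1, se_b1, p
```

A positive b1 means that studies with the covariate present have higher logit sensitivity on average, after weighting each study by its total (within- plus between-study) precision.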

Table 3.

Subgroup analysis of heterogeneity via meta-regression for predicting MGMT methylation in validation cohorts. The table shows sensitivity and specificity with 95% confidence intervals (CI) across various covariates and subgroups. Abbreviations: No. of Studies, number of studies; CI, confidence interval; DL, deep learning; CNNs, convolutional neural networks; HGG, high-grade glioma; LGG, low-grade glioma.

4. Discussion

Our systematic review provides a comprehensive and rigorous assessment of the current state of MRI-based DL for predicting genetic abnormalities in gliomas. Consistent with previous studies [10,16], the findings indicate that DL-based radiomics achieves moderate to high diagnostic accuracy for key molecular markers, highlighting its potential as a noninvasive tool for glioma characterization. However, the substantial variability in performance and study design underscores the need for standardized approaches to enhance reproducibility and clinical applicability.

Conducting a statistical power analysis for meta-analysis studies is crucial for assessing data reliability and generalizability [23]. Our statistical power analysis revealed that the majority of studies possessed sufficient power to detect clinically meaningful effect sizes, particularly in the prediction of MGMT methylation [40,46,48] and TERT mutation [60,66]. High-powered studies typically employed larger sample sizes and robust validation cohorts, thereby enhancing the reliability of their findings. In contrast, studies with smaller sample sizes or limited validation datasets exhibited reduced statistical power [28,54]. Overall, the studies were sufficiently powered to detect the estimated effect sizes across the molecular markers analyzed.

The availability of public datasets, most notably TCIA, has fueled the growth of DL-based radiomics for glioma, with nearly half of all studies using them. These large-scale datasets enable the training and validation of complex DL models, fostering innovation and facilitating comparisons across studies. However, the integration of public datasets with institutional data remains limited owing to discrepancies in imaging protocols and patient demographics. Our subgroup analysis showed that models trained and validated on single-center datasets outperformed those using multi-center datasets. This highlights the critical need for data harmonization to maximize the benefits of open data and to ensure consistency and reliability in radiomics analyses. Several harmonization techniques have been proposed [69]. Feature-level methods such as ComBat standardize radiomic feature distributions by correcting scanner-induced “batch effects”, thereby improving consistency and classification accuracy [70]. At the image level, DL approaches such as cycle-consistent GANs and style transfer can map images into a common domain, although careful validation is necessary to avoid introducing artifacts [69,71]. In addition, physics-based corrections, such as bias field inhomogeneity correction, noise filtering, and intensity normalization (e.g., z-score scaling or histogram matching), are fundamental steps for reducing variability at the source [72].
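Two of the harmonization ideas above can be sketched in plain Python: z-score intensity normalization, and a per-site location/scale alignment of a radiomic feature. The second function captures only the location/scale core of ComBat; it omits ComBat's empirical Bayes shrinkage and the preservation of biological covariates, so it is a simplified stand-in under stated assumptions, and the function names are hypothetical.

```python
from statistics import mean, pstdev


def zscore(values):
    """Intensity normalization to zero mean and unit variance."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s if s > 0 else 0.0 for v in values]


def location_scale_harmonize(feature, sites):
    """Align one radiomic feature across sites (batches).

    Each site's values are standardized within the site and mapped back onto
    the pooled mean and SD. Unlike full ComBat, there is no empirical Bayes
    shrinkage and no protection of biological covariates.
    """
    grand_m, grand_s = mean(feature), pstdev(feature)
    out = list(feature)
    for site in set(sites):
        idx = [i for i, s in enumerate(sites) if s == site]
        vals = [feature[i] for i in idx]
        m, s = mean(vals), pstdev(vals)
        for i in idx:
            out[i] = grand_m + grand_s * ((feature[i] - m) / s if s > 0 else 0.0)
    return out


feature = [1.0, 2.0, 3.0, 11.0, 12.0, 13.0]  # hypothetical: same biology, shifted by scanner
sites = ["A", "A", "A", "B", "B", "B"]
print(location_scale_harmonize(feature, sites))
```

If site membership were confounded with marker status, this simple alignment would also remove genuine biological signal, which is why ComBat allows covariates of interest to be retained.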

The shift from classical radiomics to DL-based approaches has transformed analysis workflows, and our meta-regression indicated that DL models outperform traditional radiomics. Interpreting this difference requires comparing the respective strengths and limitations of the two approaches. In traditional radiomics, machine learning (ML) models, such as support vector machines, random forests, and k-nearest neighbors, serve solely as classifiers that correlate predefined radiomic features extracted from the region of interest (ROI) with clinical endpoints [7]. ML methods generally require less training data and offer greater interpretability [73], making it easier to understand how input variables influence predictions. However, they are limited in capturing complex patterns [73,74,75]. Conventional radiomics also suffers from unstable radiomic feature computation, owing to the lack of standardization and the high variability in image acquisition protocols, ROI definition, and image processing techniques. These issues are particularly pronounced in MRI, where image intensities depend on factors such as scanner manufacturer, magnetic field strength, sequence protocol, and image reconstruction [6]. Although initiatives such as the Image Biomarker Standardization Initiative (IBSI) [76] have sought to reduce this variability, variability in image quality remains a challenge.
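As an illustration of the predefined, handcrafted features used in traditional radiomics, the following sketch computes a few first-order intensity features from the voxels inside an ROI. It loosely follows IBSI-style definitions. The fixed number of histogram bins, the use of excess kurtosis, and the function name are assumptions, and actual studies should use IBSI-compliant software. In a conventional pipeline, feature vectors like these would then be passed to a classifier such as a support vector machine or random forest.

```python
import numpy as np


def first_order_features(image, roi_mask, n_bins=32):
    """A few IBSI-style first-order features from voxels inside `roi_mask`.

    Entropy uses fixed-bin-number discretization (n_bins is an illustrative choice).
    Assumes the ROI is not constant (otherwise SD is zero).
    """
    x = image[roi_mask].astype(float)
    mean = x.mean()
    sd = x.std()
    centered = x - mean
    skewness = (centered ** 3).mean() / sd ** 3
    excess_kurtosis = (centered ** 4).mean() / sd ** 4 - 3.0
    counts, _ = np.histogram(x, bins=n_bins)
    p = counts[counts > 0] / counts.sum()  # probabilities of non-empty bins
    entropy_bits = -(p * np.log2(p)).sum()
    return {
        "mean": mean,
        "sd": sd,
        "skewness": skewness,
        "excess_kurtosis": excess_kurtosis,
        "entropy_bits": entropy_bits,
    }


# Hypothetical T1-weighted patch with a cubic ROI in the center.
rng = np.random.default_rng(1)
patch = rng.normal(300.0, 40.0, size=(16, 16, 16))
roi = np.zeros(patch.shape, dtype=bool)
roi[4:12, 4:12, 4:12] = True
print(first_order_features(patch, roi))
```

Changing `n_bins` or the intensity scaling changes the entropy value. This small example illustrates the feature-stability problem that IBSI aims to address.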

In contrast, DL methods can operate end to end or be integrated into specific stages of the radiomics pipeline, including data augmentation, image preprocessing, ROI segmentation, feature extraction, and classification [6]. Deep features can encode intricate, non-linear representations that traditional ML struggles to capture. However, this advantage comes at a cost: DL models behave as “black boxes” whose internal decision-making is not transparent [74,75,77,78]. This limited interpretability can undermine clinician trust and accountability. In addition, because DL-based feature representations are implicit, new guidelines are needed to ensure robustness and clinical utility [79]. To address these issues, explainable artificial intelligence techniques, such as saliency mapping [80] and SHapley Additive exPlanations (SHAP) [81], can provide much-needed transparency and a better understanding of the decision-making process.
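SHAP methods build on Shapley values from cooperative game theory. The following sketch computes exact Shapley values for a toy model by enumerating all feature coalitions. It is a conceptual illustration, not the API of the SHAP library. It assumes a simple value function in which "absent" features are replaced by baseline values. Because the cost grows as 2^n in the number of features, practical SHAP implementations rely on approximations. The model, feature values, and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def exact_shapley(predict, x, baseline):
    """Exact Shapley values for input `x` by enumerating every coalition of features.

    Features outside a coalition are set to `baseline` (a simplified value function).
    Feasible only for a handful of features (2^n model evaluations per feature).
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition sizes 0..n-1 drawn from the other features
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical score over three standardized deep features, with one interaction term.
def toy_model(z):
    return 0.8 * z[0] - 0.5 * z[1] + 0.3 * z[0] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.5, 0.5, 0.5]
contributions = exact_shapley(toy_model, x, baseline)
print(contributions, sum(contributions), toy_model(x) - toy_model(baseline))
```

The contributions always sum to the difference between the prediction for `x` and the prediction for the baseline (the efficiency property). The interaction term is split between the two features that form it.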

Another challenge of DL models is their higher computational cost. Conventional radiomics models are computationally lightweight: training an ML classifier on a few hundred features from a few hundred patients can be completed in seconds to minutes on a standard CPU, without specialized hardware. DL techniques, however, require substantial processing power and memory for both training and inference (prediction), especially when dealing with large 3D medical images. For instance, training a 3D CNN such as a 3D U-Net on MRI volumes can take from several hours to days, depending on dataset size and hardware, and often requires GPUs with at least 16–24 GB of VRAM to meet the memory demands of training [82]. Inference speed is also critical for clinical workflows. While GPU inference typically takes seconds to a minute, CPU inference can be considerably slower, which can make it unsuitable for real-time applications. To mitigate these issues, strategies such as mixed-precision training [83], quantization [84], model pruning [85], more efficient architectures (e.g., MobileNet [66] or EfficientNet [61]), and pipeline optimization [86] have been adopted. Using these methods, researchers have deployed DL algorithms within the constraints of hospital infrastructure, achieving near-real-time inference while maintaining acceptable accuracy [86].
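Two of these compression ideas can be illustrated on a single weight tensor: symmetric per-tensor post-training quantization to 8-bit integers, and unstructured magnitude pruning. The following NumPy sketch rests on simplifying assumptions, namely one scale per tensor and a single global magnitude threshold. Deployed systems typically use framework-provided quantization and pruning tooling with calibration data and fine-tuning. The function names and the random weights are hypothetical.

```python
import numpy as np


def quantize_int8(w):
    """Symmetric per-tensor quantization: w is approximated by q * scale, with q in [-127, 127]."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale


def magnitude_prune(w, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude (unstructured)."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]  # k-th smallest magnitude
    return np.where(np.abs(w) > threshold, w, 0.0)


# Hypothetical convolution weights stored as float32.
rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.05, size=(64, 64)).astype(np.float32)
q, scale = quantize_int8(w)
max_err = np.max(np.abs(dequantize(q, scale) - w))
pruned = magnitude_prune(w, sparsity=0.5)
print(f"int8 bytes: {q.nbytes} vs float32 bytes: {w.nbytes}; max error: {max_err:.5f}")
print(f"zeroed weights after pruning: {(pruned == 0).mean():.0%}")
```

Int8 storage is four times smaller than float32, and the rounding error per weight is at most half the scale. Unstructured zeros reduce compute only on hardware or runtimes that exploit sparsity, which is one reason structured pruning and efficient architectures are often preferred.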

Our quality assessment highlights several areas for improvement. The median RQS of 16 reflects moderate methodological quality across studies. Although most studies detailed their imaging protocols, none incorporated multiple time points or phantom studies to assess inter-scanner variability, which is a critical factor for reproducibility. Additionally, fewer than half of the studies performed multiple segmentations; the remainder relied on a single expert despite the well-documented impact of inter- and intra-observer variability on extracted features [6,87]. While 72% of studies validated their models on unseen data, the absence of an independent test set in half of the studies raises concerns about generalizability. In the model performance domain, only five studies reported calibration statistics, which are vital for assessing agreement between predicted and observed outcomes. Notably, all studies were retrospective and lacked both prospective validation and cost-effectiveness evaluation, which are crucial for demonstrating real-world clinical utility. By adapting the QUADAS-2 tool to include questions on multiple segmentation, resampling techniques, and validation on unseen datasets, we identified a high risk of bias in some studies. This finding highlights challenges to the generalizability and clinical application of these models.
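Because few studies reported calibration, the following sketch shows simple calibration summaries for the predicted probabilities of a binary marker (e.g., MGMT methylated vs. unmethylated). It computes the Brier score, calibration-in-the-large (here simplified to the observed rate minus the mean predicted probability), and an expected calibration error over equal-width probability bins. These are illustrative choices. The calibration slope and intercept from logistic recalibration are common alternatives. The function name and example data are hypothetical.

```python
import numpy as np


def calibration_summary(y_true, y_prob, n_bins=10):
    """Brier score, calibration-in-the-large, and expected calibration error (ECE)."""
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(y_prob, dtype=float)
    brier = np.mean((p - y) ** 2)
    citl = y.mean() - p.mean()  # > 0: model underestimates risk on average
    # Equal-width bins over [0, 1]; a probability of exactly 1.0 goes into the last bin.
    bins = np.minimum((p * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        in_bin = bins == b
        if in_bin.any():
            ece += in_bin.mean() * abs(y[in_bin].mean() - p[in_bin].mean())
    return {"brier": brier, "calibration_in_the_large": citl, "ece": ece}


# Hypothetical predictions for eight patients in a validation cohort.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
probs = [0.82, 0.35, 0.67, 0.91, 0.12, 0.48, 0.55, 0.30]
print(calibration_summary(labels, probs))
```

Two models with identical sensitivity and specificity can differ markedly on these measures. For this reason, discrimination metrics alone do not show whether predicted probabilities can be trusted.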

These methodological limitations, consistent with previous reviews [12,88,89], indicate that while DL-based radiomics shows promising accuracy in predicting glioma molecular markers, several challenges must be addressed before its clinical potential can be fully realized. A major challenge is the need for extensive external validation to ensure the robustness and generalizability of models under real-world conditions. Performance often declines when models are tested in different settings because of dataset shift [90]. Our meta-regression supported this, showing significant variability across validation methods. External test cohorts should therefore always be included, and when performance drops, techniques such as domain adaptation can help improve generalizability. In addition, to ensure robustness and fairness across diverse patient populations, models should be stress-tested on different subgroups during development and validation. This is particularly important given that fewer than 4% of FDA-approved AI devices report race or ethnicity data [91]. Furthermore, prospective validation through real-time studies or clinical trials is crucial to show that DL models not only achieve high diagnostic accuracy but also improve patient outcomes compared with standard care. Unlike retrospective studies, prospective validation captures the full clinical workflow (data acquisition, model inference, and clinician decision-making) without hindsight bias, providing a more realistic assessment of the model [92].
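A subgroup stress test can start with something as simple as stratified sensitivity and specificity. The following sketch assumes binary labels and predictions (1 = marker present) and a hypothetical grouping variable, such as acquisition site, scanner vendor, or a demographic attribute. In practice, each subgroup estimate should carry a confidence interval, because small subgroups give unstable values. The function name and data are illustrative.

```python
from collections import defaultdict


def subgroup_sens_spec(y_true, y_pred, groups):
    """Sensitivity and specificity per subgroup from binary labels and predictions."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for y, y_hat, g in zip(y_true, y_pred, groups):
        c = counts[g]
        if y == 1:
            c["tp" if y_hat == 1 else "fn"] += 1
        else:
            c["tn" if y_hat == 0 else "fp"] += 1

    report = {}
    for g, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        report[g] = {
            "n": positives + negatives,
            "sensitivity": c["tp"] / positives if positives else float("nan"),
            "specificity": c["tn"] / negatives if negatives else float("nan"),
        }
    return report


# Hypothetical external test cohort drawn from two acquisition sites.
labels = [1, 1, 0, 0, 1, 0, 1, 0]
preds = [1, 0, 0, 1, 1, 0, 1, 0]
sites = ["site_A"] * 4 + ["site_B"] * 4
for site_name, metrics in subgroup_sens_spec(labels, preds, sites).items():
    print(site_name, metrics)
```

A large gap between subgroups can point to dataset shift or underrepresentation even when pooled accuracy looks acceptable.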

Given the limitations in data availability and generalizability, researchers are turning to foundation models. These large-scale models are pretrained on vast unlabeled datasets using self-supervised learning, which helps overcome the scarcity of expert annotations in medical applications while improving performance on downstream tasks [93]. In radiology, RadImageNet, a CNN pretrained on 1.35 million medical images across multiple modalities, provides robust, general-purpose features [94]. More specifically, BrainSegFounder, which is based on the Swin UNETR architecture and pretrained on over 42,000 brain MRIs, has demonstrated better segmentation accuracy than baseline models [95]. Additionally, a recently developed vision transformer (ViT)-based foundation model for brain tumor imaging biomarkers was trained on 57,000 MRI volumes and fine-tuned for tasks such as IDH mutation and 1p/19q codeletion classification [96]. Overall, these foundation models offer a promising starting point: they require fewer glioma-specific cases for fine-tuning and may outperform conventional DL models in accuracy, robustness, and generalizability.
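One common, data-efficient way to adapt a foundation model is linear probing. The pretrained encoder is frozen, and only a small classification head is trained on its embeddings. The following sketch assumes that embeddings have already been extracted by some pretrained encoder, which is not shown. The encoder, the 16-dimensional embedding size, and the synthetic data are all hypothetical. The sketch fits a logistic-regression head with full-batch gradient descent in NumPy. Fine-tuning the encoder itself is an alternative when more labeled glioma cases are available.

```python
import numpy as np


def train_linear_probe(emb, y, lr=0.1, epochs=500, l2=1e-3):
    """Fit a logistic-regression head on frozen embeddings by full-batch gradient descent."""
    X = np.asarray(emb, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted probability of the marker
        grad_w = X.T @ (p - y) / len(y) + l2 * w  # cross-entropy gradient + L2 penalty
        grad_b = np.mean(p - y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict_proba(emb, w, b):
    """Probability of the positive class (e.g., IDH-mutant) from frozen embeddings."""
    return 1.0 / (1.0 + np.exp(-(np.asarray(emb, dtype=float) @ w + b)))


# Hypothetical frozen embeddings for 100 marker-positive and 100 marker-negative cases.
rng = np.random.default_rng(0)
pos = rng.normal(1.0, 1.0, size=(100, 16))
neg = rng.normal(-1.0, 1.0, size=(100, 16))
X = np.vstack([pos, neg])
y = np.array([1] * 100 + [0] * 100)
w, b = train_linear_probe(X, y)
train_acc = np.mean((predict_proba(X, w, b) >= 0.5) == y)
print(f"training accuracy of the probe: {train_acc:.2f}")
```

Only 17 parameters are learned here, which is why linear probes can be trained on small labeled cohorts. Their performance, however, depends entirely on how informative the frozen embeddings are for the target marker.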

Moreover, regulatory challenges remain a significant barrier to deploying DL-based radiomics in healthcare. For instance, traditional approval pathways such as the FDA’s 510(k) clearance are designed for static devices and are ill-suited to AI algorithms that continue to evolve after deployment [97]. In Europe, the Medical Device Regulation (MDR) classifies many AI-based tools as high-risk, requiring conformity assessments that are difficult to apply to adaptive models [97]. Data privacy laws add further complexity, and the limited transparency about clinical benefit and patient safety for many ML and DL tools further complicates regulatory approval [98]. These challenges highlight the need for adaptive regulatory frameworks and robust post-market surveillance systems.

Finally, integrating DL into clinical workflows requires seamless compatibility with electronic health records, proper training for clinicians, and a strong IT infrastructure to support ongoing model updates and real-time data flow. To establish clinical effectiveness and cost-efficiency, pilot programs should be followed by multi-center prospective studies [99,100]. Implementing DL in healthcare involves costs for hardware, software, staffing, and maintenance, which hospitals need to weigh carefully against the potential benefits. However, most studies focus on technical performance, and few conduct formal cost-benefit analyses; our quality analysis found that only one study used decision curve analysis to measure clinical net benefit. Addressing these challenges will help move DL models from research into clinical practice, ultimately enabling personalized treatment and improving patient outcomes in oncology.
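Decision curve analysis summarizes clinical utility as the net benefit at a threshold probability p_t, defined as NB = TP/N − (FP/N) × p_t/(1 − p_t). The model is then compared against two reference strategies: treating all patients, and treating none (whose net benefit is 0). The following sketch implements this standard formula at a single threshold. The function names and example data are hypothetical. A full analysis would sweep a clinically relevant range of thresholds and plot the resulting curves.

```python
def net_benefit(y_true, y_prob, threshold):
    """Net benefit of acting on model predictions at a given threshold probability."""
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1 - threshold)


def treat_all_net_benefit(y_true, threshold):
    """Net benefit of treating every patient as marker-positive."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)


# Hypothetical predicted probabilities of a marker in a small validation set.
labels = [1, 0, 1, 1, 0, 0, 1, 0, 0, 1]
probs = [0.85, 0.40, 0.70, 0.55, 0.20, 0.65, 0.90, 0.10, 0.35, 0.45]
for t in (0.2, 0.4, 0.6):
    print(t, round(net_benefit(labels, probs, t), 3), round(treat_all_net_benefit(labels, t), 3))
```

A model adds clinical value at a given threshold only if its net benefit exceeds both reference strategies. Unlike sensitivity and specificity alone, this comparison accounts for how a clinician weighs false positives against missed positives.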

This systematic review has several limitations. We focused on top-performing DL models and categorized them broadly because of the limited number of experiments. However, variations within a single study, such as different DL methods or MRI sequences, were treated as separate experiments to allow detailed subgroup analysis. Although our meta-regression accounted for some heterogeneity, it could not explain all discrepancies. These findings are observational rather than causal because the compared factors were not randomized across studies, a common problem in meta-analyses [20]. Other confounding variables may influence the findings, especially given the small number of studies in some subgroups. Lastly, our review excluded gray literature, although we thoroughly searched major databases without date or language restrictions.

5. Conclusions

In conclusion, while DL-based radiomics shows significant promise for predicting glioma molecular markers, its clinical translation is hindered by data heterogeneity, reduced performance on external data, computational limitations, and regulatory barriers. Multi-center studies must begin by standardizing MRI acquisition and preprocessing, and then apply transfer learning or domain adaptation alongside calibration analyses. Prospective validation is needed to provide a realistic assessment of model performance. Additionally, incorporating phantom and multi-time-point tests will enhance model robustness. Regular inter-center communication, improved open-source code practices, and innovative approaches such as federated learning, shared platforms, and foundation models are essential for developing clinically translatable DL models while ensuring patient privacy and regulatory compliance.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/diagnostics15070797/s1.

Author Contributions

S.F.: Conceptualization, data extraction, formal analysis, writing—original draft, and writing—review and editing; M.H.: Conceptualization and writing—review and editing; S.M.: Data extraction and formal analysis; A.D.I.: Conceptualization and writing—review and editing; E.F.: Conceptualization and writing—review and editing; S.L.: Conceptualization, project administration, supervision, and writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This study did not receive any funding or financial support.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| MGMT | O-6-methylguanine-DNA methyltransferase |
| PTEN | Phosphatase and tensin homolog |
| ATRX | Alpha-thalassemia/mental retardation syndrome X-linked |
| TERT | Telomerase reverse transcriptase |
| CDKN2A/B | Cyclin-dependent kinase inhibitor 2A/B |
| EGFR | Epidermal growth factor receptor |
| SYP | Synaptophysin |
| QUADAS-2 | Quality Assessment of Diagnostic Accuracy Studies-2 |
| RQS | Radiomics quality score |

References

- Louis, D.N.; Perry, A.; Wesseling, P.; Brat, D.J.; Cree, I.A.; Figarella-Branger, D.; Hawkins, C.; Ng, H.K.; Pfister, S.M.; Reifenberger, G.; et al. The 2021 WHO Classification of Tumors of the Central Nervous System: A Summary. Neuro-Oncology 2021, 23, 1231–1251.

- Perakis, S.; Speicher, M.R. Emerging Concepts in Liquid Biopsies. BMC Med. 2017, 15, 75.

- Guarnera, A.; Romano, A.; Moltoni, G.; Ius, T.; Palizzi, S.; Romano, A.; Bagatto, D.; Minniti, G.; Bozzao, A. The Role of Advanced MRI Sequences in the Diagnosis and Follow-Up of Adult Brainstem Gliomas: A Neuroradiological Review. Tomography 2023, 9, 1526–1537.

- Wei, R.-L.; Wei, X.-T. Advanced Diagnosis of Glioma by Using Emerging Magnetic Resonance Sequences. Front. Oncol. 2021, 11, 694498.

- Liu, Z.; Duan, T.; Zhang, Y.; Weng, S.; Xu, H.; Ren, Y.; Zhang, Z.; Han, X. Radiogenomics: A Key Component of Precision Cancer Medicine. Br. J. Cancer 2023, 129, 741–753.

- Scalco, E.; Rizzo, G.; Mastropietro, A. The Stability of Oncologic MRI Radiomic Features and the Potential Role of Deep Learning: A Review. Phys. Med. Biol. 2022, 67, 09TR03.

- Hosny, A.; Aerts, H.J.; Mak, R.H. Handcrafted versus Deep Learning Radiomics for Prediction of Cancer Therapy Response. Lancet Digit. Health 2019, 1, e106–e107.

- Farahani, S.; Hejazi, M.; Tabassum, M.; Ieva, A.D.; Mahdavifar, N.; Liu, S. Diagnostic Performance of Deep Learning for Predicting Gliomas’ IDH and 1p/19q Status in MRI: A Systematic Review and Meta-Analysis. arXiv 2024, arXiv:2411.02426.

- Brat, D.J.; Aldape, K.; Bridge, J.A.; Canoll, P.; Colman, H.; Hameed, M.R.; Harris, B.T.; Hattab, E.M.; Huse, J.T.; Jenkins, R.B.; et al. Molecular Biomarker Testing for the Diagnosis of Diffuse Gliomas: Guideline From the College of American Pathologists in Collaboration With the American Association of Neuropathologists, Association for Molecular Pathology, and Society for Neuro-Oncology. Arch. Pathol. Lab. Med. 2022, 146, 547–574.

- Huang, H.; Wang, F.; Luo, S.; Chen, G.; Tang, G. Diagnostic Performance of Radiomics Using Machine Learning Algorithms to Predict MGMT Promoter Methylation Status in Glioma Patients: A Meta-Analysis. Diagn. Interv. Radiol. 2021, 27, 716.

- Samartha, M.V.S.; Dubey, N.K.; Jena, B.; Maheswar, G.; Lo, W.-C.; Saxena, S. AI-Driven Estimation of O6 Methylguanine-DNA-Methyltransferase (MGMT) Promoter Methylation in Glioblastoma Patients: A Systematic Review with Bias Analysis. J. Cancer Res. Clin. Oncol. 2023, 150, 57.

- Doniselli, F.M.; Pascuzzo, R.; Mazzi, F.; Padelli, F.; Moscatelli, M.; Akinci D’Antonoli, T.; Cuocolo, R.; Aquino, D.; Cuccarini, V.; Sconfienza, L.M. Quality Assessment of the MRI-Radiomics Studies for MGMT Promoter Methylation Prediction in Glioma: A Systematic Review and Meta-Analysis. Eur. Radiol. 2024, 34, 5802–5815.

- Robinet, L.; Siegfried, A.; Roques, M.; Berjaoui, A.; Cohen-Jonathan Moyal, E. MRI-Based Deep Learning Tools for MGMT Promoter Methylation Detection: A Thorough Evaluation. Cancers 2023, 15, 2253.

- van Kempen, E.J.; Post, M.; Mannil, M.; Kusters, B.; ter Laan, M.; Meijer, F.J.A.; Henssen, D.J.H.A. Accuracy of Machine Learning Algorithms for the Classification of Molecular Features of Gliomas on MRI: A Systematic Literature Review and Meta-Analysis. Cancers 2021, 13, 2606.

- Lasocki, A.; Abdalla, G.; Chow, G.; Thust, S.C. Imaging Features Associated with H3 K27-Altered and H3 G34-Mutant Gliomas: A Narrative Systematic Review. Cancer Imaging 2022, 22, 63.

- Jian, A.; Jang, K.; Manuguerra, M.; Liu, S.; Magnussen, J.; Di Ieva, A. Machine Learning for the Prediction of Molecular Markers in Glioma on Magnetic Resonance Imaging: A Systematic Review and Meta-Analysis. Neurosurgery 2021, 89, 31–44.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Moher, D. Updating Guidance for Reporting Systematic Reviews: Development of the PRISMA 2020 Statement. J. Clin. Epidemiol. 2021, 134, 103–112.

- Whiting, P.F.; Rutjes, A.W.S.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M.; QUADAS-2 Group. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011, 155, 529–536.

- Lambin, P.; Leijenaar, R.T.H.; Deist, T.M.; Peerlings, J.; de Jong, E.E.C.; van Timmeren, J.; Sanduleanu, S.; Larue, R.T.H.M.; Even, A.J.G.; Jochems, A.; et al. Radiomics: The Bridge between Medical Imaging and Personalized Medicine. Nat. Rev. Clin. Oncol. 2017, 14, 749–762.

- Borenstein, M. Common Mistakes in Meta-Analysis and How to Avoid Them; Biostat, Incorporated: Englewood, NJ, USA, 2019; ISBN 978-1-7334367-1-7.

- Lee, J.; Kim, K.W.; Choi, S.H.; Huh, J.; Park, S.H. Systematic Review and Meta-Analysis of Studies Evaluating Diagnostic Test Accuracy: A Practical Review for Clinical Researchers-Part II. Statistical Methods of Meta-Analysis. Korean J. Radiol. 2015, 16, 1188–1196.

- Deeks, J.J.; Higgins, J.P.; Altman, D.G.; Cochrane Statistical Methods Group. Analysing Data and Undertaking Meta-Analyses. In Cochrane Handbook for Systematic Reviews of Interventions; John Wiley & Sons: Hoboken, NJ, USA, 2019; pp. 241–284. ISBN 978-1-119-53660-4.

- Quintana, D. A Guide for Calculating Study-Level Statistical Power for Meta-Analyses. Adv. Methods Pract. Psychol. Sci. 2023, 6, 25152459221147260.

- Cerullo, E.; Sutton, A.J.; Jones, H.E.; Wu, O.; Quinn, T.J.; Cooper, N.J. MetaBayesDTA: Codeless Bayesian Meta-Analysis of Test Accuracy, with or without a Gold Standard. BMC Med. Res. Methodol. 2023, 23, 127.

- Chen, X.; Zhang, R.; Zhou, Y.; Liu, S.; Wang, Z.; Zhang, S.; Chen, Z. Multi-Sequence MRI-Based Convolutional Neural Network Predicts the Methylation Status of MGMT Promoter in Glioma. Chin. J. Magn. Reson. Imaging 2023, 14, 34–39, 78.

- Tang, Z.; Xu, Y.; Jin, L.; Aibaidula, A.; Lu, J.; Jiao, Z.; Wu, J.; Zhang, H.; Shen, D. Deep Learning of Imaging Phenotype and Genotype for Predicting Overall Survival Time of Glioblastoma Patients. IEEE Trans. Med. Imaging 2020, 39, 2100–2109.

- Calabrese, E.; Villanueva-Meyer, J.E.; Cha, S. A Fully Automated Artificial Intelligence Method for Non-Invasive, Imaging-Based Identification of Genetic Alterations in Glioblastomas. Sci. Rep. 2020, 10, 11852.

- Haubold, J.; Hosch, R.; Parmar, V.; Glas, M.; Guberina, N.; Catalano, O.A.; Pierscianek, D.; Wrede, K.; Deuschl, C.; Forsting, M.; et al. Fully Automated MR Based Virtual Biopsy of Cerebral Gliomas. Cancers 2021, 13, 6186.

- Sohn, B.; An, C.; Kim, D.; Ahn, S.S.; Han, K.; Kim, S.H.; Kang, S.-G.; Chang, J.H.; Lee, S.-K. Radiomics-Based Prediction of Multiple Gene Alteration Incorporating Mutual Genetic Information in Glioblastoma and Grade 4 Astrocytoma, IDH-Mutant. J. Neuro-Oncol. 2021, 155, 267–276.

- Calabrese, E.; Rudie, J.D.; Rauschecker, A.M.; Villanueva-Meyer, J.E.; Clarke, J.L.; Solomon, D.A.; Cha, S. Combining Radiomics and Deep Convolutional Neural Network Features from Preoperative MRI for Predicting Clinically Relevant Genetic Biomarkers in Glioblastoma. Neuro-Oncol. Adv. 2022, 4, vdac060.

- Xu, Q.; Xu, Q.Q.; Shi, N.; Dong, L.N.; Zhu, H.; Xu, K. A Multitask Classification Framework Based on Vision Transformer for Predicting Molecular Expressions of Glioma. Eur. J. Radiol. 2022, 157, 110560.

- Chaddad, A.; Hassan, L.; Katib, Y. A Texture-Based Method for Predicting Molecular Markers and Survival Outcome in Lower Grade Glioma. Appl. Intell. 2023, 53, 24724–24738.

- Korfiatis, P.; Kline, T.L.; Lachance, D.H.; Parney, I.F.; Buckner, J.C.; Erickson, B.J. Residual Deep Convolutional Neural Network Predicts MGMT Methylation Status. J. Digit. Imaging 2017, 30, 622–628.

- Li, Z.-C.; Bai, H.; Sun, Q.; Li, Q.; Liu, L.; Zou, Y.; Chen, Y.; Liang, C.; Zheng, H. Multiregional Radiomics Features from Multiparametric MRI for Prediction of MGMT Methylation Status in Glioblastoma Multiforme: A Multicentre Study. Eur. Radiol. 2018, 28, 3640–3650.

- Chang, P.; Grinband, J.; Weinberg, B.D.; Bardis, M.; Khy, M.; Cadena, G.; Su, M.-Y.; Cha, S.; Filippi, C.G.; Bota, D.; et al. Deep-Learning Convolutional Neural Networks Accurately Classify Genetic Mutations in Gliomas. Am. J. Neuroradiol. 2018, 39, 1201–1207.

- Han, L.; Kamdar, M.R. MRI to MGMT: Predicting Methylation Status in Glioblastoma Patients Using Convolutional Recurrent Neural Networks. In Proceedings of the Pacific Symposium on Biocomputing 2018 (PSB), The Big Island of Hawaii, HI, USA, 3–7 January 2018; Altman, R.B., Dunker, A.K., Hunter, L., Ritchie, M.D., Murray, T., Klein, T.E., Eds.; World Scientific Publ Co Pte Ltd.: Singapore, 2018; pp. 331–342.

- Korfiatis, P.; Kline, T.L.; Erickson, B.J. Evaluation of a Deep Learning Architecture for MR Imaging Prediction of ATRX in Glioma Patients. In Proceedings of the Medical Imaging 2018: Computer-Aided Diagnosis, Houston, TX, USA, 10–15 February 2018; Petrick, N., Mori, K., Eds.; Spie-Int Soc Optical Engineering: Bellingham, WA, USA, 2018; Volume 10575, p. UNSP 105752G.

- Fukuma, R.; Yanagisawa, T.; Kinoshita, M.; Shinozaki, T.; Arita, H.; Kawaguchi, A.; Takahashi, M.; Narita, Y.; Terakawa, Y.; Tsuyuguchi, N.; et al. Prediction of IDH and TERT Promoter Mutations in Low-Grade Glioma from Magnetic Resonance Images Using a Convolutional Neural Network. Sci. Rep. 2019, 9, 20311.

- Crisi, G.; Filice, S. Predicting MGMT Promoter Methylation of Glioblastoma from Dynamic Susceptibility Contrast Perfusion: A Radiomic Approach. J. Neuroimaging 2020, 30, 458–462.

- Hedyehzadeh, M.; Maghooli, K.; MomenGharibvand, M.; Pistorius, S. A Comparison of the Efficiency of Using a Deep CNN Approach with Other Common Regression Methods for the Prediction of EGFR Expression in Glioblastoma Patients. J. Digit. Imaging 2020, 33, 391–398.

- Chen, X.; Zeng, M.; Tong, Y.; Zhang, T.; Fu, Y.; Li, H.; Zhang, Z.; Cheng, Z.; Xu, X.; Yang, R.; et al. Automatic Prediction of MGMT Status in Glioblastoma via Deep Learning-Based MR Image Analysis. BioMed Res. Int. 2020, 2020, 9258649.

- Jonnalagedda, P.; Weinberg, B.; Allen, J.; Bhanu, B. Feature Disentanglement to Aid Imaging Biomarker Characterization for Genetic Mutations. In Proceedings of the Third Conference on Medical Imaging with Deep Learning, Montreal, QC, Canada, 6–8 July 2020; ML Research Press: Seattle, WA, USA, 2020; Volume 121, pp. 349–364.

- Yogananda, C.G.B.; Shah, B.R.; Nalawade, S.S.; Murugesan, G.K.; Yu, F.F.; Pinho, M.C.; Wagner, B.C.; Mickey, B.; Patel, T.R.; Fei, B.; et al. MRI-Based Deep-Learning Method for Determining Glioma MGMT Promoter Methylation Status. Am. J. Neuroradiol. 2021, 42, 845–852.

- Tupe-Waghmare, P.; Malpure, P.; Kotecha, K.; Beniwal, M.; Santosh, V.; Saini, J.; Ingalhalikar, M. Comprehensive Genomic Subtyping of Glioma Using Semi-Supervised Multi-Task Deep Learning on Multimodal MRI. IEEE Access 2021, 9, 167900–167910.

- Chen, H.; Lin, F.; Zhang, J.; Lv, X.; Zhou, J.; Li, Z.-C.; Chen, Y. Deep Learning Radiomics to Predict PTEN Mutation Status From Magnetic Resonance Imaging in Patients With Glioma. Front. Oncol. 2021, 11, 734433.

- Lang, D.M.; Peeken, J.C.; Combs, S.E.; Wilkens, J.J.; Bartzsch, S. A Video Data Based Transfer Learning Approach for Classification of MGMT Status in Brain Tumor MR Images. In Proceedings of the Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries, Brainles 2021, PT I, Virtual Event, 27 September 2021; Crimi, A., Bakas, S., Eds.; Springer International Publishing AG: Cham, Switzerland, 2022; Volume 12962, pp. 306–314.

- Xiao, Z.; Yao, S.; Wang, Z.; Zhu, D.; Bie, Y.; Zhang, S.; Chen, W. Multiparametric MRI Features Predict the SYP Gene Expression in Low-Grade Glioma Patients: A Machine Learning-Based Radiomics Analysis. Front. Oncol. 2021, 11, 663451.

- Capuozzo, S.; Gravina, M.; Gatta, G.; Marrone, S.; Sansone, C. A Multimodal Knowledge-Based Deep Learning Approach for MGMT Promoter Methylation Identification. J. Imaging 2022, 8, 321. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Xu, Y.; Ye, M.; Li, Y.; Sun, Y.; Liang, J.; Lu, J.; Wang, Z.; Zhu, Z.; Zhang, X.; et al. Predicting MGMT Promoter Methylation in Diffuse Gliomas Using Deep Learning with Radiomics. J. Clin. Med. 2022, 11, 3445. [Google Scholar] [CrossRef]

- Farzana, W.; Temtam, A.G.; Shboul, Z.A.; Rahman, M.M.; Sadique, M.S.; Iftekharuddin, K.M. Radiogenomic Prediction of MGMT Using Deep Learning with Bayesian Optimized Hyperparameters. In Proceedings of the Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries, Brainles 2021, PT II, Virtual Event, 27 September 2021; Crimi, A., Bakas, S., Eds.; Springer International Publishing AG: Cham, Switzerland, 2022; Volume 12963, pp. 357–366. [Google Scholar]

- Kim, B.-H.; Lee, H.; Choi, K.S.; Nam, J.G.; Park, C.-K.; Park, S.-H.; Chung, J.W.; Choi, S.H. Validation of MRI-Based Models to Predict MGMT Promoter Methylation in Gliomas: BraTS 2021 Radiogenomics Challenge. Cancers 2022, 14, 4827. [Google Scholar] [CrossRef] [PubMed]

- Nalawade, S.S.; Yu, F.F.; Bangalore Yogananda, C.G.; Murugesan, G.K.; Shah, B.R.; Pinho, M.C.; Wagner, B.C.; Xi, Y.; Mickey, B.; Patel, T.R.; et al. Brain Tumor IDH, 1p/19q, and MGMT Molecular Classification Using MRI-Based Deep Learning: An Initial Study on the Effect of Motion and Motion Correction. J. Med. Imaging 2022, 9, 016001. [Google Scholar] [CrossRef] [PubMed]

- Spoorthy, K.R.; Mahdev, A.R.; Vaishnav, B.; Shruthi, M.L. Deep Learning Approach for Radiogenomic Classification of Brain Tumor. In Proceedings of the 2022 IEEE 19th India Council International Conference (INDICON), Kochi, India, 24–26 November 2022; pp. 1–6. [Google Scholar]

- Kihira, S.; Mei, X.; Mahmoudi, K.; Liu, Z.; Dogra, S.; Belani, P.; Tsankova, N.; Hormigo, A.; Fayad, Z.A.; Doshi, A.; et al. U-Net Based Segmentation and Characterization of Gliomas. Cancers 2022, 14, 4457. [Google Scholar] [CrossRef]

- Faghani, S.; Khosravi, B.; Moassefi, M.; Conte, G.M.; Erickson, B.J. A Comparison of Three Different Deep Learning-Based Models to Predict the MGMT Promoter Methylation Status in Glioblastoma Using Brain MRI. J. Digit. Imaging 2023, 36, 837–846. [Google Scholar] [CrossRef]

- Chu, W.; Zhou, Y.; Cai, S.; Chen, Z.; Cai, C. A Comprehensive Multi-Modal Domain Adaptative Aid Framework for Brain Tumor Diagnosis. In Proceedings of the Pattern Recognition and Computer Vision: 6th Chinese Conference, PRCV 2023, Proceedings, Xiamen, China, 13–15 October 2023; Lecture Notes in Computer Science (14437). Liu, Q., Wang, H., Ma, Z., Zheng, W., Zha, H., Chen, X., Wang, L., Ji, R., Eds.; Springer Nature: Singapore, 2023; p. 94, ISBN 978-981-99-8558-6. [Google Scholar]

- Rui, W.; Zhang, S.; Shi, H.; Sheng, Y.; Zhu, F.; Yao, Y.; Chen, X.; Cheng, H.; Zhang, Y.; Aili, A.; et al. Deep Learning-Assisted Quantitative Susceptibility Mapping as a Tool for Grading and Molecular Subtyping of Gliomas. Phenomics 2023, 3, 243–254. [Google Scholar] [CrossRef]

- Saeed, N.; Ridzuan, M.; Alasmawi, H.; Sobirov, I.; Yaqub, M. MGMT Promoter Methylation Status Prediction Using MRI Scans? An Extensive Experimental Evaluation of Deep Learning Models. Med. Image Anal. 2023, 90, 102989. [Google Scholar] [CrossRef]

- Sakly, H.; Said, M.; Seekins, J.; Guetari, R.; Kraiem, N.; Marzougui, M. Brain Tumor Radiogenomic Classification of O6-Methylguanine-DNA Methyltransferase Promoter Methylation in Malignant Gliomas-Based Transfer Learning. Cancer Control. 2023, 30, 10732748231169149. [Google Scholar] [CrossRef]

- Zhang, H.; Zhang, H.; Zhang, Y.; Zhou, B.; Wu, L.; Lei, Y.; Huang, B. Deep Learning Radiomics for the Assessment of Telomerase Reverse Transcriptase Promoter Mutation Status in Patients With Glioblastoma Using Multiparametric MRI. J. Magn. Reson. Imaging 2023, 58, 1441–1451. [Google Scholar] [CrossRef]

- Saxena, S.; Jena, B.; Mohapatra, B.; Gupta, N.; Kalra, M.; Scartozzi, M.; Saba, L.; Suri, J.S. Fused Deep Learning Paradigm for the Prediction of O6-Methylguanine-DNA Methyltransferase Genotype in Glioblastoma Patients: A Neuro-Oncological Investigation. Comput. Biol. Med. 2023, 153, 106492. [Google Scholar] [CrossRef]

- Saxena, S.; Agrawal, A.; Dash, P.; Jena, B.; Khanna, N.N.; Paul, S.; Kalra, M.M.; Viskovic, K.; Fouda, M.M.; Saba, L.; et al. Prediction of O-6-Methylguanine-DNA Methyltransferase and Overall Survival of the Patients Suffering from Glioblastoma Using MRI-Based Hybrid Radiomics Signatures in Machine and Deep Learning Framework. Neural Comput. Appl. 2023, 35, 13647–13663. [Google Scholar] [CrossRef]

- Buz-Yalug, B.; Turhan, G.; Cetin, A.I.; Dindar, S.S.; Danyeli, A.E.; Yakicier, C.; Pamir, M.N.; Özduman, K.; Dincer, A.; Ozturk-Isik, E. Identification of IDH and TERTp Mutations Using Dynamic Susceptibility Contrast MRI with Deep Learning in 162 Gliomas. Eur. J. Radiol. 2024, 170, 111257. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z.; Xu, X.; Zhang, W.; Zhang, L.; Wen, M.; Gao, J.; Yang, J.; Kan, Y.; Yang, X.; Wen, Z.; et al. A Fusion Model Integrating Magnetic Resonance Imaging Radiomics and Deep Learning Features for Predicting Alpha-Thalassemia X-Linked Intellectual Disability Mutation Status in Isocitrate Dehydrogenase–Mutant High-Grade Astrocytoma: A Multicenter Study. Quant. Imaging Med. Surg. 2024, 14, 251–263. [Google Scholar] [CrossRef]

- Zhang, L.; Wang, R.; Gao, J.; Tang, Y.; Xu, X.; Kan, Y.; Cao, X.; Wen, Z.; Liu, Z.; Cui, S.; et al. A Novel MRI-Based Deep Learning Networks Combined with Attention Mechanism for Predicting CDKN2A/B Homozygous Deletion Status in IDH-Mutant Astrocytoma. Eur. Radiol. 2024, 34, 391–399. [Google Scholar] [CrossRef] [PubMed]

- Zhang, H.; Zhou, B.; Zhang, H.; Zhang, Y.; Lei, Y.; Huang, B. Peritumoural Radiomics for Identification of Telomerase Reverse Transcriptase Promoter Mutation in Patients With Glioblastoma Based on Preoperative MRI. Can. Assoc. Radiol. J. 2024, 75, 143–152. [Google Scholar] [CrossRef]

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057.

- Welcome to the Cancer Imaging Archive. Available online: https://www.cancerimagingarchive.net/ (accessed on 4 August 2024).

- Stamoulou, E.; Spanakis, C.; Manikis, G.C.; Karanasiou, G.; Grigoriadis, G.; Foukakis, T.; Tsiknakis, M.; Fotiadis, D.I.; Marias, K. Harmonization Strategies in Multicenter MRI-Based Radiomics. J. Imaging 2022, 8, 303.

- Leithner, D.; Nevin, R.B.; Gibbs, P.; Weber, M.; Otazo, R.; Vargas, H.A.; Mayerhoefer, M.E. ComBat Harmonization for MRI Radiomics: Impact on Non-Binary Tissue Classification by Machine Learning. Investig. Radiol. 2023, 58, 697–701.

- Evaluation of Conventional and Deep Learning Based Image Harmonization Methods in Radiomics Studies. IOPscience. Available online: https://iopscience.iop.org/article/10.1088/1361-6560/ac39e5/meta (accessed on 6 December 2024).

- Li, X.T.; Huang, R.Y. Standardization of Imaging Methods for Machine Learning in Neuro-Oncology. Neuro-Oncol. Adv. 2021, 2, iv49–iv55.

- Lu, S.-C.; Swisher, C.L.; Chung, C.; Jaffray, D.; Sidey-Gibbons, C. On the Importance of Interpretable Machine Learning Predictions to Inform Clinical Decision Making in Oncology. Front. Oncol. 2023, 13, 1129380.

- Cheng, P.M.; Montagnon, E.; Yamashita, R.; Pan, I.; Cadrin-Chênevert, A.; Perdigón Romero, F.; Chartrand, G.; Kadoury, S.; Tang, A. Deep Learning: An Update for Radiologists. RadioGraphics 2021, 41, 1427–1445.

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2021, 23, 18.

- The Image Biomarker Standardization Initiative: Standardized Quantitative Radiomics for High-Throughput Image-Based Phenotyping. Radiology. Available online: https://pubs.rsna.org/doi/full/10.1148/radiol.2020191145 (accessed on 6 December 2024).

- Deep Learning. Nature. Available online: https://www.nature.com/articles/nature14539 (accessed on 8 March 2025).

- Afshar, P.; Mohammadi, A.; Plataniotis, K.N.; Oikonomou, A.; Benali, H. From Handcrafted to Deep-Learning-Based Cancer Radiomics: Challenges and Opportunities. IEEE Signal Process. Mag. 2019, 36, 132–160.

- Huang, E.P.; O’Connor, J.P.B.; McShane, L.M.; Giger, M.L.; Lambin, P.; Kinahan, P.E.; Siegel, E.L.; Shankar, L.K. Criteria for the Translation of Radiomics into Clinically Useful Tests. Nat. Rev. Clin. Oncol. 2023, 20, 69–82.

- Zhao, R.; Ouyang, W.; Li, H.; Wang, X. Saliency Detection by Multi-Context Deep Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1265–1274.

- Mangalathu, S.; Hwang, S.-H.; Jeon, J.-S. Failure Mode and Effects Analysis of RC Members Based on Machine-Learning-Based SHapley Additive exPlanations (SHAP) Approach. Eng. Struct. 2020, 219, 110927.

- Avesta, A.; Hossain, S.; Lin, M.; Aboian, M.; Krumholz, H.M.; Aneja, S. Comparing 3D, 2.5D, and 2D Approaches to Brain Image Auto-Segmentation. Bioengineering 2023, 10, 181.

- Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.; Elsen, E.; Garcia, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed Precision Training. arXiv 2017, arXiv:1710.03740.

- Famili, A.; Lao, Y. Deep Neural Network Quantization Framework for Effective Defense against Membership Inference Attacks. Sensors 2023, 23, 7722.

- A Survey of the Vision Transformers and Their CNN-Transformer Based Variants. Artificial Intelligence Review. Available online: https://link.springer.com/article/10.1007/s10462-023-10595-0 (accessed on 9 March 2025).

- Zhang, L.; LaBelle, W.; Unberath, M.; Chen, H.; Hu, J.; Li, G.; Dreizin, D. A Vendor-Agnostic, PACS Integrated, and DICOM-Compatible Software-Server Pipeline for Testing Segmentation Algorithms within the Clinical Radiology Workflow. Front. Med. 2023, 10, 1241570.

- Pati, S.; Verma, R.; Akbari, H.; Bilello, M.; Hill, V.B.; Sako, C.; Correa, R.; Beig, N.; Venet, L.; Thakur, S.; et al. Reproducibility Analysis of Multi-Institutional Paired Expert Annotations and Radiomic Features of the Ivy Glioblastoma Atlas Project (Ivy GAP) Dataset. Med. Phys. 2020, 47, 6039–6052.

- Brancato, V.; Cerrone, M.; Lavitrano, M.; Salvatore, M.; Cavaliere, C. A Systematic Review of the Current Status and Quality of Radiomics for Glioma Differential Diagnosis. Cancers 2022, 14, 2731.

- A Systematic Review Reporting Quality of Radiomics Research in Neuro-Oncology: Toward Clinical Utility and Quality Improvement Using High-Dimensional Imaging Features. BMC Cancer. Available online: https://link.springer.com/article/10.1186/s12885-019-6504-5 (accessed on 8 March 2025).

- Yu, A.C.; Mohajer, B.; Eng, J. External Validation of Deep Learning Algorithms for Radiologic Diagnosis: A Systematic Review. Radiol. Artif. Intell. 2022, 4, e210064.

- Muralidharan, V.; Adewale, B.A.; Huang, C.J.; Nta, M.T.; Ademiju, P.O.; Pathmarajah, P.; Hang, M.K.; Adesanya, O.; Abdullateef, R.O.; Babatunde, A.O.; et al. A Scoping Review of Reporting Gaps in FDA-Approved AI Medical Devices. NPJ Digit. Med. 2024, 7, 273.

- Macheka, S.; Ng, P.Y.; Ginsburg, O.; Hope, A.; Sullivan, R.; Aggarwal, A. Prospective Evaluation of Artificial Intelligence (AI) Applications for Use in Cancer Pathways Following Diagnosis: A Systematic Review. BMJ Oncol. 2024, 3, e000255.

- Khan, W.; Leem, S.; See, K.B.; Wong, J.K.; Zhang, S.; Fang, R. A Comprehensive Survey of Foundation Models in Medicine. arXiv 2024, arXiv:2406.10729.

- Mei, X.; Liu, Z.; Robson, P.M.; Marinelli, B.; Huang, M.; Doshi, A.; Jacobi, A.; Cao, C.; Link, K.E.; Yang, T.; et al. RadImageNet: An Open Radiologic Deep Learning Research Dataset for Effective Transfer Learning. Radiol. Artif. Intell. 2022, 4, e210315.

- Cox, J.; Liu, P.; Stolte, S.E.; Yang, Y.; Liu, K.; See, K.B.; Ju, H.; Fang, R. BrainSegFounder: Towards 3D Foundation Models for Neuroimage Segmentation. Med. Image Anal. 2024, 97, 103301.

- Chen, M.; Zhang, M.; Yin, L.; Ma, L.; Ding, R.; Zheng, T.; Yue, Q.; Lui, S.; Sun, H. Medical Image Foundation Models in Assisting Diagnosis of Brain Tumors: A Pilot Study. Eur. Radiol. 2024, 34, 6667–6679.

- Santra, S.; Kukreja, P.; Saxena, K.; Gandhi, S.; Singh, O.V. Navigating Regulatory and Policy Challenges for AI Enabled Combination Devices. Front. Med. Technol. 2024, 6, 1473350.

- McKee, M.; Wouters, O.J. The Challenges of Regulating Artificial Intelligence in Healthcare. Int. J. Health Policy Manag. 2022, 12, 7261.

- Hofer, I.S.; Burns, M.; Kendale, S.; Wanderer, J.P. Realistically Integrating Machine Learning into Clinical Practice: A Road Map of Opportunities, Challenges, and a Potential Future. Anesth. Analg. 2020, 130, 1115–1118.

- Preti, L.M.; Ardito, V.; Compagni, A.; Petracca, F.; Cappellaro, G. Implementation of Machine Learning Applications in Health Care Organizations: Systematic Review of Empirical Studies. J. Med. Internet Res. 2024, 26, e55897.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).