2. Methods

2.1. Participants

We retrospectively collected data from patients who underwent EBUS-TBB for the diagnosis of PPLs at the Division of Thoracic Medicine, National Taiwan University Cancer Center, between November 2019 and January 2022. The study protocol was approved by the Institutional Review Board of the National Taiwan University Cancer Center (IRB# 202207064RINB, date 19 August 2022). The requirement for informed consent was waived because only de-identified data were analyzed. All procedures were conducted in accordance with the principles of the Declaration of Helsinki.

Patient characteristics and bronchoscopy findings, including age, gender, and EBUS image patterns, were recorded before or during the procedure. EBUS patterns were classified as type I (homogeneous), type II (hyperechoic dots and linear arcs), and type III (heterogeneous), according to the criteria described by Kurimoto et al. [5].

2.2. EBUS-TBB Procedure and Image Collection

All EBUS-TBB procedures were performed by pulmonologists with over 10 years of EBUS experience. After conscious sedation with propofol, midazolam, and fentanyl, a flexible bronchoscope was inserted through a laryngeal mask airway. A 20 MHz radial probe EBUS (UM-S20-17S; Olympus, Tokyo, Japan) was advanced through the working channel into the target bronchus under computed tomography (CT) guidance. Once the target lesion was identified, the probe was moved back and forth slowly for at least 5 s to scan the entire lesion. All EBUS videos were recorded using a medical video recording system (TR2103; TWIN BEANS Co., New Taipei City, Taiwan). During the procedures, two pulmonologists simultaneously assessed the EBUS image patterns.

After confirming the biopsy site, TBB was performed with forceps. Samples were fixed in 10% formalin, paraffin-embedded, and stained with hematoxylin and eosin for evaluation by experienced cytopathologists. Following EBUS-TBB, 25 mL of sterile saline was instilled into the target bronchus, and the retrieved fluid was sent for microbiological analysis. In each EBUS-TBB procedure, only a single target lesion was sampled.

Definitive diagnoses for the EBUS videos were determined based on the final diagnosis of the corresponding PPLs, using cytopathological findings, microbiological results, or clinical follow-up. Histological results that were inconclusive—such as nonspecific fibrosis, chronic inflammation, necrosis, atypical cells, or “suspicious” findings—were classified as non-diagnostic. Benign inflammatory lesions that could not be confirmed pathologically or microbiologically were diagnosed through radiological and clinical follow-up, defined as stability or reduction in lesion size on CT imaging for at least 12 months after EBUS-TBB.

2.3. Data Preprocessing

All EBUS videos were sampled at a rate of one frame every 0.4 s. Every eight consecutive frames were stacked into a single 3D image, hereafter referred to as a clip, which served as the model input. To maintain temporal continuity and avoid splitting adjacent frames into different clips, a 50% overlap was applied between neighboring clips. As a result, each clip encompassed 3.2 s of video information, with a 1.6 s interval between successive clips.
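The clip construction described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function name `make_clips` and the choice to drop trailing frames that do not fill a complete clip are assumptions, since the text does not say how incomplete clips were handled.

```python
def make_clips(frames, frames_per_clip=8, overlap=0.5):
    """Group sampled frames into overlapping fixed-length clips.

    Trailing frames that cannot fill a whole clip are dropped (an assumption).
    """
    stride = int(frames_per_clip * (1 - overlap))  # 8 frames, 50% overlap -> 4
    if stride < 1:
        raise ValueError("overlap leaves no room to advance between clips")
    last_start = len(frames) - frames_per_clip
    return [frames[s:s + frames_per_clip]
            for s in range(0, last_start + 1, stride)]


FRAME_INTERVAL_S = 0.4  # one sampled frame every 0.4 s, as stated in the text

frames = list(range(20))  # stand-in for 20 sampled frames (8 s of video)
clips = make_clips(frames)
clip_duration_s = 8 * FRAME_INTERVAL_S  # time covered by one clip
clip_interval_s = 4 * FRAME_INTERVAL_S  # time between successive clip starts
```

With eight frames per clip and a stride of four frames, each clip shares its last four frames with the first four frames of the next clip, which reproduces the 3.2 s clip length and 1.6 s interval given above.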

The images were cropped to 960 × 960 pixels and subsequently downsampled to 224 × 224 pixels to reduce computational demands. To mitigate overfitting, horizontal flipping was applied with a probability of 50% as a data augmentation strategy. In addition, the Noise CutMix method was employed in selected experiments during SwAV model training to assess its impact on noise robustness.

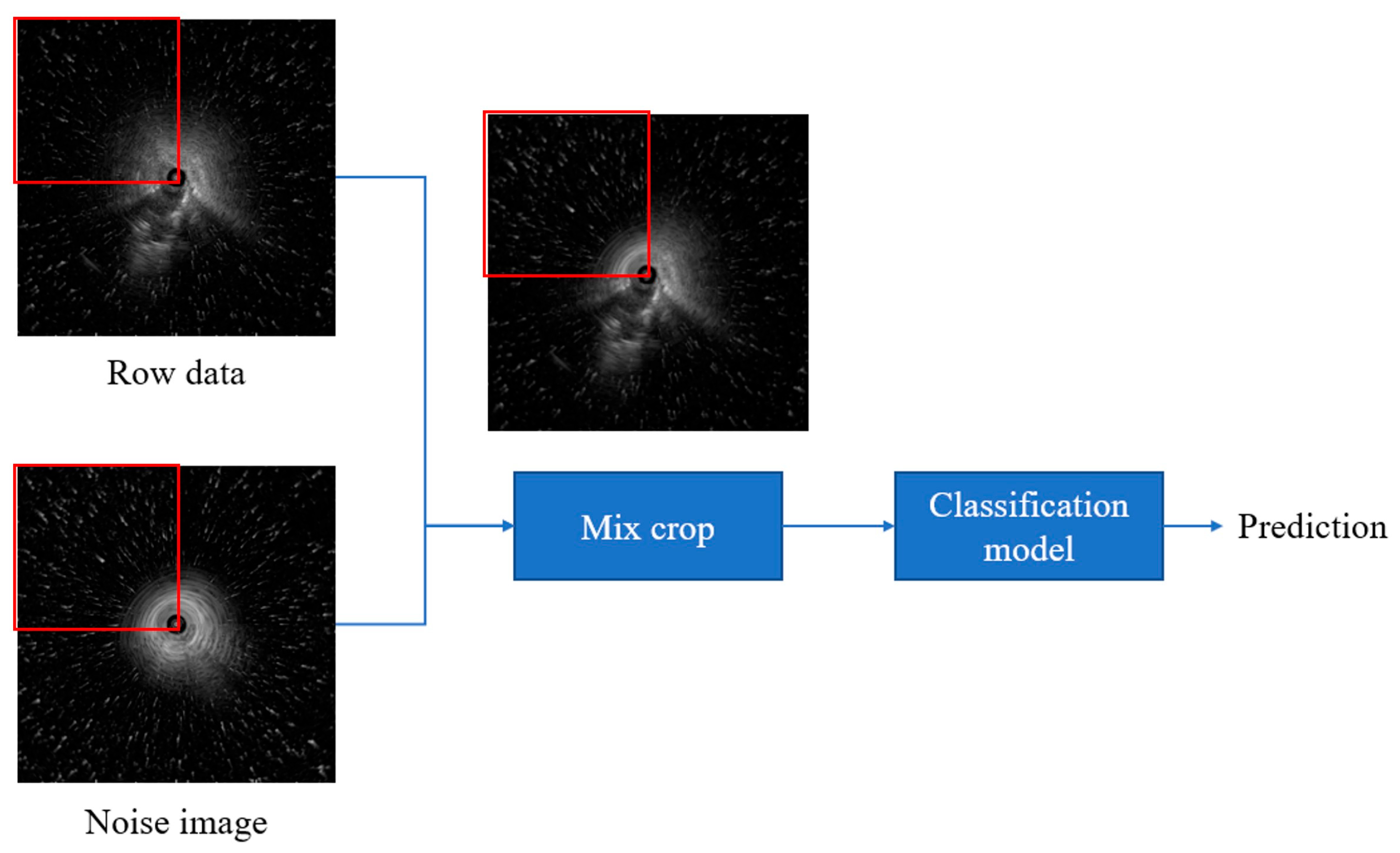

2.4. Noise CutMix

In this study, we introduced “Noise CutMix”, a variation of CutMix that replaces image regions with noise patches. By adding this noise to the image, we aimed to enhance the model’s ability to extract features from images containing noise (Figure 1). We first randomly captured 200 images from video segments that contained no lesions, only air echoes. Then, to simulate real-world fan-shaped noise, we divided the radial ultrasound images in 90-degree increments, forming four rectangles: upper left, upper right, lower left, and lower right. Next, we randomly replaced regions of the original images with the noise rectangles from the same relative positions to create images with noise. This method was used both for training with SwAV contrastive learning and for the subsequent validation on noisy data, ensuring that the images contained noise from air echoes. Because our goal was to simulate the fan-shaped noise encountered during actual procedures, no other data augmentation strategies were used.
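The quadrant replacement described above can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the authors' implementation: images are represented as nested lists, the names `quadrant_bounds` and `noise_cutmix` are hypothetical, and exactly one quadrant is replaced per image, which the text does not specify.

```python
import random

QUADRANTS = ("upper_left", "upper_right", "lower_left", "lower_right")


def quadrant_bounds(height, width, quadrant):
    """Return (row_start, row_stop, col_start, col_stop) for one quadrant."""
    mid_r, mid_c = height // 2, width // 2
    rows = (0, mid_r) if quadrant.startswith("upper") else (mid_r, height)
    cols = (0, mid_c) if quadrant.endswith("left") else (mid_c, width)
    return rows[0], rows[1], cols[0], cols[1]


def noise_cutmix(image, noise_bank, rng):
    """Paste one quadrant of a random air-echo image into the same
    relative position of `image`. Replacing a single quadrant is an
    assumption made for this sketch."""
    noise_image = rng.choice(noise_bank)
    quadrant = rng.choice(QUADRANTS)
    r0, r1, c0, c1 = quadrant_bounds(len(image), len(image[0]), quadrant)
    mixed = [row[:] for row in image]  # copy so the original stays intact
    for r in range(r0, r1):
        mixed[r][c0:c1] = noise_image[r][c0:c1]
    return mixed, quadrant


# Toy 4 x 4 example: zeros stand for lesion pixels, ones for air-echo noise.
lesion = [[0] * 4 for _ in range(4)]
noise_bank = [[[1] * 4 for _ in range(4)]]  # the study used 200 noise images
noisy, replaced = noise_cutmix(lesion, noise_bank, random.Random(0))
```

Keeping the noise patch in its original quadrant preserves the radial geometry of the probe image, which is the point of cutting along 90-degree sectors rather than at arbitrary positions as in standard CutMix.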

2.5. SlowFast

In video recognition, 3D networks better capture temporal dynamics than 2D models but incur higher computational costs. To balance efficiency and performance, Feichtenhofer et al. [15] proposed the SlowFast dual-stream architecture, where the Slow pathway (3D ResNet50 backbone) emphasizes static spatial features with fewer frames and more channels, while the Fast pathway captures temporal dynamics with more frames and fewer channels. In our implementation, the Fast pathway samples at four times the frequency of the Slow pathway with 32 input frames, and its feature maps are fused with the Slow pathway via 3D convolution to integrate spatial and temporal information.

2.6. SwAV

Contrastive learning is an unsupervised approach that improves clustering by pulling similar (positive) samples closer in feature space while pushing dissimilar (negative) samples apart. Unlike conventional methods, SwAV eliminates the need for negative samples [16]. It compares differently augmented views of the same image by assigning them to prototype cluster centers and swapping their soft-label codes for prediction. Specifically, SwAV first calculates the similarity between each feature and K cluster centers (prototypes) to generate codes that act as soft labels. These codes are then used as pseudo-labels: the code of one view is predicted from the features of the other view, and vice versa. The loss is calculated as shown in Equations (1)–(3).
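As a reference point, the swapped-prediction objective of the original SwAV formulation [16] can be written as below. The symbols are assumed from that formulation and may differ in detail from the authors' own notation: $z_t$ and $z_s$ are the features of two views, $q_t$ and $q_s$ are their codes, $c_k$ is the $k$-th prototype, and $\tau$ is a temperature.

$$L(z_t, z_s) = \ell(z_t, q_s) + \ell(z_s, q_t)$$

$$\ell(z_t, q_s) = -\sum_{k=1}^{K} q_s^{(k)} \log p_t^{(k)}$$

$$p_t^{(k)} = \frac{\exp\left(z_t^{\top} c_k / \tau\right)}{\sum_{k'=1}^{K} \exp\left(z_t^{\top} c_{k'} / \tau\right)}$$

The first expression sums the two swapped terms, the second is the cross-entropy between the code of one view and the prototype probabilities of the other, and the third is the softmax over the feature-prototype similarities.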

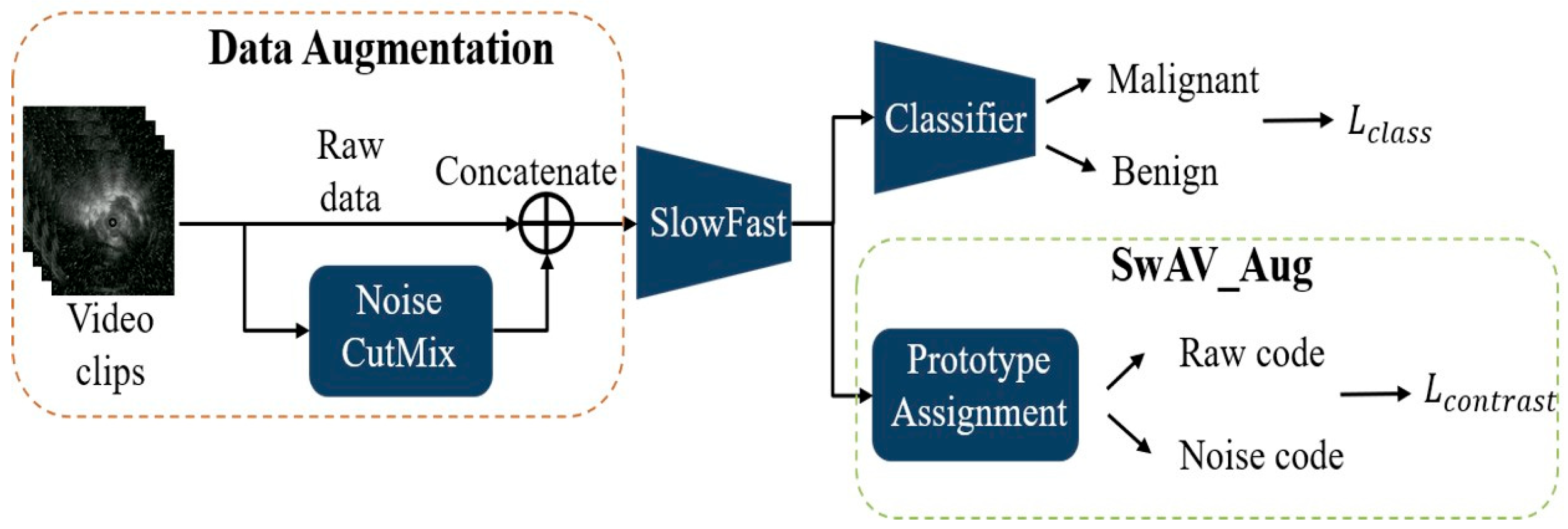

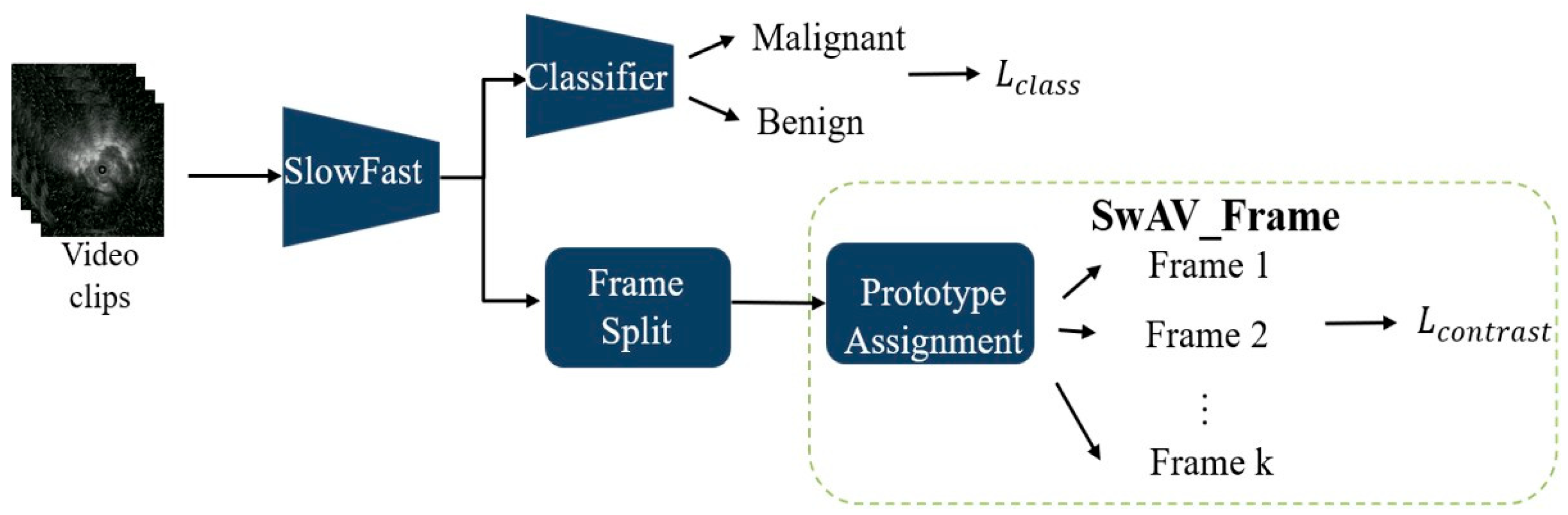

2.7. Model Overview

During training, the model integrates two modules: SlowFast and SwAV. SlowFast is trained jointly with SwAV, optimizing both a classification loss and a contrastive loss. The classification loss is computed by comparing the predicted scores of benign and malignant lesions, produced by the classifier, with the corresponding pathological ground truth labels. The contrastive loss is derived from SwAV, which is applied in two ways.

In SwAV_Aug (Figure 2), the positive pairs in the SwAV module are generated through data augmentation, which is performed using Noise CutMix during input processing. Each original image and its augmented counterpart are passed through the SlowFast network to extract their respective feature representations. The original and noise-augmented images serve as positive pairs, whose feature embeddings are compared in the clustering space to compute the contrastive loss. This mechanism aims to enhance the feature consistency of images originating from the same source and improve the model’s robustness to noise.

In SwAV_Frame (Figure 3), the positive samples are derived from frames within the same clip. Since these frames are temporally continuous, we expect them to be predicted as belonging to the same class, thereby preventing transient noise from disrupting classification consistency. Therefore, these frames are treated as mutual positive pairs for computing the contrastive loss, encouraging the model to maintain consistent predictions across temporally adjacent frames.
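The contrastive term for one positive pair can be sketched as below, following the swapped-prediction idea of SwAV. Several points are assumptions made for illustration only: the codes are passed in as given (the original SwAV computes them with a Sinkhorn-Knopp step), the temperature of 0.1 is a placeholder, and `joint_loss` with its `contrastive_weight` is hypothetical because the text does not state how the classification and contrastive losses are combined.

```python
import math


def softmax(scores, temperature=0.1):
    """Softmax over prototype similarity scores, scaled by a temperature."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(codes, probs):
    """Cross-entropy between a soft code and predicted prototype probabilities."""
    return -sum(q * math.log(p) for q, p in zip(codes, probs) if q > 0)


def swapped_prediction_loss(scores_a, scores_b, codes_a, codes_b,
                            temperature=0.1):
    """Predict the code of each view from the prototype scores of the other."""
    probs_a = softmax(scores_a, temperature)
    probs_b = softmax(scores_b, temperature)
    return cross_entropy(codes_b, probs_a) + cross_entropy(codes_a, probs_b)


def joint_loss(classification_loss, contrastive_loss, contrastive_weight=1.0):
    """Hypothetical weighted sum; the actual combination is not stated."""
    return classification_loss + contrastive_weight * contrastive_loss


# Two views that agree on prototype 0 give a near-zero contrastive loss.
agree = swapped_prediction_loss([1.0, 0.0], [1.0, 0.0], [1, 0], [1, 0])
# Two views that land on different prototypes are penalized heavily.
disagree = swapped_prediction_loss([1.0, 0.0], [0.0, 1.0], [1, 0], [0, 1])
```

For SwAV_Aug the two views would be an original clip and its Noise CutMix counterpart, and for SwAV_Frame they would be frames from the same clip; in both cases the loss is small only when the two views are assigned to the same prototypes.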

2.8. Statistical Analysis

We evaluated temporal information and model generalizability by comparing 2D and 3D neural networks for classifying benign versus malignant lung lesions from EBUS videos. The effects of contrastive learning and attention mechanisms were also assessed under different training strategies. To account for variable video lengths, segment-level predictions were averaged to generate case-level results, with malignancy assigned when the probability exceeded 0.5. Performance was measured by AUC, accuracy, sensitivity, specificity, and F1-score.
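The case-level aggregation described above can be sketched as follows. The function name `case_level_prediction` is hypothetical, and the sketch assumes a plain unweighted mean of the segment probabilities.

```python
def case_level_prediction(segment_probs, threshold=0.5):
    """Average segment-level malignancy probabilities for one case and
    label the case malignant only if the mean exceeds the threshold."""
    if not segment_probs:
        raise ValueError("a case needs at least one segment prediction")
    mean_prob = sum(segment_probs) / len(segment_probs)
    label = "malignant" if mean_prob > threshold else "benign"
    return mean_prob, label


# A video with three clips: two look malignant, one looks benign.
mean_prob, label = case_level_prediction([0.9, 0.7, 0.2])
```

Averaging over all segments gives each video a single score regardless of its length, so long recordings do not contribute more predictions than short ones.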

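The F1-score, defined as the harmonic mean of precision and recall, was calculated as:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Precision is calculated as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall (also referred to as sensitivity) is defined as:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively, with malignancy treated as the positive class.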
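The threshold-based metrics named in this section can be computed from case-level labels as in the sketch below. The function name `binary_metrics` and the toy labels are illustrative, malignancy is coded as 1, and AUC is omitted because it requires the continuous prediction scores rather than thresholded labels.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity (recall), specificity, precision, and F1-score
    for binary labels, with 1 = malignant and 0 = benign."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(numerator, denominator):
        # Return 0.0 instead of failing when a class is absent.
        return numerator / denominator if denominator else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": recall,
        "specificity": ratio(tn, tn + fp),
        "precision": precision,
        "f1": ratio(2 * precision * recall, precision + recall),
    }


# Toy example: four malignant and two benign cases (not study data).
metrics = binary_metrics([1, 1, 1, 1, 0, 0], [1, 1, 1, 0, 1, 0])
```

Because the F1-score depends only on precision and recall, it ignores true negatives, which is why specificity is reported alongside it.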

Pulmonologists’ assessments on the validation set served as the benchmark, with type II and type III EBUS image patterns classified as malignant, and type I patterns classified as benign. We utilized SPSS version 22.0 (IBM, SPSS, Chicago, IL, USA) for statistical analysis.

4. Discussion

This retrospective study demonstrated that our proposed system, which analyzes EBUS videos, is highly effective in distinguishing between benign and malignant processes, outperforming conventional 2D models and even surpassing pulmonologists’ assessments.

Previous studies have shown that convolutional neural networks (CNNs) can achieve high efficiency in classifying EBUS-TBB lesions. Chen et al. combined a CaffeNet-based architecture with support vector machines (SVMs) to distinguish benign from malignant lesions, achieving an accuracy of 85.4% and an AUC of 0.87 [14]. Hotta et al. [12] developed a deep convolutional network that demonstrated superior accuracy, sensitivity, specificity, positive predictive value, and negative predictive value compared with bronchoscopists. The model achieved an accuracy of 83.3%, outperforming two experienced physicians (73.8% and 66.7%) [12]. Consistent with these findings, our proposed system also exceeded pulmonologists’ diagnostic accuracy, sensitivity, and F1-score. These results suggest that CAD systems may have stronger interpretative capability for EBUS images than even experienced bronchoscopists and could be particularly helpful in evaluating lesions that are more challenging to interpret during clinical EBUS examinations.

However, previous studies on EBUS lesion classification have predominantly relied on 2D CNN architectures applied to static ultrasound images [12,13,14]. These approaches were based on manually selected frames and lacked temporal modeling, which limits their applicability in real-time clinical workflows. As shown in Table 2, our results confirm these limitations of 2D approaches. Although certain 2D CNN models, such as VGG19 and ViT, achieved high sensitivity (≥97%), their specificity was markedly lower (≤33%), indicating a bias toward predicting malignancy.

To our knowledge, this study presents the first end-to-end CAD architecture specifically developed for real-time classification of full-length EBUS videos in clinical settings. By incorporating temporal information, particularly through the motion-sensitive SlowFast network, our 3D models achieved a more balanced diagnostic performance between sensitivity and specificity. The SlowFast model demonstrated the highest AUC (0.864) among all evaluated architectures, with a sensitivity of 95.45% and an F1-score of 87.50%, outperforming 2D models and even surpassing pulmonologists’ assessments in overall diagnostic accuracy. These results highlight the clinical value of video-based analysis, in which temporal cues such as probe motion, tissue deformation, and vascular pulsation provide essential contextual information for accurate lesion characterization.

Human visual processing studies have shown that even complex natural-scene categorization can occur within approximately 150 ms [17], which corresponds to roughly 6.7 FPS, suggesting that near-real-time feedback is essential for practical integration into bronchoscopy workflows. The inference speed of our model in our setup (15–30 FPS, or about 33–67 ms per frame) is well within this latency bound, indicating that the proposed system is computationally feasible for real-time use.

The results in Table 2 illustrate how different contrastive learning strategies affect model bias and robustness. Incorporating SwAV_Aug maximized sensitivity (100%) but substantially lowered specificity (22.22%), reflecting a bias toward malignancy predictions. Such a strategy may be advantageous in high-sensitivity screening scenarios where minimizing false negatives is critical. In contrast, SwAV_Frame achieved the highest specificity (55.56%) and the best F1-score (88.17%) among the SlowFast-based models, providing a more balanced trade-off that is better suited for confirmatory diagnostic applications.

Table 3 shows that generalizability testing revealed performance declines across all models—particularly in specificity—when applied to external datasets. Notably, the SwAV_Frame configuration maintained specificity comparable to that of pulmonologists’ assessments under domain shift, demonstrating greater resistance to overfitting and improved reliability in real-world clinical settings. Compared with pulmonologists, whose validation set performance yielded an F1-score of 79.07% and specificity of 55.56%, several AI configurations—including SlowFast and SwAV_Frame—not only exceeded pulmonologists’ sensitivity (≥93.18% vs. 77.27%) but also achieved comparable specificity in select cases. These findings highlight the potential of AI-assisted diagnosis to enhance sensitivity without compromising specificity in routine bronchoscopy practice.

Although the 95% confidence intervals of AUC values could not be obtained due to the lack of raw prediction scores, the relative ranking of model performance remains valid, as all models were evaluated on the same dataset using identical procedures. The conclusions based on accuracy, sensitivity, specificity, and F1-score—each of which has well-defined confidence intervals—therefore remain statistically reliable.

The integration of temporal modeling and contrastive learning offers a versatile diagnostic framework adaptable to different clinical needs. High-sensitivity configurations—such as those incorporating SwAV_Aug—may be particularly valuable for early detection or screening of high-risk patients, where minimizing missed malignancies is critical. Conversely, high-specificity models—such as those based on SwAV_Frame—could be applied in confirmatory settings to reduce unnecessary biopsies and their associated risks.

Importantly, the model’s diagnostic performance was comparable to—and in some configurations exceeded—that of experienced pulmonologists, particularly with respect to sensitivity. Unlike 2D approaches that require manual frame selection, our system processes full-length EBUS videos without interrupting the procedure. These features highlight the potential of the system as a real-time decision-support tool to enhance diagnostic consistency, reduce inter-operator variability, and guide optimal biopsy site selection during bronchoscopy.

Our study has several limitations. First, this study was based on a single-center dataset with retrospectively collected data. It is well recognized that evaluating a model on a single real-world dataset may not fully capture its performance across diverse healthcare settings, and testing on multiple datasets would help validate its generalizability. In addition, the relatively limited histopathologic heterogeneity and the uneven distribution of histopathologic subtypes among the training, validation, and testing sets may also have influenced the accuracy of the model. However, this study is the first to utilize EBUS video recordings for model training, and currently, no publicly available dataset exists for this purpose. Operator variability may also introduce potential bias, particularly when operators have differing levels of experience with EBUS procedures. Moreover, although the proposed models demonstrate potential for real-time deployment, their integration into routine bronchoscopy workflows has not yet been validated. Future multi-center, prospective studies are needed to confirm robustness across diverse patient populations, imaging platforms, and operator techniques, as well as to evaluate usability and clinical impact.

Second, we did not use a surgical gold standard to define all final pathologies; however, all benign processes without definitive cytopathologic or microbiologic evidence were clinically followed for at least 12 months and characterized using chest CT. This approach is more consistent with real-world clinical practice. Finally, although AI-assisted diagnosis showed promise in matching or exceeding clinical performance, its optimal role—whether as a screening tool, confirmatory aid, or both—remains to be clarified. Integration into clinical workflows, together with operator training and performance monitoring, will be essential to ensure sustained benefits.

5. Conclusions

Our study developed a comprehensive model integrating the SlowFast architecture with the SwAV contrastive learning approach to classify lung lesions as benign or malignant from EBUS videos. The findings demonstrate that incorporating temporal information enhances model performance, while self-supervised contrastive learning markedly improves both accuracy and generalizability. Using the SlowFast + SwAV_Frame architecture, the model achieved high diagnostic efficiency on both the validation and test sets, surpassing pulmonologists’ assessments across multiple metrics. We believe that our proposed artificial intelligence-assisted diagnostic system, which operates without interrupting the procedure, has the potential to support pulmonologists during EBUS-TBB by shortening the time required to differentiate benign from malignant lesions and by identifying optimal biopsy sites. This may reduce biopsy failure and the number of sampling attempts, lower procedure-related risks, and ultimately improve the diagnostic yield of EBUS-TBB.

In addition to achieving strong diagnostic performance, the incorporation of self-supervised contrastive learning substantially enhances model generalizability while reducing reliance on large manually annotated datasets. This property is particularly advantageous in ultrasound-based applications, where labeling is time-consuming and operator-dependent. These strengths further support the potential clinical applicability and scalability of the proposed framework.

To our knowledge, this is also the first study to present an end-to-end CAD architecture specifically developed for the real-time classification of full-length EBUS videos in clinical settings. However, this study was based on a single-center dataset, and its retrospective design may limit the generalizability of the findings. A multicenter, prospective study is needed to confirm the model’s robustness across diverse patient populations.