1. Introduction

Schizophrenia is a serious psychiatric disorder that affects a person’s thoughts, speech, behavior, emotions, and physical and social well-being. Schizophrenia affects around 24 million (0.32%) people worldwide according to the World Health Organization (WHO). According to the National Mental Health Survey (NMHS) in India, the occurrence and prevalence of schizophrenia spectrum disorders were examined during 2015 and 2016 through a multistage, stratified, random cluster sampling technique [1]. The lifetime prevalence of schizophrenia spectrum disorder was found to be about 1.41%, and nearly 0.42% of people had a diagnosis of schizophrenia at the time of the survey. Schizophrenia is characterized by impairments in the way things are perceived and by changes in behavior. This causes psychosis, which may affect the personal, family, social, educational, and occupational functioning of a person, since they live in a world that is disconnected from reality. Participants who show at least one of the symptoms of delusions, hallucinations, or disorganized speech during a period of one month are included in this study. Further, this disorder is typically diagnosed between the late teens and early thirties. In males, it is found earlier, between late adolescence and the early twenties, whereas in females it is found between the early twenties and early thirties. Participants who show a history of autism spectrum disorder, communication disorder in childhood, or depressive or bipolar disorder are excluded from this study. This disorder is more commonly found in men than in women. Schizophrenia shows a variety of symptoms, which makes diagnosis and treatment very difficult and makes it hard to recognize a person with schizophrenia. Experts view schizophrenia as a spectrum of conditions.

Signs and symptoms are classified based on the characteristics of the brain’s cognitive functions. The first kind of symptom comprises positive or psychotic symptoms. The positive symptoms are characterized by the following:

Delusion: The affected person has false beliefs and is strongly convinced of them despite contradictory evidence.

Hallucinations: The affected people can hear unusual voices or sounds.

Disorganized or incoherent speech: People might have problems conveying their thoughts and find it difficult to organize them while speaking. Listeners find their speech difficult to understand.

Grandiosity: The person feels superior to others.

Suspicion: The person frequently feels scared, and becomes suspicious of everything around him/her.

The negative symptoms are characterized by emotional withdrawal, disinterest in appearance or personal hygiene, poor social involvement, lack of spontaneity, and difficulty in abstract thinking. Apart from these positive and negative symptoms, patients also show cognitive symptoms characterized by poor memory, poor attention, low processing speed, impaired visual and verbal learning, substantial deficits in reasoning, and difficulties in planning and problem-solving. Genetic predisposition, together with environmental, social, and psychological factors, causes abnormalities in neurodevelopmental features; this leads to brain dysfunction and an imbalance of brain chemicals, which results in schizophrenia. Analyzing these factors and symptoms through machine learning helps to enhance the performance of schizophrenia diagnosis.

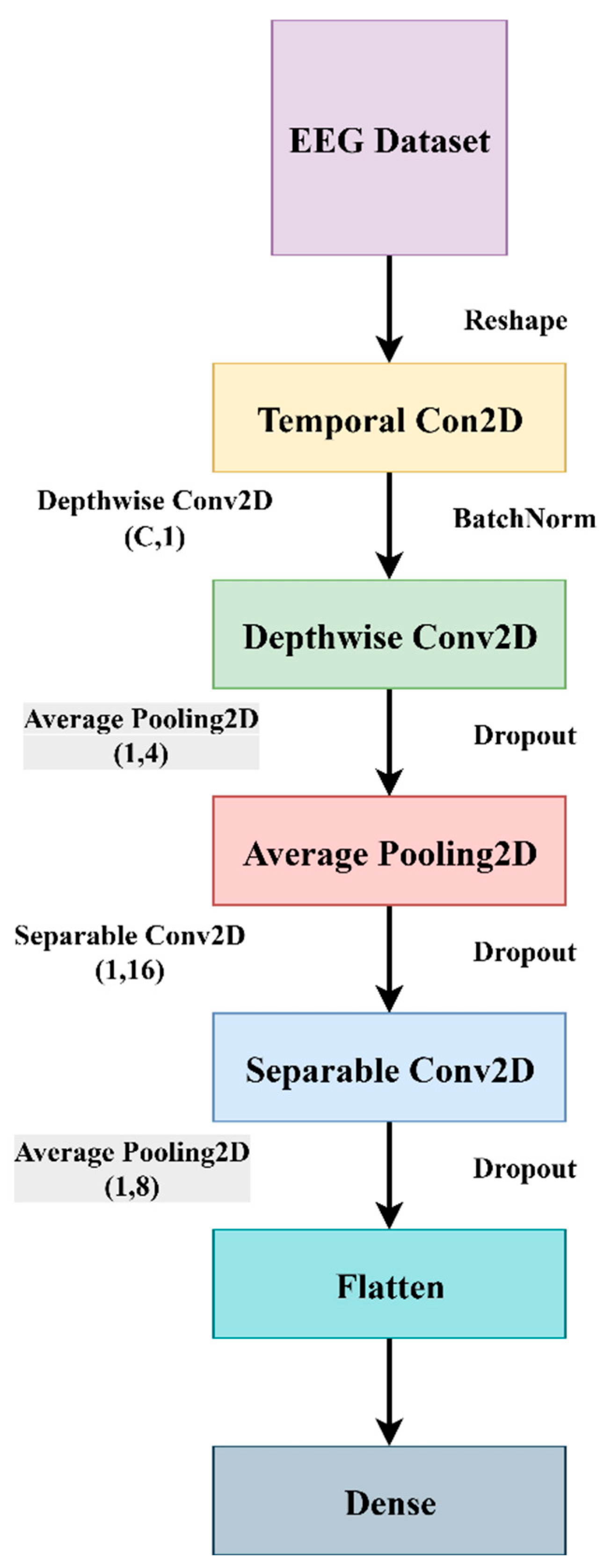

EEGNet is the most efficient and compact convolutional neural network (CNN) architecture, mainly suitable for EEG-based brain–computer interface (BCI) tasks. This method is highly effective in the detection of schizophrenia [

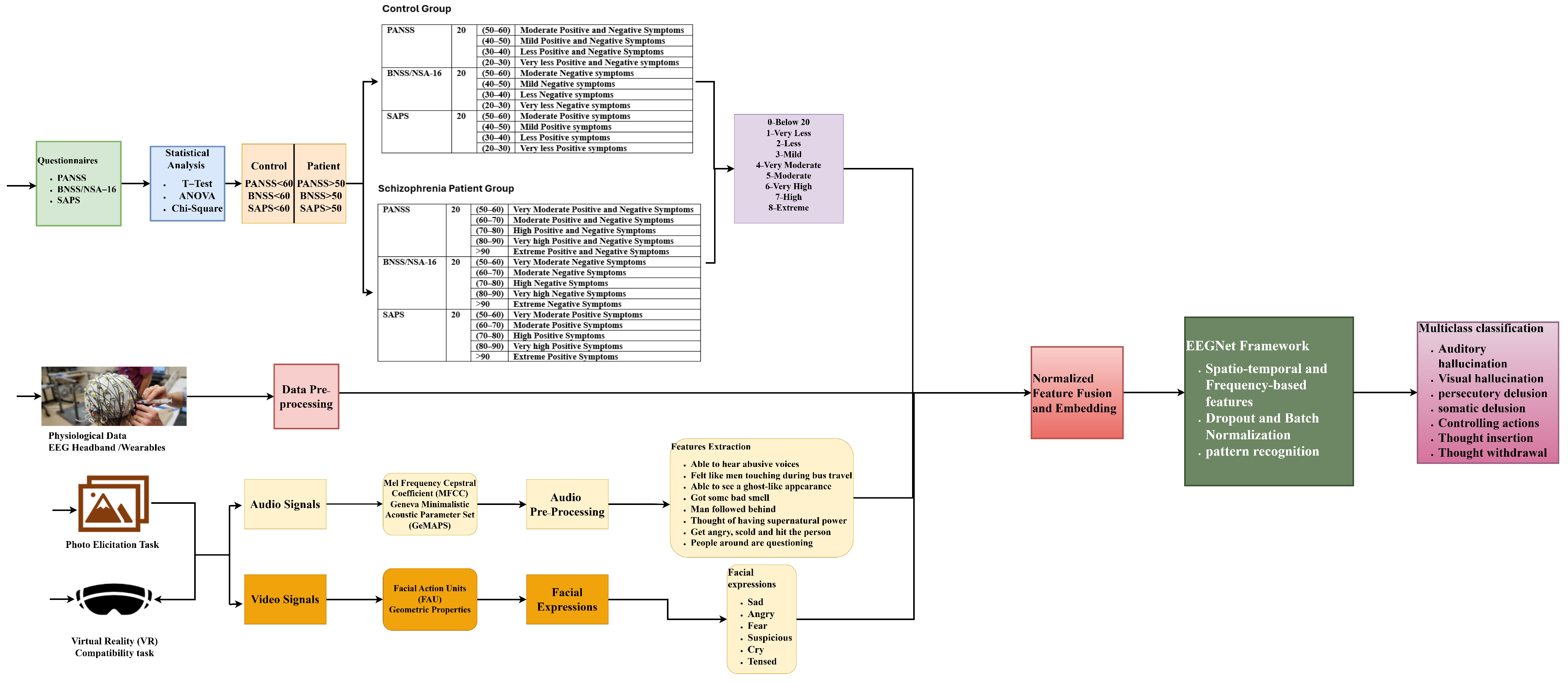

2]. This architecture mainly extracts spatio-temporal and frequency-based features from EEG data. The diagnosis process is carried out by extracting physiological data, either EEG/ECG or MRI data, textual data through questionnaires, video signals through photo elicitation tasks, and speech signals through VR stimuli assessment tasks from the control group and schizophrenia patients. These raw data undergo pre-processing, where the artifacts and noises are removed before being fed into a federated learning network. Applying machine learning algorithms, the features and patterns are trained in a model to classify the presence of schizophrenia. This paper mainly concentrates on the questionnaire and how the system behaves when the publicly available dataset is fed into the proposed EEGNet architecture.

2. Related Work

This article focuses on the use of EEGNet in the diagnosis of schizophrenia by statistically analyzing self-report questionnaires and studying the traits of schizophrenia using textual and physiological data aspects. Various data privacy preservation techniques are also examined, which improve the system’s functionality.

For the diagnosis of schizophrenia, a prediction model is developed using aperiodic neural activity, which has been linked to the excitation–inhibition balance and neural spikes in the brain [3]. A questionnaire and resting-state electroencephalogram (EEG) are used to investigate the link between anomalies in the first episode of schizophrenia spectrum psychosis (FESSP) and to study the intensity of symptoms. The severity of symptoms is assessed through assessment tools such as the Brief Psychiatric Rating Scale (BPRS), the Scale for Assessment of Negative Symptoms (SANS), and the Scale for Assessment of Positive Symptoms (SAPS). The EEG data were collected using a 60-channel cap based on the 10–20 international system, and the power spectral density of each electrode was measured for every individual. The aperiodic offset and exponent features show a correlation of r = 0.89 (p < 0.001).
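As a rough illustration of what the aperiodic offset and exponent describe, the sketch below fits a straight line to a power spectrum in log-log space, assuming the model log10(P) = offset − exponent · log10(f). This is a simplified stand-in, not the procedure of [3]: it ignores periodic peaks, and the function name `fit_aperiodic` and the synthetic spectrum are assumptions made for the example.

```python
import math

def fit_aperiodic(freqs, power):
    """Least-squares line through (log10 f, log10 P); returns (offset, exponent)."""
    xs = [math.log10(f) for f in freqs]
    ys = [math.log10(p) for p in power]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    offset = mean_y - slope * mean_x
    # The exponent is reported as a positive number for a decaying spectrum.
    return offset, -slope

# Synthetic 1/f-like spectrum (invented for illustration): offset 1.5, exponent 1.2.
freqs = [float(f) for f in range(2, 41)]
power = [10 ** 1.5 / f ** 1.2 for f in freqs]
offset, exponent = fit_aperiodic(freqs, power)
```

On real EEG, oscillatory peaks (for example in the alpha band) bias a plain regression, which is why dedicated spectral parameterization methods are normally preferred.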

Several studies have explored the characteristics and benefits of multimodal fusion, which is used to capture complex emotional and behavioral patterns that are associated with mental illness [4]. A study of mental health videos on the Douyin platform illustrates how different modalities, such as visual, linguistic, and textual cues, help in understanding how people think and talk about mental illness.

Detection of schizophrenia is improved by using two methods, namely Automatic Speech Recognition (ASR) and Natural Language Processing (NLP) [5]. Word Error Rate (WER) is used to measure the quality of the ASR output. Similarly, error type and position are used to examine the character of the speech qualitatively. The accuracy of classification is improved by measuring the word similarity. To compare automatic and manual transcripts, two random forest classifiers were used. The classification accuracy was 79.8% with manual transcription and 76.7% with automatic transcription. The accuracy of classification was improved when NLP and ASR were combined.
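For reference, WER is conventionally the word-level edit distance (substitutions, deletions, and insertions) between the ASR output and a reference transcript, divided by the number of reference words. The sketch below shows that standard definition; the function name and the example sentences are invented for illustration and are not taken from [5].

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the distance between the reference prefix seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, substitution))
        prev = curr
    return prev[-1] / len(ref)

# One substitution ("voices" -> "voice") and one deletion ("to") over six words.
wer = word_error_rate("the voices told me to leave", "the voice told me leave")
```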

An automatic EEG detection method for the early diagnosis of schizophrenia is designed using a vision transformer [6]. Nineteen electrode channels placed according to the international 10–20 system are used to map 1D EEG sequence data into a 3D image, which preserves the temporal and spatial characteristics. The performance of the model is evaluated through leave-one-subject-out cross-validation and 10-fold cross-validation, covering both the subject-independent and subject-dependent categories. The model’s average accuracy was 98.99% for the subject-independent category and 85.04% for the subject-dependent category. This supports early diagnosis and treatment.
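Leave-one-subject-out cross-validation holds out all segments of one subject per fold, so no subject contributes to both training and testing. The sketch below shows only the splitting logic; the helper name and the subject labels are hypothetical and do not come from [6].

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test

# Six EEG segments recorded from three (hypothetical) subjects.
subject_ids = ["s1", "s1", "s2", "s2", "s2", "s3"]
folds = list(leave_one_subject_out(subject_ids))
```

In contrast, a plain 10-fold split over segments can place segments from the same subject on both sides of the split, which corresponds to the subject-dependent setting.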

Multimodal MRI and deep graph neural networks are used for extracting neuroimaging modalities to assess the presence of schizophrenia [7]. This framework reveals region-specific weight irregularities that are linked to transcriptomic features. Thus, the integration of neurobiological and behavioral data has proved to be a promising technique for understanding the heterogeneity of the disorder. Similarly, machine learning models integrated with neuroimaging modalities are used to differentiate schizophrenia, bipolar disorder, and borderline personality disorder by capturing multimodal features in order to improve the diagnostic accuracy.

An enhanced system with a single-channel EEG can be used to diagnose schizophrenia automatically with little input data [8]. To create the model, knowledge distillation and transfer learning are applied to this EEG data. The EEG data are converted to images with the continuous wavelet transform (CWT), which allows previously learned (pre-trained) image models to be reused and improves the effectiveness of the system. The automatic diagnosis of schizophrenia can be improved by expanding the number of pre-trained models in the image domain. An accuracy of 97.81% is achieved by integrating self-distillation and VGG16 on the P4 channel.

To extract the spatial and temporal patterns from the EEG, temporal and spatial convolutions are used. A novel deep learning method called CALSczNet is applied to improve the detection performance [9]. The technique uses local attention (LA), temporal attention (TA), and long short-term memory (LSTM) to address the discriminative characteristics of non-stationary EEG signals. Using the publicly available Kaggle dataset for simple sensory tasks, the model’s performance is assessed through 10-fold cross-validation. The model achieved about 98.6% accuracy, 98.65% sensitivity, 98.72% specificity, and a 98.65% F1-score. The model is capable of detecting schizophrenia at an early stage.
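The metrics quoted throughout this section follow from the four cells of a binary confusion matrix. The sketch below uses the standard definitions; the counts are invented for illustration and are unrelated to the results reported in [9].

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard metrics from true/false positive and negative counts."""
    sensitivity = tp / (tp + fn)   # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }

# Hypothetical counts: 100 patients (90 detected) and 100 controls (95 cleared).
metrics = binary_metrics(tp=90, fp=5, tn=95, fn=10)
```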

Resting-state functional magnetic resonance imaging (rs-fMRI) plays an important role in analyzing the brain’s functional connectivity for diagnosing schizophrenia at an early stage [10]. To determine the association of brain connectivity data, graph attention networks (GAT) are utilized to derive node-level features. The integration of the multi-graph attention network (MGAT) and a bilinear convolution (BC) neural network makes measuring functional connectivity easier. Grid search and multistage cross-validation are used in the MGAT-BC model to assess performance. This approach improves the diagnosis of schizophrenia by capturing topological aspects with an accuracy of 92%.

The overlap of symptoms of schizophrenia with other diseases can be resolved by a data-driven diagnosis that uses EEG data to identify brain connection biomarkers [11]. By extracting the EEG spectral power data, a new feature interaction-based explainability technique for multimodal explanations is created. To find the biomarkers for neuropsychiatric illnesses, the effects of schizophrenia on the alpha, beta, and theta frequency bands are studied using explainable machine learning models. The mean and standard deviation across folds were used to evaluate the model’s performance. The model showed a mean accuracy of 0.73 and a mean F1-score of 0.70, with standard deviations of 0.09 for accuracy and 0.13 for F1-score.

The nature of psychotic episodes and the fact that symptoms vary from person to person have made it difficult to identify them [12]. Important information may occasionally be omitted when information is extracted from electronic health records (EHR). To diagnose psychosis, admission notes are analyzed using Natural Language Processing (NLP) techniques. The models are trained using keywords, and the algorithms are assessed against a rule-based methodology. The effectiveness of two models was measured. The first model, an XGBoost classifier that uses term frequency-inverse document frequency (TF-IDF) features extracted from the notes through expert-curated keywords, achieved the better result, with an F1-score of 0.8881. The second model achieved an F1-score of 0.8841 using BlueBERT on the same notes.
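To make the TF-IDF features concrete, the sketch below computes one common variant: term frequency within a note multiplied by the logarithm of the inverse document frequency. The exact weighting used in [12] is not stated here, so this variant is an assumption, and the three short notes are invented examples rather than clinical data.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Return one {term: weight} dict per document, using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many notes each term appears at least once.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights

notes = [
    "patient reports hearing voices",
    "patient denies hearing voices",
    "patient reports paranoid delusions",
]
weights = tf_idf(notes)
```

A term that occurs in every note, such as "patient" here, receives a weight of zero, whereas a term found in a single note receives the largest weight. Restricting the vocabulary to expert-curated keywords would amount to filtering the terms before weighting.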

Recent studies have focused on the diagnosis of schizophrenia and on analyzing the severity of symptoms using multimodal data. Self-supervised speech representations are used to assess the symptoms of schizophrenia [13]. Multimodal biomarkers are used for measuring the severity of individual symptoms. These biomarkers are used for capturing speech and facial signals in psychiatric assessment. The effectiveness of speech, language, and orofacial signals is studied for remotely assessing the positive, negative, and cognitive symptoms.

P300 is utilized to examine the processing, stimulus-based responses, and the instability of brain disorders [14]. When tracking the locations of the brain during the cognitive process, more emphasis is placed on trial-to-trial variability (TTV). In that study, TTV in a time-varying EEG network across the beta1, beta2, alpha, theta, and delta bands is examined for the diagnosis of schizophrenia. According to the performance evaluation, a cross-band time-varying network can successfully extract the characteristics of schizophrenia with an accuracy of 83.39%, a sensitivity of 89.22%, and a specificity of 74.55%.

Social media-based multimodal data is also used to identify the early signs of mental distress, which highlights the role of digital behavioral footprints in screening [15]. Digital footprints represent the participant’s status, comments, likes, language styles, sleep–wake patterns, and social activity, which reflect cognitive and emotional fluctuations. These modalities are analyzed using multimodal machine learning models and their performance is evaluated.

Schizophrenia patients experience disturbances in emotion, expression, and social interaction, and conventional assessment methods depend upon in-person visits. This limitation can be addressed by developing a remote, multimodal digital system that helps physicians assess the emotional dynamics of patients in remote areas [16]. This method uses a dialog-based human–machine interaction and multimodal markers that can detect and track the emotional changes in schizophrenia patients.

Anxiety symptoms in social anxiety disorder (SAD) are predicted using machine learning algorithms through multimodal data from Virtual Reality (VR) sessions [17]. The severity of various anxiety symptoms is assessed through questionnaires and six VR sessions in which the participants interact with virtual characters and perform meditation-based relaxation exercises. Video recordings and physiological data are collected from participants during the VR sessions. The Extended Geneva Minimalistic Acoustic Parameter Set (eGeMAPS) is used to extract the acoustic features through the openSMILE toolkit. Core symptoms and cognitive symptoms of SAD were evaluated through CatBoost and XGBoost models.

Digital assessment and monitoring of schizophrenia patients can be performed remotely by developing a scalable multimodal dialog platform [18]. This system employs a virtual agent to carry out automated tasks using the Neurological and Mental Health Screening Instrument (NEMSI), a multimodal dialog system. Twenty-four participants diagnosed with schizophrenia were assessed through the PANSS, BNSS, CDSS, CGI-S, AIMS, and BARS scales; speech signals were extracted through PRAAT, and facial expressions were extracted through MediaPipe and the FaceMesh algorithm. Speech metrics (mean AUC: 0.84 ± 0.02) performed better than facial metrics (mean AUC: 0.75 ± 0.04) in classifying patients from controls. Speech and facial metrics showed test–retest reliability above 0.6 (moderate to high).

Communicative and expressive features that distinguish schizophrenia patients from healthy controls are evaluated by developing a multimodal assessment model using machine learning [19]. Thirty-two participants with schizophrenia were assessed on the Assessment Battery for Communication (ABaCo) for inter-rater reliability. Linguistic and extralinguistic scales assess communicative and gestural abilities. This assessment consists of 72 items in the form of interview interactions, which were recorded as about 100 short clips with a duration of 20–25 s each. The trained decision tree model achieved a mean accuracy of 82%, a sensitivity of 76%, and a precision of 91%.

Early diagnosis of mental health conditions among corporate professionals can be supported by developing an emotion-aware ensemble learning (EAEL) framework [20]. This framework assesses facial expressions and typing patterns. Facial expressions were captured through webcam interaction, and cognitive and motor processes were captured through typing patterns. SVM, CNN, and RF algorithms are used in this framework to capture emotions such as happiness, sadness, anger, surprise, disgust, fear, neutrality, and confusion, together with typing-task measures such as average typing speed, key hold time, key latency time, number of typos, and stress level. The EAEL framework showed an accuracy of 0.95, a precision of 0.96, a recall of 0.94, and an F1-score of 0.95.

To analyze schizotypy traits across the psychosis spectrum, an uncertainty-aware model is developed for extracting acoustic and linguistic features [21]. Of the 114 participants recruited for the study in [21], 32 individuals with early psychosis and 82 individuals with low or high schizotypy were identified. The performance of speech and language models in classification and of multimodal fusion models is analyzed. The Multidimensional Schizotypy Scale (MSS) and the Oxford-Liverpool Inventory of Feelings and Experiences (O-LIFE) were used for initial screening. Acoustic features were extracted using openSMILE and WhisperX. The model performs well across various interactions when employing random forest, linear discriminant analysis, and support vector machine models, with SHAP applied to the model predictions, achieving an F1-score of 83% and an expected calibration error (ECE) of 4.5 × 10⁻².
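The expected calibration error summarizes how far a classifier’s stated confidence is from its observed accuracy. The sketch below uses the common equal-width binning definition; the bin count, function name, and toy predictions are assumptions for illustration and may differ from the setup in [21].

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted mean of |accuracy - mean confidence| over equal-width bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(accuracy - mean_conf)
    return ece

# Toy predictions: two confident and correct, two less confident with one error.
ece = expected_calibration_error([0.95, 0.95, 0.65, 0.65], [True, True, True, False])
```

Lower values indicate better calibration; a value of 0 means that confidence matches accuracy in every bin.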

Acoustic speech features are extracted for distinguishing schizophrenia spectrum disorders (SSD) from healthy controls [22]. The speech markers are used to recognize the negative and cognitive symptoms. Participants take part in a speech-eliciting task in which they are asked to express their thoughts on viewing a picture. Prosody, temporal, spectral, articulatory, and rhythm dimensions are some of the parameters that are assessed using ML. An automated and objective architecture is also used for the detection of schizophrenia from multimodal behavioral features; it fuses feature-level and decision-level features and identifies which modality is responsible for the symptoms observed [23].

This work mainly focuses on the role of EEGNet in diagnosing schizophrenia through questionnaires and EEG data. The questionnaire data are analyzed through statistical approaches, and the EEG signals are pre-processed before being fed into the EEGNet network. Machine learning techniques are used to extract spatio-temporal and frequency-based features and to find patterns that distinguish healthy controls from patients with schizophrenia. Methods of preserving data privacy, which also enhance the performance of the system, are analyzed as well.

4. Results and Discussion

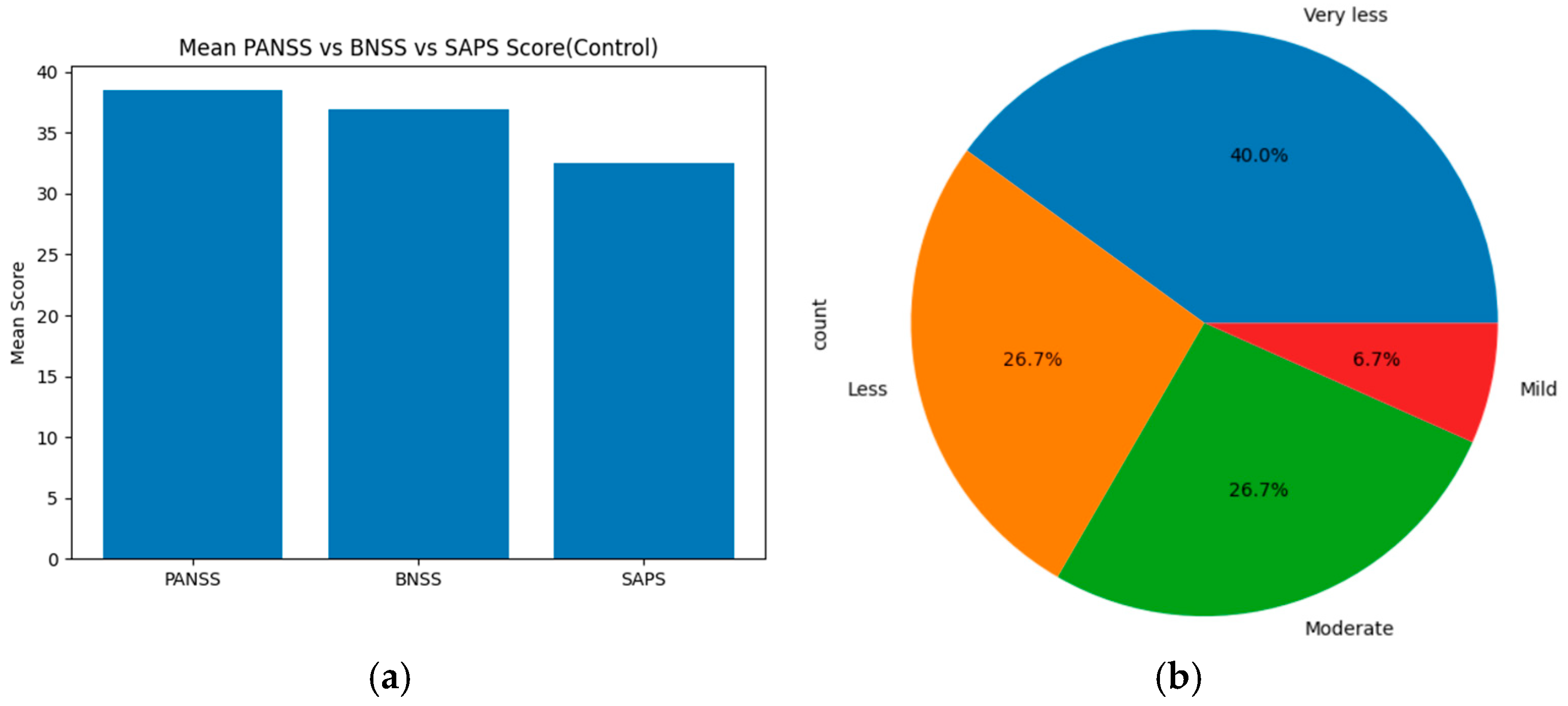

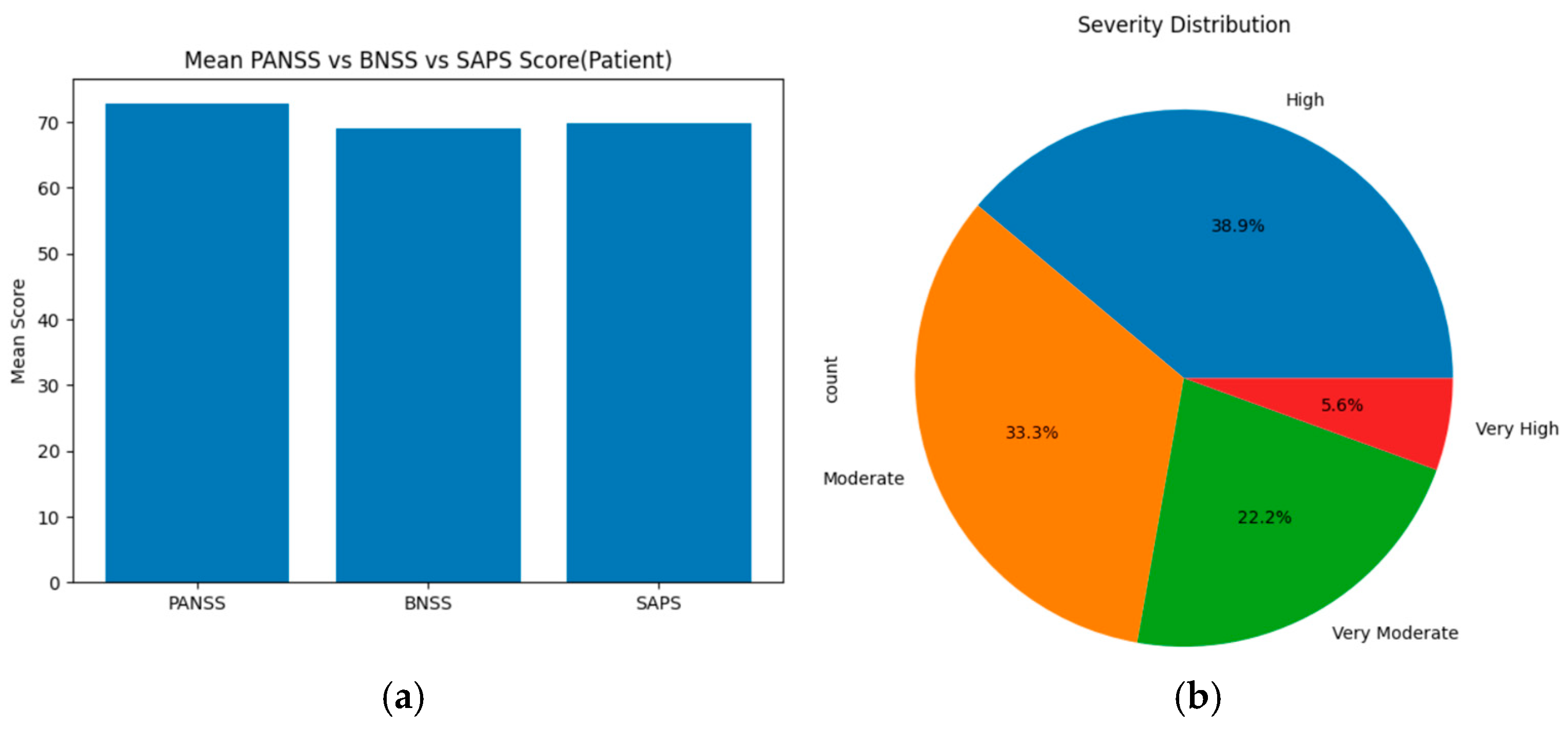

The statistical results obtained from the PANSS, SAPS, and BNSS scores indicate the severity of symptoms. For the healthy control group, based on the threshold, the severity levels were categorized as follows: ‘Mild, less, very less, very moderate, moderate, high, very high, and extreme’ for each assessment tool (PANSS, SAPS, and BNSS). These severity levels are compared using statistical methods, such as ANOVA, t-test, and chi-square, across all scales. On merging the total mean score and severity levels of PANSS, SAPS, and BNSS, the results show that PANSS displays the highest mean score of about 38. BNSS has a slightly lower score of around 37, and SAPS displays the lowest mean score of about 32–33. Based on the severity level distribution, nearly 40% of individuals show very less symptoms, 26.7% each show less and moderate symptoms, and 6.7% of individuals show mild symptoms. On applying statistical methods, ANOVA showed an F score of about 67.4797 and a p-value of 2.674 × 10⁻⁹, the Welch t-test showed a t-score of about −8.374 and a p-value of about 1.364 × 10⁻⁷, and the chi-square test showed a goodness of fit (GOF) value of about 24.8.

Figure 6a displays the mean score across PANSS, SAPS, and BNSS assessment tools of the control group, and (b) represents the severity distribution across three assessment tools of the healthy control group.
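The three test statistics named above can be written out directly from their textbook definitions. The sketch below is an illustration only: the function names, the score lists, and the category counts are invented, the chi-square helper assumes equal expected counts per category, and p-values are not computed because they require the corresponding distribution functions (library routines such as `scipy.stats.ttest_ind` with `equal_var=False` provide them).

```python
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and its approximate degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / (va + vb) ** 0.5
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

def one_way_anova_f(*groups):
    """F = between-group mean square / within-group mean square."""
    values = [x for group in groups for x in group]
    grand_mean = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (len(groups) - 1)) / (ss_within / (len(values) - len(groups)))

def chi_square_gof(observed):
    """Goodness-of-fit statistic against equal expected counts per category."""
    expected = sum(observed) / len(observed)
    return sum((o - expected) ** 2 / expected for o in observed)

low_scores, high_scores = [30, 32, 34], [40, 42, 44]   # invented scale totals
t_stat, dof = welch_t(low_scores, high_scores)
f_stat = one_way_anova_f(low_scores, high_scores)
gof = chi_square_gof([6, 4, 4, 1])                     # invented severity counts
```

With exactly two groups of equal size and variance, the ANOVA F value equals the square of the t statistic, which offers a quick consistency check.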

For the schizophrenia patients’ group, based on the threshold, the severity levels were categorized as follows: ‘Mild, less, very less, very moderate, moderate, high, very high, and extreme’ for each assessment tool (PANSS, SAPS, and BNSS). These severity levels are compared using statistical methods, such as ANOVA, t-test, and chi-square, across all scales. On merging the total mean score and severity levels of PANSS, SAPS, and BNSS, the results show that PANSS displays the highest mean score of about 73, indicating high positive, negative, and general symptoms. SAPS has a slightly lower score of around 70, and BNSS displays the lowest mean score of about 69. Based on the severity level distribution, nearly 38.9% of individuals show high symptoms, 33.3% show moderate symptoms, 22.2% show very moderate symptoms, and 5.6% show very high symptoms. Upon applying statistical methods, ANOVA showed an F score of about 44.827 and a p-value of 4.704 × 10⁻⁷; the t-test was not performed since it needs more than one sample in both the low and high groups; and the chi-square test showed a goodness of fit (GOF) value of about 35.473.

Figure 7a displays the mean score across PANSS, SAPS, and BNSS assessment tools of the schizophrenia patients’ group, and (b) represents the severity distribution across three assessment tools of the schizophrenia patient group.

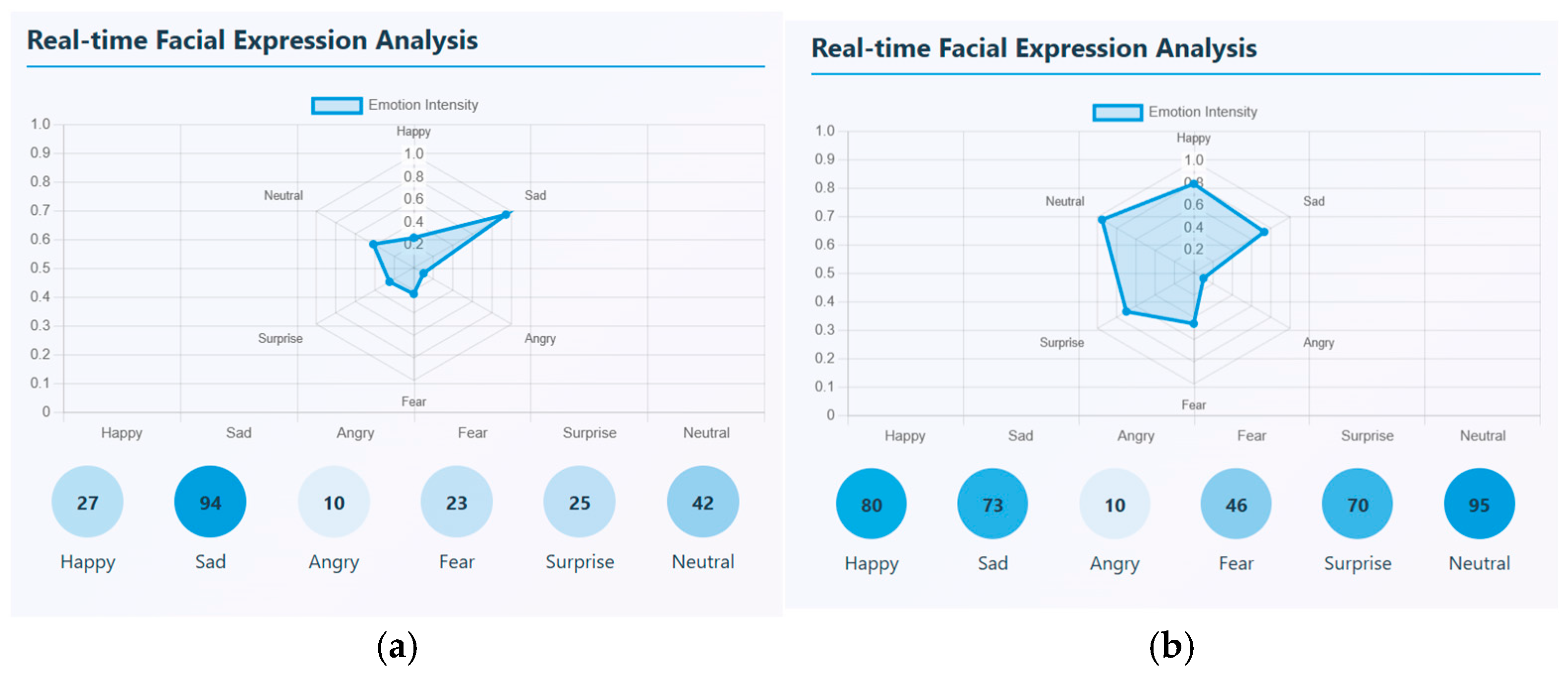

Photo elicitation and VR stimuli task data are captured in mp4 video format. In total, 20 videos of healthy controls and 20 videos of schizophrenia patients were recorded for each task. Facial expressions and speech signals are extracted through ‘PsycheScan AI’, a multimodal psychological disorder application. This application recognizes facial emotions such as happy, sad, angry, fear, surprise, and neutral (when no expression is given) and also estimates each emotion’s intensity. Facial emotions are detected in real time and summarized in an emotion intensity radar plot, which represents the strength of each detected facial emotion on a scale ranging from 0 to 1. The number of occurrences of each emotion in the video segment is also calculated. In the uploaded patient samples, the most dominant emotional state was sadness, with the highest intensity of about 0.6; in one sample, it occurred around 94 times. This sample showed a negative emotional pattern. Though emotions vary, sadness is the most frequent and intense emotion found in affected patients. Similarly, a sample of a healthy control was taken, and facial emotions were detected and estimated. Here, the most strongly expressed emotion was happy, with the highest intensity of about 0.8; the next highest emotion was neutral. This shows that, emotionally, a healthy person has a positive affect and shows emotional stability. A sample real-time facial expression of a schizophrenia patient and a healthy control is shown in Figure 8, where (a) represents the facial expression of a schizophrenia patient and (b) represents the facial expression of a healthy control.

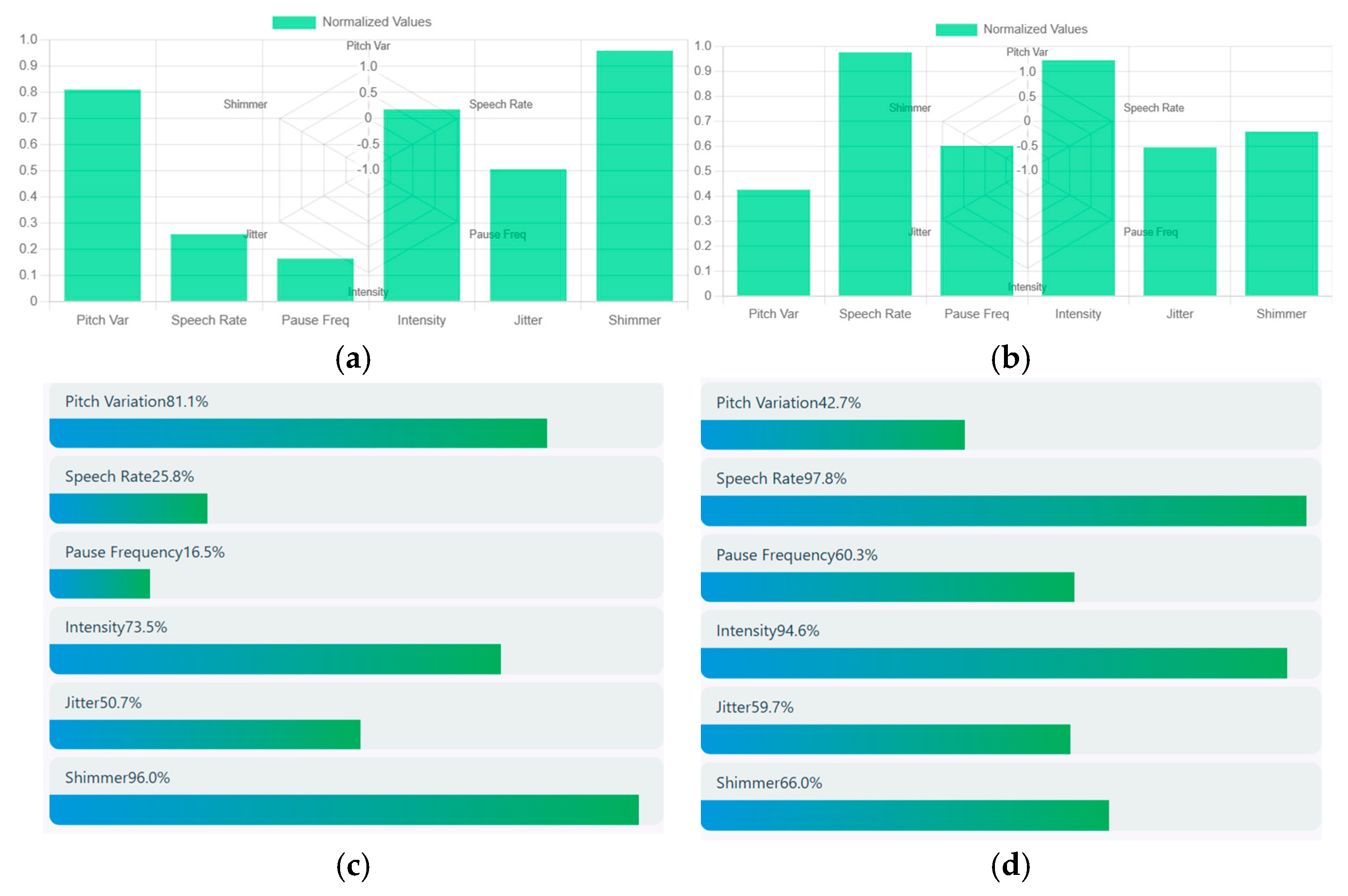

Speech signals are summarized in a bar chart of acoustic speech features such as pitch variation, speech rate, pause frequency, intensity, jitter, and shimmer. The speech signal from the above schizophrenia sample shows a pitch variation of about 0.8, which represents highly expressive speech, together with a slow speech rate of about 0.25. The voice is relatively loud, with an intensity of around 0.7, and shows moderate voice instability (jitter) of about 0.5 and high vocal instability (shimmer) of about 0.9. Hence, the acoustic analysis shows that the person was expressive but had slow and continuous speech with strong vocal intensity. Higher shimmer and moderate jitter represent emotional strain. Similarly, the speech signal of a healthy control showed moderate intensity of about 0.42 and very rapid speech of about 1. The intensity of speech was very loud with high vocal energy, with moderate jitter of about 0.58 and high vocal amplitude instability of about 0.70. Hence, the acoustic analysis shows that the person speaks loudly and quickly with frequent pauses, showing highly emotional speech. Increased shimmer and jitter show high vocal strain. A sample of normalized acoustic speech features of a schizophrenia patient and a healthy control is shown in Figure 9, where (a) represents the acoustic speech features of a schizophrenia patient and (b) represents the acoustic speech features of a healthy control. Similarly, a comparative visualization of various acoustic features of schizophrenia patients and healthy controls is depicted in (c) and (d).

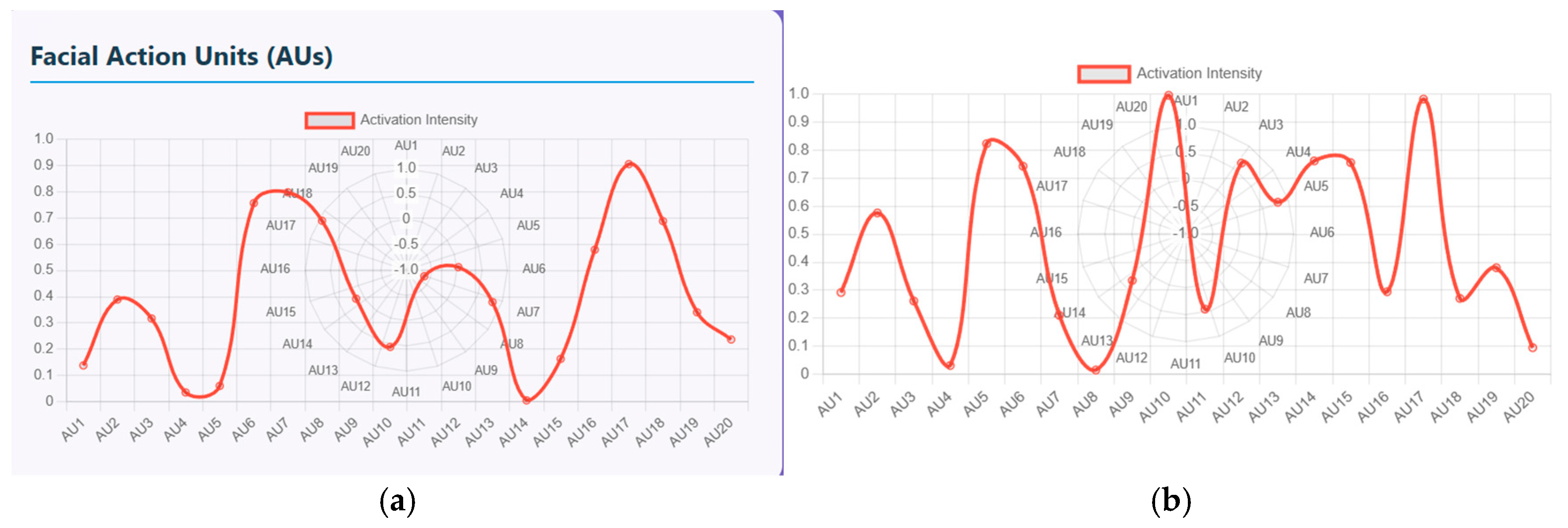

Furthermore, these facial emotions and speech signals can be quantified using facial action units (FAU) and vocal feature timeline. The FAU displays the activation intensity of different facial muscle movements. There are nearly 20 facial units ranging from AU1 to AU20 and the intensity ranging from 0 to 1.

Figure 10a shows the plot of measured intensity for each AU of schizophrenia patients. The AUs with high intensity, ranging from 0.8 to 0.9, represent muscle groups that are strongly activated, indicating chin raise and lip compression associated with disgust or frustration (AU17–19) and eye tightening and stress-related expressions (AU7/8). Similarly, lower intensities, ranging from 0 to 0.1, indicate minimal activation, with no anger and little brow lowering or cheek puffing. Similarly, (b) shows the plot of measured intensity for each AU of healthy controls. In this figure, AU10 and AU17/18/20 display near-maximum intensity of about 1, which represents strong facial expressions. AU5 and AU14 display high activation, ranging from 0.75 to 0.85, which represents eye widening and cheek movement. Here, the pattern is non-linear with multiple peaks, indicating mixed emotional expressions.

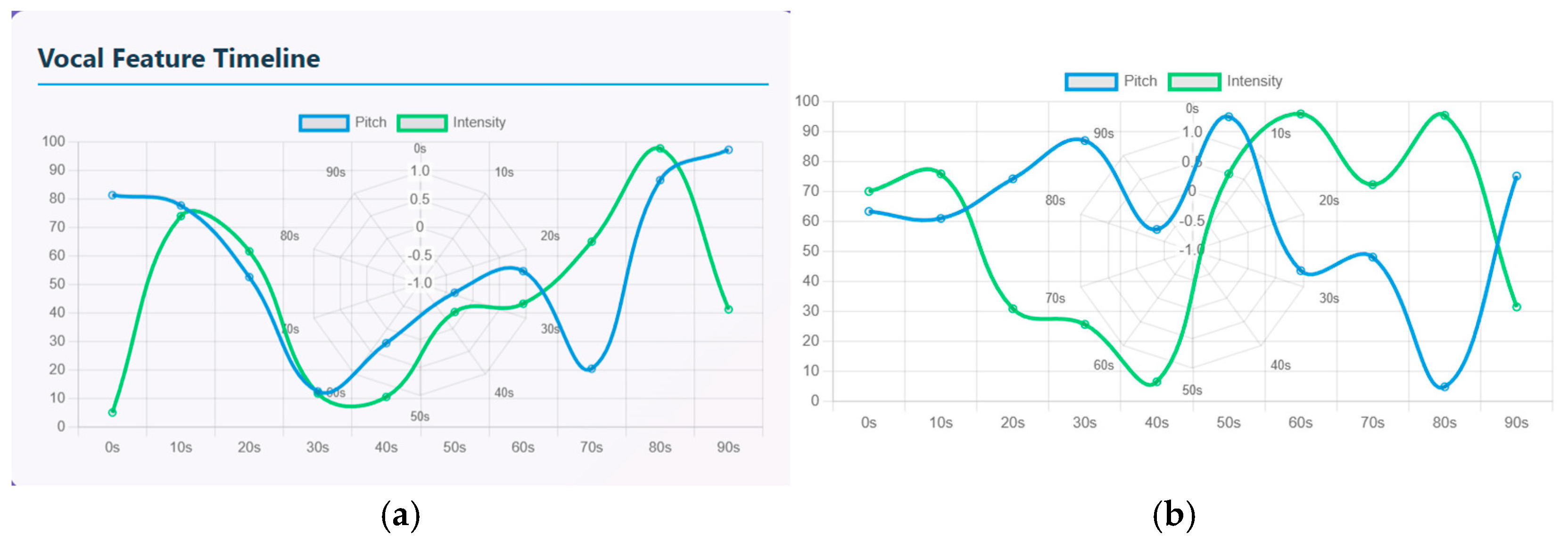

The vocal feature timeline is used to quantify the speech signals that compares pitch (represented by a blue line) and intensity (represented by a green line) over a 90-s timeline. Both the signals have a rise-and-fall waveform indicating emotional fluctuations. In schizophrenia patients, there is an increasing intensity and slight drop in pitch initially from the 0 to 20 s timeline. Intensity has a sharp rise from 0 to 75 and pitch is high at 80 where the patient starts with an expressive tone. From 20 to 40 s, there is strong drop in both intensity and pitch. This period represents reduced vocal responsiveness. From 40 to 60 s, the waves show diverging dynamics where the pitch rises more steeply than intensity. From 60 to 90 s, there is a strong peak where the pitch and intensity have the highest peak of about 85 to 95. Similarly in healthy controls, both the pitch and intensity display oscillatory patterns. The waveform shows repeated rise and fall that indicates shifts between low arousal and high arousal phases. From 0 to 20 s, there is a moderate rise in pitch (60–70) and intensity (70) that indicates steady pitch, then decrease in vocal energy. From 20 to 40 s, there is a sharp decline in pitch (55–60) and intensity (10–20) indicating low expression. From 40 to 60 s, both the pitch and intensity rise sharply, indicating strong emotional burst. From 60 to 80 s, intensity again rises to another peak of about 90 and pitch drops by about 40; that indicates mixed emotions and speech transitions. From 80 to 90 s, pitch rises again and intensity drops again, indicating high tone with less loudness.

Figure 11a represents the vocal feature timeline of schizophrenia patients and (b) represents the vocal feature timeline of healthy controls.

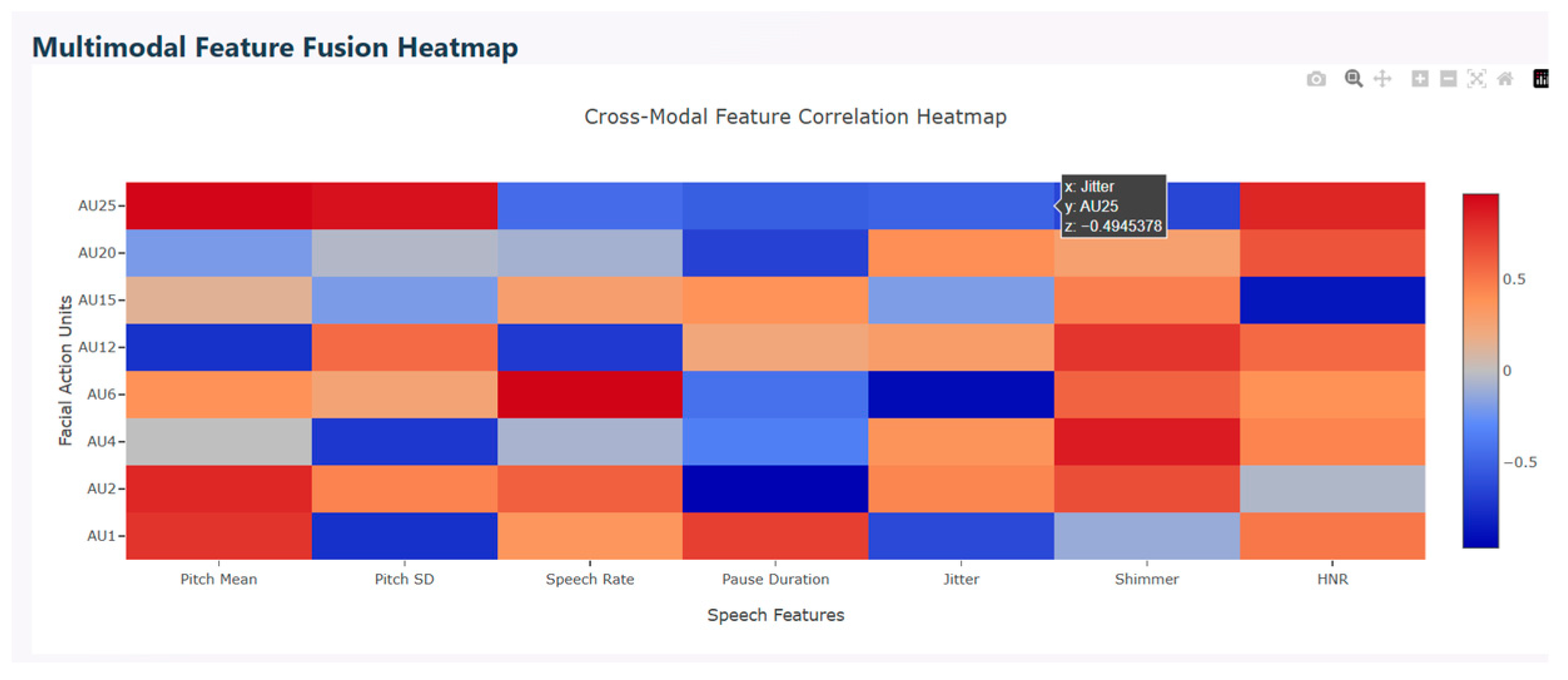

A multimodal correlation heatmap is used to find the relationship between facial action units (FAU) and speech features. It describes the interaction between facial expressions and vocal characteristics. The red color in the figure represents positive correlation, where the features increase together; the blue color indicates negative correlation, where the features move in opposite directions; and light shades indicate weak or near-zero correlation. Various AUs show strong positive correlation with speech features. AU25, AU2, AU12, and AU1 correlate with shimmer and harmonic-to-noise ratio, which represent high emotional intensity. AU6, AU15, and AU25 correlate with jitter, indicating that during calm vocal states, these facial expressions exhibit strong emotions and stabilized pitch. Mixed correlation is observed, with positive correlation for AU25 and AU2 and negative correlation for AU6 and AU15, indicating that upper facial movements correlate with higher pitch. Similarly, lower face tension correlates with stable pitch.

Figure 12 depicts the multimodal feature fusion heatmap.
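Each cell of such a heatmap is typically a Pearson correlation coefficient between one facial feature and one speech feature measured over the same time frames. The sketch below assumes that definition; the feature names are reused from the text, but the per-frame values are invented for illustration.

```python
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = mean(x), mean(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return covariance / (spread_x * spread_y)

def correlation_matrix(facial, vocal):
    """Nested dict: matrix[action_unit][speech_feature] = Pearson r."""
    return {
        unit: {name: pearson_r(unit_values, values) for name, values in vocal.items()}
        for unit, unit_values in facial.items()
    }

# Invented per-frame intensities: AU12 rises with shimmer, AU15 falls.
facial = {"AU12": [0.1, 0.4, 0.6, 0.9], "AU15": [0.9, 0.6, 0.4, 0.1]}
vocal = {"shimmer": [0.2, 0.5, 0.7, 1.0]}
matrix = correlation_matrix(facial, vocal)
```

Values near +1 would be drawn in red and values near −1 in blue under the color convention described above.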

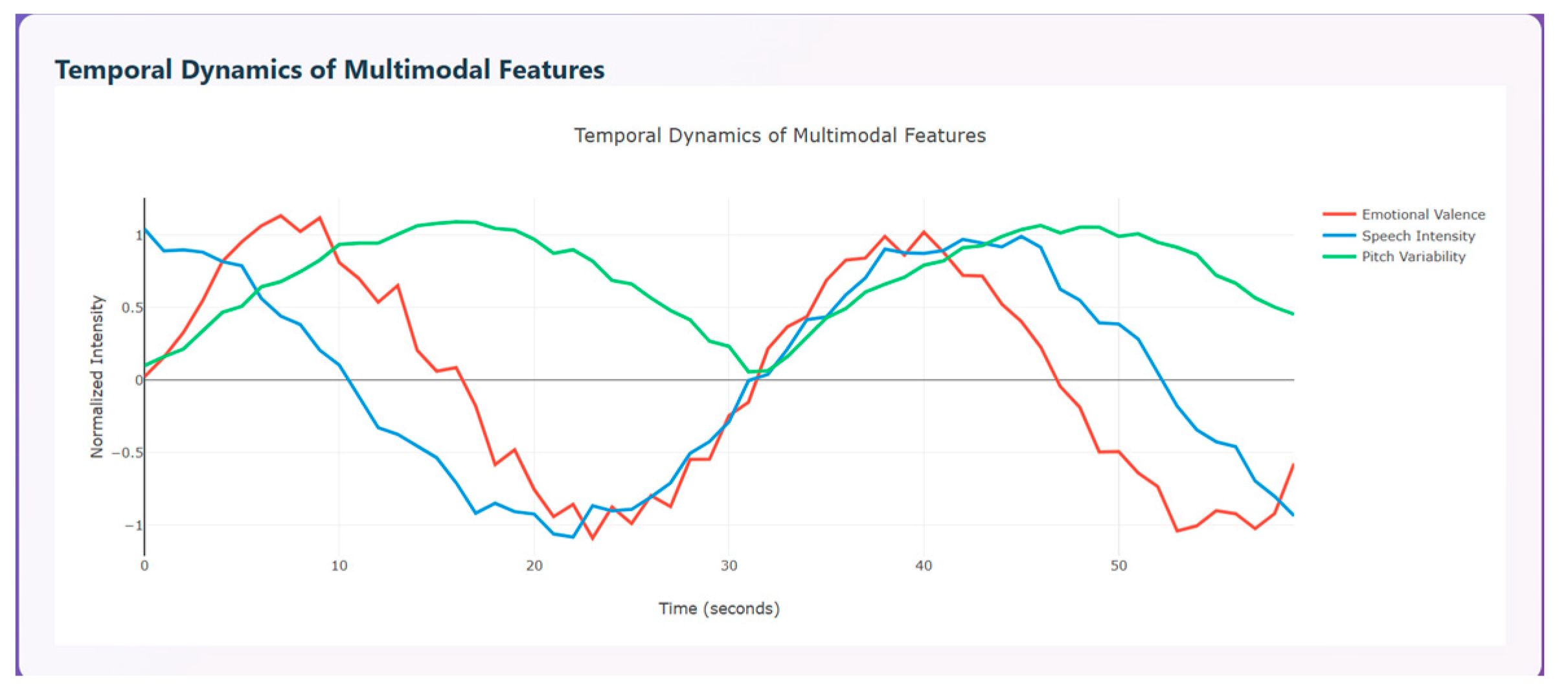

Temporal dynamics of multimodal features are used to compare the modalities over time (0–55 s). The three curves shown indicate how emotional change, represented in red, speech intensity, represented in blue, and pitch variability, represented in green, change with respect to time. All three modalities show clear ups and downs that indicate temporal coherence between emotions and the vocal system. The red curve initially has a peak around 5–10 s indicating pleasant emotion, a strong drop around 20–25 s indicating unpleasant emotion, and a subsequent rise again around 35–45 s and a drop around 50 s, displaying that a person shows a transition of emotions over time. The blue curve initially starts with high vocal energy, then drops steadily around 20–25 s, then rises around 35–40 s, and drops again around 45 s. This variation denotes that speech intensity is high during positive emotions and lowest during negative phases. The green curve initially rises sharply, then maintains stable, high changes around 10–35 s and declines at the end.

Figure 13 depicts the temporal dynamics of multimodal features.

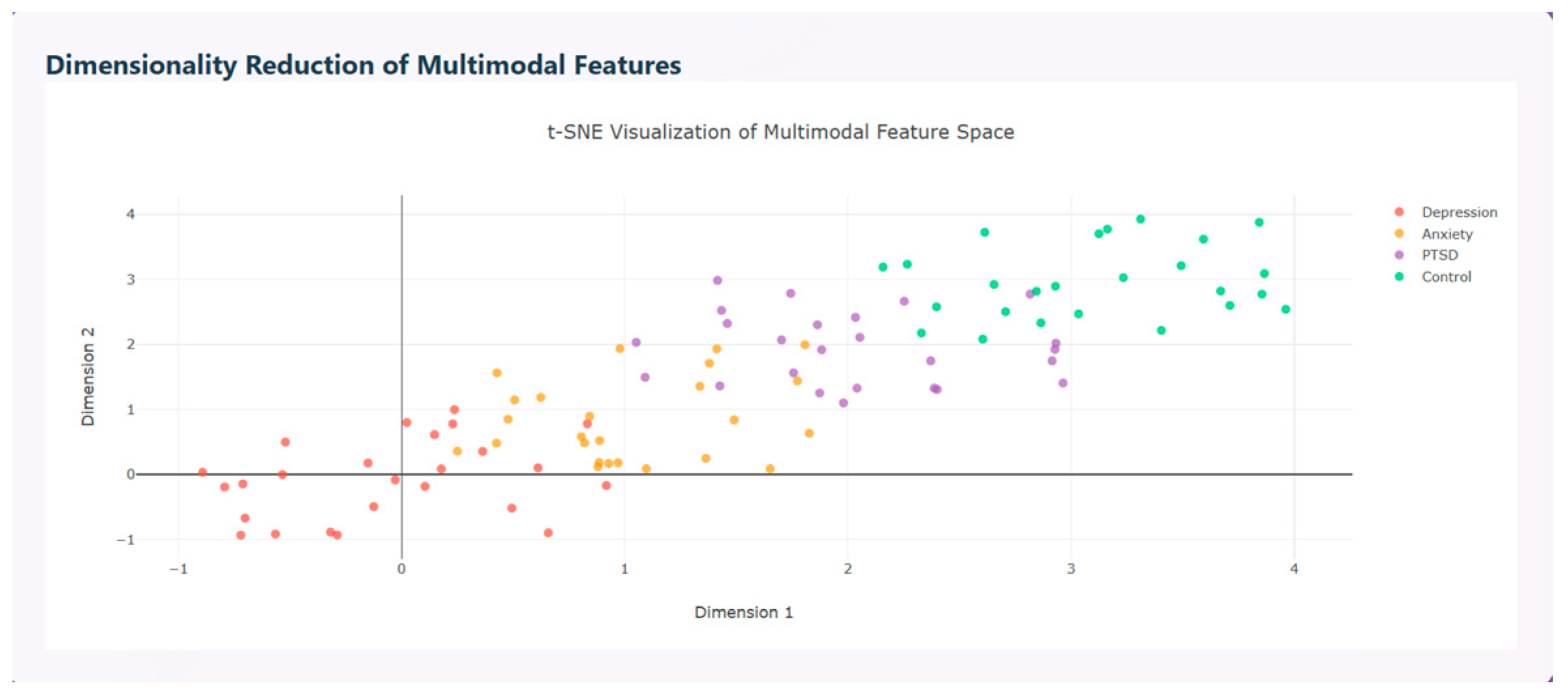

Multimodal features are visualized using t-SNE, which distinguishes the disorders based on the features extracted, as shown in Figure 14.

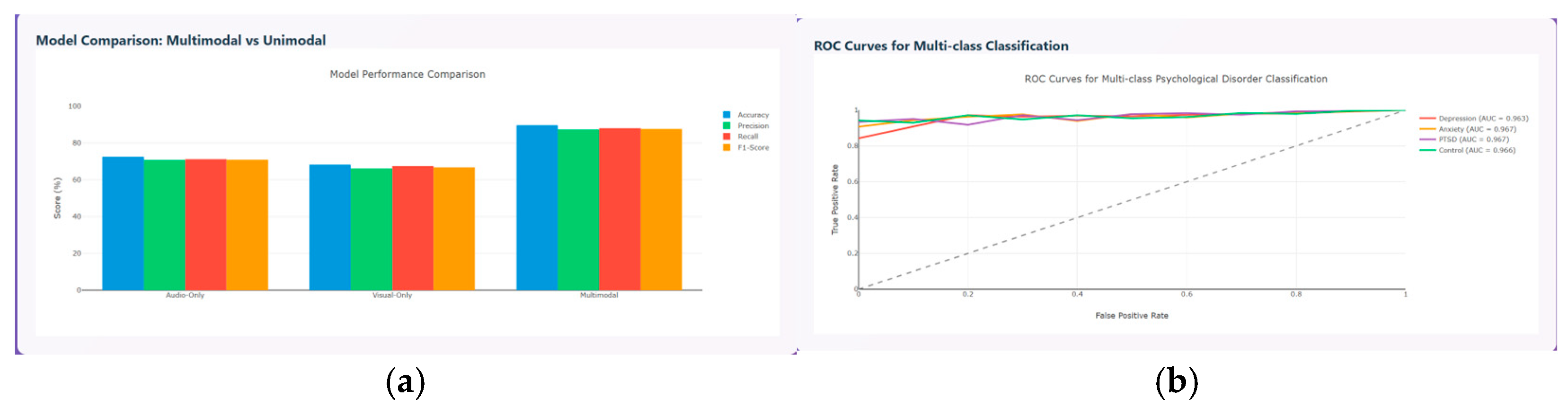

The model performance metrics are evaluated and compared between the unimodal and multimodal models in terms of accuracy, precision, recall, and F1-score. The results show that the multimodal model performs better than the unimodal model, with accuracy around 90%, precision around 85%, recall around 86%, and F1-score around 86%, as shown in Figure 15a. The ROC curves for the various psychological disorders are depicted in Figure 15b, where the ROC for anxiety and PTSD shows an AUC of about 0.967, depression of about 0.963, and control of about 0.966, respectively.
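The AUC of a ROC curve equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it from that pairwise definition for a single class; for several classes it would be applied one-vs-rest, which is an assumption about how the per-class values above were obtained. The labels and scores are invented.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))

# Invented scores: one positive (0.4) is ranked below one negative (0.5).
auc = roc_auc([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.4, 0.5, 0.3, 0.2])
```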

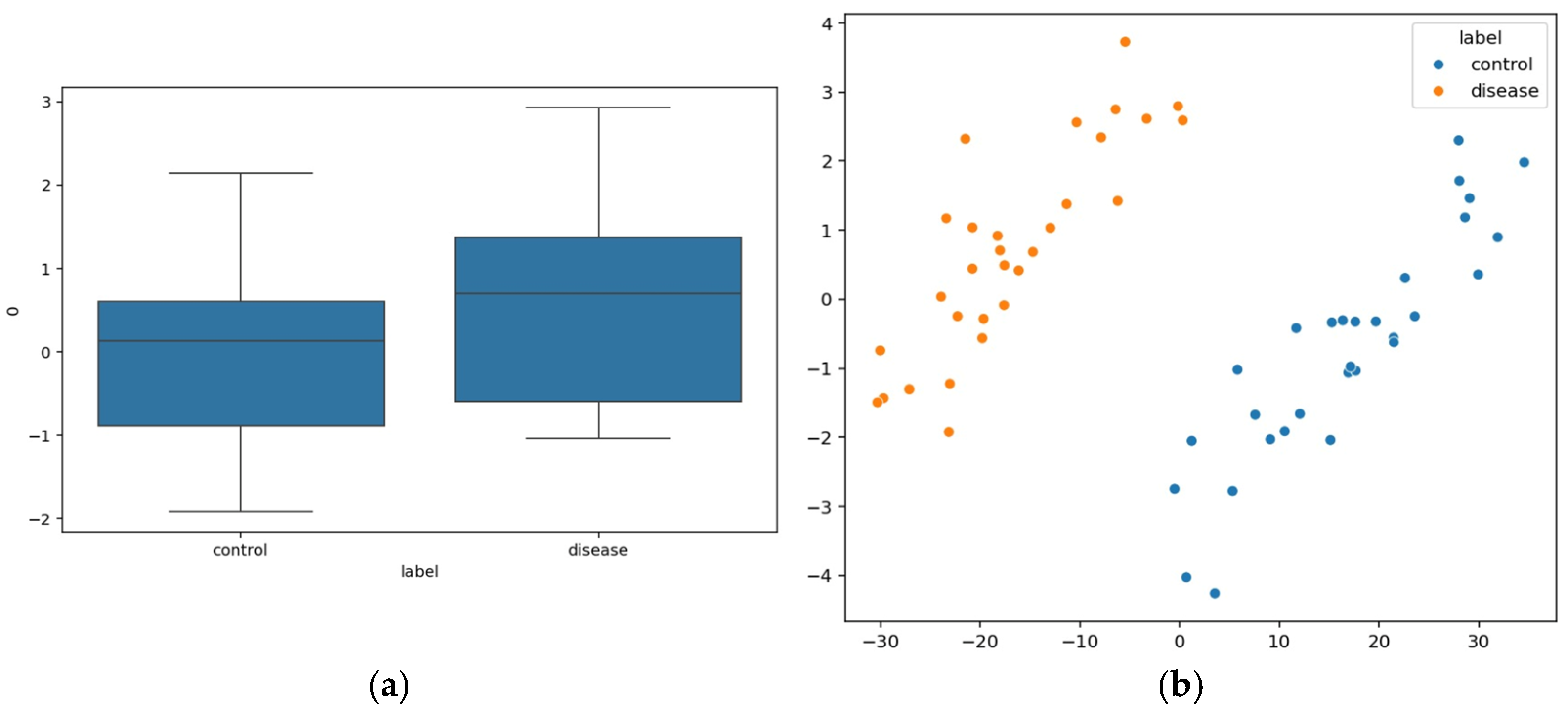

Speech signals that are extracted as quantifiable features are evaluated numerically through Mel-frequency cepstral coefficients (MFCCs), which describe the spectral shape of speech on a frequency scale that approximates how humans perceive sound.

Figure 16a depicts the MFCC1 difference between control and diseased patients. The schizophrenia patients’ group exhibits higher MFCC1 values, with a median of around 1, compared to the control group. The features are represented in a Principal Component Analysis (PCA) scatter plot that distinguishes control and patient data, shown in Figure 16b.

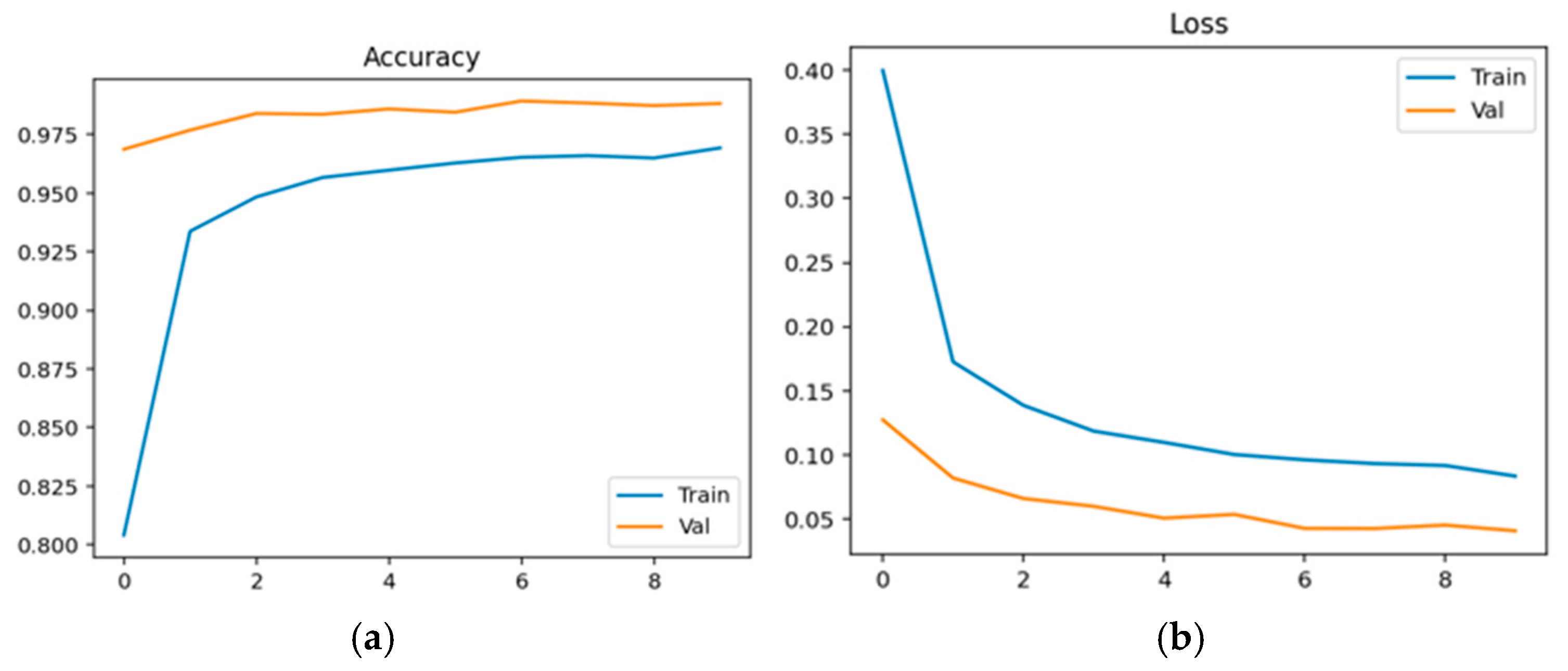

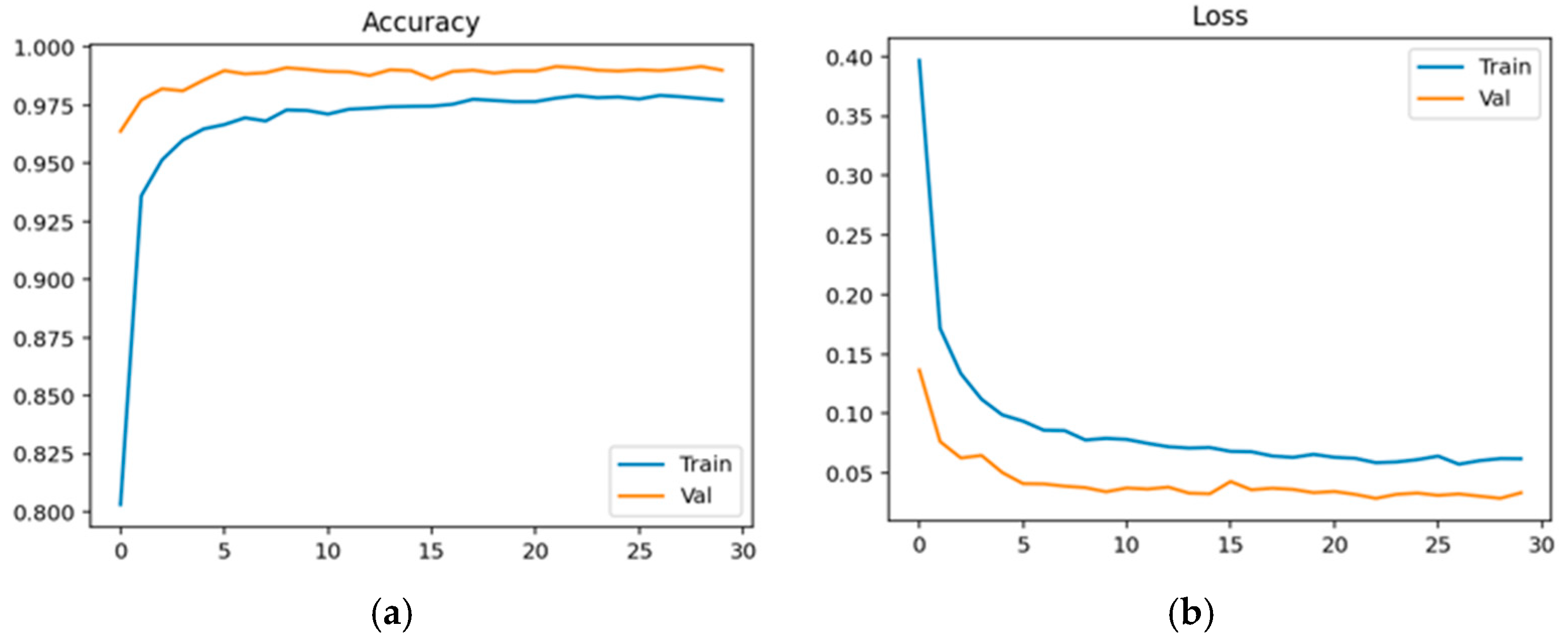
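To make the MFCC features discussed above more concrete, the sketch below shows the two steps that give them their name: a conversion from Hz to the mel scale and a discrete cosine transform (DCT-II) of log mel filterbank energies. It is a simplified illustration under stated assumptions, not the extraction pipeline used in this study. The function names are hypothetical, the `2595 * log10(1 + f / 700)` mel formula is one common variant, and the DCT is left unnormalized. Which output index corresponds to "MFCC1" depends on the toolkit's convention.

```python
import math


def hz_to_mel(f_hz):
    """Convert a frequency in Hz to mels (one common formula variant)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def cepstral_coefficients(log_mel_energies, n_coeffs):
    """Unnormalized DCT-II of log mel filterbank energies.

    Coefficient k summarizes the spectral envelope at increasing
    levels of detail; k = 0 reflects the overall log energy.
    """
    m = len(log_mel_energies)
    return [
        sum(e * math.cos(math.pi * k * (j + 0.5) / m)
            for j, e in enumerate(log_mel_energies))
        for k in range(n_coeffs)
    ]


# Hypothetical flat log-energy spectrum across 8 mel bands (NOT study data).
flat = [2.0] * 8
coeffs = cepstral_coefficients(flat, n_coeffs=4)
print(coeffs)
```

A perfectly flat spectrum places all of its weight in coefficient 0, so the higher coefficients vanish; differences between groups in a low-order coefficient therefore reflect differences in the broad shape of the speech spectrum.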

Physiological data obtained as EEG signals are pre-processed and sent to the EEGNet framework. The model is trained to classify two classes: healthy controls (class 0, with 2540 samples) and schizophrenia patients (class 1, with 3089 samples). The dataset contains 14 healthy controls and 14 schizophrenia patients. The EEG data is acquired with a lightweight 14-channel system that requires minimal technical training and is easy to set up, which makes it suitable for recording data in psychiatric settings. The 14 electrodes cover the frontal, central, and temporal regions. These EEG biomarkers help to capture the features relevant to schizophrenia by tracing the alpha and frontal theta irregularities that affect auditory and temporal responses. This framework also supports the integration of other modalities with enhanced performance. Recent studies have reported effective diagnosis of schizophrenia using 8–16 channel systems, indicating that critical neural biomarkers can be identified without high-density arrays.

The dataset is split into 80% training and 20% testing data. The training and validation accuracy and loss were evaluated for both the control group and the schizophrenia patient group. The model achieved a precision of 0.99, a recall of 0.98, and an F1-score of 0.99 for healthy controls, and a precision of 0.98, a recall of 0.99, and an F1-score of 0.99 for schizophrenia patients. The overall accuracy was 0.99 for a total of 5629 samples. Taking the macro and weighted averages, the ROC AUC reached 0.998.

Figure 17a displays the training and validation accuracy for healthy controls, and (b) displays the training and validation loss for healthy controls.

Figure 18a displays the training and validation accuracy for schizophrenia patients, and (b) displays the training and validation loss for schizophrenia patients.

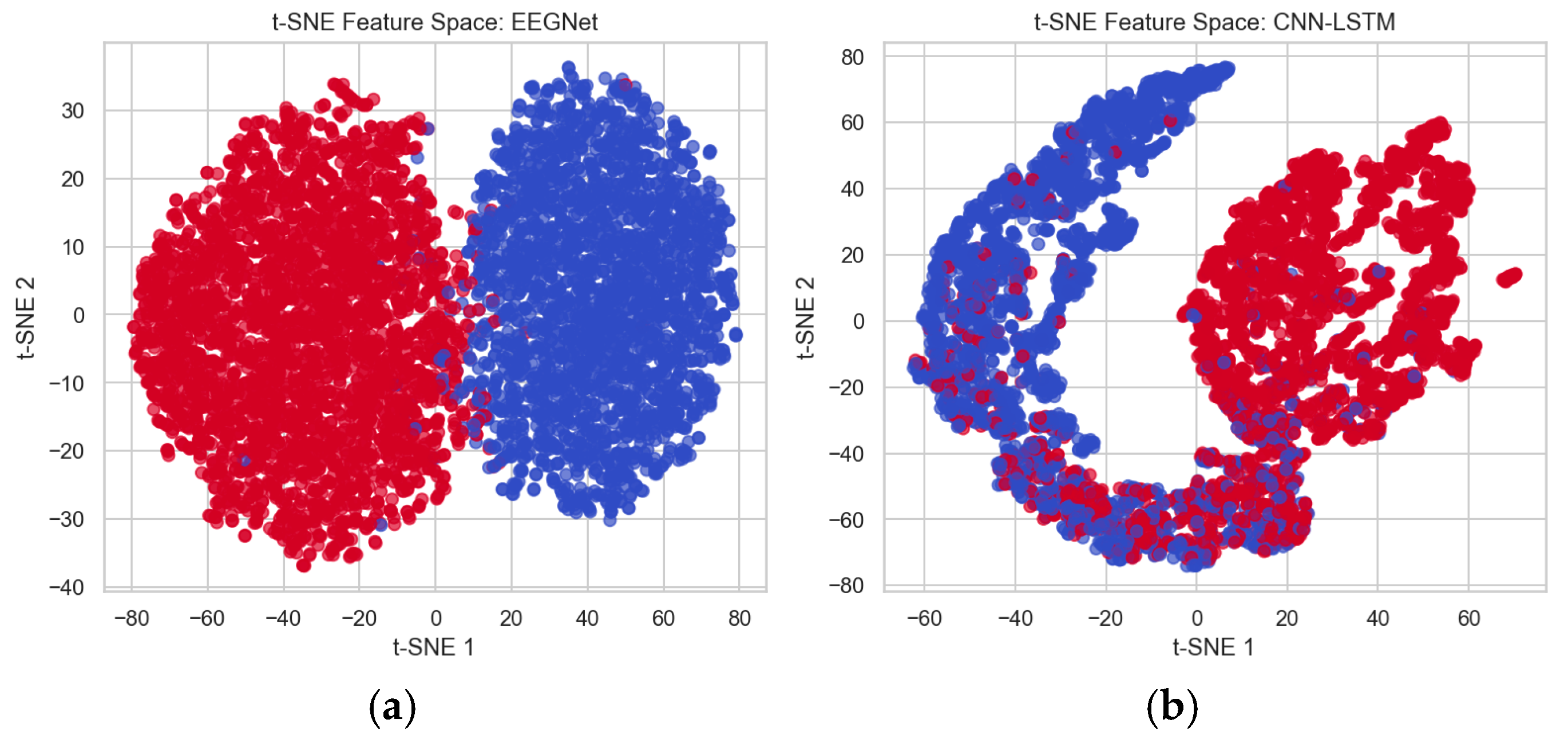
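The 80/20 partition of the 5629 samples can be sketched as follows. This is a minimal illustration that assumes a stratified, sample-level split; the source does not state whether the split was stratified or whether it was made by sample or by subject, so `stratified_split` and its parameters are hypothetical.

```python
import random


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split sample indices into train/test while preserving class proportions."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


# Class sizes as reported in the text: 2540 healthy, 3089 schizophrenia samples.
labels = [0] * 2540 + [1] * 3089
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Stratifying keeps the ratio of healthy to schizophrenia samples the same in both partitions, which matters here because the two classes are not equally sized.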

To compare the existing CNN-LSTM method with the proposed EEGNet method, the features extracted by each architecture are analyzed using t-distributed Stochastic Neighbor Embedding (t-SNE), a technique for visualizing high-dimensional data. Well-separated clusters with little overlap in this view indicate features that are easier to classify. The EEGNet feature space shows clear separation of data points with minimal overlap, so the healthy and affected classes are easily distinguished. The CNN-LSTM feature space, in contrast, shows substantial overlap between healthy and affected data points, which makes the two classes harder to distinguish.

Figure 19a shows the feature space mapping between healthy and affected data points using EEGNet, and (b) shows the feature space mapping between healthy and schizophrenia patients’ data points using CNN-LSTM, where blue represents healthy controls and red represents affected patients.

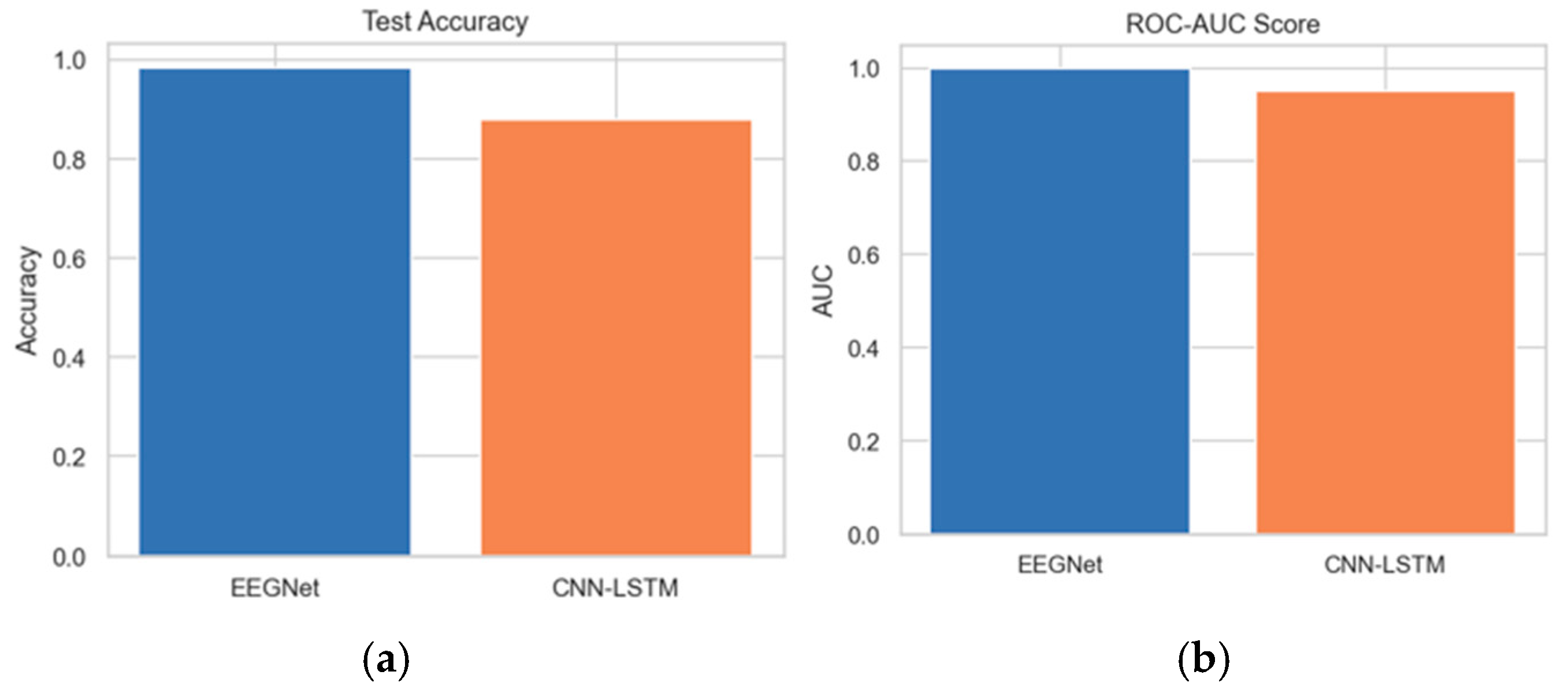

The test accuracy comparison between EEGNet and CNN-LSTM reveals that EEGNet achieves an accuracy of 0.99. Furthermore, the ROC-AUC score analysis between the proposed and existing architectures indicates that the AUC of the proposed EEGNet reaches 1.0.

Figure 20a,b depict the test accuracy and ROC-AUC score between the EEGNet and CNN-LSTM architectures.

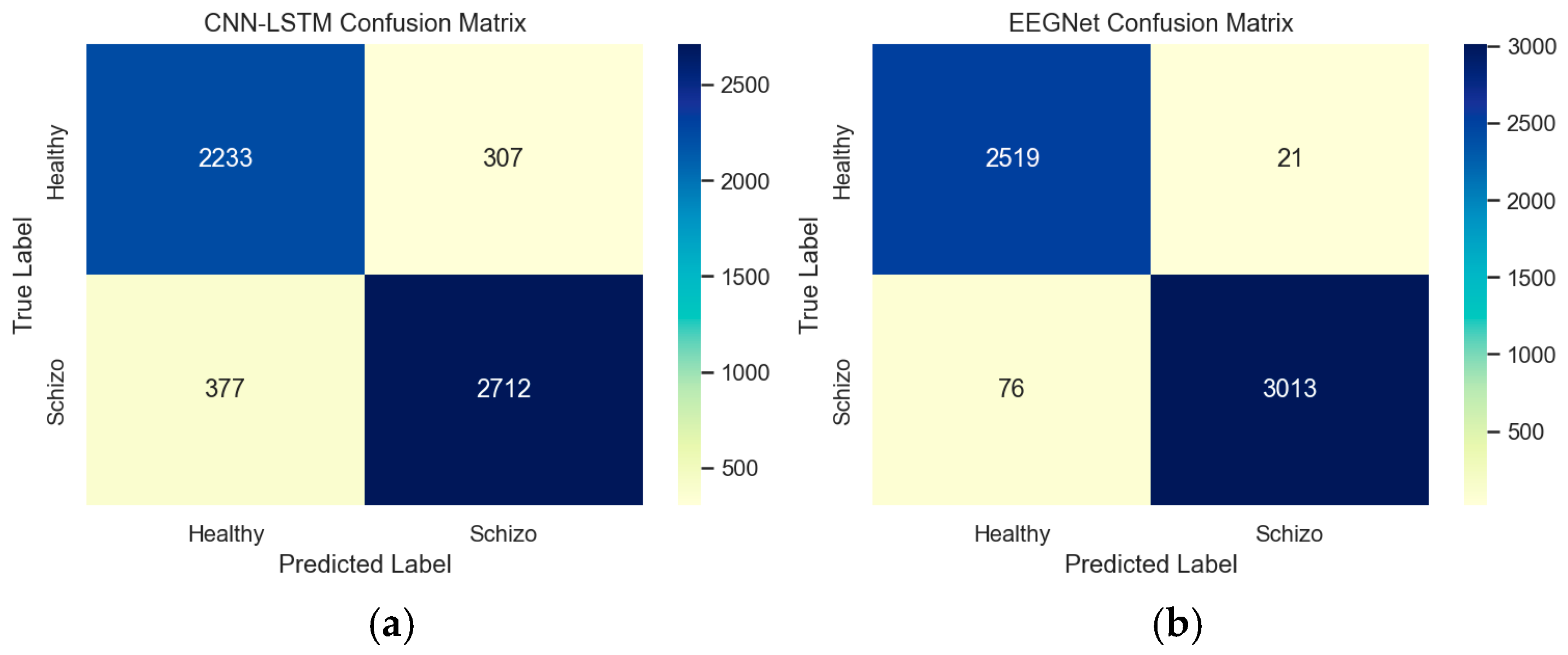
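The ROC-AUC score reported above can be read as the probability that a randomly chosen patient sample receives a higher model score than a randomly chosen control sample. The sketch below computes it in that pairwise form; it is an illustration with hypothetical labels and scores, not the evaluation code of this study.

```python
def roc_auc(labels, scores):
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = patient) and model scores (NOT study data).
example_labels = [0, 0, 1, 1]
example_scores = [0.10, 0.40, 0.35, 0.80]
auc = roc_auc(example_labels, example_scores)
print(auc)
```

An AUC of 1.0 means every patient sample is scored above every control sample; the pairwise loop is quadratic in the number of samples and is intended only to show the definition.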

The confusion matrices provide insight into the performance and error patterns of the EEGNet and CNN-LSTM architectures. Each matrix compares the true labels with the predicted labels for healthy controls and schizophrenia patients. From a total of 5629 samples in the data, 307 samples of healthy controls were miscategorized as schizophrenia patients by the CNN-LSTM architecture, while 21 samples of healthy controls were miscategorized as schizophrenia patients by the EEGNet architecture.

Figure 21a,b depict the confusion matrices of the EEGNet and CNN-LSTM architectures.
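The misclassification counts above translate directly into specificity, that is, the fraction of healthy-control samples that are correctly recognized as healthy. The sketch below assumes that both confusion matrices cover all 2540 healthy-control samples reported for class 0; if the matrices were computed on the test partition only, the denominators would differ.

```python
def specificity(true_negatives, false_positives):
    """Fraction of actual negatives (healthy controls) predicted as negative."""
    return true_negatives / (true_negatives + false_positives)


# Assumption: the confusion matrices cover all healthy samples (class 0).
HEALTHY_SAMPLES = 2540

# Healthy controls miscategorized as schizophrenia patients, as reported.
false_positives = {"EEGNet": 21, "CNN-LSTM": 307}

spec = {
    name: specificity(HEALTHY_SAMPLES - fp, fp)
    for name, fp in false_positives.items()
}
for name, value in spec.items():
    print(f"{name}: specificity = {value:.3f}")
```

Under this assumption, the specificity is roughly 0.992 for EEGNet and 0.879 for CNN-LSTM.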

Strengths and Weaknesses

The main advantage of this system is that integrating EEG data with other modalities such as text, audio, and video is feasible with 14-channel EEG acquisition, which captures temporal, spatial, and task-evoked potentials. Its weakness is that the misclassification rates indicate a need for better specificity, and the small sample size constrains the generalizability of the results. The method is more effective at identifying schizophrenia patients than at recognizing healthy controls. This result, together with the existing literature, suggests that EEG data can readily identify schizophrenia patients, whereas the features of healthy controls may overlap with those of patients, leading to false positives. Multiple performance metrics are required to confirm the results.