1. Introduction

Computational pathology (CP) has emerged over the past decade as a promising approach in digital pathology, leveraging advanced algorithms and machine learning techniques to automate and enhance the analysis of histopathological data [1,2,3,4,5]. CP helps improve the diagnostic accuracy, speed, and efficiency of pathological assessment, bridging the gap between traditional microscopy and modern digital technologies [6]. Given its impact on diagnostic precision and efficiency, the application of CP extends beyond traditional pathology to a broader spectrum of histology applications [3,7]. Among these, the classification of ovarian cancer subtypes is a particularly promising avenue [8,9,10,11,12].

Recent advances in artificial intelligence (AI) have demonstrated significant potential across gynecological oncology, from diagnostic pathology to surgical interventions [13]. Integrating AI into clinical workflows requires robust validation and standardized protocols, as evidenced by successful deployments in gynecological surgery [14]. However, bridging the gap between computational innovation and clinical adoption requires careful evaluation of human–AI collaboration dynamics [14,15].

Ovarian cancer, which is characterized by its heterogeneity and by multiple subtypes, each with distinct histological features, prognoses, and treatment responses, is one of the most lethal gynecological malignancies [16,17,18,19,20,21]. Histology image analysis of Whole-Slide Images (WSIs) plays a crucial role in diagnosing and understanding cancerous tissues, providing valuable insights for treatment decisions and prognostic assessments [22,23]. WSIs have recently revolutionized this domain by offering a digital framework for analyzing tissue samples [12,24,25,26,27]. WSIs capture tissue sections at microscopic resolution, producing images that can span billions of pixels [28] and that contain rich diagnostic information crucial for identifying cancer subtypes and predicting patient outcomes [29,30].

In recent years, advances in machine learning and computer vision have revolutionized histology image analysis, offering automated and scalable solutions for cancer diagnosis and classification [24,31,32,33,34]. However, the computational demands of processing high-resolution histology images pose significant challenges, particularly for large-scale datasets and for medical imaging applications such as diagnosing and categorizing malignant tissues. In addition, automated analysis frequently depends on abundant labeled data from the same domain, which limits the ability of these methods to transfer to new datasets or imaging settings. This challenge is especially prominent in histology image analysis, since differences in staining processes, tissue preparation techniques, and imaging modalities can cause domain shifts that degrade model performance. Addressing these challenges requires innovative approaches that enhance computational efficiency without compromising classification accuracy.

While multiple instance learning (MIL) provides a systematic and effective strategy for leveraging weak supervision [5,35,36], managing ambiguity and variability [37], and scaling to extensive datasets [38,39], processing high-resolution images still imposes substantial computational burdens [32,40]. First, to mitigate this burden, WSI-P2P uses downscaled patch sampling to reduce both training cost and inference time, making it robust and computationally efficient. Furthermore, by combining K-TOP, a MIL aggregator, with attention scores, it reduces resource consumption and selects the most informative patches for inference. Second, there is a need for models that generalize effectively across diverse domains while using only bag-level labels. WSI-P2P addresses this challenge by ensembling knowledge transferred from pretrained models. The proposed framework is evaluated on the UBC-OCEAN dataset [41], obtained from Kaggle (https://www.kaggle.com/competitions/UBC-OCEAN/overview, accessed on 13 June 2025). Comparative experiments with state-of-the-art (SOTA) work and Kaggle submission scores suggest the potential for real-time deployment.

The main contributions of this work are as follows:

- WSI-P2P framework, a novel integration of downscaling and MIL:
  - To the best of our knowledge, WSI-P2P is the first framework to combine diagnosis-aware downscaling with a K-TOP MIL aggregator.
  - The framework is designed to process WSIs efficiently for the domain-generalized classification of ovarian cancer histology images, addressing both computational efficiency and performance.
  - We further optimize attention-based MIL aggregation by integrating K-TOP instance selection, which processes only the K most informative instances and reduces computational cost compared with traditional WSI processing methods.
- Multi-task analysis: This work primarily focuses on the classification of ovarian cancer histology images, comparing accuracy and AUC, and also validates the subtype classification task, where WSI-P2P with our K-TOP MIL aggregator shows stable balanced accuracy compared with the latest works.
- Robust domain generalization: Unlike traditional approaches, we leverage transfer learning within the MIL framework, and WSI-P2P demonstrates robust generalization across domains.
- Competitive classification performance: Our experimental results demonstrate WSI-P2P’s superior performance in ovarian cancer intra-domain generalization and subtype classification, highlighting its potential for real-time clinical deployment.

The proposed framework may be considered when developing real-time applications that address several areas recommended by the World Health Organization (WHO) and the European Society of Gynecological Oncology (ESGO), including histotype differentiation, tumor grading, biomarker identification, prognostic assessment, treatment response prediction, and quality control diagnostics [42]. Accordingly, this study aims to minimize resource consumption while achieving robust performance in ovarian cancer classification.

The rest of the study is organized as follows: Section 3 presents the dataset preparation steps; Section 4 details the methodology of the proposed work; Section 5 discusses system implementation details; and Section 6 presents the experimental evaluations. Study limitations and future work, along with the conclusions, are discussed in Sections 7 and 8, respectively.

3. Data Collection and Preprocessing

This study introduces a diagnosis-aware downscaling protocol for Whole-Slide Image (WSI) processing, using the UBC-Ovarian Cancer Challenge dataset [41]. The approach addresses two critical challenges in computational pathology: (i) preserving histomorphological features at reduced resolution and (ii) enabling efficient large-scale analysis without compromising diagnostic validity.

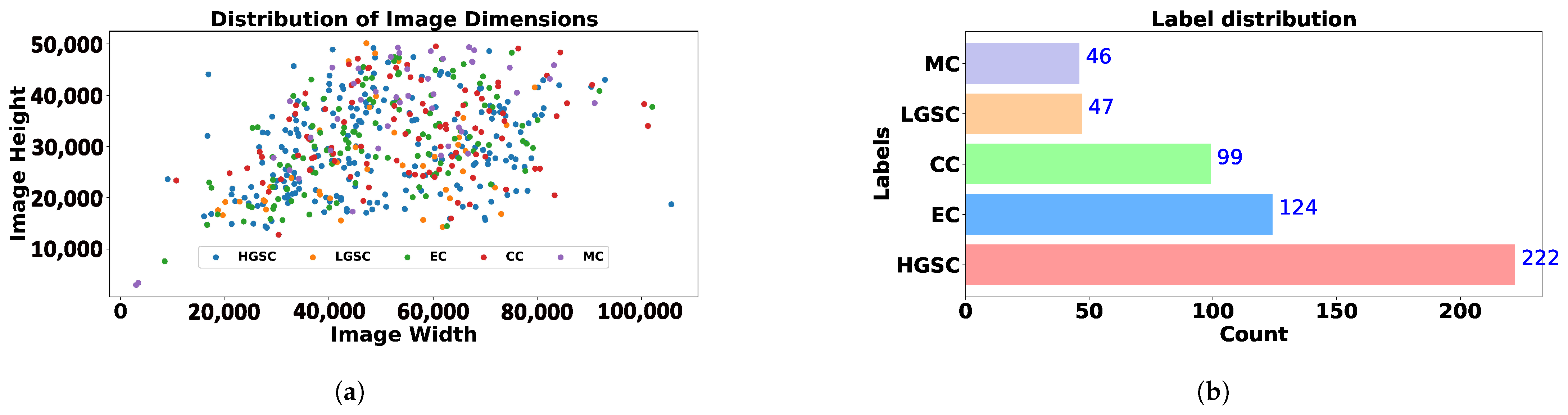

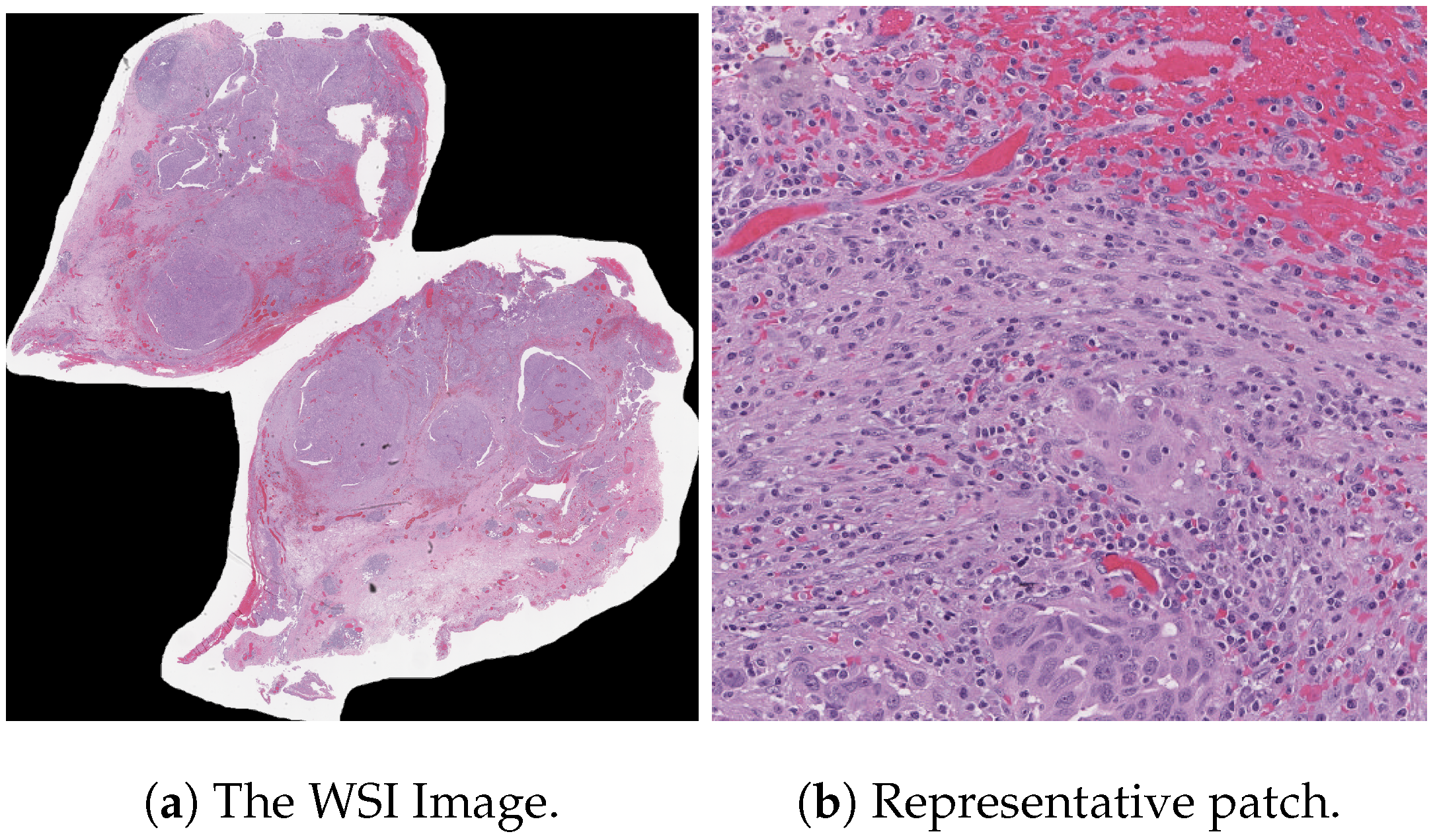

The original dataset consists of WSIs from diverse medical centers, which introduces variability due to differences in staining protocols, scanner systems, and tissue preparation, making it a valuable resource for evaluating generalization in computational pathology. The data are categorized into five histological subtypes: Clear Cell Carcinoma (CC), Mucinous Carcinoma (MC), Low-Grade Serous Carcinoma (LGSC), High-Grade Serous Carcinoma (HGSC), and Endometrioid Carcinoma (EC). Representative samples of each subtype are shown in Figure 1.

Data preprocessing: WSIs occupy a vast, high-dimensional data space because of their large size and high resolution. To reduce computational complexity, the study adopted downscaled patch sampling. For each WSI, N random tiles were selected, with N = 50 in this study. Tile selection was restricted to tissue regions within each WSI, so that subsequent analysis focuses on areas of diagnostic significance and discards irrelevant background. Each tile is stored at a fixed size. The pipeline was designed to handle the large file sizes and complex data structures of WSIs. For WSI cropping, several techniques were used, including but not limited to (i) signal smoothing, (ii) peak detection, (iii) image cropping, and (iv) signal scaling.
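The random tile sampling described above can be sketched as follows. This is a minimal illustration, assuming tissue-tile coordinates have already been located; the helper name `sample_tiles`, the per-slide seed, and the with-replacement fallback for slides with fewer than N tissue tiles are our assumptions rather than reported details.

```python
import random
from typing import List, Sequence, Tuple

Coord = Tuple[int, int]  # (x, y) top-left corner of a tissue tile, in pixels


def sample_tiles(candidates: Sequence[Coord], n: int = 50, seed: int = 0) -> List[Coord]:
    """Randomly pick n tissue-tile coordinates from one WSI (hypothetical helper).

    Assumption: if a slide has fewer than n tissue tiles, we sample with
    replacement so that every bag still contains exactly n instances.
    """
    if not candidates:
        raise ValueError("no tissue tiles found for this slide")
    rng = random.Random(seed)  # a per-slide seed keeps the sample reproducible
    if len(candidates) >= n:
        return rng.sample(list(candidates), n)  # n distinct tiles
    return [rng.choice(candidates) for _ in range(n)]  # fallback: with replacement
```

Passing the same seed for a slide reproduces the same bag, which makes runs with different aggregators directly comparable.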

Furthermore, we employed efficient preprocessing techniques to enhance data quality while minimizing resource consumption. These include noise reduction, data normalization, and feature extraction, which are computationally lightweight yet effective at improving model accuracy. During WSI processing, we encountered challenges including a decompression bomb error (raised, for example, by Pillow when an image’s pixel count exceeds its safety limit). Several mitigations were considered: decreasing the image resolution, implementing lazy loading, and resizing images. We also filter and validate image sizes and use external libraries with the maximum pixel limit set to none.
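One way to realize the lazy loading and pixel-limit handling mentioned above is with Pillow, whose `Image.open` reads only the file header until pixel data are needed and whose `Image.MAX_IMAGE_PIXELS` setting controls the decompression-bomb check. The sketch below is hypothetical (the helper names and the reduction factor of 4 are ours); Pillow is imported inside the function so the size check can be used on its own.

```python
def exceeds_pixel_budget(width: int, height: int, max_pixels: int = 89_478_485) -> bool:
    """True if width*height exceeds Pillow's default MAX_IMAGE_PIXELS.

    Pillow warns above this value and raises DecompressionBombError above twice it.
    """
    return width * height > max_pixels


def open_downscaled(path: str, factor: int = 4):
    """Open a very large image lazily and return a reduced copy (hypothetical helper)."""
    from PIL import Image  # optional dependency, imported only when needed

    Image.MAX_IMAGE_PIXELS = None  # disables the bomb check globally: trusted data only
    with Image.open(path) as img:  # lazy: only the header has been read here
        width, height = img.size
        if exceeds_pixel_budget(width, height):
            return img.reduce(factor)  # decodes, then shrinks by an integer factor
        return img.copy()
```

Note that lazy opening only defers decoding; for formats without tiled access the full image is still decoded once before it is reduced.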

Finally, after preprocessing the WSIs into small patches, we define the data structure as one-vs.-50. Initially, the data were one-vs.-1 (each whole WSI treated as a single image); each WSI was then divided into 50 small patches (tiles), as illustrated in Table 1. The data are then ready for training, validation, and further investigation within our methodology. Further details of downscaling are discussed in Section 4.

Dataset Statistics

It is essential to provide a brief overview of the statistics of the original dataset following its official release.

First, Figure 1 emphasizes the need for preprocessing, as a significant portion of the WSI histology images contain multiple traces of the same slice. Then, Table 2 summarizes the dataset statistics, and Figure 2a highlights the WSI sizes, which reach billions of pixels. Finally, Figure 2b illustrates the dataset’s inherent class imbalance. Although this study presents only the methodology for efficient preprocessing, these statistics may help future researchers planning to use this dataset: Table 2 is relevant to out-of-distribution (OOD) tasks, and Figure 2b to subtype imbalance classification problems.

4. Methodology

The study is inspired by several winning solutions to the ovarian cancer challenge, which demonstrated the effectiveness of preprocessing techniques as well as the significant computational resources they require. We therefore address the challenge of reducing resource consumption while maintaining competitive performance. Further, to our knowledge, this study is the first to conduct such a detailed investigation and to propose a simple solution, WSI-P2P, that could be employed in healthcare industrial applications where billions of WSI pixels must be processed efficiently. The image sizes are shown in the scatter plot in Figure 2a, where both axes are in pixels.

The approach consumes four times fewer resources than the first-place winning solution, which used 200 random tiles (versus 50 here) for feature extraction and classification. This selection creates a set of N-instance MIL bags, each containing a subset of tiles representing different regions of the WSI. Each bag contains a fixed set of N images from a slide of a specific tissue type, so the bag is the fundamental unit for learning and prediction in the MIL framework. We further define a custom dataset class to handle the loading and transformation of the images. This class inherits from PyTorch’s Dataset class, leveraging its built-in functionality while customizing the item-retrieval method to return a stack of transformed images (a bag) and a single label for that bag.
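The bag-level dataset described above can be sketched as below. To keep the example runnable without PyTorch, `BagDataset` (a hypothetical name, since the original class name is not given) implements the same `__len__`/`__getitem__` protocol as `torch.utils.data.Dataset` in plain Python; the alphabetical class ordering in `SUBTYPES` is also an assumption.

```python
from typing import Callable, List, Sequence, Tuple

SUBTYPES = ["CC", "EC", "HGSC", "LGSC", "MC"]  # the five classes listed in Section 3


class BagDataset:
    """One item = (N transformed tiles, one slide-level label index)."""

    def __init__(self, bags: Sequence[Sequence[str]], labels: Sequence[str],
                 load: Callable[[str], object],
                 transform: Callable[[object], object] = lambda x: x):
        if len(bags) != len(labels):
            raise ValueError("every bag needs exactly one label")
        self.bags, self.labels = bags, labels
        self.load, self.transform = load, transform

    def __len__(self) -> int:
        return len(self.bags)

    def __getitem__(self, idx: int) -> Tuple[List[object], int]:
        tiles = [self.transform(self.load(p)) for p in self.bags[idx]]  # the bag
        label = SUBTYPES.index(self.labels[idx])  # a single bag-level label
        return tiles, label
```

In a PyTorch version, `__getitem__` would typically return `torch.stack(tiles)` so that a batch of bags has shape (B, N, C, H, W).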

After data collection, several preprocessing and data augmentation steps were performed. Each image tile was resized to a uniform dimension to ensure consistency. The preprocessing pipeline included several augmentation techniques to enhance the dataset’s diversity and robustness, such as random rotations, flips, color jittering, and Gaussian blurring. Depending on the requirements, the images could be processed at other magnification levels to capture details relevant to the specific diagnostic task. Several pre-trained neural networks served as feature extractors, transforming the raw image data into a high-dimensional feature space. The features extracted from each tile within a bag were then processed using an MIL approach, in which each bag is associated with a label that applies to the collective set of instances (tiles) it contains rather than to individual instances. Instances within a bag were considered for their potential to be positive (indicative of the label) or negative, and the MIL approach used the correlation between instances within a bag to infer the bag-level label. This is necessary because, given tissue heterogeneity and the diverse morphological features within a single slide, not all patches (tiles) accurately inherit the WSI-level annotation.
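The augmentation list above could be expressed, for example, as a torchvision pipeline. This is a sketch under stated assumptions: the study does not report parameter values, so every number in the hypothetical `AUGMENT_CONFIG` (including the 224-pixel tile side) is illustrative, and torchvision is imported inside the function so the configuration can be inspected without it.

```python
# Hypothetical augmentation settings; none of these values come from the study.
AUGMENT_CONFIG = {
    "resize": 224,                     # assumed uniform tile side, in pixels
    "rotation_degrees": 90,            # random rotation range (+/- degrees)
    "flip_p": 0.5,                     # probability of each flip
    "jitter": (0.2, 0.2, 0.2, 0.05),   # brightness, contrast, saturation, hue
    "blur_kernel": 3,                  # Gaussian blur kernel size (must be odd)
}


def build_train_transforms(cfg=AUGMENT_CONFIG):
    """Compose resizing and the augmentations named in the text."""
    from torchvision import transforms as T  # optional dependency

    return T.Compose([
        T.Resize((cfg["resize"], cfg["resize"])),
        T.RandomRotation(cfg["rotation_degrees"]),
        T.RandomHorizontalFlip(cfg["flip_p"]),
        T.RandomVerticalFlip(cfg["flip_p"]),
        T.ColorJitter(*cfg["jitter"]),
        T.GaussianBlur(cfg["blur_kernel"]),
        T.ToTensor(),
    ])
```

Keeping the values in one dictionary makes it easy to log the exact augmentation settings alongside each experiment.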

The following equations provide an overview of the methodological steps involved in preparing the WSI-P2P framework.

Downscaled patch sampling: Let $B_i = \{x'_{i1}, x'_{i2}, \ldots, x'_{iN}\}$ denote the set of instances (tiles) in the $i$th bag, where each instance is a downscaled patch from a WSI. Our downscaling function is defined as follows:

$$x'_{ij} = D(x_{ij}),$$

where $x_{ij}$ is the $j$th patch in the $i$th bag before downscaling, $D$ is the downscaling operation, and $x'_{ij}$ is the downscaled patch, as shown in the diagram’s preprocessing box.

Example calculation: Let $n_x$ be the number of tiles along the horizontal axis and $n_y$ the number of tiles along the vertical axis. The image dimensions are $W \times H$, and each tile measures $w \times h$, so

$$n_x = \left\lfloor \frac{W}{w} \right\rfloor, \qquad n_y = \left\lfloor \frac{H}{h} \right\rfloor.$$

The total number of non-overlapping tiles is the product of the counts along each axis:

$$n_{\text{tiles}} = n_x \cdot n_y.$$

For example, a WSI of 16,000 × 16,000 pixels yields $n_x = n_y = 31$, so $n_{\text{tiles}} = 31 \times 31 = 961$. In conclusion, a maximum of 961 non-overlapping tiles can be generated.
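The tile-count arithmetic can be checked with a short function. The 512-pixel tile side used in the example call is an assumption, since the tile size is not stated; any side from 501 to 516 pixels gives ⌊16,000/w⌋ = 31 tiles per axis and hence 961 tiles.

```python
def tile_grid(width: int, height: int, tile_w: int, tile_h: int):
    """Non-overlapping tile counts along each axis and in total."""
    n_x = width // tile_w   # floor(W / w): partial tiles at the right border are dropped
    n_y = height // tile_h  # floor(H / h): partial tiles at the bottom border are dropped
    return n_x, n_y, n_x * n_y


# Assumed 512 x 512 tiles on a 16,000 x 16,000 slide.
print(tile_grid(16_000, 16_000, 512, 512))  # (31, 31, 961)
```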

Adaptive patch extraction: WSIs were partitioned into overlapping tiles at 20× magnification with a 50-pixel stride to ensure tissue continuity. Black or empty tiles were filtered out by brightness thresholding (mean intensity < 25/255).
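A minimal sketch of sliding-window extraction with the brightness filter follows. It operates on a grayscale image stored as nested lists purely for illustration (an array library would be used in practice); the function names are hypothetical, and the tile size is left as a parameter because it is not given.

```python
from typing import Iterator, List, Tuple


def tile_origins(size: int, tile: int, stride: int) -> List[int]:
    """Top-left offsets of sliding-window tiles along one axis."""
    if size < tile:
        return []
    return list(range(0, size - tile + 1, stride))


def is_background(gray_tile: List[List[int]], threshold: float = 25.0) -> bool:
    """Flag black/empty tiles whose mean intensity (0-255 scale) is below the threshold."""
    pixels = [p for row in gray_tile for p in row]
    return sum(pixels) / len(pixels) < threshold


def extract_tiles(gray: List[List[int]], tile: int, stride: int) -> Iterator[Tuple[int, int]]:
    """Yield (x, y) origins of tiles that pass the brightness filter."""
    height, width = len(gray), len(gray[0])
    for y in tile_origins(height, tile, stride):
        for x in tile_origins(width, tile, stride):
            patch = [row[x:x + tile] for row in gray[y:y + tile]]
            if not is_background(patch):
                yield x, y
```

Tiles overlap whenever the stride (50 pixels in the text) is smaller than the tile side.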

Controlled resolution reduction: Retained tiles were downscaled using Lanczos resampling, achieving roughly a fourfold memory reduction (e.g., from 2.25 MB to 0.56 MB per tile). A comparative analysis with other recent works on the same UBC-OCEAN dataset is summarized in tabular form in Section 6.5, where we demonstrate WSI-P2P’s applicability.
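Because uncompressed memory grows with the square of the tile side, halving each side cuts it by a factor of four, which is consistent with the reported 2.25 MB to 0.56 MB example. The sketch below shows this arithmetic and, as an assumption about tooling, a Lanczos downscale with Pillow (`Image.Resampling.LANCZOS`, Pillow 9.1 or later); the factor of 2 is our illustrative choice.

```python
def tile_bytes(side: int, channels: int = 3, bytes_per_value: int = 1) -> int:
    """Uncompressed size of a square tile (8-bit RGB by default)."""
    return side * side * channels * bytes_per_value


def reduction_factor(side_before: int, side_after: int) -> float:
    """Memory scales with the square of the side length."""
    return tile_bytes(side_before) / tile_bytes(side_after)


def downscale_lanczos(img, factor: int = 2):
    """Downscale a PIL image by an integer factor using Lanczos resampling."""
    from PIL import Image  # optional dependency; Image.Resampling needs Pillow >= 9.1

    new_size = (img.width // factor, img.height // factor)
    return img.resize(new_size, Image.Resampling.LANCZOS)
```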

Feature extraction via transfer learning: Below, we mathematically represent feature extraction with the pre-trained model:

$$h_{ij} = M(x'_{ij}; \theta_M),$$

where $h_{ij}$ denotes the feature vector extracted from the $j$th instance in the $i$th bag, $M$ represents the feature extractor model applied to the instance $x'_{ij}$, and $\theta_M$ denotes the parameters of the model.

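MIL aggregation function: Given a bag $B_i$ with instance features $\{h_{i1}, \ldots, h_{iN}\}$, the bag-level representation $z_i$ is computed as follows:

$$z_i = \mathcal{A}\left(\{h_{ij}\}_{j=1}^{N}\right),$$

where $\mathcal{A}$ is an aggregation operator.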

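We evaluate the following aggregators:

- Standard aggregators: mean pooling, $\mathcal{A}_{\text{mean}} = \frac{1}{N}\sum_{j=1}^{N} h_{ij}$, and max pooling, $\mathcal{A}_{\text{max}} = \max_{j} h_{ij}$ (element-wise).
- Attention pooling ($\mathcal{A}_{\text{att}}$): learns instance weights via a neural network.
- K-TOP score pooling ($\mathcal{A}_{K\text{-TOP}}$): averages the features of the top-$K$ instances ranked by a learned scorer $s(\cdot)$, i.e., $\mathcal{A}_{K\text{-TOP}} = \frac{1}{K}\sum_{j \in \mathcal{T}_K(i)} h_{ij}$, where $\mathcal{T}_K(i)$ is the set of indices of the $K$ highest-scoring instances in bag $i$.

Our proposed K-TOP aggregation mitigates noise by focusing on discriminative instances while preserving gradient flow.

We hypothesize that K-TOP works because, in histopathology, only a small number ($K$) of tiles may contain tumor regions, so averaging over all tiles dilutes the signal. Compared with standard max and mean pooling, K-TOP can be viewed as a smoother intermediate: $K = N$ recovers mean pooling, while $K = 1$ keeps only the single highest-scoring instance.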
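The mean, max, and K-TOP operators can be written in a few lines of plain Python. This is a sketch, not the authors’ implementation: `score` stands in for the learned scorer and is any callable here, and feature vectors are plain lists.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def mean_pool(h: Sequence[Vector]) -> Vector:
    """Element-wise mean over the instances of a bag."""
    return [sum(col) / len(h) for col in zip(*h)]


def max_pool(h: Sequence[Vector]) -> Vector:
    """Element-wise maximum over the instances of a bag."""
    return [max(col) for col in zip(*h)]


def k_top_pool(h: Sequence[Vector], score: Callable[[Vector], float], k: int) -> Vector:
    """Average the features of the k instances with the highest scores."""
    k = min(k, len(h))
    ranked = sorted(range(len(h)), key=lambda j: score(h[j]), reverse=True)
    return mean_pool([h[j] for j in ranked[:k]])
```

Setting `k = len(h)` reproduces mean pooling, while `k = 1` returns the features of the single highest-scoring instance, which is the sense in which K trades off between the two standard pooling regimes.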

Classification: The final classification decision for a bag is modeled as follows:

$$\hat{y}_i = C(z_i; \theta_C),$$

where $\hat{y}_i$ is the predicted label for the $i$th bag, $C$ represents the classifier (e.g., a linear layer followed by a softmax operation in the case of multi-class classification), and $\theta_C$ denotes the parameters of the classifier.

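Loss Function: For a dataset of $N_b$ bags (distinct from $N$, the number of tiles per bag), the loss function optimizing the parameters of the classifier is defined as follows:

$$\mathcal{L} = \frac{1}{N_b}\sum_{i=1}^{N_b} l(\hat{y}_i, y_i),$$

where $l$ is a loss function (e.g., cross-entropy) comparing the predicted label $\hat{y}_i$ with the true label $y_i$ of the $i$th bag.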
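The classification and loss equations correspond to a linear layer, a softmax, and cross-entropy averaged over bags. The plain-Python sketch below is illustrative only; the function names are hypothetical, and in practice a deep learning framework would provide these operations.

```python
import math
from typing import List, Sequence


def linear(z: Sequence[float], weight: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """Classifier C: one logit per class, logit_c = w_c . z + b_c."""
    return [sum(w * x for w, x in zip(row, z)) + b for row, b in zip(weight, bias)]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_cross_entropy(bag_logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Average of -log p_i[y_i] over all bags (the number of bags is N_b)."""
    losses = [-math.log(softmax(lg)[y]) for lg, y in zip(bag_logits, labels)]
    return sum(losses) / len(losses)
```

With five classes and all-zero logits, every class receives probability 0.2 and the loss equals ln 5, a useful sanity check at initialization.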

4.1. MIL Aggregators

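Max pooling: tends to overfit to a single dominant tile, ignoring supporting evidence.

Mean pooling: mixes the signal with irrelevant or normal tiles, diluting discriminative features.

Attention-based pooling: learns instance weights via a neural network:

$$a_{ij} = \frac{\exp\left(w^{\top}\tanh(V h_{ij})\right)}{\sum_{k=1}^{N}\exp\left(w^{\top}\tanh(V h_{ik})\right)}, \qquad z_i = \sum_{j=1}^{N} a_{ij}\, h_{ij},$$

where $w$ and $V$ are learnable parameters.

The proposed attention-based K-TOP averages the features of the top-$K$ instances ranked by the learned scorer $s(h_{ij})$.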
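The attention-based pooling above can be sketched as follows, assuming the standard attention-based MIL (ABMIL) form $a_{ij} \propto \exp(w^{\top}\tanh(V h_{ij}))$, normalized over the instances of a bag. The names `V`, `w`, and the helper functions are assumptions; the sketch computes the weights, the weighted bag embedding, and the indices of the top-K instances that an attention-based K-TOP aggregator would keep.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def attention_weights(h: Matrix, V: Matrix, w: Sequence[float]) -> List[float]:
    """a_j = softmax over j of w^T tanh(V h_j)."""
    scores = []
    for h_j in h:
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h_j))) for row in V]  # tanh(V h_j)
        scores.append(sum(wi * u for wi, u in zip(w, hidden)))                # w^T (...)
    m = max(scores)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(h: Matrix, V: Matrix, w: Sequence[float]) -> List[float]:
    """Bag embedding z = sum_j a_j h_j."""
    a = attention_weights(h, V, w)
    return [sum(a_j * h_j[d] for a_j, h_j in zip(a, h)) for d in range(len(h[0]))]


def top_k_by_attention(h: Matrix, V: Matrix, w: Sequence[float], k: int) -> List[int]:
    """Indices of the k instances with the largest attention weights."""
    a = attention_weights(h, V, w)
    return sorted(range(len(h)), key=lambda j: a[j], reverse=True)[:k]
```

With all-zero `V` every instance receives the same weight, so `attention_pool` reduces to mean pooling.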

4.2. MIL Transfer Learning

The MIL architecture uses feature extractors such as ResNet50, ResNet18, and the Vision Transformer (ViT), each with pre-trained weights enabled or disabled. A linear fully connected network (FCN) is employed as the classifier: the final fully connected layer of the feature extractor is replaced with an identity layer so that the extracted features pass directly to a custom classifier, which consists of a linear layer whose output dimension equals the number of classes (here, five). In the MIL context, the forward pass reshapes the input so that individual images are processed by the feature extractor and then aggregates the features within each bag using max pooling, although other aggregation operations may be used. With this operation, WSI-P2P selects the most prominent features across the instances in a bag, assuming that the most relevant features indicate the bag’s label.
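The reshape, extract, and pool steps of this forward pass can be sketched in plain Python, with `extractor` and `classifier` as stand-in callables (hypothetical, not the study’s modules).

```python
from typing import Callable, List, Sequence

Vector = List[float]


def mil_forward(batch: Sequence[Sequence[object]],
                extractor: Callable[[object], Vector],
                classifier: Callable[[Vector], Vector]) -> List[Vector]:
    """Bag-level logits for B bags of N tiles each, using max pooling."""
    n = len(batch[0])
    if any(len(bag) != n for bag in batch):
        raise ValueError("all bags in a batch must hold the same number of tiles")
    flat = [tile for bag in batch for tile in bag]              # (B, N, ...) -> (B*N, ...)
    feats = [extractor(tile) for tile in flat]                  # one feature vector per tile
    bags = [feats[i:i + n] for i in range(0, len(feats), n)]    # (B*N, D) -> (B, N, D)
    pooled = [[max(col) for col in zip(*bag)] for bag in bags]  # max over the N tiles
    return [classifier(z) for z in pooled]
```

In PyTorch, the same steps would typically be `x.view(B * N, C, H, W)`, a torchvision ResNet whose `fc` is replaced by `torch.nn.Identity()`, `.view(B, N, -1)`, and `.max(dim=1).values` before the linear classifier.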

For the classification task, this study compares two MIL approaches, one without and one with attention. In the first, MIL pooling (max) is used without attention: the maximum prediction score among the instances within each bag is selected as the bag-level prediction. This provides a straightforward aggregation strategy that relies on the highest-scoring instance in each bag. In contrast, the second approach incorporates attention through MIL top-K instance selection and pooling. Here, attention identifies and emphasizes the most informative instances within each bag according to their relevance to the classification task, and these top K instances are then pooled together, potentially providing a more nuanced representation of the bag’s content. By integrating attention into the MIL framework, this approach aims to improve classification performance by focusing on the most discriminative instances while effectively aggregating their contributions at the bag level.

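The attention score of the $j$th instance in the $i$th bag is defined as follows:

$$a_{ij} = \frac{\exp\left(w^{\top}\tanh(V h_{ij})\right)}{\sum_{k=1}^{N}\exp\left(w^{\top}\tanh(V h_{ik})\right)},$$

where $w$ and $V$ are learnable parameters; the $K$ instances with the largest scores are retained for pooling.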

In conclusion, after downsizing large histology WSI images into small patches, the dataset was trained on pretrained models with further fine-tuning to achieve transfer learning, and various MIL aggregator comparisons were conducted. The methodology described above is briefly presented in an architectural diagram as depicted in

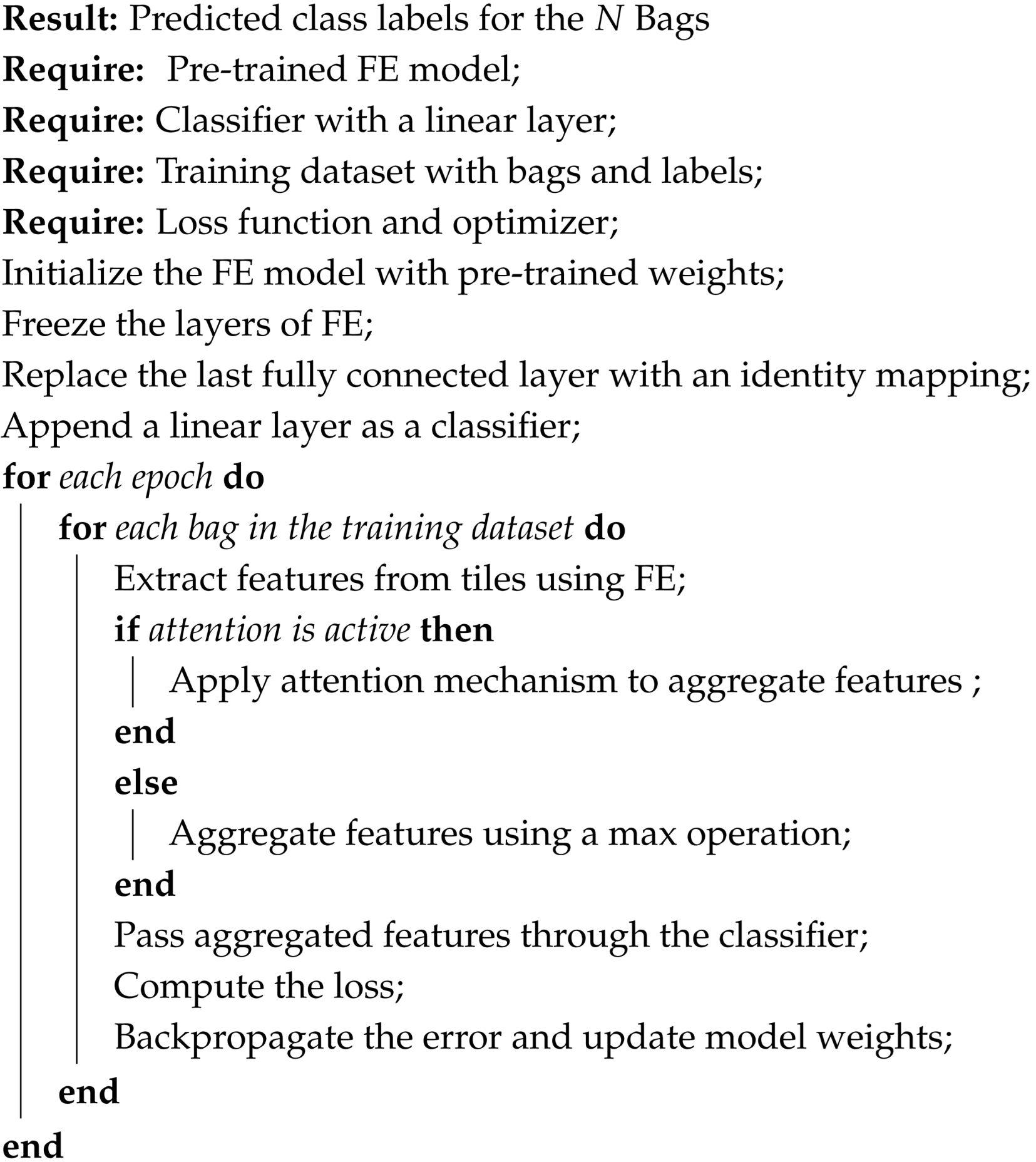

Figure 3, with each step labeled for easy comprehension, and further high-level code steps are presented in Algorithm 1.

Algorithm 1: A high-level transfer learning-based MIL algorithm for WSI-P2P.

6. Experiments and Discussion

This section describes the experimental setting used to evaluate the performance and efficiency of the proposed WSI-P2P architecture. The experiments aim to verify the framework’s ability to classify ovarian cancer histology images in a domain-generalized manner and to evaluate its computational efficiency under various scenarios. Using WSIs from multiple centers, we investigate how varying the MIL bag size affects the model’s learning dynamics and overall accuracy. We also perform model calibration and place particular emphasis on comparing MIL aggregators. The experiments are performed on a curated version of the UBC-OCEAN dataset, as discussed in Section 3. A thorough comparative analysis with state-of-the-art (SOTA) methods highlights the strengths of our approach and potential areas for further improvement. The reported results were evaluated over multiple runs, and statistical significance was established with p-values below 0.001.

6.1. Comparative Analysis

To investigate the behavior of the proposed WSI-P2P framework while optimizing resource use, the number of training epochs was set according to the MIL bag size (MBS): models with an MBS of 10 were trained for 50 epochs, models with an MBS of 25 for 25 epochs, and models with an MBS of 50 for 10 epochs. This allowed us to evaluate the efficacy and flexibility of the framework across bag sizes with minimal computational burden. Through a thorough set of experiments comparing our approach with established benchmarks and baselines, we aim to highlight the proposed method’s benefits in handling the inherent complexities of computational pathology.

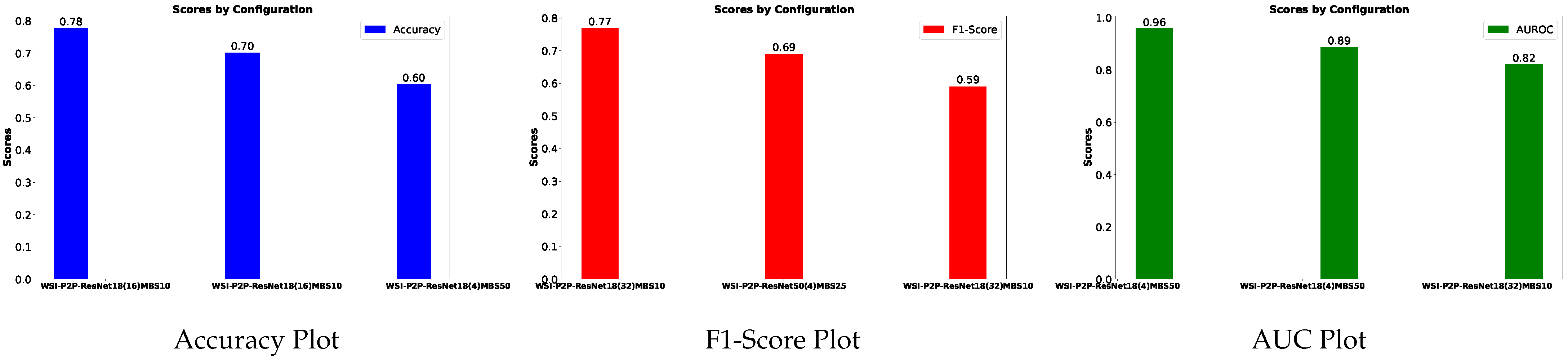

Table 4 illustrates how the number of epochs and the batch size affect WSI-P2P, and how the dataset split influences the model’s learning. We also summarize the top, average, and worst performers for accuracy, F1-score, and area under the curve in a bar chart. The bar chart in Figure 4 compares the WSI-P2P-ResNet18 and WSI-P2P-ResNet50 feature extractor models across batch sizes and data splits, evaluated using accuracy, F1-score, and AUROC. This comparison shows that model selection and configuration are crucial to classification performance.

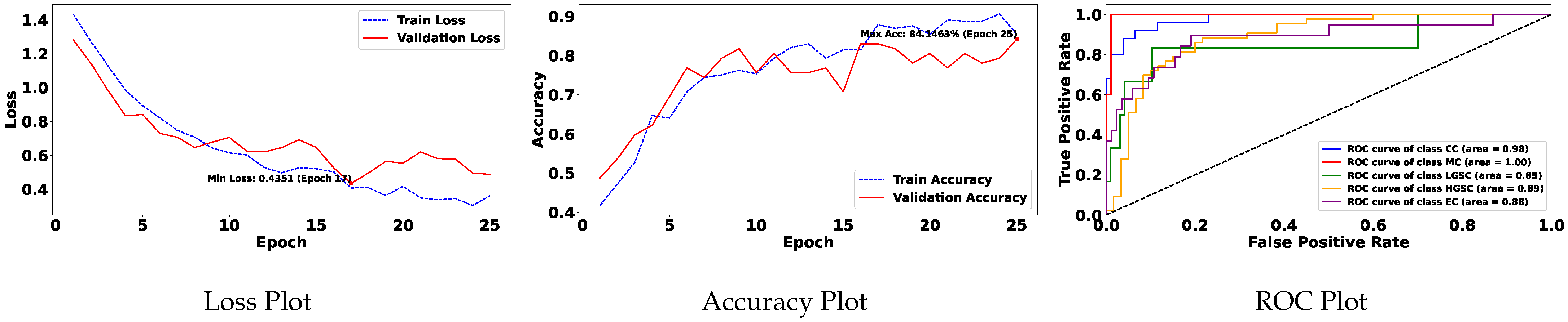

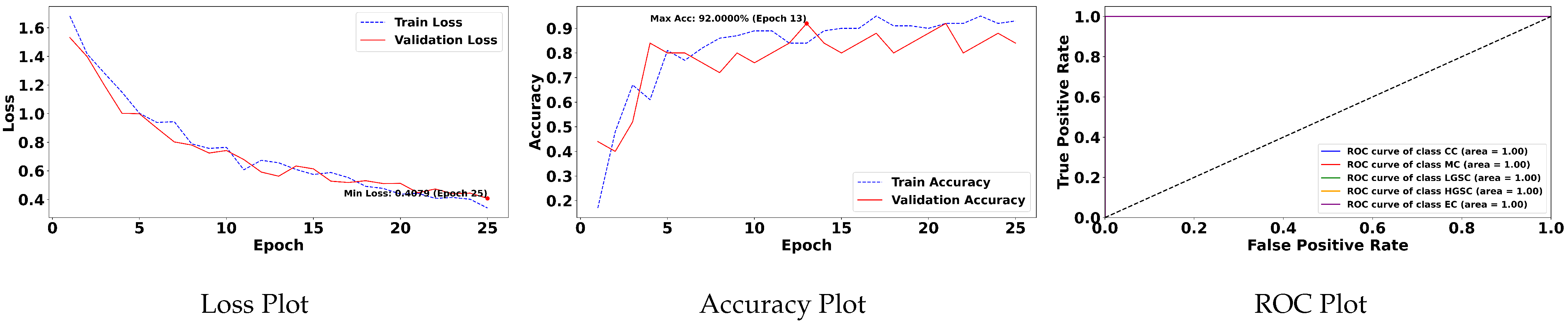

Table 5 lists the top 10 scoring experiments from Table 4, while Figure 5 depicts the loss, accuracy, and ROC curve trends over the epochs for the best-case scenario in Table 5. Table 5 also records the maximum training and validation accuracy and the minimum training and validation loss of WSI-P2P under various network settings. For reference, Figure 6a shows an original WSI, and Figure 6b shows one of its representative patches. Overall, Figures 6 and 7 illustrate the model’s effectiveness and robustness with respect to explainability, which is further discussed in Appendix A.

Impact of Attention Mechanism and Top K Score

This section investigates the impact of the attention mechanism and top-K score on the performance of the proposed model. Without attention, the model attained a maximum AUROC (area under the receiver operating characteristic curve) of 95.89% and a test accuracy of 77.67%. Integrating attention into the architecture improved these results substantially: the model achieved a maximum AUROC of 100% and a test accuracy of 95%. This gain suggests that attention helps capture relevant features and patterns within the data, enabling more accurate classification.

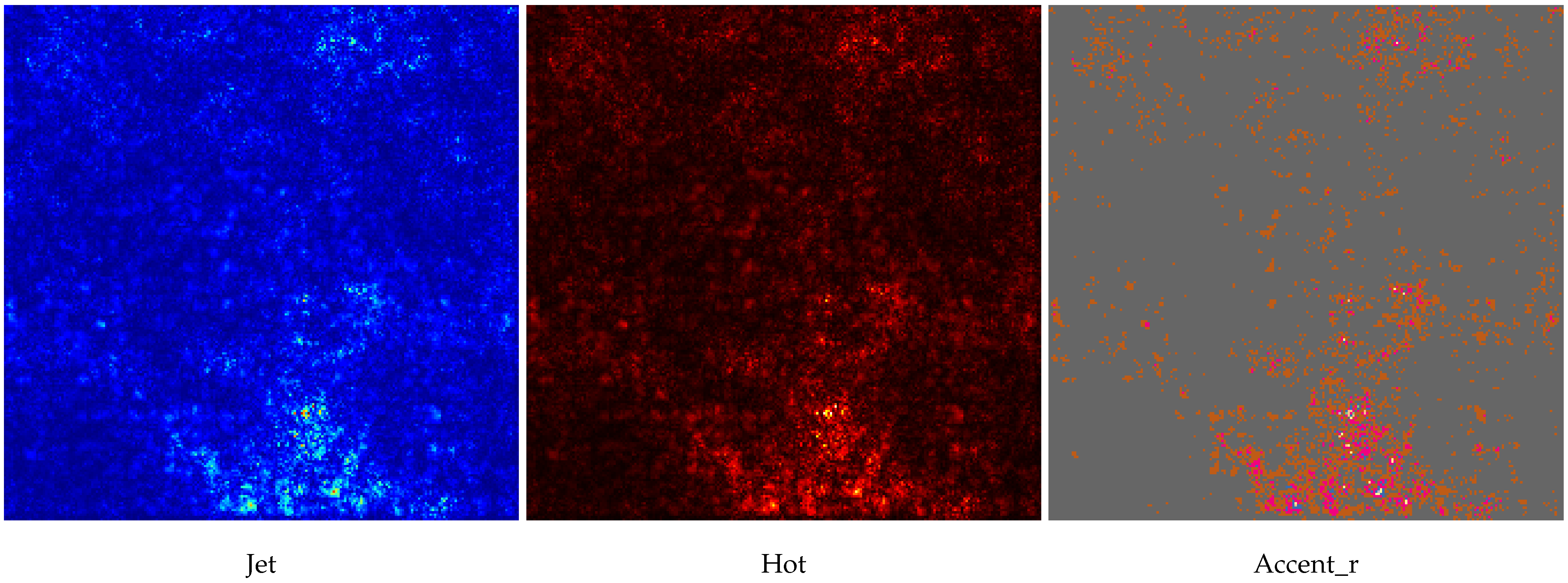

Overall, as shown in Figure 8, these findings underscore the impact of attention on the proposed model’s performance, particularly for subtype classification. The results demonstrate that the model effectively leverages attention to achieve superior performance metrics, improving both classification accuracy and discriminative power.

6.2. Intra-Domain Generalization

The curated version of the original UBC-OCEAN dataset inherently contains domain variations due to its multi-institutional collection protocols, including (but not limited to) diverse staining protocols, scanning systems, and tissue preparation methods. This intrinsic heterogeneity provides a natural testbed for evaluating domain generalization. Table 6 lists the strategically designed data splits, termed intra-domains.

Although Table 5 presents experiments across two different splits, which implicitly supports domain generalization, we evaluated domain generalization explicitly by systematically designing four distinct domain splits using stratified sampling to ensure a balanced class distribution across domains. Each domain was treated as a separate test set, while models were trained on the remaining three domains, simulating real-world scenarios in which models encounter data from previously unseen institutions. Our framework demonstrated robust domain generalization, as evidenced by consistent performance across data splits. As shown in Table 7, the model maintained stable performance, with a maximum domain gap of only 1.3% between the best- and worst-performing domains and an average cross-domain accuracy of 84.7 ± 0.6%. Furthermore, Table 8 reports two quantities: the relative difference between the source-domain and current-domain accuracies, and the difference between the best accuracy reported in this study and the target-domain accuracy.
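The leave-one-domain-out protocol described above can be sketched as follows. Samples are dealt round-robin into four folds within each class, which is one simple form of stratified sampling and an assumption about how the splits were built; each fold in turn then serves as the test domain. The function names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence


def stratified_domains(labels: Sequence[str], n_domains: int = 4, seed: int = 0) -> List[List[int]]:
    """Assign sample indices to n_domains folds with similar class proportions."""
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)
    domains: List[List[int]] = [[] for _ in range(n_domains)]
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            domains[pos % n_domains].append(idx)  # deal each class round-robin
    return domains


def leave_one_domain_out(domains: List[List[int]]):
    """Yield (held-out domain id, train indices, test indices)."""
    for d, test in enumerate(domains):
        train = [i for k, dom in enumerate(domains) if k != d for i in dom]
        yield d, train, test
```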

The intra-domain experiments use consistent settings: a batch size of 16, 25 epochs, a fixed bag size, and ResNet50 as the pre-trained feature extractor. We recommend repeating these intra-domain experiments with other hyperparameter choices to further evaluate robustness.

6.3. Ablation Analysis

This ablation was designed after the comparative experiments described above; it analyzes the impact of different MBS values while keeping the feature extractor, batch size, data split ratio, and number of epochs constant. In addition, a temperature-based ablation assesses whether WSI-P2P is robust to changes in the softmax temperature T.

6.3.1. Bag-Level Ablation

Table 9 documents the bag-level ablation. The experiments show that increasing the bag size (i.e., the number of instances per bag) helps the model learn more diverse representations and generalize better, which can improve performance.

6.3.2. Calibration Ablation

We experiment with different values of the temperature $T$ to assess its impact on the proposed WSI-P2P. The motivation for this calibration experiment is drawn from the foundational work [65], and many other MIL-based works have also performed this ablation to validate the robustness of their frameworks. For this model calibration ablation, we modified the softmax in the MILModel class to include the factor $T$, defined as

$$p_c = \frac{\exp(o_c / T)}{\sum_{k} \exp(o_k / T)},$$

where $o_c$ is the logit for class $c$.

In temperature scaling, the logits (the outputs before softmax) are divided by $T$, and the softmax operation then converts these adjusted logits into probabilities. Adjusting $T$ therefore controls the sharpness or softness of the output probability distribution.
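Temperature scaling can be sketched as a small function. This is a generic illustration with a hypothetical name, not the modified `MILModel` code.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], T: float = 1.0) -> List[float]:
    """p_c = exp(o_c / T) / sum_k exp(o_k / T); T > 1 softens, T < 1 sharpens."""
    if T <= 0:
        raise ValueError("temperature must be positive")
    scaled = [o / T for o in logits]
    m = max(scaled)  # shift for numerical stability; does not change the result
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

Because dividing by a positive T preserves the ordering of the logits, the predicted class is unchanged when T is applied only at inference; T then affects the confidence of the probabilities, and it influences the learned weights when it is applied during training.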

Table 10 presents a calibration experiment with varying values of the temperature $T$. For WSI-P2P-ResNet18, ACC, AUC, and F1-score improve as $T$ rises from 0.1 to 2, and performance decreases slightly above $T = 2$, suggesting that this architecture may benefit from a moderate temperature of around 2. For WSI-P2P-ResNet50, the trend is less consistent: as $T$ increases from 0.1 to 0.5, performance improves significantly, suggesting that raising the temperature is beneficial, but increasing $T$ beyond 0.5 reduces performance. These findings demonstrate the importance of temperature scaling in MIL tasks and show that the ideal temperature depends on the feature extractor: $T = 2$ helps WSI-P2P-ResNet18, whereas $T = 0.5$ helps WSI-P2P-ResNet50. There is thus no universal rule for choosing the temperature, and finding the optimal value for a particular application often requires experimentation.

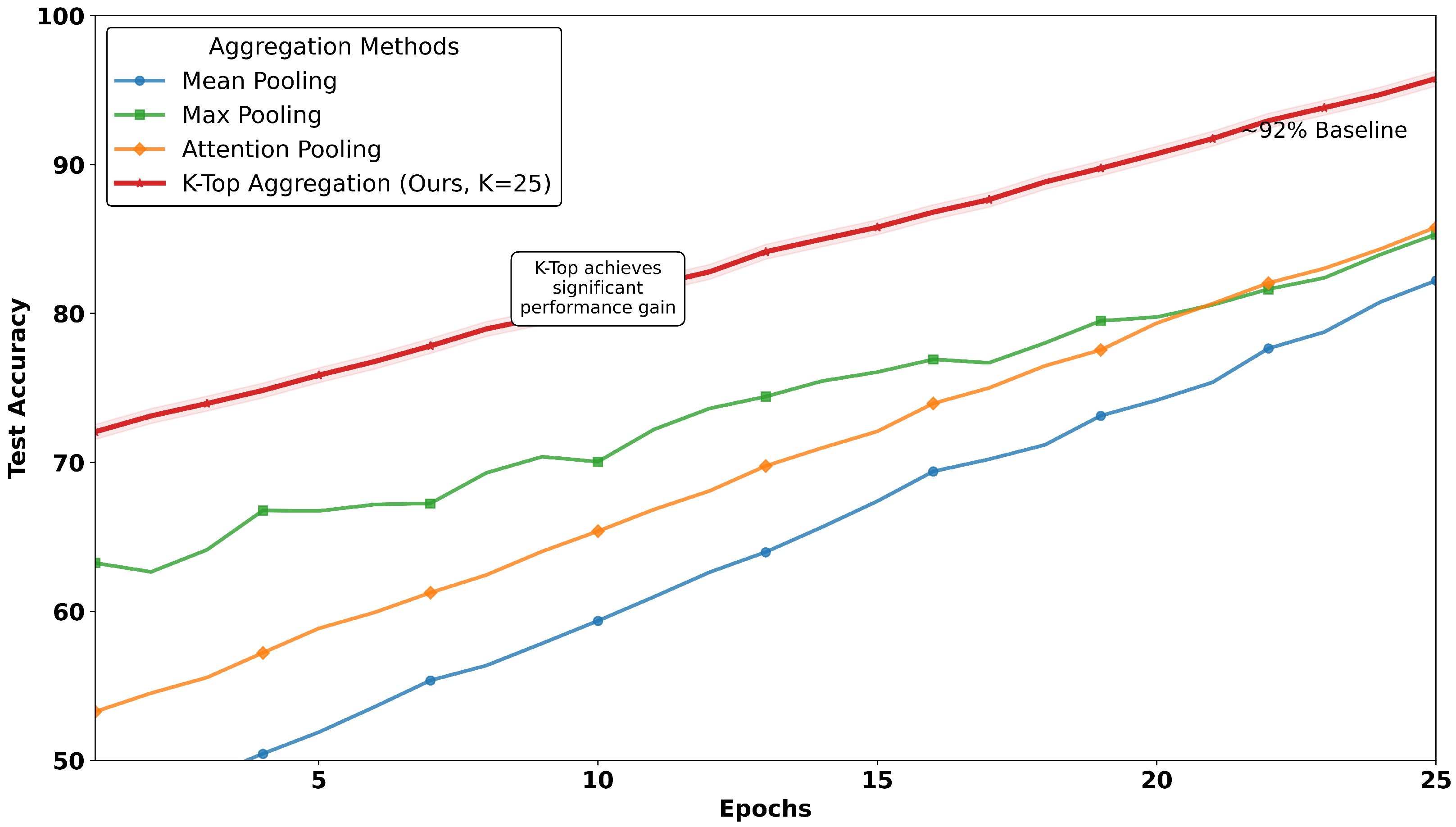

6.4. MIL Aggregators: K-TOP Tiles Superiority

In this ablation, the four aggregation methods described in Section 4 are compared.

Table 11 and Figure 9 together present the comparative analysis of MIL aggregators, where the proposed K-TOP method reaches a peak test accuracy of 95%, exceeding the conventional methods. The plot in Figure 9 tracks test accuracy over epochs and shows that K-TOP achieves a consistent and significant advantage, while the baseline methods remain below 95%: mean pooling reaches 81%, max pooling 84%, and the attention-based MIL aggregator 86%. K-TOP maintains its lead throughout training, suggesting stable convergence and effective learning dynamics. Table 11 complements the plot with a detailed multi-metric comparison that reinforces these findings.

6.5. SOTA Analysis

We compared the proposed method against several state-of-the-art (SOTA) baselines to validate its effectiveness. As established benchmarks in the field, these methods allowed us to critically assess the strengths and advances of our approach. ABMIL (“attention-based MIL”), proposed in [59], employs attention mechanisms within a MIL framework to weigh the instances in a bag, improving both performance and interpretability; it set a precedent for using instance-level features to inform bag-level predictions and provides a robust baseline for comparison. CLAM (“clustering-constrained-attention MIL”), introduced in [5], uses attention-based learning to identify sub-regions of high diagnostic value for accurate whole-slide classification, and applies instance-level clustering over the identified representative regions to constrain and refine the feature space for subtype classification. We also compare against several recent methods: DSMIL (“Dual-Stream MIL”) [66], TransMIL (“Transformer-based MIL”) [67], DTFD-MIL (“Double-tier feature distillation MIL”) [68], IBMIL (“Interventional-bag MIL”) introduced by Lin et al. [69], and, lastly, MHIM-MIL (“Masked Hard Instance Mining MIL”) [70].
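For reference, the standard (non-gated) attention pooling that defines ABMIL-style baselines can be written as below. Here h_n are the N instance embeddings of a bag, V (an L × M matrix) and w (an L-dimensional vector) are learned parameters, and z is the resulting bag embedding. These symbols follow common usage in the attention-MIL literature rather than notation defined in this paper; the gated variant additionally multiplies tanh(V h_n) element-wise by a sigmoid branch before the projection by w.

```latex
\mathbf{z} = \sum_{n=1}^{N} a_n \,\mathbf{h}_n,
\qquad
a_n = \frac{\exp\!\left\{\mathbf{w}^{\top}\tanh\!\left(\mathbf{V}\mathbf{h}_n\right)\right\}}
           {\sum_{j=1}^{N}\exp\!\left\{\mathbf{w}^{\top}\tanh\!\left(\mathbf{V}\mathbf{h}_j\right)\right\}}
```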

Table 12 compares the performance of the proposed method with existing SOTA methods. The table lists the task category of each method, and additionally compares WSI-P2P with the recent work [12] evaluated on the same UBC Ovarian Cancer Challenge dataset. A limitation of OCCNet [12] is that it uses only a one-vs.-one setting, in which the complete WSI is treated as a single tile or patch. This is time-efficient but difficult to deploy in a clinical environment and offers little robustness, because medical practitioners cannot rely on subtype classification from a single tile. In contrast, our methodology employs 50 tiles per WSI and achieves competitive performance, although further refinement is needed. The single-tile selection approach achieves an F1-score of 93.67% and a balanced accuracy of 93%. In comparison, WSI-P2P yields a maximum AUROC of 95.89% and a test accuracy of 77.67% without attention, and an AUROC of 100% with a test accuracy of 95% with the attention mechanism, demonstrating strong discrimination between subtypes. A comparative evaluation of WSI-P2P against SOTA methods on diverse datasets would further benefit computational pathology and help establish the applicability of the proposed method.
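The comparison above mixes F1-score, balanced accuracy, AUROC, and plain accuracy, and this section does not state the averaging scheme. Assuming macro averaging across subtypes (a common choice for multi-class histology benchmarks), the sketch below shows how balanced accuracy (mean per-class recall) and macro F1 are computed. The helper names and the toy labels are illustrative only and do not reproduce any reported result.

```python
from collections import Counter
from typing import Sequence, Tuple


def _confusion_counts(
    y_true: Sequence[str], y_pred: Sequence[str]
) -> Tuple[Counter, Counter, Counter]:
    """Per-class true positives, false positives, and false negatives."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p was wrong
            fn[t] += 1  # true class t was missed
    return tp, fp, fn


def balanced_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Mean per-class recall over the classes present in y_true."""
    tp, _, fn = _confusion_counts(y_true, y_pred)
    classes = sorted(set(y_true))
    recalls = [tp[c] / (tp[c] + fn[c]) for c in classes]
    return sum(recalls) / len(recalls)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over classes seen in either list."""
    tp, fp, fn = _confusion_counts(y_true, y_pred)
    classes = sorted(set(y_true) | set(y_pred))
    f1s = []
    for c in classes:
        denom = 2 * tp[c] + fp[c] + fn[c]
        f1s.append(2 * tp[c] / denom if denom else 0.0)
    return sum(f1s) / len(f1s)


if __name__ == "__main__":
    # Toy, imbalanced split: four HGSC slides and two CC slides.
    y_true = ["HGSC"] * 4 + ["CC"] * 2
    y_pred = ["HGSC"] * 3 + ["CC"] * 3
    print(balanced_accuracy(y_true, y_pred), macro_f1(y_true, y_pred))
```

On this toy split, plain accuracy is 5/6 (about 0.833) while balanced accuracy is 0.875 and macro F1 is about 0.829, which is why these metrics can diverge when subtype frequencies are imbalanced.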

Following the comparison with SOTA MIL techniques, we reviewed recent works on the UBC-OCEAN dataset in detail and compiled a comparative literature table (Table A2) that summarizes their key aspects, contributions, and limitations. Finally, we compared the proposed K-TOP MIL aggregator with existing MIL aggregators on the test dataset over 25 epochs for , which outperformed our own . The results are summarized in Figure 9.

6.6. Model Inference Analysis

The average time across experiments, measured over 25 epochs, was 38 min or h for the one-vs.-fifty tiles/WSI setting of our proposed approach, whereas for the compared methods it varied from h to h per 25 epochs under identical experimental conditions; this represents a to speed advantage, which we attribute to the key innovations adopted.

We also evaluated computational efficiency by measuring the relative model inference speed (expressed as a multiple, ×), as reported in Table 11, with attention pooling (normalized to 1×) serving as the baseline. Our K-TOP = 5 configuration achieves a significant speedup over standard attention mechanisms while maintaining competitive performance, striking a favorable balance between computational efficiency and classification accuracy. K-TOP = 25, although slightly slower than max pooling (the fastest method reported), achieved the highest accuracy of 95.72%, illustrating the flexibility of our method in trading off computational demands against performance requirements.
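The relative speeds above are normalized so that attention pooling equals 1×. Assuming the speedup of a method is defined as the baseline latency divided by that method's latency (so values above 1 mean faster), the hypothetical helpers below sketch that normalization. The timing protocol used for Table 11 is not described in this section, and the stand-in pooling functions are illustrative CPU-only Python, so any numbers they print say nothing about the reported results.

```python
import math
import statistics
import time
from typing import Callable, Dict, Sequence


def median_latency(fn: Callable, inputs: Sequence, repeats: int = 5) -> float:
    """Median wall-clock time (seconds) to apply fn to every input."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        for x in inputs:
            fn(x)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def relative_speed(latencies: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Speed of each method relative to the baseline (baseline = 1.0x)."""
    base = latencies[baseline]
    return {name: base / t for name, t in latencies.items()}


def softmax_pool(bag: Sequence[float]) -> float:
    """Hypothetical stand-in for attention pooling over scalar tile scores."""
    exps = [math.exp(s) for s in bag]
    return sum(e * s for e, s in zip(exps, bag)) / sum(exps)


def top5_pool(bag: Sequence[float]) -> float:
    """Hypothetical stand-in for K-TOP = 5: mean of the five highest scores."""
    return sum(sorted(bag, reverse=True)[:5]) / 5


if __name__ == "__main__":
    bags = [[(i * 37 % 101) / 101 for i in range(50)] for _ in range(200)]  # toy bags
    lat = {
        "attention": median_latency(softmax_pool, bags),
        "ktop5": median_latency(top5_pool, bags),
        "max": median_latency(max, bags),
    }
    print(relative_speed(lat, "attention"))
```

Using the median of several repeats reduces the influence of one-off scheduling noise; on a GPU, a real benchmark would also need warm-up runs and device synchronization before reading the clock.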

7. Limitations and Future Work

The success of K-TOP aggregation is attributed to its ability to selectively focus on the most informative instances within a bag, effectively filtering out noise and irrelevant data. Unlike mean pooling, which dilutes the signal by averaging over all instances, or max pooling, which risks overemphasizing outliers, the proposed method strikes a balance by aggregating the top K instances. This approach is especially advantageous in domains such as computational pathology, where a WSI contains vast amounts of data but only a small subset of patches is diagnostically relevant. The method’s consistently high accuracy and AUROC across a comprehensive set of experiments suggest strong potential for clinical deployment.
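The dilution and outlier arguments above can be made concrete with synthetic bags. The sketch assumes a generic top-k mean over scalar tile scores as a stand-in for K-TOP; the exact K-TOP formulation is given in the problem formulation (not reproduced in this section) and may operate on tile features or attention scores instead. All scores below are invented for illustration.

```python
from typing import Sequence


def mean_pool(scores: Sequence[float]) -> float:
    """Average over all tiles: a few positive tiles get diluted."""
    return sum(scores) / len(scores)


def max_pool(scores: Sequence[float]) -> float:
    """Single highest tile: one noisy tile can flip the bag decision."""
    return max(scores)


def top_k_pool(scores: Sequence[float], k: int) -> float:
    """Mean of the k highest tile scores (k clipped to [1, bag size])."""
    k = max(1, min(k, len(scores)))
    return sum(sorted(scores, reverse=True)[:k]) / k


if __name__ == "__main__":
    # Toy tumor bag: 1000 tiles, 20 strongly positive (0.9), the rest background (0.1).
    tumor_bag = [0.9] * 20 + [0.1] * 980
    # Toy benign bag: 1000 background tiles, one of which is a noisy outlier (0.95).
    benign_bag = [0.95] + [0.1] * 999
    for name, bag in (("tumor", tumor_bag), ("benign", benign_bag)):
        print(name, mean_pool(bag), max_pool(bag), top_k_pool(bag, 20))
```

With a 0.5 decision threshold, mean pooling scores the tumor bag at about 0.116 (missed), max pooling scores the benign bag at 0.95 (false alarm), while top-20 pooling gives about 0.9 and 0.1425 respectively, separating both bags correctly in this toy setting.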

However, we acknowledge that the current study has several limitations. Firstly, the framework is validated primarily on a single dataset, which limits assessment of its generalization across diverse patient demographics and institutional protocols. Secondly, the absence of long-term clinical validation prevents definitive conclusions about real-world utility. Lastly, while K-TOP aggregation demonstrates strong performance, further fine-tuning and optimization could enhance its robustness across varying tumor densities and morphological patterns.

Building on this foundation, we outline several directions for future work:

Additional Datasets: A comprehensive evaluation on multiple datasets with diverse patient populations and staining protocols is recommended to assess true generalization, drawing on both public and private datasets.

Domain Generalization: Future work may investigate the effectiveness of K-TOP aggregation for other cancer types and histopathological tasks beyond ovarian cancer classification.

Architectural Advancements: Transformer-based architectures, including vision transformers (ViTs) for patch-level analysis combined with K-TOP aggregation, could be explored for richer feature representations and improved long-range dependency modeling. Incorporating multimodal data (genomic, clinical, and radiomic features) could further enhance diagnostic accuracy and clinical relevance. Advanced explainability techniques could also be developed, including quantitative validation of attention maps against pathologist annotations and integration with clinical decision support systems.

Interpretability Enhancement: Lastly, we strongly recommend rigorous quantitative assessment of model explainability (e.g., saliency maps), including pathologist-in-the-loop validation of attention mechanisms and statistical correlation analysis between model focus regions and clinically relevant histopathological features.

In summary, the WSI-P2P framework represents significant progress toward developing efficient, scalable, and generalizable computational pathology tools. This study not only contributes to the evolution of digital pathology but also establishes a foundation for future research in domain-generalized whole-slide image analysis.