Abstract

Background: Multimodal deep learning has emerged as a crucial method for automated patient-specific quality assurance (PSQA) in radiotherapy research. Integrating image-based dose matrices with tabular plan complexity metrics enables more accurate prediction of quality indicators, including the Gamma Passing Rate (GPR) and dose difference (DD). However, modality imbalance remains a significant challenge, as tabular encoders often dominate training, suppressing image encoders and reducing model robustness. This issue becomes more pronounced under task heterogeneity, with GPR prediction relying more on tabular data, whereas dose difference prediction (DDP) depends heavily on image features. Methods: We propose BMMQA (Balanced Multi-modal Quality Assurance), a novel framework that achieves modality balance by adjusting modality-specific loss factors to control convergence dynamics. The framework introduces four key innovations: (1) task-specific fusion strategies (softmax-weighted attention for GPR regression and spatial concatenation for DD prediction); (2) a balancing mechanism supported by Shapley values to quantify modality contributions; (3) a fast network forward mechanism for efficient computation of different modality combinations; and (4) a modality-contribution-based task weighting scheme for multi-task multimodal learning. A large-scale multimodal dataset comprising 1370 beam fields from 210 IMRT plans was curated in collaboration with Peking Union Medical College Hospital (PUMCH). Results: Experimental results demonstrate that, under the standard 2%/3 mm GPR criterion, BMMQA outperforms existing fusion baselines. Under the stricter 2%/2 mm criterion, it achieves a 15.7% reduction in mean absolute error (MAE). The framework also enhances robustness in critical failure cases (GPR < 90%) and achieves a peak SSIM of 0.964 in dose distribution prediction.
Conclusions: Explicit modality balancing improves predictive accuracy and strengthens clinical trustworthiness by mitigating overreliance on a single modality. This work highlights the importance of addressing modality imbalance for building trustworthy and robust AI systems in PSQA and establishes a pioneering framework for multi-task multimodal learning.

1. Introduction

Cancer remains one of the leading causes of mortality worldwide, with radiation therapy serving as a cornerstone in its clinical management. Among advanced techniques, Intensity-Modulated Radiation Therapy (IMRT) has become pivotal owing to its ability to deliver precise, highly conformal dose distributions tailored to the tumor’s shape and location [1,2]. Nevertheless, the efficacy of IMRT fundamentally depends on the accuracy of dose delivery, which necessitates rigorous verification procedures. Patient-specific quality assurance (PSQA) fulfills this need by providing a comprehensive assessment of each treatment plan, ensuring that it can be executed both safely and accurately. In clinical practice, two key metrics are widely employed in PSQA: Gamma Passing Rate (GPR) and dose difference (DD) [3,4]. The GPR quantifies the percentage of dose points that satisfy predefined spatial and dosimetric tolerances, providing a coarse-grained measure of treatment plan fidelity. Conversely, the DD offers a detailed spatial distribution of dose discrepancies, yielding fine-grained insight into deviations between planned and delivered doses. Together, these metrics form the foundation for evaluating and improving the reliability of radiotherapy delivery.

Traditional PSQA methodologies are often labor-intensive, relying heavily on physical measurements and gamma analysis [5,6,7,8,9]. Recent advancements can be classified as follows: (1) manually defined complexity metrics, which tend to have limited clinical relevance; (2) classical machine learning strategies that utilize tabular features, which are constrained by a dependence on low-dimensional representations; and (3) deep learning methods that exploit dose matrices, although these often neglect the advantages of multimodal fusion. Emerging multimodal frameworks seek to enhance the prediction process by integrating dose distribution images with tabular data concerning plan complexity [10,11,12,13,14,15].

Nevertheless, prevailing fusion methodologies often neglect a critical imbalance issue: the tabular modality converges significantly faster than its image counterpart due to its lower dimensionality and simpler encoder architecture. This phenomenon is referred to as the modality imbalance problem, where the faster-converging modality tends to dominate the joint training process, thereby suppressing the contributions of image-based features and resulting in suboptimal fusion and degraded performance [16]. Modality imbalance in multimodal learning has been extensively explored, especially for classification tasks, where methods typically pair an imbalance indicator (such as output logits or gradient magnitudes) with an adjustment strategy (including gradient scaling, adaptive sampling, or loss reweighting) [2,17,18,19,20,21,22,23,24,25,26]. However, PSQA introduces unique challenges as a regression problem characterized by continuous outputs and multi-task requirements (e.g., Gamma Passing Rate prediction and dose difference maps). Unlike classification, regression exhibits distinct optimization dynamics, rendering traditional balancing strategies less effective.

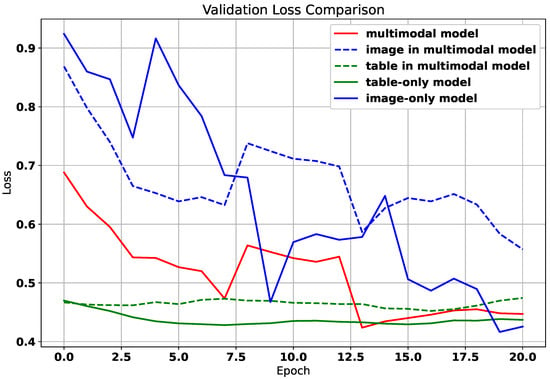

Figure 1 illustrates an introductory experiment that visualizes the convergence dynamics of different modalities. The solid lines indicate the performance of each modality when trained independently, while the dashed lines represent the performance of unimodal representations during multimodal joint training. Notably, the tabular modality converges as early as the second epoch in both scenarios but subsequently succumbs to overfitting, as evidenced by a gradual increase in loss. In contrast, the image modality continues to improve and remains unconverged even by the twentieth epoch. Importantly, the performance of each modality during joint training consistently lags behind that achieved through independent training. In this experiment, the image modality exhibits the highest performance upon convergence when trained alone, with the blue dashed line significantly surpassing its solid-line counterpart. Additionally, these results reveal a challenge related to modality preference divergence: while the tabular data primarily supports GPR prediction, the image data is more effective for fine-grained dose difference prediction. This heterogeneity complicates joint modeling but also presents an opportunity for targeted balancing.

Figure 1.

The training loss curve of different modalities.

To mitigate the above-mentioned challenges in multi-task regression for PSQA, we introduce BMMQA (Balanced Multi-modal Quality Assurance), a robust framework designed to harmonize modality- and task-specific dynamics. BMMQA integrates modality-specific learning rate adjustments to decelerate dominant modality convergence, alongside gradient normalization for stabilized joint training. Central to our approach is a hierarchical fusion mechanism: for coarse-grained GPR regression, we deploy attention-based softmax weighting to adaptively integrate modality embeddings, while spatial concatenation preserves fine-grained structures for dose difference prediction (DDP). Crucially, we derive Shapley-value-driven imbalance indicators to quantify modality contributions in continuous-output regression, enabling dynamic task-weight adjustment via gradient normalization and learning rate modulation. This dual-strategy framework not only resolves convergence asynchrony but also optimizes feature collaboration, supporting clinically reliable predictions. In collaboration with Peking Union Medical College Hospital, we curated a large multimodal dataset of 1370 beam fields from 210 IMRT plans. Extensive validation on this dataset demonstrates that BMMQA achieves state-of-the-art performance, reducing GPR MAE by 15.7% under the 2%/2 mm criterion while attaining a peak SSIM of 0.964 for dose distribution prediction.

Our main contributions are as follows:

- BMMQA is the first dedicated solution to modality imbalance for radiotherapy QA and multi-task multimodal learning, explicitly addressing convergence asynchrony where tabular features dominate training. A network forward mechanism enabling fast computation of outputs from different modality combinations is also proposed.

- BMMQA introduces task-specific fusion protocols: attention-based softmax weighting for GPR regression dynamically balances modality contributions, while spatial concatenation for DDP preserves structural fidelity. This dual-path design resolves representational conflicts between global scalar prediction and fine-grained spatial mapping.

- BMMQA develops a theoretically grounded Shapley value framework for quantifying modality contributions, tailored explicitly for continuous-output regression. This framework underpins a dynamic balancing mechanism that modulates training to prevent modality suppression.

- BMMQA innovatively addresses the problem of multi-task multimodal learning by introducing a modality-contribution-based task weighting scheme: assigning different task-specific weights according to modality contributions to resolve the modality imbalance problem in multi-task learning.

- Extensive experiments on clinically relevant datasets validate that BMMQA outperforms existing methods on both coarse-grained and fine-grained PSQA tasks, significantly improving modality collaboration and task generalization in real clinical settings.

The rest of the paper is structured as follows: Section 2 reviews recent related work. Section 3 describes the dataset, formulates the prediction tasks, and introduces the Shapley value. Section 4 presents the methodology in detail, and Section 5 discusses the experimental results. Lastly, Section 6 summarizes this work, highlights its limitations, and discusses future work.

2. Related Work

2.1. Multimodal PSQA

Multimodal deep learning frameworks have emerged to enhance PSQA by integrating dose distribution matrices (image modality) with plan complexity features (tabular modality), enabling richer and more informative predictions [10,11,12,13,14,15]. These approaches can be broadly categorized based on the stage of modality fusion. Some studies adopt early fusion models, where features from different modalities are extracted separately, concatenated, and then jointly processed by an algorithm, which is typically a machine learning model in existing studies, such as Random Forest [11] or logistic regression [13]. Other works employ a late fusion strategy using ensemble models, where each modality is trained independently to produce its own prediction. These outputs are then combined—commonly via weighted averaging, majority voting, or meta-learning—to yield the final decision. In contrast, other works [10,12] adopt intermediate fusion using end-to-end deep learning architectures, where the multimodal fusion module is jointly optimized with the encoder module during training.

For early fusion methods, existing studies typically employ traditional machine learning algorithms as the modality fusion model. Han et al. [11] incorporated a broad set of tabular features beyond MU values, including MU per control point (MU/CP), the proportion of control points with MU < 3 (%MU/CP < 3), and small segment area per control point (SA/CP). Deep features were extracted from dose matrices using a DenseNet-121 backbone. All modalities—deep, dosimetric, and plan complexity—were independently processed using dimensionality reduction techniques such as PCA and Lasso, and the resulting fused feature vector was used as input to a Random Forest (RF) model for GPR prediction. Li et al. [13] followed a similar approach, performing Lasso-based selection on dosimetric and plan complexity features, and employing logistic regression as the final prediction model. These early fusion approaches generally outperform unimodal baselines and offer relative interpretability. However, their reliance on static feature concatenation and low-dimensional input hinders fine-grained regression tasks such as DDP.

In contrast, ref. [14] proposed a late fusion approach by training two separate support vector machine models—one based on plan complexity features and the other on radiomics features—and combining their outputs to enhance beam-level GPR prediction and classification performance in SBRT (VMAT) plans. The late fusion method is typically included as a baseline in existing studies, such as in the work by Hu et al. [10], where outputs from individual modalities are aggregated at the decision level.

For intermediate fusion methods, Hu et al. [10] proposed a 3D ResNet-based architecture that encodes MLC aperture images to extract spatial features, which are then concatenated in a one-to-one manner with the corresponding MU values, a tabular feature representing plan complexity. This fused representation is used for GPR prediction and is referred to as Feature–Data Fusion (FDF). Huang et al. [12] adopted a similar input structure but employed a simpler fusion mechanism: compressing the dose matrix into a 99-dimensional vector and concatenating it with a single MU value before passing the result through fully connected layers. Ref. [15] converted delivery log files into MU-weighted fluence maps and used them as inputs to a DenseNet model to predict GPRs under multiple gamma criteria. While these intermediate fusion approaches effectively capture high-dimensional cross-modal embeddings and are well-suited for fine-grained prediction tasks such as dose difference prediction (DDP), they tend to overlook a key challenge in the PSQA context: the modality imbalance problem, where the tabular modality converges significantly faster than the image modality, leading to sub-optimal optimization and degraded model performance [16]. Table 1 provides a summary of multimodal PSQA methods.

Table 1.

Summary of multimodal PSQA methods. Symbols: ✓ = Yes; ✗ = No.

2.2. Imbalanced Multimodal Learning

The issue of modality imbalance has also drawn increasing attention in multimodal learning. In intermediate fusion settings, faster-converging modalities often dominate the joint training process, effectively suppressing the contribution of slower, more complex modalities. As a result, rich but harder-to-optimize inputs, such as high-dimensional image features, may be underutilized, leading to sub-optimal multimodal representations. Researchers have proposed modality-specific learning rate scheduling, gradient normalization (e.g., GradNorm), and adaptive weighting of modality contributions during training to mitigate this effect.

Intuitively, a multimodal model should outperform its unimodal counterparts because it has access to information from more than one view. However, existing studies show that the widely used joint training scheme does not always deliver this benefit [26], prompting researchers to investigate why. Recent studies point out that jointly trained multimodal models fail to improve as expected with additional information because of the discrepancy between modalities [23,26,27,28,29]. Wang et al. [26] found that multiple modalities often converge and generalize at different rates, so training them jointly with a uniform learning objective is sub-optimal and can leave the multimodal model inferior to unimodal ones. Winterbottom et al. [27] identified an inherent bias in the TVQA dataset towards the textual subtitle modality. Beyond these empirical observations, Huang et al. [29] theoretically proved that a jointly trained multimodal model cannot efficiently learn features of all modalities, and that only a subset of them capture sufficient representations; they called this process “Modality Competition”. Several methods have recently emerged to alleviate this problem [23,24,26,30]. Wang et al. [26] proposed adding unimodal loss functions to the original multimodal objective to balance the training of each modality. Du et al. [30] utilized well-trained unimodal encoders to improve the multimodal model through knowledge distillation. Wu et al. [23] measured how quickly the model learns from one modality relative to the others and proposed guiding the model to learn from previously underutilized modalities. Wei et al. [31] introduced a Shapley-based sample-level modality valuation metric to observe and alleviate fine-grained modality discrepancy. Wei et al. [32] further considered the possibly limited capacity of a modality and used a re-initialization strategy to control unimodal learning. In contrast, Yang et al. [33] focused on the influence of imbalanced multimodal learning on multimodal robustness and proposed a robustness enhancement strategy.

While these methods have improved multimodal learning, a comprehensive analysis of imbalanced multimodal learning is still lacking. Most previous studies have addressed modality imbalance in single-task scenarios. However, in real-world applications—such as PSQA in the medical domain—multimodal models are often designed for multi-task settings, where modality imbalance typically results in the degradation of the performance of an entire task. This is the central problem our work aims to address.

3. Foundations

This section establishes the methodological foundations for our study.

3.1. Clinically Validated Data Curation

For this study, we utilized a private dataset obtained in collaboration with the Department of Radiation Oncology at Peking Union Medical College Hospital (https://www.pumch.cn/en/introduction.html, accessed on 3 October 2025). The dataset was collected between December 2020 and July 2021. It comprises 210 FF-IMRT treatment plans, encompassing 1370 beam fields for various treatment sites; the treatment plans by site are detailed in Table 2.

Table 2.

Treatment plans by site.

All plans were generated using Eclipse TPS version 15.6 (Varian Medical Systems) and delivered via a Halcyon 2.0 linac with an SX2 dual-layer MLC. The sliding-window technique was employed for plan optimization, with dose calculation performed using the Anisotropic Analytic Algorithm (AAA, version 15.6.06) at a 2.5 mm grid resolution.

Under the TG-218 report recommendation [34], PSQA measurements were conducted before treatment delivery using actual beam angles and employing portal dosimetry. Dose calibration was performed daily before data collection. Gamma analyses were executed with criteria of mm, mm, and mm at a 10% threshold of maximum dose, analyzing only points with doses greater than 10% of the global maximum dose per beam, as detailed in Table 3. The gamma analyses were carried out in absolute dose mode, applying global normalization to the results. The treatment planning system calculated fluence maps, exported in DICOM format, which served as inputs for the deep learning network. The raw fluence maps underwent preprocessing to standardize input data: resampling to 1 × 1 resolution, cropping to 512 × 512 pixels, and Min-Max normalization to scale pixel values to [0, 1]. This comprehensive dataset, alongside rigorous PSQA measurements, underpins the proposed Virtual Dose Verification.
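To make the gamma criterion concrete, the following Python sketch computes a global gamma passing rate on a one-dimensional dose profile. It is illustrative only and is not the portal dosimetry software used in this study: the function name, the 1D setting, the discrete nearest-sample search (no interpolation), and the choice of the planned profile as the reference are all assumptions made for the example.

```python
import math

def gamma_passing_rate(reference, evaluated, spacing_mm, dose_pct, dta_mm,
                       threshold_pct=10.0):
    """Global gamma passing rate (in percent) for two 1D dose profiles.

    Assumption: both profiles are sampled on the same grid, and the search
    over evaluated points is discrete (real tools interpolate).
    """
    d_max = max(reference)
    dose_tol = dose_pct / 100.0 * d_max      # global normalization
    cutoff = threshold_pct / 100.0 * d_max   # skip low-dose points
    passed = total = 0
    for i, d_ref in enumerate(reference):
        if d_ref <= cutoff:
            continue
        total += 1
        # Squared gamma: minimum over all evaluated points of the combined
        # distance-to-agreement and dose-difference terms.
        gamma_sq = min(
            ((j - i) * spacing_mm / dta_mm) ** 2
            + ((d_eval - d_ref) / dose_tol) ** 2
            for j, d_eval in enumerate(evaluated)
        )
        if math.sqrt(gamma_sq) <= 1.0:
            passed += 1
    return 100.0 * passed / total if total else float("nan")

# Hypothetical profiles on a 1 mm grid.
planned = [0.0, 0.05, 0.5, 1.0, 1.0, 0.5, 0.05, 0.0]
shifted = [0.0] + planned[:-1]            # 1 mm spatial shift
scaled = [1.1 * d for d in planned]       # 10% dose error everywhere

rate_shifted = gamma_passing_rate(planned, shifted, 1.0, 2.0, 2.0)
rate_scaled = gamma_passing_rate(planned, scaled, 1.0, 2.0, 2.0)
```

In this toy case the 1 mm shift is absorbed by the 2 mm distance-to-agreement tolerance, whereas the uniform 10% dose error exceeds the 2% dose tolerance at every analyzed point.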

Table 3.

Selected complexity metrics, their references, and one sample in each lesion, namely, Head and Neck (H&N), Chest (C), Abdominal (A), and Pelvic (P).

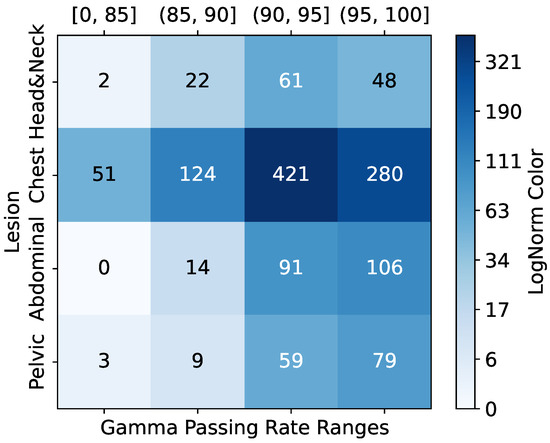

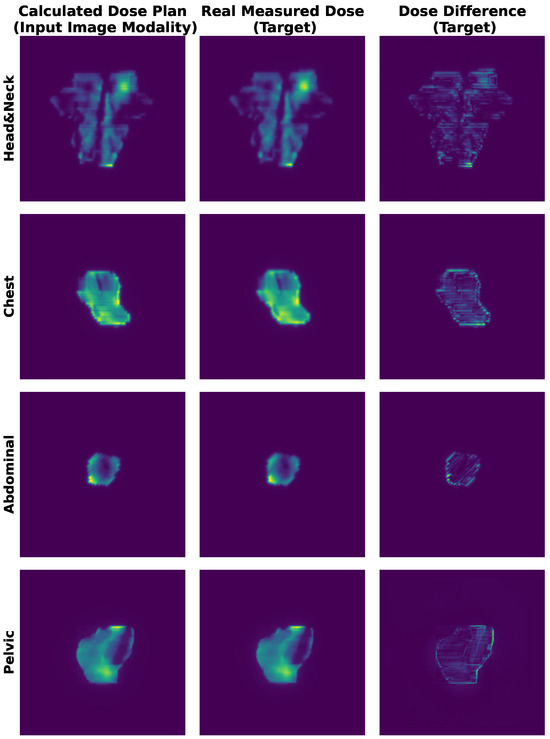

The dataset’s GPR distribution, categorized into clinical intervals (<85%, 85–90%, 90–95%, >95%), revealed site-specific trends, as shown in Figure 2. For example, head and neck (H&N) plans exhibited higher complexity (51 beams below 85% GPR), whereas chest (C) plans demonstrated the best compliance (106 beams > 95% GPR). Qualitative PSQA validation, shown in Figure 3, further compared TPS-calculated doses, measured doses, and absolute dose differences across four representative IMRT plans, with pixel intensity (darker = higher dose in Gy) highlighting discrepancies, particularly in high-gradient regions. These visualizations collectively underscore the dataset’s variability and the critical role of rigorous QA, especially for anatomically complex sites such as H&N.

Figure 2.

Sample GPR and lesion distribution.

Figure 3.

Representative IMRT QA results for four treatment plans. (Left) TPS-calculated dose distribution. (Middle) Measured dose via portal dosimetry. (Right) Absolute dose differences. Pixel intensity corresponds to delivered dose (Gy).

3.2. Task Formulation

In our PSQA task, each input sample $X$ comprises two modalities: an image modality $x^{img}$ and a tabular modality $x^{tab}$. The image modality is a $512 \times 512$ matrix representing relative dose intensity values normalized to the range $[0, 1]$. The tabular modality is a 33-dimensional vector composed of plan complexity metrics.

We consider two prediction tasks. The first is GPR prediction, where the target variable is a three-dimensional vector corresponding to three standard clinical criteria. The second is DDP, where the target variable is a matrix aligned with the spatial resolution of the image input.

Table 4 summarizes the mathematical symbols used throughout this study. Superscripts indicate modalities (e.g., img, tab), while subscripts refer to task-specific roles (e.g., gpr, ddp). These definitions provide a consistent multimodal framework for modeling the PSQA task, enabling both coarse-grained and fine-grained prediction objectives.

Table 4.

Mathematical symbol table.

3.3. Shapley Value

The Shapley value [42] was originally formulated in coalitional game theory to fairly allocate the total payoff among players based on their contributions. A multi-player game is typically described by a real-valued utility function $V$ defined over a set of players $\mathcal{N} = \{1, \dots, n\}$. Players can form various coalitions (i.e., subsets of $\mathcal{N}$), and their collective interactions can be either cooperative or competitive.

To quantify the contribution of a specific player $i$, consider a coalition $S \subseteq \mathcal{N} \setminus \{i\}$. The marginal contribution of player $i$ to coalition $S$ is defined as

$$\Delta_i(S) = V(S \cup \{i\}) - V(S).$$

Since a player’s influence depends on all possible coalitions it may join, the Shapley value is defined as the average of its marginal contributions across all such subsets, weighted by the size of the coalitions:

$$\phi_i(V) = \sum_{S \subseteq \mathcal{N} \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!}\, \bigl( V(S \cup \{i\}) - V(S) \bigr).$$

A fair allocation of payouts in cooperative game theory is characterized by four fundamental axioms: Efficiency, Symmetry, Dummy, and Additivity. Among attribution methods, the Shapley value is uniquely defined as the only solution that satisfies all four properties [43]. More formally:

Efficiency: The total payout is fully distributed among all players, i.e., $\sum_{i \in \mathcal{N}} \phi_i(V) = V(\mathcal{N}) - V(\emptyset)$, where $V(\emptyset)$ represents the utility of the empty set (baseline with no players).

Symmetry: If two players $i$ and $j$ contribute equally to every coalition, that is, $V(S \cup \{i\}) = V(S \cup \{j\})$ for all $S \subseteq \mathcal{N} \setminus \{i, j\}$, then their attributions must be identical: $\phi_i(V) = \phi_j(V)$.

Dummy: A player $i$ is considered a dummy if its inclusion does not affect any coalition beyond its standalone contribution, i.e., $V(S \cup \{i\}) = V(S) + V(\{i\})$ for all $S \subseteq \mathcal{N} \setminus \{i\}$. In this case, the player’s attribution equals its value: $\phi_i(V) = V(\{i\})$.

Additivity: For any two games with utility functions $V$ and $W$, their combined game is defined as $(V + W)(S) = V(S) + W(S)$ for all $S \subseteq \mathcal{N}$. The attribution in the combined game satisfies linearity: $\phi_i(V + W) = \phi_i(V) + \phi_i(W)$.

These axioms collectively define the criteria for fair attribution, and it has been mathematically proven that the Shapley value is the only allocation method that satisfies them all [44].
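As a small worked illustration of the definition, the sketch below computes exact Shapley values by enumerating all coalitions, which is feasible when the players are just a handful of modalities. The function name and the utility numbers are hypothetical (here the utility of a modality subset is taken to be a negative validation MAE); they are assumptions for the example and not results from this study.

```python
from itertools import combinations
from math import factorial

def shapley_values(players, utility):
    """Exact Shapley values by enumerating every coalition.

    `utility` maps a frozenset of players to a real number.
    """
    n = len(players)
    values = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):  # k = size of the coalition S that p joins
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (utility(s | {p}) - utility(s))
        values[p] = total
    return values

# Hypothetical utilities: negative MAE obtained with each modality subset.
scores = {
    frozenset(): -10.0,
    frozenset({"img"}): -4.0,
    frozenset({"tab"}): -3.0,
    frozenset({"img", "tab"}): -2.0,
}
phi = shapley_values(["img", "tab"], lambda s: scores[s])
# Efficiency check: the attributions sum to V(all players) - V(empty set).
gap = sum(phi.values()) - (scores[frozenset({"img", "tab"})] - scores[frozenset()])
```

With two modalities only four coalitions need to be evaluated, so exact enumeration is cheap; the cost grows exponentially with the number of players.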

Game-theoretic principles have been widely adopted in machine learning research [45,46,47]. For instance, game theory has been used to explain the effectiveness of ensemble methods such as AdaBoost [45]. In a related line of work, Hu et al. [48] utilize the Shapley value to assess the overall contribution of individual modalities across an entire dataset. However, their approach does not address modality contributions at the sample level and lacks a deeper analysis of underperforming modalities.

In this work, we propose a method for sample-level modality valuation, providing a more fine-grained understanding of modality importance. Furthermore, we analyze and propose solutions to mitigate the impact of low-contributing modalities.

4. Methodology

4.1. Overview

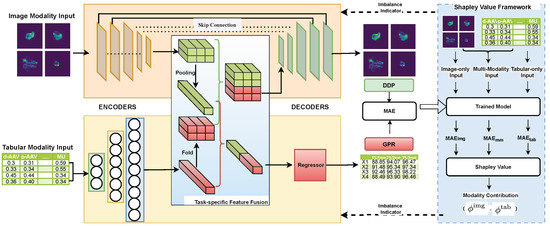

As Figure 4 depicts, the BMMQA framework integrates four modular components for robust radiotherapy quality assurance: an input layer processes dual-modality data (spatial dose matrices and tabular complexity metrics); modality encoders transform inputs into high-level features via pre-trained image encoding and MLP-based tabular embedding; task-specific feature fusion dynamically balances contributions using attention-based weighting for GPR regression and spatial concatenation for DDP mapping; and decoders generate task-specific predictions (scalar GPR values and 512 × 512 DDP matrices via U-Net decoding). This structured pipeline supports modality-aware feature collaboration while preserving spatial integrity for clinical reliability.

Figure 4.

Architecture diagram of the proposed BMMQA.

To further mitigate modality imbalance during training, BMMQA incorporates a dynamic balancing mechanism that integrates multiple synergistic components: (1) Task-specific fusion strategies: a softmax-weighted attention mechanism is used for GPR regression, while spatial concatenation is applied for DD prediction. (2) A theoretically supported balancing mechanism: Shapley values, particularly suited for multi-task regression, are employed to quantify the contribution of each modality. (3) A fast network forward mechanism designed to compute the output of different modality combinations efficiently. (4) A modality-contribution-based task weighting scheme developed explicitly for multi-task multimodal learning, which assigns task-specific weights according to modality contributions to address the modality imbalance problem in multi-task learning.

This closed-loop design enhances robustness, particularly in critical failure cases (e.g., GPR < 90%), by preserving feature collaboration integrity across both coarse-grained and fine-grained PSQA tasks.

4.2. Modality Encoders

The model input consists of two modalities: the image modality (dose plan arrays) $x^{img}$ and the tabular modality (plan complexity metrics) $x^{tab}$. The image modality is passed through a convolutional or Transformer-based pretrained encoder (e.g., ResNet) to extract spatial features, yielding a high-dimensional embedding $h^{img} \in \mathbb{R}^{C^{img} \times H \times W}$, where $C^{img}$ is the number of channels, and $H$ and $W$ denote the spatial dimensions.

To ensure dimensional compatibility for subsequent fusion, the tabular modality is processed by an MLP encoder to extract structured feature embeddings. These embeddings are initially represented as $h^{tab} \in \mathbb{R}^{C^{tab} \cdot H \cdot W}$, where $\cdot$ denotes scalar multiplication.

The encoding process can be formalized as:

$$h^{img} = E^{img}(x^{img}), \qquad h^{tab} = E^{tab}(x^{tab}),$$

where $E^{img}$ and $E^{tab}$ denote the image and tabular encoders, respectively.

4.3. Task-Specific Modality Fusion

The intrinsic task heterogeneity between Gamma Passing Rate (GPR) regression (scalar output) and dose difference prediction (DDP) (spatial matrix output) necessitates divergent fusion strategies. This duality manifests in two critical dimensions. Representational conflict: GPR regression requires compact, modality-agnostic feature abstraction for global dose compliance assessment, while DDP demands high-resolution spatial preservation for localized discrepancy mapping. Optimization incompatibility: naive uniform fusion (e.g., concatenation or averaging) causes representational interference, as spatial details critical for DDP are diluted by low-dimensional tabular features optimized for GPR. To resolve this duality, BMMQA introduces a task-specific modality fusion mechanism. GPR-oriented fusion prioritizes attention-based modality weighting to distill task-relevant signals while suppressing noise through differentiable softmax gates. DDP-oriented fusion maintains structural integrity via channel-wise spatial alignment, preserving high-resolution image embeddings without destructive compression.

4.3.1. GPR-Oriented Fusion

In the GPR task, the objective is to predict coarse-grained scalar values rather than spatial maps. We first apply spatial pooling to the high-dimensional feature maps extracted from the image modality to align the modalities for this point-wise regression. This yields a compact image embedding that is dimensionally compatible with the tabular embedding. The two embeddings are then concatenated to form a joint representation for regression.

Due to the opaque nature of deep networks, assessing modality imbalance during training requires visibility into how each modality contributes to the final output. While prior work has proposed feed-forward architectures that isolate modal contributions, these are typically designed for classification tasks, where the output is normalized via softmax. In regression tasks like PSQA, the outputs are not inherently normalized, which leads to scale shift problems when isolating modality contributions, rendering many imbalance indicators ineffective.

To address this, we adopt an attention-based fusion mechanism on the modality embeddings. Instead of normalizing at the output layer, we apply a softmax weighting to the modality embeddings during fusion. This design enables transparent and differentiable attribution of modality contributions, making it suitable for imbalance assessment in regression settings.

Each modality embedding is projected to a scalar score $s$ via a learnable linear transformation:

$$s^{m} = W^{m} h^{m}, \qquad m \in \{img, tab\}.$$

For notational simplicity, we write $W^{m} h^{m}$ as a matrix multiplication, where $h^{m}$ are feature maps. In practice, spatial pooling (e.g., global average pooling) is applied to ensure shape compatibility before the projection. This convention is adopted throughout the remainder of this paper.

The scores $s$ are normalized using a softmax function to obtain attention weights that reflect the relative contribution of each modality:

$$\alpha^{m} = \frac{\exp(s^{m})}{\sum_{m' \in \{img, tab\}} \exp(s^{m'})}.$$

The final fused representation is computed as a weighted combination of the modality-specific embeddings:

$$z_{gpr} = \sum_{m \in \{img, tab\}} \alpha^{m} h^{m}.$$

We denote $z_{gpr}^{\{img, tab\}}$ as the joint representation derived from both image and tabular modalities. For clarity, we simplify this as $z_{gpr}$ when both modalities are present.
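The fusion steps described above (score, softmax, weighted combination) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: embeddings are taken to be already pooled to vectors of equal length, the weighted combination is taken to be a weighted sum, and the function names and toy numbers are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def attention_fuse(embeddings, score_weights):
    """Softmax-weighted fusion of pooled modality embeddings.

    embeddings:    dict modality -> list[float], all of the same length
    score_weights: dict modality -> list[float], the linear scoring vector
    Returns (fused_vector, attention_weights_by_modality).
    """
    names = sorted(embeddings)
    # One scalar score per modality via a linear projection.
    scores = [
        sum(w * h for w, h in zip(score_weights[m], embeddings[m]))
        for m in names
    ]
    alphas = softmax(scores)
    dim = len(embeddings[names[0]])
    fused = [
        sum(a * embeddings[m][d] for a, m in zip(alphas, names))
        for d in range(dim)
    ]
    return fused, dict(zip(names, alphas))

# Toy embeddings and scoring vectors (hypothetical values).
emb = {"img": [1.0, 0.0], "tab": [0.0, 1.0]}
w = {"img": [2.0, 0.0], "tab": [0.0, 0.0]}
fused, alphas = attention_fuse(emb, w)
# Passing only a subset of modalities renormalizes the weights over it.
fused_img_only, alphas_img_only = attention_fuse({"img": emb["img"]}, w)
```

Because the weights are normalized over whichever modalities are supplied, the same function can be evaluated on any modality subset, which is convenient when comparing modality combinations.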

4.3.2. DDP-Oriented Fusion

Since dose difference prediction requires generating spatial outputs, it is essential to preserve spatial information from the high-dimensional image modality. We argue that applying modality attention in this task is suboptimal. Therefore, this stage does not use the attention-based modality fusion mechanism described in the previous section.

To align the tabular features with the spatial structure of the image features, we first fold the tabular embedding vector $h^{tab}$ into feature maps $\tilde{h}^{tab} \in \mathbb{R}^{C^{tab} \times H \times W}$. The reshaped $\tilde{h}^{tab}$ is then concatenated with the image feature map $h^{img}$ along the channel dimension to form the joint representation $z_{ddp}$. Notably, the number of tabular channels $C^{tab}$ is intentionally set much smaller than the number of image channels $C^{img}$, reflecting our prior assumption that the DDP task should place greater emphasis on the image modality, which inherently preserves spatial information.
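The fold-and-concatenate step can be illustrated with nested Python lists standing in for tensors. The sketch below is an assumption-laden toy, not the authors' code: the helper names, the row-major fold order, and the tiny shapes are chosen only to show how a flat tabular embedding becomes a few extra channels next to the image feature maps.

```python
def fold(vector, channels, height, width):
    """Reshape a flat vector into channels x height x width (row-major)."""
    if len(vector) != channels * height * width:
        raise ValueError("vector length must equal channels * height * width")
    it = iter(vector)
    return [
        [[next(it) for _ in range(width)] for _ in range(height)]
        for _ in range(channels)
    ]

def concat_channels(image_maps, tabular_maps):
    """Concatenate two channel-first feature maps along the channel axis."""
    h, w = len(image_maps[0]), len(image_maps[0][0])
    for fmap in tabular_maps:
        if len(fmap) != h or any(len(row) != w for row in fmap):
            raise ValueError("spatial dimensions must match")
    return image_maps + tabular_maps

# Toy shapes: 3 image channels and 1 tabular channel on a 2 x 2 grid.
image_maps = [[[float(c)] * 2 for _ in range(2)] for c in range(3)]
tabular_maps = fold([0.1, 0.2, 0.3, 0.4], channels=1, height=2, width=2)
joint = concat_channels(image_maps, tabular_maps)
shape = (len(joint), len(joint[0]), len(joint[0][0]))
```

Keeping the tabular channel count small relative to the image channel count, as in this toy example, leaves most of the joint representation to the spatially structured image features.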

4.4. Task-Specific Decoders

The fused multimodal representations ($z_{gpr}$ and $z_{ddp}$) require distinct decoding pathways tailored to the dimensional characteristics of their respective prediction tasks. The scalar output of GPRs necessitates progressive feature compression through fully connected layers, which transform high-dimensional inputs into compact regression targets. Conversely, the matrix output of DDP requires the maintenance of topological consistency through convolutional decoders that preserve spatial relationships.

4.4.1. GPR Decoder

After attention-based fusion, the resulting joint representation is further processed to enhance non-linearity and enable prediction. Specifically, the fused vector is first passed through a ReLU activation function, followed by an MLP to generate the final GPR prediction:

Here, the MLP consists of one or more fully connected layers that transform the activated fusion output into a scalar regression value. This structure allows the model to capture complex interactions between modalities beyond the initial linear fusion.

To optimize the GPR regression model, we adopt the mean absolute error (MAE) as the training loss:

4.4.2. Dose Difference Decoder

To generate matrix-form outputs, we adopt a U-Net-style decoder, commonly used in image segmentation tasks. The joint representation is passed through a series of convolutional layers that progressively reduce the channel dimension to 1, resulting in a continuous-valued prediction of the dose difference map.

To ensure a consistent loss scale for multi-task training, we also adopt the MAE as the training loss for the DDP matrix regression task:

Here, the loss is computed between the ground-truth and predicted dose difference matrices and averaged over the total number of spatial elements.

4.5. Dynamic Balancing Mechanism

The persistent dominance of tabular modalities during joint optimization necessitates a rigorous quantification framework to address two fundamental manifestations of imbalance: (1) gradient suppression, where earlier-converging modalities produce vanishing gradients that hinder updates to slower modality encoders, and (2) representational collapse, wherein non-dominant modalities fail to achieve optimal capacity due to inhibited feature learning. To mitigate these issues, we base our balancing mechanism on coalitional game theory, utilizing Shapley values to provide an axiomatically fair attribution metric suitable for continuous-output regression scenarios.

4.5.1. Shapley-Based Imbalance Indicator

To reveal the extent of modality imbalance, we formalize modality ablation within the feed-forward process by defining the following single-modality and null-modality variants:

Image-only Forward. When only the image modality is used, the fusion reduces to:

Tabular-only Forward. Similarly, when only the tabular modality is used, the fused representation becomes:

Null-modality Forward. To formulate a no-input reference, we define the null-modality fusion as:

Since Shapley values are typically concerned with relative differences in contribution, and this null case is shared across all Shapley value calculations, it can be safely assigned a fixed value of zero in practice without affecting the consistency of the final attribution results.

Building on this foundation, we adopt a Shapley value framework as a principled attribution method to quantify the degree of modality imbalance in our multimodal regression setting. Given a set of modalities, we define the power set of possible modality combinations as:

For each subset S, we compute a value function as the negative mean absolute error (MAE) over the validation set when the model is evaluated with only the modalities in S:

where the model prediction is obtained with only modality subset S available, as defined in our modality ablation forward formulation.

Following the standard two-modality Shapley decomposition, the contribution (or marginal utility) of each modality can be computed as:

These values reflect each modality’s average marginal contribution across all possible input subsets. In our implementation, since the value of the empty subset is a constant term shared across all Shapley computations, it is set to zero without loss of generality. The resulting Shapley scores serve as modality imbalance indicators: lower values indicate weaker modality utility during prediction, highlighting convergence imbalance or representation dominance issues.

Equation (16) provides the formal definition of modality-level Shapley values. In practice, we substitute the contribution function defined in Equation (15) into the expression in Equation (16), yielding the following MAE-based formulation:

This formulation allows us to interpret the marginal contribution of each modality as the average reduction in prediction error across all possible inclusion orders.
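The two-modality Shapley decomposition can be checked numerically with a short sketch. It is an illustration under stated assumptions, not the authors' code: the function name `shapley_two_modalities`, the modality labels, and the validation MAE values are hypothetical, while the value function follows the text (negative validation MAE, with the empty subset fixed to zero).

```python
def shapley_two_modalities(v):
    """Shapley values for two players, given a value for every subset.

    v: dict mapping frozenset of modality names -> value (here: negative MAE)
    Returns (phi_image, phi_tabular).
    """
    empty = frozenset()
    img = frozenset({"image"})
    tab = frozenset({"tabular"})
    both = frozenset({"image", "tabular"})
    # Average the marginal gain over the two possible inclusion orders.
    phi_img = 0.5 * (v[img] - v[empty]) + 0.5 * (v[both] - v[tab])
    phi_tab = 0.5 * (v[tab] - v[empty]) + 0.5 * (v[both] - v[img])
    return phi_img, phi_tab


# Hypothetical validation MAEs: image-only 3.0, tabular-only 2.0, both 1.5.
v = {
    frozenset(): 0.0,  # null-modality value fixed to zero
    frozenset({"image"}): -3.0,
    frozenset({"tabular"}): -2.0,
    frozenset({"image", "tabular"}): -1.5,
}
phi_img, phi_tab = shapley_two_modalities(v)
```

In this toy case the tabular modality obtains the larger Shapley value, which is the pattern the imbalance indicator is designed to detect. The two values also sum to the value of the full coalition minus that of the empty subset, which is the efficiency property of Shapley values.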

4.5.2. Adjustment Strategy

Given that the two tasks exhibit distinct modality preferences, we introduce a loss weighting strategy to regulate modality balance during training. Specifically, in the GPR prediction task, the low-dimensional tabular features tend to dominate early convergence due to their high information density. In contrast, the dose difference task, which requires fine-grained spatial matrix regression, relies more heavily on image modality to capture spatial context.

Thus, we propose a dynamic loss weighting strategy based on modality attribution to address modality imbalance. We define the DDP loss coefficient as:

where the Shapley value quantifies the contribution of the tabular modality in GPR prediction, and r is the Modality Balance Factor, a hyperparameter. The overall training objective is thus formulated as:

This formulation increases the emphasis on the image modality (via the DDP task) when the tabular modality exhibits dominance, helping to balance modality convergence across tasks.

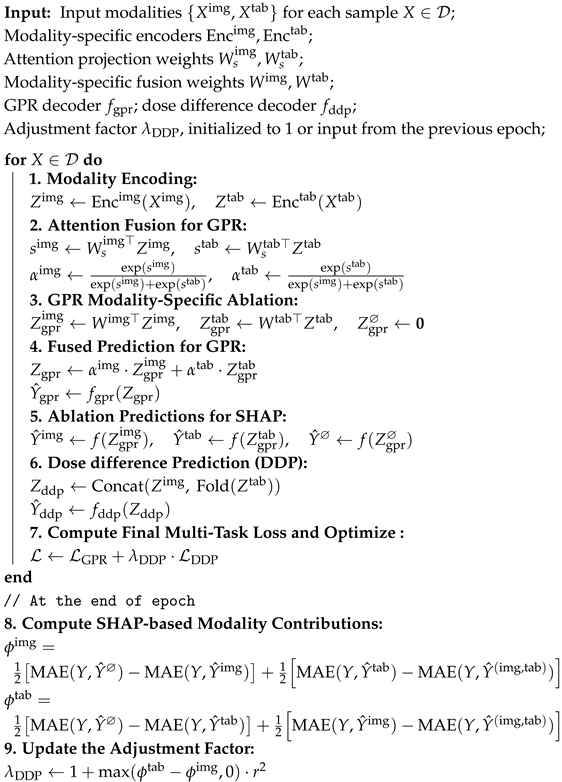

Algorithm 1 shows the per-epoch training procedure of BMMQA.

Algorithm 1: Per-epoch Training Procedure of BMMQA
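As a rough illustration of how such a per-epoch procedure could be organized, the sketch below strings together the steps described in Section 4.5: attribute on validation data, derive the DDP loss coefficient, then train on the weighted objective. Several parts are assumptions rather than the published algorithm: the ordering of the steps, the additive form of the joint loss, and in particular `example_rule`, which is a hypothetical stand-in for the coefficient formula and is not the expression used in BMMQA. The callables `value_fn`, `loss_fn`, and `update_fn` are placeholders for framework-specific code.

```python
import math


def shapley_tabular(v_empty, v_img, v_tab, v_both):
    """Two-modality Shapley value of the tabular modality."""
    return 0.5 * (v_tab - v_empty) + 0.5 * (v_both - v_img)


def example_rule(phi_tab, r):
    """HYPOTHETICAL coefficient rule: positive and increasing in phi_tab.

    This is not the paper's formula; it only stands in for it.
    """
    return math.exp(r * phi_tab)


def run_epoch(batches, value_fn, loss_fn, update_fn, r, rule=example_rule):
    """One epoch: attribute on validation data, reweight, then train.

    value_fn(subset) -> negative validation MAE of GPR for that modality subset
    loss_fn(batch)   -> (gpr_loss, ddp_loss) for one training batch
    update_fn(loss)  -> applies one optimizer step (framework-specific)
    """
    phi_tab = shapley_tabular(
        0.0,  # null-modality value fixed to zero
        value_fn(("image",)),
        value_fn(("tabular",)),
        value_fn(("image", "tabular")),
    )
    coef = rule(phi_tab, r)
    epoch_loss = 0.0
    for batch in batches:
        gpr_loss, ddp_loss = loss_fn(batch)
        total = gpr_loss + coef * ddp_loss  # assumed form of the joint objective
        update_fn(total)
        epoch_loss += total
    return coef, epoch_loss / len(batches)


# Toy run with constant losses and hypothetical validation values.
vals = {("image",): -3.0, ("tabular",): -2.0, ("image", "tabular"): -1.5}
steps = []
coef, avg_loss = run_epoch(
    [1, 2], vals.get, lambda b: (1.0, 2.0), steps.append, r=0.0
)
```

Passing the coefficient rule as an argument keeps the attribution and training loop independent of the exact weighting formula, so the published expression can be substituted without changing the rest of the loop.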

5. Evaluation and Results

This section comprehensively analyzes our proposed BMMQA for achieving robust automated PSQA.

5.1. Implementation Setting

The framework processes resized dose arrays through a dual-task architecture: (1) a GPR regression head (AdaptiveAvgPool2d → Flatten → Dropout(0.1) → Linear(512, 3)) predicting the GPR under three gamma criteria, and (2) a U-Net decoder with channels [768, 384, 192, 128, 32] for dose difference prediction. Training used the Adam optimizer with a batch size of 32 for 30 epochs and a 7:1:2 train:validation:test split, with dropout regularization applied throughout the network; the parameter settings are detailed in Table 5. To ensure representativeness, we employed multi-factor stratified sampling (Figure 2), taking into account both binned Gamma Passing Rates (continuous regression targets) and lesion categories (categorical feature). This approach prevents biased splits (e.g., training only on success cases or rare lesion types in isolation) and ensures that each subset covers the full difficulty spectrum and lesion diversity, thereby improving fairness and reproducibility.

Table 5.

Parameter settings.
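The multi-factor stratified 7:1:2 split can be sketched as follows. This is a minimal illustration under assumptions: the GPR bin edges (95 and 90) are borrowed from the intervals used later in the evaluation and may differ from the bins actually used for sampling, and the function names, field names, and toy plans are hypothetical.

```python
import random
from collections import defaultdict


def gpr_bin(gpr):
    """Assumed binning of GPR values (percent) into three intervals."""
    if gpr >= 95:
        return "95-100"
    if gpr >= 90:
        return "90-95"
    return "<90"


def stratified_split(samples, key_fn, ratios=(0.7, 0.1, 0.2), seed=0):
    """Split samples so that every stratum follows `ratios` as closely as possible."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for s in samples:
        strata[key_fn(s)].append(s)
    train, val, test = [], [], []
    for key in sorted(strata):
        group = strata[key]
        rng.shuffle(group)
        n_train = round(ratios[0] * len(group))
        n_val = round(ratios[1] * len(group))
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]
    return train, val, test


# Toy dataset: 2 lesion types x 3 GPR levels x 10 plans each (hypothetical).
plans = [
    {"gpr": g, "lesion": lesion}
    for lesion in ("head_neck", "lung")
    for g in (98.0, 92.0, 85.0)
    for _ in range(10)
]
train, val, test = stratified_split(
    plans, key_fn=lambda p: (gpr_bin(p["gpr"]), p["lesion"])
)
```

Because the split is performed within each (GPR bin, lesion category) stratum, rare failing plans appear in the training, validation, and test subsets rather than being concentrated in one of them.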

5.2. Evaluation Metrics

We evaluate BMMQA performance using mean absolute error (MAE), root mean square error (RMSE), and the coefficient of determination (R²) for the GPR task, and both MAE and the Structural Similarity Index Measure (SSIM) for the DDP task.
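For reference, the three scalar regression metrics can be computed as in the following sketch, which uses the standard textbook definitions; the function names and the toy GPR values are illustrative only. SSIM is omitted because it requires windowed image statistics.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy GPR values in percent (hypothetical).
y_true = [90.0, 95.0, 100.0]
y_pred = [92.0, 95.0, 98.0]
scores = (mae(y_true, y_pred), rmse(y_true, y_pred), r2_score(y_true, y_pred))
```

Note that R² is undefined when all ground-truth values in a group are identical (the denominator is zero) and becomes unstable when a group spans a narrow range, which is one reason for reporting it only on the full test set.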

5.3. Baseline Methods

This subsection presents the baseline PSQA methods as benchmarks for comparison with the proposed BMMQA approach.

Previous studies have predominantly focused on single-modal approaches, which form the foundation of the single-modal baseline methods selected in this work.

To comprehensively evaluate the impact of different modalities in PSQA, we grouped the experimental methods into four categories: tabular-only, image-only, unbalanced multimodal (tabular and image modalities combined without balancing), and balanced multimodal. For each setting, both tasks (GPR and DDP) were trained simultaneously. We then analyzed the performance across various metrics and visualized the results using diagrams and tables.

Methods based on the tabular modality have primarily relied on traditional machine learning approaches in previous studies. In particular, regression tree models and linear regression models have been the most widely adopted in PSQA research: tree-based models have been employed in several works [41,49,50,51], while linear regression models have also been tested in related studies [41,50,52], including Poisson Lasso regression as a representative approach. Following Zhu et al. (2023) [41], we therefore employed Gradient Boosting Decision Tree (GBDT), Random Forest (RF), and Poisson Lasso (PL) models as representative baselines. These models utilize manually designed complexity metrics as input features to predict the Gamma Passing Rate (GPR), ensuring consistency with prior work, enabling direct comparability, and supporting reproducibility. Detailed descriptions of their implementation can be found in [41].

For image modality baselines, ref. [53] proposed a ResNet-based UNet++ architecture for predicting GPR and dose differences, while [54] introduced TransQA, a Transformer-based model designed for the same tasks. To address data imbalance, ref. [8] combined RankLoss with a DenseNet backbone to enhance the robustness of GPR predictions. For consistency in comparison, we also incorporated RegNet [55] to evaluate the performance of a more recent CNN backbone.

In our study, the last two categories of methods, unbalanced and balanced multimodal approaches, share the same network architecture, with the key distinction being whether a modality balancing algorithm is applied. Since the tabular modality is relatively simple, it typically employs a fixed encoder structure [10]; therefore, the main variation across settings lies in the choice of the image modality encoder. Specifically, we selected ResNet18 [53,56], DenseNet121 [8,15,57], MobileViT [54,58], and RegNet [55,59]. ResNet and DenseNet represent widely adopted classical CNN backbones in prior PSQA-related studies, MobileViT provides a lightweight variant, while RegNet is a relatively recent CNN family proposed around the same period as Transformer-based approaches and has demonstrated promising potential in medical imaging applications. Together, these encoders cover classical, lightweight, and modern high-performing architectures, ensuring consistency with prior baselines and enabling fair comparisons.

The main architectures employed in PSQA prediction are convolutional neural networks (CNNs) and, more recently, Transformer-based models. Among CNNs, ResNet and DenseNet have been widely adopted in prior PSQA-related studies [8,15,53,56,60], while MobileViT [54,58] represents a lightweight variant. To complement these, we also included RegNet [61], a relatively recent CNN family proposed around the same period as Transformer-based approaches, which has demonstrated promising potential in medical image generation tasks [59]. Together, these encoders provide a representative spectrum across classical, lightweight, and modern high-performing architectures, ensuring consistency with prior baselines and enabling fair comparisons.

The overall experimental settings remain largely consistent for the DDP task. However, since the tabular modality alone cannot independently accomplish the DDP task, it is not included in the corresponding results table.

5.4. EXP-A: Comparative Experiment with PSQA Methods

5.4.1. GPR Result Analysis

Table 6 reports the GPR prediction performance of various models under three commonly used gamma criteria (1%/1 mm, 2%/2 mm, and 2%/3 mm), with results further stratified by ground-truth GPR intervals: All, 95–100, 90–95, and <90. The choice of intervals follows the recommendations of the AAPM Task Group 218 report [34], which defines a universal tolerance limit of GPR ≥ 95% (with the 3%/2 mm criterion and a 10% dose threshold) for clinical acceptance, and a universal action limit of GPR ≥ 90% under the same criterion. Therefore, we stratified the results into three clinically meaningful categories: 95–100% (passing and acceptable), 90–95% (borderline, close to the action limit), and <90% (failing plans requiring further investigation). Since R² is known to be overly sensitive under sparse subgroup data distributions, we report R² only for the overall (All) group to ensure meaningful and stable interpretation.

Table 6.

GPR performance comparison.

This stratification allows for a more nuanced evaluation beyond global accuracy, especially regarding clinically relevant but underrepresented failure cases. In practical radiotherapy quality assurance, plans with GPR < 90% are usually considered to have failed. Misclassifying these failing plans as acceptable poses a significant clinical risk, far greater than conservative rejection of marginally acceptable plans. Moreover, such failure cases account for only a small proportion of the dataset, making the <90 subgroup rare and highly valuable for evaluating model robustness and practical deployment readiness.
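The stratified evaluation can be sketched as below. The grouping is done by ground-truth GPR, as described above; the handling of boundary values (for example, assigning exactly 95% to the 95–100 group) is an assumption, and the function name and toy values are hypothetical.

```python
def stratified_mae(y_true, y_pred):
    """MAE overall and within ground-truth GPR intervals (values in percent)."""
    groups = {"All": [], "95-100": [], "90-95": [], "<90": []}
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        groups["All"].append(err)
        if t >= 95:
            groups["95-100"].append(err)
        elif t >= 90:
            groups["90-95"].append(err)
        else:
            groups["<90"].append(err)
    # Empty groups are reported as None rather than as a misleading zero.
    return {k: (sum(v) / len(v) if v else None) for k, v in groups.items()}


# Toy predictions (hypothetical): the failing plan is badly overestimated.
y_true = [99.0, 96.0, 92.0, 85.0]
y_pred = [98.0, 97.0, 94.0, 91.0]
report = stratified_mae(y_true, y_pred)
```

In this toy case the overall MAE of 2.5 hides an error of 6.0 on the single failing plan, which is the kind of discrepancy the <90 subgroup is intended to expose.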

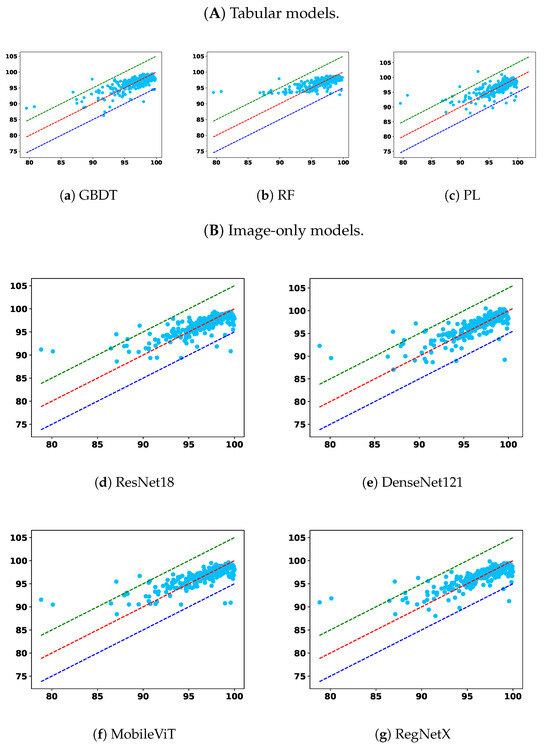

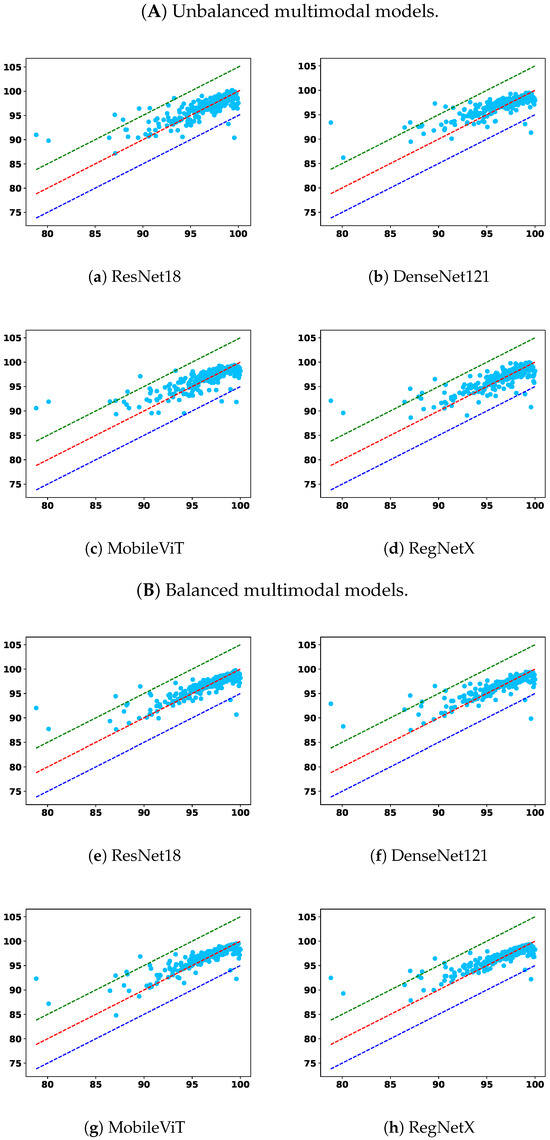

To complement the tabulated results, Figures 5 and 6 show GPR scatter plots under the 2%/2 mm criterion, with ground-truth GPR on the x-axis and predicted GPR on the y-axis. Light-blue dots represent individual samples. The diagonal dashed lines indicate prediction bands: the red line denotes the top 5% correct predictions, the green line the upper 5% deviation, and the dark-blue line the lower 5% deviation.

Figure 5.

Comparison across two model types: (A) tabular only and (B) image only.

Figure 6.

Comparison across the remaining two model types: (A) unbalanced multimodal and (B) balanced multimodal.

From the overall trend, it is clear that models trained solely on the tabular modality underperform across nearly all settings. Among them, RF and PL show lower overall accuracy and higher prediction errors in the <90 subgroup, indicating poor generalization and limited robustness. While the GBDT model demonstrates relatively better robustness in identifying failure cases under relaxed criteria, namely, 2%/2 mm and 2%/3 mm, this comes at the cost of diminished performance in the 95–100 and overall categories, suggesting an overfitting tendency toward rare cases or a lack of adaptability across GPR ranges. This reflects a broader limitation of relying on low-dimensional tabular features alone: such features may capture plan complexity but lack the spatial context necessary for comprehensive dose distribution assessment.

Image-only models, on the other hand, benefit from richer spatial information and demonstrate superior overall accuracy across nearly all criteria. Notably, models such as UNet++, TransQA, and RegNet consistently outperform tabular baselines in the “All”, 95–100, and 90–95 intervals. However, their performance in the critical <90 subgroup is not always optimal. For instance, in some settings, the RMSEs in this subgroup are higher than those of GBDT, indicating a potential weakness in detecting outliers or failure cases. This suggests that while the image modality provides strong general pattern learning capabilities, it may require additional mechanisms to capture minority failure modes effectively.

Multimodal models integrate image and tabular information and offer a compelling balance between overall performance and robustness. Across all settings, multimodal models outperform their unimodal counterparts, confirming the complementary nature of these modalities. More importantly, the proposed modality-balanced variants demonstrate consistent improvements over unbalanced versions. This is particularly evident in the <90 subgroup, where balanced models reduce the MAE and RMSE for nearly all encoder types. The benefit is pronounced in ResNet18, DenseNet121, and MobileViT-based variants, where balancing helps prevent the tabular modality from prematurely dominating the learning process. This validates our hypothesis that early convergence of simpler modalities can hinder effective feature fusion, and that regulating this imbalance can substantially enhance the model’s ability to improve overall prediction fidelity and remain sensitive to critical failure cases.

Although RegNet-based balanced models show slightly less consistent improvements—especially in RMSE under strict criteria—their performance remains competitive and still offers benefits in terms of MAE. This variation may stem from architectural sensitivity or optimization dynamics, suggesting that the effect of balancing may interact with encoder complexity.

These findings highlight the necessity of addressing modality imbalance in multimodal learning for PSQA. Simple fusion of modalities without considering training dynamics can lead to suboptimal utilization of complementary information. Our approach, which uses Shapley-based modality attribution to adjust the task loss weights during training, offers an effective solution. In particular, the observed robustness improvements in the <90 subgroup emphasize the clinical importance of balanced learning, which not only enhances average accuracy but also supports patient safety by reducing the likelihood of high-risk misclassifications. As such, modality balancing should be considered a key component in building safe, trustworthy AI systems for clinical deployment in radiotherapy quality assurance.

In addition to MAE and RMSE, the R² values in Table 6 further corroborate these observations. Tabular-only models generally yield a lower R², often below 0.60, confirming their limited explanatory power and inability to capture the variance in GPR outcomes. Image-only models achieve a moderately higher R² (around 0.62–0.64), reflecting their stronger alignment with ground-truth variations but with persistent weaknesses in the <90 subgroup. In contrast, multimodal models, particularly the balanced variants, consistently attain the highest R² values across nearly all criteria, with RegNet-based models exceeding 0.67 in some settings. These improvements indicate not only reduced absolute errors but also enhanced consistency in capturing the underlying variance structure of GPR. Importantly, the higher R² in the balanced multimodal models suggests improved generalization, supporting their reliability for both routine QA and the detection of clinically critical outliers.

5.4.2. DDP Result Analysis

Table 7 reports the DDP performance under different modality configurations and encoder backbones. We evaluate MAE (%), SSIM, and R², with further stratification by ground-truth GPR intervals: 95–100, 90–95, and <90, in addition to the overall average. These strata correspond to varying clinical acceptance levels, with lower GPR values indicating more challenging cases. Since R² is highly sensitive to small sample sizes and may yield unstable or even misleading values when computed on narrow strata, we report R² only for the overall (all samples) case to ensure robustness and interpretability.

Table 7.

DDP performance comparison.

Since the tabular modality lacks spatial resolution, we focus on three representative settings: (1) image-only baselines, (2) unbalanced multimodality (MM) models without contribution control, and (3) balanced multimodality (BMM) models using our proposed modality regulation mechanism.

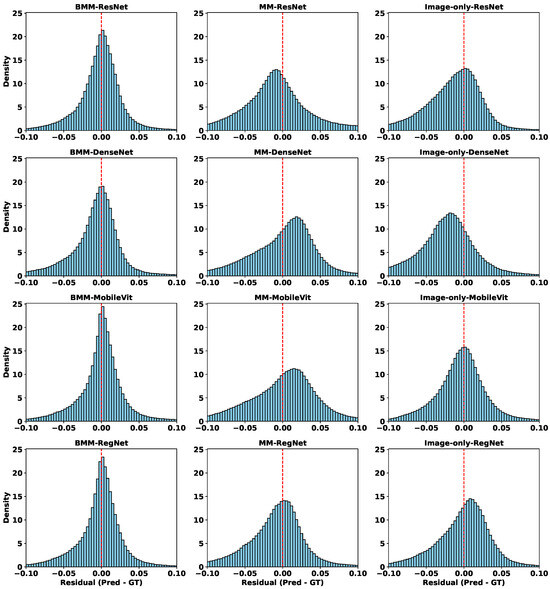

From Table 7, we observe that unbalanced fusion can degrade DDP performance, sometimes even underperforming image-only models, especially in high-GPR regions. This suggests that DDP is primarily image-sensitive, and that naive modality fusion can introduce interference when less-suitable modalities dominate. In contrast, BMM models consistently achieve the lowest MAE, highest SSIM, and highest R² values across backbones, highlighting the value of modality balancing in spatially structured prediction. The consistently higher R² further indicates that BMM models explain a greater proportion of variance in voxel-wise dose differences, reinforcing their predictive robustness.

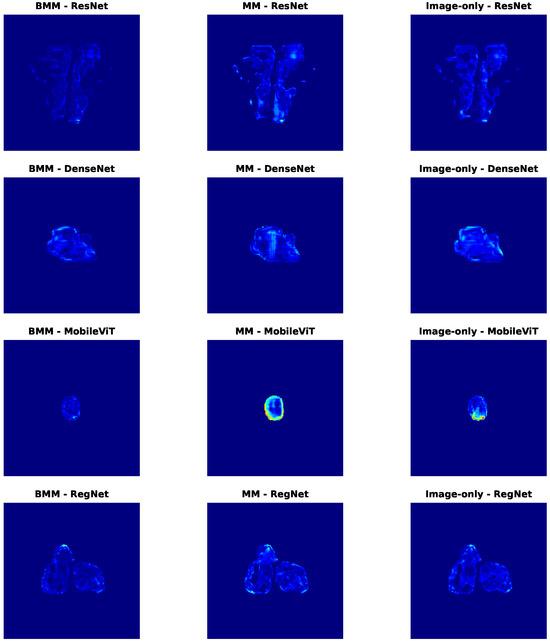

To better interpret these quantitative results, Figure 7 shows residual distributions across all modality-backbone combinations. Each row corresponds to a backbone (ResNet, DenseNet, MobileViT, RegNet), and columns represent BMM, MM, and image-only settings. The histograms confirm that BMM models not only reduce average errors, but also produce more symmetric, narrow residual distributions centered near zero—indicating better generalization and reduced voxel-level variance. In contrast, MM models show broader and often skewed distributions, reflecting prediction instability due to unregulated modality mixing.

Figure 7.

Residual distributions of different models in the DDP task. Each row corresponds to a different backbone (ResNet, DenseNet, MobileViT, RegNet), and each column represents a different modality configuration: balanced multimodal (BMM), unbalanced multimodal (MM), and image-only.

To further explore spatial behavior, Figure 8 visualizes voxel-wise error maps on selected test cases. BMM models consistently yield cleaner and more localized error patterns. Notably, MobileViT-MM exhibits strong overestimation artefacts (bright yellow) in the center, which are largely suppressed in its BMM counterpart. Similar spatial refinements are observed across other backbones, especially in clinically critical regions.

Figure 8.

Dose difference error maps for selected samples in the DDP task. Each row corresponds to a different backbone (ResNet, DenseNet, MobileViT, RegNet), and each column represents a different modality configuration: balanced multimodal (BMM), unbalanced multimodal (MM), and image-only. Darker red areas indicate larger differences, whereas lighter blue areas indicate smaller differences.

Together, these results demonstrate that modality regulation not only improves quantitative metrics such as MAE and SSIM but also enhances spatial fidelity, reducing high-error artifacts and increasing clinical reliability. The complementary insights from Table 7 and Figures 7 and 8 support the effectiveness of our balanced multimodal strategy in spatially sensitive PSQA tasks.

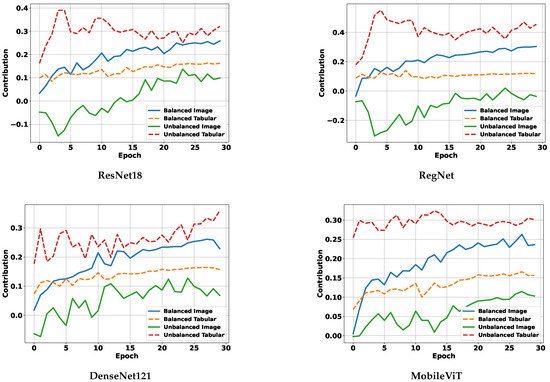

5.4.3. Modality Contribution Dynamics During Training

Figure 9 presents the evolution of modality contributions across training epochs for four representative backbone encoders. In the unbalanced setting (dashed lines), the tabular modality consistently dominates, exhibiting significantly higher contribution scores than the image modality across all backbones. This imbalance persists throughout training and suggests that the network overfits to the easier-to-learn tabular features while underutilizing spatial information, which is critical for DDP.

Figure 9.

Modality contribution curves for different backbones.

In contrast, under our balanced multimodality strategy (solid lines), modality contributions become more equitable. The image modality sees a clear increase in contribution, while the tabular modality’s influence is moderated, resulting in a more stable and task-appropriate fusion. These results empirically demonstrate that our balancing mechanism effectively mitigates modality dominance during joint training.

When viewed alongside Table 7, it is evident that balanced modality contributions lead to superior DDP performance, especially for image-sensitive tasks. This further validates our claim that addressing modality imbalance is essential for achieving both accurate and robust predictions in multimodal PSQA models.

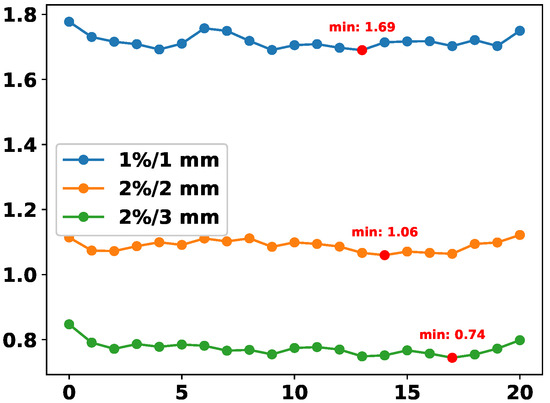

5.5. EXP-B: Ablation Experiment with Modality Balance Factor r

We conduct an ablation experiment to analyze the influence of the hyperparameter r in the formulation of the DDP loss coefficient. This parameter serves as an amplification factor, dynamically rebalancing the contributions of different modalities, particularly when one modality dominates the learning process. In this experiment, for each fixed r, we train a separate model and record its final DDP prediction error (MAE) under the three standard gamma criteria.

As shown in Figure 10, the error curves generally decrease as r increases and reach their minima at intermediate values of r for all three criteria. This indicates that a moderate amplification factor yields the best DDP accuracy. When r is too small (e.g., close to 0), modality imbalance is insufficiently addressed, while overly large values may lead to overcompensation and degrade performance. The consistent minima across criteria demonstrate the robustness of our balancing strategy.

Figure 10.

DDP error (MAE) across different values of r in the computation of the DDP loss coefficient. Each curve corresponds to a different gamma criterion.

5.6. Computational Resource Utilization

Shapley-based (SHAP) methods are often associated with concerns regarding computational cost, as exact Shapley value computation requires evaluating every subset of inputs, a number that grows exponentially with the number of inputs. In our framework, only two modalities are involved, so four subsets suffice, and we further reduce the overhead by deriving all modality ablation results within a single forward pass.
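The idea of reusing one encoder pass for every modality subset can be sketched as follows. This is an illustration under assumptions, not the BMMQA implementation: it assumes that ablation restricts the softmax fusion to the modalities that are present and that the null case yields a zero vector, and all names (`fuse`, `all_subset_outputs`, the toy encoders and head) are hypothetical.

```python
import math
from itertools import combinations


def fuse(embeddings, scores, active):
    """Softmax-weighted fusion restricted to `active`; empty set -> zero vector."""
    dim = len(next(iter(embeddings.values())))
    if not active:
        return [0.0] * dim
    m = max(scores[n] for n in active)
    exps = {n: math.exp(scores[n] - m) for n in active}
    z = sum(exps.values())
    return [
        sum(exps[n] / z * embeddings[n][i] for n in active) for i in range(dim)
    ]


def all_subset_outputs(encoders, score_fns, head, inputs):
    """Run each encoder once, then reuse the cached embeddings for every subset."""
    embeddings = {n: enc(inputs[n]) for n, enc in encoders.items()}
    scores = {n: score_fns[n](embeddings[n]) for n in embeddings}
    names = sorted(embeddings)
    outputs = {}
    for k in range(len(names) + 1):
        for subset in combinations(names, k):
            outputs[frozenset(subset)] = head(fuse(embeddings, scores, subset))
    return outputs


# Toy encoders that count how often they are called (hypothetical).
calls = {"image": 0, "tabular": 0}


def make_encoder(name, vec):
    def enc(_x):
        calls[name] += 1
        return vec
    return enc


encoders = {
    "image": make_encoder("image", [2.0, 0.0]),
    "tabular": make_encoder("tabular", [0.0, 4.0]),
}
score_fns = {"image": lambda e: 0.0, "tabular": lambda e: 0.0}
outputs = all_subset_outputs(
    encoders, score_fns, sum, {"image": None, "tabular": None}
)
```

The expensive encoders run once per sample, and only the lightweight fusion and head are repeated for the four subsets, so the cost of obtaining all value-function inputs stays close to that of a single forward pass.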

Table 8 compares the computational resource usage of our BMMQA framework against several deep learning-based PSQA baselines. The comparison includes model names, floating-point operations (FLOPs in GMac), and parameter counts (in millions). Among the baselines, UNet++, TransQA, and RegNet represent PSQA models with varying computational demands.

Table 8.

Comparative analysis of computational resource utilization by deep learning baselines and the proposed BMMQA.

Notably, BMMQA achieves competitive performance with substantially lower FLOPs and parameter size, underscoring its efficiency and suitability for resource-constrained or computation-sensitive clinical scenarios.

6. Conclusions

Through a comprehensive review and empirical analysis of existing multimodal deep learning approaches for PSQA, we identified modality imbalance—where faster-converging tabular features dominate training and suppress image feature learning—as a key factor limiting model robustness and clinical reliability. To address this, we propose BMMQA, a modality- and task-aware multimodal framework that explicitly regulates modality contributions during training. BMMQA leverages SHAP-derived imbalance indicators with task-specific weighting strategies to balance convergence across modalities, and incorporates a fast network forward mechanism along with tailored fusion protocols—attention-based weighting for GPR and spatial concatenation for DDP—to enhance feature collaboration. Experiments on a large clinical IMRT dataset show that BMMQA consistently outperforms state-of-the-art fusion baselines in both coarse-grained (GPR) and fine-grained (DDP) tasks, while improving interpretability and reliability. These results highlight the importance of explicitly addressing modality imbalance for the safe and effective deployment of AI in radiotherapy quality assurance, establishing a novel example in imbalanced multi-task multimodal learning.

This study has two main limitations. First, the dataset was collected from a single center (PUMCH) within a limited timeframe, which may restrict the generalizability of the results and introduce potential biases associated with technological evolution. Second, the current framework relies on only two modalities: image-based dose distributions and tabular plan complexity features, while excluding other potentially informative clinical data, such as CT scans or electronic health records (EHRs). Future work will aim to validate the framework on multi-center, multi-device datasets to enhance robustness and to extend the multimodal design by incorporating additional clinical information, thereby further improving predictive performance and clinical applicability.

Author Contributions

X.Z. contributed to conceptualization, methodology, software, validation, data curation, and writing—original draft preparation; A.A. handled formal analysis, supervision, investigation, and writing—review and editing, visualization; M.H.T. was involved in writing—reviewing and editing and visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the China West Normal University Doctoral Start-up Fund under Grant No. 24KE033.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki (as revised in 2013) and was approved by the Institutional Ethic Review Board of the Peking Union Medical College Hospital (approval date: 5 December 2023, no. I-23PJ2059).

Informed Consent Statement

Informed consent was obtained from all participants involved in the study. Participants were informed about the study’s purpose, procedures, and their right to withdraw at any time without any consequences.

Data Availability Statement

The data supporting the findings of this study are available upon reasonable request from the first or corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Meijers, A.; Marmitt, G.G.; Siang, K.N.W.; van der Schaaf, A.; Knopf, A.C.; Langendijk, J.A.; Both, S. Feasibility of patient specific quality assurance for proton therapy based on independent dose calculation and predicted outcomes. Radiother. Oncol. 2020, 150, 136–141. [Google Scholar] [CrossRef] [PubMed]

- Zeng, X.; Zhu, Q.; Ahmed, A.; Hanif, M.; Hou, M.; Jie, Q.; Xi, R.; Shah, S.A. Multi-granularity prior networks for uncertainty-informed patient-specific quality assurance. Comput. Biol. Med. 2024, 179, 108925. [Google Scholar] [CrossRef] [PubMed]

- Park, J.M.; Kim, J.I.; Park, S.Y.; Oh, D.H.; Kim, S.T. Reliability of the gamma index analysis as a verification method of volumetric modulated arc therapy plans. Radiat. Oncol. 2018, 13, 175. [Google Scholar] [CrossRef] [PubMed]

- Huang, Y.; Pi, Y.; Ma, K.; Miao, X.; Fu, S.; Chen, H.; Wang, H.; Gu, H.; Shao, Y.; Duan, Y.; et al. Image-based features in machine learning to identify delivery errors and predict error magnitude for patient-specific IMRT quality assurance. Strahlenther. Onkol. 2023, 199, 498–510. [Google Scholar] [CrossRef]

- Kui, X.; Liu, F.; Yang, M.; Wang, H.; Liu, C.; Huang, D.; Li, Q.; Chen, L.; Zou, B. A review of dose prediction methods for tumor radiation therapy. Meta-Radiol. 2024, 2, 100057. [Google Scholar] [CrossRef]

- Tan, H.S.; Wang, K.; Mcbeth, R. Deep Evidential Learning for Dose Prediction. arXiv 2024, arXiv:2404.17126. [Google Scholar] [CrossRef]

- Bi, Q.; Lian, X.; Shen, J.; Zhang, F.; Xu, T. Exploration of radiotherapy strategy for brain metastasis patients with driver gene positivity in lung cancer. J. Cancer 2024, 15, 1994. [Google Scholar] [CrossRef]

- Liu, W.; Zhang, L.; Xie, L.; Hu, T.; Li, G.; Bai, S.; Yi, Z. Multilayer perceptron neural network with regression and ranking loss for patient-specific quality assurance. Knowl.-Based Syst. 2023, 271, 110549. [Google Scholar] [CrossRef]

- Li, H.; Peng, X.; Zeng, J.; Xiao, J.; Nie, D.; Zu, C.; Wu, X.; Zhou, J.; Wang, Y. Explainable attention guided adversarial deep network for 3D radiotherapy dose distribution prediction. Knowl.-Based Syst. 2022, 241, 108324. [Google Scholar] [CrossRef]

- Hu, T.; Xie, L.; Zhang, L.; Li, G.; Yi, Z. Deep multimodal neural network based on data-feature fusion for patient-specific quality assurance. Int. J. Neural Syst. 2022, 32, 2150055. [Google Scholar] [CrossRef]

- Han, C.; Zhang, J.; Yu, B.; Zheng, H.; Wu, Y.; Lin, Z.; Ning, B.; Yi, J.; Xie, C.; Jin, X. Integrating plan complexity and dosiomics features with deep learning in patient-specific quality assurance for volumetric modulated arc therapy. Radiat. Oncol. 2023, 18, 116. [Google Scholar] [CrossRef]

- Huang, Y.; Pi, Y.; Ma, K.; Miao, X.; Fu, S.; Zhu, Z.; Cheng, Y.; Zhang, Z.; Chen, H.; Wang, H.; et al. Deep learning for patient-specific quality assurance: Predicting gamma passing rates for IMRT based on delivery fluence informed by log files. Technol. Cancer Res. Treat. 2022, 21, 15330338221104881. [Google Scholar] [CrossRef]

- Li, C.; Su, Z.; Li, B.; Sun, W.; Wu, D.; Zhang, Y.; Li, X.; Xie, Z.; Huang, J.; Wei, Q. Plan complexity and dosiomics signatures for gamma passing rate classification in volumetric modulated arc therapy: External validation across different LINACs. Phys. Med. 2025, 133, 104962. [Google Scholar] [CrossRef] [PubMed]

- Sun, W.; Mo, Z.; Li, Y.; Xiao, J.; Jia, L.; Huang, S.; Liao, C.; Du, J.; He, S.; Chen, L.; et al. Machine learning-based ensemble prediction model for the gamma passing rate of VMAT-SBRT plan. Phys. Med. 2024, 117, 103204. [Google Scholar] [CrossRef] [PubMed]

- Huang, Y.; Cai, R.; Pi, Y.; Ma, K.; Kong, Q.; Zhuo, W.; Kong, Y. A feasibility study to predict 3D dose delivery accuracy for IMRT using DenseNet with log files. J. X-Ray Sci. Technol. 2024, 32, 1199–1208.

- Xu, S.; Cui, M.; Huang, C.; Wang, H.; Hu, D. BalanceBenchmark: A Survey for Multimodal Imbalance Learning. arXiv 2025, arXiv:2502.10816.

- Du, C.; Teng, J.; Li, T.; Liu, Y.; Yuan, T.; Wang, Y.; Yuan, Y.; Zhao, H. On Uni-Modal Feature Learning in Supervised Multi-Modal Learning. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Proceedings of Machine Learning Research. Krause, A., Brunskill, E., Cho, K., Engelhardt, B., Sabato, S., Scarlett, J., Eds.; PMLR. 2023; Volume 202, pp. 8632–8656.

- Hua, C.; Xu, Q.; Bao, S.; Yang, Z.; Huang, Q. ReconBoost: Boosting Can Achieve Modality Reconcilement. arXiv 2024, arXiv:2405.09321.

- Peng, X.; Wei, Y.; Deng, A.; Wang, D.; Hu, D. Balanced Multimodal Learning via On-the-Fly Gradient Modulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 21–24 June 2022; pp. 8238–8247.

- Xu, R.; Feng, R.; Zhang, S.X.; Hu, D. MMCosine: Multi-Modal Cosine Loss Towards Balanced Audio-Visual Fine-Grained Learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Li, H.; Li, X.; Hu, P.; Lei, Y.; Li, C.; Zhou, Y. Boosting Multi-modal Model Performance with Adaptive Gradient Modulation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 22214–22224.

- Ma, H.; Zhang, Q.; Zhang, C.; Wu, B.; Fu, H.; Zhou, J.T.; Hu, Q. Calibrating Multimodal Learning. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Proceedings of Machine Learning Research. Krause, A., Brunskill, E., Cho, K., Engelhardt, B., Sabato, S., Scarlett, J., Eds.; PMLR. 2023; Volume 202, pp. 23429–23450.

- Wu, N.; Jastrzebski, S.; Cho, K.; Geras, K.J. Characterizing and Overcoming the Greedy Nature of Learning in Multi-modal Deep Neural Networks. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; Proceedings of Machine Learning Research. Chaudhuri, K., Jegelka, S., Song, L., Szepesvari, C., Niu, G., Sabato, S., Eds.; PMLR. 2022; Volume 162, pp. 24043–24055.

- Wei, Y.; Hu, D. MMPareto: Boosting Multimodal Learning with Innocent Unimodal Assistance. arXiv 2024, arXiv:2405.17730.

- Fan, Y.; Xu, W.; Wang, H.; Wang, J.; Guo, S. PMR: Prototypical Modal Rebalance for Multimodal Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, USA, 18–22 June 2023; pp. 20029–20038.

- Wang, W.; Tran, D.; Feiszli, M. What makes training multi-modal classification networks hard? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 12695–12705.

- Winterbottom, T.; Xiao, S.; McLean, A.; Moubayed, N.A. On modality bias in the TVQA dataset. arXiv 2020, arXiv:2012.10210.

- Sun, Y.; Mai, S.; Hu, H. Learning to Balance the Learning Rates Between Various Modalities via Adaptive Tracking Factor. IEEE Signal Process. Lett. 2021, 28, 1650–1654.

- Huang, Y.; Lin, J.; Zhou, C.; Yang, H.; Huang, L. Modality Competition: What Makes Joint Training of Multi-modal Network Fail in Deep Learning? (Provably). arXiv 2022, arXiv:2203.12221.

- Du, C.; Li, T.; Liu, Y.; Wen, Z.; Hua, T.; Wang, Y.; Zhao, H. Improving Multi-Modal Learning with Uni-Modal Teachers. arXiv 2021, arXiv:2106.11059.

- Wei, Y.; Feng, R.; Wang, Z.; Hu, D. Enhancing Multimodal Cooperation via Sample-level Modality Valuation. In Proceedings of the CVPR, Seattle, WA, USA, 17–21 June 2024.

- Wei, Y.; Li, S.; Feng, R.; Hu, D. Diagnosing and Re-learning for Balanced Multimodal Learning. In Proceedings of the ECCV, Milan, Italy, 29 September–4 October 2024.

- Yang, Z.; Wei, Y.; Liang, C.; Hu, D. Quantifying and Enhancing Multi-modal Robustness with Modality Preference. arXiv 2024, arXiv:2402.06244.

- Miften, M.; Olch, A.; Mihailidis, D.; Moran, J.; Pawlicki, T.; Molineu, A.; Li, H.; Wijesooriya, K.; Shi, J.; Xia, P.; et al. TG 218: Tolerance limits and methodologies for IMRT measurement-based verification QA: Recommendations of AAPM Task Group No. 218. Med. Phys. 2018, 45, e53–e83.

- McNiven, A.L.; Sharpe, M.B.; Purdie, T.G. A new metric for assessing IMRT modulation complexity and plan deliverability. Med. Phys. 2010, 37, 505–515.

- Du, W.; Cho, S.H.; Zhang, X.; Hoffman, K.E.; Kudchadker, R.J. Quantification of beam complexity in intensity-modulated radiation therapy treatment plans. Med. Phys. 2014, 41, 021716.

- Götstedt, J.; Karlsson Hauer, A.; Bäck, A. Development and evaluation of aperture-based complexity metrics using film and EPID measurements of static MLC openings. Med. Phys. 2015, 42, 3911–3921.

- Crowe, S.; Kairn, T.; Kenny, J.; Knight, R.; Hill, B.; Langton, C.M.; Trapp, J. Treatment plan complexity metrics for predicting IMRT pre-treatment quality assurance results. Australas. Phys. Eng. Sci. Med. 2014, 37, 475–482.

- Crowe, S.; Kairn, T.; Middlebrook, N.; Sutherland, B.; Hill, B.; Kenny, J.; Langton, C.M.; Trapp, J. Examination of the properties of IMRT and VMAT beams and evaluation against pre-treatment quality assurance results. Phys. Med. Biol. 2015, 60, 2587.

- Nauta, M.; Villarreal-Barajas, J.E.; Tambasco, M. Fractal analysis for assessing the level of modulation of IMRT fields. Med. Phys. 2011, 38, 5385–5393.

- Zhu, H.; Zhu, Q.; Wang, Z.; Yang, B.; Zhang, W.; Qiu, J. Patient-specific quality assurance prediction models based on machine learning for novel dual-layered MLC linac. Med. Phys. 2023, 50, 1205–1214.

- Shapley, L. A value for n-person games. Ann. Math. Stud. 1953, 28, 307–317.

- Molnar, C. Interpretable Machine Learning; Lulu Press: Morrisville, NC, USA, 2020.

- Weber, R.J. Probabilistic values for games. In The Shapley Value: Essays in Honor of Lloyd S. Shapley; Cambridge University Press: New York, NY, USA, 1988; pp. 101–119.

- Freund, Y.; Schapire, R.E. Game theory, on-line prediction and boosting. In Proceedings of the Ninth Annual Conference on Computational Learning Theory, Garda, Italy, 28 June–1 July 1996; pp. 325–332.

- Wang, K.; Gou, C.; Duan, Y.; Lin, Y.; Zheng, X.; Wang, F.Y. Generative adversarial networks: Introduction and outlook. IEEE/CAA J. Autom. Sin. 2017, 4, 588–598.

- Gemp, I.; McWilliams, B.; Vernade, C.; Graepel, T. EigenGame: PCA as a Nash Equilibrium. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 30 April 2020.

- Hu, P.; Li, X.; Zhou, Y. Shape: An unified approach to evaluate the contribution and cooperation of individual modalities. arXiv 2022, arXiv:2205.00302.

- Ono, T.; Hirashima, H.; Iramina, H.; Mukumoto, N.; Miyabe, Y.; Nakamura, M.; Mizowaki, T. Prediction of dosimetric accuracy for VMAT plans using plan complexity parameters via machine learning. Med. Phys. 2019, 46, 3823–3832.

- Lay, L.M.; Chuang, K.C.; Wu, Y.; Giles, W.; Adamson, J. Virtual patient-specific QA with DVH-based metrics. J. Appl. Clin. Med. Phys. 2022, 23, e13639.

- Thongsawad, S.; Srisatit, S.; Fuangrod, T. Predicting gamma evaluation results of patient-specific head and neck volumetric-modulated arc therapy quality assurance based on multileaf collimator patterns and fluence map features: A feasibility study. J. Appl. Clin. Med. Phys. 2022, 23, e13622.

- Valdes, G.; Scheuermann, R.; Hung, C.; Olszanski, A.; Bellerive, M.; Solberg, T. A mathematical framework for virtual IMRT QA using machine learning. Med. Phys. 2016, 43, 4323–4334.

- Huang, Y.; Pi, Y.; Ma, K.; Miao, X.; Fu, S.; Chen, H.; Wang, H.; Gu, H.; Shan, Y.; Duan, Y.; et al. Virtual patient-specific quality assurance of IMRT using UNet++: Classification, gamma passing rates prediction, and dose difference prediction. Front. Oncol. 2021, 11, 700343.

- Yoganathan, S.; Ahmed, S.; Paloor, S.; Torfeh, T.; Aouadi, S.; Al-Hammadi, N.; Hammoud, R. Virtual pretreatment patient-specific quality assurance of volumetric modulated arc therapy using deep learning. Med. Phys. 2023, 50, 7891–7903.

- Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.; Dollár, P. Designing network design spaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10428–10436.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4700–4708.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

- Suwanraksa, C.; Bridhikitti, J.; Liamsuwan, T.; Chaichulee, S. CBCT-to-CT translation using registration-based generative adversarial networks in patients with head and neck cancer. Cancers 2023, 15, 2017.

- Liu, F.; Liu, J.; Fang, Z.; Hong, R.; Lu, H. Densely Connected Attention Flow for Visual Question Answering. In Proceedings of the IJCAI, Macao, China, 10–16 August 2019; pp. 869–875.

- Xu, J.; Pan, Y.; Pan, X.; Hoi, S.; Yi, Z.; Xu, Z. RegNet: Self-regulated network for image classification. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 9562–9567.

- Zeng, L.; Zhang, M.; Zhang, Y.; Zou, Z.; Guan, Y.; Huang, B.; Yu, X.; Ding, S.; Liu, Q.; Gong, C. TransQA: Deep hybrid transformer network for measurement-guided volumetric dose prediction of pre-treatment patient-specific quality assurance. Phys. Med. Biol. 2023, 68, 205010.

- Yang, X.; Li, S.; Shao, Q.; Cao, Y.; Yang, Z.; Zhao, Y.Q. Uncertainty-guided man–machine integrated patient-specific quality assurance. Radiother. Oncol. 2022, 173, 1–9.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).