Artificial Intelligence-Powered Chronic Obstructive Pulmonary Disease Detection Techniques—A Review

Abstract

1. Introduction

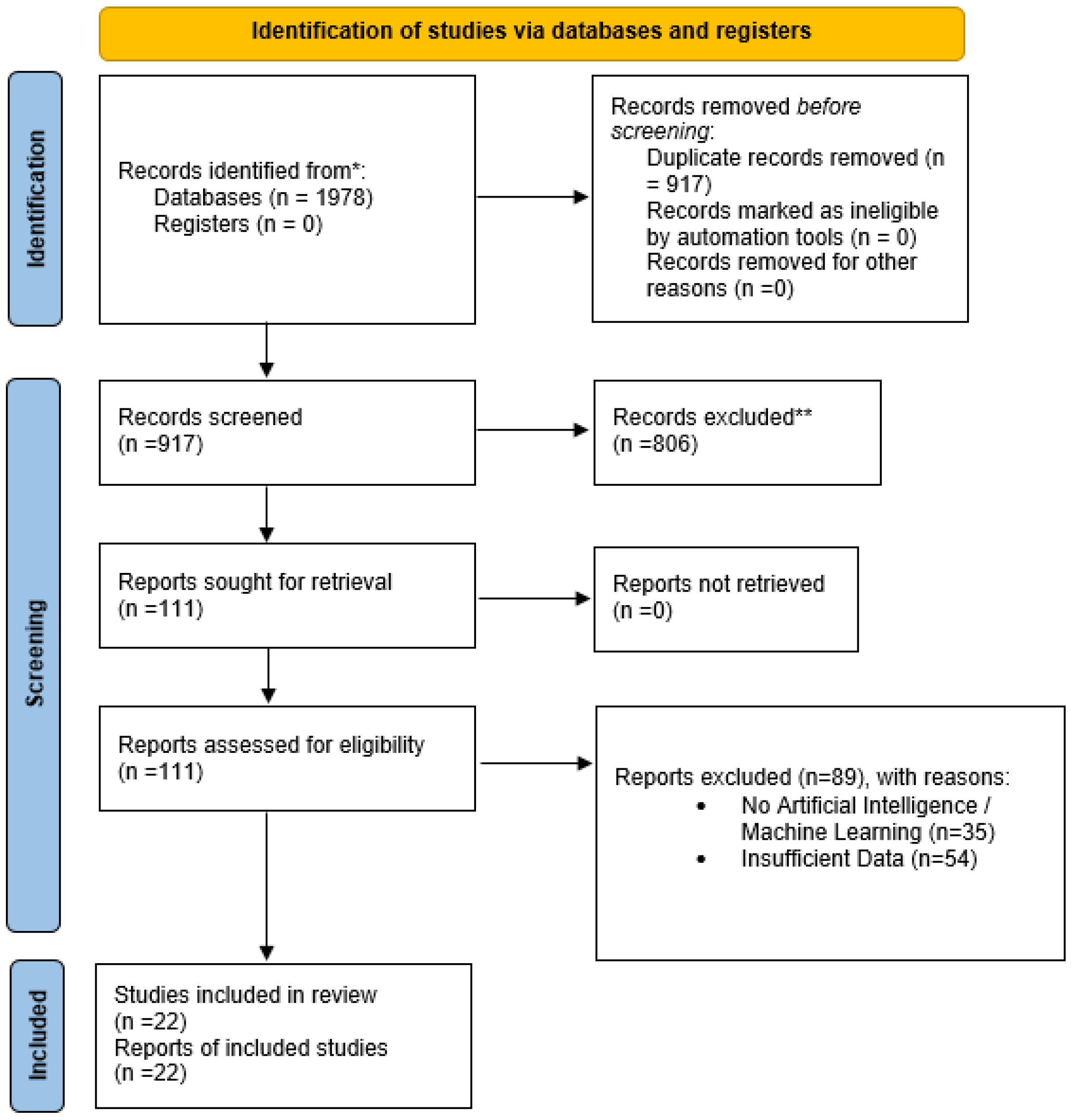

2. Materials and Methods

2.1. Research Questions

2.2. Data Sources and Search Strategy

2.3. Study Selection

2.4. Data Extraction

2.5. Risk of Bias and Quality Assessment

2.6. Data Synthesis and Ethical Considerations

3. Results and Discussions

3.1. RQ1: What AI Techniques Have Been Introduced for the Identification of COPD?

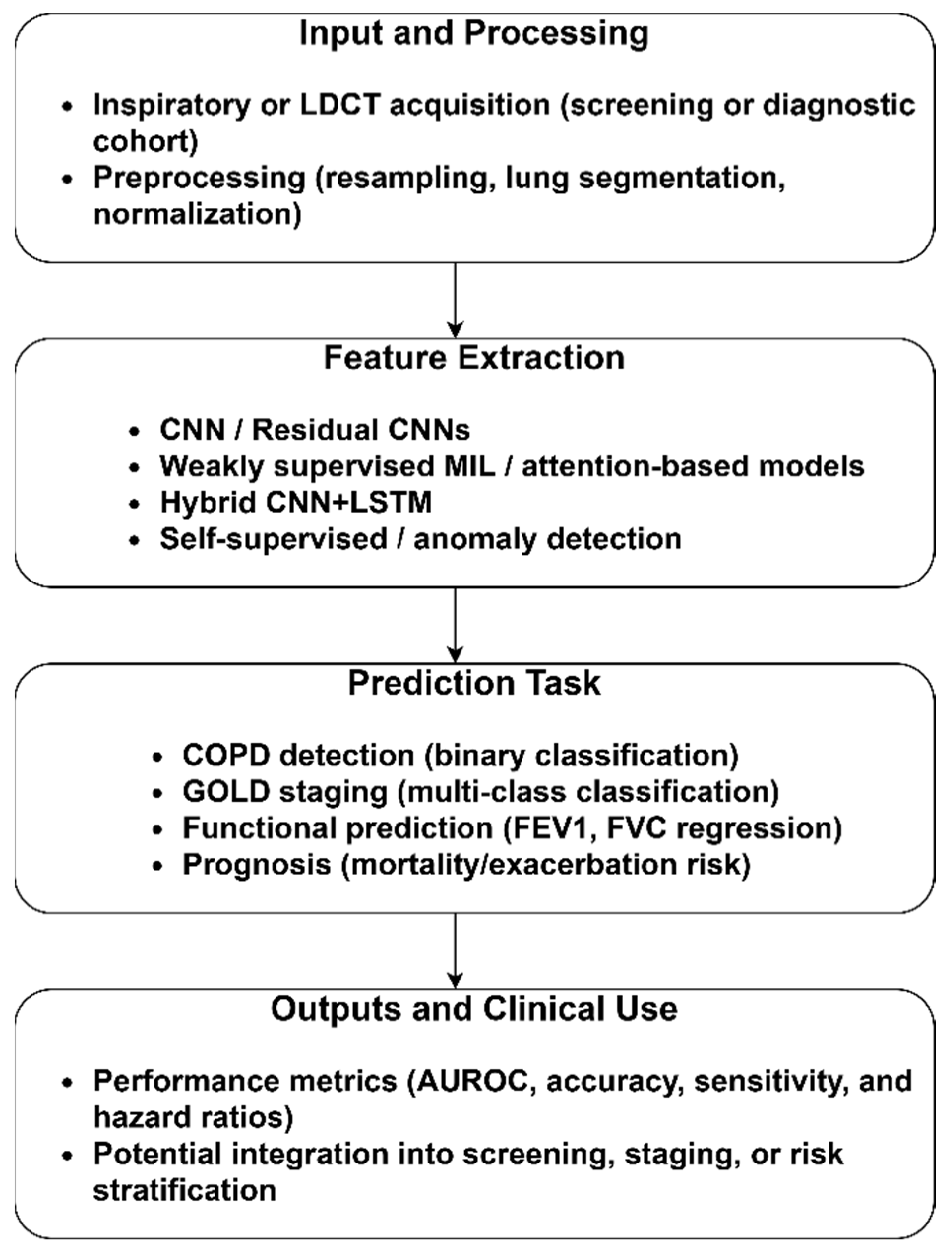

3.1.1. CT-Based COPD Identification

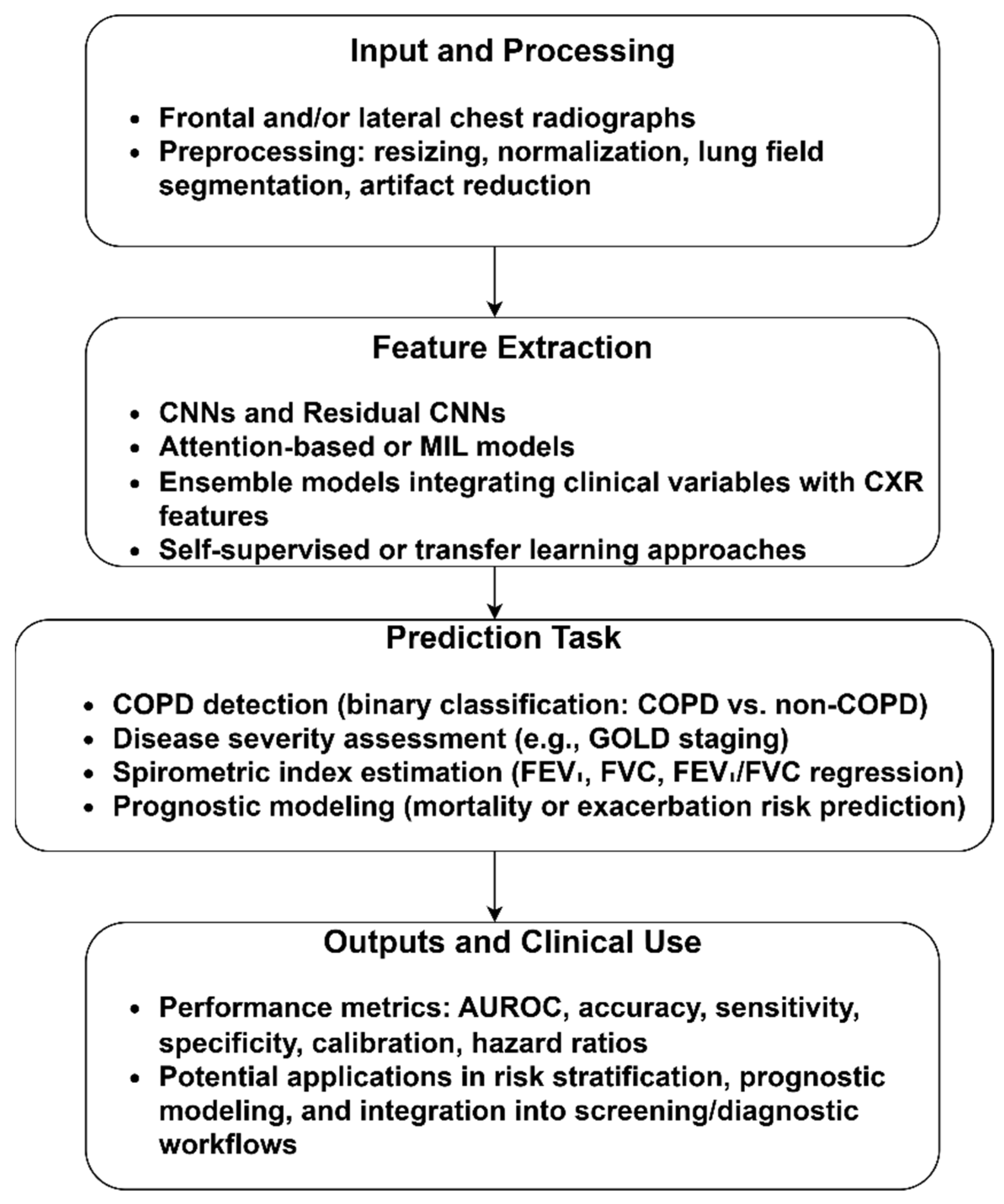

3.1.2. CXR-Based COPD Identification

3.1.3. Spirometry/Spirogram-Based COPD Identification

3.1.4. Other Modalities

3.2. RQ2: What Evaluation Strategies and Performance Metrics Are Employed to Determine the Model’s Performance?

3.3. RQ3: What Limitations, Biases, and Challenges Are Reported in the Existing Literature?

3.4. Future Directions

3.5. The Review’s Limitations and Potential Biases

4. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Adeloye, D.; Song, P.; Zhu, Y.; Campbell, H.; Sheikh, A.; Rudan, I. Global, regional, and national prevalence of, and risk factors for, chronic obstructive pulmonary disease (COPD) in 2019: A systematic review and modelling analysis. Lancet Respir. Med. 2022, 10, 447–458. [Google Scholar] [CrossRef]

- Safiri, S.; Carson-Chahhoud, K.; Noori, M.; Nejadghaderi, S.A.; Sullman, M.J.M.; Heris, J.A.; Ansarin, K.; Mansournia, M.A.; Collins, G.S.; Kolahi, A.-A.; et al. Burden of chronic obstructive pulmonary disease and its attributable risk factors in 204 countries and territories, 1990–2019: Results from the Global Burden of Disease Study 2019. BMJ 2022, 378, e069679. [Google Scholar] [CrossRef]

- Wang, Y.; Han, R.; Ding, X.; Feng, W.; Gao, R.; Ma, A. Chronic obstructive pulmonary disease across three decades: Trends, inequalities, and projections from the Global Burden of Disease Study 2021. Front. Med. 2025, 12, 1564878. [Google Scholar] [CrossRef]

- Li, Y.; Tang, X.; Zhang, R.; Lei, Y.; Wang, D.; Wang, Z.; Li, W. Research progress in early states of chronic obstructive pulmonary disease: A narrative review on PRISm, pre-COPD, young COPD and mild COPD. Expert Rev. Respir. Med. 2025, 19, 1063–1079. [Google Scholar] [CrossRef]

- Faner, R.; Cho, M.H.; Koppelman, G.H.; Melén, E.; Verleden, S.E.; Dharmage, S.C.; Meiners, S.; Agusti, A. Towards early detection and disease interception of COPD across the lifespan. Eur. Respir. Rev. 2025, 34, 240243. [Google Scholar] [CrossRef]

- Long, H.; Li, S.; Chen, Y. Digital health in chronic obstructive pulmonary disease. Chronic Dis. Transl. Med. 2023, 9, 90–103. [Google Scholar] [CrossRef]

- d’Elia, A.; Jordan, R.E.; Jordan, R.; Cheng, K.K.; Chi, C.; Correia-de-Sousa, J.; Dickens, A.P.; Dickens, A.; Enocson, A.; Farley, A.; et al. COPD burden and healthcare management across four middle-income countries within the Breathe Well research programme: A descriptive study. Glob. Health Res. 2024, 18, 1–7. [Google Scholar] [CrossRef]

- Roberts, N.J.; Smith, S.F.; Partridge, M.R. Why is spirometry underused in the diagnosis of the breathless patient: A qualitative study. BMC Pulm. Med. 2011, 11, 37. [Google Scholar] [CrossRef]

- Farha, F.; Abass, S.; Khan, S.; Ali, J.; Parveen, B.; Ahmad, S.; Parveen, R. Transforming pulmonary health care: The role of artificial intelligence in diagnosis and treatment. Expert Rev. Respir. Med. 2025, 19, 575–595. [Google Scholar] [CrossRef]

- Kumar, Y.; Koul, A.; Singla, R.; Ijaz, M.F. Artificial intelligence in disease diagnosis: A systematic literature review, synthesizing framework and future research agenda. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 8459–8486. [Google Scholar] [CrossRef]

- Gupta, J.; Mehrotra, M.; Aggarwal, A.; Gashroo, O.B. Meta-Learning Frameworks in Lung Disease Detection: A survey. Arch. Comput. Methods Eng. 2025, 1, 1–31. [Google Scholar] [CrossRef]

- Robertson, N.M.; Centner, C.S.; Siddharthan, T. Integrating artificial intelligence in the diagnosis of COPD globally: A way forward. Chronic Obstr. Pulm. Dis. J. COPD Found. 2023, 11, 114–120. [Google Scholar] [CrossRef]

- Kaplan, A.; Cao, H.; FitzGerald, J.M.; Iannotti, N.; Yang, E.; Kocks, J.W.H.; Kostikas, K.; Price, D.; Reddel, H.K.; Tsiligianni, I.; et al. Artificial intelligence/machine learning in respiratory medicine and potential role in asthma and COPD diagnosis. J. Allergy Clin. Immunol. Pract. 2021, 9, 2255–2261. [Google Scholar] [CrossRef]

- Calzetta, L.; Pistocchini, E.; Chetta, A.; Rogliani, P.; Cazzola, M. Experimental drugs in clinical trials for COPD: Artificial intelligence via machine learning approach to predict the successful advance from early-stage development to approval. Expert Opin. Investig. Drugs 2023, 32, 525–536. [Google Scholar] [CrossRef]

- Lin, C.-H.; Cheng, S.-L.; Chen, C.-Z.; Chen, C.-H.; Lin, S.-H.; Wang, H.-C. Current progress of COPD early detection: Key points and novel strategies. Int. J. Chronic Obstr. Pulm. Dis. 2023, 18, 1511–1524. [Google Scholar] [CrossRef]

- Díaz, A.A.; Nardelli, P.; Wang, W.; Estépar, R.S.J.; Yen, A.; Kligerman, S.; Maselli, D.J.; Dolliver, W.R.; Tsao, A.; Orejas, J.L.; et al. Artificial intelligence–based CT assessment of bronchiectasis: The COPDGene Study. Radiology 2023, 307, e221109. [Google Scholar] [CrossRef]

- Ajitha, E.; Abishekkumaran, S.; Deva, J. Enhancing diagnostic accuracy in lung disease: A ViT approach. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS) 2024, Chennai, India, 18–19 April 2024; pp. 1–6. [Google Scholar]

- Sabir, M.W.; Farhan, M.; Almalki, N.S.; Alnfiai, M.M.; Sampedro, G.A. FibroVit—Vision transformer-based framework for detection and classification of pulmonary fibrosis from chest CT images. Front. Med. 2023, 10, 1282200. [Google Scholar] [CrossRef]

- Kabir, F.; Akter, N.; Hasan, K.; Ahmed, T.; Akter, M. Predicting Chronic Obstructive Pulmonary Disease Using ML and DL Approaches and Feature Fusion of X-Ray Image and Patient History. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 149–158. [Google Scholar] [CrossRef]

- Hadhoud, Y.; Mekhaznia, T.; Bennour, A.; Amroune, M.; Kurdi, N.A.; Aborujilah, A.H.; Al-Sarem, M. From binary to multi-class classification: A two-step hybrid CNN-ViT model for chest disease classification based on X-ray images. Diagnostics 2024, 14, 2754. [Google Scholar] [CrossRef]

- Rabby, A.S.; Chaudhary, M.F.; Saha, P.; Sthanam, V.; Nakhmani, A.; Zhang, C.; Barr, R.G.; Bon, J.; Cooper, C.B.; Curtis, J.L.; et al. Light Convolutional Neural Network to Detect Chronic Obstructive Pulmonary Disease (COPDxNet): A Multicenter Model Development and External Validation Study. medRxiv 2025. [Google Scholar] [CrossRef]

- Nakrani, H.; Shahra, E.Q.; Basurra, S.; Mohammad, R.; Vakaj, E.; Jabbar, W.A. Advanced Diagnosis of Cardiac and Respiratory Diseases from Chest X-Ray Imagery Using Deep Learning Ensembles. J. Sens. Actuator Netw. 2025, 14, 44. [Google Scholar] [CrossRef]

- Mezina, A.; Burget, R. Detection of post-COVID-19-related pulmonary diseases in X-ray images using Vision Transformer-based neural network. Biomed. Signal Process. Control 2024, 87, 105380. [Google Scholar] [CrossRef]

- Saha, P.K.; Nadeem, S.A.; Comellas, A.P. A survey on artificial intelligence in pulmonary imaging. WIREs Data Min. Knowl. Discov. 2023, 13, e1510. [Google Scholar] [CrossRef]

- Abdullah; Fatima, Z.; Abdullah, J.; Rodríguez, J.L.O.; Sidorov, G. A Multimodal AI Framework for Automated Multiclass Lung Disease Diagnosis from Respiratory Sounds with Simulated Biomarker Fusion and Personalized Medication Recommendation. Int. J. Mol. Sci. 2025, 26, 7135. [Google Scholar] [CrossRef]

- Yadav, S.; Rizvi, S.A.M.; Agarwal, P. Advancing pulmonary infection diagnosis: A comprehensive review of deep learning approaches in radiological data analysis. Arch. Comput. Methods Eng. 2025, 32, 3759–3786. [Google Scholar] [CrossRef]

- Liu, X.; Pan, F.; Song, H.; Cao, S.; Li, C.; Li, T. MDFormer: Transformer-Based Multimodal Fusion for Robust Chest Disease Diagnosis. Electronics 2025, 14, 1926. [Google Scholar] [CrossRef]

- Caliman Sturdza, O.A.; Filip, F.; Terteliu Baitan, M.; Dimian, M. Deep Learning Network Selection and Optimized Information Fusion for Enhanced COVID-19 Detection: A Literature Review. Diagnostics 2025, 15, 1830. [Google Scholar] [CrossRef]

- Abhishek, S.; Ananthapadmanabhan, A.J.; Anjali, T.; Reyma, S.; Perathur, A.; Bentov, R.B. Multimodal Integration of an Enhanced Novel Pulmonary Auscultation Real-Time Diagnostic System. IEEE Multimed. 2024, 31, 18–43. [Google Scholar] [CrossRef]

- Damaševičius, R.; Jagatheesaperumal, S.K.; Kandala, R.N.V.P.S.; Hussain, S.; Alizadehsani, R.; Gorriz, J.M. Deep learning for personalized health monitoring and prediction: A review. Comput. Intell. 2024, 40, e12682. [Google Scholar] [CrossRef]

- Akhter, Y.; Singh, R.; Vatsa, M. AI-based radiodiagnosis using chest X-rays: A review. Front. Big Data 2023, 6, 1120989. [Google Scholar] [CrossRef]

- Aburass, S.; Dorgham, O.; Al Shaqsi, J.; Abu Rumman, M.; Al-Kadi, O. Vision Transformers in Medical Imaging: A Comprehensive Review of Advancements and Applications Across Multiple Diseases. J. Imaging Inform. Med. 2025, 12, 1–17. [Google Scholar] [CrossRef]

- Jha, T.; Suhail, S.; Northcote, J.; Moreira, A.G. Artificial Intelligence in Bronchopulmonary Dysplasia: A Review of the Literature. Information 2025, 16, 262. [Google Scholar] [CrossRef]

- Chaudhari, D.; Kamboj, P. Harnessing Machine Learning and Deep Learning for Non-Communicable Disease Diagnosis: An In-Depth analysis. Arch. Comput. Methods Eng. 2025, 7, 1–45. [Google Scholar] [CrossRef]

- Fischer, A.M.; Varga-Szemes, A.; Martin, S.S.; Sperl, J.I.; Sahbaee, P.; Neumann, D.M.; Gawlitza, J.; Henzler, T.; Johnson, C.M.B.; Nance, J.W.; et al. Artificial intelligence-based fully automated per lobe segmentation and emphysema-quantification based on chest computed tomography compared with global initiative for chronic obstructive lung disease severity of smokers. J. Thorac. Imaging 2020, 35, S28–S34. [Google Scholar] [CrossRef]

- Ebrahimian, S.; Digumarthy, S.R.; Bizzo, B.; Primak, A.; Zimmermann, M.; Tarbiah, M.M.; Kalra, M.K.; Dreyer, K.J. Artificial intelligence has similar performance to subjective assessment of emphysema severity on chest CT. Acad. Radiol. 2022, 29, 1189–1195. [Google Scholar] [CrossRef]

- Yevle, D.V.; Mann, P.S.; Kumar, D. AI Based Diagnosis of Chronic Obstructive Pulmonary Disease: A Comparative Review. Arch. Comput. Methods Eng. 2025, 4, 1–38. [Google Scholar] [CrossRef]

- Feng, Y.; Wang, Y.; Zeng, C.; Mao, H. Artificial intelligence and machine learning in chronic airway diseases: Focus on asthma and chronic obstructive pulmonary disease. Int. J. Med. Sci. 2021, 18, 2871–2889. [Google Scholar] [CrossRef] [PubMed]

- Exarchos, K.P.; Aggelopoulou, A.; Oikonomou, A.; Biniskou, T.; Beli, V.; Antoniadou, E.; Kostikas, K. Review of artificial intelligence techniques in chronic obstructive lung disease. IEEE J. Biomed. Health Inform. 2021, 26, 2331–2338. [Google Scholar] [CrossRef] [PubMed]

- Bian, H.; Zhu, S.; Zhang, Y.; Fei, Q.; Peng, X.; Jin, Z.; Zhou, T.; Zhao, H. Artificial Intelligence in Chronic Obstructive Pulmonary Disease: Research Status, Trends, and Future Directions—A Bibliometric Analysis from 2009 to 2023. Int. J. Chronic Obstr. Pulm. Dis. 2024, 19, 1849–1864. [Google Scholar]

- Xu, Y.; Long, Z.A.; Setyohadi, D.B. A comprehensive review on the application of artificial intelligence in Chronic Obstructive Pulmonary Disease (COPD) management. In Proceedings of the 2024 18th International Conference on Ubiquitous Information Management and Communication (IMCOM), Kuala Lumpur, Malaysia, 3–5 January 2024; pp. 1–8. [Google Scholar]

- Chu, S.H.; Wan, E.S.; Cho, M.H.; Goryachev, S.; Gainer, V.; Linneman, J.; Scotty, E.J.; Hebbring, S.J.; Murphy, S.; Lasky-Su, J.; et al. An independently validated, portable algorithm for the rapid identification of COPD patients using electronic health records. Sci. Rep. 2021, 11, 19959. [Google Scholar] [CrossRef] [PubMed]

- Rauf, A.; Muhammad, N.; Mahmood, H.; Aftab, M. Healthcare service quality: A systematic review based on PRISMA guidelines. Int. J. Qual. Reliab. Manag. 2025, 42, 837–850. [Google Scholar] [CrossRef]

- Veroniki, A.A.; Hutton, B.; Stevens, A.; McKenzie, J.E.; Page, M.J.; Moher, D.; McGowan, J.; Straus, S.E.; Li, T.; Munn, Z.; et al. Update to the PRISMA guidelines for network meta-analyses and scoping reviews and development of guidelines for rapid reviews: A scoping review protocol. JBI Evid. Synth. 2025, 23, 517–526. [Google Scholar] [CrossRef] [PubMed]

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.W.; Karthikesalingam, A.; King, D.; Ashrafian, H.; Darzi, A. Diagnostic accuracy of deep learning in medical imaging: A systematic review and meta-analysis. npj Digit. Med. 2021, 4, 65. [Google Scholar] [CrossRef] [PubMed]

- LIDC-IDRI Dataset. Available online: https://www.cancerimagingarchive.net/collection/lidc-idri/ (accessed on 9 September 2025).

- Tang, L.Y.W.; Coxson, H.O.; Lam, S.; Leipsic, J.; Tam, R.C.; Sin, D.D. Towards large-scale case-finding: Training and validation of residual networks for detection of chronic obstructive pulmonary disease using low-dose CT. Lancet Digit. Health 2020, 2, e259–e267. [Google Scholar] [CrossRef]

- González, G.; Ash, S.Y.; Vegas-Sánchez-Ferrero, G.; Onieva, J.O.; Rahaghi, F.N.; Ross, J.C.; Díaz, A.; Estépar, R.S.J.; Washko, G.R.; Copdgene, F.T. Disease staging and prognosis in smokers using deep learning in chest computed tomography. Am. J. Respir. Crit. Care Med. 2018, 197, 193–203. [Google Scholar] [CrossRef]

- Sun, J.; Liao, X.; Yan, Y.; Zhang, X.; Sun, J.; Tan, W.; Liu, B.; Wu, J.; Guo, Q.; Gao, S.; et al. Detection and staging of chronic obstructive pulmonary disease using a computed tomography–based weakly supervised deep learning approach. Eur. Radiol. 2022, 32, 5319–5329. [Google Scholar] [CrossRef]

- Xue, M.; Jia, S.; Chen, L.; Huang, H.; Yu, L.; Zhu, W. CT-based COPD identification using multiple instance learning with two-stage attention. Comput. Methods Programs Biomed. 2023, 230, 107356. [Google Scholar] [CrossRef]

- Park, H.; Yun, J.; Lee, S.M.; Hwang, H.J.; Seo, J.B.; Jung, Y.J.; Hwang, J.; Lee, S.H.; Lee, S.W.; Kim, N. Deep learning–based approach to predict pulmonary function at chest CT. Radiology 2023, 307, e221488. [Google Scholar] [CrossRef]

- Humphries, S.M.; Notary, A.M.; Centeno, J.P.; Strand, M.J.; Crapo, J.D.; Silverman, E.K.; Lynch, D.A.; For the Genetic Epidemiology of COPD (COPDGene) Investigators. Deep learning enables automatic classification of emphysema pattern at CT. Radiology 2020, 294, 434–444. [Google Scholar] [CrossRef]

- Almeida, S.D.; Norajitra, T.; Lüth, C.T.; Wald, T.; Weru, V.; Nolden, M.; Jäger, P.F.; von Stackelberg, O.; Heußel, C.P.; Weinheimer, O.; et al. Prediction of disease severity in COPD: A deep learning approach for anomaly-based quantitative assessment of chest CT. Eur. Radiol. 2024, 34, 4379–4392. [Google Scholar] [CrossRef]

- Almeida, S.D.; Norajitra, T.; Lüth, C.T.; Wald, T.; Weru, V.; Nolden, M.; Jäger, P.F.; von Stackelberg, O.; Heußel, C.P.; Weinheimer, O.; et al. How do deep-learning models generalize across populations? Cross-ethnicity generalization of COPD detection. Insights Into Imaging 2024, 15, 198. [Google Scholar] [CrossRef]

- Nam, J.G.; Kang, H.-R.; Lee, S.M.; Kim, H.; Rhee, C.; Goo, J.M.; Oh, Y.-M.; Lee, C.-H.; Park, C.M. Deep learning prediction of survival in patients with chronic obstructive pulmonary disease using chest radiographs. Radiology 2022, 305, 199–208. [Google Scholar] [CrossRef]

- Ueda, D.; Matsumoto, T.; Yamamoto, A.; Walston, S.L.; Mitsuyama, Y.; Takita, H.; Asai, K.; Watanabe, T.; Abo, K.; Kimura, T.; et al. A deep learning-based model to estimate pulmonary function from chest x-rays: Multi-institutional model development and validation study in Japan. Lancet Digit. Health 2024, 6, e580–e588. [Google Scholar] [CrossRef]

- Schroeder, J.D.; Bigolin Lanfredi, R.; Li, T.; Chan, J.; Vachet, C.; Paine, R., III; Srikumar, V.; Tasdizen, T. Prediction of obstructive lung disease from chest radiographs via deep learning trained on pulmonary function data. Int. J. Chronic Obstr. Pulm. Dis. 2020, 15, 3455–3466. [Google Scholar] [CrossRef]

- Doroodgar Jorshery, S.; Chandra, J.; Walia, A.S.; Stumiolo, A.; Corey, K.; Zekavat, S.M.; Zinzuwadia, A.N.; Patel, K.; Short, S.; Mega, J.L.; et al. Leveraging Deep Learning of Chest Radiograph Images to Identify Individuals at High Risk for Chronic Obstructive Pulmonary Disease. medRxiv 2024. [Google Scholar] [CrossRef]

- Zou, X.; Ren, Y.; Yang, H.; Zou, M.; Meng, P.; Zhang, L.; Gong, M.; Ding, W.; Han, L.; Zhang, T. Screening and staging of chronic obstructive pulmonary disease with deep learning based on chest X-ray images and clinical parameters. BMC Pulm. Med. 2024, 24, 153. [Google Scholar] [CrossRef]

- Mac, A.; Xu, T.; Wu, J.K.Y.; Belousova, N.; Kitazawa, H.; Vozoris, N.; Rozenberg, D.; Ryan, C.M.; Valaee, S.; Chow, C.-W. Deep learning using multilayer perception improves the diagnostic acumen of spirometry: A single-centre Canadian study. BMJ Open Respir. Res. 2022, 9, e001396. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Li, Q.; Chen, W.; Jian, W.; Liang, J.; Gao, Y.; Zhong, N.; Zheng, J. Deep Learning-based analytic models based on flow-volume curves for identifying ventilatory patterns. Front. Physiol. 2022, 13, 824000. [Google Scholar] [CrossRef]

- Cosentino, J.; Behsaz, B.; Alipanahi, B.; McCaw, Z.R.; Hill, D.; Schwantes-An, T.-H.; Lai, D.; Carroll, A.; Hobbs, B.D.; Cho, M.H.; et al. Inference of chronic obstructive pulmonary disease with deep learning on raw spirograms identifies new genetic loci and improves risk models. Nat. Genet. 2023, 55, 787–795. [Google Scholar] [CrossRef] [PubMed]

- Hill, D.; Torop, M.; Masoomi, A.; Castaldi, P.; Dy, J.; Cho, M.; Hobbs, B. Deep Learning Utilizing Discarded Spirometry Data to Improve Lung Function and Mortality Prediction in the UK Biobank. In Proceedings of the A95. The Idea Generator: Novel Risk Factors and Fresh Approaches to Disease Characterization 2022, San Francisco, CA, USA, 13–18 May 2022; p. A2145. [Google Scholar]

- Altan, G.; Kutlu, Y.; Gökçen, A. Chronic obstructive pulmonary disease severity analysis using deep learning on multi-channel lung sounds. Turk. J. Electr. Eng. Comput. Sci. 2020, 28, 2979–2996. [Google Scholar] [CrossRef]

- Naqvi, S.Z.H.; Choudhry, M.A. An automated system for classification of chronic obstructive pulmonary disease and pneumonia patients using lung sound analysis. Sensors 2020, 20, 6512. [Google Scholar] [CrossRef] [PubMed]

- Yu, H.; Zhao, J.; Liu, D.; Chen, Z.; Sun, J.; Zhao, X. Multi-channel lung sounds intelligent diagnosis of chronic obstructive pulmonary disease. BMC Pulm. Med. 2021, 21, 321. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Xu, Z.; Sun, L.; Yu, K.; Hersh, C.P.; Boueiz, A.; Hokanson, J.E.; Sciurba, F.C.; Silverman, E.K.; Castaldi, P.J.; et al. Deep learning integration of chest computed tomography imaging and gene expression identifies novel aspects of COPD. Chronic Obstr. Pulm. Dis. J. COPD Found. 2023, 10, 355–368. [Google Scholar] [CrossRef] [PubMed]

| Inclusion | Exclusion |
|---|---|
| Original research articles and preprints with methodological detail. | Narrative reviews, systematic reviews, and conference abstracts that lack sufficient data. |
| Studies based on human-derived datasets. | Animal studies and simulated data without clinical relevance. |
| AI-based COPD detection, classification, or diagnosis. | Statistical models without algorithmic learning. |
| Studies reporting quantitative performance metrics. | Studies without measurable performance outcomes. |
| Studies published in the English language between 2010 and 2025. | Non-English publications. |
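The eligibility criteria above work as a conjunction: a record is retained only if it passes every inclusion test. The following Python sketch expresses that logic. It assumes that each record has already been annotated with the relevant attributes. The `Record` fields, the publication-type labels, and the `is_eligible`/`screen` helpers are hypothetical illustrations and do not describe the authors' actual screening tools.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Publication types admitted by the inclusion column (labels are hypothetical).
INCLUDED_TYPES = {"original article", "preprint"}


@dataclass
class Record:
    """Hypothetical, pre-annotated bibliographic record."""
    title: str
    year: int
    language: str
    publication_type: str
    has_method_detail: bool   # enough methodological detail to assess
    human_data: bool          # human-derived dataset (not animal/simulated)
    ai_copd_task: bool        # AI-based COPD detection, classification, or diagnosis
    learns_from_data: bool    # algorithmic learning, not a purely statistical model
    reports_metrics: bool     # quantitative performance metrics reported


def is_eligible(r: Record) -> bool:
    """True only if the record meets every inclusion criterion."""
    return (
        r.publication_type in INCLUDED_TYPES
        and r.has_method_detail
        and r.human_data
        and r.ai_copd_task
        and r.learns_from_data
        and r.reports_metrics
        and r.language == "English"
        and 2010 <= r.year <= 2025
    )


def screen(records: Iterable[Record]) -> List[str]:
    """Return titles of eligible records, preserving input order."""
    return [r.title for r in records if is_eligible(r)]


# Invented example records, one per exclusion reason.
candidates = [
    Record("CNN on low-dose CT", 2020, "English", "original article",
           True, True, True, True, True),
    Record("Review of AI in COPD", 2021, "English", "systematic review",
           True, True, True, True, True),
    Record("Regression-only risk score", 2019, "English", "original article",
           True, True, True, False, True),
    Record("Spirogram model", 2009, "English", "original article",
           True, True, True, True, True),
]
print(screen(candidates))  # ['CNN on low-dose CT']
```

In this example, the review is rejected by publication type. The regression-only score is rejected because it lacks algorithmic learning, and the 2009 study is rejected because it falls outside the date window.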

| Study | Dataset (Number of Participants, n) | AI Approach | Validation Design | Quantitative Performance | Limitations |
|---|---|---|---|---|---|
| Tang et al. [47] | Training: n = 2589 (male n = 1440; female n = 1149), Canada (PanCan). External: n = 2195 (male n = 1318; female n = 877), ECLIPSE (multinational). | Fine-tuned residual CNN architecture | Three-fold cross-validation (CV) on PanCan; external test on ECLIPSE with no model change. | CV AUC 0.889 ± 0.017; external AUC 0.886 ± 0.017; positive predictive value (PPV) 0.847 ± 0.056; negative predictive value (NPV) 0.755 ± 0.097 | Screening bias, retrospective design, and limited generalization |
| González et al. [48] | n = 7983 COPDGene (US, multicenter smokers) + n = 1672 ECLIPSE (multinational smokers) | 2D CNN using four canonical CT views for COPD detection, Global Initiative for Chronic Obstructive Lung Disease (GOLD) staging, and prognosis | Internal test in COPDGene; external test in ECLIPSE; logistic/Cox models for events and mortality | AUC ≈ 0.85 for COPD; accuracy = 74.95% | Smoker-only cohorts and cross-study CT protocol differences |
| Sun et al. [49] | Multicenter Chinese CT cohort: n = 1393 from four large public hospitals; labels from spirometry; external validation on a National Lung Screening Trial (NLST) subset (~620 cases) | Weakly supervised CT DL (multiple-instance/patch-bag paradigm) for COPD detection and GOLD staging | Internal split in the Chinese cohort; external check on NLST | GOLD grading accuracy ≈ 76.4%; weighted κ ≈ 0.619 | Retrospective design and site-specific protocols |
| Xue et al. [50] | n = 800 for training and testing; n = 260 for external validation | Two-Stage-Attention multiple-instance learning (TSA-MIL) using slice/patch bags; compared against conventional MIL and CNN baselines | Cross-validation; external test set included | Internal accuracy ≈ 92%, AUC ≈ 0.95; external AUC ≈ 0.87 | Potential label noise from weak supervision, single-country data, and limited reporting on scanner harmonization |
| Park et al. [51] | n = 16,148; mean age 55 ± 10 years (mean ± SD) | Volumetric CT deep learning with regression/classification to predict pulmonary function test (PFT) values (FEV1, FVC, FEV1/FVC) and flag abnormality | Train/validation/test splits, internal validation, and threshold-based screening analyses | Sensitivities of 61.6%, 46.9%, and 36.1% at different abnormality thresholds | Single-country screening population and limited generalization |
| Humphries et al. [52] | COPDGene test cohort n = 7143; external ECLIPSE n = 1962; US/multinational smokers with baseline inspiratory CT and outcomes | CNN + LSTM to automate emphysema grading on CT | Internal testing (COPDGene) + external testing (ECLIPSE) | Weighted κ (DL vs. visual) 0.60; mortality hazard ratios vs. “no emphysema”: 1.5, 1.7, 2.9, 5.3, and 9.7 | Smoker-enriched cohorts; substantial computational demands |
| Almeida et al. [53] | COPDGene: train/val/test n = 3144/786/1310; external COSYCONET n = 446 (Germany) | Self-supervised learning (SSL) representation + anomaly detection on 3D CT patches to quantify COPD severity as an “anomaly score” | Internal test (COPDGene) and external test (COSYCONET); compared with supervised DL baselines; anomaly score linked to PFTs, SGRQ, emphysema, and air trapping | AUC = 84.3 ± 0.3 (COPDGene) and AUC = 76.3 ± 0.6 (COSYCONET) for COPD versus low-risk; the anomaly score is significantly associated with lung function, symptoms, and exacerbations (p < 0.001) | Scanner/protocol variability; although the anomaly-based approach improves generalization, interpretability and thresholds for clinical use are still emerging |
| Almeida et al. [54] | COPDGene: train/val/test n = 3144/786/1310 | SSL versus supervised CNN for COPD detection, with uncertainty estimation and fairness analysis | Cross-ethnicity evaluation in an internal/external style | SSL achieved significantly higher AUC than supervised learning across ethnic groups (p < 0.001); the article emphasizes generalization rather than a single pooled AUC | US-centric cohorts and limited emphasis on generalization and fairness metrics |
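Sun et al. [49] and Xue et al. [50] treat a CT scan as a bag of slices or patches. The bag carries only a scan-level label, which comes from spirometry in Sun et al. A common way to train under this kind of weak supervision is attention-based multiple-instance pooling. In this approach, attention weights determine how much each patch contributes to the scan-level prediction. The pure-Python sketch below shows only the forward pass of this generic pooling. It is not the TSA-MIL architecture of Xue et al. or the specific method of Sun et al. The parameter values `V`, `w`, `c`, and `b` are arbitrary placeholders that stand in for trained weights.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def softmax(xs: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_mil(bag: List[Vector], V: Matrix, w: Vector,
                  c: Vector, b: float) -> Tuple[float, Vector]:
    """Pool instance embeddings into one bag-level COPD probability.

    bag: one embedding per CT patch or slice (all the same length).
    Returns (bag probability, attention weight per instance).
    """
    # Attention score for instance h_k: w . tanh(V h_k)
    scores = [dot(w, [math.tanh(x) for x in matvec(V, h)]) for h in bag]
    alpha = softmax(scores)
    # Bag embedding z = sum_k alpha_k * h_k (independent of instance order)
    z = [sum(a * h[d] for a, h in zip(alpha, bag)) for d in range(len(bag[0]))]
    # Logistic classifier on the pooled embedding
    prob = 1.0 / (1.0 + math.exp(-(dot(c, z) + b)))
    return prob, alpha


# Placeholder parameters (untrained, illustrative only)
V = [[1.0, 0.0], [0.0, 1.0]]
w = [1.0, 0.0]
c = [2.0, 0.0]
b = -1.0

scan = [[0.1, 0.0], [2.0, 0.5], [0.0, 0.2]]  # three toy patch embeddings
prob, alpha = attention_mil(scan, V, w, c, b)
print(round(prob, 3), [round(a, 3) for a in alpha])
```

The bag embedding is a weighted sum, so shuffling the patches leaves the prediction unchanged. The attention weights can be inspected as a rough indication of which patches drove the scan-level output.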

| Study | Dataset (Number of Participants, n) | AI Approach | Validation Design | Quantitative Performance | Limitations |
|---|---|---|---|---|---|
| Nam et al. [55] | 4225 patients: training (n = 3475), validation (n = 435), and test (n = 315) | DL survival model (DLSP_CXR and integrated DLSP_integ); outcomes via time-to-event modeling | Development + validation cohorts; time-dependent AUC analyses vs. established indices | The time-dependent AUC of the DL model did not differ significantly from BODE (0.87 vs. 0.80; p = 0.34), ADO (0.86 vs. 0.89; p = 0.51), or SGRQ (0.86 vs. 0.70; p = 0.09) and was higher than CAT (0.93 vs. 0.55; p < 0.001); good calibration | Prognostic rather than diagnostic; Korean cohorts; use in treatment decisions requires prospective testing |
| Ueda et al. [56] | Multi-institution, Japan: 81,902 patients with 141,734 CXR–spirometry pairs from five hospitals; external tests at two independent institutions (n = 2137 CXRs; n = 5290) | DL regression model to estimate FVC and FEV1 from a single CXR | Training/validation/testing on three sites; external testing on two sites | FVC: r = 0.91/0.90, ICC = 0.91/0.89, MAE = 0.31 L/0.31 L; FEV1: r = 0.91/0.91, ICC = 0.90/0.90, MAE = 0.28 L/0.25 L | Retrospective, Japan-only cohorts; estimates lung function rather than a COPD label |
| Schroeder et al. [57] | Single institution (USA); 6749 two-view CXRs (2012–2017) from 4436 subjects with near-concurrent PFTs (≤180 days) | ResNet-18 (frontal + lateral) trained on PFT labels to predict airflow obstruction; compared with an NLP model of radiology reports | Training/validation/testing splits by subject | AUC 0.814 for obstructive lung disease (FEV1/FVC < 0.70) and 0.837 for severe COPD (FEV1 < 0.5); both exceed NLP (0.704 and 0.770; p < 0.001) | Single-center, retrospective, and limited generalizability |
| Doroodgar Jorshery et al. [58] | Ever-smokers (n = 12,550; mean age 62.4 ± 6.8 years; 48.9% male; 6-year COPD rate 12.4%) and never-smokers (n = 15,298; mean age 63.0 ± 8.1 years; 42.8% male; 6-year COPD rate 3.8%), collected at Mass General Brigham (MGB), Boston, USA | Pre-trained CXR-Lung-Risk CNN used to stratify risk of incident COPD from a single baseline CXR | External retrospective validation; time-to-event analyses for incident COPD | Risk stratification for 6-year incident COPD, reported as HRs/C-indices rather than diagnostic AUC | Retrospective design and single-site primary cohort |
| Zou et al. [59] | Multicenter, China: 1055 participants (COPD n = 535, controls n = 520) with frontal CXR + clinical data; internal test n = 284; external test n = 105 | Ensemble DL combining CXR features + clinical parameters for COPD screening and GOLD staging | Internal split + external test from another site | COPD detection AUC: internal 0.969 (fusion), external 0.934; CXR-only 0.946; clinical-only 0.963. Staging AUC: 0.894 (3-class) and 0.852 (5-class) | Retrospective; modest external cohort; exclusion of many comorbid lung diseases may overstate real-world performance |
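Ueda et al. [56] evaluate CXR-based estimates of FVC and FEV1 using Pearson's r, ICC, and MAE. Schroeder et al. [57] label obstructive lung disease as FEV1/FVC < 0.70. The sketch below computes r and MAE for paired measured and estimated values, then applies the fixed-ratio rule to the estimates. All numbers are invented toy values. ICC is omitted because the table does not specify which ICC form was used.

```python
import math
from statistics import mean
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between measured and estimated values."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mean_absolute_error(x: Sequence[float], y: Sequence[float]) -> float:
    """MAE in the inputs' unit (litres for FEV1 and FVC volumes)."""
    return mean(abs(a - b) for a, b in zip(x, y))


def is_obstructed(fev1: float, fvc: float, cutoff: float = 0.70) -> bool:
    """Fixed-ratio airflow-obstruction rule: FEV1/FVC below the cutoff."""
    return fev1 / fvc < cutoff


# Toy values in litres (not taken from any cited study)
fev1_measured = [2.0, 2.5, 3.0, 1.5]
fev1_estimated = [2.1, 2.4, 3.2, 1.4]
fvc_estimated = [3.1, 3.3, 3.9, 2.5]

print(f"r = {pearson_r(fev1_measured, fev1_estimated):.3f}")
print(f"MAE = {mean_absolute_error(fev1_measured, fev1_estimated):.3f} L")
print([is_obstructed(f1, fv) for f1, fv in zip(fev1_estimated, fvc_estimated)])
```

The cutoff is a parameter, so the 0.70 fixed ratio used by Schroeder et al. can be replaced if a study defines obstruction with a different threshold.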

| Study | Dataset (Number of Participants, n) | AI Approach | Validation Design | Quantitative Performance | Limitations |
|---|---|---|---|---|---|
| Mac et al. [60] | ~1400 patients, Toronto General Hospital, Canada; single-center cohort with spirometry + plethysmography | Multilayer perceptron (MLP) deep learning vs. pulmonologists | Internal validation with cross-validation; performance compared against clinicians and PFT “gold standard” | Outperformed pulmonologists in classification; comparable to complete PFT diagnostic acumen (exact accuracy not specified, κ > 0.7 reported) | Single-center, relatively small sample, limited external validation, restricted to Canadian patients, and may not capture device/institutional variability |
| Wang et al. [61] | 18,909 subjects, First Affiliated Hospital of Guangzhou Medical University, China; large hospital-based PFT dataset | CNN-based models (ResNet, VGG13) on flow–volume curves | Split into training, validation, and test sets; compared DL vs. pulmonologists and primary care physicians | VGG13 accuracy 95.6%; pulmonologists 76.9% (κ = 0.46); primary care physicians 56.2% | Single-institution dataset, Chinese-only population, retrospective, limited interpretability, imbalance across ventilatory patterns |
| Cosentino et al. [62] | ~350,000 participants, UK Biobank, United Kingdom; raw spirograms (ages 40–69, general population) | Deep learning on raw spirograms → COPD liability score | Training/validation/test splits within UK Biobank; evaluated against traditional phenotypes and genetic associations. | AUROC ~0.82 for COPD; AUROC ~0.89 for COPD hospitalization; HR ~1.22 for mortality prediction; identified 67 novel loci. | Labels are noisy due to their reliance on EHR/ICD codes, a UK-only cohort (limited generalizability), no bronchodilator data, and limited ethnic diversity. |
| Hill et al. [63] | ~350,000 participants, UK Biobank, United Kingdom; included QC-passed and suboptimal spirograms. | Contrastive learning (Spiro-CLF) using all efforts, incl. discarded curves. | Train-test split within the UK Biobank and held-out test set. | AUROC of 0.981 for obstruction; C-index 0.654 for mortality (vs. ~0.59 best effort only). | UK-only cohort (limited generalizability), limited ethnic diversity, and retrospective. |
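Wang et al. [61] and Mac et al. [60] report agreement between classifications using Cohen's κ. Sun et al. [49] and Humphries et al. [52] report weighted κ for ordinal grades. The following sketch implements the standard disagreement-weighted form, κ = 1 − Σ w_ij o_ij / Σ w_ij e_ij, where o is the observed proportion matrix and e is the chance-expected proportion matrix. Unweighted κ corresponds to w_ij = 1 for every off-diagonal cell. The tables do not state whether those studies used linear or quadratic weights, so the `weights` option is an assumption.

```python
from typing import Hashable, Optional, Sequence


def cohen_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable],
                categories: Sequence[Hashable],
                weights: Optional[str] = None) -> float:
    """Cohen's kappa between two raters.

    List categories in ordinal order when weights is "linear" or
    "quadratic"; weights=None gives unweighted kappa.
    """
    k = len(categories)
    index = {c: i for i, c in enumerate(categories)}
    n = len(rater_a)

    # Observed joint proportions o[i][j]
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1.0 / n

    # Marginals define the chance-expected matrix e[i][j] = row[i] * col[j]
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    def disagreement(i: int, j: int) -> float:
        if weights is None:
            return 0.0 if i == j else 1.0
        d = abs(i - j) / (k - 1)
        if weights == "linear":
            return d
        if weights == "quadratic":
            return d * d
        raise ValueError(f"unknown weights: {weights!r}")

    num = sum(disagreement(i, j) * observed[i][j]
              for i in range(k) for j in range(k))
    den = sum(disagreement(i, j) * row[i] * col[j]
              for i in range(k) for j in range(k))
    return 1.0 - num / den


# Toy ordinal grades: two off-by-one disagreements out of four cases
grades = [1, 2, 3]
reference = [1, 2, 3, 3]
model = [1, 3, 3, 2]
print(round(cohen_kappa(reference, model, grades), 3))               # 0.2
print(round(cohen_kappa(reference, model, grades, "quadratic"), 3))  # 0.636
```

Weighted κ penalizes near-miss disagreements less than distant ones. In this example, adjacent-grade errors therefore yield a higher quadratic κ than unweighted κ. This is one reason weighted and unweighted values from different studies should not be compared directly.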

| Study | Dataset (Number of Participants, n) | AI Approach | Validation Design | Quantitative Performance | Limitations |
|---|---|---|---|---|---|
| Altan et al. [64] | 41 patients who underwent 12-channel auscultation (posterior/anterior chest points), Turkey | Feature engineering via 3D second-order difference plots (chaos plots); quantization; Deep Extreme Learning Machine | Retrospective single-dataset development; internal evaluation only (no independent external site) | Overall accuracy 94.31%, weighted sensitivity 94.28%, weighted specificity 98.76%, and AUC 0.9659 for five-class COPD severity | Small, single-source dataset with no external validation |
| Naqvi & Choudhry [65] | International Conference on Biomedical and Health Informatics (ICBHI) dataset: 920 recordings from 126 subjects in total; the study used 703 recordings from the COPD, pneumonia, and healthy classes (multi-site public dataset) | Region-of-interest (ROI) extraction + denoising; fusion of time, cepstral, and spectral features; backward-elimination feature selection; classifiers compared | Internal cross-validation and hold-out evaluation on ICBHI; no self-collected or clinical external cohort | Best model: accuracy 99.70%, TPR > 99%, FNR < 1% on selected fused features | Limited patient metadata, public mixed-source recordings, potential label/device noise, and no external clinical validation |
| Yu et al. [66] | 12-channel lung-sound recordings from 42 COPD patients (aged 38–68; 34 men/8 women), recorded by pulmonologists with a Littmann 3200 stethoscope | Hand-engineered Hilbert–Huang transform (EEMD) time–frequency–energy features → ReliefF channel/feature selection → SVM classifier (compared with Bayes, decision tree, and DBN); four channels (L1–L4) retained after selection | Retrospective model development on the public dataset; two binary severity tasks (mild vs. moderate + severe; moderate vs. severe); internal resampling; no external cohort | Mild vs. moderate + severe: accuracy 89.13%, sensitivity 87.72%, specificity 91.01%. Moderate vs. severe: accuracy 94.26%, sensitivity 97.32%, specificity 89.93% | Small sample, single public dataset, and no external validation |
| Chu et al. [42] | Mass General Brigham (MGB) Biobank (Boston, MA): 3420 screen-positive candidates; gold-standard chart-review labels by pulmonologists for train/test sets of n = 182/100 (77/46 COPD cases). External validation: Marshfield Clinic (Wisconsin) independent EHR/biobank sample | Elastic-net logistic models using structured EHR data (ICD codes, medications, etc.) + NLP features (SAFE) from notes; models compared with and without spirometry features | Internal testing on the MGB gold-standard test set; independent external validation at Marshfield with identical screening/filtering and blinded chart review | Internal (MGB): PPV 91.7%, sensitivity 71.7%, specificity 94.4%. External (Marshfield): PPV 93.5%, sensitivity 61.4%, specificity 90% | Trained in biobank/EHR populations; sensitivity drops without spirometry; portability shown across two U.S. systems, but international generalizability is not investigated |
| Chen et al. [67] | 1223 participants with inspiratory/expiratory CT + blood RNA-seq (50% female; 82% non-Hispanic White (NHW), 18% African American; mean age 67); train/test split 923/300; independent replication in 1527 COPDGene participants with CT but without RNA-seq; analysis restricted to the Siemens B31F kernel | CT patch features via context-aware self-supervised representation learning (CSRL) → MLP to learn image-expression axes (IEAs) linking CT structure and the blood transcriptome; associations tested with clinical traits and Cox survival models; pathway enrichment | Nested 5-fold CV within training; held-out test set; independent replication in the non-RNA-seq COPDGene subset; focus on associations and prognosis rather than binary classification | Identified emphysema-predominant and airway-predominant IEAs strongly correlated with CT emphysema/airway measures and lung function; significant associations with outcomes via Cox models and enriched biological pathways (adjusted p < 0.001) | Single vendor/kernel (Siemens B31F) constraint; U.S. smoker cohort; not a straightforward “COPD vs. non-COPD” classifier; generalizability beyond COPDGene not demonstrated |
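Several studies in the table above report threshold-dependent metrics. For example, Chu et al. [42] report PPV, sensitivity, and specificity, and Yu et al. [66] report accuracy, sensitivity, and specificity. Altan et al. [64] also report threshold-free AUC. The binary-case sketch below derives the threshold-dependent metrics from a confusion matrix at a chosen score threshold. It computes AUC as the probability that a randomly chosen positive case outranks a randomly chosen negative case. This is the Mann–Whitney formulation, with ties counted as one half. The scores, labels, and 0.5 threshold are illustrative assumptions. The five-class, weighted metrics of Altan et al. would require per-class averaging, which is not shown here.

```python
from typing import Dict, Sequence


def safe_div(num: float, den: float) -> float:
    """Return NaN instead of raising when a denominator is zero."""
    return num / den if den else float("nan")


def confusion_metrics(y_true: Sequence[int], scores: Sequence[float],
                      threshold: float = 0.5) -> Dict[str, float]:
    """Binary metrics after calling score >= threshold positive (1 = COPD)."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return {
        "sensitivity": safe_div(tp, tp + fn),
        "specificity": safe_div(tn, tn + fp),
        "ppv": safe_div(tp, tp + fp),
        "npv": safe_div(tn, tn + fn),
        "accuracy": safe_div(tp + tn, len(y_true)),
    }


def auc_mann_whitney(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a positive > score of a negative), ties = 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for q in neg:
            wins += 1.0 if p > q else 0.5 if p == q else 0.0
    return safe_div(wins, len(pos) * len(neg))


labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.2, 0.1]
print(confusion_metrics(labels, scores))
print(round(auc_mann_whitney(labels, scores), 3))  # 0.889
```

Sensitivity, specificity, PPV, and NPV all shift with the threshold, and PPV and NPV also depend on prevalence, whereas AUC does not. For this reason, threshold-based results such as the multiple sensitivities reported by Park et al. [51] are comparable across studies only when the operating point is stated.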

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sait, A.R.W.; Shaikh, M.A. Artificial Intelligence-Powered Chronic Obstructive Pulmonary Disease Detection Techniques—A Review. Diagnostics 2025, 15, 2562. https://doi.org/10.3390/diagnostics15202562
