1. Introduction

Computer-aided diagnosis (CAD) systems have revolutionized endoscopic practice by enhancing the accuracy of lesion detection, standardizing diagnostic criteria, and aiding in clinical decision making [1]. However, developing robust AI models for endoscopic applications remains fundamentally constrained by limited comprehensive datasets that adequately represent the complete spectrum of disease progression. This limitation becomes particularly pronounced when considering the emerging paradigm of personalized disease trajectory prediction, which represents a transformative frontier in medical AI [2,3,4]. Such predictive capabilities, successfully demonstrated across various medical domains through longitudinal symptom monitoring [5,6,7,8], remain largely unexplored in endoscopic applications. This challenge is particularly acute in inflammatory bowel diseases such as Ulcerative Colitis (UC), where current scoring systems inadequately represent the continuous nature of pathological evolution.

Ulcerative Colitis is a chronic inflammatory bowel disease characterized by inflammation and ulceration of the colonic mucosa. The severity of UC is generally assessed using the Mayo Endoscopic Score (MES), which ranges from 0 to 3 [9]. While this scoring system provides a useful standardized clinical assessment, it imposes artificial discrete categories on what is inherently a continuous pathological process [10,11,12]. Although UC severity exists along a continuum, medical image datasets commonly consist of isolated samples annotated with discrete MES levels, and controlled image sets capturing the same anatomical region as it progresses through different severity stages are often lacking. In addition, current computer-aided endoscopic systems primarily focus on static image analysis, largely due to this lack of longitudinal data. While the generation of longitudinal disease progression has been explored in other medical domains [8,13,14], there remains a research gap regarding endoscopic applications. Such longitudinal data would be valuable for developing comprehensive visual training materials and simulation tools, helping clinicians better recognize subtle transitions between MES stages and gain deeper insight into disease dynamics on a patient-specific level.

Recent advances in generative artificial intelligence, particularly diffusion models, have demonstrated remarkable capabilities in medical image synthesis [15,16]. Compared with Generative Adversarial Networks (GANs), diffusion models offer better training stability, sample diversity, and generation quality, making them particularly suitable for medical applications requiring high accuracy and reliability [17]. However, because only discretely labeled MES data are available, current generative models can synthesize only discrete UC classes, leaving intermediate severity levels unrepresented.

This study addresses these fundamental limitations by introducing novel ordinal class-embedding architectures specifically designed for medical image generation. Our approach converts cross-sectional endoscopic datasets into continuous progression models, enabling the synthesis of realistic intermediate disease stages that are often underrepresented in clinical data. Non-ordinal embedding approaches treat all classes as separate, unrelated entities in the embedding space. Disease severity datasets, however, are inherently ordinal, so the conditioning embeddings should preserve that order. The key innovation lies in developing specialized embeddings that capture the cumulative nature of pathological features, recognizing that higher severity levels encompass the characteristics of lower levels while also introducing additional pathological manifestations.

The primary contributions are:

Development of ordinality-aware embedding strategies that model disease progression relationships, rather than treating severity levels as independent categories.

Adaptation of state-of-the-art diffusion models for medical image synthesis with domain-specific modifications.

Generation of realistic synthetic longitudinal datasets to unlock new possibilities for image-based UC trajectory analysis.

Exploration of integration with generative data augmentation techniques to improve deep learning model training using synthetic data.

2. Related Work

2.1. Computer-Aided Diagnosis in Endoscopy

Computer-aided diagnosis has emerged as a transformative technology in endoscopic practice, with deep learning models demonstrating exceptional performance in detecting and classifying gastrointestinal lesions [18]. The Mayo Endoscopic Score (MES) serves as the standard index for evaluating UC disease activity, grading inflammation severity on a scale from 0 to 3: 0 indicates normal mucosa; 1 corresponds to mild disease with erythema and decreased vascular pattern; 2 reflects moderate disease with marked erythema and friability; and 3 denotes severe disease characterized by spontaneous bleeding and ulceration [19].

Existing CAD systems predominantly rely on static image analysis and require extensive datasets covering the full pathological spectrum [20]. Recent advances in edge computing have enabled real-time AI deployment for endoscopic applications [21]; yet, these systems remain focused on static classification tasks, limiting their ability to model temporal disease evolution.

2.2. Evolution of Generative Models and Medical Image Synthesis

The progression from traditional generative approaches to modern diffusion models represents a significant advancement in medical image synthesis. The early frameworks included Variational Autoencoders (VAEs) [22] and autoregressive models such as PixelRNN [23], which established foundational probabilistic approaches. Generative Adversarial Networks (GANs) [24] subsequently revolutionized the field through adversarial training between generator and discriminator networks, leading to sophisticated architectures including StyleGAN variants [25,26].

The comparative analysis by Dhariwal and Nichol [27] revealed that diffusion models outperform GANs in image quality and diversity while providing superior training stability. Given these advantages, research on diffusion-based approaches for medical imaging has accelerated, leveraging the reliability and consistency of these models.

GAN-based medical image synthesis has been extensively applied across multiple imaging modalities, including radiography, computed tomography, magnetic resonance imaging, and histopathology [17]. Çağlar et al. [28] specifically addressed the classification of Ulcerative Colitis by combining StyleGAN2-ADA with active learning, demonstrating that class-specific synthetic data augmentation enhances classification accuracy when training data are limited, with separate models trained for each Mayo Endoscopic Score category.

Despite these achievements, GANs face substantial limitations in clinical applications, including mode collapse, training instability, and limited sample diversity [17]. These issues are particularly problematic in medical contexts where subtle image features carry significant clinical implications.

Recent diffusion model applications in medical imaging have demonstrated superior capabilities for preserving anatomical details and maintaining clinical validity. Kazerouni et al. [16] provide a comprehensive survey categorizing diffusion models across medical applications including image translation, reconstruction, and generation. Zhang et al. [29] introduced texture-preserving diffusion models for cross-modal synthesis, while Müller-Franzes et al. [17] developed Medfusion, a conditional latent diffusion model specifically designed for medical image generation that outperforms state-of-the-art GANs across multiple medical domains.

2.3. Ordinal Relationships in Medical Image Generation

A critical limitation of existing generative approaches is their treatment of disease severity as independent categorical variables rather than ordinal progressions. Takezaki and Uchida [30] introduced an Ordinal Diffusion Model by incorporating ordinal relationship loss functions to maintain severity class relationships during generation. This framework improves distribution estimation through interpolation/extrapolation capabilities, making it particularly applicable to medical conditions with well-defined severity scales.

However, current ordinal approaches primarily focus on discrete classification improvements rather than generating smooth progression sequences that could support medical education and training applications. This represents a significant gap where comprehensive disease progression modeling remains underexplored.

2.4. Disease Progression Modeling

Traditional disease progression modeling has relied primarily on longitudinal clinical data and statistical approaches that fail to capture visual manifestations of disease evolution [8]. Recent innovations have explored temporal medical data generation through video synthesis frameworks. Cao et al. [8] introduced Medical Video Generation (MVG), a zero-shot framework for disease progression simulation enabling manipulation of disease-specific characteristics without requiring pre-existing video datasets.

Li et al. [31] developed Endora, combining spatial-temporal video transformers with latent diffusion models for high-resolution endoscopy video synthesis. While these approaches demonstrate promise for temporal content generation, they typically require substantial computational resources and complex training procedures that may limit clinical applicability.

In particular, for UC disease progression, the discrete nature of current scoring systems fails to capture the continuous character of pathological evolution [32]. The scarcity of intermediate disease states in clinical datasets restricts the development of comprehensive educational resources and training materials for medical practitioners.

Current approaches to UC image generation have primarily focused on classification tasks rather than progression modeling. While GAN-based methods [28] and ordinal diffusion approaches [30] have shown promise for discrete severity classification, the generation of smooth, clinically meaningful progression sequences remains an unsolved challenge that could significantly benefit medical education and computer-aided diagnosis systems.

3. Materials and Methods

This work presents a conditional diffusion framework for synthesizing realistic disease progression sequences from cross-sectional endoscopic data. Conditional diffusion models are selected over alternative generative approaches due to their capability to preserve anatomical details crucial for medical applications [17]. The approach employs specialized ordinal class embeddings that capture the progressive nature of disease severity, enabling the generation of smooth transitions between discrete MES levels while maintaining anatomical consistency. Two novel embedding strategies are introduced: the Basic Ordinal Embedder (BOE), which facilitates smooth transitions via linear interpolation between discrete severity classes, and the Additive Ordinal Embedder (AOE), which explicitly models the cumulative nature of pathological features. The AOE represents higher disease severity levels as incremental additions to the characteristics of lower levels, thereby capturing the progressive accumulation of pathological changes throughout disease progression.

By adapting the Stable Diffusion v1.4 architecture with medical-specific modifications and replacing general-purpose text conditioning with domain-specific ordinal embeddings, the framework enables precise control over disease severity while maintaining high image quality and training stability.

3.1. Model Architecture

The proposed framework adapts Stable Diffusion v1.4 [

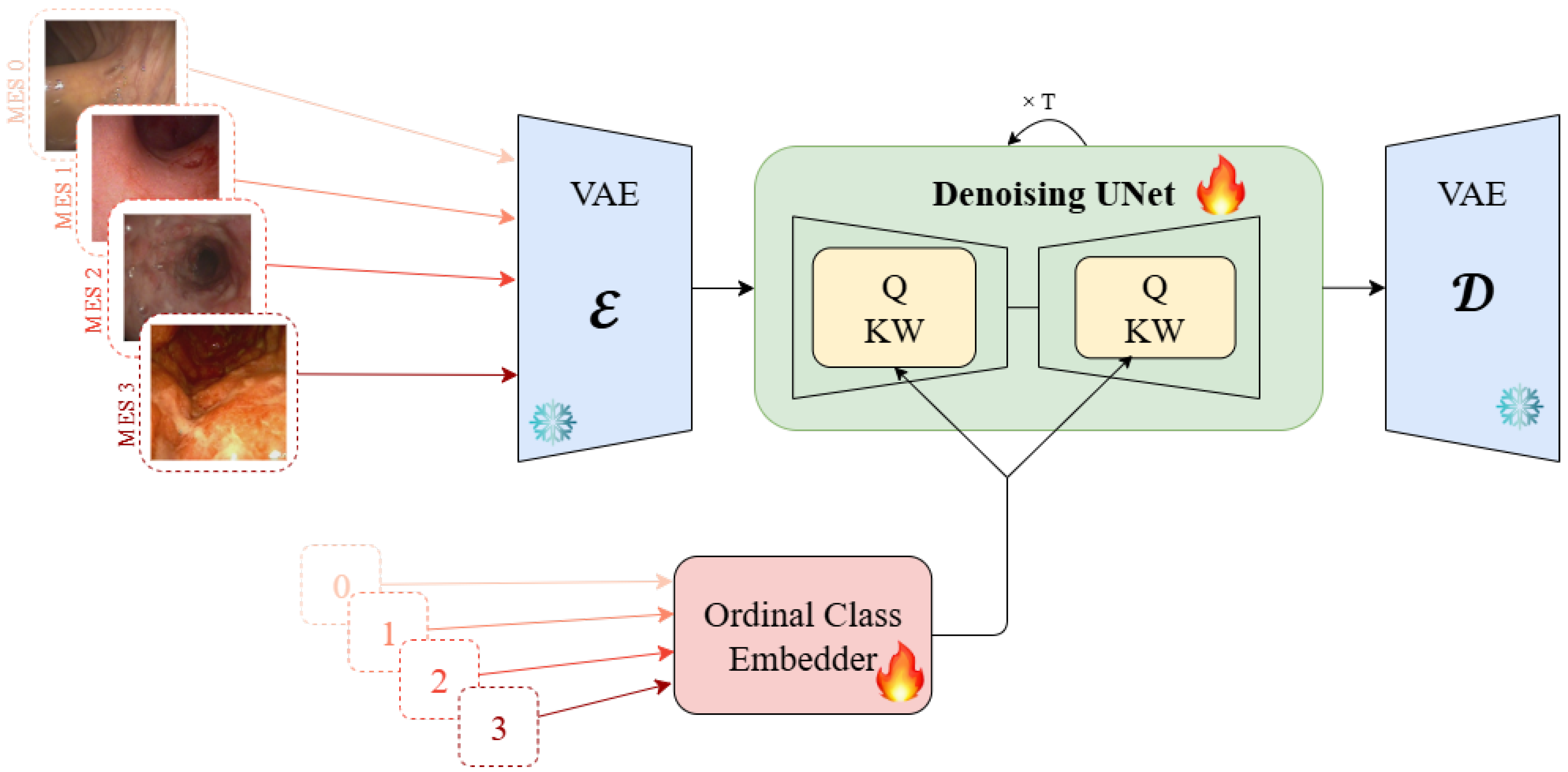

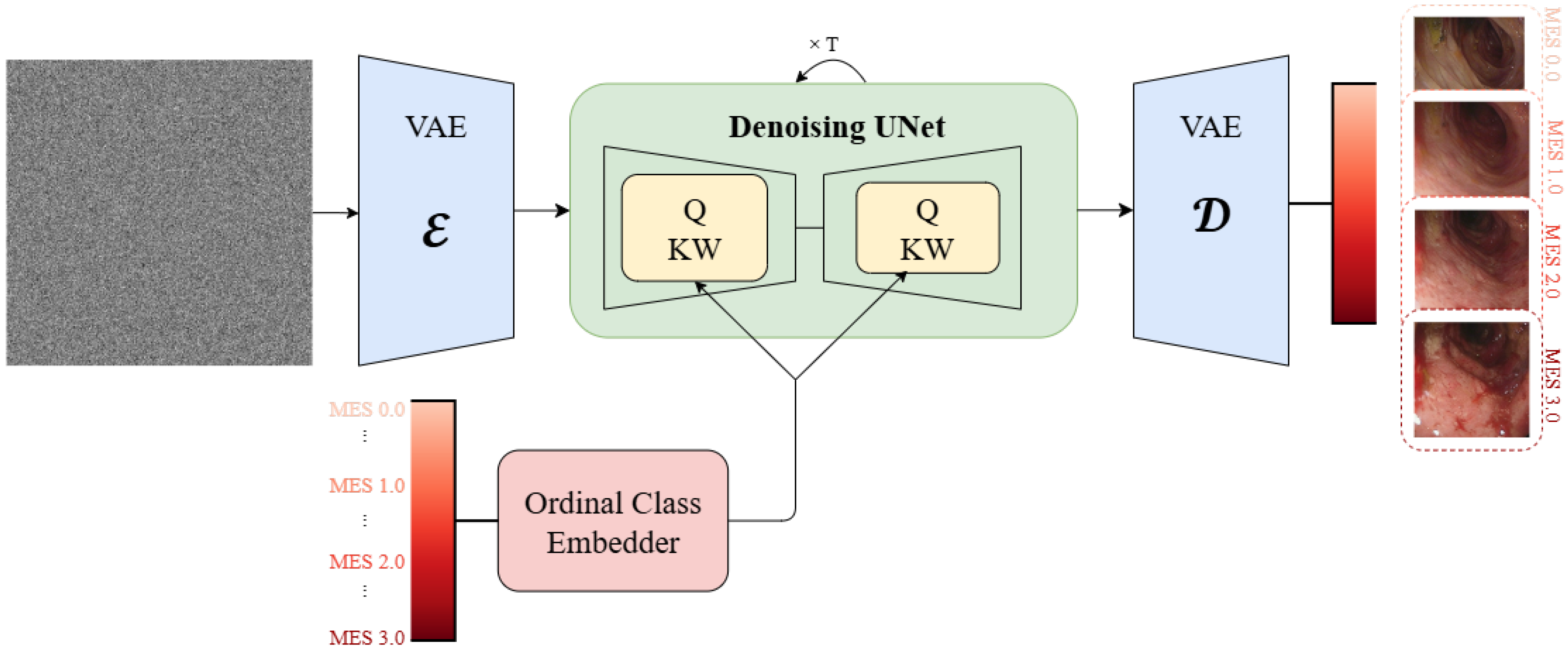

33] for medical image generation by replacing the standard CLIP text encoder with domain-specific ordinal embeddings. The architecture comprises three key components: (1) a frozen Variational Autoencoder (VAE) for efficient latent space operations, (2) a fine-tuned U-Net backbone for the denoising process, and (3) specialized ordinal class embeddings for medical conditioning. The training pipeline is demonstrated in

Figure 1 and the inference pipeline is presented in

Figure 2.

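The denoising process follows the standard diffusion objective with ordinal class conditioning:

$$\mathcal{L} = \mathbb{E}_{z_t,\, c,\, \epsilon \sim \mathcal{N}(0, I),\, t}\left[\left\lVert \epsilon - \epsilon_\theta(z_t, t, c) \right\rVert_2^2\right]$$

where $c$ represents ordinal class conditioning, $z_t$ is the noisy latent at timestep $t$, $\epsilon$ is added noise, and $\epsilon_\theta$ is the predicted noise.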
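As an illustration of the objective described above, the following minimal Python sketch computes the noise-prediction loss for one toy latent. It is not the authors' training code: the `denoiser` callables, the 8-dimensional latent, and the value of `alpha_bar_t` are placeholders chosen for the example, whereas the actual model operates on VAE latents with a U-Net.

```python
import math
import random

def add_noise(z0, eps, alpha_bar_t):
    """Forward diffusion step: z_t = sqrt(a) * z0 + sqrt(1 - a) * eps."""
    a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [a * z + b * e for z, e in zip(z0, eps)]

def diffusion_loss(denoiser, z0, eps, alpha_bar_t, t, c):
    """Mean squared error between the added noise and the predicted noise."""
    z_t = add_noise(z0, eps, alpha_bar_t)
    eps_pred = denoiser(z_t, t, c)  # conditioned on timestep t and class c
    return sum((e - p) ** 2 for e, p in zip(eps, eps_pred)) / len(eps)

rng = random.Random(0)
z0 = [rng.gauss(0.0, 1.0) for _ in range(8)]   # toy clean latent
eps = [rng.gauss(0.0, 1.0) for _ in range(8)]  # noise added to the latent

def zero_denoiser(z_t, t, c):
    """Hypothetical stand-in that always predicts zero noise."""
    return [0.0] * len(z_t)

def oracle_denoiser(z_t, t, c):
    """Hypothetical stand-in that returns the true noise (testing only)."""
    return list(eps)

loss_zero = diffusion_loss(zero_denoiser, z0, eps, alpha_bar_t=0.5, t=500, c=1.5)
loss_oracle = diffusion_loss(oracle_denoiser, z0, eps, alpha_bar_t=0.5, t=500, c=1.5)
```

The loss is zero only when the predicted noise matches the added noise exactly, which is the behavior the fine-tuned U-Net is trained to approximate for every timestep and severity condition.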

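The Basic Ordinal Embedder (BOE) creates learnable embeddings for each integer Mayo Endoscopic Score of 0 to 3 and performs linear interpolation for fractional values:

$$e(y) = \left(1 - (y - \lfloor y \rfloor)\right) E_{\lfloor y \rfloor} + \left(y - \lfloor y \rfloor\right) E_{\lceil y \rceil}$$

where $y$ denotes a class label as $y \in [0, K-1]$, $K$ is the total number of ordinal classes, and $E_{\lfloor y \rfloor}$ and $E_{\lceil y \rceil}$ are learnable embeddings.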
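The interpolation performed by the BOE can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `basic_ordinal_embedding`, the 3-dimensional toy table, and its values are assumptions made for the example, and in the actual model the table rows would be learnable parameters.

```python
import math

def basic_ordinal_embedding(y, table):
    """Linearly interpolate between the embeddings of floor(y) and ceil(y)."""
    k_max = len(table) - 1
    if not 0.0 <= y <= k_max:
        raise ValueError("severity must lie in [0, K - 1]")
    lo = math.floor(y)
    hi = min(lo + 1, k_max)   # stay inside the table at the top class
    alpha = y - lo            # fractional part of the severity
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(table[lo], table[hi])]

# Toy embedding table with one row per integer MES (values are illustrative).
toy_table = [
    [0.0, 0.0, 0.0],  # MES 0
    [1.0, 2.0, 0.0],  # MES 1
    [2.0, 2.0, 4.0],  # MES 2
    [4.0, 6.0, 4.0],  # MES 3
]

e_integer = basic_ordinal_embedding(1.0, toy_table)  # equals the MES 1 row
e_half = basic_ordinal_embedding(1.5, toy_table)     # midpoint of MES 1 and MES 2
```

Integer scores return the stored row unchanged, so the discrete training labels are reproduced exactly, while fractional scores fall on the straight line between neighboring rows.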

The Additive Ordinal Embedder (AOE) explicitly models the cumulative nature of pathological features by initializing embeddings monotonically:

$$E_k = E_0 + \sum_{j=1}^{k} \Delta_j$$

where $E_0$ represents normal mucosa and $\Delta_j$ represents additive pathological features at severity level $j$.

Compared to the standard CLIP text encoder with approximately 63 million parameters, both proposed ordinal embedders require only 3072 parameters and maintain O(1) inference complexity, demonstrating significantly lower computational complexity.
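The additive construction and the parameter count can be illustrated with a short Python sketch. Several details here are assumptions rather than statements from the text: the handling of fractional scores (adding a proportional share of the next increment), the function name, the 2-dimensional toy vectors, and the embedding width of 768, which is inferred from 3072 parameters divided by 4 classes.

```python
def additive_ordinal_embedding(y, base, increments):
    """Base embedding plus all increments up to floor(y), plus a fraction of the next."""
    k_max = len(increments)
    if not 0.0 <= y <= k_max:
        raise ValueError("severity must lie in [0, K - 1]")
    whole = int(y)
    frac = y - whole
    out = list(base)
    for delta in increments[:whole]:       # fully accumulated severity levels
        out = [o + d for o, d in zip(out, delta)]
    if frac > 0.0:                         # assumed treatment of fractional scores
        out = [o + frac * d for o, d in zip(out, increments[whole])]
    return out

base = [0.0, 1.0]                                   # toy "normal mucosa" embedding
increments = [[1.0, 0.0], [1.0, 1.0], [2.0, 0.0]]   # toy additive features, levels 1 to 3

e_mes2 = additive_ordinal_embedding(2.0, base, increments)
e_mes2_5 = additive_ordinal_embedding(2.5, base, increments)

# Parameter count for K classes with one vector each (embed_dim is an assumption).
num_classes, embed_dim = 4, 768
num_params = num_classes * embed_dim
```

Because each level is built on top of the previous one, the embedding of a higher score always contains the contribution of every lower score, which is the cumulative property the AOE is designed to capture.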

3.2. Dataset and Preprocessing

The study used the Labeled Images for Ulcerative Colitis (LIMUC) dataset [34], comprising 11,276 endoscopic images from 564 patients across 1043 colonoscopy procedures, which is the largest publicly available labeled dataset for UC research. Expert gastroenterologists assigned Mayo Endoscopic Scores (MES) using majority voting for discordant cases. The dataset exhibits significant class imbalance, with high-severity classes being scarce: MES 0 (54.14%), MES 1 (27.07%), MES 2 (11.12%), and MES 3 (7.67%).

Following established protocols [28], 992 images containing medical instruments or annotations were excluded to prevent model bias, resulting in 10,284 images. Images were cropped from

to

pixels to remove metadata while preserving the endoscopic view, then resized to

using bicubic interpolation. Patient-level data splitting ensured no leakage between the training (70%), validation (15%), and test (15%) datasets.
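Patient-level splitting can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `patient_level_split`, the toy file names, and the choice to apply the 70/15/15 ratios to the list of patients (rather than to images) are not taken from the source.

```python
import random

def patient_level_split(image_to_patient, fractions=(0.70, 0.15, 0.15), seed=0):
    """Assign whole patients to train/val/test so no patient spans two splits."""
    patients = sorted(set(image_to_patient.values()))
    random.Random(seed).shuffle(patients)
    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    groups = {
        "train": set(patients[:n_train]),
        "val": set(patients[n_train:n_train + n_val]),
        "test": set(patients[n_train + n_val:]),
    }
    return {
        name: sorted(img for img, pid in image_to_patient.items() if pid in members)
        for name, members in groups.items()
    }

# Toy mapping: 20 hypothetical patients with 3 images each.
image_to_patient = {
    f"img_{p:02d}_{i}.png": f"patient_{p:02d}" for p in range(20) for i in range(3)
}
splits = patient_level_split(image_to_patient)
patients_in = {
    name: {image_to_patient[img] for img in imgs} for name, imgs in splits.items()
}
```

Splitting on patient identifiers instead of image identifiers is what prevents near-duplicate frames from the same colonoscopy from appearing in both the training and the test sets.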

3.3. Training Protocol

Training was conducted on an NVIDIA V100 GPU with 16 GB of VRAM, using a batch size of 32. The learning rate was set to for 21,000 optimization steps, corresponding to approximately 672,000 image–conditioning pairs (21,000 steps × 32 samples per batch). Three key stabilization strategies were implemented.

Exponential Moving Average (EMA) was applied with a decay rate $\beta$ to reduce training volatility and improve sample quality [35]:

$$\theta_{\mathrm{EMA}} \leftarrow \beta\,\theta_{\mathrm{EMA}} + (1 - \beta)\,\theta$$

Min-SNR-$\gamma$ weighting was utilized to address gradient conflicts across timesteps and accelerate convergence [36].
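The first two stabilization strategies can be sketched in Python as follows. The numerical values used here (a decay of 0.9 and a threshold of 5.0) are placeholders for illustration and are not the values used in the study; the weight shown is the form commonly used for noise-prediction training, min(SNR, gamma) / SNR, which is assumed rather than confirmed by the text.

```python
def ema_update(ema_params, params, decay):
    """One EMA step: move the averaged weights slightly toward the current weights."""
    return [decay * e + (1.0 - decay) * p for e, p in zip(ema_params, params)]

def snr(alpha_bar_t):
    """Signal-to-noise ratio of the noisy latent at a given cumulative alpha."""
    return alpha_bar_t / (1.0 - alpha_bar_t)

def min_snr_weight(snr_t, gamma):
    """Loss weight for noise prediction: clip the SNR at gamma, then normalize."""
    return min(snr_t, gamma) / snr_t

# Placeholder values, chosen only to make the arithmetic easy to follow.
ema = ema_update([0.0, 1.0], [1.0, 1.0], decay=0.9)
w_low_noise = min_snr_weight(10.0, gamma=5.0)   # high-SNR timestep is down-weighted
w_high_noise = min_snr_weight(2.0, gamma=5.0)   # low-SNR timestep keeps full weight
```

Down-weighting the high-SNR (low-noise) timesteps keeps easy denoising steps from dominating the gradient, while the EMA copy of the weights is the one typically used for sampling.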

Class-Balanced Sampling with inverse class frequencies was implemented to address dataset imbalance. In addition, a 30% synthetic data augmentation strategy was applied to address the severe class imbalance, particularly for MES 3, which comprises only 7.67% of the dataset. Synthetic images were generated using the GAN-based method from [28], which demonstrated improved minority class representation without compromising model performance. Adding synthetic images to an imbalanced dataset can introduce bias or artifacts from the generative model. However, with an elimination method, such as the core set used in [28], it is possible to inject synthetic samples that respect the inter-class distance and intra-class variety. The 30% proportion was empirically determined to maximize training stability while preventing overfitting to synthetic data patterns.
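Inverse-frequency sampling can be sketched as below. The class counts are rounded, illustrative numbers rather than the exact LIMUC counts, and the helper name `per_sample_weights` is hypothetical; the sketch only shows why weighting each image by the inverse of its class count equalizes the chance of drawing each class.

```python
import random
from collections import Counter

def per_sample_weights(labels):
    """Weight every sample by 1 / (number of samples in its class)."""
    counts = Counter(labels)
    return [1.0 / counts[y] for y in labels]

# Illustrative label list with an imbalance similar in shape to the dataset.
labels = [0] * 540 + [1] * 270 + [2] * 110 + [3] * 80
weights = per_sample_weights(labels)

# Probability mass that each class receives under weighted sampling.
total = sum(weights)
class_mass = {
    c: sum(w for w, y in zip(weights, labels) if y == c) / total
    for c in sorted(set(labels))
}

# Draw one class-balanced batch of 32 labels (with replacement).
batch = random.Random(0).choices(labels, weights=weights, k=32)
```

Under this weighting every class receives the same total probability mass, so a rare class such as MES 3 is drawn as often as MES 0 even though it has far fewer images.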

3.4. Evaluation Framework

Four complementary metrics were employed to assess generation quality: Fréchet Inception Distance (FID) [37], CLIP Maximum Mean Discrepancy (CMMD) [38], and precision/recall metrics [39]. This multi-metric approach addresses known limitations of individual metrics and provides comprehensive quality assessment.

Ordinal consistency was assessed using Root Mean Square Error (RMSE), Mean Absolute Error (MAE), classification accuracy, and Quadratic Weighted Kappa (QWK) metrics. Additionally, Uniform Manifold Approximation and Projection (UMAP) [40] analysis compared manifold structures between real and generated datasets using ResNet-18 feature representations.
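For reference, the ordinal-consistency metrics can be computed as in the following self-contained Python sketch. The function names and the toy labels are illustrative; for continuous regression outputs, QWK would additionally require rounding predictions to integer classes, which is an assumption about the evaluation rather than a detail given in the text.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error (never smaller than the MAE)."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def quadratic_weighted_kappa(y_true, y_pred, num_classes):
    """Agreement between integer labels, penalizing errors by squared distance."""
    n = len(y_true)
    observed = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    hist_true = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(num_classes)) for j in range(num_classes)]
    num = den = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            w = (i - j) ** 2 / (num_classes - 1) ** 2
            num += w * observed[i][j]                      # observed disagreement
            den += w * hist_true[i] * hist_pred[j] / n     # chance disagreement
    return 1.0 - num / den

y_true = [0, 1, 2, 3]
y_pred = [0, 1, 2, 1]   # one severe case predicted as mild
toy_mae = mae(y_true, y_pred)
toy_rmse = rmse(y_true, y_pred)
toy_qwk = quadratic_weighted_kappa(y_true, y_pred, num_classes=4)
```

The quadratic weights make a confusion between MES 3 and MES 1 four times as costly as a confusion between adjacent classes, which is why QWK is a natural choice for ordinal severity scores.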

3.5. Experimental Design

Comprehensive comparisons were conducted between the proposed ordinal embedding approaches and a CLIP text embedder baseline under identical experimental conditions. All methods employed the same diffusion architecture, training procedures, and evaluation protocols to ensure fair comparison. Progressive generation evaluation assessed interpolation capabilities using fine-grained MES increments to examine transition smoothness and anatomical consistency preservation.

A guidance scale of 2.0 was used, as it provided the best balance between conditioning strength and natural image appearance. All experiments employed the DDPM scheduler for training and inference, with 50 denoising steps.
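Assuming the guidance scale refers to classifier-free guidance, as is usual for Stable Diffusion pipelines (the text does not state this explicitly), the combination of conditional and unconditional noise predictions can be sketched as follows. The function name and the toy vectors are illustrative.

```python
def guided_noise(eps_uncond, eps_cond, scale):
    """Classifier-free guidance: extrapolate from the unconditional prediction
    toward the conditional one by the given scale."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

eps_uncond = [0.0, 1.0]   # toy prediction without severity conditioning
eps_cond = [1.0, 1.0]     # toy prediction with severity conditioning

eps_scale_1 = guided_noise(eps_uncond, eps_cond, scale=1.0)  # plain conditional output
eps_scale_2 = guided_noise(eps_uncond, eps_cond, scale=2.0)  # conditioning emphasized
```

A scale of 1.0 reproduces the conditional prediction, and larger values push the sample further in the direction indicated by the severity embedding at some cost to naturalness.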

4. Results

4.1. Quantitative Performance Evaluation

The comparative results across embedding strategies with four complementary image quality metrics are presented in Table 1. AOE achieves better performance in terms of CMMD and Recall metrics, indicating better distributional alignment and improved coverage of the data manifold. Although FID scores are marginally higher for the ordinal methods, they perform better according to the CMMD metric. The literature reports that CMMD provides a more reliable assessment of medical content compared to inception-based metrics [41,42]. The class-wise analysis in Figure 3 reveals that the AOE consistently outperforms alternatives across all Mayo Endoscopic Score severity levels, demonstrating robust performance throughout the entire spectrum of the disease.

The observed non-monotonic performance trends across Mayo Endoscopic Score severity classes in Figure 3 further illuminate the relationship between dataset characteristics and generative model behavior. For less severe classes like MES 0 and 1, the model generates images with increased variety while preserving fidelity, resulting in improved FID scores but reduced precision. Conversely, high-severity classes like MES 2 and 3 suffer from limited training diversity due to their clinical rarity. The generated images for these classes exhibit greater diversity than the constrained training distribution, producing higher FID scores and lower precision while improving recall through enhanced coverage of underrepresented pathological manifestations. This pattern demonstrates that generative models can effectively augment rare disease classes by expanding beyond the limited diversity present in clinical datasets.

4.2. Disease Progression Synthesis Results

The framework’s capability to generate clinically meaningful disease progression sequences was evaluated through systematic analysis of synthetic progression trajectories.

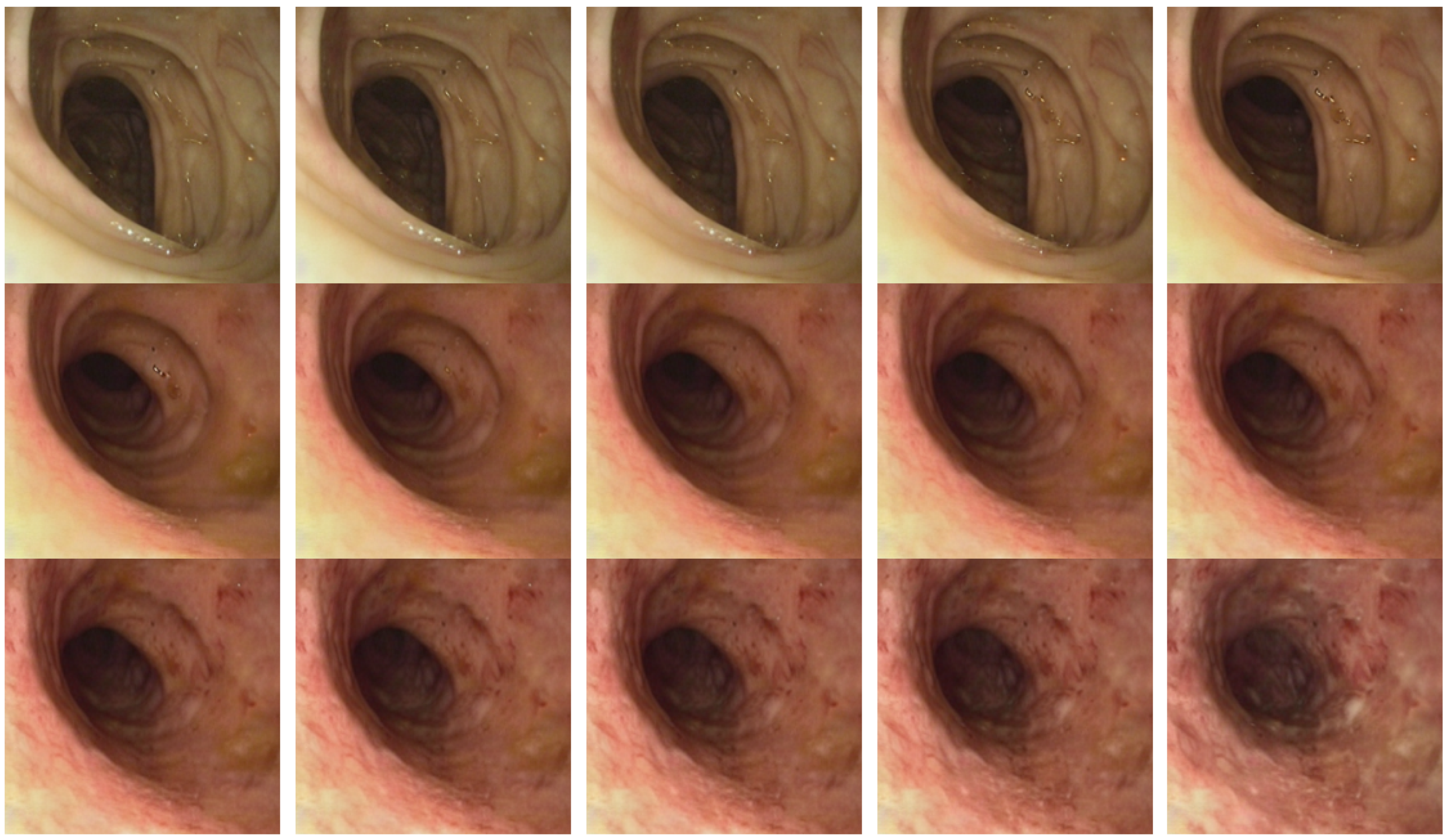

Figure 4 demonstrates smooth transitions from normal mucosa (MES 0) to severe Ulcerative Colitis (MES 3) in increments of 0.20.

The generated sequences exhibit key characteristics essential for clinical validity, such as maintaining anatomical consistency in the progression stages, the gradual introduction of pathological characteristics corresponding to the Mayo Endoscopic Scoring criteria and realistic intermediate stages. Notably, the framework successfully interpolates between discrete training classes to create plausible intermediate disease states typically underrepresented in clinical datasets.

4.3. Validation and Consistency Analysis

Consistency was assessed with a downstream task: a ResNet-18 regression model trained on the LIMUC dataset was used to predict continuous MES values from the generated progression sequences. A total of 1100 progression sequences with 0.1 increments were generated from MES 0.0 to 3.0, giving 31 images per sequence. Due to the stochastic nature of the diffusion model, which occasionally produces suboptimal images, the best performing 80% of sequences were selected for evaluation, resulting in a total of 27,280 synthetic images (880 sequences of 31 images each). This selection process effectively eliminates stochastic outliers while maintaining sufficient data volume for robust evaluation. A detailed analysis of this process is provided in Appendix B. The Oracle baseline represents the upper bound performance achievable using real test data from the LIMUC dataset, comprising 1443 images, and serves as the reference standard for assessing the validity of synthetically generated progression sequences.
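The sequence bookkeeping described above can be checked with a short Python sketch. The ranking criterion is an assumption: the text refers to Appendix B for the selection procedure, so the per-sequence error used here is a randomly generated placeholder, and the helper names are hypothetical.

```python
import random

def mes_levels(step_tenths, max_mes=3):
    """Severity grid from 0.0 to max_mes, with the step given in tenths."""
    return [i / 10 for i in range(0, max_mes * 10 + 1, step_tenths)]

def select_best(sequence_errors, keep_percent=80):
    """Indices of the sequences with the lowest error, keeping keep_percent of them."""
    n_keep = len(sequence_errors) * keep_percent // 100
    ranked = sorted(range(len(sequence_errors)), key=lambda i: sequence_errors[i])
    return ranked[:n_keep]

levels = mes_levels(step_tenths=1)          # 0.0, 0.1, ..., 3.0
coarse_levels = mes_levels(step_tenths=2)   # 0.0, 0.2, ..., 3.0

# Placeholder per-sequence errors for 1100 generated sequences.
rng = random.Random(0)
errors = [rng.random() for _ in range(1100)]
kept = select_best(errors, keep_percent=80)
num_images = len(kept) * len(levels)
```

With 31 severity levels per sequence and 880 retained sequences, the image count matches the 27,280 images reported for the evaluation.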

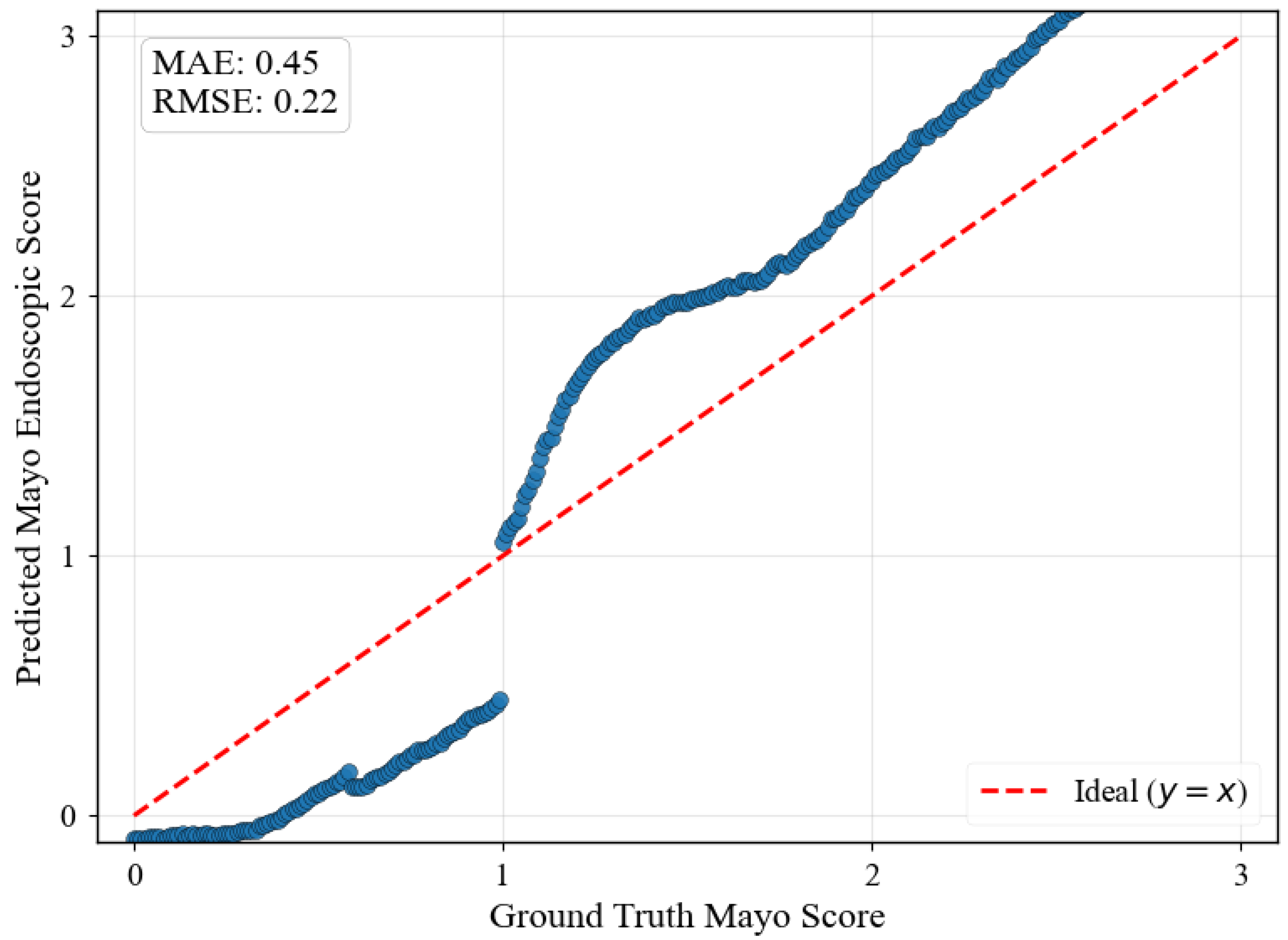

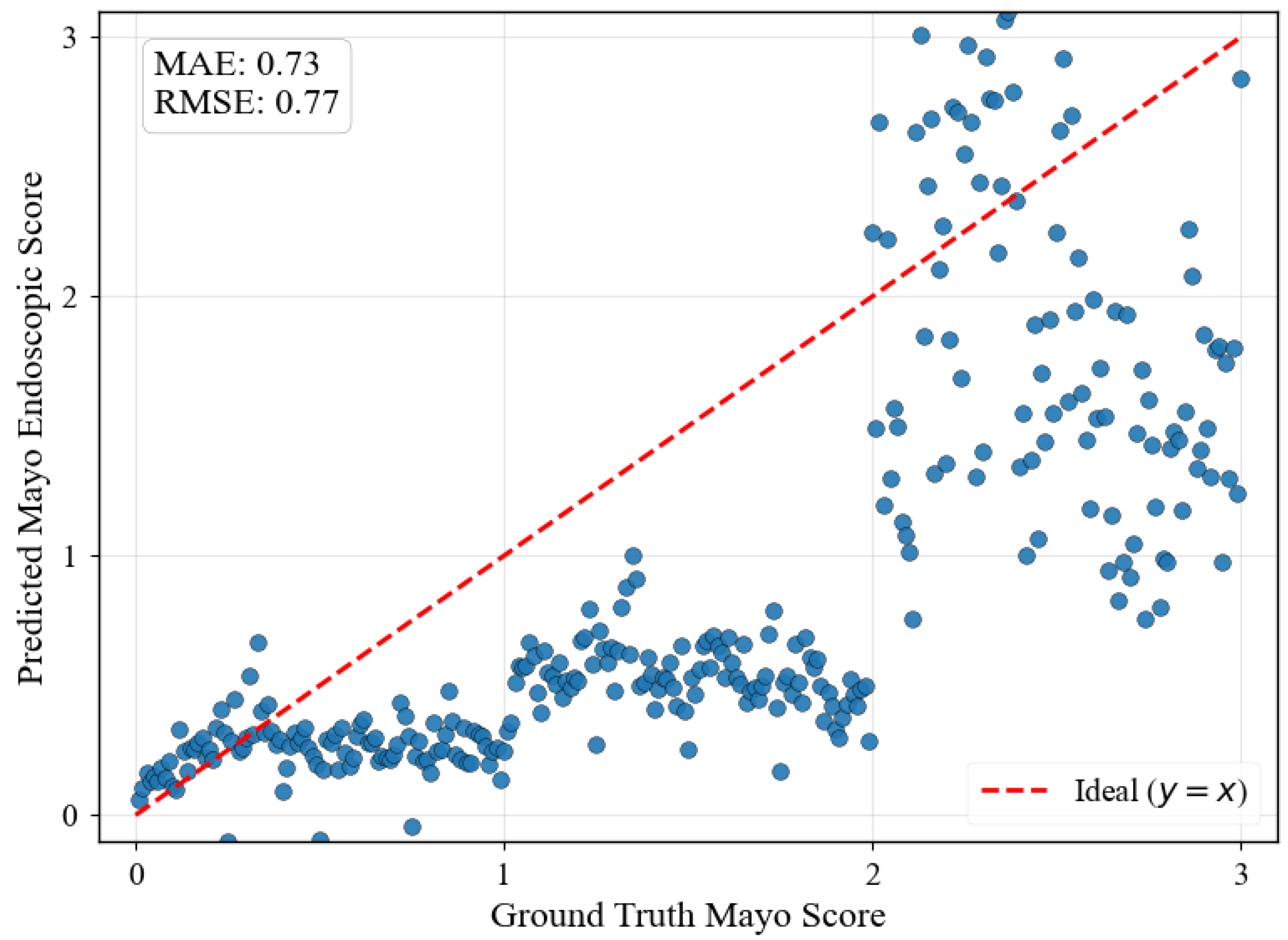

The results shown in Table 2 demonstrate that ordinal embedding approaches achieve ordinal consistency comparable to real data, with Quadratic Weighted Kappa (QWK) scores of 0.8420 and 0.8425 closely matching the test data performance. The CLIP baseline exhibits poor ordinal consistency with QWK of 0.4625, highlighting the critical importance of task-specific embedding approaches for medical severity assessment. More results on various regression models are provided in Appendix A.

Figure 5 illustrates regression predictions for the specific progression sequence shown in Figure 4, demonstrating smooth ordinal relationships maintained across continuous MES values with MAE of 0.22 and RMSE of 0.45.

4.4. Ablation Studies and Design Validation

Comprehensive ablation studies confirm the necessity of ordinality-aware embedding design. The CLIP baseline fails to maintain clinical consistency across MES levels, producing discontinuous progressions with inadequate interpolation capabilities.

UMAP manifold analysis in Figure 6 demonstrates that generated progression sequences occupy relevant regions of the feature space while providing enhanced coverage of the disease progression continuum. The Silhouette Score of 0.0571 indicates substantial manifold overlap between real and synthetic data, where a score approaching 0 signifies that the manifolds overlap significantly. The synthetic data maintain distributional alignment with real clinical data and extend into intermediate stages, effectively addressing class-imbalance problems often encountered in CAD systems by populating underrepresented classes while preserving the real feature space.

Figure 7 illustrates the fundamental limitation of CLIP embeddings for medical progression modeling. The regression predictions show significant scatter across all MES levels and fail to follow the expected diagonal progression pattern. This poor ordinal consistency arises because CLIP’s text-based training objective optimizes for semantic similarity between textual descriptions and visual content rather than capturing numerical relationships or ordinal progressions. When MES values are converted to text strings like “0.0”, “1.0”, “2.0”, and “3.0”, the embedding space interprets these as distinct semantic categories instead of points along a continuous severity spectrum. This prevents meaningful interpolation between severity levels and results in discontinuous progression sequences that are unsuitable for clinical applications requiring smooth disease evolution modeling.

5. Discussion

5.1. Educational Impact and Applications

The proposed framework shows promise for endoscopic training by generating realistic Ulcerative Colitis progression sequences covering the full disease spectrum. The sequences demonstrate strong ordinal consistency, with a Quadratic Weighted Kappa of 0.84, suggesting suitability for integration into computer-aided diagnosis systems requiring accurate severity assessment. By synthesizing the intermediate disease stages, the framework may help in addressing gaps in medical education where examples of disease progression are limited. Additionally, its ability to produce anatomically consistent sequences with gradual pathological changes supports development of automated assessment and disease trajectory estimation tools for UC severity grading. These results indicate potential to enhance training programs and clinical decision making through synthetic data augmentation of underrepresented disease states.

5.2. Technical Contributions and Methodological Advances

The superior performance of ordinal embedding architectures supports the hypothesis that specialized conditioning mechanisms, rather than general-purpose text embeddings, are required for effectively modeling disease progression. The Additive Ordinal Embedder’s design, which models higher severity levels as accumulations of pathological features, aligns with clinical understanding of UC progression and demonstrates measurable improvements on most evaluation metrics.

Ablation studies reveal that CLIP embeddings fail to capture ordinal relationships essential for progression modeling in medical applications. When MES values are converted to text strings, the embedding space interprets these as distinct semantic categories rather than points along a continuous severity spectrum, which prevents meaningful interpolation between severity levels. This categorical interpretation results in poor clinical consistency, demonstrating why domain-specific embedding design is crucial for medical image generation.

The successful adaptation of diffusion models for medical applications through ordinal conditioning represents a methodological advancement with broader implications. Unlike previous approaches, which have treated disease severity as an independent categorical variable, the framework explicitly models progressive pathological evolution, enabling the generation of clinically meaningful intermediate stages that support both educational and research applications. The proposed approach enables the augmentation of underrepresented classes, particularly those corresponding to higher disease severity levels, by generating synthetic data conditioned on healthy samples. These synthetic data can be used to estimate disease progression from the current severity state, allowing simulation of how conditions such as Ulcerative Colitis may worsen or improve over time. Furthermore, our continuous progression framework addresses common issues in previous categorical data generation methods, such as mode collapse and lack of diversity, thereby enhancing the robustness and realism of the generated samples.

5.3. Computer-Aided Diagnosis and Clinical Integration

The computational validation results indicate the potential for integrating synthetic progression data into computer-aided diagnosis systems. Generated sequences maintain ordinal relationships which could enhance the robustness of automated assessment systems by providing additional training examples and improving classification accuracy across all severity levels.

For potential real-time endoscopic applications, synthetic progression sequences could serve as reference standards during colonoscopy procedures. This would enable clinicians to compare observed pathology against expected progression patterns. Furthermore, the framework’s ability to generate fine-grained severity increments offers finer granularity for severity assessment when combined with regression models, potentially supporting more nuanced diagnostic decisions than traditional discrete scoring systems like MES.

UMAP manifold analysis reveals that generated sequences occupy relevant feature space regions while extending coverage to intermediate stages that are underrepresented in clinical datasets. This enhanced coverage is particularly valuable for training robust disease progression models requiring comprehensive pathological spectrum representation, addressing the limitations of datasets that are biased toward discrete MES assessments.

5.4. Limitations and Methodological Considerations

Several limitations warrant consideration despite the framework’s demonstrated advantages. The jump at MES 1 shown in Figure 5 indicates that the dataset does not contain smooth transition images from healthy to mild UC, which may suggest that the framework’s reliance on discrete training classes introduces artificial boundaries that do not fully capture the continuous nature of biological processes.

Furthermore, the current approach concentrates on visual progression modeling, omitting temporal dynamics and patient-specific factors that also influence disease evolution. Addressing these limitations in future studies by incorporating longitudinal data and personalized progression modeling approaches would be beneficial. While current computational validation demonstrates promising results, clinical validation by medical professionals is essential before any practical implementations are undertaken in clinical settings.

6. Conclusions

This study proposes ordinal class-embedding architectures for conditional diffusion models to generate Ulcerative Colitis progression sequences from cross-sectional endoscopic data. By transforming discrete Mayo Endoscopic Score classifications into continuous progression models, the framework enables the synthesis of intermediate disease stages that are typically underrepresented in traditional datasets. The experimental results show that the Additive Ordinal Embedder performs favorably across metrics.

ResNet-18 regression analysis further suggests that generated sequences preserve medically relevant ordinal relationships and outperform general-purpose CLIP embeddings. This capacity to generate smooth transitions between severity levels addresses limitations of classification-based datasets and offers potential tools for endoscopic training and computer-aided diagnosis. The synthesized intermediate stages could augment training datasets, enhance automated assessment systems, and support clinical decision making across the UC severity spectrum. Additionally, generating more data for underrepresented disease classes may help mitigate data imbalance issues, while the progressive nature of the sequences could facilitate disease trajectory forecasting.

Generating synthetic longitudinal datasets opens new avenues for disease trajectory prediction research. These sequences can enable predictive models to forecast disease evolution from current severity assessments, supporting personalized treatment planning and monitoring. Integration with real-time endoscopic systems offers promising clinical translation potential by enhancing quality assessment and providing intra-procedural feedback. Cross-modal applications linking endoscopic progression with histopathology or clinical biomarkers could further deepen disease understanding and individualized care. Future work should explore advanced data augmentation and interpolation methods to address discrete transitions, standardize evaluation protocols, and develop benchmark datasets to accelerate research and adoption.

Despite these advances, clinical validity must be confirmed by experienced gastrointestinal physicians to ensure practical utility and safety. Future research should also focus on preserving anatomical consistency, capturing temporal dynamics for longitudinal modeling, incorporating additional clinical data, and generalizing the framework to other progressive conditions with ordinal staging.

Author Contributions

Conceptualization, Ü.M.Ç. and A.T.; methodology, M.M.K. and Ü.M.Ç.; software, M.M.K.; validation, M.M.K. and Ü.M.Ç.; formal analysis, M.M.K.; investigation, M.M.K.; resources, A.T.; data curation, Ü.M.Ç.; writing—original draft preparation, M.M.K.; writing—review and editing, M.M.K., Ü.M.Ç., and A.T.; visualization, M.M.K.; supervision, A.T. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by ASELSAN Inc.

Institutional Review Board Statement

Ethical review and approval were waived for this study because it utilizes publicly available datasets and does not involve human subjects research requiring ethical approval.

Informed Consent Statement

Not applicable.

Data Availability Statement

The LIMUC (Labeled Images for Ulcerative Colitis) dataset used in this study is publicly available.

Acknowledgments

This work has been supported by Middle East Technical University Scientific Research Projects Coordination Unit under grant number ADEP-704-2024-11486. The experiments reported in this work were fully performed at TUBITAK ULAKBIM, High Performance and Grid Computing Center (TRUBA).

Conflicts of Interest

Author Meryem Mine Kurt was employed by ASELSAN Inc. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AI | artificial intelligence |
| CAD | computer-aided diagnosis |
| CLIP | Contrastive Language-Image Pretraining |
| CMMD | CLIP Maximum Mean Discrepancy |
| EMA | Exponential Moving Average |
| FID | Fréchet Inception Distance |
| GANs | Generative Adversarial Networks |
| LIMUC | Labeled Images for Ulcerative Colitis |
| MAE | mean absolute error |
| MES | Mayo Endoscopic Score |
| MVG | Medical Video Generation |
| QWK | Quadratic Weighted Kappa |
| RMSE | root mean square error |
| UC | Ulcerative Colitis |
| UMAP | Uniform Manifold Approximation and Projection |
| VAE | Variational Autoencoder |

Appendix A

To ensure the robustness and generalizability of our findings regarding ordinal consistency, we conducted comprehensive validation using multiple regression architectures. This appendix presents validation results using InceptionV3, ResNet-50, and Vision Transformer (ViT) regression models, all trained on the LIMUC dataset following identical protocols.

Table A1 presents the InceptionV3 regression validation results. The Additive Ordinal Embedder (AOE) closely approaches the Oracle performance in ordinal consistency. The InceptionV3 architecture’s multi-scale feature extraction capabilities appear particularly well-suited for capturing the visual characteristics relevant to UC severity assessment.

Table A2 shows the ResNet-50 validation results. These results validate that the benefits of ordinal embeddings scale effectively with increased model capacity.

Table A3 presents the Vision Transformer validation results, extending our evaluation to modern attention-based architectures. Although the ViT model shows slightly lower absolute performance than the convolutional networks, the relative ordering between methods remains consistent. The performance decrease observed in ViT may be attributed to the small and imbalanced nature of the LIMUC dataset, as transformer architectures typically require larger datasets for optimal performance.

Table A1. Validation results using InceptionV3 regression model for ordinal consistency assessment comparing Basic Ordinal Embedder (BOE) and Additive Ordinal Embedder (AOE) with CLIP Baseline.

| Dataset | #Images | RMSE | MAE | Accuracy | QWK |
|---|---|---|---|---|---|
| Oracle | 1443 | 0.4447 | 0.3010 | 0.7762 | 0.8647 |
| CLIP | 27,280 | 0.9775 | 0.7637 | 0.3781 | 0.4328 |
| BOE | 27,280 | 0.4593 | 0.3683 | 0.6449 | 0.8451 |
| AOE | 27,280 | 0.4494 | 0.3568 | 0.6594 | 0.8490 |

Table A2. Validation results using ResNet-50 regression model for ordinal consistency assessment.

| Dataset | #Images | RMSE | MAE | Accuracy | QWK |
|---|---|---|---|---|---|
| Oracle | 1443 | 0.4726 | 0.3360 | 0.7505 | 0.8437 |
| CLIP | 27,280 | 0.9698 | 0.7614 | 0.3723 | 0.4474 |
| BOE | 27,280 | 0.5204 | 0.4259 | 0.6164 | 0.8370 |
| AOE | 27,280 | 0.5086 | 0.4116 | 0.6362 | 0.8431 |

Table A3. Validation results using ViT regression model for ordinal consistency assessment.

| Dataset | #Images | RMSE | MAE | Accuracy | QWK |
|---|---|---|---|---|---|
| Oracle | 1443 | 0.5421 | 0.4058 | 0.7159 | 0.8022 |
| CLIP | 27,280 | 1.2682 | 1.0299 | 0.3011 | 0.3124 |
| BOE | 27,280 | 0.5577 | 0.4567 | 0.5848 | 0.8222 |
| AOE | 27,280 | 0.5508 | 0.4547 | 0.5885 | 0.8146 |

Across all four regression architectures (ResNet-18 in the main analysis, plus InceptionV3, ResNet-50, and ViT in this appendix), the results consistently validate the superiority of ordinal embedding strategies over general-purpose CLIP embeddings. This architectural independence demonstrates that the observed benefits are fundamental to the ordinal embedding approach rather than artifacts of specific model designs, providing strong evidence for the robustness of our framework across diverse computer vision architectures commonly used in medical imaging applications.
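The four metrics reported in Tables A1 to A3 can be computed from regression outputs along the following lines. This is a minimal sketch, not the authors' evaluation code: the function names `quadratic_weighted_kappa` and `ordinal_metrics` are hypothetical, and it assumes that Accuracy and QWK are obtained by rounding the continuous prediction to the nearest MES class (0 to 3), which the text does not state explicitly.

```python
import numpy as np


def quadratic_weighted_kappa(y_true, y_pred, n_classes=4):
    """Cohen's kappa with quadratic weights for integer labels in [0, n_classes)."""
    y_true = np.asarray(y_true, dtype=int)
    y_pred = np.asarray(y_pred, dtype=int)

    # Observed confusion matrix (rows: true class, columns: predicted class).
    observed = np.zeros((n_classes, n_classes), dtype=float)
    for t, p in zip(y_true, y_pred):
        observed[t, p] += 1.0

    # Expected counts if true and predicted labels were independent.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / observed.sum()

    # Quadratic disagreement weights: 0 on the diagonal, 1 at maximum distance.
    idx = np.arange(n_classes)
    weights = (idx[:, None] - idx[None, :]) ** 2 / (n_classes - 1) ** 2

    denom = (weights * expected).sum()
    if denom == 0.0:
        # Degenerate case: every label falls in a single class on both sides.
        return 1.0
    return float(1.0 - (weights * observed).sum() / denom)


def ordinal_metrics(targets, predictions, n_classes=4):
    """RMSE and MAE on continuous scores; Accuracy and QWK on rounded classes."""
    targets = np.asarray(targets, dtype=float)
    predictions = np.asarray(predictions, dtype=float)
    err = predictions - targets

    # Assumption: classes come from rounding to the nearest integer score.
    # np.rint rounds exact halves to the nearest even integer.
    true_cls = np.clip(np.rint(targets), 0, n_classes - 1).astype(int)
    pred_cls = np.clip(np.rint(predictions), 0, n_classes - 1).astype(int)

    return {
        "rmse": float(np.sqrt(np.mean(err ** 2))),
        "mae": float(np.mean(np.abs(err))),
        "accuracy": float(np.mean(true_cls == pred_cls)),
        "qwk": quadratic_weighted_kappa(true_cls, pred_cls, n_classes),
    }
```

Because the quadratic weights penalize a prediction two classes away four times as much as one class away, QWK can stay high even when exact-match Accuracy is moderate, which matches the pattern seen for BOE and AOE in the tables.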

Appendix B

This appendix provides a comprehensive analysis of the filtering methodology used to select the top-performing 80% of generated sequences, along with a characterization of failure cases. The ResNet-18 regression model used for sequence evaluation was trained exclusively on the LIMUC training dataset, ensuring no data leakage between synthetic sequence generation and performance assessment. All 1100 generated progression sequences were initially evaluated, with systematic ranking based on multiple regression metrics to identify statistical outliers.

Appendix B.1. Complete Dataset Performance Assessment

Table A4 presents the comprehensive evaluation results for all 1100 generated sequences without filtering, providing transparent reporting of complete dataset performance. Both embedding methods demonstrate substantial ordinal consistency when evaluated across the entire generated dataset, with Quadratic Weighted Kappa values of 0.8079 for BOE and 0.8066 for AOE, indicating strong preservation of disease severity relationships despite the inclusion of failure cases. The complete dataset evaluation reveals RMSE values of 0.5844 and 0.5803 for BOE and AOE, respectively, representing expected performance degradation when statistical outliers are included in the analysis.

Table A4. Ordinal consistency assessment for complete unfiltered dataset of 1100 progression sequences, demonstrating comprehensive performance evaluation including statistical outliers and failure cases.

| Dataset | #Images | RMSE | MAE | Accuracy | QWK |
|---|---|---|---|---|---|
| BOE | 34,100 | 0.5844 | 0.4816 | 0.5605 | 0.8079 |
| AOE | 34,100 | 0.5803 | 0.4742 | 0.5735 | 0.8066 |

Appendix B.2. Performance-Based Filtering Methodology

The composite score metric used for sequence ranking combines model fit quality with prediction accuracy:

$$\text{Score} = R^2 - \frac{\text{RMSE}}{10}$$

This metric prioritizes both correlation strength ($R^2$) and absolute prediction error (RMSE), with the scaling factor of 10 empirically determined to balance the relative contributions of both components. Higher scores indicate better ordinal consistency and anatomical coherence in generated progression sequences. The filtering threshold was established through statistical analysis revealing natural performance clustering, rather than arbitrary selection.
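The ranking and filtering step described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: it assumes the composite score has the form $R^2 - \text{RMSE}/10$, that $R^2$ is the coefficient of determination between the regression model's predictions and the target severity values of one sequence, and that the top 80% of sequences by score are kept. The function names are hypothetical.

```python
import numpy as np


def r_squared(targets, predictions):
    """Coefficient of determination; negative when the fit is worse than the mean."""
    targets = np.asarray(targets, dtype=float)
    predictions = np.asarray(predictions, dtype=float)
    ss_res = np.sum((targets - predictions) ** 2)
    # Assumes targets are not constant (a progression sequence spans several levels).
    ss_tot = np.sum((targets - targets.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def composite_score(targets, predictions, rmse_scale=10.0):
    """Assumed form of the composite score: R^2 minus RMSE divided by the scale."""
    targets = np.asarray(targets, dtype=float)
    predictions = np.asarray(predictions, dtype=float)
    rmse = float(np.sqrt(np.mean((predictions - targets) ** 2)))
    return r_squared(targets, predictions) - rmse / rmse_scale


def split_by_score(scores, keep_fraction=0.8):
    """Return (kept, discarded) sequence indices, ranked by descending score."""
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="stable")
    n_keep = int(round(keep_fraction * len(scores)))
    return order[:n_keep], order[n_keep:]
```

With 1100 sequences and `keep_fraction=0.8`, `split_by_score` keeps 880 indices and discards 220. Subtracting only a tenth of the RMSE means $R^2$ dominates the ranking, while RMSE separates sequences with similar fit quality.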

Appendix B.3. Statistical Characterization of Performance Groups

Table A5 presents a comprehensive statistical comparison between high-quality sequences (80%, n = 880) and failure cases (20%, n = 220) based on the filtered evaluation approach. The failure group exhibits significantly degraded performance across all measures: mean $R^2$ drops from 0.6658 to 0.0085, mean RMSE increases from 0.4996 to 0.8810, and mean QWK decreases from 0.8426 to 0.7147. Notably, the failure group includes sequences with negative $R^2$ values, indicating fundamental model mismatch where generated progressions fail to follow expected Mayo Endoscopic Score trajectories. Comparison between Table A4 and Table A5 demonstrates the impact of quality filtering on evaluation metrics, justifying the methodological approach for robust clinical validation assessment.

Table A5. Statistical comparison between high-quality sequences (80%, n = 880) and failure cases (20%, n = 220) based on ResNet-18 regression performance.

| Metric | Good Set (880): Min | Good Set: Max | Good Set: Mean | Good Set: Std. | Failure Set (220): Min | Failure Set: Max | Failure Set: Mean | Failure Set: Std. |
|---|---|---|---|---|---|---|---|---|
| RMSE | 0.0828 | 0.7369 | 0.4996 | 0.1334 | 0.7430 | 1.6692 | 0.8810 | 0.1306 |
| MAE | 0.0680 | 0.7105 | 0.4374 | 0.1277 | 0.5679 | 1.5638 | 0.7769 | 0.1221 |
| $R^2$ | 0.3212 | 0.9914 | 0.6658 | 0.1624 | −2.4829 | 0.3099 | 0.0085 | 0.3341 |
| QWK | 0.6316 | 0.9832 | 0.8426 | 0.0460 | 0.2530 | 0.8439 | 0.7147 | 0.1076 |
| Score | 0.2475 | 0.9831 | 0.6158 | 0.1756 | −2.6499 | 0.2356 | −0.0796 | 0.3471 |
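As a consistency check on Table A5, the Score row can be related to the RMSE row and to the row that follows MAE, taken here to be $R^2$. Assuming the composite score has the form $\text{Score} = R^2 - \text{RMSE}/10$ (an inferred form, not one quoted from the authors), the mean score of each group equals the same linear combination of the group means, because averaging is linear:

```latex
\begin{align}
\overline{\text{Score}}_{\text{good}}
  &= \overline{R^2}_{\text{good}} - \frac{\overline{\text{RMSE}}_{\text{good}}}{10}
   = 0.6658 - \frac{0.4996}{10} \approx 0.6158, \\
\overline{\text{Score}}_{\text{failure}}
  &= \overline{R^2}_{\text{failure}} - \frac{\overline{\text{RMSE}}_{\text{failure}}}{10}
   = 0.0085 - \frac{0.8810}{10} \approx -0.0796.
\end{align}
```

Both values agree with the tabulated Score means. The same identity does not hold in general for the Min, Max, or Std. columns, since those statistics are not linear in the per-sequence values.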

Appendix B.4. Failure Case Analysis and Clinical Implications

Figure A1 presents representative examples from the failure group, illustrating common failure modes observed in the discarded sequences. The failure cases exhibit several characteristic patterns that justify their exclusion from the primary analysis.

Figure A1.

Sample failure cases from the discarded sequences, demonstrating common failure modes.

Anatomical Inconsistencies: Sequences containing unrealistic tissue textures or morphological features that deviate significantly from endoscopic appearance standards, compromising clinical validity.

Progression Discontinuities: Generated sequences that fail to maintain smooth transitions between severity levels, resulting in abrupt changes inconsistent with biological disease evolution.

Ordinal Relationship Breakdown: Cases where the regression model predictions show significant scatter across Mayo Endoscopic Score levels, indicating loss of severity-based ordering essential for clinical applications.

Artifacts and Other Errors: Synthetic samples where the anatomical morphology and disease severity are consistent but the generative model introduces an artifact or an out-of-focus error.

The systematic nature of these failures reflects inherent limitations of diffusion model stochasticity, where occasional sampling from low-probability regions of the learned distribution produces suboptimal outputs. This phenomenon represents expected behavior rather than a methodological flaw in generative modeling.

Appendix B.5. Score Distribution Analysis

Figure A2 presents the histogram distribution of composite scores across all 1100 generated sequences. The negative score range corresponds to failure cases that demonstrate fundamental breakdown in disease progression modeling. Although the primary aim is to eliminate negatively scored samples, an 80/20 data partitioning strategy was implemented to maintain equivalent sample sizes for the sequences generated with BOE and AOE. The concentration of sequences in the positive score range demonstrates that successful disease progression modeling represents the expected outcome, while negative-scoring sequences represent distinct failure modes requiring exclusion for robust clinical validation assessment.

Figure A2.

Histogram distribution of composite scores where green bars represent high-quality sequences (80%, n = 880) and red bars represent failure cases (20%, n = 220).

Appendix B.6. Clinical Deployment Considerations

The observed failure rate aligns with expected performance characteristics of generative models in medical applications, where quality control mechanisms are essential for clinical deployment. In practical implementation, automated quality assessment metrics similar to those employed in this study would be integrated to filter suboptimal synthetic data before clinical use. The transparent reporting of both filtered and unfiltered performance provides comprehensive evaluation while acknowledging the necessity of quality control in medical image synthesis applications.

References

- Ochiai, K.; Ozawa, T.; Shibata, J.; Ishihara, S.; Tada, T. Current status of artificial intelligence-based computer-assisted diagnosis systems for gastric cancer in endoscopy. Diagnostics 2022, 12, 3153.

- Li, L.; Qiu, J.; Saha, A.; Li, L.; Li, P.; He, M.; Guo, Z.; Yuan, W. Artificial intelligence for biomedical video generation. arXiv 2024, arXiv:2411.07619.

- Sun, T.; He, X.; Li, Z. Digital twin in healthcare: Recent updates and challenges. Digit. Health 2023, 9, 20552076221149651.

- Chen, J.; Shi, Y.; Yi, C.; Du, H.; Kang, J.; Niyato, D. Generative AI-driven human digital twin in IoT-healthcare: A comprehensive survey. IEEE Internet Things J. 2024, 11, 34749–34773.

- Schulam, P.; Arora, R. Disease trajectory maps. In Advances in Neural Information Processing Systems 29, 30th Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–10 December 2016; NeurIPS: San Diego, CA, USA, 2016.

- Lim, B.; van der Schaar, M. Disease-atlas: Navigating disease trajectories using deep learning. In Proceedings of the Machine Learning for Healthcare Conference, PMLR, Palo Alto, CA, USA, 17–18 August 2018; pp. 137–160.

- Bhagwat, N.; Viviano, J.D.; Voineskos, A.N.; Chakravarty, M.M.; Alzheimer’s Disease Neuroimaging Initiative. Modeling and prediction of clinical symptom trajectories in Alzheimer’s disease using longitudinal data. PLoS Comput. Biol. 2018, 14, e1006376.

- Cao, X.; Liang, K.; Liao, K.D.; Gao, T.; Ye, W.; Chen, J.; Ding, Z.; Cao, J.; Rehg, J.M.; Sun, J. Medical video generation for disease progression simulation. arXiv 2024, arXiv:2411.11943.

- Satsangi, J.; Silverberg, M.; Vermeire, S.; Colombel, J. The Montreal classification of inflammatory bowel disease: Controversies, consensus, and implications. Gut 2006, 55, 749–753.

- Fuerstein, J.; Moss, A.C.; Farraye, F. Ulcerative Colitis. Mayo Clin. Proc. 2019, 94, 1357–1373.

- Xie, T.; Zhang, T.; Ding, C.; Dai, X.; Li, Y.; Guo, Z.; Wei, Y.; Gong, J.; Zhu, W.; Li, J. Ulcerative Colitis Endoscopic Index of Severity (UCEIS) versus Mayo Endoscopic Score (MES) in guiding the need for colectomy in patients with acute severe colitis. Gastroenterol. Rep. 2018, 6, 38–44.

- Kellermann, L.; Riis, L.B. A close view on histopathological changes in inflammatory bowel disease, a narrative review. Dig. Med. Res. 2021, 4, 3.

- Jung, E.; Luna, M.; Park, S.H. Conditional GAN with 3D discriminator for MRI generation of Alzheimer’s disease progression. Pattern Recognit. 2023, 133, 109061.

- Ravi, D.; Blumberg, S.B.; Ingala, S.; Barkhof, F.; Alexander, D.C.; Oxtoby, N.P.; Alzheimer’s Disease Neuroimaging Initiative. Degenerative adversarial neuroimage nets for brain scan simulations: Application in ageing and dementia. Med. Image Anal. 2022, 75, 102257.

- Ktena, I.; Wiles, O.; Albuquerque, I.; Rebuffi, S.A.; Tanno, R.; Roy, A.G.; Azizi, S.; Belgrave, D.; Kohli, P.; Cemgil, T.; et al. Generative models improve fairness of medical classifiers under distribution shifts. Nat. Med. 2024, 30, 1166–1173.

- Kazerouni, A.; Aghdam, E.K.; Heidari, M.; Azad, R.; Fayyaz, M.; Hacihaliloglu, I.; Merhof, D. Diffusion models in medical imaging: A comprehensive survey. Med. Image Anal. 2023, 88, 102846.

- Müller-Franzes, G.; Niehues, J.M.; Khader, F.; Arasteh, S.T.; Haarburger, C.; Kuhl, C.; Wang, T.; Han, T.; Nolte, T.; Nebelung, S.; et al. A multimodal comparison of latent denoising diffusion probabilistic models and generative adversarial networks for medical image synthesis. Sci. Rep. 2023, 13, 12098.

- Nie, B.; Zhang, G. Ulcerative Severity Estimation Based on Advanced CNN–Transformer Hybrid Models. Appl. Sci. 2025, 15, 7484.

- Matsuoka, K.; Kobayashi, T.; Ueno, F.; Matsui, T.; Hirai, F.; Inoue, N.; Kato, J.; Kobayashi, K.; Kobayashi, K.; Koganei, K.; et al. Evidence-based clinical practice guidelines for inflammatory bowel disease. J. Gastroenterol. 2018, 53, 305–353.

- Kawamoto, A.; Takenaka, K.; Okamoto, R.; Watanabe, M.; Ohtsuka, K. Systematic review of artificial intelligence-based image diagnosis for inflammatory bowel disease. Dig. Endosc. 2022, 34, 1311–1319.

- Gong, E.J.; Bang, C.S. Edge Artificial Intelligence Device in Real-Time Endoscopy for the Classification of Colonic Neoplasms. Diagnostics 2025, 15, 1478.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2013, arXiv:1312.6114.

- van den Oord, A.; Kalchbrenner, N.; Kavukcuoglu, K. Pixel Recurrent Neural Networks. arXiv 2016, arXiv:1601.06759.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 73–76.

- Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119.

- Dhariwal, P.; Nichol, A. Diffusion models beat gans on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

- Çağlar, Ü.M.; İnci, A.; Hanoğlu, O.; Polat, G.; Temizel, A. Ulcerative Colitis Mayo Endoscopic Scoring Classification with Active Learning and Generative Data Augmentation. In Proceedings of the 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkiye, 5–8 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 462–467.

- Zhang, Y.; Li, L.; Wang, J.; Yang, X.; Zhou, H.; He, J.; Xie, Y.; Jiang, Y.; Sun, W.; Zhang, X.; et al. Texture-preserving diffusion model for CBCT-to-CT synthesis. Med. Image Anal. 2025, 99, 103362.

- Takezaki, S.; Uchida, S. An Ordinal Diffusion Model for Generating Medical Images with Different Severity Levels. In Proceedings of the 2024 IEEE International Symposium on Biomedical Imaging (ISBI), Athens, Greece, 27–30 May 2024; pp. 1–5.

- Li, C.; Liu, H.; Liu, Y.; Feng, B.Y.; Li, W.; Liu, X.; Chen, Z.; Shao, J.; Yuan, Y. Endora: Video generation models as endoscopy simulators. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Marrakesh, Morocco, 6–10 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 230–240.

- Gajendran, M.; Loganathan, P.; Jimenez, G.; Catinella, A.P.; Ng, N.; Umapathy, C.; Ziade, N.; Hashash, J.G. A comprehensive review and update on Ulcerative Colitis. Dis. Mon. 2019, 65, 100851.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Polat, G.; Kani, H.; Ergenc, I.; Alahdab, Y.; Temizel, A.; Atug, O. Labeled Images for Ulcerative Colitis (Limuc) Dataset; Zenodo: Geneva, Switzerland, 2022.

- Karras, T.; Aittala, M.; Lehtinen, J.; Hellsten, J.; Aila, T.; Laine, S. Analyzing and improving the training dynamics of diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 24174–24184.

- Hang, T.; Gu, S.; Li, C.; Bao, J.; Chen, D.; Hu, H.; Geng, X.; Guo, B. Efficient diffusion training via min-snr weighting strategy. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 7441–7451.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30.

- Jayasumana, S.; Ramalingam, S.; Veit, A.; Glasner, D.; Chakrabarti, A.; Kumar, S. Rethinking fid: Towards a better evaluation metric for image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 9307–9315.

- Kynkäänniemi, T.; Karras, T.; Laine, S.; Lehtinen, J.; Aila, T. Improved precision and recall metric for assessing generative models. arXiv 2019, arXiv:1904.06991.

- McInnes, L.; Healy, J.; Melville, J. Umap: Uniform manifold approximation and projection for dimension reduction. arXiv 2018, arXiv:1802.03426.

- Liu, Y.; Yuan, X.; Zhou, Y. EIVS: Unpaired Endoscopy Image Virtual Staining via State Space Generative Model. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisbon, Portugal, 3–6 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 2198–2203.

- Martyniak, S.; Kaleta, J.; Dall’Alba, D.; Naskręt, M.; Płotka, S.; Korzeniowski, P. Simuscope: Realistic endoscopic synthetic dataset generation through surgical simulation and diffusion models. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 4268–4278.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).