1. Introduction

Colorectal cancer remains a significant public health challenge globally due to its high incidence and mortality rates [1]. Early detection and accurate histological classification through colonoscopy are critical for improving patient outcomes and guiding effective treatment strategies [2]. Colonoscopy allows for direct visual assessment and removal of colorectal lesions, significantly reducing the risk of progression to invasive cancer [3].

Despite these advantages, conventional colonoscopic evaluation relies heavily on endoscopists’ subjective judgment and experience, resulting in variability in diagnostic accuracy [4]. Histological confirmation via biopsy, while accurate, introduces additional delays, costs, and procedural complexities [5]. These limitations underscore the need for enhanced, reliable, and immediate diagnostic techniques.

Artificial intelligence (AI), particularly deep learning algorithms, has demonstrated considerable promise in improving diagnostic accuracy across various medical imaging domains, including endoscopy [6,7]. Previous studies utilizing AI for colorectal polyp classification have shown potential for assisting endoscopists in achieving more consistent and precise diagnoses [8]. However, most AI systems require powerful computing resources, typically relying on centralized cloud or high-end computing infrastructures, posing challenges such as latency issues, data privacy concerns, and dependence on continuous internet connectivity [9].

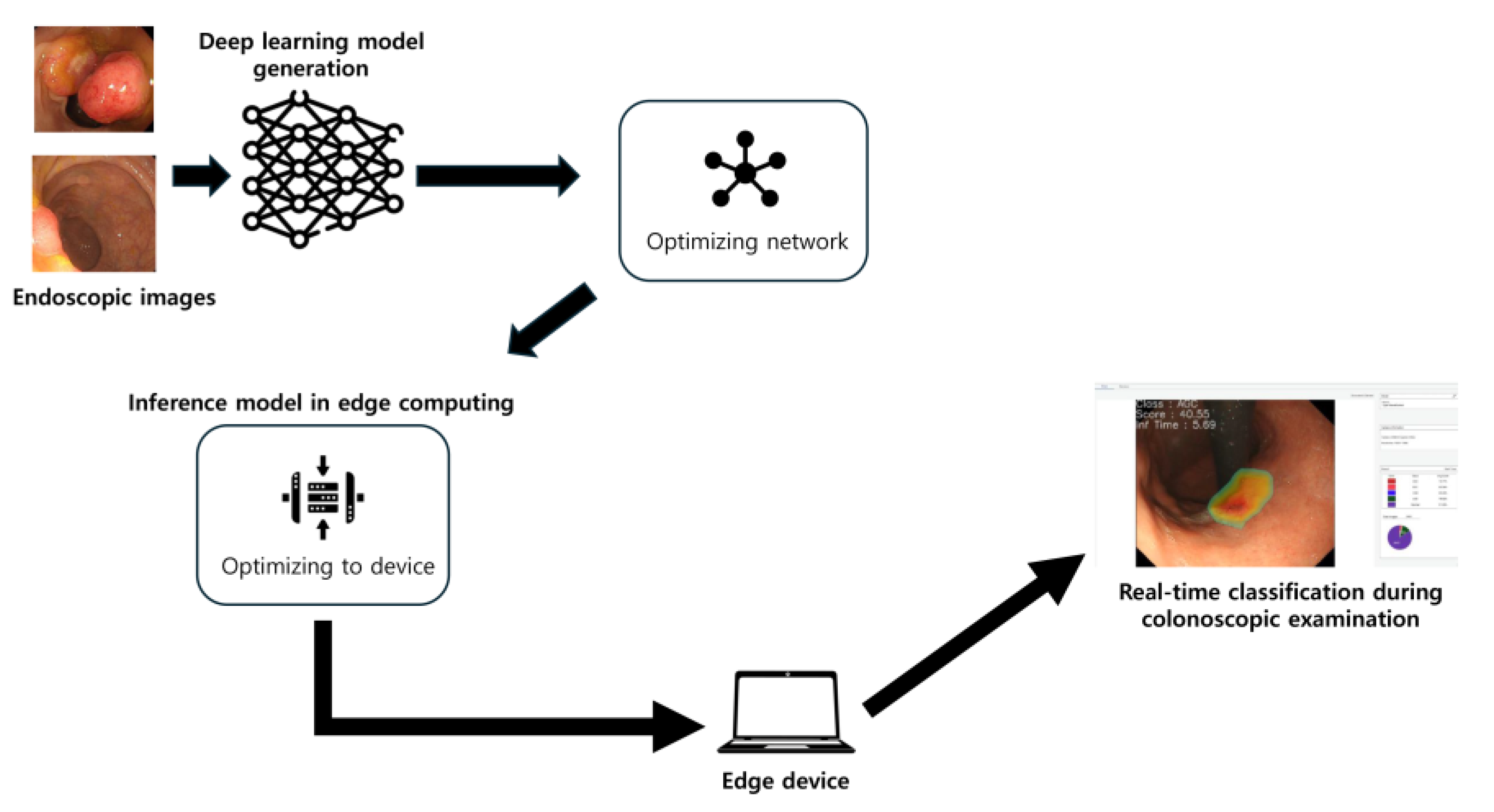

Recent advancements in edge computing technology offer promising solutions to these practical barriers. Edge computing facilitates the execution of AI models locally on medical devices, significantly reducing latency, enhancing data security, and minimizing reliance on internet connectivity. These features make edge computing particularly suitable for real-time clinical decision-making environments, such as endoscopic examinations [10].

This study aims to leverage these advancements to develop and validate a novel optimized deep-learning classification model specifically designed for deployment on an edge computing platform. The primary objective is to automatically and accurately classify colorectal lesions in real time during endoscopy, distinguishing between advanced colorectal cancer, early cancers/high-grade dysplasia, tubular adenomas, and nonneoplastic lesions, as well as differentiating broadly between neoplastic and nonneoplastic lesions. Through the integration of biomimetic principles with technological advancements, this study seeks to demonstrate how nature-inspired frameworks might enhance the diagnostic capabilities of medical AI systems.

In colorectal neoplasia, standardized classification systems provide a framework to stratify lesions by malignant potential. The revised Vienna classification delineates five categories of gastrointestinal epithelial neoplasia: Category 1 (negative for neoplasia/dysplasia), Category 2 (indefinite for neoplasia/dysplasia), Category 3 (mucosal low-grade neoplasia, e.g., tubular adenoma), Category 4 (mucosal high-grade neoplasia, such as high-grade dysplasia or intramucosal carcinoma), and Category 5 (invasive neoplasia with submucosal or deeper invasion). The World Health Organization (WHO) histological classification similarly distinguishes benign colorectal adenomas from malignant adenocarcinomas, using the presence of submucosal invasion as the key criterion for malignancy, consistent with the Vienna system’s division between non-invasive (categories 1–4) and invasive (category 5) lesions [11]. In this study, our AI model was trained to categorize lesions in analogous terms (nonneoplastic, tubular adenoma, early cancer/high-grade dysplasia, and advanced cancer), mirroring these classification schemes and emphasizing the overarching dichotomy between neoplastic and nonneoplastic findings.
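The relationship between the four-class output and the neoplastic/nonneoplastic dichotomy can be illustrated with a minimal Python sketch. The class identifiers, their ordering, the 0.5 cut-off, and the rule of summing the three neoplastic probabilities are all illustrative assumptions; the text does not state how the binary result is derived from the model output.

```python
# Class names follow the four categories described in the text; the
# identifiers, their order, and the 0.5 cut-off are illustrative assumptions.
CLASSES = ("nonneoplastic", "tubular_adenoma", "early_cancer_hgd", "advanced_cancer")
NEOPLASTIC = frozenset(CLASSES[1:])


def summarize(probs):
    """Return (four-class label, binary label, summed neoplastic probability)."""
    if len(probs) != len(CLASSES):
        raise ValueError("expected one probability per class")
    by_class = dict(zip(CLASSES, probs))
    # Four-class decision: the single most probable category.
    label = max(by_class, key=by_class.get)
    # Binary decision: pool the probability mass of all neoplastic categories.
    p_neoplastic = sum(p for name, p in by_class.items() if name in NEOPLASTIC)
    binary = "neoplastic" if p_neoplastic >= 0.5 else "nonneoplastic"
    return label, binary, p_neoplastic
```

Under this assumed rule the two outputs can disagree: for probabilities `[0.4, 0.3, 0.2, 0.1]` the most probable single class is nonneoplastic, yet the pooled neoplastic probability is about 0.6, so the binary call is neoplastic. Taking the binary label directly from the four-class argmax would be an equally plausible alternative.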

We hypothesize that the integration of AI into an edge computing platform will significantly improve real-time diagnostic accuracy, reduce unnecessary biopsies, enhance early colorectal cancer detection rates, and standardize diagnostic practices among endoscopists. Ultimately, this research aims to validate an effective, clinically deployable AI solution that can seamlessly integrate into routine colorectal cancer screening and surveillance programs, improving both clinical efficiency and patient outcomes.

4. Discussion

In this study, we deliberately implemented our colorectal lesion classifier on an accessible edge computing device to assess real-world clinical feasibility. Unlike purely engineering-driven projects, our focus was pragmatic: we wanted to validate that practicing clinicians could use AI during colonoscopy in real time without specialized hardware or cloud support. Edge computing enables data processing at the point of care, avoiding the latency and connectivity issues of remote servers [15]. Indeed, by running locally, our system avoids dependence on internet connections and protects patient data privacy, an important advantage in healthcare settings. The result is an AI tool that fits smoothly into the endoscopy suite. We achieved an inference speed of under 5 milliseconds per image, meaning that the model’s predictions were essentially instantaneous during live endoscopic viewing. Notably, the endoscopists reported no perceptible delay or disruption from the AI device while performing the procedures. This indicates that real-time deployment is not only technically possible but also clinically practical using modest, readily available hardware. The team’s experience suggests that a clinician-led approach to integrating AI, one that emphasizes usability over raw engineering optimizations, can successfully bring cutting-edge models to the bedside. We maintain an optimistic outlook that AI can be seamlessly embedded into colonoscopy workflows here and now, acting as a real-time assistant without hindering the procedure.
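A per-image latency figure such as the one quoted above could be measured along the following lines. This is a hedged sketch, not the procedure used in the study: `infer` is a hypothetical callable standing in for the deployed model, and the 30 frames-per-second video rate used for the frame budget is an assumption.

```python
import statistics
import time


def median_latency_ms(infer, frame, warmup=10, runs=100):
    """Median wall-clock time of infer(frame), in milliseconds.

    `infer` is a hypothetical stand-in for the deployed model. If inference
    runs asynchronously on an accelerator, `infer` must block until the
    result is ready, otherwise the timing is meaningless.
    """
    for _ in range(warmup):  # discard start-up effects such as caching
        infer(frame)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def fits_frame_budget(latency_ms, fps=30.0):
    """True if one inference finishes within one video frame interval."""
    return latency_ms < 1000.0 / fps
```

At an assumed 30 frames per second the interval between frames is roughly 33 ms, so a 5 ms inference would leave most of each frame interval free for capture, preprocessing, and display.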

Our deep learning model demonstrated strong classification performance on both internal-test and external-test data. The accuracy achieved is on par with prior colonoscopy AI systems that were developed on more powerful computing platforms [

14]. This high performance was maintained despite using a compact edge device, underscoring that excellent accuracy is attainable without cloud-scale resources. In terms of speed, the model’s ultra-fast inference (on the order of only a few milliseconds) is a key strength. Such speed ensures truly real-time operation—a critical requirement for AI assistance during live colonoscopies—with no noticeable lag in producing results [

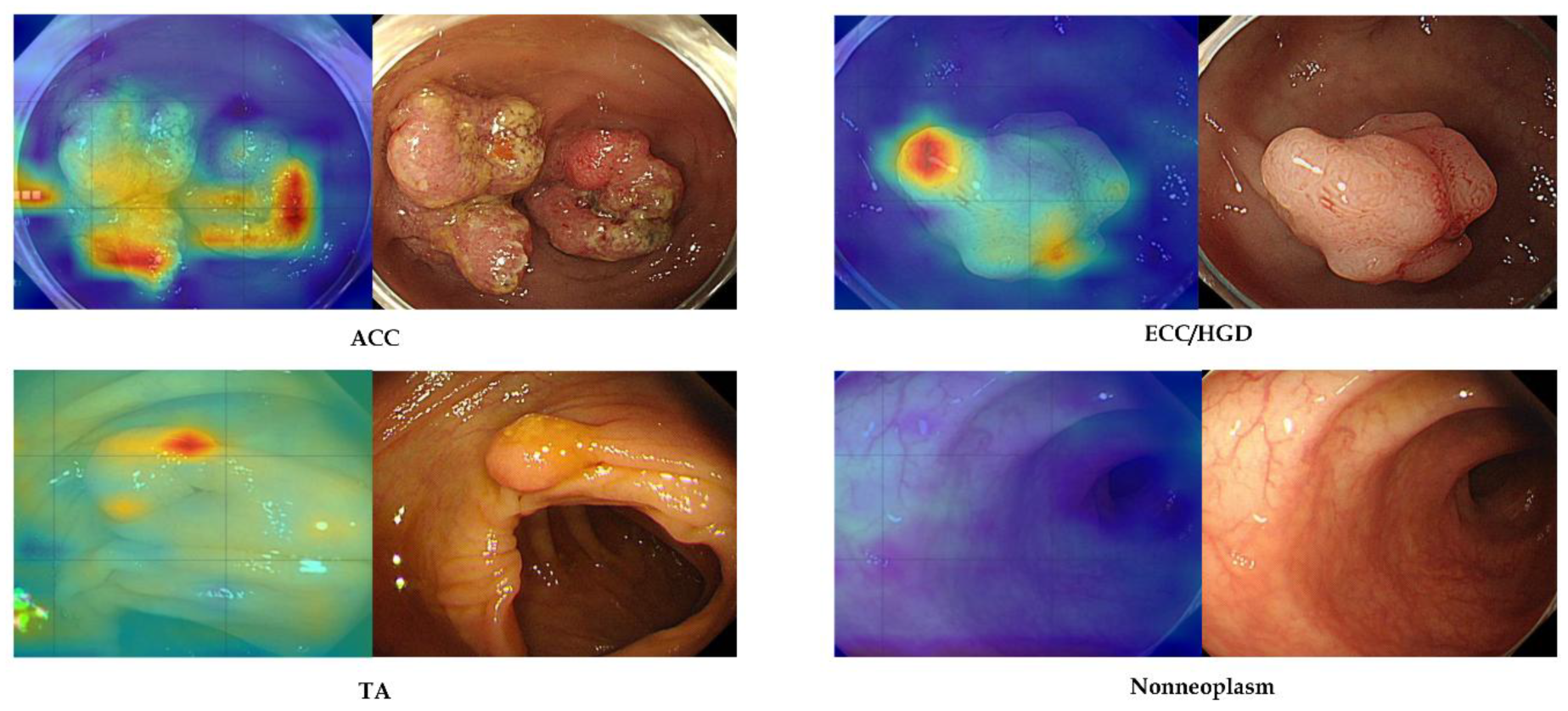

10]. Equally important is the model’s explainability. We incorporated Grad-CAM visual attention maps to accompany each prediction, highlighting the image regions most influential to the model’s decision [

16]. In practice, these heatmaps tended to coincide with the same areas that an experienced endoscopist would examine when evaluating a lesion. This alignment provides an intuitive validation of the AI’s reasoning and can help build end-user trust. Explainable AI outputs make it easier for clinicians to understand and verify the model’s suggestions, addressing the “black box” concern often cited with deep learning. By offering a visual rationale for its classifications, the system functions not just as a predictive tool but also as a teaching aid or second pair of eyes, focusing attention on key mucosal features. Early adoption studies in endoscopy emphasize that such transparency is vital for clinician acceptance of AI. Another notable strength of our model is its generalizability. We validated the classifier on external datasets from different sources, and it retained excellent performance across these independent test sets. In other words, the AI did not overly “memorize” quirks of the development dataset, but rather learned features of colorectal lesions that are broadly applicable. This robustness against dataset shifts is encouraging, given that many AI models trained on single-center data experience accuracy degradation when applied to new hospitals or imaging conditions. Our results suggest that the model captures fundamental visual patterns of neoplasia that generalize well, which is essential for any system intended for wide clinical use. Taken together, the combination of high accuracy, real-time speed, explainable output, and cross-site generalizability positions our edge-deployed model as a compelling tool for AI-assisted colonoscopy.
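For readers unfamiliar with how the Grad-CAM maps discussed above are formed, the core computation can be sketched in a few lines. This is a generic illustration of the published Grad-CAM recipe using plain Python lists, not the code used in this study; obtaining the layer activations and gradients from a trained network is assumed to have happened elsewhere.

```python
def grad_cam(activations, gradients):
    """Grad-CAM map from one convolutional layer.

    activations, gradients: K channel maps, each H rows of W floats.
    gradients holds d(class score) / d(activation) for the class of interest.
    Returns an H x W map scaled to [0, 1].
    """
    k = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight = spatial mean of that channel's gradient.
    weights = [sum(map(sum, g)) / (h * w) for g in gradients]
    # Weighted sum over channels, then ReLU to keep positive evidence only.
    cam = [
        [max(0.0, sum(weights[c] * activations[c][i][j] for c in range(k)))
         for j in range(w)]
        for i in range(h)
    ]
    peak = max(map(max, cam))
    if peak == 0.0:
        return cam  # no positive evidence anywhere in the map
    return [[v / peak for v in row] for row in cam]
```

In a real pipeline the resulting low-resolution map would then be upsampled to the frame size and blended over the endoscopic image as a heatmap.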

In resource-constrained settings, model compression techniques can substantially reduce the computational and memory burden of deep learning models. Pruning, which removes low-importance weights, and quantization, which stores weights at lower numerical precision, are particularly promising. Applying such strategies to our colorectal lesion classifier could enable deployment on lower-cost, less powerful edge hardware without a significant sacrifice in performance. These optimizations would be expected to maintain near-original accuracy and real-time inference speeds, even on hardware lacking a high-end GPU. In short, model compression could broaden the accessibility of our AI system, enabling it to run effectively in lower-resource environments [17,18].
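The two compression ideas named above can be illustrated on a flat list of weights. The sketch below shows global magnitude pruning and symmetric per-tensor 8-bit quantization, which are common textbook variants chosen here for illustration; the weights are made up, and nothing here reflects a compression scheme actually applied to the model in this study.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    n_drop = int(len(weights) * sparsity)
    if n_drop == 0:
        return list(weights)
    # Indices of the n_drop weights with the smallest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_drop])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    peak = max(abs(w) for w in weights)
    scale = peak / 127.0 if peak > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map stored integers back to approximate floating-point weights."""
    return [v * scale for v in q]
```

Pruning reduces the number of non-zero weights, while quantization shrinks each stored weight from 32 bits to 8; the rounding error per weight is at most half of `scale`. Whether accuracy is preserved after either step has to be verified empirically on the target model.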

To our knowledge, this study is among the first to successfully implement real-time colorectal lesion classification on an edge device in a clinical context. Most prior research in endoscopic AI involved algorithms running on standard workstations or cloud servers, rather than on portable hardware at the bedside. Recently, our group and others have started to explore edge AI in endoscopy. Our work extends this paradigm to colonoscopy, showing that even complex tasks like multi-category colorectal lesion classification can be handled locally in real time.

On the commercial front, an AI-assisted polyp detection device has already received FDA approval and is in clinical use, providing real-time computer vision support during colonoscopies. That system, and others like it, have shown that AI can increase polyp detection rates in practice; for example, studies report significant improvements in adenoma detection rates when using real-time AI as a “second observer” during colonoscopy [19]. Our study differs by targeting lesion classification (CADx) rather than detection (CADe). While CADe systems draw attention to polyps, they do not determine lesion histology or malignancy risk on the fly. There is a parallel line of research on AI-based polyp characterization. Pioneering studies by Mori et al. and others have shown that deep learning can differentiate adenomatous from hyperplastic polyps in real time using advanced imaging, approaching the performance needed for an optical biopsy strategy [6]. In fact, when clinicians use AI assistance for polyp characterization, some prospective studies have achieved >90% negative predictive value for ruling out adenomas in diminutive polyps, a threshold recommended for leaving such polyps in place [20,21]. These efforts underscore that AI has the potential not only to find lesions but also to guide management decisions during procedures.

Our classifier contributes to this growing field of CADx by providing highly accurate lesion diagnoses instantaneously, which could aid endoscopists in making on-the-spot decisions about biopsies or resections. Moreover, unlike most prior CADx studies, we emphasize deployment on a cost-effective edge platform. Other researchers have experimented with lightweight models for classifying endoscopic images on embedded hardware or with fast object detectors like YOLO to flag polyps at high frame rates [22]. These reports demonstrated the technical possibility of real-time endoscopy AI. Our work advances beyond technical feasibility, offering a thorough validation of an edge AI system in a clinical-like setting with clinicians in the loop. In summary, compared to prior studies, we combine several desirable attributes (real-time operation, on-device processing, high-accuracy classification, and explainability) into one integrated system. This represents a step toward making AI a practical, everyday companion in endoscopic practice, building on the successes of earlier detection-focused systems and moving into the realm of comprehensive diagnostic support.
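The negative predictive value (NPV) criterion mentioned above is a simple ratio of counts, shown here as a short sketch. The function names and the default threshold argument are illustrative, and any counts passed in would be hypothetical rather than results from this or any cited study.

```python
def negative_predictive_value(true_negatives, false_negatives):
    """NPV = TN / (TN + FN): the share of 'not adenoma' calls that are correct."""
    total = true_negatives + false_negatives
    if total == 0:
        raise ValueError("no negative predictions to evaluate")
    return true_negatives / total


def meets_npv_threshold(true_negatives, false_negatives, threshold=0.90):
    """True if the NPV reaches the 90% level cited in the text."""
    return negative_predictive_value(true_negatives, false_negatives) >= threshold
```

For instance, if 100 diminutive polyps were called non-adenomatous and 95 of those calls were confirmed by histology, the NPV would be 0.95. Because NPV depends on how common adenomas are in the examined population, the same classifier can yield different values in different cohorts.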

We acknowledge several limitations of our study, which also point to directions for future research. First, the training and internal-test data were relatively limited in size and originated from a single center. Although we did include an external test to examine generalizability, the model might still be biased by the specific imaging systems and patient population at our institution. AI models in endoscopy are known to suffer when confronted with data that differ from their training set (for example, variations in endoscope brand, bowel preparation quality, or lesion prevalence). A larger, multi-center dataset would bolster confidence that the model generalizes to diverse settings.

Second, our evaluation was performed on retrospectively collected images, rather than in a prospective live clinical trial. While we simulated real-time use and even recorded demonstration videos, we did not formally measure the model’s impact on live procedure outcomes. This means we have yet to prove that using the AI assistant during actual colonoscopies will measurably improve performance metrics like adenoma detection rate or accuracy of optical diagnoses. Notably, the field of AI polyp characterization still lacks randomized trials to confirm the outcome benefits. Thus, a logical next step is a prospective study where endoscopists perform colonoscopies with the AI device in situ, to assess improvements in polyp detection, characterization accuracy, procedure time, and other clinically relevant endpoints.

Third, although we used an edge computing device, it was a fairly high-performance unit with GPU acceleration. Less well-resourced clinics might not have the exact device we used. The model’s current form relies on that hardware to achieve its sub-5 ms inference time; deploying it on a significantly weaker processor could affect performance. This hardware dependency is a concern for widespread adoption. Fortunately, there are engineering strategies (model pruning, quantization, distillation, etc.) that could reduce the computational load of the model. Future work will involve optimizing the model so that even lower-cost or older devices can run it effectively, widening the accessibility of this technology.

Fourth, our current explainability analysis (Grad-CAM heatmaps) is qualitative and post hoc. Incorporating formal interpretability metrics would provide a more rigorous evaluation of our model’s attention, as there is a recognized need for quantitative explainability assessment in AI research. In future work, we plan to validate the alignment between the model’s focus and the clinically relevant lesion regions using quantitative metrics such as intersection over union (IoU), pointing game accuracy (hit rate), or deletion/insertion curves.

Fifth, while the current study focused on technical validation using retrospective image datasets, the ultimate value of such a system lies in its capacity to improve clinical outcomes. Specifically, by providing real-time histologic predictions, our model could play a key role in reducing unnecessary biopsies, improving adenoma detection and characterization, or supporting decision-making in a low-resource setting. Our future work will include a prospective study to evaluate how the use of this AI system influences key procedural metrics such as adenoma detection rate, biopsy rates, resection decisions, and diagnostic accuracy during live colonoscopy.

Finally, we should consider the user interface and workflow integration aspects. In our experiments, the Grad-CAM heatmaps were displayed on the image. There is a possibility that a continuously updating heatmap could distract or obscure the endoscopist’s view if not designed carefully. We plan to refine how the AI feedback is presented (for example, by using subtle bounding boxes or translucent overlays, or by triggering the display only when the model is highly confident) to ensure the AI advice remains helpful and not intrusive. We are also interested in incorporating a form of online learning or periodic retraining so the system can improve as it encounters more data in clinical use.

In summary, none of these limitations undermines the core findings of our study, but they highlight important avenues to make the system even more robust and useful. Ongoing and future efforts will focus on (1) multi-center validation, testing the model on colonoscopy data from multiple hospitals and endoscope manufacturers; (2) prospective clinical trials to quantify the real-world impact on lesion detection rates, pathology concordance, and patient outcomes; (3) model and hardware optimization to maintain real-time performance on a wider range of edge devices, potentially even without a dedicated GPU; and (4) enhancing explainability and usability by improving the visualization of AI results and enabling the model to continually learn from new cases. By addressing these points, we aim to transition our system from a promising prototype to a clinically hardened tool.
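Two of the quantitative interpretability metrics named among the limitations above, intersection over union and the pointing game, can be sketched as follows. The heatmap is assumed to be scaled to [0, 1], the lesion mask is assumed to come from an expert annotation, and the 0.5 binarization threshold is an arbitrary illustrative choice.

```python
def heatmap_iou(heatmap, mask, threshold=0.5):
    """IoU between the thresholded heatmap and a binary lesion mask.

    heatmap: H x W values in [0, 1]; mask: H x W values of 0 or 1.
    The 0.5 threshold is an illustrative assumption.
    """
    inter = union = 0
    for h_row, m_row in zip(heatmap, mask):
        for h, m in zip(h_row, m_row):
            hot, lesion = h >= threshold, bool(m)
            inter += hot and lesion   # pixel is both highlighted and lesion
            union += hot or lesion    # pixel is highlighted or lesion
    return inter / union if union else 0.0


def pointing_game_hit(heatmap, mask):
    """True if the heatmap's maximum falls inside the lesion mask."""
    _, i, j = max(
        (v, i, j) for i, row in enumerate(heatmap) for j, v in enumerate(row)
    )
    return bool(mask[i][j])
```

Averaging `pointing_game_hit` over a test set gives the hit rate, and averaging `heatmap_iou` gives a mean overlap score. Deletion and insertion curves additionally require re-running the model on progressively masked images and are not covered by this sketch.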

In conclusion, this study successfully demonstrates the feasibility of a deep learning-powered colonoscopy image classification system designed for the rapid, real-time histologic categorization of colorectal lesions on edge computing platforms.