Deep Learning-Based Mpox Skin Lesion Detection and Real-Time Monitoring in a Smart Healthcare System

Abstract

1. Introduction

- We developed ITMA’INN, an AI-powered healthcare system for the early detection and monitoring of Mpox from skin lesion images. The system provides efficient, accurate, and fast diagnosis, supporting healthcare services and helping to limit disease transmission.

- The system supports both binary classification (Mpox vs. non-Mpox) and multiclass classification (Mpox, Chickenpox, Measles, Cowpox, hand-foot-mouth disease (HFMD), and Healthy). Both tasks use fine-tuned pretrained deep learning models: ViT, TNT, Swin Transformer, MobileViT, ViT Hybrid, ResNetViT, VGG16, ResNet-50, and EfficientNet-B0.

- We developed and implemented a user-friendly and cross-platform mobile application that enables users to upload skin lesion images for real-time AI-based diagnosis, track symptoms, receive guidance on the nearest healthcare centers, and access relevant and up-to-date disease information.

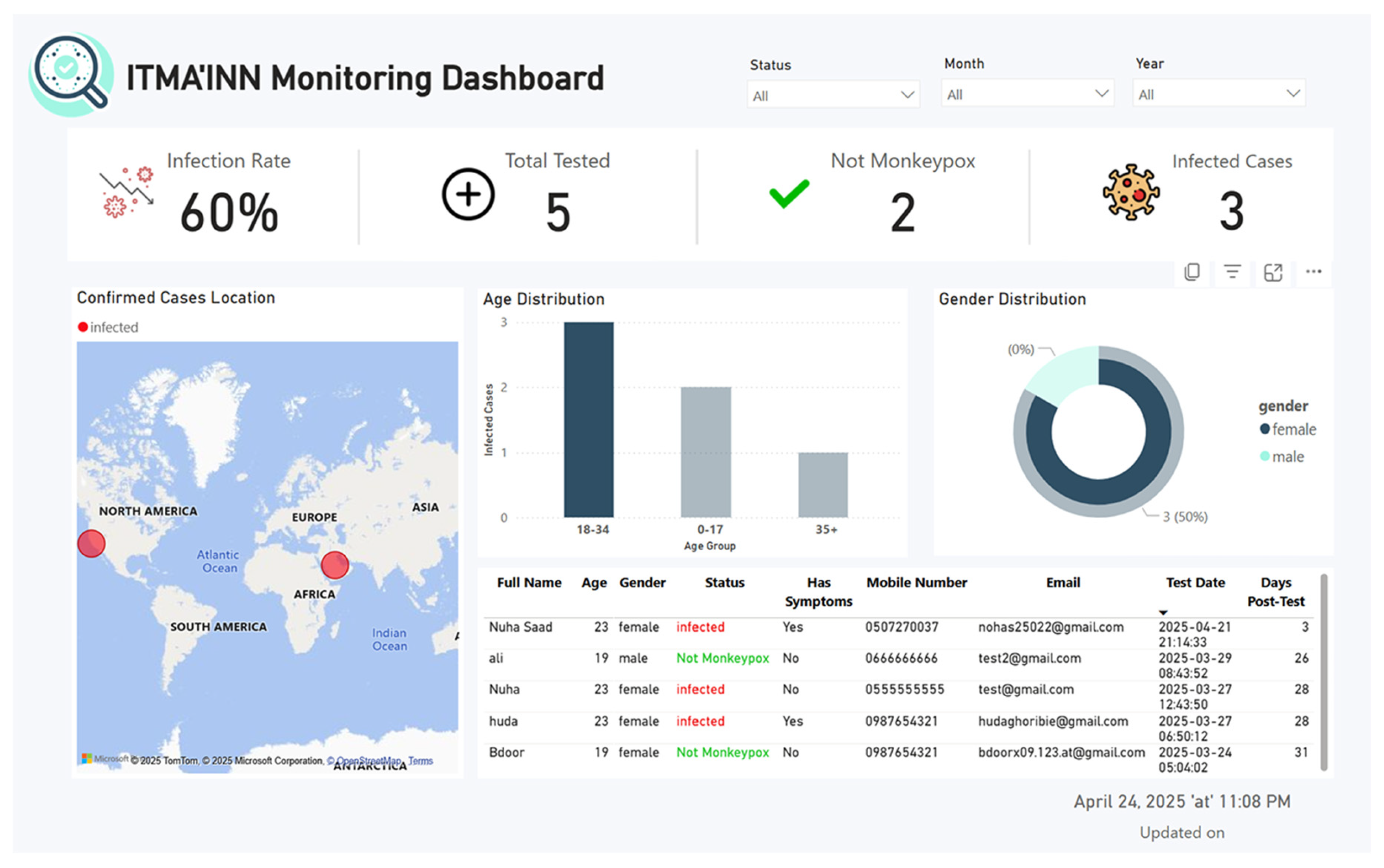

- We created a real-time monitoring dashboard to support healthcare authorities by visualizing case distribution, tracking patients’ trends and patterns, and facilitating data-driven decision-making in response to potential outbreaks.

2. Related Works

3. Methodology

3.1. Datasets

3.1.1. Binary Classification Dataset (MSLD)

3.1.2. Multiclass Classification Dataset (MSLD v2.0)

3.1.3. Multiclass Classification Dataset II: MSID

3.2. ITMA’INN System Overview and General Framework

- Patient interactions with the mobile application: Users can sign in as registered users or continue as guests. A patient can upload an image of a suspected skin lesion for Mpox detection and can optionally enter symptoms for analysis.

- Data Transmission and Preprocessing: Uploaded data is transmitted securely to the backend. There, the images are preprocessed to enhance quality and to match the input requirements of the DL model.

- DL-Based Image Classification: The preprocessed image is fed into a fine-tuned DL model based on a pretrained vision transformer or CNN. The model predicts the likelihood of Mpox in the binary task, or the most likely disease class in the multiclass task.

- Diagnosis and User Feedback: The model’s prediction and its confidence score are displayed on the application screen. Patients also receive recommendations and educational content.

- Health Authority Dashboard: A real-time dashboard, synchronized with the app database, allows public health authorities to monitor Mpox case trends, track geographic spread, and support timely interventions.

- System Administration: Backend operations, including performance monitoring, user management, and system maintenance, are handled by the system administrator.
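The prediction step in this flow can be sketched as follows: raw model output is turned into the label and confidence score shown to the user. This is a minimal illustration under stated assumptions, not the ITMA’INN backend. It assumes the binary model emits one logit passed through a sigmoid and the multiclass model emits six logits passed through a softmax, matching the activations listed in Section 3.3.2. The class ordering and the function names `build_prediction`, `sigmoid`, and `softmax` are hypothetical.

```python
from __future__ import annotations

import math
from typing import Sequence

# Hypothetical label orderings; the real model's output order may differ.
BINARY_CLASSES = ("Non-Mpox", "Mpox")
MULTICLASS_CLASSES = ("Mpox", "Chickenpox", "Measles", "Cowpox", "HFMD", "Healthy")


def sigmoid(x: float) -> float:
    """Logistic function applied to the single binary logit."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(logits: Sequence[float]) -> list[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_prediction(logits: Sequence[float]) -> dict:
    """Map raw model outputs to the label/confidence pair shown in the app.

    One logit -> binary task (sigmoid); six logits -> multiclass task (softmax).
    """
    if len(logits) == 1:
        p_mpox = sigmoid(logits[0])
        idx = 1 if p_mpox >= 0.5 else 0
        confidence = p_mpox if idx == 1 else 1.0 - p_mpox
        return {"label": BINARY_CLASSES[idx], "confidence": round(confidence, 4)}
    if len(logits) == len(MULTICLASS_CLASSES):
        probs = softmax(logits)
        idx = max(range(len(probs)), key=probs.__getitem__)
        return {"label": MULTICLASS_CLASSES[idx], "confidence": round(probs[idx], 4)}
    raise ValueError(f"unexpected number of logits: {len(logits)}")


print(build_prediction([2.0]))
print(build_prediction([3.0, 1.0, 0.5, 0.2, 0.1, 0.0]))
```

For the binary task, the confidence reported for "Non-Mpox" is the complement of the sigmoid output. The displayed score therefore always refers to the predicted label.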

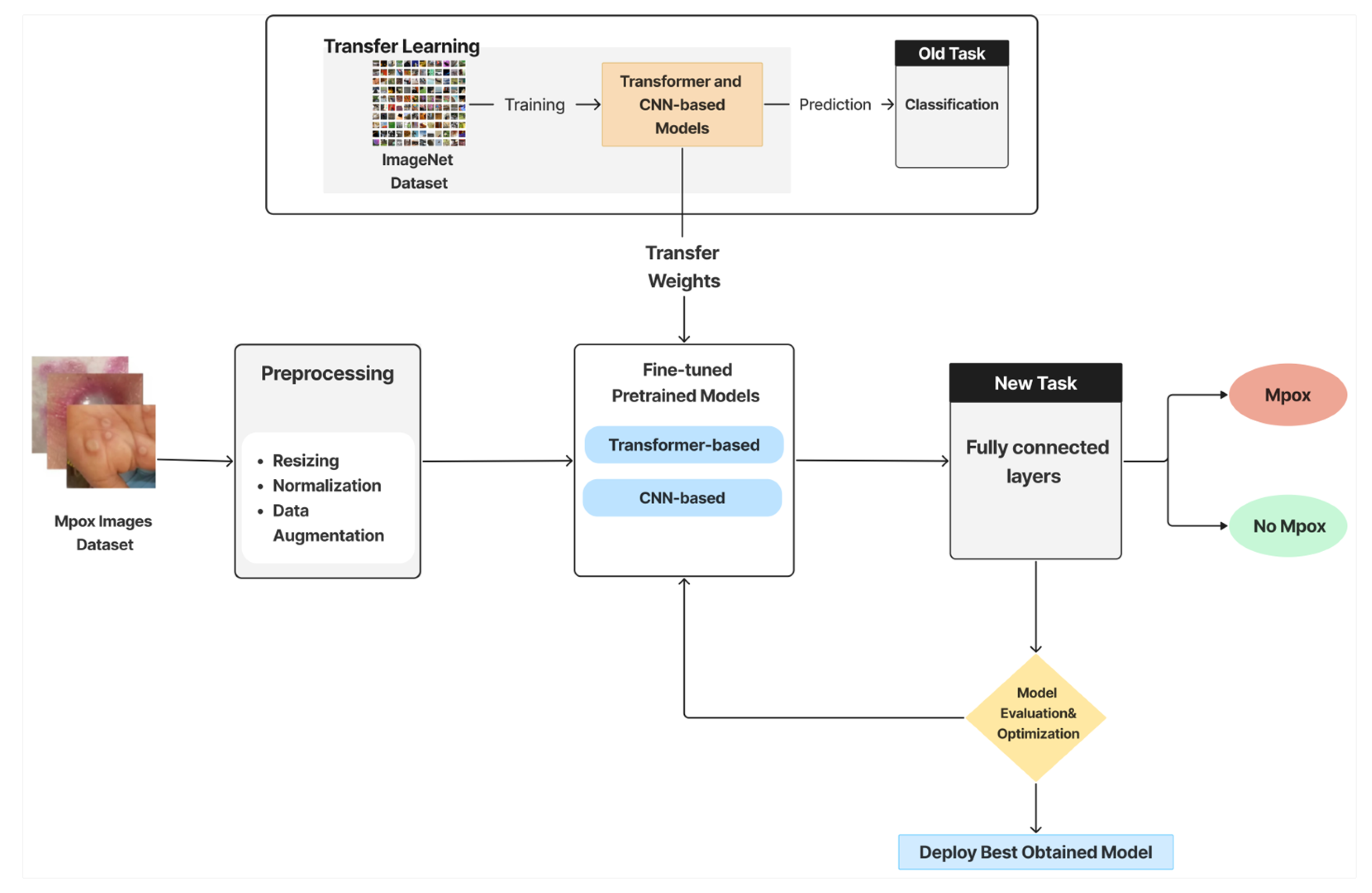

3.3. AI-Model Pipeline

- Image Preprocessing and Augmentation: This initial stage has two steps. First, preprocessing ensures model compatibility through image enhancement, resizing (to match the model’s input dimensions), normalization, and optional cropping. Second, data augmentation increases the volume and diversity of the training data.

- Feature Extraction via Pretrained Models and Transfer Learning: Pretrained DL models automatically extract relevant features from the images. These models were originally trained on large-scale datasets such as ImageNet, so reusing them saves considerable training time and computational resources. The models are then adapted to the Mpox detection task by fine-tuning selected layers (transfer learning).

- Classification: The extracted features are passed to fully connected layers that predict the image’s class. In binary classification, the image is assigned to one of two classes (Mpox or Not Mpox). In multiclass classification, it is assigned to one of six categories (Mpox, Chickenpox, Measles, Cowpox, HFMD, or Healthy).

- Evaluation and Optimization: Models are evaluated on validation data using standard metrics, and their hyperparameters are tuned to improve performance and generalization. Experimental settings and metric definitions are detailed in Section 4 (Experiments and Evaluation Protocols).

- Model Deployment: The best-performing model is selected and deployed within the ITMA’INN mobile application.
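The preprocessing and augmentation steps above can be sketched in NumPy. This is an illustrative sketch under stated assumptions, not the authors’ pipeline, which relies on `timm.data.create_transform`. It uses nearest-neighbour resizing for simplicity and the standard ImageNet channel mean and standard deviation for normalization. The augmentations are random horizontal flips and 90-degree rotations, chosen as examples. The function names `resize_nearest`, `preprocess`, and `augment` are hypothetical.

```python
import numpy as np

# Standard ImageNet per-channel statistics, commonly used with ImageNet-pretrained backbones.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize of an H x W x C image to size x size."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows[:, None], cols[None, :]]


def preprocess(img: np.ndarray, size: int = 224) -> np.ndarray:
    """uint8 H x W x 3 image -> float32 3 x size x size tensor, normalized per channel."""
    x = resize_nearest(img, size).astype(np.float32) / 255.0  # scale to [0, 1]
    x = (x - IMAGENET_MEAN) / IMAGENET_STD                     # per-channel normalization
    return x.transpose(2, 0, 1)  # channels-first layout expected by PyTorch models


def augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Training-only augmentation: random horizontal flip, then a random 90-degree rotation."""
    if rng.random() < 0.5:
        img = img[:, ::-1]  # flip along the width axis
    return np.rot90(img, k=int(rng.integers(0, 4)), axes=(0, 1))


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(300, 400, 3), dtype=np.uint8)  # stand-in for a photo
train_tensor = preprocess(augment(image, rng))  # augmentation only at training time
test_tensor = preprocess(image)                 # no augmentation at inference
```

Augmentation is applied only to training images. Validation, test, and in-app images go through preprocessing alone, which mirrors the "Augmented Images (Training Only)" columns in the dataset tables.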

3.3.1. Pretrained Models

3.3.2. Fine-Tuning and Transfer Learning

- Loading the pretrained weights for each model using timm.create_model.

- Freezing most of the backbone layers to preserve general feature representations and fine-tuning the final layers to learn from the Mpox dataset.

- Replacing the classification head with custom fully connected layers for the binary or multiclass task. These layers are followed by a sigmoid activation for binary classification or a softmax activation for multiclass classification.

- Setting random seeds for reproducibility across multiple experiments.

- Applying classical preprocessing and data augmentation transforms (e.g., resizing with interpolation, and normalization) via timm.data.create_transform to improve generalization.

- Using early stopping based on validation loss, together with other regularization techniques, to prevent overfitting.

- Optimizing key hyperparameters, including the learning rate, batch size, optimizer, dropout rate, and weight decay, by adjusting them iteratively over multiple training runs. The selected values tested are summarized in Table 6.
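Two steps from the list above can be sketched in plain Python: early stopping on validation loss, and seeding for reproducibility. The `EarlyStopping` class, its `patience` and `min_delta` parameters, and the validation-loss sequence are illustrative assumptions, not the authors’ code. In a PyTorch training loop, `set_seed` would typically also seed PyTorch and NumPy, as the comment notes.

```python
import random


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as an improvement
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, val_loss: float, epoch: int) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def set_seed(seed: int) -> None:
    """Seed Python's RNG. A PyTorch run would also call torch.manual_seed(seed)
    and numpy.random.seed(seed)."""
    random.seed(seed)


set_seed(42)
stopper = EarlyStopping(patience=3)
val_losses = [0.62, 0.48, 0.41, 0.43, 0.42, 0.44, 0.40]  # made-up example values
stopped_epoch = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss, epoch):
        stopped_epoch = epoch
        break
print(f"stopped at epoch {stopped_epoch}; best epoch {stopper.best_epoch}")
```

In practice, the model weights saved at `best_epoch` are restored after stopping. Training then ends with the lowest-validation-loss checkpoint rather than the last one.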

4. Experiments and Evaluation Protocols

4.1. Experimental Setting

- Stratified 80:20 train–test split, in which both subsets preserve the class proportions of the full dataset. Importantly, the split was applied at the patient level. All images of a single patient (even those depicting different lesions or body parts) were placed entirely in either the training set or the test set, preventing data leakage.

- Stratified 5-fold cross-validation (CV), in which the data was divided into five folds. In each iteration, one fold was reserved for testing and the remaining four were used for training. Folds were also formed patient-wise whenever patient identifiers were available, so no patient’s images appeared in more than one fold. Results were averaged across the five folds to give a more reliable estimate of generalization performance.
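A patient-level stratified fold assignment, as described above, can be sketched as follows. The sketch assumes each record is an `(image_id, patient_id, label)` tuple and that every patient has a single diagnosis label. Patients, not images, are dealt round-robin across folds within each class. All of a patient’s images therefore land in the same fold, and class proportions stay roughly balanced. `patient_stratified_folds` is a simple hypothetical helper, not the authors’ implementation; scikit-learn’s `StratifiedGroupKFold` is a more general alternative. Holding out one of the five folds gives an approximately 80:20 split.

```python
from collections import defaultdict


def patient_stratified_folds(records, n_folds=5):
    """Return n_folds lists of image ids, with every patient confined to one fold.

    records: list of (image_id, patient_id, label) tuples; assumes each patient
    has a single diagnosis label. Patients are dealt round-robin within each
    label, so each fold receives a similar class mix.
    """
    patients_by_label = defaultdict(set)
    for _, patient, label in records:
        patients_by_label[label].add(patient)

    fold_of_patient = {}
    offset = 0
    for label in sorted(patients_by_label):
        for i, patient in enumerate(sorted(patients_by_label[label])):
            fold_of_patient[patient] = (offset + i) % n_folds
        # Continue dealing from where the previous class stopped.
        offset += len(patients_by_label[label])

    folds = [[] for _ in range(n_folds)]
    for image_id, patient, _ in records:
        folds[fold_of_patient[patient]].append(image_id)
    return folds


# Toy data: 20 patients with two images each; P0-P9 have Mpox, P10-P19 do not.
records = [
    (f"img{p}_{k}", f"P{p}", "Mpox" if p < 10 else "Non-Mpox")
    for p in range(20)
    for k in range(2)
]
folds = patient_stratified_folds(records)
fold_of_image = {img: k for k, fold in enumerate(folds) for img in fold}
test_images = folds[0]  # one held-out fold ~ a 20% patient-level test split
```

Splitting by patient rather than by image matters when one patient contributes several photographs. Near-duplicate lesions in both training and test sets would otherwise inflate test accuracy.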

4.2. Experimental Environment and Implementation Details

4.3. Evaluation Metrics

5. Results and Discussion

5.1. AI Model Prediction Performance

5.1.1. Binary Classification Task

5.1.2. Multiclass Classification Task

5.1.3. Multiclass Classification Task II and External Validation: MSID

5.2. Ablation Study Results

5.3. Comparison with the State-of-the-Art Methods

6. Mobile Application and Dashboard Development and Integration

6.1. Mobile Application Design and Testing

6.2. Monitoring Dashboard Design and Testing

- Secure authentication through organizational credentials;

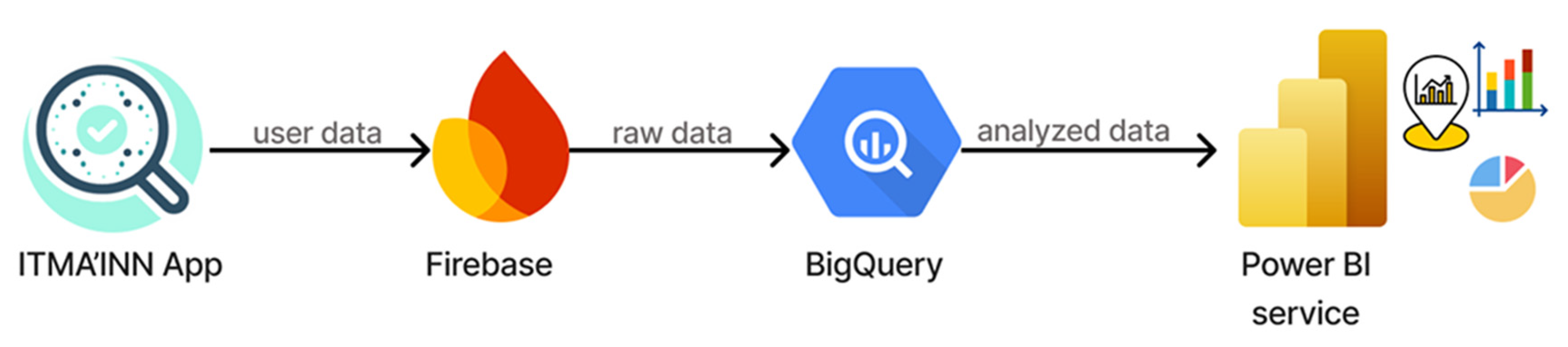

- Data flows through established enterprise platforms (Firebase, Google BigQuery, Power BI), as shown in Figure 4;

- Session management and credential verification.
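The kind of aggregation behind the dashboard’s case-distribution and trend views can be illustrated with a short sketch. It counts positive predictions per region and ISO week. In the described system, this step runs on the Firebase, BigQuery, and Power BI stack. The record fields (`region`, `date`, `label`), the region names, and `weekly_case_counts` are all hypothetical.

```python
from collections import Counter
from datetime import date


def weekly_case_counts(cases, positive_label="Mpox"):
    """Count positive predictions per (region, ISO year, ISO week)."""
    counts = Counter()
    for case in cases:
        if case["label"] == positive_label:
            iso_year, iso_week, _ = case["date"].isocalendar()
            counts[(case["region"], iso_year, iso_week)] += 1
    return counts


# Hypothetical prediction records, as the app database might store them.
cases = [
    {"region": "Region A", "date": date(2024, 9, 2), "label": "Mpox"},
    {"region": "Region A", "date": date(2024, 9, 4), "label": "Mpox"},
    {"region": "Region A", "date": date(2024, 9, 5), "label": "Chickenpox"},
    {"region": "Region B", "date": date(2024, 9, 10), "label": "Mpox"},
]
counts = weekly_case_counts(cases)
for (region, year, week), n in sorted(counts.items()):
    print(f"{region} {year}-W{week:02d}: {n} positive prediction(s)")
```

These counts are AI-flagged suspected cases, not laboratory-confirmed ones. A dashboard built on them tracks screening signals rather than confirmed incidence.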

7. Study Limitations

8. Conclusions

- Lightweight transformer architecture optimized for smartphone deployment, enabling widespread community access for early detection;

- Real-time monitoring dashboard providing continuous epidemiological surveillance and trend analysis for health authorities;

- Reduced pressure on hospitals through early detection, which helps limit infection transmission and improve healthcare quality;

- Scalable architecture capable of expansion to other dermatological conditions.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sandoiu, A. Why Does SARS-CoV-2 Spread So Easily? Available online: https://www.medicalnewstoday.com/articles/why-does-sars-cov-2-spread-so-easily (accessed on 2 October 2024).

- Multi-Country Monkeypox Outbreak in Non-Endemic Countries: Update. Available online: https://www.who.int/emergencies/disease-outbreak-news/item/2022-DON388 (accessed on 2 October 2024).

- Sorayaie Azar, A.; Naemi, A.; Babaei Rikan, S.; Bagherzadeh Mohasefi, J.; Pirnejad, H.; Wiil, U.K. Monkeypox Detection Using Deep Neural Networks. BMC Infect. Dis. 2023, 23, 438.

- Nasr, M.M.; Islam, M.M.; Shehata, S.; Karray, F.; Quintana, Y. Smart Healthcare in the Age of AI: Recent Advances, Challenges, and Future Prospects. IEEE Access 2021, 9, 145248–145270.

- Albaradei, S.; Thafar, M.A.; Alsaedi, A.; Van Neste, C.; Gojobori, T.; Essack, M.; Gao, X. Machine Learning and Deep Learning Methods That Use Omics Data for Metastasis Prediction. Comput. Struct. Biotechnol. J. 2021, 19, 5008–5018.

- Thafar, M.A.; Albaradei, S.; Uludag, M.; Alshahrani, M.; Gojobori, T.; Essack, M.; Gao, X. OncoRTT: Predicting Novel Oncology-Related Therapeutic Targets Using BERT Embeddings and Omics Features. Front. Genet. 2023, 14, 1139626.

- Alamro, H.; Thafar, M.A.; Albaradei, S.; Gojobori, T.; Essack, M.; Gao, X. Exploiting Machine Learning Models to Identify Novel Alzheimer’s Disease Biomarkers and Potential Targets. Sci. Rep. 2023, 13, 4979.

- Zhang, P.; Boulos, M. Generative AI in Medicine and Healthcare: Promises, Opportunities and Challenges. Future Internet 2023, 15, 286.

- Albaradei, S.; Alganmi, N.; Albaradie, A.; Alharbi, E.; Motwalli, O.; Thafar, M.A.; Gojobori, T.; Essack, M.; Gao, X. A Deep Learning Model Predicts the Presence of Diverse Cancer Types Using Circulating Tumor Cells. Sci. Rep. 2023, 13, 21114.

- Tsuneki, M. Deep Learning Models in Medical Image Analysis. J. Oral Biosci. 2022, 64, 312–320.

- Li, X.; Zhang, L.; Yang, J.; Teng, F. Role of Artificial Intelligence in Medical Image Analysis: A Review of Current Trends and Future Directions. J. Med. Biol. Eng. 2024, 44, 231–243.

- De, A.; Mishra, N.; Chang, H.-T. An Approach to the Dermatological Classification of Histopathological Skin Images Using a Hybridized CNN-DenseNet Model. PeerJ Comput. Sci. 2024, 10, e1884.

- Yolcu Oztel, G. Vision Transformer and CNN-Based Skin Lesion Analysis: Classification of Monkeypox. Multimed. Tools Appl. 2024, 83, 71909–71923.

- Curti, N.; Merli, Y.; Zengarini, C.; Giampieri, E.; Merlotti, A.; Dall’Olio, D.; Marcelli, E.; Bianchi, T.; Castellani, G. Effectiveness of Semi-Supervised Active Learning in Automated Wound Image Segmentation. Int. J. Mol. Sci. 2022, 24, 706.

- Griffa, D.; Natale, A.; Merli, Y.; Starace, M.; Curti, N.; Mussi, M.; Castellani, G.; Melandri, D.; Piraccini, B.M.; Zengarini, C. Artificial Intelligence in Wound Care: A Narrative Review of the Currently Available Mobile Apps for Automatic Ulcer Segmentation. BioMedInformatics 2024, 4, 2321–2337.

- Fiscone, C.; Curti, N.; Ceccarelli, M.; Remondini, D.; Testa, C.; Lodi, R.; Tonon, C.; Manners, D.N.; Castellani, G. Generalizing the Enhanced-Deep-Super-Resolution Neural Network to Brain MR Images: A Retrospective Study on the Cam-CAN Dataset. eNeuro 2024, 11, ENEURO.0458-22.2023.

- Musthafa, M.M.; R, M.T.; V, V.K.; Guluwadi, S. Enhanced Skin Cancer Diagnosis Using Optimized CNN Architecture and Checkpoints for Automated Dermatological Lesion Classification. BMC Med. Imaging 2024, 24, 201.

- Debelee, T.G. Skin Lesion Classification and Detection Using Machine Learning Techniques: A Systematic Review. Diagnostics 2023, 13, 3147.

- Singh, R.K.; Gorantla, R.; Allada, S.G.R.; Narra, P. SkiNet: A Deep Learning Framework for Skin Lesion Diagnosis with Uncertainty Estimation and Explainability. PLoS ONE 2022, 17, e0276836.

- Sreekala, K.; Rajkumar, N.; Sugumar, R.; Sagar, K.V.D.; Shobarani, R.; Krishnamoorthy, K.P.; Saini, A.K.; Palivela, H.; Yeshitla, A. Skin Diseases Classification Using Hybrid AI Based Localization Approach. Comput. Intell. Neurosci. 2022, 2022, 6138490.

- Chadaga, K.; Prabhu, S.; Sampathila, N.; Nireshwalya, S.; Katta, S.S.; Tan, R.-S.; Acharya, U.R. Application of Artificial Intelligence Techniques for Monkeypox: A Systematic Review. Diagnostics 2023, 13, 824.

- Patel, M.; Surti, M.; Adnan, M. Artificial Intelligence (AI) in Monkeypox Infection Prevention. J. Biomol. Struct. Dyn. 2023, 41, 8629–8633.

- Asif, S.; Zhao, M.; Li, Y.; Tang, F.; Ur Rehman Khan, S.; Zhu, Y. AI-Based Approaches for the Diagnosis of Mpox: Challenges and Future Prospects. Arch. Comput. Methods Eng. 2024, 31, 3585–3617.

- Nayak, T.; Chadaga, K.; Sampathila, N.; Mayrose, H.; Gokulkrishnan, N.; G, M.B.; Prabhu, S.; S, S.K.; Umakanth, S. Deep Learning Based Detection of Monkeypox Virus Using Skin Lesion Images. Med. Nov. Technol. Devices 2023, 18, 100243.

- Altun, M.; Gürüler, H.; Özkaraca, O.; Khan, F.; Khan, J.; Lee, Y. Monkeypox Detection Using CNN with Transfer Learning. Sensors 2023, 23, 1783.

- Jaradat, A.S.; Al Mamlook, R.E.; Almakayeel, N.; Alharbe, N.; Almuflih, A.S.; Nasayreh, A.; Gharaibeh, H.; Gharaibeh, M.; Gharaibeh, A.; Bzizi, H. Automated Monkeypox Skin Lesion Detection Using Deep Learning and Transfer Learning Techniques. Int. J. Environ. Res. Public Health 2023, 20, 4422.

- Thieme, A.H.; Zheng, Y.; Machiraju, G.; Sadee, C.; Mittermaier, M.; Gertler, M.; Salinas, J.L.; Srinivasan, K.; Gyawali, P.; Carrillo-Perez, F.; et al. A Deep-Learning Algorithm to Classify Skin Lesions from Mpox Virus Infection. Nat. Med. 2023, 29, 738–747.

- Sahin, V.H.; Oztel, I.; Yolcu Oztel, G. Human Monkeypox Classification from Skin Lesion Images with Deep Pre-Trained Network Using Mobile Application. J. Med. Syst. 2022, 46, 79.

- Ali, S.N.; Ahmed, M.T.; Paul, J.; Jahan, T.; Sani, S.M.S.; Noor, N.; Hasan, T. Monkeypox Skin Lesion Detection Using Deep Learning Models: A Feasibility Study. arXiv 2022, arXiv:2207.03342.

- Ali, S.N.; Ahmed, M.T.; Jahan, T.; Paul, J.; Sani, S.M.S.; Noor, N.; Asma, A.N.; Hasan, T. A Web-Based Mpox Skin Lesion Detection System Using State-of-the-Art Deep Learning Models Considering Racial Diversity. Biomed. Signal Process. Control 2024, 98, 106742.

- Bala, D.; Hossain, M.S.; Hossain, M.A.; Abdullah, M.I.; Rahman, M.M.; Manavalan, B.; Gu, N.; Islam, M.S.; Huang, Z. MonkeyNet: A robust deep convolutional neural network for monkeypox disease detection and classification. Neural Netw. 2023, 161, 757–775.

- Nie, Y.; Sommella, P.; Carratù, M.; O’Nils, M.; Lundgren, J. A Deep CNN Transformer Hybrid Model for Skin Lesion Classification of Dermoscopic Images Using Focal Loss. Diagnostics 2022, 13, 72.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Mao, X.; Qi, G.; Chen, Y.; Li, X.; Duan, R.; Ye, S.; He, Y.; Xue, H. Towards Robust Vision Transformer. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Zhou, D.; Kang, B.; Jin, X.; Yang, L.; Lian, X.; Jiang, Z.; Hou, Q.; Feng, J. DeepViT: Towards Deeper Vision Transformer. arXiv 2021, arXiv:2103.11886.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Mehta, S.; Rastegari, M. MobileViT: Light-Weight, General-Purpose, and Mobile-Friendly Vision Transformer. arXiv 2021, arXiv:2110.02178.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Davis, J.; Goadrich, M. The Relationship between Precision-Recall and ROC Curves. In Proceedings of the 23rd International Conference on Machine Learning—ICML ’06, New York, NY, USA, 25–29 June 2006.

- Martinez-Ramon, M.; Ajith, M.; Kurup, A.R. Deep Learning: A Practical Introduction; John Wiley & Sons: Hoboken, NJ, USA, 2024.

| Study (Year) | Task | Dataset | Split | Metrics | Models Used | Limitations | Best Model |
|---|---|---|---|---|---|---|---|
| Nayak et al. (2023) [24] | Binary (Mpox vs. non-Mpox) | Public Mpox dataset (skin lesion images) | Not specified (train/test) | Accuracy, Precision, Recall, F1 | CNNs (ResNet-18, GoogLeNet, SqueezeNet, AlexNet) | Lack of detail on splitting protocol; limited dataset size | ResNet-18 (99.49% accuracy) |
| Altun et al. (2023) [25] | Binary | Public skin lesion dataset | 80/20, hyperparameter tuning | Accuracy, F1, Recall, AUC | CNNs (MobileNetV3-s, EfficientNetV2, ResNet-50, VGG19, DenseNet-11) | Possible overfitting due to limited diversity; needs real-world testing | MobileNetV3-s (96% accuracy, F1 = 0.98, AUC = 0.9) |
| Jaradat et al. (2023) [26] | Binary | Public dataset (skin lesion images) | Train/test (fine-tuned) | Accuracy, Recall, Precision, F1 | CNNs (MobileNetV2, VGG19, VGG16, ResNet-50, EfficientNet3) | Dataset imbalance; limited validation in clinical practice | MobileNetV2 (98.16% accuracy, F1 = 0.9) |
| Thieme et al. (2023, Nature Medicine) [27] | Binary & Multiclass | Large clinical + dermoscopic dataset (~130k images) | Cross-validation | Accuracy | CNN-based (ResNet-18, ResNet-34, DenseNet-19, VGG19_b) | High computational cost; dataset may not generalize across populations | MPXV-CNN (90% accuracy, robust real-world deployment) |
| Ali et al. (2022) [29] | Multiclass (6 classes) | MSLD v2.0 (Kaggle) | Train/test | Accuracy | CNNs (DenseNet-121, VGG, ResNet) | Moderate accuracy; limited augmentation | DenseNet-121 (83.59% accuracy) |

| Image Classes | Original Images | Augmented Images (Training Only) |
|---|---|---|
| Mpox | 102 | 1148 |
| Non-Mpox | 126 | 1414 |
| Total | 228 | 2562 |

| Image Classes | Original Images | Augmented Images (Training Only) |
|---|---|---|
| Mpox | 284 | 2579 |
| Chickenpox | 75 | 725 |
| Measles | 55 | 565 |
| Cowpox | 66 | 662 |
| HFMD | 161 | 1546 |
| Healthy | 114 | 1131 |
| Total | 755 | 7208 |

| Image Classes | Original Images |
|---|---|
| Mpox | 279 |
| Chickenpox | 107 |
| Measles | 91 |
| Healthy | 293 |
| Total | 770 |

| Model | Variant Used | Feature Dimension | Key Architectural Note |
|---|---|---|---|
| VGG16 | Standard (VGG16) | 4096 | Features extracted from the second fully connected layer (FC2) |
| ResNet-50 | Standard (ResNet50) | 2048 | Features extracted after global average pooling |
| EfficientNet-B0 | B0 | 1280 | Features from the final embedding layer before classification |
| Vision Transformer (ViT) | Base/16 (vit_b_16) | 768 | Hidden size of the transformer encoder |
| Transformer-iN-Transformer (TNT) | Small (tnt_s_patch16_224) | 384 | Hidden size of the transformer encoder |
| Swin Transformer | Tiny (swin_t) | 768 | Hidden size of the transformer encoder |
| MobileViT | Small (mobilevit_s) | 640 | Embedding size from the MobileViT block |
| ViT Hybrid | Base-BiT-384 (vit-hybrid-base) | 768 | Hidden size of the hybrid transformer encoder |
| ResNetViT | vit_base_r50_s16_224 | 768 | ResNet-50 backbone with ViT encoder; hidden size is 768 |
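The feature dimension in the table above sets the size of the classification head. As a back-of-the-envelope sketch, assume the head is a single fully connected layer; the paper describes custom fully connected layers but does not give their exact sizes here. With one sigmoid output for the binary task and six softmax outputs for the multiclass task, the parameter count is d × k + k for feature dimension d and k outputs. `linear_head_params` is a hypothetical helper.

```python
# Feature dimensions taken from the table above.
FEATURE_DIM = {
    "VGG16": 4096, "ResNet-50": 2048, "EfficientNet-B0": 1280, "ViT": 768,
    "TNT": 384, "Swin Transformer": 768, "MobileViT": 640,
    "ViT Hybrid": 768, "ResNetViT": 768,
}


def linear_head_params(feature_dim: int, n_outputs: int) -> int:
    """Weights (feature_dim x n_outputs) plus one bias per output."""
    return feature_dim * n_outputs + n_outputs


for name, d in FEATURE_DIM.items():
    print(f"{name:17s} binary: {linear_head_params(d, 1):6d}  6-class: {linear_head_params(d, 6):6d}")
```

Under this assumption, even the largest head (VGG16, six classes) has 24,582 parameters, while each backbone has millions. This gap is why training only the new head and a few final layers is comparatively cheap.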

| Hyperparameter | Tested Values |
|---|---|
| Learning rate | 0.001, 0.0001, 1 × 10⁻⁵, 2 × 10⁻⁵ |
| Batch size | 8, 16, 32 |
| Dropout rate | 0.2, 0.3, 0.4 |
| Weight decay | 1 × 10⁻⁴, 1 × 10⁻⁵ |
| Optimizer | Adam, AdamW |
| Epochs | 5, 10, 50, 100 |
| Early stopping patience (epochs) | 3, 5, 10, 15, 50 |
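Enumerating the tested values above as a full Cartesian grid shows why exhaustive search would be costly. The grid has 4 × 3 × 3 × 2 × 2 × 4 × 5 = 2880 configurations per model and evaluation protocol, consistent with the iterative tuning of selected combinations described in Section 3.3.2. The sketch below only builds and counts the grid. Reading the early-stopping values as patience in epochs is an assumption, and `SEARCH_SPACE` is a hypothetical name.

```python
from itertools import product

# Tested values from the hyperparameter table.
SEARCH_SPACE = {
    "learning_rate": [1e-3, 1e-4, 1e-5, 2e-5],
    "batch_size": [8, 16, 32],
    "dropout": [0.2, 0.3, 0.4],
    "weight_decay": [1e-4, 1e-5],
    "optimizer": ["Adam", "AdamW"],
    "epochs": [5, 10, 50, 100],
    "early_stopping_patience": [3, 5, 10, 15, 50],
}

# One dict per configuration, in the key order of SEARCH_SPACE.
grid = [dict(zip(SEARCH_SPACE, values)) for values in product(*SEARCH_SPACE.values())]
print(len(grid))  # 2880
```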

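| Metric | Definition | Mathematical Formula |
|---|---|---|
| Accuracy (Acc) | The ratio of correctly classified samples to the total number of samples, where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives. | $\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision (PR) | The proportion of true positives among all positive predictions, reflecting the model’s ability to avoid false positives. | $\mathrm{PR} = \frac{TP}{TP + FP}$ |
| Recall (RC) | The proportion of true positives among all actual positives, indicating how well the model captures relevant cases. | $\mathrm{RC} = \frac{TP}{TP + FN}$ |
| F1-score | The harmonic mean of precision and recall, balancing the two. | $F_1 = \frac{2 \cdot \mathrm{PR} \cdot \mathrm{RC}}{\mathrm{PR} + \mathrm{RC}}$ |
| AUC-ROC | The area under the ROC curve (true positive rate against false positive rate), measuring the model’s ability to distinguish between classes. | $\mathrm{AUC} = \int_0^1 \mathrm{TPR}\, d(\mathrm{FPR})$ |
| Loss | The model’s prediction error, i.e., how far its predictions are from the actual outcomes; $y_i$ is the true label, $\hat{y}_i$ the prediction, and $n$ the number of samples. | $\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2$ |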
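The metrics in the table can be checked with a small pure-Python sketch for the binary case. It thresholds predicted Mpox probabilities at 0.5 (an assumption) and computes the confusion-matrix counts. From these it evaluates accuracy, precision, recall, F1, and the MSE loss named in the table. ROC AUC is computed via its rank interpretation: the probability that a randomly chosen positive scores above a randomly chosen negative, with ties counted as one half. The labels and scores are made-up example values, and the function names are hypothetical.

```python
def binary_metrics(y_true, y_score, threshold=0.5):
    """Threshold-based metrics plus MSE loss; y_true holds 0/1 labels, y_score probabilities."""
    y_pred = [int(s >= threshold) for s in y_score]
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall) if precision + recall else 0.0,
        "mse": sum((t - s) ** 2 for t, s in zip(y_true, y_score)) / len(y_true),
    }


def roc_auc(y_true, y_score):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0, 0]
y_score = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]
metrics = binary_metrics(y_true, y_score)
auc = roc_auc(y_true, y_score)
print(metrics, round(auc, 4))
```

In this toy example, threshold-based accuracy is 4/6 while AUC is 8/9. AUC does not depend on the 0.5 threshold, which is why the two metrics can diverge in the results tables.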

| Model Name | Accuracy | Precision | F1-Score | Recall | Loss | AUC |
|---|---|---|---|---|---|---|
| 80:20 Train–Test Split | | | | | | |
| ResNetViT | 0.9556 | 0.9596 | 0.9557 | 0.9556 | 0.2355 | 0.9600 |
| ResNet-50 | 0.9111 | 0.9152 | 0.9114 | 0.9111 | 0.2575 | 0.9150 |
| TNT | 0.9778 | 0.9788 | 0.9778 | 0.9778 | 0.1729 | 0.9800 |
| Swin Transformer | 0.9556 | 0.9596 | 0.9557 | 0.9556 | 0.1770 | 0.9600 |
| ViT | 0.9778 | 0.9788 | 0.9778 | 0.9778 | 0.1669 | 0.9800 |
| VGG16 | 0.9778 | 0.9788 | 0.9778 | 0.9778 | 0.1030 | 0.9800 |
| ViT Hybrid | 0.9556 | 0.9596 | 0.9557 | 0.9556 | 0.1656 | 0.9600 |
| MobileViT | 0.9778 | 0.9786 | 0.9777 | 0.9788 | 0.1967 | 0.9750 |
| EfficientNet-B0 | 0.9333 | 0.9345 | 0.9335 | 0.9333 | 0.2969 | 0.9720 |
| 5-Fold Cross-Validation | | | | | | |
| ViT | 0.8903 | 0.8914 | 0.8898 | 0.8903 | 0.4701 | 0.8871 |
| MobileViT | 0.8773 | 0.8783 | 0.8768 | 0.8773 | 0.4133 | 0.8731 |
| TNT | 0.8990 | 0.9022 | 0.8984 | 0.8990 | 0.5395 | 0.8944 |
| ResNet-50 | 0.7971 | 0.8050 | 0.7914 | 0.7971 | 0.6498 | 0.7808 |
| VGG16 | 0.7783 | 0.7809 | 0.7744 | 0.7783 | 0.8790 | 0.7648 |

| Model Name | Accuracy | Precision | F1-Score | Recall | Loss | AUC |
|---|---|---|---|---|---|---|
| 80:20 Train–Test Split | | | | | | |
| ResNetViT | 0.9216 | 0.9271 | 0.9224 | 0.9216 | 0.3079 | 0.9823 |
| ResNet-50 | 0.8039 | 0.8169 | 0.8043 | 0.8039 | 0.6292 | 0.9509 |
| TNT | 0.8170 | 0.8301 | 0.8157 | 0.8170 | 0.4797 | 0.9611 |
| Swin Transformer | 0.8497 | 0.8596 | 0.8503 | 0.8497 | 0.4288 | 0.9736 |
| ViT | 0.8431 | 0.8551 | 0.8413 | 0.8431 | 0.5175 | 0.9655 |
| VGG16 | 0.8039 | 0.8062 | 0.8046 | 0.8039 | 0.7762 | 0.9570 |
| ViT Hybrid | 0.9216 | 0.9262 | 0.9219 | 0.9216 | 0.2501 | 0.9286 |
| MobileViT | 0.8758 | 0.8782 | 0.8746 | 0.8758 | 0.4905 | 0.9730 |
| EfficientNet-B0 | 0.8954 | 0.9002 | 0.8961 | 0.8954 | 0.3559 | 0.9797 |
| 5-Fold Cross-Validation | | | | | | |
| ResNetViT | 0.85288 | 0.85734 | 0.85188 | 0.85288 | 0.45666 | 0.9665 |
| ViT Hybrid | 0.8388 | 0.84282 | 0.83604 | 0.8388 | 0.45642 | 0.96602 |
| ViT | 0.7814 | 0.7824 | 0.7771 | 0.7814 | 0.6157 | 0.9450 |
| ResNet-50 | 0.7572 | 0.7643 | 0.7535 | 0.7572 | 0.6866 | 0.9327 |
| VGG16 | 0.7145 | 0.7209 | 0.7105 | 0.7145 | 1.0992 | 0.9080 |

| Model Name | Accuracy | Precision | F1-Score | Recall | Loss | AUC |
|---|---|---|---|---|---|---|
| 80:20 Train–Test Split | | | | | | |
| ResNetViT | 0.9423 | 0.9427 | 0.9423 | 0.9423 | 0.9913 | 0.9423 |
| ResNet-50 | 0.8590 | 0.8663 | 0.8561 | 0.8590 | 0.9687 | 0.8590 |
| TNT | 0.8526 | 0.8487 | 0.8493 | 0.8526 | 0.9717 | 0.8526 |
| Swin Transformer | 0.8846 | 0.8871 | 0.8849 | 0.8846 | 0.9729 | 0.8846 |
| ViT | 0.8846 | 0.8884 | 0.8848 | 0.8846 | 0.9708 | 0.8846 |
| VGG16 | 0.8526 | 0.8512 | 0.8487 | 0.8526 | 0.9682 | 0.8526 |
| ViT Hybrid | 0.9487 | 0.9496 | 0.9487 | 0.9487 | 0.9926 | 0.9487 |
| MobileViT | 0.9231 | 0.9255 | 0.9240 | 0.9231 | 0.9785 | 0.9231 |
| EfficientNet-B0 | 0.9231 | 0.9231 | 0.9224 | 0.9231 | 0.9812 | 0.9231 |

| Model | Accuracy | Precision (Macro) | Recall (Macro) | F1-Score (Macro) | Precision (Weighted) | Recall (Weighted) | F1-Score (Weighted) |
|---|---|---|---|---|---|---|---|
| ResNetViT | 0.82 | 0.84 | 0.75 | 0.78 | 0.83 | 0.82 | 0.81 |
| ViT Hybrid | 0.84 | 0.84 | 0.76 | 0.79 | 0.84 | 0.84 | 0.83 |
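The table reports both macro and weighted averages. Macro averaging gives every class equal weight, whereas weighted averaging weights each class by its support (its number of true samples). One consequence is that weighted recall always equals accuracy, which matches the table (0.82 and 0.84). A macro recall below the weighted value suggests weaker recall on some of the smaller classes. The pure-Python sketch below shows both averages on made-up labels; the function names are hypothetical.

```python
from collections import Counter


def per_class_prf(y_true, y_pred, classes):
    """Precision, recall, and F1 for each class (one-vs-rest); 0.0 when undefined."""
    stats = {}
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        stats[c] = (prec, rec, f1)
    return stats


def macro_and_weighted(y_true, y_pred, classes):
    """Return (macro, weighted) tuples of (precision, recall, F1)."""
    stats = per_class_prf(y_true, y_pred, classes)
    support = Counter(y_true)
    n = len(y_true)
    macro = tuple(sum(stats[c][i] for c in classes) / len(classes) for i in range(3))
    weighted = tuple(sum(stats[c][i] * support[c] for c in classes) / n for i in range(3))
    return macro, weighted


classes = ["Mpox", "Measles", "Healthy"]
y_true = ["Mpox"] * 4 + ["Healthy"] * 4 + ["Measles"] * 2
y_pred = ["Mpox"] * 4 + ["Healthy"] * 4 + ["Mpox", "Healthy"]  # both Measles cases missed
macro, weighted = macro_and_weighted(y_true, y_pred, classes)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print("macro", macro, "weighted", weighted, "accuracy", accuracy)
```

Here the minority class (Measles) is never recalled. Macro recall therefore drops to 2/3, while weighted recall stays at 0.8, equal to accuracy.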

| Classification Task | Model | Accuracy | Precision | F1-Score | Recall | AUC |
|---|---|---|---|---|---|---|
| Multiclass Classification (6 Classes) | ResNetViT | 0.8902 | 0.8913 | 0.888 | 0.890 | 0.9718 |
| | ResNetViT + Data Augmentation | 0.9024 | 0.9177 | 0.9065 | 0.902 | 0.9808 |
| | ViT Hybrid | 0.8659 | 0.8719 | 0.8666 | 0.865 | 0.9708 |
| | ViT Hybrid + Data Augmentation | 0.8720 | 0.8804 | 0.8731 | 0.872 | 0.9734 |
| Binary Classification | MobileViT | 0.8667 | 0.8667 | 0.8667 | 0.866 | 0.8650 |
| | MobileViT + Data Augmentation | 0.9778 | 0.9786 | 0.9777 | 0.978 | 0.9750 |
| | TNT | 0.9556 | 0.9596 | 0.9557 | 0.955 | 0.9600 |
| | TNT + Data Augmentation | 0.9778 | 0.9788 | 0.9778 | 0.977 | 0.9800 |
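The effect of data augmentation in the ablation table can be quantified directly from the reported accuracies. The snippet below only recomputes absolute and relative accuracy gains from those numbers. The dictionary keys are labels chosen for this sketch.

```python
# (accuracy without augmentation, accuracy with augmentation), from the ablation table.
ABLATION = {
    "ResNetViT (6-class)": (0.8902, 0.9024),
    "ViT Hybrid (6-class)": (0.8659, 0.8720),
    "MobileViT (binary)": (0.8667, 0.9778),
    "TNT (binary)": (0.9556, 0.9778),
}

gains = {model: aug - base for model, (base, aug) in ABLATION.items()}
for model, (base, aug) in ABLATION.items():
    gain = gains[model]
    print(f"{model:22s} +{gain:.4f} accuracy ({gain / base:+.1%} relative)")
```

The gain is largest for MobileViT on the binary task: +0.1111, about 12.8% relative. It is much smaller for the multiclass hybrid models: +0.0122 for ResNetViT and +0.0061 for ViT Hybrid.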

| Study | Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | AUC (%) |
|---|---|---|---|---|---|---|
| Sahin et al. [28] | MobileNetV2 | 91.11 | 90.00 | 90.00 | 90.00 | - |
| Nayak et al. [24] | ResNet-18 | 99.49 | 98.52 | 99.44 | 99.49 | - |
| | GoogLeNet | 97.37 | 97.46 | 94.12 | 97.05 | - |
| Proposed system: ITMA’INN | MobileViT | 97.77 | 97.86 | 97.88 | 97.77 | 97.50 |

| Study | Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | AUC (%) |
|---|---|---|---|---|---|---|
| Ali et al. [30] | DenseNet-121 | 83.59 | 85 | - | - | - |
| Proposed system: ITMA’INN | ResNetViT | 92.16 | 92.71 | 92.16 | 92.24 | 98.23 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alghoraibi, H.; Alqurashi, N.; Alotaibi, S.; Alkhudaydi, R.; Aldajani, B.; Batawil, J.; Alqurashi, L.; Althagafi, A.; Thafar, M.A. Deep Learning-Based Mpox Skin Lesion Detection and Real-Time Monitoring in a Smart Healthcare System. Diagnostics 2025, 15, 2505. https://doi.org/10.3390/diagnostics15192505
