TurkerNeXtV2: An Innovative CNN Model for Knee Osteoarthritis Pressure Image Classification

Abstract

1. Introduction

1.1. Related Works

1.2. Literature Gaps

- Transformers dominate general computer vision. In KOA imaging, however, most works still use CNNs due to compute, data size, and deployment limits.

- Lightweight, task-specific CNNs for plantar-pressure images are scarce. Existing models target X-ray or MRI.

- Many studies rely on large pretrained backbones. This improves accuracy on small sets but risks overfitting and domain shift.

- Transformer-based KOA studies exist (e.g., Swin/ViT or plug-in modules) but show mixed gains, especially for early KL grades, and often lack external validation.

- Public plantar-pressure datasets are limited. Reproducible baselines and modular, scalable backbones tailored to this modality are missing.

1.3. Motivation and Study Outline

1.4. Innovations and Contributions

- To our knowledge, this is the first dataset specifically tailored for CNN-based OA detection using plantar-pressure imaging.

- A new block was designed that combines max pooling and average pooling to form a pooling-based attention mechanism. This is fused with an inverted bottleneck, creating the TNV2 block as the main feature extractor. The multiple pooling functions improve stability, enhance feature selection, and reduce bias compared to earlier attention modules.

- Conventional downsampling often suffers from routing bias. To address this, a hybrid block was introduced that merges max pooling, average pooling, and patchified grouped convolution, followed by a 2 × 2 fusion layer. This design balances efficiency with richer spatial representation.

- The stem was simplified using a ConvNeXt-style structure, which improves early-stage feature learning and keeps the network modular.

- TurkerNeXtV2 differs from our previous backbones in three core ways. First, the main feature block (TNV2) replaces the MLP + transposed-convolution attention of Attention TurkerNeXt with a pooling-based attention (max + avg) fused to an inverted bottleneck, improving stability and efficiency. Second, the hybrid downsampling (max pool + avg pool + patchified grouped convolution) reduces routing bias versus the single 2 × 2 stride used previously. Third, the stem and head are simplified (ConvNeXt-style stem, GAP + FC head) to keep the model modular and scalable. Unlike our earlier TurkerNeXt pipeline, which added a hand-crafted deep-feature engineering stage with SVM, TurkerNeXtV2 is a compact, end-to-end CNN designed for plantar-pressure images.

- We collected and released a plantar-pressure image dataset, providing a valuable resource for biomedical engineering and clinical research.

- A lightweight CNN that integrates pooling-based attention, hybrid downsampling, and a ConvNeXt-inspired stem has been presented. This design advances the field of lightweight deep learning by demonstrating that compact models can deliver strong accuracy in both general and biomedical image classification.

2. Materials and Methods

2.1. Material

- Grade 0: No radiographic features of OA.

- Grade 1: Doubtful narrowing of joint space, possible osteophytic lipping.

- Grade 2: Definite osteophytes, possible narrowing of joint space.

- Grade 3: Multiple moderate osteophytes, definite joint-space narrowing, some sclerosis, possible bony deformity.

- Grade 4: Large osteophytes, marked joint-space narrowing, severe subchondral sclerosis, and definite bony deformity.

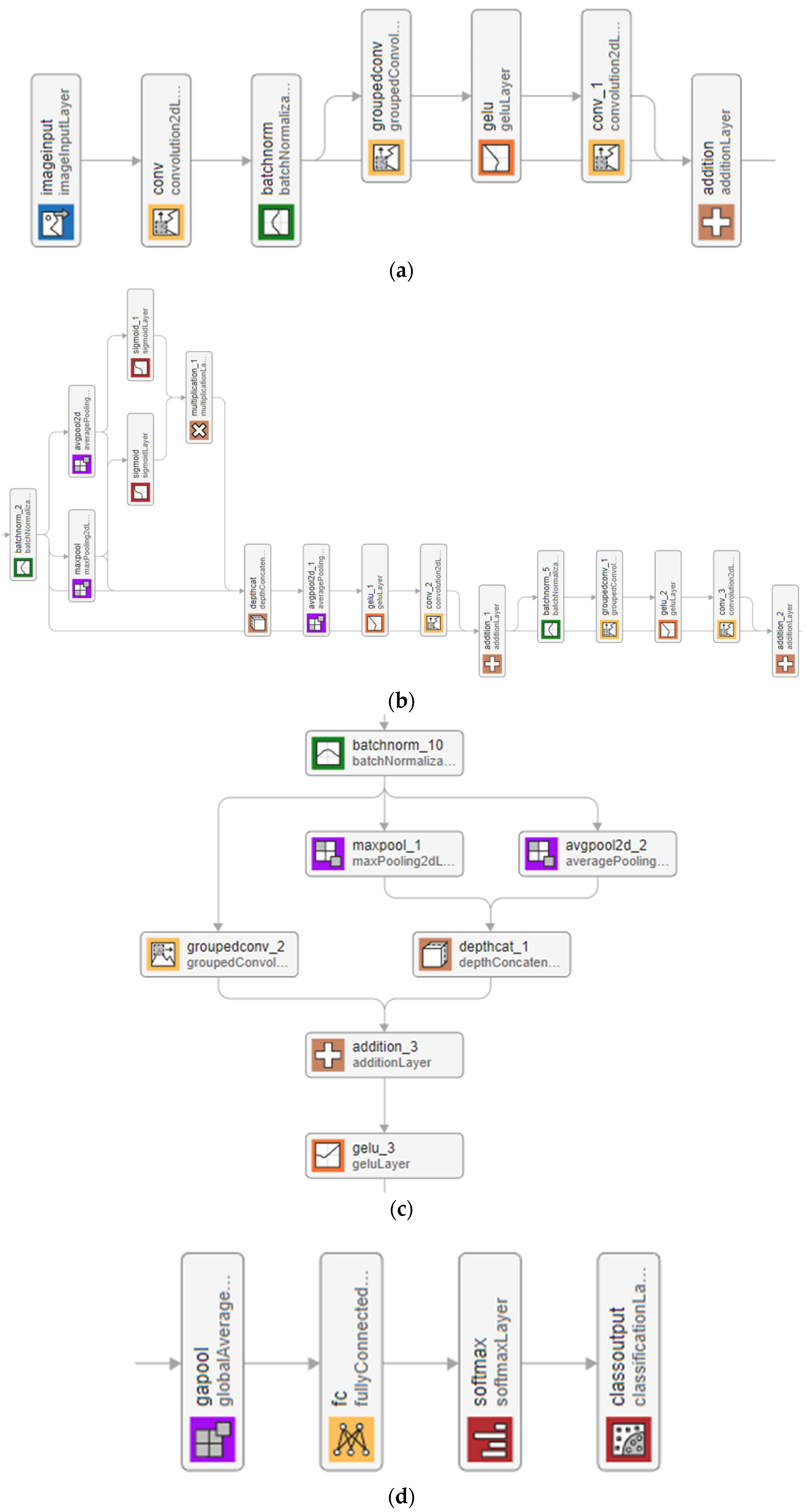

2.2. TurkerNeXtV2

- It uses parallel max-pooling and average-pooling to capture both focal pressure deviations and global context.

- Their element-wise product, gated by a sigmoid, suppresses noise and highlights clinically relevant regions.

- It expands and then compresses features via depth concatenation and grouped convolutions, keeping the block lightweight.
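The gating idea in the first two points can be illustrated with a minimal single-channel sketch in plain Python. This is not the authors' TNV2 implementation: the 3 × 3 window, the stride of 1, the border clipping, and the final multiplication of the input by the gate are all assumptions made for illustration, and the function names are hypothetical.

```python
import math


def pool2d(x, reduce_fn, k=3):
    """Stride-1 pooling over a k x k window, clipped at the borders (assumed rule)."""
    h, w = len(x), len(x[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [x[a][b]
                      for a in range(max(0, i - r), min(h, i + r + 1))
                      for b in range(max(0, j - r), min(w, j + r + 1))]
            row.append(reduce_fn(window))
        out.append(row)
    return out


def mean(values):
    return sum(values) / len(values)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def pooling_attention(x):
    """Gate = sigmoid(maxpool(x) * avgpool(x)); output = x * gate (assumed fusion)."""
    mx = pool2d(x, max)    # focal deviations
    av = pool2d(x, mean)   # local context
    gate = [[sigmoid(m * a) for m, a in zip(mr, ar)] for mr, ar in zip(mx, av)]
    out = [[v * g for v, g in zip(xr, gr)] for xr, gr in zip(x, gate)]
    return gate, out


# Toy 4 x 4 "pressure map" with a single focal peak (made-up values).
pressure = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 4.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
gate, attended = pooling_attention(pressure)
```

In this toy example the gate stays at 0.5 in regions where both pooled responses are zero and rises towards 1 around the peak, which is the qualitative behavior the bullets describe.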

| Algorithm 1. Pseudocode of the TurkerNeXtV2 |

| Input: Image of size 224 × 224 × 3. Output: Classification results. |

| 01: Apply the ConvNeXt-modified stem block to generate the first tensor of size 56 × 56 × 96. |

| 02: Pass the first tensor through the first TNV2 block to create the second tensor of size 56 × 56 × 96. |

| 03: Use the first hybrid downsampling block to create a new tensor of size 28 × 28 × 192. |

| 04: Apply the second TNV2 block to the downsampled tensor to create the third tensor of size 28 × 28 × 192. |

| 05: Deploy the second hybrid downsampling block to create a new tensor of size 14 × 14 × 384. |

| 06: Apply the third TNV2 block to generate a tensor of size 14 × 14 × 384. |

| 07: Apply the third hybrid downsampling block to produce the final downsampled tensor of size 7 × 7 × 768. |

| 08: Generate the last tensor by applying the fourth (final) TNV2 block; its size remains 7 × 7 × 768. |

| 09: Perform GAP to obtain 768 features. |

| 10: Use an FC layer to set the number of classes. |

| 11: Apply the softmax function to produce classification outputs. |
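The tensor sizes in Algorithm 1 follow a simple pattern, which the sketch below traces in plain Python. It only reproduces the shape bookkeeping, not the layers themselves. The 4× spatial reduction in the stem is inferred from the 224 → 56 sizes, and the function names and the bias term in the FC layer are assumptions.

```python
def trace_shapes(num_classes, input_hw=224, stem_channels=96, num_stages=4):
    """Return (step name, shape) pairs following the sizes listed in Algorithm 1."""
    # Assumption: the stem reduces the spatial size by a factor of 4 (224 -> 56).
    hw, ch = input_hw // 4, stem_channels
    steps = [("stem", (hw, hw, ch))]
    for stage in range(1, num_stages + 1):
        # Each TNV2 block keeps the tensor size unchanged.
        steps.append((f"TNV2 block {stage}", (hw, hw, ch)))
        if stage < num_stages:
            # Hybrid downsampling halves the resolution and doubles the channels.
            hw, ch = hw // 2, ch * 2
            steps.append((f"hybrid downsampling {stage}", (hw, hw, ch)))
    steps.append(("GAP", (ch,)))
    steps.append(("FC + softmax", (num_classes,)))
    return steps


def fc_parameters(in_features, num_classes):
    """Weights plus biases of the final fully connected layer (bias assumed)."""
    return in_features * num_classes + num_classes


for name, shape in trace_shapes(num_classes=1000):
    print(f"{name:>22}: {shape}")
```

Under these assumptions the classification head holds 769,000 parameters for 1000 classes and 7,690 for 10 classes. The difference of about 0.76 M is in line with the gap between the 7.1 M and 6.3 M totals reported in Section 4.3.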

2.2.1. Stem

2.2.2. TurkerNeXtV2 Block

2.2.3. Hybrid Downsampling

2.2.4. Output

3. Experimental Results

3.1. Experimental Setup

3.2. Training Configuration

- Solver: Stochastic Gradient Descent with Momentum (SGDM)

  - Standard MATLAB default; widely used optimizer for CNNs; stable convergence.

- Number of Epochs: 30

  - MATLAB default; sufficient for convergence on the datasets used.

- Batch Size: 128

  - Balanced choice for GPU memory and training speed; MATLAB default setting.

- Initial Learning Rate: 0.01

  - Common default in CNN training; reliable for stable convergence in many vision tasks.

- L2 Regularization: 1 × 10⁻⁴

  - Default weight decay; reduces risk of overfitting without extra complexity.

- Training–Validation Split: 80:20, randomized.
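For readers working outside MATLAB, the settings above can be written down as a small configuration object, shown here as a plain-Python sketch. The momentum value of 0.9, the random seed, and the sample count of 1000 are assumptions that are not stated in the text. The update rule is the common velocity form of SGDM with L2 regularization folded into the gradient, and it is not claimed to match the toolbox internals exactly.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    solver: str = "sgdm"
    epochs: int = 30
    batch_size: int = 128
    initial_lr: float = 0.01
    l2: float = 1e-4
    train_fraction: float = 0.8
    momentum: float = 0.9  # assumption: not stated in the text
    seed: int = 0          # assumption: hypothetical seed for a repeatable split


def split_indices(n, cfg):
    """Randomized 80:20 train/validation split over range(n)."""
    idx = list(range(n))
    random.Random(cfg.seed).shuffle(idx)
    cut = int(round(n * cfg.train_fraction))
    return idx[:cut], idx[cut:]


def sgdm_step(weights, grads, velocity, cfg):
    """One SGDM update; the L2 term adds cfg.l2 * w to each gradient."""
    new_v = [cfg.momentum * v - cfg.initial_lr * (g + cfg.l2 * w)
             for w, g, v in zip(weights, grads, velocity)]
    new_w = [w + v for w, v in zip(weights, new_v)]
    return new_w, new_v


cfg = TrainConfig()
train_idx, val_idx = split_indices(1000, cfg)  # 1000 is a made-up sample count
w, v = sgdm_step([1.0, -2.0], [0.5, 0.0], [0.0, 0.0], cfg)
```

The second weight in the example has a zero gradient, so its small move towards zero comes from the L2 term alone.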

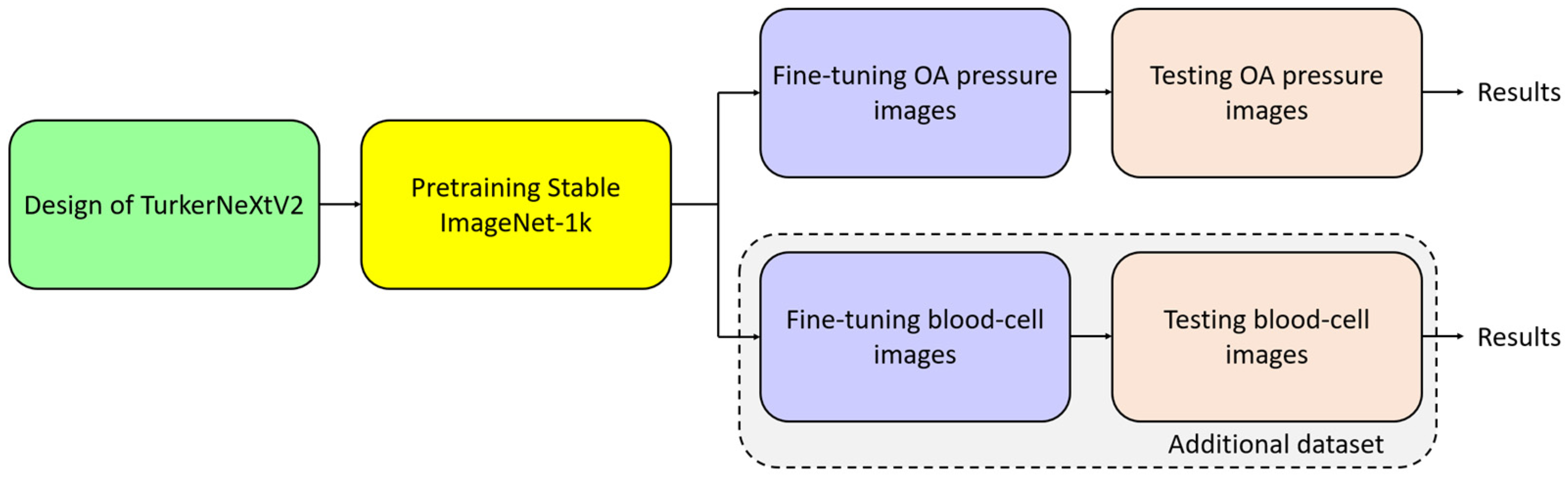

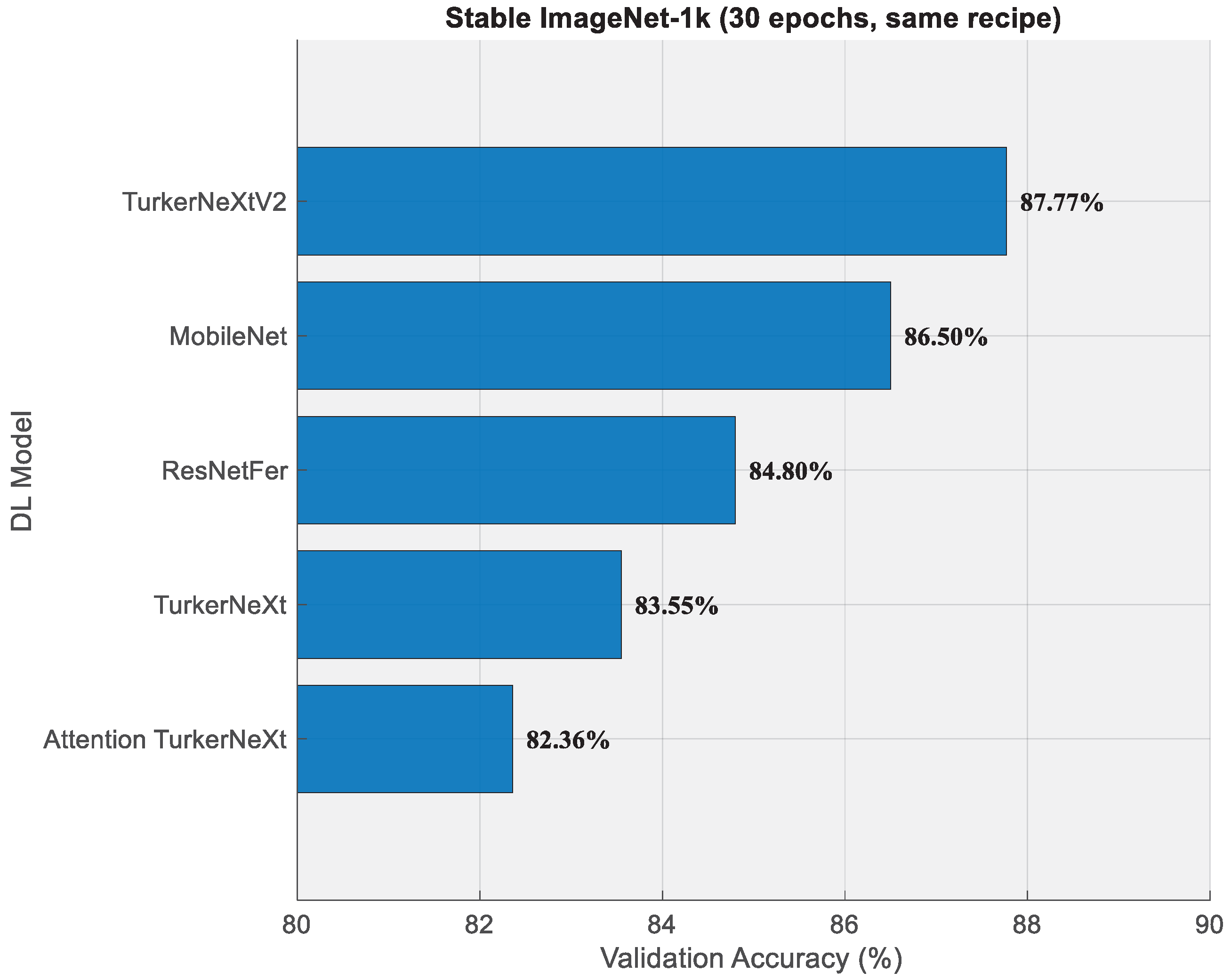

3.3. Pretraining on Stable ImageNet-1k

3.4. Training on Osteoarthritis Dataset

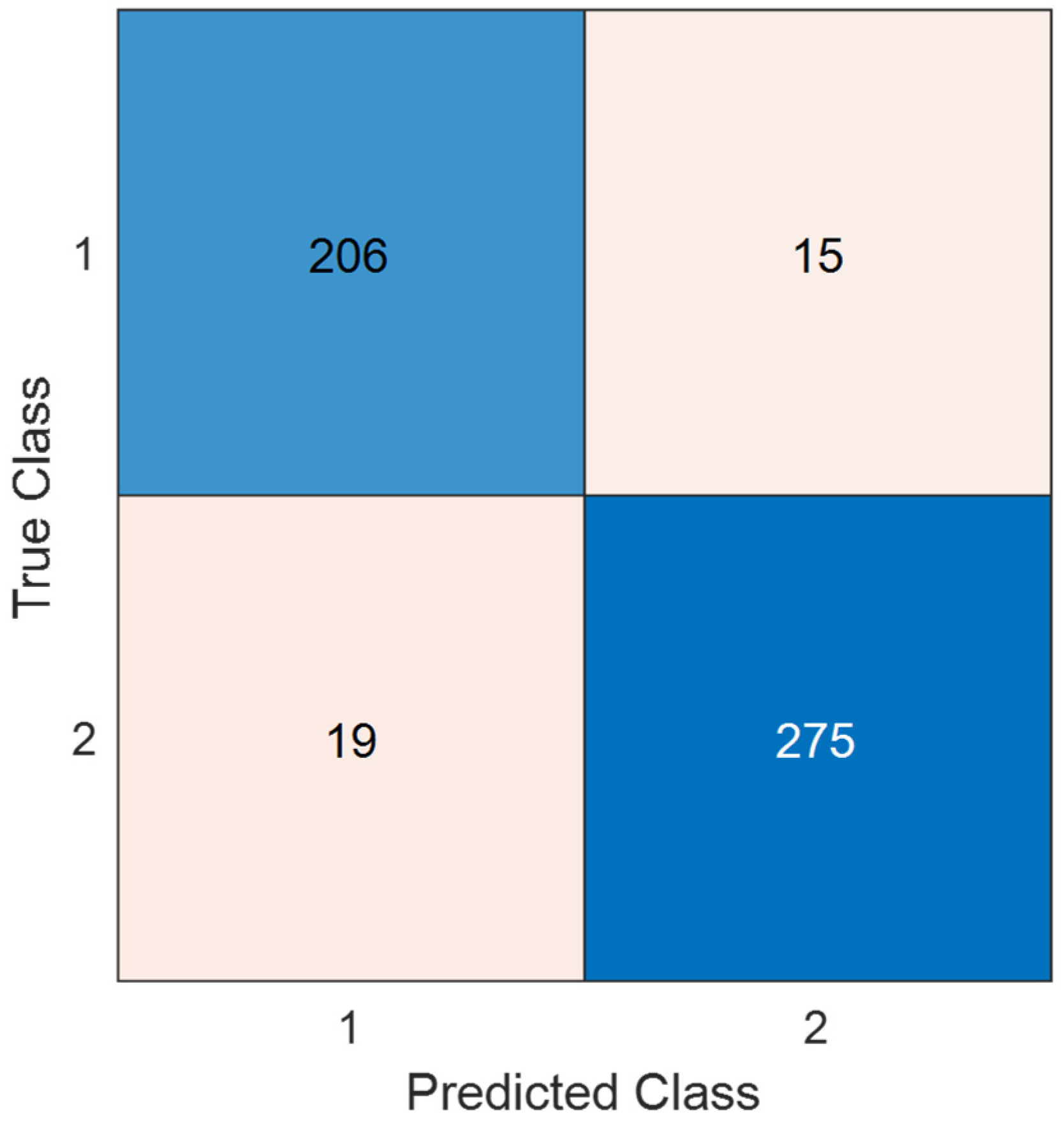

3.5. Test Evaluation

3.6. Computational Efficiency

3.7. Explainability

4. Discussion

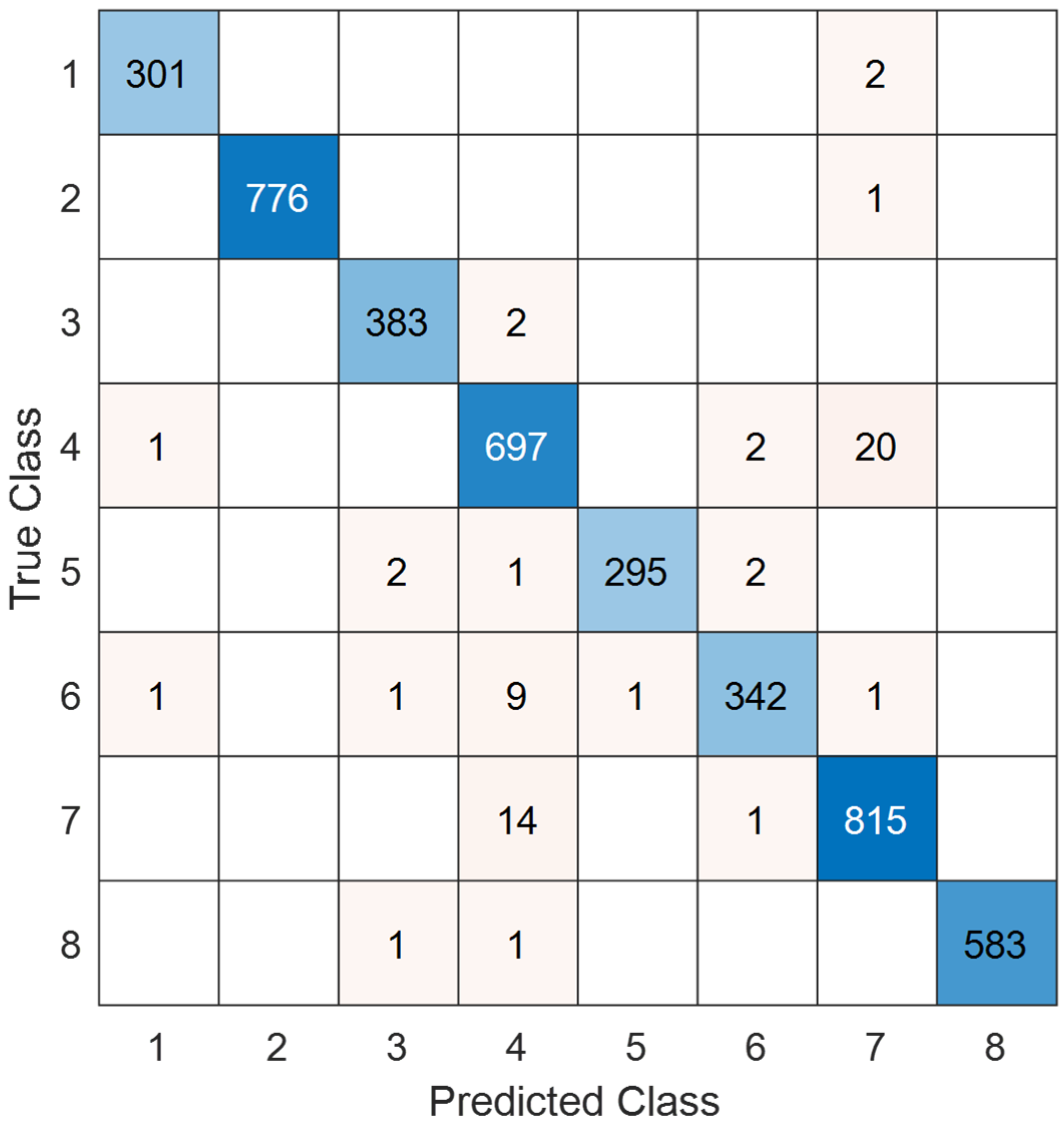

4.1. Test Additional Dataset

4.2. Benchmark

4.3. Key Points

- The presented CNN achieved 93.40% test accuracy on the osteoarthritis pressure-image dataset.

- TurkerNeXtV2 delivered 93.19% precision, 93.38% recall, and 93.28% F1-score on the same dataset.

- Our CNN reached 93.21% sensitivity, 93.54% specificity, and 93.37% geometric mean for osteoarthritis screening.

- TurkerNeXtV2 attained 87.77% validation accuracy on Stable ImageNet-1k after only 30 epochs.

- The proposed architecture maintained a lightweight footprint of 7.1 M parameters for the 1000-class Stable ImageNet-1k task and 6.3 M for the 10-class osteoarthritis task.

- TurkerNeXtV2 scored 98.52% test accuracy on the public blood-cell dataset, demonstrating strong cross-domain performance.

- On the blood-cell dataset, the model achieved 98.74% precision, 98.50% recall, and a 98.62% F1-score.

- TurkerNeXtV2 showed its best precision and recall on class 2 and its lowest on classes 4 and 6 of the blood-cell task.

- Our model produced Grad-CAM heatmaps highlighting medial/lateral knee compartments in osteoarthritis and diffuse patterns in controls.

- TurkerNeXtV2 unifies a ConvNeXt-like stem, pooling-based attention, an inverted bottleneck, and hybrid downsampling in one lightweight CNN.

- TurkerNeXtV2 demonstrated consistently high performance across medical (osteoarthritis and blood-cell) and general (Stable ImageNet-1k) domains.

- A new pressure-image dataset for osteoarthritis detection has been provided.

- Three new-generation blocks were used to build TurkerNeXtV2.

- High test accuracy (93.40%) is achieved on the osteoarthritis task.

- Strong generalization is demonstrated on Stable ImageNet-1k (87.77%) and a blood-cell dataset (98.52%).

- A lightweight architecture (~6–7 M parameters) is maintained.

- Transformer-inspired attention is implemented without self-attention, keeping computational cost low.

- Clinically interpretable Grad-CAM heatmaps are produced automatically.

- Modular blocks (stem, TNV2, downsampling, output) are easily scaled or adapted.

- The model has only been tested on two medical datasets and Stable ImageNet-1k; its robustness across other domains has not yet been examined.

- Our dataset is modest and from a single center, which may limit coverage of inter-subject variability and device bias. We did not use GAN-based data synthesis in this study.

- The TurkerNeXtV2 architecture will be extended to multi-class osteoarthritis grading tasks that distinguish early, moderate, and severe stages.

- We will evaluate generative augmentation (e.g., CycleGAN, StyleGAN, or diffusion models) together with conventional augmentations (mixup, CutMix, RandAugment, color and geometric transforms, normalization/denoising) under label-preserving constraints to improve data quality and model generalizability.

- Lighter and larger variants of TurkerNeXtV2 will be tested on other image datasets.

- We will conduct shared-budget hyperparameter optimization (e.g., Bayesian optimization or multi-fidelity bandits) to improve TurkerNeXtV2’s classification performance across datasets.

- In TurkerNeXtV3, patch embedding and multi-head self-attention (MHSA) blocks are planned to be combined with pooling and convolution blocks.

- Lightweight mobile and web deployment versions will be developed for point-of-care screening devices.

- Federated-learning experiments will be conducted to train the model across multiple hospitals while preserving patient privacy.

- Additional ablation studies will be performed to quantify the individual contributions of the pooling-based attention and hybrid downsampling blocks.

- The model will be benchmarked on larger, publicly available biomedical datasets (e.g., chest X-ray, dermoscopy) to confirm cross-domain generalizability.

- TurkerNeXtV2 is designed to run on existing pressure-plate systems and smart insoles used in gait labs and clinics, to provide real-time, interpretable decision support for medical professionals, and to complement standard imaging (X-ray or MRI) rather than replace it.

- Early OA risk assessment from plantar-pressure data acquired on standard pressure plates or smart insoles in clinics and sports centers; results support clinical judgment and may guide when to order X-ray or MRI.

- TurkerNeXtV2 can analyze pressure trials in real time, report asymmetry and load indices, and send summaries to hospital systems for clinician review.

- Remote and longitudinal monitoring with smart insoles or mats at home; data are uploaded securely for follow-up, rehabilitation tracking, and flare-up alerts set by clinicians.

- Pressure-based risk flags can be combined with other modalities in clinic.

- A large vision model (LVM) can be developed using a larger version of TurkerNeXtV2.
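As an arithmetic check on the metrics listed at the start of this section, the sketch below recomputes the derived scores from the reported percentages using the standard definitions. The function names are hypothetical. Treating the osteoarthritis task as two-class for the macro-recall line is an assumption, and the original confusion matrices are not used.

```python
import math


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def geometric_mean(sensitivity, specificity):
    return math.sqrt(sensitivity * specificity)


def macro_recall_binary(sensitivity, specificity):
    # In a two-class problem the per-class recalls are sensitivity and specificity.
    return (sensitivity + specificity) / 2


# Percentages reported for the osteoarthritis test set.
oa_f1 = f1_score(93.19, 93.38)
oa_gmean = geometric_mean(93.21, 93.54)
oa_macro_recall = macro_recall_binary(93.21, 93.54)

# Percentages reported for the blood-cell dataset.
blood_f1 = f1_score(98.74, 98.50)

print(f"OA: F1 {oa_f1:.2f}, G-mean {oa_gmean:.2f}, macro recall {oa_macro_recall:.3f}")
print(f"Blood cell: F1 {blood_f1:.2f}")
```

The recomputed F1-scores (93.28 and 98.62) and geometric mean (93.37) agree with the reported values. The mean of sensitivity and specificity is 93.375, which is consistent with the reported 93.38% recall if that figure is a macro average over two classes.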

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Clinical, imaging & study terms | |

| AP | Anteroposterior (X-ray view) |

| B-scan | Cross-sectional OCT scan |

| BMI | Body Mass Index |

| JSW | Joint Space Width |

| KL | Kellgren–Lawrence grading |

| KOA | Knee Osteoarthritis |

| Lat | Lateral (X-ray view) |

| mo | Months (time horizon) |

| MRI | Magnetic Resonance Imaging |

| MSK | Musculoskeletal (radiologists) |

| OA | Osteoarthritis |

| OCT | Optical Coherence Tomography |

| SAG-IW-TSE-FS | Sagittal Intermediate-Weighted Turbo Spin-Echo with Fat Saturation (MRI sequence) |

| SD | Standard Deviation |

| 3D-DESS | Three-Dimensional Double-Echo Steady-State (MRI sequence) |

| Datasets & initiatives | |

| OAI | Osteoarthritis Initiative |

| MOST | Multicenter Osteoarthritis Study |

| FNIH | Foundation for the National Institutes of Health (cohort/consortium context) |

| PROGRESS OA | FNIH Biomarkers Consortium PROGRESS OA study |

| Biomarkers & wearable/gait systems | |

| uCTX-II/sCTX-II | Urinary/Serum C-terminal telopeptide of type II collagen |

| COMP | Cartilage Oligomeric Matrix Protein |

| PIIANP | Procollagen II N-terminal propeptide |

| T2, T1ρ, dGEMRIC | Quantitative MRI markers |

| IMU | Inertial Measurement Unit (wearable) |

| GAITRite | Instrumented walkway system (brand) |

| Methods, architectures & components | |

| ANN | Artificial Neural Network |

| BERT | Bidirectional Encoder Representations from Transformers |

| CNN | Convolutional Neural Network |

| CLAHE | Contrast-Limited Adaptive Histogram Equalization |

| ConvNeXt | ConvNeXt architecture (patchify stem) |

| DC | Depth Concatenation |

| DL | Deep Learning |

| ECA | Efficient Channel Attention |

| EfficientNet | EfficientNet CNN family |

| FC | Fully Connected layer |

| Faster R-CNN | Region-based CNN detector |

| GAP | Global Average Pooling |

| GELU | Gaussian Error Linear Unit (activation) |

| Grad-CAM | Gradient-weighted Class Activation Mapping |

| GUI | Graphical User Interface |

| LDA | Linear Discriminant Analysis |

| ML | Machine Learning |

| MLP | Multi-Layer Perceptron |

| NfNet | Normalizer-Free Network |

| PoolFormer | PoolFormer architecture |

| ResNet | Residual Network |

| ROI | Region of Interest |

| SVM | Support Vector Machine |

| TL | Transfer Learning |

| Transformer (ViT, Swin) | Vision Transformer/Swin Transformer |

| TNV2 | TurkerNeXtV2 block |

| U-Net | U-Net segmentation network |

| XAI | Explainable Artificial Intelligence |

| Xception | Xception CNN (pretrained) |

| YOLO | “You Only Look Once” object detector |

| Evaluation metrics | |

| Acc | Accuracy |

| AUC | Area Under the (ROC) Curve |

| F1/F1-score | F1 measure |

| κ (Kappa) | Cohen’s Kappa agreement |

| Sens | Sensitivity (Recall) |

| Spec | Specificity |

| Balanced Acc | Balanced Accuracy |

| G-mean | Geometric Mean |

| Val | Validation (set/score) |

| Training & compute | |

| SGDM | Stochastic Gradient Descent with Momentum |

| LR | Learning Rate |

| L2 | L2 regularization (weight decay) |

| PC | Personal Computer |

| GPU | Graphics Processing Unit |

| RTX | NVIDIA RTX (graphics card series) |

| FLOPs | Floating-Point Operations |

| FP32/FP16/INT8 | 32-bit/16-bit floating-point; 8-bit integer (quantization) |

| RAM | Random Access Memory |

References

- Parkinson, L.; Waters, D.L.; Franck, L. Systematic review of the impact of osteoarthritis on health outcomes for comorbid disease in older people. Osteoarthr. Cartil. 2017, 25, 1751–1770.

- Kolasinski, S.L.; Neogi, T.; Hochberg, M.C.; Oatis, C.; Guyatt, G.; Block, J.; Callahan, L.; Copenhaver, C.; Dodge, C.; Felson, D. 2019 American College of Rheumatology/Arthritis Foundation guideline for the management of osteoarthritis of the hand, hip, and knee. Arthritis Rheumatol. 2020, 72, 220–233.

- Allen, K.D.; Thoma, L.M.; Golightly, Y.M. Epidemiology of osteoarthritis. Osteoarthr. Cartil. 2022, 30, 184–195.

- Webster, K.E.; Wittwer, J.E.; Feller, J.A. Validity of the GAITRite® walkway system for the measurement of averaged and individual step parameters of gait. Gait Posture 2005, 22, 317–321.

- Cheng, H.; Hao, B.; Sun, J.; Yin, M. C-terminal cross-linked telopeptides of type II collagen as biomarker for radiological knee osteoarthritis: A meta-analysis. Cartilage 2020, 11, 512–520.

- Hunter, D.J.; Collins, J.E.; Deveza, L.; Hoffmann, S.C.; Kraus, V.B. Biomarkers in osteoarthritis: Current status and outlook—The FNIH Biomarkers Consortium PROGRESS OA study. Skelet. Radiol. 2023, 52, 2323–2339.

- Li, G.; Li, S.; Xie, J.; Zhang, Z.; Zou, J.; Yang, C.; He, L.; Zeng, Q.; Shu, L.; Huang, G. Identifying changes in dynamic plantar pressure associated with radiological knee osteoarthritis based on machine learning and wearable devices. J. Neuroeng. Rehabil. 2024, 21, 45.

- Silverwood, V.; Blagojevic-Bucknall, M.; Jinks, C.; Jordan, J.L.; Protheroe, J.; Jordan, K.P. Current evidence on risk factors for knee osteoarthritis in older adults: A systematic review and meta-analysis. Osteoarthr. Cartil. 2015, 23, 507–515.

- Fan, X.; Xu, H.; Prasadam, I.; Sun, A.R.; Wu, X.; Crawford, R.; Wang, Y.; Mao, X. Spatiotemporal dynamics of osteoarthritis: Bridging insights from bench to bedside. Aging Dis. 2024, 16, 1–35.

- Dai, Y.; Gao, Y.; Liu, F. Transmed: Transformers advance multi-modal medical image classification. Diagnostics 2021, 11, 1384.

- Khan, A.; Rauf, Z.; Sohail, A.; Khan, A.R.; Asif, H.; Asif, A.; Farooq, U. A survey of the vision transformers and their CNN-transformer based variants. Artif. Intell. Rev. 2023, 56, 2917–2970.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Acevedo, A.; Alférez, S.; Merino, A.; Puigví, L.; Rodellar, J. Recognition of peripheral blood cell images using convolutional neural networks. Comput. Methods Programs Biomed. 2019, 180, 105020.

- Acevedo, A.; Merino, A.; Alférez, S.; Molina, Á.; Boldú, L.; Rodellar, J. A dataset of microscopic peripheral blood cell images for development of automatic recognition systems. Data Brief 2020, 30, 105474.

- Sekhri, A.; Kerkouri, M.A.; Chetouani, A.; Tliba, M.; Nasser, Y.; Jennane, R.; Bruno, A. Automatic diagnosis of knee osteoarthritis severity using swin transformer. In Proceedings of the 20th International Conference on Content-Based Multimedia Indexing, Orleans, France, 20–22 September 2023; pp. 41–47.

- Sekhri, A.; Tliba, M.; Kerkouri, M.A.; Nasser, Y.; Chetouani, A.; Bruno, A.; Jennane, R. Shifting Focus: From Global Semantics to Local Prominent Features in Swin-Transformer for Knee Osteoarthritis Severity Assessment. In Proceedings of the 2024 32nd European Signal Processing Conference (EUSIPCO), Lyon, France, 26–30 August 2024; pp. 1686–1690.

- Bote-Curiel, L.; Munoz-Romero, S.; Gerrero-Curieses, A.; Rojo-Álvarez, J.L. Deep learning and big data in healthcare: A double review for critical beginners. Appl. Sci. 2019, 9, 2331.

- Dindorf, C.; Dully, J.; Simon, S.; Perchthaler, D.; Becker, S.; Ehmann, H.; Diers, C.; Garth, C.; Fröhlich, M. Toward automated plantar pressure analysis: Machine learning-based segmentation and key point detection across multicenter data. Front. Bioeng. Biotechnol. 2025, 13, 1579072.

- Ramadhan, G.T.; Haris, F.; Pusparani, Y.; Rum, M.R.; Lung, C.-W. Classification of plantar pressure based on walking duration and shoe pressure using a convolutional neural network. AIP Conf. Proc. 2024, 3155, 040001.

- Dowling, A.M.; Steele, J.R.; Baur, L.A. Does obesity influence foot structure and plantar pressure patterns in prepubescent children? Int. J. Obes. 2001, 25, 845–852.

- Park, S.-Y.; Park, D.-J. Comparison of foot structure, function, plantar pressure and balance ability according to the body mass index of young adults. Osong Public Health Res. Perspect. 2019, 10, 102.

- Diamond, L.E.; Grant, T.; Uhlrich, S.D. Osteoarthritis year in review 2023: Biomechanics. Osteoarthr. Cartil. 2024, 32, 138–147.

- Zhou, H.; Gui, Y.; Gu, G.; Ren, H.; Zhang, W.; Du, Z.; Cheng, G. A plantar pressure detection and gait analysis system based on flexible triboelectric pressure sensor array and deep learning. Small 2025, 21, 2405064.

- Turner, A.; Scott, D.; Hayes, S. The classification of multiple interacting gait abnormalities using insole sensors and machine learning. In Proceedings of the 2022 IEEE International Conference on Digital Health (ICDH), Barcelona, Spain, 10–16 July 2022; pp. 69–76.

- Oei, E.H.G.; Runhaar, J. Imaging of early-stage osteoarthritis: The needs and challenges for diagnosis and classification. Skelet. Radiol. 2023, 52, 2031–2036.

- Liao, Q.-M.; Hussain, W.; Liao, Z.-X.; Hussain, S.; Jiang, Z.-L.; Zhu, Y.-H.; Luo, H.-Y.; Ji, X.-Y.; Wen, H.-W.; Wu, D.-D. Computer-Aided Application in Medicine and Biomedicine. Int. J. Comput. Intell. Syst. 2025, 18, 221.

- Doshi, R.V.; Badhiye, S.S.; Pinjarkar, L. Deep Learning Approach for Biomedical Image Classification. J. Imaging Inform. Med. 2025, 1–30.

- Tolstikhin, I.O.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J. Mlp-mixer: An all-mlp architecture for vision. Adv. Neural Inf. Process. Syst. 2021, 34, 24261–24272.

- Tiulpin, A.; Thevenot, J.; Rahtu, E.; Lehenkari, P.; Saarakkala, S. Automatic knee osteoarthritis diagnosis from plain radiographs: A deep learning-based approach. Sci. Rep. 2018, 8, 1727.

- Guan, B.; Liu, F.; Mizaian, A.H.; Demehri, S.; Samsonov, A.; Guermazi, A.; Kijowski, R. Deep learning approach to predict pain progression in knee osteoarthritis. Skelet. Radiol. 2022, 51, 363–373.

- Abdullah, S.S.; Rajasekaran, M.P. Automatic detection and classification of knee osteoarthritis using deep learning approach. Radiol. Medica 2022, 127, 398–406.

- Wang, Y.; Wang, X.; Gao, T.; Du, L.; Liu, W. An automatic knee osteoarthritis diagnosis method based on deep learning: Data from the osteoarthritis initiative. J. Healthc. Eng. 2021, 2021, 5586529.

- Hu, J.; Zheng, C.; Yu, Q.; Zhong, L.; Yu, K.; Chen, Y.; Wang, Z.; Zhang, B.; Dou, Q.; Zhang, X. DeepKOA: A deep-learning model for predicting progression in knee osteoarthritis using multimodal magnetic resonance images from the osteoarthritis initiative. Quant. Imaging Med. Surg. 2023, 13, 4852.

- Rani, S.; Memoria, M.; Almogren, A.; Bharany, S.; Joshi, K.; Altameem, A.; Rehman, A.U.; Hamam, H. Deep learning to combat knee osteoarthritis and severity assessment by using CNN-based classification. BMC Musculoskelet. Disord. 2024, 25, 817.

- Ahmed, R.; Imran, A.S. Knee osteoarthritis analysis using deep learning and XAI on X-rays. IEEE Access 2024, 12, 68870–68879.

- Touahema, S.; Zaimi, I.; Zrira, N.; Ngote, M.N.; Doulhousne, H.; Aouial, M. MedKnee: A new deep learning-based software for automated prediction of radiographic knee osteoarthritis. Diagnostics 2024, 14, 993.

- Lee, D.W.; Song, D.S.; Han, H.-S.; Ro, D.H. Accurate, automated classification of radiographic knee osteoarthritis severity using a novel method of deep learning: Plug-in modules. Knee Surg. Relat. Res. 2024, 36, 24.

- Abdullah, S.S.; Rajasekaran, M.P.; Hossen, M.J.; Wong, W.K.; Ng, P.K. Deep learning based classification of tibio-femoral knee osteoarthritis from lateral view knee joint X-ray images. Sci. Rep. 2025, 15, 21305.

- Vaattovaara, E.; Panfilov, E.; Tiulpin, A.; Niinimäki, T.; Niinimäki, J.; Saarakkala, S.; Nevalainen, M.T. Kellgren-Lawrence grading of knee osteoarthritis using deep learning: Diagnostic performance with external dataset and comparison with four readers. Osteoarthr. Cartil. Open 2025, 7, 100580.

- Yayli, S.B.; Kılıç, K.; Beyaz, S. Deep learning in gonarthrosis classification: A comparative study of model architectures and single vs. multi-model methods. Front. Artif. Intell. 2025, 8, 1413820.

- Bai, X.; Hou, X.; Song, Y.; Tang, Z.; Huo, H.; Liu, J. Plantar pressure classification and feature extraction based on multiple fusion algorithms. Sci. Rep. 2025, 15, 13274.

- Ma’aitah, M.K.S.; Helwan, A.; Radwan, A.; Mohammad Salem Manasreh, A.; Alshareef, E.A. Multimodal model for knee osteoarthritis KL grading from plain radiograph. J. X-Ray Sci. Technol. 2025, 33, 608–620.

- Abdusalomov, A.; Mirzakhalilov, S.; Umirzakova, S.; Ismailov, O.; Sultanov, D.; Nasimov, R.; Cho, Y.I. Lightweight early detection of knee osteoarthritis in athletes. Sci. Rep. 2025, 15, 31413.

- Tuncer, T.; Dogan, S.; Kobat, M.A. TurkerNeXt: A Deep Learning Approach for Automated Heart Failure Diagnosis Using X-Ray Images. E J. Cardiovasc. Med. 2023, 11, 333–334.

- Arslan, S.; Kaya, M.K.; Tasci, B.; Kaya, S.; Tasci, G.; Ozsoy, F.; Dogan, S.; Tuncer, T. Attention turkernext: Investigations into bipolar disorder detection using oct images. Diagnostics 2023, 13, 3422.

- Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Feng, J.; Yan, S. Metaformer is actually what you need for vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10819–10829.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

- Mummolo, C.; Mangialardi, L.; Kim, J.H. Quantifying dynamic characteristics of human walking for comprehensive gait cycle. J. Biomech. Eng. 2013, 135, 091006.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Powers, D.M.W. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

- Boyd, K.; Eng, K.H.; Page, C.D. Area under the precision-recall curve: Point estimates and confidence intervals. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Prague, Czech Republic, 23–27 September 2013; pp. 451–466.

- Chen, S.; Lu, S.; Wang, S.; Ni, Y.; Zhang, Y. Shifted window vision transformer for blood cell classification. Electronics 2023, 12, 2442.

- Üzen, H.; Fırat, H. A hybrid approach based on multipath Swin transformer and ConvMixer for white blood cells classification. Health Inf. Sci. Syst. 2024, 12, 33.

- Nammmmmm. ResNetFER. Available online: https://www.kaggle.com/code/nammmmmm/resnetfer (accessed on 11 September 2025).

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Kinakh, V. Stable ImageNet-1K. Available online: https://www.kaggle.com/datasets/vitaliykinakh/stable-imagenet1k/code (accessed on 31 July 2025).

- Dwivedi, K.; Dutta, M.K. Microcell-Net: A deep neural network for multi-class classification of microscopic blood cell images. Expert Syst. 2023, 40, e13295.

- Ammar, M.; Daho, M.E.H.; Harrar, K.; Laidi, A. Feature Extraction using CNN for Peripheral Blood Cells Recognition. EAI Endorsed Trans. Scalable Inf. Syst. 2022, 9, e12.

- Erten, M.; Barua, P.D.; Dogan, S.; Tuncer, T.; Tan, R.-S.; Acharya, U.R. ConcatNeXt: An automated blood cell classification with a new deep convolutional neural network. Multimed. Tools Appl. 2024, 84, 22231–22249.

- Mondal, S.K.; Talukder, M.S.H.; Aljaidi, M.; Sulaiman, R.B.; Tushar, M.M.S.; Alsuwaylimi, A.A. BloodCell-Net: A lightweight convolutional neural network for the classification of all microscopic blood cell images of the human body. arXiv 2024, arXiv:2405.14875.

- Rastogi, P.; Khanna, K.; Singh, V. LeuFeatx: Deep learning–based feature extractor for the diagnosis of acute leukemia from microscopic images of peripheral blood smear. Comput. Biol. Med. 2022, 142, 105236.

- Nisa, N.; Khan, A.S.; Ahmad, Z.; Abdullah, J. TPAAD: Two-phase authentication system for denial of service attack detection and mitigation using machine learning in software-defined network. Int. J. Netw. Manag. 2024, 34, e2258.

- Bilal, O.; Asif, S.; Zhao, M.; Li, Y.; Tang, F.; Zhu, Y. Differential evolution optimization based ensemble framework for accurate cervical cancer diagnosis. Appl. Soft Comput. 2024, 167, 112366.

- Manzari, O.N.; Ahmadabadi, H.; Kashiani, H.; Shokouhi, S.B.; Ayatollahi, A. MedViT: A robust vision transformer for generalized medical image classification. Comput. Biol. Med. 2023, 157, 106791.

- Miraç KÖKÇAm, Ö.; UÇAr, F. BiT-HyMLPKANClassifier: A Hybrid Deep Learning Framework for Human Peripheral Blood Cell Classification Using Big Transfer Models and Kolmogorov–Arnold Networks. Adv. Intell. Syst. 2025, 2500387.

| Study (Year) | Data/Modality | Model (Type) | Task | Performance (Metric) | Validation | Pitfalls/Limitations |

|---|---|---|---|---|---|---|

| Tiulpin et al., 2018 [30] | MOST & OAI X-rays (~24k images) | Deep Siamese CNN ensemble | KL 0–4 grading | Acc 66.71%, AUC 0.93, κ 0.83 | Yes (cross-dataset) | Early grades remain hard; dataset-specific biases. |

| Guan et al., 2022 [31] | OAI X-rays (6567 knees) + clinical | YOLO ROI + EfficientNet + ANN (fusion) | Pain progression (48 mo) | Combined AUC 0.807, Sens 72.3%, Spec 80.9% | Hold-out | Predicts pain (not structure); label noise risk. |

| Abdullah & Rajasekaran, 2022 [32] | Madurai X-rays (3172 images) | Faster-R-CNN (ROI) + ResNet-50 + AlexNet | ROI detect. + KL grading | ROI 98.52%, OA cls 98.90% | Hold-out | Potential leakage if not patient-wise; local dataset. |

| Wang et al., 2021 [33] | OAI X-rays (4.5k samples) | YOLO + Visual Transformer + ResNet50 | Knee detection + KL grading | Detect 95.57%, KL acc 69.18% | Hold-out | Limited external test; moderate KL accuracy |

| Hu et al., 2023 [34] | MRI, FNIH cohort (n = 364; SAG-IW-TSE-FS, 3D-DESS) | 3D DenseNet169 | KOA progression (24–48 mo) | AUC 0.664 → 0.739 → 0.775 (baseline/12 mo/24 mo) | Multi-timepoint | Small cohort; MRI cost; heavy model. |

| Rani et al., 2024 [35] | OAI X-rays | 12-layer CNN | Binary KOA + KL severity | Binary acc 92.3%, Multiclass acc 78.4%, F1 96.5% | Hold-out | Class imbalance; limited external test. |

| Ahmed & Imran, 2024 [36] | “Knee OA Severity Grading” X-rays (~8260; KL 0–4) | Fine-tuned CNNs (VGG/ResNet/EfficientNet-b7) + Grad-CAM | Binary & multi-class | Binary 99.13%, Multi-class 67% | Hold-out | Multi-class still weak; dataset imbalance. |

| Touahema et al., 2024 [37] | OAI (5k) + Medical Expert I/II + local | Pre-trained Xception (TL); GUI tool | KL grading/severity | Val 99.39%, Test 97.20%, External ≈ 95% | Some external sets | Possible overlap; unclear patient-wise split; very high scores need replication. |

| Lee et al., 2024 [38] | OAI → MOST (independent test 17,040 images) | Plug-in modules compatible with CNN/Transformer | KL 0–4 grading | Per-grade acc: KL1 43%, KL4 96% | Yes (OAI→MOST) | KL-1 hard; added complexity. |

| Sekhri et al., 2023 [16] | OAI (+public sets) X-rays | Swin Transformer variants | KL 0–4 grading | Acc ~70%, F1 ~0.67 (reported) | Partial | In many cases; details/splits vary. |

| Abdullah et al., 2025 [39] | 4334 digital knee X-rays (AP and lateral views) | Faster R-CNN for JSW localization + Fine-tuned DenseNet-201 for KL grading | Localization of tibio-femoral joint space (JSW) + KL classification (1–4) from AP & Lat views | Localization acc: AP 98.74%, Lat 96.12% KL classification acc: AP 98.51%, Lat 92.42% Kappa: AP 0.98, Lat 0.91 | Hold-out | Lateral view underperforms due to overlapping anatomy Longer training times due to multi-stage pipeline |

| Vaattovaara et al., 2025 [40] | 208 AP knee X-rays (external test set) Train: MOST Val: OAI | Deep Siamese CNN + Hourglass knee localization | KL grading (0–4) multi-class & binary (0–1 vs. 2–4) | Multi-class AUC: 0.886 Balanced Acc: 69.3% Kappa: 0.820 Binary AUC: 0.967 Balanced Acc: 90.2% | External test set (n = 208), comparison with 4 readers | KL1 sensitivity low (37.2%) Grade imbalance DL underperforms vs. expert MSK radiologists |

| Yayli et al., 2025 [41] | Knee X-ray (AP), 14,607 images, 3 hospitals | Single-model CNNs (EfficientNet, NfNet, etc.) vs. multi-model pipeline | KL staging | Best single-model: F1 = 0.763, Acc = 0.767; multi-model lower (F1 = 0.736, Acc = 0.740) | Hold-out | CLAHE often hurt performance; class imbalance |

| Bai et al., 2025 [42] | Plantar pressure (RSscan plate), n = 243 healthy males | PCA + optimized K-means + LDA (classical ML) | Classifying plantar pressure distribution types | Acc (original) 89.70%; cross-validated Acc 88.50% (3 classes) | 10-fold CV | Healthy young males only; not disease labels; ML pipeline depends on hand-crafted indices |

| Ma’aitah et al., 2025 [43] | OAI knee X-rays + text | Multimodal Transformer (ViT for image + BERT for text) | KL grading (multimodal) | Overall Acc = 82.85%; Precision = 84.54%; Recall = 82.89 | Hold-out | Evaluation only on OAI; dependency on text description |

| Abdusalomov et al., 2025 [44] | “KOA Severity Grading” X-ray dataset; athletes focus | Lightweight CNN (EfficientNet-B0 + ECA | Early-stage vs. healthy (binary) | Accuracy ≈ 92% (range 91.5–92% in the report) | Hold-out | Binary task; KL 0–4 generalizability to full rating uncertain |

| Tuncer et al., 2023 [45] | Chest X-ray; 4 class: heart failure, Coah, COVID-19, healthy | Lightweight CNN (≈5.7 M parameters); attention + ConvNeXt block fusion; additionally, TurkerNeXt-based deep feature extraction + SVM classifier | Multi-class disease classification (4-class) | Training accuracy 100%; validation accuracy 90.19%; TurkerNeXt-based deep feature engineering model test accuracy 97.36% | Hold-out | Limited data/cohort details |

| Arslan et al., 2023 [46] | OCT retina; top-to-bottom/left-to-right B-scan cases and merged | Attention CNN (~1.6 M parameters); patchify stem + Attention TurkerNeXt blocks; ConvNeXt/Swin/MLP/ResNet-inspired architecture | Binary classification: bipolar disorder vs. healthy | Validation and testing in all cases = 100% (accuracy, sensitivity, specificity, precision, F1, G-mean) | Hold-out | The need for larger and more diverse OCT datasets |

| Variable | Healthy Group (KL 0) | OA Group (KL 4) |
|---|---|---|
| Age (years) (Mean ± SD) | 56.42 ± 6.21 | 65.27 ± 3.53 |
| Sex | Female: 51 (56.7%)/Male: 39 (43.3%) | Female: 42 (77.8%)/Male: 12 (22.2%) |
| Height (cm) (Mean ± SD) | 166.6 ± 7.24 | 159.1 ± 4.77 |
| BMI (kg/m²) (Mean ± SD) | 23.9 ± 2.87 | 26.5 ± 5.10 |

| No | Class | Training (Images) | Test (Images) | Total (Images) |
|---|---|---|---|---|
| 1 | Osteoarthritis | 888 | 221 | 1109 |
| 2 | Control | 1180 | 294 | 1474 |
| | Total | 2068 | 515 | 2583 |

| Stage | Input | Operation | Output |
|---|---|---|---|
| Stem | 224 × 224 × 3 | | 56 × 56 × 96 |
| TNV2 1 | 56 × 56 × 96 | | 56 × 56 × 96 |
| Downsampling 1 | 56 × 56 × 96 | | 28 × 28 × 192 |
| TNV2 2 | 28 × 28 × 192 | | 28 × 28 × 192 |
| Downsampling 2 | 28 × 28 × 192 | | 14 × 14 × 384 |
| TNV2 3 | 14 × 14 × 384 | | 14 × 14 × 384 |
| Downsampling 3 | 14 × 14 × 384 | | 7 × 7 × 768 |
| TNV2 4 | 7 × 7 × 768 | | 7 × 7 × 768 |
| Output size | 7 × 7 × 768 | GAP, FC, Softmax | Number of classes |
| Total learnable parameters | For 10 classes: ~6.3 million; for 10,000 classes: ~7.1 million | | |
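
The stage-by-stage sizes in the table above follow a regular pattern, which the short Python sketch below reproduces. It is only an illustration under stated assumptions: the function name `trace_shapes` and its parameters are hypothetical, the stem is assumed to reduce the resolution by a factor of 4 (224 to 56), and each downsampling block is assumed to halve the spatial size while doubling the channel count. It does not implement the layers themselves.

```python
# Hypothetical helper: traces the feature-map sizes listed in the architecture table.
# Assumptions (inferred from the table, not from the authors' code):
#   - the stem reduces 224 x 224 to 56 x 56 (overall stride 4) with 96 channels
#   - every downsampling block halves height/width and doubles the channels
#   - TNV2 blocks leave the shape unchanged


def trace_shapes(input_size=224, stem_stride=4, stem_channels=96, num_stages=4):
    """Return a list of (stage name, (height, width, channels)) tuples."""
    side = input_size // stem_stride
    channels = stem_channels
    shapes = [("Stem", (side, side, channels))]
    for stage in range(1, num_stages + 1):
        # Feature-extraction stage: shape is preserved.
        shapes.append((f"TNV2 {stage}", (side, side, channels)))
        if stage < num_stages:
            # Downsampling between stages: half the resolution, twice the width.
            side //= 2
            channels *= 2
            shapes.append((f"Downsampling {stage}", (side, side, channels)))
    return shapes


if __name__ == "__main__":
    for name, (h, w, c) in trace_shapes():
        print(f"{name:15s} -> {h} x {w} x {c}")
```

Running the script lists one line per stage, ending with the 7 × 7 × 768 map that feeds the GAP, FC, and softmax head.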

| Metric | Value |
|---|---|
| Training Loss | 0.0331 |
| Training Accuracy | 100% |
| Validation Loss | 0.4647 |
| Validation Accuracy | 87.77% |

| Metric | Value |
|---|---|
| Training Loss | 1.52 × 10⁻⁶ |
| Training Accuracy | 100% |
| Validation Loss | 0.3168 |
| Validation Accuracy | 93.72% |

| Performance Assessment Metrics | Class | Results (%) |
|---|---|---|
| Accuracy | Overall | 93.40 |
| Precision | Osteoarthritis | 91.56 |
| | Control | 94.83 |
| | Overall | 93.19 |
| Recall | Osteoarthritis | 93.21 |
| | Control | 93.54 |
| | Overall | 93.38 |
| F1-score | Osteoarthritis | 92.38 |
| | Control | 94.18 |
| | Overall | 93.28 |
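
As a worked check of how the figures in this table relate to one another, the sketch below recomputes precision, recall, F1-score, and accuracy from per-class counts. The counts are an assumption: they were back-calculated so that they match the reported percentages and the 221/294 test split, and they are not taken from a published confusion matrix. The helper names (`per_class_metrics`, `pct`) are hypothetical. Under these assumed counts, the "Overall" rows correspond to the unweighted (macro) mean of the two classes.

```python
# Assumed confusion counts (back-calculated, NOT reported in the source):
# 221 osteoarthritis and 294 control test images.
counts = {
    "Osteoarthritis": {"tp": 206, "fn": 15, "fp": 19},
    "Control": {"tp": 275, "fn": 19, "fp": 15},
}


def per_class_metrics(tp, fn, fp):
    """Return (precision, recall, F1) as fractions for one class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def pct(value):
    """Convert a fraction to a percentage rounded to two decimals."""
    return round(100 * value, 2)


results = {name: per_class_metrics(**c) for name, c in counts.items()}

# Accuracy: correctly classified images over all test images.
total_images = sum(c["tp"] + c["fn"] for c in counts.values())
accuracy = sum(c["tp"] for c in counts.values()) / total_images

# Macro averages: unweighted mean over the two classes.
macro = [sum(m[i] for m in results.values()) / len(results) for i in range(3)]

if __name__ == "__main__":
    print("Accuracy:", pct(accuracy))
    for name, (p, r, f1) in results.items():
        print(name, pct(p), pct(r), pct(f1))
    print("Overall (macro):", [pct(v) for v in macro])
```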

| Model | Avg. Time (s) | Images/s | Relative to TurkerNeXtV2 |
|---|---|---|---|
| TurkerNeXtV2 (ours) | 0.0078 | 128.81 | – |
| ResNet50 | 0.0078 | 128.38 | ≈ Same (−0.3%) |
| GoogLeNet | 0.0070 | 141.94 | Faster (+10.2%) |
| MobileNetV2 | 0.0095 | 105.64 | Slower (−18.0%) |
| DenseNet201 | 0.0422 | 23.71 | Much slower |
| EfficientNetB0 | 0.0349 | 28.62 | Much slower |
| NasNetMobile | 0.0521 | 19.21 | Much slower |
| InceptionV3 | 0.0138 | 72.32 | Slower (−43.9%) |
| InceptionResNetV2 | 0.0429 | 23.29 | Much slower |
| DarkNet53 | 0.0123 | 81.04 | Slower (−37.1%) |
| Vision Transformer (ViT) | 0.0217 | 46.12 | Much slower |
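
The percentages in the last column can be reproduced from the Images/s column. The sketch below assumes the relative figure is the throughput change with respect to TurkerNeXtV2, that is, (model throughput / baseline throughput − 1) × 100; this reading is inferred from the numbers, and the function name `relative_change` is hypothetical.

```python
# Throughput values (images per second) copied from the table above.
throughput = {
    "TurkerNeXtV2": 128.81,
    "ResNet50": 128.38,
    "GoogLeNet": 141.94,
    "MobileNetV2": 105.64,
    "InceptionV3": 72.32,
    "DarkNet53": 81.04,
}


def relative_change(images_per_s, baseline):
    """Percentage change in throughput versus the baseline, one decimal place."""
    return round(100 * (images_per_s / baseline - 1), 1)


baseline = throughput["TurkerNeXtV2"]
relative = {
    name: relative_change(value, baseline)
    for name, value in throughput.items()
    if name != "TurkerNeXtV2"
}

if __name__ == "__main__":
    for name, change in relative.items():
        print(f"{name:12s} {change:+.1f}%")
```

A positive value means the model processes more images per second than TurkerNeXtV2 on the same setup, and a negative value means fewer.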

| Metric | Value |
|---|---|
| Training Loss | 2.29 × 10⁻⁵ |
| Training Accuracy | 100% |
| Validation Loss | 0.0571 |
| Validation Accuracy | 98.95% |

| Performance Assessment Metrics | Results (%) |
|---|---|
| Accuracy | 98.52 |
| Precision | 98.74 |
| Recall | 98.50 |
| F1-score | 98.62 |

| Study | Method | Accuracy (%) |
|---|---|---|
| Acevedo et al. [14] | InceptionV3 + Softmax | 94.90 |
| Acevedo et al. [14] | VGG16 + Softmax | 96.20 |
| Acevedo et al. [14] | InceptionV3 + SVM | 90.50 |
| Acevedo et al. [14] | VGG16 + SVM | 87.40 |
| Dwivedi and Dutta [60] | Microcell-Net | 97.65 |
| Ammar et al. [61] | CNN + AdaBoost | 88.80 |
| Erten et al. [62] | ConcatNeXt + Softmax | 97.77 |
| Erten et al. [62] | ConcatNeXt + INCA + SVM | 98.73 |
| Mondal et al. [63] | BloodCell-Net | 97.10 |
| Rastogi et al. [64] | LeuFeatx + SVM | 91.62 |
| Khan et al. [65] | DCGAN + MobileNetV2 | 93.83 |
| Bilal et al. [66] | ConvLSTM | 96.90 |
| Manzari et al. [67] | MedViT | 95.40 |
| Chen et al. [55] | Shifted Window ViT (for four classes) | 98.03 |
| Kokcam and Ucar [68] | Big Transfer (BiT) and Efficient KAN | 97.39 |
| Uzen and Firat [56] | Swin Transformer and ConvMixer (for four classes) | 95.66 |
| This Paper | TurkerNeXtV2 | 98.52 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Esmez, O.; Deniz, G.; Bilek, F.; Gurger, M.; Barua, P.D.; Dogan, S.; Baygin, M.; Tuncer, T. TurkerNeXtV2: An Innovative CNN Model for Knee Osteoarthritis Pressure Image Classification. Diagnostics 2025, 15, 2478. https://doi.org/10.3390/diagnostics15192478
