Explainable Transformer-Based Framework for Glaucoma Detection from Fundus Images Using Multi-Backbone Segmentation and vCDR-Based Classification

Abstract

1. Introduction

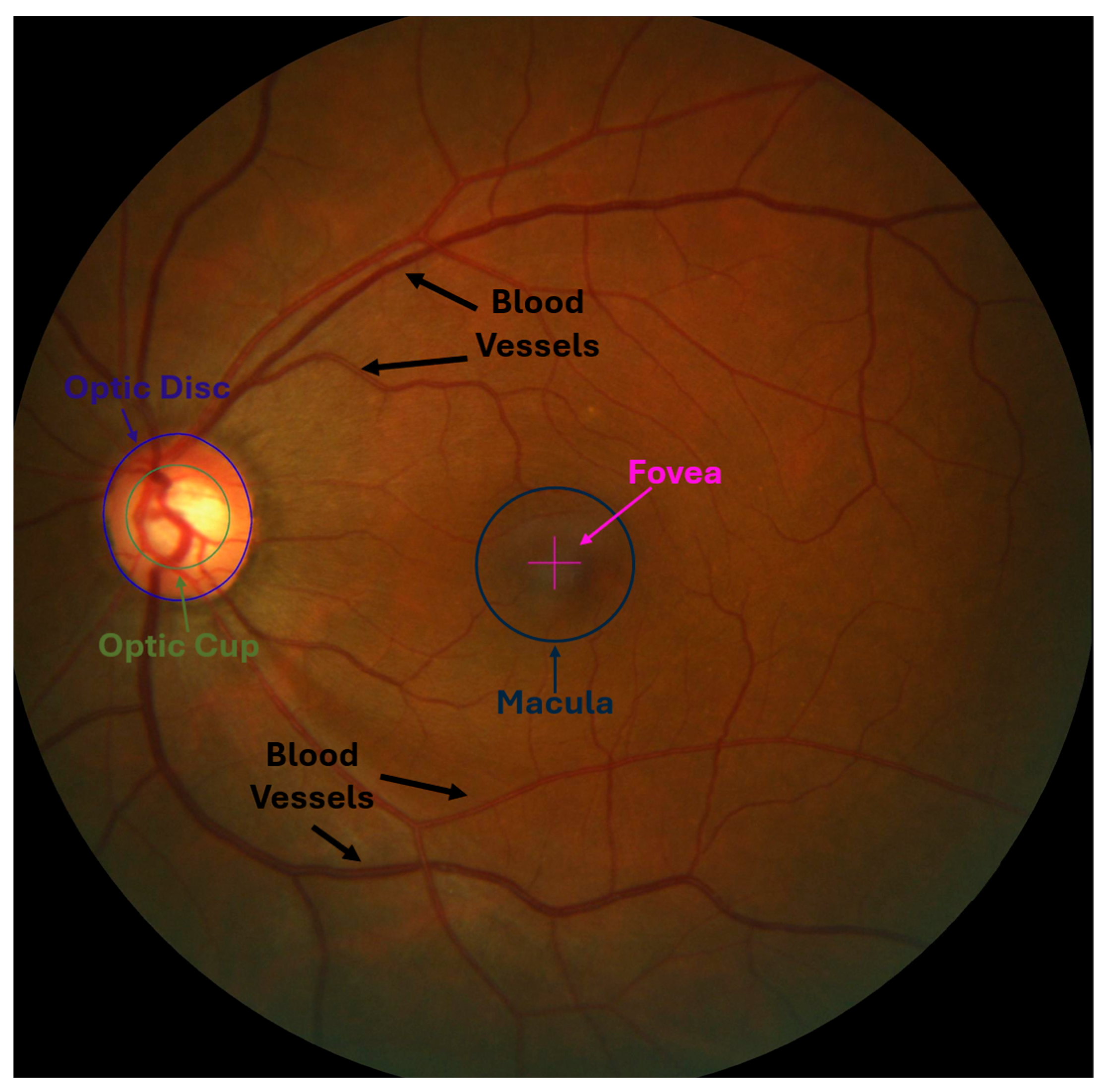

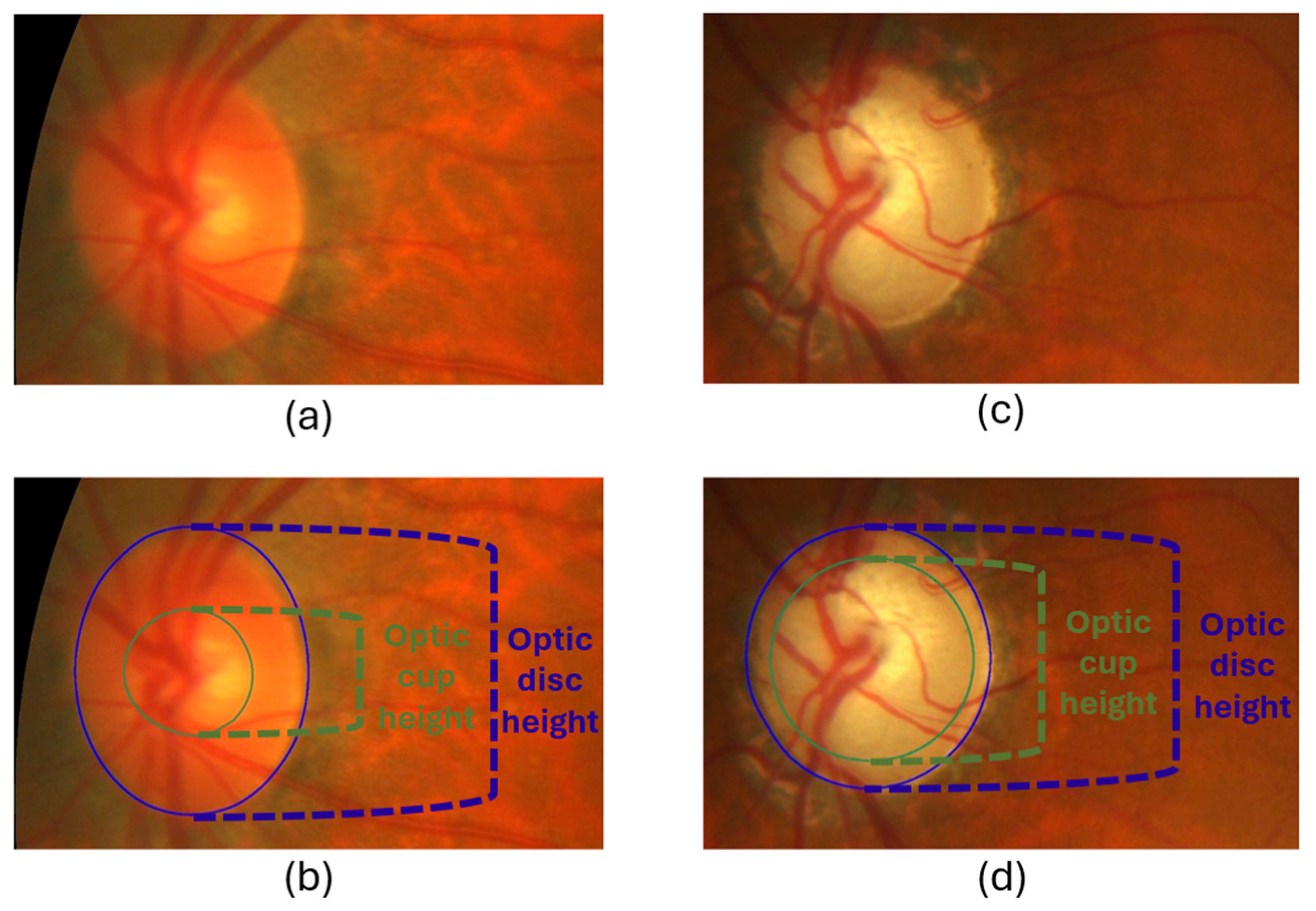

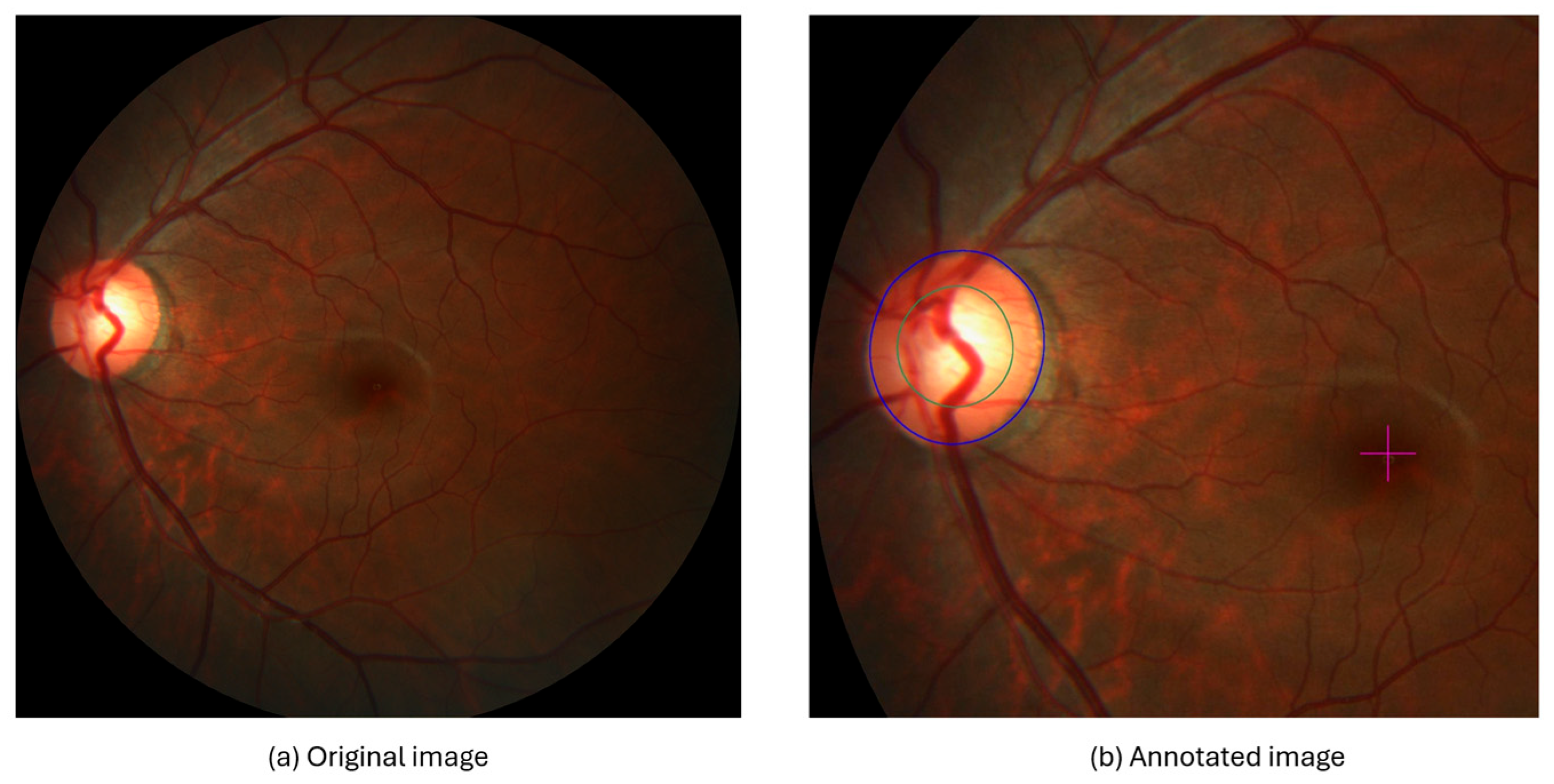

- Optic Nerve: Transmits visual information from the retina to the brain. It consists of roughly a million nerve fibers that converge at the optic disc, where the optic nerve begins [7].

- Optic Cup (OC): The bright central part of the optic disc, surrounded by the neuroretinal rim, which consists of the remaining tissue of the optic nerve head. Like the rest of the optic disc, it contains no photoreceptors and is therefore part of the blind spot. Its size varies with several factors, including increased intraocular pressure (IOP), which is itself one of the main parameters for detecting glaucoma [9].

- Blood Vessels: These supply the optic nerve head and retina with blood carrying oxygen and nutrients. Abnormalities or changes in the blood vessels may indicate eye diseases such as glaucoma [10].

- Macula Region and Fovea: The macula is a round area near the center of the retina with a high density of photoreceptor cells; it is responsible for central vision and visual acuity. The fovea is the central portion of the macula and has an even higher density of photoreceptor cells. It is responsible for sharp central vision and is essential for tasks requiring detailed visual discrimination [7,11].

2. Related Work

3. Materials and Methods

3.1. Data Collection

3.2. Data Pre-Processing

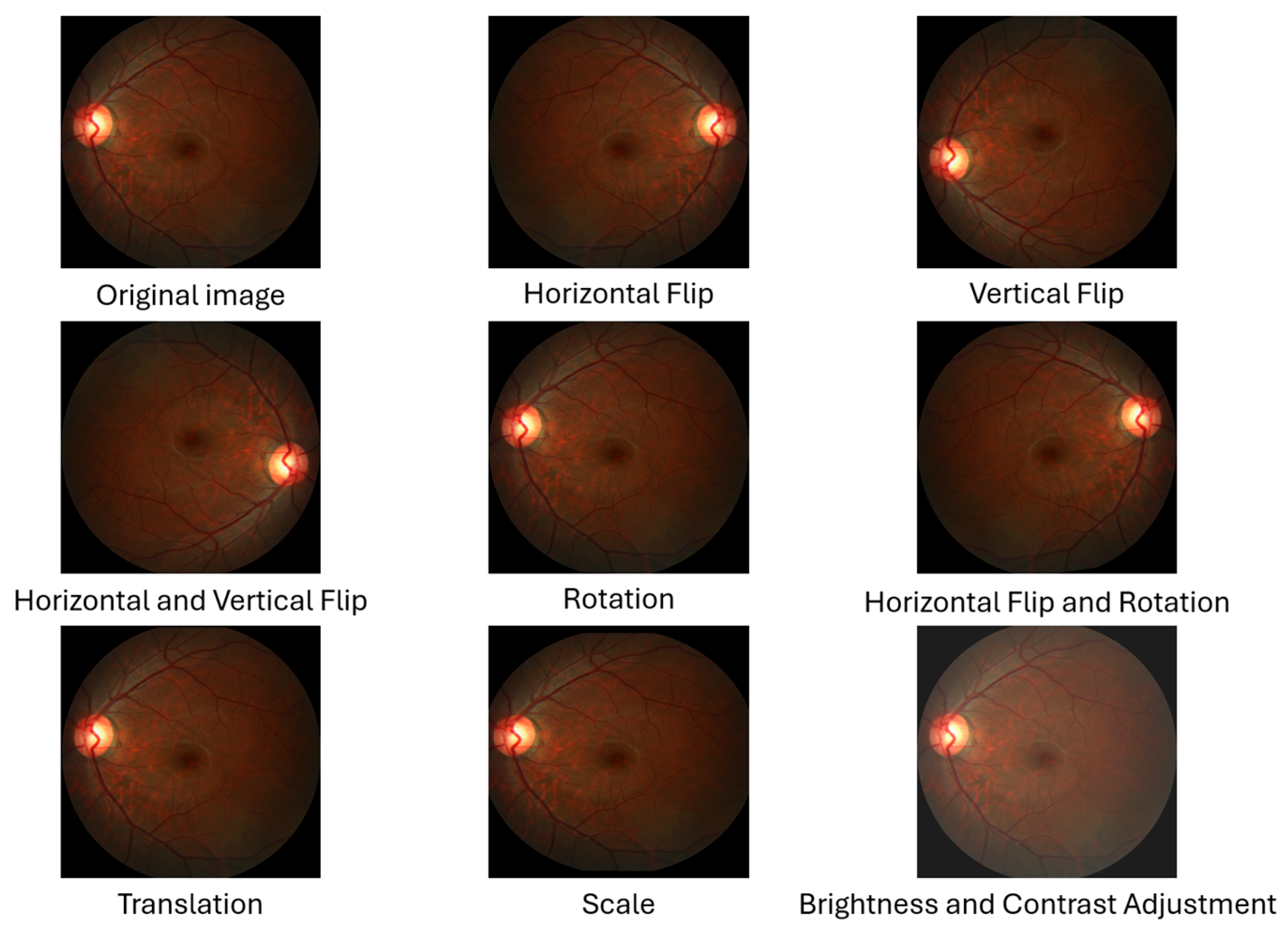

- Horizontal Flip: The image and mask are mirrored left to right. The result resembles a fundus image of the opposite eye, as if images of both eyes were available [36].

- Vertical Flip: The image and mask are mirrored top to bottom. This produces a new image and increases the sample size [36].

- Horizontal and Vertical Flip: Horizontal and vertical flips are applied sequentially to the image and mask, which is equivalent to a 180-degree rotation [36].

- Rotation: Images can be rotated by any angle between 0 and 360 degrees, either by a fixed amount such as 90° or 180° or by a random angle, to the right or to the left. The rotation range must be chosen based on the nature of the dataset [36]. In this study, we used a random angle within ±10 degrees because larger rotations of the images and masks significantly change the vertical OD and OC dimensions, which affects the vCDR calculation.

- Horizontal Flip and Rotation: Horizontal flipping and rotation are applied sequentially to the image and mask, with the rotation angle randomly chosen within ±10 degrees.

- Translation: All pixels in the image are moved by a specified number of pixels along one or both axes (x-axis and y-axis) to generate a new image. In this study, the image and mask were randomly shifted by 1% of the image dimensions along both axes [36]. This small value was chosen because the OD and OC lie near the edges of some images, and a larger shift could push them out of the image.

- Scale (Zoom In and Out): The image and mask are zoomed in or out. In this study, they were randomly scaled up or down by no more than 10%. This small value was chosen because the OD and OC lie near the edges of some images, and a larger scale change could push them out of the image.

- Brightness and Contrast Adjustment: This technique is applied only to the images, not to the masks, because it changes pixel intensities rather than geometry. In this study, image brightness was randomly changed by ±20% and contrast by ±10% [37].
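The reasoning behind the flip and rotation choices above can be illustrated with a small NumPy sketch. It is not the authors' pipeline: the elliptical masks, the helper names (`ellipse_mask`, `vertical_extent`, `vcdr`, `hflip`, `vflip`), and the mask sizes are hypothetical, and the sketch assumes the common definition of vCDR as the vertical cup height divided by the vertical disc height. It checks that a horizontal flip followed by a vertical flip equals a 180-degree rotation, that flips leave vCDR unchanged, and that a large rotation (90° here, as a deliberately exaggerated case) changes it.

```python
import numpy as np


def ellipse_mask(shape, center, semi_axes):
    """Filled axis-aligned ellipse; semi_axes = (vertical, horizontal) in pixels."""
    yy, xx = np.ogrid[:shape[0], :shape[1]]
    (cy, cx), (ry, rx) = center, semi_axes
    inside = ((yy - cy) / ry) ** 2 + ((xx - cx) / rx) ** 2 <= 1.0
    return inside.astype(np.uint8)


def vertical_extent(mask):
    """Count the rows that contain at least one foreground pixel."""
    return int(np.any(mask > 0, axis=1).sum())


def vcdr(disc_mask, cup_mask):
    """Assumed definition: vertical cup height divided by vertical disc height."""
    disc_height = vertical_extent(disc_mask)
    if disc_height == 0:
        raise ValueError("disc mask is empty")
    return vertical_extent(cup_mask) / disc_height


def hflip(array):
    """Mirror left to right (apply the same call to image and mask)."""
    return array[:, ::-1]


def vflip(array):
    """Mirror top to bottom (apply the same call to image and mask)."""
    return array[::-1, :]


# Hypothetical masks: a vertically elongated disc with a wider-than-tall cup.
disc = ellipse_mask((128, 128), center=(64, 64), semi_axes=(40, 30))
cup = ellipse_mask((128, 128), center=(64, 64), semi_axes=(20, 24))

base = vcdr(disc, cup)
after_hflip = vcdr(hflip(disc), hflip(cup))
after_rot90 = vcdr(np.rot90(disc), np.rot90(cup))

print(f"vCDR original:      {base:.3f}")
print(f"vCDR after h-flip:  {after_hflip:.3f}")
print(f"vCDR after 90° rot: {after_rot90:.3f}")
print("h-flip + v-flip == 180° rotation:",
      np.array_equal(vflip(hflip(disc)), np.rot90(disc, 2)))
```

Because the disc and cup are generally not circular, swapping their vertical and horizontal extents changes the ratio, which is consistent with restricting rotation to a small range when vCDR is the downstream measurement.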

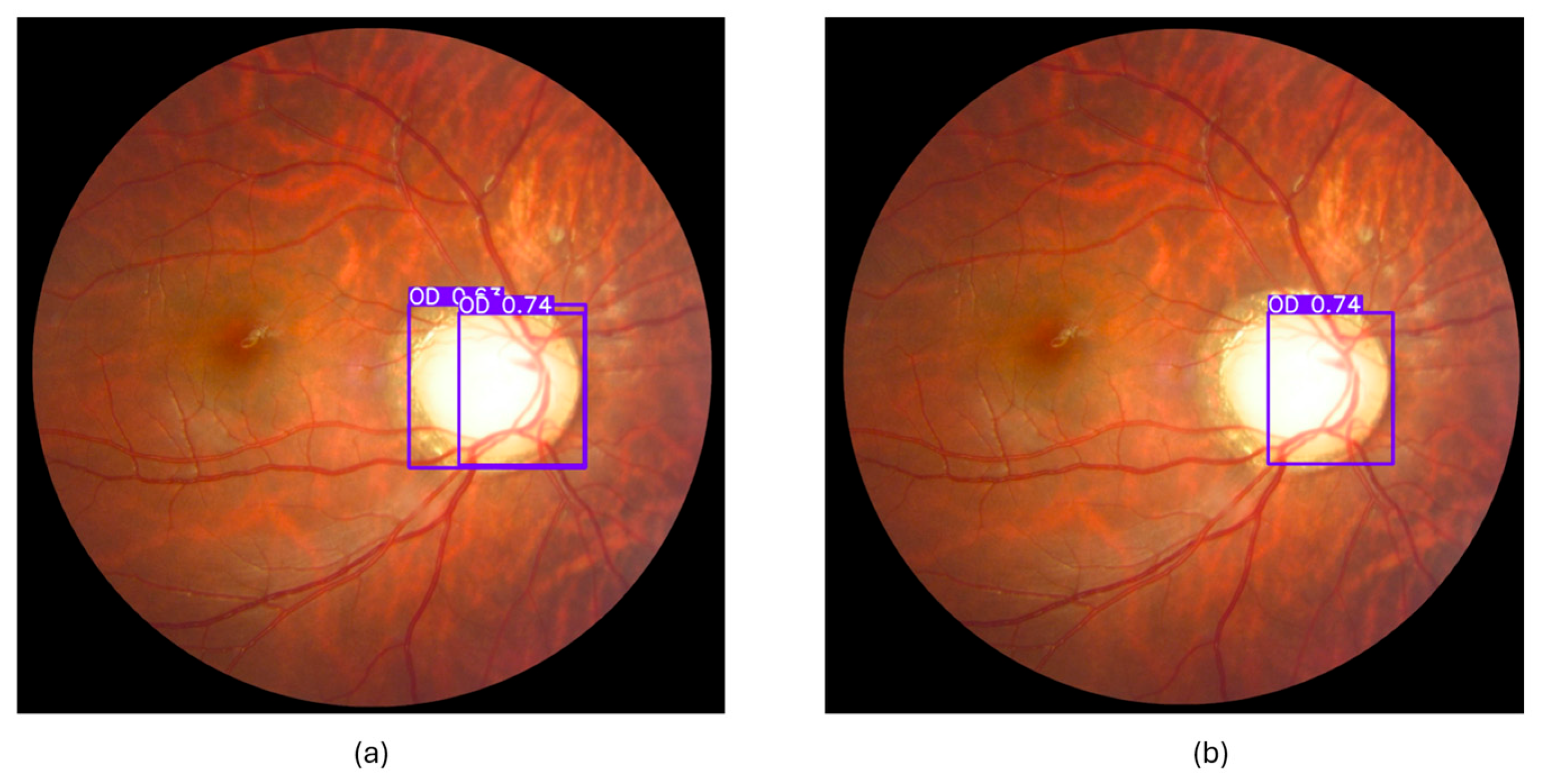

3.3. Object Detection

3.3.1. Dataset Preparation

3.3.2. Training and Evaluation

3.3.3. Cropped Region of Interest (ROI)

3.4. Segmentation and Classification

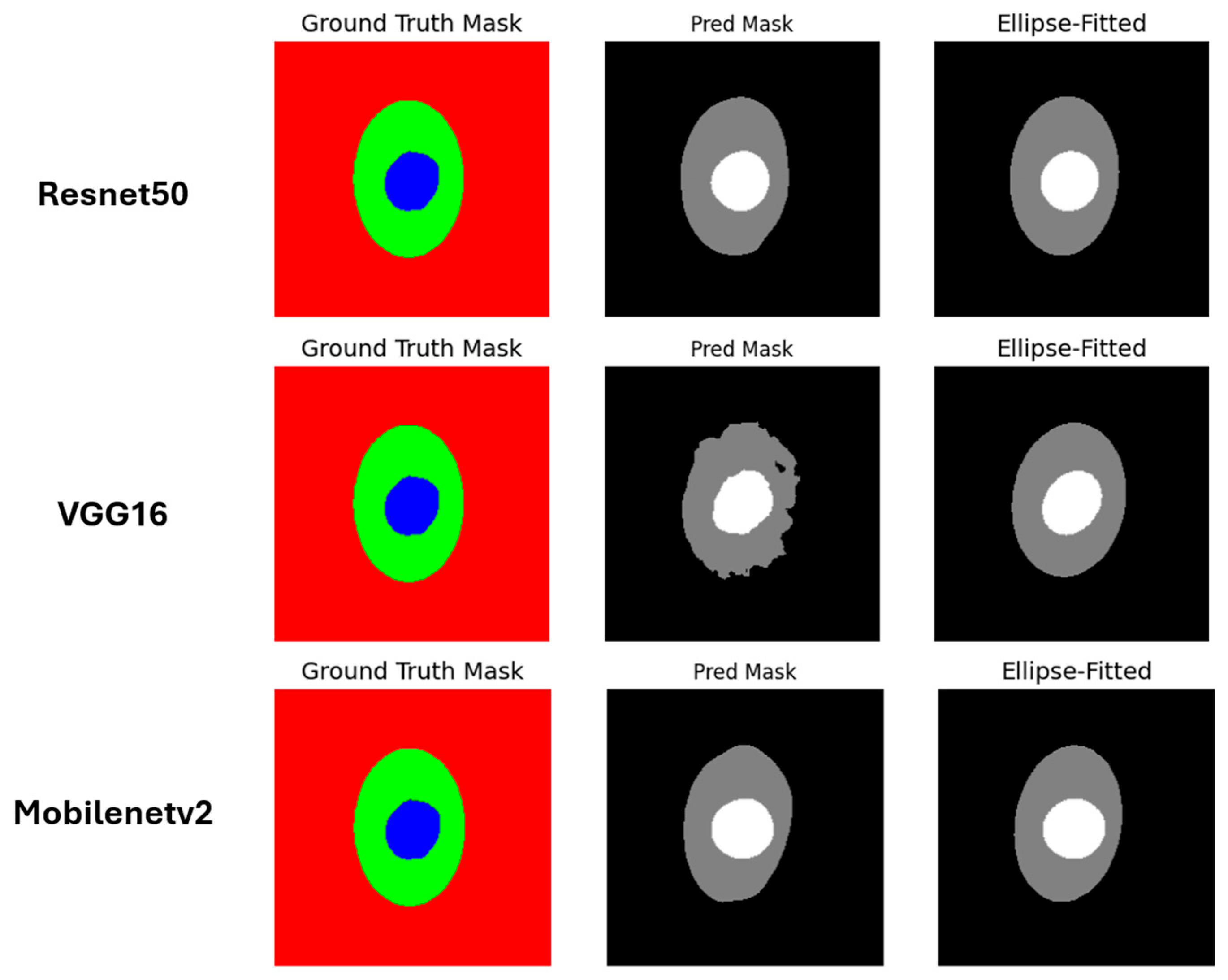

3.4.1. CNN-Based Techniques

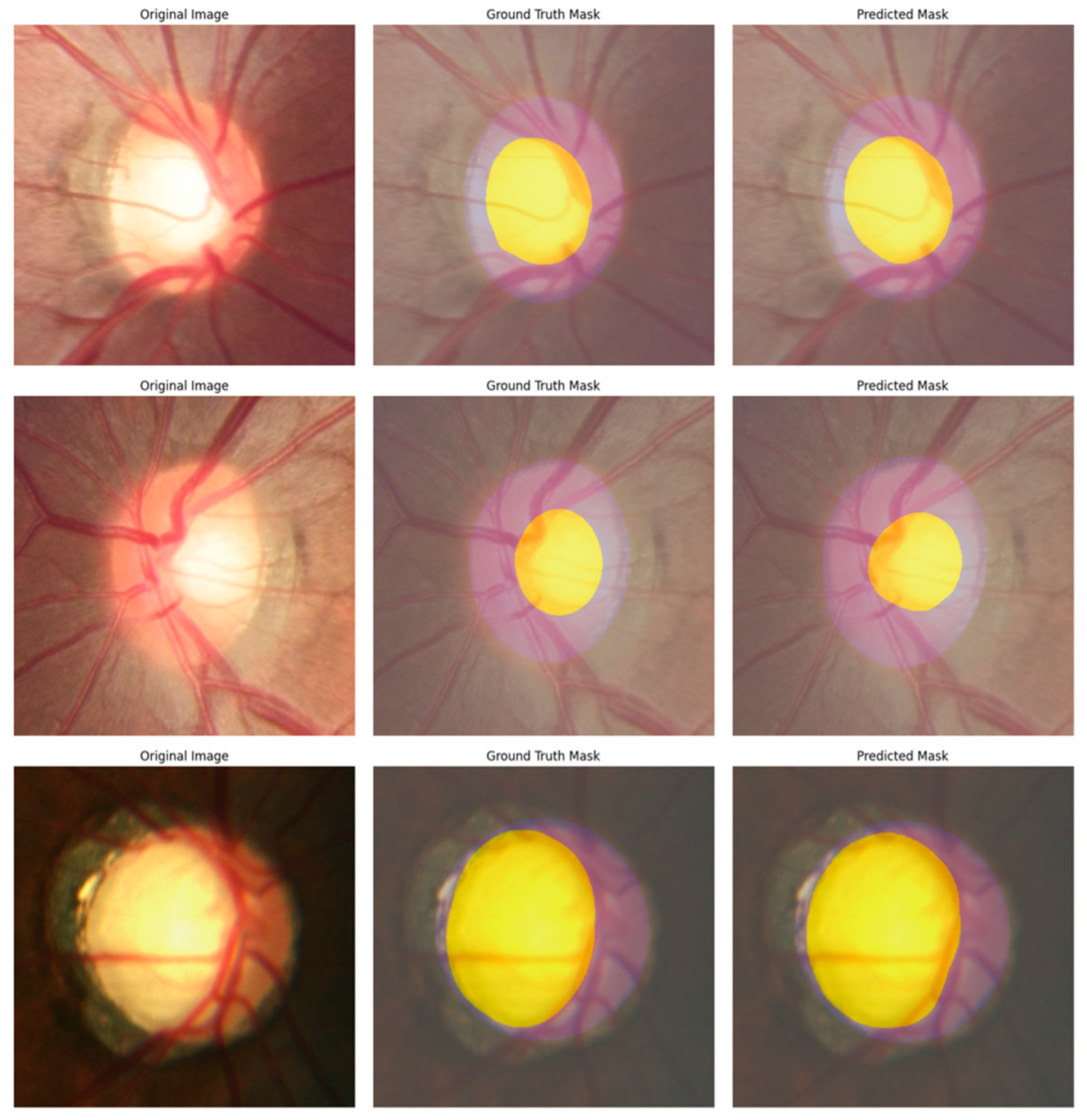

3.4.2. Vision Transformer-Based Techniques

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ONH | Optic Nerve Head |
| OD | Optic Disc |
| OC | Optic Cup |
| IOP | Intraocular Pressure |
| WHO | World Health Organization |
| CDR | Cup-to-Disc Ratio |
| vCDR | Vertical Cup-to-Disc Ratio |
| VF | Visual Fields |
| OCT | Optical Coherence Tomography |
| CAD | Computer-Aided Diagnosis |
| NLP | Natural Language Processing |
| ViTs | Vision Transformers |
| DETR | Detection Transformer |
| CNNs | Convolutional Neural Networks |
| ML | Machine Learning |
| DL | Deep Learning |
| AI | Artificial Intelligence |
| XAI | Explainable Artificial Intelligence |
| TL | Transfer Learning |
| Grad-CAM | Gradient-Weighted Class Activation Mapping |
| LIME | Local Interpretable Model-Agnostic Explanations |
| REFUGE | Retinal Fundus Glaucoma Challenge |
| ORIGA | Online Retinal Fundus Image Database for Glaucoma Analysis and Research |
| ROI | Region of Interest |
| SiMES | Singapore Malay Eye Study |
| CVAT | Computer Vision Annotation Tool |
| YOLO | You Only Look Once |
| RPN | Region Proposal Network |
| SVM | Support Vector Machine |
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| mAP | Mean Average Precision |
| AP | Average Precision |
| NMS | Non-Maximum Suppression |
| IoU | Intersection over Union |
| DSC | Dice Similarity Coefficient |
| MVB | Maximum Voting-Based |
| TP | True Positives |
| FP | False Positives |
| FN | False Negatives |
| W-MSA | Window-Based Multi-Head Self-Attention |
| SW-MSA | Shifted Window-Based Multi-Head Self-Attention |

References

- Afolabi, O.J.; Mabuza-Hocquet, G.P.; Nelwamondo, F.V.; Paul, B.S. The Use of U-Net Lite and Extreme Gradient Boost (XGB) for Glaucoma Detection. IEEE Access 2021, 9, 47411–47424.

- Devecioglu, O.C.; Malik, J.; Ince, T.; Kiranyaz, S.; Atalay, E.; Gabbouj, M. Real-Time Glaucoma Detection From Digital Fundus Images Using Self-ONNs. IEEE Access 2021, 9, 140031–140041.

- Kashyap, R.; Nair, R.; Gangadharan, S.M.P.; Botto-Tobar, M.; Farooq, S.; Rizwan, A. Glaucoma Detection and Classification Using Improved U-Net Deep Learning Model. Healthcare 2022, 10, 2497.

- Nawaz, M.; Nazir, T.; Javed, A.; Tariq, U.; Yong, H.-S.; Khan, M.A.; Cha, J. An Efficient Deep Learning Approach to Automatic Glaucoma Detection Using Optic Disc and Optic Cup Localization. Sensors 2022, 22, 434.

- Chayan, T.I.; Islam, A.; Rahman, E.; Reza, M.T.; Apon, T.S.; Alam, M.G.R. Explainable AI Based Glaucoma Detection Using Transfer Learning and LIME. In Proceedings of the 2022 IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE), Gold Coast, Australia, 18 December 2022; IEEE: New York, NY, USA; pp. 1–6.

- Shinde, R. Glaucoma Detection in Retinal Fundus Images Using U-Net and Supervised Machine Learning Algorithms. Intell.-Based Med. 2021, 5, 100038.

- Zhu, J.; Zhang, E.; Del Rio-Tsonis, K. Eye Anatomy. In Encyclopedia of Life Sciences; Wiley: Oxford, UK, 2012; ISBN 978-0-470-01617-6.

- Reis, A.S.; Toren, A.; Nicolela, M.T. Clinical Optic Disc Evaluation in Glaucoma. Eur. Ophthalmic Rev. 2012, 6, 92–97.

- Veena, H.N.; Muruganandham, A.; Kumaran, T.S. A Review on the Optic Disc and Optic Cup Segmentation and Classification Approaches over Retinal Fundus Images for Detection of Glaucoma. SN Appl. Sci. 2020, 2, 1476.

- Wang, X.; Wang, M.; Liu, H.; Mercieca, K.; Prinz, J.; Feng, Y.; Prokosch, V. The Association between Vascular Abnormalities and Glaucoma—What Comes First? Int. J. Mol. Sci. 2023, 24, 13211.

- Hou, H.; Moghimi, S.; Zangwill, L.M.; Shoji, T.; Ghahari, E.; Penteado, R.C.; Akagi, T.; Manalastas, P.I.C.; Weinreb, R.N. Macula Vessel Density and Thickness in Early Primary Open-Angle Glaucoma. Am. J. Ophthalmol. 2019, 199, 120–132.

- Barros, D.M.S.; Moura, J.C.C.; Freire, C.R.; Taleb, A.C.; Valentim, R.A.M.; Morais, P.S.G. Machine Learning Applied to Retinal Image Processing for Glaucoma Detection: Review and Perspective. Biomed. Eng. OnLine 2020, 19, 20.

- Tadisetty, S.; Chodavarapu, R.; Jin, R.; Clements, R.J.; Yu, M. Identifying the Edges of the Optic Cup and the Optic Disc in Glaucoma Patients by Segmentation. Sensors 2023, 23, 4668.

- G Chandra, S. Optic Disc Evaluation in Glaucoma. Indian J. Ophthalmol. 1996, 44, 235–239.

- Ahn, J.M.; Kim, S.; Ahn, K.-S.; Cho, S.-H.; Lee, K.B.; Kim, U.S. A Deep Learning Model for the Detection of Both Advanced and Early Glaucoma Using Fundus Photography. PLoS ONE 2018, 13, e0207982.

- Thompson, A.C.; Jammal, A.A.; Medeiros, F.A. A Review of Deep Learning for Screening, Diagnosis, and Detection of Glaucoma Progression. Transl. Vis. Sci. Technol. 2020, 9, 42.

- Rana, M.; Bhushan, M. Machine Learning and Deep Learning Approach for Medical Image Analysis: Diagnosis to Detection. Multimed. Tools Appl. 2023, 82, 26731–26769.

- Hosna, A.; Merry, E.; Gyalmo, J.; Alom, Z.; Aung, Z.; Azim, M.A. Transfer Learning: A Friendly Introduction. J. Big Data 2022, 9, 102.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. arXiv 2021, arXiv:2103.14030.

- Cheng, B.; Schwing, A.G.; Kirillov, A. Per-Pixel Classification Is Not All You Need for Semantic Segmentation. arXiv 2021, arXiv:2107.06278.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.

- Cheng, J.; Liu, J.; Xu, Y.; Yin, F.; Wong, D.W.K.; Tan, N.-M.; Tao, D.; Cheng, C.-Y.; Aung, T.; Wong, T.Y. Superpixel Classification Based Optic Disc and Optic Cup Segmentation for Glaucoma Screening. IEEE Trans. Med. Imaging 2013, 32, 1019–1032.

- Juneja, M.; Singh, S.; Agarwal, N.; Bali, S.; Gupta, S.; Thakur, N.; Jindal, P. Automated Detection of Glaucoma Using Deep Learning Convolution Network (G-Net). Multimed. Tools Appl. 2020, 79, 15531–15553.

- Fu, H.; Cheng, J.; Xu, Y.; Wong, D.W.K.; Liu, J.; Cao, X. Joint Optic Disc and Cup Segmentation Based on Multi-Label Deep Network and Polar Transformation. IEEE Trans. Med. Imaging 2018, 37, 1597–1605.

- Neto, A.; Camera, J.; Oliveira, S.; Cláudia, A.; Cunha, A. Optic Disc and Cup Segmentations for Glaucoma Assessment Using Cup-to-Disc Ratio. Procedia Comput. Sci. 2022, 196, 485–492.

- Civit-Masot, J.; Dominguez-Morales, M.J.; Vicente-Diaz, S.; Civit, A. Dual Machine-Learning System to Aid Glaucoma Diagnosis Using Disc and Cup Feature Extraction. IEEE Access 2020, 8, 127519–127529.

- Agrawal, V.; Kori, A.; Alex, V.; Krishnamurthi, G. Enhanced Optic Disk and Cup Segmentation with Glaucoma Screening from Fundus Images Using Position Encoded CNNs. arXiv 2018, arXiv:1809.05216.

- Sreng, S.; Maneerat, N.; Hamamoto, K.; Win, K.Y. Deep Learning for Optic Disc Segmentation and Glaucoma Diagnosis on Retinal Images. Appl. Sci. 2020, 10, 4916.

- Velpula, V.K.; Sharma, L.D. Multi-Stage Glaucoma Classification Using Pre-Trained Convolutional Neural Networks and Voting-Based Classifier Fusion. Front. Physiol. 2023, 14, 1175881.

- Chincholi, F.; Koestler, H. Transforming Glaucoma Diagnosis: Transformers at the Forefront. Front. Artif. Intell. 2024, 7, 1324109.

- Orlando, J.I.; Fu, H.; Barbosa Breda, J.; Van Keer, K.; Bathula, D.R.; Diaz-Pinto, A.; Fang, R.; Heng, P.-A.; Kim, J.; Lee, J.; et al. REFUGE Challenge: A Unified Framework for Evaluating Automated Methods for Glaucoma Assessment from Fundus Photographs. Med. Image Anal. 2020, 59, 101570.

- Zhang, Z.; Yin, F.S.; Liu, J.; Wong, W.K.; Tan, N.M.; Lee, B.H.; Cheng, J.; Wong, T.Y. ORIGA-light: An Online Retinal Fundus Image Database for Glaucoma Analysis and Research. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: New York, NY, USA; pp. 3065–3068.

- Bajwa, M.N.; Singh, G.A.P.; Neumeier, W.; Malik, M.I.; Dengel, A.; Ahmed, S. G1020: A Benchmark Retinal Fundus Image Dataset for Computer-Aided Glaucoma Detection. arXiv 2020, arXiv:2006.09158.

- Goceri, E. Medical Image Data Augmentation: Techniques, Comparisons and Interpretations. Artif. Intell. Rev. 2023, 56, 12561–12605.

- Kumar, T.; Brennan, R.; Mileo, A.; Bendechache, M. Image Data Augmentation Approaches: A Comprehensive Survey and Future Directions. IEEE Access 2024, 12, 187536–187571.

- Alomar, K.; Aysel, H.I.; Cai, X. Data Augmentation in Classification and Segmentation: A Survey and New Strategies. J. Imaging 2023, 9, 46.

- Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and Flexible Image Augmentations. Information 2020, 11, 125.

- Jocher, G.; Qiu, J. Ultralytics YOLO11. Available online: https://docs.ultralytics.com/models/yolo11/#citations-and-acknowledgements (accessed on 20 November 2024).

- Leading Data Annotation Platform. Available online: https://www.cvat.ai/ (accessed on 10 November 2024).

- Solawetz, J. What Is CVAT (Computer Vision Annotation Tool)? Available online: https://blog.roboflow.com/cvat/ (accessed on 22 March 2025).

- COCO JSON. Available online: https://roboflow.com/formats/coco-json?utm_source=chatgpt.com (accessed on 16 June 2025).

- YOLO Darknet TXT. Available online: https://roboflow.com/formats/yolo-darknet-txt?utm_source=chatgpt.com (accessed on 16 June 2025).

- Padilla, R.; Netto, S.L.; Da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niterói, Brazil, 1–3 July 2020; IEEE: New York, NY, USA; pp. 237–242.

- Hosang, J.; Benenson, R.; Schiele, B. Learning Non-Maximum Suppression. arXiv 2017, arXiv:1705.02950.

- Müller, D.; Soto-Rey, I.; Kramer, F. Towards a Guideline for Evaluation Metrics in Medical Image Segmentation. BMC Res. Notes 2022, 15, 210.

- Vlăsceanu, G.V.; Tarbă, N.; Voncilă, M.L.; Boiangiu, C.A. Selecting the Right Metric: A Detailed Study on Image Segmentation Evaluation. BRAIN Broad Res. Artif. Intell. Neurosci. 2024, 15, 295.

- Khouy, M.; Jabrane, Y.; Ameur, M.; Hajjam El Hassani, A. Medical Image Segmentation Using Automatic Optimized U-Net Architecture Based on Genetic Algorithm. J. Pers. Med. 2023, 13, 1298.

- Vuppala Adithya Sairam. OC/OD Segmentation Using Keras. Available online: https://www.kaggle.com/code/vuppalaadithyasairam/oc-od-segmentation-using-keras (accessed on 12 March 2024).

- MaskFormer. Available online: https://huggingface.co/facebook/maskformer-swin-base-ade (accessed on 20 April 2025).

- Iakubovskii, P.; Amyeroberts; Montalvo, P. Semantic Segmentation Examples. Available online: https://github.com/huggingface/transformers/blob/main/examples/pytorch/semantic-segmentation/README.md (accessed on 14 April 2025).

- Rogge, N. Fine-tuning MaskFormer for Instance Segmentation on Semantic Sidewalk Dataset. Available online: https://github.com/NielsRogge/Transformers-Tutorials/blob/master/MaskFormer/Fine-tuning/Fine_tuning_MaskFormerForInstanceSegmentation_on_semantic_sidewalk.ipynb (accessed on 12 April 2025).

- Fu, H.; Cheng, J.; Xu, Y.; Zhang, C.; Wong, D.W.K.; Liu, J.; Cao, X. Disc-Aware Ensemble Network for Glaucoma Screening From Fundus Image. IEEE Trans. Med. Imaging 2018, 37, 2493–2501.

| Paper | Dataset | Model | Result | Limitation |
|---|---|---|---|---|
| [23] | SiMES and SCES | Superpixel classification and support vector machine (SVM) | AUC: SiMES = 80%, SCES = 82.2%; AOE *: OD = 9.5%, OC = 24.1% | Lacks generalization across different datasets and is sensitive to image artifacts. |
| [24] | DRISHTI GS | CNNs with U-Net | Dice score: Disc = 95.05%, Cup = 93.61%; IoU: Disc = 90.62%, Cup = 88.09%; F1: Disc = 93.56%, Cup = 91.62% | Only one dataset was used. |
| [25] | ORIGA and SCES | Deep learning architecture (M-Net with U-shaped CNN) and polar transformation | AOE: OD = 0.07, OC = 0.23; AUC: ORIGA = 0.85, SCES = 0.90 | - |
| [26] | REFUGE, RIM-ONE r3, and DRISHTI GS | Inception V3 model as the backbone of the U-Net architecture | Dice score: 80%; IoU: 70%; F1: 70% | Results did not improve on previous work, and only two models were used. |
| [27] | RIM-One V3 and DRISHTI | Ensemble network (generalized U-Net and pretrained transfer learning MobileNetV2) | Dice score: Cup = 84% to 89%, Disc = 92% to 93%; ACC = 88% | Requires more datasets to train ensembles. |
| [28] | REFUGE and DRISHTI-GS1 | Ensemble approach in segmentation using two CNN models (DenseNet201 and ResNet18) and Contrast Limited Adaptive Histogram Equalization (CLAHE)-enhanced inputs | Dice score: OC = 0.64, OD = 0.88; MAE-CDR = 0.09; Sensitivity = 0.75; Specificity = 0.85; ROC = 0.856 | A small portion of the dataset was used for training, validation, and testing. |
| [29] | RIM-ONE, ORIGA, DRISHTI GS1, ACRIMA, and REFUGE | DeepLab v3+ with MobileNet and ensemble method (pre-trained CNNs via transfer learning and SVM) | Dice score: 91.73%; IoU: 84.89%; ACC: 95.59% | Results cannot be generalized, and the OC is not segmented. |
| [30] | ACRIMA, RIM-ONE, HVD, and Drishti | Classifier fusion using the maximum voting-based (MVB) approach across five pre-trained deep CNN models | Two-class ACC: 99.57%; Three-class ACC: 90.55% | The visualization techniques demonstrated were not quantitatively evaluated. |
| [31] | SMDG-19 | Vision Transformer (ViT) and Detection Transformer (DETR) | ACC: DETR = 90.48%, ViT = 87.87%; AUC: DETR = 88%, ViT = 86% | - |

| Dataset | Country | Size | Glaucoma | Normal | OD Segmentation | OC Segmentation | Classification |
|---|---|---|---|---|---|---|---|
| REFUGE [32] | China | 1200 | 120 | 1080 | Yes | Yes | Yes |
| ORIGA [33] | Singapore | 650 | 168 | 482 | Yes | Yes | Yes |
| G1020 [34] | Germany | 1020 | 296 | 724 | Yes | Missing OC | Yes |

| Model | Precision | Recall | mAP@0.5 | mAP@0.75 | mAP@0.5–0.95 | F1 | Accuracy |
|---|---|---|---|---|---|---|---|
| YOLOv11 | 99.87% | 99.87% | 99.5% | 99.44% | 87.93% | 99.87% | 99.74% |
| Faster R-CNN | 99.11% | 100% | 100% | 98% | 84.8% | 99.55% | 99.11% |

| U-Net Backbone | IoU OD | IoU OC | DSC OD | DSC OC | Mean vCDR Error | Classification Accuracy |
|---|---|---|---|---|---|---|
| ResNet50 | 84.22% | 85.02% | 91.14% | 91.47% | 0.03 | 86% |
| VGG16 | 77.31% | 79.92% | 86.57% | 88.46% | 0.05 | 84% |
| MobileNetV2 | 84.72% | 85.21% | 91.5% | 91.58% | 0.03 | 87% |

| MaskFormer Backbone | Mean IoU | IoU OD | IoU OC | Mean vCDR Error | Classification Acc | Classification Prec | Classification Rec | Classification F1 |
|---|---|---|---|---|---|---|---|---|
| Swin-Base | 92.51% | 88.29% | 91.09% | 0.02 | 84.03% | 80.77% | 88.73% | 84.56% |

| Model | IoU OD | IoU OC | DSC OD | DSC OC | Mean vCDR Error | Classification Acc | Classification F1 |
|---|---|---|---|---|---|---|---|
| U-Net + ResNet50 | 84.22% | 85.02% | 91.14% | 91.47% | 0.03 | 86% | 86% |
| U-Net + VGG16 | 77.31% | 79.92% | 86.57% | 88.46% | 0.05 | 84% | 85% |
| U-Net + MobileNetV2 | 84.72% | 85.21% | 91.5% | 91.58% | 0.03 | 87% | 87% |
| MaskFormer + Swin-Base | 88.29% | 91.09% | 93.83% | 93.71% | 0.02 | 84.03% | 84.56% |

| Model | Dataset | IoU OD | IoU OC | DSC OD | DSC OC | Classification Acc | Classification AUC |
|---|---|---|---|---|---|---|---|
| [28] | REFUGE, DRISHTI-GS1 | - | - | 88% | 64% | - | 85% |
| [29] | REFUGE, RIM-ONE, DRISHTI-GS1, ORIGA, ACRIMA | 84.89% | - | 91.73% | - | 95.59% | 95.10% |
| [26] | REFUGE, RIM-ONE r3, DRISHTI-GS | 73% (±18) | 72% (±17) | 83% (±17) | 82% (±15) | - | 93% |
| U-Net + ResNet50 | REFUGE | 84.22% | 85.02% | 91.14% | 91.47% | 86% | - |
| U-Net + VGG16 | REFUGE | 77.31% | 79.92% | 86.57% | 88.46% | 84% | - |
| U-Net + MobileNetV2 | REFUGE | 84.72% | 85.21% | 91.5% | 91.58% | 87% | - |
| MaskFormer + Swin-Base | REFUGE | 88.29% | 91.09% | 93.83% | 93.71% | 84.03% | 92.46% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alasmari, H.; Amoudi, G.; Alghamdi, H. Explainable Transformer-Based Framework for Glaucoma Detection from Fundus Images Using Multi-Backbone Segmentation and vCDR-Based Classification. Diagnostics 2025, 15, 2301. https://doi.org/10.3390/diagnostics15182301
