From Description to Diagnostics: Assessing AI’s Capabilities in Forensic Gunshot Wound Classification

Abstract

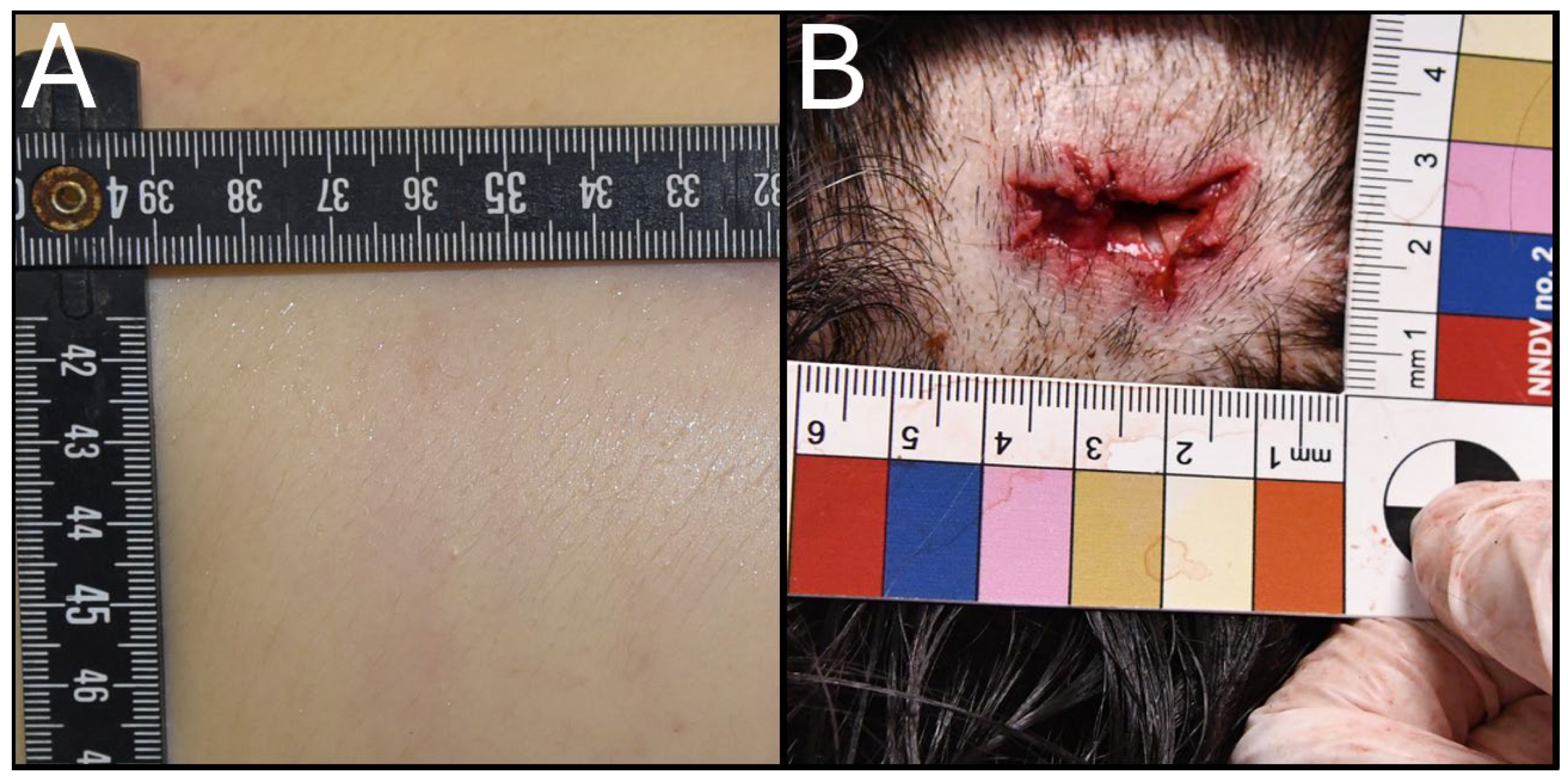

1. Introduction

2. Materials and Methods

2.1. Study Design and AI Model Selection

2.1.1. Phase 1: Initial Assessment of AI Performance

2.1.2. Phase 2: Machine Learning Training

2.1.3. Phase 3: Control Dataset Analysis

2.1.4. Phase 4: Machine Learning Training on Control Images

2.1.5. Phase 5: Evaluation Using Real-Case Firearm Injuries

2.2. Statistical Analysis

2.3. Ethical Considerations

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GSWs | Gunshot wounds |
| AI | Artificial Intelligence |
| ML | Machine Learning |
| DL | Deep Learning |

| Photo Category | | % Blind Analysis | % Trained Analysis | ∆ | Improvement |
|---|---|---|---|---|---|
| Entrance (n = 28) | ● | (10/28) 36% | (15/28) 54% | 18% | ↑ |
| | ● | (5/28) 18% | (8/28) 29% | 11% | ↑ |
| | ● | (13/28) 46% | (5/28) 18% | −28% | ↑ |
| Exit (n = 8) | ● | (1/8) 13% | (0/8) 0% | −13% | ↓ |
| | ● | (4/8) 50% | (4/8) 50% | 0% | = |
| | ● | (3/8) 38% | (4/8) 50% | 12% | ↓ |
| Total (n = 36) | ● | (11/36) 31% | (15/36) 42% | 11% | ↑ |
| | ● | (9/36) 25% | (12/36) 33% | 8% | ↑ |
| | ● | (16/36) 44% | (9/36) 25% | −19% | ↑ |
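
The ∆ column in the table above appears to be the difference between the two rounded percentages, with halves rounded up (for example, 3/8 is shown as 38% and 1/8 as 13%). The following is a minimal sketch of that arithmetic under this assumption; the function names and the dictionary layout are illustrative and do not come from the article, and the three rows per category are kept in table order because the meaning of each ● marker is not stated here.

```python
def pct_half_up(k, n):
    """Percentage k/n rounded half-up to an integer, using exact integer arithmetic."""
    return (200 * k + n) // (2 * n)


def delta(blind, trained, n):
    """Trained-minus-blind difference of the rounded percentages (assumed definition of ∆)."""
    return pct_half_up(trained, n) - pct_half_up(blind, n)


# (blind count, trained count) for each ● row, in table order.
# Counts are copied from the table; the layout is an illustrative assumption.
table = {
    "Entrance": (28, [(10, 15), (5, 8), (13, 5)]),
    "Exit": (8, [(1, 0), (4, 4), (3, 4)]),
    "Total": (36, [(11, 15), (9, 12), (16, 9)]),
}

deltas = {
    category: [delta(blind, trained, n) for blind, trained in rows]
    for category, (n, rows) in table.items()
}

for category, values in deltas.items():
    print(category, values)
```

Integer arithmetic is used instead of Python's built-in `round`, which rounds halves to the nearest even number and would turn 12.5% into 12% rather than the 13% shown in the table.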

| | E | EML | ERC | Ex | ExML | ExRC | p-Value |
|---|---|---|---|---|---|---|---|
| ● | 10 | 15 | 14 | 1 | 0 | 1 | >0.05 |
| ● | 5 | 8 | 3 | 4 | 4 | 8 | >0.05 |
| ● | 13 | 5 | 6 | 3 | 4 | 8 | >0.05 |

| Class 1 | Class 2 | p-Value |
|---|---|---|
| E | EML | >0.05 |
| E | ERC | >0.05 |
| EML | ERC | >0.05 |
| Ex | ExML | --- |
| Ex | ExRC | >0.05 |
| ExML | ExRC | --- |
| E | Ex | >0.05 |
| EML | ExML | --- |
| ERC | ExRC | 0.001 |
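
The pairwise comparison of ERC and ExRC can be illustrated with a Pearson chi-square test of independence on the 3 × 2 table of counts. This is only a sketch: the test actually used by the authors is not stated in this section, so the choice of test is an assumption, as is the function name `chi_square_3x2`. The counts are taken from the ERC and ExRC columns of the frequency table.

```python
import math


def chi_square_3x2(col_a, col_b):
    """Pearson chi-square test of independence for a 3x2 table (df = 2).

    Assumes every row total and column total is non-zero; a zero margin
    would make an expected count zero and the statistic undefined.
    """
    col_totals = (sum(col_a), sum(col_b))
    n = sum(col_totals)
    chi2 = 0.0
    for row in zip(col_a, col_b):
        row_total = sum(row)
        for observed, col_total in zip(row, col_totals):
            expected = row_total * col_total / n
            chi2 += (observed - expected) ** 2 / expected
    # With 2 degrees of freedom, the chi-square survival function is exp(-x / 2).
    p_value = math.exp(-chi2 / 2)
    return chi2, p_value


# Counts per ● row for real-case entrance (ERC) and exit (ExRC) wounds.
erc = [14, 3, 6]
exrc = [1, 8, 8]

chi2, p = chi_square_3x2(erc, exrc)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

Under these assumptions the statistic is about 13.2 and the p-value is close to 0.001, which is compatible with the tabulated value. One expected count falls below 5, so an exact test might be preferable in practice. A zero margin, as in the ExML column, leaves the statistic undefined, which may be why some pairs are reported as "---".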

| Category | | % Blind Analysis | % Trained Analysis | ∆ | Improvement |
|---|---|---|---|---|---|
| Skin (n = 40) | ● | (35/40) 88% | (38/40) 95% | 7% | ↑ |
| | ● | (4/40) 10% | (0/40) 0% | −10% | ↑ |
| | ● | (1/40) 3% | (2/40) 5% | 2% | ↓ |

| Example of Description in Blind | Example of Description After ML |
|---|---|
| - Image Description: The image shows a close-up view of a skin surface with a circular wound. The wound appears to be relatively small, red, and slightly raised with an exposed central area that could represent either a healing wound or an ulcerated area. The wound is surrounded by skin with some hair growth. There is a measuring scale visible next to the wound, indicating the wound’s approximate diameter. - Wound Characteristics: Type of Wound: The wound appears to be a small puncture wound or potentially an ulcerated lesion. The raised nature of the wound suggests either inflammation, granulation tissue formation, or early stages of healing. Healing Stage: The wound is likely in the early to intermediate stage of healing, characterized by the presence of granulation tissue. The absence of significant surrounding erythema (redness) or edema (swelling) suggests that the wound is not acutely infected. - Possible Mechanisms: This could be an entrance wound from a low-velocity projectile (e.g., a bullet or shrapnel), a puncture wound from a sharp object, or a lesion resulting from a bite or other trauma. - Forensic Considerations: Nature of the Wound: The circular and well-defined nature of the wound is more consistent with an entrance wound than an exit wound, which would typically be larger and more irregular. If a firearm was involved, this wound might suggest a small-caliber weapon or a low-velocity impact. Evidence Collection: In cases where a firearm or sharp object is suspected, forensic examination would include looking for traces of gunshot residue (if applicable) or foreign particles in the wound. Swabs from the wound could be taken for laboratory analysis. - Conclusion: This image shows a small, circular wound that may be an entrance wound from a projectile, a puncture wound from a sharp object, or an ulcerated lesion. The wound is in an early stage of healing with granulation tissue visible. The presence of a measuring scale helps in documenting the wound’s size for medico-legal purposes. Further forensic analysis would be required to determine the exact cause of the wound, and it could play a significant role in legal proceedings involving trauma assessment. | This image depicts what appears to be a penetrating wound, possibly an entry wound from a firearm projectile, with detailed forensic and medico-legal characteristics: Wound Characteristics: - Shape and Edges: The wound is circular with regular, clean, and slightly retracted edges. Such features are commonly observed in gunshot entrance wounds, particularly those caused by small-caliber firearms or projectiles with minimal deformation. Size: Based on the adjacent scale, the wound diameter appears to be approximately 5–7 mm. This size is consistent with a small-caliber bullet entry wound, though exact dimensions depend on the caliber of the projectile and elasticity of the skin. Depth: While the internal depth cannot be assessed from the image, the clean edges suggest a penetrating or perforating injury. - Surrounding Tissue: Skin: The skin surrounding the wound appears intact without significant bruising, laceration, or tearing. There is no visible stippling (tattooing from gunpowder residue) or soot, which suggests the injury might have been inflicted from a distant range (likely more than 60 cm from the firearm). Absence of burns rules out a contact or near-contact shot. Inflammation: The wound edges show minimal erythema (reddening) and no overt signs of infection, indicating a relatively fresh injury. - Diagnosis: Gunshot Entrance Wound (Primary Consideration): The wound’s circular shape, clean edges, and absence of significant surrounding trauma are consistent with this diagnosis. Puncture Wound (Secondary Possibility): While less likely, a puncture wound from a sharp, narrow object could produce a similar morphology. Bite or Ulcerative Lesion: These are unlikely due to the absence of irregular tearing, jagged edges, or surrounding tissue necrosis. - Possible Mechanism of Injury: The morphology and absence of stippling, soot, or burns suggest the wound was caused by a firearm discharged from an intermediate or distant range. The projectile appears to have penetrated perpendicularly or near-perpendicularly into the skin. - Summary: The wound is highly consistent with a gunshot entrance wound, likely caused by a small-caliber firearm at intermediate to distant range. The clean morphology and absence of surrounding trauma support this conclusion. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sessa, F.; Guardo, E.; Esposito, M.; Chisari, M.; Di Mauro, L.; Salerno, M.; Pomara, C. From Description to Diagnostics: Assessing AI’s Capabilities in Forensic Gunshot Wound Classification. Diagnostics 2025, 15, 2094. https://doi.org/10.3390/diagnostics15162094
