Evaluating ChatGPT’s Concordance with Clinical Guidelines of Ménière’s Disease in Chinese

Abstract

1. Introduction

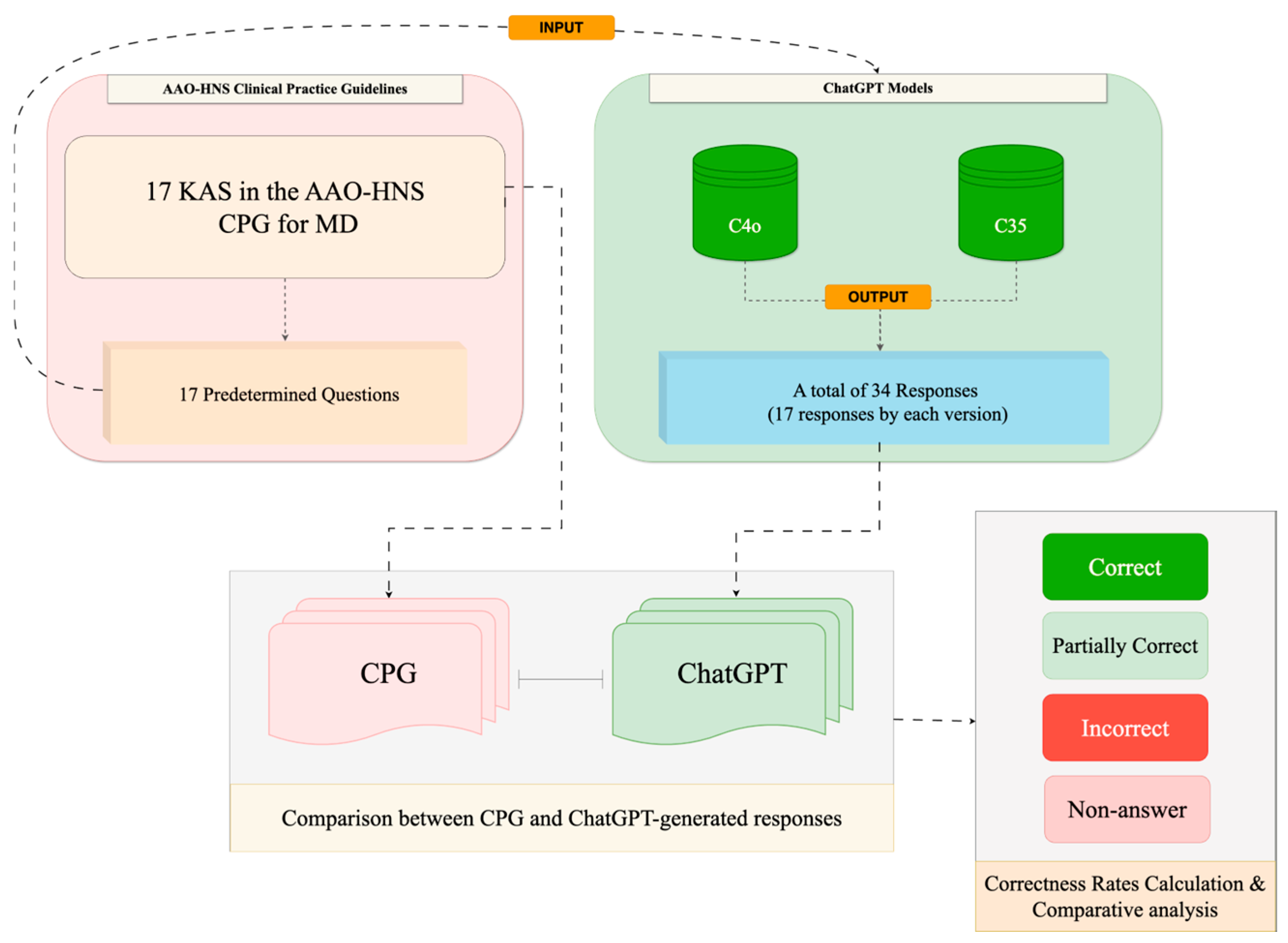

2. Materials and Methods

2.1. Question Design and Prompting

2.2. Response Review and Grading

- Correct: fully adhered to the guidelines, including timeframe, recommended actions, and treatment options.

- Partially Correct: aligned with guidelines but omitted key details.

- Incorrect: contradicted the guidelines.

- Non-answer: restated the question or provided irrelevant information.
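The four-level rubric above can be tallied mechanically once each response has been graded. The following is a minimal sketch, not the authors' analysis code: the `Grade` enum and the `summarize` helper are hypothetical names introduced here for illustration, and the example input simply reuses the ChatGPT-3.5 counts reported in the results table (14 correct, 2 partially correct, 1 non-answer out of 17).

```python
from collections import Counter
from enum import Enum


class Grade(Enum):
    """Hypothetical encoding of the four rubric categories."""
    CORRECT = "Correct"
    PARTIALLY_CORRECT = "Partially Correct"
    INCORRECT = "Incorrect"
    NON_ANSWER = "Non-answer"


def summarize(grades):
    """Return {category: (count, percent)} plus an 'Overall' entry.

    'Overall' follows the article's definition of overall correctness:
    Correct + Partially Correct.
    """
    total = len(grades)
    if total == 0:
        raise ValueError("no graded responses")
    counts = Counter(grades)
    summary = {
        g.value: (counts[g], round(100 * counts[g] / total, 1)) for g in Grade
    }
    overall = counts[Grade.CORRECT] + counts[Grade.PARTIALLY_CORRECT]
    summary["Overall"] = (overall, round(100 * overall / total, 1))
    return summary


# Example input: the ChatGPT-3.5 grade counts from the results table.
gpt35 = (
    [Grade.CORRECT] * 14
    + [Grade.PARTIALLY_CORRECT] * 2
    + [Grade.NON_ANSWER] * 1
)
gpt35_summary = summarize(gpt35)
```

Keeping the categories in an enum rather than free-text labels means a misspelled grade fails loudly instead of silently creating a fifth category.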

2.3. Data Analysis

- ChatGPT-3.5 model (Chinese).

- ChatGPT-4.0 model (Chinese).

2.4. Statistical Evaluation

2.5. Ethical Considerations

3. Results

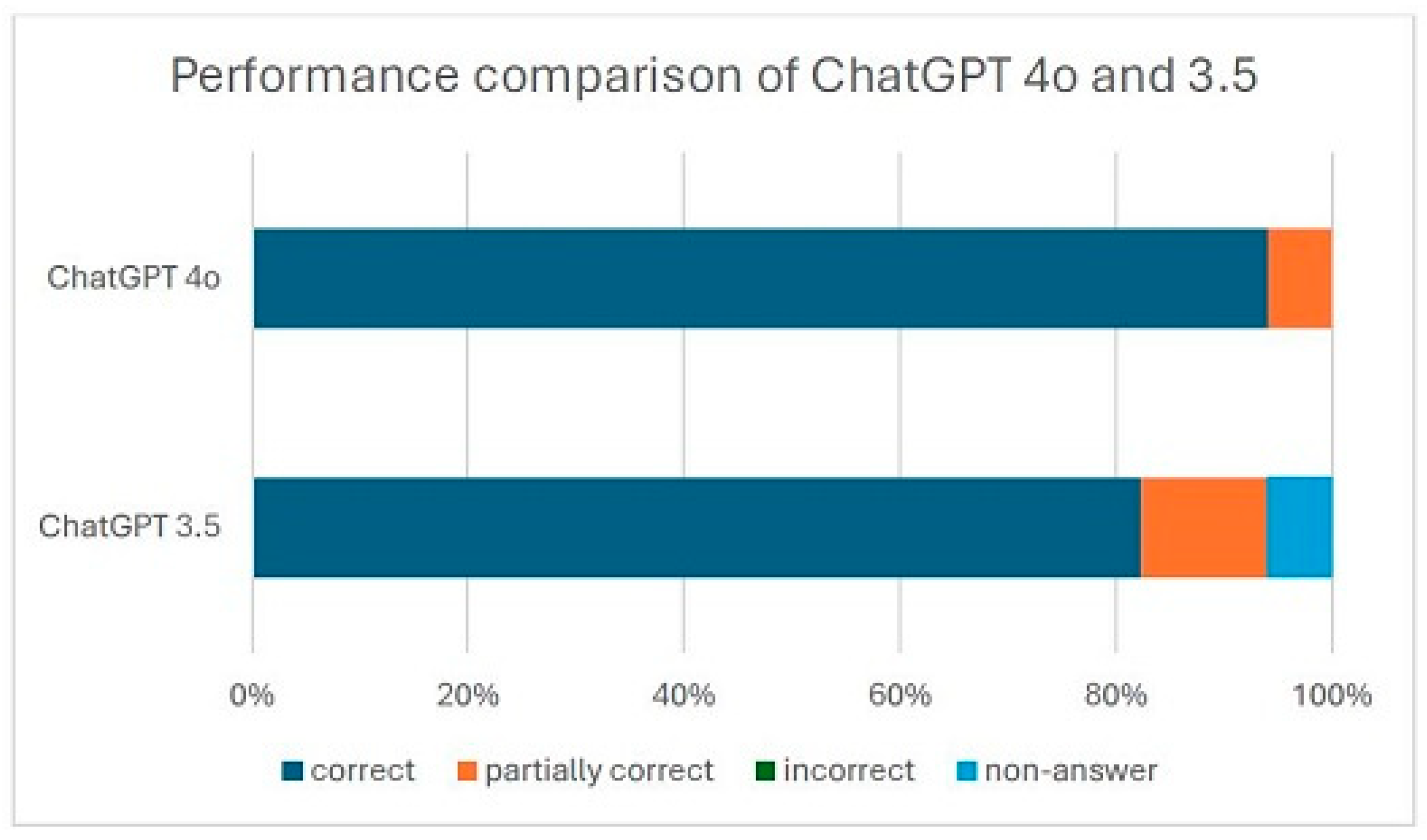

Concordance with the Guideline

4. Discussion

5. Conclusions and Future Direction

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Category | ChatGPT-3.5 | ChatGPT-4.0 |
|---|---|---|
| Correct Answers | 14/17 (82.4%) | 16/17 (94.1%) |
| Partially Correct Answers | 2/17 (11.8%) | 1/17 (5.9%) |
| Non-Answers | 1/17 (5.9%) | 0/17 (0%) |
| Incorrect Answers | 0/17 (0%) | 0/17 (0%) |
| Overall Correctness (Correct + Partially Correct) | 16/17 (94.1%) | 17/17 (100%) |
| Statistical Comparison | Non-inferior (p = 0.6012, Fisher’s exact test) | Higher correctness, but not statistically significant |

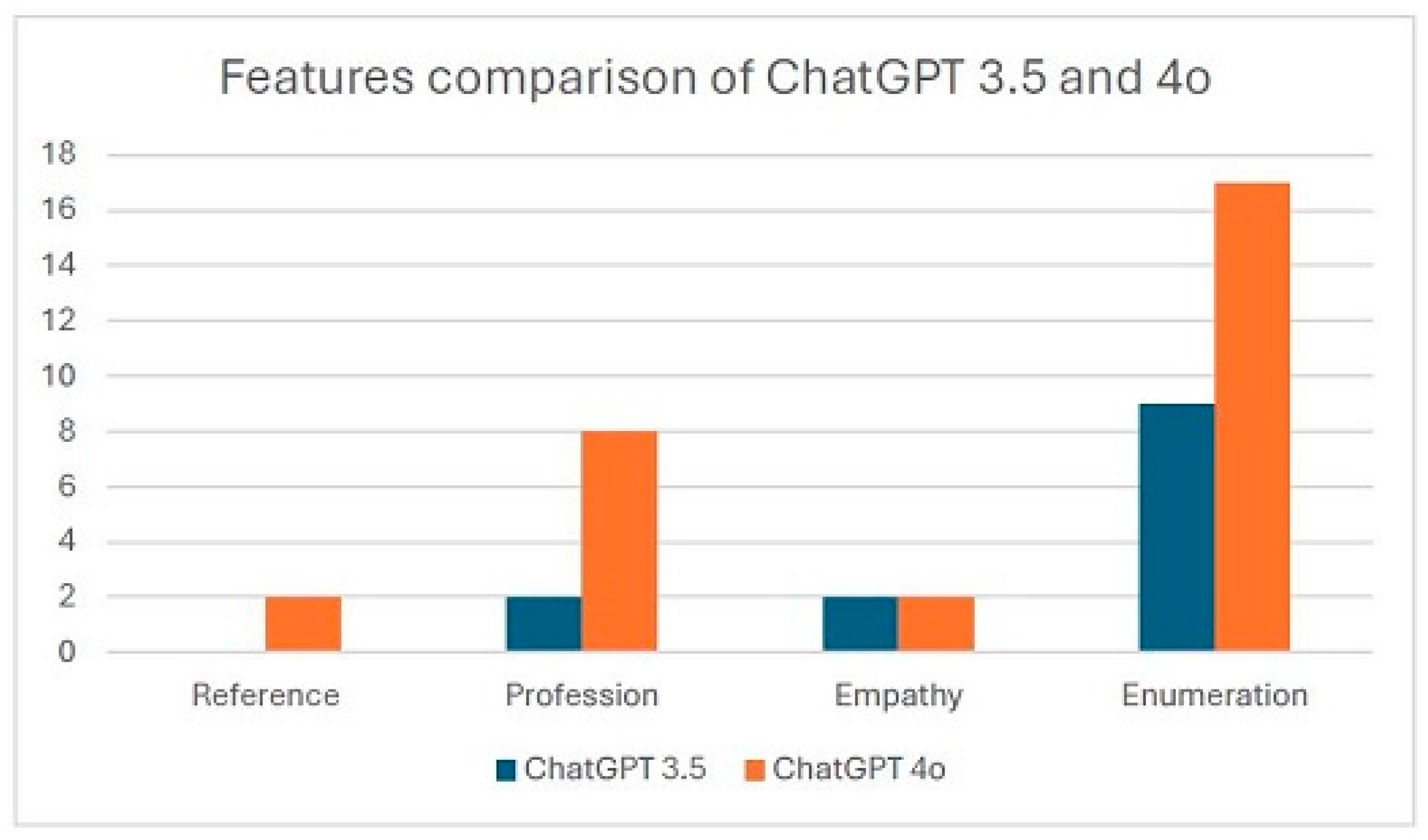

| Category | ChatGPT-3.5 | ChatGPT-4.0 |
|---|---|---|
| Citation of References | None | Frequently cited (e.g., AAO-HNS, ICHD) |
| Guideline Adherence | Needed follow-up queries for complete answers in 1 response | Answered accurately on first attempt |
| Empathy in Communication | Promoted open, personalized communication | Added clarity, reassurance, and patient empowerment |
| Recommendation of Specialists | 2 mentions of consulting otolaryngologists/therapists | 8 mentions of consulting specific medical specialists |
| Response Structure | Mixed and less structured | Clearly structured, enumerated, and organized |
| Clarity and Readability | Generally concise, but sometimes incomplete | Clear and comprehensive, but occasionally verbose |
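The Fisher’s exact comparison reported in the first table above (p = 0.6012) can be reproduced from the correct-answer counts alone. The sketch below assumes the test was two-sided and applied to the 2×2 table of correct versus not-correct responses (14/3 for ChatGPT-3.5, 16/1 for ChatGPT-4.0); the source does not spell out this layout, so treat it as an assumption. The function name `fisher_exact_two_sided` is illustrative, and the implementation uses only the standard library.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lo = max(0, col1 - row2)
    hi = min(row1, col1)
    p_obs = prob(a)
    # Small relative tolerance guards against floating-point ties.
    return sum(
        prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-9)
    )


# Assumed layout: rows = model, columns = (correct, not correct).
p_value = fisher_exact_two_sided(14, 3, 16, 1)
```

Under these assumptions the computed value rounds to the 0.6012 shown in the table, which is consistent with the difference between the two models not being statistically significant at n = 17.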

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, M.-J.; Hsieh, L.-C.; Chen, C.-K. Evaluating ChatGPT’s Concordance with Clinical Guidelines of Ménière’s Disease in Chinese. Diagnostics 2025, 15, 2006. https://doi.org/10.3390/diagnostics15162006
