Abstract

Background/Objectives: Diabetic retinopathy (DR) remains one of the primary causes of preventable vision impairment worldwide, particularly among individuals with long-standing diabetes. The progressive damage of retinal microvasculature can lead to irreversible blindness if not detected and managed at an early stage. Therefore, the development of reliable, non-invasive, and automated screening tools has become increasingly vital in modern ophthalmology. With the evolution of medical imaging technologies, Optical Coherence Tomography (OCT) has emerged as a valuable modality for capturing high-resolution cross-sectional images of retinal structures. In parallel, machine learning has shown considerable promise in supporting early disease recognition by uncovering complex and often imperceptible patterns in image data. Methods: This study introduces a novel framework for the early detection of DR through multifractal analysis of OCT images. Multifractal features, extracted using a box-counting approach, provide quantitative descriptors that reflect the structural irregularities of retinal tissue associated with pathological changes. Results: A comparative evaluation of several machine learning algorithms was conducted to assess classification performance. Among them, the Multi-Layer Perceptron (MLP) achieved the highest predictive accuracy, with a score of , along with precision, recall, and F1-score values of , , and , respectively. Conclusions: These results highlight the strength of combining OCT imaging with multifractal geometry and deep learning methods to build robust and scalable systems for DR screening. The proposed approach could contribute significantly to improving early diagnosis, clinical decision-making, and patient outcomes in diabetic eye care.

1. Introduction

Diabetic retinopathy (DR), a serious blood vessel problem linked to diabetes, silently progresses and is a major cause of blindness in working-age adults worldwide. Because it often shows no early warning signs, diagnosing DR in time is a significant medical hurdle. The risk of permanent vision loss from undetected DR is a major concern for patients [1]. DR develops due to long-term high blood sugar that damages the blood vessels of the retina, potentially leading to leaks, bleeding, fatty deposits, and swelling. As it worsens, blood flow to the retina is reduced, sometimes triggering the growth of fragile new blood vessels that can cause more bleeding and scarring [2]. Therefore, finding DR in its initial stages, before lasting damage occurs, is crucial for managing the disease and preventing diabetes-related blindness.

The diagnostic approach for diabetic retinopathy is based on a variety of imaging techniques, each offering distinct insights into structural and vascular changes in the retina. Fundus photography, fluorescein angiography (FA), Optical Coherence Tomography (OCT), and Optical Coherence Tomography Angiography (OCTA) are the most commonly used modalities in clinical practice [3]. OCT has become a particularly powerful, non-invasive imaging tool that provides high-resolution cross-sectional views of the retinal architecture [4]. This modality enables clinicians to detect early microstructural changes such as retinal thickening, intraretinal fluid accumulation, and the presence of microaneurysms, all characteristic features of non-proliferative diabetic retinopathy (NPDR). Unlike traditional fundus imaging, OCT allows quantification of retinal layer thicknesses, offering objective criteria for monitoring disease stage and progression [5].

OCTA extends the capabilities of conventional OCT by visualizing the retinal microvasculature without the need for contrast agents. This technique is particularly valuable for assessing capillary non-perfusion, microaneurysms, and neovascularization [6,7]. Nevertheless, OCT remains more widely adopted due to its accessibility, faster acquisition time, and ability to detect early retinal edema before vascular abnormalities become apparent. Thus, although several imaging techniques are available for the diagnosis of diabetic retinopathy, OCT remains a cornerstone of early detection and monitoring due to its precision, safety, and detailed structural evaluation of the retina [8].

The human retinal vascular network presents a complex branching structure characterized by irregularity and self-similarity, which cannot be fully captured using classical Euclidean geometry. Fractal geometry offers a more appropriate mathematical approach to describing these complex biological forms [9]. Fractal analysis has been successfully applied to quantify the global complexity of retinal blood vessels, which is particularly useful in the context of diseases like diabetic retinopathy (DR), where vascular patterns are progressively disrupted [10].

However, the retinal vasculature is not uniformly complex; its local geometric features vary across regions. A single fractal dimension is therefore often insufficient to describe the spatial heterogeneity observed in pathological conditions [10], and this is where multifractal analysis becomes relevant. Fractal models help identify general patterns but often miss finer structural details, especially in complex biological systems. Multifractal analysis instead considers a wider range of scaling behaviors to describe how vascular structures change in different areas [11]. In diabetic retinopathy, even slight changes in how blood vessels are arranged or spaced can be important, and multifractal techniques help reveal these subtle shifts, which might otherwise go unnoticed as the disease gradually worsens. These alterations are reflected in the distribution of multifractal parameters such as the generalized dimensions ($D_q$) and the singularity spectrum ($f(\alpha)$), which provide deeper insight into the pathological processes affecting the retinal microcirculation [12,13]. Applied to retinal images, such as those acquired by fundus photography or Optical Coherence Tomography (OCT), multifractal descriptors can highlight early abnormalities that may not yet be clinically visible. For example, in non-proliferative diabetic retinopathy (NPDR), where microaneurysms and capillary occlusions begin to alter the vascular structure, multifractal analysis can identify deviations from normal complexity, supporting early diagnosis and disease staging [14].

Compared to fundus photography, which provides two-dimensional en face images limited to surface-level vascular features, Optical Coherence Tomography (OCT) offers cross-sectional, depth-resolved imaging of the retina with micrometer-scale axial resolution. This enables precise visualization of individual retinal layers, such as the inner plexiform layer, outer nuclear layer, and photoreceptor complex—regions that undergo subtle pathological changes in the early stages of diabetic retinopathy. As these microstructural alterations may not manifest as visible fundus abnormalities, OCT allows earlier and more sensitive detection of disease onset. Furthermore, the three-dimensional anatomical information captured by OCT provides richer input for classification models, enhancing feature extraction and improving predictive accuracy. For this reason, OCT has become a preferred modality for structural analysis in retinal diagnostics, particularly for early disease detection workflows.

In recent years, the rapid development of machine learning (ML) techniques has significantly impacted the field of medical image analysis, offering powerful tools for the early detection and classification of diabetic retinopathy [15]. Unlike traditional image processing approaches, which rely heavily on handcrafted features, machine learning algorithms are capable of learning complex representations directly from imaging data. In particular, supervised learning models have been widely employed to classify retinal images into different stages of DR based on annotated datasets, while unsupervised and semi-supervised methods have shown potential in exploring unlabeled data for pattern discovery [16].

These techniques have been applied across various imaging modalities, including fundus photography, Optical Coherence Tomography (OCT), and OCT Angiography (OCTA), to detect subtle structural and vascular changes indicative of disease progression [17]. The integration of ML models has enhanced diagnostic accuracy, consistency, and efficiency, and enabled the development of automated screening systems, especially valuable in low-resource or high-demand clinical environments. Furthermore, the combination of machine learning with advanced image analysis techniques—such as fractal and multifractal analysis—has opened new perspectives for capturing both global and local features of the retinal microarchitecture [18].

Several recent studies have explored the integration of multifractal feature extraction with classification algorithms to enhance the automated detection of retinal diseases, particularly diabetic retinopathy (DR). Lei Yang et al. (2024) [19] proposed a hybrid approach combining two-dimensional empirical mode decomposition and multifractal analysis to extract detailed morphological features from retinal images, with the aim of classifying diabetic retinopathy based on the complexity and variation of retinal structures. G. El Damrawi et al. (2020) [20] used multifractal geometry to analyze OCTA images, distinguishing between healthy and non-proliferative DR cases, and integrated a neural network classifier to support early automated diagnosis. Devanjali Relan et al. (2020) [21] applied multifractal analysis to high-resolution fundus images to evaluate its ability to distinguish between healthy, diabetic retinopathy, and glaucomatous cases. They emphasized the role of segmented vascular structures in capturing meaningful differences across subgroups. M. Rizzo et al. (2024) [13] investigated the predictive power of vascular tortuosity features in OCTA images for detecting diabetic retinopathy using machine learning algorithms. Their approach also incorporated additional vascular descriptors, including fractal and geometrical metrics, to enhance classification performance. Tiepei Zhu et al. (2019) [22] applied multifractal analysis to projection artifact-resolved OCTA images to assess microvascular impairments across different DR stages. They demonstrated that multifractal geometric features correlated strongly with disease severity and outperformed traditional Euclidean metrics in early DR detection. Mohamed M. Abdelsalam (2020) [13] proposed a methodology for early DR detection using OCTA images based on blood vessel reconstruction and the extraction of key vascular features such as intercapillary areas, FAZ perimeter, and vessel density. These features were used to train an artificial neural network to distinguish between diabetic subjects without DR and those with mild-to-moderate NPDR. The approach demonstrated high accuracy and speed, highlighting the value of combining image enhancement with neural network classification in early DR diagnosis. In a key study, Mohamed M. Abdelsalam et al. (2021) [12] proposed a framework for the early detection of non-proliferative diabetic retinopathy (NPDR) by analyzing macular OCTA images through multifractal geometry. The retinal microvascular network was characterized using multifractal parameters such as generalized dimensions and singularity spectrum, which reflect the complex, irregular vascular structures affected by early DR changes. These multifractal features served as discriminative inputs for a supervised machine learning classifier (Support Vector Machine), enabling the automatic identification of early NPDR. This approach highlighted the effectiveness of multifractal geometry in capturing subtle microvascular alterations, and its potential adaptability for recognizing other DR stages or vascular-related retinal diseases. The methodology closely aligns with our current research focus, emphasizing the value of multifractal descriptors in enhancing diagnostic precision from OCTA data.

Optical Coherence Tomography (OCT) has become an essential imaging modality in ophthalmology, particularly in the early detection and monitoring of diabetic retinopathy (DR). Its ability to provide non-invasive, high-resolution cross-sectional images of the retinal layers makes it a powerful tool for identifying subtle structural changes associated with the progression of DR, such as retinal thickening, microaneurysms, or fluid accumulation. Unlike traditional imaging techniques that primarily capture surface-level information, OCT enables clinicians and researchers to visualize the deeper microstructural alterations caused by diabetic complications [3,23].

In recent years, the integration of OCT imaging with automated analytical tools has gained growing attention. For instance, Kh. Tohidul Islam et al. (2019) [24] proposed a deep transfer learning framework to identify diabetic retinopathy from OCT images. Their approach involved retraining pre-existing deep learning models to serve as feature extractors, followed by the application of conventional classifiers for disease detection. This work highlighted the potential of combining OCT with artificial intelligence to enhance diagnostic accuracy and efficiency in DR detection. M. Sakthi Sree Devi et al. (2021) [25] presented a targeted approach for automated detection of DR through detailed retinal layer analysis. The authors used high-resolution OCT images to segment seven distinct retinal layers with the Graph-Cut method, an algorithm driven by gradient information. By extracting features such as retinal layer thickness and signs of neovascularization, their methodology distinguished between healthy and diabetic retinopathy subjects, further underscoring the potential of OCT-based structural biomarkers for early and accurate DR detection. A. Sharafeldeen et al. (2021) [26] proposed a CAD system for early diabetic retinopathy detection using OCT B-scans by combining morphological features (layer thickness, tortuosity) with high-order reflectivity markers. These features were extracted from segmented retinal layers and classified using SVMs and neural network fusion, highlighting the effectiveness of integrating anatomical and reflectivity cues for accurate early diagnosis. In the same context, Mahmoud Elgafi et al. (2022) [27] introduced an approach for diabetic retinopathy detection based on 3D feature extraction from OCT images. Their method segments individual retinal layers, from which volumetric features such as layer-wise reflectivity and thickness are derived. These features are then processed using a backpropagation neural network for classification. This study underlines the diagnostic value of 3D structural biomarkers within OCT scans, reinforcing the role of this imaging modality in the automated early-stage identification of DR.

Our study presents a novel and comprehensive framework for the early detection of Diabetic Retinopathy using Optical Coherence Tomography (OCT) images, addressing a gap in current research, which predominantly focuses on Optical Coherence Tomography Angiography (OCTA) due to its enhanced vascular detail but limited availability. Our approach leverages a large and accessible OCT dataset, enabling broader clinical applicability. The preprocessing pipeline, developed in MATLAB R2022a, ensures consistent image quality and normalization. We then apply multifractal analysis using the box-counting method to extract meaningful textural descriptors that characterize pathological alterations in retinal structure. These descriptors are subsequently used to train and evaluate a Multi-Layer Perceptron (MLP) classifier, demonstrating robust performance. To the best of our knowledge, this is the first study to integrate multifractal analysis of OCT images with an MLP classifier for DR detection, offering a scalable and practical solution for early-stage screening.

The remainder of this paper is organized as follows: Section 2 presents the proposed methodology. Section 3 reports and discusses the experimental results, highlighting the classification performance and comparing it with other models. Finally, Section 4 concludes the study and outlines possible directions for future research.

2. Methodology

The proposed approach introduces a novel and effective methodology for the early detection of diabetic retinopathy (DR) based on the structural analysis of Optical Coherence Tomography (OCT) images. Unlike prior works that rely primarily on OCTA or fundus photography, our method leverages the anatomical information provided by OCT scans, which are more accessible in standard clinical settings and less affected by motion artifacts or contrast issues.

One of this study’s key innovations is applying multifractal geometry to OCT data, allowing for the quantification of complex spatial patterns and irregularities that emerge in the early stages of DR [25]. This analysis enables the detection of microstructural retinal changes that may not be easily visible through conventional imaging or manual inspection.

In addition, the use of a neural-based classification model (MLP) contributes to enhanced detection accuracy, capturing nonlinear relationships between subtle image patterns associated with disease onset. Compared to traditional imaging-based DR detection methods that often require vascular visibility (such as OCTA or fundus images), our approach provides a non-invasive, structure-based, and clinically scalable solution for early detection.

Furthermore, the methodology has been validated on a large and balanced dataset, ensuring robustness, generalizability, and suitability for deployment in real-world diagnostic workflows.

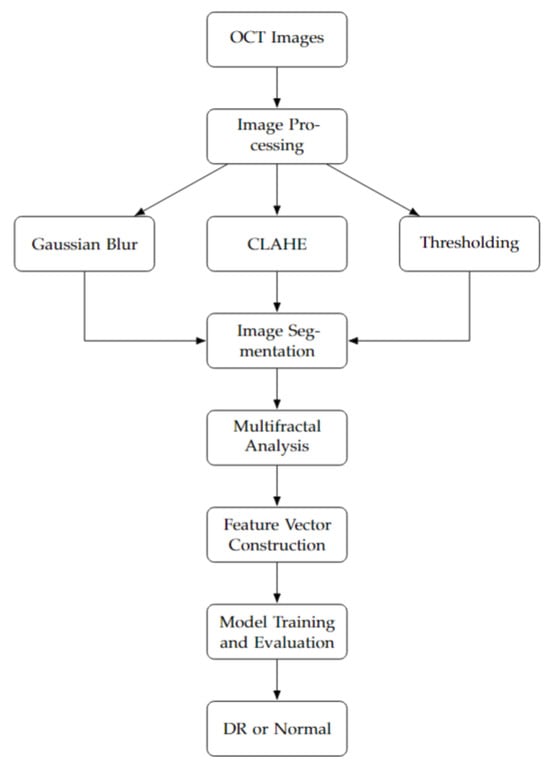

The flowchart in Figure 1 outlines the full methodology of our proposed diabetic retinopathy detection system. Beginning with a balanced dataset of 6000 OCT images, we first apply several preprocessing steps, including Gaussian blur, local contrast enhancement (CLAHE), thresholding, and binarization. These steps enhance structural visibility and reduce noise, preparing the images for analysis. We then perform multifractal analysis to characterize the complex spatial structures present in the OCT scans. The resulting descriptors are compiled into a structured dataset, which undergoes normalization and K-fold cross-validation to ensure robustness and mitigate overfitting.

Figure 1. Our methodology flowchart.

A range of machine learning classifiers are trained and evaluated, and the MLP model is selected for its superior performance in classifying images into DR and normal classes. This model successfully captures the nonlinear relationships within multifractal patterns, making it well-suited for early DR screening.
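The normalization and K-fold cross-validation steps in the flowchart can be illustrated with a minimal pure-Python sketch. The source does not state the number of folds or the normalization scheme, so `k=5` and min-max scaling are assumptions made here for illustration, and all function names are hypothetical. The scaling statistics are fitted on the training folds only, so that no information from the held-out fold leaks into training.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def fit_min_max(rows):
    """Per-feature (min, max) computed from the training rows only."""
    return [(min(col), max(col)) for col in zip(*rows)]

def apply_min_max(rows, stats):
    """Scale features with previously fitted statistics (assumed scheme)."""
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, (lo, hi) in zip(row, stats)]
        for row in rows
    ]

def cross_validation_splits(features, k=5, seed=0):
    """Yield (train_rows, test_rows, train_idx, test_idx) for each fold."""
    folds = k_fold_indices(len(features), k, seed)
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        stats = fit_min_max([features[j] for j in train_idx])
        train = apply_min_max([features[j] for j in train_idx], stats)
        test = apply_min_max([features[j] for j in test_idx], stats)
        yield train, test, train_idx, test_idx
```

Each sample appears in exactly one held-out fold, so averaging a metric over the folds uses every image once for evaluation.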

2.1. OCT Dataset

In this study, we used the publicly available Retinal OCT Image Classification—C8 dataset compiled by Obuli Sai Naren (2021) [28]. It consists of 24,000 high-resolution OCT images categorized into eight retinal conditions. The images were collected from multiple open-access sources (e.g., Kaggle, OpenICPSR), then preprocessed and class-balanced to ensure uniform representation across classes.

For the purpose of this work, we selected two categories (normal and diabetic retinopathy) to focus specifically on binary classification for early DR detection using OCT-based structural features. The multi-source composition of the dataset ensures a certain degree of pathological and imaging variability, which supports the development of generalizable models. However, detailed clinical metadata such as patient demographics are not provided. While this does not compromise the current study’s experimental scope, future validation on clinically annotated and demographically stratified datasets would help further assess the external applicability of the proposed approach in broader clinical environments.

2.2. OCT Image Processing

To enhance and prepare OCT images for further analysis and classification, a comprehensive preprocessing pipeline was implemented using the open-source Fiji platform (a distribution of ImageJ) through a custom-developed macro script [29]. The main goals of this pipeline were to enhance image contrast, suppress noise, and segment retinal structures accurately to isolate clinically relevant features indicative of diabetic retinopathy (DR). Preprocessing is a crucial step in medical image analysis as it improves the quality and interpretability of the input data, which in turn enhances the performance of subsequent feature extraction and classification algorithms. Each image in the dataset underwent the following sequence of processing operations:

2.2.1. Gaussian Blurring

To suppress high-frequency noise while retaining important structural details, a Gaussian blur was applied with a standard deviation ($\sigma$) of 2. The two-dimensional Gaussian function used for convolution is defined as:

$$G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)$$

This low-pass filter smooths pixel intensity variations and aids in reducing noise and small-scale artifacts that could interfere with segmentation [30].
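As an illustration of this step, the sketch below builds a discrete, normalized Gaussian kernel with a standard deviation of 2 and applies it to a small image stored as a list of lists. It is not the Fiji implementation: the truncation radius of three standard deviations and the border replication are assumptions, and the function names are hypothetical.

```python
import math

def gaussian_kernel(sigma=2.0, radius=None):
    """Normalized 2D kernel proportional to exp(-(x^2 + y^2) / (2 sigma^2))."""
    if radius is None:
        radius = int(math.ceil(3 * sigma))  # assumption: truncate at 3 sigma
    size = 2 * radius + 1
    kernel = [
        [math.exp(-((x - radius) ** 2 + (y - radius) ** 2) / (2 * sigma ** 2))
         for x in range(size)]
        for y in range(size)
    ]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]

def blur(image, kernel):
    """Filter a 2D list image with the kernel, replicating border pixels."""
    h, w = len(image), len(image[0])
    r = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-r, r + 1):
                yy = min(max(y + dy, 0), h - 1)
                for dx in range(-r, r + 1):
                    xx = min(max(x + dx, 0), w - 1)
                    acc += image[yy][xx] * kernel[dy + r][dx + r]
            out[y][x] = acc
    return out
```

Because the kernel weights sum to one, a uniform region keeps its intensity, while isolated bright or dark pixels are averaged with their neighbors.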

2.2.2. Contrast Enhancement

Local contrast enhancement was performed using the CLAHE (Contrast-Limited Adaptive Histogram Equalization) algorithm. Unlike traditional histogram equalization, CLAHE operates on small contextual regions (tiles) of the image and redistributes pixel intensities to enhance local contrast while limiting amplification via a predefined clip limit. This technique is especially effective for medical images in which subtle differences in tissue structures are diagnostically significant [31]. CLAHE was configured with a block size of 127, histogram bins of 256, and a clip limit of 3, which enhanced the visibility of retinal layers and vascular abnormalities in darker image regions [32]. The entire pipeline was implemented as a Fiji macro, which ensured batch processing of large image sets while maintaining consistency and reproducibility of the preprocessing steps (Algorithm 1) [33].

Algorithm 1. Image preprocessing with Gaussian blur and CLAHE.
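To make the role of the clip limit concrete, the following sketch performs contrast-limited histogram equalization on a single tile of 8-bit pixels. It is a simplified illustration and not the authors' Fiji macro: full CLAHE also interpolates the mappings of neighboring tiles, the excess counts are redistributed in a single pass here, and interpreting the clip limit as a multiple of the mean bin count is an assumption. The function names are hypothetical.

```python
def clipped_equalization_map(tile, n_bins=256, clip_limit=3.0):
    """Lookup table (bin index -> output level in 0..255) for one tile."""
    pixels = [v for row in tile for v in row]
    hist = [0] * n_bins
    for v in pixels:
        hist[v * n_bins // 256] += 1  # assumes 8-bit integer pixels
    # Clip each bin at clip_limit times the mean bin count (assumed convention)
    limit = clip_limit * len(pixels) / n_bins
    excess = sum(max(h - limit, 0.0) for h in hist)
    # Redistribute the clipped excess uniformly over all bins (single pass)
    clipped = [min(h, limit) + excess / n_bins for h in hist]
    total = sum(clipped)
    lut, running = [], 0.0
    for h in clipped:
        running += h
        lut.append(round(255 * running / total))
    return lut

def equalize_tile(tile, n_bins=256, clip_limit=3.0):
    """Apply the clipped equalization mapping to every pixel of the tile."""
    lut = clipped_equalization_map(tile, n_bins, clip_limit)
    return [[lut[v * n_bins // 256] for v in row] for row in tile]
```

Clipping bounds the slope of the cumulative mapping, which is what prevents nearly uniform regions from having their noise strongly amplified.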

2.3. Multifractal Analysis

Multifractal analysis is a powerful mathematical framework used to characterize complex patterns and textures that exhibit scale-invariant properties. In the context of medical imaging, particularly OCT images, multifractal analysis allows for the quantification of structural heterogeneity and irregularity in retinal tissues, which may correlate with pathological changes due to diabetic retinopathy. To perform this analysis, we used the Multifrac v1.0.0 plugin—an extension developed for the ImageJ platform—which supports the multifractal characterization of 2D and 3D image data. The plugin is freely available and can be accessed via the official ImageJ v2 website (https://imagej.net/ij/index.html, accessed on 27 December 2024). Multifrac facilitates the computation of multifractal parameters using the box-counting algorithm, a widely adopted approach for fractal dimension estimation. This tool was chosen due to its reliability, ease of integration with ImageJ, and its ability to handle grayscale images with options for preprocessing such as resizing, binarization, and scale restriction. It provides automated analysis and saves results along with processed images into structured directories. For more technical details, readers are referred to the original documentation and publication by Torre et al. (2020) [34].

In this study, we applied 2D multifractal analysis to our preprocessed OCT images using the default settings of the plugin, focusing on the white pixels after binarization to emphasize retinal structures of interest. The multifractal analysis yields a set of features that provide insight into the spatial complexity and scaling behavior of the image textures. The primary descriptors extracted through this process are as follows:

- Generalized dimensions ($D_q$):

  - Box-counting dimension ($D_0$).

  - Information dimension ($D_1$).

  - Correlation dimension ($D_2$).

- Singularity spectrum ($f(\alpha)$): Characterizes the distribution of singularities (local scaling exponents) in the image and captures its multifractal nature.

These descriptors form the feature vector used in subsequent classification stages. In the following subsections, we will present the mathematical foundations and interpretation of these multifractal features.

2.4. Multifractal Features Extraction

Multifractal analysis provides a rich description of complex structures by characterizing the spatial heterogeneity of singularities in an image. In our study, two types of features are extracted from each OCT image: the generalized dimensions and the singularity spectrum.

2.4.1. Generalized Dimensions

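The generalized dimensions $D_q$ characterize how measures (such as pixel intensity distributions) scale across different orders $q$ [35]. They are defined by the formula

$$D_q = \frac{1}{q - 1} \lim_{\varepsilon \to 0} \frac{\ln \sum_{i} p_i(\varepsilon)^{q}}{\ln \varepsilon}$$

where

- $\varepsilon$ is the box size;

- $p_i(\varepsilon)$ is the probability measure in the $i$-th box.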

Different generalized dimensions highlight different aspects of the image structure:

- Box-counting dimension () for ():where is the number of non-empty boxes. measures the global geometric complexity of the OCT image. Higher complexity may indicate microstructural alterations such as exudates, microaneurysms, or early neovascularization characteristic of proliferative stages of DR.

- Information dimension () for ():reflects the heterogeneity in the distribution of intensity values. Increased irregularity in DR lesions (e.g., localized edema or deposits) leads to a higher entropy captured by .

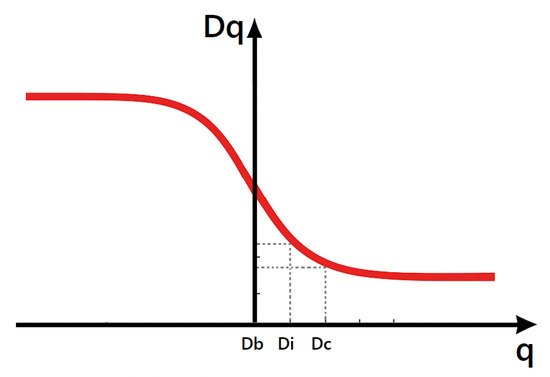

- Correlation dimension () for ():emphasizes clustering patterns. In DR-affected regions, pixel intensities often show strong local correlations due to lesions and structural distortions in retinal layers, making a sensitive descriptor for disease severity (Figure 2).

Figure 2. Generalized dimension curve $D_q$: $D_0$, $D_1$, and $D_2$ extracted at $q = 0$, $q = 1$, and $q = 2$, respectively.
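The box-counting estimate of the generalized dimensions can be sketched in a few lines of pure Python. This is an illustrative sketch and not the Multifrac plugin's implementation: the box sizes, the least-squares fit over all scales, and the function names are assumptions, and the input is assumed to be a binary image (list of lists of 0/1) containing at least one foreground pixel. For each box size, the fraction of foreground pixels per box plays the role of the probability measure, and the dimension is the slope of the partition term against the logarithm of the box size.

```python
import math

def box_masses(image, box):
    """Fraction of foreground pixels in each non-empty box of side `box`."""
    counts = {}
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value:
                key = (y // box, x // box)
                counts[key] = counts.get(key, 0) + 1
    total = sum(counts.values())
    return [c / total for c in counts.values()]

def partition_term(masses, q):
    """Quantity whose slope against ln(box size) estimates D_q."""
    if abs(q - 1.0) < 1e-9:
        # Limit q -> 1: entropy form used for the information dimension
        return sum(p * math.log(p) for p in masses)
    return math.log(sum(p ** q for p in masses)) / (q - 1.0)

def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def generalized_dimension(image, q, box_sizes=(1, 2, 4, 8)):
    """Estimate D_q from a binary image (box sizes are an assumption)."""
    xs = [math.log(b) for b in box_sizes]
    ys = [partition_term(box_masses(image, b), q) for b in box_sizes]
    return slope(xs, ys)
```

As a sanity check, a completely filled square yields a dimension of 2 for every order, and a single straight line yields 1; real OCT textures fall between such limiting cases and show a dependence on the order.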

2.4.2. Singularity Spectrum

The singularity spectrum $f(\alpha)$ provides a deeper characterization of the distribution of singularities. It describes how different singularity strengths are distributed spatially within the image [36]. The relationship between $q$, $\alpha$, and $f(\alpha)$ is given by the Legendre transform

$$\tau(q) = (q - 1)\,D_q, \qquad \alpha(q) = \frac{d\tau(q)}{dq}, \qquad f(\alpha) = q\,\alpha(q) - \tau(q)$$

where

- $\tau(q)$ is the mass exponent.

- $\alpha$ is the Hölder exponent (local regularity).

- $f(\alpha)$ is the fractal dimension of the set of points with singularity strength $\alpha$.

The following parameters are extracted from the spectrum:

- $\alpha_{\min}$: Minimum singularity strength; indicates the most irregular structures (e.g., sharp edges or abrupt intensity changes from hemorrhages or exudates).

- $\alpha_{\max}$: Maximum singularity strength; reflects the smoothest regions (healthy retinal layers).

- $\alpha_0$: Value of $\alpha$ corresponding to the maximum of $f(\alpha)$; represents the most dominant singularity type.

- $f_{\max}$: Maximum height of the singularity spectrum; related to the richness or abundance of dominant structures.

- Spectrum width: $\Delta\alpha = \alpha_{\max} - \alpha_{\min}$; quantifies the degree of multifractality. Wider spectra suggest greater textural diversity, typical in advanced DR stages.

- Symmetric shift: measures the asymmetry of the spectrum; positive or negative shifts may indicate prevalence of finer (positive shift) or coarser (negative shift) textures, respectively.
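The spectrum parameters listed above can be obtained numerically from a sampled generalized dimension curve. The sketch below is illustrative only: it assumes the standard Legendre transform with mass exponent (q - 1) times the generalized dimension, uses finite differences for the derivative, and measures asymmetry as the difference between the right and left half-widths of the spectrum, which is an assumed definition that may differ from the one used by the plugin. The binomial cascade helper serves only as synthetic test input, and all function names are hypothetical.

```python
import math

def singularity_spectrum(qs, dq):
    """Return (alpha, f) from tau(q) = (q - 1) * D_q via finite differences."""
    tau = [(q - 1.0) * d for q, d in zip(qs, dq)]
    alpha, f = [], []
    for i in range(len(qs)):
        lo, hi = max(i - 1, 0), min(i + 1, len(qs) - 1)
        a = (tau[hi] - tau[lo]) / (qs[hi] - qs[lo])  # alpha = d tau / d q
        alpha.append(a)
        f.append(qs[i] * a - tau[i])                 # f = q * alpha - tau
    return alpha, f

def spectrum_summary(alpha, f):
    """Summary descriptors of a sampled singularity spectrum."""
    a_min, a_max = min(alpha), max(alpha)
    i0 = max(range(len(f)), key=f.__getitem__)
    a0 = alpha[i0]
    return {
        "alpha_min": a_min,
        "alpha_max": a_max,
        "alpha_0": a0,
        "f_max": f[i0],
        "width": a_max - a_min,
        # Assumed asymmetry measure: right half-width minus left half-width
        "asymmetry": (a_max - a0) - (a0 - a_min),
    }

def binomial_dq(q, p=0.3):
    """Analytic D_q of a binomial cascade (synthetic input for illustration)."""
    if abs(q - 1.0) < 1e-12:
        return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))
    return -math.log2(p ** q + (1 - p) ** q) / (q - 1.0)

qs = [i * 0.5 for i in range(-20, 21)]
alpha, f = singularity_spectrum(qs, [binomial_dq(q) for q in qs])
summary = spectrum_summary(alpha, f)
```

For a monofractal, where the generalized dimension is the same for every order, the spectrum collapses to a single point and the width is zero; a non-zero width therefore signals multifractality.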

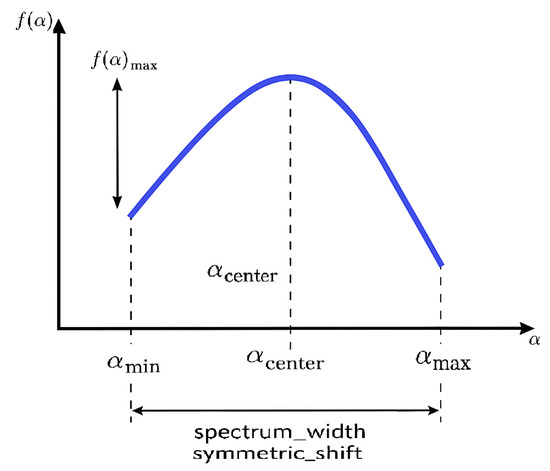

Variations in singularity spectrum parameters, specifically $\alpha_{\min}$, $\alpha_{\max}$, and the spectrum width $\Delta\alpha$, serve as sensitive indicators of structural alterations within the retinal layers induced by diabetic retinopathy (DR) [12,19] (Figure 3).

Figure 3. Singularity spectrum: illustrating $\alpha_{\min}$, $\alpha_{\max}$, $\alpha_0$, $f_{\max}$, spectrum width, and symmetric shift.

The minimum singularity exponent $\alpha_{\min}$ is typically associated with regions of high-intensity concentration, corresponding to densely active pathological zones such as microaneurysms or neovascular tufts. Conversely, $\alpha_{\max}$ reflects sparsely distributed, low-density regions, which may be indicative of vessel dropout or retinal thinning [12,19].

As DR progresses from early non-proliferative stages to more advanced forms, spatial heterogeneity within the retinal microvasculature increases. This progression is quantitatively reflected in the broadening of the singularity spectrum, observed as an increase in the width $\Delta\alpha$, suggesting a greater diversity of local scaling behaviors. In early DR, only subtle shifts in $\alpha_{\min}$ and $\alpha_{\max}$ are typically observed, corresponding to microstructural changes that may remain undetectable in conventional clinical imaging.

In contrast, moderate to severe DR stages often exhibit a markedly asymmetric singularity spectrum, highlighting uneven pathological alterations across different retinal regions [19].

Thus, multifractal parameters do not merely quantify geometric complexity; they encapsulate clinically significant information regarding tissue integrity. A broader and more asymmetric singularity spectrum is strongly associated with increased structural irregularity and disease progression. Consequently, these descriptors provide a robust quantitative framework for the early diagnosis, staging, and monitoring of diabetic retinopathy based on OCT image analysis [23].

Table 1 summarizes the multifractal features extracted from OCT images and highlights their clinical relevance for diabetic retinopathy (DR) detection. These metrics provide quantitative insights into microstructural degradation, textural irregularities, and pathological variability in retinal tissues, serving as valuable biomarkers to differentiate between healthy and diabetic-affected retinas.

Table 1. Summary of extracted multifractal features and their clinical relevance in diabetic retinopathy detection.

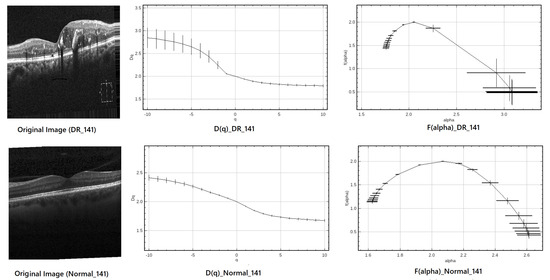

2.4.3. Case Study: Multifractal Analysis of DR and Normal OCT Images (Index 141)

The discriminatory capability of multifractal analysis in retinal OCT images is illustrated through two representative case studies: one pathological case (DR_141) corresponding to a patient with diabetic retinopathy, and one healthy control (Normal_141). For each case, the original OCT scan, the computed generalized dimension curve $D_q$, and the singularity spectrum $f(\alpha)$ are presented in Figure 4. These examples clearly highlight the differences in multifractal properties between healthy and pathological retinal tissues.

Figure 4. Multifractal analysis of DR_141 (top) and Normal_141 (bottom). Left: original OCT image; center: $D_q$ curve; right: singularity spectrum $f(\alpha)$.

The multifractal descriptors extracted from both images are summarized in Table 2, showing clear differences between the pathological and healthy samples.

Table 2. Comparison of multifractal features between the DR case (DR_141) and healthy control (Normal_141).

As presented in Table 2, the multifractal descriptors extracted from a representative diabetic retinopathy case (DR_141) and healthy control (Normal_141) exhibit relatively close numerical values for specific parameters, such as the box-counting dimension ($D_0$) and the maximum of the multifractal spectrum ($f_{\max}$). At first glance, this apparent similarity might suggest a limited discriminative capacity when considering individual descriptors in isolation. However, such observations must be interpreted within the context of a higher-dimensional feature space, where even slight variations across multiple parameters can contribute to meaningful class differentiation.

Indeed, multifractal features are inherently interrelated, and their diagnostic relevance often emerges not from isolated values but from the way they interact and co-vary with one another. For instance, differences in $\alpha_{\min}$, $\alpha_{\max}$, the spectrum width ($\Delta\alpha$), and the symmetric shift reveal nuanced changes in the local singularity structure of the retinal texture. These subtle alterations, although not visually striking in a univariate comparison, may indicate underlying pathological changes.

To leverage the full potential of these interdependencies, a machine learning classifier is introduced. Unlike traditional threshold-based methods, this classifier can model complex, nonlinear relationships across all features simultaneously. This allows it to capture latent patterns and interactions that are not evident through direct inspection of individual descriptors, thereby providing a more comprehensive and robust framework for distinguishing between normal and pathological retinal images.

From the $D_q$ curves, both diabetic retinopathy (DR) and healthy retinal images exhibit a decreasing trend with increasing $q$, confirming their multifractal nature. The steeper decline observed in DR cases indicates greater spatial irregularity and structural disruption, which are hallmark characteristics of diabetic retinopathy. This difference is further highlighted by the $f(\alpha)$ spectrum: the DR spectrum appears broader and more asymmetric, as quantified by the spectrum width and symmetry shift reported in Table 2.

The left-skewness of the DR spectrum suggests a dominance of coarse singularities—areas of low local regularity—typically associated with pathological changes such as hemorrhages or neovascularization. In contrast, the healthy retina presents a narrower and more symmetric spectrum, reflecting a more regular and homogeneous retinal architecture.

Overall, these findings underscore the discriminative power of multifractal descriptors—especially spectrum width and asymmetry—in differentiating retinal abnormalities. This supports their potential utility in the early detection and screening of diabetic retinopathy.
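As an illustration of how $D_q$ and $f(\alpha)$ curves of this kind can be obtained, the following Python sketch applies the moment (box-counting) method to a grayscale image and derives the singularity spectrum through a Legendre transform. It is not the implementation used in this study: the function names, the box sizes, the range of $q$, and the use of pixel intensity as the measure are all assumptions made for illustration.

```python
import numpy as np


def box_probabilities(image, size):
    """Normalized mass of each non-empty size x size box (hypothetical helper)."""
    h, w = image.shape
    h_trim, w_trim = h - h % size, w - w % size  # drop incomplete border boxes
    blocks = image[:h_trim, :w_trim].reshape(
        h_trim // size, size, w_trim // size, size
    )
    mass = blocks.sum(axis=(1, 3)).ravel()
    mass = mass[mass > 0]  # empty boxes are excluded (needed for q < 0)
    return mass / mass.sum()


def multifractal_descriptors(image, qs, sizes=(2, 4, 8, 16, 32)):
    """Return D_q, alpha(q) and f(alpha(q)) estimated by the moment method."""
    image = np.asarray(image, dtype=float)
    qs = np.asarray(qs, dtype=float)
    # Box size relative to the image side; only the slope in log-log space matters.
    log_eps = np.log(np.asarray(sizes, dtype=float) / max(image.shape))
    probs = [box_probabilities(image, s) for s in sizes]

    tau = np.empty_like(qs)
    dq = np.empty_like(qs)
    for k, q in enumerate(qs):
        if np.isclose(q, 1.0):
            # Information dimension: slope of sum(p * ln p) against ln(eps).
            entropy_like = [np.sum(p * np.log(p)) for p in probs]
            dq[k] = np.polyfit(log_eps, entropy_like, 1)[0]
            tau[k] = 0.0
        else:
            # Mass exponent tau(q): slope of ln(sum p^q) against ln(eps).
            log_z = [np.log(np.sum(p ** q)) for p in probs]
            tau[k] = np.polyfit(log_eps, log_z, 1)[0]
            dq[k] = tau[k] / (q - 1.0)

    alpha = np.gradient(tau, qs)   # Hoelder exponent alpha(q) = d tau / d q
    f_alpha = qs * alpha - tau     # Legendre transform
    return dq, alpha, f_alpha


# Placeholder input: a uniform image, for which the measure is monofractal.
uniform = np.ones((64, 64))
q_values = np.linspace(-5, 5, 21)
dq, alpha, f_alpha = multifractal_descriptors(uniform, q_values)
spectrum_width = alpha.max() - alpha.min()
```

For the uniform placeholder image the sketch yields $D_q = 2$ for every $q$ and a spectrum of zero width; a larger spread of `alpha` corresponds to the broader spectra discussed above.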

2.5. Multi-Layer Perceptron (MLP)

In this study, the multifractal features extracted from OCT images—such as the box-counting dimension, information dimension, and correlation dimension—were used as input to a Multi-Layer Perceptron (MLP) for the classification of diabetic retinopathy. The MLP is a supervised learning model composed of an input layer, multiple hidden layers, and an output layer, where each neuron in a layer is connected to neurons in the next via weighted connections and biases [37].

We employed the MLP classifier from the scikit-learn library with the following configuration: two hidden layers consisting of 128 and 64 neurons, respectively, a ReLU activation function, an Adam optimizer, and a maximum of 1000 iterations for training. The network architecture is designed to learn complex, nonlinear mappings from the multifractal feature space to the binary output labels (healthy or diabetic retinopathy).
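The configuration described above can be written down in scikit-learn roughly as follows. This is a minimal sketch: the feature matrix and labels are random placeholders standing in for the nine multifractal descriptors, and `random_state` is an assumption added only to make the example reproducible.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

# Placeholder data: 200 samples with 9 features each (not the study's data).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 9))
y = (X[:, 0] + X[:, 1] > 0).astype(int)  # synthetic binary labels

model = MLPClassifier(
    hidden_layer_sizes=(128, 64),  # two hidden layers: 128 and 64 neurons
    activation="relu",             # ReLU in the hidden layers
    solver="adam",                 # Adam optimizer
    max_iter=1000,                 # maximum number of training iterations
    random_state=0,                # assumption: fixed seed for reproducibility
)
model.fit(X, y)

# Class probabilities for the first five samples (columns: class 0, class 1).
proba = model.predict_proba(X[:5])
```

For a binary target, scikit-learn sets the output activation of `MLPClassifier` to the logistic function, which can be checked through the fitted attribute `out_activation_`.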

The activation of neurons in each hidden layer is governed by the Rectified Linear Unit (ReLU) function, defined as

$$\mathrm{ReLU}(x) = \max(0, x)$$

The forward pass of the network for a given hidden layer h is expressed as

$$a^{(h)} = \mathrm{ReLU}\left(W^{(h)} a^{(h-1)} + b^{(h)}\right)$$

where $a^{(h)}$ denotes the activation output at the h-th hidden layer, $W^{(h)}$ is the weight matrix, and $b^{(h)}$ is the bias vector. The final output layer produces a probability score used for classification, using a logistic sigmoid function when binary classification is required:

$$\hat{y} = \sigma\left(W^{(\mathrm{out})} a^{(H)} + b^{(\mathrm{out})}\right)$$

where $a^{(H)}$ represents the activations from the last hidden layer, and $\sigma$ is the sigmoid function.

The use of the Adam optimizer allows for adaptive learning rates, contributing to faster and more stable convergence during training. This MLP configuration proved effective in learning discriminative patterns from the multifractal features, facilitating accurate classification between healthy and diabetic retinopathy cases.
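The forward pass described in this section can also be sketched directly in NumPy. The layer sizes follow the 9-128-64-1 layout implied by the text, while the randomly initialized weights and the helper names are purely illustrative assumptions, not trained parameters.

```python
import numpy as np


def relu(x):
    """Rectified Linear Unit: element-wise max(0, x)."""
    return np.maximum(0.0, x)


def sigmoid(z):
    """Logistic sigmoid used at the output layer."""
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, weights, biases):
    """Apply ReLU hidden layers followed by a sigmoid output layer."""
    a = x
    for W, b in zip(weights[:-1], biases[:-1]):
        a = relu(W @ a + b)                       # hidden layer activation
    return sigmoid(weights[-1] @ a + biases[-1])  # output probability


# Illustrative random parameters for a 9 -> 128 -> 64 -> 1 network.
rng = np.random.default_rng(0)
layer_sizes = [9, 128, 64, 1]
weights = [rng.normal(scale=0.1, size=(n_out, n_in))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

x = rng.normal(size=9)  # placeholder feature vector
y_hat = forward(x, weights, biases)
```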

3. Results and Discussion

3.1. Hyperparameter Optimization and Experimental Setup

In order to ensure a fair and robust comparison across all classifiers, extensive hyperparameter tuning was conducted. The hyperparameters were selected through a combination of empirical testing, grid search, and literature-guided heuristics. Multiple experiments were carried out for each model to identify the configuration that yields the best trade-off between accuracy and generalization performance. The final optimized values were chosen based on their consistent performance across different trials.

Table 3 summarizes the hyperparameters selected for each classifier. These configurations were fixed for all subsequent evaluations to maintain consistency and comparability between models. Special care was taken to prevent overfitting or underfitting, with regularization parameters and model complexities adjusted accordingly.

Table 3.

Optimized hyperparameters and final configurations for all classifiers.

All experiments were conducted on the Kaggle platform using a P100 GPU runtime environment to accelerate training, especially for the deep learning-based model (MLP Neural Network). A stratified K-fold cross-validation strategy (with $K = 5$) was employed to enhance the reliability of the results. For each fold, the dataset was split into 80% for training and 20% for testing, ensuring that the distribution of the classes remained consistent across all splits. This consistent experimental design across all models ensures that performance comparisons are both fair and statistically meaningful.
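A stratified 5-fold split of this kind can be produced with scikit-learn as sketched below. The data are placeholders, and the `shuffle` and `random_state` settings are assumptions, since the text does not state them.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Placeholder data: 100 samples, 9 features, two balanced classes.
X = np.zeros((100, 9))
y = np.array([0] * 50 + [1] * 50)

# Assumption: shuffling with a fixed seed; K = 5 gives an 80%/20% split per fold.
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
splits = list(skf.split(X, y))

for fold, (train_idx, test_idx) in enumerate(splits, start=1):
    positives = int(y[test_idx].sum())
    print(f"fold {fold}: train={len(train_idx)}, test={len(test_idx)}, "
          f"positives in test={positives}")
```

Each test fold keeps the class ratio of the full dataset, which is the property the stratification is meant to guarantee.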

3.2. Evaluation Metrics

To objectively assess the performance of the classification models, a comprehensive set of evaluation metrics was utilized. These metrics offer insights into different aspects of model performance, particularly in medical imaging where both false positives and false negatives carry significant implications. The following metrics were considered:

- Accuracy: Represents the proportion of correctly predicted instances (both positive and negative) over the total number of instances. It is defined as

  $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

  where $TP$ = true positives; $TN$ = true negatives; $FP$ = false positives; and $FN$ = false negatives.

- Precision: Measures the ability of the classifier to return only relevant instances among the predicted positives. High precision indicates a low false positive rate:

  $$\mathrm{Precision} = \frac{TP}{TP + FP}$$

- Recall (Sensitivity): Represents the model’s ability to correctly identify all relevant instances among the actual positives. It is also known as Sensitivity:

  $$\mathrm{Recall} = \frac{TP}{TP + FN}$$

- F1-Score: The harmonic mean of precision and recall, providing a balanced metric that accounts for both false positives and false negatives:

  $$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

- Specificity: Indicates the proportion of true negatives correctly identified among all actual negative cases. This metric is crucial in medical diagnostics where reducing false alarms is important:

  $$\mathrm{Specificity} = \frac{TN}{TN + FP}$$

- 95% Confidence Interval (CI): Represents the range within which the true value of the performance metric is expected to lie with 95% certainty. It accounts for variability across cross-validation folds and quantifies the robustness of the model.

- p-value: Used to assess the statistical significance of performance differences between models. A low p-value (typically <0.05) suggests that the observed performance difference is unlikely due to chance and, therefore, statistically significant.

These metrics, when analyzed together, provide a holistic view of each model’s diagnostic capability. Especially in the context of medical imaging, high sensitivity ensures that pathological cases are not missed, while high specificity avoids unnecessary misdiagnosis. Furthermore, statistical metrics like confidence intervals and p-values reinforce the reliability of the reported results.
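The metrics listed above follow directly from the four confusion-matrix counts, as the short sketch below shows. The counts and fold accuracies are hypothetical, and the confidence interval uses a Student t interval over the fold scores, which is one common choice and an assumption here, since the text does not specify how the interval was computed.

```python
import math
import statistics


def classification_metrics(tp, tn, fp, fn):
    """Compute the standard binary metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }


def confidence_interval_95(fold_scores, t_crit=2.776):
    """t-based 95% interval over fold scores.

    Assumption: t_crit = 2.776 is the two-sided 95% critical value for
    4 degrees of freedom, i.e. for 5 folds; change it for other K.
    """
    mean = statistics.mean(fold_scores)
    sem = statistics.stdev(fold_scores) / math.sqrt(len(fold_scores))
    return mean - t_crit * sem, mean + t_crit * sem


# Hypothetical counts and fold accuracies, for illustration only.
metrics = classification_metrics(tp=90, tn=80, fp=20, fn=10)
ci_low, ci_high = confidence_interval_95([0.96, 0.97, 0.98, 0.97, 0.97])
```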

3.3. Feature Extraction Using Multifractal Analysis

Multifractal analysis was performed to capture the geometric complexity and textural variations of retinal images. This method provides comprehensive quantification of local singularities and global structures in images, making it particularly suitable for detecting pathological changes such as diabetic retinopathy (DR).

From each image, a total of nine multifractal features were extracted: generalized fractal dimensions ($D_0$, $D_1$, $D_2$), singularity spectrum features ($\alpha_{\min}$, $\alpha_{\max}$, $\alpha_{\mathrm{center}}$, $f(\alpha)_{\max}$), and derived metrics (spectrum width, symmetric shift). These features serve as compact and informative descriptors for subsequent classification tasks.

Table 4 presents the extracted features for a sample of 10 images, including both normal and DR cases, to illustrate the variation across different classes.

Table 4.

Sample of 10 retinal images with extracted multifractal features.

Statistical Summary of Multifractal Features

To characterize the multifractal features extracted from the image dataset, a statistical analysis was performed on all samples. Table 5 presents descriptive statistics (count, mean, standard deviation, minimum, quartiles, and maximum) for each multifractal feature, including the box-counting dimension ($D_0$), information dimension ($D_1$), correlation dimension ($D_2$), spectrum width, symmetric shift, minimum Hölder exponent ($\alpha_{\min}$), maximum Hölder exponent ($\alpha_{\max}$), central Hölder exponent ($\alpha_{\mathrm{center}}$), and the maximum of the multifractal spectrum ($f(\alpha)_{\max}$).

Table 5.

Statistical summary of multifractal features (6000 samples).
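Descriptive statistics of this form (count, mean, standard deviation, minimum, quartiles, maximum) are what pandas produces with `describe()`. The sketch below uses synthetic placeholder values and hypothetical column names; it illustrates the kind of summary involved, not the study's data.

```python
import numpy as np
import pandas as pd

# Synthetic placeholder features; column names and values are hypothetical.
rng = np.random.default_rng(0)
n = 100
features = pd.DataFrame({
    "D0": rng.normal(1.99, 0.005, n),
    "spectrum_width": rng.normal(1.2, 0.3, n),
    "symmetric_shift": rng.normal(-0.1, 0.2, n),
    "label": np.repeat(["DR", "Normal"], n // 2),
})

# Global summary: one row per feature, one column per statistic.
summary = features.drop(columns="label").describe().T

# Per-class mean and standard deviation for a class-wise comparison.
per_class = features.groupby("label").agg(["mean", "std"])
print(summary)
print(per_class)
```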

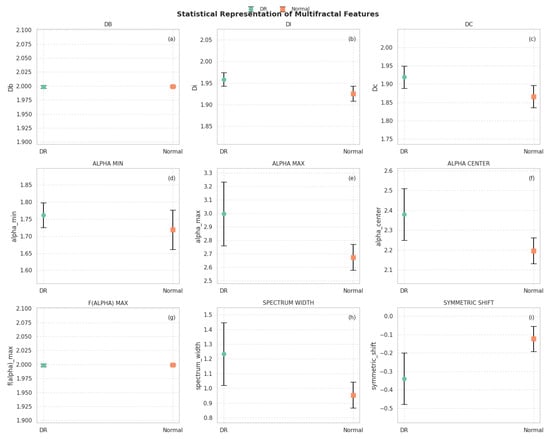

The box-counting dimension $D_0$ shows very low variance, with values consistently close to 2, highlighting a high level of structural complexity across the dataset. In contrast, the multifractal spectrum parameters $\alpha_{\min}$, $\alpha_{\max}$, and spectrum width exhibit broader dispersion, reflecting greater sensitivity to textural variations. The symmetric shift metric spans both negative and positive values, indicating a slight asymmetry in the multifractal spectra of the images. In addition to the global summary, Figure 5 illustrates the comparative distributions of the multifractal features between the two image categories: diabetic retinopathy (DR) and normal. Each subplot reports the mean and standard deviation of the values for one descriptor, enabling a direct visual comparison.

Figure 5.

Statistical representation of multifractal features for diabetic retinopathy (DR) and normal images. (a) DB; (b) DI; (c) DC; (d) ALPHA MIN; (e) ALPHA MAX; (f) ALPHA CENTER; (g) F(ALPHA) MAX; (h) SPECTRUM WIDTH; (i) SYMMETRIC SHIFT.

From this visualization, several observations can be highlighted:

- The box-counting dimension ($D_0$) and $f(\alpha)_{\max}$ show very low variability and nearly identical mean values across both groups, confirming their global stability.

- Slightly higher values for $D_1$ and $D_2$ are observed in DR images, suggesting increased complexity and heterogeneity in the affected retinas.

- Two of the three Hölder exponent descriptors ($\alpha_{\min}$, $\alpha_{\max}$, $\alpha_{\mathrm{center}}$) are notably higher in DR samples while the third remains relatively stable, indicating broader multifractal spectra in DR images.

- The spectrum width is significantly greater in DR images, reflecting stronger variability in singularities and supporting the hypothesis of increased structural irregularity.

- The symmetric shift presents more negative values in DR images, implying asymmetry in the multifractal spectrum skewed toward higher singularities, which may correspond to abrupt textural transitions caused by retinal lesions.

These results suggest that multifractal descriptors can capture subtle yet meaningful variations in retinal texture, which is particularly useful in distinguishing between healthy and DR-affected retinas. These features are thus expected to provide valuable input for the subsequent classification process.

3.4. Classification Using Machine Learning Algorithms

To identify the most suitable classification strategy for our OCT-based multifractal feature set, we conducted a comparative evaluation of several conventional machine learning classifiers: Logistic Regression, Decision Tree (DT), Random Forest (RF), Support Vector Machine (SVM), Gradient Boosting (GB), XGBoost, LightGBM, and Multi-Layer Perceptron (MLP). The choice of these models was motivated by the moderate size and low dimensionality of the extracted feature space, which comprised 9 multifractal descriptors per image. Deep learning approaches, such as convolutional neural networks (CNNs), were deliberately excluded due to their high data requirements and reliance on raw image inputs. In contrast, our handcrafted feature-based pipeline provides interpretable descriptors and enables efficient training with limited computational resources. This strategy ensures clinical scalability and maintains interpretability, which is essential for translational deployment in real-world ophthalmic workflows.

A comprehensive comparison of conventional and advanced machine learning classifiers was carried out to assess the discriminative power of multifractal descriptors extracted from OCT images. A 5-fold cross-validation procedure was employed to ensure robustness of evaluation and reduce variance due to data partitioning. The classification performance of each model was evaluated based on four key metrics: accuracy, precision, recall, and F1-score. Table 6 summarizes the detailed results obtained across the five validation folds for each classifier.

Table 6.

Performance comparison of various classifiers over 5-fold cross-validation using multifractal features. Metrics reported include accuracy, precision, recall, and F1-score.
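A comparison of this kind can be organized with scikit-learn's `cross_validate`, as in the sketch below. The synthetic dataset, the subset of three classifiers, and their settings are assumptions chosen to keep the example short; they do not reproduce the configurations of Table 3.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a 9-feature binary classification problem.
X, y = make_classification(n_samples=300, n_features=9, n_informative=5,
                           random_state=0)

# Illustrative subset of classifiers with assumed settings.
models = {
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=50, random_state=0),
}

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scoring = ["accuracy", "precision", "recall", "f1"]

results = {}
for name, model in models.items():
    scores = cross_validate(model, X, y, cv=cv, scoring=scoring)
    # Average each metric over the five validation folds.
    results[name] = {m: scores[f"test_{m}"].mean() for m in scoring}

for name, metrics in results.items():
    print(name, {m: round(v, 3) for m, v in metrics.items()})
```

Reusing the same `cv` object for every model means all classifiers are evaluated on identical folds, which keeps the comparison fair.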

The results presented in Table 6 highlight several important observations. Firstly, all tested models significantly outperform the random-chance baseline, confirming that the multifractal descriptors contain relevant information for distinguishing between normal and non-proliferative diabetic retinopathy (NPDR) cases.

Among classical classifiers, the Support Vector Machine (SVM) and Decision Tree (DT) exhibit promising average accuracies (Table 6). These results underline the capability of basic discriminative and rule-based models to capture some degree of structure in the multifractal feature space.

Ensemble-based methods such as Random Forest (RF) and Gradient Boosting (GB) yield improved average accuracies (Table 6). These techniques are known for their robustness to overfitting and their ability to aggregate multiple weak learners, which explains their enhanced performance.

The most notable improvements over these baselines come from the gradient-boosting frameworks XGBoost and LightGBM (Table 6). These models are particularly efficient in handling high-dimensional data and are optimized for speed and accuracy, making them suitable for complex medical classification tasks.

The Multi-Layer Perceptron (MLP), a type of feedforward artificial neural network, achieves the highest performance across all metrics, including average accuracy and F1-score (Table 6). The superior results of the MLP suggest that neural networks are particularly adept at learning the complex, nonlinear relationships embedded in the multifractal descriptors. This underscores the relevance of neural network architectures in medical image analysis and strengthens the case for their use in automated DR screening systems. While classical machine learning algorithms provide a solid baseline for classification, the use of advanced ensemble techniques and neural networks leads to significant improvements in detection accuracy. These findings support the feasibility of leveraging multifractal analysis combined with powerful classifiers such as MLP for the accurate diagnosis of NPDR.

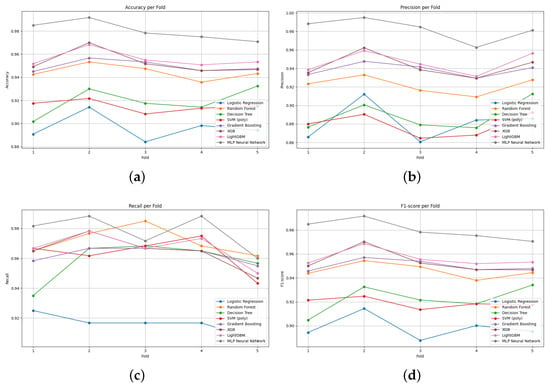

Figure 6 illustrates the comparative performance of various classical and ensemble-based classifiers across five folds of cross-validation using four evaluation metrics: accuracy, precision, recall, and F1-score.

Figure 6.

Performance variation of classification models across 5-fold cross-validation: (a) accuracy, (b) precision, (c) recall, and (d) F1-score. Each curve represents the performance of one classifier evaluated on multifractal features.

From Figure 6a, it is evident that the MLP Neural Network and LightGBM consistently outperformed other models in terms of accuracy, maintaining values close to 0.96 and 0.95, respectively. Conversely, traditional classifiers such as Logistic Regression and Decision Tree exhibited the lowest accuracy scores, suggesting limited capacity to capture the complexity of the multifractal features.

Figure 6b highlights the performance in terms of precision. Again, the MLP Neural Network achieved near-perfect precision values across all folds, indicating its strong ability to avoid false positives. Other ensemble models like XGBoost and LightGBM also demonstrated relatively high and stable precision across folds, reinforcing their robustness.

In Figure 6c, which presents recall, the results show that Random Forest slightly outperformed other models in some folds. However, the MLP and ensemble-based models (LightGBM, XGBoost, Gradient Boosting) generally maintained higher recall compared to traditional classifiers, indicating superior sensitivity in identifying true positives.

Figure 6d summarizes the balanced performance via the F1-score. The MLP Neural Network consistently attained the highest F1-scores, followed by LightGBM, showcasing their ability to maintain a good trade-off between precision and recall. In contrast, Logistic Regression and Decision Tree were less effective, displaying notable fluctuations and lower mean F1-scores.

In summary, the results suggest that while classical models provide a basic level of performance, ensemble learning techniques and neural network-based models significantly enhance classification performance when applied to multifractal features extracted from retinal images. The MLP Neural Network, in particular, stands out as the most reliable classifier in terms of all four metrics.

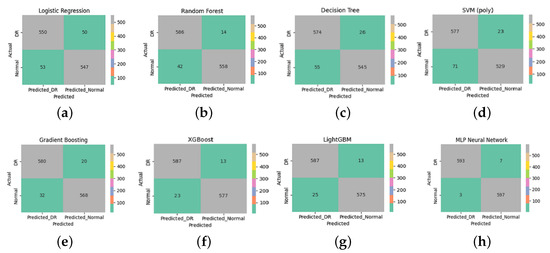

Figure 7 presents the confusion matrices for eight supervised learning models applied to the classification of diabetic retinopathy (DR) and normal retinal images. These matrices detail the counts of true positives (correctly identified DR), true negatives (correctly identified normal cases), false positives, and false negatives.

Figure 7.

Confusion matrices for the evaluated supervised learning models. Each subfigure corresponds to (a) Logistic Regression, (b) Random Forest, (c) Decision Tree, (d) SVM (polynomial), (e) Gradient Boosting, (f) XGBoost, (g) LightGBM, and (h) MLP Neural Network. These matrices illustrate the classification performance for diabetic retinopathy (DR) and normal cases using features extracted via multifractal analysis.
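The counts shown in such matrices can be extracted from true and predicted labels with scikit-learn, as sketched below. The label vectors are hypothetical, with 1 denoting DR and 0 denoting normal.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical ground-truth and predicted labels (1 = DR, 0 = normal).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 1, 0, 0, 1, 0, 0, 1]

# With labels=[0, 1], rows are true classes and columns are predictions,
# so ravel() returns the counts in the order TN, FP, FN, TP.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()

sensitivity = tp / (tp + fn)  # share of DR cases correctly detected
specificity = tn / (tn + fp)  # share of normal cases correctly cleared
print(f"TN={tn}, FP={fp}, FN={fn}, TP={tp}")
```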

The MLP Neural Network (Figure 7h) achieved the best classification results, with only seven DR cases misclassified as normal (false negatives) and three normal cases misclassified as DR (false positives). This indicates high accuracy, sensitivity, and specificity, reflecting the model’s capacity to generalize well when fed with discriminative features.

A qualitative analysis of the misclassified images revealed that false negatives often corresponded to OCT scans with minimal or subtle structural disruption, potentially below the detection threshold of multifractal descriptors. Conversely, the few false positives were frequently associated with normal images showing noise-like or irregular texture patterns that resembled early pathological changes, leading to over-sensitive detection.

Both XGBoost (Figure 7f) and LightGBM (Figure 7g) also demonstrated strong classification performance, with minimal misclassification (13 false negatives and 23–25 false positives). These gradient-boosting algorithms benefit from their ensemble nature, enabling them to capture complex patterns and interactions within the multifractal features.

The Random Forest model (Figure 7b) also performed well, with 14 false negatives and 42 false positives, outperforming the Decision Tree (Figure 7c), which showed higher misclassification rates. This highlights the benefit of ensemble methods in reducing overfitting and variance compared to a single tree classifier.

Although the SVM with a polynomial kernel (Figure 7d) achieved reasonable results, it exhibited a relatively higher number of false positives (71). This behavior is not due to poor parameter tuning, since the model was tested with the best-performing configuration after thorough optimization; rather, it suggests a limitation of this particular kernel type in separating the two classes.

Logistic Regression (Figure 7a) achieved balanced but modest results, with 50 false negatives and 53 false positives. This shows its limited ability to capture the data’s nonlinear structure despite the use of advanced features.

From a clinical standpoint, minimizing false negatives is crucial to prevent missing early-stage diabetic retinopathy (DR) cases, while false positives may lead to unnecessary referrals or patient anxiety. Despite the strong overall performance, the few remaining misclassified cases are likely attributable to ultra-early DR manifestations, where structural changes are minimal or subclinical. These borderline cases highlight the limitations of relying solely on OCT-based structural descriptors and underscore the potential benefit of integrating complementary functional modalities. As a future direction, combining our multifractal OCT approach with functional biomarkers such as Electroretinography (ERG) could enhance sensitivity to early neuroretinal dysfunction and reduce diagnostic uncertainty in ambiguous presentations. Overall, these findings emphasize the importance of using robust and expressive classifiers in conjunction with informative feature extraction techniques. In this study, multifractal analysis played a crucial role in characterizing the spatial and textural complexity of retinal images. By capturing multi-scale structural variations, this method provided a rich set of discriminative features that significantly enhanced classification performance across all models.

The consistently high accuracy obtained, particularly by ensemble and deep learning models, underlines the effectiveness of the multifractal analysis in preprocessing and transforming retinal data into a more learnable representation. This reinforces its necessity as a key step in the diagnostic pipeline, enabling better generalization and more reliable detection of diabetic retinopathy.

Table 7 presents the performance metrics obtained for each classification model, including accuracy, precision, sensitivity (recall), specificity, F1-score, and the 95% confidence interval for the accuracy.

Table 7.

Performance metrics of different classification algorithms on OCT image classification.

The results in Table 7 highlight the excellent discriminative power achieved by all classifiers. Among them, the MLP Neural Network attained the highest overall accuracy, demonstrating its superior ability to learn complex nonlinear representations from the multifractal features extracted. It also exhibited excellent precision, sensitivity, and specificity (Table 7), though the confidence interval was not reported due to deterministic results on cross-validation folds. Tree-based ensemble methods also performed very competitively:

- LightGBM achieved high accuracy with a narrow confidence interval (Table 7), indicating a robust and reliable performance.

- XGBoost and Gradient Boosting followed closely.

Traditional classifiers such as Random Forest and Logistic Regression also delivered good results, but with slightly lower precision and sensitivity compared to boosting methods and the neural network. The Decision Tree and SVM with polynomial kernel also achieved high accuracies, but they exhibited wider confidence intervals, suggesting less stability across different folds.

Overall, these findings confirm that multifractal-based feature extraction, combined with modern machine learning models, can achieve highly accurate and reliable diabetic retinopathy detection from OCT images. The use of ensemble learning and deep architectures provided notable improvements compared to simpler models, particularly in terms of generalization and sensitivity to pathological variations.

The experimental findings demonstrate the strong discriminative ability of multifractal features extracted from OCT images for the early detection of diabetic retinopathy. Among the various classifiers evaluated, the MLP Neural Network consistently outperformed other models across all evaluation metrics, highlighting the effectiveness of combining multifractal analysis with deep learning techniques in modeling the complex structural alterations associated with early retinal pathology.

Ensemble-based methods, such as LightGBM and XGBoost, also exhibited excellent performance, further validating the suitability of gradient-boosting strategies when working with multifractal descriptors. Overall, the proposed pipeline underscores the robustness, scalability, and practical applicability of multifractal analysis on OCT images. Unlike previous studies that primarily utilized OCTA datasets, this work demonstrates that standard OCT imaging—when combined with advanced mathematical modeling—can serve as an equally powerful and more accessible tool for early diabetic retinopathy screening.

3.5. Performance Comparison with State-of-the-Art Methods

To evaluate the effectiveness of the proposed multifractal-based MLP classification approach, we conducted a comparative analysis with several state-of-the-art machine learning and deep learning methods previously applied to retinal image classification. Table 8 summarizes the key performance metrics—accuracy, sensitivity, specificity—as well as dataset characteristics and imaging modalities used in these studies.

Table 8.

Performance comparison with state-of-the-art methods.

As shown in Table 8, our proposed method achieves competitive or superior performance compared to recent approaches, with 98.02% accuracy, 97.80% sensitivity, and 98.84% specificity on 6000 OCT images. While some methods demonstrate marginally higher sensitivity (e.g., SVM + GLCM [41]), they often underperform in terms of overall accuracy or specificity. Additionally, approaches relying on OCTA [12] or massive annotated datasets [39] may not be feasible in routine clinical settings.

In contrast, our model operates on standard OCT images and uses mathematically grounded multifractal features, ensuring both performance and interpretability. This makes it especially suited for integration into real-world ophthalmic workflows, including in primary care or resource-limited settings where early intervention is critical. Unlike deep learning models that often act as “black boxes,” the proposed method provides transparent structural descriptors that facilitate clinical validation and enhance trust among ophthalmologists.

Moreover, the model’s low computational requirements enable deployment on existing diagnostic platforms without the need for specialized hardware or extensive data labeling. By detecting subtle structural changes in retinal layers—often invisible in fundus images—our framework supports truly early-stage diabetic retinopathy screening. Future development will focus on an integrated, user-friendly interface and explainable AI tools to visually highlight pathologically relevant regions, further bridging the gap between algorithmic decision-making and clinical practice.

While the present study establishes that multifractal features extracted from OCT images offer a robust structural biomarker framework for early diabetic retinopathy (DR) detection, future work should advance toward integrating complementary functional modalities to achieve a more comprehensive and biologically faithful diagnostic model. Optical Coherence Tomography (OCT) remains an indispensable structural imaging technique, providing high-resolution visualization of retinal microarchitecture, including the inner retinal layers and retinal pigment epithelium. However, as a purely anatomical modality, OCT cannot interrogate the functional state of retinal neurons, which may be compromised in the earliest neurodegenerative stages of DR, well before visible vascular or tissue disruption occurs. In contrast, Electroretinography (ERG) offers objective, layer-specific assessment of retinal function by recording bioelectrical responses to photic stimuli. Notably, recent studies have demonstrated that ERG abnormalities—particularly reduced oscillatory potentials and attenuated photopic negative response (PhNR)—can be detected in diabetic patients, thus revealing latent inner retinal dysfunction [43,44]. This structural–functional dissociation highlights a critical limitation of relying solely on OCT-based morphological features and underscores the need for multimodal approaches that capture both tissue integrity and neurophysiological performance. Future research should explore the fusion of OCT-derived multifractal descriptors with ERG-derived electrophysiological biomarkers within integrated machine learning pipelines. Such hybrid models may yield more sensitive and specific detection of subclinical DR, enabling earlier therapeutic intervention. Moreover, this multimodal paradigm aligns with the goals of precision ophthalmology, offering a path toward personalized screening, disease staging, and longitudinal monitoring in diabetic eye care.

4. Conclusions

The results of this study confirmed the high effectiveness of combining multifractal analysis with machine learning classifiers for the early detection of diabetic retinopathy using OCT images. Our proposed framework successfully captured subtle structural irregularities associated with early retinal degeneration, achieving highly accurate and reliable classification performances, particularly with the MLP Neural Network.

This work’s key contribution lies in demonstrating that standard OCT imaging, when coupled with multifractal mathematical modeling, can provide a powerful, accessible, and cost-effective alternative to more complex imaging techniques like OCTA. This highlights the proposed method’s clinical applicability and scalability for real-world screening programs and ophthalmologic diagnosis.

For future work, we aim to extend the proposed framework to detect and classify other retinal diseases, such as age-related macular degeneration and glaucoma, thus validating its generalizability across diverse pathological conditions. Although this study focused on the binary classification between normal and diabetic retinopathy (DR) images, we acknowledge that specific OCT manifestations of DR may partially resemble those of other ocular pathologies such as retinal vein occlusion (RVO) and choroidal neovascularization (CNV). Our dataset already includes such retinal conditions, and future extensions of this work will address multi-class classification settings to enhance specificity and reduce potential diagnostic ambiguities. We also plan to evaluate the method on larger datasets and explore its adaptability to other imaging modalities. Additionally, future work will involve validating the proposed approach on external OCT datasets from different clinical centers to assess its robustness and generalizability across varied acquisition conditions. Finally, efforts will focus on optimizing the system for real-time implementation to facilitate its integration into clinical practice.

Author Contributions

Conceptualization, A.A.; Methodology, A.A.; Software, A.A. and Y.A.; Writing—review and editing, A.A. and Y.A.; Visualization, A.A. and N.S.T.; Supervision, N.S.T. and S.K.; Funding acquisition, S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in: https://www.kaggle.com/datasets/obulisainaren/retinal-oct-c8 (accessed on 4 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mounirou, B.A.; Adam, N.D.; Yakoura, A.K.; Aminou, M.S.; Liu, Y.T.; Tan, L.Y. Diabetic retinopathy: An overview of treatments. Indian J. Endocrinol. Metab. 2022, 26, 111–118.

- Kumar, K.; Bhowmik, D.; Harish, G.; Duraivel, S.; Kumar, B.P. Diabetic retinopathy-symptoms, causes, risk factors and treatment. Pharma Innov. 2012, 1, 7–13.

- Tey, K.Y.; Teo, K.; Tan, A.C.; Devarajan, K.; Tan, B.; Tan, J.; Schmetterer, L.; Ang, M. Optical coherence tomography angiography in diabetic retinopathy: A review of current applications. Eye Vis. 2019, 6, 37.

- Drexler, W.; Fujimoto, J.G. State-of-the-art retinal optical coherence tomography. Prog. Retin. Eye Res. 2008, 27, 45–88.

- Elsharkawy, M.; Elrazzaz, M.; Sharafeldeen, A.; Alhalabi, M.; Khalifa, F.; Soliman, A.; Elnakib, A.; Mahmoud, A.; Ghazal, M.; El-Daydamony, E.; et al. The role of different retinal imaging modalities in predicting progression of diabetic retinopathy: A survey. Sensors 2022, 22, 3490.

- De Carlo, T.E.; Romano, A.; Waheed, N.K.; Duker, J.S. A review of optical coherence tomography angiography (OCTA). Int. J. Retin. Vitr. 2015, 1, 5.

- Jirarattanasopa, P.; Ooto, S.; Tsujikawa, A.; Yamashiro, K.; Hangai, M.; Hirata, M.; Matsumoto, A.; Yoshimura, N. Assessment of macular choroidal thickness by optical coherence tomography and angiographic changes in central serous chorioretinopathy. Ophthalmology 2012, 119, 1666–1678.

- Aumann, S.; Donner, S.; Fischer, J.; Müller, F. Optical coherence tomography (OCT): Principle and technical realization. In High Resolution Imaging in Microscopy and Ophthalmology: New Frontiers in Biomedical Optics; Springer: Cham, Switzerland, 2019; pp. 59–85.

- Popovic, N.; Vujosevic, S.; Popovic, T. Regional patterns in retinal microvascular network geometry in health and disease. Sci. Rep. 2019, 9, 16340.

- Yu, S.; Lakshminarayanan, V. Fractal dimension and retinal pathology: A meta-analysis. Appl. Sci. 2021, 11, 2376.

- Lopes, R.; Betrouni, N. Fractal and multifractal analysis: A review. Med. Image Anal. 2009, 13, 634–649.

- Abdelsalam, M.M.; Zahran, M. A novel approach of diabetic retinopathy early detection based on multifractal geometry analysis for OCTA macular images using support vector machine. IEEE Access 2021, 9, 22844–22858.

- Rizzo, M. Assessing the predictive capability of vascular tortuosity measures in OCTA images for diabetic retinopathy using machine learning algorithms. Bachelor’s Thesis, Universitat Politècnica de Catalunya, Barcelona, Spain, 2024. Available online: http://hdl.handle.net/2117/413148 (accessed on 16 February 2025).

- Labate, D.; Pahari, B.R.; Hoteit, S.; Mecati, M. Quantitative methods in ocular fundus imaging: Analysis of retinal microvasculature. In Landscapes of Time-Frequency Analysis: ATFA 2019; Springer: Cham, Switzerland, 2020; pp. 157–174.

- Uppamma, P.; Bhattacharya, S. Deep learning and medical image processing techniques for diabetic retinopathy: A survey of applications, challenges, and future trends. J. Healthc. Eng. 2023, 2023, 2728719.

- Atwany, M.Z.; Sahyoun, A.H.; Yaqub, M. Deep learning techniques for diabetic retinopathy classification: A survey. IEEE Access 2022, 10, 28642–28655.

- Tan, A.C.; Tan, G.S.; Denniston, A.K.; Keane, P.A.; Ang, M.; Milea, D.; Chakravarthy, U.; Cheung, C.M.G. An overview of the clinical applications of optical coherence tomography angiography. Eye 2018, 32, 262–286.

- Zekavat, S.M.; Raghu, V.K.; Trinder, M.; Ye, Y.; Koyama, S.; Honigberg, M.C.; Yu, Z.; Pampana, A.; Urbut, S.; Haidermota, S.; et al. Deep learning of the retina enables phenome-and genome-wide analyses of the microvasculature. Circulation 2022, 145, 134–150.

- Yang, L.; Zhang, M.; Cheng, J.; Zhang, T.; Lu, F. Retina images classification based on 2D empirical mode decomposition and multifractal analysis. Heliyon 2024, 10, e27391.

- El Damrawi, G.; Zahran, M.; Amin, E.; Abdelsalam, M.M. Enforcing artificial neural network in the early detection of diabetic retinopathy OCTA images analysed by multifractal geometry. J. Taibah Univ. Sci. 2020, 14, 1067–1076.

- Relan, D.; Khatter, K. Effectiveness of Multi-fractal Analysis in Differentiating Subgroups of Retinal Images. In Proceedings of the 2020 IEEE 17th India Council International Conference (INDICON), New Delhi, India, 10–13 December 2020; pp. 1–6.

- Li, Q.; Zhu, X.r.; Sun, G.; Zhang, L.; Zhu, M.; Tian, T.; Guo, C.; Mazhar, S.; Yang, J.K.; Li, Y. Diagnosing diabetic retinopathy in OCTA images based on multilevel information fusion using a deep learning framework. Comput. Math. Methods Med. 2022, 2022, 4316507.

- Ghazal, M.; Khalil, Y.A.; Alhalabi, M.; Fraiwan, L.; El-Baz, A. Early Detection of Diabetics Using Retinal OCT Images. In Diabetes and Retinopathy; Elsevier: Amsterdam, The Netherlands, 2020; pp. 173–204.

- Islam, K.T.; Wijewickrema, S.; O’Leary, S. Identifying Diabetic Retinopathy from OCT Images Using Deep Transfer Learning with Artificial Neural Networks. In Proceedings of the 2019 IEEE 32nd International Symposium on Computer-Based Medical Systems (CBMS), Cordoba, Spain, 5–7 June 2019; pp. 281–286.

- Devi, M.S.S.; Ramkumar, S.; Kumar, S.V.; Sasi, G. Detection of Diabetic Retinopathy Using OCT Image. Mater. Today Proc. 2021, 47, 185–190.

- Sharafeldeen, A.; Elsharkawy, M.; Khalifa, F.; Soliman, A.; Ghazal, M.; AlHalabi, M.; Yaghi, M.; Alrahmawy, M.; Elmougy, S.; Sandhu, H.S.; et al. Precise higher-order reflectivity and morphology models for early diagnosis of diabetic retinopathy using OCT images. Sci. Rep. 2021, 11, 4730.

- Elgafi, M.; Sharafeldeen, A.; Elnakib, A.; Elgarayhi, A.; Alghamdi, N.S.; Sallah, M.; El-Baz, A. Detection of Diabetic Retinopathy Using Extracted 3D Features from OCT Images. Sensors 2022, 22, 7833.

- Naren, O.S. Retinal OCT Image Classification - C8, 2021. Available online: https://www.kaggle.com/datasets/obulisainaren/retinal-oct-c8/versions/2 (accessed on 22 June 2025).

- Schindelin, J.; Arganda-Carreras, I.; Frise, E.; Kaynig, V.; Longair, M.; Pietzsch, T.; Preibisch, S.; Rueden, C.; Saalfeld, S.; Schmid, B.; et al. Fiji: An open-source platform for biological-image analysis. Nat. Methods 2012, 9, 676–682.

- Gonzalez, R.C. Digital Image Processing; Pearson Education India: Tamil Nadu, India, 2009.

- Zuiderveld, K.J. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems IV; Heckbert, P.S., Ed.; Academic Press: Cambridge, MA, USA, 1994; pp. 474–485.

- Healy, S.; McMahon, J.; Owens, P.; Dockery, P.; FitzGerald, U. Threshold-based segmentation of fluorescent and chromogenic images of microglia, astrocytes and oligodendrocytes in FIJI. J. Neurosci. Methods 2018, 295, 87–103.

- Garg, M.; Gupta, S. Generation of Binary Mask of Retinal Fundus Image Using Bimodal Masking. J. Adv. Res. Dyn. Control Syst. 2018, 10, 1777–1786.

- Torre, I.G.; Heck, R.J.; Tarquis, A. MULTIFRAC: An ImageJ plugin for multiscale characterization of 2D and 3D stack images. SoftwareX 2020, 12, 100574.

- Raghunathan, M.; George, N.B.; Unni, V.R.; Midhun, P.R.; Reeja, K.V.; Sujith, R.I. Multifractal analysis of flame dynamics during transition to thermoacoustic instability in a turbulent combustor. J. Fluid Mech. 2020, 888, A14.

- Senthilkumaran, P. Singularities in Physics and Engineering; IOP Publishing: Bristol, UK, 2024.

- Naskath, J.; Sivakamasundari, G.; Begum, A.A.S. A Study on Different Deep Learning Algorithms Used in Deep Neural Nets: MLP SOM and DBN. Wirel. Pers. Commun. 2023, 128, 2913–2936.

- Safitri, D.W.; Juniati, D. Classification of diabetic retinopathy using fractal dimension analysis of eye fundus image. In Proceedings of the AIP Conference Proceedings; AIP Publishing: Melville, NY, USA, 2017; Volume 1867.

- Lin, G.M.; Chen, M.J.; Yeh, C.H.; Lin, Y.Y.; Kuo, H.Y.; Lin, M.H.; Chen, M.C.; Lin, S.D.; Gao, Y.; Ran, A.; et al. Transforming retinal photographs to entropy images in deep learning to improve automated detection for diabetic retinopathy. J. Ophthalmol. 2018, 2018, 2159702.

- Gadekallu, T.R.; Khare, N.; Bhattacharya, S.; Singh, S.; Maddikunta, P.K.R.; Ra, I.H.; Alazab, M. Early detection of diabetic retinopathy using PCA-firefly based deep learning model. Electronics 2020, 9, 274.

- Lachure, J.; Deorankar, A.; Lachure, S.; Gupta, S.; Jadhav, R. Diabetic retinopathy using morphological operations and machine learning. In Proceedings of the 2015 IEEE International Advance Computing Conference (IACC), Banglore, India, 12–13 June 2015; pp. 617–622.

- Ghazal, M.; Ali, S.S.; Mahmoud, A.H.; Shalaby, A.M.; El-Baz, A. Accurate detection of non-proliferative diabetic retinopathy in optical coherence tomography images using convolutional neural networks. IEEE Access 2020, 8, 34387–34397.

- Tsay, K.; Safari, S.; Abou-Samra, A.; Kremers, J.; Tzekov, R. Pre-stimulus bioelectrical activity in light-adapted ERG under blue versus white background. Vis. Neurosci. 2023, 40, E004.

- Kremers, J.; Huchzermeyer, C. Electroretinographic responses to periodic stimuli in primates and the relevance for visual perception and for clinical studies. Vis. Neurosci. 2024, 41, E004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).