Multiclassification of Colorectal Polyps from Colonoscopy Images Using AI for Early Diagnosis

Abstract

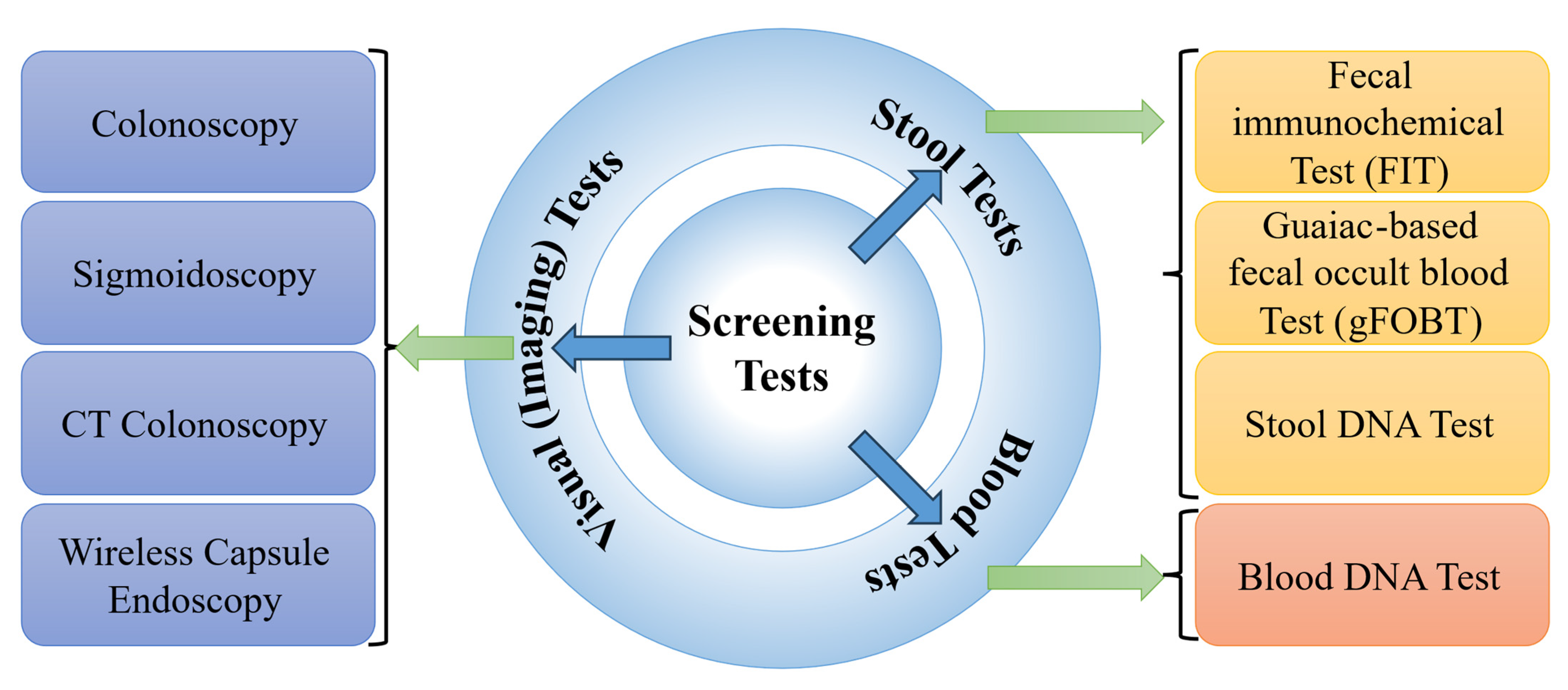

1. Introduction

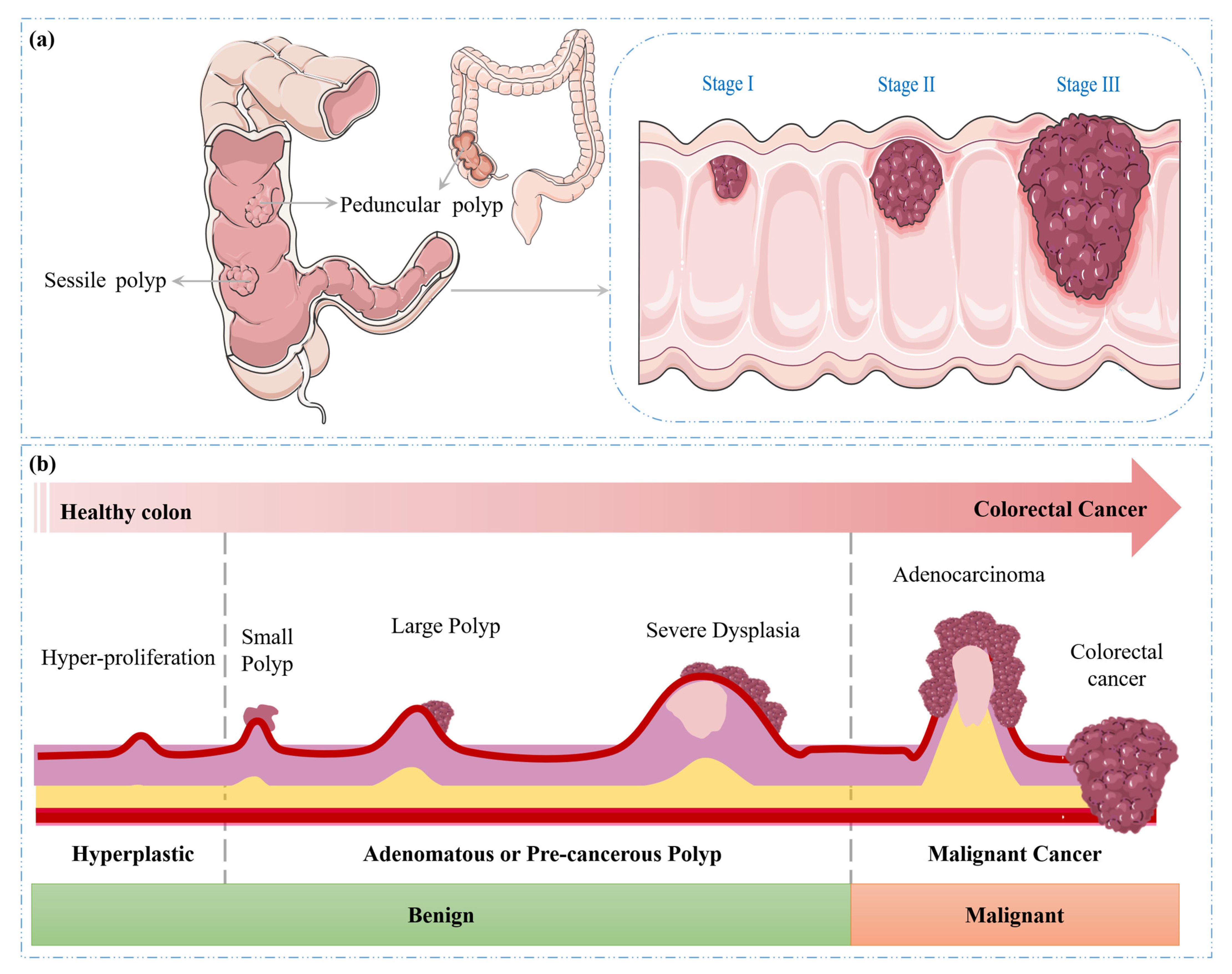

- Serrated polyps: Sessile serrated polyps (SSPs) and traditional serrated adenomas (TSAs) are premalignant serrated lesions, and both carry an increased risk of progression to CRC. SSPs are characterized by flat or slightly elevated morphology and indistinct borders. TSAs, in contrast, more often show a protruding or pedunculated growth pattern and more pronounced adenoma-like (dysplastic) features, which distinguish them from SSPs [16,17].

- Adenomatous polyps (adenomas): These polyps can progress to cancer and are therefore regarded as precancerous. Adenomas are further classified into tubular, villous, and tubulovillous subtypes [18]. Tubular adenomas are the most prevalent, whereas villous adenomas are less common but carry a higher risk of malignancy [19].

1.1. Literature Review

1.2. Contributions

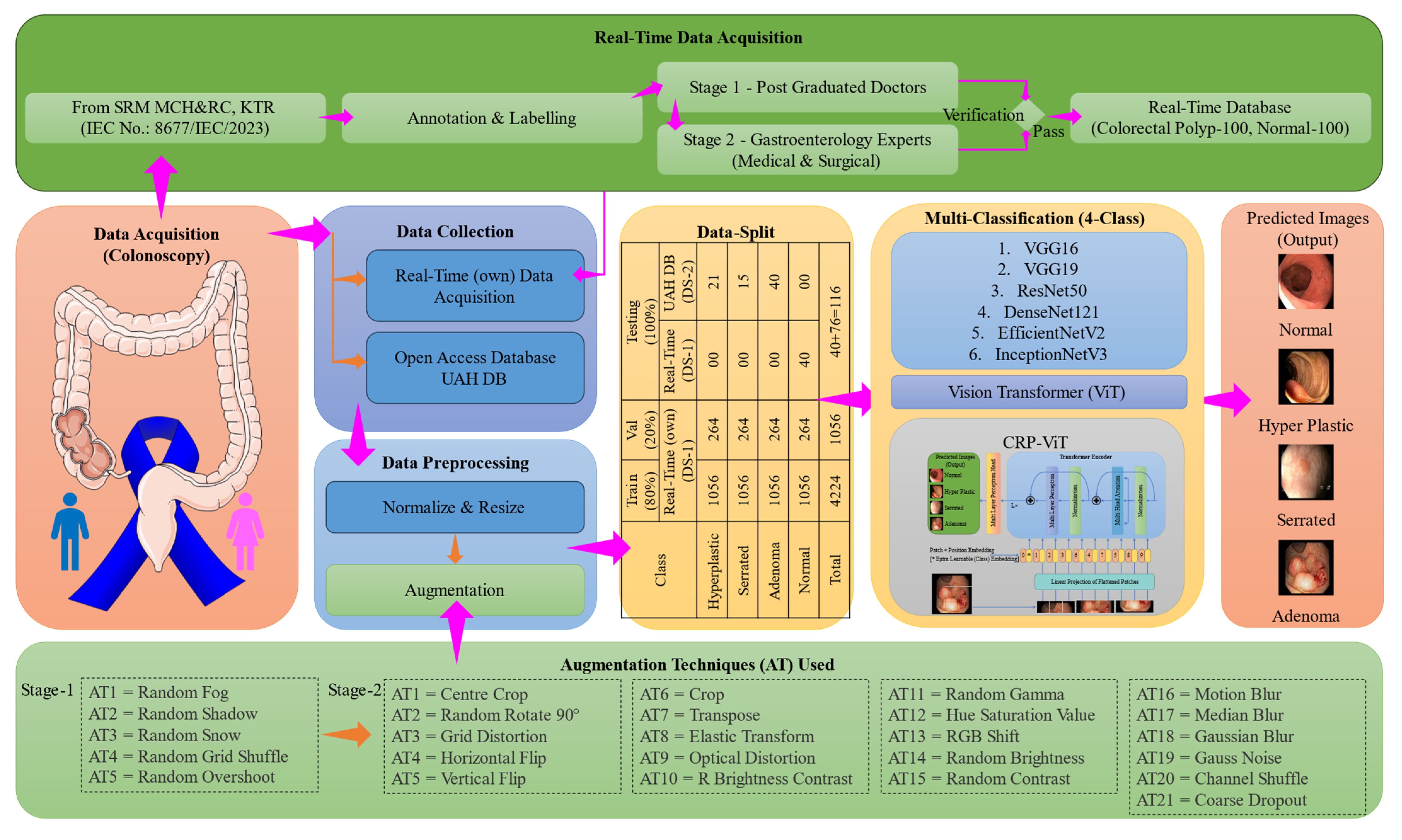

- Dataset collection and preprocessing: A colonoscopy image dataset combining images acquired in real time during clinical procedures (DS-1) with the UAH database (DS-2) is curated and preprocessed to improve the quality of the input data for CRP classification. Ethical clearance is obtained for the collection and use of the real-time images, in compliance with regulatory guidelines.

- AI-based deep learning model development and performance evaluation: A customized DL-based multiclass classification model is designed and implemented to classify colorectal polyps as hyperplastic, serrated, or adenomatous and to separate them from normal (polyp-free) images, supporting the early diagnosis of CRC. The model's performance is assessed with standard evaluation metrics: accuracy, sensitivity, specificity, precision, and negative predictive value (NPV).

- Comparative analysis: The developed AI model is compared with existing state-of-the-art polyp classification methods to evaluate whether it outperforms them and how effective it is for clinical diagnosis.

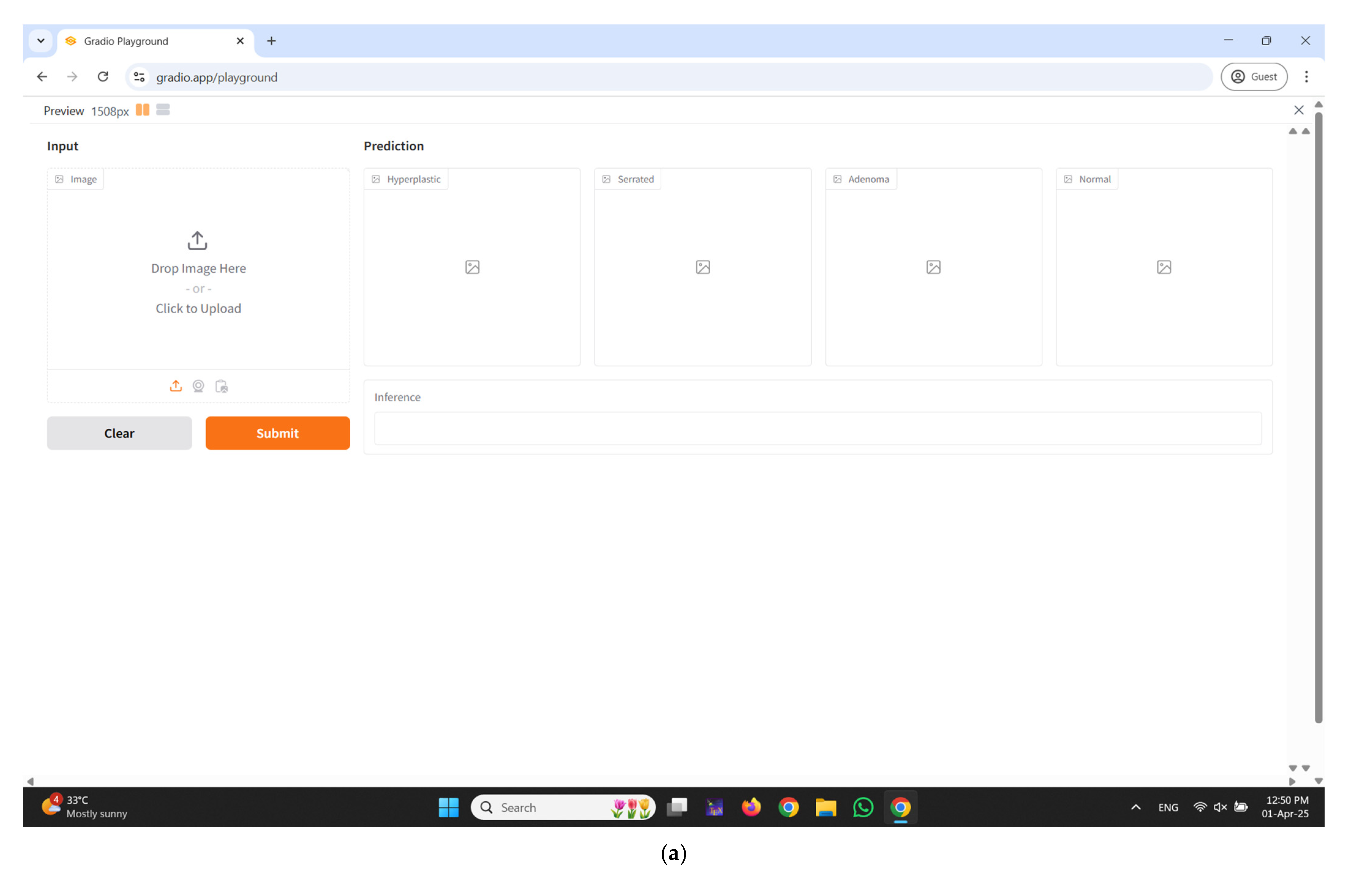

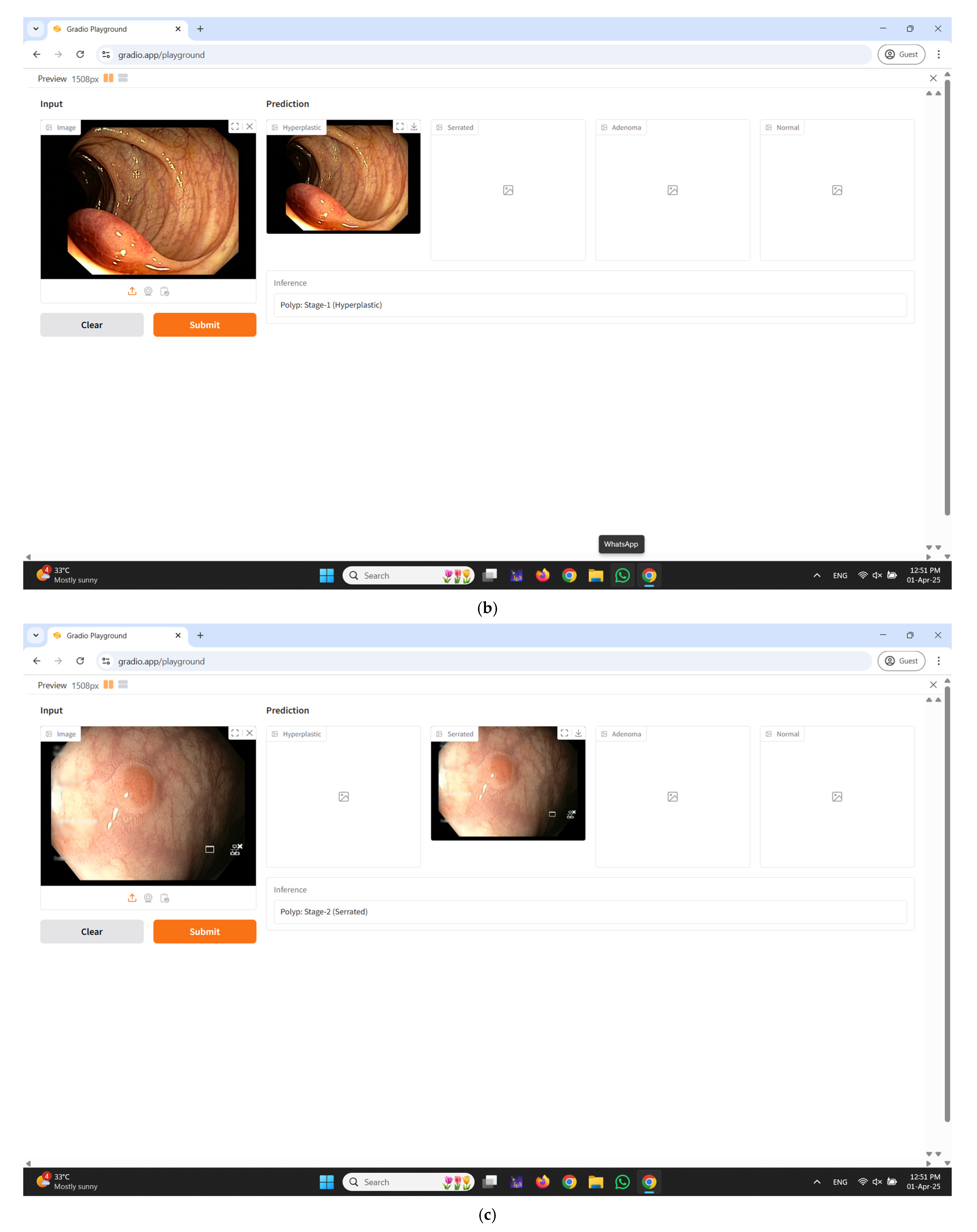

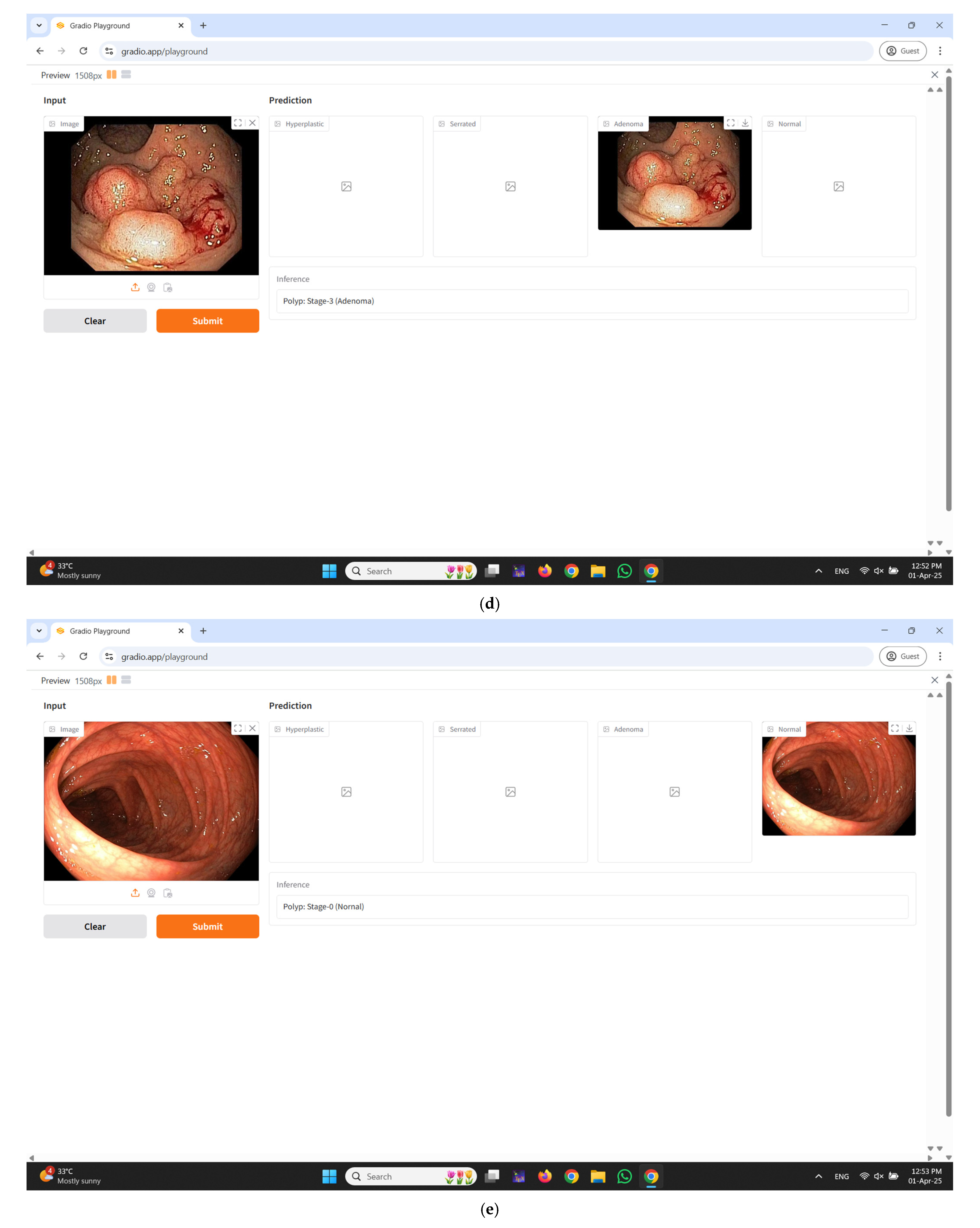

- Interactive interface deployment using Gradio: To enhance usability and clinical translation, a Gradio-based interface is developed, enabling users to upload colonoscopy images and receive immediate visual feedback on the predicted type of polyp. This interactive feature aids clinicians in real-time decision-making during diagnostic procedures.
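The Gradio interface described in the last contribution can be prototyped in a few lines. The following is a minimal sketch under stated assumptions: `placeholder_model` is a hypothetical stand-in for the trained classifier (it is not the authors' model), and the class-index order in `CLASS_NAMES` is assumed. `gr.Interface`, `gr.Image`, and `gr.Label` are standard Gradio components; Gradio is imported inside `build_interface` so that `predict` can be exercised without Gradio installed.

```python
import numpy as np

# Class names follow the dataset tables; the index order is an assumption.
CLASS_NAMES = ["Hyperplastic", "Serrated", "Adenoma", "Normal"]


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D logit vector."""
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()


def placeholder_model(image: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the trained classifier: returns 4 logits.

    It maps the mean of each colour channel through fixed random weights so the
    demo runs without trained weights; replace it with the real forward pass.
    """
    features = image.astype(np.float32).reshape(-1, image.shape[-1]).mean(axis=0) / 255.0
    weights = np.random.default_rng(0).normal(size=(features.shape[0], len(CLASS_NAMES)))
    return features @ weights


def predict(image: np.ndarray) -> dict[str, float]:
    """Map an uploaded RGB image (H x W x 3, uint8) to per-class confidences."""
    probs = softmax(placeholder_model(image))
    return {name: float(p) for name, p in zip(CLASS_NAMES, probs)}


def build_interface():
    import gradio as gr  # imported lazily so predict() can be tested without Gradio

    return gr.Interface(
        fn=predict,
        inputs=gr.Image(type="numpy", label="Colonoscopy image"),
        outputs=gr.Label(num_top_classes=len(CLASS_NAMES), label="Predicted polyp type"),
        title="Colorectal polyp classifier (demo)",
    )
```

Calling `build_interface().launch()` starts a local web app. `launch(share=True)` would create a temporary public link, which is usually inappropriate for patient images.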

2. Materials and Methods

2.1. Proposed Workflow

2.2. Data Acquisition and Data Collection

2.3. Image Preprocessing and Data Split

2.4. Deep Learning Classifier

2.5. Real-Time Diagnostic Interface Integration Using Gradio

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CAD | Computer-Aided Diagnosis |
| CNN | Convolutional Neural Network |
| CRC | Colorectal Cancer |
| CRP | Colorectal Polyp |
| CT | Computed Tomography |
| D&T | Diagnostic and Therapeutic |
| DA | Domain Adaptation |
| DA | Data Augmentation |
| DL | Deep Learning |
| DNA | Deoxyribonucleic Acid |
| DS | Dataset |
| DS-1 | Dataset 1 (Real-Time (own)) |
| DS-2 | Dataset 2 (UAH DB) |
| GUI | Graphical User Interface |
| IBD | Inflammatory Bowel Disease |
| IEC | Institutional Ethics Committee |
| ML | Machine Learning |
| MLP | Multi-Layer Perceptron |
| ROC | Receiver Operating Characteristic |
| S1DA | Stage 1 Data Augmentation |
| S2DA | Stage 2 Data Augmentation |
| SSP | Sessile Serrated Polyps |
| TSA | Traditional Serrated Adenomas |
| ViT | Vision Transformer |
| WCE | Wireless Capsule Endoscopy |

References

- Roger, L.; Tomas, F.; Gire, V. Mechanisms and regulation of cellular senescence. Int. J. Mol. Sci. 2021, 22, 13173.

- Wan, M.; Gray-Gaillard, E.F.; Elisseeff, J.H. Cellular senescence in musculoskeletal homeostasis, diseases, and regeneration. Bone Res. 2021, 9, 41.

- Kashyap, A.K.; Dubey, S.K. Molecular mechanisms in cancer development. In Understanding Cancer; Elsevier: Amsterdam, The Netherlands, 2022; pp. 79–90.

- Sehgal, P.; Chaturvedi, P. Chromatin and cancer: Implications of disrupted chromatin organization in tumorigenesis and its diversification. Cancers 2023, 15, 466.

- Li, Y.; Liu, F.; Cai, Q.; Deng, L.; Ouyang, Q.; Zhang, X.H.-F.; Zheng, J. Invasion and metastasis in cancer: Molecular insights and therapeutic targets. Signal Transduct. Target. Ther. 2025, 10, 57.

- Kciuk, M.; Gielecińska, A.; Budzinska, A.; Mojzych, M.; Kontek, R. Metastasis and MAPK pathways. Int. J. Mol. Sci. 2022, 23, 3847.

- Selvaraj, J.; Umapathy, S.; Rajesh, N.A. Artificial intelligence based real time colorectal cancer screening study: Polyp segmentation and classification using multi-house database. Biomed. Signal Process. Control 2025, 99, 106928.

- Selvaraj, J.; Umapathy, S. CRPU-NET: A deep learning model based semantic segmentation for the detection of colorectal polyp in lower gastrointestinal tract. Biomed. Phys. Eng. Express 2023, 10, 015018.

- Sawicki, T.; Ruszkowska, M.; Danielewicz, A.; Niedźwiedzka, E.; Arłukowicz, T.; Przybyłowicz, K.E. A review of colorectal cancer in terms of epidemiology, risk factors, development, symptoms and diagnosis. Cancers 2021, 13, 2025.

- Łukaszewicz-Zając, M.; Mroczko, B. Circulating biomarkers of colorectal cancer (CRC)—Their utility in diagnosis and prognosis. J. Clin. Med. 2021, 10, 2391.

- Saraiva, S.; Rosa, I.; Fonseca, R.; Pereira, A.D. Colorectal malignant polyps: A modern approach. Ann. Gastroenterol. 2021, 35, 17.

- Sullivan, B.A.; Noujaim, M.; Roper, J. Cause, epidemiology, and histology of polyps and pathways to colorectal cancer. Gastrointest. Endosc. Clin. 2022, 32, 177–194.

- Wang, J.-D.; Xu, G.-S.; Hu, X.-L.; Li, W.-Q.; Yao, N.; Han, F.-Z.; Zhang, Y.; Qu, J. The histologic features, molecular features, detection and management of serrated polyps: A review. Front. Oncol. 2024, 14, 1356250.

- Kővári, B.; Kim, B.H.; Lauwers, G.Y. The pathology of gastric and duodenal polyps: Current concepts. Histopathology 2021, 78, 106–124.

- Triadafilopoulos, G. Prevalence of Abnormalities at Tandem Endoscopy in Patients Referred for Colorectal Cancer Screening/Surveillance Colonoscopy. Cancers 2024, 16, 3998.

- Mezzapesa, M.; Losurdo, G.; Celiberto, F.; Rizzi, S.; d’Amati, A.; Piscitelli, D.; Ierardi, E.; Di Leo, A. Serrated colorectal lesions: An up-to-date review from histological pattern to molecular pathogenesis. Int. J. Mol. Sci. 2022, 23, 4461.

- Nagtegaal, I.D.; Snover, D.C. Head to head: Should we adopt the term ‘sessile serrated lesion’? Histopathology 2022, 80, 1019–1025.

- Kahraman, D.S.; Sayhan, S. Colon polyps and their pathologic characteristics. In Colon Polyps and Colorectal Cancer; Springer: Cham, Switzerland, 2021; pp. 167–211.

- Jungwirth, J.; Urbanova, M.; Boot, A.; Hosek, P.; Bendova, P.; Siskova, A.; Svec, J.; Kment, M.; Tumova, D.; Summerova, S. Mutational analysis of driver genes defines the colorectal adenoma: In situ carcinoma transition. Sci. Rep. 2022, 12, 2570.

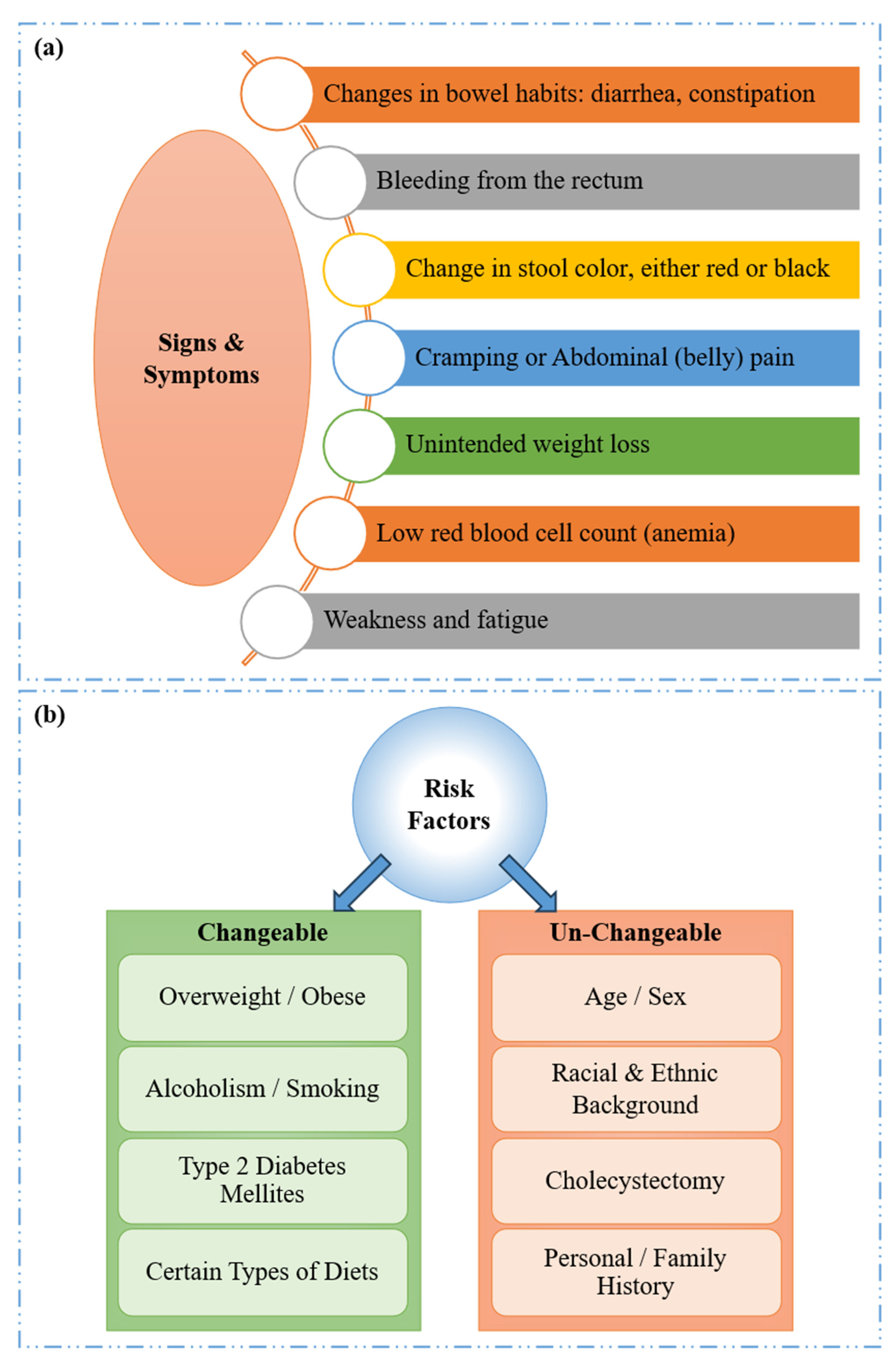

- Holtedahl, K.; Borgquist, L.; Donker, G.A.; Buntinx, F.; Weller, D.; Campbell, C.; Månsson, J.; Hammersley, V.; Braaten, T.; Parajuli, R. Symptoms and signs of colorectal cancer, with differences between proximal and distal colon cancer: A prospective cohort study of diagnostic accuracy in primary care. BMC Fam. Pract. 2021, 22, 148.

- Fritz, C.D.; Otegbeye, E.E.; Zong, X.; Demb, J.; Nickel, K.B.; Olsen, M.A.; Mutch, M.; Davidson, N.O.; Gupta, S.; Cao, Y. Red-flag signs and symptoms for earlier diagnosis of early-onset colorectal cancer. JNCI J. Natl. Cancer Inst. 2023, 115, 909–916.

- Lewandowska, A.; Rudzki, G.; Lewandowski, T.; Stryjkowska-Góra, A.; Rudzki, S. Risk factors for the diagnosis of colorectal cancer. Cancer Control 2022, 29, 10732748211056692.

- Sninsky, J.A.; Shore, B.M.; Lupu, G.V.; Crockett, S.D. Risk factors for colorectal polyps and cancer. Gastrointest. Endosc. Clin. N. Am. 2022, 32, 195–213.

- Leong, S.P.; Naxerova, K.; Keller, L.; Pantel, K.; Witte, M. Molecular mechanisms of cancer metastasis via the lymphatic versus the blood vessels. Clin. Exp. Metastasis 2022, 39, 159–179.

- Zhou, H.; Lei, P.-j.; Padera, T.P. Progression of metastasis through lymphatic system. Cells 2021, 10, 627.

- Chen, K.; Collins, G.; Wang, H.; Toh, J.W.T. Pathological features and prognostication in colorectal cancer. Curr. Oncol. 2021, 28, 5356–5383.

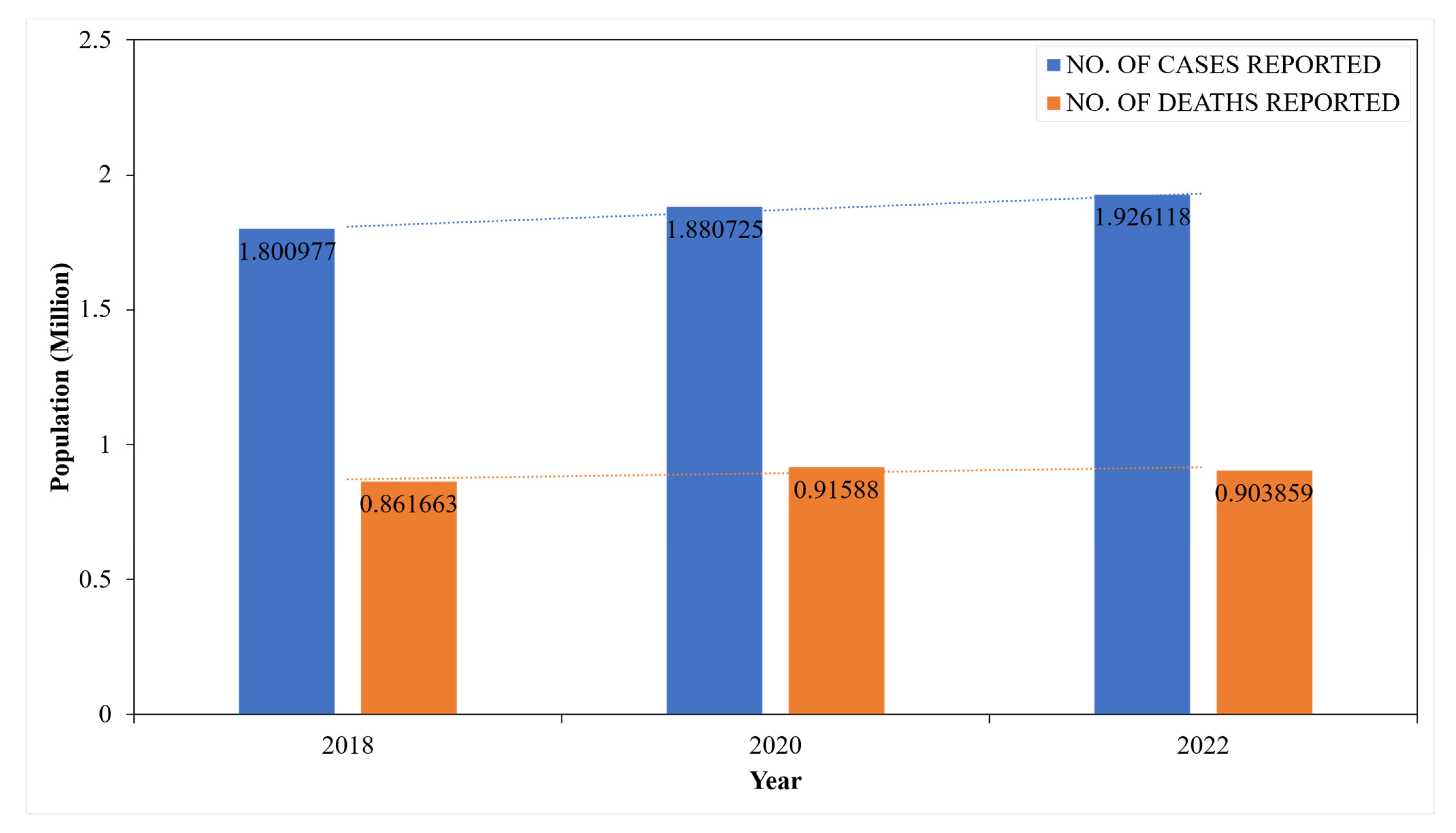

- Bray, F.; Ferlay, J.; Soerjomataram, I.; Siegel, R.L.; Torre, L.A.; Jemal, A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2018, 68, 394–424.

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2021, 71, 209–249.

- Bray, F.; Laversanne, M.; Sung, H.; Ferlay, J.; Siegel, R.L.; Soerjomataram, I.; Jemal, A. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2024, 74, 229–263.

- Burnett-Hartman, A.N.; Lee, J.K.; Demb, J.; Gupta, S. An update on the epidemiology, molecular characterization, diagnosis, and screening strategies for early-onset colorectal cancer. Gastroenterology 2021, 160, 1041–1049.

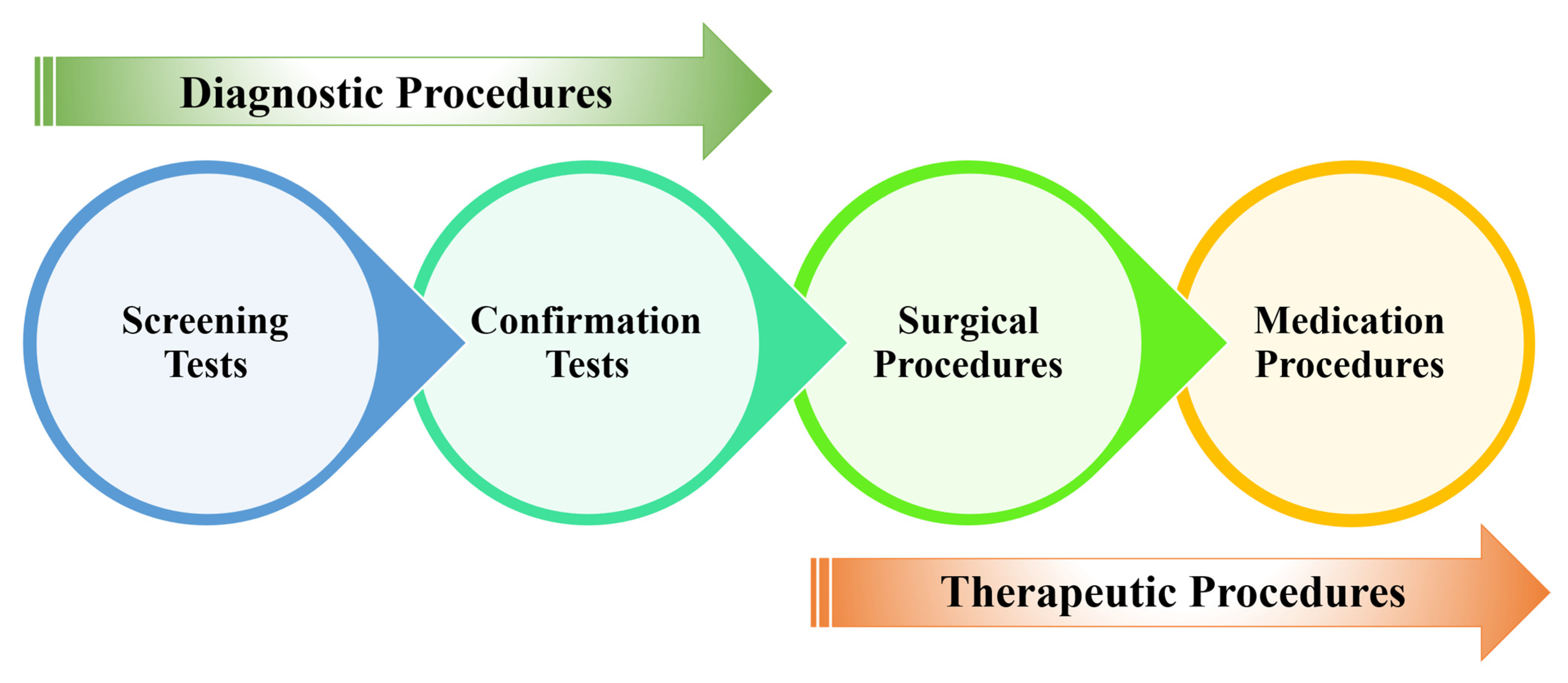

- Naito, Y.; Aburatani, H.; Amano, T.; Baba, E.; Furukawa, T.; Hayashida, T.; Hiyama, E.; Ikeda, S.; Kanai, M.; Kato, M. Clinical practice guidance for next-generation sequencing in cancer diagnosis and treatment (edition 2.1). Int. J. Clin. Oncol. 2021, 26, 233–283.

- Ngu, S.-F.; Ngan, H.Y.; Chan, K.K. Role of adjuvant and post-surgical treatment in gynaecological cancer. Best Pract. Res. Clin. Obstet. Gynaecol. 2022, 78, 2–13.

- Shaukat, A.; Levin, T.R. Current and future colorectal cancer screening strategies. Nat. Rev. Gastroenterol. Hepatol. 2022, 19, 521–531.

- Zygulska, A.L.; Pierzchalski, P. Novel diagnostic biomarkers in colorectal cancer. Int. J. Mol. Sci. 2022, 23, 852.

- Ferrari, A.; Neefs, I.; Hoeck, S.; Peeters, M.; Van Hal, G. Towards novel non-invasive colorectal cancer screening methods: A comprehensive review. Cancers 2021, 13, 1820.

- Cellina, M.; Cacioppa, L.M.; Cè, M.; Chiarpenello, V.; Costa, M.; Vincenzo, Z.; Pais, D.; Bausano, M.V.; Rossini, N.; Bruno, A. Artificial intelligence in lung cancer screening: The future is now. Cancers 2023, 15, 4344.

- Mitsala, A.; Tsalikidis, C.; Pitiakoudis, M.; Simopoulos, C.; Tsaroucha, A.K. Artificial intelligence in colorectal cancer screening, diagnosis and treatment. A new era. Curr. Oncol. 2021, 28, 1581–1607.

- Wesp, P.; Grosu, S.; Graser, A.; Maurus, S.; Schulz, C.; Knösel, T.; Fabritius, M.P.; Schachtner, B.; Yeh, B.M.; Cyran, C.C. Deep learning in CT colonography: Differentiating premalignant from benign colorectal polyps. Eur. Radiol. 2022, 32, 4749–4759.

- Alsufayan, M.; Sulieman, A.; Moslem, R.; Asiri, A.; Alomary, A.; Alanazi, B.M.; Aldossari, H.; Alonazi, B.; Bradley, D.A. Assessment of imaging protocol and patients radiation exposure in computed tomography colonography. Appl. Sci. 2021, 11, 4761.

- Hong, S.M.; Baek, D.H. A review of colonoscopy in intestinal diseases. Diagnostics 2023, 13, 1262.

- Selvaraj, J.; Jayanthy, A. Design and development of artificial intelligence-based application programming interface for early detection and diagnosis of colorectal cancer from wireless capsule endoscopy images. Int. J. Imaging Syst. Technol. 2024, 34, e23034.

- Selvaraj, J.; Jayanthy, A. Automatic polyp semantic segmentation using wireless capsule endoscopy images with various convolutional neural network and optimization techniques: A comparison and performance evaluation. Biomed. Eng. Appl. Basis Commun. 2023, 35, 2350026.

- Shahsavari, D.; Waqar, M.; Chandrasekar, V.T. Image enhanced colonoscopy: Updates and prospects—A review. Transl. Gastroenterol. Hepatol. 2023, 8, 26.

- Li, K.; Fathan, M.I.; Patel, K.; Zhang, T.; Zhong, C.; Bansal, A.; Rastogi, A.; Wang, J.S.; Wang, G. Colonoscopy polyp detection and classification: Dataset creation and comparative evaluations. PLoS ONE 2021, 16, e0255809.

- Aliyi, S.; Dese, K.; Raj, H. Detection of gastrointestinal tract disorders using deep learning methods from colonoscopy images and videos. Sci. Afr. 2023, 20, e01628.

- Jahn, B.; Bundo, M.; Arvandi, M.; Schaffner, M.; Todorovic, J.; Sroczynski, G.; Knudsen, A.; Fischer, T.; Schiller-Fruehwirth, I.; Öfner, D. One in three adenomas could be missed by white-light colonoscopy–findings from a systematic review and meta-analysis. BMC Gastroenterol. 2025, 25, 170.

- Ali, S. Where do we stand in AI for endoscopic image analysis? Deciphering gaps and future directions. npj Digit. Med. 2022, 5, 184.

- Young, E.; Edwards, L.; Singh, R. The role of artificial intelligence in colorectal cancer screening: Lesion detection and lesion characterization. Cancers 2023, 15, 5126.

- Pulumati, A.; Pulumati, A.; Dwarakanath, B.S.; Verma, A.; Papineni, R.V. Technological advancements in cancer diagnostics: Improvements and limitations. Cancer Rep. 2023, 6, e1764.

- Khalifa, M.; Albadawy, M. AI in diagnostic imaging: Revolutionising accuracy and efficiency. Comput. Methods Programs Biomed. Update 2024, 5, 100146.

- Li, M.; Ali, S.M.; Umm-a-OmarahGilani, S.; Liu, J.; Li, Y.-Q.; Zuo, X.-L. Kudo’s pit pattern classification for colorectal neoplasms: A meta-analysis. World J. Gastroenterol. WJG 2014, 20, 12649.

- De Carvalho, T.; Kader, R.; Brandao, P.; Lovat, L.B.; Mountney, P.; Stoyanov, D. NICE polyp feature classification for colonoscopy screening. Int. J. Comput. Assist. Radiol. Surg. 2025, 20, 1015–1024.

- Van Doorn, S.C.; Hazewinkel, Y.; East, J.E.; Van Leerdam, M.E.; Rastogi, A.; Pellisé, M.; Sanduleanu-Dascalescu, S.; Bastiaansen, B.A.; Fockens, P.; Dekker, E. Polyp morphology: An interobserver evaluation for the Paris classification among international experts. Am. J. Gastroenterol. 2015, 110, 180–187.

- Uraoka, T.; Saito, Y.; Ikematsu, H.; Yamamoto, K.; Sano, Y. Sano’s capillary pattern classification for narrow-band imaging of early colorectal lesions. Dig. Endosc. 2011, 23, 112–115.

- Komeda, Y.; Handa, H.; Watanabe, T.; Nomura, T.; Kitahashi, M.; Sakurai, T.; Okamoto, A.; Minami, T.; Kono, M.; Arizumi, T. Computer-aided diagnosis based on convolutional neural network system for colorectal polyp classification: Preliminary experience. Oncology 2017, 93, 30–34.

- Sánchez-Peralta, L.F.; Bote-Curiel, L.; Picón, A.; Sánchez-Margallo, F.M.; Pagador, J.B. Deep learning to find colorectal polyps in colonoscopy: A systematic literature review. Artif. Intell. Med. 2020, 108, 101923.

- Mathews, A.A.; Draganov, P.V.; Yang, D. Endoscopic management of colorectal polyps: From benign to malignant polyps. World J. Gastrointest. Endosc. 2021, 13, 356.

- Itoh, H.; Oda, M.; Jiang, K.; Mori, Y.; Misawa, M.; Kudo, S.-E.; Imai, K.; Ito, S.; Hotta, K.; Mori, K. Binary polyp-size classification based on deep-learned spatial information. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1817–1828.

- Saad, A.I.; Maghraby, F.A.; Badawy, O.M. PolyDSS: Computer-aided decision support system for multiclass polyp segmentation and classification using deep learning. Neural Comput. Appl. 2024, 36, 5031–5057.

- Grosu, S.; Wesp, P.; Graser, A.; Maurus, S.; Schulz, C.; Knösel, T.; Cyran, C.C.; Ricke, J.; Ingrisch, M.; Kazmierczak, P.M. Machine learning–based differentiation of benign and premalignant colorectal polyps detected with CT colonography in an asymptomatic screening population: A proof-of-concept study. Radiology 2021, 299, 326–335.

- Sharma, P.; Balabantaray, B.K.; Bora, K.; Mallik, S.; Kasugai, K.; Zhao, Z. An ensemble-based deep convolutional neural network for computer-aided polyps identification from colonoscopy. Front. Genet. 2022, 13, 844391.

- Barua, I.; Vinsard, D.G.; Jodal, H.C.; Løberg, M.; Kalager, M.; Holme, Ø.; Misawa, M.; Bretthauer, M.; Mori, Y. Artificial intelligence for polyp detection during colonoscopy: A systematic review and meta-analysis. Endoscopy 2021, 53, 277–284.

- Bora, K.; Bhuyan, M.; Kasugai, K.; Mallik, S.; Zhao, Z. Computational learning of features for automated colonic polyp classification. Sci. Rep. 2021, 11, 4347.

- Krenzer, A.; Heil, S.; Fitting, D.; Matti, S.; Zoller, W.G.; Hann, A.; Puppe, F. Automated classification of polyps using deep learning architectures and few-shot learning. BMC Med. Imaging 2023, 23, 59.

- Krenzer, A.; Banck, M.; Makowski, K.; Hekalo, A.; Fitting, D.; Troya, J.; Sudarevic, B.; Zoller, W.G.; Hann, A.; Puppe, F. A real-time polyp-detection system with clinical application in colonoscopy using deep convolutional neural networks. J. Imaging 2023, 9, 26.

- Wang, W.; Tian, J.; Zhang, C.; Luo, Y.; Wang, X.; Li, J. An improved deep learning approach and its applications on colonic polyp images detection. BMC Med. Imaging 2020, 20, 83.

- Buitrago-Tamayo, A.C.; Lombo-Moreno, C.E.; Ursida, V.; Leguizamo-Naranjo, A.M.; Muñoz-Velandia, O.M.; Vargas-Rubio, R.D. Concordance between NICE classification and histopathology in colonic polyps: A tertiary center experience. Ther. Adv. Gastrointest. Endosc. 2024, 17, 26317745241231102.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Targ, S.; Almeida, D.; Lyman, K. ResNet in ResNet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Abid, A.; Abdalla, A.; Abid, A.; Khan, D.; Alfozan, A.; Zou, J. Gradio: Hassle-free sharing and testing of ML models in the wild. arXiv 2019, arXiv:1906.02569.

| Database | Participants | Still Frames: Polyp | Still Frames: Normal | Resolution | Type |
|---|---|---|---|---|---|
| Real-Time (own) (DS-1) | 71 | 100 | 100 | 1920 × 1080 | Own |
| UAH DB (DS-2) | 76 | 76 | 0 | 768 × 576 | OA |

| Category | Class | Real-Time (Own) (DS-1) | UAH DB (DS-2) |
|---|---|---|---|
| Polyp | Hyperplastic | 60 | 21 |
| Polyp | Serrated | 30 | 15 |
| Polyp | Adenoma | 10 | 40 |
| Normal | Normal | 100 | 0 |
| Total | | 200 | 76 |

| Class (Real-Time (Own), DS-1) | Original Dataset | Stage 1 Augmentation | Stage 2 Augmentation |
|---|---|---|---|
| Hyperplastic | 60 | 60 | 1320 |
| Serrated | 30 | 60 | 1320 |
| Adenoma | 10 | 60 | 1320 |
| Normal | 100 | 60 | 1320 |
| Total | 200 | 240 | 5280 |
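The augmentation table implies a two-stage scheme on DS-1: stage 1 equalizes the four classes at 60 images each (adding images to the adenoma and serrated classes and reducing the normal class from 100 to 60, which appears consistent with the 40 DS-1 normal images listed under testing), and stage 2 expands every class 22-fold to 1320 images. The source does not state which transforms were applied or how the normal class was reduced. The sketch below assumes random subsampling for oversized classes and simple flips and 90° rotations for augmentation, purely for illustration; `TRANSFORMS`, `resample_to`, and `two_stage_augment` are hypothetical names.

```python
import numpy as np

# Hypothetical augmentations: the source does not list the transforms it used.
# rot90 keeps the array shape only for square images, so frames are assumed to be
# resized to a square input size before this step.
TRANSFORMS = [
    np.fliplr,
    np.flipud,
    lambda im: np.rot90(im, 1),
    lambda im: np.rot90(im, 2),
    lambda im: np.rot90(im, 3),
]


def resample_to(images, target, rng):
    """Return exactly `target` images for one class.

    Classes with at least `target` images are randomly subsampled; smaller
    classes keep every original and are topped up with transformed copies.
    """
    if len(images) >= target:
        keep = rng.choice(len(images), size=target, replace=False)
        return [images[i] for i in keep]
    out = list(images)
    while len(out) < target:
        source = images[rng.integers(len(images))]
        transform = TRANSFORMS[rng.integers(len(TRANSFORMS))]
        out.append(transform(source))
    return out


def two_stage_augment(dataset, stage1_target=60, stage2_target=1320, seed=0):
    """Stage 1 balances every class to stage1_target; stage 2 expands each to stage2_target."""
    rng = np.random.default_rng(seed)
    stage1 = {c: resample_to(imgs, stage1_target, rng) for c, imgs in dataset.items()}
    return {c: resample_to(imgs, stage2_target, rng) for c, imgs in stage1.items()}
```

With the original DS-1 counts (60, 30, 10, 100), stage 1 yields 240 images and stage 2 yields 5280, matching the table totals. With only five discrete transforms, many stage 2 copies are duplicates; practical pipelines usually add continuous random parameters such as crop position, rotation angle, or brightness.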

| Class | Training (80%), Real-Time (Own) (DS-1) | Validation (20%), Real-Time (Own) (DS-1) | Testing, Real-Time (Own) (DS-1) | Testing, UAH DB (DS-2) |
|---|---|---|---|---|
| Hyperplastic | 1056 | 264 | 0 | 21 |
| Serrated | 1056 | 264 | 0 | 15 |
| Adenoma | 1056 | 264 | 0 | 40 |
| Normal | 1056 | 264 | 40 | 0 |
| Total | 4224 | 1056 | 40 | 76 |

Total test images (DS-1 + DS-2): 40 + 76 = 116.
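The split table divides the 5280 augmented DS-1 images 80/20 into training (4224) and validation (1056), that is, 1056 and 264 images per class. It does not say whether the split was drawn from the augmented pool or from the 240 balanced source images before stage 2. If it was drawn from the augmented pool, transformed copies of one source frame can land in both partitions and inflate validation scores. A minimal sketch of a split that avoids this, assuming each augmented image carries the ID of the frame it was derived from (`source_ids` and `grouped_split` are hypothetical), assigns whole source frames to one side, class by class:

```python
import numpy as np


def grouped_split(source_ids, labels, val_fraction=0.2, seed=0):
    """Train/validation split that keeps all augmented copies of a source frame together.

    source_ids[i] identifies the original frame that sample i was augmented from.
    Whole source frames (not individual samples) are assigned to validation,
    separately within each class, so class proportions are preserved.
    """
    source_ids = np.asarray(source_ids)
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    val_mask = np.zeros(len(labels), dtype=bool)
    for cls in np.unique(labels):
        groups = np.unique(source_ids[labels == cls])
        rng.shuffle(groups)
        n_val = int(round(val_fraction * len(groups)))
        val_mask |= (labels == cls) & np.isin(source_ids, groups[:n_val])
    return np.flatnonzero(~val_mask), np.flatnonzero(val_mask)
```

With 60 source frames per class and 22 images per frame, holding out 12 frames per class reproduces the table's counts exactly: 12 × 22 = 264 validation images per class and 4224 training images overall. scikit-learn's `GroupShuffleSplit` and `StratifiedGroupKFold` offer comparable grouped splitting.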

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 50/50 | 0 | 85.12 | 73.41 | 91.57 | 73.2 | 90.82 | 68.99 | 71.86 | 71.86 | 71.59 | 71.59 | 71.86 | 67.71 |
| | | 1 | 84 | 68.99 | 90.62 | 72.06 | 88.92 | | 64.39 | 64.39 | 67.80 | 67.80 | 64.39 | |
| | | 2 | 83.92 | 70.48 | 90.27 | 71.69 | 89.42 | | 64.47 | 64.47 | 66.67 | 66.67 | 64.47 | |
| | | 3 | 85.99 | 73.24 | 91.11 | 68.94 | 90.85 | | 70.66 | 70.66 | 64.77 | 64.77 | 70.66 | |
| ADAM | 48/50 | 0 | 90.61 | 83.93 | 94.78 | 85.04 | 94.03 | 79.19 | 79.93 | 79.93 | 82.95 | 82.95 | 79.93 | 77.84 |
| | | 1 | 89.91 | 80.26 | 93.85 | 82.77 | 92.94 | | 76.03 | 76.03 | 76.89 | 76.89 | 76.03 | |
| | | 2 | 89.24 | 81.55 | 92.92 | 81.63 | 92.37 | | 76.14 | 76.14 | 76.14 | 76.14 | 76.14 | |
| | | 3 | 91.29 | 84.33 | 95.16 | 80.49 | 94.5 | | 79.28 | 79.28 | 75.38 | 75.38 | 79.28 | |
| RMSprop | 50/50 | 0 | 88.85 | 80.73 | 93.41 | 81.72 | 92.59 | 75.19 | 78.08 | 78.08 | 76.89 | 76.89 | 78.08 | 73.96 |
| | | 1 | 88.12 | 78.29 | 92.7 | 80.59 | 91.58 | | 71.12 | 71.12 | 74.62 | 74.62 | 71.12 | |
| | | 2 | 89.23 | 78.5 | 93.36 | 79.17 | 92.6 | | 71.06 | 71.06 | 73.48 | 73.48 | 71.06 | |
| | | 3 | 89.83 | 81.36 | 94.08 | 77.27 | 93.21 | | 76.02 | 76.02 | 70.83 | 70.83 | 76.02 | |
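The results tables report per-class accuracy, sensitivity, specificity, precision, and NPV alongside an overall accuracy, but the formulas are not given in this section. The sketch below assumes the standard one-vs-rest definitions computed from a multiclass confusion matrix; it is an illustration, not the authors' evaluation code, and the confusion matrix in the test is made up.

```python
import numpy as np


def _pct(num, den):
    """Percentage num/den, or NaN when the denominator is zero."""
    return 100.0 * num / den if den else float("nan")


def per_class_metrics(cm):
    """One-vs-rest metrics (in %) for each class of a square confusion matrix.

    cm[i, j] counts samples whose true class is i and whose predicted class is j.
    """
    cm = np.asarray(cm)
    total = cm.sum()
    results = []
    for k in range(cm.shape[0]):
        tp = cm[k, k]
        fn = cm[k, :].sum() - tp  # class-k samples predicted as another class
        fp = cm[:, k].sum() - tp  # other-class samples predicted as class k
        tn = total - tp - fn - fp
        results.append({
            "accuracy": _pct(tp + tn, total),
            "sensitivity": _pct(tp, tp + fn),
            "specificity": _pct(tn, tn + fp),
            "precision": _pct(tp, tp + fp),
            "npv": _pct(tn, tn + fn),
        })
    return results


def overall_accuracy(cm):
    """Share (in %) of all samples on the diagonal, i.e. correctly classified."""
    cm = np.asarray(cm)
    return _pct(np.trace(cm), cm.sum())
```

Because every one-vs-rest problem counts the other three classes as true negatives, per-class accuracy is typically higher than overall accuracy, which matches the pattern in the tables.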

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 50/50 | 0 | 85.12 | 73.41 | 91.57 | 73.2 | 90.82 | 71.47 | 75.49 | 75.49 | 72.35 | 72.35 | 75.49 | 70.08 |
| | | 1 | 84 | 68.99 | 90.62 | 72.06 | 88.92 | | 65.38 | 65.38 | 70.83 | 70.83 | 65.38 | |
| | | 2 | 83.92 | 70.48 | 90.27 | 71.69 | 89.42 | | 66.06 | 66.06 | 69.32 | 69.32 | 66.06 | |
| | | 3 | 85.99 | 73.24 | 91.11 | 68.94 | 90.85 | | 74.58 | 74.58 | 67.80 | 67.80 | 74.58 | |
| ADAM | 47/50 | 0 | 90.61 | 83.93 | 94.78 | 85.04 | 94.03 | 82.48 | 84.53 | 84.53 | 84.85 | 84.85 | 84.53 | 80.87 |
| | | 1 | 89.91 | 80.26 | 93.85 | 82.77 | 92.94 | | 77.34 | 77.34 | 81.44 | 81.44 | 77.34 | |
| | | 2 | 89.24 | 81.55 | 92.92 | 81.63 | 92.37 | | 78.28 | 78.28 | 79.17 | 79.17 | 78.28 | |
| | | 3 | 91.29 | 84.33 | 95.16 | 80.49 | 94.5 | | 83.74 | 83.74 | 78.03 | 78.03 | 83.74 | |
| RMSprop | 50/50 | 0 | 88.85 | 80.73 | 93.41 | 81.72 | 92.59 | 79.69 | 83.46 | 83.46 | 82.20 | 82.20 | 83.46 | 78.13 |
| | | 1 | 88.12 | 78.29 | 92.7 | 80.59 | 91.58 | | 73.59 | 73.59 | 79.17 | 79.17 | 73.59 | |
| | | 2 | 89.23 | 78.5 | 93.36 | 79.17 | 92.6 | | 74.44 | 74.44 | 76.14 | 76.14 | 74.44 | |
| | | 3 | 89.83 | 81.36 | 94.08 | 77.27 | 93.21 | | 81.82 | 81.82 | 75.00 | 75.00 | 81.82 | |
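The tables compare three optimizers: SGD, Adam (written ADAM in the tables), and RMSprop. To make their differences concrete, the sketch below implements the textbook update rules in NumPy on the toy objective f(w) = ||w||². SGD takes a fixed step along the negative gradient, RMSprop rescales each coordinate by a running RMS of recent gradients, and Adam adds a bias-corrected momentum term on top of that rescaling. Plain SGD without momentum is shown, and the learning rates and decay constants are illustrative defaults, not the training settings used in this work.

```python
import numpy as np


def sgd_step(w, g, state, lr=0.1):
    """Plain SGD (no momentum): move against the gradient."""
    return w - lr * g, state


def rmsprop_step(w, g, state, lr=0.01, rho=0.9, eps=1e-8):
    """RMSprop: divide the step by a running RMS of past gradients."""
    v = rho * state.get("v", np.zeros_like(w)) + (1 - rho) * g**2
    state["v"] = v
    return w - lr * g / (np.sqrt(v) + eps), state


def adam_step(w, g, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: bias-corrected running mean (momentum) and running RMS of the gradient."""
    t = state.get("t", 0) + 1
    m = beta1 * state.get("m", np.zeros_like(w)) + (1 - beta1) * g
    v = beta2 * state.get("v", np.zeros_like(w)) + (1 - beta2) * g**2
    state.update(t=t, m=m, v=v)
    m_hat = m / (1 - beta1**t)
    v_hat = v / (1 - beta2**t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps), state


def minimise(step, steps=2000, **hyper):
    """Minimise f(w) = ||w||^2, whose gradient is 2w, from a fixed start point."""
    w, state = np.array([3.0, -2.0]), {}
    for _ in range(steps):
        w, state = step(w, 2 * w, state, **hyper)
    return w
```

For example, `minimise(adam_step, lr=0.05)` reruns Adam with a larger learning rate. Deep learning frameworks ship these optimizers with additional options such as momentum, weight decay, and learning-rate schedules.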

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 46/50 | 0 | 90.12 | 84.38 | 95.16 | 84.94 | 94.45 | 83.76 | 87.80 | 87.80 | 84.47 | 84.47 | 87.80 | 81.91 |
| | | 1 | 89.77 | 82.2 | 94.51 | 84.38 | 93.61 | | 76.84 | 76.84 | 82.95 | 82.95 | 76.84 | |
| | | 2 | 90.67 | 82.31 | 95.55 | 83.71 | 94.18 | | 77.74 | 77.74 | 80.68 | 80.68 | 77.74 | |
| | | 3 | 91.25 | 86.34 | 96.12 | 82.01 | 95.1 | | 86.42 | 86.42 | 79.55 | 79.55 | 86.42 | |
| ADAM | 39/50 | 0 | 95.12 | 91.04 | 97.55 | 90.44 | 96.65 | 89.2 | 90.26 | 90.26 | 91.29 | 91.29 | 90.26 | 88.07 |
| | | 1 | 94.67 | 87.2 | 96.88 | 89.68 | 95.45 | | 85.71 | 85.71 | 88.64 | 88.64 | 85.71 | |
| | | 2 | 94.94 | 87.53 | 97.2 | 89.11 | 96.1 | | 86.42 | 86.42 | 86.74 | 86.74 | 86.42 | |
| | | 3 | 95.78 | 91.22 | 97.88 | 87.59 | 97.02 | | 90.04 | 90.04 | 85.61 | 85.61 | 90.04 | |
| RMSprop | 43/50 | 0 | 94.22 | 88.36 | 96.55 | 89.11 | 95.49 | 87.38 | 87.73 | 87.73 | 89.39 | 89.39 | 87.73 | 86.93 |
| | | 1 | 93.69 | 85.87 | 96.13 | 88.07 | 94.69 | | 85.24 | 85.24 | 87.50 | 87.50 | 85.24 | |
| | | 2 | 94.06 | 86.37 | 96.47 | 87.03 | 95.14 | | 85.77 | 85.77 | 86.74 | 86.74 | 85.77 | |
| | | 3 | 95.02 | 89.03 | 97.12 | 85.32 | 96.43 | | 89.16 | 89.16 | 84.09 | 84.09 | 89.16 | |
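Each optimizer row reports an Epoch value such as 39/50 or 43/50. We read this as the epoch at which training ended out of a 50-epoch budget, but the stopping criterion is not stated in this section. Early stopping on validation loss is one common mechanism that produces such values; the framework-independent sketch below replays a list of per-epoch validation losses. `EarlyStopping`, `train_with_early_stopping`, `patience`, and `min_delta` are illustrative names and settings, not the authors'.

```python
class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = 0
        self.bad_epochs = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def train_with_early_stopping(val_losses, max_epochs=50, patience=5):
    """Replay per-epoch validation losses; return (stop_epoch, best_epoch)."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if stopper.step(epoch, loss):
            return epoch, stopper.best_epoch
    return min(len(val_losses), max_epochs), stopper.best_epoch
```

In a real training loop, the weights saved at `best_epoch` would normally be restored before evaluation, so the reported model need not be the one from the final epoch.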

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 50/50 | 0 | 94.39 | 84.2 | 98.54 | 82.77 | 98.51 | 81.87 | 93.56 | 84.47 | 95.98 | 84.47 | 93.56 | 80.21 |
| | | 1 | 93.73 | 79.61 | 98.52 | 82.1 | 98.25 | | 91.48 | 78.14 | 94.92 | 82.58 | 91.48 | |
| | | 2 | 93.81 | 79.87 | 98.51 | 81.53 | 98.26 | | 89.96 | 77.53 | 93.56 | 78.41 | 89.96 | |
| | | 3 | 94.42 | 84 | 98.31 | 81.06 | 98.33 | | 90.53 | 80.89 | 92.67 | 75.38 | 90.53 | |
| ADAM | 46/50 | 0 | 95.06 | 88.36 | 98.51 | 88.45 | 98.52 | 87.55 | 95.91 | 87.64 | 96.79 | 88.64 | 95.91 | 86.27 |
| | | 1 | 94.82 | 86.66 | 98.48 | 87.97 | 98.32 | | 94.36 | 85.45 | 95.83 | 86.74 | 94.36 | |
| | | 2 | 94.84 | 86.75 | 98.49 | 87.41 | 98.31 | | 93.84 | 85.17 | 95.72 | 84.85 | 93.84 | |
| | | 3 | 95.09 | 88.46 | 98.34 | 86.36 | 98.33 | | 94.41 | 86.82 | 95.72 | 84.85 | 94.41 | |
| RMSprop | 50/50 | 0 | 93.12 | 87.46 | 98.79 | 87.46 | 93.12 | 86.27 | 94.74 | 85.87 | 96.55 | 87.5 | 94.74 | 84.66 |
| | | 1 | 92.73 | 84.66 | 98.66 | 86.74 | 92.55 | | 93.37 | 82.91 | 95.83 | 86.36 | 93.37 | |
| | | 2 | 92.64 | 85.22 | 98.53 | 86.27 | 92.37 | | 92.84 | 83.77 | 95.08 | 84.09 | 92.84 | |
| | | 3 | 93.14 | 87.84 | 98.61 | 84.85 | 93.18 | | 93.66 | 86.23 | 94.66 | 80.68 | 93.66 | |

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 47/50 | 0 | 91.37 | 83.38 | 94.83 | 84.56 | 94.38 | 83.07 | 92.8 | 85.82 | 94.95 | 84.85 | 95.01 | 81.06 |
| | | 1 | 90.73 | 81.48 | 94.66 | 84.19 | 93.63 | | 89.2 | 77.03 | 94.05 | 82.58 | 91.82 | |
| | | 2 | 90.86 | 81.25 | 94.13 | 82.48 | 93.67 | | 89.2 | 77.37 | 93.37 | 80.3 | 92.16 | |
| | | 3 | 92.02 | 86.46 | 93.81 | 81.06 | 95.77 | | 92.8 | 84.87 | 95.6 | 76.52 | 95.6 | |
| ADAM | 43/50 | 0 | 94.42 | 90.24 | 96.44 | 89.3 | 96.44 | 88.66 | 94.92 | 89.43 | 96.59 | 89.77 | 96.47 | 87.69 |
| | | 1 | 93.97 | 87.27 | 96.3 | 88.92 | 95.68 | | 93.3 | 85.04 | 96.03 | 88.26 | 94.81 | |
| | | 2 | 94.04 | 87.45 | 96.13 | 88.45 | 95.77 | | 93.94 | 86.14 | 95.69 | 87.12 | 95.33 | |
| | | 3 | 94.97 | 89.76 | 96.65 | 87.97 | 96.65 | | 95.45 | 90.4 | 97.04 | 85.61 | 97.06 | |
| RMSprop | 45/50 | 0 | 93.91 | 88.67 | 96.04 | 88.16 | 96.06 | 86.98 | 94.01 | 86.89 | 95.97 | 87.88 | 95.94 | 85.70 |
| | | 1 | 92.26 | 85.45 | 95.92 | 87.88 | 95 | | 92.8 | 84.13 | 95.42 | 86.36 | 94.57 | |
| | | 2 | 92.84 | 85.67 | 95.52 | 86.65 | 95.17 | | 93.08 | 84.7 | 95.29 | 85.98 | 94.82 | |
| | | 3 | 94.27 | 88.24 | 96.26 | 85.23 | 96.26 | | 93.94 | 87.2 | 96.01 | 82.58 | 96.01 | |

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 49/50 | 0 | 92.12 | 83.62 | 94.56 | 83.62 | 94.57 | 82.55 | 92.71 | 84.85 | 98.75 | 84.85 | 92.71 | 80.59 |
| | | 1 | 91.1 | 82.77 | 94.2 | 82.77 | 93.29 | | 91.43 | 78.7 | 98.56 | 82.58 | 91.05 | |
| | | 2 | 91.3 | 82.29 | 94.1 | 82.29 | 93.46 | | 90.73 | 77.7 | 98.14 | 79.17 | 90.36 | |
| | | 3 | 92.61 | 81.53 | 94.86 | 81.53 | 95.19 | | 91.96 | 81.3 | 98.08 | 75.76 | 92.33 | |
| ADAM | 45/50 | 0 | 96.61 | 89.07 | 98.74 | 88.75 | 98.74 | 88.04 | 96.15 | 87.55 | 98.9 | 87.88 | 98.89 | 86.62 |
| | | 1 | 96.47 | 86.88 | 98.95 | 88.45 | 98.64 | | 95.84 | 85.33 | 98.92 | 87.03 | 98.75 | |
| | | 2 | 96.5 | 86.96 | 98.97 | 87.78 | 98.65 | | 95.92 | 85.61 | 98.93 | 86.17 | 98.74 | |
| | | 3 | 96.8 | 89.33 | 98.85 | 87.22 | 98.87 | | 96.3 | 88.09 | 98.76 | 85.42 | 98.77 | |
| RMSprop | 48/50 | 0 | 96.15 | 87.55 | 98.9 | 87.88 | 98.89 | 86.62 | 92.9 | 86.25 | 98.56 | 87.88 | 92.51 | 84.94 |
| | | 1 | 95.84 | 85.33 | 98.92 | 87.03 | 98.75 | | 91.82 | 83.15 | 98.18 | 85.98 | 91.34 | |
| | | 2 | 95.92 | 85.61 | 98.93 | 86.17 | 98.74 | | 91.75 | 84.03 | 97.99 | 83.71 | 91.26 | |
| | | 3 | 96.3 | 88.09 | 98.76 | 85.42 | 98.77 | | 92.79 | 86.45 | 98.15 | 82.2 | 92.45 | |

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 42/50 | 0 | 93.45 | 87.05 | 95.82 | 87.88 | 94.95 | 86.36 | 85.77 | 85.77 | 86.74 | 86.74 | 85.77 | 84.85 |
| | | 1 | 92.49 | 84.73 | 95.36 | 87.22 | 93.91 | | 84.27 | 84.27 | 85.23 | 85.23 | 84.27 | |
| | | 2 | 93 | 85.51 | 95.6 | 86.08 | 94.28 | | 83.77 | 83.77 | 84.09 | 84.09 | 83.77 | |
| | | 3 | 93.85 | 88.29 | 96.18 | 84.28 | 95.25 | | 85.60 | 85.60 | 83.33 | 83.33 | 85.60 | |
| ADAM | 35/50 | 0 | 96.36 | 93.45 | 97.92 | 93.18 | 97.21 | 92.38 | 96.4 | 91.85 | 97.96 | 93.94 | 97.22 | 91.1 |
| | | 1 | 95.71 | 91.47 | 97.85 | 91.38 | 96.29 | | 95.27 | 89.93 | 97.08 | 91.29 | 96.59 | |
| | | 2 | 95.8 | 91.5 | 97.89 | 92.71 | 96.08 | | 95.17 | 90.49 | 96.72 | 90.15 | 96.84 | |
| | | 3 | 96.35 | 93.12 | 98.17 | 92.23 | 96.92 | | 95.36 | 92.16 | 96.38 | 89.02 | 97.47 | |
| RMSprop | 39/50 | 0 | 94.53 | 91.11 | 96.74 | 91.19 | 95.89 | 90.34 | 95.27 | 88.93 | 97.96 | 91.29 | 96.59 | 89.11 |
| | | 1 | 93.84 | 89.81 | 96.68 | 90.15 | 95.48 | | 94.21 | 87.82 | 97.08 | 90.15 | 96.84 | |
| | | 2 | 93.7 | 89.55 | 96.55 | 89.3 | 95.25 | | 94.07 | 87.92 | 96.72 | 88.26 | 97.22 | |
| | | 3 | 94.1 | 90.89 | 96.86 | 90.72 | 95.74 | | 94.4 | 91.97 | 96.38 | 86.74 | 97.47 | |
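
The per-class columns in these tables (accuracy, sensitivity, specificity, precision, NPV) are not defined in this section. The sketch below assumes the common one-vs-rest convention, in which each polyp class is treated in turn as "positive" and the remaining classes are pooled as "negative", and derives the metrics from a 4 × 4 confusion matrix. The helper `one_vs_rest_metrics` is hypothetical, and the counts in the usage example are illustrative only, not data from this study.

```python
from typing import Dict, List


def one_vs_rest_metrics(cm: List[List[int]]) -> Dict[str, object]:
    """Per-class metrics (in %) from a square confusion matrix.

    Assumption: cm[i][j] counts images whose true class is i and whose
    predicted class is j. Each class is scored one-vs-rest.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)

    def pct(num: int, den: int) -> float:
        # Guard against empty denominators (e.g. a class never predicted).
        return 100.0 * num / den if den else 0.0

    per_class = {}
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # true c, predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, true class differs
        tn = total - tp - fn - fp                  # neither true nor predicted as c
        per_class[c] = {
            "accuracy": pct(tp + tn, total),
            "sensitivity": pct(tp, tp + fn),
            "specificity": pct(tn, tn + fp),
            "precision": pct(tp, tp + fp),
            "npv": pct(tn, tn + fn),
        }
    # Overall multiclass accuracy: correctly classified images over all images.
    overall = pct(sum(cm[c][c] for c in range(n)), total)
    return {"per_class": per_class, "overall_accuracy": overall}


# Illustrative 4-class confusion matrix (hypothetical counts).
cm = [
    [8, 1, 0, 1],
    [0, 9, 1, 0],
    [1, 0, 8, 1],
    [0, 0, 1, 9],
]
result = one_vs_rest_metrics(cm)
print(result["overall_accuracy"])          # 85.0
print(result["per_class"][0]["accuracy"])  # 92.5
```

Under this convention a single misclassification counts as a false negative for the true class and a false positive for the predicted class, which is why sensitivity and precision can move independently for the same class.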

| Optimizer | Epoch | Class | Training Accuracy | Training Sensitivity | Training Specificity | Training Precision | Training NPV | Training Overall Accuracy | Validation Accuracy | Validation Sensitivity | Validation Specificity | Validation Precision | Validation NPV | Validation Overall Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SGD | 35/50 | 0 | 92.52 | 92.43 | 97.22 | 91.19 | 95.7 | 91.76 | 95.51 | 91.63 | 97.94 | 91.29 | 96.86 | 89.77 |
| | | 1 | 91.04 | 91.04 | 96.67 | 91.38 | 95.31 | | 93.76 | 86.86 | 97.08 | 90.15 | 96.41 | |
| | | 2 | 91.11 | 91.11 | 96.44 | 91.19 | 95.1 | | 94.26 | 88.72 | 96.7 | 89.39 | 97.12 | |
| | | 3 | 92.48 | 92.48 | 97.28 | 91.95 | 95.83 | | 94.88 | 92.09 | 96.45 | 88.26 | 97.54 | |
| ADAM | 22/50 | 0 | 97.92 | 97.55 | 99.31 | 97.44 | 98.9 | 97.28 | 98.01 | 97.75 | 98.29 | 98.86 | 98.51 | 96.02 |
| | | 1 | 96.44 | 96.44 | 99.13 | 97.25 | 98.5 | | 95.93 | 93.8 | 97.97 | 97.35 | 96.2 | |
| | | 2 | 97.16 | 97.16 | 99.15 | 96.5 | 98.75 | | 96.57 | 95.08 | 96.68 | 95.08 | 97.04 | |
| | | 3 | 97.98 | 97.98 | 99.43 | 97.98 | 99 | | 96.97 | 97.61 | 96.8 | 92.8 | 99.02 | |
| RMSprop | 29/50 | 0 | 96.49 | 96.49 | 98.92 | 96.21 | 98.6 | 95.45 | 97.35 | 95.54 | 98.8 | 97.35 | 98.65 | 94.22 |
| | | 1 | 94.39 | 94.39 | 98.75 | 95.27 | 98.2 | | 93.94 | 92.54 | 95.1 | 93.94 | 96.15 | |
| | | 2 | 94.82 | 94.82 | 98.6 | 94.79 | 98.3 | | 92.8 | 93.51 | 92.7 | 92.8 | 96.1 | |
| | | 3 | 96.16 | 96.16 | 99.01 | 94.79 | 98.5 | | 92.8 | 95.33 | 91.9 | 92.8 | 99.1 | |
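
One way to read this optimizer comparison is to rank the configurations by validation overall accuracy and to look at the gap between training and validation overall accuracy as a rough overfitting signal. The sketch below does this for the three optimizer rows of the table above. The ranking criterion and the helper name `rank_by_validation` are our assumptions, not a selection procedure stated in this section. The ADAM row's validation metrics are the same values reported for the 80:20 hold-out split (A) in the later comparison table.

```python
from typing import Dict, List, Tuple

# Overall accuracy (%) per optimizer, copied from the table above:
# optimizer -> (training overall accuracy, validation overall accuracy)
RESULTS: Dict[str, Tuple[float, float]] = {
    "SGD": (91.76, 89.77),
    "ADAM": (97.28, 96.02),
    "RMSprop": (95.45, 94.22),
}


def rank_by_validation(
    results: Dict[str, Tuple[float, float]]
) -> List[Tuple[str, float, float]]:
    """Return (optimizer, validation accuracy, train-val gap), best first.

    Hypothetical helper: ranks by validation overall accuracy only.
    """
    rows = [
        (name, val, round(train - val, 2))
        for name, (train, val) in results.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


for name, val, gap in rank_by_validation(RESULTS):
    print(f"{name}: validation {val:.2f}%, train-val gap {gap:.2f} points")
```

Ranking on validation rather than training accuracy avoids favouring a configuration that merely fits the training images more closely.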

| Fold | Class | Accuracy | Sensitivity | Specificity | Precision | NPV | Overall Accuracy |
|---|---|---|---|---|---|---|---|
| Fold 1 | 0 | 99.15 | 97.75 | 99.62 | 98.86 | 99.24 | 96.69 |
| | 1 | 98.11 | 94.85 | 99.23 | 97.73 | 98.23 | |
| | 2 | 98.01 | 96.2 | 98.61 | 95.83 | 98.74 | |
| | 3 | 98.11 | 98.03 | 98.13 | 94.32 | 99.37 | |
| Fold 2 | 0 | 99.23 | 97.77 | 99.62 | 99.62 | 99.24 | 97.92 |
| | 1 | 98.49 | 97.37 | 99.04 | 98.11 | 98.49 | |
| | 2 | 98.2 | 97.36 | 98.86 | 97.73 | 99.24 | |
| | 3 | 98.77 | 99.22 | 98.7 | 96.21 | 99.79 | |
| Fold 3 | 0 | 97.11 | 96.27 | 97.73 | 97.73 | 97.12 | 95.08 |
| | 1 | 95.9 | 93.68 | 97.04 | 95.45 | 96.56 | |
| | 2 | 95.52 | 94.68 | 95.84 | 94.32 | 96.83 | |
| | 3 | 94.87 | 95.7 | 94.74 | 92.8 | 98.16 | |
| Fold 4 | 0 | 97.11 | 95.56 | 97.73 | 96.21 | 97.12 | 95.17 |
| | 1 | 95.9 | 94.78 | 96.21 | 93.56 | 96.56 | |
| | 2 | 95.52 | 94.64 | 95.84 | 93.18 | 96.83 | |
| | 3 | 94.87 | 95.72 | 94.74 | 93.18 | 98.16 | |
| Fold 5 | 0 | 98.48 | 97.01 | 98.48 | 96.97 | 98.24 | 96.5 |
| | 1 | 96.97 | 95.52 | 96.97 | 96.59 | 97.25 | |
| | 2 | 96.59 | 95.15 | 96.59 | 93.94 | 98.05 | |
| | 3 | 95.83 | 98.41 | 95.83 | 93.94 | 99.48 | |
| Mean ± SD | 0 | 98.22 ± 0.94 | 96.87 ± 0.86 | 98.64 ± 0.85 | 97.88 ± 1.24 | 98.19 ± 0.95 | 96.27 ± 1.06 |
| | 1 | 97.07 ± 1.02 | 95.24 ± 1.22 | 97.70 ± 1.21 | 96.29 ± 1.65 | 97.42 ± 0.81 | |
| | 2 | 96.77 ± 1.06 | 95.61 ± 1.04 | 97.15 ± 1.33 | 95.00 ± 1.61 | 97.94 ± 0.98 | |
| | 3 | 96.49 ± 1.55 | 97.42 ± 1.45 | 96.43 ± 1.68 | 94.09 ± 1.19 | 98.99 ± 0.69 | |
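
The Mean ± SD rows summarize the five folds. A short check with Python's standard `statistics` module suggests that the reported spread is the population standard deviation (`statistics.pstdev`, dividing by n = 5) rather than the sample standard deviation (`statistics.stdev`, dividing by n − 1, which gives about 1.05 for class 0 accuracy). This is our inference from the numbers, not a statement in the source; `summarize_folds` is a hypothetical helper.

```python
import statistics
from typing import List, Tuple


def summarize_folds(scores: List[float]) -> Tuple[float, float]:
    """Mean and population standard deviation (divides by n) of per-fold scores."""
    return statistics.fmean(scores), statistics.pstdev(scores)


# Class 0 accuracy (%) for folds 1 to 5, copied from the table above.
class0_accuracy = [99.15, 99.23, 97.11, 97.11, 98.48]
mean, sd = summarize_folds(class0_accuracy)
print(f"{mean:.2f} ± {sd:.2f}")  # 98.22 ± 0.94
```

With only five folds the choice of divisor changes the reported spread noticeably, so stating which one is used makes the ± values reproducible.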

| Method | Class | Accuracy | Sensitivity | Specificity | Precision | NPV | Overall Accuracy |
|---|---|---|---|---|---|---|---|
| 80:20 Hold-Out Split (A) | 0 | 98.01 | 97.75 | 98.29 | 98.86 | 98.51 | 96.02 |
| | 1 | 95.93 | 93.8 | 97.97 | 97.35 | 96.2 | |
| | 2 | 96.57 | 95.08 | 96.68 | 95.08 | 97.04 | |
| | 3 | 96.97 | 97.61 | 96.8 | 92.8 | 99.02 | |
| 5-Fold Cross-Validation, Mean (B) | 0 | 98.22 | 96.87 | 98.64 | 97.88 | 98.19 | 96.27 |
| | 1 | 97.07 | 95.24 | 97.7 | 96.29 | 97.42 | |
| | 2 | 96.77 | 95.61 | 97.15 | 95 | 97.94 | |
| | 3 | 96.49 | 97.42 | 96.43 | 94.09 | 98.99 | |
| Absolute Difference between A and B | 0 | 0.21 | 0.88 | 0.35 | 0.98 | 0.32 | 0.25 |
| | 1 | 1.14 | 1.44 | 0.27 | 1.06 | 1.22 | |
| | 2 | 0.2 | 0.53 | 0.47 | 0.08 | 0.9 | |
| | 3 | 0.48 | 0.19 | 0.37 | 1.29 | 0.03 | |
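
The difference rows are absolute per-metric gaps between the hold-out split (A) and the cross-validation mean (B). The sketch below recomputes them from rows A and B and locates the largest gap. The dictionary layout and the helpers `abs_differences` and `largest_gap` are illustrative choices, not part of the original work.

```python
from typing import Dict, List, Tuple

METRICS = ["Accuracy", "Sensitivity", "Specificity", "Precision", "NPV"]

# Per-class metrics (%) copied from rows (A) and (B) of the table above.
HOLDOUT = {
    0: [98.01, 97.75, 98.29, 98.86, 98.51],
    1: [95.93, 93.8, 97.97, 97.35, 96.2],
    2: [96.57, 95.08, 96.68, 95.08, 97.04],
    3: [96.97, 97.61, 96.8, 92.8, 99.02],
}
KFOLD_MEAN = {
    0: [98.22, 96.87, 98.64, 97.88, 98.19],
    1: [97.07, 95.24, 97.7, 96.29, 97.42],
    2: [96.77, 95.61, 97.15, 95.0, 97.94],
    3: [96.49, 97.42, 96.43, 94.09, 98.99],
}


def abs_differences(
    a: Dict[int, List[float]], b: Dict[int, List[float]]
) -> Dict[int, List[float]]:
    """|A - B| per class and metric, rounded to two decimals like the table."""
    return {c: [round(abs(x - y), 2) for x, y in zip(a[c], b[c])] for c in a}


def largest_gap(diffs: Dict[int, List[float]]) -> Tuple[int, str, float]:
    """(class, metric, gap) with the largest absolute difference."""
    return max(
        ((c, METRICS[i], d) for c, row in diffs.items() for i, d in enumerate(row)),
        key=lambda item: item[2],
    )


diffs = abs_differences(HOLDOUT, KFOLD_MEAN)
print(diffs[1])            # [1.14, 1.44, 0.27, 1.06, 1.22]
print(largest_gap(diffs))  # (1, 'Sensitivity', 1.44)
```

Every per-class gap stays below 1.5 percentage points, which is one simple way to quantify how closely the single hold-out split agrees with the cross-validated estimate.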

| Removal/Modification Technique Applied to ResNet50 Architecture | Accuracy (%) |
|---|---|
| Stage 1 layer modification | 90.18 |
| Stage 2 layer modification | 91.71 |
| Stage 3 layer modification | 92.78 |
| Stage 4 layer modification | 90.74 |
| Simultaneous modifications across Stages 1 to 4 | 67.06 |
| Modification at the fully connected (FC) layer | 87.85 |
| CRP-ViT | 97.28 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Selvaraj, J.; Sadaf, K.; Aslam, S.M.; Umapathy, S. Multiclassification of Colorectal Polyps from Colonoscopy Images Using AI for Early Diagnosis. Diagnostics 2025, 15, 1285. https://doi.org/10.3390/diagnostics15101285

Chicago/Turabian Style: Selvaraj, Jothiraj, Kishwar Sadaf, Shabnam Mohamed Aslam, and Snekhalatha Umapathy. 2025. "Multiclassification of Colorectal Polyps from Colonoscopy Images Using AI for Early Diagnosis" Diagnostics 15, no. 10: 1285. https://doi.org/10.3390/diagnostics15101285
