Frontiers in Three-Dimensional Surface Imaging Systems for 3D Face Acquisition in Craniofacial Research and Practice: An Updated Literature Review

Abstract

1. Introduction

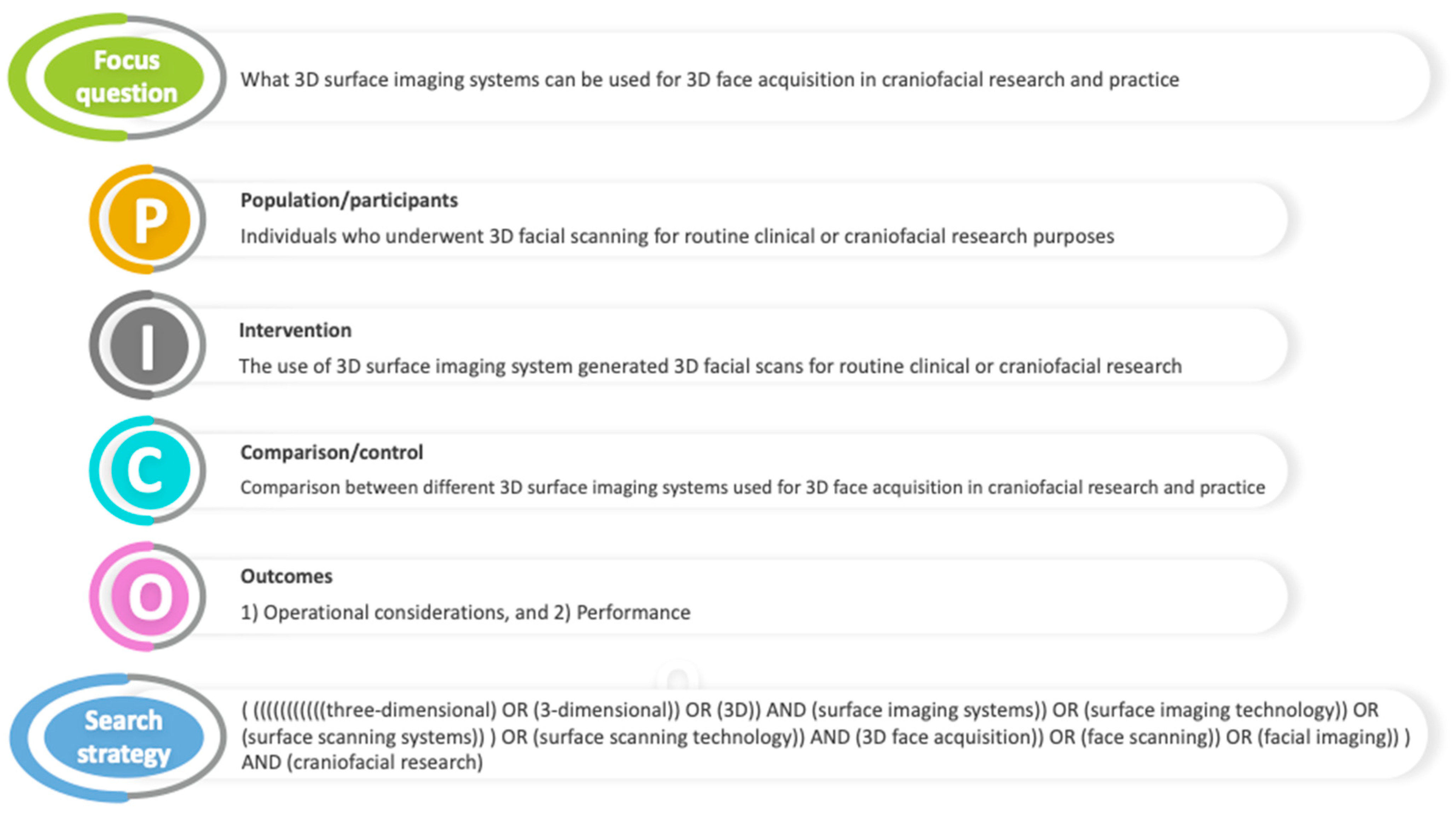

2. Materials and Methods

2.1. Eligibility Criteria

2.2. Information Sources and Literature Search

2.3. Study Selection

2.4. Data Extraction and Outcomes of Interest

1. Hardware characteristics (portability, system mobility, sensor position, cost-effectiveness);

2. Software characteristics (CT/CBCT integration, surgery simulation, real-time 3D volumetric visualization, tissue behavior simulation, progress and outcome monitoring);

3. Functionality (purpose, data delivery, capture speed, processing time, scan range, coverage, optimal 3D measurement range, color image, scan requisite, output format, scan-processing software, accuracy, precision, archivable data, user-friendliness, system requirements, calibration time).

Table 1 gives the definitions of the characteristics studied in 3D face acquisition systems.

3. Results

3.1. Findings on 3D Surface Imaging Technologies and Systems

3.1.1. Laser-Based Scanning

Minolta Vivid 910

FastSCAN II

3.1.2. Stereophotogrammetry

Vectra H1

Di3D FCS-100

3.1.3. Structured Light Scanning

Morpheus 3D

Accu3D

Axis Three XS-200

3.1.4. Cone-Beam Computed Tomography Integrated

Planmeca ProFace

3.1.5. Smartphone-Based Scanning

Bellus3D

1. Bellus3D FaceApp

2. Face Camera Pro

3.1.6. Four-Dimensional Imaging (Dynamic 3D)

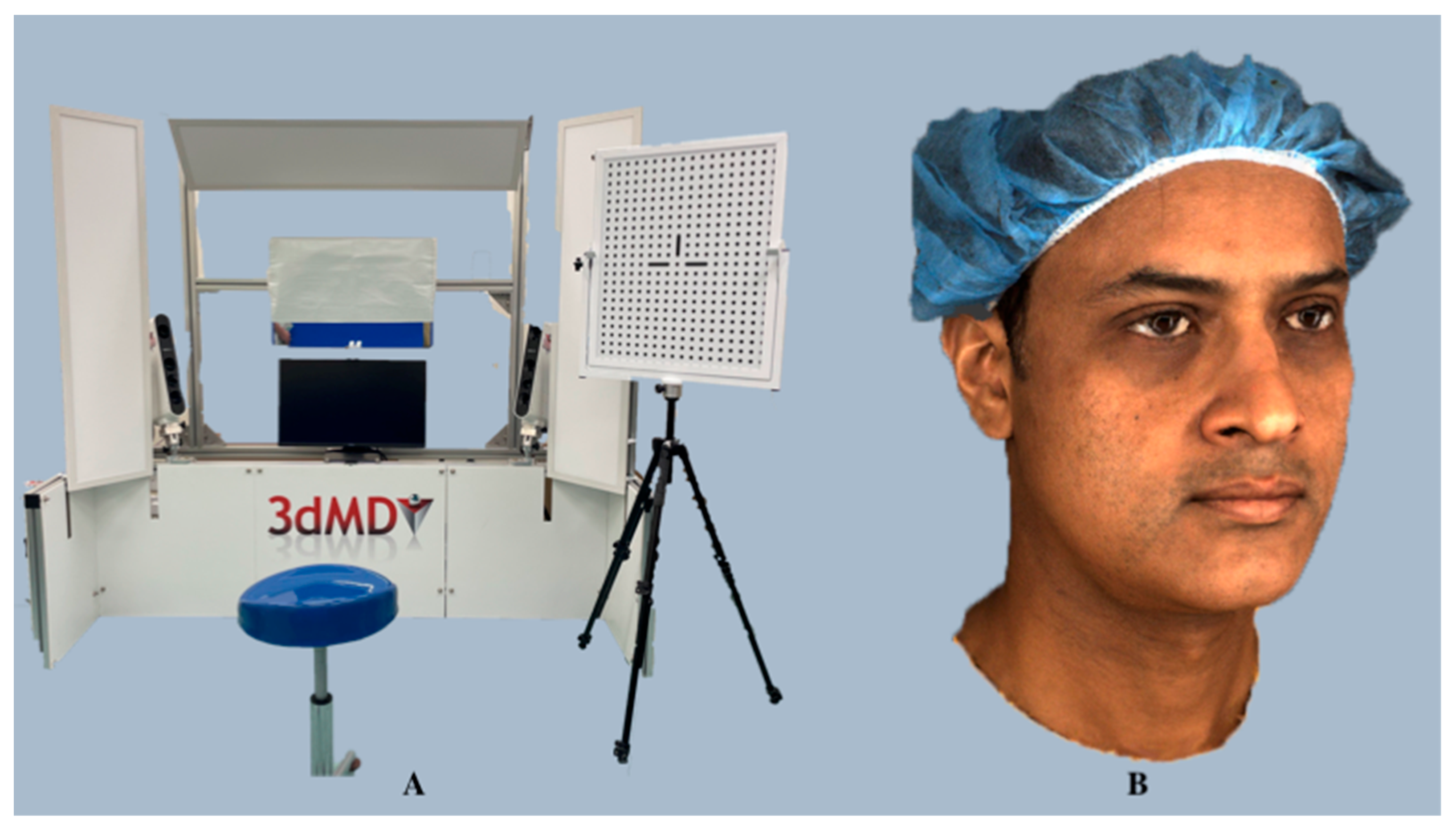

3dMD

DI4D

3.1.7. Red-Green-Blue-Depth (RGB-D)

Intel RealSense D435 Camera

Azure Kinect DK

RAYFace

4. Discussion

4.1. Operational Considerations

4.2. Performance

4.2.1. Accuracy and Calibration

4.2.2. Scanning Time and Data Delivery

4.2.3. Image Quality

4.2.4. 3D Software Solutions

5. Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Category | Characteristic | Definition |
|---|---|---|
| Hardware | Portability | Hand-held and compact, or bulky and cumbersome to relocate |
| | System mobility | System is fixed or mobile while scanning |
| | Sensor position | Sensor is static or dynamic while scanning |
| | Cost-effectiveness | Inexpensive equipment and cost-efficient operation in a clinical setting |
| Software | CT/CBCT integration | Permits integration with other imaging modalities such as CT/CBCT |
| | Surgery simulation | Allows simulation of surgical procedures through built-in or third-party software |
| | Real-time 3D volumetric visualization | Generates a photorealistic 3D virtual copy of the face in real time |
| | Tissue behavior simulation | Predicts post-treatment outcomes through built-in or third-party software |
| | Progress monitoring and outcome evaluation | Enables treatment monitoring at different time points and evaluation of outcomes |
| Functionality | Purpose | Provides quantifiable, continuous data based on facial measurements |
| | Data delivery | Delivers data while the subject is still or in motion |
| | Scanning time | Time the system requires to scan the subject |
| | Processing time | Time the software requires to edit and merge the acquired meshes and generate a 3D model |
| | Coverage | Captures only the face (excluding the ears), the face and neck, or the full face (ear to ear) and neck |
| | Scan requisite | Requires a single scan, multiple continuous scans, or multiple scans stitched together to generate a 3D image |
| | Accuracy | Data generated by the system are sufficiently close to the true values |
| | Precision | Data generated by the system are highly reliable across repeated captures |
| | Archivable data | Data can be stored in industry-standard, easily accessible formats |
| | User-friendliness | Does not require specialized training or equipment |
| | System requirements | Does not have extensive hardware or software requirements |
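The definitions table (Table 1) separates accuracy (closeness to the true values) from precision (reliability across repeated captures). A minimal sketch of how these two quantities can be summarised for a linear facial measurement, assuming five repeated scanner readings of one inter-landmark distance and a caliper value from direct anthropometry as the reference; the function names and all numbers are hypothetical and do not come from the reviewed studies. Trueness is taken here as the mean absolute deviation from the reference and precision as the sample standard deviation of the repeats, which is one common convention rather than the definition used by every validation study.

```python
import math
from statistics import mean, stdev


def trueness_mm(measured, reference):
    """Mean absolute deviation of repeated measurements from a reference value (mm).
    Lower values indicate higher accuracy (trueness)."""
    return mean(abs(m - reference) for m in measured)


def precision_mm(measured):
    """Sample standard deviation of repeated measurements (mm).
    Lower values indicate higher precision (repeatability)."""
    return stdev(measured)


def rms_error_mm(measured, reference):
    """Root-mean-square error against the reference (mm); mixes bias and scatter."""
    return math.sqrt(mean((m - reference) ** 2 for m in measured))


if __name__ == "__main__":
    # Hypothetical: five repeated scans of one inter-landmark distance (mm)
    scans = [34.1, 34.3, 33.9, 34.2, 34.0]
    caliper = 33.8  # hypothetical direct anthropometric reference (mm)
    print(f"trueness  = {trueness_mm(scans, caliper):.2f} mm")
    print(f"precision = {precision_mm(scans):.2f} mm")
    print(f"RMS error = {rms_error_mm(scans, caliper):.2f} mm")
```

Because the RMS error combines systematic bias and random scatter into one number, reporting trueness and precision separately makes it easier to tell a consistently offset system from an inconsistent one.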

| Category | Characteristic | Minolta Vivid 910 | FastSCAN II | Vectra H1 | Di3D FCS-100 | Morpheus 3D | Accu3D | Axis Three XS-200 | Planmeca ProFace | Bellus3D FaceApp | Bellus3D Face Camera Pro | 3dMD | DI4D | Intel RealSense D435 | Azure Kinect DK | RAYFace |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Technology | Laser-based scanning | Laser-based scanning | Stereophotogrammetry | Stereophotogrammetry | Structured light scanning | Structured light scanning | Structured light scanning | CBCT integrated | Smartphone-based scanning | Smartphone-based scanning | 4D imaging | 4D imaging | RGB-D | RGB-D | RGB-D |
| Hardware | Portability | Y | Y | Y | Y | Y | Y | Y | N | Y | Y | N | N | Y | Y | Y |
| | System mobility | Stationary | Mobile | Mobile | Stationary | Stationary | Mobile | Stationary | Stationary | Stationary | Stationary | Stationary | Stationary | Mobile | Mobile | Stationary |
| | Sensor position | Static | Dynamic | Dynamic | Static | Static * | Dynamic | Static | Dynamic | Static * | Static * | Static | Static | Dynamic | Dynamic | Static |
| | Cost-effectiveness | Y | Y | Y | N | - | Y | Y | N | Y | Y | N | N | Y | Y | Y |
| Software | CT/CBCT integration | - | - | Third-party software | Third-party software | - | - | N | Romexis | N | N | 3dMDvultus/third-party software | - | - | - | RAYFace solution |
| | Surgery simulation | - | - | Y | Third-party software | Y | Y | Y | Y | N | N | Y | - | N | - | - |
| | Real-time 3D volumetric visualization | Y | Y | Y | Y | Y | Y | Y | - | N | N | Y | Y | - | - | Y |
| | Tissue behavior simulation | - | - | Y | Third-party software | Y | Y | Y | - | N | N | Y | - | - | - | - |
| | Progress and outcome monitoring | - | - | Y | Y | Y | Y | Y | - | N | N | Y | - | - | - | Y |
| Functionality | Purpose | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |
| | Data delivery | Still | Still | Still | Still | Still | Still | Still | Still | Still | Still | In motion | In motion | In motion | In motion | Still |
| | Capture speed/scanning time | 0.3–2.5 s | <1 min | 2 ms | 1 ms | 0.8 s | 0.5 s | <2 s | 30 s | 10 s | 25 s | ≈1.5 ms/1–120 fps | ≈2 ms/f | 90 fps | 20.3 ms | 0.5 s |
| | Processing time | - | - | ≈20 s | 60 s | <2 min | <1 min | - | - | - | 15–30 s | <8 s | 30 s | - | - | <1 min |
| | Scan range | 1300 × 1100 mm | 50 cm | ≈100° | ≈180° | 225 × 300 mm | - | ≈180° | - | - | 66–69° | 190°–360° | ≈180° | 87° × 58° | 120° × 120° | 550 × 310 mm |
| | Coverage | Face | Full face | Full face | Full face | Face | Full face | Face + neck | Face | Full face | Full face | Full face | Full face | Face | Face | Full face |
| | Optimal 3D measurement range | 0.6–1.2 m | 2–4 in | 350–450 mm | - | 650 mm | 45–50 cm | 1 m | - | - | 30–45 cm | 1 m | - | 0.3–3 m | 0.25–2.21 m | - |
| | Color image | Y | N | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y | Y |
| | Scan requisite | Multiple | Multiple | Multiple | Single | Multiple | Multiple | Single | Single | Single | Single | Single | Single | Multiple | Multiple | Single |
| | Output format | STL, DXF, OBJ, ASCII points, VRML | Several format options | - | Several format options | - | Several format options | - | STL | OBJ, STL | OBJ, MTL, JPEG, STL, YML | Several format options | Several format options | - | - | STL, OBJ |
| | Scan-processing software | Y | Y | Y | Di3Dview | MAS | Accu3DX Pro | Y | Romexis | Y | Y | 3dMDvultus | DI4Dlive | Intel RealSense SDK 2.0 | Y | RAYFace solution |
| | Accuracy | Y | Y | Y | Y | Y | - | - | N | Y | Y | Y | Y | - | Y | - |
| | Precision | Y | Y | Y | Y | Y | - | - | N | Y | Y | Y | - | - | Y | - |
| | Archivable data | Y | Y | Y | Y | - | Y | - | Y | Y | Y | Y | Y | - | - | |
| | User-friendliness | Y | Y | Y | Y | Y | Y | N | - | Y | Y | Y | Y | Y | Y | Y |
| | System requirements | Minimal | Minimal | Minimal | Minimal | Minimal | Minimal | Minimal | Minimal | Minimal | Minimal | Extensive | Minimal | Minimal | Minimal | Minimal |
| | Calibration time | NR | - | NR | 5 min | - | - | <5 min | - | NR | NR | 20–100 s | 5 min | - | - | - |

| 3D Face Acquisition System | Disadvantages and Limitations |
|---|---|
| Minolta Vivid 910 | |
| FastSCAN II | |
| Vectra H1, Di3D FCS-100 | |
| Morpheus 3D | |
| Accu3D | |
| Axis Three XS-200 | |
| Planmeca ProFace | |
| Bellus3D FaceApp, Bellus3D Face Camera Pro | |
| 3dMD | |
| DI4D | |
| Intel RealSense D435 | |
| Azure Kinect DK | |
| RAYFace | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Singh, P.; Bornstein, M.M.; Hsung, R.T.-C.; Ajmera, D.H.; Leung, Y.Y.; Gu, M. Frontiers in Three-Dimensional Surface Imaging Systems for 3D Face Acquisition in Craniofacial Research and Practice: An Updated Literature Review. Diagnostics 2024, 14, 423. https://doi.org/10.3390/diagnostics14040423
