A Collaborative Learning Model for Skin Lesion Segmentation and Classification

Abstract

1. Introduction

- (1)

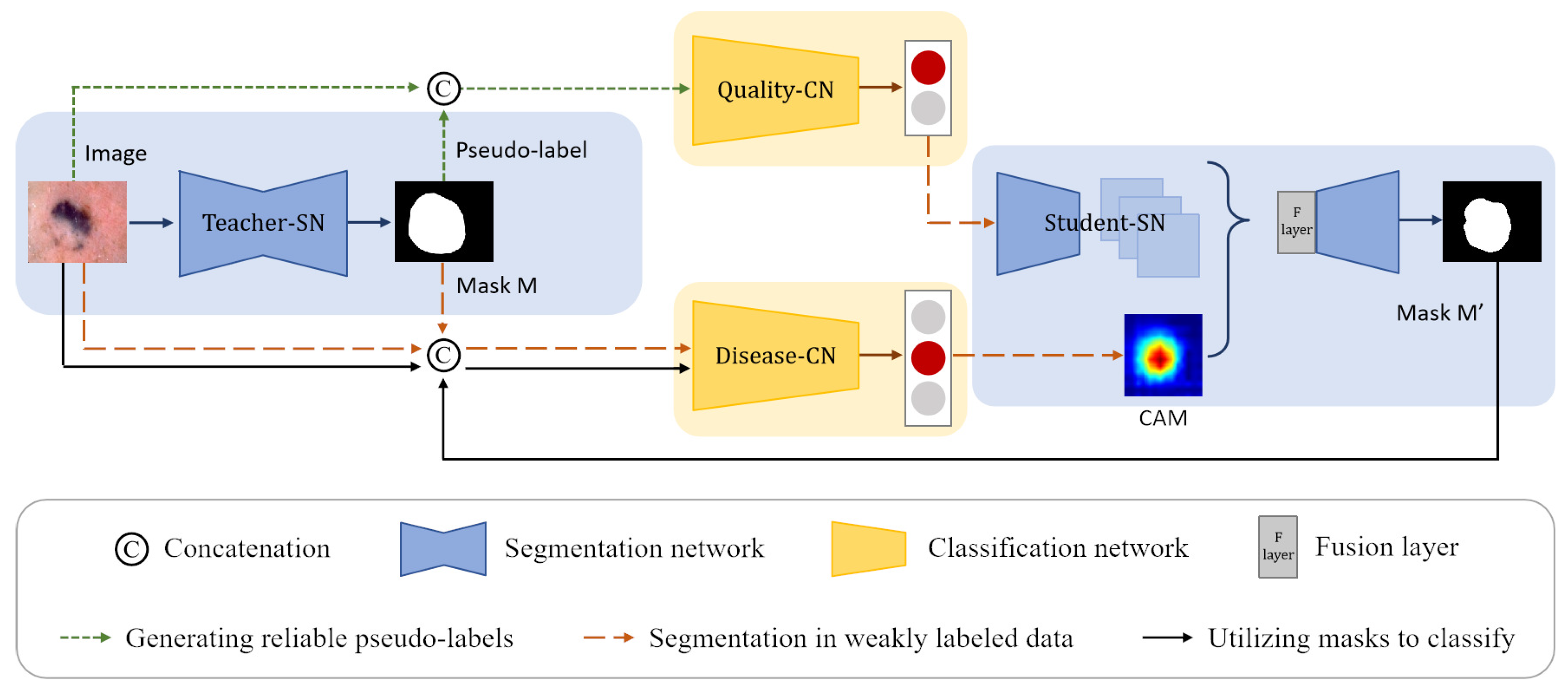

- We propose a CL-DCNN model for accurate skin lesion segmentation and classification. Different from the methods dedicated to segmentation or classification, the model tries to leverage the intrinsic correlation in segmentation and classification tasks, improving segmentation and classification performance with limited annotation data.

- (2)

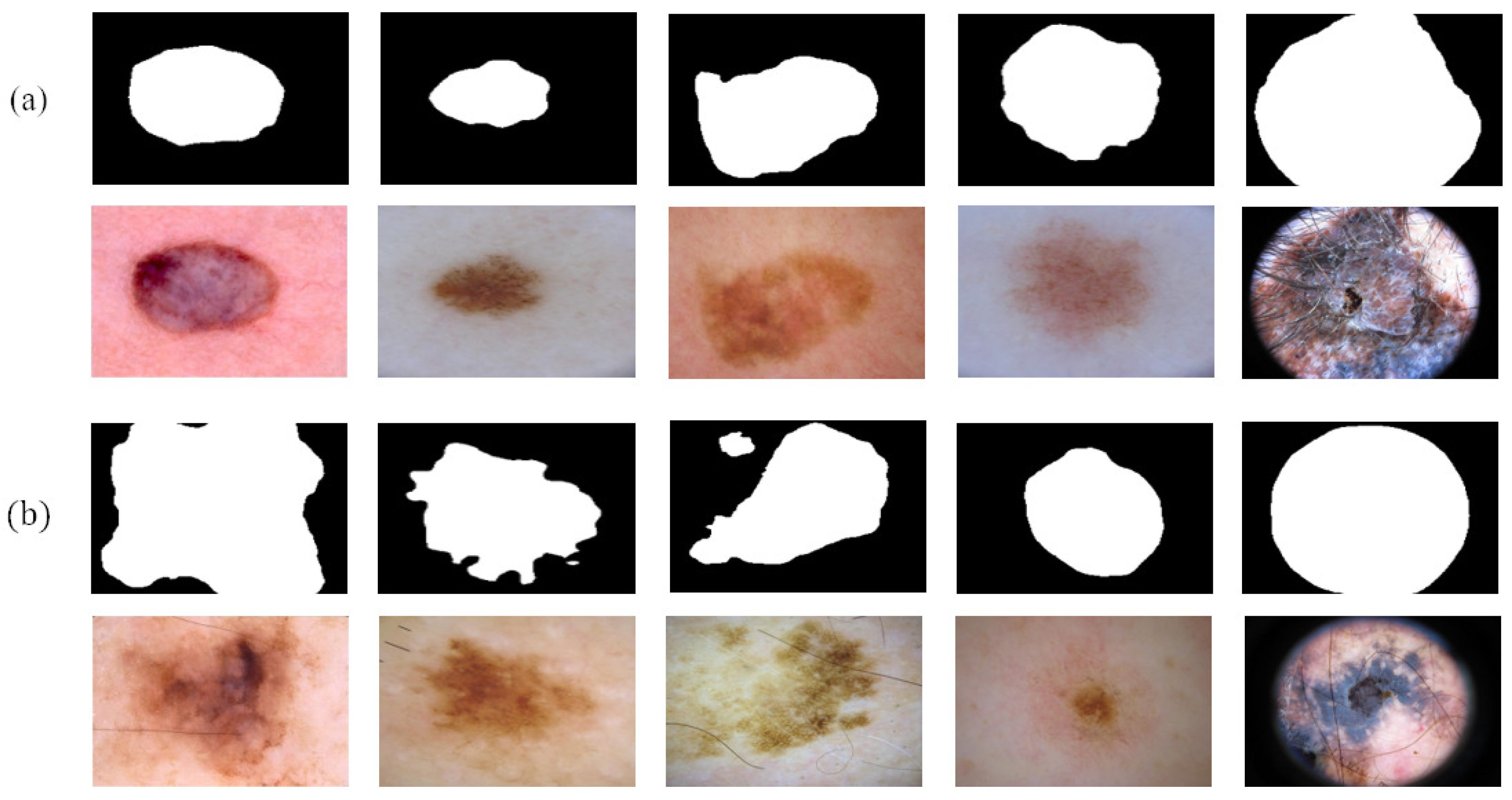

- We provide a self-training method for segmentation by generating high-quality pseudo-labels. Specifically, to alleviate the potential segmentation performance degradation incurred by incorrect pseudo-labels, we screen reliable pseudo-labels based on the similarity between pseudo-labels and ground truth for selective retraining.

- (3)

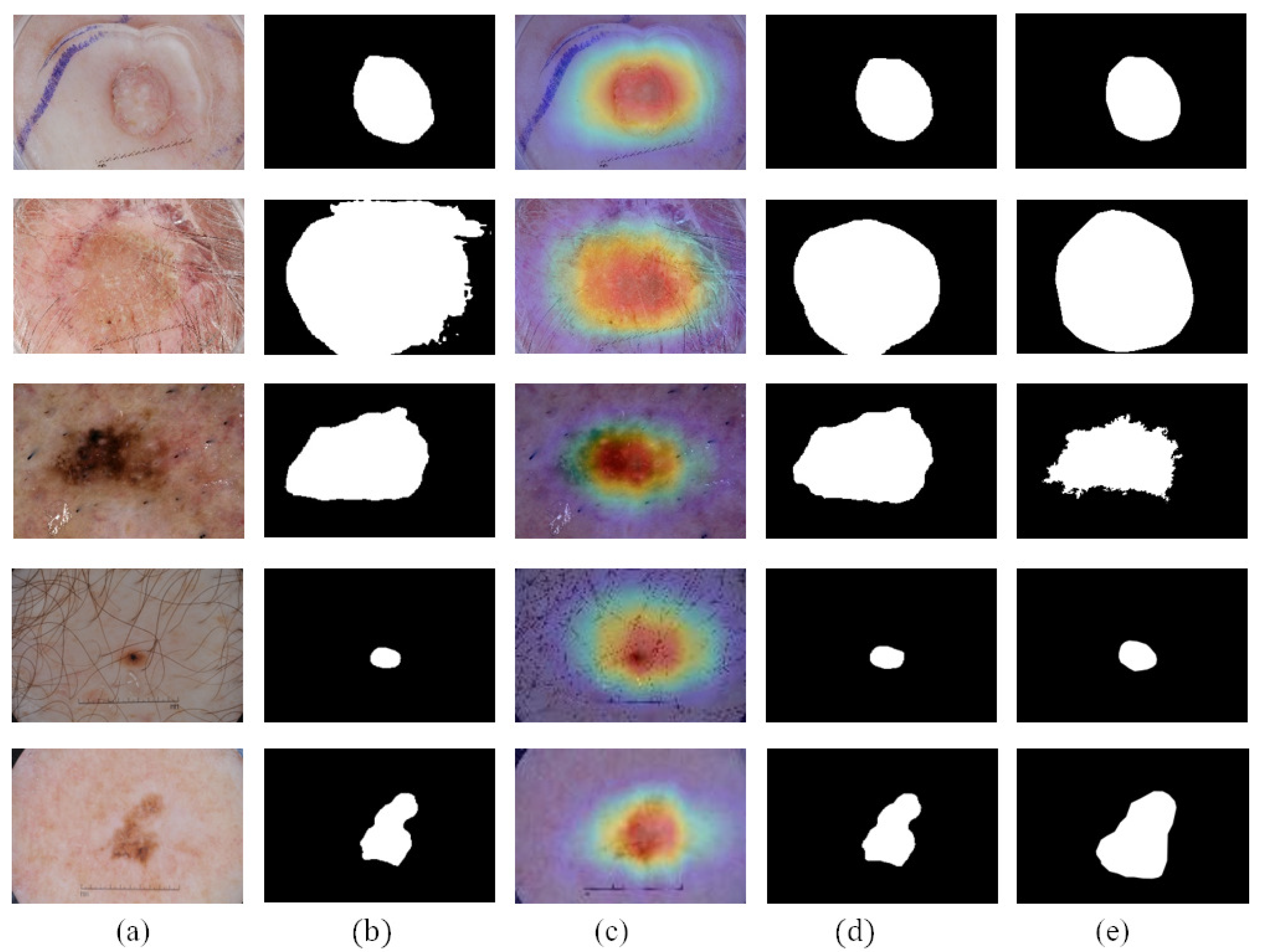

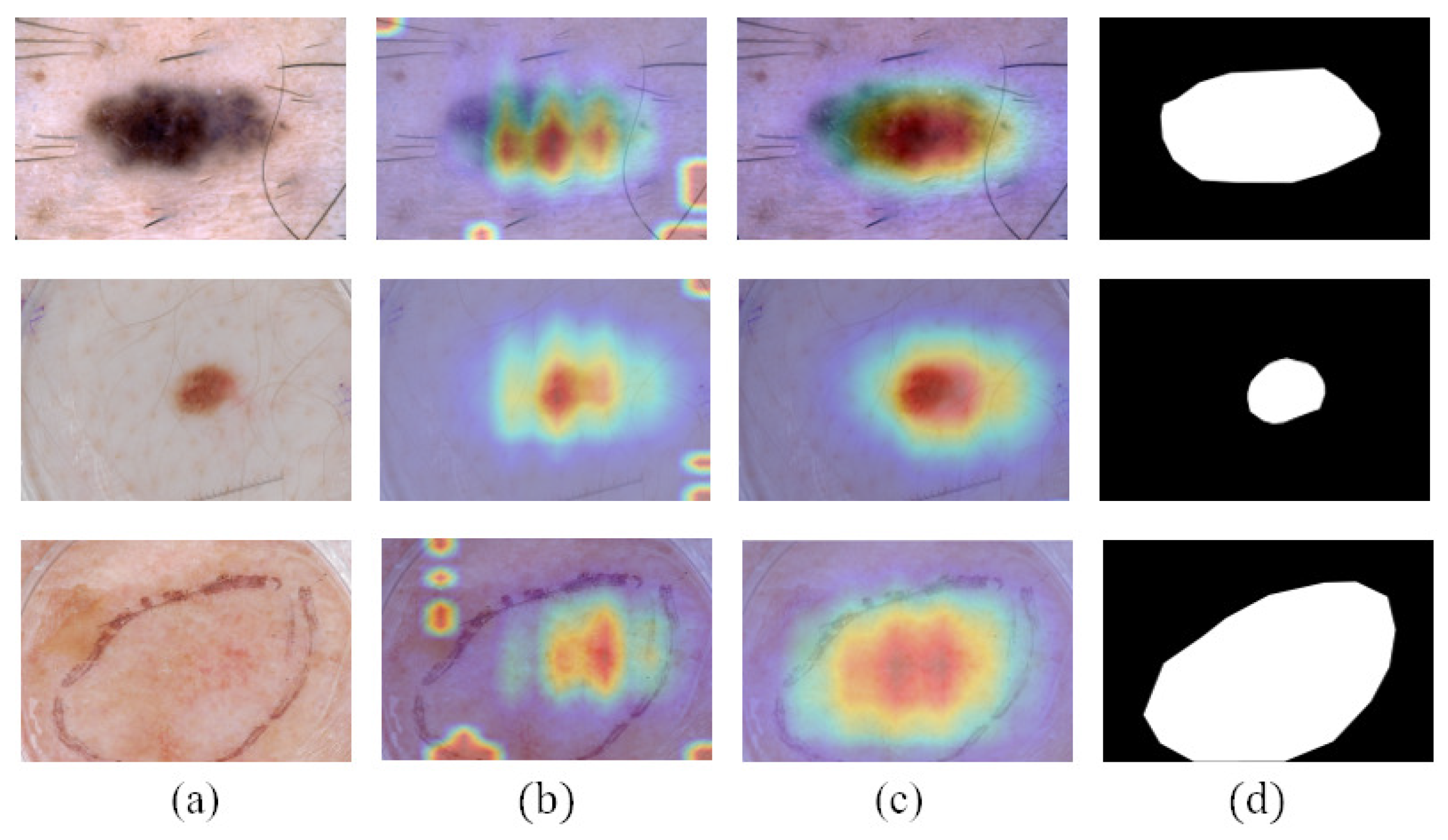

- We employ class activation maps to improve the location ability of the segmentation and apply lesion masks to improve the recognition ability of the classification.
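The third contribution relies on class activation maps. As a rough illustration only, the sketch below computes a standard CAM in the sense of Zhou et al. [7], assuming a classifier whose last convolutional layer is followed by global average pooling and a single linear layer, so that the CAM for class c is the channel-weighted sum of the feature maps, CAM_c(x, y) = Σ_k w_k^c f_k(x, y). The paper's own use of CAMs (Section 3.3) and of lesion masks (Section 3.4) may differ: `apply_lesion_mask` is a hypothetical placeholder that simply zeroes pixels outside the mask, and all function names and array layouts are assumptions.

```python
import numpy as np


def class_activation_map(features: np.ndarray, fc_weights: np.ndarray, class_idx: int) -> np.ndarray:
    """Standard CAM (Zhou et al. [7]) computed from last-layer feature maps.

    features:   (K, H, W) feature maps of the last convolutional layer
    fc_weights: (C, K) weights of the linear layer that follows global average pooling
    """
    # Weighted sum over channels: CAM_c(x, y) = sum_k w_k^c * f_k(x, y)
    cam = np.tensordot(fc_weights[class_idx], features, axes=([0], [0]))
    cam = np.maximum(cam, 0.0)  # keep only positive evidence for the class
    peak = cam.max()
    return cam / peak if peak > 0 else cam  # rescale to [0, 1]


def apply_lesion_mask(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL mask usage: zero out pixels outside the predicted lesion.

    image: (H, W, 3) array; mask: (H, W) binary array with lesion pixels = 1.
    """
    return image * mask[..., None]
```

In practice the (H, W) map is upsampled to the input resolution before it is compared with, or fed into, a segmentation network.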

2. Related Work

2.1. Segmentation and Classification of Skin Lesion

2.2. Segmentation and Classification Collaborative Learning

2.3. Self-Training for Segmentation

3. Method

3.1. Problem Definition

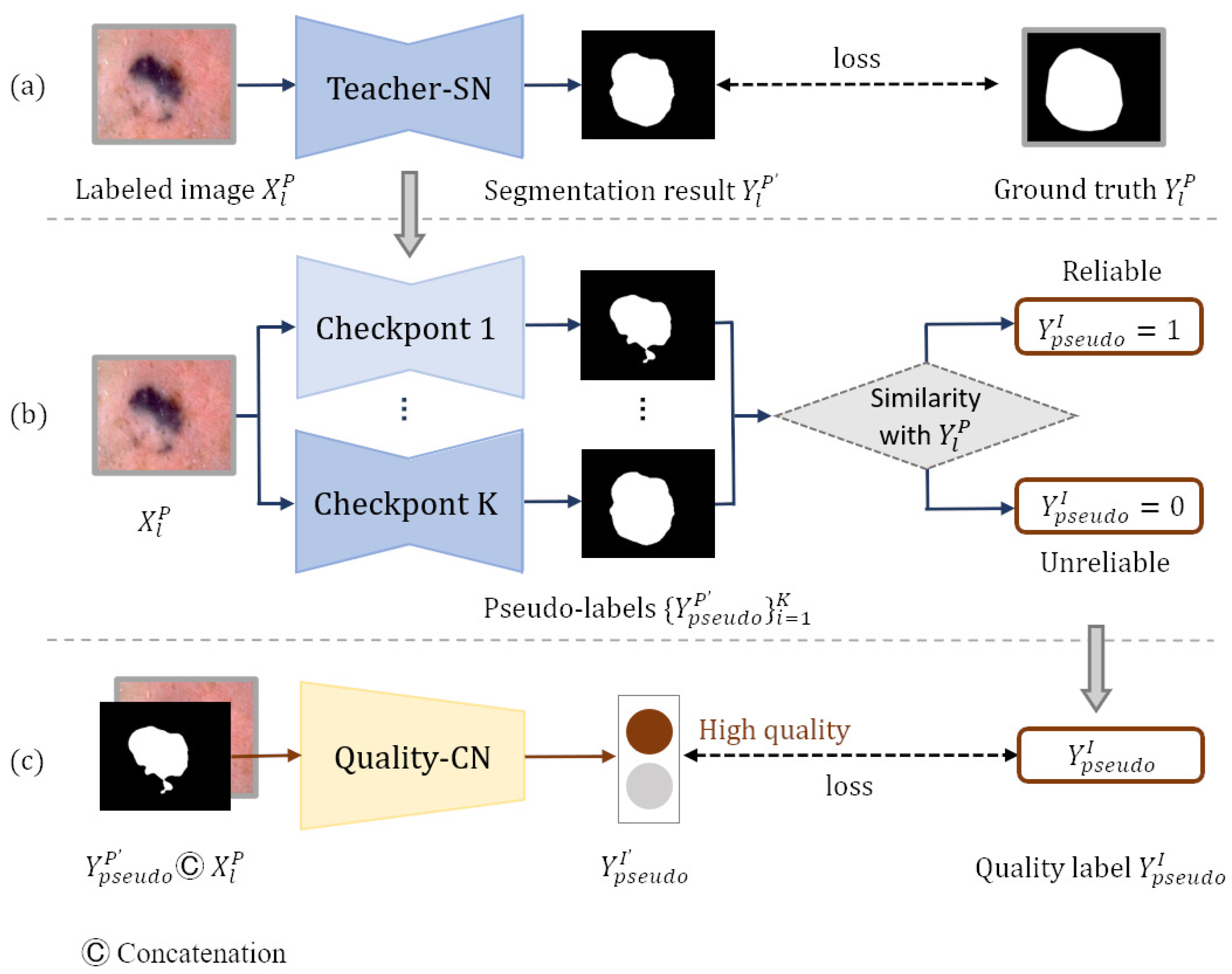

3.2. Generating Reliable Pseudo-Labels

3.2.1. Generating Pseudo-Labels

3.2.2. Screening Reliable Pseudo-Labels

3.2.3. Obtaining Reliable Pseudo-Labels

3.3. Segmentation in Weakly Labeled Data

3.3.1. Generating CAMs

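| Algorithm 1: Generate reliable pseudo-labels |
|---|
| Input: pixel-level labeled dataset, unlabeled dataset, teacher-SN T, quality-CN Q |
| Output: reliable pseudo-labels and the corresponding images |
| // Train T to generate pseudo-labels |
| Train T on the pixel-level labeled dataset and save k checkpoints |
| // Train Q to screen reliable pseudo-labels |
| for each saved checkpoint of T do |
| for each image in the pixel-level labeled dataset do |
| Generate a pseudo-label for the image |
| Compute the Jaccard score s between the pseudo-label and the ground truth with Equation (1) |
| Set the quality category of the pseudo-label according to s by Equation (2) |
| Denote the resulting pairs as the pseudo-label quality-level training set |
| Train Q on the quality-level training set |
| // Obtain reliable pseudo-labels from the unlabeled dataset |
| for each image in the unlabeled dataset do |
| Generate a pseudo-label for the image |
| If Q judges the pseudo-label to be reliable, keep the pseudo-label and its image |
| Return the reliable pseudo-labels and the corresponding images |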
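The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the saved teacher-SN checkpoints and the quality-CN Q are stand-in callables, Equation (1) is taken to be the standard Jaccard index, and because the thresholds of Equation (2) are not reproduced in this section, `QUALITY_THRESHOLDS` holds hypothetical values. Q is assumed to take an image and its pseudo-label and return a discrete quality level; training Q on the returned samples is omitted.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np


def jaccard(pred: np.ndarray, gt: np.ndarray) -> float:
    """Jaccard index |P ∩ G| / |P ∪ G| between two binary masks."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(np.logical_and(pred, gt).sum() / union)


# HYPOTHETICAL thresholds standing in for Equation (2).
QUALITY_THRESHOLDS: Tuple[float, ...] = (0.7, 0.85)


def quality_category(score: float, thresholds: Sequence[float] = QUALITY_THRESHOLDS) -> int:
    """Map a Jaccard score to a quality level: 0 below the first threshold, and so on."""
    return int(np.searchsorted(np.asarray(thresholds), score, side="right"))


Segmenter = Callable[[np.ndarray], np.ndarray]


def build_quality_training_set(checkpoints: Sequence[Segmenter], images, labels) -> List[tuple]:
    """Label every (image, pseudo-label) pair produced by each saved teacher checkpoint."""
    samples = []
    for predict in checkpoints:  # one entry per saved checkpoint of teacher-SN T
        for image, gt in zip(images, labels):
            pseudo = predict(image)
            samples.append((image, pseudo, quality_category(jaccard(pseudo, gt))))
    return samples


def screen_pseudo_labels(teacher: Segmenter, quality_net, unlabeled, reliable_level: int):
    """Keep unlabeled images whose pseudo-label Q rates at or above `reliable_level`."""
    kept_labels, kept_images = [], []
    for image in unlabeled:
        pseudo = teacher(image)
        if quality_net(image, pseudo) >= reliable_level:
            kept_labels.append(pseudo)
            kept_images.append(image)
    return kept_labels, kept_images
```

Binning the Jaccard score with `np.searchsorted` keeps Equation (2) as a plain list of thresholds, so changing the number of quality levels only requires editing the tuple.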

3.3.2. Refine Segmentation

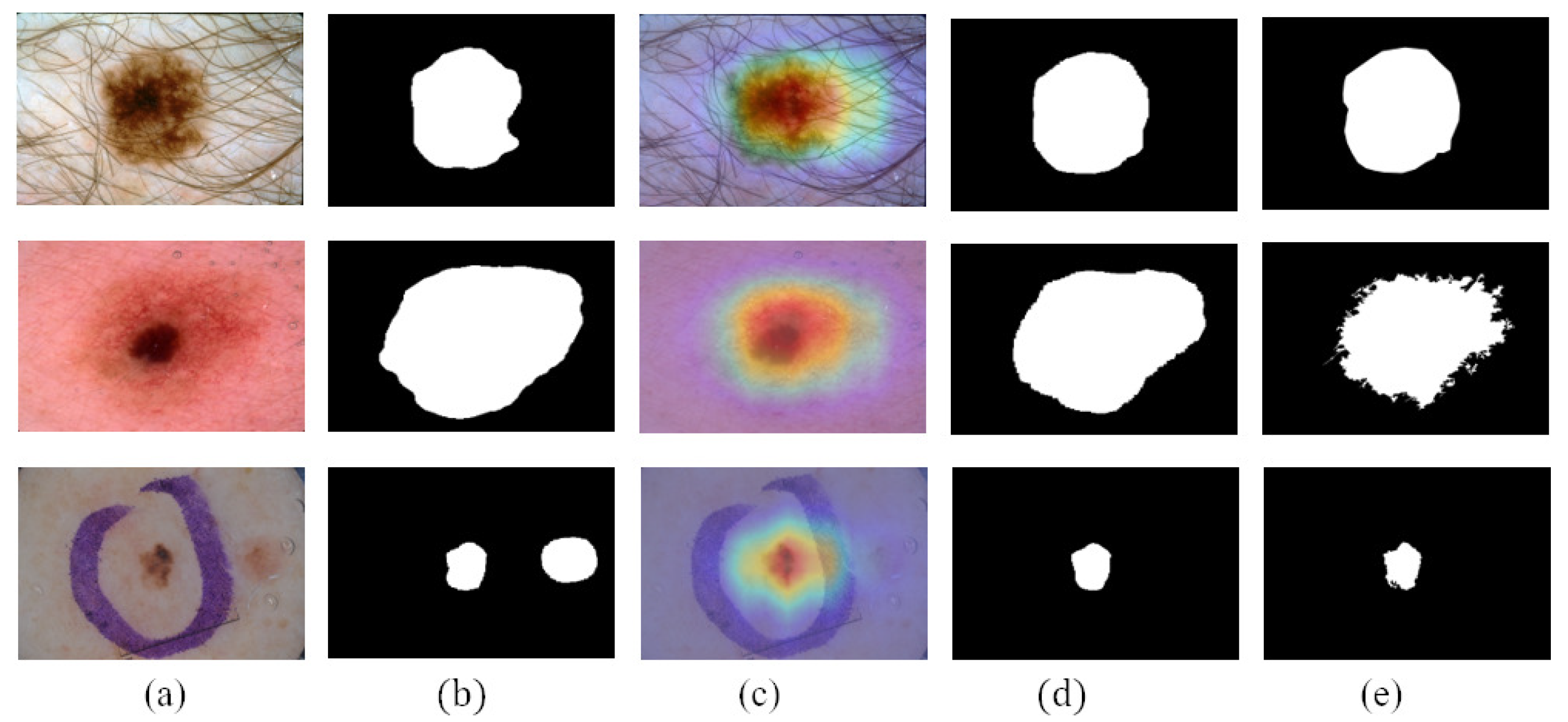

3.4. Utilizing Masks to Classify

4. Experiments

4.1. Dataset

- (1) ISIC 2017: ISIC 2017 is a skin lesion segmentation and classification dataset provided by the International Skin Imaging Collaboration (ISIC). It includes 2000 dermoscopic images for training, 150 for validation, and 600 for testing. Each dermoscopic image has a corresponding pixel-level expert annotation for segmentation and a gold-standard diagnosis (melanoma, nevus, or seborrheic keratosis) for classification. We use the pixel-level labeled data of ISIC 2017 to train the teacher-SN and student-SN to segment skin lesions, and the image-level labeled data to train the disease-CN to diagnose the type of skin disease.

- (2) ISIC Archive: ISIC Archive is a skin lesion classification dataset that contains 1320 dermoscopic images with image-level annotations: 466 cases diagnosed as melanoma, 32 as seborrheic keratosis, and 822 as nevus. We use ISIC Archive both to expand the training data of the disease-CN and as unlabeled data for generating pseudo-labels. Details of the datasets are given in Table 2.

4.2. Evaluation Metrics

- (1) Segmentation evaluation metrics: segmentation performance is evaluated with five metrics, namely the Jaccard index (JA), Dice coefficient (DI), pixel-wise accuracy (pixel-AC), pixel-wise sensitivity (pixel-SE), and pixel-wise specificity (pixel-SP).

- (2) Classification evaluation metrics: classification performance is evaluated with four metrics, namely the area under the receiver operating characteristic curve (AUC), accuracy (AC), sensitivity (SE), and specificity (SP).
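The metrics listed above follow the usual confusion-matrix definitions: JA = TP/(TP + FP + FN), DI = 2TP/(2TP + FP + FN), AC = (TP + TN)/(TP + TN + FP + FN), SE = TP/(TP + FN), and SP = TN/(TN + FP). The sketch below computes them for one binary mask pair (lesion pixels = 1) and for binary classification scores. It assumes a one-vs-rest classification setting (e.g., melanoma vs. the other classes) and a 0.5 decision threshold; both are assumptions, not details stated in this section. AUC is computed through its rank interpretation: the probability that a randomly chosen positive scores higher than a randomly chosen negative, with ties counted as one half.

```python
import numpy as np


def segmentation_metrics(pred: np.ndarray, gt: np.ndarray) -> dict:
    """Pixel-wise JA, DI, AC, SE, SP for one binary mask pair (lesion = 1).

    Assumes `gt` contains both lesion and background pixels; otherwise some
    ratios are undefined and Python raises ZeroDivisionError.
    """
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp = int(np.sum(pred & gt))
    tn = int(np.sum(~pred & ~gt))
    fp = int(np.sum(pred & ~gt))
    fn = int(np.sum(~pred & gt))
    return {
        "JA": tp / (tp + fp + fn),
        "DI": 2 * tp / (2 * tp + fp + fn),
        "pixel-AC": (tp + tn) / (tp + tn + fp + fn),
        "pixel-SE": tp / (tp + fn),
        "pixel-SP": tn / (tn + fp),
    }


def classification_metrics(scores, labels, threshold: float = 0.5) -> dict:
    """AC, SE, SP at a fixed threshold plus rank-based AUC for a binary task."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pred = scores >= threshold
    tp = int(np.sum(pred & labels))
    tn = int(np.sum(~pred & ~labels))
    fp = int(np.sum(pred & ~labels))
    fn = int(np.sum(~pred & labels))
    # AUC = P(score of a random positive > score of a random negative), ties count 1/2
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]
    auc = (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / diff.size
    return {
        "AC": (tp + tn) / len(labels),
        "SE": tp / (tp + fn),
        "SP": tn / (tn + fp),
        "AUC": float(auc),
    }
```

For a single image, the two overlap metrics are tied by DI = 2·JA/(1 + JA), so they always rank methods consistently per image; the identity need not hold exactly for scores averaged over a test set.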

4.3. Experimental Details

4.4. Experimental Results

4.4.1. Segmentation Results

4.4.2. Classification Results

4.4.3. Advantages of CAMs and Pseudo-Labels

4.4.4. Advantages of Masks

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Siegel, R.L.; Miller, K.D.; Fuchs, H.E.; Jemal, A. Cancer statistics, 2021. CA Cancer J. Clin. 2021, 71, 7–33.

- [2] Xie, Y.; Zhang, J.; Lu, H.; Shen, C.; Xia, Y. SESV: Accurate medical image segmentation by predicting and correcting errors. IEEE Trans. Med. Imaging 2020, 40, 286–296.

- [3] Luo, X.; Chen, J.; Song, T.; Wang, G. Semi-supervised medical image segmentation through dual-task consistency. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 8801–8809.

- [4] Scudder, H. Probability of error of some adaptive pattern-recognition machines. IEEE Trans. Inf. Theory 1965, 11, 363–371.

- [5] Yang, L.; Zhuo, W.; Qi, L.; Shi, Y.; Gao, Y. ST++: Make self-training work better for semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 4268–4277.

- [6] Zhou, Z.H. A brief introduction to weakly supervised learning. Natl. Sci. Rev. 2018, 5, 44–53.

- [7] Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- [8] Yu, L.; Chen, H.; Dou, Q.; Qin, J.; Heng, P.A. Automated melanoma recognition in dermoscopy images via very deep residual networks. IEEE Trans. Med. Imaging 2016, 36, 994–1004.

- [9] Shen, T.; Gou, C.; Wang, J.; Wang, F.Y. Simultaneous segmentation and classification of mass region from mammograms using a mixed-supervision guided deep model. IEEE Signal Process. Lett. 2019, 27, 196–200.

- [10] Xie, H.; He, Y.; Xu, D.; Kuo, J.Y.; Lei, H.; Lei, B. Joint segmentation and classification task via adversarial network: Application to HEp-2 cell images. Appl. Soft Comput. 2022, 114, 108156.

- [11] Mahbod, A.; Tschandl, P.; Langs, G.; Ecker, R.; Ellinger, I. The effects of skin lesion segmentation on the performance of dermatoscopic image classification. Comput. Methods Programs Biomed. 2020, 197, 105725.

- [12] Zhou, Y.; He, X.; Huang, L.; Liu, L.; Zhu, F.; Cui, S.; Shao, L. Collaborative learning of semi-supervised segmentation and classification for medical images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2079–2088.

- [13] Lei, B.; Xia, Z.; Jiang, F.; Jiang, X.; Ge, Z.; Xu, Y.; Qin, J.; Chen, S.; Wang, T.; Wang, S. Skin lesion segmentation via generative adversarial networks with dual discriminators. Med. Image Anal. 2020, 64, 101716.

- [14] Wang, X.; Jiang, X.; Ding, H.; Zhao, Y.; Liu, J. Knowledge-aware deep framework for collaborative skin lesion segmentation and melanoma recognition. Pattern Recognit. 2021, 120, 108075.

- [15] Wang, J.; Wei, L.; Wang, L.; Zhou, Q.; Zhu, L.; Qin, J. Boundary-aware transformers for skin lesion segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer: Cham, Switzerland, 2021; pp. 206–216.

- [16] Bi, L.; Fulham, M.; Kim, J. Hyper-fusion network for semi-automatic segmentation of skin lesions. Med. Image Anal. 2022, 76, 102334.

- [17] Mirikharaji, Z.; Hamarneh, G. Star shape prior in fully convolutional networks for skin lesion segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018; Springer: Cham, Switzerland, 2018; pp. 737–745.

- [18] Wang, X.; Jiang, X.; Ding, H.; Liu, J. Bi-directional dermoscopic feature learning and multi-scale consistent decision fusion for skin lesion segmentation. IEEE Trans. Image Process. 2019, 29, 3039–3051.

- [19] Zhang, J.; Xie, Y.; Xia, Y.; Shen, C. Attention residual learning for skin lesion classification. IEEE Trans. Med. Imaging 2019, 38, 2092–2103.

- [20] Li, X.; Yu, L.; Jin, Y.; Fu, C.W.; Xing, L.; Heng, P.A. Difficulty-aware meta-learning for rare disease diagnosis. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 4–8 October 2020; Springer: Cham, Switzerland, 2020; pp. 357–366.

- [21] Yu, Z.; Nguyen, J.; Nguyen, T.D.; Kelly, J.; Mclean, C.; Bonnington, P.; Zhang, L.; Mar, V.; Ge, Z. Early melanoma diagnosis with sequential dermoscopic images. IEEE Trans. Med. Imaging 2021, 41, 633–646.

- [22] Zhang, J.; Xie, Y.; Wu, Q.; Xia, Y. Skin lesion classification in dermoscopy images using synergic deep learning. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018; Springer: Cham, Switzerland, 2018; pp. 12–20.

- [23] Xie, Y.; Zhang, J.; Xia, Y.; Shen, C. A mutual bootstrapping model for automated skin lesion segmentation and classification. IEEE Trans. Med. Imaging 2020, 39, 2482–2493.

- [24] Zhang, B.; Xiao, J.; Wei, Y.; Sun, M.; Huang, K. Reliability does matter: An end-to-end weakly supervised semantic segmentation approach. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 22 February–1 March 2022; Volume 34, pp. 12765–12772.

- [25] Jo, S.; Yu, I.J. Puzzle-CAM: Improved localization via matching partial and full features. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 639–643.

- [26] Wei, Y.; Feng, J.; Liang, X.; Cheng, M.M.; Zhao, Y.; Yan, S. Object region mining with adversarial erasing: A simple classification to semantic segmentation approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1568–1576.

- [27] Qin, J.; Wu, J.; Xiao, X.; Li, L.; Wang, X. Activation modulation and recalibration scheme for weakly supervised semantic segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 2117–2125.

- [28] Yuan, F.; Zhang, L.; Xia, X.; Huang, Q.; Li, X. A gated recurrent network with dual classification assistance for smoke semantic segmentation. IEEE Trans. Image Process. 2021, 30, 4409–4422.

- [29] Jin, Q.; Cui, H.; Sun, C.; Meng, Z.; Su, R. Cascade knowledge diffusion network for skin lesion diagnosis and segmentation. Appl. Soft Comput. 2021, 99, 106881.

- [30] Wang, Y.; Wang, H.; Shen, Y.; Fei, J.; Li, W.; Jin, G.; Wu, L.; Zhao, R.; Le, X. Semi-supervised semantic segmentation using unreliable pseudo-labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 4248–4257.

- [31] Zheng, Z.; Yang, Y. Rectifying pseudo label learning via uncertainty estimation for domain adaptive semantic segmentation. Int. J. Comput. Vis. 2021, 129, 1106–1120.

- [32] Lee, D.-H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the Workshop on Challenges in Representation Learning, ICML 2013, Atlanta, GA, USA, 16–21 June 2013; Volume 3, p. 896.

- [33] Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- [34] Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

- [35] Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2009, 88, 303–338.

- [36] Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- [37] Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- [38] Liu, S.A.; Xie, H.; Xu, H.; Zhang, Y.; Tian, Q. Partial class activation attention for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 16836–16845.

- [39] Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- [40] Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- [41] Cao, W.; Zheng, J.; Xiang, D.; Ding, S.; Sun, H.; Yang, X.; Liu, Z.; Dai, Y. Edge and neighborhood guidance network for 2D medical image segmentation. Biomed. Signal Process. Control 2021, 69, 102856.

- [42] Liu, Q.; Wang, J.; Zuo, M.; Cao, W.; Zheng, J.; Zhao, H.; Xie, J. NCRNet: Neighborhood context refinement network for skin lesion segmentation. Comput. Biol. Med. 2022, 146, 105545.

- [43] Schlemper, J.; Oktay, O.; Schaap, M.; Heinrich, M.; Kainz, B.; Glocker, B.; Rueckert, D. Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 2019, 53, 197–207.

- [44] Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020, 121, 74–87.

- [45] Xie, Y.; Zhang, J.; Xia, Y. Semi-supervised adversarial model for benign–malignant lung nodule classification on chest CT. Med. Image Anal. 2019, 57, 237–248.

- [46] Zhang, J.; Xie, Y.; Wu, Q.; Xia, Y. Medical image classification using synergic deep learning. Med. Image Anal. 2019, 54, 10–19.

- [47] Yao, P.; Shen, S.; Xu, M.; Liu, P.; Zhang, F.; Xing, J.; Shao, P.; Kaffenberger, B.; Xu, R.X. Single model deep learning on imbalanced small datasets for skin lesion classification. IEEE Trans. Med. Imaging 2021, 41, 1242–1254.

| Terms | Definitions |
|---|---|
| Teacher-SN | Teacher-SN is a teacher segmentation network used to generate pseudo-labels and masks. |
| Disease-CN | Disease-CN is a disease classification network used to diagnose skin disease types. |
| Quality-CN | Quality-CN is a pseudo-label quality evaluation network used to screen reliable pseudo-labels. |
| Student-SN | Student-SN is a student segmentation network used for fine segmentation of skin lesion regions. |
| Segmentation training set | A segmentation training set that contains N dermoscopy images and their corresponding pixel-level labels. In each label, some pixels belong to the skin lesion area and the others to the normal skin area. |
| Classification training set | A classification training set that contains M dermoscopy images and their corresponding image-level labels. Each label represents the type of skin disease (melanoma, nevus, or seborrheic keratosis). |
| Unlabeled segmentation training set | An unlabeled segmentation training set that contains n dermoscopy images. |

| Dataset | Format | Label | Training | Validation | Testing |
|---|---|---|---|---|---|
| ISIC 2017 | PNG | Pixel-level and image-level | 2000 | 150 | 600 |
| ISIC Archive | | Image-level | 1320 | 0 | 0 |

| Method | JA (%) | DI (%) | Pixel-AC (%) | Pixel-SE (%) | Pixel-SP (%) |
|---|---|---|---|---|---|
| FCN [39] | 75.2 | 84.1 | 93.9 | 82.2 | 97.0 |
| U-Net [40] | 76.5 | 85.2 | 93.3 | 84.5 | 97.3 |
| DAGAN [13] | 77.1 | 85.9 | 93.5 | 83.5 | 97.6 |
| ENGNet [41] | 77.1 | 85.3 | 93.2 | 82.7 | 97.8 |
| NCRNet [42] | 78.6 | 86.6 | 94.0 | 86.9 | 95.9 |
| AG-Net [43] | 76.9 | 85.3 | 93.5 | 83.5 | 97.4 |
| MultiResUNet [44] | 76.8 | 85.2 | 93.6 | 83.9 | 96.8 |
| Ours | 79.1 | 86.7 | 94.1 | 86.5 | 95.9 |

| Method | AC (%) | SE (%) | SP (%) | AUC (%) |
|---|---|---|---|---|
| Xception [36] | 89.8 | 70.1 | 94.3 | 92.8 |
| ARL-CNN [19] | 86.4 | 76.3 | 88.2 | 91.7 |
| SSAC [45] | 86.2 | 73.6 | 91.0 | 91.6 |
| SDL [46] | 90.6 | - | - | 91.3 |
| MWNL-CLS [47] | 76.3 | 56.4 | 76.0 | 91.7 |
| MBDCNN [23] | 90.4 | 78.5 | 93.0 | - |
| Ours | 90.7 | 70.8 | 94.7 | 93.7 |

| CAMs | PLs | JA (%) | DI (%) | Pixel-AC (%) | Pixel-SE (%) | Pixel-SP (%) |
|---|---|---|---|---|---|---|
| | | 78.3 | 86.0 | 94.1 | 83.9 | 97.7 |
| ✓ | | 78.9 | 86.5 | 94.3 | 85.0 | 96.7 |
| ✓ | ✓ | 79.1 | 86.7 | 94.1 | 86.5 | 95.9 |

| Teacher-SN’s Mask | Student-SN’s Mask | AC (%) | SE (%) | SP (%) | AUC (%) |
|---|---|---|---|---|---|
| | | 89.8 | 70.1 | 94.3 | 92.8 |
| ✓ | | 90.0 | 65.2 | 95.5 | 93.5 |
| | ✓ | 90.7 | 70.8 | 94.7 | 93.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Su, J.; Xu, Q.; Zhong, Y. A Collaborative Learning Model for Skin Lesion Segmentation and Classification. Diagnostics 2023, 13, 912. https://doi.org/10.3390/diagnostics13050912


Wang, Ying, Jie Su, Qiuyu Xu, and Yixin Zhong. 2023. "A Collaborative Learning Model for Skin Lesion Segmentation and Classification" Diagnostics 13, no. 5: 912. https://doi.org/10.3390/diagnostics13050912
