Validation of a Deep Learning Model for Detecting Chest Pathologies from Digital Chest Radiographs

Abstract

1. Introduction

2. Materials and Methods

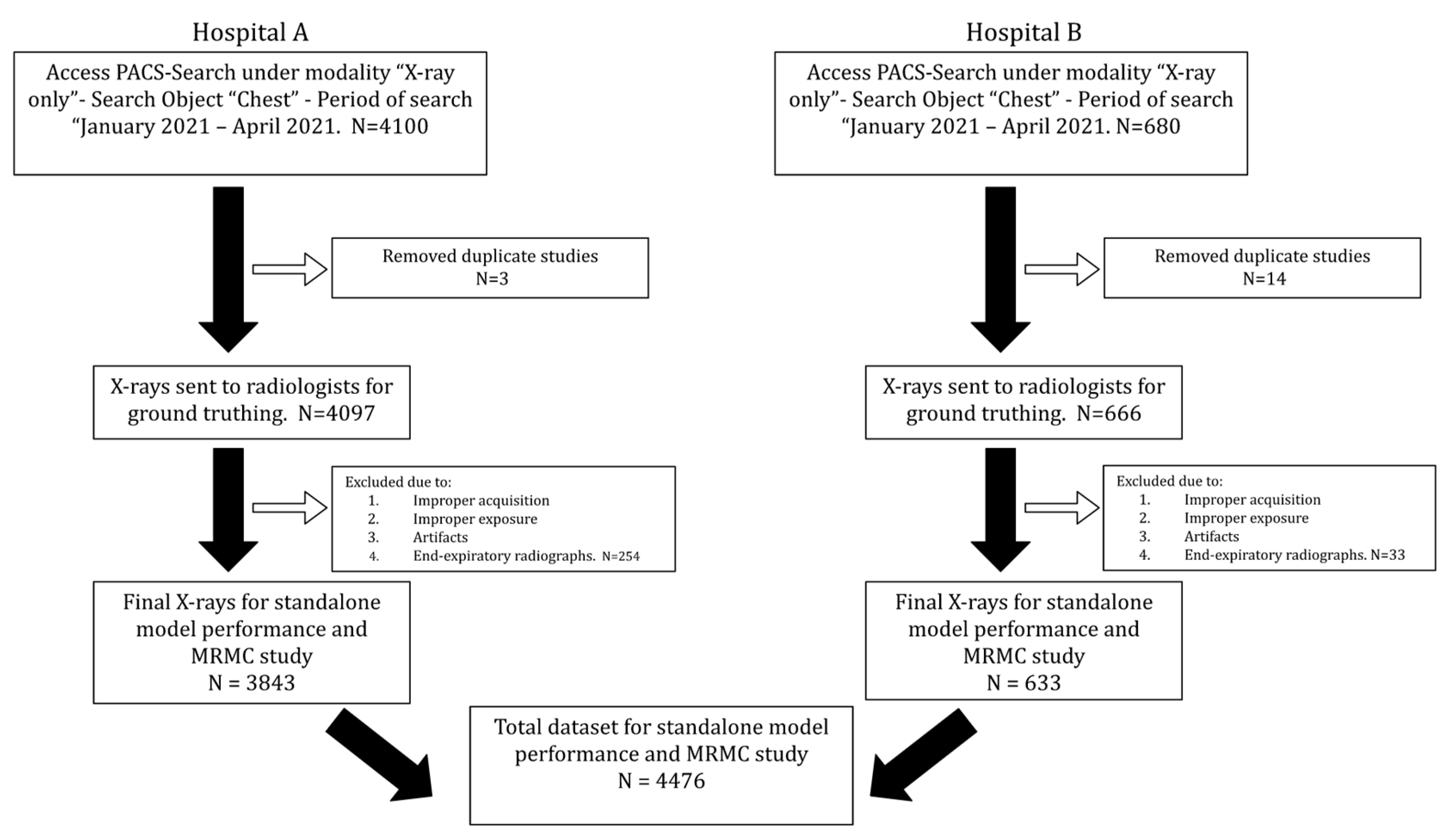

2.1. Data Collection

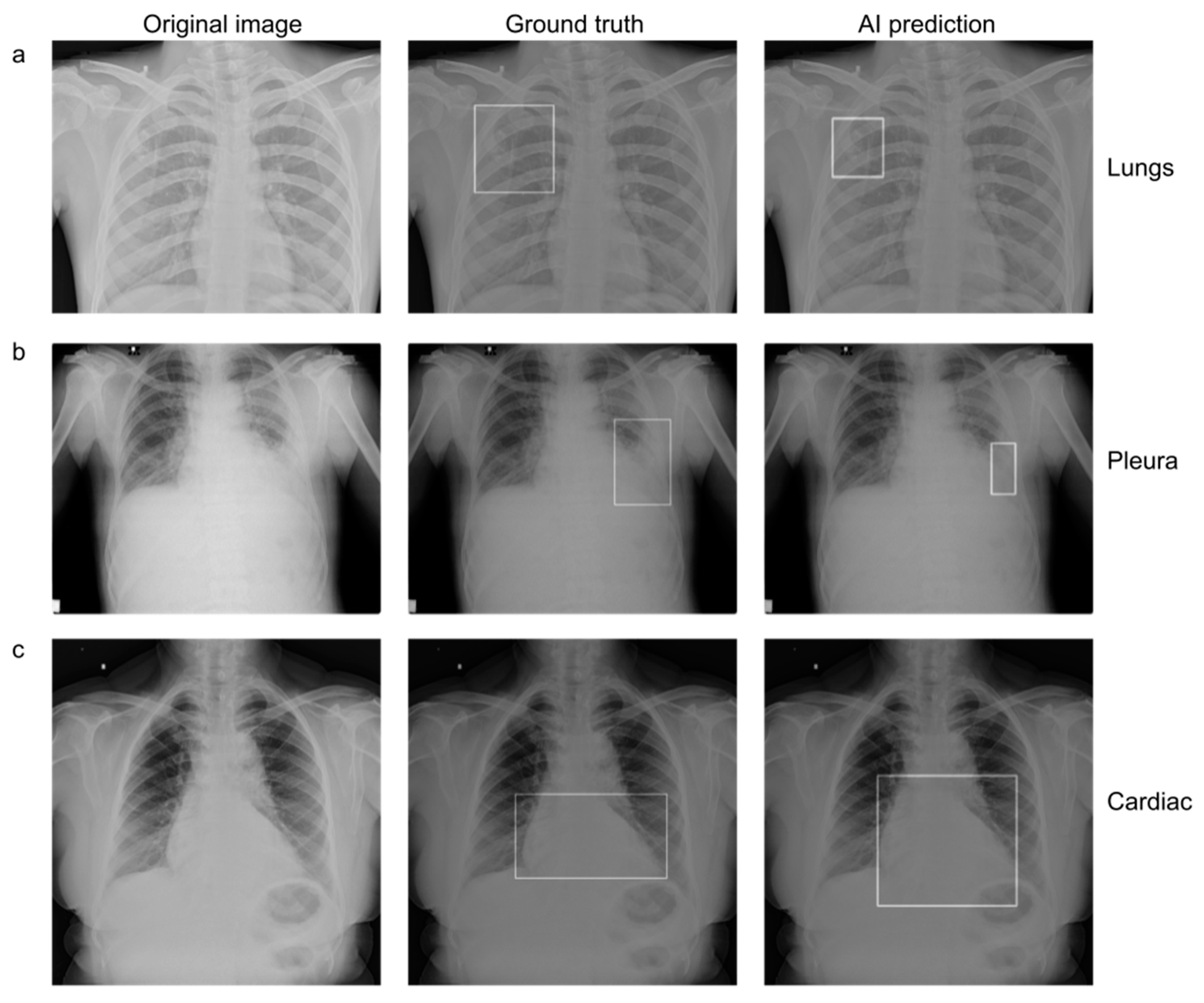

2.2. Establishing Ground Truth

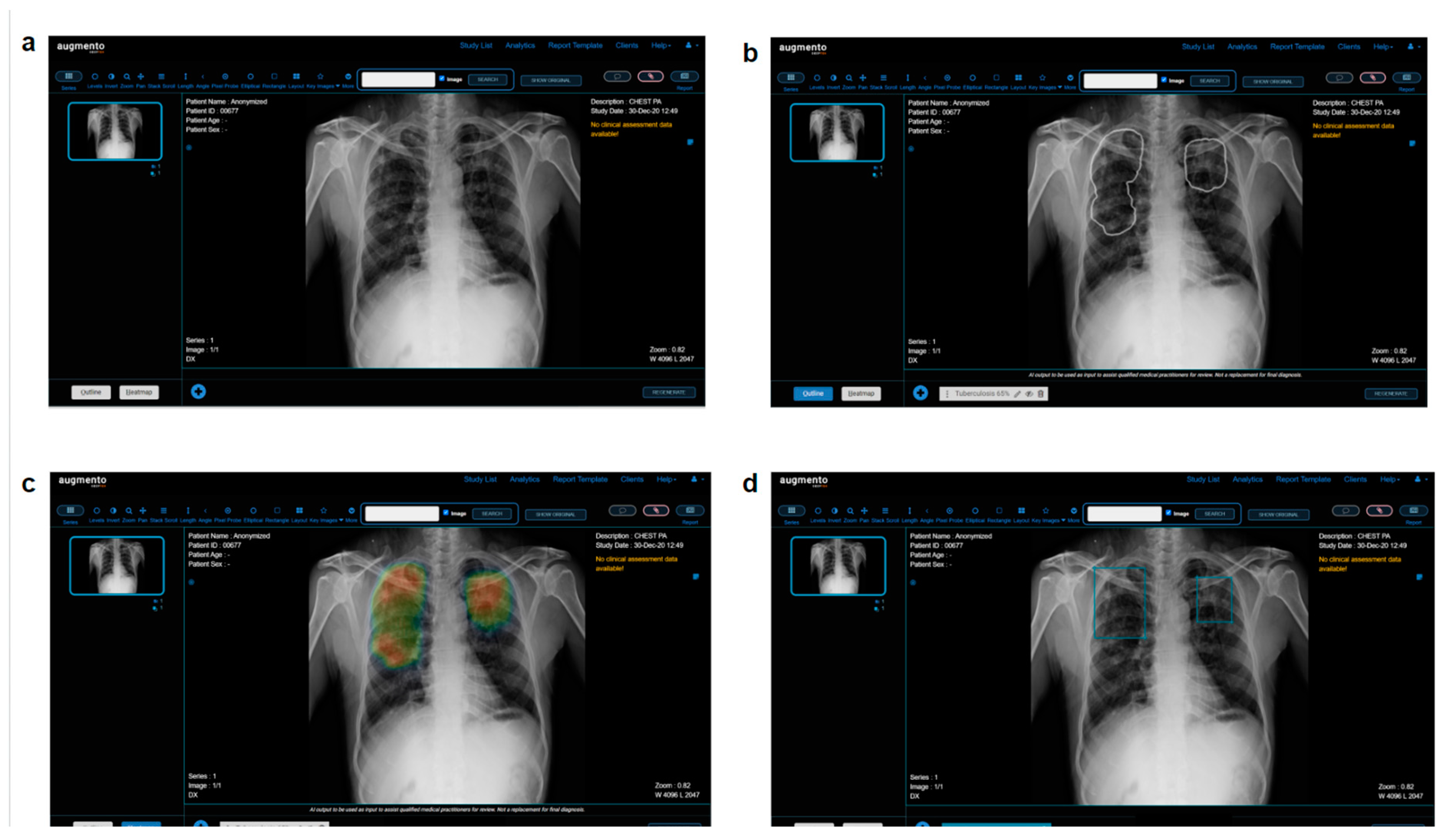

2.3. AI Model

2.4. Multi Reader Multi Case (MRMC) Study

2.5. Statistical Analysis

3. Results

3.1. Data Characteristics

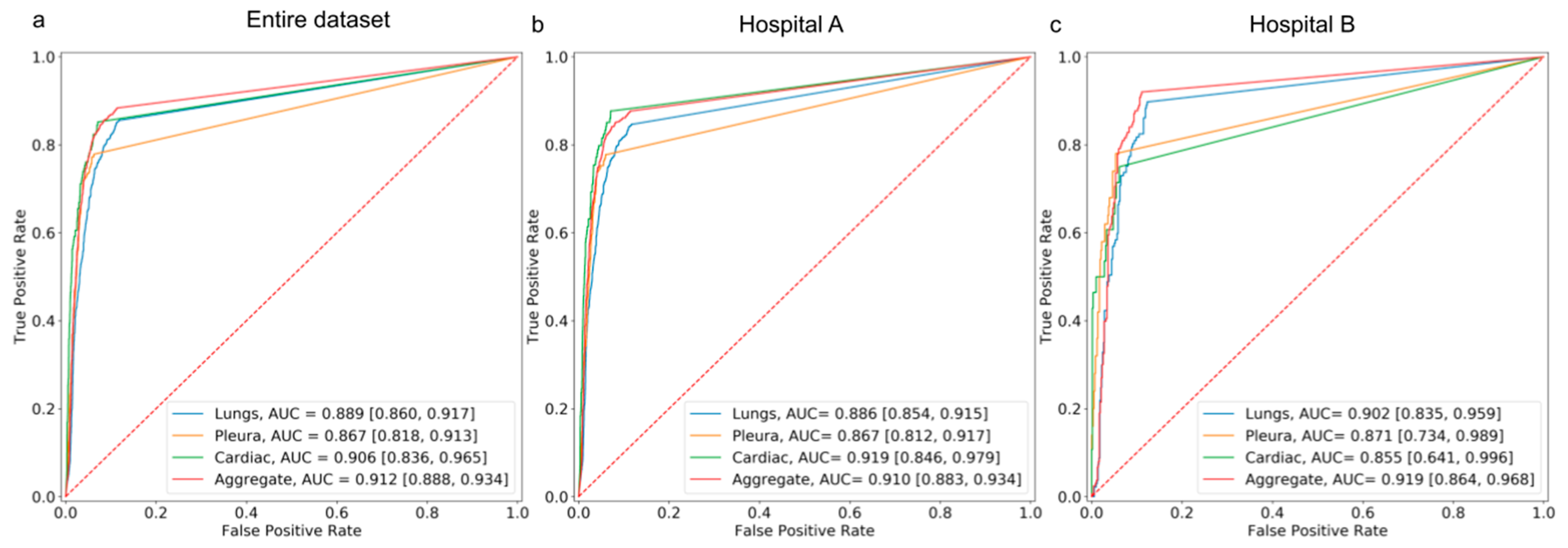

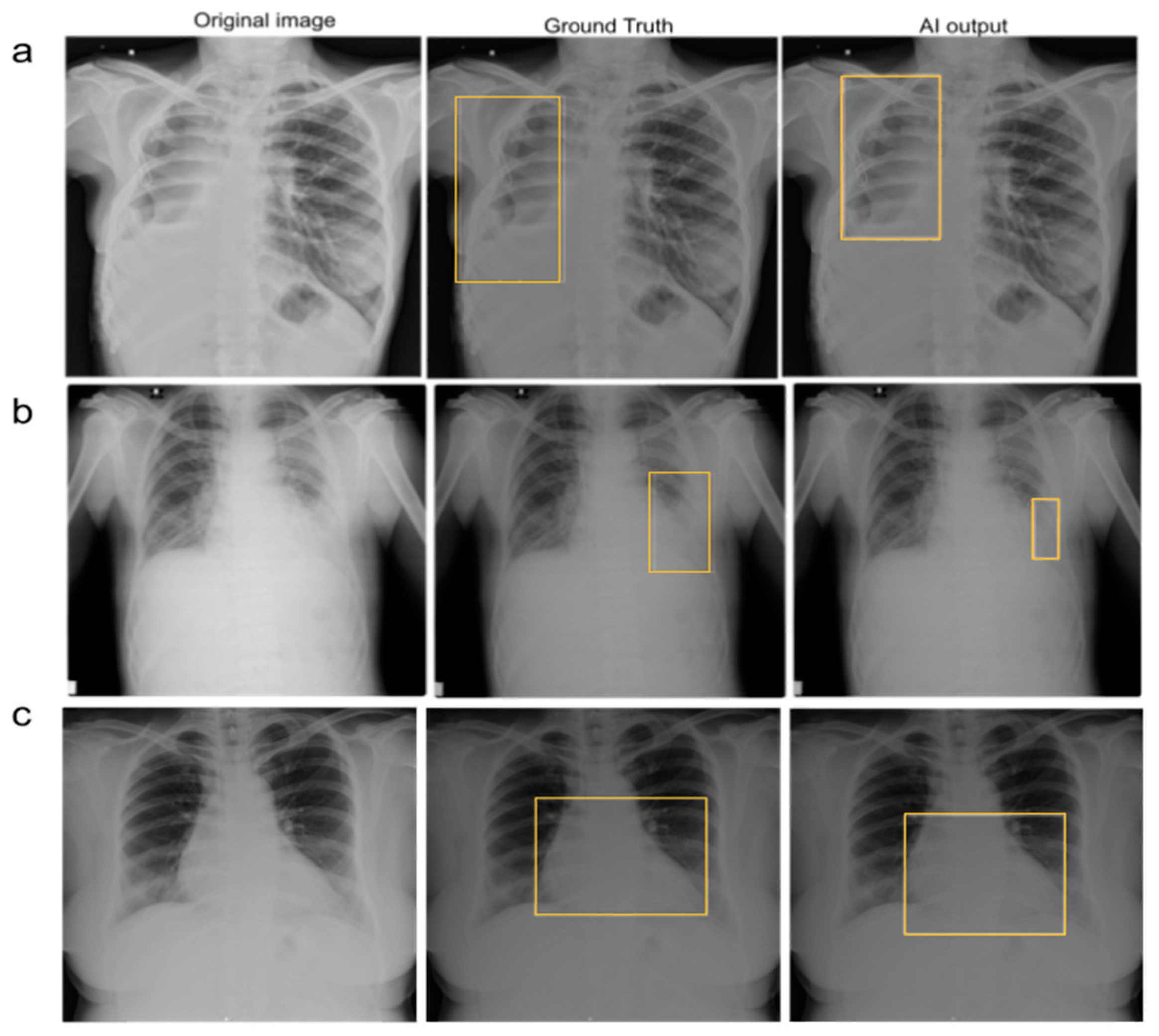

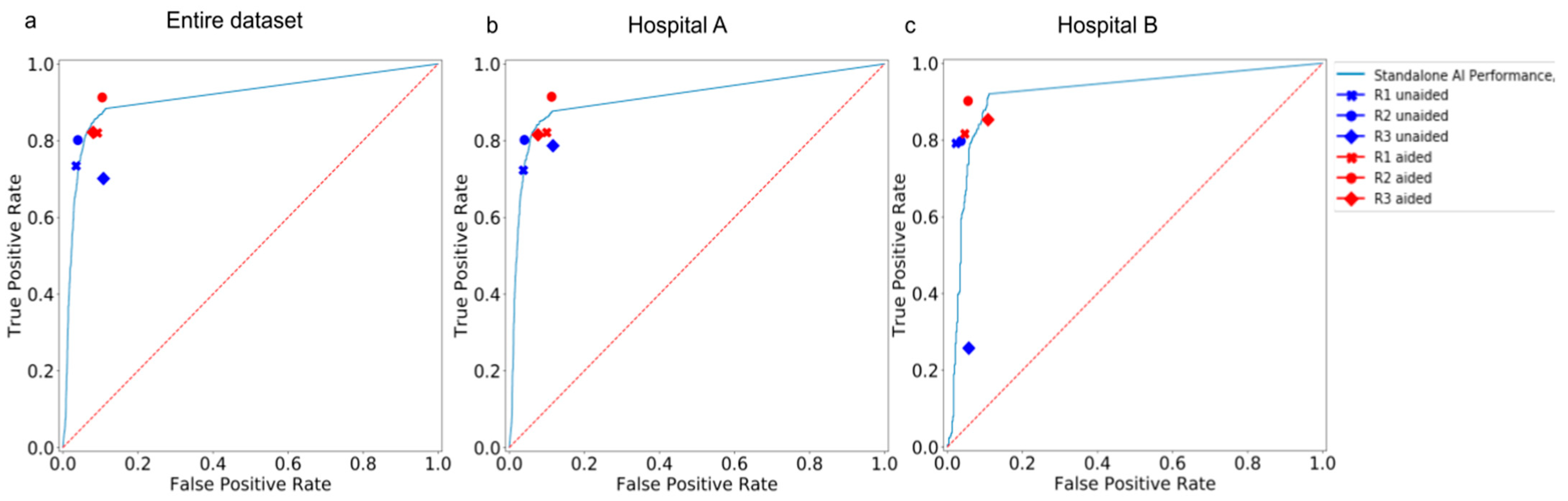

3.2. Standalone Performance of the AI Model

3.3. Comparison between the AI Model and Human Readers

3.4. Comparison between Human Readers in Unaided and Aided Sessions

3.5. Reduction in False-Negative Findings

3.6. Interpretation Time for Each Radiograph

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. GBD 2015 Mortality and Causes of Death Collaborators. Global, regional, and national life expectancy, all-cause mortality, and cause-specific mortality for 249 causes of death, 1980–2015: A systematic analysis for the global burden of disease study 2015. Lancet 2016, 388, 1459–1544.

2. ACR–SPR–STR Practice Parameter for the Performance of Chest Radiography. Available online: https://www.acr.org/-/media/ACR/Files/Practice-Parameters/ChestRad.pdf (accessed on 2 November 2022).

3. Qin, C.; Yao, D.; Shi, Y.; Song, Z. Computer-aided detection in chest radiography based on artificial intelligence: A survey. Biomed. Eng. Online 2018, 17, 113.

4. Moses, D.A. Deep learning applied to automatic disease detection using chest X-Rays. J. Med. Imaging Radiat. Oncol. 2021, 65, 498–517.

5. Nakajima, Y.; Yamada, K.; Imamura, K.; Kobayashi, K. Radiologist supply and workload: International comparison—Working group of Japanese college of radiology. Radiat. Med. 2008, 26, 455–465.

6. Tang, Y.-X.; Tang, Y.-B.; Peng, Y.; Yan, K.; Bagheri, M.; Redd, B.A.; Brandon, C.J.; Lu, Z.; Han, M.; Xiao, J.; et al. Automated abnormality classification of chest radiographs using deep convolutional neural networks. NPJ Digit. Med. 2020, 3, 70.

7. van Leeuwen, K.G.; de Rooij, M.; Schalekamp, S.; van Ginneken, B.; Rutten, M.J.C.M. How does artificial intelligence in radiology improve efficiency and health outcomes? Pediatr. Radiol. 2022, 52, 2087–2093.

8. Zaglam, N.; Cheriet, F.; Jouvet, P. Computer-aided diagnosis for chest radiographs in intensive care. Pediatr. Crit. Care Med. 2016, 5, 113–121.

9. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

10. Nam, J.G.; Park, S.; Hwang, E.J.; Lee, J.H.; Jin, K.-N.; Lim, K.Y.; Vu, T.H.; Sohn, J.H.; Hwang, S.; Goo, J.M.; et al. Development and validation of deep learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Radiology 2019, 290, 218–228.

11. Ajmera, P.; Pant, R.; Seth, J.; Ghuwalewala, S.; Kathuria, S.; Rathi, S.; Patil, S.; Edara, M.; Saini, M.; Raj, P.; et al. Deep-learning-based automatic detection of pulmonary nodules from chest radiographs. medRxiv 2022.

12. Nagi, A.T.; Awan, M.J.; Mohammed, M.A.; Mahmoud, A.; Majumdar, A.; Thinnukool, O. Performance analysis for COVID-19 diagnosis using custom and state-of-the-art deep learning models. Appl. Sci. 2022, 12, 6364.

13. Shamim, S.; Awan, M.J.; Zain, A.M.; Naseem, U.; Mohammed, M.A.; Garcia-Zapirain, B. Automatic COVID-19 lung infection segmentation through modified Unet model. J. Healthc. Eng. 2022, 6566982.

14. Lakhani, P.; Sundaram, B. Deep learning at chest radiography: Automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 2017, 284, 574–582.

15. Lee, M.S.; Kim, Y.S.; Kim, M.; Usman, M.; Byon, S.S.; Kim, S.H.; Lee, B.I.; Lee, B.-D. Evaluation of the feasibility of explainable computer-aided detection of cardiomegaly on chest radiographs using deep learning. Sci. Rep. 2021, 11, 16885.

16. Ajmera, P.; Kharat, A.; Gupte, T.; Pant, R.; Kulkarni, V.; Duddalwar, V.; Lamghare, P. Observer performance evaluation of the feasibility of a deep learning model to detect cardiomegaly on chest radiographs. Acta Radiol. Open 2022, 11, 20584601221107345.

17. Annarumma, M.; Withey, S.J.; Bakewell, R.J.; Pesce, E.; Goh, V.; Montana, G. Automated triaging of adult chest radiographs with deep artificial neural networks. Radiology 2019, 291, 272.

18. Dunnmon, J.A.; Yi, D.; Langlotz, C.P.; Ré, C.; Rubin, D.L.; Lungren, M.P. Assessment of convolutional neural networks for automated classification of chest radiographs. Radiology 2019, 290, 537–544.

19. Nguyen, N.H.; Nguyen, H.Q.; Nguyen, N.T.; Nguyen, T.V.; Pham, H.H.; Nguyen, T.N.-M. Deployment and validation of an AI system for detecting abnormal chest radiographs in clinical settings. Front. Digit. Health 2022, 4, 890759.

20. Albahli, S.; Ahmad Hassan Yar, G.N. AI-Driven deep convolutional neural networks for chest X-Ray pathology identification. J. Xray Sci. Technol. 2022, 30, 365–376.

21. Hwang, E.J.; Park, S.; Jin, K.N.; Kim, J.I.; Choi, S.Y.; Lee, J.H.; Goo, J.M.; Aum, J.; Yim, J.J.; Cohen, J.G.; et al. Development and validation of a deep learning-based automated detection algorithm for major thoracic diseases on chest radiographs. JAMA Netw. Open 2019, 2, e191095.

22. AUGMENTO. Available online: https://www.deeptek.ai/augmento (accessed on 6 December 2022).

23. Obuchowski, N.A.; Rockette, H.E. Hypothesis Testing of Diagnostic Accuracy for Multiple Readers and Multiple Tests an Anova Approach with Dependent Observations. Commun. Stat.-Simul. Comput. 1995, 24, 285–308.

| Study | Size of Test/Validation Dataset | Problem Statement | Method | Results | Advantages | Limitations |
|---|---|---|---|---|---|---|
| Annarumma et al., 2019 [17] | 3229 institutional adult chest radiographs | Developed and tested an AI model, based on deep CNNs, for automated triaging of adult chest radiographs based on the urgency of imaging appearances | Ensemble of two deep CNNs | Sensitivity of 71%, specificity of 95%, PPV of 73%, and NPV of 94% | The AI model was able to interpret and prioritize chest radiographs based on critical or urgent findings | |
| Dunnmon et al., 2019 [18] | 533 frontal chest radiographs | Assessed the ability of CNNs to enable automated binary classification of chest radiographs | Variety of classification CNNs | AUC of 0.96 | Demonstrated the automated classification of chest radiographs as normal or abnormal | |
| Nguyen et al., 2022 [19] | 6285 frontal chest radiographs | Deployed and validated an AI-based system for detecting abnormalities on chest X-ray scans in real-world clinical settings | EfficientNet | F1 score of 0.653, accuracy of 79.6%, sensitivity of 68.6%, and specificity of 83.9% | Examined the AI performance on a clinical dataset different from the training dataset | |
| Albahli and Ahmad Hassan Yar, 2022 [20] | 18,265 frontal-view chest X-ray images | Developed CNN-based DL models and compared their feasibility and performance to classify 14 chest pathologies found on chest X-rays | Variety of classification CNNs with DC-GANs | Accuracy of 67% and 62% for the best-performing model with and without augmentation, respectively | Used GAN-based techniques for data augmentation to address the lack of data for some pathologies | |
| Hwang et al., 2019 [21] | 1089 frontal chest X-ray images | Developed a deep learning–based algorithm that classified chest radiographs into normal and abnormal for various thoracic diseases | Variety of classification CNNs | AUC of 0.979, sensitivity of 0.979, and specificity of 0.880 | AI model outperformed physicians, including thoracic radiologists. Radiologists aided with DLAD performed better than radiologists without the aid of DLAD | |

| Data Characteristics (No. of Chest Radiographs) | Hospital A | Hospital B | Total |
|---|---|---|---|
| Total no. of chest radiographs | 3843 | 633 | 4476 |
| No. of radiographs with ROI in lungs | 641 | 137 | 778 |
| No. of radiographs with ROI in cardiac | 114 | 28 | 142 |
| No. of radiographs with ROI in pleura | 275 | 50 | 325 |
| No. of radiographs with at least one ROI from any category | 844 | 163 | 1007 |
| No. of radiographs with ROI in none of the above categories | 2999 | 470 | 3469 |

| Hospital | Category | AUC [95% CI] | Sensitivity [95% CI] | F1 Score [95% CI] | Specificity [95% CI] | Accuracy [95% CI] | NPV [95% CI] |
|---|---|---|---|---|---|---|---|
| A | Lungs | 0.886 [0.854, 0.915] | 0.846 [0.788, 0.899] | 0.690 [0.636, 0.740] | 0.878 [0.856, 0.900] | 0.873 [0.851, 0.893] | 0.966 [0.952, 0.978] |
| A | Pleura | 0.867 [0.812, 0.917] | 0.779 [0.672, 0.873] | 0.598 [0.510, 0.677] | 0.936 [0.920, 0.952] | 0.925 [0.907, 0.941] | 0.982 [0.972, 0.991] |
| A | Cardiac | 0.919 [0.846, 0.979] | 0.877 [0.739, 1.000] | 0.404 [0.288, 0.508] | 0.925 [0.907, 0.941] | 0.923 [0.905, 0.940] | 0.996 [0.992, 1.000] |
| A | Aggregate | 0.910 [0.883, 0.934] | 0.876 [0.829, 0.918] | 0.767 [0.722, 0.807] | 0.885 [0.862, 0.906] | 0.883 [0.862, 0.902] | 0.962 [0.946, 0.975] |
| B | Lungs | 0.902 [0.835, 0.959] | 0.898 [0.781, 1.000] | 0.762 [0.641, 0.857] | 0.873 [0.811, 0.926] | 0.878 [0.823, 0.924] | 0.969 [0.932, 1.000] |
| B | Pleura | 0.871 [0.734, 0.989] | 0.780 [0.529, 1.000] | 0.650 [0.400, 0.833] | 0.947 [0.907, 0.980] | 0.934 [0.892, 0.975] | 0.980 [0.956, 1.000] |
| B | Cardiac | 0.855 [0.641, 0.996] | 0.750 [0.333, 1.000] | 0.488 [0.154, 0.741] | 0.939 [0.899, 0.974] | 0.930 [0.886, 0.968] | 0.988 [0.966, 1.000] |
| B | Aggregate | 0.919 [0.864, 0.968] | 0.920 [0.828, 1.000] | 0.820 [0.723, 0.898] | 0.887 [0.828, 0.941] | 0.896 [0.848, 0.943] | 0.970 [0.934, 1.000] |
| A + B (Entire dataset) | Lungs | 0.889 [0.860, 0.917] | 0.855 [0.802, 0.903] | 0.702 [0.651, 0.746] | 0.878 [0.856, 0.899] | 0.874 [0.853, 0.892] | 0.966 [0.953, 0.978] |
| A + B (Entire dataset) | Pleura | 0.867 [0.818, 0.913] | 0.779 [0.686, 0.869] | 0.605 [0.525, 0.682] | 0.938 [0.923, 0.952] | 0.926 [0.911, 0.941] | 0.982 [0.973, 0.990] |
| A + B (Entire dataset) | Cardiac | 0.906 [0.836, 0.965] | 0.852 [0.720, 0.967] | 0.417 [0.312, 0.514] | 0.927 [0.911, 0.942] | 0.924 [0.909, 0.940] | 0.995 [0.990, 0.999] |
| A + B (Entire dataset) | Aggregate | 0.912 [0.888, 0.934] | 0.883 [0.840, 0.920] | 0.775 [0.736, 0.811] | 0.885 [0.863, 0.906] | 0.885 [0.865, 0.903] | 0.963 [0.949, 0.975] |
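
The threshold-based columns in the table above (sensitivity, specificity, F1 score, accuracy, NPV) all derive from the same four confusion-matrix counts. The following is a minimal sketch of those standard definitions, not the authors' evaluation code. The `ConfusionCounts` class, the `summarize` function, and the example counts are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts of model calls against the ground truth for one category."""
    tp: int  # finding present, model flagged it
    fp: int  # finding absent, model flagged it
    tn: int  # finding absent, model did not flag it
    fn: int  # finding present, model missed it


def summarize(c: ConfusionCounts) -> dict[str, float]:
    """Return the threshold-based metrics using their standard definitions."""
    sensitivity = c.tp / (c.tp + c.fn)
    specificity = c.tn / (c.tn + c.fp)
    npv = c.tn / (c.tn + c.fn)
    precision = c.tp / (c.tp + c.fp)  # PPV; needed only for F1
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
        "accuracy": accuracy,
        "npv": npv,
    }


# Hypothetical counts for illustration only (100 positives in 1000 cases).
example = ConfusionCounts(tp=80, fp=30, tn=870, fn=20)
metrics = summarize(example)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because F1 depends on precision while NPV depends on the much larger pool of negatives, a low-prevalence category can show a modest F1 alongside a very high NPV. That pattern is consistent with the Cardiac rows, where F1 ranges from 0.404 to 0.488 while NPV ranges from 0.988 to 0.996.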

| Hospital | Reader | Category | AUC [95% CI], Unaided Session | AUC [95% CI], Aided Session | Sensitivity [95% CI], Unaided Session | Sensitivity [95% CI], Aided Session | Specificity [95% CI], Unaided Session | Specificity [95% CI], Aided Session |
|---|---|---|---|---|---|---|---|---|
| A | R1 | Lungs | 0.776 [0.738, 0.815] | 0.762 [0.723, 0.802] | 0.573 [0.497, 0.651] | 0.537 [0.460, 0.616] | 0.979 [0.969, 0.989] | 0.987 [0.979, 0.994] |
| A | R1 | Pleura | 0.869 [0.819, 0.917] | 0.873 [0.823, 0.921] | 0.767 [0.671, 0.863] | 0.786 [0.685, 0.882] | 0.971 [0.959, 0.981] | 0.9602 [0.946, 0.973] |
| A | R1 | Cardiac | 0.809 [0.713, 0.897] | 0.916 [0.858, 0.956] | 0.640 [0.444, 0.815] | 0.929 [0.813, 1.000] | 0.978 [0.968, 0.987] | 0.9019 [0.884, 0.921] |
| A | R1 | Aggregate | 0.843 [0.812, 0.874] | 0.861 [0.831, 0.888] | 0.723 [0.662, 0.785] | 0.821 [0.767, 0.871] | 0.963 [0.949, 0.975] | 0.900 [0.878, 0.921] |
| A | R2 | Lungs | 0.804 [0.766, 0.842] | 0.863 [0.830, 0.895] | 0.632 [0.557, 0.707] | 0.793 [0.731, 0.856] | 0.976 [0.965, 0.986] | 0.933 [0.914, 0.949] |
| A | R2 | Pleura | 0.839 [0.784, 0.892] | 0.872 [0.819, 0.919] | 0.687 [0.579, 0.795] | 0.786 [0.682, 0.881] | 0.989 [0.982, 0.996] | 0.959 [0.945, 0.971] |
| A | R2 | Cardiac | 0.889 [0.813, 0.959] | 0.922 [0.867, 0.962] | 0.807 [0.655, 0.950] | 0.929 [0.821, 1.000] | 0.971 [0.959, 0.982] | 0.915 [0.897, 0.932] |
| A | R2 | Aggregate | 0.881 [0.854, 0.908] | 0.901 [0.879, 0.923] | 0.802 [0.749, 0.855] | 0.915 [0.876, 0.950] | 0.959 [0.945, 0.973] | 0.888 [0.864, 0.909] |
| A | R3 | Lungs | 0.755 [0.714, 0.794] | 0.809 [0.771, 0.846] | 0.589 [0.510, 0.669] | 0.669 [0.593, 0.741] | 0.920 [0.901, 0.938] | 0.949 [0.934, 0.964] |
| A | R3 | Pleura | 0.815 [0.760, 0.868] | 0.874 [0.824, 0.921] | 0.644 [0.535, 0.750] | 0.786 [0.687, 0.878] | 0.986 [0.979, 0.993] | 0.962 [0.949, 0.975] |
| A | R3 | Cardiac | 0.912 [0.844, 0.967] | 0.8994 [0.831, 0.958] | 0.886 [0.750, 1.000] | 0.859 [0.722, 0.969] | 0.939 [0.923, 0.954] | 0.939 [0.923, 0.953] |
| A | R3 | Aggregate | 0.8352 [0.806, 0.867] | 0.869 [0.839, 0.896] | 0.786 [0.732, 0.844] | 0.815 [0.759, 0.866] | 0.884 [0.859, 0.906] | 0.923 [0.905, 0.941] |
| B | R1 | Lungs | 0.844 [0.762, 0.919] | 0.789 [0.705, 0.870] | 0.723 [0.567, 0.871] | 0.613 [0.447, 0.776] | 0.966 [0.932, 0.992] | 0.966 [0.932, 0.992] |
| B | R1 | Pleura | 0.859 [0.724, 0.987] | 0.901 [0.783, 0.987] | 0.740 [0.467, 1.000] | 0.840 [0.600, 1.00] | 0.979 [0.953, 1.000] | 0.962 [0.928, 0.987] |
| B | R1 | Cardiac | 0.678 [0.500, 0.875] | 0.929 [0.779, 0.994] | 0.357 [0.000, 0.750] | 0.893 [0.600, 1.000] | 0.998 [0.993, 1.000] | 0.965 [0.933, 0.993] |
| B | R1 | Aggregate | 0.884 [0.819, 0.942] | 0.885 [0.818, 0.944] | 0.791 [0.667, 0.903] | 0.816 [0.688, 0.927] | 0.976 [0.948, 1.000] | 0.953 [0.912, 0.991] |
| B | R2 | Lungs | 0.783 [0.697, 0.867] | 0.835 [0.751, 0.910] | 0.591 [0.419, 0.757] | 0.715 [0.556, 0.862] | 0.974 [0.942, 1.000] | 0.954 [0.915, 0.985] |
| B | R2 | Pleura | 0.749 [0.600, 0.897] | 0.895 [0.764, 0.989] | 0.500 [0.200, 0.800] | 0.820 [0.571, 1.000] | 0.998 [0.987, 1.000] | 0.969 [0.938, 0.993] |
| B | R2 | Cardiac | 0.875 [0.684, 0.990] | 0.926 [0.773, 0.993] | 0.786 [0.400, 1.000] | 0.893 [0.600, 1.000] | 0.964 [0.932, 0.987] | 0.959 [0.924, 0.987] |
| B | R2 | Aggregate | 0.881 [0.813, 0.942] | 0.923 [0.870, 0.969] | 0.798 [0.667, 0.915] | 0.902 [0.806, 0.977] | 0.964 [0.926, 0.992] | 0.945 [0.902, 0.983] |
| B | R3 | Lungs | 0.563 [0.499, 0.632] | 0.816 [0.733, 0.892] | 0.183 [0.063, 0.314] | 0.729 [0.571, 0.871] | 0.944 [0.901, 0.977] | 0.901 [0.849, 0.951] |
| B | R3 | Pleura | 0.627 [0.500, 0.769] | 0.894 [0.771, 0.996] | 0.260 [0.000, 0.546] | 0.800 [0.556, 1.000] | 0.995 [0.979, 1.000] | 0.988 [0.966, 1.000] |
| B | R3 | Cardiac | 0.670 [0.490, 0.875] | 0.923 [0.769, 0.990] | 0.357 [0.000, 0.750] | 0.893 [0.600, 1.000] | 0.984 [0.960, 1.000] | 0.954 [0.918, 0.982] |
| B | R3 | Aggregate | 0.600 [0.532, 0.676] | 0.872 [0.807, 0.928] | 0.258 [0.128, 0.395] | 0.853 [0.738, 0.949] | 0.943 [0.901, 0.982] | 0.892 [0.835, 0.945] |
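
Every estimate in the tables above is reported with a 95% confidence interval. This excerpt does not state how the intervals were computed, so the following is only a sketch of one common approach, a case-level percentile bootstrap, and should not be read as the authors' method. The function names, the resample count, the seed, and the example labels are all assumptions made for illustration.

```python
import random


def sensitivity(labels: list[int], preds: list[int]) -> float:
    """Fraction of positive cases (label 1) that were flagged (pred 1)."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    return tp / (tp + fn) if (tp + fn) else float("nan")


def bootstrap_ci(labels, preds, metric, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap: resample cases with replacement, recompute the
    metric each time, and take the alpha/2 and 1 - alpha/2 quantiles."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        value = metric([labels[i] for i in idx], [preds[i] for i in idx])
        if value == value:  # drop NaN from resamples with no positives
            stats.append(value)
    stats.sort()
    lower = stats[int((alpha / 2) * len(stats))]
    upper = stats[min(len(stats) - 1, int((1 - alpha / 2) * len(stats)))]
    return lower, upper


# Hypothetical data: 20 positive cases (16 detected) and 180 negatives.
labels = [1] * 20 + [0] * 180
preds = [1] * 16 + [0] * 4 + [0] * 180

point = sensitivity(labels, preds)
lower, upper = bootstrap_ci(labels, preds, sensitivity)
print(f"sensitivity {point:.3f} [{lower:.3f}, {upper:.3f}]")
```

With few positive cases, resampled estimates vary widely, so the interval is broad and can reach 0 or 1. The Hospital B rows show intervals of that kind, for example 0.357 [0.000, 0.750], and Hospital B contributed far fewer radiographs than Hospital A (633 versus 3843).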

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ajmera, P.; Onkar, P.; Desai, S.; Pant, R.; Seth, J.; Gupte, T.; Kulkarni, V.; Kharat, A.; Passi, N.; Khaladkar, S.; et al. Validation of a Deep Learning Model for Detecting Chest Pathologies from Digital Chest Radiographs. Diagnostics 2023, 13, 557. https://doi.org/10.3390/diagnostics13030557
