Abstract

The ongoing coronavirus disease 2019 (COVID-19) pandemic has had a significant impact on patients and healthcare systems across the world. Distinguishing non-COVID-19 patients from COVID-19 patients at the lowest possible cost and in the earliest stages of the disease is a major issue. Making deep learning decisions explainable is another challenge, especially in critical fields such as medicine. This study presents a method to train deep learning models and apply an uncertainty-based ensemble voting policy that achieves 99% accuracy in distinguishing COVID-19 chest X-rays from normal and pneumonia-related ones. We further present a training scheme that integrates the cyclic cosine annealing approach with cross-validation and with uncertainty quantification, measured using the prediction interval coverage probability (PICP), whose values serve as the final ensemble voting weights. We also propose the Uncertain-CAM technique, which improves deep learning explainability and provides a more reliable COVID-19 classification system. Finally, we introduce a new image processing technique that measures explainability against the ground truth, and we use it to compare Uncertain-CAM with the widely adopted Grad-CAM method.

1. Introduction

The COVID-19 pandemic, caused by the Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2), is considered the worst pandemic in over a century. It has caused unprecedented social and economic disruption globally. The World Health Organization declared it a pandemic in March 2020. Since then, researchers and physicians have made considerable efforts to facilitate the early identification of coronavirus infection. According to PubMed [1], 755 research articles with the keyword “coronavirus” were published in 2019, and this number increased to 1245 in just the first 80 days of 2020. Because the viral genome was incompletely understood at the beginning of the pandemic, early identification procedures for the disease were crude. The Real-Time Reverse Transcription Polymerase Chain Reaction (RT-PCR) test, also known as COVID-19 RT-PCR, is considered the gold standard for detecting COVID-19. RT-PCR is a qualitative method for detecting SARS-CoV-2 nucleic acids present in respiratory specimens at the time of infection [2]. However, COVID-19 detection still faces numerous difficulties, including the low sensitivity of RT-PCR, which is only 60–70%, and the high cost of the test [3]. As COVID-19 primarily manifests as a lung infection [4], computed tomography (CT) and chest X-rays (CXRs) have been widely employed for its identification. Artificial intelligence and deep learning techniques have frequently been used to detect COVID-19 infection from CT and CXR images. Deep learning techniques are highly popular because they have outperformed conventional methods. The performance of deep learning algorithms has been demonstrated in various scientific applications, such as computer vision [5], object tracking [6], face identification [7], and surgical skill assessment [8]. In contrast to conventional machine learning techniques, the training features do not need to be selected manually.
A model can learn the best features from the training dataset through adjustment of its parameters and its convolutional neural network (CNN) architecture. Even before the coronavirus pandemic, deep learning techniques were widely integrated into medical imaging. Since the pandemic, however, the popularity of deep learning techniques has increased dramatically, with a corresponding rise in applications in diverse fields, including medical image processing, data science approaches for pandemic modeling, AI for text mining and natural language processing, AI and the Internet of Things, AI in computational biology and medicine [9], and COVID-19 detection [10]. Because the main characteristics of COVID-19 lung infection are consolidations and areas of ground-glass opacity (GGO) [11], CXR datasets are suitable for testing algorithms that detect COVID-19 and other lung diseases. CNNs are at the forefront of disease classification for diagnosis, as well as region-of-interest segmentation in medical images, owing to their ability to accurately learn local features from a specific medical image, such as a CT scan or chest radiograph. A common strategy for improving classification performance is to combine the results of various classifiers to produce the final output. Ensemble techniques combine the output scores of the individual classifiers. These classifiers may have different architectures, or they may receive different data items or input vectors produced from the same data instance. Existing methodologies primarily focus on achieving high accuracy without adequately elaborating on what CNNs actually learn or addressing fundamental CNN problems, such as overconfident predictions and uncertainty evaluation, which are crucial in medical diagnosis.

In this study, we propose a novel uncertainty-based weighted average ensemble method that combines the confidence scores of various CNN models to maximize their efficiency for both COVID-19 classification and explanation. The miscalibration of predictive models is an underrated issue in CNNs; we turn this issue into an advantage through uncertainty quantification, measured using PICP, which serves as the ensemble voting weight. Effective ensembles require a diverse set of skillful ensemble members with varying distributions of prediction errors. In our training framework, we integrate the cyclic cosine annealing approach with cross-validation so that each network performs its own internal vote, yielding accurate per-network predictions before the final vote. Our training framework exhibits relatively low generalization error and improved performance compared to other frameworks.

The remainder of this paper is organized as follows: Section 2 reviews the related literature, Section 3 describes the materials and methods of the proposed approach, Section 4 presents the experimental results, and the final sections discuss the results and conclude the paper. The main contributions of this study are:

- A novel CNN training scheme to maximize ensemble model performance and minimize generalization error.

- A novel ensemble method based on uncertainty evaluation.

- A novel uncertainty-based Gradient-weighted Class Activation Mapping (Grad-CAM) ensemble explanation (Uncertain-CAM) that explains CNN decisions better than Grad-CAM.

- A new image processing technique to study the feasibility of the proposed Uncertain-CAM and compare it with standard Grad-CAM.

The proposed approach is evaluated using the COVID-QU-Ex dataset [12].

2. Related Literature

Driven by significant advances in CNN technology, research in computational medical imaging has explored the potential of CNNs for medical images produced by CT, magnetic resonance imaging (MRI), and X-rays. Numerous researchers have investigated deep learning as a viable method for classification, detection, and segmentation in various medical domains, such as the diagnosis of glaucoma [13], COVID-19, and brain tumors [10,14,15]. In recent years, the application areas of CNN-based AI systems have broadened, which may further accelerate the analysis of diverse medical images and help address the urgent need to differentiate COVID-19 from other pneumonia infections more quickly and accurately. Although new CNN architectures can be built, training them effectively would require a large number of images per class.

A recent study [10] found that most research articles employ transfer learning, only a few rely on fine-tuning, and barely any propose a novel CNN architecture whose performance is on par with that of transfer-learning-based techniques. Transfer learning from models pretrained on the ImageNet dataset has been used in most studies [16]. The DarkCovidNet model, which uses DarkNet-19, the classifier underlying the YOLO object detection system, was proposed for automatic COVID-19 detection from CXR images [17]. Its authors reported classification accuracies of 98.08% for binary classification (COVID-19 vs. normal) and 87.02% for multiclass classification (COVID-19, pneumonia, and normal). Polat et al. adopted a debiasing data loader strategy to avoid the bias problem, which improved the performance of their transfer learning model and yielded high accuracy in the automatic detection of COVID-19 cases from CXR images [18]. In another study, local binary pattern-based and image-based features were extracted from CXR images and used to classify them into normal, pneumonia, and COVID-19 classes [19]; the authors reported a sensitivity of 97.86%. An effective deep learning model that segments the lung shape before training was also proposed to identify COVID-19, achieving an accuracy of 96.43% [20].

Chowdhury et al. proposed an ensemble approach for COVID-19 detection in 2021 [21]. Their method takes several snapshots of the same model and, based on the softmax scores of each snapshot, applies both hard (majority voting) and soft (score averaging) ensembles for the final prediction. Another ensemble approach for COVID-19 detection [22] trained three standard CNN models and averaged their softmax scores for the final prediction.

In 2021, Shamsi et al. [23] presented an uncertainty-aware framework that used transfer learning to detect COVID-19 from X-ray and CT images. Their framework used different pretrained CNNs to extract deep features from CXR and CT images and then applied different machine learning and statistical modeling techniques to diagnose COVID-19 and gauge the epistemic uncertainty of the classification outcomes. Calderon et al. applied the MixMatch semi-supervised framework to enhance model uncertainty estimation for COVID-19 identification [24]. Numerous methods have been proposed for estimating uncertainty at test time, including MC dropout, deterministic uncertainty quantification, and softmax scores. Dong et al. also proposed a deep learning system, RCoNetK, for COVID-19 detection and uncertainty estimation [25], which uses multi-expert uncertainty-aware learning, mutual information maximization, and deformable mixed high-order moment features. The RCoNetK model classified X-ray images into COVID-19, pneumonia, and normal classes with a sensitivity of 97.76%.

Most previous studies focused primarily on achieving high accuracy or quantifying uncertainty, and less attention was given to explaining what the model actually learned. The main issue associated with CNNs, namely overconfident predictions, was not addressed sufficiently. Motivated by these shortcomings, we developed a reliable ensemble deep learning network for more efficient COVID-19 classification and explanation.

3. Materials and Methods

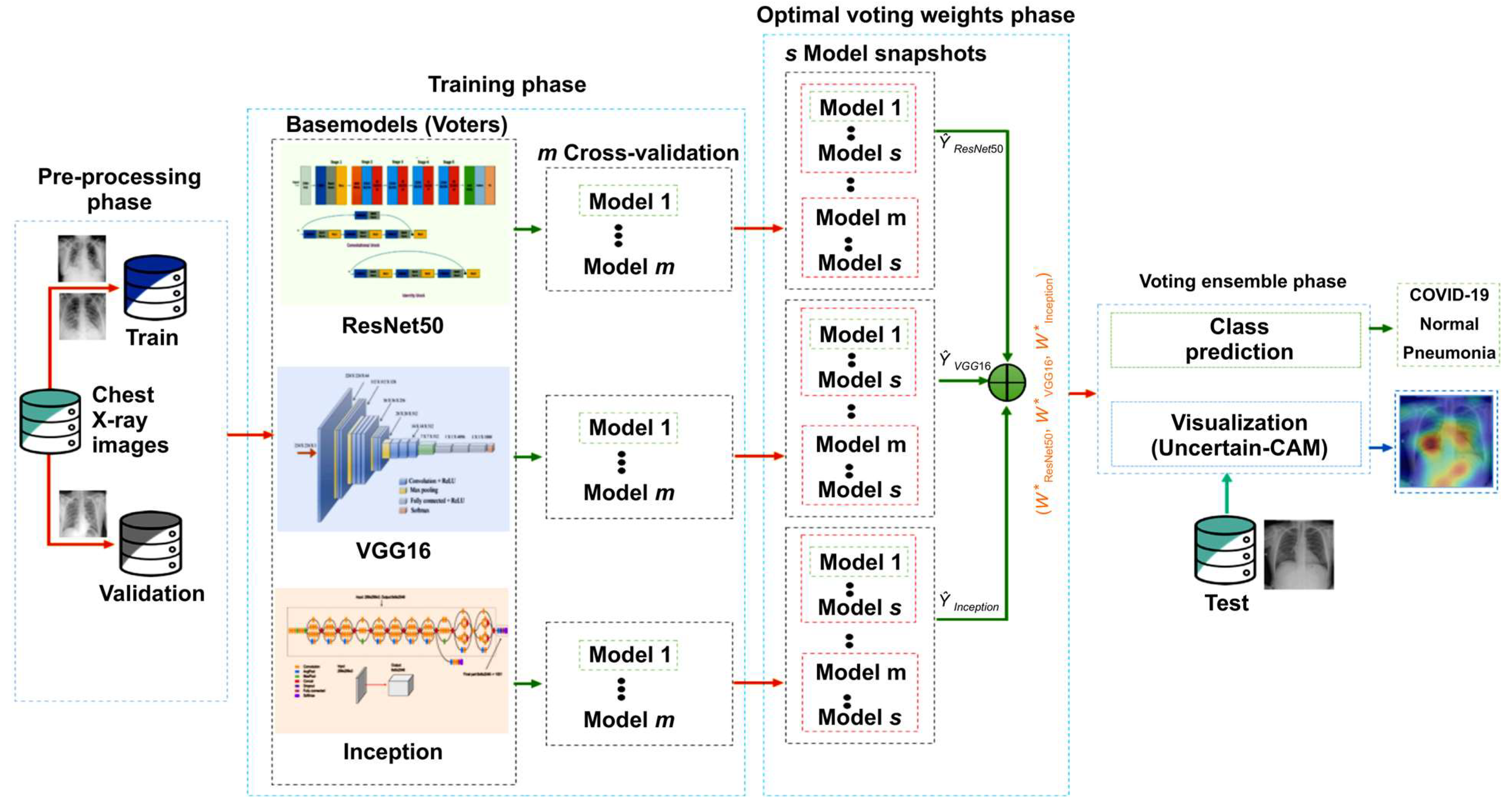

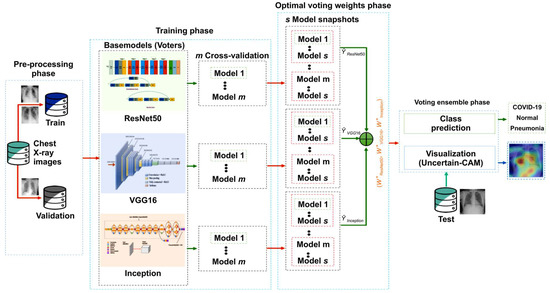

In this section, we briefly review the proposed method. First, we outline the benchmark dataset and then describe the proposed architecture and training methodology. We then discuss the ensemble modeling techniques and our proposed explainability method, Uncertain-CAM. All these techniques were combined to produce the proposed analytic pipeline, shown in Figure 1 (block diagram). Code for reproducing the experiments is available at our repository https://github.com/waleed-aldhahi/uncertain-cam/ (accessed on 20 November 2022).

Figure 1.

Framework of the proposed method. In the preprocessing stage, the data were prepared for the training phase, in which we generate m models for each CNN network using cross-validation and, for each of these models, s snapshot models using cyclic cosine annealing. The snapshot models were used to obtain the optimal voting weights.

3.1. Data Preparation

Because of the pandemic’s developing nature, the most heavily affected countries initially made only modest efforts to share clinical and radiographic data publicly. Thus, two datasets, the COVID-QU and QaTa-Cov19 databases, were produced by a team of researchers from Qatar University and Tampere University [26,27]. The QaTa-Cov19 dataset includes 2951 COVID-19 CXRs along with their ground-truth infection masks, whereas the COVID-QU dataset includes 3616 COVID-19, 8851 non-COVID, and 6012 normal cases. Moreover, additional X-rays gradually became available in the public domain. This led to the creation of COVID-QU-Ex [12], an expanded version of these datasets that contains over 33,000 CXR images from three distinct classes:

- 11,956 COVID-19 samples

- 11,263 cases of pneumonia caused by viruses or bacteria that are not COVID-19

- 10,701 normal (healthy) samples

This dataset was compiled from several publicly accessible datasets and repositories in different formats. Through a strict quality control procedure, duplicates and very poor-quality and overexposed images were identified and eliminated, ensuring the high quality of the dataset. The resulting dataset consists of images with a high level of interclass heterogeneity and few variations in resolution, quality, and signal-to-noise ratio (SNR). A detailed explanation of the different data sources is given in [12].

3.2. Ensemble Learning

Ensemble-based methods are frequently used in various image classification tasks, including several COVID-19 detection approaches. The most commonly employed strategies are majority voting, averaging, and weighted averaging of the predictions generated by the classifiers that make up the ensemble. In most instances, these methods significantly enhance overall performance. However, these methods do not consider the accuracy of the prediction when producing an outcome [28].

Ensemble learning builds on the “wisdom of crowds” notion, which holds that judgments or predictions by large groups of people are often superior to those of a single expert. Similarly, ensemble learning describes a collection (or ensemble) of base learners or models that collaborate to produce a more accurate final prediction. A single model, also referred to as a base or weak learner, is likely to underperform on its own because of high variance or strong bias. However, weak learners can be combined into a strong learner that outperforms any individual base model, because the combination reduces bias or variance [29,30].

Let $X$ be a sample space containing $N$ samples belonging to $C$ classes, which compose the class set $\Omega = \{\omega_1, \omega_2, \ldots, \omega_C\}$. Let $K$ independent voters (basic classifiers) be represented as $F = \{f_1, f_2, \ldots, f_K\}$. Given sample $x \in X$, the prediction (vote) by $f_k$ is $f_k(x) \in \Omega$. Then, the simple ensemble voter (classifier) system is described as

$$E(x) = \begin{cases} \omega_j, & \text{if } \sum_{k=1}^{K} \mathbb{1}\left[f_k(x) = \omega_j\right] = \max_{i} \sum_{k=1}^{K} \mathbb{1}\left[f_k(x) = \omega_i\right] \ \wedge \ \sum_{k=1}^{K} \mathbb{1}\left[f_k(x) = \omega_j\right] \ge \lambda K, \\ \text{reject}, & \text{otherwise}, \end{cases}$$

where $E(x)$ represents the predicted class, $\mathbb{1}[\cdot]$ is the indicator function, $\lambda$ represents the threshold for voting, and $\wedge$ is the logic operator AND. In this approach, each voter carries the same weight regardless of its performance. However, by assessing how well each voter recognizes each class and assigning weights accordingly, the ensemble system can make more accurate judgments and achieve much better performance. The ensemble weighted majority voting system is described as follows:

$$E(x) = \arg\max_{\omega_j \in \Omega} \sum_{k=1}^{K} w_{k,j}\, \mathbb{1}\left[f_k(x) = \omega_j\right]$$

where $w_{k,j}$ denotes the weight of basic voter $f_k$ voting for $\omega_j$ for a given $x$, and $W = \{w_{k,j}\}$ collects the weights of all voters. For optimum learning, we suggest training each voter on varying data with k-fold cross-validation, and for each fold model $m$, we create $s$ snapshots. The aim of creating model snapshots is to train a single model while repeatedly lowering the learning rate until it reaches a local minimum and then saving a snapshot of the current model weights. To escape the current local minimum, the learning rate is then sharply increased again. The process continues until all cycles are completed. Using cyclic cosine annealing to produce model snapshots allows multiple models to be collected during a single training run [31]. The cyclic cosine annealing schedule begins with the initial learning rate, gradually decreases it to a minimum, and then rapidly resets it. The learning rate at each training iteration is given by the following expression:

$$\eta(t) = \frac{\eta_0}{2}\left(\cos\left(\frac{\pi \left((t-1) \bmod \lceil T/s \rceil\right)}{\lceil T/s \rceil}\right) + 1\right)$$

where $T$ is the total number of training iterations, $s$ is the number of cycles, $\eta(t)$ is the learning rate at iteration $t$, and $\eta_0$ is the initial learning rate. The snapshot model weights are defined as the weights at the bottom (end) of each cycle. The next learning-rate cycle starts from these weights but allows the learning algorithm to converge to different solutions, producing a diverse set of snapshot models. Here, $s$ model snapshots were obtained in $s$ training cycles, and each snapshot was used for ensemble prediction. Using the snapshot predictions, we applied a simple average ensemble for each fold model $m$ of each base classifier (voter). The outputs of these simple average ensembles for each voter were then used to obtain the final weighted voting ensemble, as illustrated in Algorithm 1 and Figure 1. In the next section, we discuss the use of different policies as voting weights.
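The cyclic cosine annealing schedule described above can be sketched as a small function. This is a minimal illustration assuming the standard snapshot-ensemble form of the schedule, 1-based iteration counting, and cycles of equal length; the function names `cyclic_cosine_lr` and `snapshot_iterations` are hypothetical and not taken from the authors' repository.

```python
import math


def cyclic_cosine_lr(t: int, eta0: float, total_iters: int, n_cycles: int) -> float:
    """Learning rate at 1-based iteration t under cyclic cosine annealing.

    Each cycle lasts ceil(total_iters / n_cycles) iterations; within a cycle the
    rate decays from eta0 towards 0 along a half cosine, then restarts at eta0.
    """
    cycle_len = math.ceil(total_iters / n_cycles)
    pos = (t - 1) % cycle_len  # position inside the current cycle
    return eta0 / 2.0 * (math.cos(math.pi * pos / cycle_len) + 1.0)


def snapshot_iterations(total_iters: int, n_cycles: int) -> list[int]:
    """Iterations at the end of each cycle, where a snapshot would be saved."""
    cycle_len = math.ceil(total_iters / n_cycles)
    return [t for t in range(1, total_iters + 1) if t % cycle_len == 0]
```

For example, with `eta0=0.1`, `total_iters=10`, and `n_cycles=2`, the rate decreases monotonically over iterations 1 to 5, jumps back to 0.1 at iteration 6, and snapshots would be saved at iterations 5 and 10. In a training loop, the rate would be set before each step and the weights saved at every iteration returned by `snapshot_iterations`.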

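| Algorithm 1. Machine weighted voting algorithm. |
|---|
| **Input:** entire data set $D$; base learning algorithms $f_1, \ldots, f_K$ |
| **Output:** machines’ vote |
| Initialization; split $D$ into $D_{train}$ and $D_{test}$ |
| **for** each base classifier $f_k$, $k = 1, \ldots, K$ **do** |
| **for** each fold $j = 1, \ldots, m$ **do** |
| Split $D_{train}$ into $D^{j}_{train}$ and $D^{j}_{val}$ for the $j$th split |
| Train basic classifier $f_k$ on $D^{j}_{train}$ and validate on $D^{j}_{val}$ |
| **for** each snapshot $i = 1, \ldots, s$ **do** |
| Create snapshot $i$ of $f_k$ and predict on $D^{j}_{val}$ |
| **end for** |
| Concatenate the snapshot predictions on $D^{j}_{val}$ and compute the simple average ensemble predictions |
| **end for** |
| Concatenate the fold predictions of $f_k$ |
| **end for** |
| Use the concatenated predictions to compute the optimal voting weights |
| Apply the weighted voting ensemble on $D_{test}$ using (4) |
| **end** |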
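The last steps of Algorithm 1 (averaging the snapshots of each voter, then combining voters by weighted majority voting) can be sketched with NumPy. This is a minimal sketch under stated assumptions: each voter's averaged softmax output is turned into a hard vote by `argmax`, each voter then adds its weight to the class it voted for, and ties go to the lowest class index. The function names are hypothetical, and how the weights are chosen is left to the policies discussed in the next section.

```python
import numpy as np


def average_snapshots(snapshot_probs):
    """Simple average ensemble over snapshots.

    snapshot_probs: (s, n_samples, n_classes) softmax outputs of s snapshots.
    Returns (n_samples, n_classes) averaged probabilities.
    """
    return np.asarray(snapshot_probs, dtype=float).mean(axis=0)


def weighted_vote(voter_probs, weights):
    """Weighted majority vote over K voters.

    voter_probs: (K, n_samples, n_classes) averaged probabilities per voter.
    weights:     (K,) one weight per voter, or (K, n_classes) class-specific weights.
    Returns the predicted class index for every sample.
    """
    voter_probs = np.asarray(voter_probs, dtype=float)
    K, n, C = voter_probs.shape
    w = np.asarray(weights, dtype=float)
    if w.ndim == 1:
        w = np.repeat(w[:, None], C, axis=1)  # same weight for every class
    votes = voter_probs.argmax(axis=2)  # (K, n): hard vote of each voter
    scores = np.zeros((n, C))
    rows = np.arange(n)
    for k in range(K):
        # voter k adds its weight for the class it voted for
        scores[rows, votes[k]] += w[k, votes[k]]
    return scores.argmax(axis=1)
```

With three voters where one confident voter picks class 0 and two weaker voters pick class 1, equal weights return class 1, whereas weights such as `[0.9, 0.3, 0.3]` let the stronger voter win.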

3.3. Optimal Voting Weights

Weighted average ensembles give some models more credit during prediction because they assume that these models are more skilled than the rest. The weighted average (or weighted sum) ensemble improves on voting ensembles, which assume that all models are equally competent and contribute equally to the ensemble predictions. Each model is given a specific weight, which is multiplied by its prediction and used in calculating the sum or average prediction. The difficulty with such an ensemble lies in finding model weights that perform better than both an equally weighted ensemble and any individual contributing model. In this section, we explore different techniques for finding the optimal voting weights alongside our proposed novel method.

3.3.1. Best Combination

The simplest and possibly the most exhaustive approach is to grid-search weight values between zero and one for each ensemble member such that the weights across all ensemble members sum to one. However, according to Shahhosseini et al. [29], a more effective approach is the optimization model proposed by Perrone et al. [32], which combines the predictions of the base classifiers by finding the weights that minimize the expected prediction error, measured as the mean squared error (MSE), of the resulting ensemble:

$$\min_{w_1, \ldots, w_K} \ \mathrm{MSE}\left(w_1 \hat{y}_1 + w_2 \hat{y}_2 + \cdots + w_K \hat{y}_K, \ y\right) \quad \text{s.t.} \quad \sum_{k=1}^{K} w_k = 1, \quad w_k \ge 0, \ k = 1, \ldots, K$$

where $\hat{y}_k$ denotes the vector of basic classifier $f_k$’s out-of-bag predictions on the validation samples of cross-validation, $y$ is the vector of true response values, and $w_k$ is the weight corresponding to base model $f_k$. The optimization model is described in (10), where $n$ is the total number of instances, $y_i$ is the true value of observation $i$, and $\hat{y}_{ik}$ is the prediction of observation $i$ by base model $f_k$:

$$\min_{w_1, \ldots, w_K} \ \frac{1}{n} \sum_{i=1}^{n} \left(y_i - \sum_{k=1}^{K} w_k \hat{y}_{ik}\right)^2 \quad \text{s.t.} \quad \sum_{k=1}^{K} w_k = 1, \quad w_k \ge 0, \ k = 1, \ldots, K$$

This formulation is a nonlinear convex optimization problem. Because the constraints are linear, the convexity of the problem follows from the convexity of the objective function, which can be verified by computing its Hessian matrix (positive semidefinite for this least-squares objective). Therefore, the solution is a global optimum, because any local optimum of a convex function (the objective function) over a convex feasible region (the feasible region of the preceding formulation) is guaranteed to be a global optimum [33].
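The constrained MSE minimization above can be solved with a generic solver. The sketch below uses SciPy's `minimize` with the SLSQP method, bounds of [0, 1], and an equality constraint forcing the weights to sum to one. Two assumptions are ours, not the source's: the regression-style formulation is applied to classification by using one-hot labels as the true responses and class probabilities as predictions, and the solver choice is illustrative. Averaging the squared error over classes as well as samples only rescales the objective, so the minimizer is unchanged.

```python
import numpy as np
from scipy.optimize import minimize


def optimal_weights(oof_probs, y_onehot):
    """Ensemble weights minimizing the MSE of the weighted combination.

    oof_probs: (K, n_samples, n_classes) out-of-fold predictions of K base models.
    y_onehot:  (n_samples, n_classes) one-hot true labels.
    Returns K weights with w_k >= 0 and sum(w) == 1.
    """
    oof_probs = np.asarray(oof_probs, dtype=float)
    K = oof_probs.shape[0]

    def mse(w):
        blended = np.tensordot(w, oof_probs, axes=1)  # (n_samples, n_classes)
        return np.mean((y_onehot - blended) ** 2)

    result = minimize(
        mse,
        x0=np.full(K, 1.0 / K),  # start from equal weights
        method="SLSQP",
        bounds=[(0.0, 1.0)] * K,
        constraints=[{"type": "eq", "fun": lambda w: np.sum(w) - 1.0}],
    )
    return result.x
```

Because the problem is convex, the starting point affects only convergence speed, not the final weights. A perfect model paired with an always-wrong model receives essentially all of the weight.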

3.3.2. Priori Recognition Performance Statistics

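A basic classifier is assigned more weight the better it recognizes patterns [30]. Let the confusion matrix of voter $f_k$ be

$$CM_k = \begin{pmatrix} n^{k}_{11} & n^{k}_{12} & \cdots & n^{k}_{1C} \\ n^{k}_{21} & n^{k}_{22} & \cdots & n^{k}_{2C} \\ \vdots & \vdots & \ddots & \vdots \\ n^{k}_{C1} & n^{k}_{C2} & \cdots & n^{k}_{CC} \end{pmatrix}$$

When $i = j$, $n^{k}_{ij}$ denotes the number of samples that belong to class $\omega_i$ and are correctly classified as $\omega_i$ by voter $f_k$. When $i \ne j$, $n^{k}_{ij}$ represents the number of samples belonging to class $\omega_i$ that are misclassified as $\omega_j$ by voter $f_k$. The number of instances classified as $\omega_j$ is then

$$n^{k}_{\cdot j} = \sum_{i=1}^{C} n^{k}_{ij}$$

Then, the conditional probability that a sample classified as $\omega_j$ truly belongs to class $\omega_j$ is

$$P\left(x \in \omega_j \mid f_k(x) = \omega_j\right) = \frac{n^{k}_{jj}}{n^{k}_{\cdot j}} = \frac{n^{k}_{jj}}{\sum_{i=1}^{C} n^{k}_{ij}}$$

When classifier $f_k$ classifies instance $x$ as class $\omega_j$, its voting weight for class $\omega_j$ is $w_{k,j} = P\left(x \in \omega_j \mid f_k(x) = \omega_j\right)$.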
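The a priori recognition statistic above reduces to a column-normalized diagonal of each voter's confusion matrix. The minimal NumPy sketch below assumes integer class labels `0..C-1`, rows indexed by true class and columns by predicted class, and a weight of 0 for any class the voter never predicts (an assumption to avoid division by zero). Function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] = number of samples of class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def class_voting_weights(cm):
    """Weight of a voter for class j: n_jj / sum_i n_ij, i.e. the fraction of
    samples it labels as j that truly belong to j (0 if it never predicts j)."""
    diag = np.diag(cm).astype(float)
    col_sums = cm.sum(axis=0).astype(float)
    return np.divide(diag, col_sums, out=np.zeros_like(diag), where=col_sums > 0)
```

Computing these weights on each voter's validation predictions and stacking them gives a K × C table of class-specific voting weights, one row per voter.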

3.3.3. Model Calibration

Neural networks (NNs) are often poorly calibrated [34], i.e., they are overconfident in their predictions. During classification, NNs produce “confidence” scores along with their predictions. Ideally, these confidence levels coincide with the actual likelihood of correctness. For instance, if a calibrated network makes 100 predictions with a confidence level of 80%, we expect 80 of them to be correct. Model calibration is the process of taking a trained model and applying a post-processing procedure to improve its probability estimates.

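Let input images $X \in \mathcal{X}$ and class labels $Y \in \mathcal{Y} = \{1, \ldots, C\}$ be random variables following the joint ground-truth distribution $\pi(X, Y)$, and let $h$ be a CNN with $h(X) = (\hat{Y}, \hat{P})$, where $\hat{Y}$ is the predicted class and $\hat{P}$ is the attributed confidence level. The objective is to calibrate $\hat{P}$ such that it represents the true class probability. In practice, the accuracy of deep learning networks is often lower than their confidence. Perfect calibration is defined as

$$\mathbb{P}\left(\hat{Y} = Y \mid \hat{P} = p\right) = p, \quad \forall p \in [0, 1]$$

The calibration error, which describes the expected deviation between confidence and accuracy, becomes

$$\mathbb{E}_{\hat{P}}\left[\left|\mathbb{P}\left(\hat{Y} = Y \mid \hat{P} = p\right) - p\right|\right]$$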

Calibration techniques for classifiers aim to convert an uncalibrated classifier’s confidence score $\hat{p}$ into a calibrated score $\hat{q}$ that matches the precision observed at that level of confidence [35]. Calibration is a post-processing step that requires a separate learning phase to establish a mapping $g_\theta: \hat{p} \mapsto \hat{q}$, where $\theta$ denotes the calibration parameters; the mapping can be considered as a probabilistic model $f(\hat{q} \mid \hat{p}, \theta)$. For all scaling methods, the calibration parameters are typically estimated by maximum likelihood (ML), i.e., by minimizing the negative log-likelihood (NLL) loss. Here, instead, we place an uninformative Gaussian prior $p(\theta)$ with a wide variance over the parameters and infer the posterior given the calibration data $\mathcal{D}$ by

$$p(\theta \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid \theta)\, p(\theta)}{p(\mathcal{D})}$$

where $p(\mathcal{D} \mid \theta)$ is the likelihood. A new input $\hat{p}^{*}$ can then be mapped using the posterior predictive distribution, defined by

$$f\left(\hat{q} \mid \hat{p}^{*}, \mathcal{D}\right) = \int f\left(\hat{q} \mid \hat{p}^{*}, \theta\right) p(\theta \mid \mathcal{D})\, d\theta$$

Analogous to Bayesian NNs, we modeled the epistemic uncertainty of the calibration mapping. The distribution obtained by calibration for an instance with index $n$ reflects the uncertainty of the calibration model for a specific confidence level rather than the model uncertainty for a particular prediction. A distribution, rather than a point value, is therefore obtained as the calibrated estimate. Because the posterior cannot be calculated analytically, we used stochastic variational inference (SVI) for estimation. SVI uses a variational distribution (often a Gaussian distribution) whose structure is simple to evaluate [35], and whose parameters are optimized to match the true posterior by maximizing the evidence lower bound (ELBO). We then sampled multiple sets of weights from the variational distribution and used them to construct a sample distribution of calibrated estimates for a new single input $\hat{p}^{*}$.

We adopted matrix and vector scaling, which are multiclass extensions of Platt scaling [36]. Let $z_i$ be the logit vector produced before the softmax layer for input $x_i$. Matrix scaling applies a linear transformation $W z_i + b$ to the logits:

$$\hat{q}_i = \max_{c} \ \sigma_{\mathrm{SM}}\left(W z_i + b\right)^{(c)}, \qquad \hat{y}_i' = \arg\max_{c} \ \left(W z_i + b\right)^{(c)}$$

where $\sigma_{\mathrm{SM}}$ is the softmax function. The parameters $W$ and $b$ were optimized with respect to the NLL on the validation set. Because the number of matrix scaling parameters grows quadratically with the number of classes $C$, vector scaling is used as a variant in which $W$ is constrained to be a diagonal matrix.
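Vector scaling, as described above, can be fitted by directly minimizing the validation NLL. The sketch below is a plain maximum-likelihood point estimate, not the Bayesian/SVI variant used in the study; the optimizer (SciPy's L-BFGS-B with numerical gradients) and the function names are our own illustrative assumptions. Matrix scaling would replace the vector `w` with a full C × C matrix.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import log_softmax


def fit_vector_scaling(logits, labels):
    """Fit vector scaling (diagonal W stored as a vector w, plus bias b) by
    minimizing the NLL of validation logits. Returns (w, b)."""
    logits = np.asarray(logits, dtype=float)
    n, C = logits.shape
    rows = np.arange(n)

    def nll(params):
        w, b = params[:C], params[C:]
        z = logits * w + b  # diagonal W: element-wise scaling
        return -log_softmax(z, axis=1)[rows, labels].mean()

    x0 = np.concatenate([np.ones(C), np.zeros(C)])  # identity mapping as start
    result = minimize(nll, x0, method="L-BFGS-B")
    return result.x[:C], result.x[C:]


def apply_vector_scaling(logits, w, b):
    """Return calibrated predicted classes and confidences."""
    z = np.asarray(logits, dtype=float) * w + b
    probs = np.exp(log_softmax(z, axis=1))
    return probs.argmax(axis=1), probs.max(axis=1)
```

Starting from the identity mapping guarantees that the fitted parameters never yield a higher validation NLL than the uncalibrated logits.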

Calibration Evaluation

In a recent study [37], the expected calibration error (ECE) and the maximum calibration error (MCE) were used as voting weights for basic classifiers; with probability calibration, the prediction error of the ensemble model decreased, and the ensemble performed better than simple averaging. The ECE and MCE are commonly used criteria for measuring NN calibration errors [34]. Let $B_m$ be the set of indices of samples whose predicted confidence falls into the interval $I_m = \left(\frac{m-1}{M}, \frac{m}{M}\right]$, $m = 1, \ldots, M$. The accuracy of $B_m$ is

$$\mathrm{acc}(B_m) = \frac{1}{|B_m|} \sum_{i \in B_m} \mathbb{1}\left(\hat{y}_i = y_i\right)$$

where $y_i$ and $\hat{y}_i$ are the true and predicted labels, respectively, for sample $i$. The average predicted confidence of bin $B_m$ can be defined as

$$\mathrm{conf}(B_m) = \frac{1}{|B_m|} \sum_{i \in B_m} \hat{p}_i$$

where $\hat{p}_i$ is the confidence of sample $i$.

The ECE takes the weighted average of the bins’ accuracy/confidence differences over $n$ samples:

$$\mathrm{ECE} = \sum_{m=1}^{M} \frac{|B_m|}{n} \left|\mathrm{acc}(B_m) - \mathrm{conf}(B_m)\right|$$

The MCE [34] is aimed at high-risk applications, where the maximum accuracy/confidence difference, which represents the worst-case scenario, is more important than the average:

$$\mathrm{MCE} = \max_{m \in \{1, \ldots, M\}} \left|\mathrm{acc}(B_m) - \mathrm{conf}(B_m)\right|$$
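The binned ECE and MCE defined above can be computed in a few lines. This minimal sketch assumes equal-width bins of the form ((m − 1)/M, m/M], skips empty bins (they contribute nothing to either metric), and uses 15 bins by default; the bin count and function name are illustrative choices, not values from the study.

```python
import numpy as np


def calibration_errors(confidences, predictions, labels, n_bins=15):
    """Return (ECE, MCE) using equal-width confidence bins ((m-1)/M, m/M]."""
    confidences = np.asarray(confidences, dtype=float)
    correct = (np.asarray(predictions) == np.asarray(labels)).astype(float)
    n = len(confidences)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece, mce = 0.0, 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (confidences > lo) & (confidences <= hi)
        if not in_bin.any():
            continue  # empty bins contribute nothing
        gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
        ece += in_bin.sum() / n * gap  # weight by the bin's share of samples
        mce = max(mce, gap)            # worst-case bin
    return ece, mce
```

For instance, two predictions at confidence 0.85 with one correct, plus two correct predictions at confidence 0.55, fall into two bins with gaps of 0.35 and 0.45, giving an ECE of 0.4 and an MCE of 0.45.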

Uncertainty Evaluation

Generally, the tolerance for uncertainty should be very low in COVID-19 detection. The predictive posterior distribution shows whether a network has high or low confidence in its decision for an input CXR image. Epistemic and aleatoric uncertainty are two distinct types of uncertainty that contribute to predictive uncertainty in deep learning [38]. Aleatoric uncertainty accounts for the noise already present in the observations, owing to class overlap and label, homoscedastic, and heteroscedastic noise, which cannot be eliminated even with more data. In CXR imaging, for example, it may arise from sensor noise caused by the random distribution of photons during scan capture. Epistemic (model) uncertainty accounts for uncertainty in the model parameters, which arises when the model does not capture all facets of the data or when training data are lacking. As the amount of training data increases, the epistemic uncertainty of the model decreases.

Prediction interval coverage probability (PICP) is a metric used for Bayesian models aimed at determining the quality of the uncertainty estimates, i.e., the probability that a sample’s true value is contained within the predictive interval. The mean prediction interval width (MPIW) is another metric used to measure the mean width of all prediction intervals (PIs) in order to evaluate the sharpness of uncertainty estimates.

A PI around the mean estimate can be used to express epistemic uncertainty. Assuming a normal distribution, we obtained quantile-based interval boundaries for a given level $\tau$ and checked, for each sample $n$, whether its observed accuracy falls inside the interval:

$$c_n = \begin{cases} 1, & \text{if } \hat{\mu}_n - z_{1-\tau/2}\, \hat{\sigma}_n \le \bar{a}_n \le \hat{\mu}_n + z_{1-\tau/2}\, \hat{\sigma}_n, \\ 0, & \text{otherwise}, \end{cases}$$

where $\bar{a}_n$ represents the observed precision of sample $n$ for a specific $\tau$, $\hat{\mu}_n$ and $\hat{\sigma}_n$ are the mean and standard deviation of the calibrated estimates for sample $n$, and $z_{1-\tau/2}$ is the $(1 - \tau/2)$ quantile of the standard normal distribution. The calibration model produces a PDF for each input with index $n$ out of $N$ samples, and the uncertainty is properly calibrated if the measured accuracies lie within the $100(1 - \tau)\%$ PI around the mean estimate $100(1 - \tau)\%$ of the time [35]. We can calculate the PICP from (25):

$$\mathrm{PICP} = \frac{1}{N} \sum_{n=1}^{N} c_n$$

The PICP is often used to assess calibrated regression, where the true target value is known. However, the true precision of a classification cannot be easily obtained. Thus, we applied a binning method over all $N$ available data points to estimate the accuracy of each instance. For perfect uncertainty calibration, PICP should equal $1 - \tau$; accordingly, the quality of the uncertainty estimates can be measured by the difference between PICP and $1 - \tau$. The MPIW, a complementary metric, averages the PI width for a given $\tau$ over all $N$ data points:

$$\mathrm{MPIW} = \frac{1}{N} \sum_{n=1}^{N} 2\, z_{1-\tau/2}\, \hat{\sigma}_n$$

For these two metrics, larger PICP values and smaller MPIW values are desirable. Using PICP and MPIW, we can assess both the quality of the calibration mapping and the epistemic uncertainty quantification. In our study, we propose using PICP as the ensemble voting weight.
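PICP and MPIW, as described above, can be estimated from sampled calibrated estimates. This is a sketch under several assumptions of ours: the samples are already available as an array (for example, drawn from a variational posterior over calibration parameters), intervals are mean ± z·std following the normal assumption in the text, and per-instance accuracy is estimated by equal-width confidence bins. Function names and the default bin count are hypothetical.

```python
import numpy as np
from scipy.stats import norm


def binned_accuracy(confidences, correct, n_bins=15):
    """Per-instance accuracy estimate: the accuracy of the confidence bin
    (equal-width bins (lo, hi] on [0, 1]) that each instance falls into."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    inner_edges = np.linspace(0.0, 1.0, n_bins + 1)[1:-1]
    bin_idx = np.digitize(confidences, inner_edges, right=True)  # 0 .. n_bins-1
    acc = np.zeros(n_bins)
    for b in range(n_bins):
        in_bin = bin_idx == b
        if in_bin.any():
            acc[b] = correct[in_bin].mean()
    return acc[bin_idx]


def picp_mpiw(q_samples, observed_acc, tau=0.05):
    """PICP and MPIW of 100(1 - tau)% prediction intervals.

    q_samples:    (n_draws, N) sampled calibrated estimates per instance
    observed_acc: (N,) observed (binned) accuracy per instance
    Intervals are mean +/- z * std, i.e. a normal distribution is assumed.
    """
    q_samples = np.asarray(q_samples, dtype=float)
    mu = q_samples.mean(axis=0)
    sigma = q_samples.std(axis=0, ddof=1)
    z = norm.ppf(1.0 - tau / 2.0)
    lower, upper = mu - z * sigma, mu + z * sigma
    covered = (observed_acc >= lower) & (observed_acc <= upper)
    return covered.mean(), (upper - lower).mean()
```

The gap `abs(picp - (1 - tau))` then quantifies miscalibration of the uncertainty estimates. Using each voter's PICP directly (or normalized to sum to one, which is our assumption) would give the PICP-based voting weights.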

3.4. Uncertain-CAM

Despite achieving significantly high accuracy in numerous detection and classification problems, CNNs have been considered a “black box” in recent years. Although CNN architectures, training processes, and feature extraction methodologies are extensively studied, it is still difficult for humans to understand how a network arrives at its classification and which features it relies on to make its decision. This is especially important in sectors such as the military and medicine, where the reasoning behind a decision matters. Explainable AI research has grown significantly owing to advances in deep learning and the need for reliable machine decisions. Grad-CAMs have been used extensively in medical imaging [39]. To produce class-discriminative saliency maps that highlight the crucial regions of a target image, Grad-CAM evaluates the gradients with respect to the feature maps of the final convolutional layer of a CNN model. To determine the target class weight of each filter, the gradients flowing back to the final convolutional layer are globally averaged. Applying a ReLU to the weighted combination of feature maps yields the Grad-CAM heat map. The class-discriminative saliency map for the target image class $c$ is expressed as

$$L^{c}_{\text{Grad-CAM}} = \mathrm{ReLU}\left(\sum_{k} \alpha^{c}_{k} A^{k}\right)$$

where the activation map of the $k$-th filter at spatial position $(i, j)$ is denoted as $A^{k}_{ij}$, and the ReLU retains only the features that have a positive influence on the target class. The $k$-th filter’s target class weights (importance weights) are calculated as follows:

$$\alpha^{c}_{k} = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^{c}}{\partial A^{k}_{ij}}$$

where $y^{c}$ is the probability of classifying the target category as $c$, and $Z$ is the number of pixels in the activation map. In this study, we propose using the quality of epistemic uncertainty quantification, measured by PICP, to improve the Grad-CAM output. By integrating the PICP, the target class weights of the $k$-th filter become

The class-discriminative saliency map based on the uncertainty of the target image class becomes

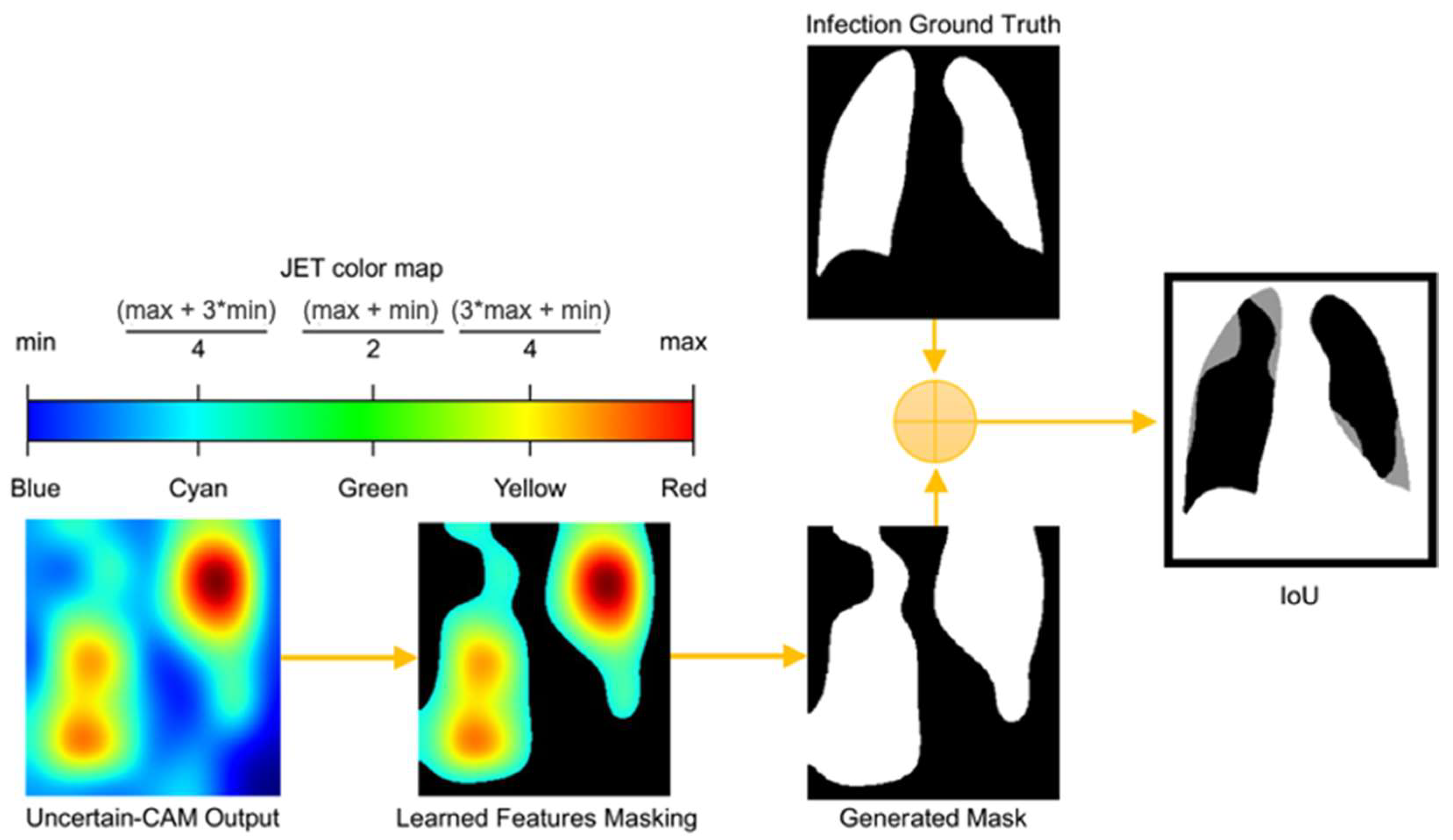

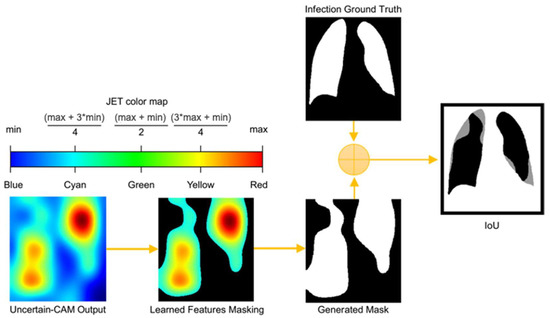
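The baseline Grad-CAM computation described in this section (globally averaged gradients as filter importance weights, then a ReLU over the weighted sum of feature maps) can be sketched in NumPy. This sketch covers standard Grad-CAM only; the PICP-based reweighting that defines Uncertain-CAM is not reproduced here. It assumes the activations and gradients have already been extracted, for example with `tf.GradientTape` in TensorFlow, in channels-last layout, and it rescales the map to [0, 1] for display, which is our choice.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map from last-conv-layer activations and gradients.

    activations: (H, W, K) feature maps A^k of the final convolutional layer
    gradients:   (H, W, K) gradients d y^c / d A^k for the target class c
    Returns an (H, W) map scaled to [0, 1].
    """
    # importance weight of each filter: gradients averaged over the Z = H * W pixels
    alpha = gradients.mean(axis=(0, 1))  # shape (K,)
    # weighted sum of feature maps over filters, followed by ReLU
    cam = np.tensordot(activations, alpha, axes=([2], [0]))  # shape (H, W)
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The resulting map is usually upsampled to the input resolution and rendered with the JET color map before being overlaid on the CXR.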

3.5. Uncertain-CAM Evaluation Metrics

For a statistical evaluation of the proposed method, we applied the intersection over union (IoU), also known as the Jaccard similarity coefficient, to compare the Uncertain-CAM output with the ground truth, as illustrated in Figure 2 and Algorithm 2. The IoU is a statistic used to gauge the similarity and diversity of sets and is commonly used as an evaluation metric for semantic image segmentation. It ranges between 0 and 1, where 0 indicates no overlap and 1 indicates a perfect match. Let $I$ be the output RGB image of Uncertain-CAM mapped onto the JET color map and $G$ be the binary ground-truth mask. We first converted $I$ to the HSV color space (hue, saturation, value). The hue channel represents the type of color, which makes it especially useful in image processing applications that require color-based object segmentation. Saturation ranges from unsaturated (shades of gray) to fully saturated (pure color). The value channel describes color brightness or intensity. Because the JET color map encodes feature importance for the class as a change from blue (not relevant) to red (most relevant), we can segment $I$ using lower and upper color bounds that retain only the activated features, producing a new binary mask $M$ that contains only the learned features, to which the IoU can be applied:

$$\mathrm{IoU}(M, G) = \frac{|M \cap G|}{|M \cup G|}$$

Figure 2.

Process of computing IoU from the Uncertain-CAM output and the ground truth. The generated heatmap is thresholded by color to produce a binary mask, which is compared with the ground-truth mask to compute the IoU, a measure of how well the generated mask aligns with the ground-truth mask.

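| Algorithm 2: Uncertain-CAM evaluation algorithm. |
|---|
| **Input:** Uncertain-CAM output images $I$; ground-truth masks $G$ |
| **Output:** IoU |
| Initialization; |
| **for** each image pair $(I, G)$ **do** |
| 1: Read $I$ and $G$ |
| 2: Convert $I$ to the HSV color space |
| 3: Set the color range boundaries |
| 4: Create a new binary mask $M$ |
| 5: Compute the IoU using (30) |
| **end for** |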
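The evaluation steps of Algorithm 2 can be sketched with the standard-library `colorsys` module and NumPy. The source does not state the exact color range boundaries, so `hue_max`, `min_sat`, and `min_val` below are hypothetical thresholds that keep the red-to-yellow (most relevant) part of a JET heatmap. The function names and the convention that two empty masks score an IoU of 1 are also our assumptions. OpenCV's `cv2.cvtColor` and `cv2.inRange` are a common, faster alternative for the same steps.

```python
import colorsys

import numpy as np


def heatmap_to_mask(rgb, hue_max=1.0 / 6.0, min_sat=0.5, min_val=0.5):
    """Binary mask of the 'hot' (red-to-yellow) pixels of a JET-colored heatmap.

    rgb: (H, W, 3) float array with values in [0, 1].
    hue_max, min_sat, min_val are illustrative thresholds (hypothetical values).
    """
    # colorsys works on single pixels; apply it to every RGB triple
    hsv = np.apply_along_axis(lambda p: colorsys.rgb_to_hsv(*p), 2, rgb)
    hue, sat, val = hsv[..., 0], hsv[..., 1], hsv[..., 2]
    # hue 0 = red, 1/6 = yellow, 1/2 = cyan, 2/3 = blue on colorsys' [0, 1) scale
    return (hue <= hue_max) & (sat >= min_sat) & (val >= min_val)


def iou(mask, ground_truth):
    """Intersection over union (Jaccard index) of two binary masks."""
    mask = np.asarray(mask, dtype=bool)
    gt = np.asarray(ground_truth, dtype=bool)
    union = np.logical_or(mask, gt).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as a perfect match
    return np.logical_and(mask, gt).sum() / union
```

Averaging `iou` over all test images gives a single explainability score that can be compared between Grad-CAM and Uncertain-CAM outputs.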

4. Results

4.1. Data Processing

Data preprocessing is a crucial stage in deep learning projects that cleans and enhances the input data. It helps a model generalize, particularly when the model is tested on datasets distinct from those used for training, and it further improves network performance by minimizing data noise and/or distortions. In our tests, we first normalized the data, rescaling all pixel values to [0, 1] (for 8-bit images spanning 0–255, this corresponds to a pixel-wise multiplication by 1/255), yielding a set of CXR images as follows:

$$x_{\text{norm}} = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x_{\max}$ and $x_{\min}$ are the maximum and minimum values in the input data, respectively, and $x_{\text{norm}}$ is the normalized data. Histogram equalization was used to improve the image contrast [40], and the images were then resized to 224 × 224 pixels before training started. Additionally, the data were augmented with random rotations of up to 25°, a zoom range of 0.2, and the fill mode set to nearest. Overfitting occurs when a statistical model fits its training data too closely; the model then cannot perform accurately on unseen data, which defeats the purpose of the algorithm [41]. Because data augmentation reduces overfitting, we used it to increase the generalization capacity of our model by introducing variations into the dataset.
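The normalization and contrast-enhancement steps can be sketched in NumPy as follows. This is an illustration under assumptions rather than the authors' code: histogram equalization is shown as the classic global CDF mapping for 8-bit grayscale images, and resizing and augmentation are omitted. The augmentation settings quoted above map naturally onto Keras's `ImageDataGenerator(rotation_range=25, zoom_range=0.2, fill_mode="nearest")`, although the text does not state which library was used.

```python
import numpy as np


def equalize_histogram(img):
    """Global histogram equalization of an 8-bit grayscale image."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]          # CDF value at the darkest occupied gray level
    if cdf_min == img.size:            # constant image: nothing to spread out
        return img.copy()
    # Map each gray level through the normalized CDF onto the full 0..255 range.
    lut = np.round((cdf - cdf_min) / (img.size - cdf_min) * 255.0)
    return np.clip(lut, 0, 255).astype(np.uint8)[img]


def min_max_normalize(img):
    """Rescale pixel values to [0, 1] as (x - x_min) / (x_max - x_min)."""
    img = np.asarray(img, dtype=float)
    x_min, x_max = img.min(), img.max()
    if x_max == x_min:
        return np.zeros_like(img)      # constant image: map everything to 0
    return (img - x_min) / (x_max - x_min)
```

For an 8-bit image whose values actually span 0 to 255, `min_max_normalize(img)` gives the same result as `img / 255.0`, which is why the two descriptions of the rescaling coincide.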

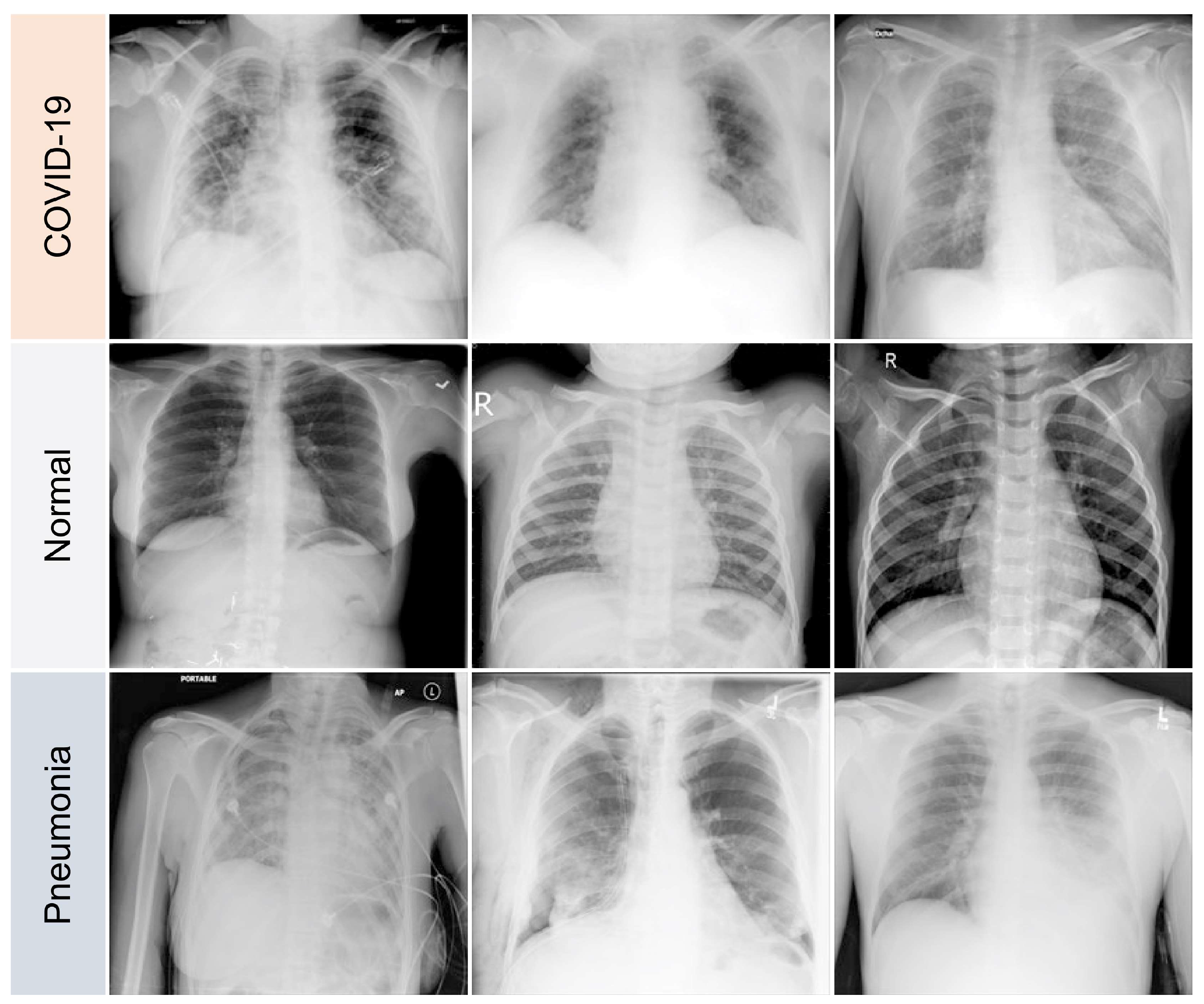

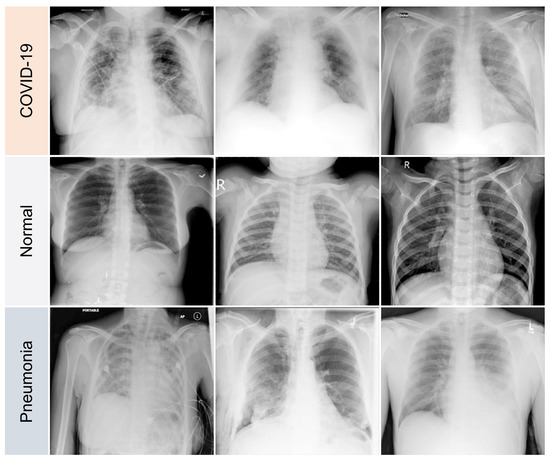

Figure 3.

Samples from the dataset used in the study.

Figure 3 shows the variations among a few COVID-19 pneumonia case images. In the CXRs of COVID-19 patients, the following principal features are typically observed [42]:

- GGOs.

- Crazy-paving pattern.

- Consolidation of the airspace.

- Thickening of bronchovascular bundles.

- Traction bronchiectasis.

Similarly, the following CXR features have been observed in pneumonia patients [43]:

- GGOs.

- Reticular opacities.

- Vascular thickening.

- Widespread distribution along the bronchovascular bundles.

- Bronchial wall thickening.

By contrast, isolated lobar or segmental consolidation without GGO, multiple small pulmonary nodules, a tree-in-bud pattern, pneumothorax, cavitation, and hilar lymphadenopathy, although often seen in other pneumonias, are uncommon in COVID-19 cases [42]. Alongside early diagnosis, treatment, and isolation, radiological imaging plays a crucial role in containing the outbreak. Chest radiography can reveal several distinctive lung abnormalities associated with COVID-19 infection, and deep learning algorithms are adept at detecting these COVID-19 lung manifestations, yielding high diagnostic accuracy.

4.2. Learning COVID

Both the COVID-19 classification and the explanations produced by the proposed approach were evaluated quantitatively. ResNet50 [44], Inception V3 [45], and VGG16 [46] were the base models employed in this study. The VGG16 CNN architecture was among the top entries in the 2014 ILSVRC (ImageNet) competition, placing first in localization and second in classification, and it remains a widely used visual model architecture. Its most distinctive feature is that it uses only 3 × 3 convolution filters with a stride of 1 and same padding, together with 2 × 2 max-pooling layers with a stride of 2, arranged in the same pattern throughout the architecture. It ends with three fully connected layers, the last of which feeds a softmax output. The 16 in VGG16 refers to its 16 layers with weights (13 convolutional and 3 fully connected). With approximately 138.4 million parameters, it is a sizable network. The ResNet50 CNN comprises 49 convolutional layers (not counting the projection shortcuts), a max-pooling layer, an average-pooling layer, and a fully connected output layer. The residual framework introduced by ResNets made it possible to train extremely deep neural networks, which can have hundreds or even thousands of layers and still perform well. On the ImageNet dataset, the image recognition model Inception v3 achieved an accuracy of approximately 78.1%. This model incorporates numerous ideas developed by various researchers over the years. It is built from symmetric and asymmetric building blocks, including convolutions, average pooling, max pooling, concatenations, dropouts, and fully connected layers. Inception networks have been shown to be computationally efficient in terms of both the number of parameters and resource cost (memory and other resources) [45].
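The VGG16 layer and parameter figures can be checked with a short count. The sketch assumes the standard ImageNet configuration (224 × 224 × 3 input, thirteen 3 × 3 convolution layers, and fully connected layers of 4096, 4096, and 1000 units); the classification head used for the three CXR classes in this study is not specified in this section and would change the fully connected total.

```python
# Standard VGG16 convolutional configuration: numbers are output channels,
# "M" marks a 2x2 max-pooling layer (stride 2), which has no parameters.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_parameter_count(in_channels=3, input_size=224, fc_units=(4096, 4096, 1000)):
    """Return (total trainable parameters, number of weight layers)."""
    params, weight_layers = 0, 0
    channels, size = in_channels, input_size
    for layer in VGG16_CONV:
        if layer == "M":
            size //= 2                               # pooling halves the spatial size
            continue
        params += channels * layer * 3 * 3 + layer   # 3x3 kernels plus one bias per filter
        channels = layer
        weight_layers += 1
    features = channels * size * size                # 512 * 7 * 7 = 25088 after flattening
    for units in fc_units:
        params += features * units + units           # dense weights plus biases
        features = units
        weight_layers += 1
    return params, weight_layers


total, depth = vgg16_parameter_count()
print(f"{depth} weight layers, {total:,} parameters")  # 16 weight layers, 138,357,544 parameters
```

About 14.7 million of these parameters sit in the convolutional layers and about 123.6 million in the fully connected layers, mostly in the first dense layer.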

We used 3-fold cross-validation for model training and evaluation. The data were split into three mutually exclusive subsets of equal size; in each iteration, two subsets were used as the training set and the third as the validation set. Three snapshot models were saved in each fold, resulting in nine snapshots across the three folds for each base model. The final evaluation of each base model was based on the average ensemble performance of its nine snapshots. Before the final weighted voting ensemble stage, each base model was calibrated, and its performance, calibration errors, confidence, and uncertainty were measured. All experiments were repeated ten times to reduce statistical variability.
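The training scheme described above (3-fold cross-validation with three snapshots per fold) can be sketched as follows. The learning-rate schedule is the cyclic cosine annealing of Huang et al. [31], under which a snapshot is saved at the end of each cycle, when the learning rate approaches zero. The iteration budget, initial learning rate, dataset size, and random seed are hypothetical, and the actual model training is left out.

```python
import math

import numpy as np


def cyclic_cosine_lr(t, total_iters, n_cycles, lr0):
    """Snapshot-ensemble learning rate at iteration t (1-indexed), after Huang et al. (2017)."""
    cycle_len = math.ceil(total_iters / n_cycles)
    position = (t - 1) % cycle_len                  # restarts at the beginning of each cycle
    return lr0 / 2.0 * (math.cos(math.pi * position / cycle_len) + 1.0)


def snapshot_iterations(total_iters, n_cycles):
    """Iterations at the end of each cycle, where a snapshot model is saved."""
    cycle_len = math.ceil(total_iters / n_cycles)
    return [min(c * cycle_len, total_iters) for c in range(1, n_cycles + 1)]


def k_fold_splits(n_samples, n_folds=3, seed=0):
    """Yield (train, validation) index arrays over mutually exclusive, near-equal folds."""
    folds = np.array_split(np.random.default_rng(seed).permutation(n_samples), n_folds)
    for k in range(n_folds):
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        yield train, folds[k]


# Hypothetical budget: 90 iterations per fold and 3 cycles -> 3 snapshots per fold.
snapshots = []
for fold, (train_idx, val_idx) in enumerate(k_fold_splits(n_samples=30)):
    for it in snapshot_iterations(total_iters=90, n_cycles=3):
        snapshots.append((fold, it))  # a real run would train on train_idx and save weights here
print(len(snapshots))  # 9 snapshot models for one base architecture
```

Because the learning rate restarts at its maximum after every snapshot, each cycle can move the model to a different local minimum, which is what makes the snapshots diverse enough to be worth ensembling.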

4.3. Performance Evaluation Metrics

During classification training, the choice of evaluation metric is crucial for discriminating among classifiers and selecting the best one [47]. To assess the efficacy of our method statistically, we considered the following measures: the area under the curve (AUC), a popular ranking metric; accuracy, the proportion of correct predictions over all evaluated samples; precision, the proportion of samples predicted as positive that are truly positive; recall, the proportion of truly positive samples that are correctly predicted; and the F1-score, the harmonic mean of precision and recall [47]. Let TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. The performance metrics are then calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
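These metrics can be computed from a confusion matrix, as in the following sketch. For a three-class setting (COVID-19, normal, pneumonia), it treats each class one-vs-rest to obtain per-class TP, FP, and FN, and it reports overall accuracy as the fraction of correctly classified samples. AUC, which requires predicted scores rather than hard labels, is omitted. The class indices and example labels are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def per_class_metrics(cm):
    """Overall accuracy plus one-vs-rest precision, recall, and F1 for each class."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp   # predicted as class k but belonging to another class
    fn = cm.sum(axis=1) - tp   # belonging to class k but predicted as another class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1


cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
accuracy, precision, recall, f1 = per_class_metrics(cm)
```

In this toy example, class 1 has precision 2/3 and recall 1.0, giving an F1-score of 0.8, and the overall accuracy is 4/6. Undefined ratios (no predicted or no actual samples for a class) are reported as 0, which is one common convention.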

4.4. Performance Evaluation

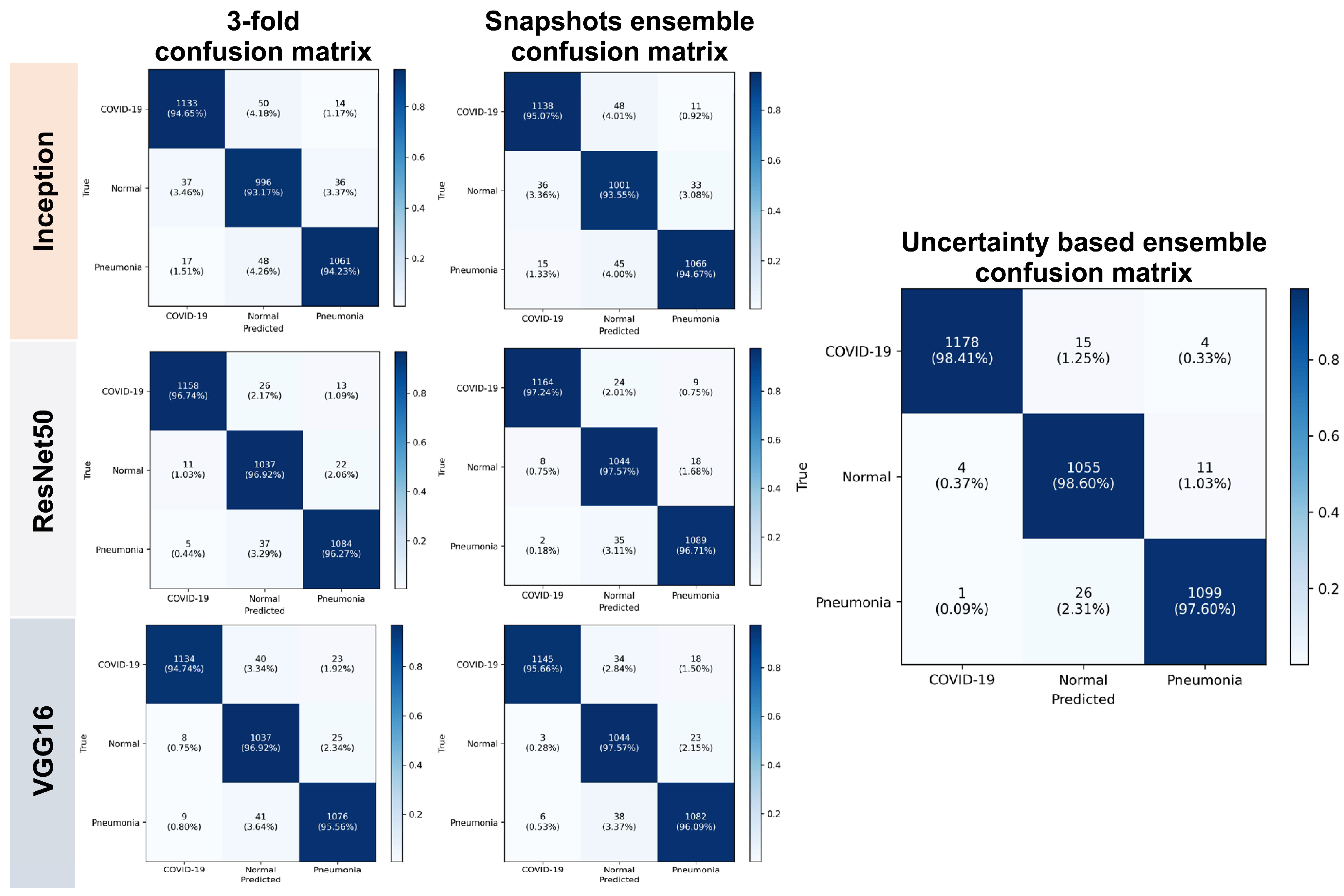

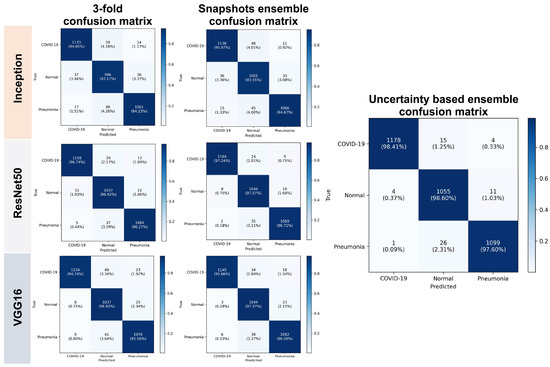

In this section, we evaluate the proposed method statistically on an unseen (test) dataset. Tables 1 and 2 show the calibration results and the performance of the voters (base models), respectively, and Tables 3 and 4 list the calibration results and the performance of the ensemble models under different voting strategies. Table 5 presents the per-class performance of both the base classifiers and the ensemble models. Lower expected calibration error (ECE) and maximum calibration error (MCE) indicate better calibration; for the uncertainty quality metrics, we aimed to maximize the prediction interval coverage probability (PICP) and minimize the mean prediction interval width (MPIW). The errors observed before calibration highlight the overconfidence of CNNs. On unseen data, the ensemble models outperform the individual models, and the proposed weighted-voting ensemble outperforms the other voting strategies and generalizes better.
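For reference, ECE and MCE are commonly computed by binning predictions by confidence and comparing each bin's accuracy with its mean confidence (Guo et al. [36]): ECE is the sample-weighted average of these gaps and MCE is the largest gap. The sketch below assumes equal-width bins, with 10 bins as a hypothetical default; the binning behind Tables 1 and 3 is not restated in this section.

```python
import numpy as np


def calibration_errors(confidences, predictions, labels, n_bins=10):
    """Return (ECE, MCE) using equal-width confidence bins over (0, 1]."""
    confidences = np.asarray(confidences, dtype=float)
    correct = (np.asarray(predictions) == np.asarray(labels)).astype(float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    n = len(confidences)
    ece, mce = 0.0, 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (confidences > lo) & (confidences <= hi)
        if not in_bin.any():
            continue  # empty bins contribute nothing
        gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
        ece += in_bin.sum() / n * gap   # weight the gap by the bin's share of samples
        mce = max(mce, gap)
    return ece, mce


# Two predictions at 95% confidence (one wrong) and two at 65% (both right).
ece, mce = calibration_errors([0.95, 0.95, 0.65, 0.65], [0, 1, 1, 1], [0, 0, 1, 1])
```

Here the 95% bin is overconfident (accuracy 0.5, gap 0.45) and the 65% bin is underconfident (accuracy 1.0, gap 0.35), so ECE = 0.5 × 0.45 + 0.5 × 0.35 = 0.40 and MCE = 0.45.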

Table 1.

Calibration (in %) of voters on unseen data.

Table 2.

Performance (in %) of voters on unseen data.

Table 3.

Calibration (in %) of the ensembled models on unseen data.

Table 4.

Performance (in %) of ensembled models on unseen data.

Table 5.

Performance of the ensembled models per class.

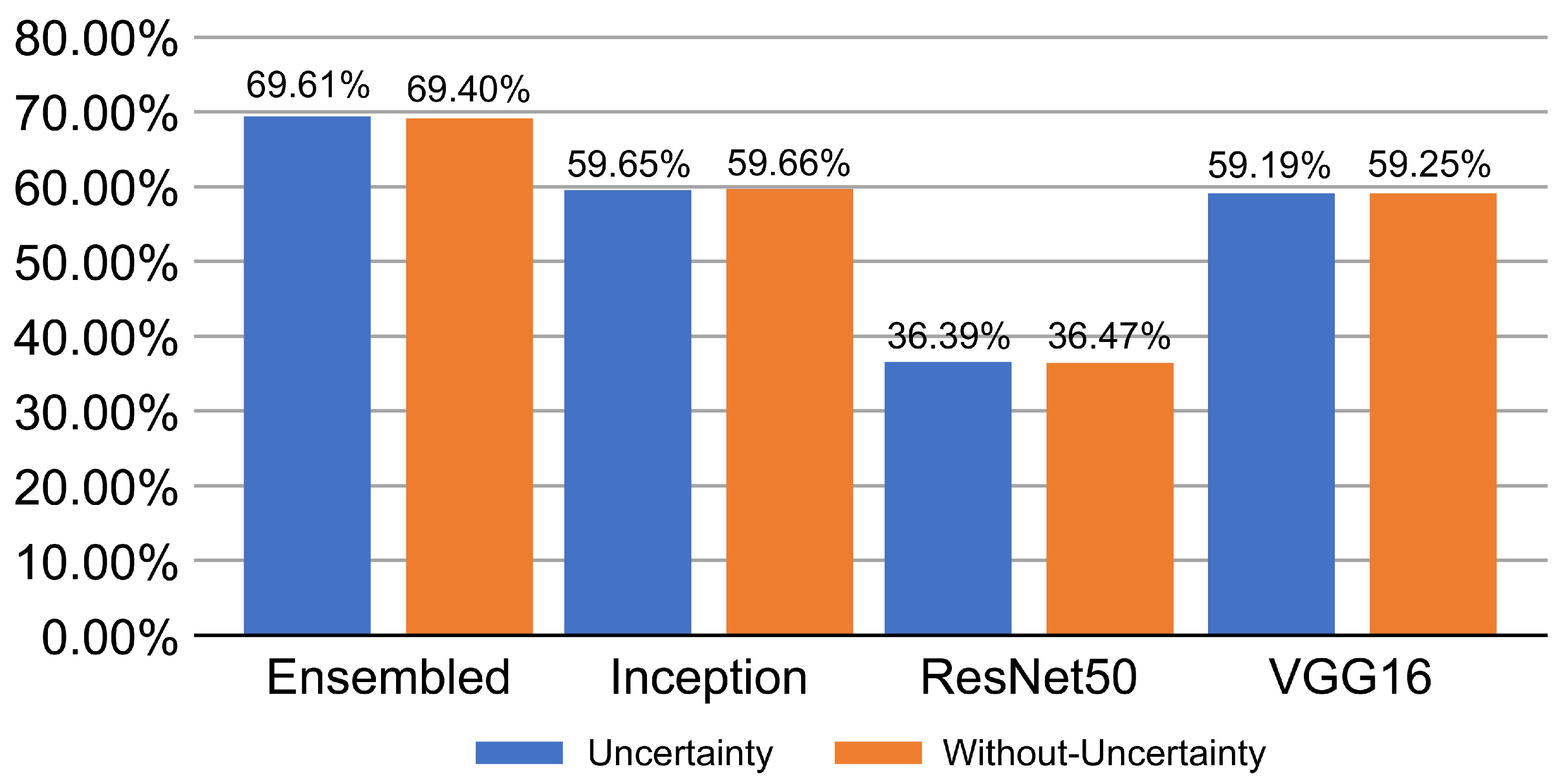

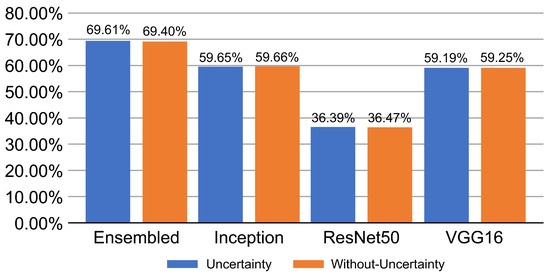

Figure 4 shows the performance of the proposed network on the unseen data and compares the models at different stages of the proposed training scheme. The snapshot ensembles outperform simple cross-validation, and the PICP-based ensemble performs best.
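The two uncertainty-quality metrics and the weighted vote can be sketched as follows. PICP is the fraction of targets that fall inside their prediction intervals, and MPIW is the mean interval width; in the proposed scheme, each base model's PICP serves as its voting weight. How the intervals are derived from the snapshot predictions is part of the method description and is not reproduced here, so `picp_mpiw` takes precomputed bounds. Normalizing the weights to sum to one and fusing by soft (probability-averaging) voting are assumptions of this sketch, and the example numbers are hypothetical.

```python
import numpy as np


def picp_mpiw(targets, lower, upper):
    """Prediction interval coverage probability and mean prediction interval width."""
    targets = np.asarray(targets, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    covered = (targets >= lower) & (targets <= upper)
    return covered.mean(), (upper - lower).mean()


def weighted_soft_vote(probs, weights):
    """probs: (n_models, n_samples, n_classes); weights: one score per model (e.g. PICP)."""
    probs = np.asarray(probs, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                         # normalize so the fused rows remain probabilities
    fused = np.tensordot(w, probs, axes=1)  # weighted sum over models -> (n_samples, n_classes)
    return fused, fused.argmax(axis=1)


picp, mpiw = picp_mpiw([1, 2, 3, 4], lower=[0, 2.5, 2, 3], upper=[2, 3, 4, 5])
# Model A (PICP 0.9) leans toward class 0; model B (PICP 0.3) strongly favors class 1.
fused, labels = weighted_soft_vote([[[0.6, 0.4]], [[0.1, 0.9]]], weights=[0.9, 0.3])
```

With these hypothetical inputs, three of the four targets are covered (PICP = 0.75, MPIW = 1.625), and the fused probabilities are [0.475, 0.525]: model B's confident vote still tips the decision to class 1 despite its lower weight.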

Figure 4.

Performance of the proposed network on unseen data.

4.5. Explaining COVID-19

Deep CNNs are described as “black boxes” because they are not inherently interpretable. Accordingly, as emphasized in a global statement on the ethics of AI in radiology, transparency, interpretability, and explainability are required to establish trust between the patient and the provider. Any researcher or physician who deploys such a system should be able to understand, by reading its explanations, how the model reached a specific conclusion regarding the presence or absence of COVID-19 infection. Seeking explainable machine learning models is not only ethically right but also allows researchers to confirm that a model is free of data leakage and unintended bias.

Table 6 shows sample Grad-CAM heatmap explanations from each model for true-positive COVID-19 CXRs (positive images classified as positive). Dark red regions mark the information the model relies on most when making its decision, blue marks the least important regions, and the ground truth shows the annotations of specialized physicians. The proposed Grad-CAM ensemble combines the base models' explanations with equal (average) weights, whereas the proposed Uncertain-CAM uses the uncertainty of each base model as its weight. Figure 5 compares the IoU scores between the ground truth and the explanations obtained from single models, the ensemble model, and Uncertain-CAM. It shows that our proposed method achieves higher scores than single-model explanations; a higher score indicates closer agreement with the ground truth annotated by specialized physicians.
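The difference between the averaged Grad-CAM ensemble and Uncertain-CAM can be sketched as a weighted sum of per-model heatmaps. In this sketch each model's map is first min-max normalized to [0, 1] (an assumption, so that no model dominates through scale alone), and weights are assumed to be larger for more trustworthy models, as with PICP. Passing no weights gives the equal-weight average; passing per-model uncertainty-derived scores gives an Uncertain-CAM-style map. Computing the Grad-CAM maps themselves [39] is outside the scope of this sketch, and the weights in the example are hypothetical.

```python
import numpy as np


def fuse_cams(cams, weights=None):
    """Fuse per-model heatmaps of shape (n_models, H, W) into one (H, W) map."""
    cams = np.asarray(cams, dtype=float)
    # Min-max normalize each model's map independently to [0, 1].
    mins = cams.min(axis=(1, 2), keepdims=True)
    maxs = cams.max(axis=(1, 2), keepdims=True)
    cams = (cams - mins) / np.where(maxs > mins, maxs - mins, 1.0)
    if weights is None:
        weights = np.ones(len(cams))        # plain averaging, as in the Grad-CAM ensemble
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                         # convex combination keeps the map in [0, 1]
    return np.tensordot(w, cams, axes=1)


cam_a = np.array([[0.0, 2.0]])   # model A highlights the right pixel
cam_b = np.array([[5.0, 1.0]])   # model B highlights the left pixel
averaged = fuse_cams([cam_a, cam_b])                  # equal weights
weighted = fuse_cams([cam_a, cam_b], weights=[3, 1])  # model A trusted three times more
```

With equal weights the two disagreeing models cancel out ([0.5, 0.5]); weighting model A more heavily shifts the fused map toward its region ([0.25, 0.75]), which is the effect uncertainty-based weighting is meant to achieve.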

Table 6.

Explanations of COVID-19-positive X-rays classified as positive.

Figure 5.

IoU scores with and without uncertainty.

5. Discussion

Recently, COVID-19 detection from X-rays has been studied extensively by researchers across the world. Although various approaches have achieved outstanding results in medical imaging tasks, most of these models suffer from high epistemic uncertainty owing to the small amount of available data; they tend to be overconfident and have not been deployed because of their lack of reliability, risk of erroneous decisions, and poor generalization to unseen data [48]. In our study, the base models differed in performance and feature-extraction capability, yet the proposed ensemble structure learned better than any of them alone, further demonstrating its efficiency. Table 7 compares our approach with currently employed strategies. Our approach achieved state-of-the-art performance for both classification and explanation compared with other studies on the same dataset [62,63]. This superior performance may be partly attributable to the large, high-quality set of 33,000 CXR images, so comparisons with studies that used different datasets may be unfair. Ensemble learning proved more reliable, not only in terms of accuracy but also in reducing CNN overconfidence. Although CNN explainability still has many shortcomings, the ensemble approach showed great potential to produce a more accurate and meaningful visualization of what was actually learned. Calibration and uncertainty quantification not only improve the ensemble prediction but also make the output more reliable.

Table 7.

Performance of our proposed method compared to state-of-the-art methods.

While the results are encouraging, our analysis pipeline has certain limitations. First, we used a single benchmark dataset to train and test our proposed method. To improve the aleatoric uncertainty evaluation and further strengthen the robustness of our technique, external test (unseen) datasets from various centers should ideally be used. Second, our methodology used only CXR images as inputs. Clinical practice frequently draws on multiple data sources, including clinical biomarkers and, occasionally, chest CT scans, which can offer more granular views of damaged lungs; integrating multiple inputs could therefore provide a more robust prediction at the patient level. Third, our system uses three standard base models; investigating the number and architecture of the base models should provide further insight into both the accuracy and the explanations of our proposed system. Finally, deep learning has great potential in medicine but remains opaque, as we cannot yet fully explain what a model actually learns; reliable explanation tools are therefore indispensable.

6. Conclusions

Driven by significant advances in CNN technology, previous studies in computational medical imaging have investigated the applicability of CNNs to images produced by CT, MRI, and X-rays. Existing methodologies primarily concentrate on achieving high accuracy without adequately explaining what CNNs actually learn or addressing fundamental CNN problems, such as overconfident predictions and uncertainty evaluation, which are crucial in medical diagnosis. In this study, we propose a novel uncertainty-based weighted average ensemble method that combines the confidence scores of various CNN models to improve their effectiveness for both COVID-19 classification and explanation. The proposed methodology uses uncertainty to make CNN predictions and their explanations more robust and trustworthy, providing both high classification accuracy and more insightful justifications. The results demonstrated the capacity of the proposed framework to correctly detect COVID-19 cases in a cohort of 33,000 CXR images. In our comparisons, the proposed approach outperformed existing deep-learning-based systems for COVID-19 classification in terms of accuracy and trustworthiness. With further validation, it could be used in clinical applications to help front-line medical staff diagnose COVID-19 accurately and quickly. Future research will extend our system to combine several inputs, such as clinical indicators and other imaging modalities (e.g., chest CT), to produce more thorough clinical and visual markers that can help doctors provide more effective and personalized treatment. Furthermore, we will test our model on a variety of datasets in future studies.

Author Contributions

Conceptualization, W.A.; Methodology, W.A.; Software, W.A.; Validation, W.A. and S.S.; Formal Analysis, W.A.; Investigation, W.A.; Resources, W.A. and S.S.; Data Curation, W.A.; Writing–Original Draft Preparation, W.A.; Writing–Review & Editing, W.A. and S.S.; Visualization, W.A.; Supervision, S.S.; Project Administration, W.A. and S.S.; Funding Acquisition, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available at https://www.kaggle.com/datasets/anasmohammedtahir/covidqu (accessed on 20 February 2022).

Acknowledgments

The authors would like to thank the Saudi Arabian Ministry of Higher Education. We also thank the Editor and the anonymous Reviewers for their time and effort. The authors would also like to give special thanks to all the participants in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Coronavirus Research is Being Published at a Furious Pace. Available online: https://www.economist.com/graphic-detail/2020/03/20/coronavirus-research-is-being-published-at-a-furious-pace (accessed on 31 May 2022).

2. Mahmoud, G.M.; Majigo, M.V.; Njiro, B.J.; Mawazo, A. Detection Profile of SARS-CoV-2 Using RT-PCR in Different Types of Clinical Specimens: A Systematic Review and Meta-Analysis. J. Med. Virol. 2020, 93, 719–725.

3. Brihn, A.; Chang, J.; Yong, K.O.; Balter, S.; Terashita, D.; Rubin, Z.; Yeganeh, N. Diagnostic Performance of an Antigen Test with RT-PCR for the Detection of SARS-CoV-2 in a Hospital Setting—Los Angeles County, California, June–August 2020. MMWR. Morb. Mortal. Wkly. Rep. 2021, 70, 702–706.

4. Rehman, A.; Saba, T.; Tariq, U.; Ayesha, N. Deep Learning-Based COVID-19 Detection Using CT and X-Ray Images: Current Analytics and Comparisons. IT Prof. 2021, 23, 63–68.

5. Hassaballah, M.; Awad, A.I. Deep Learning in Computer Vision: Principles and Applications; CRC Press: Boca Raton, FL, USA; Taylor and Francis: Boca Raton, FL, USA, 2020.

6. Ciaparrone, G.; Luque Sánchez, F.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep Learning in Video Multi-Object Tracking: A Survey. Neurocomputing 2020, 381, 61–88.

7. Kamra, A. A Survey on Face Analysis and Its Applications (Face Recognition & Facial Age Estimation). Turk. J. Comput. Math. Educ. 2021, 12, 462–466.

8. Kasa, K.; Burns, D.; Goldenberg, M.G.; Selim, O.; Whyne, C.; Hardisty, M. Multi-Modal Deep Learning for Assessing Surgeon Technical Skill. Sensors 2022, 22, 7328.

9. Ulhaq, A.; Born, J.; Khan, A.; Gomes, D.P.S.; Chakraborty, S.; Paul, M. COVID-19 Control by Computer Vision Approaches: A Survey. IEEE Access 2020, 8, 179437–179456.

10. Subramanian, N.; Elharrouss, O.; Al-Maadeed, S.; Chowdhury, M. A Review of Deep Learning-Based Detection Methods for COVID-19. Comput. Biol. Med. 2022, 143, 105233.

11. Das, D.; Santosh, K.C.; Pal, U. Truncated Inception Net: COVID-19 Outbreak Screening Using Chest X-Rays. Phys. Eng. Sci. Med. 2020, 43, 915–925.

12. Tahir, A.M.; Chowdhury, M.E.H.; Qiblawey, Y.; Khandakar, A.; Rahman, T.; Kiranyaz, S.; Khurshid, U.; Ibtehaz, N.; Mahmud, S.; Ezeddin, M. COVID-QU [Data Set]. Kaggle. 2021. Available online: https://www.kaggle.com/datasets/cf77495622971312010dd5934ee91f07ccbcfdea8e2f7778977ea8485c1914df (accessed on 20 February 2022).

13. Sudhan, M.B.; Sinthuja, M.; Pravinth Raja, S.; Amutharaj, J.; Charlyn Pushpa Latha, G.; Sheeba Rachel, S.; Anitha, T.; Rajendran, T.; Waji, Y.A. Segmentation and Classification of Glaucoma Using U-Net with Deep Learning Model. J. Healthc. Eng. 2022, 2022, 1601354.

14. Nayak, D.R.; Padhy, N.; Mallick, P.K.; Bagal, D.K.; Kumar, S. Brain Tumour Classification Using Noble Deep Learning Approach with Parametric Optimization through Metaheuristics Approaches. Computers 2022, 11, 10.

15. Largent, A.; De Asis-Cruz, J.; Kapse, K.; Barnett, S.D.; Murnick, J.; Basu, S.; Andersen, N.; Norman, S.; Andescavage, N.; Limperopoulos, C. Automatic Brain Segmentation in Preterm Infants with Post-Hemorrhagic Hydrocephalus Using 3D Bayesian U-Net. Hum. Brain Mapp. 2022, 43, 1895–1916.

16. Fei-Fei, L.; Deng, J.; Li, K. ImageNet: Constructing a Large-Scale Image Database. J. Vis. 2010, 9, 1037.

17. Ozturk, T.; Talo, M.; Yildirim, E.A.; Baloglu, U.B.; Yildirim, O.; Rajendra Acharya, U. Automated Detection of COVID-19 Cases Using Deep Neural Networks with X-Ray Images. Comput. Biol. Med. 2020, 121, 103792.

18. Polat, Ç.; Karaman, O.; Karaman, C.; Korkmaz, G.; Balcı, M.C.; Kelek, S.E. COVID-19 Diagnosis from Chest X-Ray Images Using Transfer Learning: Enhanced Performance by Debiasing Dataloader. J. X-ray. Sci. Technol. 2021, 29, 19–36.

19. Maheshwari, S.; Sharma, R.R.; Kumar, M. LBP-Based Information Assisted Intelligent System for COVID-19 Identification. Comput. Biol. Med. 2021, 134, 104453.

20. Chakraborty, S.; Murali, B.; Mitra, A.K. An Efficient Deep Learning Model to Detect COVID-19 Using Chest X-Ray Images. Int. J. Environ. Res. Public Health 2022, 19, 2013.

21. Chowdhury, N.K.; Kabir, M.A.; Rahman, M.M.; Rezoana, N. ECOVNet: A Highly Effective Ensemble Based Deep Learning Model for Detecting COVID-19. PeerJ Comput. Sci. 2021, 7, e551.

22. Chaudhary, S.; Qiang, Y. Ensemble deep learning method for Covid-19 detection via chest X-rays. In 2021 Ethics and Explainability for Responsible Data Science (EE-RDS); IEEE: New York, NY, USA, 2021; pp. 1–3.

23. Shamsi, A.; Asgharnezhad, H.; Jokandan, S.S.; Khosravi, A.; Kebria, P.M.; Nahavandi, D.; Nahavandi, S.; Srinivasan, D. An Uncertainty-Aware Transfer Learning-Based Framework for COVID-19 Diagnosis. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1408–1417.

24. Calderon-Ramirez, S.; Yang, S.; Moemeni, A.; Colreavy-Donnelly, S.; Elizondo, D.A.; Oala, L.; Rodriguez-Capitan, J.; Jimenez-Navarro, M.; Lopez-Rubio, E.; Molina-Cabello, M.A. Improving Uncertainty Estimation with Semi-Supervised Deep Learning for COVID-19 Detection Using Chest X-Ray Images. IEEE Access 2021, 9, 85442–85454.

25. Dong, S.; Yang, Q.; Fu, Y.; Tian, M.; Zhuo, C. RCoNet: Deformable Mutual Information Maximization and High-Order Uncertainty-Aware Learning for Robust COVID-19 Detection. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3401–3411.

26. Rahman, T.; Khandakar, A.; Qiblawey, Y.; Tahir, A.; Kiranyaz, S.; Abul Kashem, S.B.; Islam, M.T.; Al Maadeed, S.; Zughaier, S.M.; Khan, M.S.; et al. Exploring the Effect of Image Enhancement Techniques on COVID-19 Detection Using Chest X-ray Images. Comput. Biol. Med. 2021, 132, 104319.

27. Mohammed, K. Automated Detection of Covid-19 Coronavirus Cases Using Deep Neural Networks with CT Images. Al-Azhar Univ. J. Virus Res. Stud. 2020, 2, 1–12.

28. Paul, A.; Basu, A.; Mahmud, M.; Kaiser, M.S.; Sarkar, R. Inverted Bell-Curve-Based Ensemble of Deep Learning Models for Detection of COVID-19 from Chest X-Rays. Neural Comput. Appl. 2022, 5, 1–15.

29. Shahhosseini, M.; Hu, G.; Pham, H. Optimizing Ensemble Weights and Hyperparameters of Machine Learning Models for Regression Problems. Mach. Learn. Appl. 2022, 7, 100251.

30. Sun, J.; Li, H. Listed Companies’ Financial Distress Prediction Based on Weighted Majority Voting Combination of Multiple Classifiers. Expert Syst. Appl. 2008, 35, 818–827.

31. Huang, G.; Li, Y.; Pleiss, G.; Liu, Z.; Hopcroft, J.E.; Weinberger, K.Q. Snapshot ensembles: Train 1, get m for free. arXiv 2017, arXiv:1704.00109.

32. Perrone, M.P.; Cooper, L.N.; National Science Foundation, U.S. When Networks Disagree: Ensemble Methods for Hybrid Neural Networks; U.S. Army Research Office: Research Triangle Park, NC, USA, 1992.

33. Boyd, S. Convex Optimization (9780521833783); Cambridge University Press: Cambridge, UK, 2004.

34. Liang, G.; Zhang, Y.; Wang, X.; Jacobs, N. Improved Trainable Calibration Method for Neural Networks on Medical Imaging Classification. arXiv 2020, arXiv:2009.04057.

35. Küppers, F.; Kronenberger, J.; Schneider, J.; Haselhoff, A. Bayesian Confidence Calibration for Epistemic Uncertainty Modelling. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 466–472.

36. Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the International Conference on Machine Learning PMLR, Sydney, Australia, 6–11 August 2017.

37. Fan, S.; Zhao, Z.; Yu, H.; Wang, L.; Zheng, C.; Huang, X.; Yang, Z.; Xing, M.; Lu, Q.; Luo, Y. Applying Probability Calibration to Ensemble Methods to Predict 2-Year Mortality in Patients with DLBCL. BMC Med. Informatics Decis. Mak. 2021, 21, 14.

38. Kendall, A.; Gal, Y. What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision? Adv. Neural Inf. Process. Syst. 2017, 30.

39. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

40. Cheng, H.D.; Shi, X.J. A Simple and Effective Histogram Equalization Approach to Image Enhancement. Digit. Signal Process. 2004, 14, 158–170.

41. Yang, L.; Wang, S.-H.; Zhang, Y.-D. EDNC: Ensemble Deep Neural Network for COVID-19 Recognition. Tomography 2022, 8, 869–890.

42. Shi, H.; Han, X.; Jiang, N.; Cao, Y.; Alwalid, O.; Gu, J.; Fan, Y.; Zheng, C. Radiological Findings from 81 Patients with COVID-19 Pneumonia in Wuhan, China: A Descriptive Study. Lancet Infect. Dis. 2020, 20, 425–434.

43. Bai, H.X.; Hsieh, B.; Xiong, Z.; Halsey, K.; Choi, J.W.; Tran, T.M.L.; Pan, I.; Shi, L.-B.; Wang, D.-C.; Mei, J.; et al. Radiologists’ performance in differentiating COVID-19 from viral pneumonia on chest CT. Radiology 2020, 296, 200823.

44. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–22 June 2017; pp. 5987–5995.

45. Wu, K.; Xiao, J.; Ni, L.M. Rethinking the Architecture Design of Data Center Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

46. Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep Face Recognition, Proceedings of the British Machine Vision Conference (BMVC), Swansea, UK, 7–11 September 2015; BMVA Press: London, UK, 2015; pp. 1–12.

47. Hossin, M.; Sulaiman, M.N. A Review on Evaluation Metrics for Data Classification Evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 1–11.

48. Zhou, S.K.; Greenspan, H.; Davatzikos, C.; Duncan, J.S.; van Ginneken, B.; Madabhushi, A.; Prince, J.L.; Rueckert, D.; Summers, R.M. A Review of Deep Learning in Medical Imaging: Imaging Traits, Technology Trends, Case Studies with Progress Highlights, and Future Promises. Proc. IEEE 2021, 109, 820–838.

49. Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-Ray Images. Sci. Rep. 2020, 10, 19549.

50. Khan, A.I.; Shah, J.L.; Bhat, M.M. CoroNet: A Deep Neural Network for Detection and Diagnosis of COVID-19 from Chest X-Ray Images. Comput. Methods Programs Biomed. 2020, 196, 105581.

51. Makris, A.; Kontopoulos, I.; Tserpes, K. COVID-19 detection from chest X-Ray images using Deep Learning and Convolutional Neural Networks. In Proceedings of the 11th Hellenic Conference on Artificial Intelligence, New York, NY, USA, 2–4 September 2020; pp. 60–66.

52. Luz, E.; Silva, P.; Silva, R.; Silva, L.; Guimarães, J.; Miozzo, G.; Moreira, G.; Menotti, D. Towards an Effective and Efficient Deep Learning Model for COVID-19 Patterns Detection in X-Ray Images. Res. Biomed. Eng. 2021, 38, 149–162.

53. Manokaran, J.; Zabihollahy, F.; Hamilton-Wright, A.; Ukwatta, E. Detection of COVID-19 from chest x-ray images using transfer learning. J. Med. Imaging 2021, 8, 017503.

54. Monshi, M.M.A.; Poon, J.; Chung, V.; Monshi, F.M. CovidXrayNet: Optimizing Data Augmentation and CNN Hyperparameters for Improved COVID-19 Detection from CXR. Comput. Biol. Med. 2021, 133, 104375.

55. Pham, T.D. Classification of COVID-19 Chest X-Rays with Deep Learning: New Models or Fine Tuning? Health Inf. Sci. Syst. 2020, 9, 2.

56. Abdar, M.; Salari, S.; Qahremani, S.; Lam, H.K.; Karray, F.; Hussain, S.; Nahavandi, S. Uncertaintyfusenet: Robust Uncertainty-aware Hierarchical Feature Fusion with Ensemble Monte Carlo Dropout for COVID-19 Detection. arXiv 2021, arXiv:2105.08590.

57. Aslan, M.F.; Sabanci, K.; Durdu, A.; Unlersen, M.F. COVID-19 Diagnosis Using State-of-The-Art CNN Architecture Features and Bayesian Optimization. Comput. Biol. Med. 2022, 142, 105244.

58. Karim, A.M.; Kaya, H.; Alcan, V.; Sen, B.; Hadimlioglu, I.A. New Optimized Deep Learning Application for COVID-19 Detection in Chest X-ray Images. Symmetry 2022, 14, 1003.

59. Saxena, A.; Singh, S.P. A Deep Learning Approach for the Detection of COVID-19 from Chest X-Ray Images Using Convolutional Neural Networks. Adv. Mach. Learn. Artif. Intell. 2022, 3, 52–65.

60. Banerjee, A.; Bhattacharya, R.; Bhateja, V.; Singh, P.K.; Lay-Ekuakille, A.; Sarkar, R. COFE-Net: An Ensemble Strategy for Computer-Aided Detection for COVID-19. Measurement 2022, 187, 110289.

61. Gour, M.; Jain, S. Uncertainty-Aware Convolutional Neural Network for COVID-19 X-Ray Images Classification. Comput. Biol. Med. 2022, 140, 105047.

62. Ibrokhimov, B.; Kang, J.-Y. Deep Learning Model for COVID-19-Infected Pneumonia Diagnosis Using Chest Radiography Images. BioMedInformatics 2022, 2, 654–670.

63. Constantinou, M.; Exarchos, T.; Vrahatis, A.G.; Vlamos, P. COVID-19 Classification on Chest X-Ray Images Using Deep Learning Methods. Int. J. Environ. Res. Public Health 2023, 20, 2035.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).