This section highlights the important discoveries, trends, and knowledge gaps identified in prior research through an examination of the body of literature on skin diseases. By integrating the collective knowledge from a variety of sources, this study sought to provide a thorough overview of the present understanding of the topic and to highlight prospective directions for further research.

5.1. Machine Learning and Deep Learning in Skin Disease Classification

Skin diseases and skin cancer pose significant health concerns worldwide. Early detection and accurate classification of these conditions are crucial for effective treatment and improved patient outcomes. In recent years, the field of dermatology has witnessed remarkable advancements in the development of automated systems for skin disease classification. These systems leverage the power of artificial intelligence (AI) and machine learning techniques to analyze dermatological images and provide reliable diagnoses. The classification of skin diseases and skin cancer traditionally relied on manual examination and subjective interpretation by dermatologists. However, the subjective nature of this process often led to inconsistencies and errors in diagnosis. With the advent of computer-aided diagnosis (CAD) systems, the dermatology community has gained access to powerful tools that can enhance diagnostic accuracy and assist healthcare professionals in decision-making.

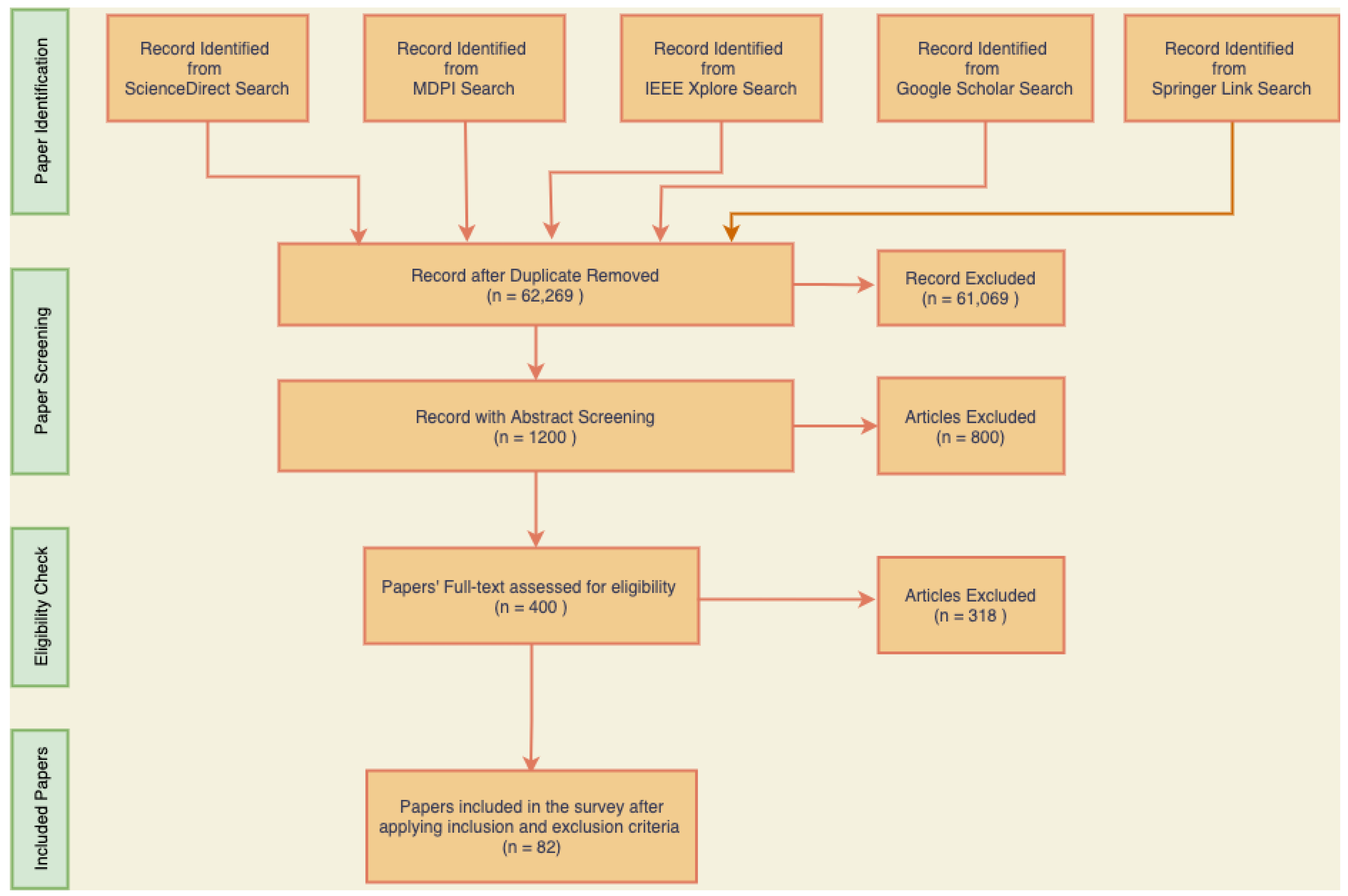

This review report aimed to explore the latest advancements in the field of skin disease and skin cancer classification using AI-based approaches. We examined the methodologies employed in various studies, including deep learning algorithms, convolutional neural networks (CNNs), and image analysis techniques. By analyzing the strengths and limitations of these approaches, we can gain insights into the current state-of-the-art and identify areas for further improvement.

Balaji et al. [29] presented a method for skin disease detection and segmentation using the dynamic graph cut algorithm, with classification through a Naive Bayes classifier. The authors first segmented the skin lesion from the background using the dynamic graph cut algorithm and then used texture and color features to classify the lesion into one of several categories with the Naive Bayes classifier. Combining the dynamic graph cut algorithm with a Naive Bayes classifier gave a robust and accurate method for identifying and classifying skin lesions. The authors provided a clear description of the methodology and the results obtained, including a comparison with existing methods. They evaluated their approach on the ISIC 2017 dataset, which is publicly available on the ISIC website, and reported an accuracy of 91.7%, a sensitivity of 70.1%, and a specificity of 72.7%. The approach also scored an accuracy of 94.3% for benign cases, 91.2% for melanoma, and 92.9% for keratosis.
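The accuracy, sensitivity, and specificity figures quoted throughout this section are all derived from confusion-matrix counts. As a minimal sketch (the function name `binary_metrics` and the example counts are illustrative assumptions, not values from any of the reviewed studies), the three metrics can be computed for a binary task as follows:

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute accuracy, sensitivity, and specificity from confusion counts."""
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return {
        # Fraction of all cases classified correctly.
        "accuracy": (tp + tn) / total,
        # Fraction of diseased cases that were detected (also called recall).
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        # Fraction of healthy cases that were correctly ruled out.
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


# Hypothetical counts for an imbalanced test set (illustrative only).
metrics = binary_metrics(tp=30, fp=10, tn=150, fn=10)
```

When positive cases are rare, accuracy can remain high while sensitivity is considerably lower, which is one reason the studies reviewed here tend to report several metrics rather than accuracy alone.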

Ali et al. [30] presented a study on the application of EfficientNets for multiclass skin cancer classification, with the aim of contributing to the prevention of skin cancer. The authors utilized the HAM10000 dataset, consisting of 10,015 skin lesion images from seven different classes, and compared the performance of EfficientNet variants B0–B7 with traditional deep learning models. The proposed approach relied mainly on transfer learning with an EfficientNet and showed promising results for the evaluation parameters selected during the experimental analysis. Of the variants B0–B7, B0 was the best-performing model, with an accuracy of 87.9%. The model was also evaluated in terms of other parameters and achieved a precision of 88%, a recall of 88%, an F1-score of 87%, and an AUC of 97.53%. Overall, the experimental results suggested that the proposed EfficientNet-based model can accurately classify skin cancer and has the potential to be a useful tool in the prevention and early detection of skin cancer. However, the study had a few limitations. Firstly, the authors did not provide a detailed comparison of their results with other state-of-the-art methods for skin cancer classification, which makes it difficult to assess the significance of their findings. Secondly, the study used only a single dataset, which may limit the generalizability of the results. Future studies could benefit from using multiple datasets and exploring the transferability of the models to different domains. Lastly, the authors did not provide any information on the computational resources required for training and evaluating the models, which could be useful for researchers and practitioners looking to replicate or adapt their approach.

Srinivasu et al. [31] presented a classification approach for skin disease detection using deep learning neural networks with MobileNet V2 and LSTM. The proposed approach involved preprocessing of skin images followed by feature extraction using MobileNet V2 and classification using LSTM. The dataset utilized for evaluation comprised more than 10,000 skin images, divided into five different skin diseases: melanocytic nevi (NV), basal cell carcinoma (BCC), actinic keratoses and intraepithelial carcinoma (AKIEC), dermatofibroma (DF), and melanoma (MEL). The contribution of the paper was the development of an accurate skin disease classification approach using deep learning techniques that were lightweight and required less computational time. The main limitation of the proposed approach was that it requires a large amount of data to train the model effectively. The proposed approach achieved an accuracy of 90.21%, which outperformed the existing approaches VGG16, AlexNet, MobileNet, ResNet50, U-Net, SegNet, DT, and RF. The sensitivity, recall, and specificity values for each skin disease category were also reported, which further validated the effectiveness of the proposed approach. The results demonstrated that the proposed approach can accurately classify skin diseases, which can aid in their early diagnosis and treatment.

Shetty et al. [32] presented a novel approach for skin lesion classification using a convolutional neural network (CNN) and machine learning techniques. The authors aimed to develop an accurate and automated system for the classification of dermoscopic images into different categories of skin lesions. The paper’s contribution lay in the development of a CNN-based system that can classify seven different categories of skin lesions with high accuracy. The authors utilized publicly available datasets and compared their system’s performance with other state-of-the-art systems such as EW-FCM + Wide-shuffleNet, shifted MobileNet V2, Shifted GoogLeNet, shifted 2-Nets, Inception V3, ResNet101, InceptionResNet V2, Xception, NASNetLarge, ResNet50, ResNet101 + KcPCA + SVMRBF, VGG16 + GoogLeNet ensemble, and ModifiedMobileNet. The experimental results showed that the proposed system achieved an overall accuracy of 95.18%, outperforming all of these systems. The limitation of this paper was that the proposed system is restricted to dermoscopic images and cannot classify clinical images, which are more challenging to classify due to their low contrast and other artifacts.

Jain et al. [33] proposed a multi-type skin disease classification algorithm using an optimal path deep-neural-network (OP-DNN)-based feature extraction approach. The proposed algorithm achieved improved accuracy compared to other state-of-the-art algorithms for the classification of various skin diseases. The contribution of this paper was the proposal of an OP-DNN-based feature extraction approach for multi-type skin disease classification. This approach improved the accuracy of classification and also reduced the number of features required for classification. The paper also provided experimental results that demonstrated the effectiveness of the proposed approach. The algorithm was evaluated on the ISIC dataset with 23,906 skin lesion images and achieved an accuracy of 95%, which outperformed other algorithms such as KNN, NB, RF, MLP, CNN, and LSTM for multi-type skin disease classification.

Wei et al. [34] proposed a novel skin disease classification model based on DenseNet and ConvNeXt fusion. The proposed model utilized the strengths of both DenseNet and ConvNeXt to achieve better performance in skin disease classification. The model was evaluated on two datasets, the publicly available HAM10000 dataset and a dataset from Peking Union Medical College Hospital, and it achieved superior performance compared to the other models. The proposed model addresses the limitations of previous models by combining the strengths of the DenseNet and ConvNeXt architectures, which had not been explored before in skin disease classification. The model achieved an accuracy of 95.29% on the HAM10000 dataset and 96.54% on the Peking Union Medical College Hospital dataset, outperforming the other state-of-the-art models, and it also achieved high sensitivity and specificity for all skin disease categories. The study further conducted ablation experiments to show the effectiveness of the proposed fusion approach, which outperformed the individual DenseNet and ConvNeXt models. The model can potentially be used in clinical settings to assist dermatologists in diagnosing skin diseases. However, the study did not provide any explanation of how the model’s decisions were made, which may limit its interpretability in a clinical setting.

Almuayqil et al. [35] presented a computer-aided diagnosis system for detecting early signs of skin diseases using a hybrid model that combines different pretrained deep learning models (VGG19, InceptionV3, ResNet50, DenseNet201, and Xception) with traditional machine learning classifiers (LR, SVM, and RF). The proposed system consists of four main steps: preprocessing the input raw image data and metadata; feature extraction using the pretrained deep learning models; feature concatenation; and classification using machine learning techniques. The proposed hybrid system was evaluated on the HAM10000 dataset of skin images and showed promising results in detecting skin diseases accurately. Among the combinations tested, DenseNet201 combined with LR performed best, with an accuracy of 99.94% in detecting skin diseases, which outperformed the other state-of-the-art approaches. The authors also provided a detailed comparison of the proposed model with other state-of-the-art methods, showing its superiority in terms of accuracy and other evaluation metrics.

Reddy et al. [36] proposed a novel approach for the detection of skin diseases using optimized region growing segmentation and autoencoder-based classification. The proposed approach employs an efficient segmentation algorithm that can identify the affected regions of the skin accurately. Subsequently, a convolutional autoencoder-based classification model was used to classify the skin diseases based on the extracted features. The proposed approach offers several contributions to the field of skin disease detection. Firstly, the segmentation algorithm is optimized for skin disease detection and can accurately identify the affected regions of the skin. Secondly, the autoencoder-based classification model can classify the skin diseases with high accuracy using the extracted features. Lastly, the approach outperformed several state-of-the-art methods in terms of accuracy and other evaluation metrics. One limitation of the approach is that it may not generalize well to new datasets with different characteristics, as the model was evaluated on the small PH2 dataset of 200 images. The experimental results indicated that the proposed approach achieved an accuracy of 94.2%. It also achieved high values for other evaluation metrics such as the precision, recall, and F1-score, which demonstrated its effectiveness for skin disease detection.

Malibari et al. [37] presented an optimal deep-neural-network-driven computer-aided diagnosis (ODNN-CADSCC) model for skin cancer detection and classification. The ODNN-CADSCC model applies a Wiener-filtering (WF)-based preprocessing step, followed by segmentation using U-Net. The SqueezeNet model was then used to produce a number of feature vectors. Finally, skin cancer detection and classification were achieved by using the improved whale optimization algorithm (IWOA) with a DNN model, where the IWOA is used to choose the DNN settings effectively. The comparison study demonstrated the promising performance of the ODNN-CADSCC model against more-recent techniques, with a high accuracy of 99.90%. Although the results are promising, it would be helpful to validate the proposed model on a larger dataset to assess its robustness and generalization capabilities. Another limitation is that the proposed model does not provide explanations for its decisions, which is essential for gaining the trust of clinicians and patients.

Qian et al. [38] proposed a deep convolutional neural network (DCNN) approach to dermatoscopic image classification that groups multi-scale attention blocks (GMABs) and uses class-specific loss weighting. To increase the size of the DCNN model, the authors introduced GMABs with attention branches at several scales. Using the GMABs to extract multi-scale fine-grained features helps the model focus on the lesion region, improving the DCNN’s performance. The attention blocks have a straightforward structure and a limited number of parameters, can be used in different DCNN structures, and can be trained end-to-end. Class-specific loss weighting was used to address the issue of category imbalance; with this strategy, the accuracy on samples that are susceptible to misclassification can be greatly increased. To evaluate the model, the HAM10000 dataset was used, and the results showed that the proposed method reached an accuracy of 91.6%, an AUC of 97.1%, a sensitivity of 73.5%, and a specificity of 96.4%. This confirmed that the method can perform well in dermatoscopic classification tasks.
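To make the idea of class-specific loss weighting concrete, the following is a minimal sketch of a weighted cross-entropy loss. The inverse-frequency weighting rule, the function names, and the toy labels are assumptions chosen for illustration; the exact weighting scheme used by Qian et al. is not described in this review.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by N / (K * count), so rare classes weigh more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class) over all samples.

    probs: list of dicts mapping class name -> predicted probability.
    """
    num = sum(weights[y] * -math.log(p[y]) for p, y in zip(probs, labels))
    den = sum(weights[y] for y in labels)
    return num / den


# Toy imbalanced label set (illustrative only): 8 nevi, 2 melanomas.
labels = ["nv"] * 8 + ["mel"] * 2
weights = inverse_frequency_weights(labels)
```

With these toy labels, each melanoma sample contributes four times as much to the loss as each nevus sample, so misclassifying the minority class is penalized more heavily during training.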

Kumar et al. [15] proposed an augmented-intelligence-enabled deep neural networking (AuDNN) system for classifying and predicting skin cancer utilizing multi-dimensional information on industrial IoT standards. The proposed framework incorporates deep learning algorithms and IoT standards to create a robust and efficient skin cancer classification system. The approach was evaluated on a Kaggle skin cancer dataset and CIA datasets on melanoma categorization of skin lesion images, and the results showed that it outperformed other state-of-the-art methods, achieving an accuracy of 93.26%. The paper also provided a detailed analysis of the performance of the proposed method, which can guide the development of future approaches for skin lesion classification. One limitation of this study was that the dataset used for training and evaluation was not described in detail. It would be helpful to know more about the dataset and its properties to assess the robustness and generalization capabilities of the proposed method. Another limitation is that the implementation of IoT standards may require significant resources and expertise, which may not be available in all settings.

Notwithstanding these developments, current deep-network-based approaches, which naively adopt published network topologies from general image classification for the classification of skin lesions, still have much potential for optimization. Using self-attention to describe the global correlation of the features gathered from conventional deep models, Nakai et al. [39] proposed an enhanced deep bottleneck transformer model to improve the performance of skin lesion classification. For balanced learning, they used an improved transformer module with a dual-position encoding module that adds an encoded position vector to both the key and the query vectors. By replacing the bottleneck spatial convolutions of the late-stage blocks in the baseline deep networks with the upgraded module, they created a new deep skin lesion classification model. To validate the effectiveness of different deep models in identifying skin lesions, they conducted comprehensive tests on two benchmark skin lesion datasets, ISIC2017 and HAM10000. On the ISIC2017 dataset, their method achieved an accuracy, sensitivity, and specificity of 92.1%, 90.1%, and 91.9%, respectively. On the HAM10000 dataset, the accuracy and precision were 95.84% and 96.1%. This demonstrated a good balance between sensitivity and specificity.

Hossain et al. [40] first developed an erythema migrans (EM) dataset with the assistance of knowledgeable dermatologists from the Clermont-Ferrand University Medical Center in France. Second, the authors trained 23 convolutional neural networks (CNNs) on a collection of skin lesion images. These CNNs were modified versions of the VGG, ResNet, DenseNet, MobileNet, Xception, NASNet, and EfficientNet architectures. Third, the authors used transfer learning from pretrained ImageNet models to improve the CNNs’ performance after pretraining them with the HAM10000 skin lesion dataset. Fourth, to examine the explainability of the model, the authors used gradient-weighted class activation mapping to pinpoint the input regions crucial to the CNNs for making predictions. Finally, the authors offered model selection suggestions based on computational complexity and predictive capability. The customized ResNet50 architecture provided the best classification results, with an accuracy of 84.42% ± 1.36, an AUC of 0.9189 ± 0.0115, a precision of 83.1% ± 2.49, a sensitivity of 87.93% ± 1.47, and a specificity of 80.65% ± 3.59. A lightweight model, a modified EfficientNetB0, also performed well, with an accuracy of 83.13% ± 1.2, an AUC of 0.9094 ± 0.0129, a precision of 82.83% ± 1.75, a sensitivity of 85.21% ± 3.91, and a specificity of 80.89% ± 2.95. The authors contributed a Lyme disease dataset with twenty-three modified CNN architectures for image-based diagnosis, effective customized transfer learning using the combination of ImageNet and the HAM10000 dataset, a lightweight CNN, and a criteria-based guideline for model architecture selection.

Afza et al. [41] proposed a hierarchical architecture based on two-dimensional superpixels and deep learning to increase the accuracy of skin lesion classification. The authors combined the locally and globally enhanced images to improve the contrast of the original dermoscopy images. The proposed method consisted of three steps: superpixel segmentation, feature extraction, and classification using a deep learning model. Using an updated grasshopper optimization approach, the collected features were further optimized before being categorized using the Naive Bayes classifier. The method contributes to the field of skin lesion classification by introducing a hierarchical three-step superpixel and deep learning framework. It improved the accuracy of skin lesion classification and reduced the computational complexity of the task by dividing the image into superpixels and classifying them individually. The proposed method is also generalizable and can be used on other datasets for skin lesion classification. To evaluate the proposed hierarchical technique, three datasets (PH2, ISBI2016, and HAM10000), consisting of three, two, and seven skin cancer classes, respectively, were used. On these datasets, the proposed method achieved accuracies of 95.40%, 91.1%, and 85.80%, respectively. The findings indicated that this strategy can help in classifying skin cancer more accurately.

Alam [42] proposed S²C-DeLeNet, a method for detecting skin cancer lesions from dermoscopic images. The proposed method integrates segmentation and classification using a parameter-transfer-based approach. The segmentation network, DeLeNet, was trained on a large-scale dataset for dermoscopic lesion segmentation, and the classification network, S²CNet, was trained on a public dataset for skin lesion classification. The authors transferred the parameters of the segmentation network to the classification network and fine-tuned the network on the classification task. The architecture of the segmentation sub-network used an EfficientNet B4 backbone in place of the encoder. The classification sub-network contained a “Classification Feature Extraction” component that pulled learned segmentation feature maps towards lesion prediction. Two further blocks were created as part of the classification architecture: the “Feature Coalescing Module” block mixed and trailed each dimensional feature from the encoder and decoder, while the “3D-Layer Residuals” block developed a parallel pathway of low-dimensional features with large variance. After fine-tuning on a publicly accessible dataset, the segmentation achieved a mean Dice score of 0.9494, exceeding existing segmentation algorithms, while the classification achieved a mean accuracy of 0.9103, outperforming well-known and traditional classifiers. Additionally, the already-tuned network produced good results when cross-inferring on various datasets for skin cancer segmentation. Thorough testing was performed to demonstrate the network’s effectiveness not only for dermoscopic images, but also for other types of medical imaging, demonstrating its potential to be a systematic diagnostic solution for dermatology and possibly other medical specialties. For comparison, eight cutting-edge networks, AlexNet, GoogLeNet, VGG, ResNet, Inception-Net, EfficientNet, DenseNet, and MobileNet, as well as their different iterations, were taken into account, which confirmed that the proposed approach outperformed the state-of-the-art approaches.
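The Dice score reported for segmentation measures the overlap between a predicted mask and the ground-truth mask. A minimal sketch for binary masks is given below; the function name `dice_score`, the nested-list mask representation, and the handling of two empty masks are assumptions made for illustration.

```python
def dice_score(pred, target):
    """Dice coefficient 2*|A and B| / (|A| + |B|) for two binary masks.

    Masks are nested lists of 0/1 values with the same number of pixels.
    """
    flat_pred = [p for row in pred for p in row]
    flat_target = [t for row in target for t in row]
    if len(flat_pred) != len(flat_target):
        raise ValueError("masks must contain the same number of pixels")
    # A pixel counts toward the intersection only if both masks mark it.
    intersection = sum(p and t for p, t in zip(flat_pred, flat_target))
    size_sum = sum(flat_pred) + sum(flat_target)
    # Convention assumed here: two empty masks count as a perfect match.
    return 1.0 if size_sum == 0 else 2.0 * intersection / size_sum


# Toy 2x3 masks (illustrative only).
pred = [[1, 1, 0], [0, 1, 0]]
target = [[1, 0, 0], [0, 1, 1]]
score = dice_score(pred, target)
```

A score of 1 indicates perfect overlap and 0 indicates none, so a mean Dice score of 0.9494 corresponds to predicted lesion regions that overlap the annotated regions almost completely.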

Using a deep learning methodology, Elashiri et al. [43] aimed to implement an efficient method for classifying skin diseases. The dataset was first collected and preprocessed with contrast enhancement by histogram equalization. After preprocessing, the images were segmented using Fuzzy C Means (FCM) segmentation. The segmented images were then used as the input for deep feature extraction with ResNet50, VGG16, and Deeplabv3. The features were obtained from the third and bottom layer of these three approaches and combined. These concatenated features were passed to the feature trans-creation phase, in which hybrid squirrel butterfly search optimization (HSBSO) performs weighted feature extraction. The modified long short-term memory (MLSTM) receives the transformed features, and the same HSBSO optimizes its architecture to produce the final classification output. The findings of the analysis supported the notion that the proposed method classifies skin diseases more accurately than traditional methods.
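Histogram equalization, the contrast-enhancement step mentioned above, remaps pixel intensities so that they spread over the full available range. The sketch below shows the standard global form for an 8-bit grayscale image stored as nested lists; the function name and the toy image are illustrative assumptions, and the exact variant used by Elashiri et al. is not specified in this review.

```python
def equalize_histogram(image, levels=256):
    """Histogram-equalize a grayscale image given as rows of ints in [0, levels)."""
    pixels = [v for row in image for v in row]
    if not pixels:
        return [row[:] for row in image]
    # Count how often each intensity occurs.
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    # Cumulative distribution of intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:
        # Constant image: there is no contrast to stretch.
        return [row[:] for row in image]
    # Lookup table mapping each old intensity to its equalized value.
    lut = [max(0, round((c - cdf_min) / (total - cdf_min) * (levels - 1)))
           for c in cdf]
    return [[lut[v] for v in row] for row in image]


# Toy low-contrast image (illustrative only): intensities span just 100..103.
low_contrast = [[100, 100, 101], [101, 102, 103]]
equalized = equalize_histogram(low_contrast)
```

In this toy case the four closely spaced intensities are stretched to cover the range from 0 to 255 while keeping their relative order, which is the effect that makes lesion boundaries easier to separate in later segmentation.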

Adla et al. [44] proposed a full-resolution convolutional network with hyperparameter optimization for dermoscopy image segmentation-enhanced skin cancer classification. The hyperparameters of the network were optimized through a novel dynamic graph cut algorithm (DGCA) technique. By fusing the wolves’ individualized hunting techniques with their collective hunting methods, the optimization emphasized a healthy balance between exploration and exploitation and produced a neighborhood-based searching approach. The authors’ motivation was to create a hyperparameter-optimized, full-resolution convolutional-network-based model capable of accurately identifying different forms of skin cancer from dermoscopy images. Their first contribution was FrCN-DGCA, which uses the DGCA approach to segment skin lesion images and generate image regions of interest (ROIs) in a manner similar to how doctors define ROIs. Their second contribution was the action bundle, which is used as a hyperparameter by the skin image-segmentation executor, based on the dynamic graph cut, to improve the accuracy of the segmentation process. Finally, the authors carried out a quantitative statistical analysis of the skin lesion segmentation results to show the dependability of the segmentation methodology and to compare the results with those of current state-of-the-art methods. The proposed model performed better than the other designs in skin lesion identification tasks, with an accuracy of 97.986%.

Hsu and Tseng [45] presented hierarchy-aware contrastive learning with late fusion (HAC-LF), a novel technique that enhances the performance of multi-class skin classification. The authors developed a new loss function, hierarchy-aware contrastive loss (HAC Loss), to lessen the effects of the major-type misclassification issue. The major-type and multi-class classification performance were balanced using the late fusion method. The authors assessed the approach in a series of tests on the ISIC 2019 Challenges dataset, which comprises three skin lesion datasets. The experimental results demonstrated that, in all assessment metrics employed in their study, the proposed method outperformed the representative deep learning algorithms for skin lesion classification. For accuracy, sensitivity, and specificity in the major-type classification, HAC-LF scored 87.1%, 84.2%, and 88.9%, respectively. Regarding the sensitivity of the minority classes, HAC-LF performed better than the baseline model with an imbalanced class distribution.

Yanagisawa et al. [46] developed a convolutional neural network (CNN) model for skin image segmentation in order to produce a collection of skin disease images suitable for the CAD of various skin disease categories. The DeepLabv3+-based CNN segmentation model was trained to identify skin and lesion areas, and the areas that met the criteria of being more than 80% skin and more than 10% lesion of the image were segmented out. Using the resulting CNN-segmented image database, atopic dermatitis was distinguished from malignant diseases and their consequences, such as mycosis fungoides, impetigo, and herpesvirus infection, with roughly 90% sensitivity and specificity. The accuracy of identifying skin diseases in the CNN-segmented image dataset was higher than that of the original image dataset and nearly on par with the manually cropped image dataset.
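The area criteria described above can be read as a simple filter on a per-pixel label map. The sketch below is one possible interpretation: the label encoding, the function name, and the choice to count lesion pixels as part of the skin area are all assumptions, since the review does not state how Yanagisawa et al. computed the two fractions.

```python
# Hypothetical label encoding for a segmentation output.
BACKGROUND, SKIN, LESION = 0, 1, 2


def passes_area_criteria(label_map, min_skin=0.80, min_lesion=0.10):
    """Return True if a crop is more than 80% skin and more than 10% lesion."""
    pixels = [v for row in label_map for v in row]
    if not pixels:
        return False
    lesion_frac = pixels.count(LESION) / len(pixels)
    # Assumption: lesions lie on skin, so lesion pixels also count as skin.
    skin_frac = (pixels.count(SKIN) + pixels.count(LESION)) / len(pixels)
    return skin_frac > min_skin and lesion_frac > min_lesion


# Toy crops (illustrative only), each with ten pixels.
good_crop = [[SKIN] * 5, [SKIN, SKIN, LESION, LESION, BACKGROUND]]
poor_crop = [[SKIN] * 5, [LESION, LESION, BACKGROUND, BACKGROUND, BACKGROUND]]
```

Under these assumptions, `good_crop` (90% skin, 20% lesion) would be kept, while `poor_crop` (70% skin) would be rejected because too much of the crop is background.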

Zhou et al. [47] presented a multi-site cross-organ calibrated deep learning (MuSClD) approach for the automated diagnosis of non-melanoma skin cancer. To increase the generalizability of the model, the approach makes use of deep learning models that have been trained on a variety of datasets from various sites and organs. This paper’s key contribution was the creation of a reliable deep-learning-based method for the automated diagnosis of skin cancers other than melanoma. The approach was intended to overcome the drawbacks of existing methods, which generalize poorly because of small sample sizes and a lack of diversity. The MuSClD method was assessed on a sizable collection of images of skin cancers other than melanoma. As measured in terms of the AUC, it performed better than other cutting-edge approaches. Additionally, the study demonstrated that the MuSClD method is robust to changes in imaging modalities and patient demographics, making it appropriate for practical use. The main drawback of this paper was the lack of explanation for how the deep learning model makes decisions. Although the model had a high degree of accuracy in detecting non-melanoma skin cancer, it is unclear how the model reached its conclusions. This lack of interpretability might prevent the approach from being used in clinical settings.

Omeroglu et al. [48] proposed a novel soft-attention-based multi-modal deep learning framework for multi-label skin lesion classification. The proposed framework utilizes both visual and textual features of skin lesions to improve the classification accuracy. The framework consisted of two parallel branches, one for processing visual features and the other for processing textual features. A soft attention mechanism was incorporated into the framework to emphasize important visual and textual features. The 7-point criteria evaluation dataset, a well-known multi-modality multi-label dataset for skin diseases, was used to evaluate the proposed framework. For multi-label skin lesion classification, it attained an average accuracy of 83.04%. It increased the average accuracy on the test set by more than 2.14% and was more accurate than the most-recent approaches.

Serte and Demirel [49] applied a wavelet transform to extract features and deep learning to classify them, with the intention of enhancing the performance of skin lesion classification. First, the wavelet transform was used as a preprocessing step to extract features from the skin lesion images. Then, skin lesions were divided into various groups using a deep learning model that was trained on the extracted features. The authors tested their method against other cutting-edge approaches using the publicly accessible ISIC 2017 dataset of skin lesions. The use of a deep learning model for classification and of a wavelet transform to extract features were the key contributions of this paper. In this study, the best combinations of models for melanoma and seborrheic keratosis detection were the ResNet-18-based I-A1-H-V and ResNet-50-based I-A1-A2-A3 models.

Bansal et al. [50] proposed a grayscale-based lesion segmentation, while texture characteristics were extracted in the RGB color space using global (grey-level co-occurrence matrix (GLCM) for entropy, contrast, correlation, angular second moment, inverse difference moment, and sum of squares) and local (LBP and oriented FAST and rotated BRIEF (ORB)) techniques. A total of 52 color attributes for each image were extracted as the color features using histograms of the five color spaces (grayscale, RGB, YCrCb, L*a*b*, and HSV), as well as information on the mean, standard deviation, skewness, and kurtosis. For feature selection, the authors introduced BHHO-S and BHHO-V, two binary variants of the Harris hawk optimization (HHO) method that use S-shaped and V-shaped transfer functions, respectively, with a time-dependent behavior. The selected attributes were passed to the classifier that determines whether the dermoscopic image contains melanoma or not. The authors compared the performance of the proposed approaches to that of existing metaheuristic algorithms. The experimental findings demonstrated that classifiers that used features chosen using BHHO-S were superior to those that used BHHO-V and those that employed current, cutting-edge metaheuristic methods. The experimental results also showed that, in comparison to global- and other local-texture-feature-extraction strategies, texture features derived using local binary patterns, together with color features, offered higher classification accuracy.
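S-shaped and V-shaped transfer functions convert the continuous positions produced by a metaheuristic into binary select/drop decisions for each feature. The sketch below uses the commonly cited static forms (a sigmoid and `|tanh|`) as an assumption; the time-dependent variants used in BHHO-S and BHHO-V are not reproduced here, and all function names are hypothetical.

```python
import math
import random


def s_shaped(x):
    """Sigmoid transfer function: maps a real value to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def v_shaped(x):
    """|tanh(x)| transfer function: probability grows with the magnitude of x."""
    return abs(math.tanh(x))


def binarize_s(position, rng):
    """S-shaped rule: select feature i with probability S(x_i)."""
    return [1 if rng.random() < s_shaped(x) else 0 for x in position]


def binarize_v(position, current_bits, rng):
    """V-shaped rule: flip bit i with probability V(x_i), otherwise keep it."""
    return [1 - b if rng.random() < v_shaped(x) else b
            for x, b in zip(position, current_bits)]


# Toy continuous position for three candidate features (illustrative only).
rng = random.Random(0)
position = [-2.0, 0.0, 3.5]
mask_s = binarize_s(position, rng)
mask_v = binarize_v(position, [1, 0, 1], rng)
```

The two families behave differently: an S-shaped function sets each bit directly from the sign and size of the position value, whereas a V-shaped function only decides whether to flip the current bit, so a position value near zero leaves the existing feature subset unchanged.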

Gutiérrez et al. [51] introduced two new fractal signatures, statistical fractal signatures and statistical-prism-based fractal signatures, to address the issue of amorphous pigmentary lesions and blurred edges. Various computer-aided diagnosis techniques were compared for multiclass skin lesion classification using the two new fractal signatures and several classifiers. The combination of one of these signatures with the LDA classifier yielded the best outcomes for reliable, impartial, and reproducible techniques.

Thanka et al. [52] suggested a new ensemble strategy for the classification of melanoma, using a hybrid model that integrates deep transfer learning, convolutional neural networks (CNNs), and gradient boosting machines (GBMs). The proposed method was evaluated on 25,331 images of skin lesions from the ISIC 2019 Challenge, a publicly accessible dataset. According to the experimental findings, the hybrid strategy that merged VGG16 and XGBoost achieved an overall accuracy of 99.1%, a sensitivity of 99.4%, and a specificity of 98.8%. The hybrid approach that combined VGG16 and LightGBM reached an accuracy, sensitivity, and specificity of 97.2%, 97.8%, and 96.6%, respectively, all higher than the figures reported for other models. The authors explained the approach in depth, covering the preprocessing of the dataset, the kind of CNN model, and the design of the GBM model.

In a study by Brinker et al. [

53], the diagnostic precision of an artificial intelligence (AI) system for melanoma detection in skin biopsy samples was examined. The performance of the AI algorithm was compared to that of 18 leading pathologists from across the world in the study. The mean sensitivity, specificity, and accuracy of the Ensemble CNNs trained on slides with or without annotation of the tumor region as a region of interest were on par with those of the experts (unannotated: 88%, 88%, and 88%, respectively; area under the curve (AUC) of 0.95; annotated: 94%, 90%, and 92%, respectively; AUC of 0.97). The research demonstrated that the AI algorithm had a very low rate of false positives and false negatives and was very reliable in detecting melanoma. The study also discovered that the AI algorithm’s performance was on par with that of skilled pathologists. The pathologists had a 90.33% diagnosis accuracy, an 88.88% sensitivity, and a 91.77% specificity. There was no statistically significant difference between the AI algorithm and the pathologists. Overall, this research showed that AI algorithms could be a useful tool for melanoma diagnosis, with performance on par with that of skilled pathologists.
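
For reference, the sensitivity, specificity, and accuracy figures quoted throughout this section follow from the entries of a binary confusion matrix. The sketch below uses a hypothetical helper name, and the counts in the usage note are made up for illustration rather than taken from any cited study.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int):
    """Return (sensitivity, specificity, accuracy) from confusion-matrix counts.

    tp/fn: malignant cases predicted malignant / benign
    tn/fp: benign cases predicted benign / malignant
    """
    sensitivity = tp / (tp + fn)  # share of malignant cases that are detected
    specificity = tn / (tn + fp)  # share of benign cases that are cleared
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return sensitivity, specificity, accuracy
```

With illustrative counts of 44 true positives, 6 false negatives, 45 true negatives, and 5 false positives, the function returns a sensitivity of 0.88, a specificity of 0.90, and an accuracy of 0.89.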

To classify skin lesions, Alenezi et al. [54] presented a hybrid technique called the wavelet transform-deep residual neural network (WT-DRNNet). The wavelet transformation, pooling, and normalization section of the model provided finer details by removing undesired detail from the skin lesion images, which led to a better-performing model. A residual neural network built on transfer learning was then used to extract deep features. Finally, global average pooling was applied to these deep features, and training was carried out with an extreme learning machine based on ReLU and other kinds of activation functions. The experiments used the ISIC2017 and HAM10000 datasets to evaluate the effectiveness of the suggested model. The accuracy, specificity, precision, and F1-score of the suggested algorithm were 96.91%, 97.68%, 96.43%, and 95.79% on the ISIC2017 dataset and 95.73%, 98.8%, 95.84%, and 93.44% on the HAM10000 dataset. These results were better than the state-of-the-art for categorizing skin lesions. As a result, the suggested algorithm can help specialized doctors automatically classify cancer based on images of skin lesions.

Alhudhaif et al. [55] recommended a deep learning approach based on attention mechanisms and enhanced by data-balancing methods. The dataset used in the study was HAM10000, which includes 10,015 annotated skin images of seven different types of skin lesions. The dataset was unbalanced and was balanced using techniques that included SMOTE, ADASYN, RandomOverSampler, and data augmentation. A soft attention module was selected as the attention mechanism in order to focus on the features of the input data and generate a feature map. The proposed model consisted of a soft attention module and convolutional layers. By integrating the convolutional layers with the attention mechanism, the authors were able to extract the image features from the convolutional neural networks, while the soft attention module focused on the key areas of the image. The soft attention module and the applied data-balancing techniques significantly improved the performance of the proposed model. Numerous studies have applied convolutional neural networks and attention mechanisms to open-source datasets for skin lesion classification, and one of the contributions of the proposed approach was the attention mechanism used in the neural network. The model was trained and tested separately on the unbalanced and balanced versions of the HAM10000 dataset. On the unbalanced dataset, a training accuracy of 85.73%, a validation accuracy of 70.90%, and a test accuracy of 69.75% were attained. On the dataset balanced with SMOTE, the accuracy was 99.86% during training, 96.41% during validation, and 95.94% during testing. SMOTE produced better results than the other balancing methods. The high accuracy of the proposed model can therefore be attributed to the applied data-balancing techniques.
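
The idea behind SMOTE-style balancing can be sketched in a few lines: each synthetic minority sample is an interpolation between an existing minority sample and one of its nearest minority-class neighbours. This is a simplified illustration on plain feature vectors with hypothetical names and parameters, not the implementation used in the cited study.

```python
import math
import random


def smote(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points from a list of minority-class vectors.

    Each new point lies on the segment between a randomly chosen minority
    sample and one of its k nearest minority neighbours.
    Requires at least two minority samples.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the remaining minority samples by Euclidean distance to base.
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic
```

Because the synthetic points are derived from existing samples, oversampling is normally applied to the training split only; applying it before the train-test split can leak information into the test set and inflate the reported accuracy.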

Huang et al. [56] proposed a computer-assisted approach for the analysis of skin cancer. In their study, they combined deep learning and metaheuristic methods. The fundamental concept was to create a deep belief network (DBN) based on an enhanced metaheuristic method called the modified electromagnetic field optimization algorithm (MEFOA) to build a reliable skin cancer diagnosis system. The proposed approach was tested on the HAM10000 benchmark dataset, and its effectiveness was verified by contrasting the findings with recent research regarding accuracy, sensitivity, specificity, precision, and F1 score.

Kalpana et al. [57] suggested a technique called ESVMKRF-HEAO, which stands for ensemble support vector kernel random-forest-based hybrid equilibrium Aquila optimization. The HAM10000 dataset, which contains different types of skin lesion images, was used to test the suggested prediction model. First, preprocessing was applied to the dataset for noise removal and image quality improvement. Then, the malignant lesion patches were separated from the healthy background using a thresholding-based segmentation technique. Finally, the segmented images were given to the proposed classifier, which categorized them into five classes (melanocytic nevus, basal cell carcinoma, melanoma, actinic keratosis, and dermatofibroma) based on their feature characteristics. The proposed model was simulated in MATLAB 2019a, and its performance was assessed in terms of sensitivity, F1-score, accuracy, precision, and specificity. The suggested ESVMKRF-HEAO strategy performed better on all metrics and achieved a prediction accuracy of 97.4%.

Shi et al. [

58] proposed a two-stage end-to-end deep learning framework for pathologic evaluation in skin tumor identification, with a particular focus on neurofibromas (NFs), Bowen disease (BD), and seborrheic keratosis (SK). The most-prevalent illnesses involving skin lesions are NF, BD, and SK, and they can seriously harm a person’s body. In their study, the authors suggested two unique methods, the attention graph gated network (AGCN) and chain memory convolutional neural network (CMCNN), for diagnosing skin tumors. Patchwise diagnostics and slidewise diagnostics were the two steps of the framework, where they reported the result of the whole-slide image (WSI) as the input in the proposed diagnosis. Convolutional neural networks (CNNs) were used in the initial screening stage to discover probable tumor locations, and multi-label classification networks were used in the fine-grained classification stage to categorize the detected regions into certain tumor kinds. On a dataset of skin tumor images collected from Huashan Hospital, the suggested framework was tested, and the results showed promising accuracy and receiver operating characteristic curves.

Rafay and Hussain [59] proposed a technique that merged two existing datasets into a new dataset covering 31 skin diseases. The authors applied transfer learning with three CNN models (EfficientNet, ResNet, and VGG), each with a different architecture. EfficientNet was tuned further because it had the best testing results; it initially achieved a testing accuracy of 71% with a training split of 70%. This was considered low, so the training share of the 3424 samples was increased, which raised the model’s accuracy to 72%. The experiment was then repeated with an 80%:20% train–test split, and the accuracy rose to 74%. Finally, the new dataset was augmented for a further experiment, which increased the model’s accuracy to 87.15%.

Maqsood and Damaševičius [

60] proposed a methodology for localizing and classifying multiclass skin lesions. The suggested method begins by preprocessing the source dermoscopic images with a contrast-enhancement-based modified bio-inspired multiple exposure fusion method. The skin lesion locations were segmented in the second stage using a specially created 26-layer convolutional neural network (CNN) architecture. The segmented lesion images were used to modify and train four pretrained CNN models (Xception, ResNet-50, ResNet-101, and VGG16) in the third stage. In the fourth stage, all of the CNN models’ deep feature vectors were recovered and combined using the convolutional sparse image decomposition method. The Poisson distribution feature selection approach and univariate measurement were also employed in the fifth stage to choose the optimal features for classification. A multi-class support vector machine (MC-SVM) was then fed the chosen features to perform the final classification. The proposed method performed better in terms of accuracy, sensitivity, specificity, and F1-score. The addition of multiclass classification increased the research’s usefulness in real-world situations. However, the proposed approach lacked interpretability, making it challenging to understand the reasoning behind the classification decisions.

To identify skin diseases, Kalaiyarivu and Nalini [61] developed a CNN-based method that extracted color features and texture (local binary pattern and gray level co-occurrence matrix) features from hand skin images. In their study, the authors reported the accuracy of the proposed CNN model as 87.5%.
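
The local binary pattern (LBP) texture descriptor mentioned in several of the reviewed studies can be illustrated with a basic 3×3 variant. The sketch below assumes a grayscale image stored as a list of rows of integers; the function names are hypothetical, and the cited studies may use other LBP variants (for example, circular or uniform patterns).

```python
def lbp_code(patch):
    """Return the 8-bit LBP code of the centre pixel of a 3x3 patch."""
    center = patch[1][1]
    # Neighbour coordinates, clockwise starting from the top-left pixel.
    coords = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for bit, (r, c) in enumerate(coords):
        if patch[r][c] >= center:  # neighbour at least as bright as the centre
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Return the 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            hist[lbp_code(patch)] += 1
    return hist
```

The histogram, rather than the per-pixel codes, is typically what is passed to a classifier as the texture feature vector.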

Kousis et al. [62] evaluated 11 distinct CNN models for skin cancer identification on the HAM10000 dataset. Among the 11 CNN architecture configurations considered in the study, DenseNet169 produced the best results, with an accuracy of 92.25%, a sensitivity of 93.59%, and an F1-score of 93.27%, which outperformed the existing state-of-the-art.

A hybrid classification strategy employing a CNN and a layered BLSTM was proposed by Ahmad et al. [63]. In this study, the classification task was carried out by ensembling the BLSTM with a deep CNN network after feature extraction. The accuracy reported by the authors for their experiments on two different datasets (one customized with a size of 6454 images and the other being HAM10000) was 91.73% and 89.47%, respectively.

A deep-learning-based application that classifies many types of skin diseases was proposed by Aijaz et al. [

64]. This method made use of the CNN and LSTM deep learning models. In this study, the experimental analysis was performed on 301 images of psoriasis from the Dermnet dataset and 172 images of normal skin from the BFL NTU dataset. Before extracting the color, texture, and form features, the input sample images underwent image preprocessing comprising data augmentation, enhancement, and segmentation. A convolutional neural network (CNN) and long short-term memory (LSTM) were the two deep learning methods that were used with classification models that were trained on 80% of the images. According to reports, the CNN and LSTM had accuracy rates of 84.2% and 72.3%, respectively. The accuracy results from this study showed that this deep learning technology has the potential to be used in other dermatology fields for better prediction.

Using data from the ISIC 2019 and PH2 databases, Benyahia et al. [65] examined the classification of skin lesions. The authors assessed the efficiency of 17 widely used pretrained convolutional neural network (CNN) architectures as feature extractors and of 24 machine learning methods as classifiers. On the ISIC 2019 dataset, DenseNet201 combined with Fine KNN or Cubic SVM reached accuracy rates of 92.34% and 91.71%, respectively. The hybrid approach (DenseNet201 + Cubic SVM and DenseNet201 + Quadratic SVM) was also evaluated on the PH2 dataset, where it outperformed competing methods with a 99% accuracy rate.

5.2. Machine Learning and Deep Learning in Skin Disease Detection

Inthiyaz et al. [

66] presented a study on the use of deep learning techniques for the detection of skin diseases. The authors proposed a skin-disease-detection model based on convolutional neural networks (CNNs) that can classify skin diseases into ten different categories. The model was trained and evaluated using a dataset from Xiangya-Derm of skin disease images. The results showed that the proposed model achieved high accuracy and outperformed existing state-of-the-art models in skin disease detection. The main contribution of this paper was the development of a novel deep-learning-based skin-disease-detection model that can accurately classify skin diseases into different categories. One potential limitation of this study is that the proposed model was only tested on a specific dataset of skin disease images. Therefore, its generalizability to other datasets or real-world scenarios may need to be further evaluated. The paper reported that the proposed skin-disease-detection model achieved an overall accuracy of 87% on the test set, outperforming other existing models for skin disease detection. The authors also performed a comparative analysis of the proposed model with other state-of-the-art models, including ResNet-50, Inception-v3, and VGG-16. The results showed that the proposed model outperformed these models in terms of accuracy and other evaluation metrics. Overall, the experimental results of this paper suggested that the proposed skin-disease-detection model based on deep learning techniques can accurately classify skin diseases and has the potential to be a useful tool for dermatologists and healthcare professionals in diagnosing skin diseases.

Dwivedi et al. [

67] proposed a deep-learning-based approach for automated skin disease detection using the Fast R-CNN algorithm. The proposed approach aimed to address the limitations of traditional approaches that are heavily dependent on domain knowledge and feature extraction. The experimental findings demonstrated that the suggested method achieved an overall accuracy of 90%, which outperformed traditional machine-learning-based approaches. The approach was evaluated on the HAM10000 dataset, which is a widely used benchmark dataset for skin disease detection. The contribution of the paper was the proposed approach for automated skin disease detection using the Fast R-CNN algorithm, which can handle large datasets and achieve high accuracy without the need for domain knowledge or feature extraction. One of the limitations of the proposed approach is that it requires a large amount of labeled data for training, which can be a challenge for some applications. Additionally, the approach is limited to detecting skin diseases included in the HAM10000 dataset, and further evaluation is required for detecting other skin diseases. Overall, the paper presented a promising approach for automated skin disease detection using deep learning, with the potential to improve clinical diagnosis and reduce human error.

Alam and Jihan [

68] presented an efficient approach for detecting skin diseases using deep learning techniques. The proposed approach involves preprocessing of skin images followed by feature extraction using convolutional neural networks (CNNs) and classification using support vector machine (SVM). The dataset used for the evaluation consisted of 10,000 skin images, which were categorized into seven different skin diseases. The approach achieved an accuracy of 95.6%, which is a significant improvement compared to existing approaches. The contribution of the paper is the development of an efficient and accurate skin disease detection approach using deep learning techniques. The main limitation of the proposed approach is that it requires a large amount of data to train the model effectively. In addition, the proposed approach may not be suitable for detecting rare skin diseases that are not present in the training dataset. The proposed approach achieved an accuracy of 95.6%, which outperformed the existing approaches. The precision and recall values for each skin disease category were also reported, which further validated the effectiveness of the proposed approach. The results demonstrated that the proposed approach can accurately detect skin diseases, which can aid in the early diagnosis and treatment of such diseases.

Wan et al. [

69] proposed a detection algorithm for pigmented skin diseases, based on classifier-level and feature-level fusion. The proposed algorithm combines the strengths of multiple classifiers and features to improve the detection accuracy of pigmented skin diseases. The experiments showed that the proposed algorithm outperformed the other state-of-the-art algorithms in terms of accuracy and other parameters. The novelty of the algorithm proposed in this paper for the diagnosis of pigmented skin diseases was its main contribution. The efficiency of the suggested fusion network was visualized using gradient-weighted class activation mapping (Grad_CAM) and Grad_CAM++. The results demonstrated that the accuracy and area under the curve (AUC) of the approach in this study reached 92.1% and 95.3%, respectively, when compared to those of the conventional detection algorithm for pigmented skin conditions. The contribution of this study as claimed by the authors included techniques used to perform the data augmentation, the method used for image augmentation noise, the two-feature-level fusion optimization scheme, and the visualization algorithms (Grad_CAM and Grad_CAM++) to verify the validity of the fusion network.

Kumar and Vanmathi [70] presented an optimization-based algorithm to identify skin cancer from a collection of images. In the first stage, the input image was taken from a database and preprocessed with a Gaussian filter and region of interest (ROI) extraction to remove noise and isolate the relevant regions. Segmentation was then carried out using the proposed U-RP-Net, which was created by combining U-Net and RP-Net; the outputs of the RP-Net and U-Net models were merged using a Jaccard-similarity-based fusion model. Data augmentation was performed to enhance detection performance. Finally, SqueezeNet was used to detect skin cancer, and it was trained with the Aquila whale optimization (AWO) method, a new method that combines the Aquila optimizer (AO) and the whale optimization algorithm (WOA). The AWO-based SqueezeNet achieved the highest testing accuracy of 92.5%, sensitivity of 92.1%, and specificity of 91.7%.
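
The Jaccard similarity underlying such a fusion step measures the overlap between two segmentation masks as the size of their intersection divided by the size of their union. The sketch below only computes this index for two binary masks given as lists of rows of 0/1 values; the function name is hypothetical, and the exact fusion rule of the cited work is not reproduced here.

```python
def jaccard(mask_a, mask_b):
    """Return the Jaccard index |A ∩ B| / |A ∪ B| of two equally sized binary masks."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += a & b  # pixel marked as lesion in both masks
            union += a | b         # pixel marked as lesion in at least one mask
    # Two empty masks agree perfectly, so define their similarity as 1.0.
    return intersection / union if union else 1.0
```

A value of 1.0 means that the two networks agree on every lesion pixel, while values near 0 indicate that their predicted lesion regions barely overlap.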

Suicmez et al. [71] proposed a hybrid learning approach for the detection of melanoma that removes hair from dermoscopic images. The approach combines image-processing techniques and the wavelet transform with machine learning algorithms, including a support vector machine (SVM) and an artificial neural network (ANN). To shorten the algorithm’s detection time, the system first uses image-processing techniques (masking for saturation and the wavelet transform) to eliminate impediments such as hair, air bubbles, and noise from dermoscopic images. Making the lesion more noticeable for detection is another crucial step in this procedure. A hybrid model that combines deep learning and machine learning as an AI building block was used for melanoma detection for the first time. The HAM10000 (ISIC 2018) and ISIC 2020 datasets were used to measure the developed system’s performance after stabilization. The paper demonstrated the effectiveness of the proposed approach in removing hair from dermoscopic images, which is a crucial preprocessing step in melanoma detection. However, the approach depends on the quality of the input images, and low-quality images may negatively impact the performance.

Choudhary et al. [72] proposed a neural-network-based method to distinguish between two kinds of skin lesions in dermoscopic images. The proposed solution was divided into four stages: initial image processing, skin lesion segmentation, feature extraction, and DNN-based classification. In the first stage, a median filter was applied to remove noise. The locations of the skin lesions were then segmented using Otsu’s image-segmentation method. In the third stage, the skin lesion characteristics were extracted using the RGB color model, the 2D DWT, and the GLCM. In the fourth stage, the types of skin disease were classified using a backpropagation deep neural network and the Levenberg–Marquardt (LM) generalization approach to reduce the mean-squared error. The ISIC 2017 dataset was used to train and test the suggested deep learning model. With the DNN, the authors outperformed other state-of-the-art machine learning classifiers with an accuracy of 84.45%.
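
Otsu’s method, used above for lesion segmentation, selects the gray-level threshold that maximizes the between-class variance of the two resulting pixel groups. The following sketch works on a flat list of integer gray levels; the function name and the 256-level default are assumptions made for illustration.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximizes between-class variance.

    Pixels with value <= t form one class, pixels with value > t the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight0, sum0 = 0, 0
    for t in range(levels):
        weight0 += hist[t]      # number of pixels at or below t
        sum0 += t * hist[t]     # sum of their gray levels
        weight1 = total - weight0
        if weight0 == 0:
            continue            # lower class still empty
        if weight1 == 0:
            break               # upper class empty from here on
        mean0 = sum0 / weight0
        mean1 = (sum_all - sum0) / weight1
        between_var = weight0 * weight1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t
```

Applying the threshold as `[1 if p > t else 0 for p in pixels]` yields a binary mask; whether the lesion corresponds to the darker or the brighter class depends on the imaging modality and must be decided separately.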

Lembhe et al. [73] proposed a synthetic skin-cancer-screening method that works from a sequence of low-resolution (LR) visual images. To improve on image-processing and machine learning methods, a deep learning strategy was applied to super-resolution images, with image super-resolution (ISR) techniques used alongside convolutional neural network models such as VGG 16, ResNet, and Inception V3. The model was created with the help of the Keras backend and evaluated on LR images. The deep learning strategy on image super-resolution was also applied to adjust the layers of the neural networks used for training. The publicly available ISIC dataset was used to build the model.

A novel hybrid extreme learning machine (ELM) and teaching–learning-based optimization (TLBO) algorithm was developed by Priyadharshini et al. [74] as a flexible method for melanoma detection. The ELM is a single-hidden-layer feed-forward neural network that can be trained rapidly and accurately, while TLBO is an optimization technique used to fine-tune the network’s parameters for enhanced performance. In contrast to earlier studies, the authors combined the two methodologies to classify skin lesion images as benign or malignant, potentially increasing the accuracy of melanoma identification. However, the proposed method was tested on only a single skin cancer dataset, which is a drawback of the paper. The authors should have evaluated the algorithm on additional skin cancer datasets to establish its practicality and robustness.

For the purpose of detecting melanoma skin cancer, Dandu et al. [75] introduced a method that combines transfer learning with hybrid classification. To increase the accuracy of melanoma detection, the authors developed a hybrid framework that uses pretrained deep learning models for segmentation and incorporates a hybrid classification technique. The development of a hybrid strategy that successfully combines transfer learning and classification approaches was one of the paper’s contributions. The authors increased melanoma detection accuracy by modifying a pretrained convolutional neural network for skin lesion segmentation and by mixing hand-crafted features with segmented lesion features in the classification process. The proposed approach was evaluated in terms of accuracy, precision, and recall on a benchmark dataset. However, the paper had certain limitations. Clinical validation is needed to evaluate the generalizability and dependability of the suggested strategy across a range of demographics and skin types. The paper could also have provided more thorough arguments and justifications for the features used in the hybrid classification technique, and a more detailed explanation of these features and their significance to melanoma diagnosis would improve reproducibility and comprehension. Finally, despite the paper’s promise of increased performance in comparison to current procedures, it lacked a thorough comparative analysis against cutting-edge techniques. Such an analysis would offer a more complete evaluation of the advantages and disadvantages of the suggested strategy in comparison to other pertinent methods.

In this section, skin lesion detection using machine learning and deep learning was examined. Table 8 presents a summary of all the prior studies discussed in this study, and their performance is presented in Table 9.