Abstract

Intestinal parasitic infections pose a grave threat to human health, particularly in tropical and subtropical regions. The traditional manual microscopy system of intestinal parasite detection remains the gold standard procedure for diagnosing parasite cysts or eggs. This approach is costly, time-consuming (30 min per sample), highly tedious, and requires a specialist. However, computer vision, based on deep learning, has made great strides in recent years. Despite the significant advances in deep convolutional neural network-based architectures, little research has been conducted to explore these techniques’ potential in parasitology, specifically for intestinal parasites. This research presents a novel proposal for state-of-the-art transfer learning architecture for the detection and classification of intestinal parasite eggs from images. The ultimate goal is to ensure prompt treatment for patients while also alleviating the burden on experts. Our approach comprised two main stages: image pre-processing and augmentation in the first stage, and YOLOv5 algorithms for detection and classification in the second stage, followed by performance comparison based on different parameters. Remarkably, our algorithms achieved a mean average precision of approximately 97% and a detection time of only 8.5 ms per sample for a dataset of 5393 intestinal parasite images. This innovative approach holds tremendous potential to form a solid theoretical basis for real-time detection and classification in routine clinical examinations, addressing the increasing demand and accelerating the diagnostic process. Our research contributes to the development of cutting-edge technologies for the efficient and accurate detection of intestinal parasite eggs, advancing the field of medical imaging and diagnosis.

1. Introduction

Intestinal parasitic infections have significant implications for public health worldwide, particularly in developing and underdeveloped countries. According to the World Health Organization, infectious and parasitic diseases affect approximately 24% of the global population, and in 2020, preventive chemotherapy was administered to 836 million children globally [1]. These infections often result in diarrhea, malnutrition, anemia, and other symptoms, primarily affecting children. Over 100 species of intestinal parasites reproduce daily, hatching at a rate of 200,000 eggs per day. Manual light microscopy serves as the standard method for diagnosing parasitic diseases [2,3]. However, parasite species identification and quantification based on microscopy are complex, labor-intensive, and time-consuming processes that require both a microscope and expert knowledge [4,5]. Additionally, analyzing microscopic images poses challenges for human experts due to the variations and uncertainties in morphological features, such as shape, staining color, and density of parasite species.

Previously, the automated analysis of intestinal protozoa was limited by the lack of automatic recognition algorithms for microorganisms under the microscope. However, in recent years, deep learning architectures have revolutionized various machine-learning tasks, particularly in the field of medical image identification and classification. Among these architectures, convolutional neural networks (CNNs) have played a crucial role in significantly improving performance [6,7,8]. Unlike traditional machine learning methods, deep learning architectures extract features directly from images, leading to enhanced validation and detection accuracy [9,10]. Deep learning applied to the detection of Plasmodium (the malaria parasite) in blood has achieved detection performance close to that of human experts [11,12,13]. Amal H. A. et al. developed a deep learning-based model by conducting experiments on 13,750 parasitized and 13,750 non-parasitized samples, achieving an estimated accuracy of 97% in recognizing the samples [14]. In a recent study, Z. Jing et al. [15] presented an approach for fecal cell detection using the Faster R-CNN model combined with the ResNet-152 convolutional neural network architecture. The proposed algorithm achieved an average precision of 84% on a dataset of 40,560 fecal images. Additionally, the detection time per sample was reduced to 723 ms, enabling rapid analysis and diagnosis of fecal specimens in clinical settings.

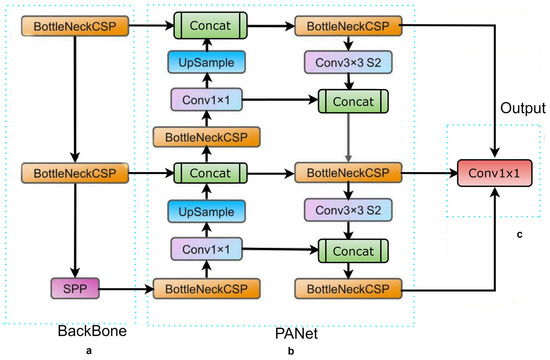

Here, we address the issue of image pre-processing and augmentation for rapid automatic detection and classification in raw parasite images. We adopted a multi-step routine for data preparation, neural network configuration, model training, and prediction analysis. We applied the You Only Look Once (YOLO) CNN-based model for object detection [15,16,17], specifically the YOLOv5 architecture, for detection and classification of samples in the dataset. YOLO is a real-time object detector, and YOLOv5 builds on YOLOv1-YOLOv4. It has performed strongly on two standard object detection datasets: Pascal VOC (visual object classes) [18] and Microsoft COCO (common objects in context) [19]. Figure 1 depicts the YOLOv5 network architecture. We used YOLOv5 in this experiment as our initial learner for three reasons. First, YOLOv5 uses CSPDarknet as its backbone, which incorporates a cross stage partial network (CSPNet) [20] into Darknet. CSPNet reduces the model’s parameters and FLOPs (floating-point operations) and shrinks the model size while preserving inference speed and accuracy; it does so by integrating gradient changes into the feature map, which resolves the problem of repeated gradient information in large-scale backbones. Detecting intestinal parasites requires both speed and accuracy, and the compact model size also makes inference efficient on edge devices with limited resources. Second, YOLOv5 uses the path aggregation network (PANet) [21] as its neck to improve information flow. PANet adopts a feature pyramid network (FPN) structure with an improved bottom-up path that enhances the transmission of low-level features. Furthermore, adaptive feature pooling links feature grids at all levels and allows essential information from each feature level to propagate to the next subnetwork. PANet thereby improves the use of precise localization signals in the lower layers, which increases the object’s location accuracy. Third, the YOLO layer, which serves as the head of YOLOv5, generates feature maps in three different sizes (18 × 18, 36 × 36, and 72 × 72), giving the model the ability to handle objects of varying sizes, from small to medium to large. By analogy, a forest fire typically progresses from a small-scale ground fire to a medium-sized trunk fire, and eventually to a large-scale canopy fire; multi-scale detection allows a YOLOv5 model to track such size changes accurately, which supports faster and more effective fire suppression.
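The relationship between input resolution and the three output grid sizes can be illustrated with a small sketch. It assumes the standard YOLOv5 detection strides of 8, 16, and 32, which are not stated in the text; the function name is hypothetical. Under that assumption, the 18/36/72 grids quoted above correspond to a 576 × 576 input, whereas a 416 × 416 input would give 13/26/52.

```python
def detection_grid_sizes(input_size, strides=(8, 16, 32)):
    """Return the side length of each square output grid for a square input.

    The strides are an assumption (the usual YOLOv5 P3/P4/P5 heads),
    not a value taken from the paper.
    """
    if any(input_size % s for s in strides):
        raise ValueError("input_size should be divisible by every stride")
    return [input_size // s for s in strides]


if __name__ == "__main__":
    print(detection_grid_sizes(576))  # [72, 36, 18]
    print(detection_grid_sizes(416))  # [52, 26, 13]
```

The finest grid (stride 8) is the one that resolves the smallest objects; the coarsest grid (stride 32) covers the largest ones.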

Figure 1.

The YOLOv5 network architecture comprises three essential components: (a) the Backbone (CSPDarknet), (b) the Neck (PANet), and (c) the Output (YOLO Layer). The process begins by feeding the data into the Backbone, which performs feature extraction. The extracted features are then passed to the PANet for feature fusion. Finally, the YOLO Layer generates detection results based on the fused features.

We applied data augmentation techniques to the source parasite images to introduce more regularization and diversity into the training dataset. We attempted to optimize the model by applying an additional dataset and using augmentation and transformation to enhance the results. Using the trained YOLOv5 models, we were able to accurately detect parasites in a separate test set of microscopic images.

2. Related Work

Previous efforts in the computational diagnosis of intestinal parasitic infections have primarily focused on improving detection accuracy through hand-engineered feature extraction techniques, which require specialized skills and experts [22]. However, recent developments in pre-trained deep learning models have the potential to revolutionize medical image analysis, especially in scenarios where data are limited and heterogeneous [23]. These models [24,25,26] can serve as feature extractors for a new model trained on a dataset with fewer images, improving performance in different computer vision tasks. Recent studies have applied pre-trained deep learning models to classifying and detecting parasitized samples in microscopic images, achieving classification and detection accuracies above 95% and outperforming traditional machine learning algorithms [27]. However, most of these studies have focused on the detection tasks of intestinal parasite images, which are less sensitive and may miss cysts or eggs of parasites due to morphological features. Therefore, there is a need to scale up the approach of transfer learning for object detection in intestinal parasitic infections, which could provide more insights and considerably improve parasite detection.

In recent years, the You Only Look Once (YOLO) model for object detection has gained significant attention and has been continuously developed. YOLO directly detects multiple objects by predicting multiple bounding boxes and class probabilities, making it a popular one-stage detection model [15,17,28]. With subsequent improvements in accuracy and computational performance, YOLO-based architectures, such as YOLOv4 [29], YOLOv5 [30], and YOLOv8 [31], have been successfully applied in various research works. Although YOLOv8 is the latest version, there is still ongoing debate about how the YOLO versions compare. YOLOv5 has been reported to offer higher precision and speed than other state-of-the-art algorithms, such as Faster R-CNN [32] and SSD [26], as well as higher accuracy and detection speed than YOLOv4 and YOLOv8. However, due to the complexity of the YOLOv8 network, a more simplified version of YOLOv5 has been designed to maximize detection speed and improve computational efficiency. This model has been applied in various applications, including the detection of pine wilt disease, trash, and electronic components. In our research, we focus on YOLOv5 because of its fast detection and low memory usage on low-end GPU devices. We propose using this model for the automatic recognition of six common classes of protozoan cysts and helminthic eggs in parasitic products of stool examination, which is suitable for remote areas with limited laboratory testing capabilities. By utilizing the YOLOv5 model, we aim to improve the detection and classification of protozoan cysts and helminthic eggs for more effective and prompt treatment.

3. Material and Methods

3.1. Dataset Collection

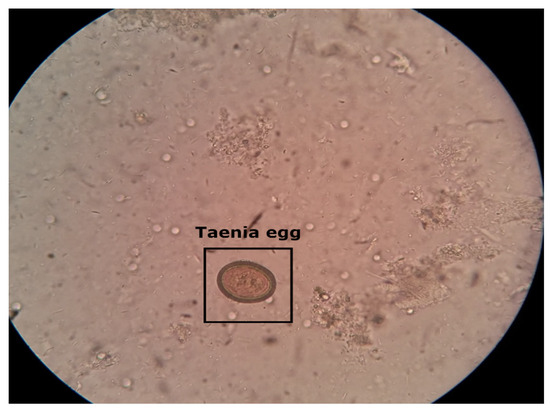

The intestinal parasite dataset used in this research contains 10× magnification microscopic images with a resolution of 416 × 416 pixels. Cysts or eggs of five different types of intestinal parasites were collected: hookworm eggs, Hymenolepis nana, Taenia, Ascaris lumbricoides, and Fasciolopsis buski. These images were obtained from Mulago Referral Hospital, located in Uganda, as well as the IEEE Dataport [33,34]. The intestinal parasite images were annotated using the open-source data annotation graphical user interface (GUI) tool “Roboflow” (https://app.roboflow.com/) (accessed on 17 April 2023). Figure 2 depicts a microscopic stool image captured using a smartphone. The test performance of the model can be affected by the small number of images available for training. Therefore, to reduce overfitting during model training, data augmentation techniques were used, including vertical and rotational augmentation.
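When an image is flipped or rotated, its bounding-box labels have to be transformed in the same way. The following is a minimal sketch of that bookkeeping, not the Roboflow implementation. It assumes that "vertical" augmentation means a vertical flip and that "rotational" augmentation is a 90° clockwise rotation, and that each box is stored as normalised (x_center, y_center, width, height) values in [0, 1]; the function names are hypothetical.

```python
def flip_vertical(box):
    """Mirror a normalised (xc, yc, w, h) box top-to-bottom."""
    xc, yc, w, h = box
    return (xc, 1.0 - yc, w, h)


def rotate90_clockwise(box):
    """Rotate a normalised (xc, yc, w, h) box by 90 degrees clockwise.

    A point (x, y) moves to (1 - y, x), and width and height swap.
    """
    xc, yc, w, h = box
    return (1.0 - yc, xc, h, w)


if __name__ == "__main__":
    egg = (0.5, 0.25, 0.2, 0.4)     # hypothetical annotation
    print(flip_vertical(egg))       # (0.5, 0.75, 0.2, 0.4)
    print(rotate90_clockwise(egg))  # (0.75, 0.5, 0.4, 0.2)
```

Applying the vertical flip twice, or the rotation four times, returns the original box, which is a convenient sanity check for label transforms.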

Figure 2.

Microscopic stool sample image of a Taenia parasite captured with a smartphone. Taenia is a genus of intestinal parasites that primarily infects the intestines of humans and other animals.

The images were resized to 416 × 416 for the YOLOv5 algorithms. Finally, the dataset was split into training, validation, and testing sets in the ratios of 70%, 20%, and 10%, respectively.
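A 70/20/10 split of this kind can be sketched as follows. This is an illustrative version using only the standard library; the actual split was presumably produced by the annotation tool, and the function name, seed, and file names here are hypothetical.

```python
import random


def split_dataset(items, ratios=(0.7, 0.2, 0.1), seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * ratios[0])
    n_val = round(len(items) * ratios[1])
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


if __name__ == "__main__":
    names = [f"img_{i:04d}.jpg" for i in range(100)]  # hypothetical file names
    train, val, test = split_dataset(names)
    print(len(train), len(val), len(test))  # 70 20 10
```

Fixing the seed keeps the three subsets identical between runs, so that results from different models are compared on the same images.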

3.2. Data Preparation

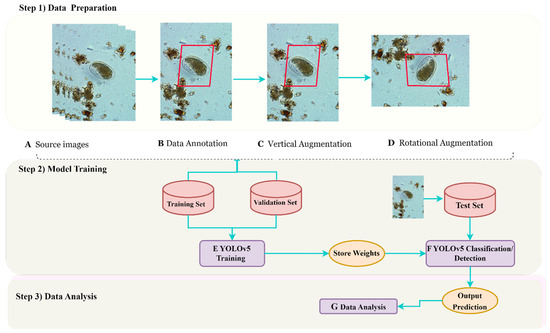

Figure 3 provides an overview of the approach implemented for parasite image detection, encompassing data preparation, annotation, augmentation, training, evaluation, and data analysis. To annotate the parasite images, we used the data annotation tool “Roboflow”. Its graphical user interface (GUI) was used to draw bounding boxes around both positive and negative images of intestinal parasites. Each bounding box encompassed the entire parasite species depicted in the image, and the annotations were saved in YOLO format, accompanied by corresponding text files. To create a more diverse and robust training dataset, we employed data augmentation, applying various transformations to each source image to obtain a larger and more varied dataset. The resulting unique images served as the training dataset. For evaluation purposes, we used a separate test dataset, consisting solely of unannotated parasite images, to assess the performance of our model. We expect this approach to contribute to accurate and efficient parasite detection. Section 3.3 focuses on deep learning, specifically YOLOv5 training and prediction.
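For readers unfamiliar with the YOLO annotation format mentioned above: each text file holds one line per object, consisting of a class index followed by the box centre, width, and height, all normalised by the image size. The sketch below converts a pixel-coordinate box into such a line; the function name, the class index, and the example coordinates are hypothetical.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space corner box into a YOLO label line.

    Output layout: "<class> <x_center> <y_center> <width> <height>",
    with the four box values normalised to the range [0, 1].
    """
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # Hypothetical egg of class 2 in a 416 x 416 image.
    print(to_yolo_line(2, 104, 52, 312, 260, 416, 416))
    # 2 0.500000 0.375000 0.500000 0.500000
```

Because the values are normalised, the same label file remains valid when the image is resized, for example to 416 × 416.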

Figure 3.

This flow chart illustrates the process of data preparation and analysis for the YOLOv5s and YOLOv5l models. Step (1) begins with the collection of parasite images, which are then annotated and augmented using techniques such as vertical and rotational transformations. In Step (2), the YOLOv5 models are trained using both the training and validation datasets, and the resulting PyTorch weight files are used for prediction on the test dataset: with the trained weights, the YOLOv5 detection and classification function generates output predictions. Finally, in Step (3), data analysis techniques are employed to evaluate the models’ performance.

3.3. Deep Learning: YOLOv5 Training and Prediction

In this study, we employed YOLOv5 algorithms to detect parasites accurately and efficiently. We obtained the optimal weights by training on our dataset of 5393 parasite images, encompassing various resolutions. To improve our results, we experimented with different variants of the YOLOv5 algorithm and trained both the YOLOv5s and YOLOv5l object detection models.

We selected these particular models based on their ease of implementation on freely available cloud computing platforms, such as Google Colaboratory, which makes them accessible to a wider audience. Additionally, the YOLOv5 family offers a range of pre-written model architectures, each tailored to specific speed and accuracy requirements under different conditions. Our models were trained using a dataset of approximately 950 MB, and the training process took only one hour on a Tesla T4 GPU (China). The learned weights and biases of our models were automatically saved into a PyTorch weights file, which simplifies reusing and improving our results.

As shown in Figure 3, this study showcases the potential of these advanced techniques to revolutionize parasite detection, leading to improved healthcare outcomes worldwide and potentially saving numerous lives. Accurate and efficient parasite detection is particularly crucial in regions with limited access to healthcare resources, as it can have a significant impact on disease prevention and treatment efforts.

The YOLOv5 object recognition code was cloned from the Ultralytics YOLOv5 repository [35]. The YOLOv5s and YOLOv5l models were trained using the train.py script for 100 epochs with an image size of 416 × 416 and a batch size of 16. The models were used for both the classification and detection tasks. Both models were trained with only the default hyperparameters. The trained weights were then used to make predictions on the test dataset, using the detect.py script with a 416 × 416 image size. Models were trained and tested on hardware provided by the Google Colaboratory cloud computing service.
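The training and prediction settings described above can be expressed as command lines for the repository's train.py and detect.py scripts. The sketch below only assembles those commands; it assumes the usual flags of the Ultralytics YOLOv5 scripts (`--img`, `--batch-size`, `--epochs`, `--data`, `--weights`, `--source`). The dataset file `parasites.yaml`, the image folder, and the weights path are hypothetical placeholders, since the text does not name them.

```python
import shlex


def train_command(weights, data_yaml, img=416, batch_size=16, epochs=100):
    """Build the argument list for a YOLOv5 training run."""
    return ["python", "train.py",
            "--img", str(img),
            "--batch-size", str(batch_size),
            "--epochs", str(epochs),
            "--data", data_yaml,      # hypothetical dataset description file
            "--weights", weights]     # pre-trained checkpoint to start from


def detect_command(weights, source, img=416):
    """Build the argument list for running detection with trained weights."""
    return ["python", "detect.py",
            "--weights", weights,
            "--img", str(img),
            "--source", source]       # hypothetical folder of test images


if __name__ == "__main__":
    for model in ("yolov5s.pt", "yolov5l.pt"):
        print(shlex.join(train_command(model, "parasites.yaml")))
    # Assumed location of the best checkpoint after training.
    print(shlex.join(detect_command("runs/train/exp/weights/best.pt",
                                    "test/images")))
```

The printed lines can be pasted into a notebook cell or passed to `subprocess.run` from inside the cloned repository.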

In conclusion, this groundbreaking study highlights the power of advanced machine learning techniques in addressing real-world challenges. By utilizing state-of-the-art algorithms and leveraging cloud computing platforms, we can enhance our ability to detect and combat diseases more effectively. This study is a promising step towards transforming healthcare outcomes globally and advancing the field of machine learning.

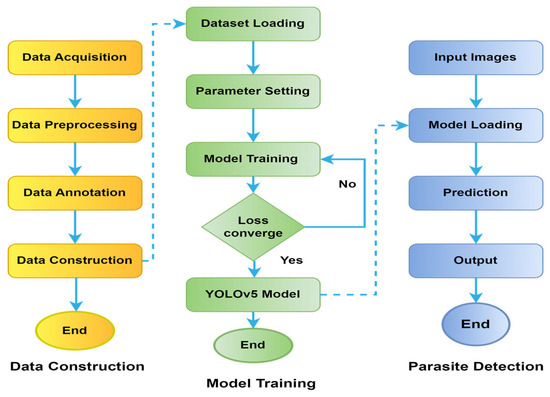

3.4. Experimental Platform

The experiment was executed on Google Colab as the base environment, with an NVIDIA K80/T4 GPU (Taiwan) at a 0.82 GHz/1.59 GHz GPU memory clock and 12 GB/16 GB of GPU memory. The models were built and trained with the open-source library PyTorch [36], which provides libraries for building the main architecture of a deep learning model. Figure 4 presents a detailed flowchart of the dataset, as well as the training and detection processes of the YOLOv5 model used in this experiment.

Figure 4.

Flowchart of YOLOv5 model for dataset, training, and detection processes.

3.5. Statistical Analyses

To assess the experiments conducted with the trained YOLOv5 algorithms, Precision, Recall, F1-score, and mAP measures are used for evaluation. The computation methods are provided in Equations (1)–(5).

$$\text{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}$$

In this context, the abbreviations TP, TN, FP, and FN represent True Positive (correct detections), True Negative, False Positive (incorrect detections), and False Negative (missed detections), respectively. The F1-score metric, defined in Equation (3), provides a comprehensive evaluation of the trained model by considering the trade-off between Recall and Precision. In addition to the F1-score, the Average Precision (AP), Equation (4), is employed to show the overall performance of the models across different thresholds, and the mean Average Precision (mAP), Equation (5), averages AP over the classes:

$$AP = \sum_{r} p(r)\,\Delta r \tag{4}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{5}$$

where $p(r)$ represents the precision at a given recall level $r$, $\Delta r$ denotes the change in recall from the previous level, $N$ is the number of classes, and $AP_i$ is the Average Precision of class $i$. This formulation aligns with established practices and provides a more meaningful representation of the Average Precision metric.
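As a worked illustration of these metrics, the sketch below computes Precision, Recall, and F1-score from detection counts, and Average Precision as the sum of precision times the change in recall. It is a plain textbook version under that definition, not the YOLOv5 implementation (which interpolates the precision-recall curve); all counts and curve points are hypothetical.

```python
def precision(tp, fp):
    """Fraction of detections that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Fraction of ground-truth objects that were detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def average_precision(recalls, precisions):
    """Sum p(r) * delta_r over recall levels in ascending order, from recall 0."""
    ap, prev_r = 0.0, 0.0
    for r, p in sorted(zip(recalls, precisions)):
        ap += p * (r - prev_r)
        prev_r = r
    return ap


def mean_average_precision(ap_per_class):
    """Average the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    p, r = precision(tp=90, fp=10), recall(tp=90, fn=30)  # hypothetical counts
    print(p, r, round(f1_score(p, r), 4))                 # 0.9 0.75 0.8182
    print(average_precision([0.5, 1.0], [1.0, 0.5]))      # 0.75
```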

4. Experiment Results

In our pursuit to advance parasite detection, we have gathered a diverse dataset of 5883 images featuring various parasites, including hookworm eggs, Hymenolepis nana, Taenia, Ascaris lumbricoides, and Fasciolopsis. Our collection spans across continents, with images acquired from the Mulago Referral Hospital in Uganda and the IEEE Dataport.

With this dataset, we developed a detection algorithm using YOLOv5, with each image in the development dataset annotated with rectangular bounding boxes as the ground truth. To further improve the robustness of our model, we applied data augmentation and split our dataset into training and testing sets. As we trained our models, we experimented with various image resolutions and settled on 416 × 416 pixels to achieve optimal performance.

The YOLOv5 algorithms demonstrated improved detection results for intestinal parasites in the test dataset collection. We computed and compared the precision, recall, F1-score, and AP (average precision) of the detected parasites between the YOLOv5s and YOLOv5l models. The training process utilized the SGD optimizer and lasted for 100 epochs, being completed in 1.04 h for YOLOv5l and 0.918 h for YOLOv5s. Additionally, we employed YOLOv5 features to augment the training images by cropping, adjusting the dynamic range, and changing the scale during the training process. YOLOv5 applied image space and color space augmentations within the training dataset, presenting an original image plus three random images each time an image was loaded for training.

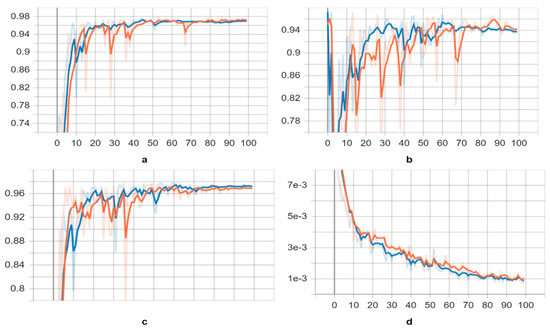

Initially, we focused on training the “s” and “l” variants of the YOLOv5 model using the training datasets. We selected the weights with the best available scores as the key indicators of the overall training stage. The scores of the trained neural networks exhibited a significant increase in the 20th and 24th epochs for both models, while the corresponding loss decreased (refer to Figure 5). These epochs marked the point at which the neural networks began identifying parasite species. However, as the training processes of both models extended to 100 epochs, the validation scores gradually declined after the 90th epoch, and the trained deep neural networks were no longer able to recognize parasites effectively.
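Selecting the best weights amounts to picking the epoch with the highest validation score. The text does not say which score was used; the sketch below assumes the default YOLOv5 fitness, a weighted sum of 0.1 × mAP(0.5) and 0.9 × mAP(0.5:0.95), and uses hypothetical per-epoch values and function names.

```python
def fitness(precision, recall, map50, map50_95,
            weights=(0.0, 0.0, 0.1, 0.9)):
    """Weighted combination of validation metrics (assumed YOLOv5 default)."""
    metrics = (precision, recall, map50, map50_95)
    return sum(w * m for w, m in zip(weights, metrics))


def best_epoch(history):
    """Return the index of the epoch with the highest fitness.

    history: one (precision, recall, mAP@0.5, mAP@0.5:0.95) tuple per epoch.
    """
    scores = [fitness(*row) for row in history]
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    history = [                      # hypothetical validation results
        (0.50, 0.50, 0.60, 0.30),
        (0.90, 0.90, 0.95, 0.70),
        (0.95, 0.95, 0.96, 0.65),
    ]
    print(best_epoch(history))       # 1
```

Keeping the checkpoint from the best epoch, rather than the last one, is what protects the final model from the late decline in validation scores described above.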

Figure 5.

Learning curves of the YOLOv5s and YOLOv5l trained models on parasite dataset, yellow and blue, respectively. As a metric, we used the (a) mean Average Precision (mAP) (0.5) score, (b) precision score, (c) recall score, and (d) class loss score.

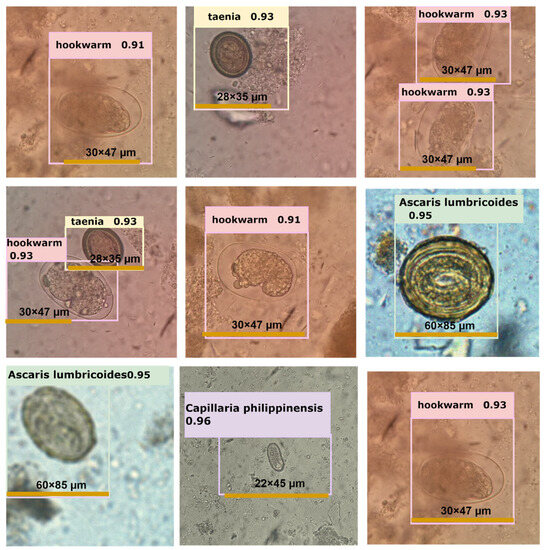

After applying pre-trained weights to both YOLOv5 models for validation, we obtained results presented in Table 1 and visualized in Figure 5. The trained neural networks exhibited improved recognition of parasite species, with slightly higher average mAP (0.5), mAP (0.5:0.95), recall, and precision scores, as indicated in Table 2. In Figure 6, we provide examples of parasite species detection by YOLOv5l models, showcasing their impressive performance, while also highlighting significant distortion in the bounding boxes around the detected species. It is worth noting that the evaluated performance varies depending on the approach used. Although the YOLOv5l model demonstrates higher accuracy, it also possesses more layers and parameters, resulting in slower processing compared to the YOLOv5s model, as illustrated in Table 3. Overall, our findings suggest that the YOLOv5 models are effective in detecting parasite species, offering valuable insights for future research in this field.
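The two mAP variants reported here differ in how strictly a predicted box must overlap the ground truth, measured by intersection over union (IoU). mAP (0.5) counts a detection as correct at IoU ≥ 0.5, while mAP (0.5:0.95) averages over the thresholds 0.50, 0.55, ..., 0.95. The following minimal sketch shows the IoU computation for corner-format boxes; the function names and example boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_thresholds():
    """Thresholds averaged over for mAP (0.5:0.95)."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


if __name__ == "__main__":
    truth = (0, 0, 10, 10)       # hypothetical ground-truth box
    pred = (5, 0, 15, 10)        # hypothetical prediction shifted sideways
    overlap = iou(truth, pred)   # 50 / 150, i.e. one third
    print([t for t in iou_thresholds() if overlap >= t])  # []
```

A distorted bounding box can therefore still count as correct under mAP (0.5) while lowering mAP (0.5:0.95), which penalises loose localisation.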

Table 1.

Overview of the intestinal parasites dataset obtained after applying data augmentation techniques. The dataset is divided into a training set (70%), validation set (20%) and testing set (10%).

Table 2.

Comparison of the YOLOv5s and YOLOv5l neural networks, trained using transfer learning from pre-trained weights, on the validation datasets.

Figure 6.

Depiction of several examples of intestinal parasite detection performed by YOLOv5l neural networks.

Table 3.

Parasites’ detection speed in YOLOv5 models.

Comparison with Object Detection Models

We evaluated the performance of our proposed methods against four state-of-the-art models: single-shot detector (SSD) [18], Faster R-CNN [19], AlexNet, and ResNet50. While SSD is a lightweight network that detects multiple objects in a single shot, Faster R-CNN works in two stages: one to identify regions of interest (ROI) and another to detect the object in each ROI using CNNs. We used VGG-16 and ResNet50 as the backbones for SSD and Faster R-CNN, respectively.

Table 4 summarizes the precision results of our methods compared to these four models. Our proposed YOLOv5 model outperforms Faster R-CNN, achieving the best average precision. It also outperforms SSD, despite having a smaller architecture and fewer parameters. The sliding window technique employed in our method works well for parasite egg detection, as it allows us to fix the size of each patch. Unlike natural images containing objects with various shapes and aspect ratios, parasite eggs have a consistent aspect ratio. Thus, the adaptive size of a bounding box offered by SSD and Faster R-CNN does not improve parasite egg detection.

Table 4.

Comparison with object detection models.

5. Discussion

This paper examines the use of deep learning techniques and cloud computing platforms to enhance parasite detection. We employed a dataset of 5883 images covering several parasites: hookworm eggs, Hymenolepis nana, Taenia, Ascaris lumbricoides, and Fasciolopsis. These images were sourced from the Mulago Referral Hospital in Uganda and the IEEE Dataport. We developed a detection algorithm using YOLOv5 and annotated each image in the development dataset with rectangular bounding boxes as ground truth. The dataset was split into training and testing sets, and data augmentation techniques were applied to enhance the model’s robustness. The YOLOv5 algorithms exhibited favorable detection results, with Precision, Recall, F1-score, and AP metrics calculated and compared between the YOLOv5s and YOLOv5l models. Image space and color space augmentations were applied to the training dataset, presenting an original image plus three random images each time an image was loaded for training.

We trained both the “s” and “l” variants of the YOLOv5 model using the training datasets, and the best weights were selected based on the overall training stage. The scores of the trained neural networks increased significantly in the 20th and 24th epochs for both models, accompanied by a corresponding decrease in loss, indicating that the neural networks had started to identify parasite species. However, as the training process extended to 100 epochs, the validation scores gradually declined after the 90th epoch, and the trained neural networks were unable to recognize parasites effectively.

Upon applying pre-trained weights to both YOLOv5 models for validation, the trained neural networks exhibited improved recognition of parasite species, with slightly higher average mAP (0.5), mAP (0.5:0.95), recall, and precision scores. The YOLOv5l model achieved higher accuracy, but it was slower than the YOLOv5s model due to its larger number of layers and parameters. The examples of parasite species detection by the YOLOv5l models show strong performance, although with significant distortion in the bounding boxes surrounding the detected species. Overall, our findings suggest that the YOLOv5 models are effective in detecting parasite species and offer valuable insights for future research in this field. The results also illustrate how machine learning techniques can address real-world challenges in detecting and combating disease.

6. Conclusions

In this study, our main objective was to enhance the performance of two deep-learning architectures, namely, YOLOv5s and YOLOv5l, in the domain of object detection, specifically focused on intestinal parasites. To achieve this, we conducted a comprehensive evaluation of various metrics, including precision, recall, F1-score, and mean average precision. Our assessment was performed on a dataset consisting of 5393 microscopic images of parasites, each with an input image resolution of 416 × 416. Through systematic analysis of these key parameters, our aim was to optimize the algorithms’ capabilities and improve their accuracy in detecting objects. Our findings revealed that YOLOv5l outperformed YOLOv5s in terms of overall accuracy. However, both algorithms demonstrated excellent performance in recognizing and accurately locating the five types of parasites within the images. Notably, the YOLOv5 algorithms exhibited significantly faster processing times compared to Faster R-CNN [37] and ResNet50 [27], which require selective search and consequently consume more time. The mAP performance for the hookworm, Taenia, and Fasciolopsis buski parasites was outstanding, achieving a score of 0.99. We attribute this success to the distinct characteristics of hookworms and the abundance of hookworm images in our dataset, which facilitated effective data enhancement during training. The mAP values for Ascaris lumbricoides were also commendable, scoring 0.92 for the YOLOv5 algorithms. However, due to the small dataset size and class imbalance, the trained model experienced some degree of overfitting. Despite this limitation, our proposed deep learning model for intestinal parasite detection achieved the highest mAP and demonstrated rapid detection and localization of hookworms, Taenia, Fasciolopsis buski, Ascaris lumbricoides, and Hymenolepis nana, with a mAP of approximately 97% and a detection time of 8.5 ms per image.
Future work should aim to expand the dataset size and include other intestinal parasites in the analysis to address the overfitting issue associated with small dataset sizes. Additionally, our study contributes to the development of effective deep learning models that can be utilized for identifying intestinal parasitic cysts or eggs in stool examinations.

Author Contributions

Conceptualization, S.K. (Satish Kumarand); methodology, G.A.; software, S.K. (Salahuddin Khan); validation, A.A.C.; formal analysis, S.K. (Satish Kumarand); investigation, A.A.C.; resources, M.A.M.A.; data curation, T.A.; writing—original draft preparation, S.K. (Satish Kumarand); writing—review and editing, S.K. (Satish Kumarand); visualization, S.K. (Salahuddin Khan); supervision, S.K. (Satish Kumarand); project administration, G.A.; funding acquisition A.A.C. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to the Deputyship for Research and innovation, Ministry of Education in Saudi Arabia for funding this research through the project number IFP-IMSIU-2023063. The authors also appreciate the Deanship of Scientific Research at Imam Mohammad Ibn Saud Islamic University (IMSIU) for supporting and supervising this project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used and/or analyzed during the current experiment for training and testing purposes are available from the corresponding author on reasonable request. All data annotation and augmentation tools are provided by Roboflow at the following link (https://app.roboflow.com/) (accessed on 17 April 2023). The “You Only Look Once” version 5 (YOLOv5) object detection architecture, written in Python by Ultralytics, is freely available under a GPL-3.0 license (https://github.com/ultralytics/YOLOv5) (accessed on 17 April 2023). Two Jupyter notebooks that are interactive implementations of the YOLOv5 methods for training and detection are provided with this publication. All pre-processed data used in this experiment can be made available upon reasonable request. Correspondence and requests for materials should be addressed to K.S.

Acknowledgments

We express our thanks to the laboratory experts from Mulago Referral Hospital, located in Uganda, and to the IEEE Dataport, who collected the parasitic species used in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dyer, O. African malaria deaths set to dwarf covid-19 fatalities as pandemic hits control efforts, WHO warns. BMJ 2020, 371, m4711.

- Berzosa, P.; de Lucio, A.; Romay-Barja, M.; Herrador, Z.; González, V.; García, L.; Fernández-Martínez, A.; Santana-Morales, M.; Ncogo, P.; Valladares, B.; et al. Comparison of three diagnostic methods (microscopy, RDT, and PCR) for the detection of malaria parasites in representative samples from Equatorial Guinea. Malar. J. 2018, 17, 333.

- Dahal, P.; Khanal, B.; Rai, K.; Kattel, V.; Yadav, S.; Bhattarai, N.R. Challenges in Laboratory Diagnosis of Malaria in a Low-Resource Country at Tertiary Care in Eastern Nepal: A Comparative Study of Conventional vs. Molecular Methodologies. J. Trop. Med. 2021, 2021, 3811318.

- Li, S.; Li, A.; Lara, D.A.M.; Marín, J.E.G.; Juhas, M.; Zhang, Y. Transfer Learning for Toxoplasma gondii Recognition. mSystems 2020, 5, e00445-19.

- Tangpukdee, N.; Duangdee, C.; Wilairatana, P.; Krudsood, S. Malaria Diagnosis: A Brief Review. Korean J. Parasitol. 2009, 47, 93–102.

- Rani, P.; Kotwal, S.; Manhas, J.; Sharma, V.; Sharma, S. Machine Learning and Deep Learning Based Computational Approaches in Automatic Microorganisms Image Recognition: Methodologies, Challenges, and Developments. Arch. Comput. Methods Eng. 2021, 29, 1801–1837.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; Depristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.

- Kumar, S.; Arif, T.; Alotaibi, A.S.; Malik, M.B.; Manhas, J. Advances Towards Automatic Detection and Classification of Parasites Microscopic Images Using Deep Convolutional Neural Network: Methods, Models and Research Directions. Arch. Comput. Methods Eng. 2022, 30, 2013–2039.

- Salvi, M.; Acharya, U.R.; Molinari, F.; Meiburger, K.M. The impact of pre- and post-image processing techniques on deep learning frameworks: A comprehensive review for digital pathology image analysis. Comput. Biol. Med. 2020, 128, 104129.

- Pratama, Y.; Fujimura, Y.; Funatomi, T.; Mukaigawa, Y. Parasitic Egg Detection and Classification by Utilizing the YOLO Algorithm with Deep Latent Space Image Restoration and GrabCut Augmentation. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 4311–4315.

- Gopakumar, G.P.; Swetha, M.; Siva, G.S.; Subrahmanyam, G.R.K.S.; Gopakumar, G.; Swetha, M.; Gorthi, S.S. Convolutional neural network-based malaria diagnosis from focus stack of blood smear images acquired using custom-built slide scanner. J. Biophotonics 2018, 11, e201700003.

- Rosado, L.; Da Costa, J.M.C.; Elias, D.; Cardoso, J.S. Mobile-Based Analysis of Malaria-Infected Thin Blood Smears: Automated Species and Life Cycle Stage Determination. Sensors 2017, 17, 2167.

- Díaz, G.; González, F.A.; Romero, E. A semi-automatic method for quantification and classification of erythrocytes infected with malaria parasites in microscopic images. J. Biomed. Inform. 2009, 42, 296–307.

- Alharbi, A.H.; Aravinda, C.V.; Lin, M.; Ashwini, B.; Jabarulla, M.Y.; Shah, M.A. Detection of Peripheral Malarial Parasites in Blood Smears Using Deep Learning Models. Comput. Intell. Neurosci. 2022, 2022, 3922763.

- Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2014, 111, 98–136.

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312.

- Wang, C.-Y.; Liao, H.-Y.M.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

- Wang, K.; Liew, J.H.; Zou, Y.; Zhou, D.; Feng, J. PANet: Few-Shot Image Semantic Segmentation with Prototype Alignment. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9197–9206.

- Nugroho, H.A.; Akbar, S.A.; Murhandarwati, E.E.H. Feature extraction and classification for detection malaria parasites in thin blood smear. In Proceedings of the 2015 2nd International Conference on Information Technology, Computer, and Electrical Engineering (ICITACEE), Semarang, Indonesia, 16–18 October 2015; Volume 1, pp. 197–201.

- Li, S.; Du, Z.; Meng, X.; Zhang, Y. Multi-stage malaria parasite recognition by deep learning. GigaScience 2021, 10, giab040.

- Yang, F.; Yu, H.; Silamut, K.; Maude, R.J.; Jaeger, S.; Antani, S. Parasite Detection in Thick Blood Smears Based on Customized Faster-RCNN on Smartphones. In Proceedings of the 2019 IEEE Applied Imagery Pattern Recognition Workshop, Washington, DC, USA, 15–17 October 2019.

- Zhang, C.; Jiang, H.; Jiang, H.; Xi, H.; Chen, B.; Liu, Y.; Juhas, M.; Li, J.; Zhang, Y. Deep learning for microscopic examination of protozoan parasites. Comput. Struct. Biotechnol. J. 2022, 20, 1036–1043.

- Nakasi, R.; Mwebaze, E.; Zawedde, A.; Tusubira, J.; Akera, B.; Maiga, G. A new approach for microscopic diagnosis of malaria parasites in thick blood smears using pre-trained deep learning models. SN Appl. Sci. 2020, 2, 1255.

- Suwannaphong, T.; Chavana, S.; Tongsom, S.; Palasuwan, D. Parasitic Egg Detection and Classification in Low-cost Microscopic Images using Transfer Learning. arXiv 2021, arXiv:2107.00968.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Michael, K.; TaoXie; Fang, J.; Imyhxy; Lorna; et al. Ultralytics/YOLOv5: V7.0—YOLOv5 SOTA Realtime Instance Segmentation. Zenodo 2022.

- Ji, S.-J.; Ling, Q.-H.; Han, F. An improved algorithm for small object detection based on YOLO v4 and multi-scale contextual information. Comput. Electr. Eng. 2023, 105, 108490.

- Wan, D.; Lu, R.; Wang, S.; Shen, S.; Xu, T.; Lang, X. YOLO-HR: Improved YOLOv5 for Object Detection in High-Resolution Optical Remote Sensing Images. Remote Sens. 2023, 15, 614.

- Terven, J.R.; Cordova-Esparza, D.M. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and Beyond. arXiv 2023, arXiv:2304.00501.

- Osaku, D.; Cuba, C.; Suzuki, C.; Gomes, J.; Falcão, A. Automated diagnosis of intestinal parasites: A new hybrid approach and its benefits. Comput. Biol. Med. 2020, 123, 103917.

- Quinn, J.A.; Nakasi, R.; Mugagga, P.K.B.; Byanyima, P.; Lubega, W.; Andama, A. Deep Convolutional Neural Networks for Microscopy-Based Point of Care Diagnostics. arXiv 2016, arXiv:1608.02989.

- Palasuwan, D.; Naruenatthanaset, K.; Kobchaisawat, T.; Chalidabhongse, T.; Nunthanasup, N.; Boonpeng, K.; Anantrasirichai, N. Parasitic Egg Detection and Classification in Microscopic Images. IEEE DataPort. Available online: https://ieee-dataport.org/competitions/parasitic-egg-detection-and-classification-microscopic-images (accessed on 19 May 2022).

- GitHub—Ultralytics/YOLOv5: YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite. Available online: https://github.com/ultralytics/YOLOv5 (accessed on 25 January 2023).

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the NIPS 2017 Workshop on Autodiff, Long Beach, CA, USA, 28 October 2017.

- Viet, N.Q. Parasite worm egg automatic detection in microscopy stool image based on Faster R-CNN. In Proceedings of the 3rd International Conference on Machine Learning and Soft Computing, Da Lat, Vietnam, 25–28 January 2019; pp. 197–202.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).