Machine Learning Approach for the Prediction of In-Hospital Mortality in Traumatic Brain Injury Using Bio-Clinical Markers at Presentation to the Emergency Department

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Setting

2.2. Definitions

2.3. Feature Selection for ML

2.4. Imputation for Missing Values

2.5. Prediction Models

2.6. Model Training, Evaluation, and Performance Metrics

3. Results

3.1. Characteristics of Study Population

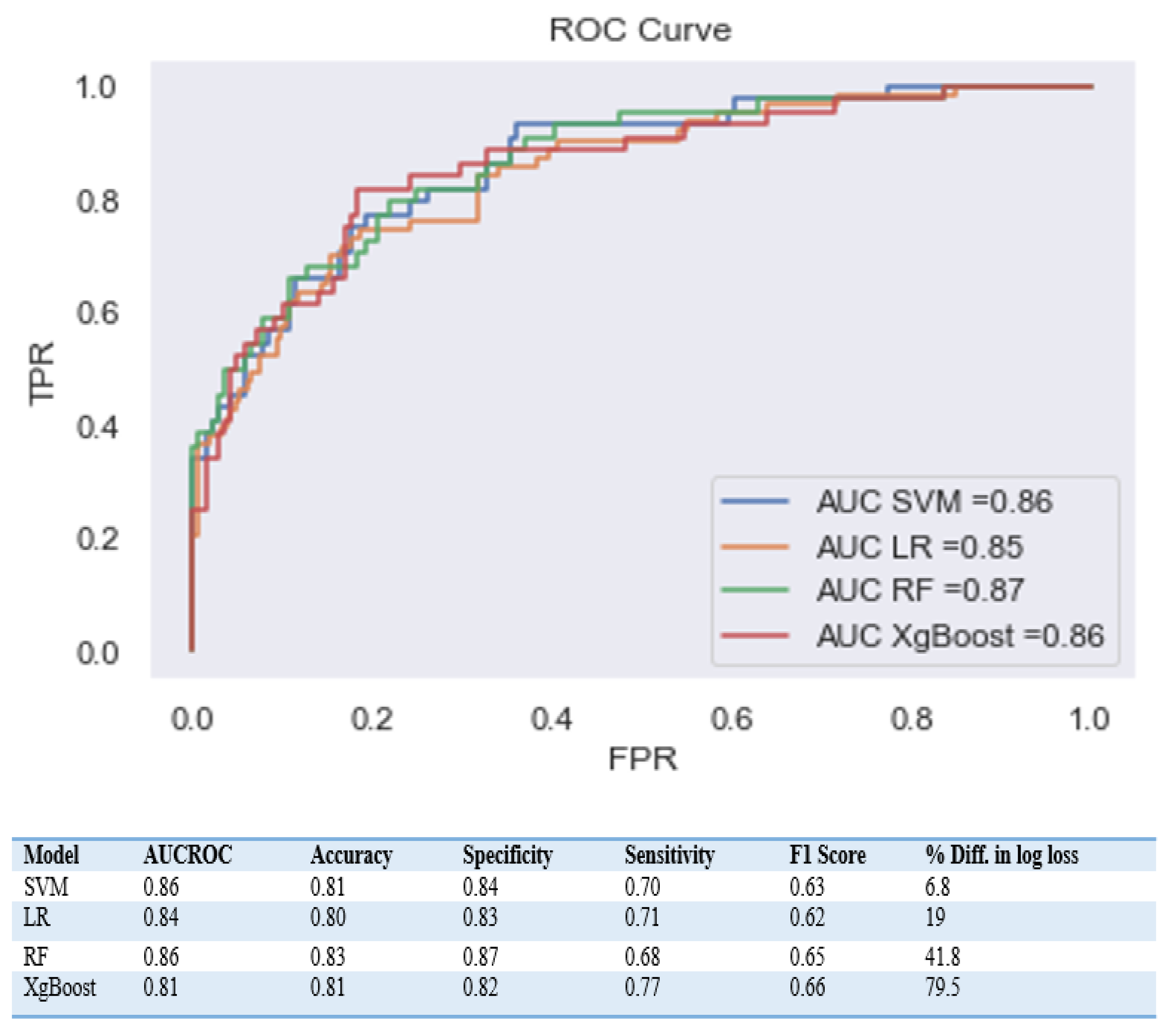

3.2. Comparing Machine Learning Model Performance

4. Discussion

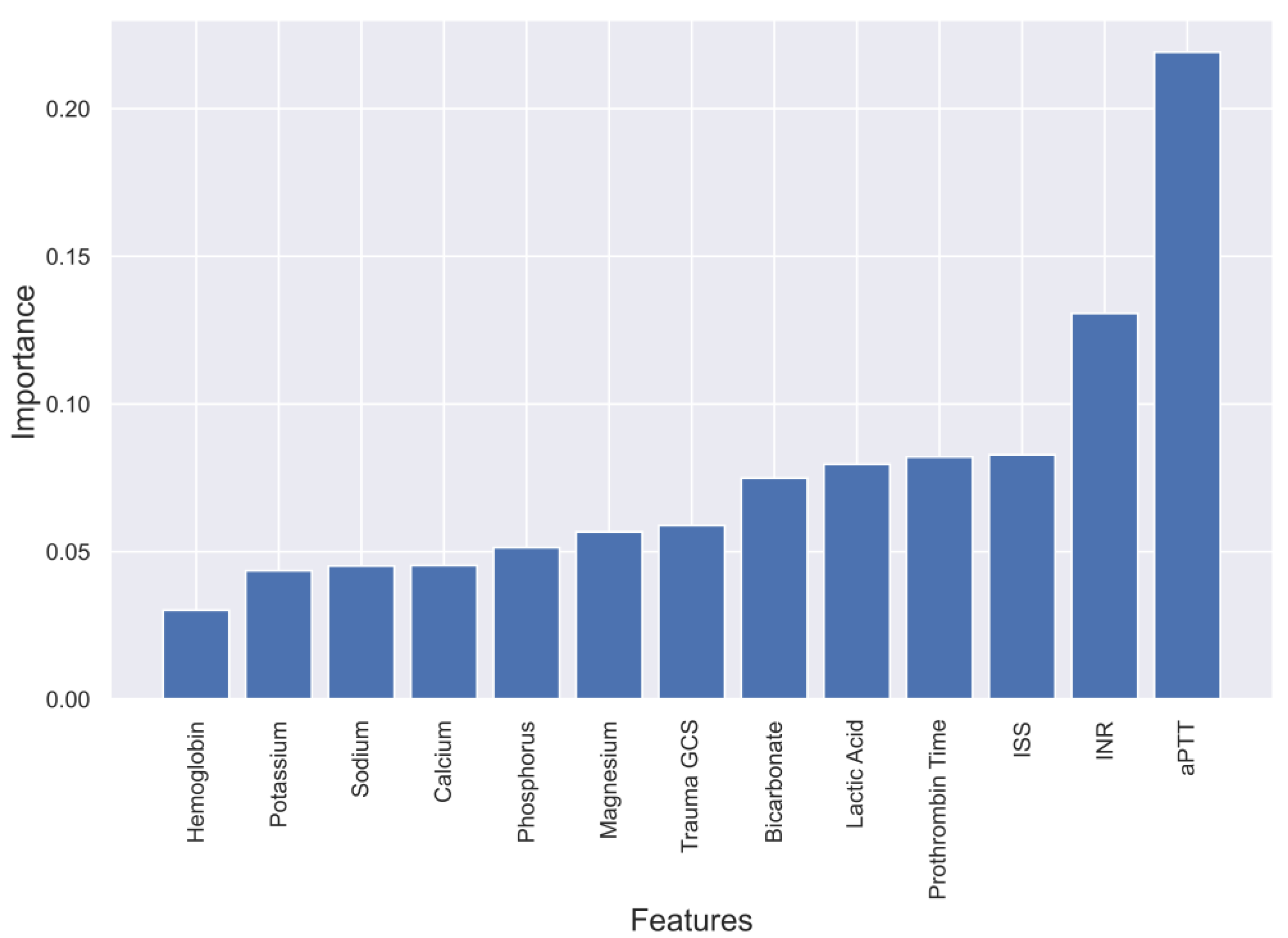

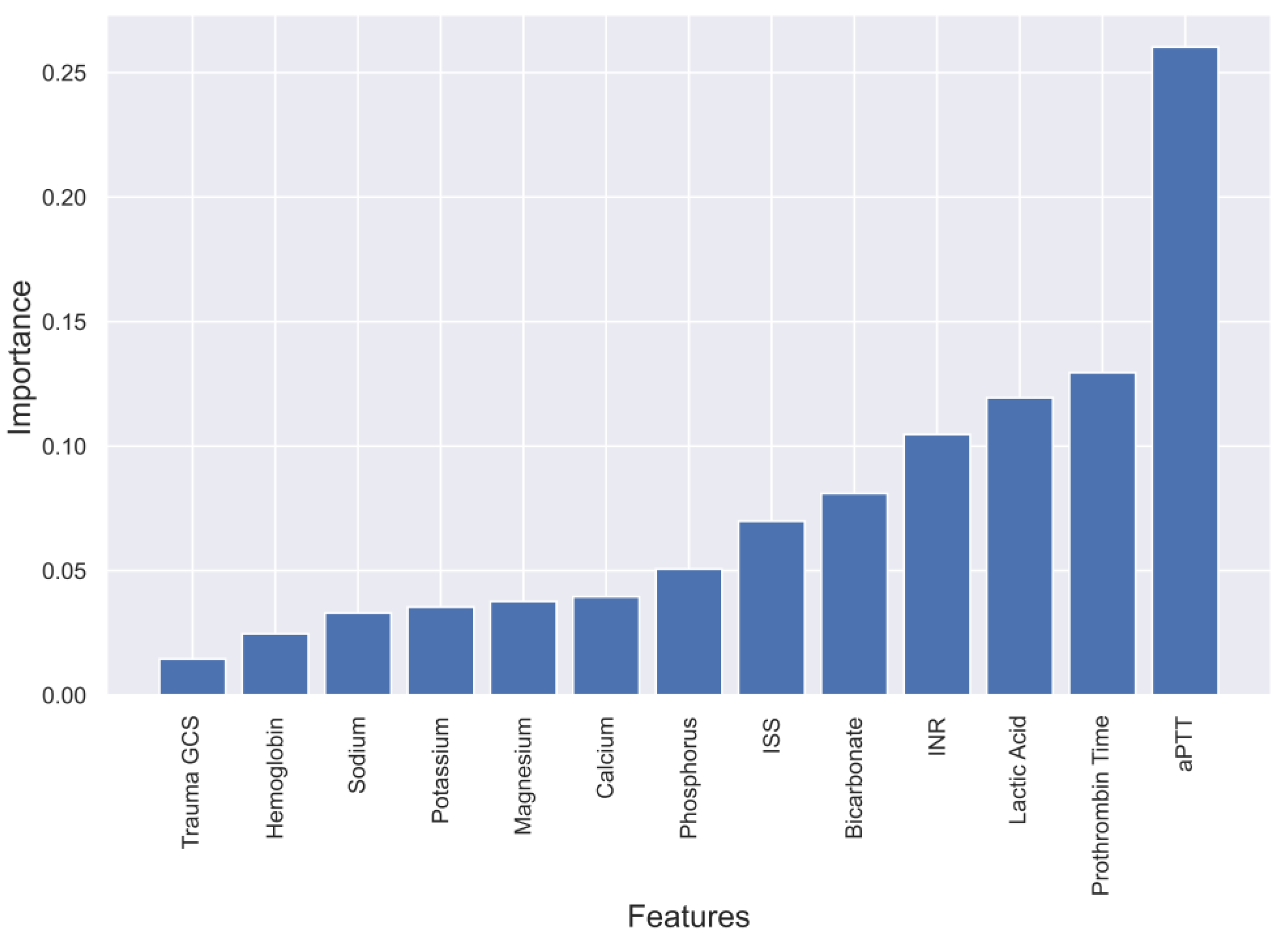

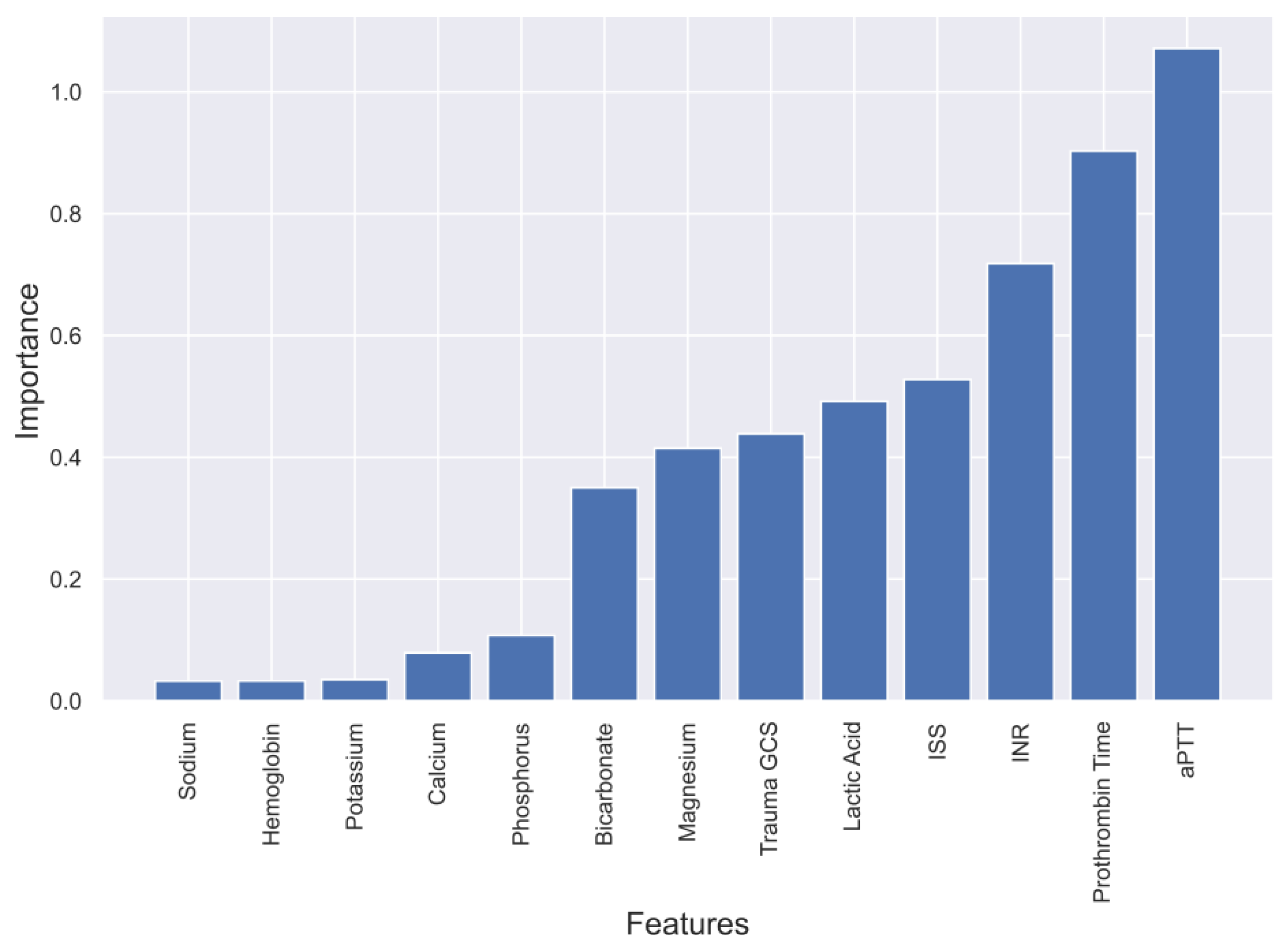

4.1. Evaluation of Four Models and Feature Selection Considerations

4.2. Comparing Different ML Algorithms

4.3. Translating the Model into Clinical Practice and Validation Steps

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Variable | Value |
|---|---|
| Age in years (mean ± standard deviation) | 32.2 ± 15.0 |
| Males (n, %) | 863 (93.6%) |
| Mechanism of injury (n, %) | |
| • Falls | 230 (24.9%) |
| • Road traffic injuries | 545 (59.1%) |
| • Other | 147 (15.9%) |
| Types of head injury (n, %) | |
| • Epidural hematoma | 204 (22.1%) |
| • Subdural hematoma | 321 (34.8%) |
| • Subarachnoid hemorrhage | 387 (42.0%) |
| • Compression of basal cisterns | 110 (11.9%) |
| • Effacement of sulci | 171 (18.5%) |
| • Midline shift | 206 (22.3%) |
| Glasgow Coma Scale (GCS) classification (n, %) | |
| • Mild (GCS 14–15) | 66 (7.2%) |
| • Moderate (GCS 9–13) | 166 (18.0%) |
| • Severe (GCS 3–8) | 681 (73.9%) |
| Injury Severity Score (ISS) (median, IQR) | 27 (18–34) |
| Initial serum electrolyte levels [median, interquartile range (IQR)] | |
| • Initial serum sodium | 141.0 (139–143) |
| • Initial serum potassium | 3.8 (3.4–4.1) |
| • Initial serum calcium | 2.0 (1.8–2.1) |
| • Initial serum magnesium | 0.7 (0.6–0.8) |
| • Initial serum phosphate | 0.9 (0.7–1.2) |
| Other clinical parameters (median, IQR) | |
| • Bicarbonate level | 19.6 (16.7–23.0) |
| • Lactic acid level | 2.9 (2.0–4.3) |
| • Prothrombin time | 12.0 (11.1–13.5) |
| • Activated partial thromboplastin time | 26.2 (24.0–31.0) |
| • International normalized ratio | 1.1 (1.1–1.3) |
| • Hemoglobin | 13.0 (11.3–14.4) |
| • Glucose | 8.0 (6.7–10.1) |
| Interventions and procedures (n, %) | |
| • Intubation | 827 (89.7%) |
| • Blood transfusion | 480 (52.1%) |
| • Massive transfusion protocol activation | 138 (15.0%) |
| • Intracranial pressure monitoring | 208 (24.6%) |
| • Craniotomy/craniectomy | 192 (20.8%) |
| Length of stay (LOS) in days (median, IQR) | |
| • Mechanical ventilator | 5 (2–11) |
| • Intensive care unit | 9 (4–17) |
| • Hospital | 17 (7–32) |
| In-hospital mortality (n, %) | 204 (22.1%) |
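
The percentages in the table can be cross-checked against the reported counts. The sketch below is illustrative only and is not part of the authors' analysis. It assumes that the three mechanism-of-injury categories are exhaustive and mutually exclusive, so that their sum gives the cohort size; the names `reported`, `ASSUMED_N`, `percent`, and `mismatches` are hypothetical and only a subset of rows is included.

```python
# Counts and reported percentages copied from the table above (subset of rows).
reported = {
    "Males": (863, 93.6),
    "Falls": (230, 24.9),
    "Road traffic injuries": (545, 59.1),
    "Other mechanism": (147, 15.9),
    "Severe (GCS 3-8)": (681, 73.9),
    "Intubation": (827, 89.7),
    "Intracranial pressure monitoring": (208, 24.6),
    "In-hospital mortality": (204, 22.1),
}

# ASSUMPTION (not stated in the table): the mechanism categories partition
# the cohort, so their counts sum to the total number of patients.
ASSUMED_N = 230 + 545 + 147


def percent(count, n=ASSUMED_N):
    """Percentage of the assumed cohort, rounded to one decimal place."""
    return round(100 * count / n, 1)


# Collect rows whose stated percentage does not match the recomputed one.
mismatches = [
    (name, stated, percent(count))
    for name, (count, stated) in reported.items()
    if percent(count) != stated
]

for name, stated, recomputed in mismatches:
    print(f"{name}: table says {stated}%, recomputed {recomputed}% of {ASSUMED_N}")
```

Under this assumption, every row in the subset reproduces except intracranial pressure monitoring, which may simply reflect a different denominator (for example, missing values) in the source data.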

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mekkodathil, A.; El-Menyar, A.; Naduvilekandy, M.; Rizoli, S.; Al-Thani, H. Machine Learning Approach for the Prediction of In-Hospital Mortality in Traumatic Brain Injury Using Bio-Clinical Markers at Presentation to the Emergency Department. Diagnostics 2023, 13, 2605. https://doi.org/10.3390/diagnostics13152605