EfficientNetB0 cum FPN Based Semantic Segmentation of Gastrointestinal Tract Organs in MRI Scans

Abstract

1. Introduction

2. Literature Review

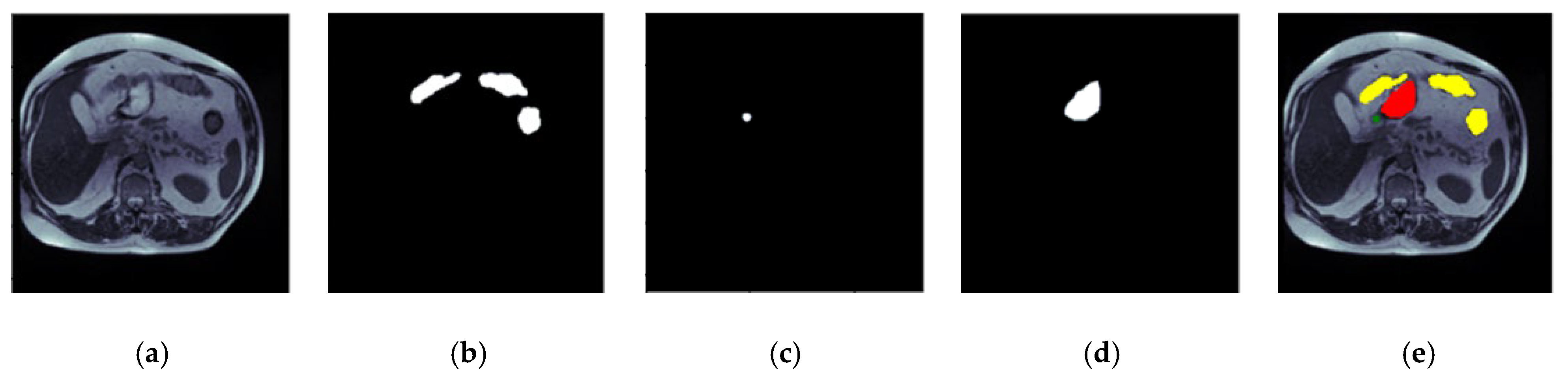

3. Input Dataset

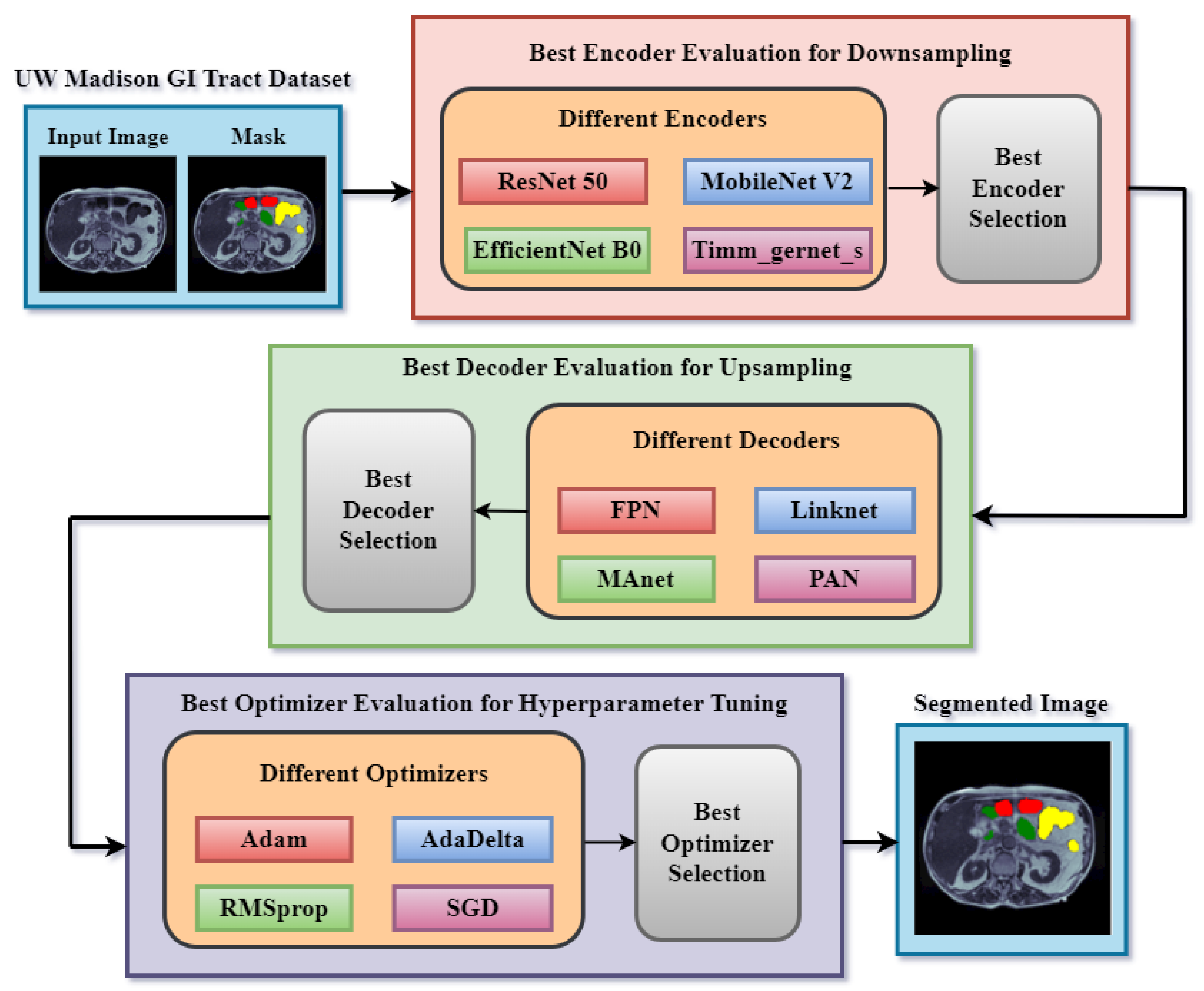

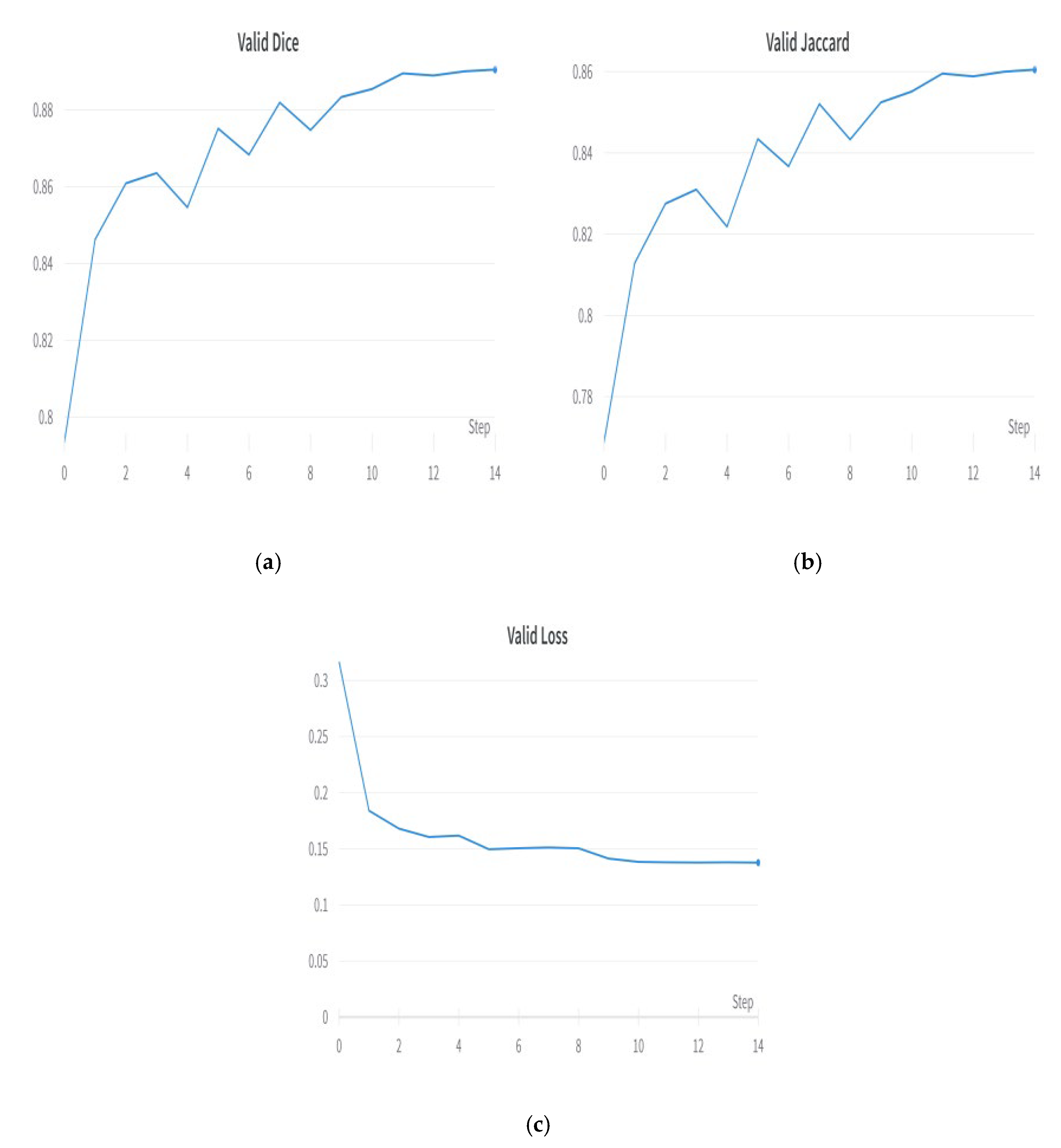

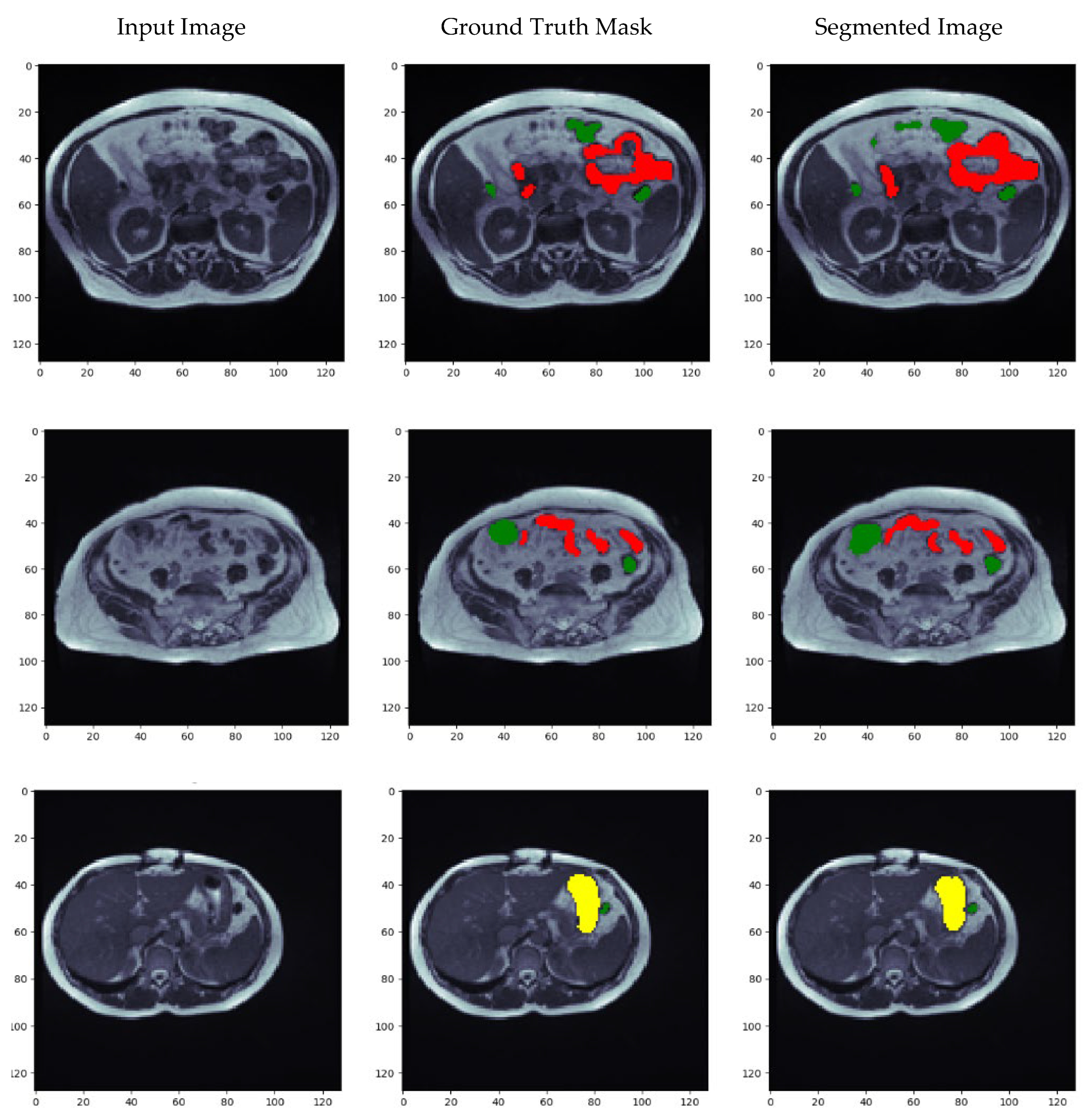

4. Proposed Methodology

5. Results and Discussions

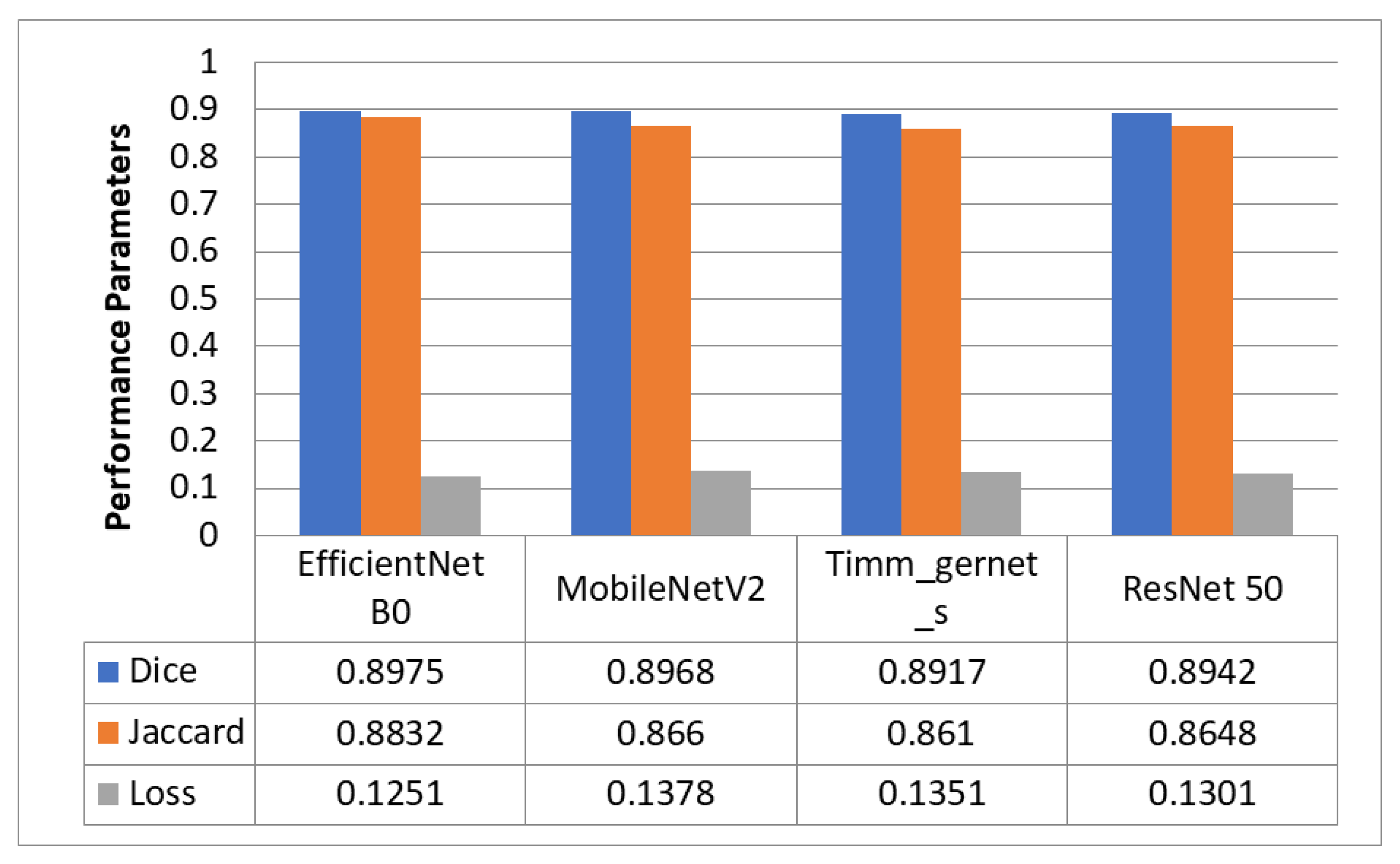

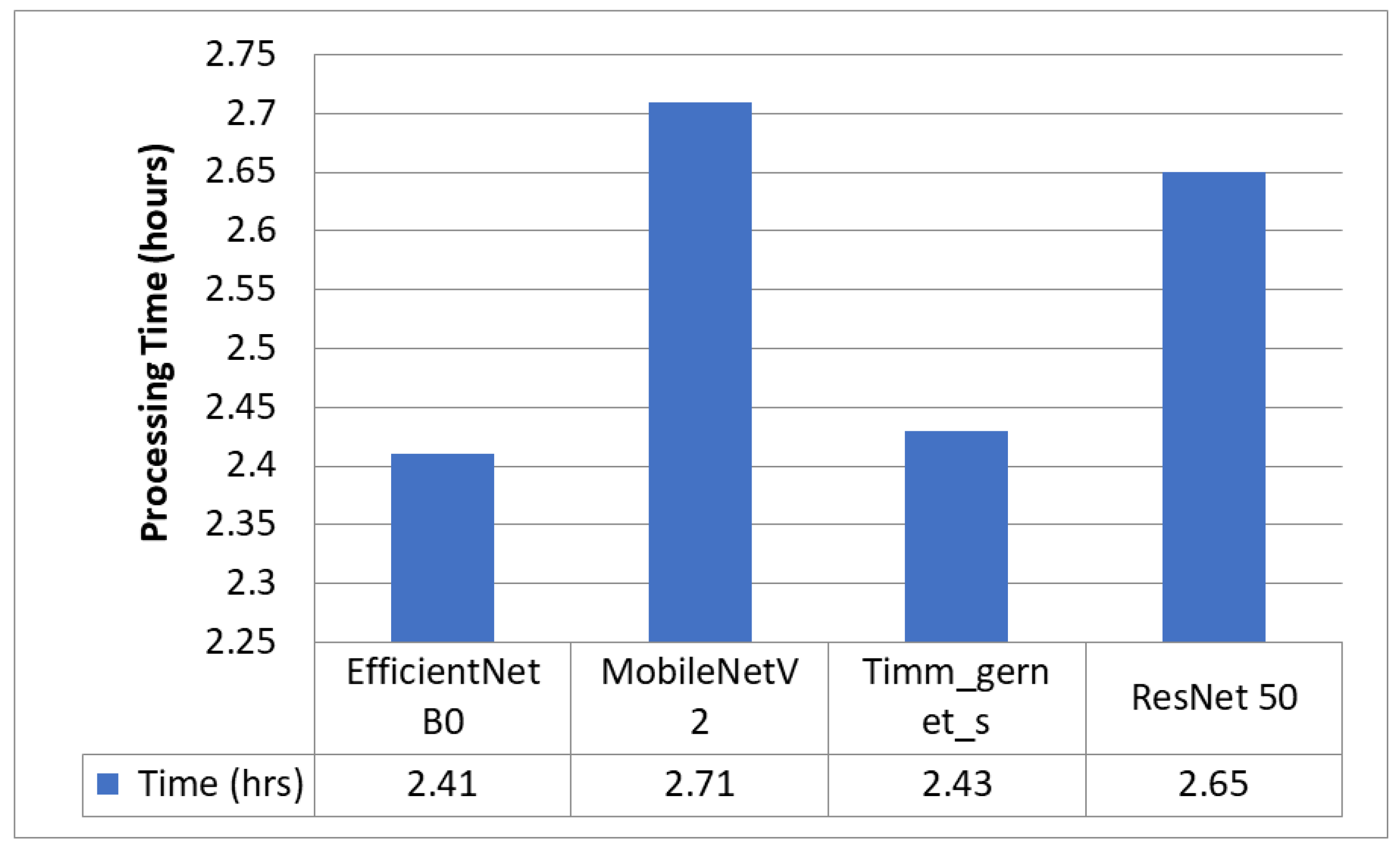

5.1. Encoder Evaluation for Downsampling

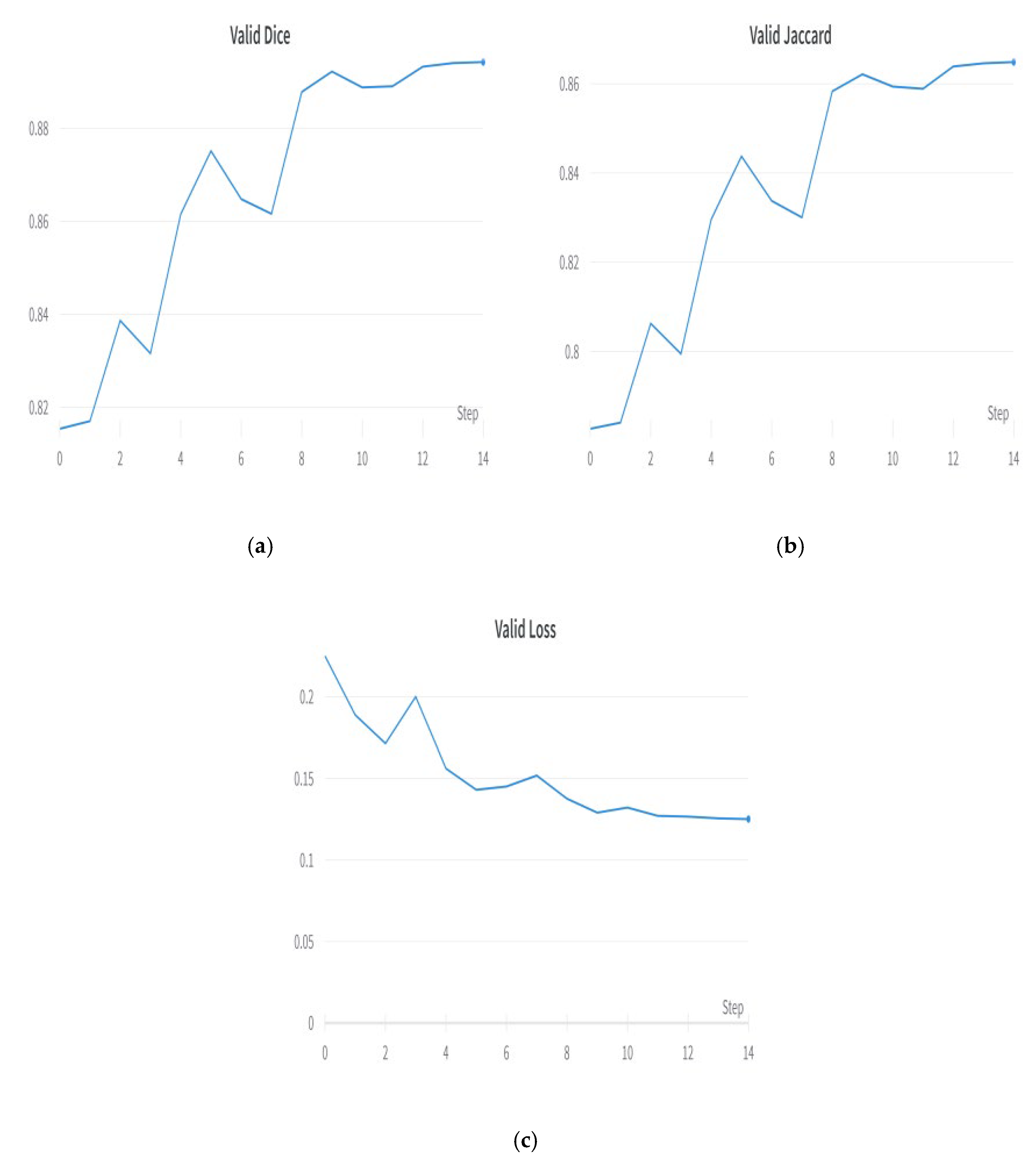

5.2. Best Encoder—EfficientNet B0

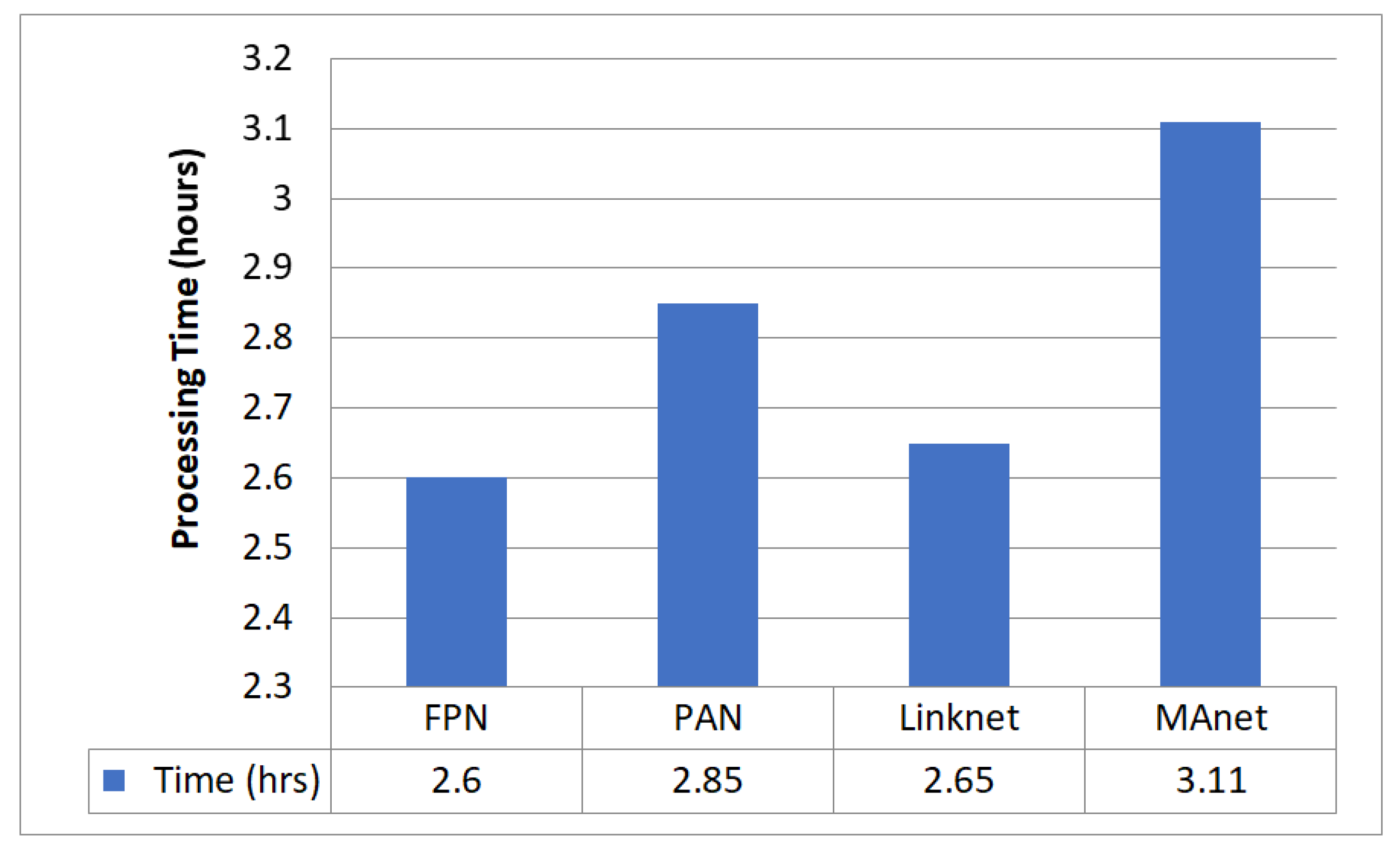

5.3. Decoder Evaluation for Upsampling

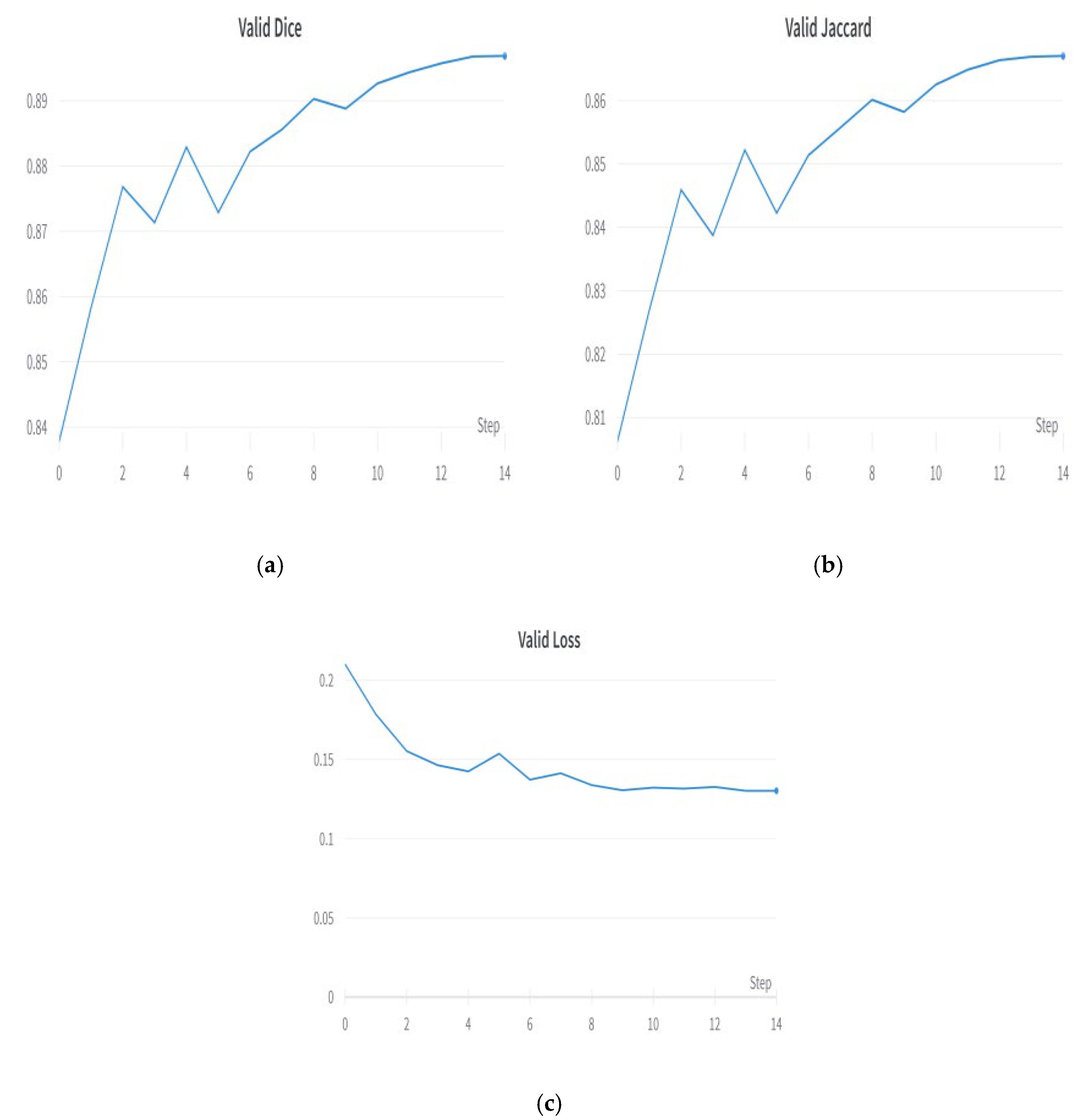

5.4. Best Decoder—FPN

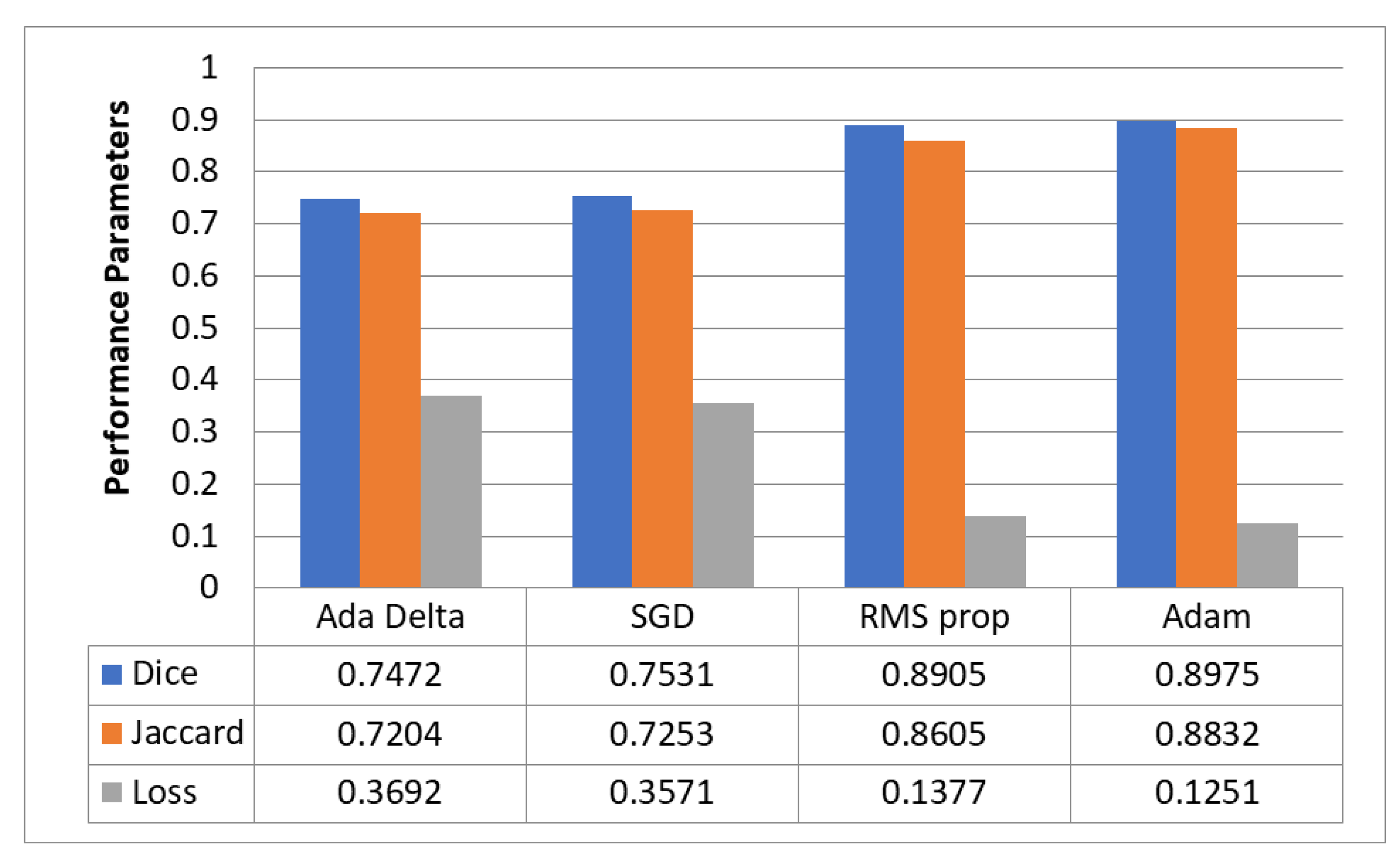

5.5. Optimizer Evaluation for Hyperparameter Tuning

5.6. Best Optimizer—Adam

5.7. Visualization of Results for the Best Optimized Model

6. State-of-the-Art Comparison on the UW-Madison GI Tract Dataset

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, B.; Meng, M.Q.-H. Tumor Recognition in Wireless Capsule Endoscopy Images Using Textural Features and SVM-Based Feature Selection. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 323–329.

2. Bernal, J.; Sánchez, J.; Vilariño, F. Towards Automatic Polyp Detection with a Polyp Appearance Model. Pattern Recognit. 2012, 45, 3166–3182.

3. Zhou, M.; Bao, G.; Geng, Y.; Alkandari, B.; Li, X. Polyp Detection and Radius Measurement in Small Intestine Using Video Capsule Endoscopy. In Proceedings of the 2014 7th International Conference on Biomedical Engineering and Informatics, Dalian, China, 14–16 October 2014.

4. Wang, Y.; Tavanapong, W.; Wong, J.; Oh, J.H.; de Groen, P.C. Polyp-Alert: Near Real-Time Feedback during Colonoscopy. Comput. Methods Programs Biomed. 2015, 120, 164–179.

5. Li, Q.; Yang, G.; Chen, Z.; Huang, B.; Chen, L.; Xu, D.; Zhou, X.; Zhong, S.; Zhang, H.; Wang, T. Colorectal Polyp Segmentation Using a Fully Convolutional Neural Network. In Proceedings of the 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 14–16 October 2017.

6. Dijkstra, W.; Sobiecki, A.; Bernal, J.; Telea, A. Towards a Single Solution for Polyp Detection, Localization and Segmentation in Colonoscopy Images. In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Prague, Czech Republic, 25–27 February 2019.

7. Lafraxo, S.; El Ansari, M. GastroNet: Abnormalities Recognition in Gastrointestinal Tract through Endoscopic Imagery Using Deep Learning Techniques. In Proceedings of the 2020 8th International Conference on Wireless Networks and Mobile Communications (WINCOM), Reims, France, 27–29 October 2020.

8. Du, B.; Zhao, Z.; Hu, X.; Wu, G.; Han, L.; Sun, L.; Gao, Q. Landslide Susceptibility Prediction Based on Image Semantic Segmentation. Comput. Geosci. 2021, 155, 104860.

9. Gonçalves, J.P.; Pinto, F.A.C.; Queiroz, D.M.; Villar, F.M.M.; Barbedo, J.G.A.; Del Ponte, E.M. Deep Learning Architectures for Semantic Segmentation and Automatic Estimation of Severity of Foliar Symptoms Caused by Diseases or Pests. Biosyst. Eng. 2021, 210, 129–142.

10. Scepanovic, S.; Antropov, O.; Laurila, P.; Rauste, Y.; Ignatenko, V.; Praks, J. Wide-Area Land Cover Mapping with Sentinel-1 Imagery Using Deep Learning Semantic Segmentation Models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10357–10374.

11. Yuan, Y.; Li, D.; Meng, M.Q.H. Automatic Polyp Detection via a Novel Unified Bottom-Up and Top-Down Saliency Approach. IEEE J. Biomed. Health Inform. 2017, 22, 1250–1260.

12. Poorneshwaran, J.M.; Kumar, S.S.; Ram, K.; Joseph, J.; Sivaprakasam, M. Polyp Segmentation Using Generative Adversarial Network. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 7201–7204.

13. Kang, J.; Gwak, J. Ensemble of Instance Segmentation Models for Polyp Segmentation in Colonoscopy Images. IEEE Access 2019, 7, 26440–26447.

14. Cogan, T.; Cogan, M.; Tamil, L. MAPGI: Accurate Identification of Anatomical Landmarks and Diseased Tissue in Gastrointestinal Tract Using Deep Learning. Comput. Biol. Med. 2019, 111, 103351.

15. Öztürk, Ş.; Özkaya, U. Gastrointestinal Tract Classification Using Improved LSTM Based CNN. Multimed. Tools Appl. 2020, 79, 28825–28840.

16. Öztürk, Ş.; Özkaya, U. Residual LSTM Layered CNN for Classification of Gastrointestinal Tract Diseases. J. Biomed. Inform. 2021, 113, 103638.

17. Ye, R.; Wang, R.; Guo, Y.; Chen, L. SIA-Unet: A Unet with Sequence Information for Gastrointestinal Tract Segmentation. In Pacific Rim International Conference on Artificial Intelligence; Springer: Cham, Switzerland, 2022; pp. 316–326.

18. Nemani, P.; Vollala, S. Medical Image Segmentation Using LeViT-UNet++: A Case Study on GI Tract Data. arXiv 2022, arXiv:2209.07515.

19. Chou, A.; Li, W.; Roman, E. GI Tract Image Segmentation with U-Net and Mask R-CNN. Available online: http://cs231n.stanford.edu/reports/2022/pdfs/164.pdf (accessed on 4 June 2023).

20. Niu, H.; Lin, Y. SER-UNet: A Network for Gastrointestinal Image Segmentation. In Proceedings of the 2022 2nd International Conference on Control and Intelligent Robotics, Nanjing, China, 24–26 June 2022.

21. Li, H.; Liu, J. Multi-View Unet for Automated GI Tract Segmentation. In Proceedings of the 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Chengdu, China, 19–21 August 2022.

22. Chia, B.; Gu, H.; Lui, N. Gastrointestinal Tract Segmentation Using Multi-Task Learning; CS231n: Deep Learning for Computer Vision, Stanford, Spring 2022. Available online: http://cs231n.stanford.edu/reports/2022/pdfs/75.pdf (accessed on 4 June 2023).

23. Georgescu, M.-I.; Ionescu, R.T.; Miron, A.-I. Diversity-Promoting Ensemble for Medical Image Segmentation. arXiv 2022, arXiv:2210.12388.

24. Kaggle. UW-Madison GI Tract Image Segmentation. Available online: https://www.kaggle.com/competitions/uw-madison-gi-tract-image-segmentation/data (accessed on 8 February 2023).

25. Rezende, E.; Ruppert, G.; Carvalho, T.; Ramos, F.; de Geus, P. Malicious Software Classification Using Transfer Learning of ResNet-50 Deep Neural Network. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017.

26. Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

27. Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of Skin Disease Using Deep Learning Neural Networks with MobileNet V2 and LSTM. Sensors 2021, 21, 2852.

28. Zhang, H.; Dana, K.; Shi, J.; Zhang, Z.; Wang, X.; Tyagi, A.; Agrawal, A. Context Encoding for Semantic Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

29. Pu, B.; Lu, Y.; Chen, J.; Li, S.; Zhu, N.; Wei, W.; Li, K. MobileUNet-FPN: A Semantic Segmentation Model for Fetal Ultrasound Four-Chamber Segmentation in Edge Computing Environments. IEEE J. Biomed. Health Inform. 2022, 26, 5540–5550.

30. Ou, X.; Wang, H.; Zhang, G.; Li, W.; Yu, S. Semantic Segmentation Based on Double Pyramid Network with Improved Global Attention Mechanism. Appl. Intell. 2023, 53, 18898–18909.

31. Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. arXiv 2017, arXiv:1707.03718.

32. Chen, B.; Xia, M.; Qian, M.; Huang, J. MANet: A Multi-Level Aggregation Network for Semantic Segmentation of High-Resolution Remote Sensing Images. Int. J. Remote Sens. 2022, 43, 5874–5894.

33. Gill, K.S.; Sharma, A.; Anand, V.; Gupta, R.; Deshmukh, P. Influence of Adam Optimizer with Sequential Convolutional Model for Detection of Tuberculosis. In Proceedings of the 2022 International Conference on Computational Modelling, Simulation and Optimization (ICCMSO), Pathum Thani, Thailand, 23–25 December 2022; pp. 340–344.

34. Gill, K.S.; Sharma, A.; Anand, V.; Gupta, R. Brain Tumor Detection Using VGG19 Model on Adadelta and SGD Optimizer. In Proceedings of the 2022 6th International Conference on Electronics, Communication and Aerospace Technology, Coimbatore, India, 1–3 December 2022.

35. Zou, F.; Shen, L.; Jie, Z.; Zhang, W.; Liu, W. A Sufficient Condition for Convergences of Adam and RMSProp. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

36. Gower, R.M.; Loizou, N.; Qian, X.; Sailanbayev, A.; Shulgin, E.; Richtarik, P. SGD: General Analysis and Improved Rates. arXiv 2019, arXiv:1901.09401.

37. Sharma, N.; Gupta, S.; Koundal, D.; Alyami, S.; Alshahrani, H.; Asiri, Y.; Shaikh, A. U-Net Model with Transfer Learning Model as a Backbone for Segmentation of Gastrointestinal Tract. Bioengineering 2023, 10, 119.

| Ref./Year | Technique | Dice | IoU (Jaccard) |
|---|---|---|---|
| [17]/2022 | SIA-UNet | 0.78 | - |
| [18]/2022 | CNN-Transformer | 0.79 | 0.72 |
| [19]/2022 | UNet and Mask R-CNN | 0.51 | - |
| [20]/2022 | UNet on 2.5D | 0.36 | 0.12 |
| [21]/2022 | Ensemble of Different Architectures | 0.88 | - |
| [37]/2022 | UNet | 0.8854 | 0.8819 |
| Proposed Model | EfficientNetB0 and FPN | 0.8975 | 0.8832 |
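The Dice and IoU (Jaccard) columns in the comparison table both score the overlap between a predicted organ mask and its ground truth. As a minimal sketch, assuming 2-D binary masks given as nested lists of 0/1 values, both scores can be computed for a single mask as follows; `dice_and_iou` is a hypothetical helper for illustration, not the authors' evaluation code, and treating two empty masks as a perfect match is an assumed convention.

```python
from typing import Sequence, Tuple

# A 2-D binary mask: 1 marks an organ pixel, 0 marks background.
Mask = Sequence[Sequence[int]]


def dice_and_iou(pred: Mask, target: Mask) -> Tuple[float, float]:
    """Hypothetical helper: Dice coefficient and IoU (Jaccard index) of two binary masks.

    Dice = 2|A ∩ B| / (|A| + |B|),  IoU = |A ∩ B| / |A ∪ B|.
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of rows")
    inter = size_pred = size_target = 0
    for prow, trow in zip(pred, target):
        if len(prow) != len(trow):
            raise ValueError("masks must have the same number of columns")
        for p_px, t_px in zip(prow, trow):
            is_pred, is_true = bool(p_px), bool(t_px)
            inter += int(is_pred and is_true)  # pixel predicted AND labelled as organ
            size_pred += int(is_pred)
            size_target += int(is_true)
    if size_pred + size_target == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0, 1.0
    union = size_pred + size_target - inter
    return 2 * inter / (size_pred + size_target), inter / union


pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
target = [[0, 1, 1],
          [0, 1, 0],
          [0, 1, 0]]
dice, iou = dice_and_iou(pred, target)
print(f"Dice = {dice:.2f}, IoU = {iou:.2f}")  # Dice = 0.75, IoU = 0.60
```

For any single mask the two scores are linked by Dice = 2·IoU / (1 + IoU), so Dice is never smaller than IoU (here 2·0.6 / 1.6 = 0.75). Averaging per-slice or per-class scores over the same set preserves that ordering, which is consistent with every row of the table that reports both metrics showing a Dice value at or above its IoU.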

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sharma, N.; Gupta, S.; Reshan, M.S.A.; Sulaiman, A.; Alshahrani, H.; Shaikh, A. EfficientNetB0 cum FPN Based Semantic Segmentation of Gastrointestinal Tract Organs in MRI Scans. Diagnostics 2023, 13, 2399. https://doi.org/10.3390/diagnostics13142399