A Framework for Prediction of Oncogenomic Progression Aiding Personalized Treatment of Gastric Cancer

Abstract

1. Introduction

- Explore the development of a universal, explicit benchmark dataset tailored to gastric cancer mutations, to overcome the limitations of existing datasets.

- Investigate handcrafted feature extraction techniques that preserve the dataset’s integrity and improve the accuracy of mutation detection models for gastric cancer.

- Examine the shortcomings of current evaluation methods in accurately assessing the performance of gastric cancer mutation detection models.

- Propose more robust and comprehensive evaluation techniques that address these shortcomings.

- Explore combining the improved feature extraction techniques with the advanced evaluation methods to increase the accuracy of gastric cancer mutation detection.

2. Related Works

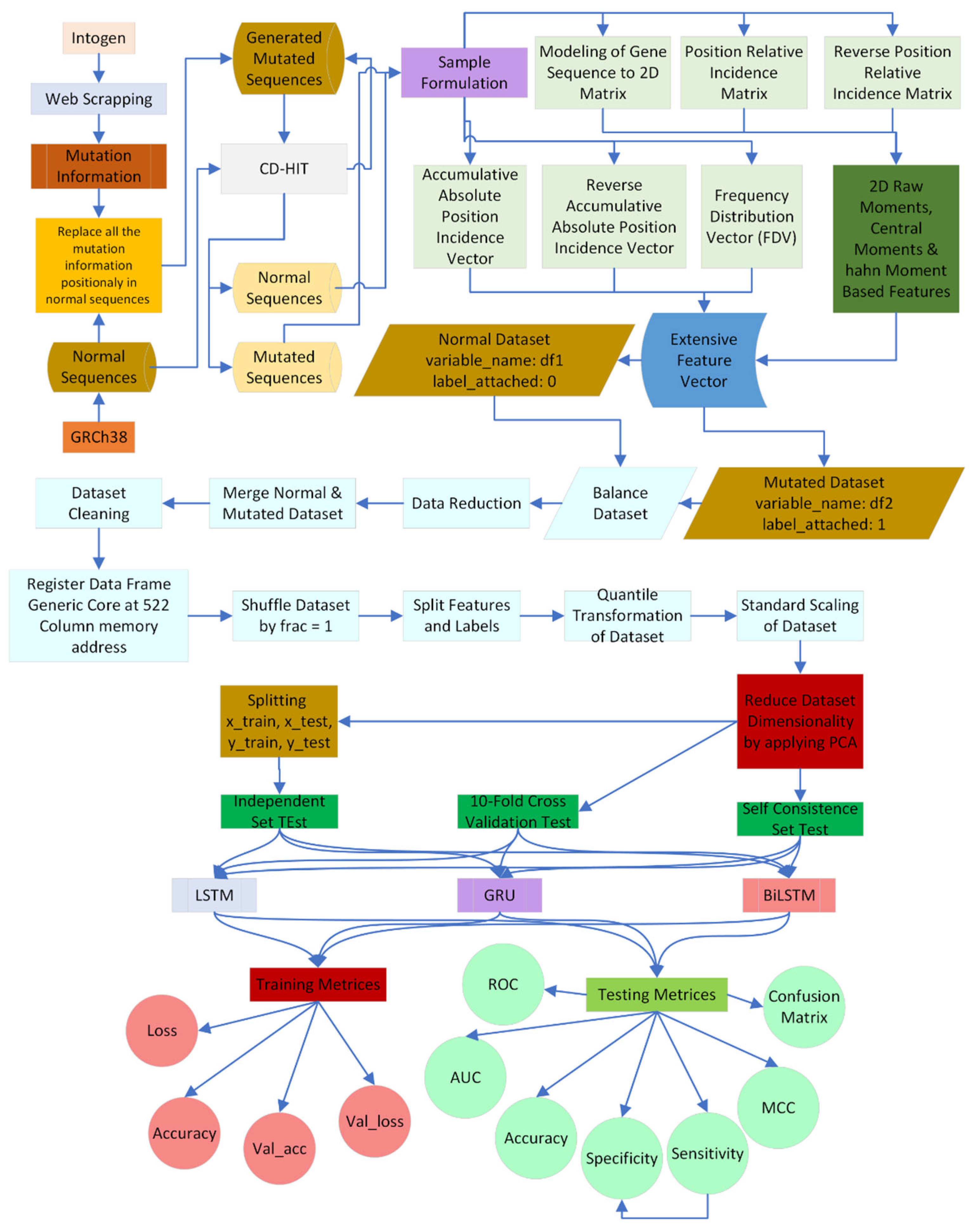

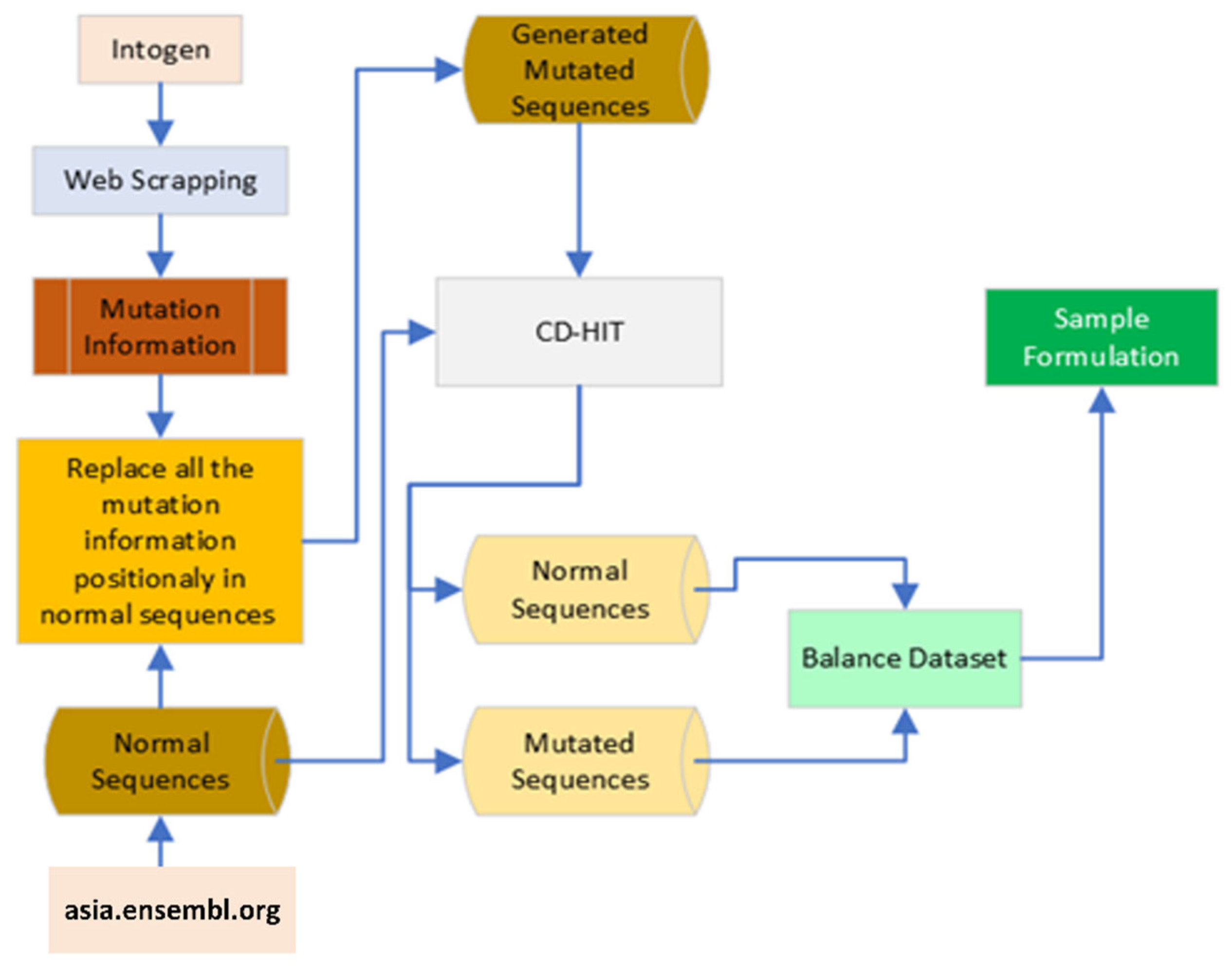

3. Materials and Methods

3.1. Benchmark Dataset Collection

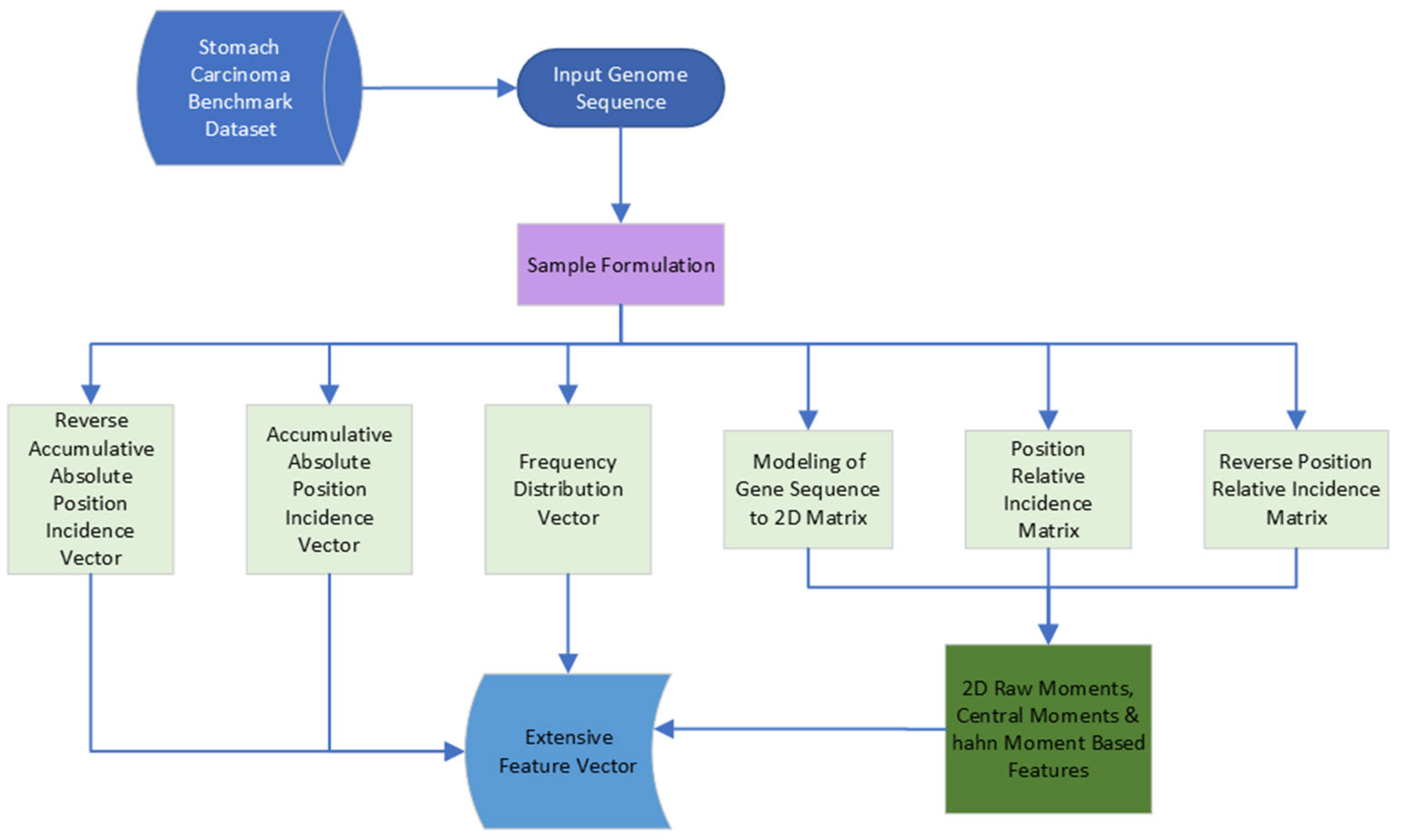

3.2. Feature Extraction

3.2.1. Statistical Moments Calculation
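
The following is a minimal sketch of moment-based features. It assumes a construction used in related moment-based sequence predictors: the sequence is numerically encoded and laid row by row into a k × k matrix with k = ⌈√n⌉ (zero-padded), and raw moments M_pq and central moments μ_pq are then computed over that matrix. The nucleotide encoding `ENCODING`, the maximum order of 3, and all function names are hypothetical choices, not taken from the study. Hahn moments, which such pipelines sometimes add, are omitted.

```python
import math

# Hypothetical nucleotide encoding (an assumption for illustration only).
ENCODING = {"A": 1, "C": 2, "G": 3, "T": 4}


def to_square_matrix(seq):
    """Lay the encoded sequence row by row into a k x k matrix,
    k = ceil(sqrt(n)), padding the unused trailing cells with 0.
    Raises KeyError for symbols outside A, C, G, T."""
    n = len(seq)
    k = math.ceil(math.sqrt(n))
    values = [ENCODING[base] for base in seq.upper()] + [0] * (k * k - n)
    return [values[r * k:(r + 1) * k] for r in range(k)]


def raw_moment(m, p, q):
    """Raw moment M_pq = sum_i sum_j i^p * j^q * m[i][j], with 1-based i, j."""
    return sum(
        (i + 1) ** p * (j + 1) ** q * value
        for i, row in enumerate(m)
        for j, value in enumerate(row)
    )


def central_moment(m, p, q):
    """Central moment mu_pq about the centroid (M_10 / M_00, M_01 / M_00)."""
    m00 = raw_moment(m, 0, 0)
    x_bar = raw_moment(m, 1, 0) / m00
    y_bar = raw_moment(m, 0, 1) / m00
    return sum(
        (i + 1 - x_bar) ** p * (j + 1 - y_bar) ** q * value
        for i, row in enumerate(m)
        for j, value in enumerate(row)
    )


def moment_features(seq, max_order=3):
    """Raw and central moments for every (p, q) with p + q <= max_order
    (the default order of 3 is a hypothetical choice)."""
    m = to_square_matrix(seq)
    orders = [(p, q) for p in range(max_order + 1)
              for q in range(max_order + 1 - p)]
    return ([raw_moment(m, p, q) for p, q in orders]
            + [central_moment(m, p, q) for p, q in orders])
```

For example, `moment_features("ACGT")` returns 20 values: 10 raw and 10 central moments, one of each for every (p, q) pair with p + q ≤ 3.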

3.2.2. Determination of Position Relative Incident Matrix (PRIM)

3.2.3. Determination of Reverse Position Relative Incident Matrix (RPRIM)
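
The following sketch shows one hypothetical reading of PRIM and RPRIM for the four-letter DNA alphabet. Entry (i, j) of the 4 × 4 matrix accumulates the distance from the first occurrence of nucleotide i to every later occurrence of nucleotide j. RPRIM applies the same computation to the reversed sequence. The exact scoring rule and the names `prim`, `rprim`, and `flatten` are assumptions for illustration. Before classification, the matrices could be flattened (as here) or summarized with the statistical moments of Section 3.2.1.

```python
NUCLEOTIDES = "ACGT"


def prim(seq):
    """Hypothetical 4x4 PRIM: entry [i][j] sums, over each occurrence of
    nucleotide j after the first occurrence of nucleotide i, its distance
    from that first occurrence. Rows of absent nucleotides stay zero."""
    seq = seq.upper()
    matrix = [[0] * len(NUCLEOTIDES) for _ in NUCLEOTIDES]
    for i, base_i in enumerate(NUCLEOTIDES):
        first = seq.find(base_i)
        if first == -1:
            continue  # nucleotide i never occurs in the sequence
        for pos in range(first + 1, len(seq)):
            j = NUCLEOTIDES.find(seq[pos])
            if j != -1:  # skip symbols such as N
                matrix[i][j] += pos - first
    return matrix


def rprim(seq):
    """Hypothetical RPRIM: the same computation on the reversed sequence."""
    return prim(seq[::-1])


def flatten(matrix):
    """Row-major flattening into a 16-value feature vector."""
    return [value for row in matrix for value in row]
```

Under this reading, `prim("ACGA")` gives `[[3, 1, 2, 0], [2, 0, 1, 0], [1, 0, 0, 0], [0, 0, 0, 0]]`, and `rprim("ACGA")` gives `[[3, 2, 1, 0], [1, 0, 0, 0], [2, 1, 0, 0], [0, 0, 0, 0]]`.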

3.2.4. Frequency Distribution Vector (FDV)
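
A minimal sketch of the frequency feature. It assumes the FDV is the occurrence count of each nucleotide in A, C, G, T order, ignoring characters outside that alphabet such as N. The function name `fdv` is hypothetical.

```python
from collections import Counter

NUCLEOTIDES = "ACGT"


def fdv(seq):
    """Frequency Distribution Vector (assumed form): occurrence count of each
    nucleotide in A, C, G, T order; other symbols such as N are ignored."""
    counts = Counter(seq.upper())
    return [counts[base] for base in NUCLEOTIDES]
```

For example, `fdv("AACGTTT")` returns `[2, 1, 1, 3]`.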

3.2.5. Accumulative Absolute Position Incidence Vector (AAPIV)

3.2.6. Reverse Accumulative Absolute Position Incidence Vector (RAAPIV)
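
The following is a sketch of AAPIV and RAAPIV under the following assumptions: AAPIV stores, for each nucleotide, the sum of the 1-based positions at which it occurs, and RAAPIV repeats that computation on the reversed sequence. Both definitions and the function names are assumptions for illustration.

```python
NUCLEOTIDES = "ACGT"


def aapiv(seq):
    """Assumed AAPIV: for each nucleotide (A, C, G, T order), the sum of the
    1-based positions at which it occurs. Other symbols still occupy positions."""
    totals = {base: 0 for base in NUCLEOTIDES}
    for pos, base in enumerate(seq.upper(), start=1):
        if base in totals:
            totals[base] += pos
    return [totals[base] for base in NUCLEOTIDES]


def raapiv(seq):
    """Assumed RAAPIV: the same accumulation over the reversed sequence."""
    return aapiv(seq[::-1])
```

With these definitions, `aapiv("ACGA")` returns `[5, 2, 3, 0]` and `raapiv("ACGA")` returns `[5, 3, 2, 0]`. Each RAAPIV entry equals `(n + 1) * count - AAPIV entry`, where n is the sequence length and count is the nucleotide's frequency. This gives a convenient consistency check.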

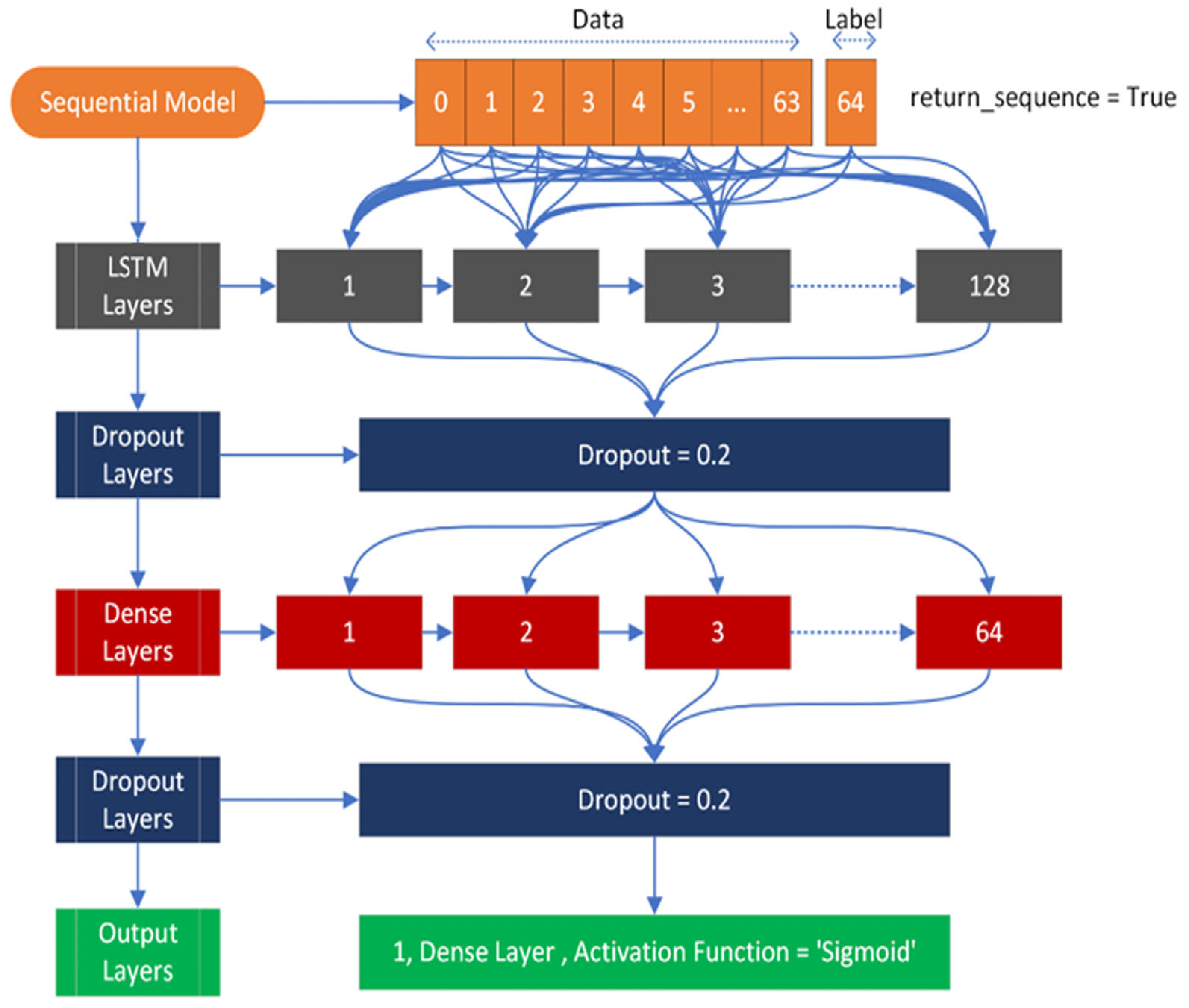

3.3. Classification Algorithms

3.3.1. Long Short-Term Memory (LSTM)

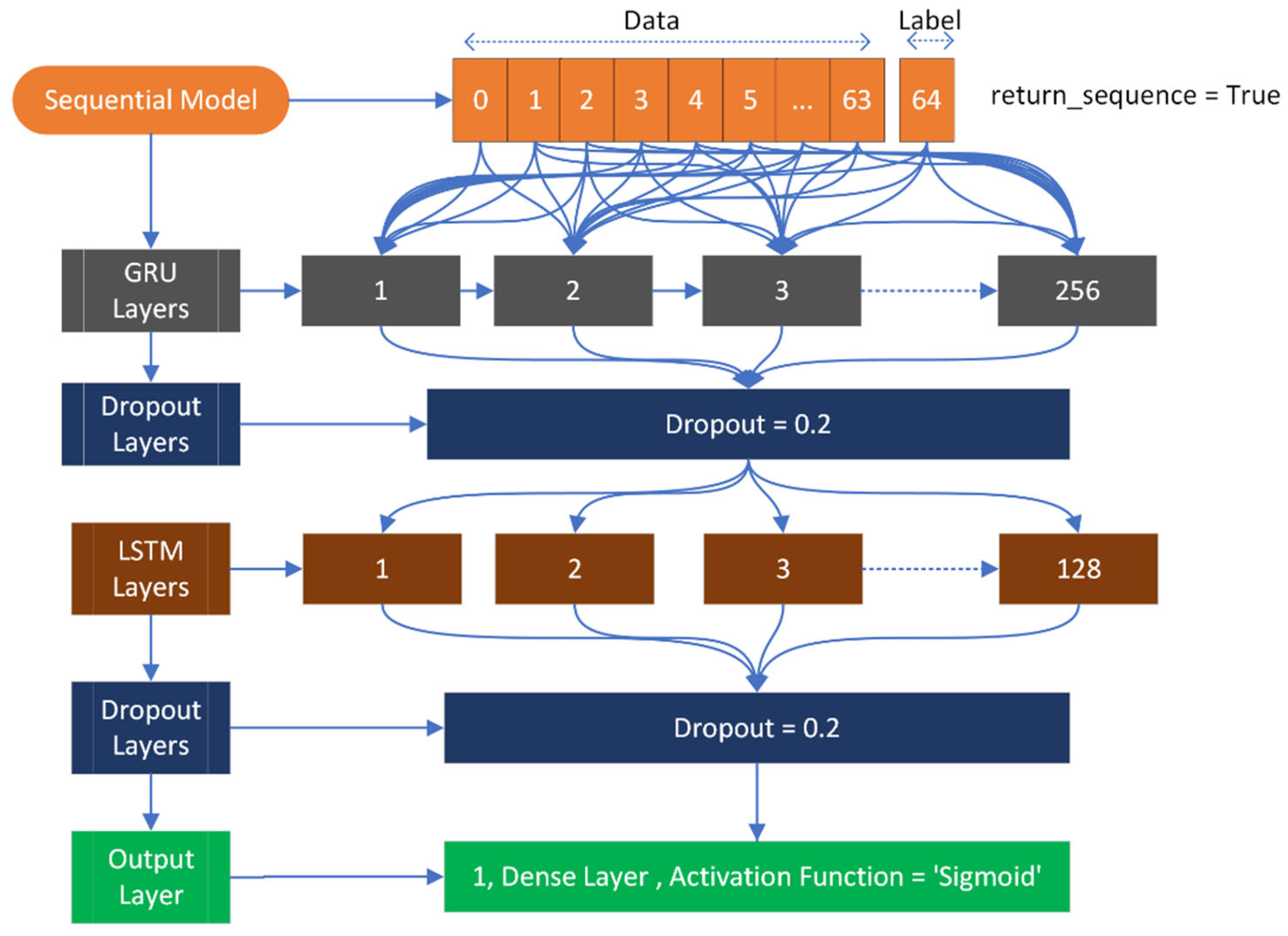
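
For reference, the standard LSTM cell update at time step t is given below. Here x_t is the input, h_{t-1} and c_{t-1} are the previous hidden and cell states, σ is the logistic sigmoid, and ⊙ is the element-wise product. These are the textbook equations; they do not imply the number of layers, hidden units, or other hyperparameters used in this study.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{input gate} \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{output gate} \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{candidate cell state} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
```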

3.3.2. Gated Recurrent Units (GRU)

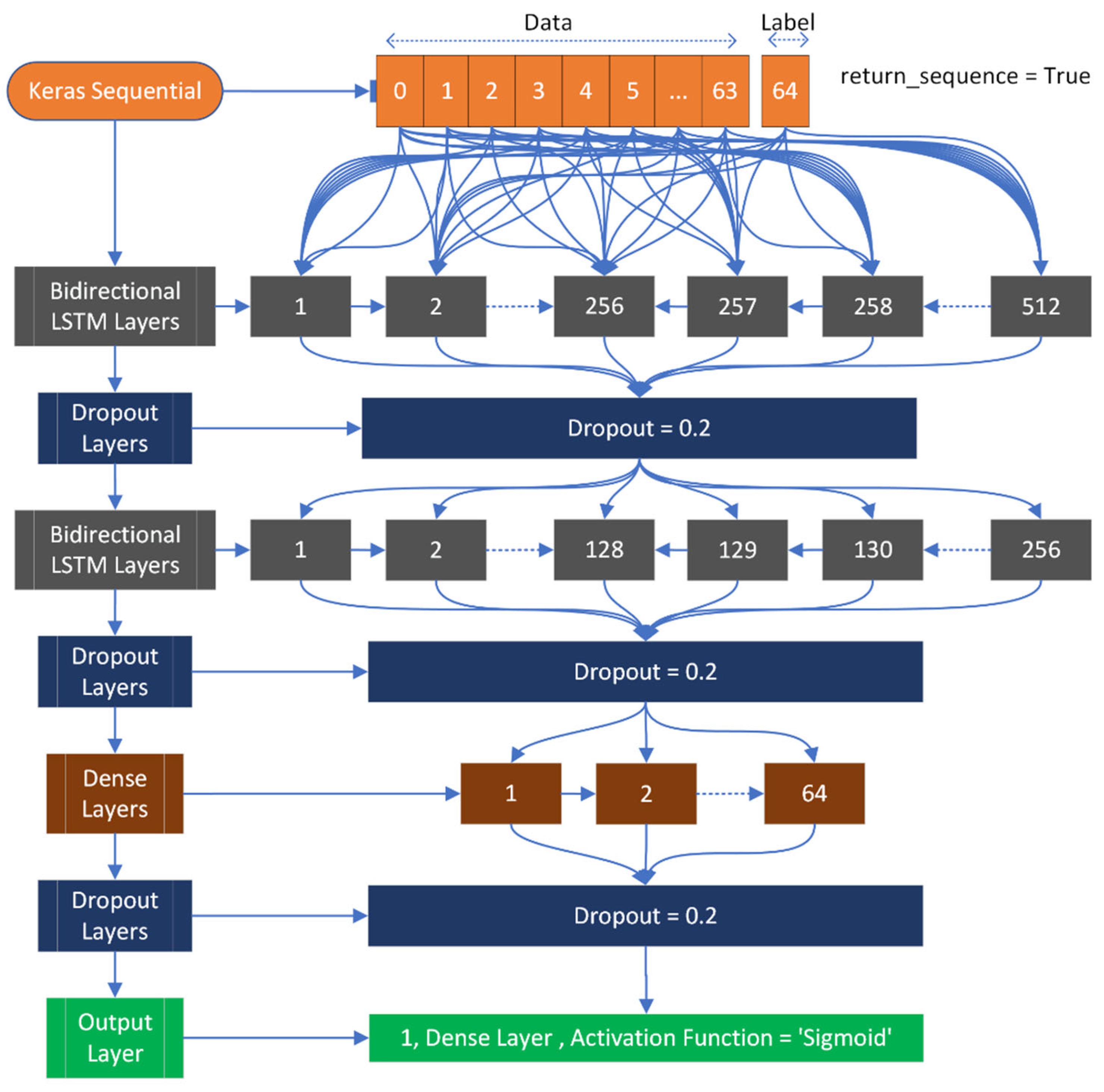
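
For reference, the standard GRU update is given below. Here x_t is the input, h_{t-1} is the previous hidden state, σ is the logistic sigmoid, and ⊙ is the element-wise product. The GRU replaces the LSTM's three gates and separate cell state with an update gate z_t and a reset gate r_t, so it has fewer parameters per unit. Some formulations swap the roles of z_t and 1 − z_t; the two conventions are equivalent up to relabeling the gate. The study's exact GRU configuration is not implied.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) && \text{update gate} \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) && \text{reset gate} \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) \\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
```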

3.3.3. Bidirectional LSTM (Bi-LSTM)
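
A Bi-LSTM runs two LSTMs with separate parameters over the sequence, one from start to end and one from end to start, and combines their hidden states at each position. The equations below assume concatenation as the combination, which is the default merge mode of Keras's `Bidirectional` wrapper. Summation or averaging are alternatives, and the merge used in this study is not implied.

```latex
\begin{aligned}
\overrightarrow{h}_t &= \mathrm{LSTM}_{\text{fwd}}\left(x_t, \overrightarrow{h}_{t-1}\right) \\
\overleftarrow{h}_t &= \mathrm{LSTM}_{\text{bwd}}\left(x_t, \overleftarrow{h}_{t+1}\right) \\
h_t &= \left[\overrightarrow{h}_t \,;\, \overleftarrow{h}_t\right]
\end{aligned}
```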

4. Results

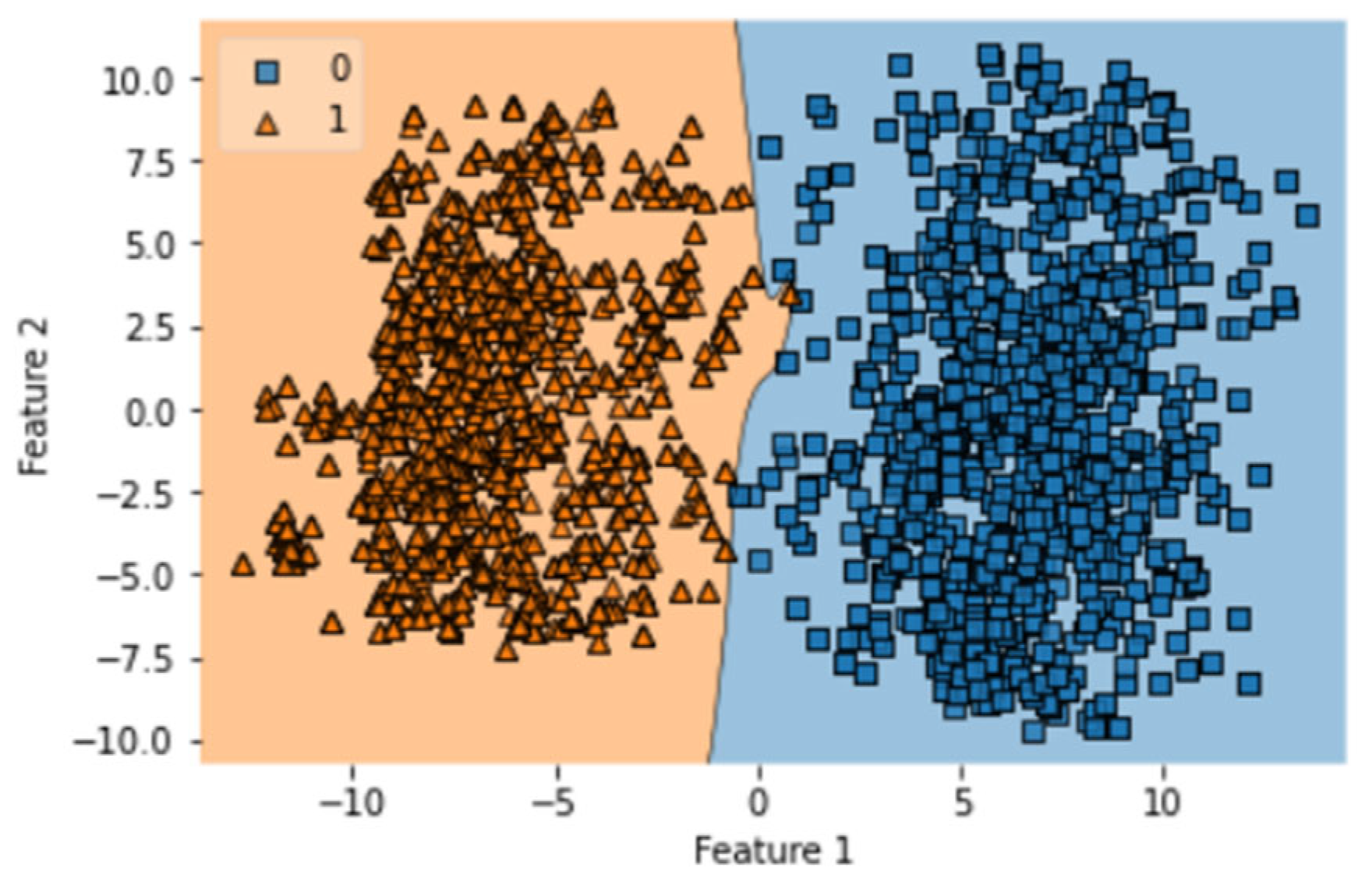

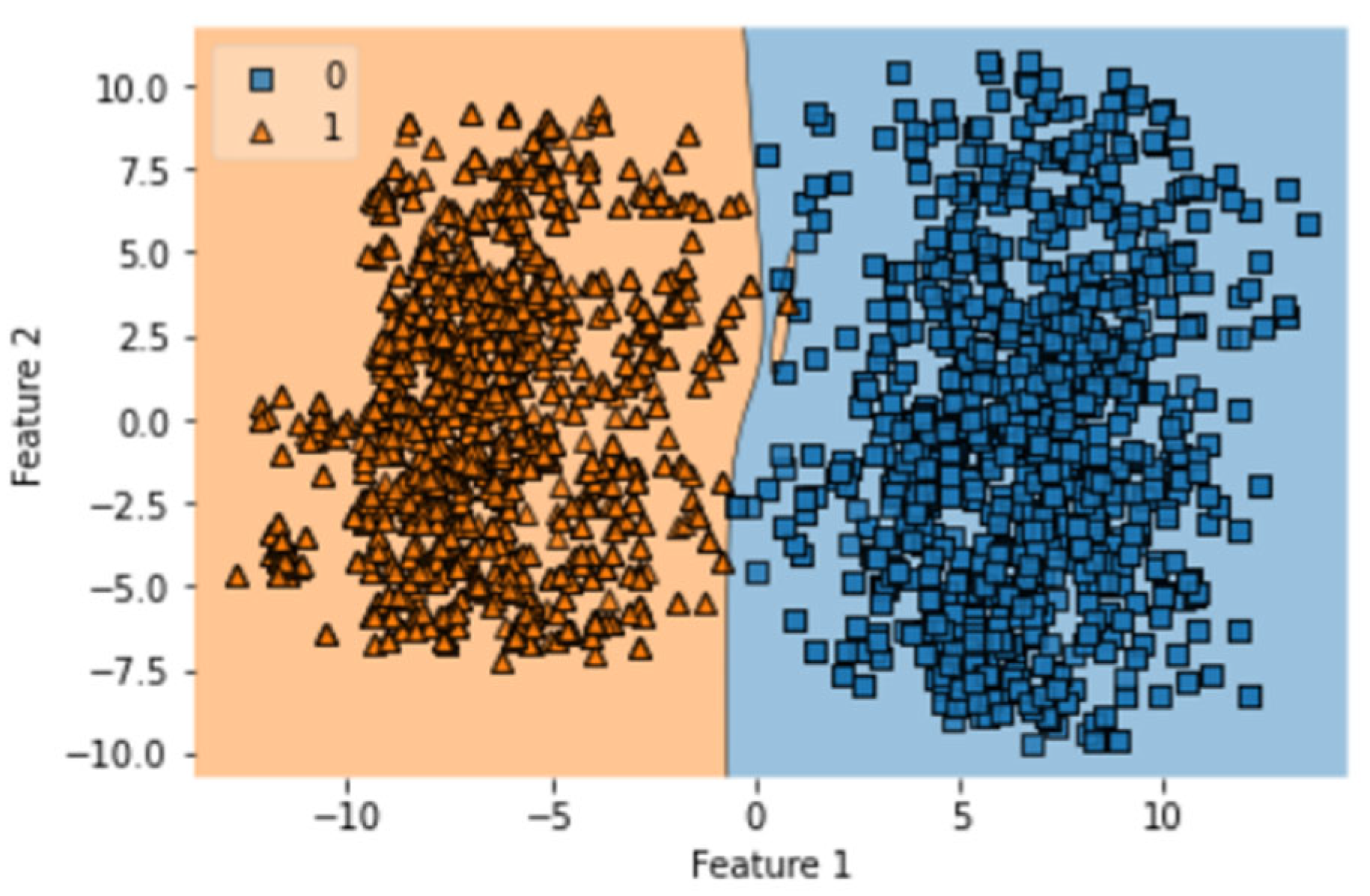

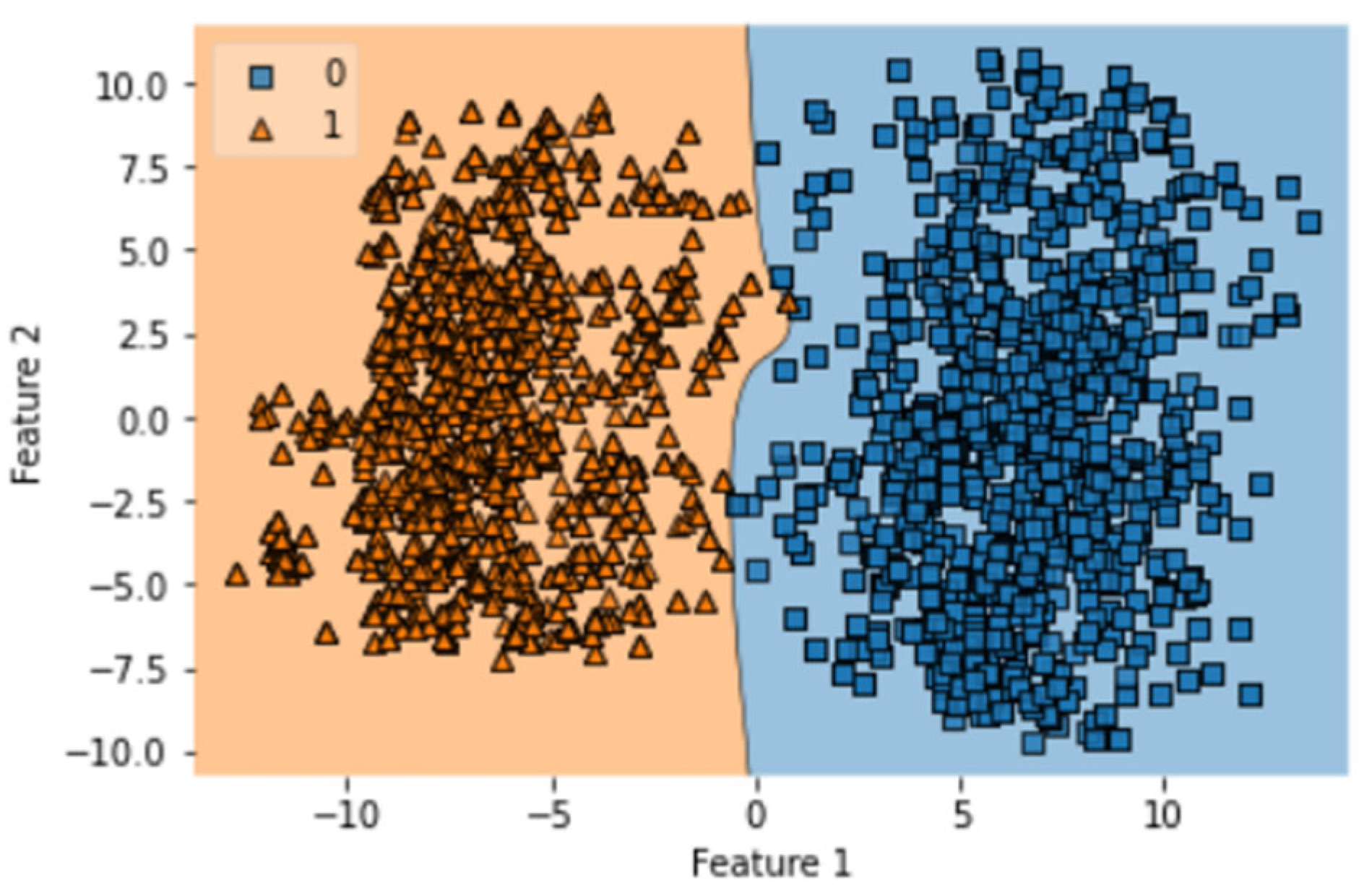

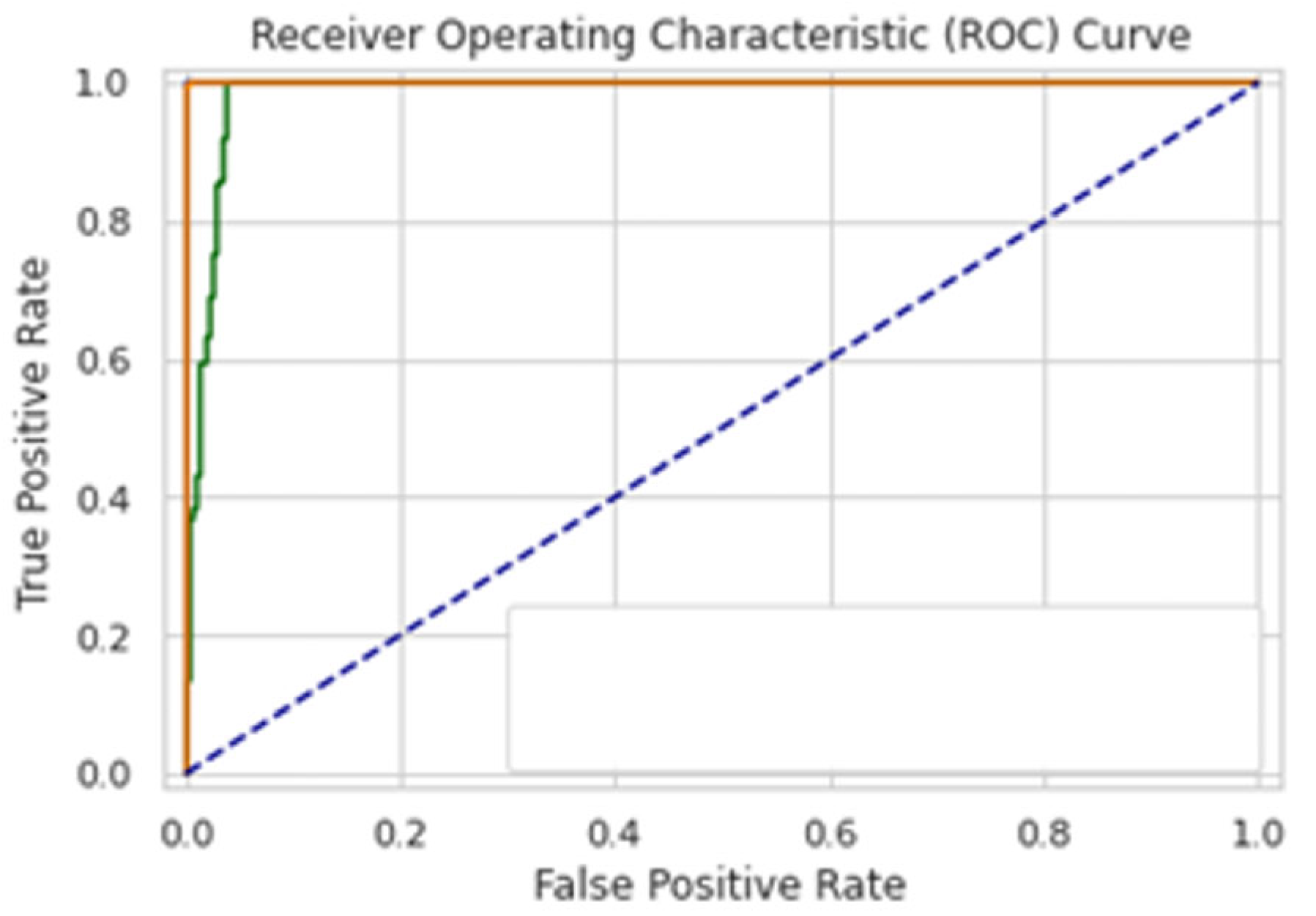

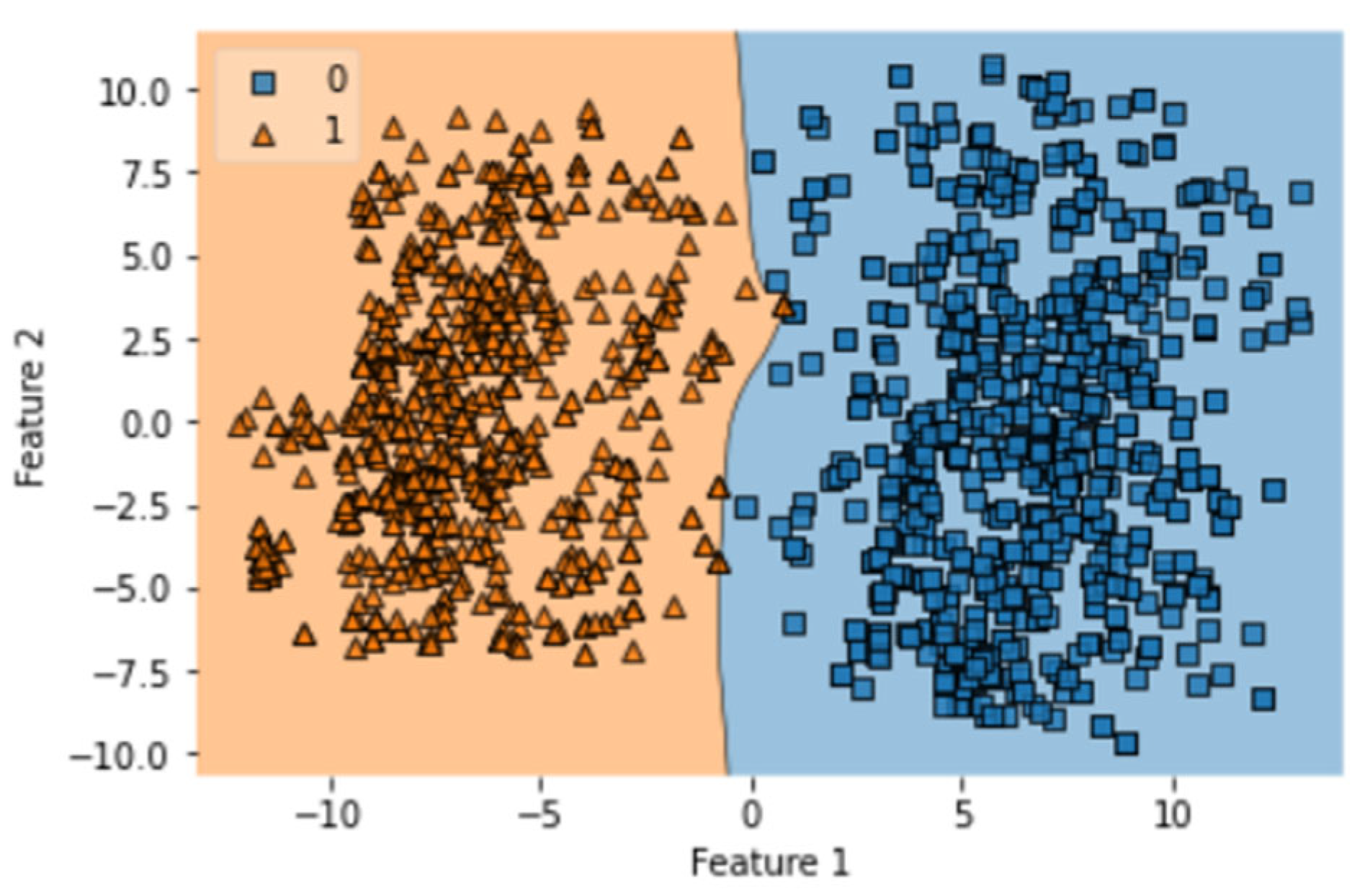

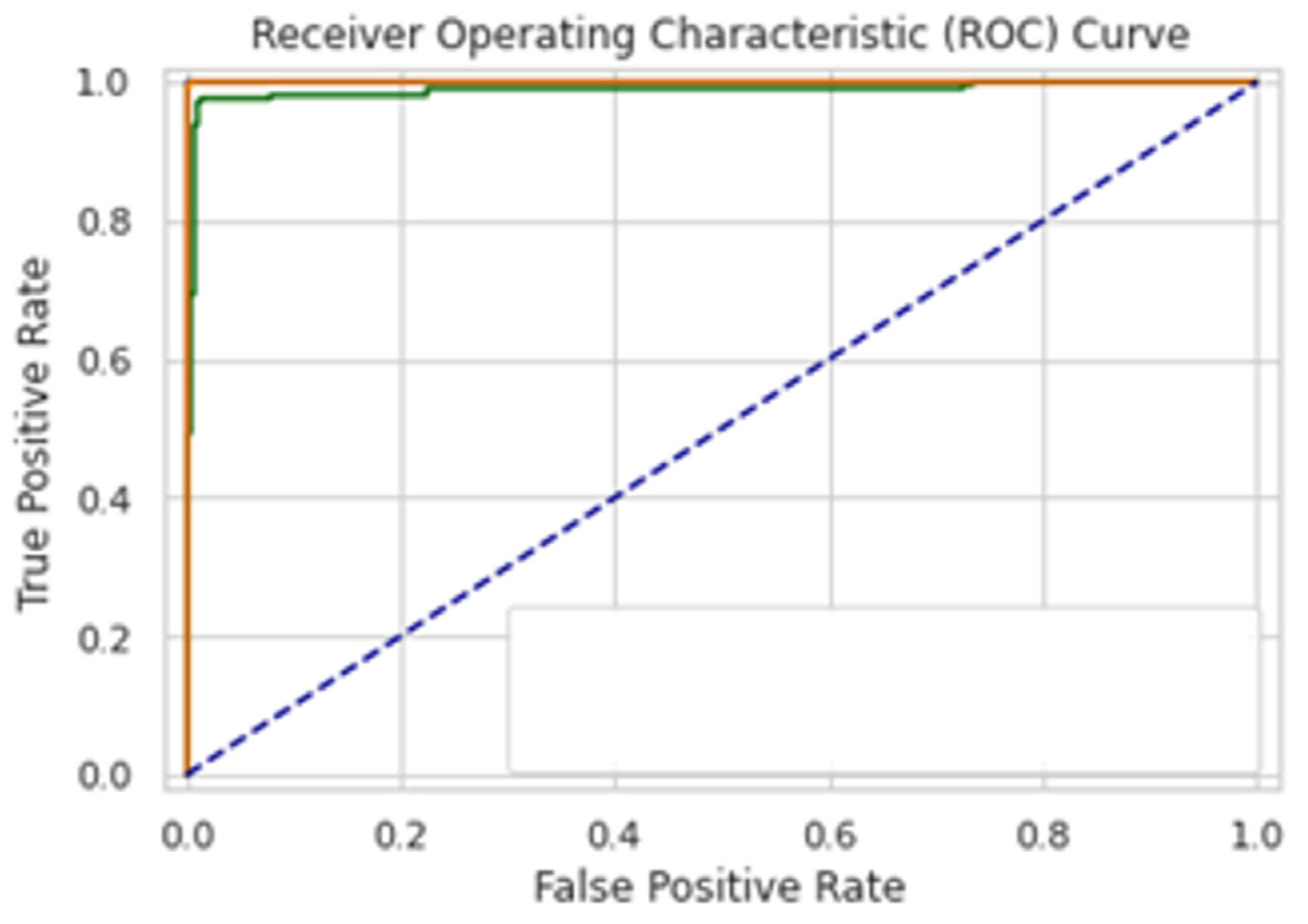

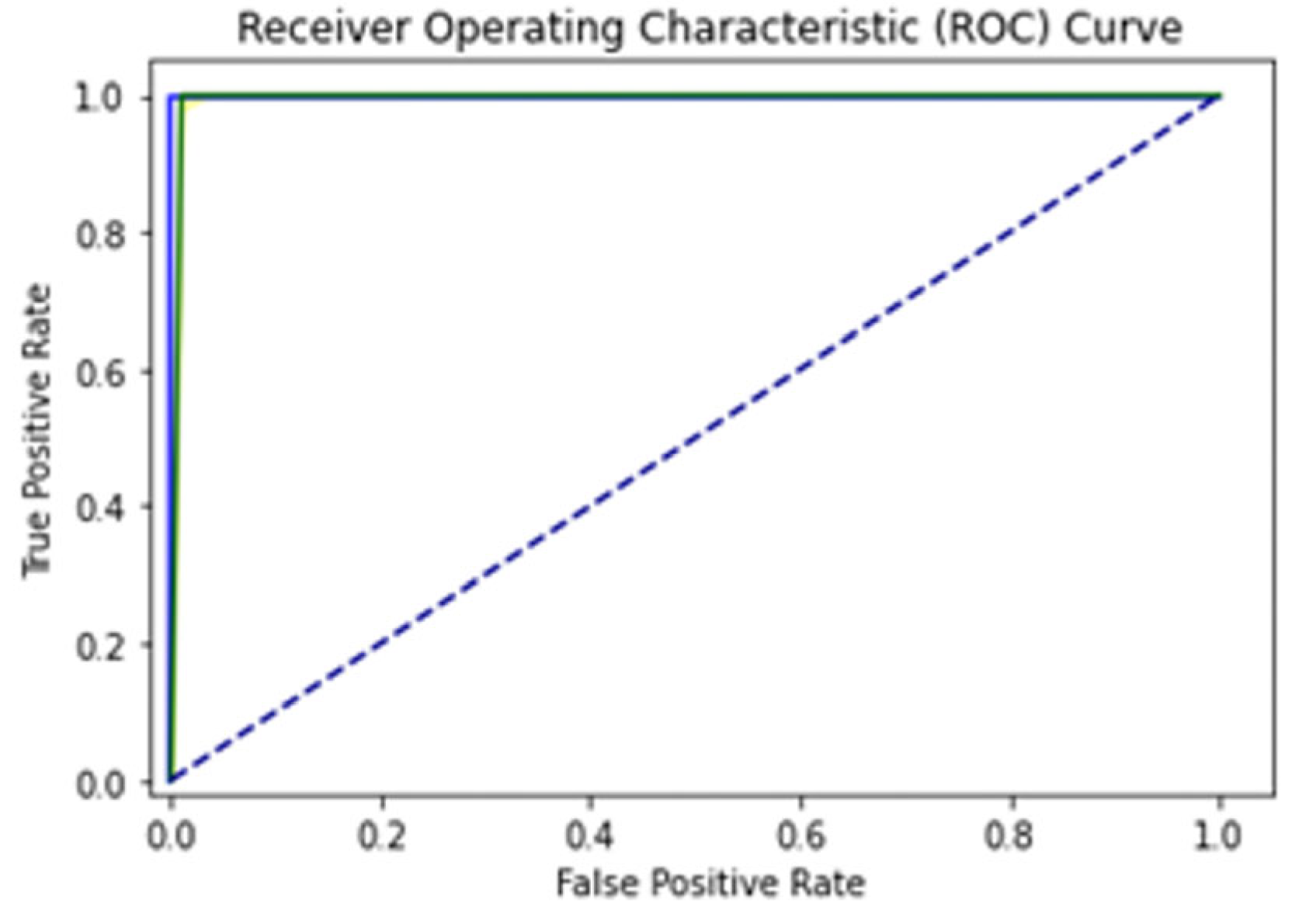

4.1. Self-Consistency Test (SCT)

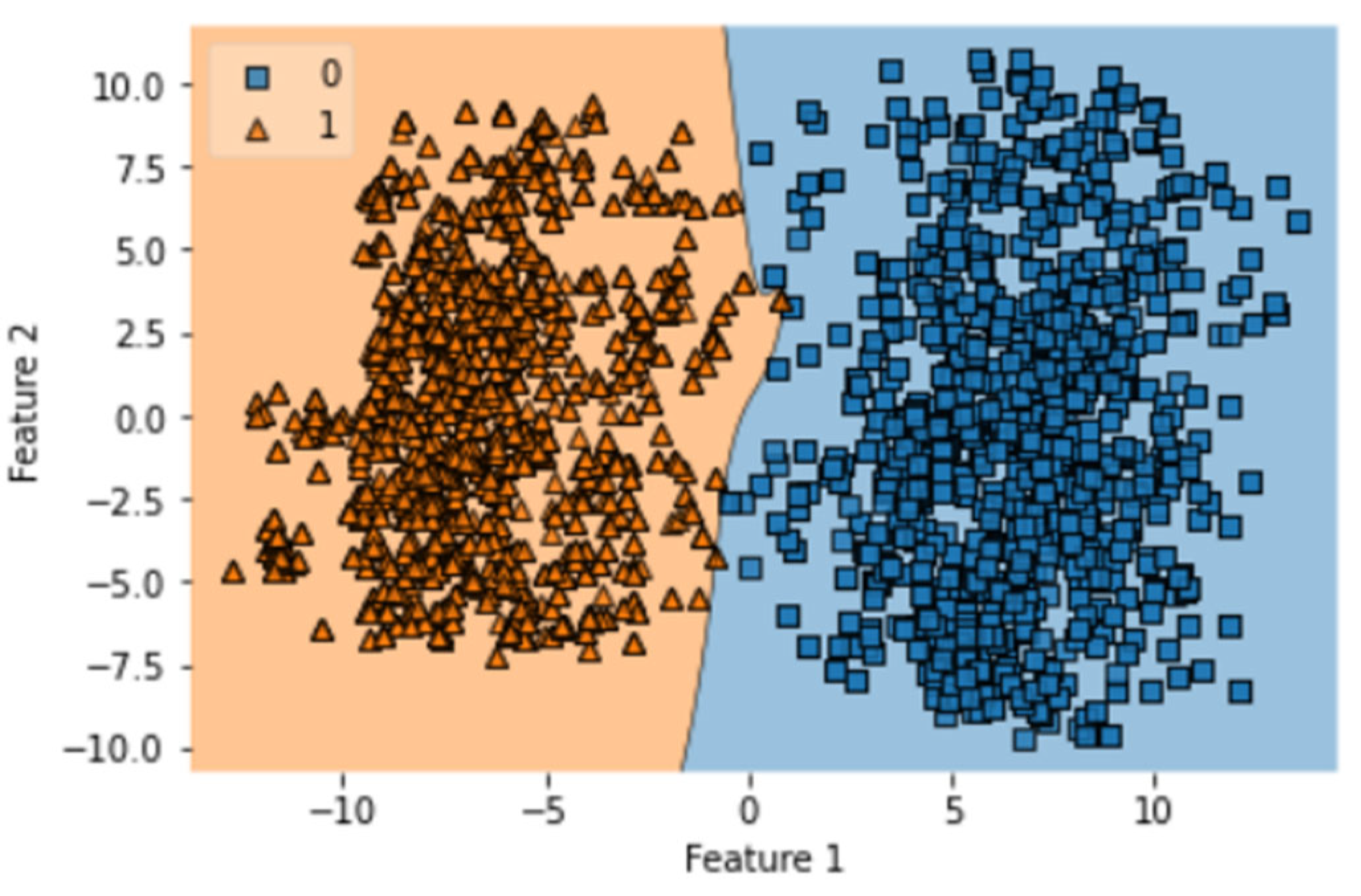

4.2. Independent Set Test (IST)

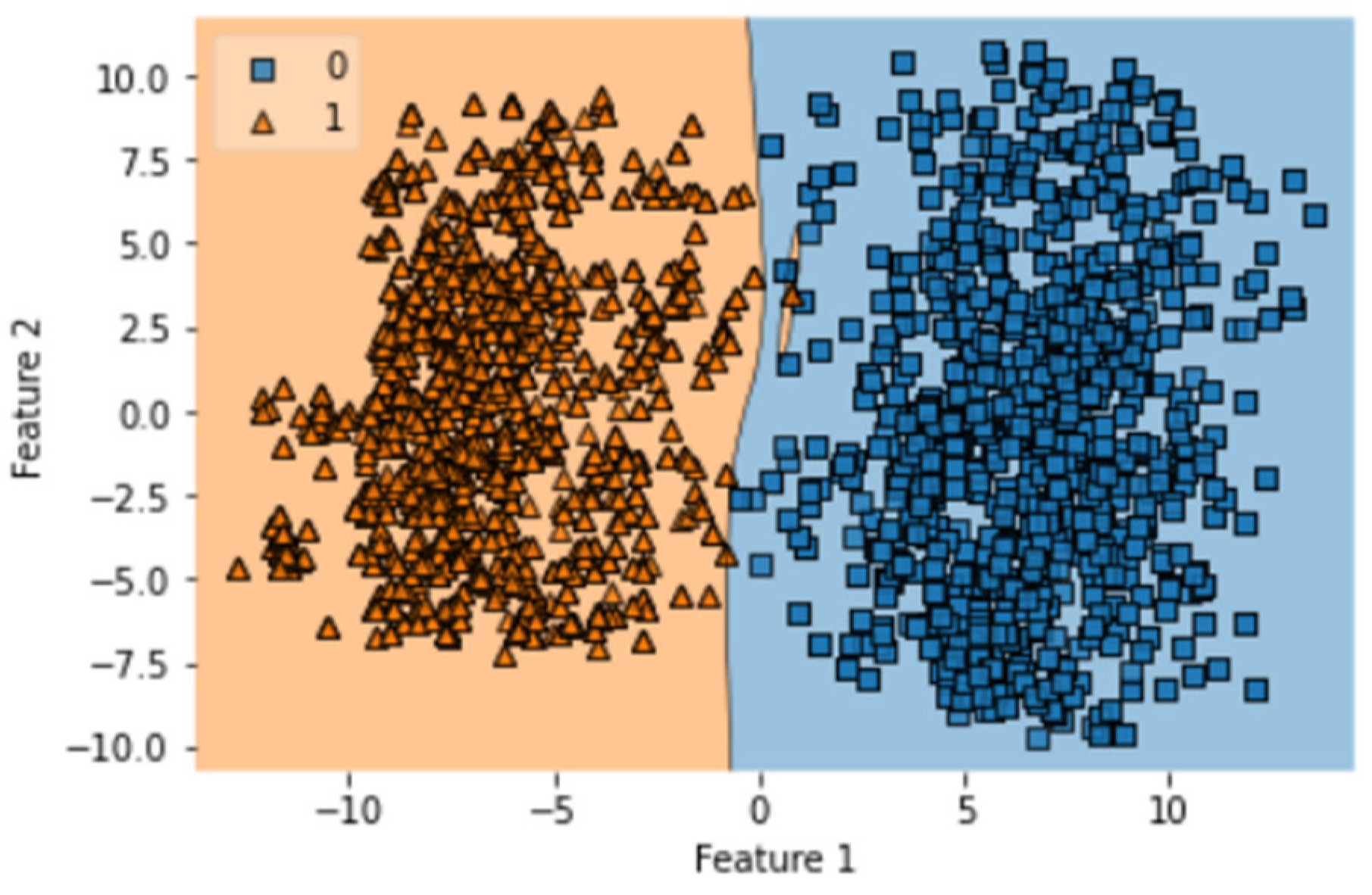

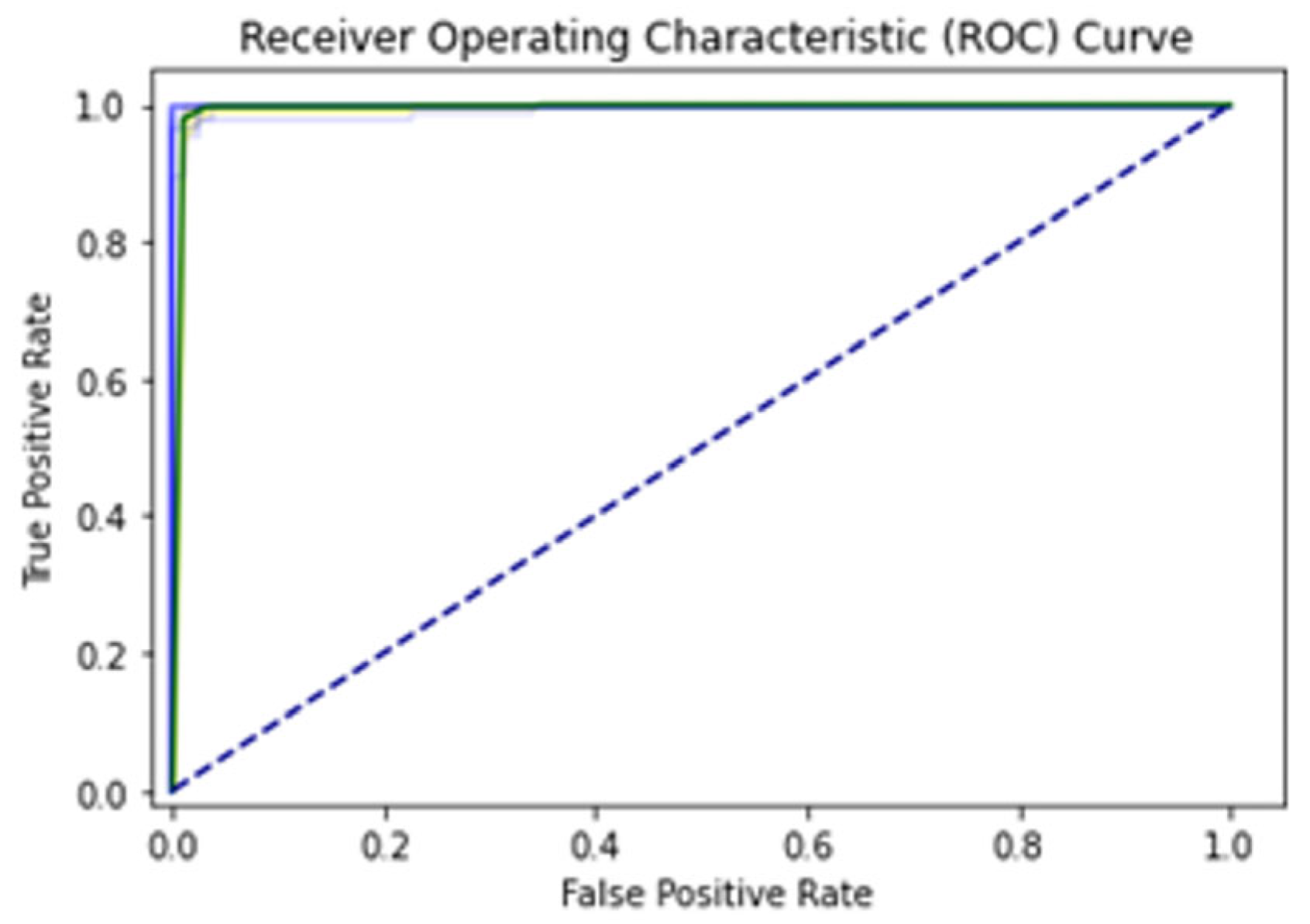

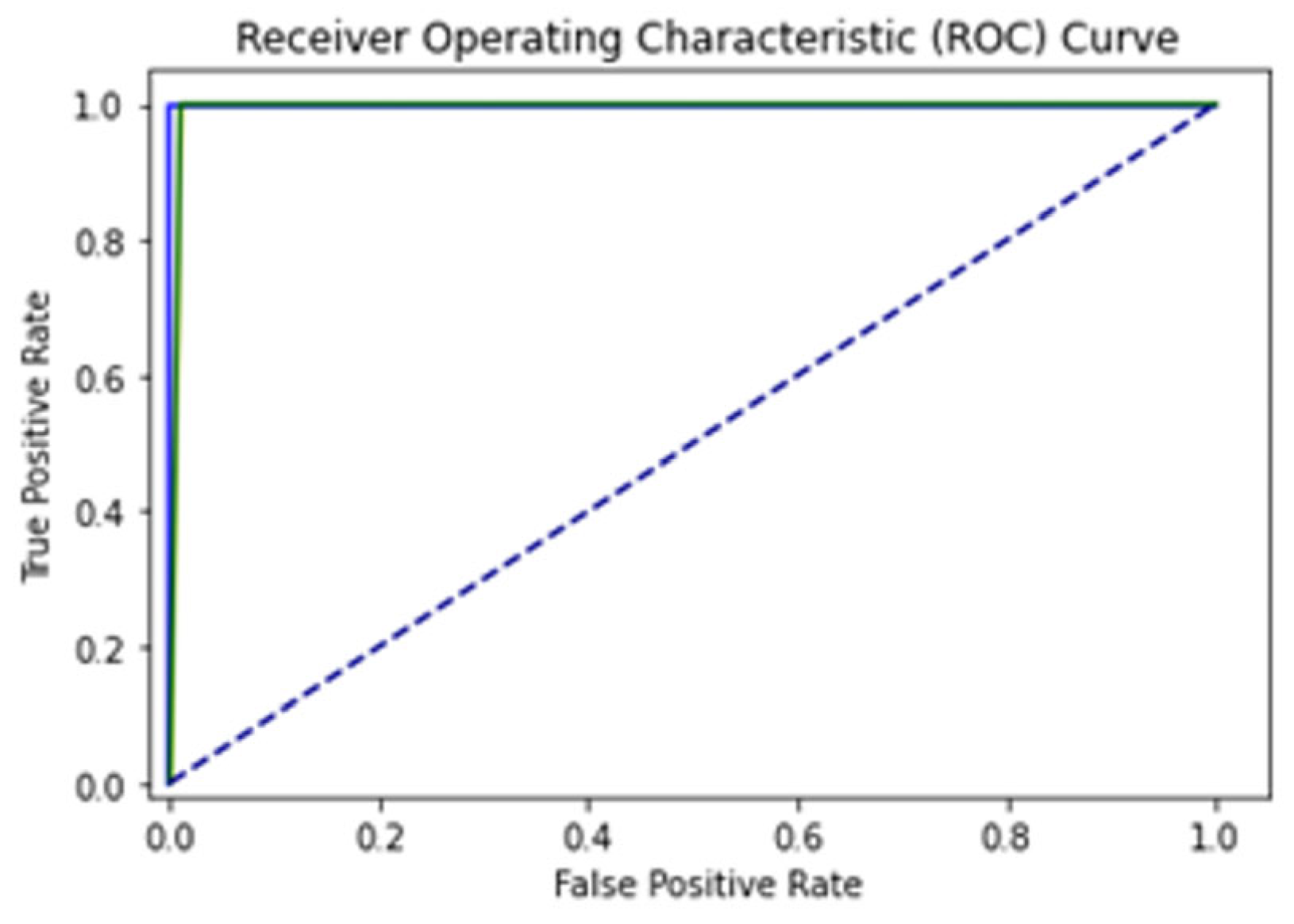
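
An independent set test evaluates a model on samples withheld from training. The following sketch assumes a simple shuffled hold-out split. The name `holdout_split` and the defaults `test_fraction=0.2` and `seed=0` are hypothetical, not the split used in the study. A stratified variant would additionally keep class proportions equal in both parts.

```python
import random


def holdout_split(samples, labels, test_fraction=0.2, seed=0):
    """Shuffle once and reserve `test_fraction` of the samples as an
    independent test set that is never used for training.
    Both defaults are hypothetical, not the study's settings."""
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must lie strictly between 0 and 1")
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_test = round(len(indices) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def take(idx, items):
        return [items[i] for i in idx]

    return (take(train_idx, samples), take(train_idx, labels),
            take(test_idx, samples), take(test_idx, labels))
```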

4.3. 10-Fold Cross-Validation Test (FCVT)
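
In k-fold cross-validation, the data are partitioned into k folds. Each fold serves once as the test set while the remaining k − 1 folds train the model, and the metrics are then averaged over the k runs. The following is a minimal index-generating sketch for k = 10. The function name and shuffling seed are hypothetical, and the fold assignment is not stratified.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.
    Every sample appears in exactly one test fold; fold sizes differ by at most one."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[f::k] for f in range(k)]  # round-robin assignment
    for f in range(k):
        test_idx = folds[f]
        train_idx = [i for g, fold in enumerate(folds) if g != f for i in fold]
        yield train_idx, test_idx
```

A typical loop trains on `train_idx`, scores on `test_idx`, and averages the per-fold metrics: `for train_idx, test_idx in k_fold_indices(len(samples)): ...`.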

4.4. Comparison with Previous Studies

4.5. Complexity Study

5. Analysis and Discussion

6. Conclusions

Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Pisani, P.; Bray, F.; Parkin, D. Estimates of the world-wide prevalence of cancer for 25 sites in the adult population. Int. J. Cancer 2001, 97, 72–81.

- Arshad, A.; Khan, Y. DNA Computing A Survey. In Proceedings of the 2019 International Conference on Innovative Computing (ICIC), Lahore, Pakistan, 1–2 November 2019.

- Loewe, L.; Hill, W. The population genetics of mutations: Good, bad and indifferent. Philos. Trans. R. Soc. B Biol. Sci. 2010, 365, 1153–1167.

- Pareek, C.; Smoczynski, R.; Tretyn, A. Sequencing technologies and genome sequencing. J. Appl. Genet. 2011, 52, 413–435.

- Kourou, K.; Exarchos, T.; Exarchos, K.; Karamouzis, M.; Fotiadis, D. Machine learning applications in cancer prognosis and prediction. Comput. Struct. Biotechnol. J. 2015, 13, 8–17.

- Mahdi, A.; Omid, H.; Kaveh, S.; Hamid, R.; Alireza, K.; Alireza, T. Detection of small bowel tumor in wireless capsule endoscopy images using an adaptive neuro-fuzzy inference system. J. Biomed. Res. 2017, 31, 419.

- Sun, M.; Liang, K.; Zhang, W.; Chang, Q.; Zhou, X. Non-Local Attention and Densely-Connected Convolutional Neural Networks for Malignancy Suspiciousness Classification of Gastric Ulcer. IEEE Access 2020, 8, 15812–15822.

- Huang, R.; Kwon, N.; Tomizawa, Y.; Choi, A.; Hernandez-Boussard, T.; Hwang, J. A Comparison of Logistic Regression against Machine Learning Algorithms for Gastric Cancer Risk Prediction Within Real-World Clinical Data Streams. JCO Clin. Cancer Inform. 2022, 6, e2200039.

- Yang, Y.; Zheng, Y.; Zhang, H.; Miao, Y.; Wu, G.; Zhou, L.; Wang, H.; Ji, R.; Guo, Q.; Chen, Z.; et al. An Immune-Related Gene Panel for Preoperative Lymph Node Status Evaluation in Advanced Gastric Cancer. BioMed Res. Int. 2020, 2020, 8450656.

- Wang, Y.; Wei, Y.; Fan, X.; Xu, F.; Dong, Z.; Cheng, S.; Zhang, J. Construction of a miRNA Signature Using Support Vector Machine to Identify Microsatellite Instability Status and Prognosis in Gastric Cancer. J. Oncol. 2022, 2022, 6586354.

- Polash, M.; Hossen, S.; Sarker, R.; Bhuiyan, M.; Taher, A. Functionality Testing of Machine Learning Algorithms to Anticipate Life Expectancy of Stomach Cancer Patients. In Proceedings of the 2022 International Conference on Advancement in Electrical and Electronic Engineering (ICAEEE), Gazipur, Bangladesh, 24–26 February 2022.

- Shah, M.A.; Ud Din, S.; Shah, A.A. Analysis of machine learning techniques for detection framework for DNA repair genes to help diagnose cancer: A systematic literature review. In Proceedings of the 2021 International Conference on Innovative Computing (ICIC), Lahore, Pakistan, 9–10 November 2021.

- Shah, A.A.; Ehsan, M.K.; Sohail, A.; Ilyas, S. Analysis of machine learning techniques for identification of post translation modification in Protein sequencing: A review. In Proceedings of the 2021 International Conference on Innovative Computing (ICIC), Lahore, Pakistan, 9–10 November 2021.

- Ud Din, S.; Shah, M.A.; Shah, A.A. Analysis of machine learning techniques for detection of tumor suppressor genes for early detection of cancer: A systematic literature review. In Proceedings of the 2021 International Conference on Innovative Computing (ICIC), Lahore, Pakistan, 9–10 November 2021.

- Butt, A.H.; Khan, Y.D. Canlect-pred: A cancer therapeutics tool for prediction of Target Cancerlectins using experiential annotated proteomic sequences. IEEE Access 2020, 8, 9520–9531.

- Hussain, W.; Rasool, N.; Khan, Y.D. Insights into machine learning-based approaches for virtual screening in drug discovery: Existing strategies and streamlining through FP-Cadd. Curr. Drug Discov. Technol. 2021, 18, 463–472.

- Khan, Y.D.; Alzahrani, E.; Alghamdi, W.; Ullah, M.Z. Sequence-based identification of allergen proteins developed by integration of pseaac and statistical moments via 5-step rule. Curr. Bioinform. 2020, 15, 1046–1055.

- Naseer, S. NPALMITOYLDEEP-PSEAAC: A predictor of N-palmitoylation sites in proteins using deep representations of proteins and PSEAAC via modified 5-Steps Rule. Curr. Bioinform. 2021, 16, 294–305.

- Naseer, S.; Ali, R.F.; Khan, Y.D.; Dominic, P.D. IGluK-deep: Computational identification of lysine glutarylation sites using deep neural networks with general pseudo amino acid compositions. J. Biomol. Struct. Dyn. 2021, 40, 11691–11704.

- Bashashati, A.; Haffari, G.; Ding, J.; Ha, G.; Lui, K.; Rosner, J.; Huntsman, D.; Caldas, C.; Aparicio, S.; Shah, S. DriverNet: Uncovering the impact of somatic driver mutations on transcriptional networks in cancer. Genome Biol. 2012, 13, R124.

- Feng, P.; Ding, H.; Yang, H.; Chen, W.; Lin, H.; Chou, K. iRNA-PseColl: Identifying the Occurrence Sites of Different RNA Modifications by Incorporating Collective Effects of Nucleotides into PseKNC. Mol. Ther. Nucleic Acids 2017, 7, 155–163.

- Stenson, P.; Mort, M.; Ball, E.; Evans, K.; Hayden, M.; Heywood, S.; Hussain, M.; Phillips, A.; Cooper, D. The Human Gene Mutation Database: Towards a comprehensive repository of inherited mutation data for medical research, genetic diagnosis and next-generation sequencing studies. Hum. Genet. 2017, 136, 665–677.

- Wu, Y.; Grabsch, H.; Ivanova, T.; Tan, I.; Murray, J.; Ooi, C.; Wright, A.; West, N.; Hutchins, G.; Wu, J.; et al. Comprehensive genomic meta-analysis identifies intra-tumoural stroma as a predictor of survival in patients with gastric cancer. Gut 2012, 62, 1100–1111.

- Martínez-Jiménez, F.; Muiños, F.; Sentís, I.; Deu-Pons, J.; Reyes-Salazar, I.; Arnedo-Pac, C.; Mularoni, L.; Pich, O.; Bonet, J.; Kranas, H.; et al. A compendium of mutational cancer driver genes. Nat. Rev. Cancer 2020, 20, 555–572.

- Zhang, J.; Bajari, R.; Andric, D.; Gerthoffert, F.; Lepsa, A.; Nahal-Bose, H.; Stein, L.; Ferretti, V. The International Cancer Genome Consortium Data Portal. Nat. Biotechnol. 2019, 37, 367–369.

- IntOGen—Cancer Mutations Browser. Available online: https://intogen.org/search (accessed on 2 October 2022).

- Ensembl Genome Browser 107. Available online: http://asia.ensembl.org/index.html (accessed on 2 October 2022).

- Guo, S.; Deng, E.; Xu, L.; Ding, H.; Lin, H.; Chen, W.; Chou, K. iNuc-PseKNC: A sequence-based predictor for predicting nucleosome positioning in genomes with pseudo k-tuple nucleotide composition. Bioinformatics 2014, 30, 1522–1529.

- Butt, A.; Rasool, N.; Khan, Y. A Treatise to Computational Approaches Towards Prediction of Membrane Protein and Its Subtypes. J. Membr. Biol. 2016, 250, 55–76.

- Akcay, M.; Etiz, D.; Celik, O. Prediction of Survival and Recurrence Patterns by Machine Learning in Gastric Cancer Cases Undergoing Radiation Therapy and Chemotherapy. Adv. Radiat. Oncol. 2020, 5, 1179–1187.

- Barukab, O.; Khan, Y.D.; Khan, S.A.; Chou, K.-C. Isulfotyr-PSEAAC: Identify tyrosine sulfation sites by incorporating statistical moments via Chou’s 5-steps rule and pseudo components. Curr. Genom. 2019, 20, 306–320.

- Shehryar, S.M.; Shahid, M.A.; Shah, A.A. Mutation detection in genes sequence using machine learning. In Proceedings of the 2021 International Conference on Innovative Computing (ICIC), Lahore, Pakistan, 9–10 November 2021.

- Shah, A.A.; Alturise, F.; Alkhalifah, T.; Khan, Y.D. Evaluation of deep learning techniques for identification of sarcoma-causing carcinogenic mutations. Digit. Health 2022, 8, 205520762211337.

- Hussain, W.; Rasool, N.; Khan, Y.D. A sequence-based predictor of zika virus proteins developed by integration of PSEAAC and statistical moments. Comb. Chem. High Throughput Screen. 2020, 23, 797–804.

- Amanat, S.; Ashraf, A.; Hussain, W.; Rasool, N.; Khan, Y.D. Identification of lysine carboxylation sites in proteins by integrating statistical moments and position relative features via general PSEAAC. Curr. Bioinform. 2020, 15, 396–407.

- Mahmood, M.K.; Ehsan, A.; Khan, Y.D.; Chou, K.-C. Ihyd-LysSite (EPSV): Identifying hydroxylysine sites in protein using statistical formulation by extracting enhanced position and sequence variant feature technique. Curr. Genom. 2020, 21, 536–545.

- Naseer, S.; Hussain, W.; Khan, Y.D.; Rasool, N. IPhosS(deep)-PSEAAC: Identify phosphoserine sites in proteins using deep learning on general pseudo amino acid compositions via modified 5-Steps Rule. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 19, 1703–1714.

- Naseer, S.; Hussain, W.; Khan, Y.D.; Rasool, N. Optimization of serine phosphorylation prediction in proteins by comparing human engineered features and deep representations. Anal. Biochem. 2021, 615, 114069.

- Malebary, S.J.; Khan, R.; Khan, Y.D. PROTOPRED: Advancing oncological research through identification of proto-oncogene proteins. IEEE Access 2021, 9, 68788–68797.

- Khan, Y.D.; Khan, N.S.; Naseer, S.; Butt, A.H. Isumok-PSEAAC: Prediction of lysine sumoylation sites using statistical moments and Chou’s pseaac. PeerJ 2021, 9, e11581.

- Awais, M.; Hussain, W.; Rasool, N.; Khan, Y.D. ITSP-PSEAAC: Identifying tumor suppressor proteins by using fully connected neural network and PSEAAC. Curr. Bioinform. 2021, 16, 700–709.

- Khan, Y.; Rasool, N.; Hussain, W.; Khan, S.; Chou, K. iPhosT-PseAAC: Identify phosphothreonine sites by incorporating sequence statistical moments into PseAAC. Anal. Biochem. 2018, 550, 109–116.

- Gonzalez-Perez, A.; Perez-Llamas, C.; Deu-Pons, J.; Tamborero, D.; Schroeder, M.; Jene-Sanz, A.; Santos, A.; Lopez-Bigas, N. IntOGen-mutations identifies cancer drivers across tumor types. Nat. Methods 2013, 10, 1081–1082.

- Feng, P.; Yang, H.; Ding, H.; Lin, H.; Chen, W.; Chou, K. iDNA6mA-PseKNC: Identifying DNA N6-methyladenosine sites by incorporating nucleotide physicochemical properties into PseKNC. Genomics 2019, 111, 96–102.

- Khan, Y.; Ahmed, F.; Khan, S. Situation recognition using image moments and recurrent neural networks. Neural Comput. Appl. 2013, 24, 1519–1529.

- Khan, Y.; Ahmad, F.; Anwar, W. A neuro-cognitive approach for Iris recognition using back propagation. World Appl. Sci. J. 2012, 16, 678–685.

- Akmal, M.; Rasool, N.; Khan, Y. Prediction of N-linked glycosylation sites using position relative features and statistical moments. PLoS ONE 2017, 12, e0181966.

- Ehsan, A.; Mahmood, K.; Khan, Y.; Khan, S.; Chou, K. A Novel Modeling in Mathematical Biology for Classification of Signal Peptides. Sci. Rep. 2018, 8, 1039.

- Butt, A.; Khan, S.; Jamil, H.; Rasool, N.; Khan, Y. A Prediction Model for Membrane Proteins Using Moments Based Features. BioMed Res. Int. 2016, 2016, 8370132.

- Khan, Y.; Khan, N.; Farooq, S.; Abid, A.; Khan, S.; Ahmad, F.; Mahmood, M. An Efficient Algorithm for Recognition of Human Actions. Sci. World J. 2014, 2014, 875879.

- Khan, Y.; Khan, S.; Ahmad, F.; Islam, S. Iris Recognition Using Image Moments and k-Means Algorithm. Sci. World J. 2014, 2014, 723595.

- Hussain, W.; Khan, Y.; Rasool, N.; Khan, S.; Chou, K. SPrenylC-PseAAC: A sequence-based model developed via Chou’s 5-steps rule and general PseAAC for identifying S-prenylation sites in proteins. J. Theor. Biol. 2019, 468, 1–11.

- Grada, A.; Weinbrecht, K. Next-Generation Sequencing: Methodology and Application. J. Investig. Dermatol. 2013, 133, e11.

- Mardis, E. The impact of next-generation sequencing technology on genetics. Trends Genet. 2008, 24, 133–141.

- Awais, M.; Hussain, W.; Khan, Y.; Rasool, N.; Khan, S.; Chou, K. iPhosH-PseAAC: Identify Phosphohistidine Sites in Proteins by Blending Statistical Moments and Position Relative Features According to the Chou’s 5-Step Rule and General Pseudo Amino Acid Composition. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 596–610.

- Papademetriou, R. Reconstructing with moments. In Proceedings of the 11th IAPR International Conference on Pattern Recognition. Vol. IV. Conference D: Architectures for Vision and Pattern Recognition, The Hague, The Netherlands, 30 August–3 September 1992.

- Khan, Y.; Jamil, M.; Hussain, W.; Rasool, N.; Khan, S.; Chou, K. pSSbond-PseAAC: Prediction of disulfide bonding sites by integration of PseAAC and statistical moments. J. Theor. Biol. 2019, 463, 47–55.

- Korthauer, K.; Kendziorski, C. MADGiC: A model-based approach for identifying driver genes in cancer. Bioinformatics 2015, 31, 1526–1535.

- Gruber, N.; Jockisch, A. Are GRU Cells More Specific and LSTM Cells More Sensitive in Motive Classification of Text? Front. Artif. Intell. 2020, 3, 40.

- Reijns, M.; Parry, D.; Williams, T.; Nadeu, F.; Hindshaw, R.; Rios Szwed, D.; Nicholson, M.; Carroll, P.; Boyle, S.; Royo, R.; et al. Signatures of TOP1 transcription-associated mutagenesis in cancer and germline. Nature 2022, 602, 623–631.

- Niu, G.; Wang, X.; Golda, M.; Mastro, S.; Zhang, B. An optimized adaptive PReLU-DBN for rolling element bearing fault diagnosis. Neurocomputing 2021, 445, 26–34.

- Shah, A.; Alturise, F.; Alkhalifah, T.; Khan, Y. Deep Learning Approaches for Detection of Breast Adenocarcinoma Causing Carcinogenic Mutations. Int. J. Mol. Sci. 2022, 23, 11539.

- Shah, A.; Malik, H.; Mohammad, A.; Khan, Y.; Alourani, A. Machine learning techniques for identification of carcinogenic mutations, which cause breast adenocarcinoma. Sci. Rep. 2022, 12, 11738.

- Shah, A.; Khan, Y. Identification of 4-carboxyglutamate residue sites based on position based statistical feature and multiple classification. Sci. Rep. 2020, 10, 16913.

- Saeed, S.; Shah, A.; Ehsan, M.; Amirzada, M.; Mahmood, A.; Mezgebo, T. Automated Facial Expression Recognition Framework Using Deep Learning. J. Healthc. Eng. 2022, 2022, 5707930.

| Paper Citation | Algorithm | Accuracy Achieved | Dataset |
|---|---|---|---|
| [6] | Adaptive Neuro-Fuzzy Inference System | 86.00% | PET-Scan, CT-Scan |
| [7] | Densely Connected Convolutional Network | 96.79% | Endoscopy Images |
| [8] | Logistic Regression | 73.20% | Electronic Health Record |
| [9] | Naive Bayes | 74.90% | Gene Expression Data |
| [10] | Support Vector Machine | 70.00% | miRNA |
| [11] | Extra Tree Classifier | 97.27% | Surveillance, Epidemiology and End Results (SEER) |
| [11] | Random Forest Classifier | 95.64% | SEER |
| [11] | Bagging Classifier | 95.21% | SEER |
| [11] | HGB Classifier | 95.29% | SEER |
| [11] | LGBM Classifier | 92.71% | SEER |
| [11] | Decision Tree Classifier | 85.75% | SEER |
| [11] | Gradient Boost Classifier | 79.54% | SEER |

| Gene Symbol | No. of Mutations | Gene Symbol | No. of Mutations | Gene Symbol | No. of Mutations |
|---|---|---|---|---|---|
| TP53 | 293 | FBXW7 | 16 | ARHGEF12 | 13 |
| ARID1A | 76 | MAP2K7 | 22 | PIK3R1 | 5 |
| PIK3CA | 75 | SOHLH2 | 15 | MYH9 | 20 |
| CDH10 | 52 | NIN | 18 | NTRK3 | 17 |
| SMAD4 | 35 | FAT4 | 126 | FAT3 | 90 |
| KRAS | 37 | PRF1 | 15 | BCL9 | 14 |
| APC | 44 | PRKCB | 14 | ATM | 31 |
| KMT2D | 45 | ACVR2A | 24 | KIT | 13 |
| CDH11 | 33 | RNF43 | 16 | CACNA1D | 18 |
| ERBB3 | 28 | BMPR2 | 11 | KDM6A | 11 |
| RHOA | 27 | PPP3CA | 9 | CARS | 8 |
| CTNNB1 | 30 | CASP8 | 6 | GRIN2A | 32 |
| LRP1B | 169 | TOP2A | 12 | NSD1 | 21 |
| ARID2 | 27 | PRRX1 | 9 | FAT1 | 31 |
| CDKN2A | 18 | ARHGEF10L | 10 | CDK12 | 15 |
| BCOR | 28 | TET1 | 23 | FHIT | 3 |
| ERBB2 | 26 | RELA | 9 | BCLAF1 | 20 |
| DCSTAMP | 18 | RB1 | 12 | RECQL4 | 11 |
| TRIM49C | 17 | NRG1 | 23 | CLIP1 | 10 |
| KMT2C | 69 | BMPR1A | 3 | | |
| PTEN | 20 | SDC4 | 5 | | |

| Metric | LSTM (SCT) | GRU (SCT) | Bi-LSTM (SCT) | LSTM (IST) | GRU (IST) | Bi-LSTM (IST) | LSTM (FCVT) | GRU (FCVT) | Bi-LSTM (FCVT) |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (%) | 97.18 | 98.88 | 98.88 | 97.18 | 99.46 | 99.46 | 97.30 | 97.89 | 97.83 |
| Sensitivity (%) | 98.35 | 100 | 100 | 98.35 | 98.93 | 98.93 | 96.10 | 96.67 | 96.55 |
| Specificity (%) | 96.01 | 97.77 | 97.77 | 96.01 | 100 | 100 | 98.56 | 99.16 | 99.16 |
| MCC | 0.94 | 0.977 | 0.977 | 0.94 | 0.989 | 0.989 | 0.946 | 0.978 | 0.978 |
| AUC | 0.98 | 1.00 | 1.00 | 0.98 | 1.00 | 1.00 | 0.99 | 0.99 | 0.99 |
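
In their standard form, the first four metrics in this table are functions of the confusion-matrix counts TP, TN, FP, and FN:

- accuracy = (TP + TN) / total
- sensitivity = TP / (TP + FN)
- specificity = TN / (TN + FP)
- MCC = (TP·TN − FP·FN) / √((TP + FP)(TP + FN)(TN + FP)(TN + FN))

AUC is the area under the ROC curve. The following sketch computes all five from counts and scores using these textbook definitions. The function names are hypothetical, and the sketch is not the study's evaluation code.

```python
import math


def confusion_metrics(tp, tn, fp, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)  # true positive rate (recall)
    specificity = tn / (tn + fp)  # true negative rate
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0  # 0 when undefined
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "mcc": mcc}


def auc(scores, labels):
    """ROC AUC as the probability that a random positive scores higher than a
    random negative (ties count half). O(P * N), fine for small sets."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```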

| Algorithm (Current Study) | Accuracy | Algorithm (Previous Studies) | Accuracy |
|---|---|---|---|
| LSTM | 97.18% | Adaptive Neuro-Fuzzy Inference System [6] | 86.00% |
| GRU | 99.46% | Densely Connected Convolutional Network [7] | 96.79% |
| Bi-LSTM | 99.46% | Logistic Regression [8] | 73.20% |
| | | Naive Bayes [9] | 74.90% |
| | | Support Vector Machine [10] | 70.00% |
| | | Random Forest Classifier [11] | 95.64% |
| | | LGBM Classifier [11] | 92.71% |
| | | Decision Tree Classifier [11] | 85.75% |
| | | Gradient Boost Classifier [11] | 79.54% |

| Algorithm | Accuracy (%) with the Feature Extraction Techniques Developed in This Study | Accuracy (%) without the Feature Extraction Techniques Developed in This Study |
|---|---|---|
| LSTM | 97.18 | 90.42 |
| GRU | 99.46 | 91.55 |
| Bi-LSTM | 99.46 | 92.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alotaibi, F.M.; Khan, Y.D. A Framework for Prediction of Oncogenomic Progression Aiding Personalized Treatment of Gastric Cancer. Diagnostics 2023, 13, 2291. https://doi.org/10.3390/diagnostics13132291
