1. Introduction

Adaptive optics (AO) has been applied to ophthalmologic imaging to enhance the resolution for in vivo imaging at the cellular level [1,2,3,4]. The building blocks of retinal microstructures such as cone and rod photoreceptors, retinal pigment epithelial (RPE) cells, blood cells, and microvasculature need to be accurately identified, counted, segmented, and mapped for diagnostic purposes. In general, multiple images are acquired at the same location and are registered. The registered image stacks can then be averaged to improve the signal-to-noise ratio (SNR) or analyzed to reveal temporal dynamics. For small patches of the order of half a degree, image registration is relatively easy using simple cross-correlations. However, as the image size increases to 1–2° in flying-spot scanning laser ophthalmoscopy (SLO) [5,6] and even more to 3.5 × 5°, as in line-scanning retinal imagers [7], more sophisticated image registration techniques are needed to remove image distortions and motion artifacts. Hardware image stabilization [8,9,10,11,12,13] has been developed and has proved sufficiently good that simple software routines could align and blend the recorded images. Concomitantly, a software approach, strip-based registration [14,15,16], has been developed, based on the assumption that deformations along the strip are negligible due to the high speed of scanning with resonant scanners, while torsional motion is neglected altogether. Yet, the living eye is not a rigid sphere, and imaged fields are neither flat nor insensitive to changing optical/geometrical perspectives that accompany motion; rather, the eye is a fluid-filled organ subjected to physical (and thus optical) distortions, as it is periodically pumped with blood and intermittently pulled by muscles. It is reasonable to expect deformations on the order of microns in all directions over 0.5–1.5 mm field sizes, not only orthogonal to or along the strip. If the deformation from one end of the strip to the other is comparable to the cone size, strip-based registration will blur out the cones instead of providing the hoped-for improvement in image analysis [17]. To correct for similar types of local deformations, non-rigid registration methods have been successfully used in medical imaging [18,19,20,21,22,23]. In retinal imaging in particular, non-rigid registration techniques have been used to improve visualization of retinal capillaries using speckle-variance optical coherence tomography (OCTA) [24] or AO scanning laser ophthalmoscopy [25]. We propose here the use of a non-rigid registration method to register stacks of high-resolution retinal images to improve the quality of the final average image. Our procedure, illustrated here on cone images, can be applied to images of other retinal microstructures as well.

2. Materials and Methods

2.1. The Imaging System and Procedure

The multimodal AO retinal imager (MAORI) used here has been described previously [5,13]. It includes both AO-corrected SLO and optical coherence tomography (OCT) imaging channels that share a common galvanometer for vertical (slow) scanning and therefore acquire perfectly registered images simultaneously. MAORI uses broadband (40–60 nm) superluminescent diodes (SLD) for SLO (at 760 nm) and OCT (at 850 nm). It has been designed for a 7.5 mm beam diameter at the eye pupil that provides ~2.5 µm lateral resolution in a normal human eye in both AO-SLO and AO-OCT images. The SLO images (1000 × 1024 pixels) discussed in this paper were acquired at a 28 Hz frame rate. Square SLO images with a size adjustable from 0.5 to 3 degrees (in high-resolution mode) can be positioned anywhere within the field of view of the instrument (fixation target) covering ~33 degrees. Additional features of MAORI include an auxiliary line-scanning ophthalmoscope (LSO) imager for global orientation, two pupil configurations for a variety of subject species (including humans, large primates, and small animals), a pupil camera, high-speed GPU processing of OCT and wavefront sensor (WS) images, and an LCD-based fixation target. MAORI is a research and clinical platform for high-resolution imaging of cells and fine structure in the retina.

2.2. Human Subjects and Imaging Procedure

The multimodal AO retinal imager was tested at Boston Children’s Hospital. A human subject protocol was approved by Boston Children’s Hospital IRB prior to imaging, and all subjects gave informed consent to be imaged. Some of the subjects with small pupils were dilated to enhance the AO correction. The imaging sessions followed a set protocol, including several scans with varied sizes and at different eccentricities. The data analyzed in this paper were obtained from three normal volunteers, A—23-year-old female; B—38-year-old male; and C—19-year-old female, and are shown here for illustration purposes only.

2.3. Deformations

Images were acquired at 28 frames per second. The fast scan for the SLO images was generated with a resonant scanner (14.5 kHz). Either a nonlinear pixel clock or post-processing in software [26,27,28] was needed to properly dewarp the image. Software dewarping assumed that the resonant scanner followed a sinusoidal law of motion that was properly calibrated and well known with respect to the image. Any error here would result in pixel spacing that varied laterally across the image, so that a given retinal structure appearing in different parts of different frames would seem to change size. In addition, motion artifacts responsible for image deformations included fast eye saccades that generated intra-frame distortions. Electronic noise and optical distortions also affected the image. Jitter in the line trigger when acquiring the SLO image and noise or non-linearity in the slow scan, in addition to errors in dewarping, generated small local distortions that needed to be eliminated when averaging multiple frames that were acquired tens of milliseconds to several seconds apart. Simple, rigid overlap would add blur to the average image.
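
The software dewarping step described above can be sketched as a one-dimensional resampling of each line. This is a minimal illustration in Python with NumPy, not the routine used in MAORI: it assumes that pixels are sampled uniformly in time, that the beam position follows a pure sinusoid, and that the acquired portion of the sweep is described by a hypothetical calibration parameter `fill` (the fraction of the half-period covered by one line). The function names are illustrative.

```python
import numpy as np

def dewarp_line(line, fill=0.9):
    """Resample one resonant-scanner line onto a uniform spatial grid.

    Assumption: samples are uniform in time and the beam position is
    x = sin(theta), with theta spanning +/- fill * pi/2 across the line.
    `fill` is a hypothetical calibration parameter.
    """
    n = line.shape[0]
    theta = np.linspace(-fill * np.pi / 2, fill * np.pi / 2, n)
    x_acquired = np.sin(theta)  # nonuniform, monotonically increasing positions
    x_uniform = np.linspace(x_acquired[0], x_acquired[-1], n)
    # Linear interpolation from the acquired positions to the uniform grid
    return np.interp(x_uniform, x_acquired, line)

def dewarp_image(image, fill=0.9):
    """Apply the same sinusoidal correction to every line (row) of a frame."""
    return np.stack([dewarp_line(row, fill) for row in image])
```

A miscalibrated `fill` leaves a residual, position-dependent pixel spacing, which is the kind of lateral size change between frames described in the text.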

Blood flow in capillaries above the photoreceptor layer distorted the image of the cones. In general, capillaries filled with red blood cells cast shadows that hide cones underneath, whereas, occasionally, white blood cells act as moving magnifying glasses, causing the cones underneath to become visible. These events also create local distortions wherein the apparent distance between cones on opposite sides of the capillary changes; the reverse effect, where cones are mostly visible, and a moving shadow transiently hides cones, is also occasionally observed.

Figure 1 shows two consecutive frames from an image stack distorted differently by a blood cell flowing through a capillary. The right side of Figure 1 shows the overlap of the two patterns of cones circled in the two frames. Clear motion in different directions can be observed; this is also illustrated in frames 3–5 of Video S1.

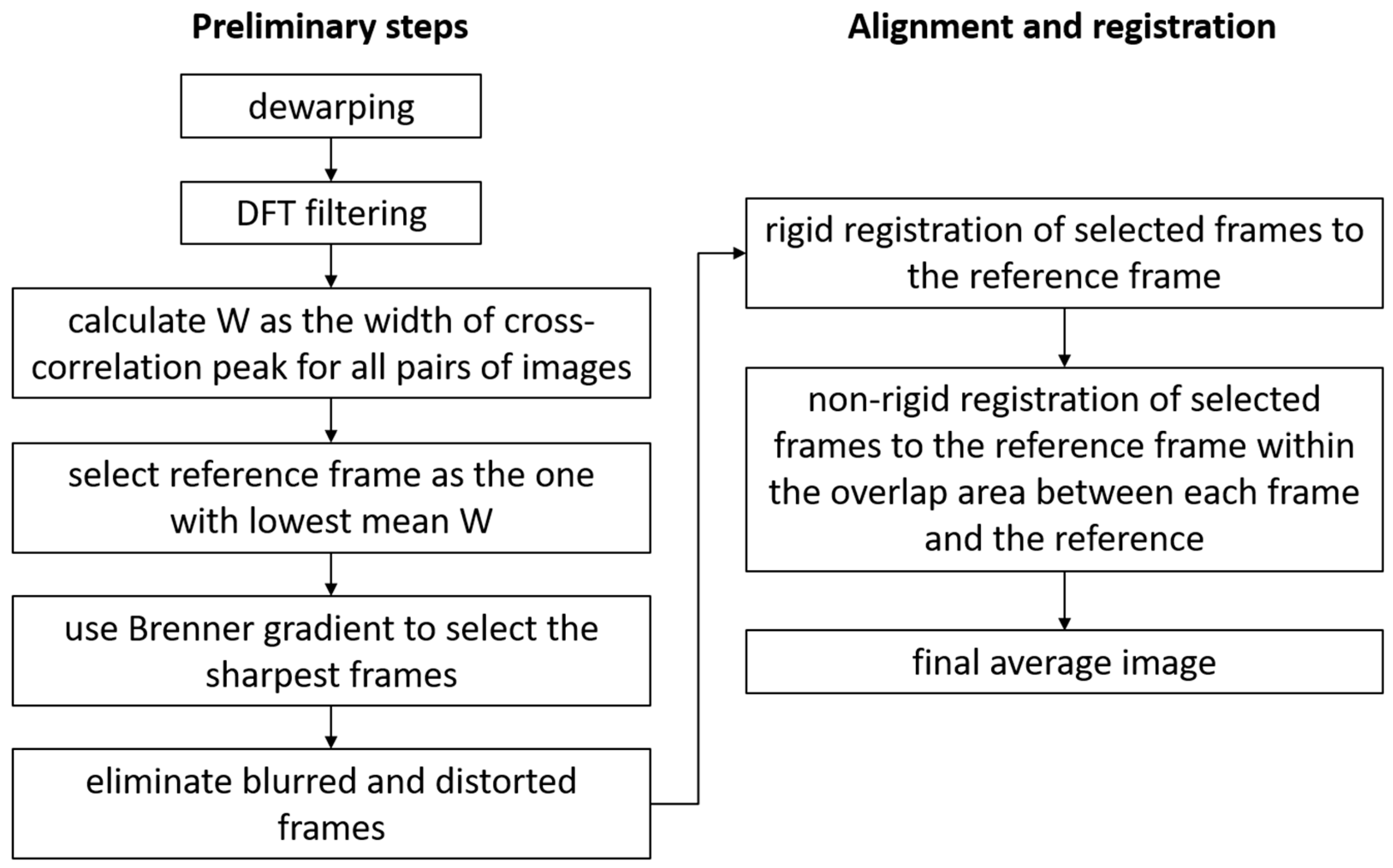

2.4. Preliminary Steps of Image Processing

Assuming that the dewarping was performed either in hardware or as a pre-processing software step, the first step in post-processing was to analyze the images to automatically select the best images and to eliminate the most distorted frames (due to blinks, saccades, intra-frame eye motion). All images were filtered to improve their contrast and remove background intensity variations. A Discrete Fourier Transform (DFT) filtering procedure was used, in which a disk mask was applied to keep the ring associated with the photoreceptors. The DC component was removed as well by filtering out the center peak of the DFT. All the steps of image processing are illustrated in the Figure 2 flowchart.
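
The DFT filtering step can be illustrated with a short Python/NumPy sketch. It is an assumption-laden example rather than the filter used for the figures: the mask is modeled as an annulus in spatial frequency, and the band limits `r_inner` and `r_outer` (in cycles per pixel) are hypothetical parameters that would have to be chosen around the cone-spacing ring for a given field size.

```python
import numpy as np

def annular_dft_filter(image, r_inner, r_outer):
    """Keep only spatial frequencies with r_inner <= |f| <= r_outer (cycles/pixel).

    With r_inner > 0 the DC term (center peak of the DFT) is removed, which
    suppresses the mean level and slow background intensity variations.
    """
    rows, cols = image.shape
    fy = np.fft.fftfreq(rows)  # vertical frequencies, cycles per pixel
    fx = np.fft.fftfreq(cols)  # horizontal frequencies, cycles per pixel
    radius = np.hypot(fy[:, None], fx[None, :])
    mask = (radius >= r_inner) & (radius <= r_outer)
    spectrum = np.fft.fft2(image)
    return np.real(np.fft.ifft2(spectrum * mask))
```

For example, `annular_dft_filter(frame, 0.05, 0.2)` would keep structures with periods between 5 and 20 pixels; these numbers are placeholders, not values from this work.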

The next step was to find the best frame in the data set, a reference frame, which would serve as the template to which all the other frames would be aligned. This was performed based mostly on the width of the cross-correlations of pairs of images. Similar images typically had high and sharp cross-correlation peaks, whereas dissimilar images had low and broad cross-correlation peaks. The cross-correlation of two 2D images is itself a 2D image. The peak of the cross-correlation indicated the best overlap of the two images and had a more or less conical shape. Accordingly, we calculated W as the diameter of the circular section through the peak, taken at 90% of the apex (maximum) height. The peak location provided the x/y shifts of one image with respect to the other one. W, used here as a measure of the sharpness of the cross-correlation peak, was relatively insensitive to intensity variations between frames and was mostly related to the similarities of the structures contained in the overlapping regions of the two frames. W was calculated for all pair combinations in an image stack, and the mean value was obtained for each image, given all correlations between that specific image and all the other images in the set. The image with the lowest mean was selected as the reference frame since it had the best similarity with the most frames in the dataset.
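
The reference-frame selection can be sketched as follows in Python with NumPy. The sketch makes several assumptions that are not stated in the text: the cross-correlation is computed circularly with FFTs on mean-subtracted frames, the 90% level is measured from zero, and W is taken as the equivalent diameter of the area where the correlation exceeds that level (which presumes a single dominant peak). All function names are illustrative.

```python
import numpy as np

def cross_correlation(a, b):
    """Circular 2D cross-correlation of two equally sized frames via FFT."""
    a = a - a.mean()
    b = b - b.mean()
    cc = np.fft.ifft2(np.fft.fft2(a) * np.conj(np.fft.fft2(b))).real
    return np.fft.fftshift(cc)  # zero shift is moved to the array center

def peak_shift_and_width(cc, level=0.9):
    """Return the (dy, dx) shift of `a` relative to `b` and the peak width W."""
    peak = np.unravel_index(np.argmax(cc), cc.shape)
    shift = (int(peak[0]) - cc.shape[0] // 2, int(peak[1]) - cc.shape[1] // 2)
    # Area of the section through the peak at `level` of the apex height,
    # converted to the diameter of a circle with the same area
    area = np.count_nonzero(cc >= level * cc[peak])
    width = 2.0 * np.sqrt(area / np.pi)
    return shift, width

def select_reference(stack, level=0.9):
    """Pick the frame with the lowest mean W against all other frames."""
    n = len(stack)
    w = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            _, w[i, j] = peak_shift_and_width(
                cross_correlation(stack[i], stack[j]), level)
            w[j, i] = w[i, j]
    mean_w = w.sum(axis=1) / (n - 1)  # the diagonal is excluded from the mean
    return int(np.argmin(mean_w)), mean_w
```

The pairwise loop costs n(n − 1)/2 correlations, which is manageable for stacks of a few tens of frames.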

Another problem that affected the image quality was the focus variations across a dataset. As AO is a dynamic process, and the eye accommodation might not always be paralyzed during imaging, the image sharpness varies among images in a dataset acquired in a clinic. Automatic selection of the sharpest images to be averaged was desirable, and, certainly, the template for alignment should be among the sharpest images in the stack. Brenner gradient (BG) [29,30] was used here as a figure of merit to select the sharpest images and to ensure that the reference frame selected based on W is among the sharpest images in the set. The threshold (thr_w) for W was set as the mean plus one standard deviation (std_w) for the W values obtained by cross-correlating the reference frame with the entire stack, and the frames with W > thr_w were considered distorted. Additionally, frames with BGs smaller than 75% of the reference frame’s BG were considered too blurred. The combination of distorted and blurred constituted the invalid frames to be eliminated from the alignment and average process.
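
The two rejection criteria can be written compactly. The following Python/NumPy sketch is illustrative only: it uses the common horizontal two-pixel form of the Brenner gradient, and it assumes that the reference frame's own entry is left out of the mean and standard deviation of W, a detail the text does not specify. Function and parameter names are hypothetical.

```python
import numpy as np

def brenner_gradient(image):
    """Brenner focus measure: sum of squared differences two pixels apart."""
    img = np.asarray(image, dtype=float)
    diff = img[:, 2:] - img[:, :-2]
    return float(np.sum(diff ** 2))

def select_valid_frames(w_to_ref, bg, ref_index, bg_fraction=0.75):
    """Flag frames to keep for alignment and averaging.

    w_to_ref[i]: peak width W between frame i and the reference frame.
    bg[i]:       Brenner gradient of frame i.
    Returns a boolean mask of valid frames and the threshold thr_w.
    """
    w_to_ref = np.asarray(w_to_ref, dtype=float)
    bg = np.asarray(bg, dtype=float)
    others = np.arange(len(bg)) != ref_index  # assumption: reference excluded
    thr_w = w_to_ref[others].mean() + w_to_ref[others].std()
    distorted = w_to_ref > thr_w
    blurred = bg < bg_fraction * bg[ref_index]
    valid = ~(distorted | blurred)
    valid[ref_index] = True  # the reference frame is always kept
    return valid, thr_w
```

Because thr_w is derived from the stack itself, at least the broadest-peak frames are typically rejected even in a very stable dataset, which is the caveat discussed next.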

The purpose up to this point was to eliminate the most distorted frames and keep the most similar images to be aligned and averaged. One caveat was that the threshold criteria described above would sometimes eliminate more images than needed. For example, for a stable eye with few motion artifacts, most images were quite similar, and the standard deviations were quite small. Therefore, images with only small distortions would still be eliminated since their metrics fell more than one standard deviation away from the mean of the set. We preferred to err on the side of eliminating more images than needed to ensure that the algorithm worked unassisted on a large range of real patient data and not only on the best fixators. In any event, the best quality image sequences needed relatively few images to produce high-quality averages.

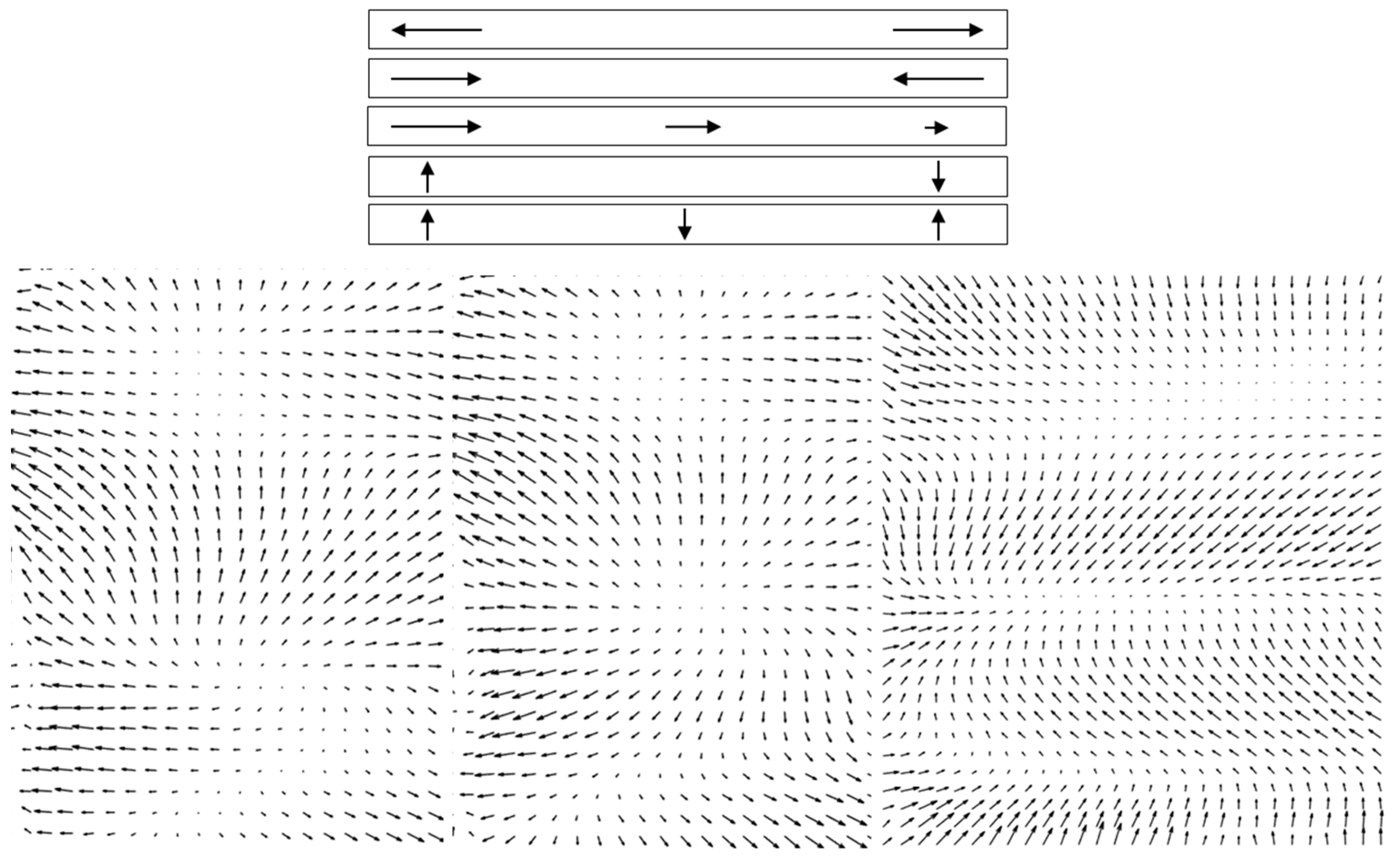

2.5. Non-Rigid Registration

The images in a stack that needed to be registered were generally acquired several tens of milliseconds to several seconds apart. As intra-frame distortions were evident, it was reasonable to expect inter-frame distortions as well. Strip-based registration could not account for deformations of the type illustrated schematically in the top of Figure 3. Stretch, contraction, or gradient along the strip; rotation and bend of the strip; and combinations of these deformations could occur. To compensate for these local deformations, we applied a non-rigid image registration technique [31] that improved the quality of the processed images for mapping cones. By using local grid deformations, non-rigid registration accounted for stretching and compressing in different directions in different parts of the image from frame to frame. The method was based on polynomial expansion to approximate the local signal, in which the coefficients were obtained through a weighted least-squares fit performed iteratively. The deformation field was accumulated at each iteration, and an example is shown in Video S2. The difference between the reference frame and a new image is shown in the right panel of Video S2, illustrating the cone displacement as black and white patterns, like shadows whose orientations indicate the directions of relative motion. Perfect overlap of a pixel between frames shows as gray. As the new image is subtracted from the reference frame, the black spots illustrate the positions of cones in the new image moving towards the white spots that illustrate the positions of the cones in the reference frame. The accumulated displacements over all iterations for three pairs of images are illustrated as quiver/velocity plots (MATLAB) in the bottom panel of Figure 3. These examples clearly demonstrate the need for local correction of the deformations that occur between a reference image and the subsequent images.
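
To make the notion of a deformation field concrete, the sketch below applies a dense per-pixel displacement field to a frame by bilinear resampling in Python with NumPy. It does not estimate the field; the polynomial-expansion estimation of [31] is not reproduced here. The sign convention (the output pixel at (y, x) is read from the input at (y + dy, x + dx)) and the clamping at the edges are assumptions made for this illustration.

```python
import numpy as np

def warp_image(image, dy, dx):
    """Resample `image` at (y + dy, x + dx) using bilinear interpolation.

    dy and dx are per-pixel displacement maps (same shape as the image) or
    scalars. Sample positions outside the frame are clamped to the border.
    """
    rows, cols = image.shape
    yy, xx = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    y = np.clip(yy + dy, 0, rows - 1)
    x = np.clip(xx + dx, 0, cols - 1)
    # Top-left neighbor of each sample position, kept one pixel inside the edge
    y0 = np.clip(np.floor(y).astype(int), 0, rows - 2)
    x0 = np.clip(np.floor(x).astype(int), 0, cols - 2)
    wy = y - y0
    wx = x - x0
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x0 + 1]
    bottom = (1 - wx) * image[y0 + 1, x0] + wx * image[y0 + 1, x0 + 1]
    return (1 - wy) * top + wy * bottom
```

With such a helper, a difference image of the kind shown in Video S2 would be `reference - warp_image(new_frame, dy, dx)`, with values near zero (gray) wherever the warped frame overlaps the reference.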

Figure 4 shows two examples of the deformation field (left) and the difference image between the reference frame and the new frame for rigid (center, DR) vs. non-rigid (right, DNR) registration. The associated media (Videos S3 and S4) illustrate a large range of local deformations, some of which could have been corrected with a strip-based registration algorithm. However, many frames included deformation types (as illustrated in Figure 3) that required non-rigid registration. The center image (difference between the reference frame and a new image) shows cone displacements in different directions, whereas in the right image (difference between the reference frame and the non-rigidly deformed new image) the cones are better aligned and the difference image is much more uniform. For the rigid registration (center), simple 2D cross-correlation was used to determine the lateral shift between the images, whereas the non-rigid registration was applied in the overlap region following the rigid alignment of the new image. Both examples in Figure 4 illustrate the improvement in uniformity across the whole frame provided by non-rigid registration.

The non-rigid registration might fail in some parts of the image. Sometimes, the DR image shows a black spot (new frame) in between two white spots (reference frame), mainly at the edge of the overlap region. The algorithm then cannot resolve in which direction to move the black spot and fails to properly register the new frame. In addition, the registration fails if the local displacement is too large. In these regions where registration fails, the image difference (DNR) has large values, whereas the image difference has small values in regions where the registration is good. These differences can be estimated either with a local standard deviation filter or a simple rectification filter defined as a local mean of the absolute value of the difference image (A = abs(DNR)). The second option is significantly faster in MATLAB than the first one. One can set a threshold for how good the registration is as mean(A) + std(A) and filter out the regions that do not register well (the examples in Figure 4 are shown without such filtering). The expectation is that each region of the reference frame will have enough frames that will average well to provide the image quality improvement.
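
The rectification filter and the resulting region-wise averaging can be sketched as follows in Python with NumPy. The window size, the reflection padding at the frame edges, and the choice to average only the pixels flagged as well registered are assumptions made for this example; the function names are hypothetical.

```python
import numpy as np

def local_mean(image, size):
    """Mean over a size x size window (odd size), computed with an integral image."""
    pad = size // 2
    padded = np.pad(np.asarray(image, dtype=float), pad, mode="reflect")
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    total = (integral[size:, size:] - integral[:-size, size:]
             - integral[size:, :-size] + integral[:-size, :-size])
    return total / (size * size)

def registration_mask(dnr, size=9):
    """True where the rectified difference A stays below mean(A) + std(A)."""
    a = local_mean(np.abs(dnr), size)
    return a <= a.mean() + a.std()

def masked_average(frames, masks):
    """Average registered frames using only well-registered pixels."""
    frames = np.asarray(frames, dtype=float)
    masks = np.asarray(masks, dtype=bool)
    counts = masks.sum(axis=0)
    total = (frames * masks).sum(axis=0)
    # Pixels with no valid contribution are left at zero
    return np.divide(total, counts, out=np.zeros_like(total), where=counts > 0)
```

The `counts` map also indicates how many frames contributed to each region of the average, which can be used to check that every region is supported by enough frames.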

3. Results

Figure 5 shows the result of non-rigid registration of 32 frames selected from a stack of 64 frames, as described above, capturing a 1.5 × 1.5° field. The image with the best overall W in the stack was selected as the reference frame (Figure 5A). All other images were registered to this frame. Figure 5B shows the filtered reference frame. Figure 5C is the average image obtained using rigid registration only, and Figure 5D is the average image after non-rigid registration. Compared to the reference frame, rigid registration produces a blurred image, whereas non-rigid registration generates an image with significantly improved cone definition.

Figure 6 shows a magnified version of the four areas delineated in Figure 5 by the four white squares. The center column of Figure 6 shows the rigid registered average, the right column is the non-rigid registered average, whereas the left column shows the corresponding area in the reference frame (areas 1 to 4 shown top to bottom). Cones that are not visible in the reference frame are nicely defined and can be easily counted and mapped in the non-rigid average image.

The cone mosaic is much more distinguishable; the cones are rounder, easier to identify and count, and even foveal cones become identifiable. A cone-counting algorithm has a much better chance to identify and count these cones compared to analyzing individual images. However, it should be noted here that non-rigid registration does not correct errors in the AO corrections. Dewarping artifacts are still visible in the left column of Figure 6.

The averaging results for the data shown in Figure 4 are illustrated in Figure 7. The top row (B1) in Figure 7 corresponds to the top data in Figure 4, whereas the center row (B2) in Figure 7 illustrates the results for the bottom data in Figure 4. The bottom row (C) in Figure 7 is from a different scan for the same volunteer analyzed in Figure 5 and Figure 6. The left column in Figure 7 shows the reference frame, the center column is the rigid registration average, and the right column is the non-rigid registration average image. The definition of the cones is clearly improved, and cones that are not visible in the reference frame become visible and nicely defined in the final non-rigid registration average image. The rigid registration average exhibits significant blurring. Different scans are affected differently by motion artifacts, and the final rigid registration average exhibits different levels of blurriness. Subjectively, in the center column of Figure 7, the top image shows less degradation as compared to the center image, whereas the bottom image is definitely unusable. However, in all cases, non-rigid registration provides a clear improvement in cone definition and mosaic illustration.

4. Discussion

Stable eyes with relatively few small distortions in the acquired images are ideal; however, the reality in the clinic is different. Real data exhibit blinks, saccades, drifts, losses of fixation, focus changes, intensity variations among or within frames, or combinations of these effects. Repeating the scan is not always an option and does not always solve the problem; therefore, attempts need to be made to extract useful information from the available data. One of the most challenging tasks is to automatically identify and remove distorted frames.

There are many reasons for the complex deformations discussed here. A spectrum of eye motions from saccades to drifts and fixation losses contribute to both intra-frame and inter-frame distortions. There are also opto-mechanical influences due to the non-rigid construction of the eye, periodically pumped with blood in pressurized vessels and pulled by a set of powerful muscles that can induce dynamics in the globe and local distortions in the photoreceptor layer. Electronic noise, trigger noise, and galvanometer instabilities add local deformations in the recorded images. The images of the cone mosaic become distorted and need to be corrected to achieve optimal registration. The algorithm described here was designed to work automatically and unassisted on a large set of patient data. Distorted frames are automatically removed from the data set, using parameters (thresholds) calculated based on the dataset using means and standard deviations. A reference frame is selected automatically based on its similarity to the other frames and the image’s sharpness; all other frames are registered to it. Rigid registration through cross-correlation provides the initial alignment of the main features. Non-rigid registration then corrects the local deformations of the images to provide proper registration of the microscopic features like cone photoreceptors or capillaries. The final blending of the registered stack of images shows clear improvement over rigid registration, also correcting for a large range of distortions not accounted for in strip-based registration algorithms. Cones not visible in individual images or blurred with rigid registration become clearly visible with non-rigid registration and have a better chance to be counted and mapped by automatic cone-counting algorithms.

The process runs unsupervised on any number of scans acquired in the clinic on a wide range of healthy or diseased eyes. Some of the thresholds (the 90% peak height for W, or 75% for BG) were set empirically based on the algorithm’s performance on a large number of clinically relevant scans, whereas other thresholds were set based on means and standard deviations within the processed stack’s images. The automatic selection of the most similar images, elimination of the most distorted ones, as well as automatic selection of the reference frame enable efficient processing of multiple scans for a complete analysis of an imaging session. An additional routine has been developed that allows an expert grader to manually select the desired frames. This feature is valuable if the subject switched fixation between two relatively stable fixation positions during the acquisition of an image stack, and the algorithm selected one reference frame for the location with the most similar frames. The other location might also have valuable retinal information, and the grader has the option to manually select and average those frames.

The images shown here illustrated non-rigid registration improvements in photoreceptor imaging. Equally important, the method has been applied to offset aperture/split detection images [32] that visualized capillary networks and the cellular details of blood vessel walls. Improved definition of cellular details provided by non-rigid registration enabled segmentation of these structures and quantification of characteristic geometrical parameters. The lumen diameters and the wall thicknesses of blood vessels could be evaluated, and local malformations such as thinning or thickening of the vessel wall could be measured and monitored longitudinally. Local wall thinning could be an indication of potential wall rupture with damaging effects on vision. The wall-to-lumen ratio has diagnostic value for diseases that have vascular consequences and has been investigated for a long time in retinal imaging, however, without the cellular resolution afforded by AO.

The automatic co-registration of the SLO and OCT images provides an additional advantage for image registration: the two images are orthogonal to each other and, therefore, 3D registration could be possible for the SLO and OCT images, a feature that is missing in AO-SLO- or AO-OCT-only imagers. To obtain 3D OCT rasters, the image pair SLO/OCT was scanned laterally (perpendicular to the B-scan), and there was significant overlap between consecutive SLO images. Alignment of the SLO images could provide the necessary correction for proper en face placement of the OCT images, whereas the axial alignment of the OCT images could offer the correction for axial motion that could be used for the analysis of the SLO stack. While the SLO alignment was not used here for OCT correction, and the OCT information was not used for SLO alignment in this paper, the simultaneous acquisition of the OCT and SLO images represents a unique feature of MAORI that may enable cross-modal 3D registration.

5. Conclusions

Simple cross-correlation provides the necessary shift for rigid registration of high-resolution retinal images. However, that is not sufficient for averaging the images shown here. Images were aligned and averaged using rigid registration, and the result was a blurred image. In the past, we have used strip-based registration [7] to improve the final average image, as compared to simple full-frame rigid registration. However, we soon came to realize that strip-based registration did not work satisfactorily over a wide range of real patient data for the reasons explained in this paper. Therefore, a new type of correction for high-resolution retinal images based on non-rigid registration that accounted for local distortions in the recorded images was introduced here. Distortions included local image stretching, compressing, bending, and twisting due to a number of causes. The final average image showed significant improvement in the definition of cone photoreceptors, as compared to individual images and rigid registration performed by simple cross-correlation.

The non-rigid image registration procedure described here provided improved definition of retinal microstructures that were visible in the high-resolution retinal images, such as cones, rods, capillary networks, aneurysms, microglia, macrophages, hyalocytes, mural cells, endothelial cells, pericytes, ganglion cells, lipid deposits, scar tissues, exudates, and thrombi. The average image obtained with a non-rigid registration of a stack of images could be properly segmented to enable the quantification of geometrical characteristics such as thickness, diameter, volume, or density that have diagnostic value in many retinal diseases.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/diagnostics13132285/s1, Video S1: Image stack; Video S2: Nonrigid registration iterations; Video S3: Deformation field, rigid/nonrigid registration; Video S4: Deformation field, rigid/nonrigid registration.

Author Contributions

Conceptualization, M.M.; methodology, M.M.; software, M.M.; validation, J.D.A.; formal analysis, M.M.; investigation, M.M., J.D.A. and R.D.F.; resources, A.B.F.; data curation, M.M.; writing—original draft preparation, M.M.; writing—review and editing, J.D.A., R.D.F., A.B.F. and N.I.; visualization, M.M.; supervision, A.B.F. and N.I.; project administration, A.B.F. and N.I. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Eye Institute (NEI), Awards R44EY018509 and R01EY010597, and Department of Defense (DOD), Award W81XWH-14-1-0592.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (Committee on Clinical Investigation) of Boston Children’s Hospital (protocol code 10950, 15 July 2014).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

The authors acknowledge Ankit H. Patel and Gopi Maguluri for the support in developing and maintaining the control software of the retinal imaging systems.

Conflicts of Interest

MM, RDF, and NI: Physical Sciences Inc. (PSI) (E, P). JDA and ABF: no conflicts of interest.

References

1. Dreher, A.W.; Bille, J.F.; Weinreb, R.N. Active Optical Depth Resolution Improvement of the Laser Tomographic Scanner. Appl. Opt. 1989, 28, 804–808.

2. Liang, J.Z.; Williams, D.R.; Miller, D.T. Supernormal vision and high-resolution retinal imaging through adaptive optics. J. Opt. Soc. Am. A 1997, 14, 2884–2892.

3. Hofer, H.; Chen, L.; Yoon, G.Y.; Singer, B.; Yamauchi, Y.; Williams, D.R. Improvement in retinal image quality with dynamic correction of the eye’s aberrations. Opt. Express 2001, 8, 631–643.

4. Roorda, A.; Romero-Borja, F.; Donnelly, W.J.; Queener, H.; Hebert, T.J.; Campbell, M.C.W. Adaptive optics scanning laser ophthalmoscopy. Opt. Express 2002, 10, 405–412.

5. Hammer, D.X.; Ferguson, R.D.; Mujat, M.; Patel, A.; Plumb, E.; Iftimia, N.; Chui, T.Y.P.; Akula, J.D.; Fulton, A.B. Multimodal adaptive optics retinal imager: Design and performance. J. Opt. Soc. Am. A 2012, 29, 2598–2607.

6. Mujat, M.; Ferguson, R.D.; Patel, A.H.; Iftimia, N.; Lue, N.; Hammer, D.X. High resolution multimodal clinical ophthalmic imaging system. Opt. Express 2010, 18, 11607–11621.

7. Mujat, M.; Ferguson, R.D.; Iftimia, N.; Hammer, D.X. Compact adaptive optics line scanning ophthalmoscope. Opt. Express 2009, 17, 10242–10258.

8. Hammer, D.X.; Ferguson, R.D.; Magill, J.C.; White, M.A.; Elsner, A.E.; Webb, R.H. Image stabilization for scanning laser ophthalmoscopy. Opt. Express 2002, 10, 1542–1549.

9. Ferguson, R.D.; Hammer, D.X.; Burns, S.A.; Elsner, A.E. Retinal hemodynamic imaging with the TSLO. Investig. Ophthalmol. Vis. Sci. 2004, 45, 1137.

10. Ferguson, R.D.; Hammer, D.X.; Elsner, A.E.; Webb, R.H.; Burns, S.A. Wide-field retinal hemodynamic imaging with the tracking scanning laser ophthalmoscope. Opt. Express 2004, 12, 5198–5208.

11. Hammer, D.X.; Ferguson, R.D.; Bigelow, C.E.; Iftimia, N.V.; Ustun, T.E.; Burns, S.A. Adaptive optics scanning laser ophthalmoscope for stabilized retinal imaging. Opt. Express 2006, 14, 3354–3367.

12. Burns, S.A.; Tumbar, R.; Elsner, A.E.; Ferguson, D.; Hammer, D.X. Large-field-of-view, modular, stabilized, adaptive-optics-based scanning laser ophthalmoscope. J. Opt. Soc. Am. A 2007, 24, 1313–1326.

13. Ferguson, R.D.; Zhong, Z.; Hammer, D.X.; Mujat, M.; Patel, A.H.; Deng, Z.; Zou, W.; Burns, S.A. Adaptive optics scanning laser ophthalmoscope with integrated wide-field retinal imaging and tracking. J. Opt. Soc. Am. A 2010, 27, A265–A277.

14. Stevenson, S.B.; Roorda, A. Correcting for miniature eye movements in high resolution scanning Laser Ophthalmoscopy. In Ophthalmic Technologies XV; SPIE: Bellingham, WA, USA, 2005; Volume 5688, pp. 145–151.

15. Vogel, C.R.; Arathorn, D.W.; Roorda, A.; Parker, A. Retinal motion estimation in adaptive optics scanning laser ophthalmoscopy. Opt. Express 2006, 14, 487–497.

16. Sheehy, C.K.; Yang, Q.; Arathorn, D.W.; Tiruveedhula, P.; de Boer, J.F.; Roorda, A. High-speed, image-based eye tracking with a scanning laser ophthalmoscope. Biomed. Opt. Express 2012, 3, 2611–2622.

17. Mujat, M.; Patel, A.; Iftimia, N.; Akula, J.D.; Fulton, A.B.; Ferguson, R.D. High-resolution retinal imaging: Enhancement techniques. In Ophthalmic Technologies XXV; SPIE: Bellingham, WA, USA, 2015; Volume 9307, p. 930703.

18. Holden, M. A review of geometric transformations for nonrigid body registration. IEEE Trans. Med. Imag. 2008, 27, 111.

19. Oliveira, F.P.M.; Tavares, J.M.R.S. Medical image registration: A review. Comput. Methods Biomech. Biomed. Eng. 2014, 17, 73–93.

20. Gong, L.; Zhang, C.; Duan, L.; Du, X.; Liu, H.; Chen, X.; Zheng, J. Non-rigid image registration using spatially region-weighted correlation ratio and GPU-acceleration. IEEE J. Biomed. Health Inform. 2018, 23, 766–778.

21. Clatz, O.; Delingette, H.; Talos, I.; Golby, A.J.; Kikinis, R.; Jolesz, F.A.; Ayache, N.; Warfield, S.K. Robust nonrigid registration to capture brain shift from intraoperative MRI. IEEE Trans. Med. Imag. 2005, 24, 1417–1427.

22. Crum, W.R.; Hartkens, T.; Hill, D.L.G. Non-rigid image registration: Theory and practice. Br. J. Radiol. 2004, 77 (Suppl. 2), S140–S153.

23. Keszei, A.P.; Berkels, B.; Deserno, T.M. Survey of Non-Rigid Registration Tools in Medicine. J. Digit. Imag. 2017, 30, 102–116.

24. Hendargo, H.C.; Estrada, R.; Chiu, S.J.; Tomasi, C.; Farsiu, S.; Izatt, J.A. Automated non-rigid registration and mosaicing for robust imaging of distinct retinal capillary beds using speckle variance optical coherence tomography. Biomed. Opt. Express 2013, 4, 803–821.

25. Uji, A.; Ooto, S.; Hangai, M.; Arichika, S.; Yoshimura, N. Image Quality Improvement in Adaptive Optics Scanning Laser Ophthalmoscopy Assisted Capillary Visualization Using B-spline-based Elastic Image Registration. PLoS ONE 2013, 8, e80106.

26. Tweed, D.G. Resonant Scanner Linearization Techniques. Opt. Eng. 1985, 24, 1018–1022.

27. Haji-Saeed, B.; Khoury, J.; Woods, C.L.; Pyburn, D.; Sengupta, S.K.; Kierstead, J. Mapping approach for image correction and processing for bidirectional resonant scanners. Opt. Eng. 2007, 46, 027007.

28. Yang, Q.; Yin, L.; Nozato, K.; Zhang, J.; Saito, K.; Merigan, W.H.; Williams, D.R.; Rossi, E.A. Calibration-free sinusoidal rectification and uniform retinal irradiance in scanning light ophthalmoscopy. Opt. Lett. 2015, 40, 85–88.

29. Brenner, J.F.; Dew, B.S.; Horton, J.B.; King, T.; Neurath, P.W.; Selles, W.D. An automated microscope for cytologic research a preliminary evaluation. J. Histochem. Cytochem. 1976, 24, 100–111.

30. Yazdanfar, S.; Kenny, K.B.; Tasimi, K.; Corwin, A.D.; Dixon, E.L.; Filkins, R.J. Simple and robust image-based autofocusing for digital microscopy. Opt. Express 2008, 16, 8670–8677.

31. Forsberg, D.; Andersson, M.; Knutsson, H. Non-rigid diffeomorphic image registration of medical images using polynomial expansion. In Image Analysis and Recognition; Springer: Berlin/Heidelberg, Germany, 2012; pp. 304–312.

32. Mujat, M.; Sampani, K.; Patel, A.H.; Sun, J.K.; Iftimia, N. Cellular-level analysis of retinal blood vessel walls based on phase gradient images. Diagnostics 2023, in press.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).