Automated Glaucoma Screening and Diagnosis Based on Retinal Fundus Images Using Deep Learning Approaches: A Comprehensive Review

Abstract

1. Introduction

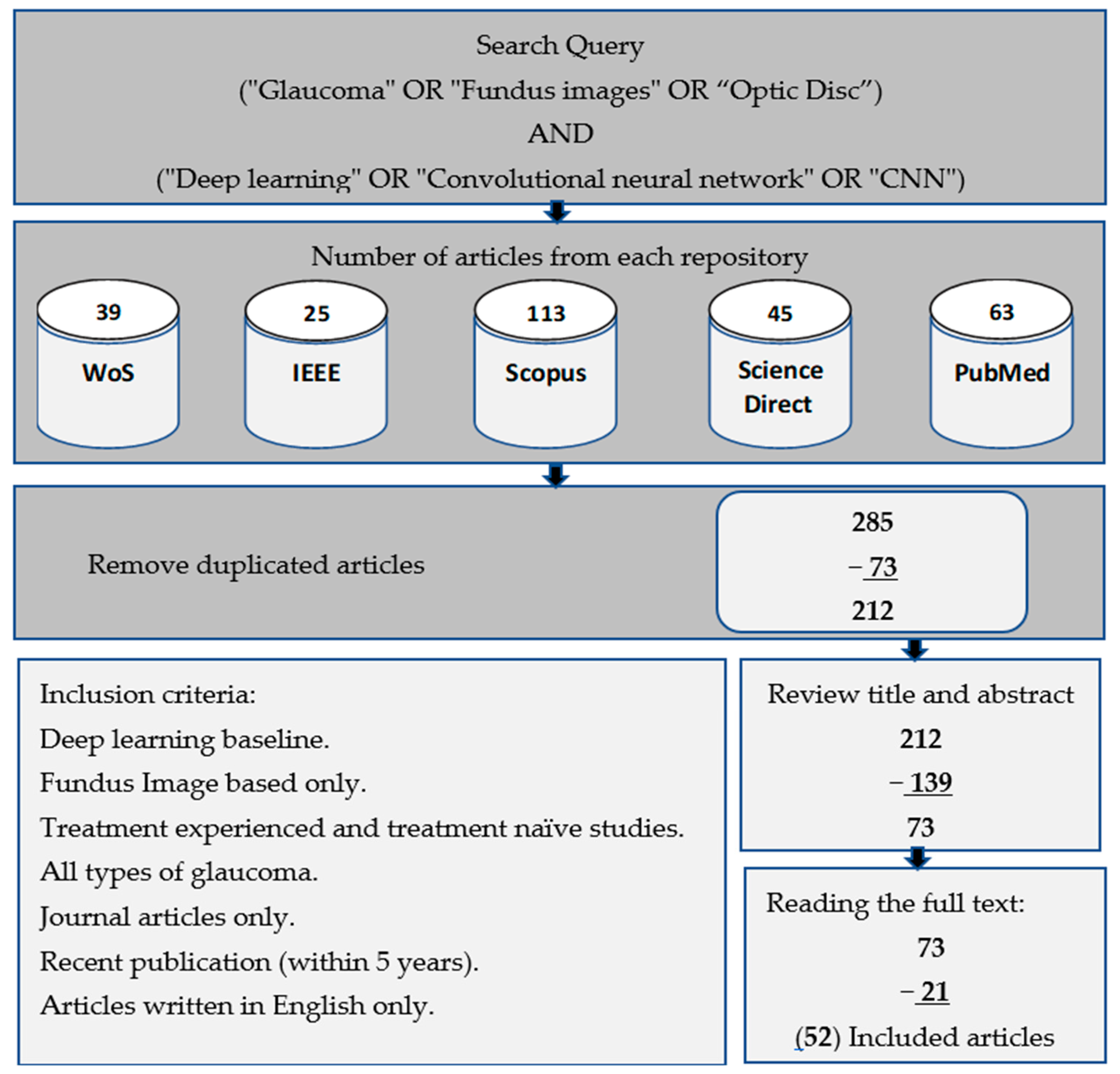

1.1. Information Sources

1.2. Study Selection Procedures

1.3. Search Mechanism

1.4. Paper Organization

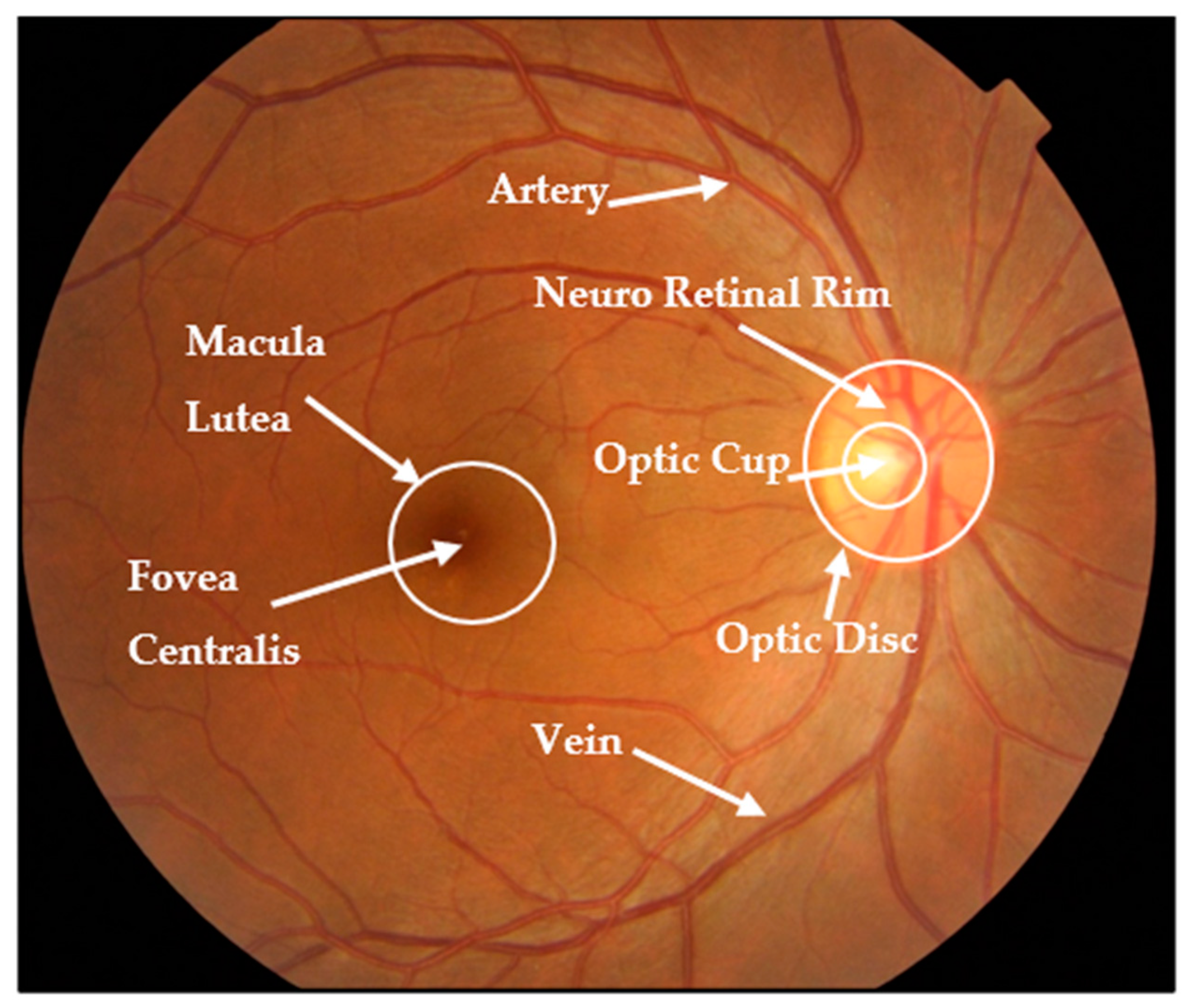

2. Glaucoma Overview: Types, Factors, and Datasets

2.1. Types of Glaucoma

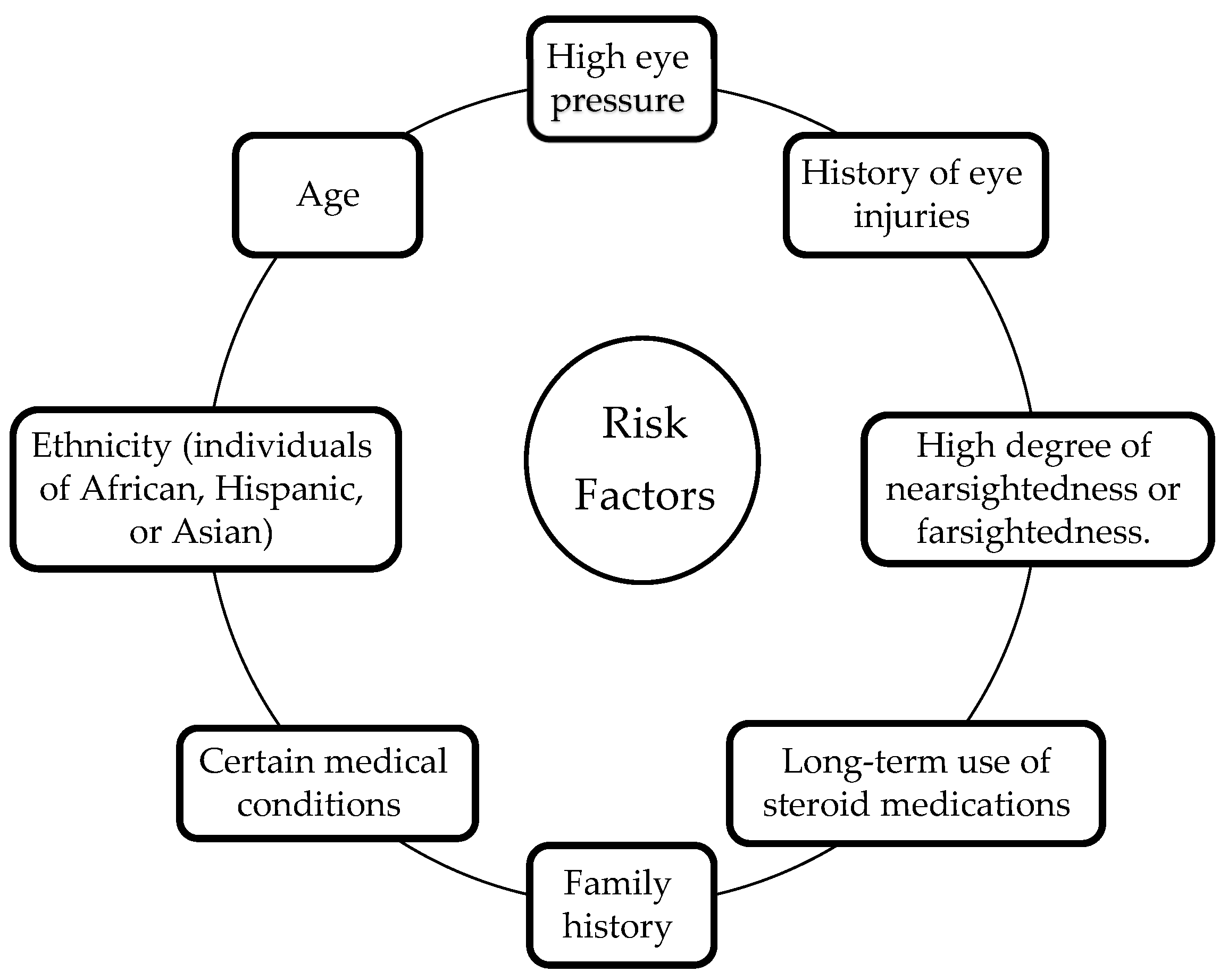

2.2. Risk Factors for Glaucoma

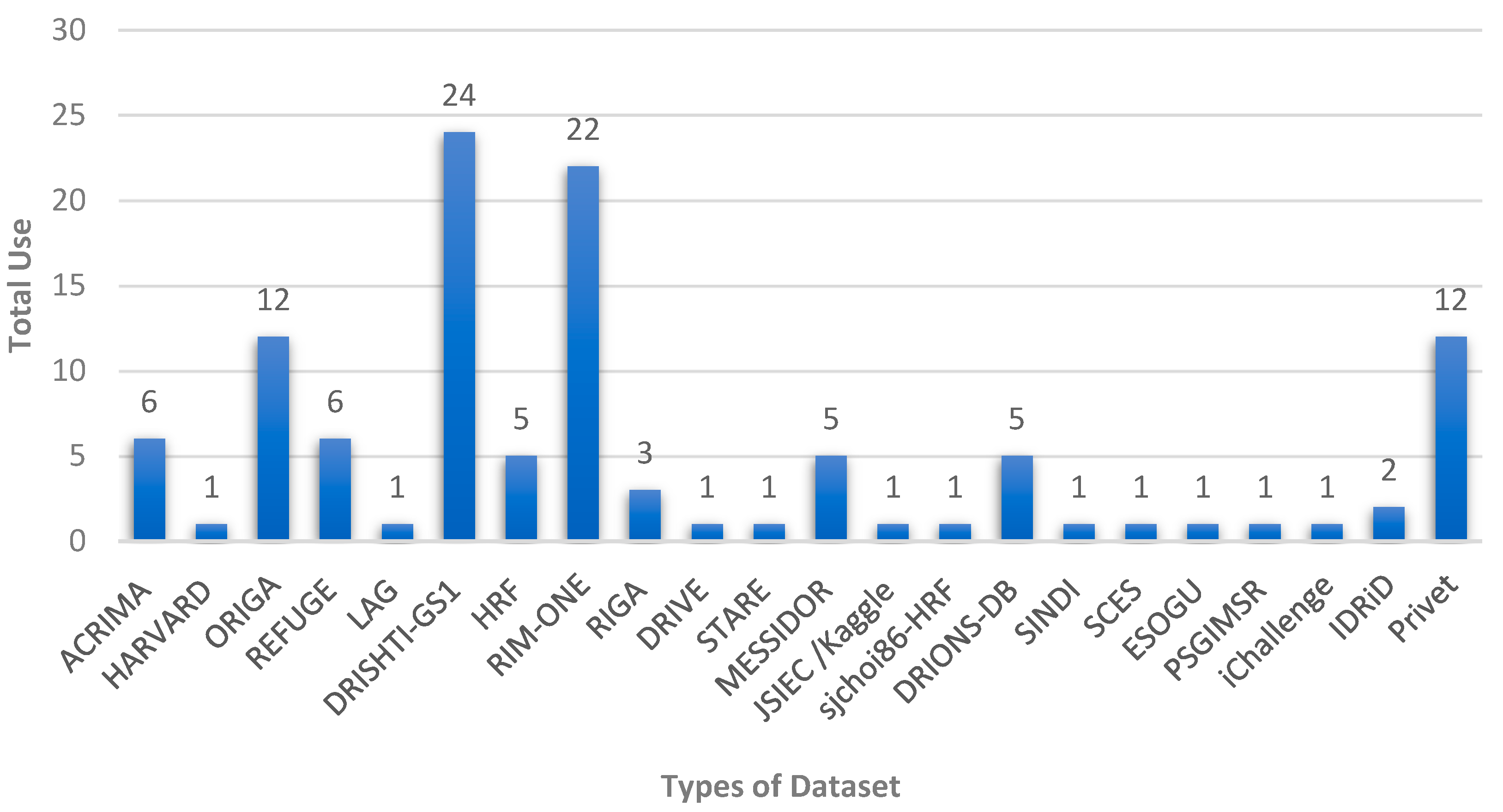

2.3. Retinal Fundus Image Datasets

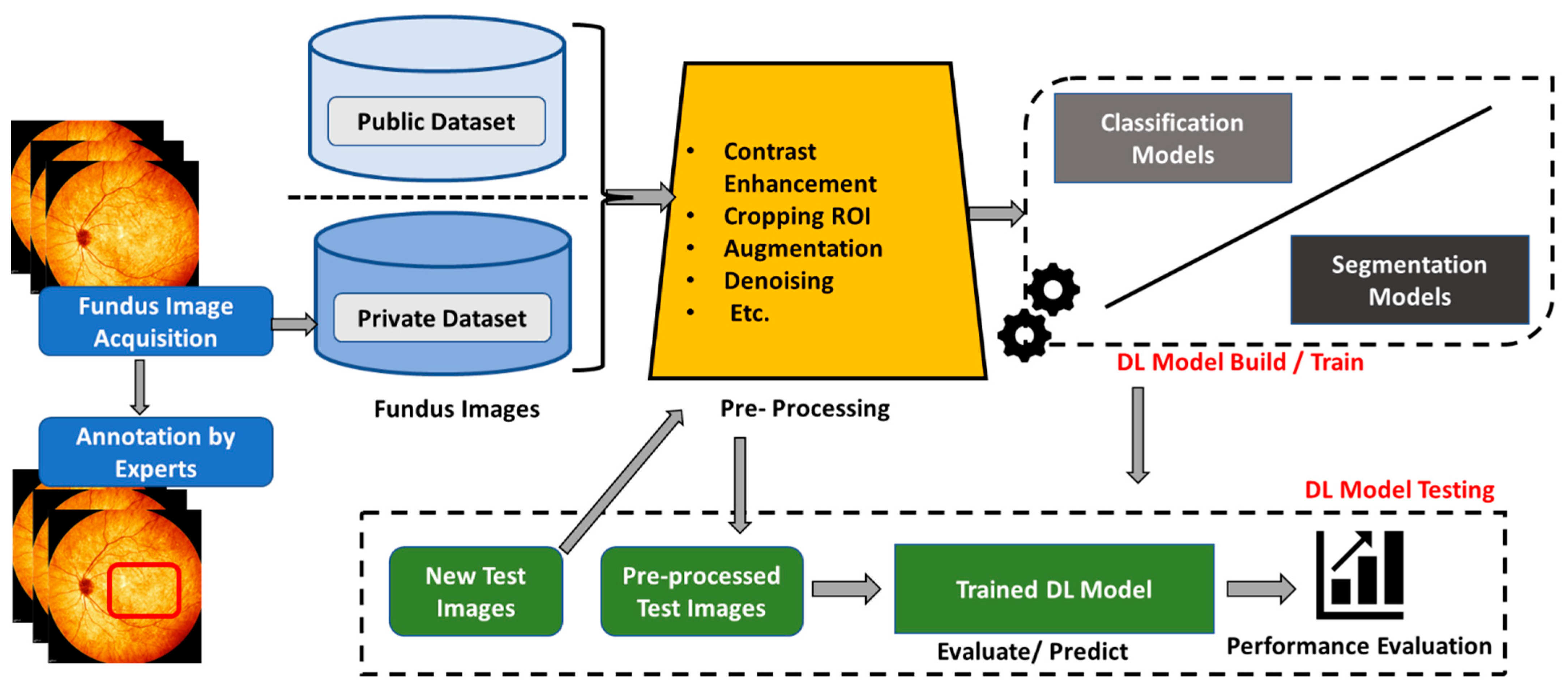

3. Feature Enhancement and Evaluation

3.1. Pre-Processing Techniques

3.2. Optic Nerve Head Localization

- Thresholding: A boundary intensity value is chosen to separate the optic disc and optic cup from the surrounding retina, exploiting the fact that these structures are usually brighter than the background;

- Edge detection: The boundaries of the optic disc and optic cup are detected from abrupt changes in pixel values, using operators such as the Sobel operator or the Canny edge detector;

- Template matching: The optic disc or optic cup is located by searching the image for the region that best matches a binary template of its shape;

- Machine learning/deep learning: A model is trained to identify the optic disc and optic cup in a fundus image, either from predefined features such as texture, size, and shape (classical machine learning) or from features learned directly from the pixels (deep learning).
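The thresholding strategy can be illustrated with a minimal sketch that estimates the optic disc position as the centroid of the brightest pixels in a single-channel fundus image. This is not the method of any reviewed study. The function name `locate_optic_disc_by_threshold` and the 99th-percentile cut-off are hypothetical choices, and the approach assumes that the optic disc is the brightest region, which fails when bright lesions or illumination artefacts are present.

```python
import numpy as np


def locate_optic_disc_by_threshold(image, percentile=99.0):
    """Estimate the optic disc centre from the brightest pixels.

    Assumes `image` is a 2-D single-channel fundus image (for example the red
    or green channel) in which the optic disc is the brightest region.
    `percentile` is a hypothetical, untuned cut-off.
    Returns ((row, col), mask), where `mask` marks the thresholded pixels.
    """
    img = np.asarray(image, dtype=np.float64)
    # The boundary value is taken from the image's own intensity distribution.
    threshold = np.percentile(img, percentile)
    mask = img >= threshold  # never empty: the maximum pixel always passes
    rows, cols = np.nonzero(mask)
    return (float(rows.mean()), float(cols.mean())), mask


# Usage on a synthetic image: dim background plus one bright disc of radius 8.
yy, xx = np.mgrid[0:100, 0:100]
demo = np.full((100, 100), 0.2)
demo[(yy - 30) ** 2 + (xx - 70) ** 2 <= 64] = 1.0
center, _ = locate_optic_disc_by_threshold(demo)
print(center)  # (30.0, 70.0)
```

A fixed percentile adapts the boundary to each image's brightness, which is why it is used here instead of a global constant. Real pipelines usually add smoothing or vessel removal before thresholding.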

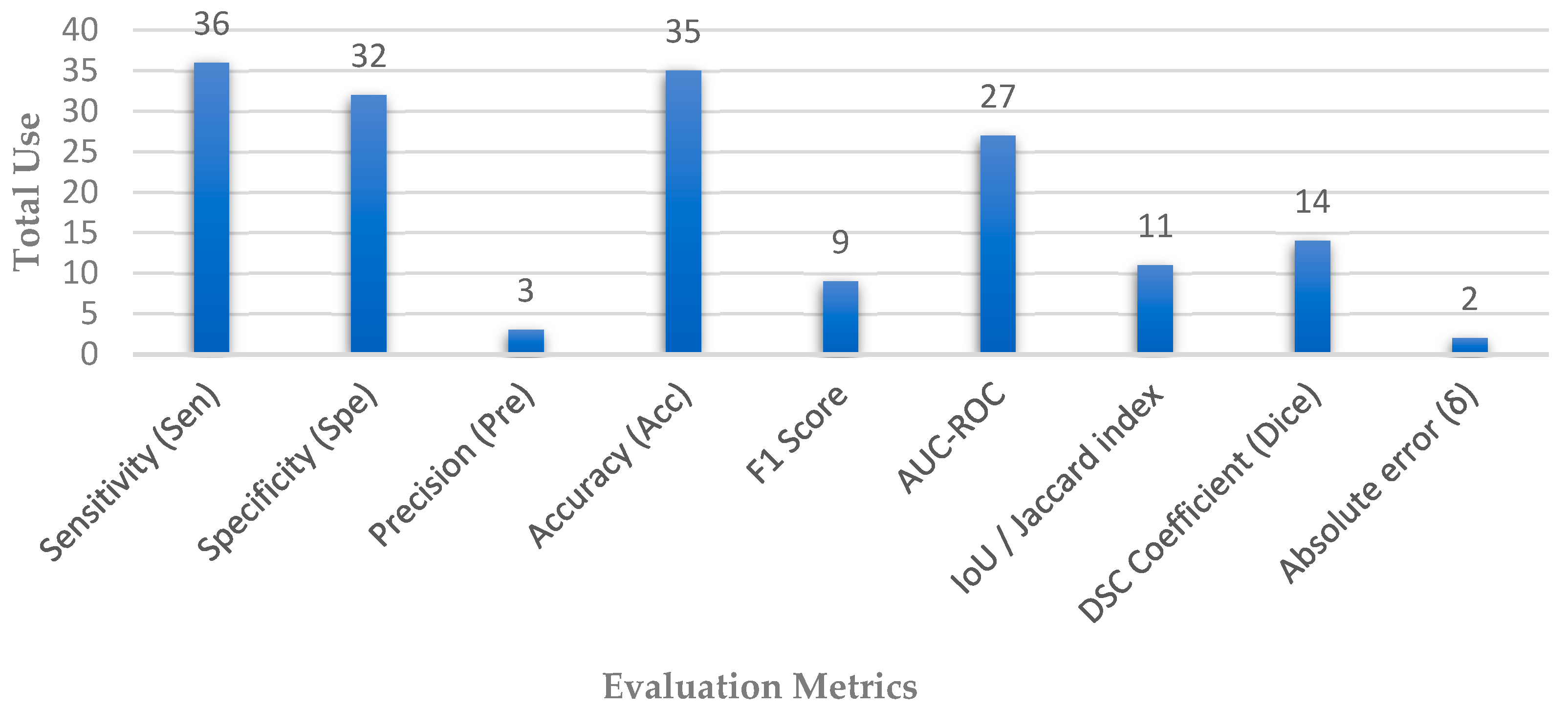
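Template matching can be sketched in a similar way, using a circular binary template and zero-mean normalized cross-correlation (NCC). The helper names `disc_template` and `match_template_ncc` are hypothetical. The disc radius must be supplied by the caller, which is an assumption, since in practice it depends on image resolution. The brute-force search is written for readability; OpenCV's `cv2.matchTemplate` with `TM_CCOEFF_NORMED` computes the same score far more efficiently.

```python
import numpy as np


def disc_template(radius):
    """Binary circular template approximating the optic disc outline."""
    size = 2 * radius + 1
    yy, xx = np.mgrid[0:size, 0:size]
    return ((yy - radius) ** 2 + (xx - radius) ** 2 <= radius ** 2).astype(np.float64)


def match_template_ncc(image, template):
    """Return the centre (row, col) of the window that best matches `template`
    under zero-mean normalized cross-correlation, plus its score (max 1.0).

    Brute-force search for clarity; flat windows (no variance) are skipped.
    """
    img = np.asarray(image, dtype=np.float64)
    t = template - template.mean()
    t_norm = np.sqrt((t ** 2).sum())
    th, tw = template.shape
    best_score, best_center = -np.inf, None
    for r in range(img.shape[0] - th + 1):
        for c in range(img.shape[1] - tw + 1):
            p = img[r:r + th, c:c + tw]
            p = p - p.mean()
            denom = np.sqrt((p ** 2).sum()) * t_norm
            if denom < 1e-12:  # correlation undefined for a flat window
                continue
            score = float((p * t).sum() / denom)
            if score > best_score:
                best_score, best_center = score, (r + th // 2, c + tw // 2)
    return best_center, best_score


# Usage: a bright disc of radius 6 centred at (20, 35) on a dim background.
yy, xx = np.mgrid[0:60, 0:60]
demo = np.full((60, 60), 0.2)
demo[(yy - 20) ** 2 + (xx - 35) ** 2 <= 36] = 1.0
center, score = match_template_ncc(demo, disc_template(6))
print(center, round(score, 3))  # (20, 35) 1.0
```

Subtracting the mean and normalizing makes the score insensitive to overall brightness and contrast, so the same template can be reused across cameras with different exposure.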

3.3. Performance Metrics

4. Glaucoma Detection

4.1. Glaucoma Diagnosis

| Reference | Dataset | Camera | ACC | SEN | SPE | AUC | F1 |
|---|---|---|---|---|---|---|---|
| Li et al. [26] | Private (LAG) | Topcon, Canon, Zeiss | 0.962 | 0.954 | 0.967 | 0.983 | 0.954 |
| | RIM-ONE | | 0.852 | 0.848 | 0.855 | 0.916 | 0.837 |
| Wang et al. [56] | DRIONS-DB, HRF, RIM-ONE, and DRISHTI-GS1 | - | 0.943 | 0.907 | 0.979 | 0.991 | - |
| Gheisari et al. [57] | Private | Carl Zeiss | - | 0.950 | 0.960 | 0.990 | 0.961 |
| Nayak et al. [58] | Private | Zeiss FF 450 | 0.980 | 0.975 | 0.988 | - | 0.983 |
| Li et al. [59] | Private | Zeiss Visucam 500, Canon CR2 | 0.953 | 0.962 | 0.939 | 0.994 | - |
| Hemelings et al. [60] | Private | Zeiss Visucam | - | 0.980 | 0.910 | 0.995 | - |
| Juneja et al. [61] | DRISHTI-GS and RIM-ONE | - | 0.975 | 0.988 | 0.962 | - | - |
| Liu et al. [62] | Private | Topcon, Canon, Carl Zeiss | - | 0.962 | 0.977 | 0.996 | - |
| Bajwa et al. [63] | ORIGA, HRF, and OCT & CFI | - | - | 0.712 | - | 0.874 | - |
| Kim et al. [64] | Private | - | 0.960 | 0.960 | 1.000 | 0.990 | - |
| Hung et al. [65] | Private | Zeiss Visucam, Canon CR-2AF, and KOWA | 0.910 | 0.860 | 0.940 | 0.980 | 0.860 |
| Cho et al. [66] | Private | Nonmyd7, KOWA | 0.881 | - | - | 0.975 | - |
| Leonardo et al. [67] | ORIGA, DRISHTI-GS, REFUGE, RIM-ONE (r1, r2, r3), and ACRIMA | - | 0.931 | 0.883 | 0.957 | - | - |
| Alghamdi et al. [68] | RIM-ONE and RIGA | - | 0.938 | 0.989 | 0.905 | - | - |
| Devecioglu et al. [40] | ACRIMA | - | 0.945 | 0.945 | 0.924 | - | 0.939 |
| | RIM-ONE | - | 0.753 | 0.682 | 0.827 | - | 0.739 |
| | ESOGU | - | 1.000 | 1.000 | 1.000 | - | 1.000 |
| Juneja et al. [69] | - | - | 0.935 | 0.950 | 0.990 | 0.990 | - |
| De Sales et al. [70] | DRISHTI-GS and RIM-ONEv2 | - | 0.964 | 1.000 | 0.930 | 0.965 | - |
| Joshi et al. [41] | DRISHTI-GS, HRF, DRIONS-DB, and one private dataset (PSGIMSR) | - | 0.890 | 0.813 | 0.955 | - | 0.871 |
| Almansour et al. [71] | RIGA, HRF, Kaggle, ORIGA, and EyePACS, plus one private dataset (KAIMRC) | - | 0.780 | - | - | 0.870 | - |
| Aamir et al. [72] | Private | - | 0.994 | 0.970 | 0.990 | 0.982 | - |
| Liao et al. [74] | ORIGA | - | - | - | - | 0.880 | - |
| Sudhan et al. [75] | ORIGA | - | 0.969 | 0.970 | 0.963 | - | 0.963 |
| Nawaz et al. [76] | ORIGA | - | 0.972 | 0.970 | - | 0.979 | - |
| Diaz-Pinto et al. [77] | ACRIMA, DRISHTI-GS1, sjchoi86-HRF, RIM-ONE, and HRF | - | - | 0.934 | 0.858 | 0.960 | - |
| Serte et al. [78] | HARVARD | - | 0.880 | 0.860 | 0.900 | 0.940 | - |
| Martins et al. [79] | ORIGA, DRISHTI-GS, RIM-ONE-r1, RIM-ONE-r2, RIM-ONE-r3, iChallenge, and RIGA | - | 0.870 | 0.850 | 0.880 | 0.930 | - |
| Natarajan et al. [7] | ACRIMA | - | 0.999 | 1.000 | 0.998 | 1.000 | 0.998 |
| | DRISHTI-GS1 | - | 0.971 | 1.000 | 0.903 | 0.999 | 0.979 |
| | RIM-ONEv1 | - | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |
| | RIM-ONEv2 | - | 0.999 | 0.990 | 0.995 | 0.998 | 0.992 |
| Islam et al. [73] | HRF and ACRIMA | - | 0.990 | 1.000 | 0.978 | 0.989 | - |
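The metrics in the table above follow their usual confusion-matrix definitions. The sketch below computes them from binary predictions, treating glaucoma as the positive class, which is an assumption about label coding. It computes AUC from continuous scores using the Mann-Whitney rank formulation. The function names are hypothetical, and degenerate inputs such as a class with no samples raise `ZeroDivisionError` rather than being handled.

```python
import numpy as np


def classification_metrics(y_true, y_pred):
    """ACC, SEN, SPE, PRE and F1 from binary labels (1 = glaucoma, 0 = normal)."""
    t = np.asarray(y_true).astype(bool)
    p = np.asarray(y_pred).astype(bool)
    tp = int(np.sum(t & p))    # glaucoma correctly detected
    tn = int(np.sum(~t & ~p))  # normal eyes correctly rejected
    fp = int(np.sum(~t & p))   # normal eyes flagged as glaucoma
    fn = int(np.sum(t & ~p))   # glaucoma missed
    sen = tp / (tp + fn)       # sensitivity (recall)
    pre = tp / (tp + fp)       # precision
    return {
        "ACC": (tp + tn) / (tp + tn + fp + fn),
        "SEN": sen,
        "SPE": tn / (tn + fp),
        "PRE": pre,
        "F1": 2 * pre * sen / (pre + sen),
    }


def auc_mann_whitney(y_true, scores):
    """AUC as the probability that a random glaucoma case scores higher than a
    random normal case (ties count one half); equals the area under the ROC curve."""
    t = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=np.float64)
    pos, neg = s[t], s[~t]
    wins = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((wins + 0.5 * ties) / (pos.size * neg.size))


m = classification_metrics([1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
                           [1, 1, 1, 0, 0, 0, 0, 0, 1, 0])
print({k: round(v, 3) for k, v in m.items()})
print(auc_mann_whitney([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```

In this example, 3 true positives, 1 false negative, 1 false positive, and 5 true negatives give ACC = 0.80, SEN = 0.75, SPE ≈ 0.833, and F1 = 0.75. This shows why SEN and SPE are reported separately: the same accuracy can hide very different miss rates on imbalanced screening data.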

4.2. Optic Disc/Optic Cup Segmentation

| Reference | Dataset | OD/OC | ACC | SEN | SPE | PRE | AUC | IoU (Jaccard) | F1 | DSC | δ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Civit-Masot et al. [80] | DRISHTI-GS | OD | 0.880 | 0.910 | 0.860 | - | 0.960 | - | - | 0.930 | - |
| | | OC | - | - | - | - | - | - | - | 0.890 | - |
| | RIM-ONEv3 | OD | - | - | - | - | - | - | - | 0.920 | - |
| | | OC | - | - | - | - | - | - | - | 0.840 | - |
| Pascal et al. [81] | REFUGE | OD | - | - | - | - | 0.967 | - | - | 0.952 | - |
| | | OC | - | - | - | - | - | - | - | 0.875 | - |
| Shanmugam et al. [82] | DRISHTI-GS | OD | 0.990 | 0.870 | 0.920 | - | - | - | - | - | - |
| | | OC | 0.990 | 0.860 | 0.950 | - | - | - | - | - | - |
| Fu et al. [83] | SCES | OD | 0.843 | 0.848 | 0.838 | - | 0.918 | - | - | - | - |
| | SINDI | | 0.750 | 0.788 | 0.712 | - | 0.817 | - | - | - | - |
| | REFUGE | OD | 0.955 | - | - | - | 0.951 | - | - | - | - |
| Sreng et al. [84] | ACRIMA | | 0.995 | - | - | - | 0.999 | - | - | - | - |
| | ORIGA | | 0.90 | - | - | - | 0.92 | - | - | - | - |
| | RIM-ONE | | 0.973 | - | - | - | 1 | - | - | - | - |
| | DRISHTI-GS1 | | 0.868 | - | - | - | 0.916 | - | - | - | - |
| Yu et al. [85] | RIM-ONE | OD | - | - | - | - | - | 0.926 | - | 0.961 | - |
| | | OC | - | - | - | - | - | 0.743 | - | 0.845 | - |
| | DRISHTI-GS1 | OD | - | - | - | - | - | 0.949 | - | 0.974 | - |
| | | OC | - | - | - | - | - | 0.804 | - | 0.888 | - |
| Natarajan et al. [86] | DRIONS | OD/OC | 0.947 | 0.956 | 0.904 | 0.997 | - | - | - | - | - |
| Ganesh et al. [87] | DRISHTI-GS | OD | 0.998 | 0.981 | 0.980 | 0.997 | - | 0.995 | - | - | - |
| Juneja et al. [88] | DRISHTI-GS | OD | 0.959 | - | - | - | - | 0.906 | 0.935 | 0.950 | - |
| | | OC | 0.947 | - | - | - | - | 0.880 | 0.916 | 0.936 | - |
| Veena et al. [89] | DRISHTI-GS | OD | 0.985 | - | - | - | - | 0.932 | 0.954 | 0.987 | - |
| | | OC | 0.973 | - | - | - | - | 0.921 | 0.954 | 0.971 | - |
| Tabassum et al. [90] | DRISHTI-GS | OD | 0.997 | 0.975 | 0.997 | - | 0.969 | 0.918 | - | 0.959 | - |
| | | OC | 0.997 | 0.957 | 0.998 | - | 0.957 | 0.863 | - | 0.924 | - |
| | RIM-ONE | OD | 0.996 | 0.973 | 0.997 | - | 0.987 | 0.910 | - | 0.958 | - |
| | | OC | 0.996 | 0.952 | 0.998 | - | 0.909 | 0.753 | - | 0.862 | - |
| Liu et al. [91] | DRISHTI-GS | OD | - | 0.978 | - | 0.978 | - | 0.957 | - | 0.978 | - |
| | | OC | - | 0.922 | - | 0.915 | - | 0.844 | - | 0.912 | - |
| | REFUGE | OD | - | 0.981 | - | 0.941 | - | 0.924 | - | 0.960 | - |
| | | OC | - | 0.921 | - | 0.875 | - | 0.807 | - | 0.890 | - |
| Nazir et al. [92] | ORIGA | OD | 0.979 | - | - | 0.959 | - | 0.981 | 0.953 | - | - |
| | | OC | 0.951 | - | - | 0.971 | - | 0.963 | 0.970 | - | - |
| Imtiaz et al. [93] | DRISHTI-GS1 | OD | 0.997 | 0.965 | 0.998 | - | 0.996 | - | - | 0.949 | - |
| | | OC | 0.996 | 0.944 | 0.997 | - | 0.957 | - | - | 0.860 | - |
| Wang et al. [94] | MESSIDOR | OD | - | 0.983 | - | - | - | 0.969 | - | 0.984 | - |
| | ORIGA | | - | 0.990 | - | - | - | 0.960 | - | 0.980 | - |
| | REFUGE | | - | 0.965 | - | - | - | 0.942 | - | 0.969 | - |
| Kumar et al. [95] | DRIONS-DB | OD | 0.997 | - | - | - | - | 0.983 | - | - | - |
| | RIM-ONE | | - | - | - | - | - | 0.979 | - | - | - |
| | IDRiD | | - | - | - | - | - | 0.976 | - | - | - |
| Panda et al. [96] | RIM-ONE | OD | - | - | - | - | - | - | - | 0.950 | - |
| | | OC | - | - | - | - | - | - | - | 0.851 | - |
| | ORIGA | OD | - | - | - | - | - | - | - | 0.938 | - |
| | | OC | - | - | - | - | - | - | - | 0.889 | - |
| | DRISHTI-GS1 | OD | - | - | - | - | - | - | - | 0.953 | - |
| | | OC | - | - | - | - | - | - | - | 0.900 | - |
| Fu et al. [97] | DRIVE, Kaggle, MESSIDOR, and NIVE | OD | - | - | - | - | 0.991 | - | - | - | - |
| X. Zhao et al. [98] | DRISHTI-GS | OD | 0.998 | 0.949 | 0.999 | - | - | 0.930 | - | 0.964 | - |
| | | OC | 0.995 | 0.877 | 0.998 | - | - | 0.785 | - | 0.879 | - |
| | RIM-ONEv3 | OD | 0.996 | 0.924 | 0.999 | - | - | 0.887 | - | 0.940 | - |
| | | OC | 0.997 | 0.813 | 0.999 | - | - | 0.724 | - | 0.840 | - |
| Hu et al. [99] | RIM-ONE-r3 | OD | - | - | - | - | - | 0.917 | - | 0.956 | - |
| | | OC | - | - | - | - | - | 0.724 | - | 0.824 | - |
| | REFUGE | OD | - | - | - | - | - | 0.931 | - | 0.964 | - |
| | | OC | - | - | - | - | - | 0.813 | - | 0.894 | - |
| | DRISHTI-GS | OD | - | - | - | - | - | 0.950 | - | 0.974 | - |
| | | OC | - | - | - | - | - | 0.834 | - | 0.900 | - |
| | MESSIDOR | OD | - | - | - | - | - | 0.944 | - | 0.970 | - |
| | IDRiD | OD | - | - | - | - | - | 0.931 | - | 0.964 | - |
| Shankaranarayana et al. [101] | ORIGA | OD/OC | - | - | - | - | - | - | - | - | 0.067 |
| | RIM-ONE-r3 | OD/OC | - | - | - | - | - | - | - | - | 0.066 |
| | DRISHTI-GS1 | OD/OC | - | - | - | - | - | - | - | - | 0.105 |
| Bengani et al. [102] | DRISHTI-GS1 | OD | 0.996 | 0.954 | 0.999 | - | - | 0.931 | - | 0.967 | - |
| | RIM-ONE | OD | 0.995 | 0.873 | 0.998 | - | - | 0.882 | - | 0.902 | - |
| Wang et al. [103] | DRISHTI-GS | OD/OC | - | - | - | - | - | - | - | - | 0.082 |
| | RIM-ONE-r3 | OD/OC | - | - | - | - | - | - | - | - | 0.081 |
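The overlap metrics in this table can be computed from binary masks, where P is the predicted mask and G the ground truth: DSC = 2|P∩G|/(|P|+|G|) and IoU = |P∩G|/|P∪G|. The cup-to-disc ratio used in glaucoma screening can be computed from the same masks. The sketch below assumes that δ denotes the absolute error between the predicted and ground-truth vertical cup-to-disc ratio (vCDR). The table itself does not define δ, so the hypothetical `cdr_error` helper illustrates that assumption only. All function names are hypothetical, and an empty disc mask raises `ZeroDivisionError`.

```python
import numpy as np


def dice_and_iou(pred, target):
    """Dice similarity coefficient (DSC) and IoU (Jaccard index) of two binary masks."""
    p = np.asarray(pred).astype(bool)
    g = np.asarray(target).astype(bool)
    inter = np.logical_and(p, g).sum()
    union = np.logical_or(p, g).sum()
    total = p.sum() + g.sum()
    # Two empty masks are treated as perfect agreement (a convention, not a standard).
    dice = 2.0 * inter / total if total else 1.0
    iou = inter / union if union else 1.0
    return float(dice), float(iou)


def vertical_extent(mask):
    """Number of rows spanned by the foreground of a binary mask."""
    rows = np.nonzero(np.asarray(mask).astype(bool).any(axis=1))[0]
    return int(rows.max() - rows.min() + 1) if rows.size else 0


def vertical_cdr(disc_mask, cup_mask):
    """Vertical cup-to-disc ratio: cup height divided by disc height."""
    return vertical_extent(cup_mask) / vertical_extent(disc_mask)


def cdr_error(pred_disc, pred_cup, gt_disc, gt_cup):
    """Absolute vCDR error (our assumed reading of the delta column)."""
    return abs(vertical_cdr(pred_disc, pred_cup) - vertical_cdr(gt_disc, gt_cup))


# Usage: a 4 x 4 ground-truth square and a prediction shifted down by one row.
gt = np.zeros((10, 10), bool)
gt[2:6, 2:6] = True
pr = np.zeros((10, 10), bool)
pr[3:7, 2:6] = True
print(dice_and_iou(pr, gt))  # (0.75, 0.6)
```

For a single pair of masks, DSC = 2·IoU/(1 + IoU). The two columns therefore carry the same information per image: an IoU of 0.6 corresponds to a DSC of 0.75. Dataset-level averages need not follow this relation exactly.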

5. Research Gaps, Recommendations and Limitations

5.1. Research Gaps

5.2. Future Recommendations

- Pre-processing is crucial for effective analysis;

- Annotating a diverse set of labels is more important than having a large quantity of annotations;

- Performance can be improved through fine-tuning and augmentation techniques;

- Complex features can be captured by applying deeper neural networks;

- Sufficient training data is critical for producing a high-accuracy system;

- Additional loss functions can be integrated to reduce overfitting in specific domains;

- Multi-scale CNNs can improve feature extraction by combining features computed at several spatial scales;

- Medical expertise is valuable for understanding the underlying structure of diseases.
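The recommendation on augmentation can be made concrete with a small sketch. The function `augment_fundus` is hypothetical and illustrative. It applies a random horizontal flip, motivated by left- and right-eye fundus images being roughly mirror images of each other. It also applies random contrast and brightness jitter, intended to mimic differences between cameras. The jitter ranges are assumptions and are not taken from the reviewed studies.

```python
import numpy as np


def augment_fundus(image, rng):
    """Return a randomly augmented copy of an H x W x C fundus image in [0, 1].

    Hypothetical, illustrative pipeline: a random horizontal flip and random
    contrast/brightness jitter. The ranges below are untuned assumptions.
    """
    out = np.asarray(image, dtype=np.float64)
    if rng.random() < 0.5:
        out = out[:, ::-1, :]                  # mirror left/right
    contrast = rng.uniform(0.8, 1.2)           # scale around the per-channel mean
    brightness = rng.uniform(-0.1, 0.1)        # global intensity shift
    mean = out.mean(axis=(0, 1), keepdims=True)
    out = (out - mean) * contrast + mean + brightness  # new array; input untouched
    return np.clip(out, 0.0, 1.0)              # stay in the valid intensity range


# Usage: draw four augmented variants of one (random stand-in) image.
rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))
batch = [augment_fundus(image, rng) for _ in range(4)]
print([b.shape for b in batch])
```

Passing an explicit `numpy.random.Generator` keeps augmentation reproducible across runs. Geometric transforms beyond flips, such as rotations, can be added in the same way, but they change the apparent position of the optic disc relative to the macula.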

5.3. Limitations of the Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Borwankar, S.; Sen, R.; Kakani, B. Improved Glaucoma Diagnosis Using Deep Learning. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 2–4 July 2020; pp. 2–5.

- Huang, X.; Sun, J.; Gupta, K.; Montesano, G.; Crabb, D.P.; Garway-Heath, D.F.; Brusini, P.; Lanzetta, P.; Oddone, F.; Turpin, A.; et al. Detecting Glaucoma from Multi-Modal Data Using Probabilistic Deep Learning. Front. Med. 2022, 9, 923096.

- Mahdi, H.; El Abbadi, N. Glaucoma Diagnosis Based on Retinal Fundus Image: A Review. Iraqi J. Sci. 2022, 63, 4022–4046.

- Hemelings, R.; Elen, B.; Barbosa-Breda, J.; Blaschko, M.B.; De Boever, P.; Stalmans, I. Deep Learning on Fundus Images Detects Glaucoma beyond the Optic Disc. Sci. Rep. 2021, 11, 20313.

- Saeed, A.Q.; Abdullah, S.N.H.S.; Che-Hamzah, J.; Ghani, A.T.A. Accuracy of Using Generative Adversarial Networks for Glaucoma Detection: Systematic Review and Bibliometric Analysis. J. Med. Internet Res. 2021, 23, e27414.

- Mohamed, N.A.; Zulkifley, M.A.; Zaki, W.M.D.W.; Hussain, A. An Automated Glaucoma Screening System Using Cup-to-Disc Ratio via Simple Linear Iterative Clustering Superpixel Approach. Biomed. Signal Process. Control. 2019, 53, 101454.

- Natarajan, D.; Sankaralingam, E.; Balraj, K.; Karuppusamy, S. A Deep Learning Framework for Glaucoma Detection Based on Robust Optic Disc Segmentation and Transfer Learning. Int. J. Imaging Syst. Technol. 2022, 32, 230–250.

- Norouzifard, M.; Nemati, A.; Gholamhosseini, H.; Klette, R.; Nouri-Mahdavi, K.; Yousefi, S. Automated Glaucoma Diagnosis Using Deep and Transfer Learning: Proposal of a System for Clinical Testing. In Proceedings of the 2018 International Conference on Image and Vision Computing New Zealand (IVCNZ), Auckland, New Zealand, 19–21 November 2018.

- Elizar, E.; Zulkifley, M.A.; Muharar, R.; Hairi, M.; Zaman, M. A Review on Multiscale-Deep-Learning Applications. Sensors 2022, 22, 7384.

- Abdani, S.R.; Zulkifley, M.A.; Shahrimin, M.I.; Zulkifley, N.H. Computer-Assisted Pterygium Screening System: A Review. Diagnostics 2022, 12, 639.

- Fan, R.; Alipour, K.; Bowd, C.; Christopher, M.; Brye, N.; Proudfoot, J.A.; Goldbaum, M.H.; Belghith, A.; Girkin, C.A.; Fazio, M.A.; et al. Detecting Glaucoma from Fundus Photographs Using Deep Learning without Convolutions: Transformer for Improved Generalization. Ophthalmol. Sci. 2023, 3, 100233.

- Zhou, Q.; Guo, J.; Chen, Z.; Chen, W.; Deng, C.; Yu, T.; Li, F.; Yan, X.; Hu, T.; Wang, L.; et al. Deep Learning-Based Classification of the Anterior Chamber Angle in Glaucoma Gonioscopy. Biomed. Opt. Express 2022, 13, 4668.

- Afroze, T.; Akther, S.; Chowdhury, M.A.; Hossain, E.; Hossain, M.S.; Andersson, K. Glaucoma Detection Using Inception Convolutional Neural Network V3. Commun. Comput. Inf. Sci. 2021, 1435, 17–28.

- Chai, Y.; Bian, Y.; Liu, H.; Li, J.; Xu, J. Glaucoma Diagnosis in the Chinese Context: An Uncertainty Information-Centric Bayesian Deep Learning Model. Inf. Process. Manag. 2021, 58, 102454.

- Balasopoulou, A.; Kokkinos, P.; Pagoulatos, D.; Plotas, P.; Makri, O.E.; Georgakopoulos, C.D.; Vantarakis, A.; Li, Y.; Liu, J.J.; Qi, P.; et al. Symposium Recent Advances and Challenges in the Management of Retinoblastoma Globe-Saving Treatments. BMC Ophthalmol. 2017, 17, 1.

- Ferro Desideri, L.; Rutigliani, C.; Corazza, P.; Nastasi, A.; Roda, M.; Nicolo, M.; Traverso, C.E.; Vagge, A. The Upcoming Role of Artificial Intelligence (AI) for Retinal and Glaucomatous Diseases. J. Optom. 2022, 15, S50–S57.

- Xue, Y.; Zhu, J.; Huang, X.; Xu, X.; Li, X.; Zheng, Y.; Zhu, Z.; Jin, K.; Ye, J.; Gong, W.; et al. A Multi-Feature Deep Learning System to Enhance Glaucoma Severity Diagnosis with High Accuracy and Fast Speed. J. Biomed. Inform. 2022, 136, 104233.

- Raghavendra, U.; Fujita, H.; Bhandary, S.V.; Gudigar, A.; Tan, J.H.; Acharya, U.R. Deep Convolution Neural Network for Accurate Diagnosis of Glaucoma Using Digital Fundus Images. Inf. Sci. 2018, 441, 41–49.

- Schottenhamm, J.; Würfl, T.; Mardin, S.; Ploner, S.B.; Husvogt, L.; Hohberger, B.; Lämmer, R.; Mardin, C.; Maier, A. Glaucoma Classification in 3 × 3 mm En Face Macular Scans Using Deep Learning in Different Plexus. Biomed. Opt. Express 2021, 12, 7434.

- Saba, T.; Bokhari, S.T.F.; Sharif, M.; Yasmin, M.; Raza, M. Fundus Image Classification Methods for the Detection of Glaucoma: A Review. Microsc. Res. Tech. 2018, 81, 1105–1121.

- An, G.; Omodaka, K.; Hashimoto, K.; Tsuda, S.; Shiga, Y.; Takada, N.; Kikawa, T.; Yokota, H.; Akiba, M.; Nakazawa, T. Glaucoma Diagnosis with Machine Learning Based on Optical Coherence Tomography and Color Fundus Images. J. Healthc. Eng. 2019, 2019, 4061313.

- Elangovan, P.; Nath, M.K. Glaucoma Assessment from Color Fundus Images Using Convolutional Neural Network. Int. J. Imaging Syst. Technol. 2021, 31, 955–971.

- Ajesh, F.; Ravi, R.; Rajakumar, G. Early Diagnosis of Glaucoma Using Multi-Feature Analysis and DBN Based Classification. J. Ambient Intell. Humaniz. Comput. 2021, 12, 4027–4036.

- Zhang, Z.; Liu, J.; Yin, F.; Wong, D.W.K.; Tan, N.M.; Cheung, C.; Hamzah, H.B.; Ho, M.; Wong, T.Y. Introducing ORIGA: An Online Retinal Fundus Image Database for Glaucoma Analysis and Research. ARVO 2011, 3065–3068.

- Orlando, J.I.; Fu, H.; Barbosa Breda, J.; van Keer, K.; Bathula, D.R.; Diaz-Pinto, A.; Fang, R.; Heng, P.A.; Kim, J.; Lee, J.H.; et al. REFUGE Challenge: A Unified Framework for Evaluating Automated Methods for Glaucoma Assessment from Fundus Photographs. Med. Image Anal. 2020, 59, 101570.

- Li, L.; Xu, M.; Liu, H.; Li, Y.; Wang, X.; Jiang, L.; Wang, Z.; Fan, X.; Wang, N. A Large-Scale Database and a CNN Model for Attention-Based Glaucoma Detection. IEEE Trans. Med. Imaging 2019, 39, 413–424.

- Phasuk, S.; Poopresert, P.; Yaemsuk, A.; Suvannachart, P.; Itthipanichpong, R.; Chansangpetch, S.; Manassakorn, A.; Tantisevi, V.; Rojanapongpun, P.; Tantibundhit, C. Automated Glaucoma Screening from Retinal Fundus Image Using Deep Learning. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 904–907.

- Serte, S.; Serener, A. A Generalized Deep Learning Model for Glaucoma Detection. In Proceedings of the 2019 3rd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 11–13 October 2019; pp. 1–5.

- Fumero, F.; Alayon, S.; Sanchez, J.L.; Sigut, J.; Gonzalez-Hernandez, M. RIM-ONE: An Open Retinal Image Database for Optic Nerve Evaluation. In Proceedings of the 2011 24th International Symposium on Computer-Based Medical Systems (CBMS), Bristol, UK, 27–30 June 2011; pp. 2–7.

- Almazroa, A.A.; Alodhayb, S.; Osman, E.; Ramadan, E.; Hummadi, M.; Dlaim, M.; Alkatee, M.; Raahemifar, K.; Lakshminarayanan, V. Retinal Fundus Images for Glaucoma Analysis: The RIGA Dataset. SPIE 2018, 10579, 55–62.

- Budai, A.; Bock, R.; Maier, A.; Hornegger, J.; Michelson, G. Robust Vessel Segmentation in Fundus Images. Int. J. Biomed. Imaging 2013, 2013, 154860.

- Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordóñez-Varela, J.R.; Massin, P.; Erginay, A.; et al. Feedback on a Publicly Distributed Image Database: The Messidor Database. Image Anal. Stereol. 2014, 33, 231–234.

- Zheng, C.; Yao, Q.; Lu, J.; Xie, X.; Lin, S.; Wang, Z.; Wang, S.; Fan, Z.; Qiao, T. Detection of Referable Horizontal Strabismus in Children’s Primary Gaze Photographs Using Deep Learning. Transl. Vis. Sci. Technol. 2021, 10, 33.

- Abbas, Q. Glaucoma-Deep: Detection of Glaucoma Eye Disease on Retinal Fundus Images Using Deep Learning. Int. J. Adv. Comput. Sci. Appl. 2017, 41–45.

- Neto, A.; Camara, J.; Cunha, A. Evaluations of Deep Learning Approaches for Glaucoma Screening Using Retinal Images from Mobile Device. Sensors 2022, 22, 1449.

- Mahum, R.; Rehman, S.U.; Okon, O.D.; Alabrah, A.; Meraj, T.; Rauf, H.T. A Novel Hybrid Approach Based on Deep CNN to Detect Glaucoma Using Fundus Imaging. Electronics 2022, 11, 26.

- Baskaran, M.; Foo, R.C.; Cheng, C.Y.; Narayanaswamy, A.K.; Zheng, Y.F.; Wu, R.; Saw, S.M.; Foster, P.J.; Wong, T.Y.; Aung, T. The Prevalence and Types of Glaucoma in an Urban Chinese Population: The Singapore Chinese Eye Study. JAMA Ophthalmol. 2015, 133, 874–880.

- Bajwa, M.N.; Singh, G.A.P.; Neumeier, W.; Malik, M.I.; Dengel, A.; Ahmed, S. G1020: A Benchmark Retinal Fundus Image Dataset for Computer-Aided Glaucoma Detection. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

- Kovalyk, O.; Morales-Sánchez, J.; Verdú-Monedero, R.; Sellés-Navarro, I.; Palazón-Cabanes, A.; Sancho-Gómez, J.L. PAPILA: Dataset with Fundus Images and Clinical Data of Both Eyes of the Same Patient for Glaucoma Assessment. Sci. Data 2022, 9, 291.

- Devecioglu, O.C.; Malik, J.; Ince, T.; Kiranyaz, S.; Atalay, E.; Gabbouj, M. Real-Time Glaucoma Detection from Digital Fundus Images Using Self-ONNs. IEEE Access 2021, 9, 140031–140041.

- Joshi, S.; Partibane, B.; Hatamleh, W.A.; Tarazi, H.; Yadav, C.S.; Krah, D. Glaucoma Detection Using Image Processing and Supervised Learning for Classification. J. Healthc. Eng. 2022, 2022, 2988262.

- Zhao, R.; Chen, X.; Liu, X.; Chen, Z.; Guo, F.; Li, S. Direct Cup-to-Disc Ratio Estimation for Glaucoma Screening via Semi-Supervised Learning. IEEE J. Biomed. Health Inform. 2020, 24, 1104–1113.

- Goutam, B.; Hashmi, M.F.; Geem, Z.W.; Bokde, N.D. A Comprehensive Review of Deep Learning Strategies in Retinal Disease Diagnosis Using Fundus Images. IEEE Access 2022, 10, 57796–57823.

- Sulot, D.; Alonso-Caneiro, D.; Ksieniewicz, P.; Krzyzanowska-Berkowska, P.; Iskander, D.R. Glaucoma Classification Based on Scanning Laser Ophthalmoscopic Images Using a Deep Learning Ensemble Method. PLoS ONE 2021, 16, e0252339.

- Parashar, D.; Agrawal, D. 2-D Compact Variational Mode Decomposition-Based Automatic Classification of Glaucoma Stages from Fundus Images. IEEE Trans. Instrum. Meas. 2021, 70, 1–10.

- Zulkifley, M.A.; Moubark, A.M.; Saputro, A.H.; Abdani, S.R. Automated Apple Recognition System Using Semantic Segmentation Networks with Group and Shuffle Operators. Agriculture 2022, 12, 756.

- Viola Stella Mary, M.C.; Rajsingh, E.B.; Naik, G.R. Retinal Fundus Image Analysis for Diagnosis of Glaucoma: A Comprehensive Survey. IEEE Access 2016, 4, 4327–4354.

- Fu, H.; Cheng, J.; Xu, Y.; Wong, D.W.K.; Liu, J.; Cao, X. Joint Optic Disc and Cup Segmentation Based on Multi-Label Deep Network and Polar Transformation. IEEE Trans. Med. Imaging 2018, 37, 1597–1605.

- Hoover, A. Locating Blood Vessels in Retinal Images by Piecewise Threshold Probing of a Matched Filter Response. IEEE Trans. Med. Imaging 2000, 19, 203–210.

- Shabbir, A.; Rasheed, A.; Shehraz, H.; Saleem, A.; Zafar, B.; Sajid, M.; Ali, N.; Dar, S.H.; Shehryar, T. Detection of Glaucoma Using Retinal Fundus Images: A Comprehensive Review. Math. Biosci. Eng. 2021, 18, 2033–2076.

- Hagiwara, Y.; Koh, J.E.W.; Tan, J.H.; Bhandary, S.V.; Laude, A.; Ciaccio, E.J.; Tong, L.; Acharya, U.R. Computer-Aided Diagnosis of Glaucoma Using Fundus Images: A Review. Comput. Methods Programs Biomed. 2018, 165, 1–12.

- Serener, A.; Serte, S. Transfer Learning for Early and Advanced Glaucoma Detection with Convolutional Neural Networks. In Proceedings of the 2019 Medical Technologies Congress (TIPTEKNO), Izmir, Turkey, 3–5 October 2019; pp. 1–4.

- Ramesh, P.V.; Subramaniam, T.; Ray, P.; Devadas, A.K.; Ramesh, S.V.; Ansar, S.M.; Ramesh, M.K.; Rajasekaran, R.; Parthasarathi, S. Utilizing Human Intelligence in Artificial Intelligence for Detecting Glaucomatous Fundus Images Using Human-in-the-Loop Machine Learning. Indian J. Ophthalmol. 2022, 70, 1131–1138.

- Mohd Stofa, M.; Zulkifley, M.A.; Mohd Zainuri, M.A.A. Skin Lesions Classification and Segmentation: A Review. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 532–541.

- Chai, Y.; Liu, H.; Xu, J. Glaucoma Diagnosis Based on Both Hidden Features and Domain Knowledge through Deep Learning Models. Knowledge-Based Syst. 2018, 161, 147–156.

- Wang, P.; Yuan, M.; He, Y.; Sun, J. 3D Augmented Fundus Images for Identifying Glaucoma via Transferred Convolutional Neural Networks. Int. Ophthalmol. 2021, 41, 2065–2072.

- Gheisari, S.; Shariflou, S.; Phu, J.; Kennedy, P.J.; Agar, A.; Kalloniatis, M.; Golzan, S.M. A Combined Convolutional and Recurrent Neural Network for Enhanced Glaucoma Detection. Sci. Rep. 2021, 11, 1–11.

- Nayak, D.R.; Das, D.; Majhi, B.; Bhandary, S.V.; Acharya, U.R. ECNet: An Evolutionary Convolutional Network for Automated Glaucoma Detection Using Fundus Images. Biomed. Signal Process. Control. 2021, 67, 102559.

- Li, F.; Yan, L.; Wang, Y.; Shi, J.; Chen, H.; Zhang, X.; Jiang, M.; Wu, Z.; Zhou, K. Deep Learning-Based Automated Detection of Glaucomatous Optic Neuropathy on Color Fundus Photographs. Graefe’s Arch. Clin. Exp. Ophthalmol. 2020, 258, 851–867.

- Hemelings, R.; Elen, B.; Barbosa-Breda, J.; Lemmens, S.; Meire, M.; Pourjavan, S.; Vandewalle, E.; Van de Veire, S.; Blaschko, M.B.; De Boever, P.; et al. Accurate Prediction of Glaucoma from Colour Fundus Images with a Convolutional Neural Network That Relies on Active and Transfer Learning. Acta Ophthalmol. 2020, 98, e94–e100.

- Juneja, M.; Thakur, N.; Thakur, S.; Uniyal, A.; Wani, A.; Jindal, P. GC-NET for Classification of Glaucoma in the Retinal Fundus Image. Mach. Vis. Appl. 2020, 31, 1–18.

- Liu, H.; Li, L.; Wormstone, I.M.; Qiao, C.; Zhang, C.; Liu, P.; Li, S.; Wang, H.; Mou, D.; Pang, R.; et al. Development and Validation of a Deep Learning System to Detect Glaucomatous Optic Neuropathy Using Fundus Photographs. JAMA Ophthalmol. 2019, 137, 1353–1360.

- Bajwa, M.N.; Malik, M.I.; Siddiqui, S.A.; Dengel, A.; Shafait, F.; Neumeier, W.; Ahmed, S. Two-Stage Framework for Optic Disc Localization and Glaucoma Classification in Retinal Fundus Images Using Deep Learning. BMC Med. Inform. Decis. Mak. 2019, 19, 136.

- Kim, M.; Han, J.C.; Hyun, S.H.; Janssens, O.; Van Hoecke, S.; Kee, C.; De Neve, W. Medinoid: Computer-Aided Diagnosis and Localization of Glaucoma Using Deep Learning. Appl. Sci. 2019, 9, 3064.

- Hung, K.H.; Kao, Y.C.; Tang, Y.H.; Chen, Y.T.; Wang, C.H.; Wang, Y.C.; Lee, O.K.S. Application of a Deep Learning System in Glaucoma Screening and Further Classification with Colour Fundus Photographs: A Case Control Study. BMC Ophthalmol. 2022, 22, 483.

- Cho, H.; Hwang, Y.H.; Chung, J.K.; Lee, K.B.; Park, J.S.; Kim, H.G.; Jeong, J.H. Deep Learning Ensemble Method for Classifying Glaucoma Stages Using Fundus Photographs and Convolutional Neural Networks. Curr. Eye Res. 2021, 46, 1516–1524.

- Leonardo, R.; Goncalves, J.; Carreiro, A.; Simoes, B.; Oliveira, T.; Soares, F. Impact of Generative Modeling for Fundus Image Augmentation with Improved and Degraded Quality in the Classification of Glaucoma. IEEE Access 2022, 10, 111636–111649.

- Alghamdi, M.; Abdel-Mottaleb, M. A Comparative Study of Deep Learning Models for Diagnosing Glaucoma from Fundus Images. IEEE Access 2021, 9, 23894–23906.

- Juneja, M.; Thakur, S.; Uniyal, A.; Wani, A.; Thakur, N.; Jindal, P. Deep Learning-Based Classification Network for Glaucoma in Retinal Images. Comput. Electr. Eng. 2022, 101, 108009.

- de Sales Carvalho, N.R.; da Conceição Leal Carvalho Rodrigues, M.; de Carvalho Filho, A.O.; Mathew, M.J. Automatic Method for Glaucoma Diagnosis Using a Three-Dimensional Convoluted Neural Network. Neurocomputing 2021, 438, 72–83.

- Almansour, A.; Alawad, M.; Aljouie, A.; Almatar, H.; Qureshi, W.; Alabdulkader, B.; Alkanhal, N.; Abdul, W.; Almufarrej, M.; Gangadharan, S.; et al. Peripapillary Atrophy Classification Using CNN Deep Learning for Glaucoma Screening. PLoS ONE 2022, 17, e0275446.

- Aamir, M.; Irfan, M.; Ali, T.; Ali, G.; Shaf, A.; Alqahtani Saeed, S.; Al-Beshri, A.; Alasbali, T.; Mahnashi, M.H. An Adoptive Threshold-Based Multi-Level Deep Convolutional Neural Network for Glaucoma Eye Disease Detection and Classification. Diagnostics 2020, 10, 602.

- Islam, M.T.; Mashfu, S.T.; Faisal, A.; Siam, S.C.; Naheen, I.T.; Khan, R. Deep Learning-Based Glaucoma Detection with Cropped Optic Cup and Disc and Blood Vessel Segmentation. IEEE Access 2022, 10, 2828–2841.

- Liao, W.; Zou, B.; Zhao, R.; Chen, Y.; He, Z.; Zhou, M. Clinical Interpretable Deep Learning Model for Glaucoma Diagnosis. IEEE J. Biomed. Health Inform. 2020, 24, 1405–1412.

- Sudhan, M.B.; Sinthuja, M.; Pravinth Raja, S.; Amutharaj, J.; Charlyn Pushpa Latha, G.; Sheeba Rachel, S.; Anitha, T.; Rajendran, T.; Waji, Y.A. Segmentation and Classification of Glaucoma Using U-Net with Deep Learning Model. J. Healthc. Eng. 2022, 2022, 1601354.

- Nawaz, M.; Nazir, T.; Javed, A.; Tariq, U.; Yong, H.S.; Khan, M.A.; Cha, J. An Efficient Deep Learning Approach to Automatic Glaucoma Detection Using Optic Disc and Optic Cup Localization. Sensors 2022, 22, 434.

- Diaz-Pinto, A.; Morales, S.; Naranjo, V.; Köhler, T.; Mossi, J.M.; Navea, A. CNNs for Automatic Glaucoma Assessment Using Fundus Images: An Extensive Validation. Biomed. Eng. Online 2019, 18, 29.

- Serte, S.; Serener, A. Graph-Based Saliency and Ensembles of Convolutional Neural Networks for Glaucoma Detection. IET Image Process. 2021, 15, 797–804.

- Martins, J.; Cardoso, J.S.; Soares, F. Offline Computer-Aided Diagnosis for Glaucoma Detection Using Fundus Images Targeted at Mobile Devices. Comput. Methods Programs Biomed. 2020, 192, 105341.

- Civit-Masot, J.; Dominguez-Morales, M.J.; Vicente-Diaz, S.; Civit, A. Dual Machine-Learning System to Aid Glaucoma Diagnosis Using Disc and Cup Feature Extraction. IEEE Access 2020, 8, 127519–127529.

- Pascal, L.; Perdomo, O.J.; Bost, X.; Huet, B.; Otálora, S.; Zuluaga, M.A. Multi-Task Deep Learning for Glaucoma Detection from Color Fundus Images. Sci. Rep. 2022, 12, 1–10.

- Shanmugam, P.; Raja, J.; Pitchai, R. An Automatic Recognition of Glaucoma in Fundus Images Using Deep Learning and Random Forest Classifier. Appl. Soft Comput. 2021, 109, 107512.

- Fu, H.; Cheng, J.; Xu, Y.; Zhang, C.; Wong, D.W.K.; Liu, J.; Cao, X. Disc-Aware Ensemble Network for Glaucoma Screening from Fundus Image. IEEE Trans. Med. Imaging 2018, 37, 2493–2501.

- Sreng, S.; Maneerat, N.; Hamamoto, K.; Win, K.Y. Deep Learning for Optic Disc Segmentation and Glaucoma Diagnosis on Retinal Images. Appl. Sci. 2020, 10, 4916.

- Yu, S.; Xiao, D.; Frost, S.; Kanagasingam, Y. Robust Optic Disc and Cup Segmentation with Deep Learning for Glaucoma Detection. Comput. Med. Imaging Graph. 2019, 74, 61–71.

- Natarajan, D.; Sankaralingam, E.; Balraj, K.; Thangaraj, V. Automated Segmentation Algorithm with Deep Learning Framework for Early Detection of Glaucoma. Concurr. Comput. Pract. Exp. 2021, 33, e6181.

- Ganesh, S.S.; Kannayeram, G.; Karthick, A.; Muhibbullah, M. A Novel Context Aware Joint Segmentation and Classification Framework for Glaucoma Detection. Comput. Math. Methods Med. 2021, 2021, 2921737.

- Juneja, M.; Singh, S.; Agarwal, N.; Bali, S.; Gupta, S.; Thakur, N.; Jindal, P. Automated Detection of Glaucoma Using Deep Learning Convolution Network (G-Net). Multimed. Tools Appl. 2020, 79, 15531–15553.

- Veena, H.N.; Muruganandham, A.; Senthil Kumaran, T. A Novel Optic Disc and Optic Cup Segmentation Technique to Diagnose Glaucoma Using Deep Learning Convolutional Neural Network over Retinal Fundus Images. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 6187–6198.

- Tabassum, M.; Khan, T.M.; Arsalan, M.; Naqvi, S.S.; Ahmed, M.; Madni, H.A.; Mirza, J. CDED-Net: Joint Segmentation of Optic Disc and Optic Cup for Glaucoma Screening. IEEE Access 2020, 8, 102733–102747.

- Liu, B.; Pan, D.; Song, H. Joint Optic Disc and Cup Segmentation Based on Densely Connected Depthwise Separable Convolution Deep Network. BMC Med. Imaging 2021, 21, 14.

- Nazir, T.; Irtaza, A.; Starovoitov, V. Optic Disc and Optic Cup Segmentation for Glaucoma Detection from Blur Retinal Images Using Improved Mask-RCNN. Int. J. Opt. 2021, 2021, 6641980.

- Imtiaz, R.; Khan, T.M.; Naqvi, S.S.; Arsalan, M.; Nawaz, S.J. Screening of Glaucoma Disease from Retinal Vessel Images Using Semantic Segmentation. Comput. Electr. Eng. 2021, 91, 107036.

- Wang, L.; Gu, J.; Chen, Y.; Liang, Y.; Zhang, W.; Pu, J.; Chen, H. Automated Segmentation of the Optic Disc from Fundus Images Using an Asymmetric Deep Learning Network. Pattern Recognit. 2021, 112, 107810.

- Kumar, E.S.; Bindu, C.S. Two-Stage Framework for Optic Disc Segmentation and Estimation of Cup-to-Disc Ratio Using Deep Learning Technique. J. Ambient Intell. Humaniz. Comput. 2021, 1–13.

- Panda, R.; Puhan, N.B.; Mandal, B.; Panda, G. GlaucoNet: Patch-Based Residual Deep Learning Network for Optic Disc and Cup Segmentation Towards Glaucoma Assessment. SN Comput. Sci. 2021, 2, 99. [Google Scholar] [CrossRef]

- Fu, Y.; Chen, J.; Li, J.; Pan, D.; Yue, X.; Zhu, Y. Optic Disc Segmentation by U-Net and Probability Bubble in Abnormal Fundus Images. Pattern Recognit. 2021, 117, 107971. [Google Scholar] [CrossRef]

- Zhao, X.; Wang, S.; Zhao, J.; Wei, H. Application of an Attention U-Net Incorporating Transfer Learning for Optic Disc and Cup Segmentation. Signal Image Video Process. 2020, 15, 913–921. [Google Scholar] [CrossRef]

- Hu, Q.I.Z.; Hen, X.I.C.; Eng, Q.I.M.; Iahuan, J.; Ong, S.; Uo, G.A.L.; Ang, M.E.N.G.W.; Hi, F.E.I.S.; Hongyue, Z.; Hen, C.; et al. GDCSeg-Net: General Optic Disc and Cup Segmentation Network for Multi-Device Fundus Images. Biomed. Opt. Express 2021, 12, 6529–6544. [Google Scholar] [CrossRef]

- Jin, B.; Liu, P.; Wang, P.; Shi, L.; Zhao, J. Optic Disc Segmentation Using Attention-Based U-Net and the Improved Cross-Entropy Convolutional Neural Network. Entropy 2020, 22, 844. [Google Scholar] [CrossRef] [PubMed]

- Shankaranarayana, S.M.; Ram, K.; Mitra, K.; Sivaprakasam, M. Fully Convolutional Networks for Monocular Retinal Depth Estimation and Optic Disc-Cup Segmentation. IEEE J. Biomed. Health Informatics 2019, 23, 1417–1426. [Google Scholar] [CrossRef]

- Bengani, S.; Arul, A.; Vadivel, S. Automatic Segmentation of Optic Disc in Retinal Fundus Images Using Semi-Supervised Deep Learning. Multimedia Tools Appl. 2020, 80, 3443–3468. [Google Scholar] [CrossRef]

- Wang, S.; Yu, L.; Member, S.; Yang, X.; Fu, C. Patch-Based Output Space Adversarial Learning for Joint Optic Disc and Cup Segmentation. IEEE Trans. Med. Imaging 2019, 38, 2485–2495. [Google Scholar] [CrossRef] [Green Version]

| Dataset | No. of Images | Glaucoma | Normal | Image Size | Classification | Segmentation | Ground Truth Label |
|---|---|---|---|---|---|---|---|
| ACRIMA [22] | 705 | 396 | 309 | - | ✔ | - | - |
| HARVARD [23] | 1542 | 756 | 786 | - | ✔ | - | - |
| ORIGA [24] | 650 | 168 | 482 | 3072 × 2048 | ✔ | - | - |
| REFUGE [25] | 1200 | 120 | 1080 | 2124 × 2056; 1634 × 1634 | ✔ | ✔ | Location of fovea |
| LAG [26] | 5824 | 2392 | 3432 | 3456 × 5184 | ✔ | - | Attention maps |
| DRISHTI-GS1 [27] | 101 | 70 | 31 | 2896 × 1944 | ✔ | ✔ | CDR values and disc center |
| HRF [28] | 45 | 27 | 18 | 3504 × 2336 | ✔ | ✔ | Center and radius of the optic disc |
| RIM-ONE-r1 [29] | 169 | 51 | 118 | - | ✔ | ✔ | - |
| RIM-ONE-r2 | 455 | 200 | 255 | - | ✔ | - | - |
| RIM-ONE-r3 | 159 | 74 | 85 | 2144 × 1424 | ✔ | ✔ | - |
| RIM-ONE-DL | 485 | 172 | 313 | - | ✔ | ✔ | - |
| RIGA [30] | 750 | - | - | 2240 × 1488; 2743 × 1936 | - | - | Six boundary annotations of the optic disc and optic cup |
| DRIVE [31] | 40 | - | - | 768 × 584 | - | ✔ | Vessel segmentation |
| STARE [31] | 402 | - | - | 605 × 700 | - | ✔ | - |
| MESSIDOR [32] | 1200 | - | - | 1440 × 960; 2240 × 1488; 2304 × 1536 | - | - | Macular edema information |
| JSIEC/Kaggle [33] | 51 | 13 | 38 | - | ✔ | - | - |
| CHASE [3] | 28 | - | - | 1280 × 960 | - | ✔ | Vessel segmentation |
| sjchoi86-HRF [34] | 401 | 101 | 300 | - | ✔ | - | - |
| DRIONS-DB [35] | 110 | - | - | 600 × 400 | - | - | Optic disc contours |
| SINDI [36] | 5783 | 113 | 5670 | - | ✔ | - | - |
| SCES [37] | 1676 | 46 | 1630 | 3072 × 2048 | ✔ | - | - |
| G1020 [38] | 1020 | 296 | 724 | - | ✔ | - | - |
| PAPILA [39] | 488 | 155 | 333 | 2576 × 1934 | - | ✔ | - |
| ESOGU [40] | 4725 | 320 | 4405 | - | ✔ | - | - |
| PSGIMSR [41] | 1150 | 650 | 500 | 720 × 576 | - | - | - |
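The class balance in the table above varies widely; REFUGE, for instance, contains 120 glaucomatous and 1080 normal images. As a minimal sketch, assuming inverse-frequency class weighting is the chosen remedy (one common option, not something these datasets prescribe), the glaucoma prevalence and per-class loss weights can be computed directly from the listed counts. The function names are hypothetical.

```python
# Counts taken from the dataset table above: (glaucoma, normal).
DATASET_COUNTS = {
    "ACRIMA": (396, 309),
    "ORIGA": (168, 482),
    "REFUGE": (120, 1080),
    "SINDI": (113, 5670),
}


def prevalence(glaucoma: int, normal: int) -> float:
    """Fraction of images labelled glaucomatous."""
    return glaucoma / (glaucoma + normal)


def inverse_frequency_weights(glaucoma: int, normal: int) -> dict:
    """Per-class weight N / (K * n_c) with K = 2; a perfectly balanced set gives 1.0 to both."""
    total = glaucoma + normal
    return {"glaucoma": total / (2 * glaucoma), "normal": total / (2 * normal)}


if __name__ == "__main__":
    for name, (g, n) in DATASET_COUNTS.items():
        w = inverse_frequency_weights(g, n)
        print(f"{name}: prevalence={prevalence(g, n):.3f}, "
              f"w_glaucoma={w['glaucoma']:.2f}, w_normal={w['normal']:.2f}")
```

With the REFUGE counts this gives a prevalence of 0.1 and weights of 5.0 (glaucoma) and about 0.56 (normal). Such weights would typically feed a weighted cross-entropy loss; resampling the minority class is an alternative.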

| Pre-Processing Technique | Explanation |
|---|---|
| Contrast Enhancement (Histogram equalization) | Histogram equalization enhances the overall contrast of an image by spreading its pixel intensity values more evenly across the available range. The underlying principle is that a more uniform intensity distribution yields higher contrast and a visually clearer image. |
| Contrast Enhancement (CLAHE) | CLAHE (Contrast Limited Adaptive Histogram Equalization) improves contrast by redistributing pixel intensities within small image regions rather than over the whole image, which enhances the visibility of structures in low-contrast images. Limiting the contrast gain in each region prevents noise from being over-amplified. |
| Color Space Transformation | Color space transformation converts an image from one color space to another so that relevant structures are easier to analyze, which can improve analysis accuracy. The color spaces commonly used in fundus image analysis are RGB, HSI, and Lab. |
| Noise Removal | Noise removal improves image quality by suppressing unwanted noise. Median filtering, Gaussian filtering, and wavelet-based denoising are commonly used and can improve the accuracy of subsequent analysis. |
| Cropping of ROI | ROI cropping isolates regions of interest, such as the optic disc, macula, or blood vessels, so that subsequent analysis focuses on the relevant structures, which can improve its accuracy. |
| Data Augmentation | Data augmentation increases the diversity of the training set by creating modified copies of existing images, for example through rotation, flipping, scaling, and added noise. A more diverse and representative training set can improve the performance of deep learning models. |
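Several techniques in the table above reduce to a few array operations. The sketch below assumes 8-bit NumPy arrays and an optic disc center already supplied by a localization step (Section 3.2); it shows global histogram equalization through the cumulative histogram, square ROI cropping around a given point, and a simple flip/rotate/noise augmentation. Function names and default parameters are illustrative assumptions. CLAHE is not reimplemented here; OpenCV's `cv2.createCLAHE(clipLimit, tileGridSize)` is a commonly used implementation.

```python
import numpy as np


def equalize_histogram(channel: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit single-channel image."""
    if channel.dtype != np.uint8:
        raise ValueError("expected a uint8 image")
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[np.nonzero(cdf)[0][0]]  # CDF value at the darkest occupied level
    total = channel.size
    if total == cdf_min:  # constant image: nothing to redistribute
        return channel.copy()
    # Map every grey level through the normalized CDF so values spread over [0, 255].
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[channel]


def crop_roi(image: np.ndarray, center: tuple, size: int) -> np.ndarray:
    """Square crop of side `size` around (row, col), shifted to stay inside the image."""
    rows, cols = image.shape[:2]
    size = min(size, rows, cols)
    r0 = min(max(center[0] - size // 2, 0), rows - size)
    c0 = min(max(center[1] - size // 2, 0), cols - size)
    return image[r0:r0 + size, c0:c0 + size]


def augment(image: np.ndarray, rng: np.random.Generator, noise_std: float = 5.0) -> np.ndarray:
    """Random horizontal flip, random 90-degree rotation, and additive Gaussian noise."""
    out = np.flip(image, axis=1) if rng.random() < 0.5 else image
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    noisy = out.astype(np.float64) + rng.normal(0.0, noise_std, size=out.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fundus = rng.integers(40, 120, size=(64, 64), dtype=np.uint8)  # synthetic low-contrast image
    eq = equalize_histogram(fundus)
    print(fundus.min(), fundus.max(), "->", eq.min(), eq.max())
    print(crop_roi(eq, (10, 50), 32).shape, augment(eq, rng).shape)
```

Equalization stretches the synthetic 40 to 119 intensity range to the full 0 to 255 range. In practice these steps are often applied to a single channel (for example, the green channel or the L channel of Lab), which is a design choice rather than a fixed rule.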

| Metric | Formula | Description |
|---|---|---|
| Sensitivity (Sen) / Recall | Sen = TP/(TP + FN) | Proportion of patients with glaucoma whom the model correctly identifies. |
| Specificity (Spe) | Spe = TN/(TN + FP) | Proportion of patients without glaucoma whom the model correctly identifies. |
| Precision (Pre) | Pre = TP/(TP + FP) | Proportion of patients flagged as glaucomatous by the model who actually have the disease. |
| Accuracy (Acc) | Acc = (TP + TN)/(TP + TN + FP + FN) | Proportion of all patients whom the model classifies correctly. |
| F1 Score | F1 = 2 TP/(2 TP + FP + FN) | Harmonic mean of precision and sensitivity; it summarizes performance on the glaucoma (positive) class and does not use TN. |
| AUC-ROC | Area under the curve of sensitivity plotted against (1 − specificity) over all decision thresholds | Measures the model's ability to discriminate between patients with and without glaucoma. |
| IoU/Jaccard index | IoU = TP/(TP + FN + FP) | Overlap between the predicted and ground-truth segmentation masks, computed over pixels. |
| Dice similarity coefficient (DSC) | Dice = 2 TP/(2 TP + FP + FN) | Similarity between the predicted and ground-truth segmentation masks, computed over pixels. |
| Absolute error (δ) | δ = \|CDRp − CDRg\| | Absolute difference between the predicted (CDRp) and ground-truth (CDRg) cup-to-disc ratios. |

TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively.
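The formulas in the performance-metrics table translate directly into code. The following sketch assumes binary labels (1 = glaucoma, or 1 = foreground pixel for masks) held in NumPy-compatible arrays. It derives the classification metrics from confusion counts, estimates AUC-ROC with the rank-based (Mann–Whitney) formulation instead of tracing the curve, and reuses the same counts pixel-wise for IoU and Dice. All function names are hypothetical; library routines such as scikit-learn's `roc_auc_score` serve the same purpose.

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels or masks (1 = glaucoma / foreground)."""
    t = np.asarray(y_true, dtype=bool)
    p = np.asarray(y_pred, dtype=bool)
    return (int(np.sum(t & p)), int(np.sum(~t & ~p)),
            int(np.sum(~t & p)), int(np.sum(t & ~p)))


def _ratio(num, den):
    # Return 0.0 instead of dividing by zero when a class is absent.
    return num / den if den else 0.0


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "sen": _ratio(tp, tp + fn),
        "spe": _ratio(tn, tn + fp),
        "pre": _ratio(tp, tp + fp),
        "acc": _ratio(tp + tn, tp + tn + fp + fn),
        "f1": _ratio(2 * tp, 2 * tp + fp + fn),
    }


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative (ties = 0.5)."""
    t = np.asarray(y_true, dtype=bool)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[t], s[~t]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs at least one positive and one negative sample")
    diff = pos[:, None] - neg[None, :]  # every positive/negative pair
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


def iou_and_dice(mask_true, mask_pred):
    """Pixel-wise IoU and Dice for two binary segmentation masks."""
    tp, _, fp, fn = confusion_counts(mask_true, mask_pred)
    return _ratio(tp, tp + fp + fn), _ratio(2 * tp, 2 * tp + fp + fn)


def cdr_abs_error(cdr_pred, cdr_true):
    return abs(cdr_pred - cdr_true)
```

Note that the Dice and F1 formulas are identical; Dice is simply F1 evaluated over pixels. The rank-based AUC is quadratic in the number of samples, which is acceptable for illustration but not for very large test sets.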

| Reference | Dataset Description | Architecture | Strengths | Limitations |
|---|---|---|---|---|
| Li et al. [26] | A private dataset (LAG), 11,760 images | ResNet | The model consists of three subnets that detect glaucoma using deep features from visualized maps of pathological areas. | The model is complex and involves computationally demanding operations. |
| Wang et al. [56] | DRIONS-DB, HRF, RIM-ONE, and DRISHTI-GS1, 686 images | VGG & AlexNet | The 3D topographic map of the ONH, reconstructed with the shape-from-shading method, offers improved visualization of the OC and OD. | The model is complex and resource-intensive. |
| Gheisari et al. [57] | A private dataset, 695 images | VGG & ResNet & RNN (LSTM) | Combining a CNN and an RNN in a single system extracts spatial and temporal information from fundus videos more accurately. | Despite its high accuracy, the model requires further evaluation on a larger, more heterogeneous population. |
| Nayak et al. [58] | A private dataset, 1426 images | CNN | A meta-heuristic feature extraction technique that requires fewer parameters for efficient feature learning. | The model cannot automatically detect the different stages of glaucoma. |
| Li et al. [59] | A private dataset, 26,585 images | ResNet | Integrating fundus images with medical history data slightly improves sensitivity and specificity. | Subjective grading by two groups introduced bias, and cropping the optic nerve head region may cause information loss. |
| Hemelings et al. [60] | A private dataset, 8433 images | ResNet | Combining transfer learning, careful data augmentation, and uncertainty sampling reduced labelling costs by about 60%. | Limitations include imbalanced data, late-stage glaucoma images, and a predominantly Caucasian patient population. |
| Juneja et al. [61] | DRISHTI-GS and RIM-ONE, 267 images | CNN | With separable convolutional layers, increasing the filter size yielded more accurate classification. | Manual cropping of the optic disc leads to information loss. |
| Liu et al. [62] | A private dataset, 241,032 images | ResNet | An online DL system was proposed that updates the model iteratively and automatically using a large-scale database of fundus images. | The model's generalization ability could be further enhanced through human–computer interaction. |
| Bajwa et al. [63] | ORIGA, HRF, and OCT & CFI, 780 images | VGG16 & CNN | An automated disc localization model was built using a semi-automatic method for generating ground-truth annotations, which facilitates classification. | The network struggles to learn distinctive features for classifying glaucomatous images in public datasets. |
| Kim et al. [64] | A private dataset, 2123 images | VGGNet, InceptionNet & ResNet | A weakly supervised localization method highlights glaucomatous areas in input images, and a publicly available prototype web application integrating the predictive model was presented for glaucoma diagnosis and localization. | Accuracy on an external dataset was lower than on the dataset used for training. |
| Hung et al. [65] | A private dataset, 1851 images | EfficientNet | Evaluation covered binary and ternary classification, red-free and non-red-free photographs, and the inclusion of high-myopia information. | Limitations include a small number of cases, a single ethnic background, and the exclusion of pre-perimetric glaucoma. |
| Cho et al. [66] | A private dataset, 3460 images | InceptionNet | Averaging multiple CNN models trained under diverse learning conditions classifies glaucoma stages more effectively than a single CNN model. | More diverse data are needed for a generalized model, and further studies are required to tune per-model weights and improve performance. |
| Leonardo et al. [67] | ORIGA, DRISHTI-GS, REFUGE, RIM-ONE (r1, r2, r3), and ACRIMA, 3187 images | EfficientNet, U-Net | Uses a GAN to improve quantitative and qualitative image quality and proposes a new model for evaluating fundus image quality. | The generative model and quality evaluator were trained on full field-of-view images, whereas images with a smaller field of view are common across equipment and datasets. |
| Alghamdi et al. [68] | RIM-ONE and RIGA, 1205 images | VGG-16 | Compares three automated glaucoma classification approaches (supervised, transfer, and semi-supervised learning) on multiple public datasets. | The models can only determine the presence or absence of glaucoma and cannot grade disease severity. |
| Devecioglu et al. [40] | ACRIMA, RIM-ONE, and ESOGU, 5885 images | Self-ONNs | Self-ONNs achieve high glaucoma detection performance with lower complexity than deep CNN models, especially when data are limited. | The model does not include a segmentation network. |
| De Sales et al. [70] | DRISHTI-GS and RIM-ONEv2, 556 images | VGG16 | A 3D CNN yields high accuracy and produces 3D activation maps that provide additional detail without requiring optic disc segmentation or data augmentation. | 3D convolution requires more parameters than 2D convolution, making it computationally more expensive and technically challenging. |
| Joshi et al. [41] | DRISHTI-GS, HRF, DRIONS-DB, and one private dataset (PSGIMSR), 1391 images | VGG & ResNet & GoogLeNet | Ensembling pre-trained individual models through a voting system can improve diagnostic accuracy. | The framework does not segment the OD and OC, which could potentially improve detection performance. |
| Almansour et al. [71] | RIGA, HRF, Kaggle, ORIGA, EyePACS, and one private dataset, 3771 images | R-CNN & VGG | A two-step approach for early glaucoma diagnosis based on PPA in fundus images, using separate localization and classification models. | A complex deep learning system is used to classify PPA versus non-PPA; an interpretable surrogate model could be used instead. |
| Liao et al. [74] | ORIGA, 650 images | ResNet | Addresses the interpretability of deep learning-based glaucoma diagnosis by highlighting the specific regions identified by the network. | Despite good accuracy, high-resolution feature maps remain difficult to represent with this method. |
| Sudhan et al. [75] | ORIGA, 650 images | U-Net & DenseNet-201 and DCNN | The model could be applied to other medical image segmentation and classification tasks, such as diabetic retinopathy, brain tumor, and breast cancer detection. | Performance could be improved by addressing class imbalance through an improved classifier and a lower threshold. |
| Nawaz et al. [76] | ORIGA, 650 images | EfficientNet-B0 | A robust EfficientNet-B0-based model for key-point extraction that improves glaucoma recognition while reducing training and execution time. | More robust feature selection methods could be employed to extend this work to other eye diseases. |
| Diaz-Pinto et al. [77] | ACRIMA, DRISHTI-GS1, sjchoi86-HRF, RIM-ONE, and HRF, 1707 images | VGG16, VGG19, InceptionV3, ResNet50, and Xception | Five ImageNet-pretrained CNN architectures were evaluated as glaucoma classifiers and found to be a reliable option with high accuracy, specificity, and sensitivity. | Performance can drop on databases not used in training, and differing labelling criteria across public databases can affect classification results. |
| Serte et al. [78] | HARVARD, 1542 images | AlexNet, ResNet-50, and ResNet-152 | A graph-based technique identifies salient regions in fundus images by locating the optic disc and removing extraneous areas, and an ensemble of CNNs improves classification accuracy. | No segmentation step precedes classification, which could reduce accuracy. |
| Martins et al. [79] | ORIGA, DRISHTI-GS, RIM-ONE-r1, RIM-ONE-r2, RIM-ONE-r3, iChallenge, and RIGA, 3231 images | Multi-scale encoder–decoder network and MobileNet | Because the pipeline runs without an Internet connection on low-cost mobile devices, it can enable large-scale glaucoma screening in settings where this was previously impractical. | The datasets pose challenges, including class imbalance and a limited number of samples for deep learning. |
| Natarajan et al. [7] | ACRIMA, DRISHTI-GS1, RIM-ONEv1, and RIM-ONEv2, 2180 images | SqueezeNet | A highly accurate, lightweight glaucoma detection model; its stages can be used separately or combined with other models through transfer learning in future frameworks for diagnosing and treating ocular disorders. | The model uses only deep features, ignoring geometric and chromatic measures of the disc and cup region, and its classifier output offers no insights for ophthalmologists. |
| Islam et al. [73] | HRF, ACRIMA, and one private dataset (BEH), 1188 images | U-Net, EfficientNet, MobileNet, DenseNet, and GoogLeNet | A new dataset for glaucoma identification, requiring less training time, was created by segmenting blood vessels from retinal fundus images with U-Net. | The blood vessel segmentation model is slightly less accurate than optic cup and optic disc segmentation. |
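Two of the studies in the table above (Joshi et al. [41] and Cho et al. [66]) combine several CNNs by voting or averaging. A minimal sketch of both strategies follows, assuming each model has already produced a glaucoma probability per image; the array layout, the 0.5 threshold, and the function names are assumptions for illustration, not details taken from those papers.

```python
import numpy as np


def soft_vote(probs) -> np.ndarray:
    """Average P(glaucoma) across models; `probs` has shape (n_models, n_images)."""
    return np.asarray(probs, dtype=float).mean(axis=0)


def hard_vote(probs, threshold: float = 0.5) -> np.ndarray:
    """Each model votes 'glaucoma' if its probability >= threshold; a strict majority wins."""
    votes = np.asarray(probs, dtype=float) >= threshold
    return votes.sum(axis=0) * 2 > votes.shape[0]


if __name__ == "__main__":
    # Hypothetical outputs of three models on three images.
    probs = [[0.9, 0.2, 0.6],
             [0.7, 0.4, 0.3],
             [0.2, 0.1, 0.8]]
    print(soft_vote(probs) >= 0.5, hard_vote(probs))
```

Soft voting keeps each model's confidence, whereas hard voting discards it; per-model weights, as suggested in the limitations reported for Cho et al. [66], would turn the plain mean into a weighted average.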

| Reference | Dataset Description | Architecture | Strengths | Limitations |
|---|---|---|---|---|
| Civit-Masot et al. [80] | DRISHTI-GS and RIM-ONE v3, 136 images | U-Net, MobileNet | A lightweight MobileNet enables embedded deployment, and a reporting tool was created to support physicians' decision-making. | Models need to be trained on larger datasets from both public and private sources. |
| Pascal et al. [81] | REFUGE, 1200 fundus images | U-Net (VGG) | A single DL architecture with multi-task learning performs glaucoma detection, fovea localization, and OD/OC segmentation with limited resources and small sample sizes. | Shared models require additional expert effort, and pixel-wise annotations for segmentation and fovea localization are more time-consuming and expensive to obtain. |
| Fu et al. [83] | ORIGA, SCES, and SINDI, 8109 images | U-Net, ResNet | A segmentation-guided network localizes the disc region and produces screening results, while a pixel-wise polar transformation, which maps the image to the polar coordinate system, enhances deep feature representation. | Despite its complex ensemble of multiple layers and transformations, its results are lower than those of some simpler algorithms. |
| Sreng et al. [84] | REFUGE, ACRIMA, ORIGA, RIM-ONE, and DRISHTI-GS1, 2787 images | DeepLabv3+ (MobileNet); AlexNet, GoogLeNet, InceptionV3, XceptionNet, ResNet, ShuffleNet, SqueezeNet, MobileNet, InceptionResNet, & DenseNet | Five deep CNNs are used for OD segmentation, eleven pretrained CNNs with transfer learning for glaucoma classification, and an SVM classifier for the final decision. | The study used high-quality images, so a representative dataset including co-morbidities and low-quality images is still needed. Moreover, segmentation is limited to the OD; segmenting both the OD and OC could improve classification accuracy. |
| Yu et al. [85] | ORIGA, RIM-ONE, and DRISHTI-GS1, 882 images | U-Net (ResNet), ResNeXt (ResNet) | Uses a pre-trained network for fast training and a morphological post-processing module that refines the OD and OC segmentations based on the largest segmented blobs. | Performance degrades on low-quality images and discs with severe atrophy; training on lower-quality images and pathological discs with atrophy is needed to improve segmentation. |
| Natarajan et al. [86] | DRIONS, 2311 images | MKFCM, VGG | Modified Kernel Fuzzy C-Means (MKFCM) clustering achieves optimal clustering of retinal images and remains accurate even on noisy or corrupted inputs. | Some healthy images are misclassified as mild or glaucomatous; data augmentation could improve performance and extend the method to other glaucoma-related retinal diseases. |
| Ganesh et al. [87] | ACRIMA, DRISHTI-GS, RIM-ONEv1, REFUGE, and RIM-ONEv2, 1830 images | U-Net (Inception) | Integrates segmentation and classification in a single framework and uses inception blocks in the encoder and decoder of GD-YNet to capture multi-scale features. | The ResNeXt block assumes a fixed cardinality of 32, and the encoder–decoder paths use only basic inception modules with dimension reduction. |
| Juneja et al. [88] | DRISHTI-GS, 101 images | U-Net | Two neural networks operate in tandem, with the second building on the processed output of the first; the concatenated network achieves higher accuracy with reduced complexity. | The system outputs only the CDR and gives no information on disease severity. |
| Tabassum et al. [90] | DRISHTI-GS, RIM-ONE, and REFUGE, 660 images | U-Net (CDED-Net) | The computationally efficient encoder–decoder design needs no pre- or post-processing, because it reuses information in the decoder stage and uses a shallower network. | Further research and testing with diverse data are required, and its effectiveness on other retinal diseases remains to be verified. |
| Liu et al. [91] | DRISHTI-GS and REFUGE, 1301 images | U-Net (DDSC-Net) | Uses densely connected depthwise separable convolutions and a multi-scale image pyramid at the input. | Results may be inconsistent for fundus images captured by different devices and institutions, indicating limited generalization. |
| Nazir et al. [92] | ORIGA, 650 images | Mask-RCNN | Accurately segments the OD and OC for glaucoma diagnosis even under blurring, noise, and lighting variations. | The R-CNN-based model could be improved with newer deep learning techniques such as EfficientNet. |
| Imtiaz et al. [93] | RIM-ONE v3 and DRISHTI-GS1, 260 images | Encoder–decoder network & VGG16 | Combines semantic segmentation with medical image segmentation to improve performance while reducing memory usage and training and inference times. | Semantic segmentation cannot distinguish between adjacent objects such as the OD and OC. |
| Wang et al. [94] | MESSIDOR, ORIGA, and REFUGE, 1970 images | Multi-scale encoder–decoder network (modified U-Net) | Multiple multi-scale input features counteract the information lost through pooling, and element-wise subtraction highlights shape and boundary changes for precise segmentation. | The model is sensitive to object boundaries, and GPU memory constraints limited the experiments to small image sizes. |
| Kumar et al. [95] | BinRushed, Magrabia, MESSIDOR, DRIONS-DB, RIM-ONE, and IDRiD, 1839 images | U-Net | A modified 19-layer U-Net combined with ground-truth generation to improve training and testing. | Transfer learning or GANs could reduce the time required to build the model. |
| Panda et al. [96] | ORIGA, RIM-ONE, and DRISHTI-GS1, 882 images | Residual DL | Could help doctors make highly accurate glaucoma assessments in mass screening programs in suburban or peripheral clinical settings. | The post-processing needed to obtain the segmentation output adds a step that increases the model's complexity. |
| Fu et al. [97] | DRIVE, Kaggle, MESSIDOR, and NIVE, 11,640 images | U-Net | Combines a data-driven U-Net with model-driven probability bubbles into a joint probability, giving more robust OD localization. | Only interactions between the probability bubble approach and the network's output layer are studied, not deeper interactions in the hidden layers. |
| Zhao et al. [98] | DRIONS-DB and DRISHTI-GS, 211 images | U-Net with transfer learning | Segments the OD/OC effectively with only a small number of labels, providing fast segmentation while maintaining relatively high accuracy. | OD/OC segmentation accuracy is slightly lower (by less than 3%) than that of the compared existing algorithms. |
| Zhu et al. [99] | REFUGE, MESSIDOR, RIM-ONE-r3, DRISHTI-GS, and IDRiD, 2741 images | Encoder–decoder | Handles domain shifts caused by different acquisition devices and limited sample datasets, which could otherwise lead to inadequate training. | The blurred boundary between the OD and OC is not addressed. |
| Jin et al. [100] | MESSIDOR and RIM-ONE-r1, 1369 images | U-Net | Enhances semantic segmentation with channel dependencies and integrates multi-scale information into the attention mechanism to exploit contextual information. | The method did not perform as well as previous methods, possibly owing to network design, data pre-processing, or hyperparameter tuning. |
| Shankaranarayana et al. [101] | ORIGA, RIM-ONE-r3, and DRISHTI-GS1, 910 images | Encoder–decoder | The proposed pretraining scheme outperforms a standard denoising autoencoder and adapts to other semantic segmentation tasks. | Larger training batch sizes might improve performance but were not feasible due to system limitations. |
| Bengani et al. [102] | DRISHTI-GS1, RIM-ONE, and Kaggle's DR, 88,962 images | Encoder–decoder | Trains quickly and requires little disk space compared with models of similar performance. | Jointly training the autoencoder and the segmentation network was not explored. |
| Wang et al. [103] | DRISHTI-GS, RIM-ONE-r3, and REFUGE, 1460 images | DeepLabv3+ and MobileNetV2 | A segmentation network with a morphology-aware loss function that guides the network to capture smoothness priors in the masks, yielding accurate OD and OC segmentation. | No domain generalization techniques were used, so a new network must be retrained when images come from a new target domain. |
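Several segmentation-based methods in the table above (e.g., Juneja et al. [88] and Kumar et al. [95]) report the cup-to-disc ratio derived from OD and OC masks. The sketch below assumes the vertical CDR, i.e., the vertical height of the cup divided by that of the disc, which is a common clinical definition; individual papers may instead use areas or fitted ellipses, so an area-based variant is included for comparison. Function names are hypothetical.

```python
import numpy as np


def vertical_extent(mask) -> int:
    """Number of rows spanned by the foreground of a binary mask (0 if empty)."""
    rows = np.flatnonzero(np.asarray(mask, dtype=bool).any(axis=1))
    return int(rows[-1] - rows[0] + 1) if rows.size else 0


def vertical_cdr(disc_mask, cup_mask) -> float:
    """Vertical cup-to-disc ratio: cup height divided by disc height."""
    disc_height = vertical_extent(disc_mask)
    if disc_height == 0:
        raise ValueError("optic disc mask is empty")
    return vertical_extent(cup_mask) / disc_height


def area_cdr(disc_mask, cup_mask) -> float:
    """Alternative area-based ratio: cup pixel count divided by disc pixel count."""
    disc_area = int(np.count_nonzero(disc_mask))
    if disc_area == 0:
        raise ValueError("optic disc mask is empty")
    return int(np.count_nonzero(cup_mask)) / disc_area


if __name__ == "__main__":
    disc = np.zeros((10, 10), dtype=bool)
    disc[2:8, 3:7] = True              # 6 rows tall
    cup = np.zeros_like(disc)
    cup[3:6, 4:6] = True               # 3 rows tall
    print(vertical_cdr(disc, cup), area_cdr(disc, cup))
```

A predicted ratio obtained this way can be compared with the annotated value through the absolute error δ listed in the performance-metrics table.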

| Process | Gap |
|---|---|
| Datasets | Private datasets limit research because they make it difficult to compare results across studies. Moreover, some methods rely on unsuitable datasets, forcing authors to generate their own private ground truths. |
| Enhancement | Image enhancement algorithms can introduce artifacts and distortions that render an image unusable. Parameter selection is also subjective and varies with the application and user preference, and these techniques can be computationally expensive. |
| Localization | Localizing the optic disc solely as the bright circular region of a retinal fundus image is inaccurate because other bright areas may be present. Some methods also require manual intervention, such as annotating the disc area and its radius. |
| Segmentation | When the same method is used to segment both the optic disc and the optic cup, the optic cup results are typically less satisfactory, because most proposed methods disregard the relationships between different parts of the retina. |
| Classification | Classification accuracy depends heavily on the extracted features, which in turn depend on several parameters; however, most methods consider only a few of them. Optimal network fitting also requires large datasets, which are not readily available for glaucoma, and training on them is time-consuming. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zedan, M.J.M.; Zulkifley, M.A.; Ibrahim, A.A.; Moubark, A.M.; Kamari, N.A.M.; Abdani, S.R. Automated Glaucoma Screening and Diagnosis Based on Retinal Fundus Images Using Deep Learning Approaches: A Comprehensive Review. Diagnostics 2023, 13, 2180. https://doi.org/10.3390/diagnostics13132180

Zedan MJM, Zulkifley MA, Ibrahim AA, Moubark AM, Kamari NAM, Abdani SR. Automated Glaucoma Screening and Diagnosis Based on Retinal Fundus Images Using Deep Learning Approaches: A Comprehensive Review. Diagnostics. 2023; 13(13):2180. https://doi.org/10.3390/diagnostics13132180

Chicago/Turabian Style: Zedan, Mohammad J. M., Mohd Asyraf Zulkifley, Ahmad Asrul Ibrahim, Asraf Mohamed Moubark, Nor Azwan Mohamed Kamari, and Siti Raihanah Abdani. 2023. "Automated Glaucoma Screening and Diagnosis Based on Retinal Fundus Images Using Deep Learning Approaches: A Comprehensive Review" Diagnostics 13, no. 13: 2180. https://doi.org/10.3390/diagnostics13132180

APA Style: Zedan, M. J. M., Zulkifley, M. A., Ibrahim, A. A., Moubark, A. M., Kamari, N. A. M., & Abdani, S. R. (2023). Automated Glaucoma Screening and Diagnosis Based on Retinal Fundus Images Using Deep Learning Approaches: A Comprehensive Review. Diagnostics, 13(13), 2180. https://doi.org/10.3390/diagnostics13132180