Comparison of Diagnostic Performance in Mammography Assessment: Radiologist with Reference to Clinical Information Versus Standalone Artificial Intelligence Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Population

2.2. Imaging Modalities

2.3. Imaging Analysis

2.4. Data Analysis

3. Results

3.1. Clinicopathological Characteristics

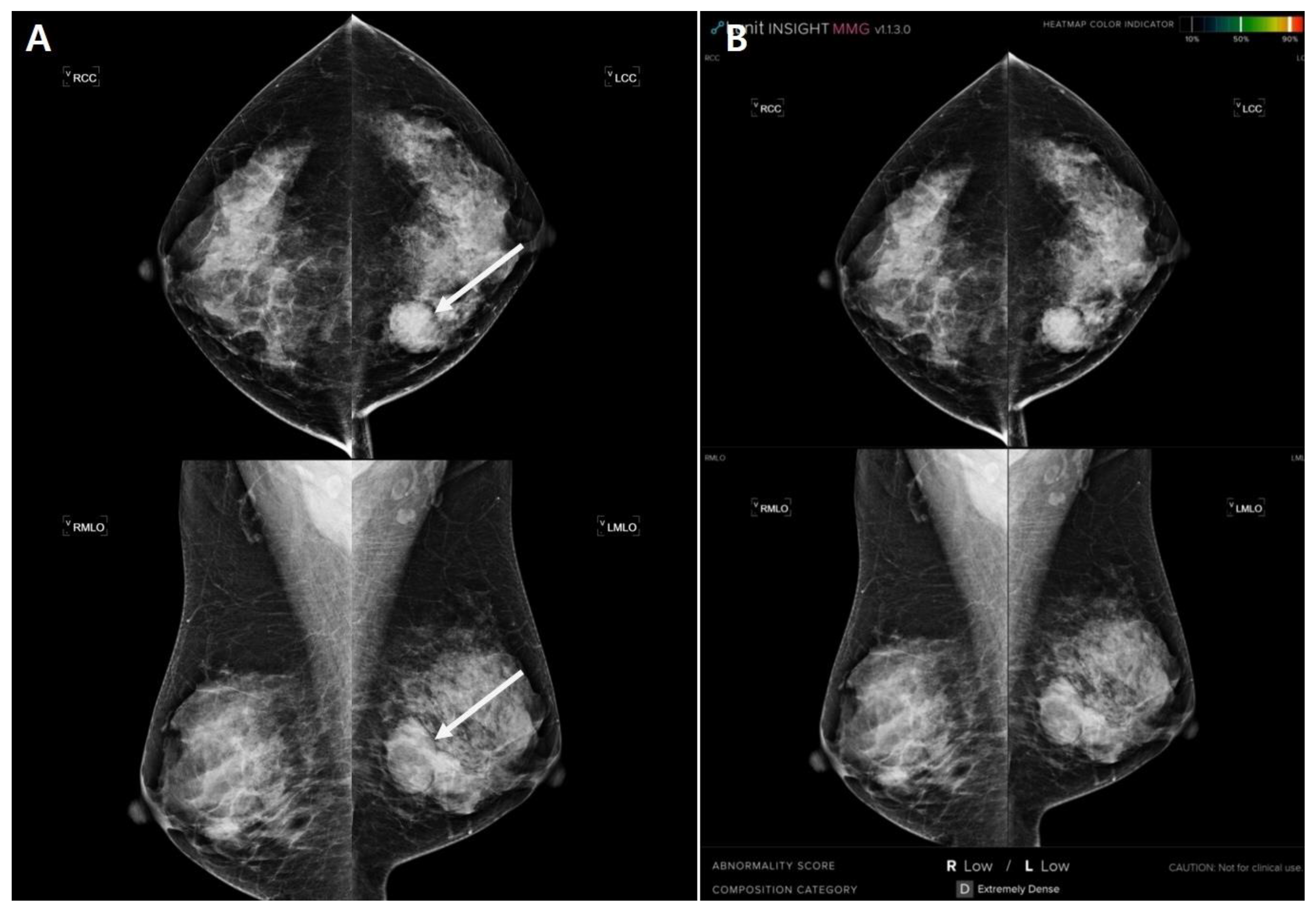

3.2. Analysis of Mammography by Radiologists and AI

3.3. Concordance of Lesion Location between Mammography and Pathology

3.4. Predictors of Concordance of Lesion Location with Pathology

3.5. ‘Invisible’ Cases in Radiologists’ and AI Analyses

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Clinicopathological characteristics | Value |
|---|---|
| Age (years) | 57.3 ± 12.1 |
| Patient’s symptoms, n (%) | |
| None | 212 (54.1) |
| Palpation | 153 (39.0) |
| Pain | 13 (3.3) |
| Discharge | 8 (2.0) |
| Others | 6 (1.5) |
| Confirmation method, n (%) | |
| Biopsy | 242 (61.7) |
| Breast-conserving surgery (BCS) | 90 (23.0) |
| Mastectomy | 60 (15.3) |
| Location of pathologic lesion, n (%) | |
| Right | 202 (51.5) |
| Left | 181 (46.2) |
| Both | 9 (2.3) |
| Histology, n (%) | |
| Invasive ductal carcinoma | 310 (79.1) |
| Ductal carcinoma in situ | 42 (10.7) |
| Invasive lobular carcinoma | 12 (3.1) |
| Mucinous carcinoma | 8 (2.0) |
| Tubular carcinoma | 8 (2.0) |
| Invasive micropapillary carcinoma | 3 (0.8) |
| Invasive tubulolobular carcinoma | 2 (0.5) |
| Encapsulated papillary carcinoma | 2 (0.5) |
| Metaplastic carcinoma | 2 (0.5) |
| Adenoid cystic carcinoma | 1 (0.3) |
| Papillary ductal carcinoma in situ | 1 (0.3) |
| Lobular carcinoma in situ | 1 (0.3) |

| Analysis | n (%) |
|---|---|
| Radiologists | |
| Breast density | |
| a | 39 (9.9) |
| b | 71 (18.1) |
| c | 195 (49.7) |
| d | 87 (22.2) |
| Presence of previous MG | |
| Nonexistent | 233 (59.4) |
| Existent | 159 (40.6) |
| Influence of previous MG | |
| Nonexistent | 289 (73.7) |
| Existent | 103 (26.3) |
| Lesion type | |
| Invisible | 36 (9.2) |
| Mass | 142 (36.2) |
| Calcifications | 77 (19.6) |
| Mass + Calcifications | 61 (15.6) |
| Asymmetry | 46 (11.7) |
| Distortion | 20 (5.1) |
| Asymmetry + Calcifications | 6 (1.5) |
| Other | 4 (1.0) |
| Location of lesion | |
| Invisible | 36 (9.2) |
| Right | 178 (45.4) |
| Left | 171 (43.6) |
| Both | 7 (1.8) |
| AI | |
| Location of lesion | |
| Invisible | 57 (14.5) |
| Right | 163 (41.6) |
| Left | 157 (40.1) |
| Both | 15 (3.8) |
| Lesion score | |
| <10 | 57 (14.5) |
| 10 ≤ score < 50 | 43 (11.0) |
| 50 ≤ score < 90 | 60 (15.3) |
| ≥90 | 232 (59.2) |
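In the AI rows above, the number of "invisible" cases (57) equals the number of cases with a lesion score below 10, which suggests, although the table does not state it, that a score below 10 was treated as no AI-marked lesion. A minimal sketch of the score banding, assuming that cut-off and the bands printed in the table; `score_band`, `ai_visible` and `INVISIBLE_CUTOFF` are hypothetical names used only for illustration.

```python
from collections import Counter

# ASSUMPTION: an AI lesion score (0-100) below 10 counts as "invisible".
# This is inferred from the matching counts (57 vs. 57), not a stated rule.
INVISIBLE_CUTOFF = 10.0


def score_band(score: float) -> str:
    """Map an AI lesion score to the bands used in the table."""
    if score < 10:
        return "<10"
    if score < 50:
        return "10-<50"
    if score < 90:
        return "50-<90"
    return ">=90"


def ai_visible(score: float) -> bool:
    """True if the assumed cut-off treats the lesion as AI-detected."""
    return score >= INVISIBLE_CUTOFF


# A few scores taken from the per-case tables (A1-A4, R1-R3).
scores = [14.48, 35.46, 30.86, 24.49, 8.46, 0.1, 1.3]
print(Counter(score_band(s) for s in scores))
print([ai_visible(s) for s in scores])
```

Boundary values fall into the upper band (a score of exactly 10 is "10-<50"), matching the "10 ≤ score < 50" notation of the table.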

| Subgroup | n | Kappa, analysis by radiologists | Kappa, analysis by AI |
|---|---|---|---|
| All | 392 | 0.819 | 0.698 |
| Surgical validation | 150 | 0.833 | 0.701 |
| Effect of previous MG | | | |
| Nonexistent | 289 | 0.778 | 0.694 |
| Existent | 103 | 0.944 | 0.707 |
| Symptoms | | | |
| Nonexistent | 212 | 0.742 | 0.636 |
| Existent | 180 | 0.917 | 0.777 |
| MG density | | | |
| Fatty (a, b) | 110 | 0.948 | 0.804 |
| Dense (c, d) | 282 | 0.773 | 0.660 |
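The kappa values above presumably quantify chance-corrected agreement between the lesion location on mammography and the pathologic location. The table does not say which kappa variant was used; the sketch below assumes unweighted Cohen's kappa over location labels and adds the Landis and Koch (1977) verbal bands. The toy labels are hypothetical, not study data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of cases with identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        return 1.0  # degenerate case: both raters use one identical label
    return (p_o - p_e) / (1 - p_e)


def landis_koch(kappa):
    """Verbal agreement label for a kappa value (Landis and Koch, 1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical toy data: pathologic location vs. location reported by a reader.
pathology = ["R", "L", "R", "L", "Both", "R"]
reader = ["R", "L", "Invisible", "L", "Both", "R"]
k = cohens_kappa(pathology, reader)
print(round(k, 3), landis_koch(k))  # 0.76 substantial
print(landis_koch(0.819), landis_koch(0.698))
```

Under these bands, the overall radiologist value (0.819) reads as almost perfect agreement and the overall AI value (0.698) as substantial agreement.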

| Predictor | Estimate ᵃ | Standard error | p-value | Odds ratio | 95% CI, lower | 95% CI, upper |
|---|---|---|---|---|---|---|
| Previous MG influence (E/N) | 2.146 | 0.625 | <0.001 | 8.55 | 2.51 | 29.09 |
| Symptoms (E/N) | 1.703 | 0.422 | <0.001 | 5.49 | 2.40 | 12.55 |
| MG density (F/D) | 1.644 | 0.622 | 0.008 | 5.18 | 1.53 | 17.51 |
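The odds ratios and intervals in the table are consistent with logistic-regression estimates on the log-odds scale, with OR = exp(β) and 95% CI = exp(β ± 1.96·SE). A minimal sketch that recomputes them from the printed estimates and standard errors, assuming a Wald interval and a two-sided normal p-value; `wald_summary` is a hypothetical helper name.

```python
import math

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def wald_summary(estimate, se):
    """Odds ratio, 95% Wald CI and two-sided p-value for a log-odds estimate."""
    odds_ratio = math.exp(estimate)
    lower = math.exp(estimate - Z_95 * se)
    upper = math.exp(estimate + Z_95 * se)
    z = estimate / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return odds_ratio, lower, upper, p_value


# Estimates and standard errors as printed in the table above.
PREDICTORS = {
    "Previous MG influence (E/N)": (2.146, 0.625),
    "Symptoms (E/N)": (1.703, 0.422),
    "MG density (F/D)": (1.644, 0.622),
}

for name, (est, se) in PREDICTORS.items():
    or_, lo, hi, p = wald_summary(est, se)
    p_text = "<0.001" if p < 0.001 else f"{p:.3f}"
    print(f"{name}: OR {or_:.2f} (95% CI {lo:.2f}-{hi:.2f}), p = {p_text}")
```

Because the inputs are already rounded, a recomputed limit can differ in the last digit (the upper limit for previous MG influence comes out near 29.11 rather than 29.09).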

| Pathology | AI (cases invisible to radiologists) | Radiologists (cases invisible to AI) |
|---|---|---|
| Concordance | 4 | 27 |
| Discordance | 32 | 30 |
| Total | 36 | 57 |
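From the counts above, the share of "invisible" cases in which the other reader still placed the lesion on the pathologically correct side is concordant / total. A short sketch of that arithmetic; `concordance_rate` is an illustrative helper, and the counts are copied from the table.

```python
def concordance_rate(concordant: int, discordant: int):
    """Return (concordant share, total) for one column of the table."""
    total = concordant + discordant
    if total == 0:
        raise ValueError("no cases in this column")
    return concordant / total, total


# Counts copied from the table: (concordance, discordance).
columns = {
    "AI (cases invisible to radiologists)": (4, 32),
    "Radiologists (cases invisible to AI)": (27, 30),
}

for reader, (conc, disc) in columns.items():
    rate, total = concordance_rate(conc, disc)
    print(f"{reader}: {conc}/{total} = {rate:.1%}")
```

This gives 4/36 (11.1%) for AI and 27/57 (47.4%) for radiologists.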

| Case No. | Age (years) | Symptom | MG density (radiologists) | Lesion location (radiologists) | Lesion type (radiologists) | Previous MG influence (radiologists) | Lesion size on MG, cm (radiologists) | AI score (%) | Location by AI |
|---|---|---|---|---|---|---|---|---|---|
| R1 | 66 | None | c | R | Asymmetry | - | 0.5 | 8.46 | R |
| R2 | 38 | Palpation | c | R | Calcification | - | 0.4 | 0.1 | L |
| R3 | 53 | None | b | L | Asymmetry | Existence | 0.4 | 1.3 | L |
| R4 | 53 | Palpation | c | L | Mass | - | 3.7 | 0.85 | L |
| R5 | 67 | Pain | a | L | Asymmetry | - | 1.6 | 2 | L |
| R6 | 58 | None | c | L | Mass | Existence | 0.7 | 7.5 | L |
| R7 | 48 | Palpation | c | L | Asymmetry | - | 4 | 0.17 | L |
| R8 | 40 | Palpation | d | R | Asymmetry | - | 3.2 | 0.28 | R |
| R9 | 44 | None | d | R | Distortion | - | 1.5 | 0.01 | R |
| R10 | 45 | None | d | R | Calcification | - | 1.6 | 6.53 | R |
| R11 | 47 | None | d | R | Calcification | Existence | 0.5 | 1.92 | L |
| R12 | 45 | Palpation | d | R | Mass | - | 1.5 | 0.15 | R |
| R13 | 51 | None | c | R | Calcification | - | 0.5 | 3.5 | R |
| R14 | 66 | None | a | R | Mass | Existence | 0.6 | 0.01 | L |
| R15 | 59 | None | b | L | Other | Existence | 2.7 | 0.83 | L |
| R16 | 66 | Discharge | c | L | Asymmetry | Existence | 0.7 | 3.5 | L |
| R17 | 42 | None | b | L | Mass | - | 1 | 0.68 | L |
| R18 | 67 | None | b | R | Asymmetry | - | 0.7 | 0.21 | R |
| R19 | 46 | Palpation | c | L | Mass | - | 1.5 | 1.03 | L |
| R20 | 56 | None | c | L | Mass | Existence | 0.8 | 2.53 | R |
| R21 | 70 | None | c | L | Distortion | Existence | 1 | 5.27 | L |
| R22 | 50 | Palpation | c | R | Asymmetry | Existence | 1 | 0.13 | R |
| R23 | 47 | None | d | L | Calcification | - | 0.5 | 6.29 | L |
| R24 | 78 | None | c | R | Asymmetry | Existence | 1 | 0.08 | R |
| R25 | 46 | None | c | L | Distortion | Existence | 0.8 | 8.72 | L |
| R26 | 68 | Palpation | b | L | Distortion | Existence | 1.5 | 7.06 | L |
| R27 | 59 | None | c | R | Asymmetry | Existence | 1.4 | 0.34 | L |

| Case No. | Age (years) | Symptom | MG density | AI score (%) | Lesion location |
|---|---|---|---|---|---|
| A1 | 35 | Palpation | d | 14.48 | L |
| A2 | 49 | Other | c | 35.46 | R |
| A3 | 46 | Palpation | c | 30.86 | L |
| A4 | 42 | Palpation | c | 24.49 | L |
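The two per-case lists make the apparent AI score cut-off checkable: every case rated invisible by AI but correctly localized by radiologists (R1 to R27) has a score below 10, while A1 to A4 score between 10 and 50. The sketch below transcribes the scores and locations and summarizes them. It assumes, as an inference from the tables rather than a stated rule, that "Location by AI" is the side the AI scored highest even when that score fell below the cut-off.

```python
# case -> (AI score %, location by radiologists, location by AI),
# transcribed from the R-case table above.
R_CASES = {
    "R1": (8.46, "R", "R"), "R2": (0.1, "R", "L"), "R3": (1.3, "L", "L"),
    "R4": (0.85, "L", "L"), "R5": (2.0, "L", "L"), "R6": (7.5, "L", "L"),
    "R7": (0.17, "L", "L"), "R8": (0.28, "R", "R"), "R9": (0.01, "R", "R"),
    "R10": (6.53, "R", "R"), "R11": (1.92, "R", "L"), "R12": (0.15, "R", "R"),
    "R13": (3.5, "R", "R"), "R14": (0.01, "R", "L"), "R15": (0.83, "L", "L"),
    "R16": (3.5, "L", "L"), "R17": (0.68, "L", "L"), "R18": (0.21, "R", "R"),
    "R19": (1.03, "L", "L"), "R20": (2.53, "L", "R"), "R21": (5.27, "L", "L"),
    "R22": (0.13, "R", "R"), "R23": (6.29, "L", "L"), "R24": (0.08, "R", "R"),
    "R25": (8.72, "L", "L"), "R26": (7.06, "L", "L"), "R27": (0.34, "R", "L"),
}
# case -> AI score % for cases localized by AI but invisible to radiologists.
A_CASES = {"A1": 14.48, "A2": 35.46, "A3": 30.86, "A4": 24.49}

max_r_score = max(score for score, _, _ in R_CASES.values())
min_a_score = min(A_CASES.values())
# R cases where the AI's sub-threshold side differs from the radiologists' side.
side_mismatch = [case for case, (_, rad, ai) in R_CASES.items() if rad != ai]

print(f"Highest AI score among R cases: {max_r_score}")
print(f"Lowest AI score among A cases: {min_a_score}")
print(f"AI side differs from radiologists in {len(side_mismatch)} of "
      f"{len(R_CASES)} R cases: {', '.join(side_mismatch)}")
```

On the transcribed data, no R case reaches a score of 10 and no A case falls below it, which is consistent with a cut-off of 10.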

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, W.J.; An, J.K.; Woo, J.J.; Kwak, H.Y. Comparison of Diagnostic Performance in Mammography Assessment: Radiologist with Reference to Clinical Information Versus Standalone Artificial Intelligence Detection. Diagnostics 2023, 13, 117. https://doi.org/10.3390/diagnostics13010117
