Artificial Intelligence in Colon Capsule Endoscopy—A Systematic Review

Abstract

1. Introduction

2. Methods

Quality Assessment of the Included Studies

3. Results

3.1. Literature Search

3.2. Study Characteristics

3.3. Quality of the Included Studies

| First Author, Year of Publication, Country | Application | Type of AI Method | Evaluation per Frame or per Video | Included Videos, n | Frames Available from These Videos, n | Frames Available for Training the Model, if Applicable, n | Frames Selected for Testing the Developed AI Method, n | Reference Group |
|---|---|---|---|---|---|---|---|---|
| Becq, 2018, France [14] | Bowel cleansing assessment | (1) Red over green (R/G ratio); (2) red over brown (R/(R + G) ratio) | Frame | 12 | 79,497 | N/A | 216 (R/G set); 192 (R/(R + G) set) | 2 CCE readers |
| Buijs, 2018, Denmark [16] | Bowel cleansing assessment | (1) Non-linear index model; (2) SVM model | Video | 41 | Unknown | Unknown | N/A | 4 CCE readers |
| Figueiredo, 2011, Portugal [17] | Polyp detection | Protrusion-based algorithm | Frame | 5 | Unknown | N/A | 1700 | Subsequent colonoscopy |
| Mamonov, 2014, USA [15] | Polyp detection | Binary classification after pre-selection | Frame | 5 | 18,968 | N/A | 18,968 | Known reviewed CCE dataset |
| Nadimi, 2020, Denmark [19] | Polyp detection | CNN | Frame | 255 | 11,300 | 7910 | 1695 | Unknown number of CCE readers |
| Yamada, 2020, Japan [20] | Colorectal neoplasia detection | CNN | Frame | 184 | 20,717 | 15,933 | 4784 | 3 CCE readers |
| Saraiva, 2021, Portugal [21] | Protruding lesion detection | CNN | Frame | 24 | 1,017,472 | 2912 | 728 | 2 CCE readers |
| Saraiva, 2021, Portugal [22] | Blood detection | CNN | Frame | 24 | 3,387,259 | 4660 | 1165 | 2 CCE readers |
| Herp, 2021, Denmark [18] | Capsule localization | T-T model | Frame | 84 | Unknown | N/A | Unknown | Unknown number of CCE readers |
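The study-characteristics table above lists how many frames each study reserved for training and testing, and several counts are consistent with fixed proportions. For Nadimi et al. [19], 7910 and 1695 frames are 70% and 15% of 11,300 frames. In both Saraiva et al. studies [21,22], the training and test counts (2912/728 and 4660/1165) form an 80/20 split. The Python sketch below shows one way to produce such a split. It assigns whole videos, not single frames, to each set, so frames from the same video cannot appear in both training and test data. The function name, the `frames_by_video` input format, and the 70/15/15 default are illustrative assumptions, not the procedure used in the reviewed studies.

```python
import random


def split_by_video(frames_by_video, fractions=(0.70, 0.15, 0.15), seed=0):
    """Split frames into train/validation/test sets at the video level.

    Hypothetical helper: `frames_by_video` maps a video ID to its frame IDs.
    Whole videos are assigned to one set, so no video contributes frames to
    more than one set. `fractions` is the target share of *videos* per set.
    """
    if len(fractions) != 3 or abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must be three shares summing to 1")

    videos = sorted(frames_by_video)      # deterministic order before shuffling
    random.Random(seed).shuffle(videos)   # reproducible shuffle

    n = len(videos)
    n_train = round(fractions[0] * n)
    n_val = round(fractions[1] * n)
    groups = (
        videos[:n_train],
        videos[n_train:n_train + n_val],
        videos[n_train + n_val:],         # remainder goes to the test set
    )
    # Flatten each group of videos into its list of frame IDs.
    return tuple([f for v in group for f in frames_by_video[v]] for group in groups)


# Example: 20 hypothetical videos with 10 frames each.
frames = {f"video{i:02d}": [f"video{i:02d}_frame{j}" for j in range(10)] for i in range(20)}
train, val, test = split_by_video(frames)
print(len(train), len(val), len(test))  # 140 30 30
```

Because the split is made over videos, the frame-level proportions match the targets only when videos contribute similar numbers of frames.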

| Study | Risk of Bias: Patient Selection | Risk of Bias: Index Test | Risk of Bias: Reference Standard | Risk of Bias: Flow and Timing | Applicability Concerns: Patient Selection | Applicability Concerns: Index Test | Applicability Concerns: Reference Standard |
|---|---|---|---|---|---|---|---|
| Becq [14] | + | + | − | − | + | − | − |
| Buijs [16] | + | − | − | − | − | + | − |
| Figueiredo [17] | + | − | − | − | − | + | − |
| Mamonov [15] | + | + | − | − | − | − | − |
| Nadimi [19] | + | − | − | − | − | − | − |
| Yamada [20] | + | − | − | − | − | − | − |
| Saraiva [21] | + | − | − | − | − | − | − |
| Saraiva [22] | + | − | − | − | − | − | − |
| Herp [18] | + | − | − | − | − | + | − |

3.4. Artificial Intelligence for the Assessment of Bowel Cleansing Quality in CCE-2

3.4.1. Development of the Proposed AI Models for Computed Assessment of Bowel Cleansing
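Becq et al. [14] derived a computed cleansing score from the colour content of each frame, using the ratio of red over green (R/G) and of red over brown, R/(R + G). The Python sketch below illustrates the general idea behind a colour-ratio rule. It averages the per-pixel ratios over a frame and flags the frame as adequately cleansed when the mean R/G reaches a threshold. Three choices are illustrative assumptions, not the published score: the threshold of 1.6, the per-pixel averaging, and the premise that a redder frame (mucosa rather than residue) indicates better cleansing.

```python
def colour_ratios(pixels, eps=1e-6):
    """Return the mean R/G and mean R/(R + G) over a frame.

    `pixels` is an iterable of (R, G, B) tuples with values 0-255; the blue
    channel is not used by either ratio. `eps` avoids division by zero.
    """
    pixels = list(pixels)
    if not pixels:
        raise ValueError("frame has no pixels")
    sum_rg = sum(r / (g + eps) for r, g, _b in pixels)
    sum_rrg = sum(r / (r + g + eps) for r, g, _b in pixels)
    return sum_rg / len(pixels), sum_rrg / len(pixels)


def is_adequately_cleansed(pixels, rg_threshold=1.6):
    """Hypothetical rule: a frame counts as adequately cleansed when its
    mean R/G ratio is at or above `rg_threshold` (an assumed value)."""
    mean_rg, _ = colour_ratios(pixels)
    return mean_rg >= rg_threshold


mucosa_like = [(200, 100, 90)] * 4   # reddish pixels, mean R/G of about 2.0
residue_like = [(120, 110, 40)] * 4  # brownish pixels, mean R/G of about 1.09
print(is_adequately_cleansed(mucosa_like), is_adequately_cleansed(residue_like))  # True False
```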

3.4.2. Performance of the Proposed AI Models for Computed Assessment of Bowel Cleansing

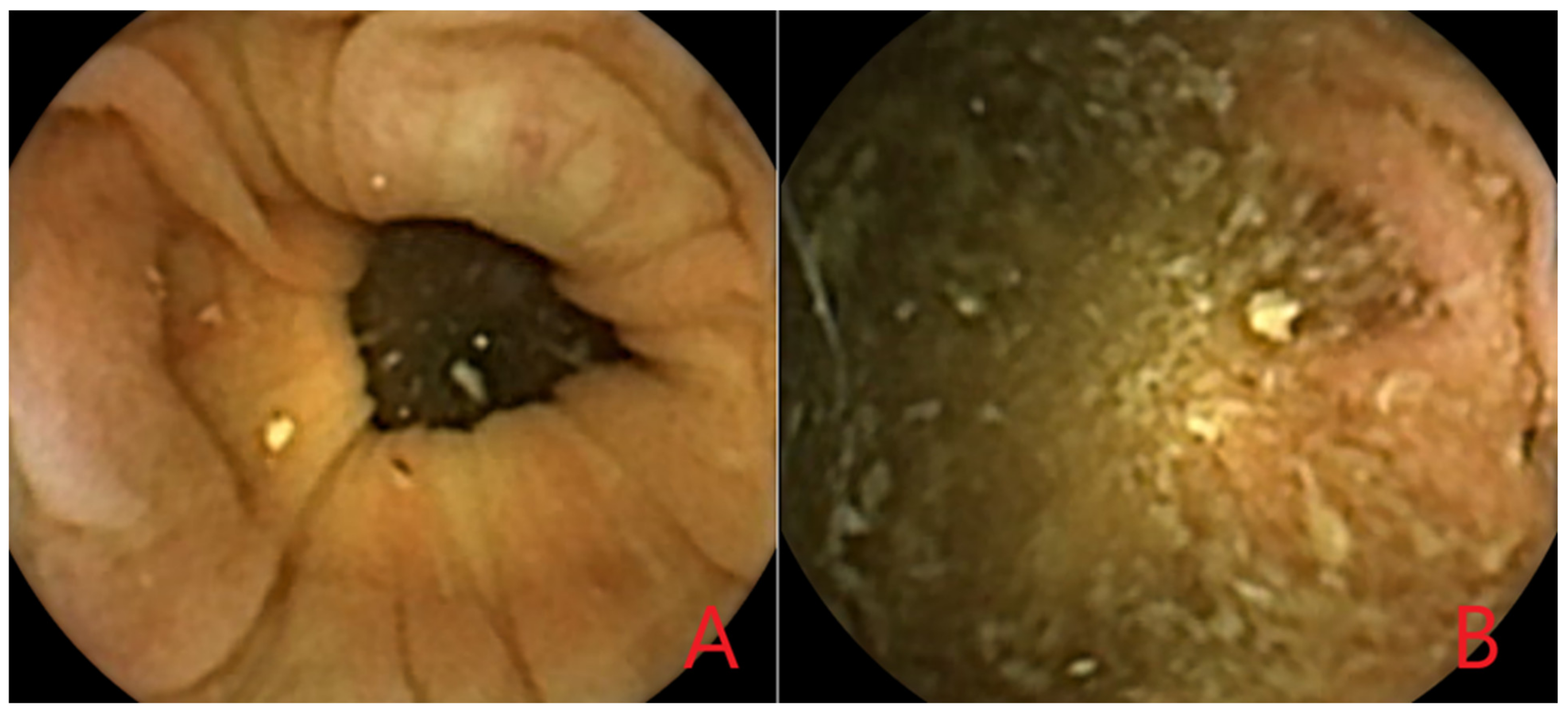

3.5. Artificial Intelligence for Polyp Detection in CCE-2

3.5.1. Development of the Proposed AI Models for Polyp Detection

3.5.2. Performance of the Proposed AI Models for Polyp Detection

3.6. Other Artificial Intelligence Applications for CCE-2

4. Discussion

Necessary Action Points to Reach Implementation of AI Technology for CCE in Daily Practice

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

References

1. Spada, C.; Hassan, C.; Galmiche, J.P.; Neuhaus, H.; Dumonceau, J.M.; Adler, S.; Epstein, O.; Gay, G.; Pennazio, M.; Rex, D.K.; et al. Colon capsule endoscopy: European Society of Gastrointestinal Endoscopy (ESGE) Guideline. Endoscopy 2012, 44, 527–536.

2. Spada, C.; Hassan, C.; Bellini, D.; Burling, D.; Cappello, G.; Carretero, C.; Dekker, E.; Eliakim, R.; de Haan, M.; Kaminski, M.F.; et al. Imaging alternatives to colonoscopy: CT colonography and colon capsule. European Society of Gastrointestinal Endoscopy (ESGE) and European Society of Gastrointestinal and Abdominal Radiology (ESGAR) Guideline—Update 2020. Eur. Radiol. 2021, 31, 2967–2982.

3. Spada, C.; Pasha, S.F.; Gross, S.A.; Leighton, J.A.; Schnoll-Sussman, F.; Correale, L.; Gonzalez Suarez, B.; Costamagna, G.; Hassan, C. Accuracy of First- and Second-Generation Colon Capsules in Endoscopic Detection of Colorectal Polyps: A Systematic Review and Meta-analysis. Clin. Gastroenterol. Hepatol. 2016, 14, 1533–1543.e8.

4. Kjolhede, T.; Olholm, A.M.; Kaalby, L.; Kidholm, K.; Qvist, N.; Baatrup, G. Diagnostic accuracy of capsule endoscopy compared with colonoscopy for polyp detection: Systematic review and meta-analyses. Endoscopy 2021, 53, 713–721.

5. Vuik, F.E.; Moen, S.; Nieuwenburg, S.A.; Schreuders, E.H.; Kuipers, E.J.; Spaander, M.C. Applicability of Colon Capsule Endoscopy as Pan-endoscopy: From bowel preparation, transit- and rating times to completion rate and patient acceptance. Endosc. Int. Open 2021, 9, E1852–E1859.

6. Buijs, M.M.; Kroijer, R.; Kobaek-Larsen, M.; Spada, C.; Fernandez-Urien, I.; Steele, R.J.; Baatrup, G. Intra and inter-observer agreement on polyp detection in colon capsule endoscopy evaluations. United Eur. Gastroenterol. J. 2018, 6, 1563–1568.

7. Soffer, S.; Klang, E.; Shimon, O.; Nachmias, N.; Eliakim, R.; Ben-Horin, S.; Kopylov, U.; Barash, Y. Deep learning for wireless capsule endoscopy: A systematic review and meta-analysis. Gastrointest. Endosc. 2020, 92, 831–839.e8.

8. Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510.

9. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

10. Hassan, C.; Spadaccini, M.; Iannone, A.; Maselli, R.; Jovani, M.; Chandrasekar, V.T.; Antonelli, G.; Yu, H.; Areia, M.; Dinis-Ribeiro, M.; et al. Performance of artificial intelligence in colonoscopy for adenoma and polyp detection: A systematic review and meta-analysis. Gastrointest. Endosc. 2021, 93, 77–85.e6.

11. Antonelli, G.; Badalamenti, M.; Hassan, C.; Repici, A. Impact of artificial intelligence on colorectal polyp detection. Best Pract. Res. Clin. Gastroenterol. 2021, 52–53, 101713.

12. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ 2009, 339, b2535.

13. Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

14. Becq, A.; Histace, A.; Camus, M.; Nion-Larmurier, I.; Abou Ali, E.; Pietri, O.; Romain, O.; Chaput, U.; Li, C.; Marteau, P.; et al. Development of a computed cleansing score to assess quality of bowel preparation in colon capsule endoscopy. Endosc. Int. Open 2018, 6, E844–E850.

15. Mamonov, A.V.; Figueiredo, I.N.; Figueiredo, P.N.; Tsai, Y.H. Automated polyp detection in colon capsule endoscopy. IEEE Trans. Med. Imaging 2014, 33, 1488–1502.

16. Buijs, M.M.; Ramezani, M.H.; Herp, J.; Kroijer, R.; Kobaek-Larsen, M.; Baatrup, G.; Nadimi, E.S. Assessment of bowel cleansing quality in colon capsule endoscopy using machine learning: A pilot study. Endosc. Int. Open 2018, 6, E1044–E1050.

17. Figueiredo, P.N.; Figueiredo, I.N.; Prasath, S.; Tsai, R. Automatic polyp detection in pillcam colon 2 capsule images and videos: Preliminary feasibility report. Diagn. Ther. Endosc. 2011, 2011, 182435.

18. Herp, J.; Deding, U.; Buijs, M.M.; Kroijer, R.; Baatrup, G.; Nadimi, E.S. Feature Point Tracking-Based Localization of Colon Capsule Endoscope. Diagnostics 2021, 11, 193.

19. Nadimi, E.S.; Buijs, M.M.; Herp, J.; Kroijer, R.; Kobaek-Larsen, M.; Nielsen, E.; Pedersen, C.D.; Blanes-Vidal, V.; Baatrup, G. Application of deep learning for autonomous detection and localization of colorectal polyps in wireless colon capsule endoscopy. Comput. Electr. Eng. 2020, 81, 106531.

20. Yamada, A.; Niikura, R.; Otani, K.; Aoki, T.; Koike, K. Automatic detection of colorectal neoplasia in wireless colon capsule endoscopic images using a deep convolutional neural network. Endoscopy 2021, 53, 832–836.

21. Saraiva, M.M.; Ferreira, J.P.S.; Cardoso, H.; Afonso, J.; Ribeiro, T.; Andrade, P.; Parente, M.P.L.; Jorge, R.N.; Macedo, G. Artificial intelligence and colon capsule endoscopy: Development of an automated diagnostic system of protruding lesions in colon capsule endoscopy. Technol. Coloproctol. 2021, 25, 1243–1248.

22. Mascarenhas Saraiva, M.; Ferreira, J.P.S.; Cardoso, H.; Afonso, J.; Ribeiro, T.; Andrade, P.; Parente, M.P.L.; Jorge, R.N.; Macedo, G. Artificial intelligence and colon capsule endoscopy: Automatic detection of blood in colon capsule endoscopy using a convolutional neural network. Endosc. Int. Open 2021, 9, E1264–E1268.

23. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

24. Adjei, P.E.; Lonseko, Z.M.; Du, W.; Zhang, H.; Rao, N. Examining the effect of synthetic data augmentation in polyp detection and segmentation. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1289–1302.

25. Ozyoruk, K.B.; Gokceler, G.I.; Bobrow, T.L.; Coskun, G.; Incetan, K.; Almalioglu, Y.; Mahmood, F.; Curto, E.; Perdigoto, L.; Oliveira, M.; et al. EndoSLAM dataset and an unsupervised monocular visual odometry and depth estimation approach for endoscopic videos. Med. Image Anal. 2021, 71, 102058.

| Database | Search Strategy |
|---|---|
| Embase.com (1971-) | ('capsule endoscopy'/exp OR 'capsule endoscope'/de OR ((capsule* OR videocapsule*) NEAR/3 (endoscop* OR colonoscop*)):ab,ti) AND ('large intestine'/exp OR 'large intestine disease'/exp OR 'large intestine tumor'/exp OR colonoscopy/exp OR (colon* OR colorectal* OR rectal OR rectum OR large-intestin*):ab,ti) AND ('artificial intelligence'/exp OR 'machine learning'/exp OR 'software'/exp OR 'algorithm'/exp OR automation/de OR 'computer analysis'/de OR 'computer assisted diagnosis'/de OR 'image processing'/de OR ((artificial* NEAR/3 intelligen*) OR (machine NEAR/3 learning) OR (compute* NEAR/3 (aided OR assist* OR technique*)) OR software* OR algorithm* OR automat* OR (image NEAR/3 (processing OR matching OR analy*)) OR support-vector* OR svm OR hybrid* OR neural-network* OR autonom* OR (unsupervis* NEAR/3 (learn* OR classif*))):ab,ti) NOT ([animals]/lim NOT [humans]/lim) |
| Medline ALL Ovid (1946-) | (Capsule Endoscopy/ OR Capsule Endoscopes/ OR ((capsule* OR videocapsule*) ADJ3 (endoscop* OR colonoscop*)).ab,ti.) AND (Intestine, Large/ OR Colorectal Neoplasms/ OR exp Colonoscopy/ OR (colon* OR colorectal* OR rectal OR rectum OR large-intestin*).ab,ti.) AND (exp Artificial Intelligence/ OR exp Machine Learning/ OR Software/ OR Algorithms/ OR Automation/ OR Diagnosis, Computer-Assisted/ OR Image Processing, Computer-Assisted/ OR ((artificial* ADJ3 intelligen*) OR (machine ADJ3 learning) OR (compute* ADJ3 (aided OR assist* OR technique*)) OR software* OR algorithm* OR automat* OR (image ADJ3 (processing OR matching OR analy*)) OR support-vector* OR svm OR hybrid* OR neural-network* OR autonom* OR (unsupervis* ADJ3 (learn* OR classif*))).ab,ti.) NOT (exp animals/ NOT humans/) |
| Web of Science Core Collection (1975-) | TS=((((capsule* OR videocapsule*) NEAR/2 (endoscop* OR colonoscop*))) AND ((colon* OR colorectal* OR rectal OR rectum OR large-intestin*)) AND (((artificial* NEAR/2 intelligen*) OR (machine NEAR/2 learning) OR (compute* NEAR/2 (aided OR assist* OR technique*)) OR software* OR algorithm* OR automat* OR (image NEAR/2 (processing OR matching OR analy*)) OR support-vector* OR svm OR hybrid* OR neural-network* OR autonom* OR (unsupervis* NEAR/2 (learn* OR classif*))))) |
| Cochrane CENTRAL Register of Trials (1992-) | (((capsule* OR videocapsule*) NEAR/3 (endoscop* OR colonoscop*)):ab,ti) AND ((colon* OR colorectal* OR rectal OR rectum OR large-intestin*):ab,ti) AND (((artificial* NEAR/3 intelligen*) OR (machine NEAR/3 learning) OR (compute* NEAR/3 (aided OR assist* OR technique*)) OR software* OR algorithm* OR automat* OR (image NEAR/3 (processing OR matching OR analy*)) OR support-vector* OR svm OR hybrid* OR neural-network* OR autonom* OR (unsupervis* NEAR/3 (learn* OR classif*))):ab,ti) |
| Google Scholar | "capsule\|videocapsule endoscopy\|colonoscopy" colon\|colonoscopy\|colorectal "artificial intelligence"\|"machine learning"\|"computer aided\|assisted"\|software\|algorithm\|automated\|"image processing\|matching\|analysis"\|"support vector"\|"neural network" |
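The search strings above share one structure. Three concept blocks (capsule endoscopy, large intestine, and artificial intelligence) are combined with AND, synonyms within a block are combined with OR, and proximity operators (NEAR/n or ADJn) link word stems. The Python sketch below rebuilds the capsule block and a shortened version of the overall AND/OR skeleton. The helper names are hypothetical. Field tags such as `:ab,ti` and controlled-vocabulary terms (for example Emtree `/exp`) are deliberately omitted, so the output is not a drop-in replacement for any of the database-specific strings.

```python
def or_block(terms):
    """Join the synonyms of one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def near(left, right, distance):
    """Proximity operator in the NEAR/n form used in the Embase, Web of Science
    and CENTRAL strings (Ovid Medline uses ADJn instead)."""
    return f"({left} NEAR/{distance} {right})"


def build_query(*concepts):
    """AND together one OR-block per concept."""
    return " AND ".join(or_block(terms) for terms in concepts)


capsule = near(or_block(["capsule*", "videocapsule*"]),
               or_block(["endoscop*", "colonoscop*"]), 3)
colon = ["colon*", "colorectal*", "rectal", "rectum", "large-intestin*"]
ai = [near("artificial*", "intelligen*", 3), near("machine", "learning", 3),
      "algorithm*", "neural-network*"]  # shortened AI block for illustration

query = build_query([capsule], colon, ai)
print(capsule)  # ((capsule* OR videocapsule*) NEAR/3 (endoscop* OR colonoscop*))
```

Keeping each concept as a separate list makes it easy to add a synonym to one block without disturbing the Boolean structure of the others.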

| Study | Type of AI | Frames/Videos Analyzed, n | Adequately Cleansed Frames/Videos, % | Sensitivity, % | Specificity, % | PPV, % | NPV, % | Level of Agreement of AI with Readers, % | Videos Misclassified by More than One Class, % |
|---|---|---|---|---|---|---|---|---|---|
| Becq * [14] | R/G ratio | 216 frames | 16.7% | 86.5% | 78.2% | 45.1% | 96.6% | - | - |
| | R/(R + G) ratio | 192 frames | 9.9% | 95.5% | 63.0% | 25.0% | 99.0% | - | - |
| Buijs ** [16] | Non-linear index model | 41 videos | Unknown | - | - | - | - | 32% | 32% |
| | SVM model | 41 videos | Unknown | - | - | - | - | 47% | 12% |
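The bowel-cleansing table above shows that high sensitivity and NPV can coexist with a low PPV. For the R/(R + G) ratio, sensitivity was 95.5% but PPV only 25.0%, with 9.9% of frames adequately cleansed. The Python sketch below computes the four measures from a 2 x 2 confusion matrix and, via Bayes' rule, expresses PPV in terms of sensitivity, specificity, and prevalence. It assumes that "adequately cleansed" is the positive class, which the table does not state explicitly. The confusion-matrix counts in the example are hypothetical.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Sensitivity, specificity, PPV and NPV from a 2 x 2 confusion matrix."""
    return {
        "sensitivity": tp / (tp + fn),  # share of truly positive frames flagged
        "specificity": tn / (tn + fp),  # share of truly negative frames not flagged
        "ppv": tp / (tp + fp),          # share of flagged frames that are truly positive
        "npv": tn / (tn + fn),          # share of unflagged frames that are truly negative
    }


def ppv_from_prevalence(sensitivity, specificity, prevalence):
    """PPV by Bayes' rule: expected true positives over all positive calls."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical counts for 192 frames, of which 19 are truly positive.
print(diagnostic_metrics(tp=18, fp=64, fn=1, tn=109))

# Summary values for the R/(R + G) ratio from the table.
print(round(ppv_from_prevalence(0.955, 0.630, 0.099), 2))  # 0.22
```

The Bayes estimate (about 22%) is of the same order as the reported 25.0%. It serves as a consistency check on the summary figures, not a reproduction of the study's analysis. When positive frames are rare, the false positives from the 37% of negative frames that are misclassified outnumber the true positives.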

| Study | Type of AI | Application | Frames Analyzed, n | Polyps or Colorectal Neoplasias, n | Frames Containing Polyps, n | Cut-off Value | Accuracy, % | Sensitivity per Frame, % | Specificity per Frame, % | Sensitivity per Polyp, % | Specificity per Polyp, % |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Figueiredo [17] | Protrusion-based algorithm | Polyp detection | 1700 | 10 | Unknown | - | - | - | - | - | - |
| Mamonov [15] | Binary classification after pre-selection | Polyp detection | 18,968 | 16 | 230 | 37 | - | 47.4% | 90.2% | 81.3% | 90.2% |
| | | | | | | 40 | - | - | - | 81.3% | 93.5% |
| Nadimi * [19] | CNN | Polyp detection | 1695 | Unknown | Unknown | - | 98.0% | 98.1% | 96.3% | - | - |
| Yamada ** [20] | CNN | Colorectal neoplasia detection | 4784 | 105 | Unknown | - | 83.9% | 79.0% | 87.0% | 96.2% | Unknown |
| Saraiva [21] | CNN | Protruding lesion detection | 728 | Unknown | 172 | - | 92.2% | 90.7% | 92.6% | - | - |
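The polyp-detection table above reports sensitivity both per frame and per polyp. For Mamonov et al. [15], per-polyp sensitivity (81.3%) is much higher than per-frame sensitivity (47.4%). Under the usual convention, a polyp counts as detected when at least one of the frames showing it is flagged, so a model can miss many individual frames and still find most polyps. The Python sketch below computes both measures, plus per-frame specificity, at a given cut-off. The scores, polyp IDs, and 0 to 1 score scale are hypothetical and unrelated to the cut-off values of 37 and 40 used by Mamonov et al.

```python
def evaluate_at_cutoff(frames, cutoff):
    """Per-frame sensitivity, per-polyp sensitivity and per-frame specificity.

    `frames` is a list of (score, polyp_id) pairs; polyp_id is None for frames
    without a polyp. A frame is flagged when score >= cutoff.
    """
    positives = [(s, pid) for s, pid in frames if pid is not None]
    negatives = [s for s, pid in frames if pid is None]

    frame_sensitivity = sum(s >= cutoff for s, _ in positives) / len(positives)

    # A polyp is detected if any one of its frames is flagged.
    all_polyps = {pid for _, pid in positives}
    detected = {pid for s, pid in positives if s >= cutoff}
    polyp_sensitivity = len(detected) / len(all_polyps)

    frame_specificity = sum(s < cutoff for s in negatives) / len(negatives)
    return frame_sensitivity, polyp_sensitivity, frame_specificity


frames = [
    (0.90, "A"), (0.20, "A"), (0.10, "A"),                   # polyp A: three frames
    (0.80, "B"), (0.30, "B"),                                # polyp B: two frames
    (0.20, "C"), (0.10, "C"),                                # polyp C: never flagged
    (0.60, None), (0.10, None), (0.20, None), (0.05, None),  # polyp-free frames
]
for cutoff in (0.5, 0.85):
    print(cutoff, evaluate_at_cutoff(frames, cutoff))
```

In this toy example, raising the cut-off from 0.5 to 0.85 lowers per-frame sensitivity from 2/7 to 1/7 and per-polyp sensitivity from 2/3 to 1/3, while per-frame specificity rises from 3/4 to 1. With a flag-if-above rule, a stricter cut-off can never increase sensitivity or decrease specificity.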

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moen, S.; Vuik, F.E.R.; Kuipers, E.J.; Spaander, M.C.W. Artificial Intelligence in Colon Capsule Endoscopy—A Systematic Review. Diagnostics 2022, 12, 1994. https://doi.org/10.3390/diagnostics12081994
