1. Introduction

The global COVID-19 pandemic has infected 347 million people worldwide and caused over 5.5 million deaths, as confirmed by the World Health Organization (WHO) as of 25 January 2022 [1]. The main strategy for managing this pandemic is to find, isolate, and care for patients as soon as possible. The ability to identify COVID-19 in a person quickly, easily, affordably, and reliably is critical to curbing the spread of the contagion. The standard method for detecting COVID-19 is the reverse transcription polymerase chain reaction (RT-PCR) test [2]. Small quantities of viral RNA are collected from a nasal swab, amplified, and evaluated during the RT-PCR test, with virus detection generally signaled by a fluorescent dye. Unfortunately, the RT-PCR procedure is time-consuming and manual, taking up to two days to complete. False-positive polymerase chain reaction (PCR) results have also been recorded in some studies [3,4]. Imaging-based techniques such as computed tomography (CT) imaging, chest X-ray (CXR) imaging [5,6,7,8], and ultrasound imaging [9] are examples of other methods under investigation. CT scanners are often troublesome to use for COVID-19 patients since the patients must often be moved to the CT room, the equipment must be thoroughly cleaned after each use, and there is a higher risk of radiation exposure [9].

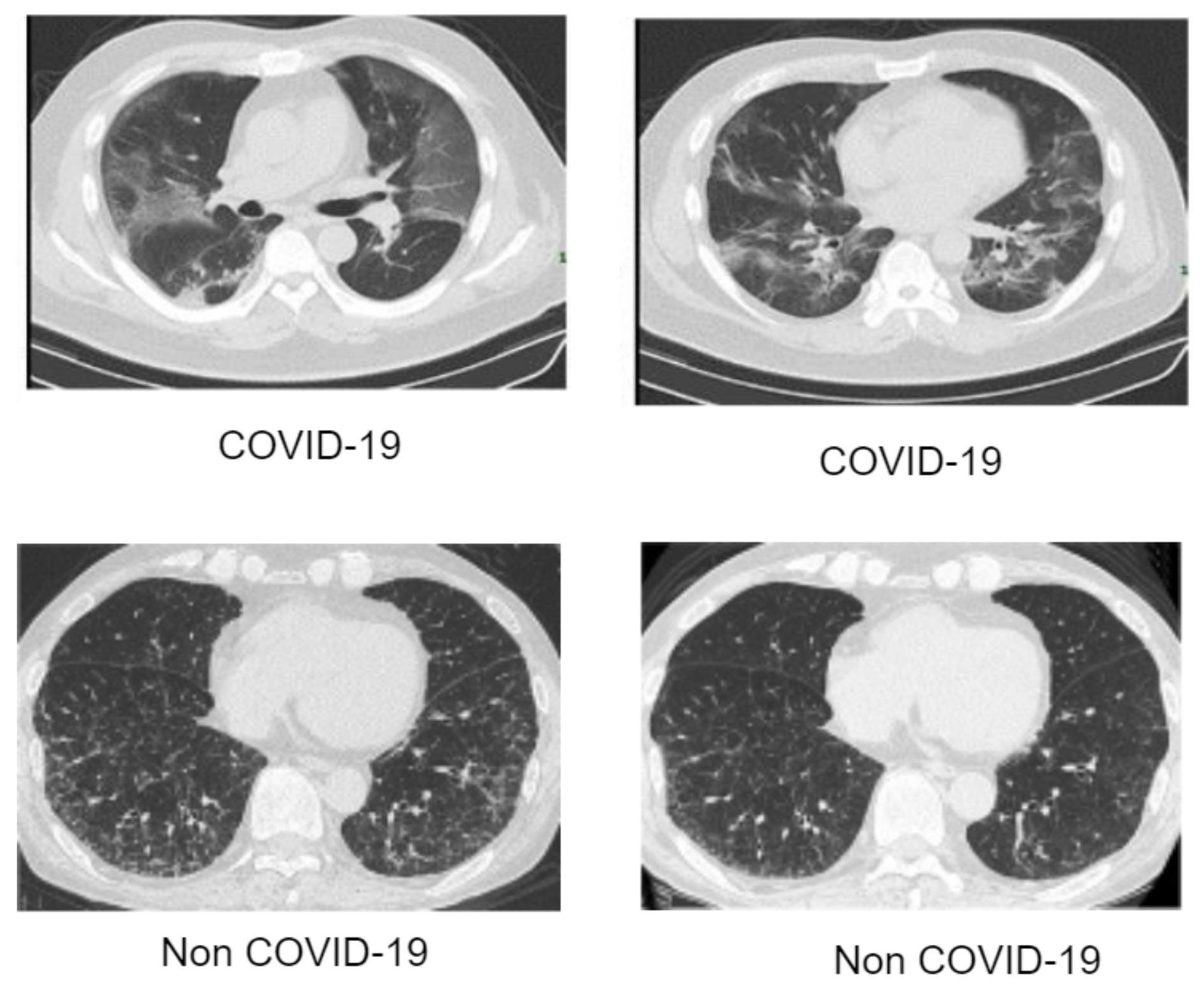

CT has been successfully used as a supportive method for evaluating COVID-19, although it is not approved as a primary diagnostic tool [6]. The most common CT findings are ground-glass opacities (GGO) in the early and progressive stages, air space consolidation during the peak stage, and bronchovascular thickening in the lesions together with traction bronchiectasis during the absorption stage. Machine learning algorithms have been reported to perform well in diagnosing COVID-19 from CXR and CT scans. The multilayer perceptron (MLP), a common type of artificial neural network (ANN), has shown promising capability in predicting COVID-19 cases with acceptable accuracy [10].

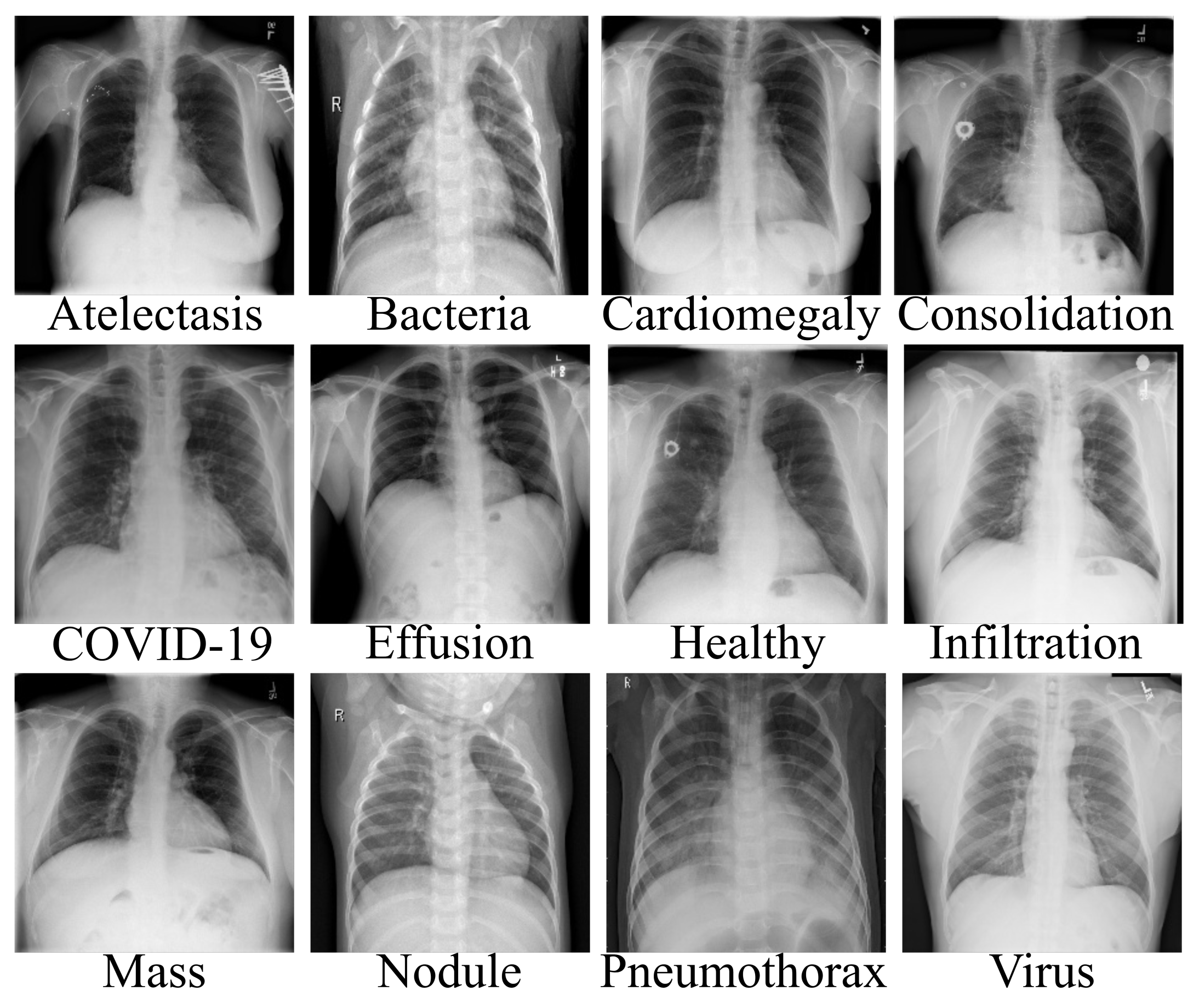

The application of deep learning (DL) frameworks to diagnose COVID-19 from CT images has shown promising results in several studies [6,11,12]. CT scans and RT-PCR tests are relatively expensive [13], and clinicians are compelled to restrict testing to vulnerable populations because of excessive demand. CXR imaging is a relatively low-cost way of detecting lung infections, and it can also be used to detect COVID-19 [14]. With both relatively small and large datasets, convolutional neural networks (CNNs) have obtained state-of-the-art results in medical imaging research [15,16,17,18]. Because of their large number of parameters, CNNs can easily overfit on a small dataset; as a result, generalization performance depends on the size of the labeled data. Small datasets pose the most difficult challenge in the medical imaging domain because of the restricted quantity and variety of samples [5,6,7].

A range of medical biomarkers and abnormalities have also been investigated as indicators of disease progression, and there are some indications that imaging data could supplement these models [19,20,21]. While these methodologies have been used to examine COVID-19 in recent research, some have been applied to multi-institutional chest X-ray image samples [22,23]. The relationship between ground-glass opacities and lung consolidation on CXR and disease severity and progression has been qualitatively characterized in recent research [24]. DL algorithms combined with wavelet multiresolution analysis have been used extensively in attack detection and ECG-based biometric identification [25,26].

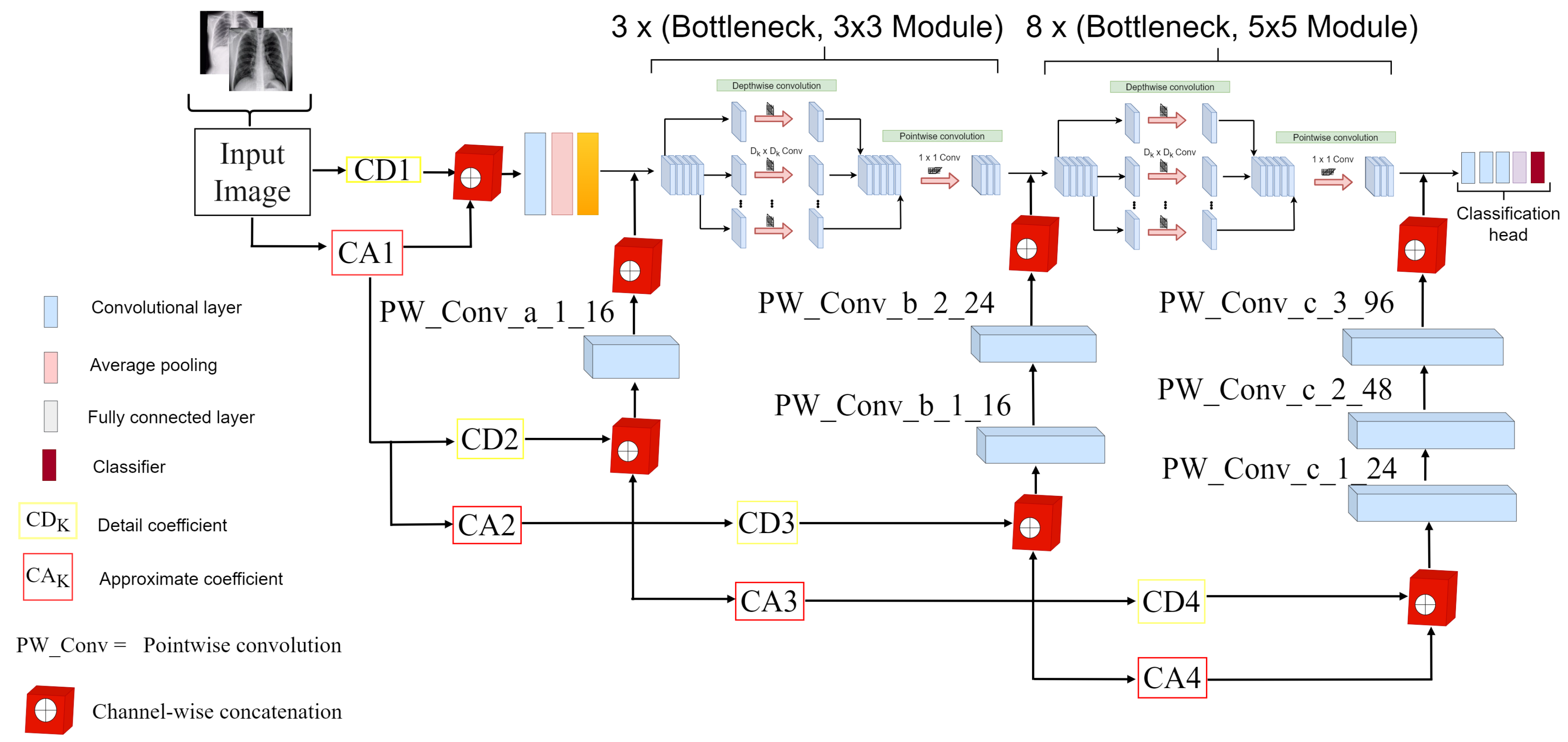

The aim of this research is to establish a depthwise separable convolution network with a wavelet multiresolution analysis module for COVID-19 screening from chest X-ray (CXR) and computed tomography (CT) images. For a novel medical emergency such as COVID-19, obtaining an adequately large and accessible medical image dataset for training deep learning (DL) algorithms is difficult because of the time and resources required to collect and label images.

Medical image mining is a time-consuming and costly procedure that requires the involvement of radiologists and researchers [6]. Furthermore, because the COVID-19 outbreak is recent, adequate CXR image data are difficult to come by. In addition, in AI-based COVID-19 screening systems for CT and CXR imaging, loss of spatial information remains a major concern, and in most cases it results from the downsampling operation. As a consequence, the AI-based system learns incomplete information from the data and misses the distinctive features needed for optimal classification.

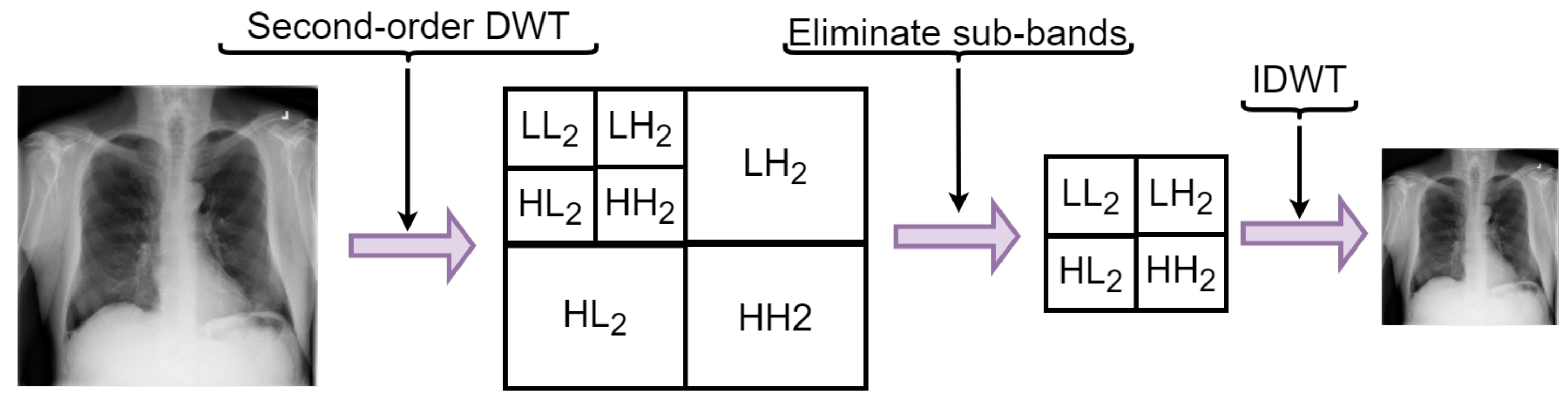

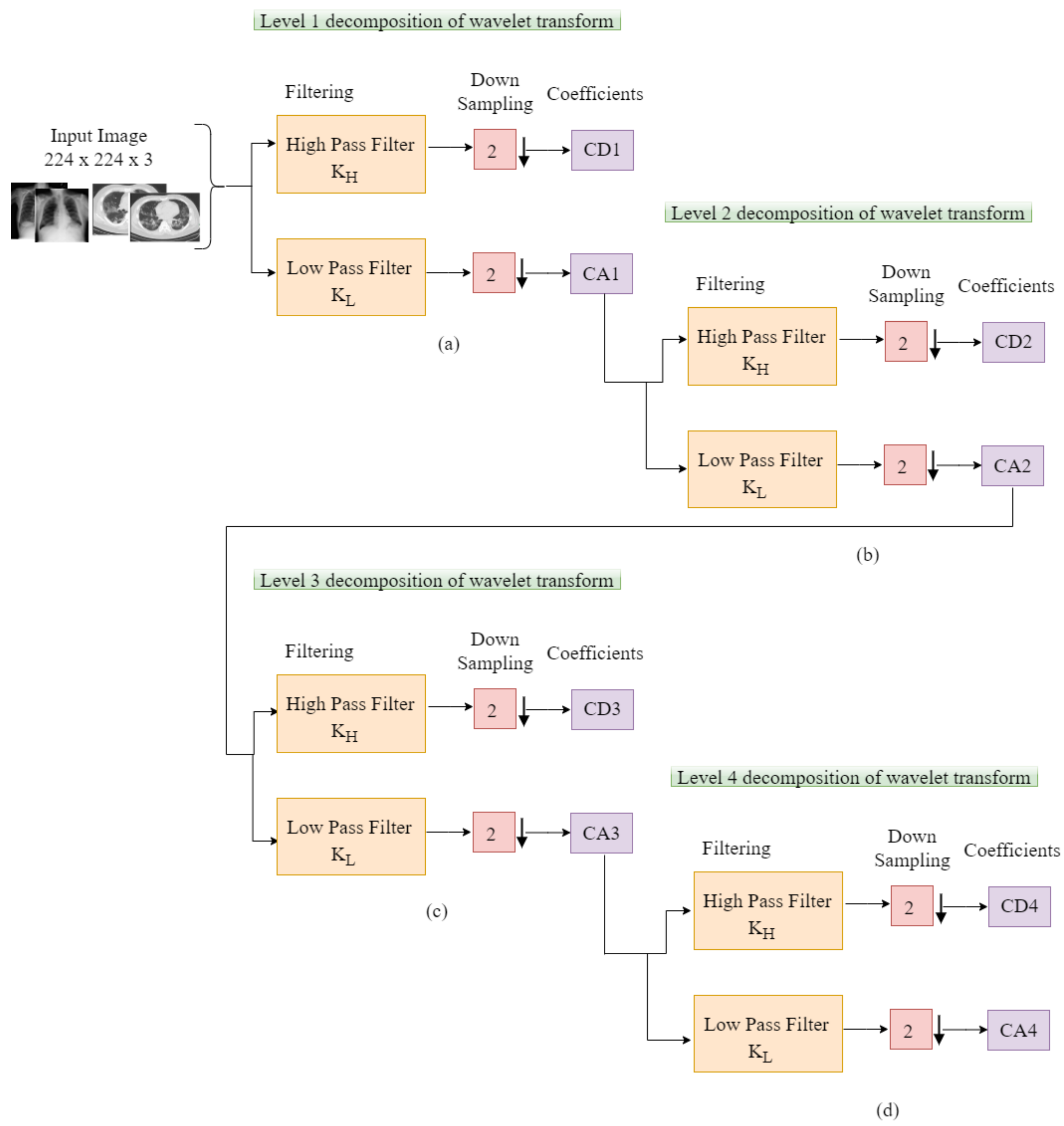

To alleviate this drawback, we propose a novel depthwise separable convolution network with a wavelet multiresolution analysis module that optimizes the downsampling operation without losing spatial details for COVID-19 classification. The contributions of this work are as follows: (1) We strengthen the robustness of the network's feature extraction by replacing the max-pooling layers with discrete wavelet transform (DWT) pooling, which employs scaling and wavelet functions to reduce dimension without losing spatial or positional details. (2) The depthwise separable connectivity framework ensures reusability of feature maps by directly connecting each layer to all subsequent layers, so that feature representations can be extracted from small datasets. This enables the model to learn the spatial details needed for effective classification. (3) This paper is the first work that introduces a depthwise separable convolution network with a wavelet multiresolution analysis module for feature extraction from radiograph images. The proposed model is an end-to-end learning technique for COVID-19 classification that achieves much higher diagnostic accuracy.
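To illustrate the idea behind DWT pooling in contribution (1), the following is a minimal sketch that assumes a one-level 2D Haar wavelet. The wavelet family, the function names `haar_dwt2` and `haar_idwt2`, and the list-of-lists representation are illustrative assumptions, not details taken from the proposed network. The approximation subband `ll` is a half-resolution map, and the three detail subbands retain the information that a max-pooling layer would discard, so the input can be rebuilt exactly.

```python
def haar_dwt2(x):
    """One-level 2D Haar DWT of a 2D list with even height and width.

    Returns four half-resolution subbands (ll, lh, hl, hh).
    The Haar wavelet is an assumption made for this sketch.
    """
    h, w = len(x), len(x[0])
    ll = [[0.0] * (w // 2) for _ in range(h // 2)]
    lh = [[0.0] * (w // 2) for _ in range(h // 2)]
    hl = [[0.0] * (w // 2) for _ in range(h // 2)]
    hh = [[0.0] * (w // 2) for _ in range(h // 2)]
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = x[i][j], x[i][j + 1]
            c, d = x[i + 1][j], x[i + 1][j + 1]
            r, s = i // 2, j // 2
            ll[r][s] = (a + b + c + d) / 2.0  # approximation (scaling function)
            lh[r][s] = (a - b + c - d) / 2.0  # detail across columns
            hl[r][s] = (a + b - c - d) / 2.0  # detail across rows
            hh[r][s] = (a - b - c + d) / 2.0  # diagonal detail
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Invert haar_dwt2, rebuilding the full-resolution 2D list."""
    h, w = len(ll), len(ll[0])
    x = [[0.0] * (2 * w) for _ in range(2 * h)]
    for r in range(h):
        for s in range(w):
            p, q, u, v = ll[r][s], lh[r][s], hl[r][s], hh[r][s]
            x[2 * r][2 * s] = (p + q + u + v) / 2.0
            x[2 * r][2 * s + 1] = (p - q + u - v) / 2.0
            x[2 * r + 1][2 * s] = (p + q - u - v) / 2.0
            x[2 * r + 1][2 * s + 1] = (p - q - u + v) / 2.0
    return x


# Hypothetical 4x4 feature map used only to exercise the sketch.
feature_map = [[float(4 * i + j) for j in range(4)] for i in range(4)]
ll, lh, hl, hh = haar_dwt2(feature_map)
restored = haar_idwt2(ll, lh, hl, hh)
```

A pooling layer built on this idea would pass `ll`, possibly together with the detail subbands as extra channels, to the next layer; which subbands the proposed network keeps is not stated in this section.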

The remaining sections of this article are organized as follows. In Section 2, we survey related work. In Section 3, we give a detailed explanation of the methodology, descriptive information about the dataset, and the implementation details. The experimental outcomes are presented in Section 4. In Section 5, we shed more light on the evaluation and validation of our model. In Section 6, we discuss the relevance of our proposed scheme. The conclusion is given in Section 7.

2. Related Works

COVID-19 investigations based on DL algorithms are on the rise in current research articles. In [27], a custom 18-layer ResNet architecture initialized with pretrained ImageNet weights was trained on a CXR dataset of 100 COVID-19 and 1431 pneumonia instances.

Lu et al. [19], who adopted a neural network approach for predicting intensive care unit (ICU) admission, concluded that biomarkers such as creatinine and C-reactive protein showed momentary variations in COVID-19 patients who were admitted to the ward and later transferred to the ICU, in contrast to patients who were not transferred. Li et al. [28] formulated a DL model and a risk rating algorithm for predicting ICU admission and in-hospital death. The ROC-AUC was used as the metric to evaluate model performance. The authors found that these biomarkers were the leading ICU indicators, besides age, cardiac troponin, and oxygen saturation, which were the main death indicators. Similarly, Hou et al. [29] formulated a machine learning (ML) algorithm to predict the leading ICU admission and mortality indicators, which are temperature, procalcitonin, age, lactate dehydrogenase, lymphocytes, pulse oxygen saturation, ferritin, and C-reactive protein.

Nneji et al. [30] suggested a scheme that combines the wavelet transform with a generative adversarial network (GAN) and a CNN in order to enhance low-quality radiograph images for COVID-19 identification. A custom residual CNN approach was suggested in [31,32] to accurately differentiate COVID-19 instances from healthy CXR images and other pneumonia-related ailments. COVIDX-Net is a compilation of DL frameworks that were trained on 25 verified COVID-19 instances [33]. Recent studies have focused on automatic coronavirus pneumonia investigation from CT scans with encouraging results [34,35,36].

A CNN algorithm based on ResNet-50 transfer learning was proposed in [37] to identify COVID-19 on a private dataset, with an overall accuracy of 94% against a regular standard CT slice. In [38], a weakly supervised approach was suggested in which segmentation masks are produced automatically and the CT image and mask are supplied to the algorithm for classification. The authors of that study claimed that their procedure obtained 95.9% AUC. A combination of DL algorithms was suggested in [39] to achieve lung field segmentation by hybridizing a 3D ResNet-50 transfer learning model with a U-Net preprocessor model in a single architecture, in order to distinguish COVID-19 from non-COVID-19 instances in a broad range of data from nonpublic datasets collected from six hospitals. The authors of that study claim that their algorithm obtained 87.1% sensitivity.

An ML approach was proposed in [40] to tackle the difficulty of automatically differentiating COVID-19 from other acquired pneumonia diseases. An infection-size-aware technique with a random forest classifier was proposed in [41], in which infection and lung areas are extracted from segmented scans and images are categorized by infection size, using 1071 healthy and 182 COVID-19 instances. The authors of that study claimed that the algorithm obtained 87.9% accuracy when trained on public and private datasets. A joint feature pyramid network-based attention module and ResNet-50 proposed in [42] obtained 86.4% accuracy and 90.3% sensitivity when tested on a private dataset of 24 healthy and 27 COVID-19 individual instances. A DL-inspired random forest model was proposed in [43] that focuses on extensive features to assess COVID-19 severity. The procedure achieved an overall accuracy of 87.5% on 176 instances.

In summary, most studies, including those that have used CXR and CT imaging, rely on an insufficient number of COVID-19 images from various sources with no standardized protocols. They appear to be simple applications of existing AI-based algorithms, leading to minimal AI innovation and clinical utility. The large differences in the data used across studies make comparison difficult, despite the fact that all models performed admirably [44]. In general, models for COVID-19 examination and investigation based on CXR or CT images perform well.

Notwithstanding, a few models utilize 10 COVID-19 test instances, and at least one model relies on external validation owing to data scarcity. As a consequence, they may or may not be applicable to other contexts. A system that uses less data and attains high accuracy with fewer training instances is required. This would allow uncommon data classes to be included in the testing set to a greater extent. The objective of this article is to formulate a scheme that improves on previous models and achieves state-of-the-art results.

5. Evaluation

In this section, we present an ablation study of the structural configuration of our proposed model with different depthwise bottleneck modules. We also selected a few pretrained models and compared them with our proposed network in terms of classification performance on the same dataset; for these models, we fine-tuned only the last layer to match the number of classes in our dataset. Another study was conducted to compare our proposed network with several state-of-the-art COVID-19 image-based screening methods.

To verify the effectiveness of our proposed model, we compared our WMR-DepthwiseNet with state-of-the-art models. For a fair comparison, we ran four state-of-the-art COVID-19 methods on the same dataset. The results indicate that our proposed model outperforms both these methods and the pretrained deep learning models. The metrics adopted to evaluate the diagnostic performance of our proposed WMR-DepthwiseNet are accuracy (ACC), precision (PRE), sensitivity (SEN), specificity (SPE), area under the curve (AUC), and F1-score.

In the definitions of these metrics, $TP$, $FP$, and $FN$ denote the numbers of true positives, false positives, and false negatives, respectively.
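As a concrete reference for the metrics listed above, the following sketch computes them from confusion-matrix counts using their standard textbook definitions. The function name `classification_metrics` and the example counts are hypothetical, and the true-negative count `tn` is an assumption added here because specificity and accuracy cannot be computed from true positives, false positives, and false negatives alone.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts.

    tp, fp, fn: true positives, false positives, false negatives.
    tn: true negatives (assumed here; needed for specificity and accuracy).
    """
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "ACC": accuracy,
        "PRE": precision,
        "SEN": sensitivity,
        "SPE": specificity,
        "F1": f1,
    }


# Hypothetical counts, chosen only to exercise the function.
metrics = classification_metrics(tp=90, fp=10, fn=10, tn=90)
```

AUC is omitted from this sketch because it depends on the ranking of predicted scores rather than on a single confusion matrix.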

5.1. Ablation Study

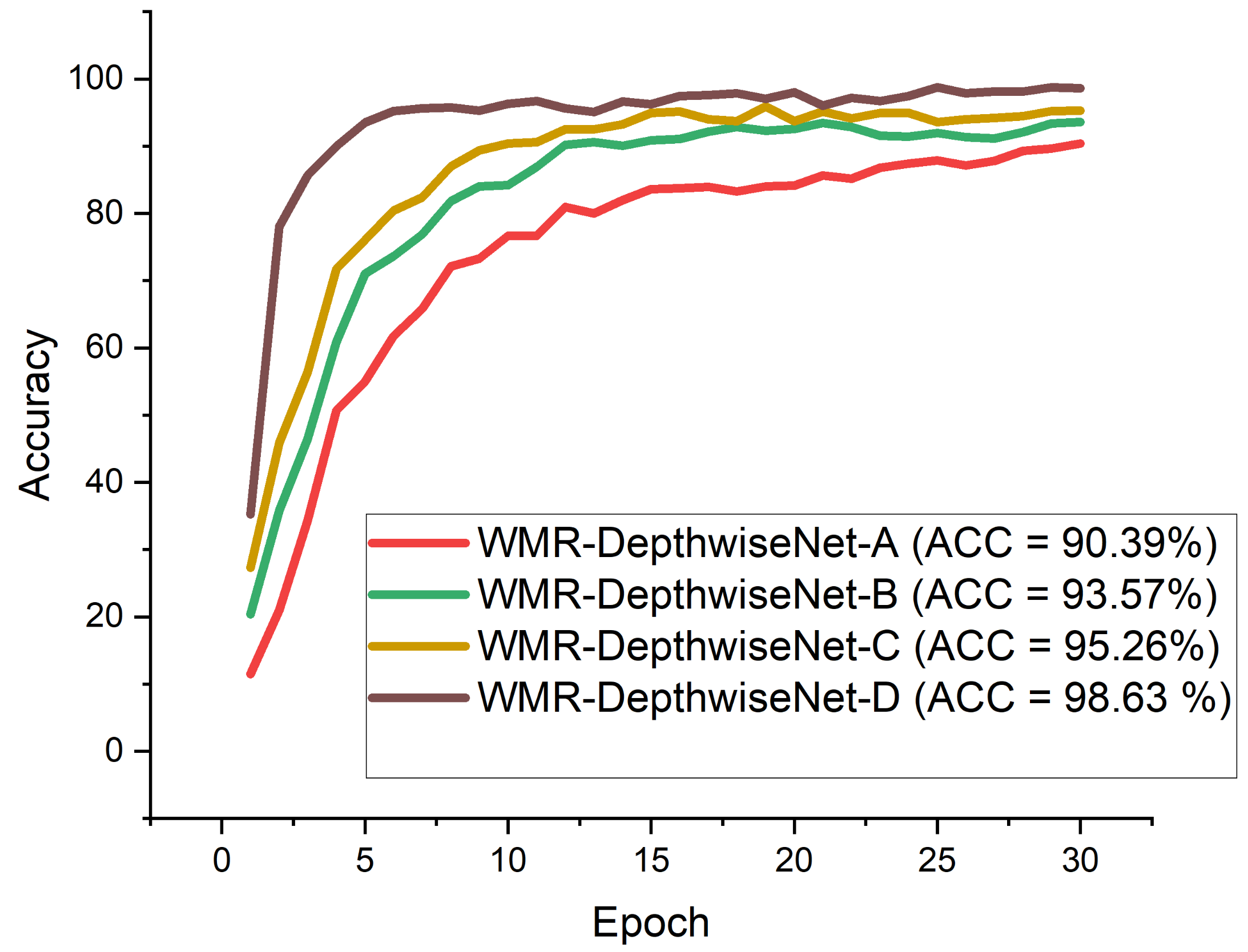

First, we performed an ablation study on different configurations of our proposed WMR-DepthwiseNet with different depthwise separable convolution bottlenecks. Specifically, we compared the following architectures.

WMR-DepthwiseNet-A: (bn 3 × 3) + (bn 5 × 5): This network employs bottleneck modules of depthwise separable convolution, bottleneck modules of depthwise separable convolution.

WMR-DepthwiseNet-B: (bn 3 × 3) + (bn 5 × 5): This network employs bottleneck modules of depthwise separable convolution, bottleneck modules of depthwise separable convolution.

WMR-DepthwiseNet-C: (bn 3 × 3) + (bn 5 × 5): This network employs bottleneck modules of depthwise separable convolution, bottleneck modules of depthwise separable convolution.

WMR-DepthwiseNet-D: (bn 3 × 3) + (bn 5 × 5): This network employs bottleneck modules of depthwise separable convolution, bottleneck modules of depthwise separable convolution.

The experimental results of the ablation study are summarized in Table 4 and Table 5. We first evaluated our proposed network on the same dataset to examine the effect of different depthwise separable bottleneck modules on the performance of the models. The number of depthwise separable convolution bottlenecks used varied across the four configurations.

The results indicate that WMR-DepthwiseNet-D achieved the highest performance across all the metrics. WMR-DepthwiseNet-A achieved the lowest scores, with 92.71% sensitivity on the CXR dataset (Table 4) and 91.46% sensitivity on the CT dataset (Table 5). However, an average increment of 4.38% was achieved on both the CXR and CT datasets with a different depthwise separable bottleneck configuration, as shown in Table 4 and Table 5. It is worth mentioning that WMR-DepthwiseNet-D preserves more spatial details and hence improves model performance. Overall, our combined depthwise separable convolution network with wavelet multiresolution analysis module, WMR-DepthwiseNet-D, achieves the best result across all evaluation metrics on both the CXR and CT datasets, as shown in Figure 8 and Figure 9. Moreover, the strategy of combining a depthwise separable convolution network with wavelet multiresolution analysis enhances the performance of the WMR-DepthwiseNet by a wide margin.

5.2. COVID-19 Classification Evaluation

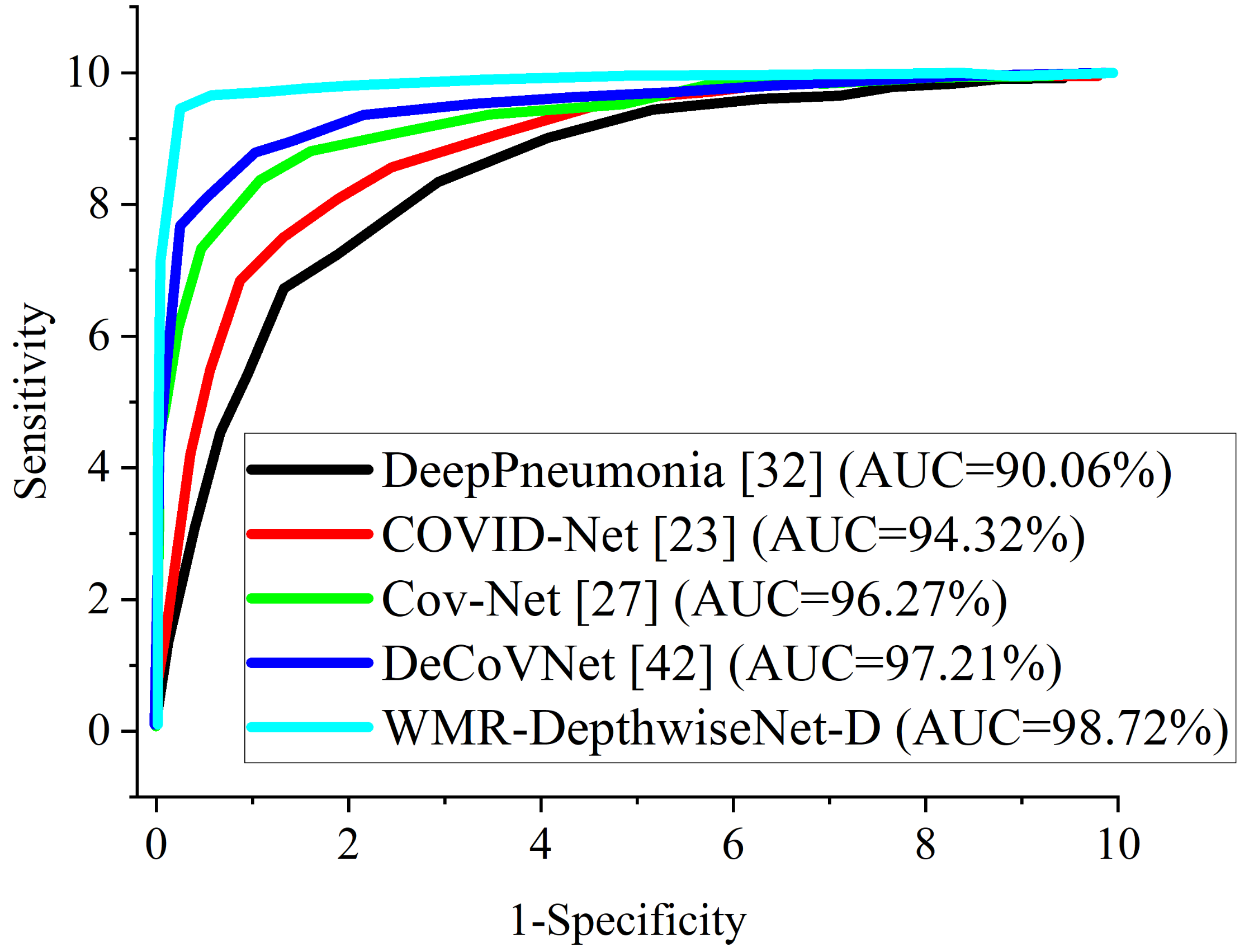

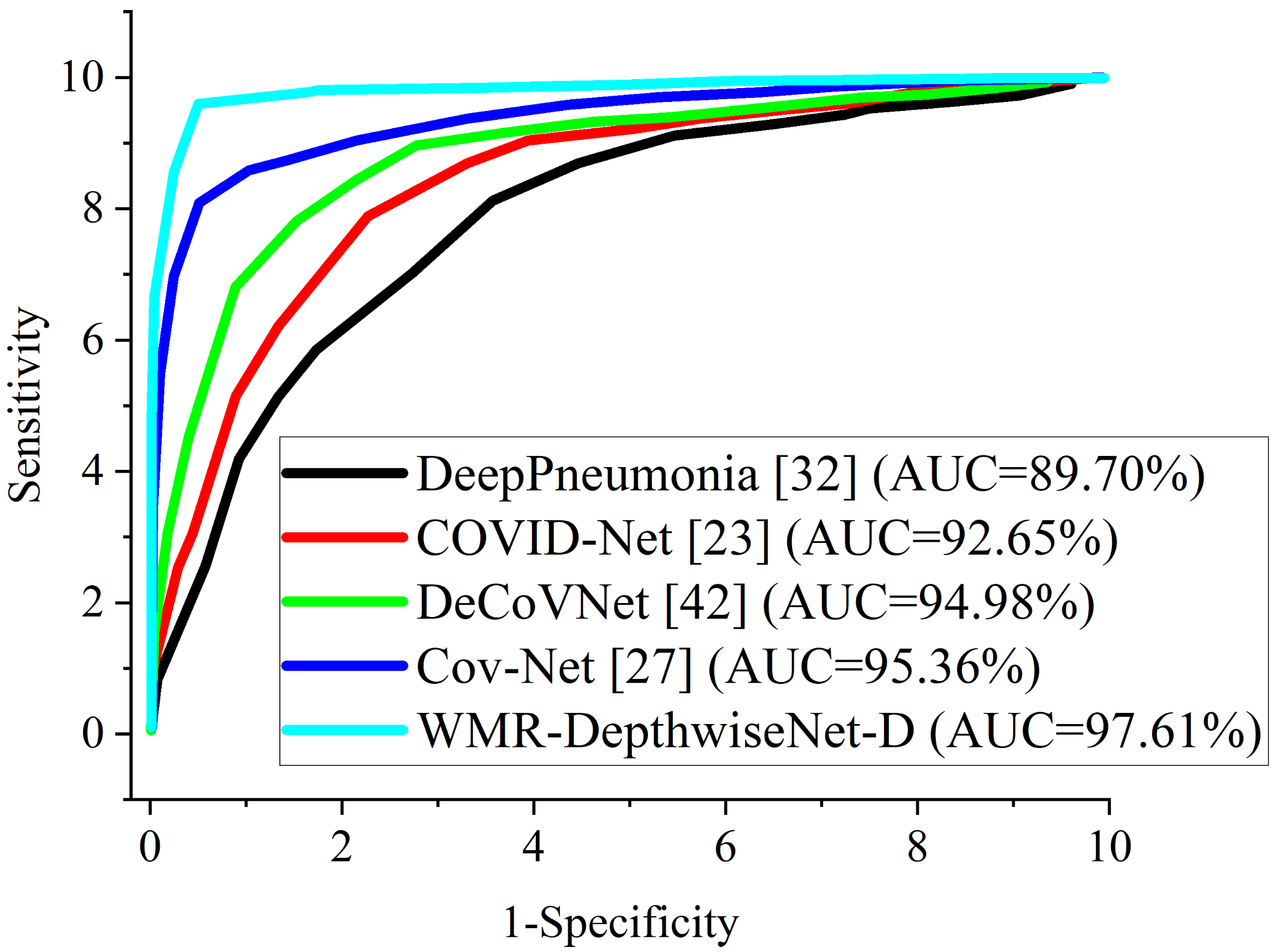

We compare the findings of our proposed model with well-known pretrained CNN models and state-of-the-art COVID-19 screening methods. According to Table 6 and Table 7, our proposed WMR-DepthwiseNet outperforms all the selected pretrained models, yielding state-of-the-art results on the same CXR and CT datasets. Our proposed approach yields 98.46% sensitivity, 97.99% specificity, 98.63% accuracy, 98.72% AUC, 98.87% precision, and 98.92% F1-score on the CXR dataset, as shown in Table 6. Table 7 shows that our model yields 97.78% sensitivity, 96.22% specificity, 96.83% accuracy, 97.61% AUC, 97.02% precision, and 97.37% F1-score on the CT dataset.

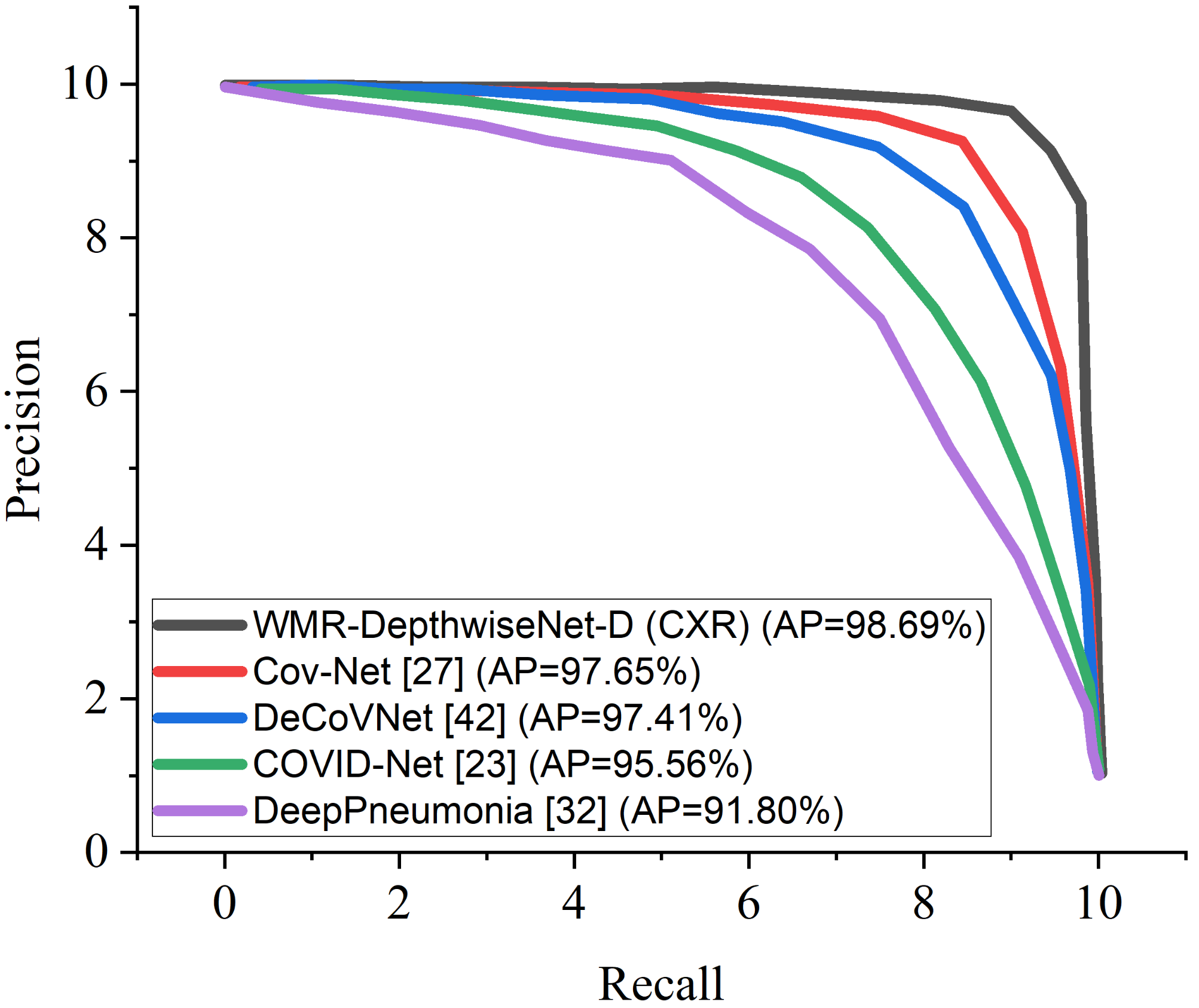

Figure 8 and Figure 9 illustrate the stability and convergence of the proposed WMR-DepthwiseNet-D in the test curves of the accuracy graphs for the CXR and CT datasets, respectively. The performance of our proposed model can also be seen in the ROC-AUC curves in Figure 10 and Figure 11 for the CXR and CT datasets, respectively. The precision–recall curve is another important performance measure we adopted in our comparison, as presented in Figure 12 and Figure 13. The results indicate that our proposed model outperforms all the other models across all the evaluation metrics. Owing to our depthwise separable convolution network with wavelet multiresolution analysis module, the model achieves 98.63% accuracy on the CXR dataset and 96.83% accuracy on the CT dataset. This result demonstrates the efficacy of integrating the wavelet multiresolution analysis module with the depthwise separable convolution network to modify the learning process. Our model achieves 98.72% AUC, which is significantly higher than the other approaches. These findings confirm the benefits of the wavelet multiresolution analysis module in our proposed model. Our proposed approach also achieves the highest specificity score of 97.99%, demonstrating the critical function of the WMR-DepthwiseNet.

Since several attempts at COVID-19 classification have been made, we now compare the findings of our proposed WMR-DepthwiseNet with previous state-of-the-art COVID-19 screening methods. Chen et al. [11] use a CNN-based U-Net++ to extract features from high-resolution CT exams for detecting COVID-19. The authors reported an accuracy of 95.2%. Shi et al. [41] use an infection-size-based random forest approach to obtain region-specific features from CT exams for COVID-19 classification, achieving 89.4% accuracy.

In separating COVID-19 from other viral pneumonia, the works of Xu et al. [52] and Wang et al. [33] are quite impressive, achieving overall accuracies of 86.7% and 82.9%, respectively. However, their biggest flaw was that they reported only a few indicators, which is insufficient to adequately represent the overall classification results. Song et al. [42] formulated a deep learning scheme called DeepPneumonia to distinguish COVID-19 instances using CT exams, achieving 86.1% accuracy. Using CT scans, Wang et al. [12] detected COVID-19 with a CNN, achieving 92.36% accuracy. Jin et al. [53] used a logistic regression scheme for detecting COVID-19. The authors claimed that their method achieved 96.5% accuracy. Jin et al. [54] formulated an AI-based scheme for detecting COVID-19, achieving 95.7% accuracy. Barstugan et al. [40] formulated an ML scheme for classifying COVID-19 using CT scans and achieved 90.7% accuracy.

Table 8 presents a summary of the aforementioned methods in comparison with our proposed scheme. Tabrizchi et al. [55] suggested an enhanced densely connected convolutional network (DenseNet) technique for three-class classification based on transfer learning (TL). The proposed model obtained an overall accuracy of 95.7%, sensitivity of 87.4%, and specificity of 95.7%. The authors claimed that the high performance of their TL model is due to the classifier's robustness in dealing with imbalanced data classes and small datasets. Tabrizchi et al. [56] conducted a review of previously published methods and used artificial intelligence (AI) image-based diagnosis methods to detect coronavirus infection with zero or near-zero false positives and false negatives. The goal of their research is to develop the most accurate COVID-19 detection method among AI approaches, including machine learning (ML), artificial neural networks (ANN), and ensemble learning (EL). The machine learning model with an SVM classifier surpasses the other models, with accuracy of 99.2%, precision of 98.2%, and recall of 100%.

In another experiment, we compared the formulated WMR-DepthwiseNet with four selected COVID-19 models on the same dataset for fairness. Cov-Net [37] and DeCoVNet [53] show quite impressive results, followed by COVID-Net [33]. However, our proposed model outperforms these COVID-19 models, including DeepPneumonia [42], which had previously yielded state-of-the-art results, as depicted in Table 9 and Table 10 using the same CXR and CT datasets. Although the complex lung structures and indistinct infection areas pose unusual challenges, our proposed framework still achieves accurate results, demonstrating its robustness.

The proposed WMR-DepthwiseNet has competitive classification efficiency for COVID-19 recognition. The underlying explanation may be that the proposed WMR-DepthwiseNet can better utilize the extracted features of high-level discriminative representation. It is worth noting that the depthwise separable convolution network with wavelet multiresolution analysis module can handle small-scale data while using less computing power than conventional deep-learning-based approaches. To further examine the performance of the proposed scheme under different hyperparameter settings, Table 11 presents a statistical report of the results obtained on the CXR and CT datasets for different learning rates, dropout rates, and optimizers. With the SGD optimizer, the model obtained its lowest accuracy of 88.18% on the CXR dataset. For the CT dataset, the model obtained its lowest accuracy of 89.33% with the RMSprop optimizer. With the Adam optimizer, the model obtained its best accuracy of 98.63% and 96.83% on the CXR and CT datasets, respectively.

5.3. Cross-Dataset Evaluation

Despite the outstanding results recorded by the proposed model, we also performed a cross-dataset evaluation to investigate whether there is any discrepancy in the results obtained. In this experiment, we study the influence of training a model on one data distribution and evaluating it on another. This situation is more realistic because training a model with images from all available sensors, environments, and persons is nearly impossible. We maintain the same data split of 50%, 25%, and 25% for training, validation, and test, respectively, as shown in Table 12 and Table 13. The COVID-CXR scans dataset [47] is used for training the system, while the COVID-CXR scans dataset [58] is used for testing. Similarly, the COVID-CT scans dataset [48] is used for training, while the COVID-CT scans dataset [59] is used for testing. We ensured that no images from the training dataset source are present in the test dataset source. We adopted the well-known datasets reported in [47,48,58,59] for the experiment because they have been used by various researchers in the literature.
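The 50%/25%/25% partitioning and the check that training and test sources share no images can be sketched as follows. This is only an illustration under stated assumptions: the helper names `split_indices` and `assert_disjoint_sources`, the fixed random seed, and the use of integer image identifiers are hypothetical and are not taken from the authors' pipeline.

```python
import random


def split_indices(n, fractions=(0.5, 0.25, 0.25), seed=0):
    """Shuffle n sample indices and split them into train/validation/test."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_train = int(n * fractions[0])
    n_val = int(n * fractions[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


def assert_disjoint_sources(train_ids, test_ids):
    """Raise ValueError if any image identifier appears in both sources."""
    overlap = set(train_ids) & set(test_ids)
    if overlap:
        raise ValueError(f"{len(overlap)} images appear in both sources")


# Hypothetical dataset of 100 images.
train, val, test = split_indices(100)
assert_disjoint_sources(train, test)
```

In a cross-dataset setting, `train_ids` and `test_ids` would come from two different dataset sources rather than from one shuffled pool.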

We emphasize that the training images and the test images are drawn from different distributions. Other test designs were also investigated, such as employing the COVID-CXR training partition as a test set and combining both COVID-CXR partitions into a bigger test set (see Table 12). We applied a similar approach to the COVID-CT dataset (see Table 13). We also examine the inverse scenario, in which the train and test sets from the COVID-CXR dataset [58] are used for training and the train set from the COVID-CXR dataset [47] is used for testing. Similarly, the train and test sets from the COVID-CT dataset [48] are used for training and the train set from the COVID-CT dataset [59] is used for testing.

When we compare the cross-dataset evaluation with the intra-dataset evaluation, the model performance does not change much, which indicates that there is no significant bias in the results reported for the intra-dataset setting. Table 14 presents the results of the cross-dataset evaluation of the proposed model for the CXR dataset, while Table 15 presents the results for the CT dataset. We believe that the slight changes in the performance of our model can be attributed to variation in data acquisition. Images from distinct datasets can be taken using different equipment and image sensors, causing relevant features in the images to change, yet the proposed model performed satisfactorily.

6. Discussion

Some remarks about the proposed depthwise separable convolution network with wavelet multiresolution analysis module are in order. Manual detection of COVID-19 by an expert using CXR and CT can have a high sensitivity but a low specificity of 25%. This inadequate specificity leads to false positive predictions, which in turn lead to ineffective therapy and wasted money. Our proposed WMR-DepthwiseNet has a high specificity of 97.99% on the CXR dataset and 96.22% on the CT dataset, which can help expert radiologists reduce the number of false positive instances reported.

More importantly, the reported Receiver Operating Characteristic (ROC) results can help expert radiologists achieve a trade-off between specificity and sensitivity, as illustrated in Figure 10 and Figure 11. The ROC curve plots the true positive rate against the false positive rate, so a better model attains a higher true positive rate at a lower false positive rate. From the ROC curves, it is evident that the formulated model outperforms the other algorithms, with an AUC of 98.72% on the CXR dataset and 97.61% on the CT dataset.
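For readers less familiar with how the area under the ROC curve summarizes this trade-off, the sketch below computes AUC from its rank interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The function name `roc_auc` and the example labels and scores are hypothetical; this is not the evaluation code used in the study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: iterable of 0 (negative) or 1 (positive).
    scores: predicted scores, higher meaning more likely positive.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical labels and scores, for illustration only.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

The pairwise form is quadratic in the number of samples, which is fine for illustration; sorting-based implementations are preferable for large test sets.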

The precision–recall curve also shows that our proposed WMR-DepthwiseNet outperforms the other models, with an average precision of 98.69% on the CXR dataset and 97.02% on the CT dataset. The precision–recall graph demonstrates the trade-off between precision and sensitivity. As shown in Figure 12 and Figure 13, the model performs better than the other state-of-the-art COVID-19 models, which means that our model attains higher precision together with higher sensitivity.

Furthermore, some comments on the computational cost and model complexity of WMR-DepthwiseNet are necessary. We combined a depthwise separable convolution network with a wavelet multiresolution analysis module for feature extraction. We adopted wavelet pooling instead of the usual max-pooling operator for downsampling, which reduced model complexity and computation time. Another notable feature of our WMR-DepthwiseNet is its capacity to preserve high-level features without loss of spatial details. In terms of computing cost, the formulated algorithm was trained on an NVIDIA GTX 1080 and implemented in the Keras framework. In comparison with earlier state-of-the-art models, the complexity of the proposed scheme is much reduced, with fewer parameters, as a result of the wavelet pooling strategy adopted. In all the assessment metrics, the proposed WMR-DepthwiseNet outperforms its counterparts, as depicted in Table 8. Our proposed strategy consistently produces better performance in terms of SEN, SPE, ACC, AUC, PRE, and F1-score. The explanation for this is that our proposed WMR-DepthwiseNet learns high-level discriminative details. Furthermore, the WMR-DepthwiseNet outperforms state-of-the-art approaches with better classification results.
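As a general illustration of why depthwise separable convolutions are lightweight, the sketch below compares the weight count of a standard convolution with that of a depthwise separable one for a single layer, ignoring biases. The layer sizes (a 3 × 3 kernel, 64 input channels, 128 output channels) and the function names are hypothetical and are not taken from the WMR-DepthwiseNet configuration.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution (no bias)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution (no bias).

    Depthwise step: one kernel x kernel filter per input channel.
    Pointwise step: a 1 x 1 convolution mixing c_in channels into c_out.
    """
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer sizes, for illustration only.
standard = standard_conv_params(3, 64, 128)
separable = depthwise_separable_params(3, 64, 128)
ratio = separable / standard  # equals 1/c_out + 1/kernel**2
```

For these sizes the separable layer needs roughly 12% of the weights of the standard layer, and the saving grows with the kernel size and the number of output channels.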