Mathematical Analysis and Motion Capture System Utilization Method for Standardization Evaluation of Tracking Objectivity of 6-DOF Arm Structure for Rehabilitation Training Exercise Therapy Robot

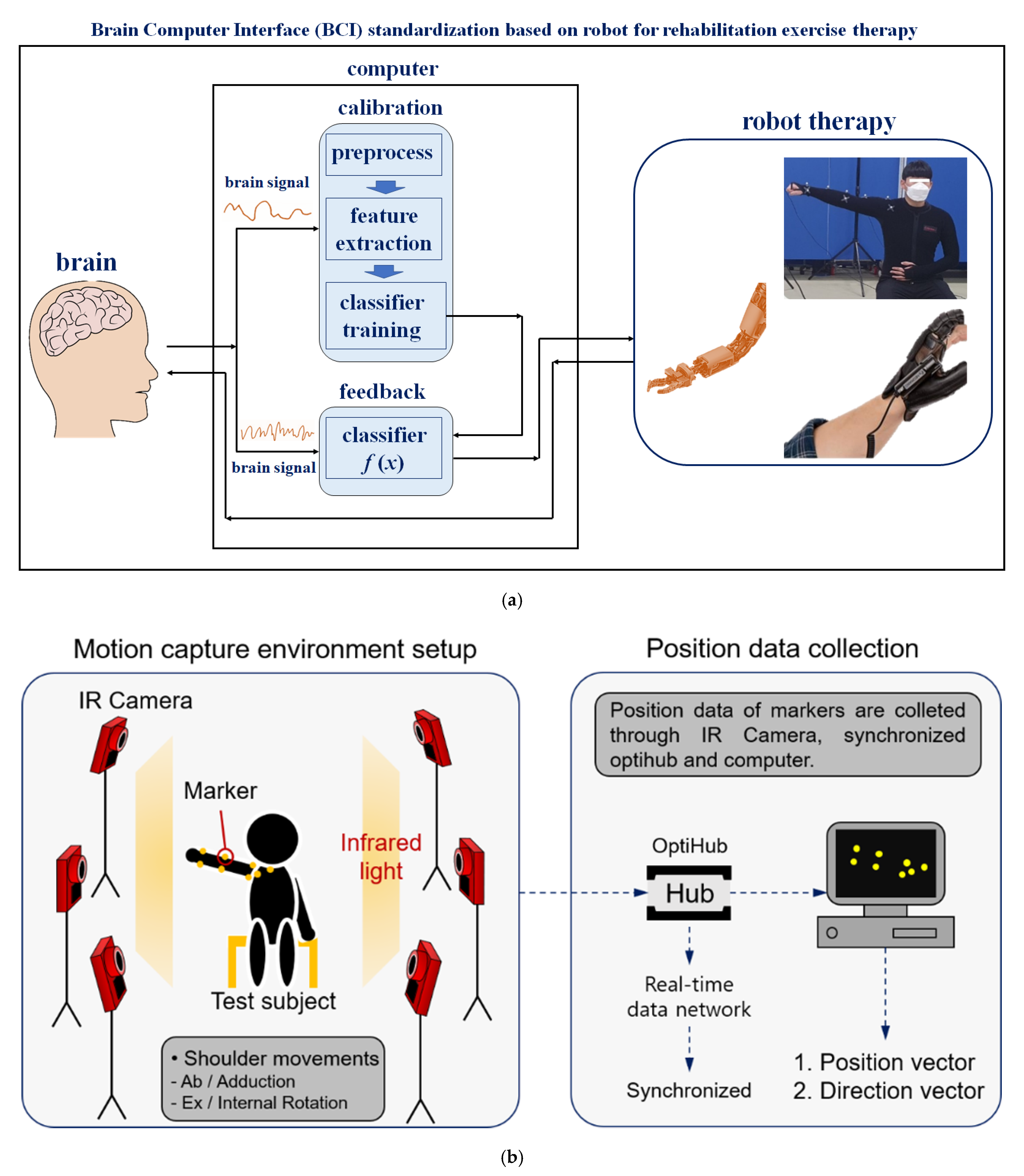

Abstract

1. Introduction

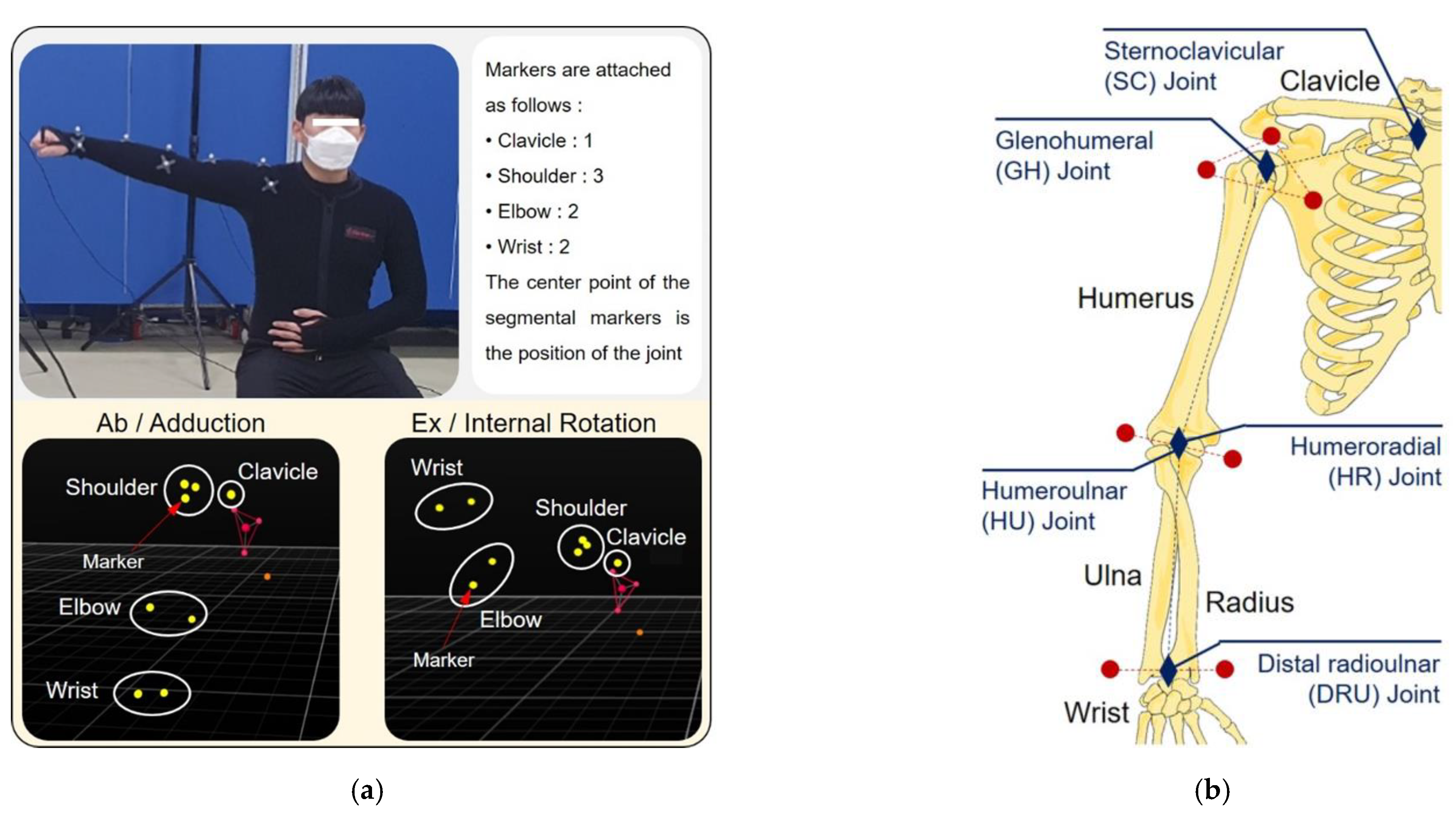

2. Analysis of Motion Capture

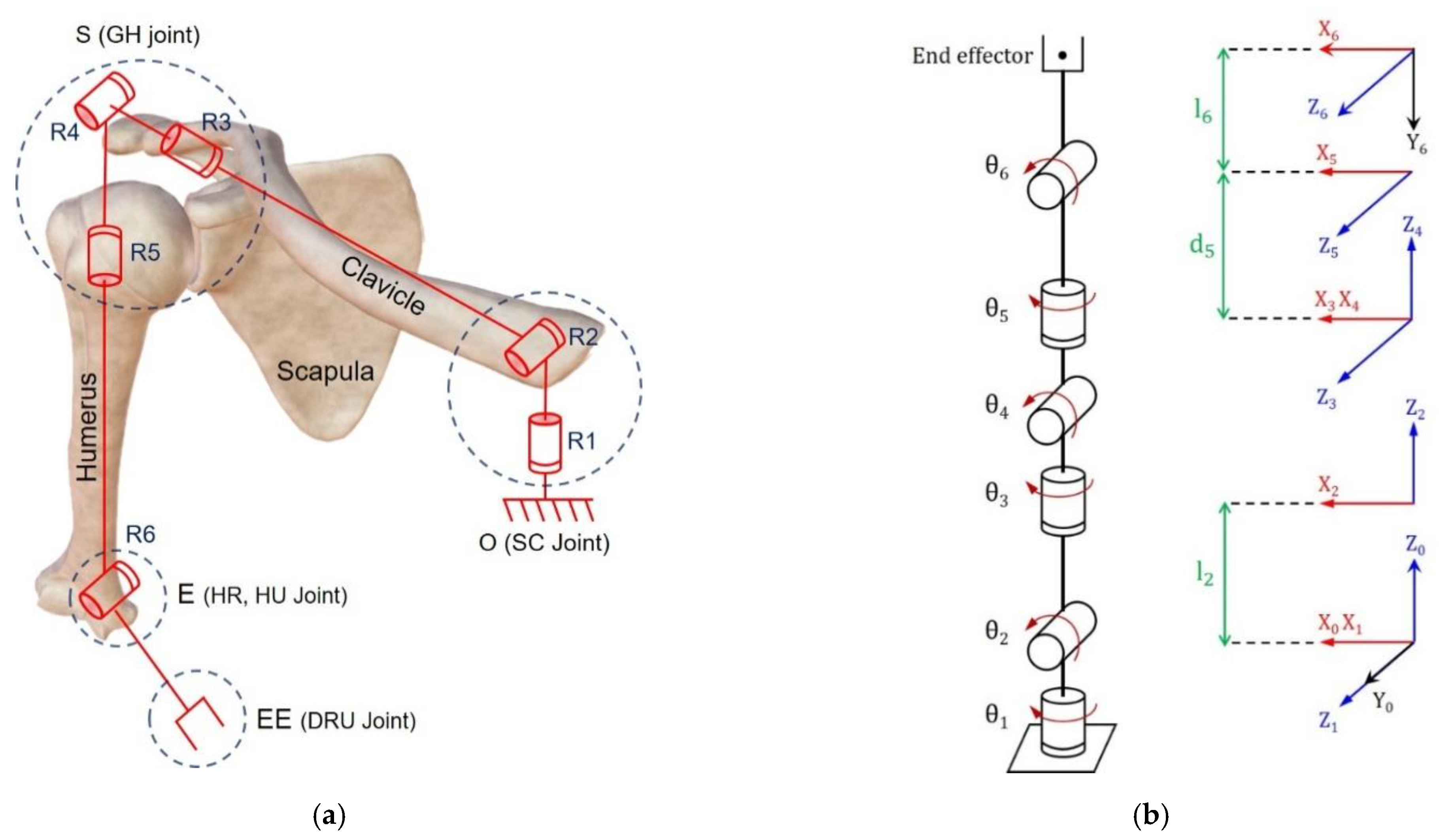

3. Mechanism and Mathematical Analysis

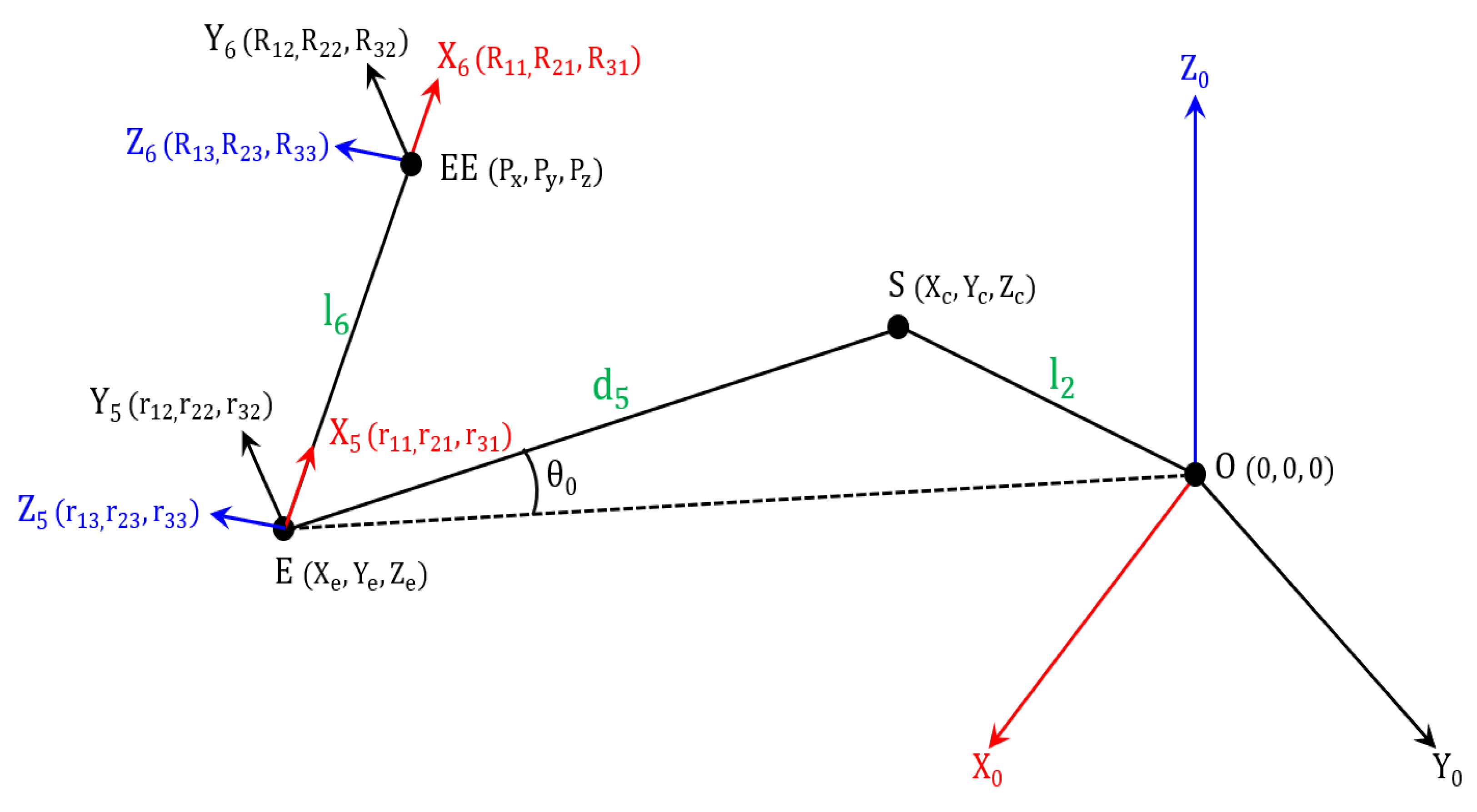

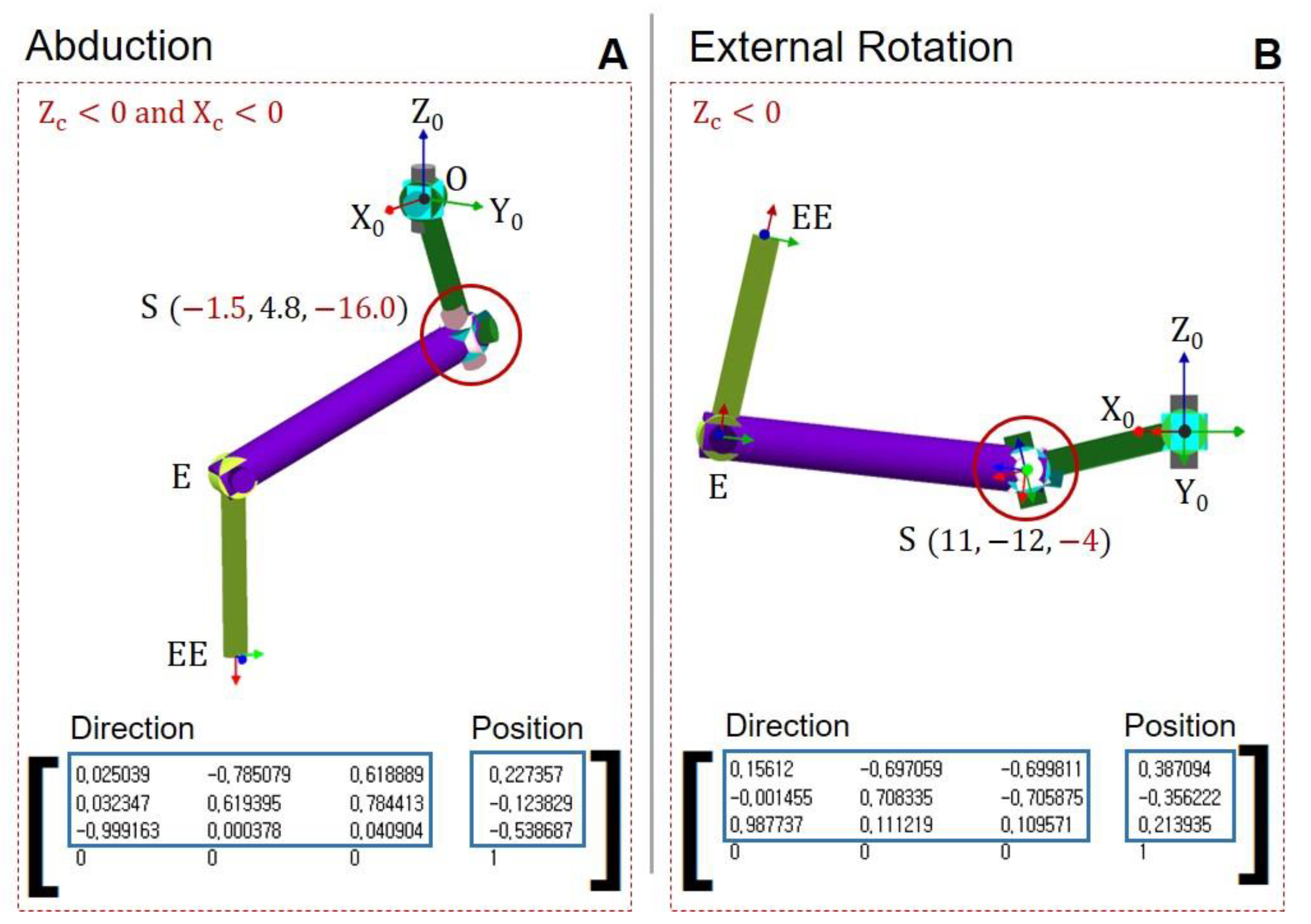

3.1. Forward Kinematics

3.2. Inverse Kinematics

3.2.1. Position Vector Analysis

3.2.2. Joint Angle Analysis

4. Experiment Results and Discussion

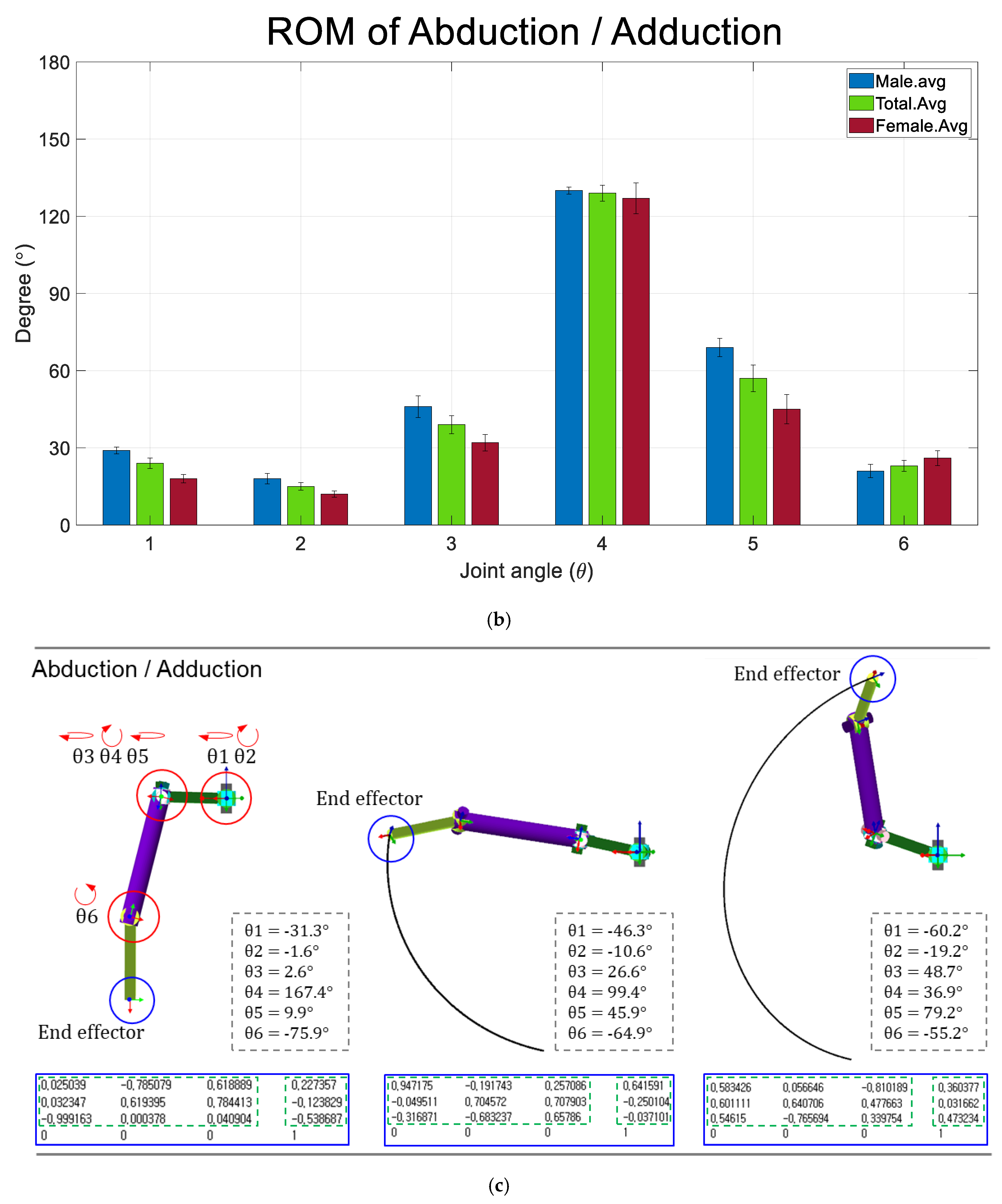

4.1. Abduction and Adduction

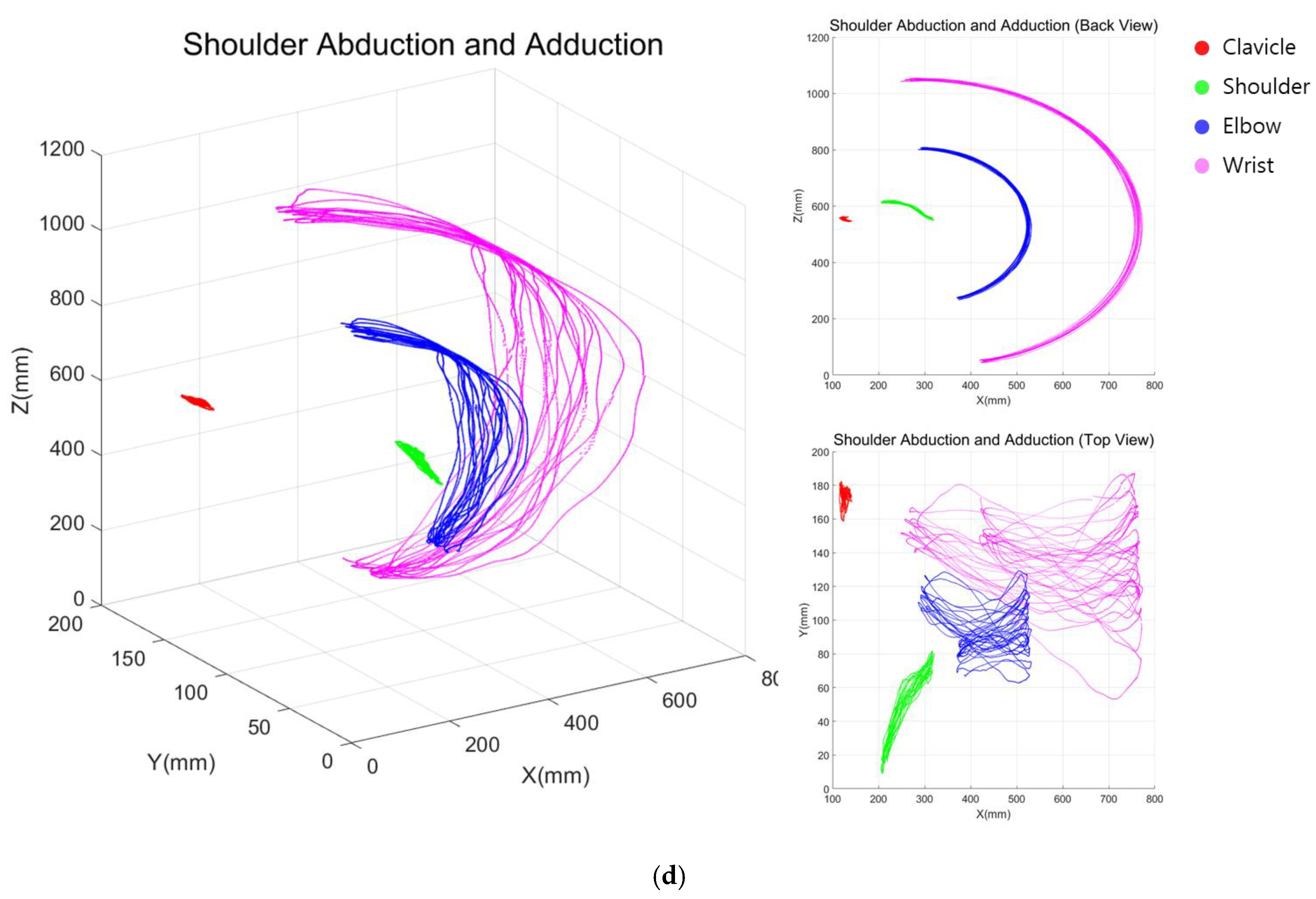

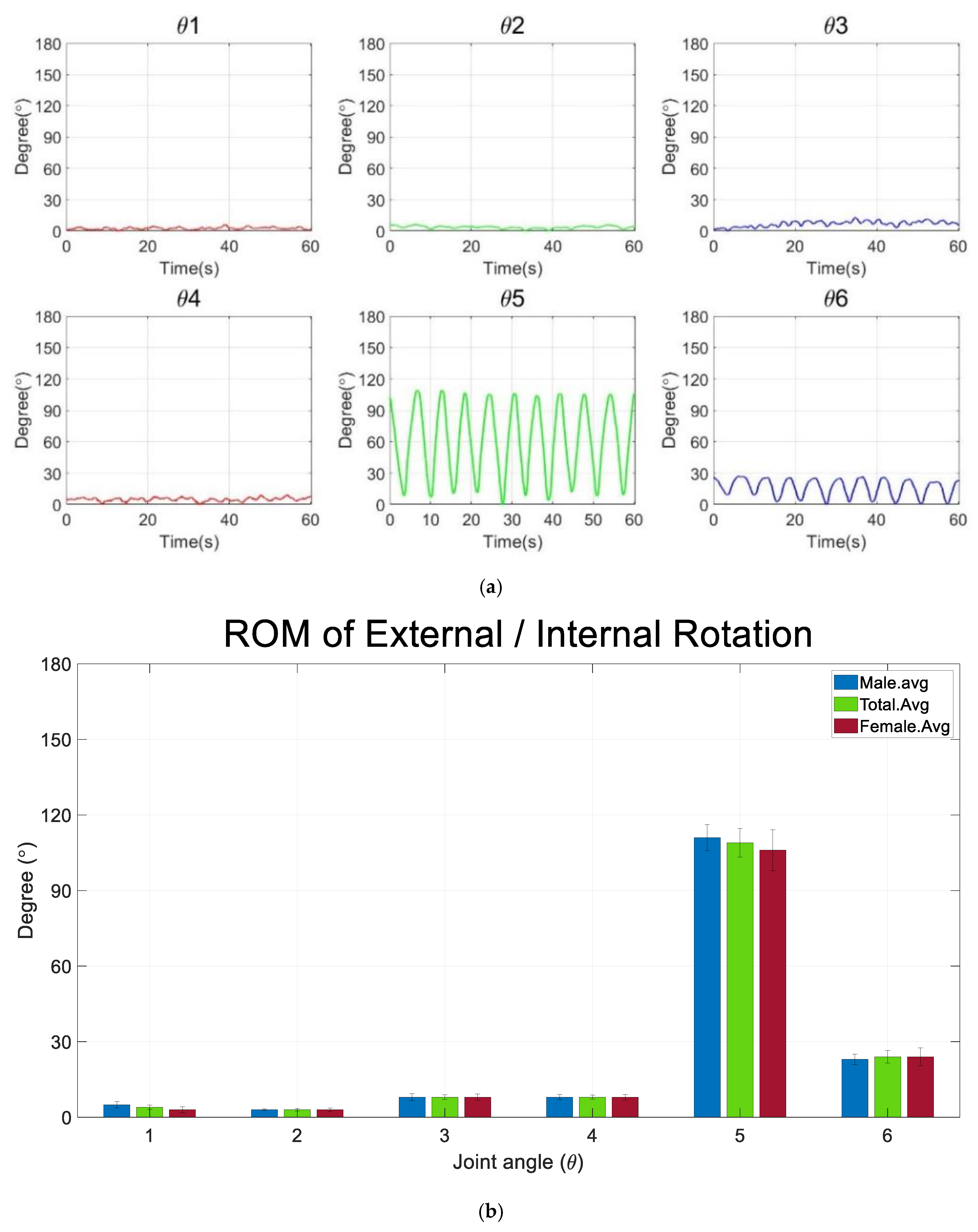

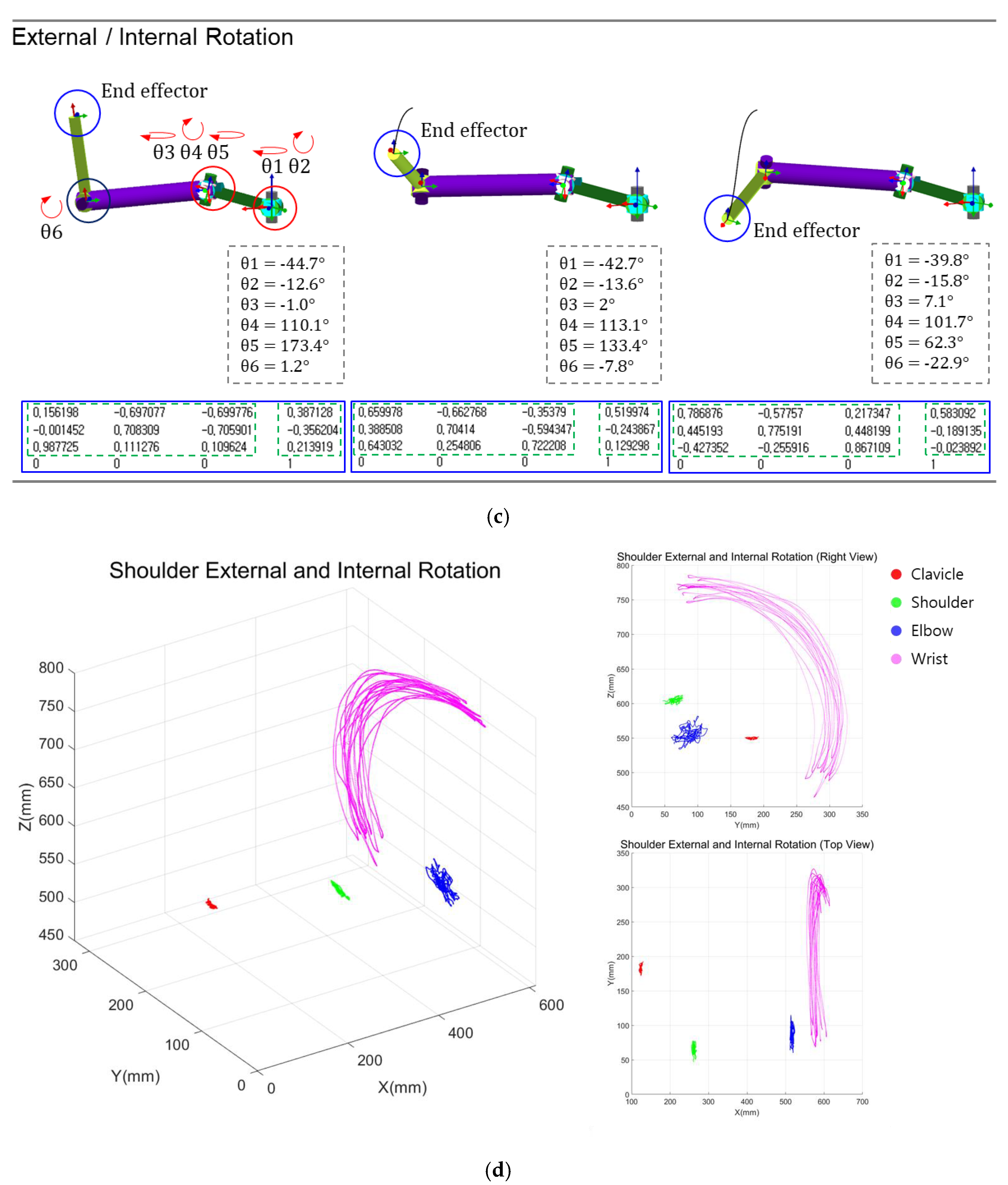

4.2. External and Internal Rotation

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cho, C.H.; Bae, K.C.; Kim, D.H. Treatment strategy for frozen shoulder. Clin. Orthop. Surg. 2019, 11, 249–257.

- Cho, K.H.; Song, W.K. Robot-assisted reach training for improving upper extremity function of chronic stroke. Tohoku J. Exp. Med. 2015, 237, 149–155.

- Kang, B.; Lee, H.; In, H.; Jeong, U.; Chung, J.; Cho, K.J. Development of a polymer-based tendon-driven wearable robotic hand. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA) 2016, Stockholm, Sweden, 16–21 May 2016.

- Woo, H.; Lee, J.; Kong, K. Gait assist method by wearable robot for incomplete paraplegic patients. J. Korea Robot. Soc. 2017, 12, 144–151.

- Lee, K.S.; Park, J.H.; Beom, J.; Park, H.S. Design and evaluation of passive shoulder joint tracking module for upper-limb rehabilitation robots. Front. Neurorobot. 2018, 12, 1–14.

- Cho, K.H.; Song, W.K. Robot-assisted reach training with an active assistant protocol for long term upper extremity impairment poststroke: A randomized controlled trial. Arch. Phys. Med. Rehabil. 2019, 100, 213–219.

- Yang, Q.; Pan, X.; Guo, Y.; Qu, H. Design of rehabilitation medical product system for elderly apartment based on intelligent endowment. ASP Trans. Internet Things 2022, 2, 1–9.

- Wolpaw, J.R. Brain-computer interface technology: A review of the first international meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173.

- Yang, H.E.; Kyeong, S.; Lee, S.H.; Lee, W.J.; Ha, S.W.; Kim, S.M. Structural and functional improvements due to robot-assisted gait training in the stroke-injured brain. Neurosci. Lett. 2017, 637, 114–119.

- Mehrholz, J.; Thomas, S.; Kugler, J.; Pohl, M.; Elsner, B. Electromechanical-assisted training for walking after stroke. Cochrane Database Syst. Rev. 2020, 10, CD006185.

- Carpinella, I.; Lencioni, T.; Bowman, T. Effects of robot therapy on upper body kinematics and arm function in persons post stroke: A pilot randomized controlled trial. J. Neuroeng. Rehabil. 2020, 17, 1–19.

- Pereira, S.; Silva, C.C.; Ferreira, S. Anticipatory postural adjustments during sitting reach movement in post-stroke subjects. J. Electromyogr. Kinesiol. 2014, 24, 165–171.

- Kim, J. A Study on the Therapeutic Effect of the Upper Limb Rehabilitation Robot “Camillo” and Improvement of Clinical Basis in Stroke Patients with Hemiplegia. Master’s Thesis, Dongguk University, Seoul, Republic of Korea, 2021.

- Wu, G.; van der Helm, F.C.; Veeger, H.E.; Makhsous, M.; Van Roy, P.; Anglin, C.; Nagels, J.; Karduna, A.R.; McQuade, K.; Wang, X.; et al. ISB recommendation on definitions of joint coordinate systems of various joints for the reporting of human joint motion. Part II: Shoulder, elbow, wrist and hand. J. Biomech. 2005, 38, 981–992.

- Jackson, M.; Michaud, B.; Tétreault, P.; Begon, M. Improvements in measuring shoulder joint kinematics. J. Biomech. 2012, 45, 2180–2183.

- Zhang, C.; Dong, M.; Li, J.; Cao, Q. A modified kinematic model of shoulder complex based on Vicon motion capturing system: Generalized GH joint with floating centre. Sensors 2020, 20, 3713.

- Liu, Y.; Huang, S.; Huang, Y. Motor imagery EEG classification for patients with amyotrophic lateral sclerosis using fractal dimension and Fisher’s criterion-based channel selection. Sensors 2017, 17, 1557.

- Graimann, B.; Allison, B.; Pfurtscheller, G. Brain-Computer Interfaces: Revolutionizing Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2010.

- Nijholt, A.; Tan, D. Brain-computer interfacing for intelligent system. IEEE Intell. Syst. 2008, 23, 72–79.

- Sajda, P.; Muller, K.-R.; Shenoy, K.V. Brain-computer interfaces. IEEE Signal Process. Mag. 2008, 25, 16–28.

- Asif, S.; Webb, P. Kinematics analysis of 6-DoF articulated robot with spherical wrist. Math. Probl. Eng. 2021, 2021, 1–11.

- Denavit, J.; Hartenberg, R.S. A kinematic notation for lower-pair mechanisms based on matrices. ASME J. Appl. Mech. 1955, 22, 215–221.

- Yoon, K.C.; Cho, S.M.; Kim, K.G. Coupling effect suppressed compact surgical robot with 7-Axis multi-joint using wire-driven method. Mathematics 2022, 10, 1698.

- Ropars, M.; Cretual, A.; Thomazeau, H.; Kaila, R.; Bonan, I. Volumetric definition of shoulder range of motion and its correlation with clinical signs of shoulder hyperlaxity. A motion capture study. J. Shoulder Elb. Surg. 2015, 24, 310–316.

- Bagg, S.D.; Forrest, W.J. A biomechanical analysis of scapular rotation during arm abduction in the scapular plane. Am. J. Phys. Med. Rehabil. 1988, 67, 238–245.

- Barnes, C.J.; Van Steyn, S.J.; Fischer, R.A. The effects of age, sex, and shoulder dominance on range of motion of the shoulder. J. Shoulder Elb. Surg. 2001, 10, 242–246.

- Rigoni, M.; Gill, S.; Babazadeh, S.; Elsewaisy, O.; Gillies, H.; Nguyen, N.; Pathirana, P.N.; Page, R. Assessment of Shoulder Range of Motion Using a Wireless Inertial Motion Capture Device: A Validation Study. Sensors 2019, 19, 1781.

- Chatzitofis, A.; Zarpalas, D.; Kollias, S.; Daras, P. DeepMoCap: Deep optical motion capture using multiple depth sensors and retro-reflectors. Sensors 2019, 19, 282.

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Qiu, S.; Hao, Z.; Wang, Z.; Liu, L.; Liu, J.; Zhao, H.; Fortino, G. Sensor combination selection strategy for kayak cycle phase segmentation based on body sensor networks. IEEE Internet Things J. 2022, 9, 1–12.

- Walha, R.; Lebel, K.; Gaudreault, N.; Dagenais, P.; Cereatti, A.; Croce, U.D. The accuracy and precision of gait spatio-temporal parameters extracted from an instrumented sock during treadmill and overground walking in healthy subjects and patients with a foot impairment secondary to psoriatic arthritis. Sensors 2021, 21, 6179.

- Kim, Y.; Baek, S.; Bae, B.C. Motion capture of the human body using multiple depth sensors. ETRI J. 2017, 39, 181–190.

- Steinebach, T.; Grosse, E.H.; Glock, C.H.; Wakula, J.; Lunin, A. Accuracy evaluation of two markerless motion capture systems for measurement of upper extremities: Kinect V2 and Captiv. Hum. Factors Ergon. Manuf. Serv. Ind. 2020, 30, 291–302.

| Joint | Link Angle | Link Offset | Link Length | Link Twist |
|---|---|---|---|---|
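
The four columns above are the classic Denavit–Hartenberg (D-H) parameters. As a rough illustration of how such a table is normally turned into a forward-kinematics pose, the sketch below chains one homogeneous transform per joint. It assumes the classic (distal) D-H convention; the function names and the two-joint demo values are hypothetical and are not the parameters of the 6-DOF arm, whose table rows are not available here.

```python
import math

def dh_transform(theta, d, a, alpha):
    """4x4 homogeneous transform for one joint (classic D-H convention).

    theta: link angle [rad], d: link offset, a: link length, alpha: link twist [rad].
    """
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul4(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def forward_kinematics(dh_rows):
    """Chain the per-joint transforms, base to end effector."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, d, a, alpha in dh_rows:
        T = matmul4(T, dh_transform(theta, d, a, alpha))
    return T

# Hypothetical planar two-joint arm (unit link lengths), NOT the robot's table.
demo_rows = [
    (math.pi / 2, 0.0, 1.0, 0.0),  # joint 1 rotated by 90 degrees
    (0.0, 0.0, 1.0, 0.0),          # joint 2 straight
]
demo_pose = forward_kinematics(demo_rows)
demo_position = [demo_pose[i][3] for i in range(3)]  # end-effector x, y, z
print(demo_position)
```

For the actual arm, `demo_rows` would be replaced by six rows taken from the completed parameter table, one per joint.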

| | Height (mm) | | | | | | |
|---|---|---|---|---|---|---|---|
| M.avg | | | | | | | |
| M1 | | | | | | | |
| M2 | | | | | | | |
| M3 | | | | | | | |
| M4 | | | | | | | |
| M5 | | | | | | | |
| SD | 7.1 | 2.9 | 4.4 | 9.5 | 3.2 | 8.1 | 5.8 |
| SEM | 3.2 | 1.3 | 2.0 | 4.2 | 1.4 | 3.6 | 2.6 |
| F.avg | 160 | 18.3 | 11.5 | 31.9 | 127.2 | 44.8 | 26.2 |
| F1 | 161 | 21.5 | 11.3 | 26.0 | 132.9 | 48.2 | 24.9 |
| F2 | 159 | 14.4 | 12.4 | 25.8 | 116.1 | 23.5 | 33.7 |
| F3 | 165 | 13.5 | 6.6 | 33.1 | 108.2 | 63.6 | 28.0 |
| F4 | 160 | 21.7 | 14.8 | 45.2 | 146.4 | 42.5 | 14.5 |
| F5 | 154 | 20.3 | 12.5 | 29.2 | 132.5 | 46.3 | 29.9 |
| SD | 3.5 | 3.6 | 2.7 | 7.2 | 13.5 | 12.9 | 6.5 |
| SEM | 1.6 | 1.6 | 1.2 | 3.2 | 6.0 | 5.7 | 2.9 |
| T.avg | | 23.6 | 14.6 | 39.0 | 128.8 | 57.1 | 23.5 |
| SEM | | 2.0 | 1.5 | 3.5 | 3.1 | 5.2 | 2.1 |
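
The SEM rows in the table above appear to follow the usual relation SEM = SD / sqrt(n). The short check below is a sketch under two assumptions: that the row directly above each SEM row holds the standard deviation, and that each group has n = 5 subjects (M1 to M5, F1 to F5). The function name is illustrative only.

```python
import math

def sem_from_sd(sd, n):
    """Standard error of the mean from a standard deviation and sample size."""
    return sd / math.sqrt(n)

n_subjects = 5  # assumption: five subjects per group

# Values copied from the male-group rows of the table (row above SEM, then SEM).
male_sd = [7.1, 2.9, 4.4, 9.5, 3.2, 8.1, 5.8]
male_sem_reported = [3.2, 1.3, 2.0, 4.2, 1.4, 3.6, 2.6]

male_sem_computed = [sem_from_sd(sd, n_subjects) for sd in male_sd]

for sd, reported, computed in zip(male_sd, male_sem_reported, male_sem_computed):
    print(f"SD={sd:5.1f}  reported SEM={reported:4.1f}  computed SEM={computed:5.2f}")
```

Because the tabulated SD values are themselves rounded to one decimal place, the recomputed SEM can differ from the reported one in the last digit.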

| | Height (mm) | | | | | | |
|---|---|---|---|---|---|---|---|
| M.avg | | 4.9 | 3.2 | 8.0 | 8.5 | 111.1 | 23.4 |
| M1 | | 2.6 | 3.4 | 8.5 | 9.4 | 109.9 | 18.8 |
| M2 | | 2.8 | 2.9 | 3.5 | 3.9 | 97.5 | 22.1 |
| M3 | | 2.0 | 2.2 | 5.4 | 9.3 | 99.5 | 20.6 |
| M4 | | 9.0 | 4.4 | 11.5 | 10.2 | 125.9 | 32.1 |
| M5 | | 8.0 | 3.3 | 11.2 | 9.5 | 122.7 | 23.6 |
| SD | 7.1 | 3.0 | 0.7 | 3.2 | 2.3 | 11.6 | 4.6 |
| SEM | 3.2 | 1.3 | 0.3 | 1.4 | 1.0 | 5.2 | 2.1 |
| F.avg | 160 | 2.9 | 3.4 | 7.9 | 7.5 | 106.0 | 23.6 |
| F1 | 161 | 6.6 | 6.9 | 12.3 | 8.8 | 138.5 | 42.9 |
| F2 | 159 | 1.3 | 1.0 | 5.6 | 5.1 | 79.7 | 22.3 |
| F3 | 165 | 1.2 | 1.8 | 5.7 | 4.4 | 89.9 | 19.2 |
| F4 | 160 | 3.4 | 4.5 | 7.5 | 8.4 | 122.4 | 19.5 |
| F5 | 154 | 2.0 | 3.0 | 8.4 | 10.9 | 90.3 | 13.9 |
| SD | 7.1 | 2.0 | 2.1 | 2.4 | 2.4 | 22.4 | 10.0 |
| SEM | 3.2 | 0.9 | 0.9 | 1.1 | 1.1 | 10.0 | 4.5 |
| T.avg | | 3.9 | 3.3 | 8.0 | 8.0 | 107.6 | 23.5 |
| SEM | | 0.9 | 0.5 | 0.9 | 0.8 | 5.7 | 2.5 |

| Reference | Average Accuracy [%] | Motion Capture System |
|---|---|---|
| This work | 97.6 | OptiTrack |
| [28] | 94.8 | Optical motion capture (DeepMoCap) |
| [29] | 93.6 | Multi-person pose estimation |
| [30] | 95.9 | OptiTrack |
| [31] | 95.0 | IMU sensor (Mobility Lab system) |
| [32] | 85.3 | Multiple Kinect sensors |
| [33] | 70.0 | Kinect V2 and Captiv sensors |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Seol, J.; Yoon, K.; Kim, K.G. Mathematical Analysis and Motion Capture System Utilization Method for Standardization Evaluation of Tracking Objectivity of 6-DOF Arm Structure for Rehabilitation Training Exercise Therapy Robot. Diagnostics 2022, 12, 3179. https://doi.org/10.3390/diagnostics12123179
