Inter/Intra-Observer Agreement in Video-Capsule Endoscopy: Are We Getting It All Wrong? A Systematic Review and Meta-Analysis

Abstract

1. Introduction

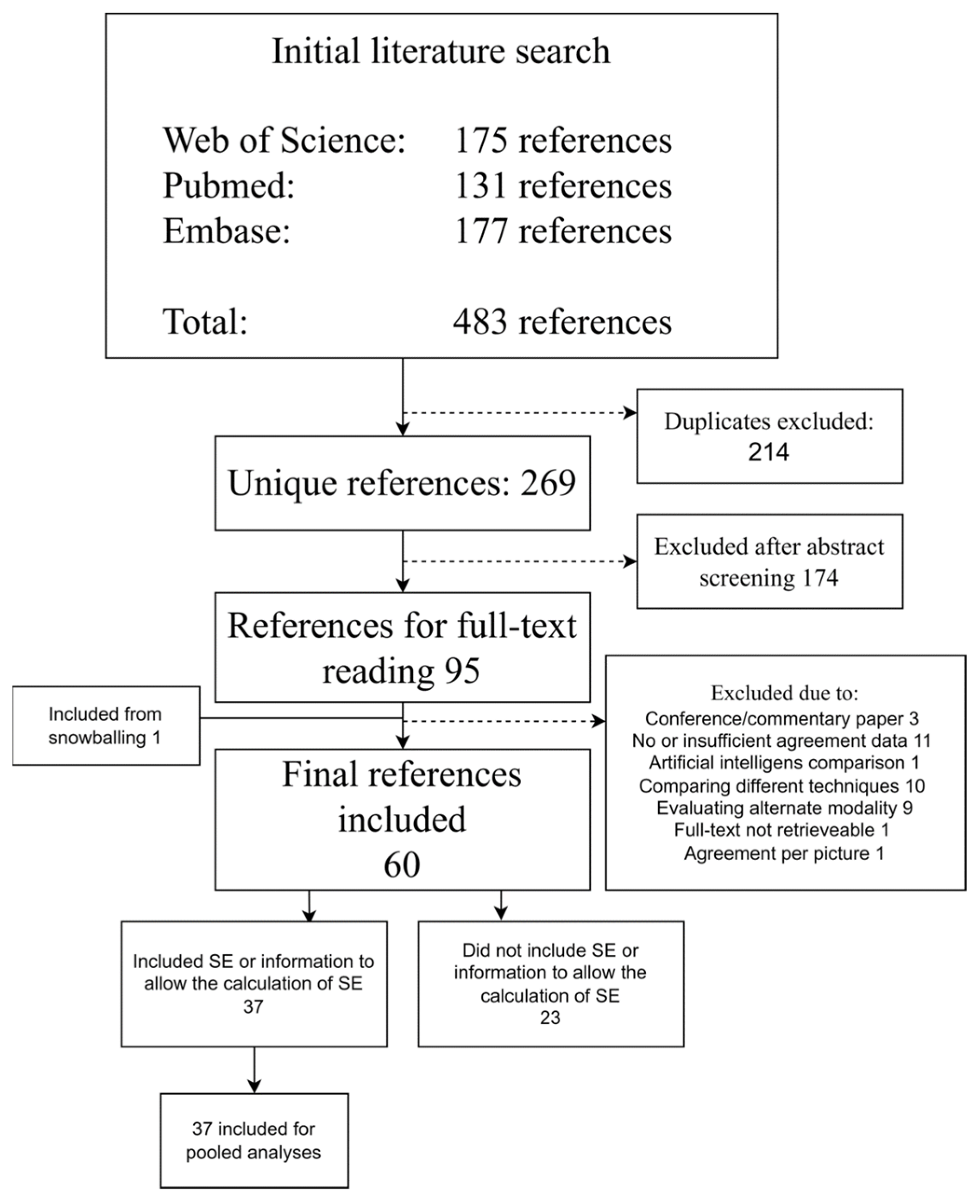

2. Materials and Methods

2.1. Data sources and Search Strategy

2.2. Inclusion and Exclusion Criteria

2.3. Screening of References

2.4. Data Extraction

2.5. Study Assessment and Risk of Bias

2.6. Statistics

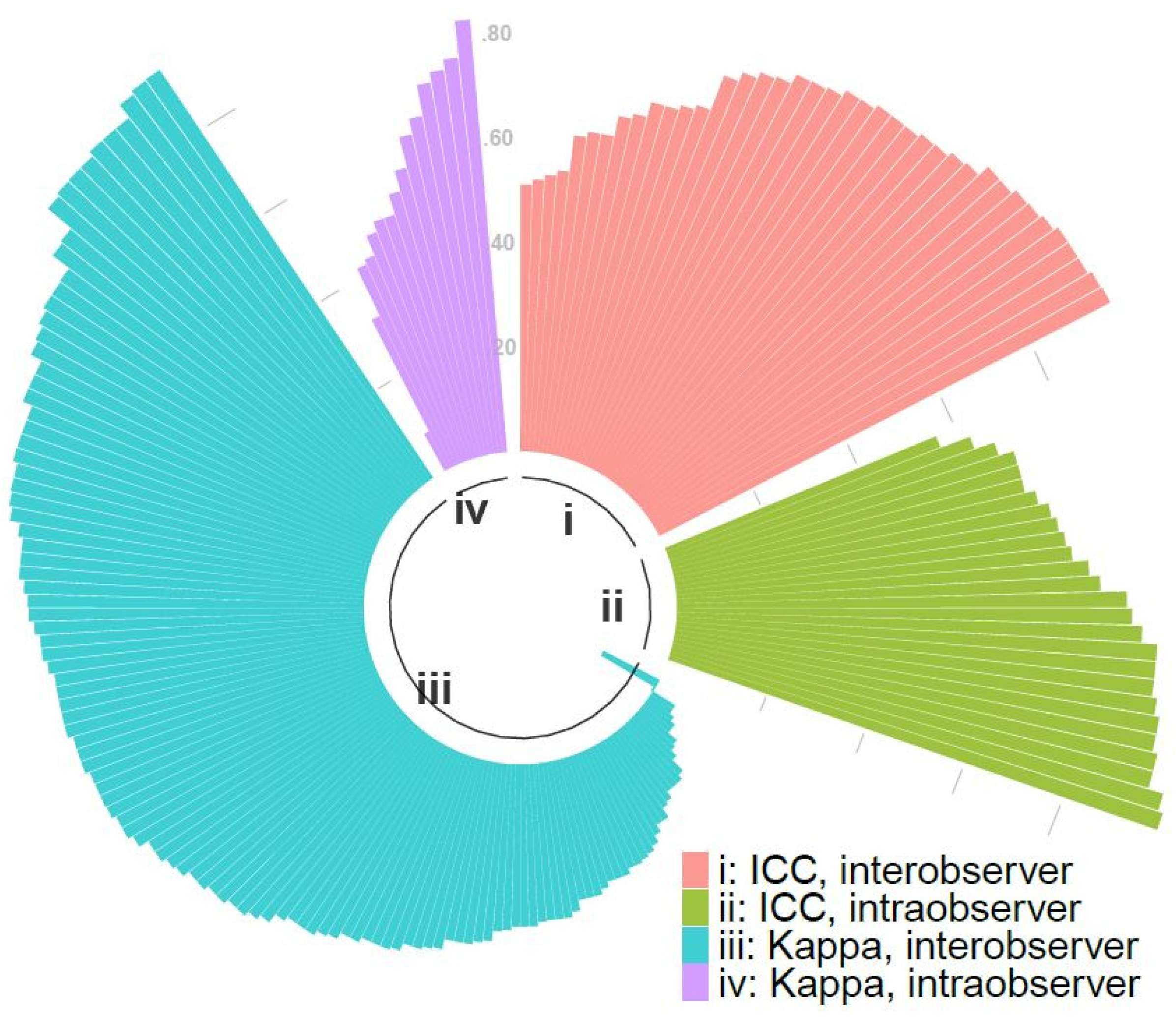

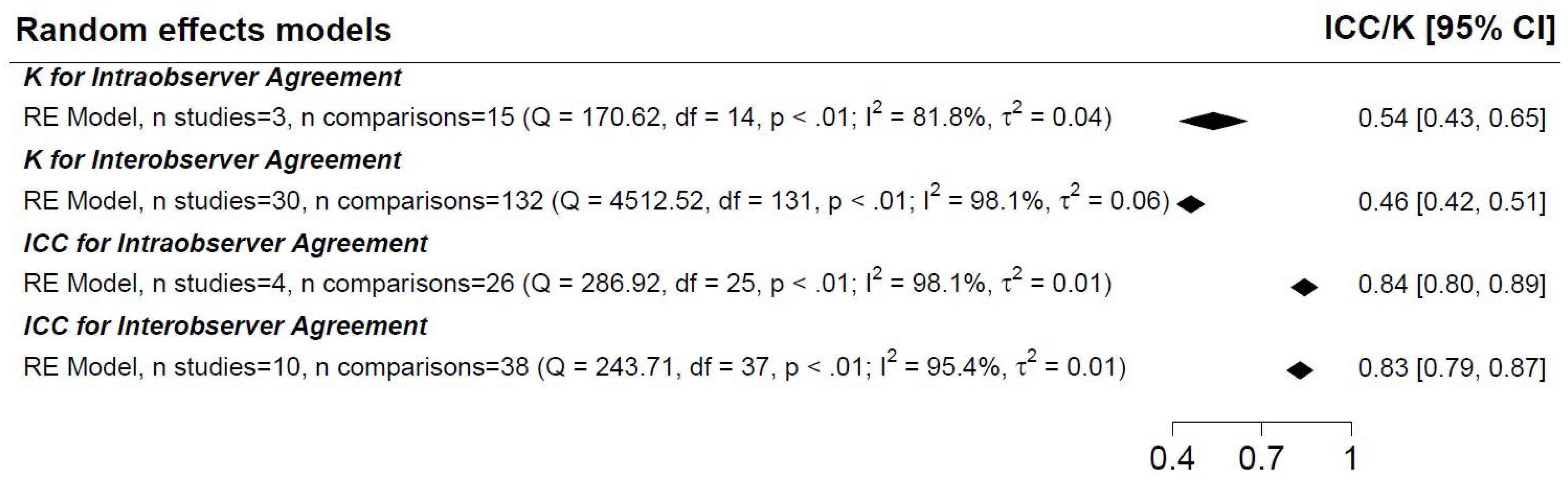

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Meron, G.D. The development of the swallowable video capsule (M2A). Gastrointest. Endosc. 2000, 52, 817–819.

- Spada, C.; Hassan, C.; Bellini, D.; Burling, D.; Cappello, G.; Carretero, C.; Dekker, E.; Eliakim, R.; de Haan, M.; Kaminski, M.F.; et al. Imaging alternatives to colonoscopy: CT colonography and colon capsule. European Society of Gastrointestinal Endoscopy (ESGE) and European Society of Gastrointestinal and Abdominal Radiology (ESGAR) Guideline-Update 2020. Endoscopy 2020, 52, 1127–1141.

- MacLeod, C.; Wilson, P.; Watson, A.J.M. Colon capsule endoscopy: An innovative method for detecting colorectal pathology during the COVID-19 pandemic? Colorectal Dis. 2020, 22, 621–624.

- White, E.; Koulaouzidis, A.; Patience, L.; Wenzek, H. How a managed service for colon capsule endoscopy works in an overstretched healthcare system. Scand. J. Gastroenterol. 2022, 57, 359–363.

- Zheng, Y.; Hawkins, L.; Wolff, J.; Goloubeva, O.; Goldberg, E. Detection of lesions during capsule endoscopy: Physician performance is disappointing. Am. J. Gastroenterol. 2012, 107, 554–560.

- Beg, S.; Card, T.; Sidhu, R.; Wronska, E.; Ragunath, K.; UK capsule endoscopy users’ group. The impact of reader fatigue on the accuracy of capsule endoscopy interpretation. Dig. Liver Dis. 2021, 53, 1028–1033.

- Rondonotti, E.; Pennazio, M.; Toth, E.; Koulaouzidis, A. How to read small bowel capsule endoscopy: A practical guide for everyday use. Endosc. Int. Open 2020, 8, E1220–E1224.

- Koulaouzidis, A.; Dabos, K.; Philipper, M.; Toth, E.; Keuchel, M. How should we do colon capsule endoscopy reading: A practical guide. Ther. Adv. Gastrointest. Endosc. 2021, 14, 26317745211001984.

- Spada, C.; McNamara, D.; Despott, E.J.; Adler, S.; Cash, B.D.; Fernández-Urién, I.; Ivekovic, H.; Keuchel, M.; McAlindon, M.; Saurin, J.C.; et al. Performance measures for small-bowel endoscopy: A European Society of Gastrointestinal Endoscopy (ESGE) Quality Improvement Initiative. United Eur. Gastroenterol. J. 2019, 7, 614–641.

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.A.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: Explanation and elaboration. BMJ 2009, 339, b2700.

- Slim, K.; Nini, E.; Forestier, D.; Kwiatowski, F.; Panis, Y.; Chipponi, J. Methodological index for non-randomized studies (MINORS): Development and validation of a new instrument. ANZ J. Surg. 2003, 73, 712–716.

- McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Med. 2012, 22, 276–282.

- Liu, J.; Tang, W.; Chen, G.; Lu, Y.; Feng, C.; Tu, X.M. Correlation and agreement: Overview and clarification of competing concepts and measures. Shanghai Arch. Psychiatry 2016, 28, 115–120.

- Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

- Chan, Y.H. Biostatistics 104: Correlational analysis. Singap. Med. J. 2003, 44, 614–619.

- Viechtbauer, W. Conducting Meta-Analyses in R with the metafor Package. J. Stat. Softw. 2010, 36, 1–48.

- Wickham, H.; Averick, M.; Bryan, J.; Chang, W.; D’Agostino McGowan, L.; François, R.; Grolemund, G.; Hayes, A.; Henry, L.; Hester, J.; et al. Welcome to the tidyverse. J. Open Source Softw. 2019, 4, 1686.

- Adler, D.G.; Knipschield, M.; Gostout, C. A prospective comparison of capsule endoscopy and push enteroscopy in patients with GI bleeding of obscure origin. Gastrointest. Endosc. 2004, 59, 492–498.

- Alageeli, M.; Yan, B.; Alshankiti, S.; Al-Zahrani, M.; Bahreini, Z.; Dang, T.T.; Friendland, J.; Gilani, S.; Homenauth, R.; Houle, J.; et al. KODA score: An updated and validated bowel preparation scale for patients undergoing small bowel capsule endoscopy. Endosc. Int. Open 2020, 8, E1011–E1017.

- Albert, J.; Göbel, C.M.; Lesske, J.; Lotterer, E.; Nietsch, H.; Fleig, W.E. Simethicone for small bowel preparation for capsule endoscopy: A systematic, single-blinded, controlled study. Gastrointest. Endosc. 2004, 59, 487–491.

- Arieira, C.; Magalhães, R.; Dias de Castro, F.; Carvalho, P.B.; Rosa, B.; Moreira, M.J.; Cotter, J. CECDAIic-a new useful tool in pan-intestinal evaluation of Crohn’s disease patients in the era of mucosal healing. Scand. J. Gastroenterol. 2019, 54, 1326–1330.

- Biagi, F.; Rondonotti, E.; Campanella, J.; Villa, F.; Bianchi, P.I.; Klersy, C.; De Franchis, R.; Corazza, G.R. Video capsule endoscopy and histology for small-bowel mucosa evaluation: A comparison performed by blinded observers. Clin. Gastroenterol. Hepatol. 2006, 4, 998–1003.

- Blanco-Velasco, G.; Pinho, R.; Solórzano-Pineda, O.M.; Martínez-Camacho, C.; García-Contreras, L.F.; Murcio-Pérez, E.; Hernández-Mondragón, O.V. Assessment of the Role of a Second Evaluation of Capsule Endoscopy Recordings to Improve Diagnostic Yield and Patient Management. GE Port. J. Gastroenterol. 2022, 29, 106–110.

- Bossa, F.; Cocomazzi, G.; Valvano, M.R.; Andriulli, A.; Annese, V. Detection of abnormal lesions recorded by capsule endoscopy. A prospective study comparing endoscopist’s and nurse’s accuracy. Dig. Liver Dis. 2006, 38, 599–602.

- Bourreille, A. Wireless capsule endoscopy versus ileocolonoscopy for the diagnosis of postoperative recurrence of Crohn’s disease: A prospective study. Gut 2006, 55, 978–983.

- Brotz, C.; Nandi, N.; Conn, M.; Daskalakis, C.; DiMarino, M.; Infantolino, A.; Katz, L.C.; Schroeder, T.; Kastenberg, D. A validation study of 3 grading systems to evaluate small-bowel cleansing for wireless capsule endoscopy: A quantitative index, a qualitative evaluation, and an overall adequacy assessment. Gastrointest. Endosc. 2009, 69, 262–270.

- Buijs, M.M.; Kroijer, R.; Kobaek-Larsen, M.; Spada, C.; Fernandez-Urien, I.; Steele, R.J.; Baatrup, G. Intra and inter-observer agreement on polyp detection in colon capsule endoscopy evaluations. United Eur. Gastroenterol. J. 2018, 6, 1563–1568.

- Chavalitdhamrong, D.; Jensen, D.M.; Singh, B.; Kovacs, T.O.; Han, S.H.; Durazo, F.; Saab, S.; Gornbein, J.A. Capsule Endoscopy Is Not as Accurate as Esophagogastroduodenoscopy in Screening Cirrhotic Patients for Varices. Clin. Gastroenterol. Hepatol. 2012, 10, 254–258.e1.

- Chetcuti Zammit, S.; McAlindon, M.E.; Sanders, D.S.; Sidhu, R. Assessment of disease severity on capsule endoscopy in patients with small bowel villous atrophy. J. Gastroenterol. Hepatol. 2021, 36, 1015–1021.

- Christodoulou, D.; Haber, G.; Beejay, U.; Tang, S.J.; Zanati, S.; Petroniene, R.; Cirocco, M.; Kortan, P.; Kandel, G.; Tatsioni, A.; et al. Reproducibility of Wireless Capsule Endoscopy in the Investigation of Chronic Obscure Gastrointestinal Bleeding. Can. J. Gastroenterol. 2007, 21, 707–714.

- Cotter, J.; Dias de Castro, F.; Magalhães, J.; Moreira, M.J.; Rosa, B. Validation of the Lewis score for the evaluation of small-bowel Crohn’s disease activity. Endoscopy 2015, 47, 330–335.

- De Leusse, A.; Landi, B.; Edery, J.; Burtin, P.; Lecomte, T.; Seksik, P.; Bloch, F.; Jian, R.; Cellier, C. Video Capsule Endoscopy for Investigation of Obscure Gastrointestinal Bleeding: Feasibility, Results, and Interobserver Agreement. Endoscopy 2005, 37, 617–621.

- de Sousa Magalhães, R.; Arieira, C.; Boal Carvalho, P.; Rosa, B.; Moreira, M.J.; Cotter, J. Colon Capsule CLEansing Assessment and Report (CC-CLEAR): A new approach for evaluation of the quality of bowel preparation in capsule colonoscopy. Gastrointest. Endosc. 2021, 93, 212–223.

- Delvaux, M.; Papanikolaou, I.; Fassler, I.; Pohl, H.; Voderholzer, W.; Rösch, T.; Gay, G. Esophageal capsule endoscopy in patients with suspected esophageal disease: Double blinded comparison with esophagogastroduodenoscopy and assessment of interobserver variability. Endoscopy 2008, 40, 16–22.

- D’Haens, G.; Löwenberg, M.; Samaan, M.A.; Franchimont, D.; Ponsioen, D.; van den Brink, G.R.; Fockens, P.; Bossuyt, P.; Amininejad, L.; Rajamannar, G.; et al. Safety and Feasibility of Using the Second-Generation Pillcam Colon Capsule to Assess Active Colonic Crohn’s Disease. Clin. Gastroenterol. Hepatol. 2015, 13, 1480–1486.e3.

- Dray, X.; Houist, G.; Le Mouel, J.P.; Saurin, J.C.; Vanbiervliet, G.; Leandri, C.; Rahmi, G.; Duburque, C.; Kirchgesner, J.; Leenhardt, R.; et al. Prospective evaluation of third-generation small bowel capsule endoscopy videos by independent readers demonstrates poor reproducibility of cleanliness classifications. Clin. Res. Hepatol. Gastroenterol. 2021, 45, 101612.

- Duque, G.; Almeida, N.; Figueiredo, P.; Monsanto, P.; Lopes, S.; Freire, P.; Ferreira, M.; Carvalho, R.; Gouveia, H.; Sofia, C. Virtual chromoendoscopy can be a useful software tool in capsule endoscopy. Rev. Esp. Enferm. Dig. 2012, 104, 231–236.

- Eliakim, R.; Yablecovitch, D.; Lahat, A.; Ungar, B.; Shachar, E.; Carter, D.; Selinger, L.; Neuman, S.; Ben-Horin, S.; Kopylov, U. A novel PillCam Crohn’s capsule score (Eliakim score) for quantification of mucosal inflammation in Crohn’s disease. United Eur. Gastroenterol. J. 2020, 8, 544–551.

- Esaki, M.; Matsumoto, T.; Kudo, T.; Yanaru-Fujisawa, R.; Nakamura, S.; Iida, M. Bowel preparations for capsule endoscopy: A comparison between simethicone and magnesium citrate. Gastrointest. Endosc. 2009, 69, 94–101.

- Esaki, M.; Matsumoto, T.; Ohmiya, N.; Washio, E.; Morishita, T.; Sakamoto, K.; Abe, H.; Yamamoto, S.; Kinjo, T.; Togashi, K.; et al. Capsule endoscopy findings for the diagnosis of Crohn’s disease: A nationwide case-control study. J. Gastroenterol. 2019, 54, 249–260.

- Ewertsen, C.; Svendsen, C.B.S.; Svendsen, L.B.; Hansen, C.P.; Gustafsen, J.H.R.; Jendresen, M.B. Is screening of wireless capsule endoscopies by non-physicians feasible? Ugeskr. Laeger. 2006, 168, 3530–3533.

- Gal, E.; Geller, A.; Fraser, G.; Levi, Z.; Niv, Y. Assessment and Validation of the New Capsule Endoscopy Crohn’s Disease Activity Index (CECDAI). Dig. Dis. Sci. 2008, 53, 1933–1937.

- Galmiche, J.P.; Sacher-Huvelin, S.; Coron, E.; Cholet, F.; Ben Soussan, E.; Sébille, V.; Filoche, B.; d’Abrigeon, G.; Antonietti, M.; Robaszkiewicz, M.; et al. Screening for Esophagitis and Barrett’s Esophagus with Wireless Esophageal Capsule Endoscopy: A Multicenter Prospective Trial in Patients with Reflux Symptoms. Am. J. Gastroenterol. 2008, 103, 538–545.

- García-Compeán, D.; Del Cueto-Aguilera, Á.N.; González-González, J.A.; Jáquez-Quintana, J.O.; Borjas-Almaguer, O.D.; Jiménez-Rodríguez, A.R.; Muñoz-Ayala, J.M.; Maldonado-Garza, H.J. Evaluation and Validation of a New Score to Measure the Severity of Small Bowel Angiodysplasia on Video Capsule Endoscopy. Dig. Dis. 2022, 40, 62–67.

- Ge, Z.Z.; Chen, H.Y.; Gao, Y.J.; Hu, Y.B.; Xiao, S.D. The role of simeticone in small-bowel preparation for capsule endoscopy. Endoscopy 2006, 38, 836–840.

- Girelli, C.M.; Porta, P.; Colombo, E.; Lesinigo, E.; Bernasconi, G. Development of a novel index to discriminate bulge from mass on small-bowel capsule endoscopy. Gastrointest. Endosc. 2011, 74, 1067–1074.

- Goyal, J.; Goel, A.; McGwin, G.; Weber, F. Analysis of a grading system to assess the quality of small-bowel preparation for capsule endoscopy: In search of the Holy Grail. Endosc. Int. Open 2014, 2, E183–E186.

- Gupta, A.; Postgate, A.J.; Burling, D.; Ilangovan, R.; Marshall, M.; Phillips, R.K.; Clark, S.K.; Fraser, C.H. A Prospective Study of MR Enterography Versus Capsule Endoscopy for the Surveillance of Adult Patients with Peutz-Jeghers Syndrome. AJR Am. J. Roentgenol. 2010, 195, 108–116.

- Gupta, T. Evaluation of Fujinon intelligent chromo endoscopy-assisted capsule endoscopy in patients with obscure gastroenterology bleeding. World J. Gastroenterol. 2011, 17, 4590.

- Chen, H.-B.; Huang, Y.; Chen, S.-Y.; Huang, C.; Gao, L.-H.; Deng, D.-Y.; Li, X.-J.; He, S.; Li, X.-L. Evaluation of visualized area percentage assessment of cleansing score and computed assessment of cleansing score for capsule endoscopy. Saudi J. Gastroenterol. 2013, 19, 160–164.

- Jang, B.I.; Lee, S.H.; Moon, J.S.; Cheung, D.Y.; Lee, I.S.; Kim, J.O.; Cheon, J.H.; Park, C.H.; Byeon, J.S.; Park, Y.S.; et al. Inter-observer agreement on the interpretation of capsule endoscopy findings based on capsule endoscopy structured terminology: A multicenter study by the Korean Gut Image Study Group. Scand. J. Gastroenterol. 2010, 45, 370–374.

- Jensen, M.D.; Nathan, T.; Kjeldsen, J. Inter-observer agreement for detection of small bowel Crohn’s disease with capsule endoscopy. Scand. J. Gastroenterol. 2010, 45, 878–884.

- Lai, L.H.; Wong, G.L.H.; Chow, D.K.L.; Lau, J.Y.; Sung, J.J.; Leung, W.K. Inter-observer variations on interpretation of capsule endoscopies. Eur. J. Gastroenterol. Hepatol. 2006, 18, 283–286.

- Lapalus, M.G.; Ben Soussan, E.; Gaudric, M.; Saurin, J.C.; D’Halluin, P.N.; Favre, O.; Filoche, B.; Cholet, F.; de Leusse, A.; Antonietti, M.; et al. Esophageal Capsule Endoscopy vs. EGD for the Evaluation of Portal Hypertension: A French Prospective Multicenter Comparative Study. Am. J. Gastroenterol. 2009, 104, 1112–1118.

- Laurain, A.; de Leusse, A.; Gincul, R.; Vanbiervliet, G.; Bramli, S.; Heyries, L.; Martane, G.; Amrani, N.; Serraj, I.; Saurin, J.C.; et al. Oesophageal capsule endoscopy versus oesophago-gastroduodenoscopy for the diagnosis of recurrent varices: A prospective multicentre study. Dig. Liver Dis. 2014, 46, 535–540.

- Laursen, E.L.; Ersbøll, A.K.; Rasmussen, A.M.O.; Christensen, E.H.; Holm, J.; Hansen, M.B. Intra- and interobserver variation in capsule endoscopy reviews. Ugeskr. Laeger. 2009, 171, 1929–1934.

- Leighton, J.; Rex, D. A grading scale to evaluate colon cleansing for the PillCam COLON capsule: A reliability study. Endoscopy 2011, 43, 123–127.

- Murray, J.A.; Rubio-Tapia, A.; Van Dyke, C.T.; Brogan, D.L.; Knipschield, M.A.; Lahr, B.; Rumalla, A.; Zinsmeister, A.R.; Gostout, C.J. Mucosal Atrophy in Celiac Disease: Extent of Involvement, Correlation with Clinical Presentation, and Response to Treatment. Clin. Gastroenterol. Hepatol. 2008, 6, 186–193.

- Niv, Y.; Niv, G. Capsule Endoscopy Examination: Preliminary Review by a Nurse. Dig. Dis. Sci. 2005, 50, 2121–2124.

- Niv, Y.; Ilani, S.; Levi, Z.; Hershkowitz, M.; Niv, E.; Fireman, Z.; O’Donnel, S.; O’Morain, C.; Eliakim, R.; Scapa, E.; et al. Validation of the Capsule Endoscopy Crohn’s Disease Activity Index (CECDAI or Niv score): A multicenter prospective study. Endoscopy 2012, 44, 21–26.

- Oliva, S.; Di Nardo, G.; Hassan, C.; Spada, C.; Aloi, M.; Ferrari, F.; Redler, A.; Costamagna, G.; Cucchiara, S. Second-generation colon capsule endoscopy vs. colonoscopy in pediatric ulcerative colitis: A pilot study. Endoscopy 2014, 46, 485–492.

- Oliva, S.; Cucchiara, S.; Spada, C.; Hassan, C.; Ferrari, F.; Civitelli, F.; Pagliaro, G.; Di Nardo, G. Small bowel cleansing for capsule endoscopy in paediatric patients: A prospective randomized single-blind study. Dig. Liver Dis. 2014, 46, 51–55.

- Omori, T.; Matsumoto, T.; Hara, T.; Kambayashi, H.; Murasugi, S.; Ito, A.; Yonezawa, M.; Nakamura, S.; Tokushige, K. A Novel Capsule Endoscopic Score for Crohn’s Disease. Crohns Colitis 360 2020, 2, otaa040.

- Park, S.C.; Keum, B.; Hyun, J.J.; Seo, Y.S.; Kim, Y.S.; Jeen, Y.T.; Chun, H.J.; Um, S.H.; Kim, C.D.; Ryu, H.S. A novel cleansing score system for capsule endoscopy. World J. Gastroenterol. 2010, 16, 875–880.

- Petroniene, R.; Dubcenco, E.; Baker, J.P.; Ottaway, C.A.; Tang, S.J.; Zanati, S.A.; Streutker, C.J.; Gardiner, G.W.; Warren, R.E.; Jeejeebhoy, K.N. Given Capsule Endoscopy in Celiac Disease: Evaluation of Diagnostic Accuracy and Interobserver Agreement. Am. J. Gastroenterol. 2005, 100, 685–694.

- Pezzoli, A.; Cannizzaro, R.; Pennazio, M.; Rondonotti, E.; Zancanella, L.; Fusetti, N.; Simoni, M.; Cantoni, F.; Melina, R.; Alberani, A.; et al. Interobserver agreement in describing video capsule endoscopy findings: A multicentre prospective study. Dig. Liver Dis. 2011, 43, 126–131.

- Pons Beltrán, V.; González Suárez, B.; González Asanza, C.; Pérez-Cuadrado, E.; Fernández Diez, S.; Fernández-Urién, I.; Mata Bilbao, A.; Espinós Pérez, J.C.; Pérez Grueso, M.J.; Argüello Viudez, L.; et al. Evaluation of Different Bowel Preparations for Small Bowel Capsule Endoscopy: A Prospective, Randomized, Controlled Study. Dig. Dis. Sci. 2011, 56, 2900–2905.

- Qureshi, W.A.; Wu, J.; DeMarco, D.; Abudayyeh, S.; Graham, D.Y. Capsule Endoscopy for Screening for Short-Segment Barrett’s Esophagus. Am. J. Gastroenterol. 2008, 103, 533–537.

- Ravi, S.; Aryan, M.; Ergen, W.F.; Leal, L.; Oster, R.A.; Lin, C.P.; Weber, F.H.; Peter, S. Bedside live-view capsule endoscopy in evaluation of overt obscure gastrointestinal bleeding-a pilot point of care study. Dig. Dis. 2022.

- Rimbaş, M.; Zahiu, D.; Voiosu, A.; Voiosu, T.A.; Zlate, A.A.; Dinu, R.; Galasso, D.; Minelli Grazioli, L.; Campanale, M.; Barbaro, F.; et al. Usefulness of virtual chromoendoscopy in the evaluation of subtle small bowel ulcerative lesions by endoscopists with no experience in videocapsule. Endosc. Int. Open 2016, 4, E508–E514.

- Rondonotti, E.; Koulaouzidis, A.; Karargyris, A.; Giannakou, A.; Fini, L.; Soncini, M.; Pennazio, M.; Douglas, S.; Shams, A.; Lachlan, N.; et al. Utility of 3-dimensional image reconstruction in the diagnosis of small-bowel masses in capsule endoscopy (with video). Gastrointest. Endosc. 2014, 80, 642–651.

- Sciberras, M.; Conti, K.; Elli, L.; Scaramella, L.; Riccioni, M.E.; Marmo, C.; Cadoni, S.; McAlindon, M.; Sidhu, R.; O’Hara, F.; et al. Score reproducibility and reliability in differentiating small bowel subepithelial masses from innocent bulges. Dig. Liver Dis. 2022, 54, 1403–1409.

- Shi, H.Y.; Chan, F.K.L.; Higashimori, A.; Kyaw, M.; Ching, J.Y.L.; Chan, H.C.H.; Chan, J.C.H.; Chan, A.W.H.; Lam, K.L.Y.; Tang, R.S.Y.; et al. A prospective study on second-generation colon capsule endoscopy to detect mucosal lesions and disease activity in ulcerative colitis (with video). Gastrointest. Endosc. 2017, 86, 1139–1146.e6.

- Triantafyllou, K.; Kalantzis, C.; Papadopoulos, A.A.; Apostolopoulos, P.; Rokkas, T.; Kalantzis, N.; Ladas, S.D. Video-capsule endoscopy gastric and small bowel transit time and completeness of the examination in patients with diabetes mellitus. Dig. Liver Dis. 2007, 39, 575–580.

- Usui, S.; Hosoe, N.; Matsuoka, K.; Kobayashi, T.; Nakano, M.; Naganuma, M.; Ishibashi, Y.; Kimura, K.; Yoneno, K.; Kashiwagi, K.; et al. Modified bowel preparation regimen for use in second-generation colon capsule endoscopy in patients with ulcerative colitis: Preparation for colon capsule endoscopy. Dig. Endosc. 2014, 26, 665–672.

- Wong, R.F.; Tuteja, A.K.; Haslem, D.S.; Pappas, L.; Szabo, A.; Ogara, M.M.; DiSario, J.A. Video capsule endoscopy compared with standard endoscopy for the evaluation of small-bowel polyps in persons with familial adenomatous polyposis (with video). Gastrointest. Endosc. 2006, 64, 530–537.

- Zakaria, M.S.; El-Serafy, M.A.; Hamza, I.M.; Zachariah, K.S.; El-Baz, T.M.; Bures, J.; Tacheci, I.; Rejchrt, S. The role of capsule endoscopy in obscure gastrointestinal bleeding. Arab. J. Gastroenterol. 2009, 10, 57–62.

- Rondonotti, E.; Soncini, M.; Girelli, C.M.; Russo, A.; Ballardini, G.; Bianchi, G.; Cantù, P.; Centenara, L.; Cesari, P.; Cortelezzi, C.C.; et al. Can we improve the detection rate and interobserver agreement in capsule endoscopy? Dig. Liver Dis. 2012, 44, 1006–1011.

- Leenhardt, R.; Koulaouzidis, A.; McNamara, D.; Keuchel, M.; Sidhu, R.; McAlindon, M.E.; Saurin, J.C.; Eliakim, R.; Fernandez-Urien Sainz, I.; Plevris, J.N.; et al. A guide for assessing the clinical relevance of findings in small bowel capsule endoscopy: Analysis of 8064 answers of international experts to an illustrated script questionnaire. Clin. Res. Hepatol. Gastroenterol. 2021, 45, 101637.

- Ding, Z.; Shi, H.; Zhang, H.; Meng, L.; Fan, M.; Han, C.; Zhang, K.; Ming, F.; Xie, X.; Liu, H.; et al. Gastroenterologist-Level Identification of Small-Bowel Diseases and Normal Variants by Capsule Endoscopy Using a Deep-Learning Model. Gastroenterology 2019, 157, 1044–1054.

- Xie, X.; Xiao, Y.F.; Zhao, X.Y.; Li, J.J.; Yang, Q.Q.; Peng, X.; Nie, X.B.; Zhou, J.Y.; Zhao, Y.B.; Yang, H.; et al. Development and validation of an artificial intelligence model for small bowel capsule endoscopy video review. JAMA Netw. Open 2022, 5, e2221992.

- Dray, X.; Toth, E.; de Lange, T.; Koulaouzidis, A. Artificial intelligence, capsule endoscopy, databases, and the Sword of Damocles. Endosc. Int. Open 2021, 9, E1754–E1755.

- Horie, Y.; Yoshio, T.; Aoyama, K.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Hirasawa, T.; Tsuchida, T.; Ozawa, T.; Ishihara, S.; et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest. Endosc. 2019, 89, 25–32.

- Cho, B.J.; Bang, C.S.; Park, S.W.; Yang, Y.J.; Seo, S.I.; Lim, H.; Shin, W.G.; Hong, J.T.; Yoo, Y.T.; Hong, S.H.; et al. Automated classification of gastric neoplasms in endoscopic images using a convolutional neural network. Endoscopy 2019, 51, 1121–1129.

- Wang, P.; Berzin, T.M.; Glissen Brown, J.R.; Bharadwaj, S.; Becq, A.; Xiao, X.; Liu, P.; Li, L.; Song, Y.; Zhang, D.; et al. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: A prospective randomised controlled study. Gut 2019, 68, 1813–1819.

- Yung, D.; Fernandez-Urien, I.; Douglas, S.; Plevris, J.N.; Sidhu, R.; McAlindon, M.E.; Panter, S.; Koulaouzidis, A. Systematic review and meta-analysis of the performance of nurses in small bowel capsule endoscopy reading. United Eur. Gastroenterol. J. 2017, 5, 1061–1072.

- Handa, Y.; Nakaji, K.; Hyogo, K.; Kawakami, M.; Yamamoto, T.; Fujiwara, A.; Kanda, R.; Osawa, M.; Handa, O.; Matsumoto, H.; et al. Evaluation of performance in colon capsule endoscopy reading by endoscopy nurses. Can. J. Gastroenterol. Hepatol. 2021, 2021, 8826100.

| Kappa: Value | Kappa: Evaluation | Intra-Class Correlation: Value | Intra-Class Correlation: Evaluation | Spearman Rank Correlation: Value | Spearman Rank Correlation: Evaluation |
|---|---|---|---|---|---|
| >0.90 | Almost perfect | >0.9 | Excellent | ±1 | Perfect |
| 0.80–0.90 | Strong | 0.75–0.9 | Good | ±0.8–0.9 | Very strong |
| 0.60–0.79 | Moderate | 0.5–<0.75 | Moderate | ±0.6–0.7 | Moderate |
| 0.40–0.59 | Weak | <0.5 | Poor | ±0.3–0.5 | Fair |
| 0.21–0.39 | Minimal | | | ±0.1–0.2 | Poor |
| <0.20 | None | | | 0 | None |
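
The cut-offs in the table above can be read as a simple lookup. The following is a minimal Python sketch, not the authors' code. The function names are hypothetical, and two assumptions are made where the printed bands leave gaps: each band is closed at its lower bound (so a kappa of 0.795 counts as "Moderate" and a kappa of exactly 0.20 as "None"), and Spearman coefficients are rounded to one decimal before lookup because the printed bands are given to one decimal.

```python
"""Map agreement statistics to the verbal categories of the table above.

Assumptions (not stated in the source): bands are closed at their lower
bound, and Spearman's rho is rounded to one decimal before lookup.
"""


def evaluate_kappa(kappa: float) -> str:
    """Return the kappa evaluation; values <= 0.20 are treated as 'None'."""
    if kappa > 0.90:
        return "Almost perfect"
    if kappa >= 0.80:
        return "Strong"
    if kappa >= 0.60:
        return "Moderate"
    if kappa >= 0.40:
        return "Weak"
    if kappa > 0.20:
        return "Minimal"
    return "None"


def evaluate_icc(icc: float) -> str:
    """Return the intra-class correlation evaluation."""
    if icc > 0.9:
        return "Excellent"
    if icc >= 0.75:
        return "Good"
    if icc >= 0.5:
        return "Moderate"
    return "Poor"


def evaluate_spearman(rho: float) -> str:
    """Return the Spearman evaluation; the sign is ignored (bands are ±)."""
    r = round(abs(rho), 1)  # assumption: bands are defined at one decimal
    if r >= 1.0:
        return "Perfect"
    if r >= 0.8:
        return "Very strong"
    if r >= 0.6:
        return "Moderate"
    if r >= 0.3:
        return "Fair"
    if r >= 0.1:
        return "Poor"
    return "None"


if __name__ == "__main__":
    # Example values chosen for illustration only.
    print(evaluate_kappa(0.53))     # Weak
    print(evaluate_icc(0.81))       # Good
    print(evaluate_spearman(0.73))  # Moderate
```

Closing each band at its lower bound is only one way to handle values that fall between printed ranges; a stricter reading could instead report such values as unclassified.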

| Reference (Year) | Single- or Multi-Center Study | n Included for Review (Total) | Indication | Finding Group(s) | MINORS Score (0–14) |
|---|---|---|---|---|---|
| Adler DG (2004) [18] | Single | 20 (20) | GI bleeding | Blood; Erosions/Ulcerations | 11 |
| Alageeli M (2020) [19] | Multi | 25 (25) | GI bleeding, CD, screening for HPS | Cleanliness | 11 |
| Albert J (2004) [20] | Single | 36 (36) | OGIB, suspected CD, suspected SB tumor, refractory sprue, FAP | Cleanliness | 12 |
| Arieira C (2019) [21] | Single | 22 (22) | Known CD | IBD | 8 |
| Biagi F (2006) [22] | Multi | 21 (32) | CeD, IBS, known CD | Villous atrophy | 10 |
| Blanco-Velasco G (2021) [23] | Single | 100 (100) | IDA, GI bleeding, known CD, SB tumors, diarrhea | Blood; IBD; Blended outcomes | 11 |
| Bossa F (2006) [24] | Single | 39 (41) | OGIB, HPS, known CD, CeD, diarrhea | Blood; Blended outcomes; Other lesions; Polyps; Erosions/Ulcerations; Angiodysplasias | 8 |
| Bourreille A (2006) [25] | Multi | 32 (32) | Ileocolonic resection | Blended outcomes; Other lesions; Villous atrophy; Erosions/Ulcerations | 12 |
| Brotz C (2009) [26] | Single | 40 (541) | GI bleeding, abdominal pain, diarrhea, anemia, follow-up of prior findings | Cleanliness | 10 |
| Buijs MM (2018) [27] | Single | 42 (136) | CRC screening | Blended outcomes; Polyps; Cleanliness | 13 |
| Chavalitdhamrong D (2012) [28] | Multi | 65 (65) | Portal hypertension | Other lesions | 12 |
| Chetcuti Zammit S (2021) [29] | Multi | 300 (300) | CeD, seronegative villous atrophy | IBD; Villous atrophy; Erosions/Ulcerations; Blended outcomes | 13 |
| Christodoulou D (2007) [30] | Single | 20 (20) | GI bleeding | Other lesions; Angiodysplasias; Polyps; Blood | 11 |
| Cotter J (2015) [31] | Single | 70 (70) | Known CD | IBD | 12 |
| De Leusse A (2005) [32] | Single | 30 (64) | GI bleeding | Blood; Angiodysplasias; Other lesions; Erosions/Ulcerations; Blended outcomes | 12 |
| de Sousa Magalhães R (2021) [33] | Single | 58 (58) | Incomplete colonoscopy | Cleanliness | 11 |
| Delvaux M (2008) [34] | Multi | 96 (98) | Known or suspected esophageal disease | Blended outcomes | 13 |
| D’Haens G (2015) [35] | Multi | 20 (40) | Known CD | IBD | 11 |
| Dray X (2021) [36] | Multi | 155 (637) | OGIB | Cleanliness | 12 |
| Duque G (2012) [37] | Single | 20 (20) | GI bleeding | Blended outcomes | 11 |
| Eliakim R (2020) [38] | Single | 54 (54) | Known CD | IBD | 11 |
| Esaki M (2009) [39] | Single | 75 (102) | OGIB, FAP, GI lymphoma, PJS, GIST, carcinoid tumor | Cleanliness | 12 |
| Esaki M (2019) [40] | Multi | 50 (108) | Suspected CD | Other lesions; Erosions/Ulcerations | 10 |
| Ewertsen C (2006) [41] | Single | 33 (34) | OGIB, carcinoid tumors, angiodysplasias, diarrhea, immune deficiency, diverticular disease | Blended outcomes | 8 |
| Gal E (2008) [42] | Single | 20 (20) | Known CD | IBD | 7 |
| Galmiche JP (2008) [43] | Multi | 77 (89) | GERD symptoms | Other lesions | 12 |
| Garcia-Compean D (2021) [44] | Single | 22 (22) | SB angiodysplasias | Angiodysplasias; Blended outcomes | 12 |
| Ge ZZ (2006) [45] | Single | 56 (56) | OGIB, suspected CD, abdominal pain, suspected SB tumor, FAP, diarrhea, sprue | Cleanliness | 12 |
| Girelli CM (2011) [46] | Single | 25 (35) | Suspected submucosal lesion | Other lesions | 12 |
| Goyal J (2014) [47] | Single | 34 (34) | NA | Cleanliness | 11 |
| Gupta A (2010) [48] | Single | 20 (20) | PJS | Polyps | 11 |
| Gupta T (2011) [49] | Single | 60 (60) | OGIB | Other lesions | 12 |
| Hong-Bin C (2013) [50] | Single | 63 (63) | GI bleeding, abdominal pain, chronic diarrhea | Cleanliness | 11 |
| Jang BI (2010) [51] | Multi | 56 (56) | NA | Blended outcomes | 10 |
| Jensen MD (2010) [52] | Single | 30 (30) | Known or suspected CD | Other lesions; IBD; Blended outcomes | 11 |
| Lai LH (2006) [53] | Single | 58 (58) | OGIB, known CD, abdominal pain | Blended outcomes | 10 |
| Lapalus MG (2009) [54] | Multi | 107 (120) | Portal hypertension | Other lesions | 11 |
| Laurain A (2014) [55] | Multi | 77 (80) | Portal hypertension | Other lesions | 12 |
| Laursen EL (2009) [56] | Single | 30 (30) | NA | Blended outcomes | 12 |
| Leighton JA (2011) [57] | Multi | 40 (40) | Healthy volunteers | Cleanliness | 13 |
| Murray JA (2008) [58] | Single | 37 (40) | CeD | IBD; Villous atrophy | 12 |
| Niv Y (2005) [59] | Single | 50 (50) | IDA, abdominal pain, known CD, CeD, GI lymphoma, SB transplant | Blended outcomes | 11 |
| Niv Y (2012) [60] | Multi | 50 (54) | Known CD | IBD | 13 |
| Oliva S (2014) [61] | Single | 29 (29) | UC | IBD | 14 |
| Oliva S (2014) [62] | Single | 198 (204) | Suspected IBD, OGIB, other symptoms | Cleanliness | 12 |
| Omori T (2020) [63] | Single | 20 (196) | Known CD | IBD | 8 |
| Park SC (2010) [64] | Single | 20 (20) | GI bleeding, IDA, abdominal pain, diarrhea | Cleanliness; Blended outcomes | 8 |
| Petroniene R (2005) [65] | Single | 20 (20) | CeD, villous atrophy | Villous atrophy | 12 |
| Pezzoli A (2011) [66] | Multi | 75 (75) | NA | Blood; Blended outcomes | 12 |
| Pons Beltrán V (2011) [67] | Multi | 31 (273) | GI bleeding, suspected CD | Cleanliness | 14 |
| Qureshi WA (2008) [68] | Single | 18 (20) | BE | Other lesions | 11 |
| Ravi S (2022) [69] | Single | 10 (22) | GI bleeding | Other lesions | 14 |
| Rimbaş M (2016) [70] | Single | 64 (64) | SB ulcerations | IBD | 12 |
| Rondonotti E (2014) [71] | Multi | 32 (32) | NA | Other lesions | 11 |
| Sciberras M (2022) [72] | Multi | 100 (182) | Suspected submucosal lesion | Other lesions | 10 |
| Shi HY (2017) [73] | Single | 30 (150) | UC | IBD; Blood; Erosions/Ulcerations | 14 |
| Triantafyllou K (2007) [74] | Multi | 87 (87) | Diabetes mellitus | Cleanliness; Blended outcomes | 11 |
| Usui S (2014) [75] | Single | 20 (20) | UC | IBD | 9 |
| Wong RF (2006) [76] | Single | 19 (32) | FAP | Polyps | 13 |
| Zakaria MS (2009) [77] | Single | 57 (57) | OGIB | Blended outcomes | 9 |

| Test Statistic | Mean | 95% CI | Range | Comparisons, n (Inter/Intra) | Studies, n | Evaluation |
|---|---|---|---|---|---|---|
| Kappa | 0.53 | 0.51; 0.55 | −0.33; 1.0 | 424 (383/41) | 46 | Weak |
| ICC | 0.81 | 0.78; 0.84 | 0.51; 1.0 | 73 (41/32) | 11 | Good |
| Spearman Rank | 0.73 | 0.68; 0.78 | 0.30; 1.0 | 60 (60/0) | 5 | Moderate |
| Kendall’s coefficient | 0.89 | 0.86; 0.92 | 0.77; 1.0 | 20 (18/2) | 2 | n too small |
| Kolmogorov–Smirnov | 0.99 | - | 0.98; 1.0 | 2 (2/0) | 1 | n too small |
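
The comparison counts in the table above can be checked arithmetically. The sketch below is an illustration only, written in Python with hypothetical names (`SummaryRow`, `counts_are_consistent`, `inter_share`). It transcribes the table rows, confirms that the inter- and intra-observer counts add up to the reported number of comparisons, and derives the share of inter-observer comparisons per statistic.

```python
from typing import NamedTuple


class SummaryRow(NamedTuple):
    """One row of the summary table (values transcribed from the table)."""
    statistic: str
    mean: float
    comparisons: int  # total number of comparisons
    inter: int        # inter-observer comparisons
    intra: int        # intra-observer comparisons
    studies: int


TABLE_ROWS = [
    SummaryRow("Kappa", 0.53, 424, 383, 41, 46),
    SummaryRow("ICC", 0.81, 73, 41, 32, 11),
    SummaryRow("Spearman Rank", 0.73, 60, 60, 0, 5),
    SummaryRow("Kendall's coefficient", 0.89, 20, 18, 2, 2),
    SummaryRow("Kolmogorov-Smirnov", 0.99, 2, 2, 0, 1),
]


def counts_are_consistent(row: SummaryRow) -> bool:
    """True if inter + intra equals the reported total for this row."""
    return row.inter + row.intra == row.comparisons


def inter_share(row: SummaryRow) -> float:
    """Fraction of a statistic's comparisons that are inter-observer."""
    return row.inter / row.comparisons


# Total comparisons across all test statistics in the table.
TOTAL_COMPARISONS = sum(row.comparisons for row in TABLE_ROWS)

if __name__ == "__main__":
    for row in TABLE_ROWS:
        status = "ok" if counts_are_consistent(row) else "mismatch"
        print(f"{row.statistic}: {row.comparisons} comparisons, "
              f"{inter_share(row):.0%} inter-observer ({status})")
    print(f"All statistics: {TOTAL_COMPARISONS} comparisons")
```

The study counts are deliberately not summed here, since a single study may report more than one test statistic.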

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cortegoso Valdivia, P.; Deding, U.; Bjørsum-Meyer, T.; Baatrup, G.; Fernández-Urién, I.; Dray, X.; Boal-Carvalho, P.; Ellul, P.; Toth, E.; Rondonotti, E.; et al. Inter/Intra-Observer Agreement in Video-Capsule Endoscopy: Are We Getting It All Wrong? A Systematic Review and Meta-Analysis. Diagnostics 2022, 12, 2400. https://doi.org/10.3390/diagnostics12102400

Cortegoso Valdivia, P., Deding, U., Bjørsum-Meyer, T., Baatrup, G., Fernández-Urién, I., Dray, X., Boal-Carvalho, P., Ellul, P., Toth, E., Rondonotti, E., Kaalby, L., Pennazio, M., & Koulaouzidis, A., on behalf of the International CApsule endoscopy REsearch (I-CARE) Group. (2022). Inter/Intra-Observer Agreement in Video-Capsule Endoscopy: Are We Getting It All Wrong? A Systematic Review and Meta-Analysis. Diagnostics, 12(10), 2400. https://doi.org/10.3390/diagnostics12102400